Abstract

Causal parameters may not be point identified in the presence of unobserved confounding. However, information about non-identified parameters, in the form of bounds, may still be recovered from the observed data in some cases. We develop a new general method for obtaining bounds on causal parameters using rules of probability and restrictions on counterfactuals implied by causal graphical models. We additionally provide inequality constraints on functionals of the observed data law implied by such causal models. Our approach is motivated by the observation that logical relations between identified and non-identified counterfactual events often yield information about non-identified events. We show that this approach is powerful enough to recover known sharp bounds and tight inequality constraints, and to derive novel bounds and constraints.

1. INTRODUCTION

Directed acyclic graphs (DAGs) are commonly used to represent causal relationships between random variables, with a directed edge from A to Y (A → Y) representing that A “directly causes” Y. Under the interventionist view of causality, this relationship is taken to mean that Y may change if any set of variables S that includes A is set, possibly contrary to fact, to values s. The operation that counterfactually sets values of variables is known as an intervention and has been denoted by the do(s) operator in [12].

The variable Y after an intervention do(a) is performed is denoted Y(a), and is referred to as a potential outcome, or a counterfactual random variable [10]. Distributions over counterfactuals such as P(Y(a)) may be used to quantify cause-effect relationships by means of a hypothetical randomized controlled trial (RCT). For example, the average causal effect (ACE) is defined as and is a comparison of means in two arms of a hypothetical RCT, with the arms defined by do(a) and do(a′) operations.

Since counterfactuals are not observed directly in the data, assumptions are needed to link counterfactual parameters with the observed data distribution. These assumptions are provided by causal models (often represented by DAGs), which are substantively justified using background knowledge or learned directly from data [19].

Under some causal models, counterfactual distributions P(Y(a)) may be identified exactly (expressed as functionals of the observed data distribution) [20, 18, 6]. However, when causally relevant variables are not observed, counterfactual distributions may not be identified.

The ideal approach for dealing with non-identified parameters is additional data collection that would “expand” the observed data distribution by rendering previously unobserved variables observable, and thus a previously non-identified parameter identifiable.

If additional data collection is not possible, the alternative is to impose additional parametric assumptions on the causal model which would imply identification, or retreat to a weaker notion of identification, where the observed data distribution is used to obtain bounds on the non-identified parameter of interest. The existence of such bounds may yield substantively significant conclusions, for instance by indicating that the causal effect is present (if the corresponding parameter is bounded away from 0).

A well known example of a causal model with a non-identified causal parameter with non-trivial bounds is the instrumental variable (IV) model [1, 9, 17]. The original sharp bounds for the causal parameter in the IV model were derived using a computationally intensive, and difficult to interpret, convex polytope vertex enumeration approach [1]. Subsequent work [13] has extended this approach to other scenarios where a counterfactual objective can be expressed as a linear function of the observed data law. These approaches are limited both by computational complexity and by the required linear form of the objective.

Bounds on causal parameters are related to inequality constraints on the observed data law implied by hidden variable DAGs, as demonstrated by the derivation of the original IV inequalities [1], and subsequent work on inequality constraints [4, 7]. The approach developed in [22] for deriving such inequality constraints is very general and is conjectured to be able to recover all constraints implied by a hidden variable DAG, but is computationally challenging to evaluate, and has no bounded running time.

In this paper, we present a new approach for deriving bounds on non-identified causal parameters that directly uses restrictions implied by a causal model, rules of probability theory, and logical relations between identified and non-identified counterfactual events. We then build on this approach to present a new class of inequality constraints on the observed data law.

The paper is organized as follows. We introduce notation and relevant concepts in Section 2. We provide an intuitive introduction to our method by re-deriving known sharp bounds in the binary IV model [1] in Section 3. In Section 4, we present results important to our approach, and provide a general algorithm for obtaining bounds on causal parameters. Section 5 demonstrates how our approach may be used to derive generalized instrumental variable inequalities, of which the original IV inequalities and Bonet’s inequalities [3] are special cases. Finally, in Section 6 we make use of these results to provide novel bounds and inequality constraints for two sample models.

2. PRELIMINARIES

We let denote a DAG with a vertex set V such that each element of V corresponds to a random variable. The statistical model of is the set of joint distributions P(V) that are Markov relative to the DAG . Specifically, it’s the set , where is the set of parents of V in .

The causal model of a DAG is also a set of joint distributions, but over counterfactual random variables. A counterfactual Y(a) denotes the random variable Y in a counterfactual world, where A is exogenously set to the value a.

Such a causal model can be described by a set of structural equations , where each fV can be thought of as a causal mechanism that maps values of (parents of V) and the exogenous noise term ϵV to a value of V. For a given set of values a of , variation of ϵV yields the counterfactual random variable V(a) as the output of fV(a, ϵV).

Other counterfactuals can be defined through recursive substitution [15], as follows:

| (1) |

This definition, following from the structural equation model view of the causal model of a DAG, allows the effects of exogenous intervention to propagate downstream to the outcome of interest. Under this view, only the noise variables in the set {ϵV : V ∈ V} are random. The distributions of the observed data and of counterfactual random variables can be thought of as the distributions of different functions of {ϵV : V ∈ V} as described in (1).

As a notational convention, given any set {Y1, …, Yk} ≡ Y, and a set of treatments A set to a, we will denote a set of counterfactuals {Y1(a), …, Yk(a)} defined by (1) by the shorthand Y(a). We will sometimes denote single variable events Y(a) = y via the shorthand y(a) for conciseness, similarly multivariable events Y(a) = y will sometimes be denoted as y(a).

One consequence of (1) is that some counterfactuals Y (a) only depend on a subset of values in a, specifically those values that make an appearance in one of the base cases of the definition. Restrictions of this sort are sometimes called exclusion restrictions.

The recursive substitution definition above implies the following generalized consistency property, which states that for any disjoint subsets A, B, Y of V,

| (2) |

If all variables V in a causal model represented by a DAG are observed, every interventional distribution P(Y(a)), where A ⊆ V, Y ⊆ V \ A, is identified from P(V) via the following functional: , known as the g-formula [16].

In practice, not all variables in a causal model may be observed. In a hidden variable causal model, represented by a DAG , where no data is available on variables in H, not every counterfactual distribution is identified.

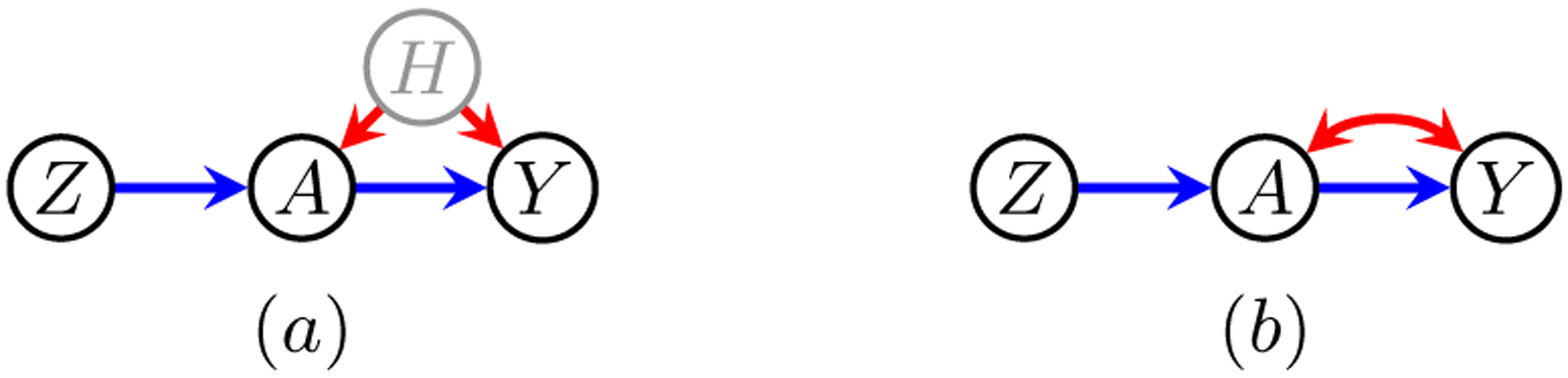

Reasoning about parameter identification is often performed via an acyclic directed mixed graph (ADMG) summary of called a latent projection [21]. The latent projection keeps vertices corresponding to V, and adds two kinds of edges between these vertices. A directed edge (→) between any Vi, Vj ∈ V is added if there exists a directed path from Vi to Vj in and all intermediate vertices on the path are in H. A bidirected edge (↔) between any Vi, Vj ∈ V is added if there exists a path from Vi to Vj which starts with an edge into Vi, ends with an edge into Vj, has no two adjacent edges pointing into the same vertex on the path, and has all intermediate elements in H. See Fig. 1 (a) and (b) for a simple example of this construction for the IV model.

Figure 1:

(a) The classic instrumental variable model, and (b) its latent projection.

If P(Y(a)) is identified from P(V) in a causal model represented by a hidden variable DAG , then its identifying functional may be expressed using via the ID algorithm [20]. See [14] for details.

If P(y(a)) is not identified from P(V) in a causal model given by , bounds may nevertheless be placed on this distribution. Before describing our approach for obtaining bounds in full generality, we illustrate how it may be used to obtain known sharp bounds for a non-identified counterfactual probability in the binary IV model.

3. BOUNDS IN THE BINARY INSTRUMENTAL VARIABLE MODEL

The instrumental variable model is represented graphically in Fig. 1 (a), with its latent projection shown in Fig. 1 (b). We are interested in the counterfactual probability P(y(a)), which is known not to be identified without parametric assumptions. In this section, we demonstrate that known sharp bounds on P(y(a)) can be recovered by reasoning causally about the structure of this graph and the associated counterfactual distributions.

The Instrumental Variable Thought Experiment

We consider the binary IV model in Fig. 1 (a) in the setting of clinical trials with non-compliance. We interpret Z to indicate treatment assignment, A to indicate treatment actually taken, and Y to indicate a clinical outcome of interest. Unobserved factors, such as personality traits, may influence both treatment decision and outcome, and thus act as confounders.

Suppose we intervene to assign some subject to treatment arm z. We observe that after this intervention, our subject takes treatment a and has outcome y. Thus, in this subject, we observe the event A(z) = a ∧ Y (z) = y.

Now suppose we are interested in intervening directly on treatment for the same subject. We would like to set A = a, leaving Z (arm assignment) to the physician’s choice. Under the model, the outcome Y depends only on A and the noise term ϵY, representing in this case subject-specific personality traits. Because these traits are unchanged by intervention on A or Z, and A is set to the same value it took under our first intervention, we must conclude that under this second intervention we would observe the same outcome as under the first, denoted by the event Y(a) = y.

This thought experiment demonstrates that an event in one hypothetical world, under one intervention, can imply an event under another intervention. We call this phenomenon “cross-world implication,” and it is formalized in proposition 2. We now develop the intuition further to recover sharp bounds for the IV model with binary random variables.

Sharp Bounds in the Binary IV Model

In the following derivation of sharp bounds for P(y(a)) in the binary IV model, we will denote values 1 for all variables by lower case (e.g. a), and values 0 by a lower case with a bar (e.g. ).

Our strategy will be to partition the event Y(a) = y into smaller, more manageable events:

| (3) |

| (4) |

| (5) |

Note that events in (4) and (5) are related to the compliers and never-takers principal strata [5].

To see that these events form a partition (i.e. are mutually exclusive and exhaustive) of the event Y(a) = y, we first observe that by the exclusion restriction in the model, and generalized consistency, and Y(z) in equations (3) and (4) respectively will be equal to Y(a). Then we can see that (3) covers the portion of Y(a) = y where , (4) covers the portion where , and (5) covers the portion where .

Because these events partition Y(a) = y, the sum of their probabilities will be equal to P(Y(a) = y), and a sum of lower-bounds on their probabilities will yield a lower bound on P(Y(a) = y). A general form of partitions of this sort for counterfactual events under models with an exclusion restriction will be given in Proposition 5.

The event (3) represents a single world event with an identified probability, that therefore does not need to be bounded. We will see that we can recover sharp bounds without bounding the probability of (5).

We therefore turn our attention to event (4). This event can be understood as a conjunction of events in two worlds. First, in the world in which Z is set to . Then, A(z) = a ∧ Y(z) = y in the world in which Z is set to z. As a cross world event, (4) does not have an identified density. Our goal will be to provide lower bounds for this event that are identified.

First, we find some event E1 under the intervention that entails . We can then identify outcomes under the (conflicting) intervention Z = z that are compatible with E1, i.e. that would not be ruled out by observing E1 under intervention . We denote such events by ψz(E1). Now, by definition:

| (6) |

We note that E1 ⇒ ψz(E1) by construction, as E1 rules out all outcomes in the sample space not in ψz(E1), so P(E1, ﹁(A(z) = a ∧ Y(z) = y)) is bounded from above by P(ψz(E1), ﹁(A(z) = a ∧ Y(z) = y)).

Substituting this bound into equation (6) yields:

| (7) |

Because E1 was chosen to entail , the probability of event (4) is bounded from below by P(E1, (A(z) = a ∧ Y(z) = y)). Therefore by equation (7), the probability of event (4) is also bounded from below by:

| (8) |

Through exactly analogous reasoning, we can obtain another lower bound on the probability of event (4) by starting with some event E2 under Z = z that entails A(z) = a ∧ Y(z) = y:

| (9) |

To apply these bounds, we must select events that satisfy the criteria for E1 and E2. We start by examining potential events E1. We note that there are only three options: , , and . It turns out that we need only consider the latter two of these (see Proposition 10 in Appendix C).

First, we take E1 to be . Then we note is:

The only outcome under the intervention Z = z excluded from this event is . Any subject who experienced this event could not have experienced , due to the exclusion restriction in the IV model.

According to (8), to obtain a bound we will need to subtract from the mass of E1 the mass of the portion of ψz(E1) where A(z) = a ∧ Y(z) = y does not hold. This will be the mass of the first two events in the disjunction above.

Using this value of E1, we therefore obtain the following lower bound on the probability of (4):

| (10) |

We now consider the bound induced by using as the event E1. Following an analogous procedure, we produce the lower bound:

| (11) |

Next, we consider possible values of E2. In this simple case, there is only one such possibility, A(z) = a ∧ Y(z) = y, which of course entails itself. We observe that is equivalent to , yielding the following lower bound by expression (9):

We now have all the pieces we need to obtain a sharp lower bound on P(y(a)). We make use of the fact that distributions of potential outcomes after interventions on Z are identified as the distribution of the corresponding observed random variables conditioned on Z (since Z is randomized in the IV model). Noting that the density of the event (4) is also bounded from below by 0, we add the identified density of the event (3) to the best of the lower bounds we have obtained for (4). Then

This is the sharp lower bound obtained by Balke [1]. P(y(a)) may be bounded from above by 1 less the lower bound on . In the binary case, bounds on the ACE may simply be represented as differences between appropriate bounds on P(y(a)) and . Each of these bounds bounds is sharp for the binary IV model. However, characterizing models for which bounds derived by the procedure we propose, described in the next section, are sharp is an open problem.

4. BOUNDS ON COUNTERFACTUAL EVENTS

In this section we provide a graphical criterion for the presence of an implicative relationship between counterfactual events, which we call cross-world implications, and demonstrate its use in bounding non-identified probabilities of counterfactual events. We then show how these bounds can be aggregated to bound non-identified counterfactual events of primary interest. Proofs of all claims are found in Appendix A.

Causal Irrelevance, Event Implication and Event Contradiction

In deriving bounds on a counterfactual event under the IV model, we made use of the exclusion restriction Y(z, a) = Y(a). We begin this section by providing a general graphical criterion for when such restrictions appear in causal models.

Proposition 1 (Causal Irrelevance). If all directed paths from Z to Y contain members of A, then

In such cases, we say Z is causally irrelevant to Y given A, because after intervening on A, intervening on Z will not affect Y. If in addition the joint distribution P(Y(z), A(z)) is identified, Z is said to be a generalized instrument for A with respect to Y. If the set A can be partitioned into A1 and A2 such that A1 is causally irrelevant to Y given A2, then any such A1 is said to be causally irrelevant to Y in A. See also rule 3* in [8], and the discussion of minimal labeling of counterfactuals in [15]. As noted earlier, constraints in a causal model corresponding to the existence of causally irrelevant variables are sometimes called exclusion restrictions.

In the following proposition, we observe that whenever an exclusion restriction appears in the graph, there exists a logical implication connecting counterfactual events across interventional worlds.

Proposition 2 (Cross-world Implication). Let Z be causally irrelevant to Y given A. Then

We define a collection of events to be compatible if none of them implies the negation of any other event in the collection. We define a collection of events to be contradictory if it is not compatible. Conceptually, events in different hypothetical worlds are contradictory if, under the model, no single subject can experience all of the events under their corresponding interventions. For example, in the IV model though experiment, we saw that no single subject can experience both the event A(z) = a ∧ Y(z) = y and the event Y(a) ≠ y, rendering them contradictory.

It will be of use to be able to determine whether events are contradictory through reference to the graphical model. To that end, we provide a recursive graphical criterion that is sufficient to establish that events are contradictory.

Proposition 3 (Contradictory Events). Two events X(a) = x and Y(b) = y are contradictory if there exists Z ∈ X ∪ Y such that Z(a) ≠ Z(b), and all of the following hold:

Variables in the subsets of both X ∪ A and Y ∪ B causally relevant for Z are set to the same values in x, a, and y, b.

Let C ∈ {X ∪ A} \ {Y ∪ B} be any variable that is causally relevant to Z in X∪A and causally relevant to Z given Y ∪ B, with C set to c in x, a. Then X(a) = x and Y(b) = y ∧ C(b) = c′ are known to be contradictory by this proposition if c ≠ c′.

Let C ∈ {Y∪B} \ {X∪A} be any variable that is causally relevant to Z in Y∪B and causally relevant to Z given X ∪ A, with C set to c in y, b. Then Y(b) = y and X(a) = x ∧ C(a) = c′ are known to be contradictory by this proposition if c ≠ c′.

Propositions 2 and 3 provide graphical criteria for implication and contradiction, based on paths in the causal diagram. Both criteria are stated in terms of exclusions restrictions in the graph. It should be noted that not all exclusion restrictions can be represented graphically; for example, some exclusions may obtain only for certain levels of the variables in the graph, and not universally. If such context-specific exclusion restrictions arise, they may lead to implications or contradictions not captured by these criteria. However, in the absence of exclusion restrictions not represented by the graphical model, the graphical criteria provided by these propositions are necessary and sufficient. See Appendix B for details.

Bounds Via a Single Cross-World Implication

In this section, we describe a lower bound on P(y(a)) induced by a single cross-world implication, of the sort described by Proposition 2. We will demonstrate that this line of reasoning can be used to recover the bounds for the IV model in [9, 17], and produce a new class of bounds on densities of counterfactual events where the density is identified under intervention on a subset of the treatment variables.

We begin with a simple result from probability theory, which can broadly be viewed as stating that supersets will always have weakly larger measure than their subsets.

Proposition 4. Let E1, E2 be any events in a causal model such that E1 ⇒ E2. Then P(E1) ≤ P(E2).

In the case of the IV model, we have noted the exclusion restriction between Z and Y given A. Due to the implication established by the IV thought experiment and formalized in Proposition 2, Proposition 4 then yields P(Y (a) = y) ≥ P(A(z) = a, Y(z) = y) for any value of z. Noting that Z has no parents in the model, and that therefore the interventional distribution is identified as the conditional, we can write this as maxz P(A = a, Y = y | Z = z), which is equivalent to the binary IV bounds in [9, 17].

We now present new bounds on causal parameters, based on the observation that the empty set may act as a generalized instrument for any treatment set A with respect to any outcome Y. This observation allows us to combine Propositions 2 and 4 to obtain the following Corollary.

Corollary 1. For any sets of variables Y, A,

A consequence of this Corollary is that for discrete variables, densities of counterfactual events can be nontrivially bounded for any causal model, though we do not expect these bounds to be informative in general.

Finally, we present bounds on densities of counterfactuals when a subset of the treatment set can act as a generalized instrument for the remainder.

Corollary 2. Let and partition A, such that the density is identified, where is the subset of a corresponding to . Then

where is the subset of a corresponding to .

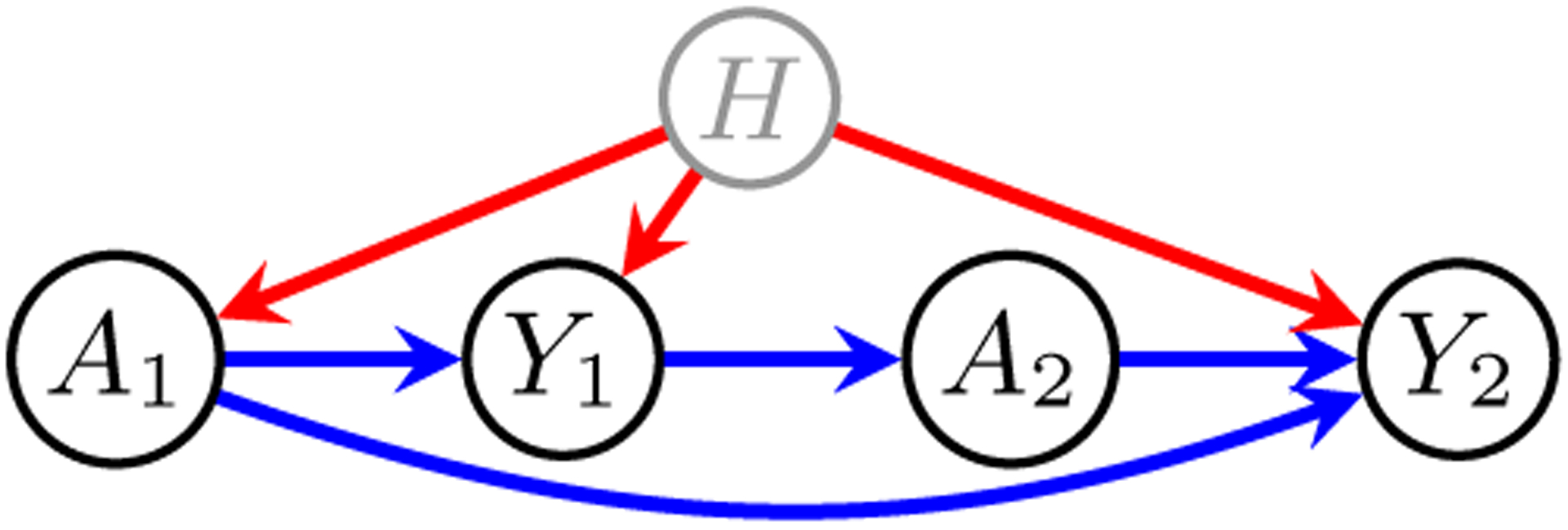

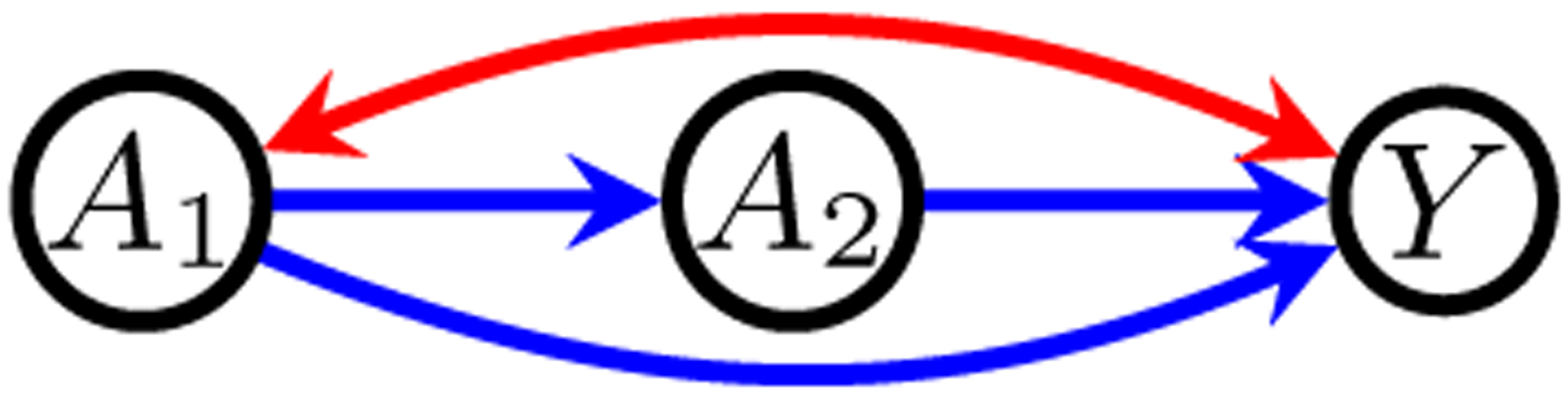

Example 1 (Sequential Treatment Scenario). In the model depicted in Fig. 2, the A variables represent treatments and the Y variables represent outcomes. This model may be applicable if the initial treatment A1 is selected by the subject – and is therefore confounded with the outcomes through the subject’s unobserved traits H – but the second treatment A2 is selected solely on the basis of the first-stage outcome, Y1.

Figure 2:

A Sequential Treatment Scenario

We may be interested in P(Y2(a1, a2)) – the distribution of the second stage outcome under intervention on both treatments. This distribution is not identified. However, since P(A1(a2) = a1, Y2(a2) = y2) is identified as , Corollary 2 yields the bounds:

Bounds Via Multiple Cross-World Implications

In this section, we show how information from multiple cross-world implications may be used to obtain bounds. We begin by describing a partition of the event of interest, where the partition is defined by cross-world potential outcomes. We then develop a method for bounding the density of the cross-world events in the partition, and aggregate the bounds. This section generalizes the procedure used to obtain sharp bounds for the binary IV model in Section 3.

Partitioning the Event of Interest

We denote our event of interest as Y(a1) = y. We are interested in providing a lower bound for the non-identified probability P(Y(a1) = y). We begin by defining a partition of the event to interest. We will work with these partition sets for the remainder of the section.

Proposition 5 (Partition Sets). Let Z be causally irrelevant to Y given A. Assume Z, A are discrete, and take levels z1, ⋯, zM and a1, ⋯, aN respectively.

Then the following events are a partition of Y(a1) = y:

| (12) |

| (13) |

and, for k = 2, ⋯, N,

| (14) |

We now develop lower bounds on the density of each of the partition events, which we can then use to lower bound the density of the target event Y(a1) = y.

Bounding Partition-Set Densities

Event (13) is a single world event with identified density, so there is no need to find a lower bound. Subjects that experience event (12) will never experience Y(z) = y ∧ A(z) = a1 under any intervention Z = z with an identified distribution. Because our strategy uses information from identified distributions to bound unidentified densities, we cannot provide bounds on the density of this partition event.

We now turn our attention to events of the form of (14). We let Ek denote the event of the form of (14) where the first term is A(z1) = ak. Then we note Ek can be represented as the disjunction , where denotes the event:

| (15) |

Because , we know . It follows from Proposition 4 that

| (16) |

Unfortunately is also not point-identified, and must be bounded from below itself.

The event conjoins statements about potential outcomes under two different interventions. Its density is the portion of the population who would experience A(z1) = ak under Z = z1, and A(zj) = a1 ∧ Y(zj) = y under Z = zj. We know the exact proportion of the population who would experience either, because Z is a generalized instrument, but we do not know the exact portion of the population that would experience both.

To address this problem, we first consider the problem of bounding the density of an event conjoining potential outcomes under two different interventions in general terms, in the following Proposition. This result can then be directly applied to lower bound the density of .

Proposition 6 (Cross-World Lower Bounds). Let ψc(E) represent the disjunction of all outcomes in the sample space under intervention C = c that do not contradict the event E, such that E ⇒ ψc(E).

Let Ex be any event that implies X(a) = x, and Ey be any event that implies Y(b) = y. Then P (X(a) = x, Y(b) = y) is bounded from below by each of:

Proposition 6 is useful because, in each of the bounds provided, each of the densities involved are in terms of events under a single intervention. If densities under those interventions are identified, the bounds can be calculated exactly.

We return to our goal of bounding from below. In the case of , the two interventions we are interested in are on the same set of variables, Z. As described above, we are interested in the proportion of patients who experience A(z1) = ak under the intervention Z = z1, and A(zj) = a1 ∧ Y(zj) = y under the intervention Z = zj. Substituting these values for X(a) = x and Y(b) = y into Proposition 6 immediately yields the following Corollary.

Corollary 3 (Lower bounds on . Let E1 be an event under intervention Z = z1 that entails A(z1) = ak, and E2 be event under intervention Z = zj that entails A(zj) = a1 ∧ Y(zj) = y.

Then P(A(z1) = ak ∧ A(zj) = a1 ∧ Y(zj) = y) is bounded from below by each of:

With this result in hand, we can we can modify (16) to obtain the following bound on P(Ek) in terms of the observed data law. Let ξ(·) represent the set of lower bounds on the density P(·) obtained through Corollary 3 for all possible values of E1 and E2. Then

| (17) |

Recalling that P(Y(a1) = y) can be bounded from below by the sum of lower bounds on densities of its partition sets, we obtain the lower bound

where the first term corresponds to the density of event (13) and the second term is a sum is over lower bounds on the densities of the events of the form of (14).

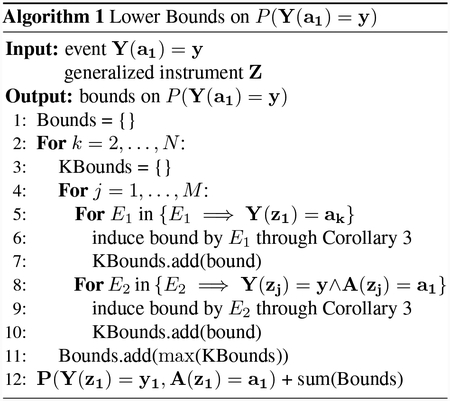

Algorithm 1 summarizes how the results described in this section can be used to calculate these bounds.

5. GENERALIZED INSTRUMENTAL INEQUALITIES

In this section, we develop generalized instrumental inequalities and show that the IV inequalities, and Bonet’s inequalities [3], are special cases. To begin, we bound the sum of probabilities of events in terms of the size of the largest subset thereof that is made up of compatible events.

Proposition 7. Let E1, ⋯, EN be events under arbitrary interventions such that at most k of the events are compatible. Then

This result is a consequence of the fact that by construction, no value of ϵV can lead to contradictory events. If such a value did exist, observing one of the events leaves open the possibility that ϵV takes that value, in which case we would observe the other event under the appropriate intervention, and the events would not be contradictory. It follows that if we are adding the densities of events of which at most k are compatible, no set in the domain of ϵV may have its measure counted more than k times.

We make use of this result, in combination with our existing results about causal irrelevance, to obtain the following class of inequality constraints.

Corollary 4 (Generalized Instrumental Inequalities). Let Z be causally irrelevant to Y given A, and let be any set of triples (z, a, y) which represent levels of Z, A, Y. Then

where

This result makes use of the fact that by Proposition 3, if Z is causally irrelevant to Y given A and (z = z′ ∧ a ≠ a′) ∨ (a = a′ ∧ y ≠ y′), then A(z) = a ∧ Y(z) = y and A(z′) = a′ ∧ Y(z′) = y′ are contradictory. can therefore be interpreted as the size of largest compatible subset of .

The IV inequalities derived in [11], which can be written ∀a∑y maxz P(Y(z), A(z)) ≤ 1, are a special case of the generalized instrumental inequalities with k = 1. For each selection of a, the sum is over densities of events with different values of y, rendering them pairwise contradictory. We now review the inequality derived in [3].

Example 2 (Bonet’s Inequalities). Bonet [3] presents the following constraint for the IV model, where treatment and outcome are binary and the instrument is ternary:

These densities are respectively equal to the densities of the following events, through the fact that densities under intervention on variables with no parent are identified as the conditional distribution:

| (18) |

| (19) |

| (20) |

| (21) |

| (22) |

It can easily be confirmed that no subset of size 3 or greater is mutually compatible. For example, event (18) is compatible with events (20) and (22), but these are incompatible with each other, due to Z taking the same value in both but A taking a different value in each. The same pattern follows for all events; each event is compatible with two others which in turn are not compatible with each other.

It follows from Corollary 4 that the sum of the densities of these events must be bounded from above by 2.

6. EXAMPLE APPLICATIONS

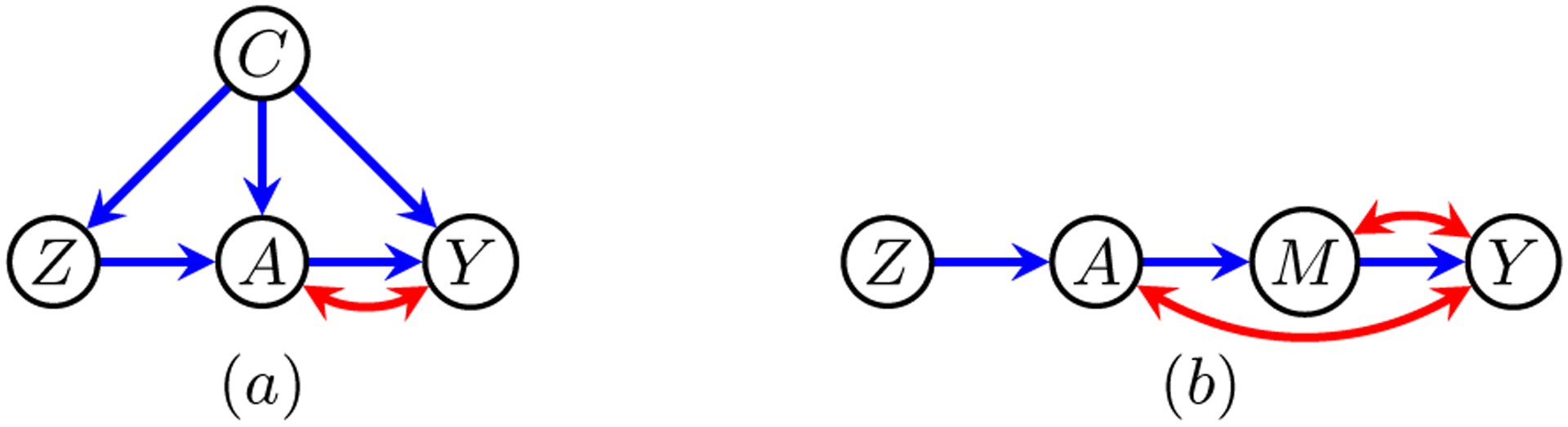

In this section, we derive bounds and inequality constraints for the ADMGs presented in Fig. 3 using the results presented in Sections 4 and 5. We are not aware of any existing methods that can obtain the bounds presented below. Code used to obtain these results, as well as a general implementation of the methods described in this paper, is publicly available1.

Figure 3:

(a) The IV model with covariates, and (b) the confounded frontdoor IV model.

Due to space constraints, we denote the identified distribution under intervention on Z = z as Pz(·). In addition, we do not consider more complicated scenarios, e.g. involving multiple instruments Z and treatments A, instruments with challenging identifying functionals, or non-binary variables. However, bounds and constraints in such scenarios may be obtained using our software.

The IV Model With Covariates

We first consider the model represented by Fig. 3 (a). In the traditional IV model, the instrument must be randomized with respect to the treatment and outcome. In practice, it can be difficult to find such instruments. The IV model with covariates allows for the instrument to be conditionally randomized.

In the social sciences, exogenous shocks are often used as instrumental variables. For example, suppose an earthquake damages a number of school buildings, increasing class size at nearby schools. An economist studying the effect of class size on test scores might use school closure due to the earthquake as an instrument for class size.

This instrument may not be entirely plausible. Families with more resources may be able to avoid living in areas at risk of earthquake damage, and wealthier school districts may be better able to build robust school buildings. In this case, the instrument would be confounded with the treatment and outcome. Observed baseline covariates for the school districts, including information on tax revenue, may be sufficient to account for this kind of confounding. In settings of this kind, the IV model with covariates is appropriate, whereas the traditional IV model is not.

We present the following lower bound on under this model when variables are binary:

A derivation of these bounds is provided in Appendix E.

We can use Corollary 4 to obtain inequality constraints on the observed data law implied by the model. Such constraints cannot easily be concisely expressed. Two representative expressions, each bounded from above by 1, are as follows:

Front-Door IV Model With Confounding

This model, illustrated in Fig. 3 (b), is appropriate when the effect of treatment is only through an observed mediator, which is itself confounded with the outcome. In such cases, the traditional IV model can be applied by ignoring data on M, but tighter bounds can be obtained when the mediator is considered. When all variables are binary, our method yields the following lower bound on :

Finally, we present two functionals of the observed data law that, under the model, are bounded from above by 1. Each is representative of a class of constraints that does not have a concise general formula.

7. CONCLUSION

The methods pursued in this work take advantage of identified counterfactual distributions to bound causal parameters that are not identified, and provide inequality constraints on functionals of the observed data law. These bounds expand the class of causal models under which counterfactual random variables may be meaningfully analyzed, and the inequality constraints facilitate falsification of causal models by observed data. Characterizing the conditions under which these bounds and inequalities are sharp remains an open question. We also leave open application of these ideas to other areas of study interested in counterfactual parameters, such as missing data, dependent data, and policy learning.

A. Proofs

Proof of Proposition 1 Under these conditions, V(·) is never evaluated in the recursive evaluation of Y(Z = z, A = a) by equation (1) for any V ∈ {Z \ A}. □

Proof of Proposition 2 By generalized consistency, A(z) = a implies Y(z, a) = Y(z), and by causal irrelevance Y(z, a) = Y(a). □

Proof of Proposition 3 We will show that conditions (i), (ii), (iii) require that Z(a) = Z(b) for all Z ∈ X ∪ Y. It follows that if there exists Z ∈ X ∪ Y such that Z(a) ≠ Z(b), there is no single value of ϵV that leads to X(a) = x and to Y(b) = y, and the events must be contradictory.

Let C1 be all variables that are causally relevant to Z in both X ∪ A and Y ∪ B, let C2 be all variables that are causally relevant to Z in {X ∪ A} \ {Y ∪ B}, and that are causally relevant to Z given Y ∪ B, and let C3 be all variables that are causally relevant to Z in {Y ∪ B} \ {X ∪ A} and that are causally relevant to Z given X ∪ A.

We note that condition (i) specifies that C1(a) = C1(b). Then, condition (ii) requires that C2(a) = C2(b); otherwise there would be a contradiction between X(a) = x and Y(b) = y ∧ C2(b) = c2. In other words, there are no values of ϵV that lead to X(a) = x that do not lead to Y(b) = y ∧ C2(b) = C2(a). For an analogous reason, condition (iii) requires that C3(a) = C3(b),

We next note that by construction, no variable D ≠ Z in {X ∪ Y ∪ A ∪ B} \ {C1 ∪ C2 ∪ C3} causally relevant to Z given C1 ∪ C2 ∪ C3.

Under conditions (i); (ii); (iii), by consistency Z(a) = Z(c1, c2, c3, a, x\{z}). By causal irrelevance of all variables D ≠ Z in A ∪ X not in C1 ∪ C2 ∪ C3 given C1 ∪ C2 ∪ C3 we have Z(c1, c2, c3, a, x\{z}) = Z(c1, c2, c3). For the same reasons, Z(b) = Z(c1, c2, c3, b, y\{z}) = Z(c1, c2, c3). This yields Z(a) = Z(b), completing the proof. This recursive definition of contradiction between events must resolve because each recursive call expands the number of variables specified in one of the events, and there are a finite number of variables in the graph. □

Proof of Proposition 5 Each event in the partition either directly specifies Y(a1) = y, or specifies an event that implies Y(a1) = y by Proposition 2, so we know the disjunction of these events is a subset of Y(a1) = y.

Now we show that all sets are disjoint. Event (13) and all events of the form of (14) are pairwise disjoint, as each requires a different event under the intervention Z = z1. These events are all disjoint from event (12), as the former each specifies a value of z′ for which A(z′) = a, and the latter specifies that no such z′ can exist.

Finally, we show that the events are exhaustive. In addition to the requirement that Y(a1) = y specified above, a disjunction of the partition set events requires that A(z1) take a value in a1, ⋯, aN, which is tautological. It also requires that ∀z′(A(z′) ≠ a1) or ∃z′(A(z′) = a1), which is likewise tautological. No other requirements are present. □

Proof of Proposition 6 Ex ∧ Y(b) ≠ y ⇒ ψb(Ex) ∧ Y(b) ≠ y. Therefore P(Ex, Y(b) ≠ y) ≤ P(ψb(Ex), Y(b) ≠ y).

P(Ex) = P(Ex, Y(b) = y) + P(Ex, Y(b) ≠ y), which, in combination with the preceding, yields P(Ex, Y(b) = y) P(Ex) − P(ψb(Ex), Y(b) ≠ y).

By construction, Ex ⇒ X(a) = x, yielding the first bound. A symmetric argument yields the second. □

Proof of Proposition 7 The inclusion-exclusion formula states that . Under the conditions of the Proposition, ∑i<j P(Ei ∩ Ej) ≤ k − 1, as any set of values of ϵV may imply at most k − 1 of the events in {Ei ∩ Ej | i < j}, and the set of all possible values of ϵV has measure 1. Noting that by definition, we have . □

Proof of Corollary 2 is causally irrelevant to Y given A, so the lower bounds follow directly from Propositions 2 and 4. The upper bounds follow because, by the same reasoning, . □

Proof of Corollary 4 Proposition 3 tells us that if Z is a generalized instrument for A with respect to Y, two events of the form A(z) = a ∧ Y(a) = y and A(z′) = a′ ∧ Y(z′) = y′ are contradictory if : ﹁((z = z′ ∧ a ≠ a′) ∨ (a = a′ ∧ y ≠ y′)). Therefore Φ(S) provides the size of the largest subset of events that are mutually compatible. The result then follows immediately from Proposition 7. □

B. Equivalence Class Completeness

In this appendix we introduce an assumption we call equivalence class completeness. Following [2], we say two values in the domain of ϵV are in the same equivalence class if they will produce the same results through equation (1) for every variable under every intervention.

Assumption 1 (Equivalence Class Completeness). Every equivalence class of ϵV is non-empty in the domain of ϵV.

We note that this assumption precludes the possibility of vacuous edges (edges that reflect no causal influence) in the graph. In the case of a vacuous edge X → V, for example, there will be no values of ϵV that produce V = v for some setting of X = x and V = v′ for another setting of X = x′, because V is not a function of X. This would mean that the equivalence class of values of ϵV that lead to V = v under intervention X = x and V = v′ under intervention X = x′ is empty, violating the assumption.

For the same reason, this assumption precludes the possibility of context-specific exclusion restrictions. If there is an edge X → V such that for some level of the other parents of V, denoted by Y = y, V is not a function of X, then there will exist no value of ϵV that leads to V = v under intervention X = x, Y = y but to V = v′ under intervention X = x′, Y = y.

We now show that under this assumption, the criteria in Propositions 2 and 3 for cross-world implication and event contradiction respectively are necessary as well as sufficient. It follows that unless there exists background knowledge that the equivalence class completeness assumption is violated, due for example to deterministic causal relationships, all implications and contradictions relevant for deriving bounds and inequality constraints can be obtained using these criteria.

Proposition 8. Under the equivalence class completeness assumption, Z is causally irrelevant to Y given A if and only if:

Proof. Sufficiency is given by proposition 2. We demonstrate necessity as follows. Assume Z is not causally irrelevant to Y given A, i.e. there is a path from Z to Y not through A. Then by equivalence class completeness, there must be values of ϵV for which Y is a function of Z when A is exogenously set and Z does not take the value z under no intervention. Therefore, there will exist values of ϵV such that Y(a, z) ≠ Y(a). By generalized consistency A(z) = a ∧ Y(z) = y ⇒ Y(a, z) = y, which contradicts A(z) = a∧Y(z) = y ⇒ Y(a) = y. □

Proposition 9. Under the equivalence class completeness assumption, two events X(a) = x and Y(b) = y are contradictory if and only if there exists Z ∈ X ∪ Y such that Z(a) ≠ Z(b), and all of the following hold:

Variables in the subsets of both X ∪ A and Y ∪ B causally relevant for Z are set to the[same values in x, a, and y, b.

Let C ∈ {X ∪ A} \ {Y ∪ B} be any variable that is causally relevant to Z in X ∪ A and causally relevant to Z given Y ∪ B, with C set to c in x, a. Then X(a) = x and Y(b) = y ∧ C(b) = c′ are contradictory when c ≠ c′.

Let C ∈ {Y ∪ B} \ {X ∪ A} be any variable that is causally relevant to Z in Y ∪ B and causally relevant to Z given X ∪ A, with C set to c in y, b. Then Y(b) = y and X(a) = x ∧ C(a) = c′ are contradictory when c ≠ c′.

Proof. Sufficiency is given by Proposition 3. To see the necessity of condition (i), we note that if variables causally relevant in X ∪ A and in Y ∪ B took different values in x, a and y, b, then if Z(x, a) ≠ Z(y, b), there must be an equivalence class that leads to these two results under their respective interventions. By the equivalence class completeness assumption it will be non-empty. Therefore there exists a value of ϵV that leads to both events, and they are not contradictory.

We now demonstrate the necessity of condition (ii). If (ii) does not hold, there must be a variable D that is causally relevant to Z in X ∪ A and given Y ∪ B that can take different values under equivalence classes of ϵV that lead to X(a) = a and Y(b) = y under their respective interventions. Because D is causally relevant given both the remainder of X ∪ A, and given all of Y ∪ B, and can for single value of ϵV take different values under the relevant interventions, it is possible for that value of ϵV to yield different values of Z under the two interventions. By equivalence class completeness, an ϵV leading to this result must exist, leading to a lack of contradiction between the two events. Condition (iii) is necessary by an analogous argument. □

C. Redundant Lower Bounds

We present results that establish the redundance of lower bounds induced by certain events E1 and E2 through Corollary 3.

We first observe that the event chosen for E1 in Proposition 6 should be compatible with the event Y(b) = y. If it is not, ψb(E1) ∧ Y(b) ≠ y is equivalent to ψb(E1). Because E1 ⇒ ψb(E1), by Proposition 4 any such E1 will induce a negative lower bound, which is of course uninformative. An analogous argument can be made for E2.

We next consider a proposition that explains why we did not need to consider the bound induced by to obtain sharp bounds in Section 3.

Proposition 10. Let E1 imply X(a) = a and let Y(b) ≠ y imply ψb(E1). Then the event E2 ≜ Y(b) = y induces, through Proposition 6, a weakly better bound than does E1. An analogous claim holds for E2.

Proof. P(ψb(E1), Y(b) ≠ y) = P(Y(b) ≠ y), as Y(b) ≠ y ⇒ ψb(E1) by assumption.

The lower bound induced by E1, given by Proposition 6 as P(E1) − P(ψb(E1), Y(b) ≠ b), can now be expressed as P(Y(b) = y) − P (﹁E1).

We now note ψa(Y(b) = b)) ∧ X(a) ≠ x ⇒ ﹁ E1, as E1 ⇒ X(a) = x by construction. Therefore P(﹁E1) ≥ P(ψa(Y(b) = b) ∧ X(a) ≠ x), and the bound induced by E2 ≜ Y(b) = y, given by Proposition 6 as P(Y(b) = y) − P(ψa(Y(b) = b)) ∧ X(a) ≠ x), must be better than that induced by E1. □

In the binary IV case described in Section 3, every event under intervention Z = z is compatible with the event . This means that in particular is implied by ﹁(A(z) = a ∧ Y (z) = y). The lower bound on event (4) induced by is therefore redundant given the bound induced by E2 ≜ A(z) = a ∧ Y(z) = y.

Next, we identify an additional condition under which bounds induced by particular valid choices of E1 and E2 are irrelevant. This condition does not appear in the IV model.

Proposition 11. If two candidates for events E1 (E2), under Proposition 6 are each compatible with the same events under B = b (A = a), the candidate event with larger density will induce a better bound.

Proof. The bound in Proposition 6 is expressed as the density of E1 (E2) less a function of the events compatible with E1 (E2). If the two candidate events are compatible with the same set of events, the negative quantity in the bound will be the same. The bound with the larger positive quantity – the density of E1 (E2) – must be larger. □

Proposition 12. Under the equivalence class completeness assumption, an event Y(a) = y ∧ X(a) = x is compatible with the same events under intervention A = a′ as is X(a) = x if and only if Y, and all descendants of Y in X to which Y is causally relevant given the remainder of X, have at least one causally relevant ancestor in A that takes different values in a than in a′.

Proof. If an event does not contradict Y(a) = y ∧ X(a) = x, it will not contradict the less restrictive event X(a) = x.

We consider an event compatible with X(a) = x. By Proposition 9, if it is to contradict X(a) = x ∧ Y(a) = y under the equivalence class completeness assumption, then there must be a variable Z satisfying the conditions of that proposition. This Z cannot be in Y, or any of its descendants in X to which it is causally relevant given the remainder of X, by the condition that they each have a causally relevant ancestor in A that differs between a and a′. If it is any variable to which Y is not causally relevant, then the causally relevant ancestors are the same in X(a) = x ∧ Y(a) = y as in X(a) = x, so the event must also be compatible with X(a) = x ∧ Y(a) = y if it is compatible with X(a) = x.

Finally, we demonstrate the necessity of these conditions. If they failed to hold, some variable in Y ∪ X in Y or to which Y is causally relevant given the remainder of X would have no causally relevant ancestor in A that differed under the two interventions. We call such a variable Z, and say it takes value z. Then we construct the event X′(a′) = x′ ∧ Y′(a′) = y′ ∧ Z(a′) ≠ z, with X′, Y′ denoting X \ {Z}, Y \ {Z}. This event contradicts Y(a) = y ∧ X(a) = x but does not contradict X(a) = x by Proposition 9.

We note that because the bounds derived by Corollary 3 do not make use of any additional implications that may result from violations of the equivalence class completeness assumption, these results lead directly to the following Corollary:

Corollary 5. Let the event be compatible with the same events under A = a′ as X(a) = x by Proposition 12, and be a valid candidate for E1 (E2). Then by Proposition 11, any event X(a) = x ∧ W(a) = yw, with W ⊂ Y, that is also a valid candidate for E1 (E2) will induce a better bound through Corollary 3 than .

D. Numerical Examples of Bound Width

In this section, we provide numerical examples of bounds in two models. These examples demonstrate that bounds tend to be most informative when the instrument and treatment are highly correlated. It is our hope that they will provide some intuition about when these bounds will be of use.

Consider the causal model described by the graph in Fig. 4. Suppose all variables are binary and we are interested in the probability P(Y(A1 = 1, A2 = 1) = 1). If we observe the following probabilities,

we can use Corollary 1 to obtain the bounds

Figure 4:

The Inclusive Frontdoor Model

These bounds are quite wide, and unlikely to be informative. Now suppose that we observe the following conditional probability

| (23) |

Noting that P(A1(a2) = a1, Y(a2) = y) is identified as , we can now use Corollary 2 to obtain the bounds

These bounds are much tighter, and exclude .5, which may be important in some cases. If instead we observe the conditional probability

| (24) |

then Corollary 2 yields the much less informative bounds

In this example A2 is used as a generalized instrument for A1, as discussed in Section 4. The tightness of the bounds therefore depends on the relationship between the two, as demonstrated by the differences in bounds under the conditional probabilities (23) and (24).

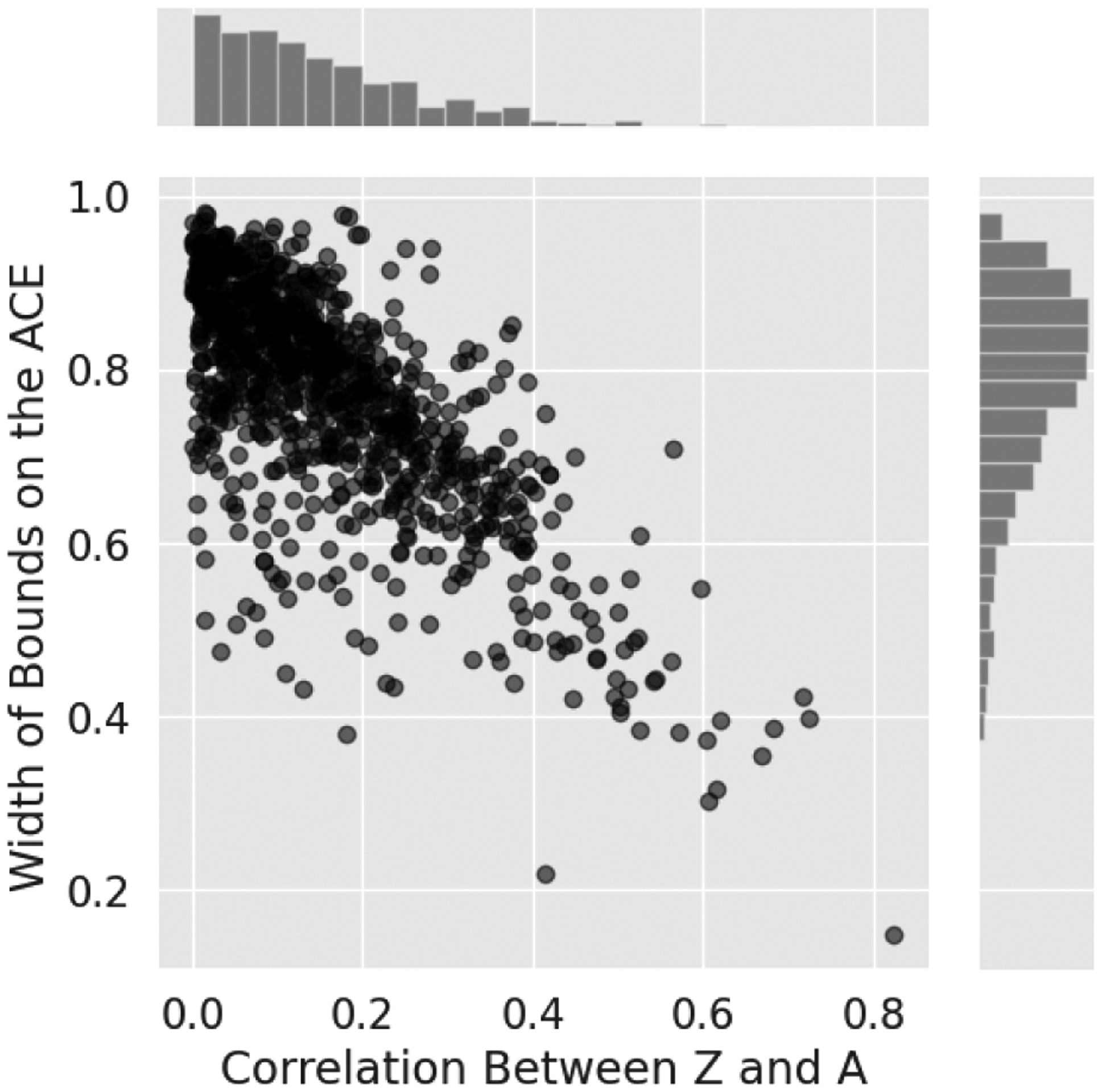

We now consider the IV Model with Covariates, depicted in Fig. 3 (a), and discussed in Section 6. To build an understanding of the utility of our bounds, we randomly generated distributions from the model. These distributions were generated by sampling the parameter for each observed binary random variable, conditional on each setting of its parents, from a symmetric Beta distribution, with parameters equal to 1. The unobserved variable U was assumed to have cardinality 16, to allow for every possible equivalence class [2], and its distribution was drawn from a symmetric Dirichlet distribution with parameters equal to 0.1.

We then calculated the correlation between A and Z, as well as bounds on the ACE, E[Y(A = 1) − Y (A = 0)], for each distribution. The results are presented in Fig. 5.

Figure 5:

Each point represents a randomly generated distribution in the IV model with covariates, depicted in Fig. 3 (a). This plot shows informally that correlation between the generalized instrument Z and the treatment A is associated with tighter bounds on the ACE, E[Y(A = 1) − Y (A = 0)]. The histograms show the marginal distributions of correlation and bound width.

We observe that, as expected, greater correlation between the generalized instrument and the treatment is associated with tighter bounds. This pattern persisted across simulations for additional models, and across various approaches to sampling distributions from the model.

The marginal distributions of correlation and bound width, shown as histograms in Fig. 5 seem to be sensitive to the approach used to sample distributions from the model. For distributions sampled as described above, and used to generate Fig. 5, the mean width of bounds on the ACE was 0.77, with a standard deviation of 0.12. In 4% of the distributions, the bounds excluded 0. We find that these values are also sensitive to changes in how distributions were sampled.

E. Bounds on in the IV Model With Covariates

The remainder of this section is a LaTeX friendly printout of the steps taken by our implementation of the algorithm described in this work when applied to bounding in the IV model with covariates, as described in Section 6.

To begin, we partition as described in Proposition 5:

As before, no lower bound is provided for the first event in the partition, and the second has an identified density. We now consider the last event in the partition .

The following events imply and can therefore be used as E1 events in Corollary 3:

Likewise, the following events imply and can therefore be used as E2 events in Corollary 3:

By Proposition 10, , which implies , and therefore would be a candidate for use as an E1 event, is redundant.

We now iterate through each potential event for E1 and E2, examining the resulting bound.

The event is compatible with . Therefore to compute the bound induced by using it as an E1 event, we must subtract from its density the portion of this compatible event that does not entail the negation of . This portion is , yielding the bound .

The event is compatible with . Therefore to compute the bound induced by using it as an E1 event, we must subtract from its density the portion of this compatible event that does not entail the negation of . This portion is y(z), yielding the bound .

The event is compatible with . Therefore to compute the bound induced by using it as an E1 event, we must subtract from its density the portion of this compatible event that does not entail the negation of . This portion is , yielding the bound .

The event is compatible with . Therefore to compute the bound induced by using it as an E1 event, we must subtract from its density the portion of this compatible event that does not entail the negation of . This portion is , yielding the bound .

The event is compatible with . Therefore to compute the bound induced by using it as an E1 event, we must subtract from its density the portion of this compatible event that does not entail the negation of . This portion is , yielding the bound .

The event is compatible with . Therefore to compute the bound induced by using it as an E1 event, we must subtract from its density the portion of this compatible event that does not entail the negation of . This portion is , yielding the bound .

The event is compatible with . Therefore to compute the bound induced by using it as an E1 event, we must subtract from its density the portion of this compatible event that does not entail the negation of . This portion is , yielding the bound .

The event is compatible with . Therefore to compute the bound induced by using it as an E1 event, we must subtract from its density the portion of this compatible event that does not entail the negation of . This portion is , yielding the bound .

The event is compatible with . Therefore to compute the bound induced by using it as an E2 event, we must subtract from its density the portion of this compatible event that does not entail the negation of . This portion is , yielding the bound .

The event is compatible with . Therefore to compute the bound induced by using it as an E2 event, we must subtract from its density the portion of this compatible event that does not entail the negation of . This portion is , yielding the bound .

The event is compatible with . Therefore to compute the bound induced by using it as an E2 event, we must subtract from its density the portion of this compatible event that does not entail the negation of . This portion is , yielding the bound .

This concludes the derivation of the bounds presented for the IV model with covariates in Section 6.

Footnotes

Contributor Information

Noam Finkelstein, Department of Computer Science, Johns Hopkins University, Baltimore, MD.

Ilya Shpitser, Department Computer Science, Johns Hopkins University, Baltimore, MD.

References

- [1].Balke Alexander and Pearl Judea. Bounds on treatment effects from studies with imperfect compliance. Journal of the American Statistical Association, 92(439):1171–1176, 1997. [Google Scholar]

- [2].Balke Alexander Abraham. Probabilistic Counterfactuals: Semantics, Computation, and Applications. PhD thesis, USA, 1996. [Google Scholar]

- [3].Bonet Blai. Instrumentality tests revisited. In Proceedings of the Seventeenth Conference on Uncertainty and Artificial Intelligence, pages 48–55, 2001. [Google Scholar]

- [4].Evans Robin J.. Graphical methods for inequality constraints in marginalized dags. In Proceedings of the 2012 IEEE International Workshop on Machine Learning for Signal Processing, 2012. [Google Scholar]

- [5].Frangakis Constantine and Rubin Donald B.. Principal stratification in causal inference. Biometrics, 58(1):21–29, 2002. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [6].Huang Yimin and Valtorta Marco. Pearl’s calculus of interventions is complete. In Twenty Second Conference On Uncertainty in Artificial Intelligence, 2006. [Google Scholar]

- [7].Kang Changsung and Tian Jin. Inequality constraints in causal models with hidden variables. In Proceedings of the Twenty Second Conference on Uncertainty in Artificial Intelligence, pages 233–240. AUAI Press, 2006. [Google Scholar]

- [8].Malinsky Daniel, Shpitser Ilya, and Richardson Thomas. A potential outcomes calculus for identifying conditional path-specific effects. Proceedings of machine learning research, 89:3080, 2019. [PMC free article] [PubMed] [Google Scholar]

- [9].Manski CF. Nonparametric bounds on treatment effects. The American Economic Review, 80:319–323, 1990. [Google Scholar]

- [10].Neyman Jerzy. Sur les applications de la thar des probabilities aux experiences agaricales: Essay des principle. excerpts reprinted (1990) in English. Statistical Science, 5:463–472, 1923. [Google Scholar]

- [11].Pearl Judea. Causal inference from indirect experiments. Artificial intelligence in medicine, 7(6):561–582, 1995. [DOI] [PubMed] [Google Scholar]

- [12].Pearl Judea. Causality: Models, Reasoning, and Inference. Cambridge University Press, 2 edition, 2009. [Google Scholar]

- [13].Ramsahai Roland R.. Causal bounds and observable constraints for non-deterministic models. Journal of Machine Learning Research, 2012. [Google Scholar]

- [14].Richardson Thomas S., Evans Robin J., Robins James M., and Shpitser Ilya. Nested Markov properties for acyclic directed mixed graphs, 2017. Working paper. [Google Scholar]

- [15].Richardson Thomas S. and Robins Jamie M.. Single world intervention graphs (SWIGs): A unification of the counterfactual and graphical approaches to causality. preprint: http://www.csss.washington.edu/Papers/wp128.pdf, 2013. [Google Scholar]

- [16].Robins James M.. A new approach to causal inference in mortality studies with sustained exposure periods – application to control of the healthy worker survivor effect. Mathematical Modeling, 7:1393–1512, 1986. [Google Scholar]

- [17].Robins James M.. The analysis of randomized and non-randomized aids treatment trials using a new approach to causal inference in longitudinal studies. In Sechrest L, Freeman H, and Mulley A, editors, Health Service Research Methodology: A Focus on AIDS, pages 113–159. NCHSR, U.S. Public Health Service, 1989. [Google Scholar]

- [18].Shpitser Ilya and Pearl Judea. Identification of joint interventional distributions in recursive semi-Markovian causal models. In Proceedings of the Twenty-First National Conference on Artificial Intelligence (AAAI-06). AAAI Press, Palo Alto, 2006. [Google Scholar]

- [19].Spirtes Peter, Glymour Clark, and Scheines Richard. Causation, Prediction, and Search. Springer Verlag, New York, 2 edition, 2001. [Google Scholar]

- [20].Tian Jin and Pearl Judea. On the testable implications of causal models with hidden variables. In Proceedings of the Eighteenth Conference on Uncertainty in Artificial Intelligence (UAI-02), volume 18, pages 519–527. AUAI Press, Corvallis, Oregon, 2002. [Google Scholar]

- [21].Verma Thomas S. and Pearl Judea. Equivalence and synthesis of causal models. Technical Report R-150, Department of Computer Science, University of California, Los Angeles, 1990. [Google Scholar]

- [22].Wolfe Elie, Spekkens Robert AW., and Fritz Tobias. The inflation technique for causal inference with latent variables. https://arxiv.org/abs/1609.00672, 2016.