Abstract

Histopathological images contain rich phenotypic information that can be used to monitor underlying mechanisms contributing to disease progression and patient survival outcomes. Recently, deep learning has become the mainstream methodological choice for analyzing and interpreting histology images. In this paper, we present a comprehensive review of state-of-the-art deep learning approaches that have been used in the context of histopathological image analysis. From a survey of over 130 papers, we review the field's progress from the methodological perspective of different machine learning strategies, such as supervised, weakly supervised, unsupervised and transfer learning, and various sub-variants of these methods. We also provide an overview of deep learning based survival models that are applicable to disease-specific prognosis tasks. Finally, we summarize several existing open datasets and highlight critical challenges and limitations of current deep learning approaches, along with possible avenues for future research.

Keywords: Deep Learning, Convolutional Neural Networks, Computational Histopathology, Digital Pathology, Histology Image Analysis, Survey, Review

1. Introduction

The examination and interpretation of tissue sections stained with haematoxylin and eosin (H&E) by anatomic pathologists is an essential component in the assessment of disease. In addition to providing diagnostic information, the phenotypic information contained in histology slides can be used for prognosis. Features such as nuclear atypia, degree of gland formation, presence of mitosis and inflammation can all be indicative of how aggressive a tumour is, and may also allow predictions to be made about the likelihood of recurrence after surgery. Over the last 50 years, several scoring systems have been proposed that allow pathologists to grade tumours based on their appearance, for example, the Gleason score for prostate cancer (Epstein et al., 2005) and the Nottingham score for breast cancer (Rakha et al., 2008). These systems provide important information to guide decisions about treatment and are valuable in assessing heterogeneous disease. There is, however, considerable inter-pathologist variability, and some systems that require quantitative analysis, for example the residual cancer burden index (Symmans et al., 2007), are too time-consuming to use in a routine clinical setting.

The first efforts to extract quantitative measures from microscopy images were in cytology. Prewitt and Mendelsohn (1966) laid out the steps required for the “effective and efficient discrimination and interpretation of images”, describing the basic paradigm of object detection, feature extraction and finally the training of a classification function that is still in use more than 50 years later. Early work in cytology and histopathology was usually limited to the analysis of the small fields of view that could be captured using conventional microscopy, and image acquisition was a time-consuming process (Mukhopadhyay et al., 2018). The introduction of whole slide scanners in the 1990s made it much easier to produce digitized images of whole tissue slides at microscopic resolution, and this led to renewed interest in the application of image analysis and machine learning techniques to histopathology. Many of the algorithms developed originally for computer-aided diagnosis in radiology have been successfully adapted for use in digital pathology, and Gurcan et al. (2009) and Madabhushi and Lee (2016) provide comprehensive reviews of work carried out prior to the widespread adoption of deep learning methods.

In 2011, Beck et al. (2011) demonstrated that features extracted from histology images could aid in the discovery of new biological aspects of cancer tissue, and Yuan et al. (2012) showed that features extracted from digital pathology images are complementary to genomic data. These advances have led to growing interest in the use of biomarkers extracted from digital pathology images for precision medicine (Bera et al., 2019), particularly in oncology. Also in 2012, Krizhevsky et al. (2012) showed that convolutional neural networks (CNNs) could outperform previous machine learning approaches by classifying the 1.2 million high-resolution images of the ImageNet LSVRC-2010 contest into 1000 different classes. At the same time, Cireşan et al. (2012) showed that CNNs could outperform competing methods in segmenting neuronal membranes in electron microscopy images, and in detecting mitotic cells in histopathology images (Cireşan et al., 2013). Since then, deep learning (DL) methods based on CNNs have consistently outperformed methods based on handcrafted features in a variety of tasks in digital pathology. The ability of CNNs to learn features directly from raw data, without the need for specialist input from pathologists, together with the availability of annotated histopathology datasets, has fuelled the explosion of interest in deep learning applied to histopathology.

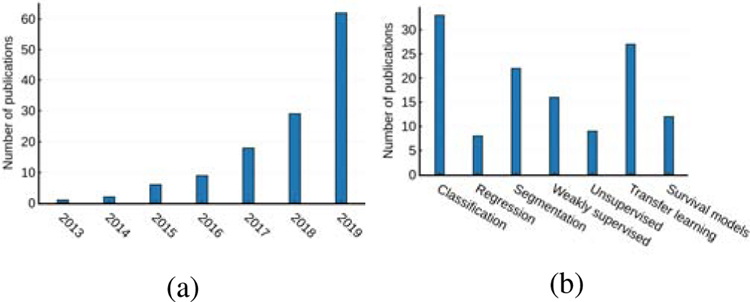

The analysis of whole-slide digital pathology images (WSIs) poses some unique challenges. The images are very large and have to be broken down into hundreds or thousands of smaller tiles before they can be processed. Both the context at low magnification and the detail at high magnification may be important for a task, so information from multiple scales needs to be integrated. In the case of survival prediction, salient regions of the image are not known a priori and we may only have weak slide-level labels. The variability within each disease subtype can be high, and annotation usually requires a highly trained pathologist. For cell-based methods, many thousands of objects need to be detected and characterized. These challenges have made it necessary to adapt existing deep learning architectures and to design novel approaches specific to the digital pathology domain. In this work, we surveyed more than 130 papers in which deep learning has been applied to a wide variety of detection, diagnosis, prediction and prognosis tasks. We carried out this review by searching Google Scholar, PubMed and arXiv for papers containing keywords such as (“convolutional” or “deep learning”) and (“digital pathology” or “histopathology” or “computational pathology”). We also included conference proceedings from MICCAI, ISBI, MIDL, SPIE and EMBC, selected on the basis of their titles and abstracts, and iterated over the selected papers to include any cross-referenced works missed by our initial search. The body of research in this area is growing rapidly, and this survey covers the period up to and including December 2019. Descriptive statistics of the published papers by category and year are shown in Fig. 1.

Fig. 1:

(a) An overview of the number of papers published from January 2013 to December 2019 in deep learning based computational histopathology surveyed in this paper. (b) A categorical breakdown of the number of papers published under each learning schema.

The remainder of this paper is organised as follows. Section 2 presents an overview of the learning schemes used in the DL literature in the context of computational histopathology. Section 3 discusses in detail the different categories of DL schemes commonly used in this field, which we categorize into supervised (Section 3.1), weakly supervised (Section 3.2), unsupervised (Section 3.3) and transfer learning (Section 3.4). Section 4 discusses survival models for disease prognosis tasks. In Section 5, we discuss various open challenges, including prospective applications and future trends in computational pathology, and finally, conclusions are presented in Section 6.

2. Overview of learning schemas

In this section, we provide a formal introduction to various learning schemes in the context of DL applied to computational pathology. These learning schemes are illustrated with an example of classifying a histology WSI as cancerous or normal. Based on these formulations, various DL models have been proposed in the literature, traditionally based on convolutional neural networks (CNNs), recurrent neural networks (RNNs), generative adversarial networks (GANs), autoencoders (AEs) and their variants. For a detailed background on DL fundamentals and existing architectures, we refer readers to LeCun et al. (2015) and Goodfellow et al. (2016), and for applications of DL in medical image analysis to Litjens et al. (2017), Shen et al. (2017) and Yi et al. (2019).

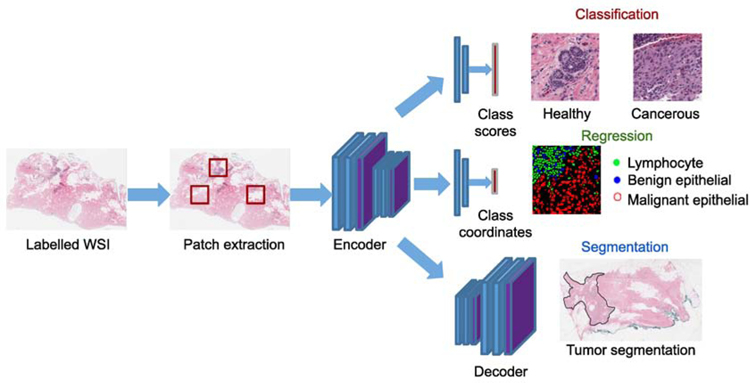

In supervised learning, we have a set of $N$ training examples $\{(x_i, y_i)\}_{i=1}^{N}$, where each sample $x_i \in \mathbb{R}^{H \times W \times Ch}$ is an input image (a WSI of dimension $H \times W$ pixels with $Ch$ channels; for example, $Ch = 3$ for an RGB image) associated with a label $y_i \in \mathcal{Y}$. For classification with $C$ possible classes, $\mathcal{Y} = \{0, 1, \dots, C-1\}$, so that binary classification uses $\mathcal{Y} = \{0, 1\}$; for a regression task, $\mathcal{Y} = \mathbb{R}$. The goal is to train a model $f_\theta : x \rightarrow y$ that best predicts the label of an unseen test image by minimising a loss function $\mathcal{L}(f_\theta(x_i), y_i)$ over the training set. For instance, the $x_i$ are patches extracted from WSIs and the $y_i$ are labels annotated by a pathologist as either cancerous or normal. At inference time, the model predicts the label of a patch from a previously unseen test set. This scheme is detailed in Section 3.1, with an example illustrated in Fig. 3.
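To make the supervised formulation concrete, the following is a minimal sketch that trains a binary patch classifier $f_\theta$ by gradient descent on the binary cross-entropy loss. It assumes each patch has already been flattened into a feature vector, and a logistic-regression model stands in for a CNN purely for illustration; the synthetic data and all function names are hypothetical.

```python
import numpy as np

def sigmoid(z):
    # Clip the logit to avoid overflow in np.exp for very confident predictions
    return 1.0 / (1.0 + np.exp(-np.clip(z, -30.0, 30.0)))

def train_patch_classifier(X, y, lr=0.1, epochs=500):
    """Fit f_theta(x) = sigmoid(w . x + b) by gradient descent on the mean
    binary cross-entropy loss L(f_theta(x_i), y_i).

    X: (N, D) array, one flattened patch (or patch feature vector) per row.
    y: (N,) array of labels in {0, 1} (0 = normal, 1 = cancerous).
    """
    n, d = X.shape
    w, b = np.zeros(d), 0.0
    for _ in range(epochs):
        p = sigmoid(X @ w + b)       # predicted P(y = 1 | x)
        g = p - y                    # gradient of the BCE loss w.r.t. the logit
        w -= lr * (X.T @ g) / n
        b -= lr * g.mean()
    return w, b

def predict(X, w, b, threshold=0.5):
    """Label previously unseen test patches as cancerous (1) or normal (0)."""
    return (sigmoid(X @ w + b) >= threshold).astype(int)

# Usage on synthetic "patches": two well-separated Gaussian clusters in 5-D
rng = np.random.default_rng(0)
X_train = np.vstack([rng.normal(-2, 1, (50, 5)), rng.normal(2, 1, (50, 5))])
y_train = np.array([0] * 50 + [1] * 50)
w, b = train_patch_classifier(X_train, y_train)
accuracy = (predict(X_train, w, b) == y_train).mean()
```

Replacing the linear model with a CNN changes $f_\theta$ but not the structure of the loop: predict, compute the loss gradient, update $\theta$.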

Fig. 3:

An overview of supervised learning models.

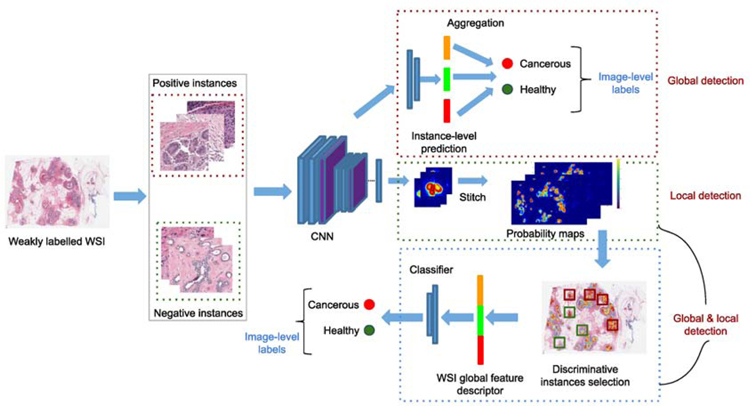

In weakly supervised learning (WSL), the goal is to train a model $f_\theta$ using readily available coarse-grained (image-level) annotations $C_i$ to automatically infer fine-grained (pixel- or patch-level) labels $c_i$. In histopathology, a pathologist may label a WSI as cancerous if even a small part of the image contains a cancerous region, without indicating its exact location. Such image-level annotations (often called “weak labels”) are much easier to obtain in practice than the expensive pixel-wise labels required by supervised methods. An illustrative example of the WSL scheme is shown in Fig. 4, and this scheme is covered in depth in Section 3.2.
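One common way to relate patch-level predictions to a slide-level weak label is the multiple-instance learning (MIL) assumption: a slide is positive if at least one of its patches is positive. The sketch below implements max-pooling aggregation under that assumption, given per-patch probabilities from some patch classifier. Max pooling is only one of several possible aggregation functions and is not presented as the method of any specific paper cited here; the function names are hypothetical.

```python
import numpy as np

def slide_prediction(patch_probs, threshold=0.5):
    """MIL max-pooling: the slide score is the highest patch score.

    patch_probs: 1-D sequence of per-patch cancer probabilities for one WSI.
    Returns (slide_prob, slide_label, index of the most suspicious patch).
    """
    probs = np.asarray(patch_probs, dtype=float)
    top = int(np.argmax(probs))        # candidate location of the cancerous region
    slide_prob = float(probs[top])     # max-pooling over instances (patches)
    return slide_prob, int(slide_prob >= threshold), top

def max_pooling_mil_loss(patch_probs, slide_label, eps=1e-7):
    """Binary cross-entropy between the weak slide label and the max-pooled score."""
    p = float(np.clip(np.max(patch_probs), eps, 1 - eps))
    return -(slide_label * np.log(p) + (1 - slide_label) * np.log(1 - p))
```

For example, `slide_prediction([0.1, 0.2, 0.9, 0.3])` labels the slide as cancerous and points to patch 2, even though only the slide-level label would have been available during training.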

Fig. 4:

An overview of weakly supervised learning models.

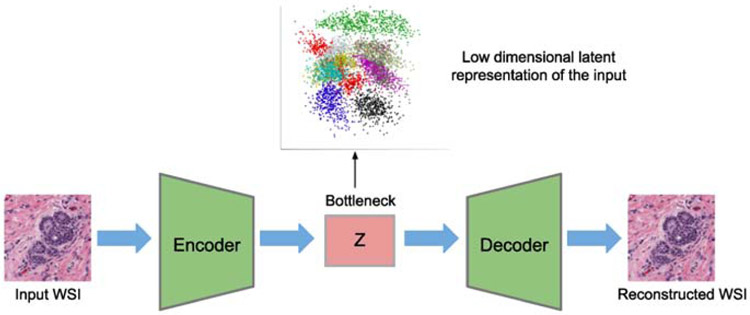

Unsupervised learning aims to identify patterns in images without mapping an input sample to a predefined set of outputs (i.e., labels). This category includes fully unsupervised methods, where the raw data comes in the form of images without any expert-annotated labels. A common technique in unsupervised learning is to transform the input data into a lower-dimensional subspace and then group these lower-dimensional representations (i.e., latent vectors) into mutually exclusive or hierarchical groups using a clustering technique. An example of the unsupervised learning scheme is illustrated in Fig. 5, and existing methods are reviewed in Section 3.3.
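The "reduce, then cluster" recipe can be sketched as follows, assuming images have already been converted into feature vectors. Principal component analysis (PCA) stands in here for a learned encoder (for example the bottleneck of an autoencoder), and plain k-means with a deterministic farthest-point initialisation stands in for the clustering step; both choices are illustrative assumptions rather than a specific published method.

```python
import numpy as np

def pca_project(X, k):
    """Project the rows of X onto their top-k principal components (latent vectors)."""
    Xc = X - X.mean(axis=0)
    # Rows of Vt are principal directions, ordered by decreasing singular value
    _, _, Vt = np.linalg.svd(Xc, full_matrices=False)
    return Xc @ Vt[:k].T

def kmeans(Z, n_clusters, n_iter=100):
    """Lloyd's k-means on latent vectors Z; returns (labels, centroids)."""
    # Farthest-point initialisation: start from Z[0], then repeatedly add the
    # point farthest from all centroids chosen so far
    centroids = [Z[0]]
    for _ in range(1, n_clusters):
        d = np.min([np.linalg.norm(Z - c, axis=1) for c in centroids], axis=0)
        centroids.append(Z[np.argmax(d)])
    centroids = np.array(centroids)
    labels = np.zeros(len(Z), dtype=int)
    for _ in range(n_iter):
        # Assign every latent vector to its nearest centroid
        dists = np.linalg.norm(Z[:, None, :] - centroids[None, :, :], axis=2)
        labels = dists.argmin(axis=1)
        # Recompute centroids; keep the old one if a cluster becomes empty
        new_centroids = np.array([
            Z[labels == c].mean(axis=0) if np.any(labels == c) else centroids[c]
            for c in range(n_clusters)
        ])
        if np.allclose(new_centroids, centroids):
            break
        centroids = new_centroids
    return labels, centroids

# Usage: two synthetic groups of 10-D "image features", reduced to 2-D and clustered
rng = np.random.default_rng(0)
X = np.vstack([rng.normal(0, 1, (20, 10)), rng.normal(5, 1, (20, 10))])
Z = pca_project(X, 2)
labels, centroids = kmeans(Z, 2)
```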

Fig. 5:

An overview of unsupervised learning models.

In transfer learning (TL), the goal is to transfer knowledge from one domain (the source) to another (the target), relaxing the assumption that the training and test sets must be independent and identically distributed. Formally, a domain $\mathcal{D} = \{\mathcal{X}, P(X)\}$ is defined by a feature space $\mathcal{X}$ and a marginal probability distribution $P(X)$, where $X = \{x_1, \dots, x_n\} \in \mathcal{X}$, and a task $\mathcal{T} = \{\mathcal{Y}, f(\cdot)\}$ consists of a label space $\mathcal{Y}$ and a prediction function $f(\cdot)$. Given a source domain $\mathcal{D}_S$ with source task $\mathcal{T}_S$ and a target domain $\mathcal{D}_T$ with target task $\mathcal{T}_T$, the aim of transfer learning is to improve the target predictive function $f_T(\cdot)$ in $\mathcal{D}_T$ using the knowledge in $\mathcal{D}_S$ and $\mathcal{T}_S$. For example, in histology, this scenario can occur when a classifier is trained on a source task $\mathcal{T}_S$ and then fine-tuned on a target task $\mathcal{T}_T$ with limited or no annotations. This scheme is explained in detail in Section 3.4, and domain adaptation, a sub-field of transfer learning, is discussed in Section 3.4.1.

Next, we discuss the deep neural network (DNN) models published in the histopathology domain under each of these learning schemes, along with existing challenges and gaps in current research and possible future directions.

3. Methodological approaches

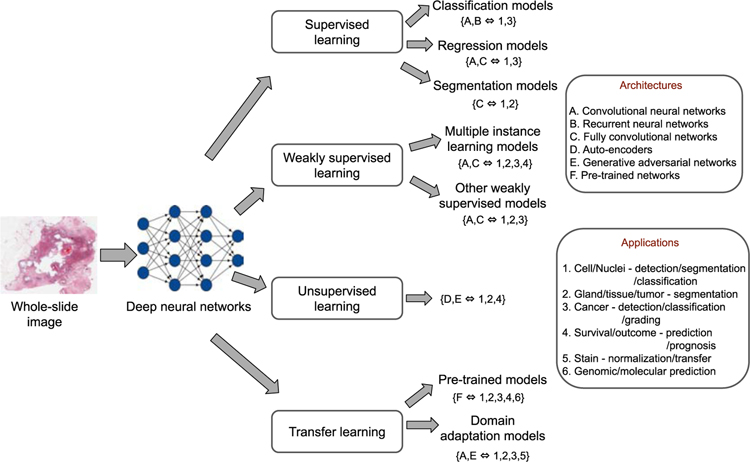

The aim of this section is to provide a general reference guide to various deep learning models applied in computational histopathology from a methodological perspective. The DL models discussed in the following sections were originally developed for specific applications, but are applicable to a wide variety of histopathological tasks (Fig. 2). Based on the learning schemes, the following sections are divided into supervised, weakly supervised, unsupervised and transfer learning approaches. The details are presented next.

Fig. 2:

An overview of deep neural network models in computational histopathology. These models have been constructed using various deep learning architectures (shown in alphabetical order) and applied to various histopathological image analysis tasks (depicted in numerical order).

3.1. Supervised learning

Among the supervised learning techniques, we identify three major canonical deep learning models based on the nature of the tasks solved in digital histopathology: classification, regression and segmentation based models, as illustrated in Fig. 3. The first category contains pixel-wise/sliding-window classification approaches, which are traditionally formulated as object detection (Girshick, 2015) or image classification tasks (He et al., 2016) in the computer vision literature. The second category focuses on predicting the position of objects (e.g., cells or nuclei (Sirinukunwattana et al., 2016)) or sometimes a cancer severity score (e.g., the H-score of breast cancer images (Liu et al., 2019)) by enforcing topological/spatial constraints in DNN models. Finally, the last category comprises fully convolutional network (FCN) based approaches (Long et al., 2015; Ronneberger et al., 2015), which are widely adopted to solve semantic or instance segmentation problems in computer vision and medical imaging. An overview of the supervised learning papers is given in Table 1.
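To illustrate how regression models predict object positions, the sketch below builds a proximity-map regression target that peaks at annotated nucleus centres and recovers detections as local maxima of a (predicted) map. It is written in the spirit of the spatially constrained regression of Sirinukunwattana et al. (2016); the decay function $1/(1 + D^2/2)$, the cut-off radius, the threshold and the function names are assumptions for illustration, not a reproduction of any cited model.

```python
import numpy as np

def proximity_map(shape, centres, radius):
    """Regression target: 1 / (1 + D^2 / 2), where D is the distance from each
    pixel to its nearest annotated centre, and 0 wherever D > radius.

    shape: (H, W); centres: list of (row, col) nucleus annotations.
    """
    H, W = shape
    if len(centres) == 0:
        return np.zeros((H, W))
    rows, cols = np.mgrid[0:H, 0:W]
    # Squared distance to every centre, keeping the nearest one per pixel
    d2 = np.min([(rows - r) ** 2 + (cols - c) ** 2 for r, c in centres], axis=0)
    target = 1.0 / (1.0 + d2 / 2.0)
    target[d2 > radius ** 2] = 0.0
    return target

def local_maxima(pred, threshold=0.5):
    """Detections: pixels above `threshold` that are >= all 8 neighbours."""
    H, W = pred.shape
    padded = np.pad(pred, 1, mode="constant", constant_values=-np.inf)
    neighbours = np.stack([padded[1 + dr:1 + dr + H, 1 + dc:1 + dc + W]
                           for dr in (-1, 0, 1) for dc in (-1, 0, 1)
                           if (dr, dc) != (0, 0)])
    peaks = (pred > threshold) & (pred >= neighbours.max(axis=0))
    return [(int(r), int(c)) for r, c in zip(*np.nonzero(peaks))]

# Usage: two annotated nuclei in a 9 x 9 patch
m = proximity_map((9, 9), [(2, 2), (6, 6)], radius=3)
detections = local_maxima(m)
```

A network would be trained to regress such maps from image patches; at test time, `local_maxima` is applied to its prediction rather than to the target.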

Table 1:

Overview of supervised learning models. Acronyms: H&E (haematoxylin and eosin); DAB-H (Diaminobenzidine-Hematoxylin); IFL (Immunofluorescent); ER (Estrogen receptor); PR (Progesterone receptor); PC (Phase contrast); HPF (High power field); Pap (Papanicolaou stain); PHH3 (Phosphohistone-H3); IHC (Immunohistochemistry staining); PAS (Periodic acid–Schiff). Note: (✓) indicates that the code is publicly available and the link is provided in the respective paper.

| Reference | Cancer types | Staining | Application | Method | Dataset |
|---|---|---|---|---|---|
| Classification models | | | | | |
| A. Local-level task | | | | | |
| Cireşan et al. (2013) | Breast | H&E | Mitosis detection | Pixel based CNN classifier | ICPR2012 (50 images) |
| Wang et al. (2014) | Breast | H&E | Mitosis detection | Cascaded ensemble of CNN + hand-crafted features | ICPR2012 (50 images) |
| Song et al. (2015) | Cervix | H&E | Segmentation of cervical cytoplasm and nuclei | Multi-scale CNN + graph-partitioning approach | Private set containing 53 cervical cancer images |
| Kashif et al. (2016) | Colon | H&E | Cell detection | Spatially constrained CNN + handcrafted features | 15 colorectal cancer tissue images |
| Xing et al. (2016) | Multi-Cancers | H&E, IHC | Nuclei segmentation | CNN + selection-based sparse shape model | Private set containing brain tumour (31), pancreatic NET (22), breast cancer (35) images |
| Romo-Bucheli et al. (2016) | Breast | H&E | Tubule nuclei detection and classification | CNN based classification of pre-detected candidate nuclei | 174 images with ER(+) breast cancer cases |
| Wang et al. (2016b) | Lung | H&E | Cell detection | Two shared-weight CNNs for joint cell detection and classification | TCGA (300 images) |
| Albarqouni et al. (2016) | Breast | H&E | Mitosis detection | Multi-scale CNN via crowdsourcing layer | AMIDA2013 (666 HPF images) |
| Song et al. (2017) | Cervix | Pap, H&E | Segmentation of cervical cells | Multi-scale CNN model | Overlapping cervical cytology image segmentation challenge (ISBI 2015) - 8 images, private set - 21 images |
| Gao et al. (2017) | Multi-Cancers | IFL | Cell classification | CNN (LeNet-5) based classification of HEp2-cells | ICPR2012 (28 images), ICPR2014 (83 images) |
| Rao (2018) | Breast | H&E | Mitosis detection | Faster RCNN based multi-scale region proposal model | ICPR2012 (50 images), AMIDA2013 (23 images), ICPR2014 (2112 images) |
| Tellez et al. (2018) | Breast | H&E, PHH3 | Mitosis detection | Ensemble of CNNs using H&E registered to PHH3 tissue slides as reference standard | TNBC (36 images), TUPAC (814 images) |
| Qaiser et al. (2019b) | Colon | H&E | Tumour segmentation | Combination of CNN and persistent homology feature based patch classifier | Two private sets containing 75 and 50 colorectal adenocarcinoma WSIs |
| B. Global-level task | | | | | |
| Cruz-Roa et al. (2014) | Breast | H&E | Detection of invasive ductal carcinoma | CNN based patch classifier | Private set - 162 cases |
| Ertosun and Rubin (2015) | Brain | H&E | Glioma grading | Ensemble of CNN models | TCGA (54 WSIs) |
| Litjens et al. (2016) | Multi-Cancers | H&E | Detection of prostate and breast cancer | CNN based pixel classifier | Two private sets (225 + 173 WSIs) |
| Bejnordi et al. (2017) | Breast | H&E | Breast cancer classification | Stacked CNN incorporating contextual information | Private set - 221 images |
| Agarwalla et al. (2017) | Breast | H&E | Tumour segmentation | CNN + 2D-LSTM for representation learning and context aggregation | Camelyon16 (400 WSIs) |
| Kong et al. (2017) | Breast | H&E | Detection of breast cancer metastases | CNN with 2D-LSTM to learn spatial dependencies between neighboring patches | Camelyon16 (400 WSIs) |
| Vandenberghe et al. (2017) | Breast | IHC | IHC scoring of HER2 status in breast cancer | CNN based patch classifier | 71 WSIs of invasive breast carcinoma (private set) |
| Cruz-Roa et al. (2017) | Breast | H&E | Detection of invasive breast cancer | CNN based patch classifier | TCGA + four other private sets (584 cases) |
| Sharma et al. (2017) | Stomach | H&E, IHC | Gastric cancer classification and necrosis detection | Patch-based CNN classifier | Private set - 454 WSIs |
| BenTaieb and Hamarneh (2018) (✓) | Breast | H&E | Detection of breast cancer metastases | CNN based recurrent visual attention model | Camelyon16 (400 WSIs) |
| Awan et al. (2018) | Breast | H&E | Breast cancer classification | CNN based patch classification model incorporating contextual information | BACH 2018 challenge (400 WSIs) |
| Li and Ping (2018) (✓) | Breast | H&E | Detection of breast cancer metastases | CNN + CRF to model spatial correlations between neighboring patches | Camelyon16 (400 WSIs) |
| Bejnordi et al. (2018) | Breast | H&E | Detection of invasive breast cancer | Multi-stage CNN that first identifies tumour-associated stromal alterations and then classifies tissue as normal/benign vs invasive breast cancer | Private set - 2387 WSIs |
| Cruz-Roa et al. (2018) | Breast | H&E | Detection of invasive breast cancer | Patch based CNN model with adaptive sampling method to focus only on high uncertainty regions | TCGA + 3 other public datasets (596 cases) |
| Qaiser and Rajpoot (2019) | Breast | IHC | Immunohistochemical scoring of HER2 | Deep reinforcement learning model that treats IHC scoring as a sequential learning task using CNN + RNN | HER2 scoring contest (172 images), private set - 82 gastroenteropancreatic NET images |
| Wei et al. (2019) (✓) | Lung | H&E | Classification of histologic subtypes of lung adenocarcinoma | ResNet-18 based patch classifier | Private set - 143 WSIs |
| Nagpal et al. (2019) | Prostate | H&E | Predicting Gleason score | CNN based regional Gleason pattern classification + k-nearest-neighbor based Gleason grade prediction | TCGA (397 cases) + two private sets (361 + 11 cases) |
| Shaban et al. (2019a) (✓) | Mouth | H&E | Tumour-infiltrating lymphocyte abundance score prediction for disease-free survival | CNN (MobileNet) based patch classifier, followed by statistical analysis | 70 cases of oral squamous cell carcinoma WSIs (private set) |
| Halicek et al. (2019) | Head & Neck | H&E | Detection of squamous cell carcinoma and thyroid carcinoma | CNN (Inception-v4) based patch classifier | Private set - 381 images |
| Xu et al. (2019) | Breast | H&E | Detection of breast cancer | Deep hybrid attention (CNN + LSTM) network | BreakHis (7,909 images) |
| Zhang et al. (2019) (✓) | Bladder | H&E | Bladder cancer diagnosis | CNN + RNN to generate clinical diagnostic descriptions and network visual attention maps | 913 images of urothelial carcinoma from TCGA and private set |
| Regression models | | | | | |
| Xie et al. (2015a) | Multi-Cancers | Ki-67 | Nuclei detection | CNN based Hough voting approach | Neuroendocrine tumour set (private - 44 images) |
| Xie et al. (2015b) | Multi-Cancers | H&E, Ki-67 | Cell detection | CNN based structured regression model | TCGA (Breast-32 images), HeLa cervical cancer (22 images), Neuroendocrine tumour images (60 images) |
| Chen et al. (2016a) | Breast | H&E | Mitosis detection | FCN based deep regression network | ICPR2012 (50 images) |
| Sirinukunwattana et al. (2016) | Colon | H&E | Nuclei detection and classification | CNN with spatially constrained regression | CRCHisto (100 images) |
| Naylor et al. (2018) (✓) | Multi-Cancers | H&E | Nuclei segmentation | CNN based regression model for touching nuclei segmentation | TNBC (50 images), MoNuSeg (30 images) |
| Xie et al. (2018b) | Multi-Cancers | H&E, Ki-67 | Cell detection | Structured regression model based on fully residual CNN | TCGA (Breast-70 image patches), Bone marrow (11 image patches), HeLa cervical cancer (22 images), Neuroendocrine tumour set (59 image patches) |
| Graham et al. (2019b) (✓) | Multi-Cancers | H&E | Nuclei segmentation and classification | CNN based instance segmentation and classification framework | CoNSeP (41 images), MoNuSeg (30 images), TNBC (50 images), CRCHisto (100 images), CPM-15 (15 images), CPM-17 (32 images) |
| Xing et al. (2019) | Pancreas | Ki-67 | Nuclei detection and classification | FCN based structured regression model | Pancreatic neuroendocrine tumour set (private - 38 images) |
| Segmentation models | | | | | |
| BenTaieb and Hamarneh (2016) | Colon | H&E | Segmentation of colon glands | A loss function accounting for boundary smoothness and topological priors in FCN learning | GLAS challenge (165 images) |
| Chen et al. (2017a) | Multi-Cancers | H&E | Segmentation of glands and nuclei | Multi-task learning framework with contour-aware FCN model for instance segmentation | GLAS challenge (165 images), MICCAI 2015 nucleus segmentation challenge (33 images) |
| Xu et al. (2017) | Colon | H&E | Segmentation of colon glands | Multi-channel deep network model for gland segmentation and instance recognition | GLAS challenge (165 images) |
| de Bel et al. (2018) | Kidney | PAS | Segmentation of renal tissue structures | Evaluated three different architectures: FCN, Multi-scale FCN and UNet | 15 WSIs of renal allograft resections (private set) |
| Van Eycke et al. (2018) | Colon | H&E, IHC | Segmentation of glandular epithelium in H&E and IHC staining images | CNN model based on integration of DCAN, UNet and ResNet models | GLAS challenge (165 images) and a private set containing colorectal tissue microarray images |
| Gecer et al. (2018) | Breast | H&E | Detection and classification of breast cancer | Ensemble of multi-scale FCNs followed by CNN based patch classifier | 240 breast histopathology WSIs (private set) |
| Gu et al. (2018) | Breast | H&E | Detection of breast cancer metastasis | UNet based multi-resolution network with multi-encoder and single decoder model | Camelyon16 (400 images) |
| Guo et al. (2019) | Breast | H&E | Detection of breast cancer metastasis | Classification (Inception-V3) based semantic segmentation model (DCNN) | Camelyon16 (400 images) |
| Bulten et al. (2020) | Prostate | H&E | Grading of prostate cancer | UNet based segmentation of Gleason growth patterns, followed by subsequent cancer grading | 1243 WSIs of prostate biopsies (private set) |
| Lin et al. (2019) | Breast | H&E | Detection of breast cancer metastasis | FCN based model for fast inference of WSI analysis | Camelyon16 (400 WSIs) |
| Liu et al. (2019) | Breast | DAB-H | Immunohistochemical scoring for breast cancer | Multi-stage FCN framework that directly predicts H-Scores of breast cancer TMA images | 105 TMA images of breast adenocarcinomas (private set) |
| Bulten et al. (2019) | Prostate | IHC, H&E | Segmentation of epithelial tissue | Pre-trained UNet on IHC is used as a reference standard to segment epithelial structures in H&E WSIs | 102 prostatectomy WSIs |
| Swiderska-Chadaj et al. (2019) | Multi-Cancers | IHC | Lymphocyte detection | Investigated the effectiveness of four DL methods - FCN, UNet, YOLO and LSM | LYON19 (test set containing 441 regions of interest (ROIs)) |
| Graham et al. (2019a) | Colon | H&E | Segmentation of colon glands | FCN with minimum information loss units and atrous spatial pyramid pooling | GLAS challenge (165 images), CRAG dataset (213 images) |
| Ding et al. (2019) | Colon | H&E | Segmentation of colon glands | Multi-scale FCN model with a high-resolution branch to circumvent the loss in max-pooling layers | GLAS challenge (165 images), CRAG dataset (213 images) |
| Zhao et al. (2019) | Breast | H&E | Detection and classification of breast cancer metastasis | Feature pyramid aggregation based FCN network with synergistic learning approach | Camelyon16 (400 WSIs), Camelyon17 (1000 WSIs) |
| Qu et al. (2019) (✓) | Lung | H&E | Nuclei segmentation and classification | FCN trained with perceptual loss | 40 tissue images of lung adenocarcinoma (private set) |
| Ho et al. (2019) | Breast | H&E | Breast cancer multi-class tissue segmentation | Deep multi-magnification model with multi-encoder, multi-decoder and multi-concatenation network | Private set containing TNBC (38 images) and breast margin dataset (10 images) |
| Tokunaga et al. (2019) | Lung | H&E | Segmentation of multiple cancer subtype regions | Multiple UNets trained with different FOV images + an adaptive weighting CNN for output aggregation | 29 WSIs of lung adenocarcinoma (private set) |
| Lin et al. (2019) | Breast | H&E | Detection of breast cancer metastasis | FCN based model with anchor layers for fast and accurate prediction of cancer metastasis | Camelyon16 (400 images) |
| Pinckaers and Litjens (2019) (✓) | Colon | H&E | Segmentation of colon glands | Incorporating neural ordinary differential equations in UNet to allow an adaptive receptive field | GLAS challenge (165 images) |
| Seth et al. (2019) | Breast | H&E | Segmentation of DCIS | Compared UNets trained at multiple resolutions | Training: 183 WSIs, testing: 19 WSIs (private set) |

3.1.1. Classification models

This category of methods uses a sliding-window approach (i.e., a patch centred on a pixel of interest) to identify objects (such as cells, glands or nuclei) or to make image-level predictions (such as disease diagnosis and prognosis). Within this category, we further identify two sub-categories: (i) local-level tasks and (ii) global-level tasks. The former aims to identify or localize objects, with each region (e.g., a cell or nucleus) represented by spatially pooled feature representations or scores, whereas the latter consists of image-level prediction tasks such as whole-slide-level disease grading.

A. Local-level task:

Classification-based detection of cells or nuclei is one of the tasks in which deep learning has made the greatest contribution to digital pathology. CNN-based methods have been used extensively for pixel-wise prediction with a sliding-window approach, in which the networks are trained on small image patches rather than on the entire WSI. Because WSIs have gigapixel resolution (e.g., 100,000 × 100,000 pixels), applying a CNN directly to a WSI is impractical; instead, the WSI is divided into small patches for analysis. In practice, these image patches are often annotated by a pathologist as either containing an object of interest (e.g., cells/nuclei) or background. A large body of deep learning methods applied to digital pathology is akin to computer vision models for visual object recognition (Russakovsky et al., 2015).
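The division of a WSI into patches can be sketched as below, assuming the image region is already available as a NumPy array. The tissue filter (fraction of pixels darker than a fixed background intensity) is a crude, hypothetical stand-in for proper tissue detection, and the function name and default values are illustrative assumptions.

```python
import numpy as np

def tile_image(image, patch_size, stride=None, min_tissue=0.0, background=220):
    """Split an H x W x C image into square patches.

    Returns a list of ((row, col), patch) pairs, where (row, col) is the
    top-left corner. Incomplete patches at the right/bottom borders are skipped.
    A patch is kept only if its fraction of 'tissue' pixels (mean channel
    intensity below `background`) is at least `min_tissue`.
    """
    stride = stride or patch_size          # non-overlapping tiles by default
    H, W = image.shape[:2]
    tiles = []
    for r in range(0, H - patch_size + 1, stride):
        for c in range(0, W - patch_size + 1, stride):
            patch = image[r:r + patch_size, c:c + patch_size]
            tissue_fraction = np.mean(patch.mean(axis=-1) < background)
            if tissue_fraction >= min_tissue:
                tiles.append(((r, c), patch))
    return tiles

# Usage: a mostly white (background) region with one block of "tissue"
img = np.full((1000, 1200, 3), 255, dtype=np.uint8)
img[:256, :256] = 100
all_tiles = tile_image(img, 256)
tissue_tiles = tile_image(img, 256, min_tissue=0.5)
```

A real gigapixel WSI would not fit in memory as a single array; in practice, libraries such as OpenSlide read regions of the slide on demand, which this sketch does not attempt to model.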

The seminal work of Cireşan et al. (2013) had a major impact on digital histopathology by applying CNN-based pixel prediction to detect mitoses in routinely stained H&E breast cancer histology images. Subsequently, Rao (2018) proposed a variant of Faster R-CNN that significantly outperformed all other competing techniques on both the ICPR 2012 and the AMIDA 2013 mitosis detection challenges. Other methods were built on CNNs alone or on a combination of CNNs and handcrafted features. Since CNN models are often complex to train and require larger training sets, the earliest works (Wang et al., 2014; Kashif et al., 2016; Xing et al., 2016; Romo-Bucheli et al., 2016; Wang et al., 2016b) focused on integrating CNNs with biologically interpretable handcrafted features, and these models performed very well on the touching-nuclei segmentation problem. Qaiser et al. (2019b) proposed a hybrid method based on persistent homology that captures the degree of spatial connectivity among touching nuclei, which is difficult to achieve with CNN models (Sabour et al., 2017).
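Pixel-based prediction of the kind used by Cireşan et al. (2013) classifies a window centred on every pixel. The sketch below shows only this sliding-window mechanics for a grayscale image; `classify_patch` is a hypothetical placeholder for a trained CNN, and reflection padding at the borders is an assumption of this sketch.

```python
import numpy as np

def dense_pixel_prediction(image, classify_patch, window):
    """Score every pixel by classifying the window centred on it.

    image: (H, W) array; window: odd side length of the square patch.
    classify_patch: callable mapping a (window, window) patch to a scalar score,
    e.g. a trained CNN (hypothetical placeholder here).
    Borders use reflection padding so every pixel gets a full-size patch.
    """
    assert window % 2 == 1, "window must be odd so the patch is centred on a pixel"
    half = window // 2
    padded = np.pad(image, half, mode="reflect")
    H, W = image.shape
    scores = np.empty((H, W), dtype=float)
    for i in range(H):
        for j in range(W):
            # padded[i:i+window, j:j+window] is centred on original pixel (i, j)
            scores[i, j] = classify_patch(padded[i:i + window, j:j + window])
    return scores

# Usage with toy "classifiers" on a 5 x 5 image containing one bright pixel
image = np.zeros((5, 5))
image[2, 2] = 1.0
centre_value = dense_pixel_prediction(image, lambda p: p[p.shape[0] // 2, p.shape[1] // 2], 3)
local_mean = dense_pixel_prediction(image, np.mean, 3)
```

The nested loop makes the cost explicit: one classifier evaluation per pixel, which is why dense patch-wise prediction over an entire WSI is slow and why fully convolutional networks, which share computation between overlapping windows, are attractive.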

Training a deep CNN from scratch requires large amounts of annotated data, which are expensive and cumbersome to obtain in practice. A promising alternative is to take a network pre-trained on a large set of natural images, such as ImageNet, and fine-tune it on a problem in a different domain with a limited number of annotations. Along these lines, Gao et al. (2017) and Valkonen et al. (2019) proposed fine-tuning based transfer learning approaches, which consistently performed better than full training on a single dataset alone. In particular, Gao et al. (2017) made several observations about improving CNN performance by optimising the hyperparameters of the network, augmenting the training data, and fine-tuning rather than fully training the model. Transfer learning methods are discussed in more detail in Section 3.4.
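The simplest form of this idea, sometimes called feature extraction, freezes the pre-trained layers and trains only a new classification head on the small target set. The sketch below shows that variant only; the "pre-trained" weights `W_pre` are a random, hypothetical stand-in for weights learned on a source task such as ImageNet, and full fine-tuning (which would also update those weights, typically with a smaller learning rate) is not shown.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-np.clip(z, -30.0, 30.0)))

def extract_features(X, W_pre, b_pre):
    """Frozen 'pre-trained' layer ReLU(X W_pre + b_pre); its weights are never updated."""
    return np.maximum(X @ W_pre + b_pre, 0.0)

def fit_new_head(F, y, lr=0.05, epochs=300):
    """Train only a new logistic-regression head on target-task features F."""
    w, b = np.zeros(F.shape[1]), 0.0
    for _ in range(epochs):
        g = sigmoid(F @ w + b) - y           # BCE gradient w.r.t. the logit
        w -= lr * (F.T @ g) / len(y)
        b -= lr * g.mean()
    return w, b

# Hypothetical stand-in for weights learned on a large source task
rng = np.random.default_rng(1)
W_pre, b_pre = rng.normal(0, 0.5, (5, 32)), np.zeros(32)

# Small labelled target set (e.g. a few annotated histology patch features)
X_tgt = np.vstack([rng.normal(-1, 0.5, (10, 5)), rng.normal(1, 0.5, (10, 5))])
y_tgt = np.array([0] * 10 + [1] * 10)
F_tgt = extract_features(X_tgt, W_pre, b_pre)
w, b = fit_new_head(F_tgt, y_tgt)
train_acc = ((sigmoid(F_tgt @ w + b) >= 0.5) == y_tgt).mean()
```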

Recent studies (Albarqouni et al., 2016; Irshad et al., 2017; Amgad et al., 2019; Marzahl et al., 2019; Hou et al., 2020) have investigated crowdsourcing as a way to alleviate the annotation burden on expert pathologists. Outsourcing labelling to non-experts can, however, lead to subjective and inconsistent labels, and conventional DL models may be difficult to train with such noisy annotations. One way to improve annotation quality is to collect multiple redundant labels per example and aggregate them via voting techniques before training the model. For instance, Albarqouni et al. (2016) incorporated the aggregation of crowd annotations into the CNN learning process through an additional crowdsourcing layer to improve model performance. An alternative approach is to draw on expert advice, for example feedback from an experienced pathologist when annotating rare and challenging cases (Amgad et al., 2019). These studies show that models can be trained successfully on non-expert annotations, but that care has to be taken to ensure label quality. An easy and reliable way to obtain crowd labels for a large-scale database is to first obtain a set of precomputed annotations from an automated system, and then correct only the inconsistent labels under expert supervision (Marzahl et al., 2019; Hou et al., 2020). For example, Marzahl et al. (2019) showed that using precomputed labels led to an increase in model performance that was independent of the annotator's expertise, and reduced the interaction time by more than 30% compared with other crowdsourcing approaches. A thorough treatment of crowdsourcing methods applicable to medical imaging (including histopathology) is provided in Ørting et al. (2019).
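The simplest of the voting techniques mentioned above is majority voting over redundant crowd labels. The plain-Python sketch below also reports the agreement fraction and marks ties with a placeholder label; flagging ties (e.g. for expert review) is an assumption of this sketch, not the crowdsourcing layer of Albarqouni et al. (2016) or the protocol of Amgad et al. (2019).

```python
from collections import Counter

def majority_vote(labels_per_item, tie_label=None):
    """Aggregate redundant crowd labels by majority vote.

    labels_per_item: dict mapping item id -> non-empty list of annotator labels.
    Returns dict item id -> (winning label, agreement fraction). Items whose two
    most frequent labels are tied receive `tie_label` instead of a winner.
    """
    result = {}
    for item, labels in labels_per_item.items():
        counts = Counter(labels).most_common()
        top_label, top_count = counts[0]
        tied = len(counts) > 1 and counts[1][1] == top_count
        result[item] = (tie_label if tied else top_label, top_count / len(labels))
    return result

# Usage: three candidate mitoses, each labelled by one to three non-expert annotators
votes = {
    "p1": ["mitosis", "mitosis", "non-mitosis"],
    "p2": ["non-mitosis", "mitosis"],
    "p3": ["non-mitosis"],
}
aggregated = majority_vote(votes, tie_label="needs_expert")
```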

The addition of multi-scale and contextual knowledge to CNNs plays an essential role in identifying overlapping cell structures in histopathology images. Conventional single-scale models often suffer from two main limitations: 1) the raw pixel intensities within a small window do not carry enough information about the degree of overlap between cells, and 2) the use of a large window increases the number of model parameters and the training time. To alleviate these issues, Song et al. (2015, 2017) proposed multi-scale CNN models to segment overlapping cells accurately, with domain-specific shape priors added during training. Despite such modifications to CNN architectures, however, traditional deep learning methods often generalise poorly because of stain variations across datasets; this is addressed in Section 3.4.2.

In summary, among the bottom-up approaches, CNNs are the current gold-standard technique for a wide variety of low-level histopathology tasks such as cell or nuclei detection. Methods based on multi-scale CNNs and transfer learning are becoming increasingly popular due to their ability to generalise across a wide range of datasets and scanning protocols.

B. Global-level task:

Most of the published deep learning methods in this category adopt a patch-based classification approach for whole-slide level disease prediction. These techniques range from simple CNN architectures (Cruz-Roa et al., 2014; Ertosun and Rubin, 2015) to more sophisticated models (Qaiser and Rajpoot, 2019; Zhang et al., 2019) for accurate tissue-level cancer localization and WSI-level disease grading. For instance, Cruz-Roa et al. (2014, 2017) proposed a simple 3-layer CNN for identifying invasive ductal carcinoma in breast cancer images, which outperformed all previous handcrafted methods by a margin of 5% in terms of average sensitivity and specificity. The main disadvantage of these methods is the long computational time required to carry out dense patch-wise prediction over an entire WSI. To address this issue, Cruz-Roa et al. (2018) combined a CNN with adaptive sampling, based on quasi-Monte Carlo sampling and a gradient-based adaptive strategy, to focus only on regions with high uncertainty. Other authors (Litjens et al., 2016; Vandenberghe et al., 2017) employed simpler patch-based CNN models for the identification of breast and prostate cancer in WSIs, achieving an AUC of 0.99 for the breast cancer experiment. More recently, some authors (Bejnordi et al., 2018; Wei et al., 2019; Nagpal et al., 2019; Shaban et al., 2019a; Halicek et al., 2019) have trained networks from scratch (i.e., full training) on large sets of WSIs. These networks include the most popular deep learning models traditionally used for natural image classification, such as VGGNet (Simonyan and Zisserman, 2014), InceptionNet (Szegedy et al., 2015), ResNet (He et al., 2016) and MobileNet (Howard et al., 2017). There is no general rule linking the choice of architecture to the type of disease prediction task. However, the success of these CNN models depends largely on the number of images available for training, the choice of network hyper-parameters and various other boosting techniques (Cireşan et al., 2013; Nagpal et al., 2019) (refer to Section 5.1 for more details).
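To make the idea of spending classifier calls only where the prediction is uncertain concrete, here is a simplified sketch. It is not the method of Cruz-Roa et al. (2018): a regular coarse grid stands in for quasi-Monte Carlo sampling, the binary entropy of the predicted probability stands in for their gradient-based criterion, and `toy_tumour_probability` is a hypothetical classifier with a tumour boundary at x = 50.

```python
import math

def binary_entropy(p):
    """Uncertainty of a binary prediction: 0 at p = 0 or 1, maximal (1 bit) at p = 0.5."""
    if p <= 0.0 or p >= 1.0:
        return 0.0
    return -(p * math.log2(p) + (1 - p) * math.log2(1 - p))

def adaptive_sampling(classify, width, height, step, n_rounds, top_k):
    """Evaluate classify(x, y) on a coarse grid, then repeatedly sample the
    neighbourhood of the top_k most uncertain points at half the previous spacing."""
    samples = {}
    for y in range(0, height, step):
        for x in range(0, width, step):
            samples[(x, y)] = classify(x, y)
    spacing = step
    for _ in range(n_rounds):
        spacing //= 2
        if spacing == 0:
            break
        # Rank every point evaluated so far by the uncertainty of its prediction.
        ranked = sorted(samples, key=lambda xy: binary_entropy(samples[xy]), reverse=True)
        for x, y in ranked[:top_k]:
            for dx in (-spacing, 0, spacing):
                for dy in (-spacing, 0, spacing):
                    nx, ny = x + dx, y + dy
                    if 0 <= nx < width and 0 <= ny < height and (nx, ny) not in samples:
                        samples[(nx, ny)] = classify(nx, ny)
    return samples

def toy_tumour_probability(x, y):
    """Hypothetical patch classifier: tumour to the left of x = 50, smooth boundary."""
    return 1.0 / (1.0 + math.exp((x - 50) / 5))

samples = adaptive_sampling(toy_tumour_probability, width=100, height=100,
                            step=20, n_rounds=2, top_k=5)
print(len(samples), "classifier calls instead of", 100 * 100)
```

Only a small fraction of the 10,000 grid positions is evaluated, and the refined samples cluster around the tumour boundary, where the classifier is least certain.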

A few authors have tried to encode both local and global contextual information into the CNN learning process for more accurate disease prediction in WSIs. Typically, contextual knowledge is incorporated into a CNN framework by modelling the spatial correlations between neighbouring patches, combining the strengths of CNNs and conditional random fields (CRFs) (Zheng et al., 2015; Chen et al., 2017b). These techniques have been used extensively in computer vision for sequence labelling (Artieres et al., 2010; Peng et al., 2009) and semantic image segmentation (Chen et al., 2017b). In digital pathology, for instance, Kong et al. (2017) introduced a spatially structured network (Spatio-Net) combining a CNN with a 2D Long Short-Term Memory (LSTM) network to jointly learn image appearance and spatial dependency features for breast cancer metastasis detection. A similar approach was adopted by Agarwalla et al. (2017) to aggregate features from neighbouring patches in WSIs using 2D-LSTMs. In contrast, Li and Ping (2018) modelled spatial correlations through a fully connected CRF component. The advantage of such models is that the whole DNN can be trained end-to-end with standard backpropagation, with only a slight increase in complexity. Alternative methods have also been proposed to encode global contextual knowledge using different patch-level aggregation strategies. For example, Bejnordi et al. (2017) employed a cascaded CNN model to aggregate patch-level pyramid representations, simultaneously encoding multi-scale and contextual information for breast cancer multi-class classification. Similarly, Awan et al. (2018) used a ResNet-based patch classification model to produce a high-dimensional feature space; these features are then combined by a support vector machine (SVM) classifier that learns the context of a large patch to discriminate between different classes of breast cancer.

Although the above methods include contextual information in patch-based approaches, they still suffer from a loss of visual context due to the disjoint or random selection of small image patches. Furthermore, applying a CNN-based classification model directly to a WSI is computationally expensive, and the cost scales linearly with the number of input image patches (Qaiser and Rajpoot, 2019). Some recent studies (Qaiser and Rajpoot, 2019; BenTaieb and Hamarneh, 2018; Xu et al., 2019) have explored task-driven visual attention models (Mnih et al., 2014; Ranzato, 2014) for histopathology WSI analysis. Such models selectively focus on the most diagnostically useful areas (such as tissue components) while ignoring irrelevant regions (such as the background). These visual attention models have been explored extensively in computer vision applications, including object detection (Liu et al., 2016), image classification (Mnih et al., 2014), image captioning (Sharma et al., 2015) and action recognition (Xu et al., 2015b).

In routine clinical diagnosis, a pathologist typically first examines different locations within a WSI to identify diagnostically indicative areas, and then combines this information over time across different eye fixations to predict the presence or absence of cancer. This human visual attention mechanism can be modelled in deep learning as a sequential learning task using RNNs. For instance, Qaiser and Rajpoot (2019) modelled the prediction of immunohistochemical (IHC) scoring of HER2 (Qaiser et al., 2018) as a sequential learning problem, where the whole DNN is optimized via policy gradients within a deep reinforcement learning (DRL) framework. The authors also incorporated an additional task-specific mechanism that inhibits the model from revisiting previously attended locations. Similarly, BenTaieb and Hamarneh (2018) and Xu et al. (2019) proposed recurrent attention mechanisms to selectively attend to and classify the most discriminative regions in WSIs for breast cancer prediction. Inspired by recent work on image captioning for natural scenes (Xu et al., 2015b; Krause et al., 2017), Zhang et al. (2019) proposed an attention-based multi-modal DL framework that, mimicking the pathologist, automatically generates clinical diagnostic descriptions and tissue localization attention maps. An attractive feature of their system is its ability to create natural language descriptions of histopathology findings whose structure closely resembles that of a standard clinical pathology report.

In essence, attention-based models have gained popularity in recent years and have several attractive properties compared with traditional sliding-window (patch-based) approaches: i) by enforcing a region selection mechanism (i.e., attention), the model learns to focus only on the most diagnostically relevant areas for disease prediction; ii) the number of model parameters is drastically reduced, leading to faster inference; and iii) the model complexity is independent of the size of the WSI.
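The inhibition-of-return idea can be illustrated with a greedy stand-in for a learned attention policy. The sketch below assumes a cheap low-resolution saliency grid is available (the values are made up), picks a fixed number of glimpse locations, and blocks the neighbourhood of every attended location so it is not revisited. A model trained with policy gradients, as in Qaiser and Rajpoot (2019), would instead learn where to look next; only the fixed glimpse budget and the inhibition rule carry over, and `select_glimpses` is a hypothetical name.

```python
def select_glimpses(saliency, n_glimpses, radius):
    """Greedily pick up to n_glimpses (row, col) locations from a 2D saliency grid.

    After each pick, every cell within `radius` (Chebyshev distance) of the
    attended location is blocked: a simple inhibition-of-return rule.
    """
    rows, cols = len(saliency), len(saliency[0])
    blocked = set()
    glimpses = []
    for _ in range(n_glimpses):
        candidates = [(saliency[r][c], r, c)
                      for r in range(rows) for c in range(cols)
                      if (r, c) not in blocked]
        if not candidates:
            break
        _, r, c = max(candidates)  # highest remaining saliency
        glimpses.append((r, c))
        for dr in range(-radius, radius + 1):
            for dc in range(-radius, radius + 1):
                blocked.add((r + dr, c + dc))
    return glimpses

# Hypothetical low-resolution saliency map of a slide
saliency = [
    [0.1, 0.2, 0.1, 0.0, 0.0],
    [0.2, 0.9, 0.8, 0.1, 0.0],
    [0.1, 0.3, 0.2, 0.1, 0.7],
    [0.0, 0.1, 0.1, 0.2, 0.6],
]
glimpses = select_glimpses(saliency, n_glimpses=3, radius=1)
print(glimpses)
```

The cell with score 0.8 is never chosen because it lies next to the first glimpse, and the number of high-resolution regions to analyse is fixed by `n_glimpses` rather than by the size of the slide.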

3.1.2. Regression models

This category of methods detects or localizes objects by directly regressing the likelihood of a pixel being the centre of an object (e.g., a cell or nucleus centre). Detection of cells or nuclei in histopathology images is challenging due to their highly irregular appearance and their tendency to occur as overlapping clumps, which makes it difficult to separate them into individual cells or nuclei (Naylor et al., 2018; Xie et al., 2018b; Graham et al., 2019b). Pixel-based classification approaches may give suboptimal performance on this task, as they do not necessarily consider the topological relationship between pixels at the object centre and those in their neighbourhood (Sirinukunwattana et al., 2016). To tackle this issue, many authors cast object detection as a regression problem with topological constraints, such that pixels near object centres have higher probability values than those further away. This formulation has been shown to achieve better detection and localization of objects, even with significant variability in both object appearance and location.
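A regression target of this kind can be built directly from centre annotations. The sketch below assumes a decay of 1 / (1 + alpha * d^2) within a radius r of the nearest annotated centre and zero elsewhere; the exact function, radius and `alpha` differ between the cited papers, so treat these (and the name `proximity_map`) as illustrative choices.

```python
import math

def proximity_map(height, width, centres, radius, alpha=1.0):
    """Regression target: high at annotated centres, decaying with distance.

    centres is a non-empty list of (x, y) centre annotations. Pixels farther
    than `radius` from every centre get 0.
    """
    target = [[0.0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            # Distance from this pixel to the nearest annotated centre.
            d = min(math.hypot(x - cx, y - cy) for cx, cy in centres)
            if d <= radius:
                target[y][x] = 1.0 / (1.0 + alpha * d * d)
    return target

m = proximity_map(7, 7, centres=[(3, 3)], radius=2)
for row in m:
    print(["%.2f" % v for v in row])
```

A network regressing such a map is then trained with a pixel-wise loss, and detections are read off as local maxima of its output.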

Deep regression models proposed in the literature are mainly based on either CNN or FCN architectures (Long et al., 2015). Among FCN-based methods, the earlier work of Chen et al. (2016a) and Xie et al. (2018a) proposed simple FCN-based regression models for detecting cells in histopathology images. More recent methods attempt to improve detection by modifying the loss function (Xie et al., 2018b) or incorporating additional features into popular deep learning architectures (Graham et al., 2019b). Xie et al. (2015a, 2018b) proposed a structured regression model based on fully residual convolutional networks for detecting cells, adopting a weighted MSE loss that assigns higher weights to misclassified pixels closer to cell centres. Similarly, Xing et al. (2019) adopted a residual learning based FCN architecture for simultaneous nucleus detection and classification in pancreatic neuroendocrine tumour Ki-67 images. In their model, an auxiliary task (ROI extraction) is introduced to assist and boost nucleus classification using weak annotations. To solve the challenging problem of segmenting touching nuclei, Naylor et al. (2018) identified better markers for the watershed algorithm by regressing the intra-nuclear distance map. Graham et al. (2019b) went one step further, proposing a unified FCN model for simultaneous nuclear instance segmentation and classification, which encodes the horizontal and vertical distances of nuclear pixels to their centre of mass for accurate nuclei separation in multi-tissue histology images.
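The horizontal and vertical distance encoding mentioned for Graham et al. (2019b) can be sketched as follows: for every nucleus in an instance map, each pixel stores its x and y offset from the nucleus centre of mass, rescaled so that values lie in [-1, 1]. The rescaling used here (per nucleus, negative offsets divided by the largest negative magnitude and positive offsets by the largest positive one) is an assumption for illustration, and the function names are hypothetical.

```python
def _scale(values):
    """Rescale offsets to [-1, 1]: negatives by |min|, positives by max."""
    neg = -min(values)
    pos = max(values)
    return [v / neg if v < 0 else (v / pos if v > 0 else 0.0) for v in values]

def hover_maps(instance_map):
    """instance_map: 2D list of ints, 0 = background, k > 0 = nucleus k.

    Returns (h_map, v_map): per-pixel horizontal and vertical offsets to the
    centre of mass of the pixel's nucleus, scaled to [-1, 1]; 0 on background.
    """
    height, width = len(instance_map), len(instance_map[0])
    h_map = [[0.0] * width for _ in range(height)]
    v_map = [[0.0] * width for _ in range(height)]
    pixels = {}
    for y in range(height):
        for x in range(width):
            k = instance_map[y][x]
            if k:
                pixels.setdefault(k, []).append((x, y))
    for coords in pixels.values():
        cx = sum(x for x, _ in coords) / len(coords)
        cy = sum(y for _, y in coords) / len(coords)
        dx = _scale([x - cx for x, _ in coords])
        dy = _scale([y - cy for _, y in coords])
        for (x, y), h, v in zip(coords, dx, dy):
            h_map[y][x] = h
            v_map[y][x] = v
    return h_map, v_map

# Two hypothetical nuclei: a horizontal one (label 1) and a vertical one (label 2)
inst = [
    [0, 0, 0, 0, 0],
    [1, 1, 1, 0, 2],
    [0, 0, 0, 0, 2],
]
h, v = hover_maps(inst)
print(h)
print(v)
```

Where two nuclei touch, these maps change sign abruptly across the shared boundary, which is what makes them useful for separating instances.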

Other authors modified the output layer of a CNN to include distance constraints or a voting mechanism in the network learning process. For instance, Sirinukunwattana et al. (2016) introduced a new layer that modifies the output of a CNN to predict a topologically constrained probability map, such that high confidence scores are more likely to be assigned to pixels closer to nuclear centres in colon histology images. This method was later extended by Swiderska-Chadaj et al. (2019) to detect lymphocytes in immunohistochemistry images. Xie et al. (2015a) proposed an alternative method based on a voting mechanism for nuclei localization. This can be viewed as an implicit Hough-voting codebook, which learns to map an image patch to a set of voting offsets (i.e., nuclei positions) and corresponding confidence scores that weight each vote. The weighted votes are then aggregated to estimate a final density map, which is used to localize nuclei in neuroendocrine tumour images.
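The aggregation step of a voting-based detector can be sketched as below. Each vote is assumed to arrive from a network as a (patch x, patch y, offset x, offset y, confidence) tuple; the votes are accumulated into a density map, and nuclei are read off as local maxima above a threshold. The vote values, grid size and threshold are hypothetical, and this covers only the accumulation step, not the learned codebook of Xie et al. (2015a).

```python
def accumulate_votes(votes, height, width):
    """Add each vote's confidence at the location its offset points to."""
    density = [[0.0] * width for _ in range(height)]
    for px, py, ox, oy, conf in votes:
        x, y = px + ox, py + oy
        if 0 <= x < width and 0 <= y < height:  # drop votes outside the image
            density[y][x] += conf
    return density

def local_maxima(density, threshold):
    """Return (x, y) positions that reach `threshold` and are >= all 8 neighbours."""
    peaks = []
    height, width = len(density), len(density[0])
    for y in range(height):
        for x in range(width):
            value = density[y][x]
            if value < threshold:
                continue
            neighbours = [density[ny][nx]
                          for ny in range(max(0, y - 1), min(height, y + 2))
                          for nx in range(max(0, x - 1), min(width, x + 2))
                          if (nx, ny) != (x, y)]
            if all(value >= n for n in neighbours):
                peaks.append((x, y))
    return peaks

# Hypothetical votes: (patch_x, patch_y, offset_x, offset_y, confidence)
votes = [
    (0, 0, 2, 2, 0.9), (4, 0, -2, 2, 0.8), (2, 4, 0, -2, 0.7),  # agree on (2, 2)
    (7, 5, -1, -1, 0.6), (5, 3, 1, 1, 0.5),                     # agree on (6, 4)
    (3, 0, 0, 0, 0.2),                                          # weak stray vote
    (1, 1, 20, 20, 0.4),                                        # points outside
]
density = accumulate_votes(votes, height=6, width=8)
peaks = local_maxima(density, threshold=1.0)
print(peaks)
```

Because confidences add up, several moderately confident votes that agree outweigh an isolated vote, which is the intuition behind weighting each vote.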

3.1.3. Segmentation models

Segmentation of histological primitives such as cells, glands, nuclei and other tissue components is an essential prerequisite for obtaining reliable morphological measurements to assess the malignancy of several carcinomas (Chen et al., 2017a; Sirinukunwattana et al., 2017; Bulten et al., 2020). Accurate segmentation of structures in histology images often requires pixel-level delineation of the object contour or of the whole interior of the object of interest. CNNs trained to classify each patch centred on a pixel of interest as either foreground or background can be used for segmentation by employing a sliding-window approach. However, given the size of gigapixel WSIs, patch-based approaches perform a large number of redundant computations in overlapping regions, resulting in a drastic increase in computational complexity and a loss of contextual information (Chen et al., 2017a; Lin et al., 2019). The alternative is to employ fully convolutional networks (FCNs) (Long et al., 2015; Ronneberger et al., 2015), which take as input an arbitrarily sized image (or patch) and output a similar-sized map in a single forward pass. The whole FCN model can be trained via end-to-end backpropagation and directly outputs a dense per-pixel prediction score map. Hence, segmentation models in histopathology are mainly built on the representational power of FCNs and their variants, and are generally formulated as semantic segmentation tasks, with applications ranging from nucleus/gland/duct segmentation (Kumar et al., 2019; Sirinukunwattana et al., 2017; Seth et al., 2019) to the prediction of cancer (Liu et al., 2019; Bulten et al., 2019) in WSIs.
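A quick count shows why sliding-window segmentation is so wasteful. Assuming a square tile, a square patch classified once per output position, no padding at the borders, and the illustrative sizes below, the hypothetical helper `sliding_window_cost` counts the patches evaluated and how many times, on average, each pixel is processed. An FCN instead computes the features for each position once and shares them among all overlapping receptive fields.

```python
def sliding_window_cost(width, height, patch, stride):
    """Number of patches and average number of times each pixel is processed.

    Assumes square patches fully inside the image (no border padding).
    """
    nx = (width - patch) // stride + 1
    ny = (height - patch) // stride + 1
    n_patches = nx * ny
    pixels_processed = n_patches * patch * patch
    return n_patches, pixels_processed / (width * height)

# Per-pixel classification (stride 1) of a 2048 x 2048 tile with 64 x 64 patches
n_dense, redundancy = sliding_window_cost(2048, 2048, patch=64, stride=1)
print(n_dense, "patches; each pixel processed about", round(redundancy), "times")

# Non-overlapping patches avoid redundancy but yield only one label per patch
print(sliding_window_cost(2048, 2048, patch=64, stride=64))
```

For this tile the dense scheme evaluates 1985 x 1985 = 3,940,225 patches, so each pixel is processed about 3,848 times on average, before even scaling up to a full gigapixel slide.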

To determine an optimal model for a given task, Swiderska-Chadaj et al. (2019) and de Bel et al. (2018) compared FCN with the UNet architecture (Ronneberger et al., 2015) and found that UNet achieved better generalization ability and robustness. The key feature of UNet is the upsampling path of the network, which learns to propagate contextual information to high-resolution layers, together with skip connections that yield more biologically plausible segmentation maps than the standard FCN model. The traditional FCN model also lacks smoothness constraints, which can result in poor delineation of object contours and the formation of spurious regions when segmenting touching or overlapping objects (Zheng et al., 2015). To circumvent this problem, BenTaieb and Hamarneh (2016) formulated a new loss function that incorporates boundary smoothness and topological priors into FCN learning, for discriminating epithelial glands from other tissue structures in histology images.

Histological objects such as glands and nuclei vary significantly in size and shape and often occur as overlapping clumped instances, which makes them difficult to distinguish from the surrounding structures. A few methods have addressed this issue by combining the representational power of FCNs with multi-scale feature learning strategies (Chen et al., 2017b; Lin et al., 2017) to delineate objects of varying size in histology images. For instance, Chen et al. (2017a) proposed a multi-level contextual FCN with an auxiliary supervision mechanism (Xie and Tu, 2015) to segment both glands and nuclei in histology images. They also devised an elegant multi-task framework that integrates object appearance with contour information for precise identification of touching glands. This work was later extended by Van Eycke et al. (2018), who combined DCAN (Chen et al., 2017a), UNet and the identity mapping of ResNet to build an FCN model for segmenting epithelial glands in double-stained images.

Some authors have proposed variants of FCN that enhance segmentation, in particular at glandular boundaries, by compensating for the information lost in the max-pooling layers of FCNs. For example, Graham et al. (2019a) introduced minimum information loss dilated units in residual FCNs to help retain the maximal spatial resolution critical for segmenting glandular structures at boundary locations. Later, Ding et al. (2019) employed a similar technique to circumvent the loss of global information, introducing a high-resolution auxiliary branch in a multi-scale FCN model to locate and shape the glandular objects. Zhao et al. (2019) proposed a feature pyramid based model (Lin et al., 2017) that aggregates local-to-global features in an FCN to enhance the model’s discriminative capability in identifying breast cancer metastasis. They also devised a synergistic learning approach that collaboratively trains the primary detector and an extra decoder with semantic guidance, improving the model’s ability to retrieve metastases.
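Dilated convolutions enlarge the receptive field without pooling, so spatial resolution is preserved. For stride-1 convolutions, each layer with kernel size k and dilation d grows the receptive field by (k - 1) * d pixels. The hypothetical helper below applies this rule; it illustrates the general principle only, not the specific unit design of Graham et al. (2019a).

```python
def receptive_field(layers):
    """Receptive field (in pixels) of a stack of stride-1 convolutions.

    layers: list of (kernel_size, dilation) pairs, applied in order.
    """
    rf = 1
    for kernel_size, dilation in layers:
        rf += (kernel_size - 1) * dilation
    return rf

plain = receptive_field([(3, 1)] * 4)                          # four ordinary 3x3 convs
dilated = receptive_field([(3, 1), (3, 2), (3, 4), (3, 8)])    # same weights, growing dilation
print(plain, dilated)
```

Both stacks have the same number of weights and keep the full output resolution, but the dilated one sees 31 pixels of context instead of 9, without the downsampling that max-pooling would introduce.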

Conventional FCN-based models are designed to predict a class label for each pixel (e.g., foreground or background), but cannot distinguish individual object instances (i.e., separate the foreground pixels into distinct objects, each with its own categorical label). In computer vision, such problems are formulated as “instance-aware semantic segmentation” (Hariharan et al., 2014; Li et al., 2017), where segmentation and classification of object instances are performed simultaneously in a joint end-to-end manner. In histology, Xu et al. (2017) decomposed gland instance segmentation into two sub-tasks, gland segmentation and instance recognition, using a multi-channel deep network model (Dai et al., 2016). Gland segmentation is performed using an FCN, while gland instance boundaries are recognized using location (Girshick, 2015) and boundary cues (Xie and Tu, 2015). A similar formulation was adopted by Qu et al. (2019) for the joint segmentation and classification of nuclei, using an FCN trained with a perceptual loss (Johnson et al., 2016).

Most deep learning methods in digital pathology are applied to small image patches rather than the entire WSI, restricting the prediction ability of the model to a narrow field of view. Conventional patch-based approaches suffer from three main limitations: i) individual patches extracted from a WSI have a narrow field of view, with limited contextual knowledge about the surrounding structures; ii) patch-based models are not consistent with the way a pathologist analyzes a slide under a microscope; and iii) a large number of redundant computations are carried out in overlapping regions, increasing computational complexity and slowing inference. To alleviate the first two issues, attempts have been made to mimic the way a pathologist analyzes a slide at various magnification levels before arriving at a final decision. Such mechanisms are integrated into the FCN model by designing multi-magnification networks (Ho et al., 2019; Tokunaga et al., 2019), each trained on image patches with a different field of view, to obtain a more discriminative feature representation than a single-magnification model. For instance, Ho et al. (2019) proposed a multi-encoder, multi-decoder FCN model that takes multiple input patches at various magnification levels (e.g., 20x, 10x and 5x) and shares intermediate feature representations among the FCNs for accurate breast cancer image segmentation. A similar approach was adopted by Tokunaga et al. (2019) and Gecer et al. (2018), who trained multiple FCNs on images with different fields of view and aggregated their outputs into a final segmentation map. In contrast, Gu et al. (2018) designed a multiple-encoder model that aggregates information across different magnification levels but uses only one decoder to generate the final prediction map.
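Extracting concentric patches at several magnifications, as in the multi-magnification networks above, reduces to simple coordinate arithmetic. The sketch assumes that the slide’s highest-resolution level (level 0) is scanned at 20x and that every patch is 256 pixels wide at its own magnification, so a 10x patch covers 512 level-0 pixels and a 5x patch 1,024. The function name and numbers are hypothetical; reading and resizing the regions would be left to a slide-reading library.

```python
def multi_magnification_boxes(cx, cy, patch_px, base_mag, mags):
    """Level-0 bounding boxes of concentric patches centred on (cx, cy).

    patch_px: patch width/height in pixels at its own magnification.
    base_mag: magnification of level 0 (e.g. 20 for a 20x scan).
    Returns {magnification: (x_min, y_min, x_max, y_max)} in level-0 pixels.
    """
    boxes = {}
    for mag in mags:
        span = patch_px * base_mag / mag  # level-0 pixels covered by this patch
        half = span / 2
        boxes[mag] = (cx - half, cy - half, cx + half, cy + half)
    return boxes

boxes = multi_magnification_boxes(10_000, 8_000, patch_px=256, base_mag=20, mags=[20, 10, 5])
for mag, box in boxes.items():
    print(f"{mag}x:", box)
```

Each lower-magnification patch has the same pixel size after downsampling but covers a wider field of view, which is how these networks see both fine detail and surrounding context around the same point.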

Nevertheless, the above patch-based models still suffer from significant computational overhead at higher magnification levels and hence do not scale well to WSIs. Some authors (Lin et al., 2018, 2019) have therefore proposed a variant of FCN with a dense scanning mechanism that shares computations in overlapping regions during image scanning. To further improve the prediction accuracy of the FCN, Lin et al. (2019) also introduced a new pooling layer, named the ‘anchor layer’, that reconstructs the information lost in max-pooling layers. Such models have been shown to achieve inference speeds a hundred times faster than traditional patch-based approaches while maintaining high prediction accuracy in WSI analysis. Guo et al. (2019), on the other hand, presented an alternative method for fast breast tumour segmentation, in which a CNN-based classifier first pre-selects possible tumour areas and an FCN-based model then refines this initial segmentation. Their framework obtains dense predictions at 1/8 of the original WSI size in 11.5 minutes (on the CAMELYON16 dataset), a speed-up relative to a model that uses an FCN alone.

3.2. Weakly supervised learning

The idea of weakly supervised learning (WSL) is to exploit coarse-grained (image-level) annotations to automatically infer fine-grained (pixel/patch-level) information. This paradigm is particularly well suited to the histopathology domain, where the coarse-grained information is often readily available in the form of image-level labels, e.g., cancer or non-cancer, but where pixel-level annotations are more difficult to obtain. Weakly supervised learning dramatically reduces the annotation burden on a pathologist (Xu et al., 2014), and an overview of these models is provided in Table 2.

Table 2:

Overview of weakly supervised learning models. Note: (✓) indicates the code is publicly available and the link is provided in their respective paper.

| Reference | Cancer types | Staining | Application | Method | Dataset |
|---|---|---|---|---|---|
| Multiple instance learning (MIL) | | | | | |
| Hou et al. (2015) | Brain | H&E | Glioma subtype classification | Expectation-maximization based MIL with CNN + logistic regression | TCGA (1,064 slides) |
| Jia et al. (2017) | Colon | H&E | Segmentation of cancerous regions | FCN based MIL + deep supervision and area constraints | Two private sets containing colon cancer images (910+60 images) |
| Liang et al. (2018) | Stomach | H&E | Gastric tumour segmentation | Patch-based FCN + iterative learning approach | China Big Data and AI challenge (1,900 images) |
| Ilse et al. (2018) (✓) | Multi-Cancers | H&E | Cancer image classification | MIL pooling based on gated-attention mechanism | CRCHisto (100 images) |
| Shujun Wang et al. (2019) | Stomach | H&E | Gastric cancer detection | Two-stage CNN framework for localization and classification | Private set (608 images) |
| Wang et al. (2019) | Lung | H&E | Lung cancer image classification | Patch-based FCN + context-aware block selection and feature aggregation strategy | Private (939 WSIs), TCGA (500 WSIs) |
| Campanella et al. (2019) (✓) | Multi-Cancers | H&E | Multiple cancer diagnosis in WSIs | CNN (ResNet) + RNNs | Prostate (24,859 slides), skin (9,962 slides), breast cancer metastasis (9,894 slides) |
| Dov et al. (2019) | Thyroid | — | Thyroid malignancy prediction | CNN + ordinal regression for prediction of thyroid malignancy score | Private set (cytopathology, 908 WSIs) |
| Xu et al. (2019) (✓) | Multi-Cancers | H&E | Segmentation of breast cancer metastasis and colon glands | FCN trained on instance-level labels, which are obtained from image-level annotations | Camelyon16 (400 WSIs), colorectal adenoma private dataset (177 WSIs) |
| Huang and Chung (2019) | Breast | H&E | Localization of cancerous evidence in histopathology images | CNN + multi-branch attention modules and deep supervision mechanism | PCam (327,680 patches extracted from Camelyon16) and Camelyon16 (400 WSIs) |
| Other approaches | | | | | |
| Campanella et al. (2018) | Prostate | H&E | Prostate cancer detection | CNN trained under MIL formulation with top-1 ranked instance aggregation approach | Prostate biopsies (12,160 slides) |
| Akbar and Martel (2018) (✓) | Breast | H&E | Detection of breast cancer metastasis | Clustering (VAE + K-means) based MIL framework | Camelyon16 (400 WSIs) |
| Tellez et al. (2019b) (✓) | Multi-Cancers | H&E | Compression of gigapixel histopathology WSIs | Unsupervised feature encoding method (VAE, Bi-GAN, contrastive training) that maps high-resolution image patches to low-dimensional embedding vectors | Camelyon16 (400 WSIs), TUPAC16 (492 WSIs), Rectum (74 WSIs) |
| Qu et al. (2019) (✓) | Multi-Cancers | H&E | Nuclei segmentation | Modified UNet trained using coarse-level labels + dense CRF loss for model refinement | MoNuSeg (30 images), lung cancer private set (40 images) |
| Bokhorst et al. (2019) | Colon | H&E | Segmentation of tissue types in colorectal cancer | UNet with modified loss functions to circumvent sparse manual annotations | Colorectal cancer WSIs (private set, 70 images) |
| Li et al. (2019a) (✓) | Breast | H&E | Mitosis detection | FCN trained with concentric loss on weakly annotated centroid labels | ICPR12 (50 images), ICPR14 (1,696 images), AMIDA13 (606 images), TUPAC16 (107 images) |

In this survey, we focus on one particular form of WSL, namely multiple-instance learning (MIL), which trains a model on a set of weakly labeled data (Dietterich et al., 1997; Quellec et al., 2017). In MIL, the training set consists of bags labeled as positive or negative, and each bag contains many instances whose labels are unknown and are to be predicted. For example, each histology image with a cancer/non-cancer label forms a ‘bag’, and each pixel or patch extracted from that image is an ‘instance’ (e.g., pixels containing cancerous cells). The main goal is to train a classifier that predicts both bag-level and instance-level labels, while only bag-level labels are given in the training set. Following Cheplygina et al. (2019), we group MIL approaches into three categories: i) global detection, identifying a target pattern in a histology image (i.e., at bag level), such as the presence or absence of cancer; ii) local detection, identifying a target pattern in an image patch or pixel (i.e., at instance level), such as highlighting cancerous tissue or cells; and iii) global and local detection, detecting whether an image contains cancer and also identifying where it occurs within the image. These categories are illustrated in Fig. 4. There is also significant interest in histopathology in using various kinds of weak annotation, such as image-level tags (Campanella et al., 2019), points (Qu et al., 2019), bounding boxes (Yang et al., 2018), polygons (Wang et al., 2019) and the percentage of cancerous region within each image (Jia et al., 2017), to obtain clinically satisfactory performance with minimal annotation effort. For in-depth reviews of MIL approaches in medical image analysis, refer to Quellec et al. (2017); Cheplygina et al. (2019); Rony et al. (2019); Kandemir and Hamprecht (2015).

Because histopathology images vary widely in appearance, standard instance-level aggregation methods such as voting or pooling do not guarantee accurate image-level predictions, since instance-level labels may be misclassified (Campanella et al., 2019; Rony et al., 2019). Hence, several global detection based MIL methods rely on alternative instance-level aggregation strategies to obtain reliable bag-level predictions for a given histology task. For instance, Hou et al. (2015) integrated an expectation-maximization based MIL method with a CNN to output patch-level predictions, which are then aggregated by training a logistic regression model to classify glioma subtypes in WSIs. Dov et al. (2019) proposed an alternative approach based on an ordinal regression framework that aggregates instances containing follicular (thyroid) cells to simultaneously predict both thyroid malignancy and the TBS score in whole-slide cytopathology images. More recently, in remarkable work, Campanella et al. (2019) adopted an RNN model to integrate semantically rich feature representations across patch-level instances into a final slide-level diagnosis. The authors obtained an AUC greater than 0.98 in detecting three types of cancer on an extensive multi-centre dataset of 44,732 WSIs, without expensive pixel-wise manual annotations.
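The standard MIL assumption and the effect of the aggregation rule can be seen on a toy slide. Under the standard assumption, a bag is positive if at least one of its instances is positive. The sketch compares max pooling (equivalent to keeping the top-1 ranked instance), mean pooling and a top-k mean on hypothetical patch probabilities for a slide in which only 2 of 100 patches contain tumour; the function names and numbers are illustrative assumptions.

```python
def standard_mil_bag_label(instance_labels):
    """Standard MIL assumption: a bag (slide) is positive if and only if
    at least one of its instances (patches) is positive."""
    return int(any(instance_labels))

def aggregate(instance_probs, method="max", k=3):
    """Turn patch-level tumour probabilities into one slide-level score."""
    if method == "max":          # keep only the top-1 ranked instance
        return max(instance_probs)
    if method == "mean":         # every patch counts equally
        return sum(instance_probs) / len(instance_probs)
    if method == "topk_mean":    # average of the k highest-scoring patches
        top = sorted(instance_probs, reverse=True)[:k]
        return sum(top) / len(top)
    raise ValueError(f"unknown method: {method}")

# Hypothetical slide: 100 patches, only 2 contain tumour
probs = [0.95, 0.95] + [0.05] * 98
labels = [1, 1] + [0] * 98

print("bag label:", standard_mil_bag_label(labels))
for method in ("max", "mean", "topk_mean"):
    print(method, round(aggregate(probs, method), 3))
```

Mean pooling dilutes the two tumour patches to a slide score of about 0.07, whereas max and top-k pooling keep the slide above a 0.5 threshold, which illustrates why the choice of aggregation strategy matters.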

Local detection based MIL approaches follow an image-centric paradigm, in which image-to-image prediction is performed using an FCN model by computing features for all instances (pixels) together. These approaches are generally applied to image segmentation tasks to precisely delineate cancerous regions in histology images. In local detection, the bag labels are propagated to all instances to train a classifier in a supervised manner. However, even the best bag-level classifier may underperform on instance-level predictions due to the lack of supervision (Cheplygina et al., 2019). To tackle this issue, additional weak constraints have been incorporated into FCN models to improve segmentation accuracy. For example, Jia et al. (2017) included an area constraint in the MIL formulation using a rough estimate of the relative size of the cancerous region. However, such area constraints are tedious to obtain and can only be provided by an expert pathologist. Consequently, Xu et al. (2019) proposed an alternative MIL framework that generates instance-level labels from image-level annotations. These predicted instance-level labels are then assigned to the corresponding image pixels to train an FCN end-to-end, achieving performance comparable with supervised counterparts. Finally, in some cases, a large number of bag labels is combined with a partial set of instance labels in an FCN-based iterative learning framework (Liang et al., 2018) to further optimize the final instance-level predictions.

Arguably, the most popular and clinically relevant MIL approach in histopathology is the global and local detection paradigm, in which, rather than just diagnosing cancer at the whole-slide level, the model simultaneously localizes the discriminative areas (instances) containing cancerous tissue or cells. Methods in this category use either the bag-level label alone (Shujun Wang et al., 2019) or both bag-level labels and some coarse instance-level annotations (Wang et al., 2019) to infer a global decision. Note that instance-level predictions are usually not validated, owing to the lack of costly annotations, and are generally visualized as either a heatmap (Shujun Wang et al., 2019; Wang et al., 2019) or a saliency map (Huang and Chung, 2019) to highlight the diagnostically significant locations in WSIs. The essence of this approach is to capture the dependencies between instances and their impact on the final image-level decision score.

There is some disagreement in the MIL literature regarding the accuracy of instance-level predictions when models are trained with only bag-level labels (Cheplygina et al., 2019; Kandemir and Hamprecht, 2015). A critical and often overlooked issue is that even the best bag-level classifier may not be an optimal instance-level classifier, and vice versa (Cheplygina et al., 2019). Such problems have naturally led to new solutions that integrate visual attention models with MIL techniques to enhance the interpretability of the final model predictions (Ilse et al., 2018; Huang and Chung, 2019). For instance, Huang and Chung (2019) proposed a CNN model combining multi-branch attention modules and a deep supervision mechanism (Xie and Tu, 2015), which aims to localize the discriminative evidence for the class of interest from a set of weakly labeled training data. Such attention-based models can precisely pinpoint the location of cancer evidence in a WSI while achieving competitive slide-level accuracy, thereby enhancing the interpretability of current DL models in histopathology applications.
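The gated attention pooling of Ilse et al. (2018), listed in Table 2, computes one weight per instance, a_k = softmax over k of w^T (tanh(V h_k) ⊙ sigm(U h_k)), and returns the attention-weighted sum of the instance embeddings h_k as the bag embedding. The sketch below implements that forward pass in plain Python. In practice V, U and w are learned jointly with the rest of the network; here they, the embeddings `H` and the helper names are fixed toy values chosen only for illustration.

```python
import math

def matvec(M, x):
    """Multiply matrix M (list of rows) by vector x."""
    return [sum(m * xi for m, xi in zip(row, x)) for row in M]

def gated_attention_pool(H, V, U, w):
    """Gated attention MIL pooling (forward pass only).

    H: list of instance embeddings h_k, each of length D.
    V, U: L x D matrices; w: vector of length L.
    Returns (attention weights a_k, bag embedding z = sum_k a_k * h_k).
    """
    scores = []
    for h in H:
        tanh_part = [math.tanh(v) for v in matvec(V, h)]
        gate = [1.0 / (1.0 + math.exp(-u)) for u in matvec(U, h)]  # sigmoid gate
        scores.append(sum(wi * t * g for wi, t, g in zip(w, tanh_part, gate)))
    # Softmax over instances (subtract the max for numerical stability).
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]
    bag = [sum(a * h[d] for a, h in zip(weights, H)) for d in range(len(H[0]))]
    return weights, bag

# Toy bag of three 2-D instance embeddings; the second stands out.
H = [[0.1, 0.0], [2.0, 1.0], [0.0, 0.2]]
V = [[1.0, 0.0], [0.0, 1.0]]
U = [[1.0, 0.0], [0.0, 1.0]]
w = [1.0, 1.0]
weights, bag = gated_attention_pool(H, V, U, w)
print([round(a, 3) for a in weights], [round(z, 3) for z in bag])
```

Because the weights sum to one, they can be mapped back onto the patch locations as a heatmap, which is the interpretability benefit discussed above.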

Not all methods described as weakly supervised in the literature fall under the MIL category. For instance, Qu et al. (2019), Bokhorst et al. (2019) and Li et al. (2019a) use the term “weakly supervised” to indicate that the model was trained on a sparse set of annotations, such as points inside the region of interest (Li et al., 2019a; Qu et al., 2019), bounding boxes (Yang et al., 2018) or partial pixel-level annotations of cancerous regions (Bokhorst et al., 2019). These approaches alleviate the need for expensive annotations by proposing new variants of loss functions (Li et al., 2019a), feature encoding strategies (Tellez et al., 2019b; Akbar and Martel, 2018), loss balancing mechanisms (Bokhorst et al., 2019), and methods to derive coarse labels from weak annotations (Qu et al., 2019), so that models that would normally require full supervision can be trained from weak annotations.
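A common building block when only some pixels are annotated (e.g., partial pixel-level annotations or points) is a loss that ignores unannotated pixels. The sketch below is a generic masked cross-entropy, written under the assumption that unannotated pixels carry a hypothetical ignore value of -1. It is not the specific loss proposed by Li et al. (2019a) or the balancing scheme of Bokhorst et al. (2019).

```python
import numpy as np

def partial_cross_entropy(probs, labels, ignore_index=-1, eps=1e-7):
    """Mean cross-entropy computed over annotated pixels only.

    probs:  (C, H, W) per-class probabilities (e.g. a softmax output)
    labels: (H, W) integer class map; pixels equal to ignore_index are unannotated
    """
    rows, cols = np.nonzero(labels != ignore_index)
    if rows.size == 0:
        return 0.0  # no annotated pixels, so no supervision signal
    # Probability assigned to the annotated class at each annotated pixel.
    p_true = probs[labels[rows, cols], rows, cols]
    return float(-np.log(np.clip(p_true, eps, 1.0)).mean())
```

Because unannotated pixels never enter the sum, the predictions there are unconstrained by this term, which is why such losses are usually combined with other cues (e.g., derived coarse labels) in the cited works.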

3.3. Unsupervised learning

The goal of unsupervised learning is to learn something useful about the underlying structure of the data without the use of labels. The term “unsupervised” is sometimes used loosely in the digital pathology community for approaches that are not fully unsupervised. For instance, unpaired stain transfer and domain adaptation via feature distribution matching are considered unsupervised, even though the domain membership of each image effectively acts as a label distinguishing two separate datasets (Gadermayr et al., 2019a; de Bel et al., 2019; Ganin et al., 2016). In this survey, we examine fully unsupervised methods, where the raw data comes in the form of images without any identifiers (e.g., domain, cancerous vs. non-cancerous, tissue type). These approaches are rare, since unsupervised learning is still in its infancy in the broader machine learning community as well. Nonetheless, interest in such approaches is clear: the scarcity of labeled data, due to regulatory concerns and labor costs (i.e., expert annotations), is a major bottleneck to achieving clinically satisfactory performance in medical imaging (Lee and Yoon, 2017).

In unsupervised learning, the learning task is ambiguous since, without any restrictions, the inputs can be mapped into infinitely many subsets. Most unsupervised approaches therefore model the probability distribution of the data (e.g., by maximizing its likelihood) subject to constraints that limit the solution space and achieve a desired grouping or clustering for the target task. A common technique is to transform the data into a lower-dimensional subspace and then aggregate the feature representations into mutually exclusive or hierarchical clusters, as illustrated in Fig. 5. Autoencoders are typically used for the dimensionality reduction step. Recent advances in modeling stochasticity (Kingma and Welling, 2013) and in disentangling visual features more robustly (Higgins et al., 2017; Chen et al., 2018) have made autoencoders more attractive for feature modeling and dimensionality reduction. In early work, sparse autoencoders were used for unsupervised nuclei detection (Xu et al., 2015a). Later, detection performance was improved by modifying the receptive field of the convolutional filters to accommodate small nuclei (Hou et al., 2019b). For more complex tasks, such as tissue and cell classification, Generative Adversarial Networks (GANs) have also been employed. Specifically, InfoGANs (Chen et al., 2016b), which maximize the mutual information between a predefined subset of latent (noise) codes and the generated images, have been used to extract features for tasks such as cell-level classification, nuclei segmentation and cell counting (Hu et al., 2018a).
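The two-step pipeline of dimensionality reduction followed by clustering can be sketched in NumPy. To keep the example short and self-contained, PCA computed with an SVD stands in for the autoencoder (a linear autoencoder trained with a squared-error loss learns the same subspace as PCA). Plain k-means with a farthest-point initialization stands in for the clustering step. Both substitutions are assumptions of this sketch, and in practice the feature vectors would be derived from image patches.

```python
import numpy as np

def pca_embed(X, n_components):
    """Project the rows of X (N, D) onto their top principal directions."""
    Xc = X - X.mean(axis=0)
    _, _, Vt = np.linalg.svd(Xc, full_matrices=False)  # rows of Vt are principal directions
    return Xc @ Vt[:n_components].T

def kmeans(Z, k, n_iter=100, seed=0):
    """Plain k-means on embeddings Z (N, d) with farthest-point initialization."""
    rng = np.random.default_rng(seed)
    centers = [Z[rng.integers(len(Z))]]
    for _ in range(1, k):
        # Next initial center: the point farthest from all centers chosen so far.
        dist = np.min([((Z - c) ** 2).sum(axis=1) for c in centers], axis=0)
        centers.append(Z[dist.argmax()])
    centers = np.array(centers)
    for _ in range(n_iter):
        # Assignment step: each point joins its nearest center.
        d2 = ((Z[:, None, :] - centers[None, :, :]) ** 2).sum(axis=-1)
        labels = d2.argmin(axis=1)
        # Update step: move each center to the mean of its points (keep it if the cluster is empty).
        new_centers = np.array([
            Z[labels == j].mean(axis=0) if np.any(labels == j) else centers[j]
            for j in range(k)
        ])
        if np.allclose(new_centers, centers):
            break
        centers = new_centers
    return labels, centers
```

On two synthetic, well-separated groups of 20-dimensional feature vectors, embedding into two dimensions and running k-means with k = 2 recovers the two groups (up to a permutation of cluster labels).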

Finally, we examine unsupervised transfer learning approaches, where instead of directly applying the learned features to a target task, the learned mapping functions are used as an initialization for target tasks, possibly with very few labeled training images. Using a loss term similar to the reconstruction objective of autoencoders, Chang et al. (2017) train a convolutional network on unlabeled images from a specific modality (e.g., brain MRI or kidney histology images) to learn filter banks at different scales. The resulting filters are shift-invariant and scale-specific, and can uncover intricate patterns in various tasks, such as tumour classification of glioblastoma multiforme or kidney renal clear cell carcinoma. In machine learning, this form of unsupervised learning is called “self-supervised” learning. Because self-supervised techniques can generally handle larger images, they offer a promising alternative to clustering approaches in histopathology, where tasks usually require context and a larger field of view. Context-based self-supervised methods that predict spatial ordering (Noroozi and Favaro, 2016) or image rotations (Gidaris et al., 2018), and generative methods such as colorization (mapping grayscale images to their RGB counterparts), have been successfully used to initialize networks for faster convergence and for learning target tasks with fewer labels. However, in histopathology, the rules governing the spatial arrangement of cell structures, or the color and staining of an image, differ from those of natural scene images. While this makes unsupervised learning more difficult for histopathology images, it also presents an opportunity for researchers to develop novel techniques that may be applicable to medical images.
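As an illustration of a context-based pretext task, the sketch below generates training data for rotation prediction in the spirit of Gidaris et al. (2018). Each unlabeled patch is rotated by 0, 90, 180 and 270 degrees, and the rotation index becomes a free label for a classifier. Only the data generation is shown; the network and training loop are omitted, and the (N, H, W, C) layout with square patches is an assumption. As noted above, histology patches have no canonical orientation, so this particular pretext task may be less informative for histopathology than for natural images.

```python
import numpy as np

def make_rotation_pretext_batch(patches):
    """Build a self-supervised rotation-prediction batch.

    patches: (N, H, W, C) array of unlabeled square patches (H == W)
    Returns (4N, H, W, C) rotated patches and (4N,) labels, where label k
    means the patch was rotated by k * 90 degrees counter-clockwise.
    """
    rotated, labels = [], []
    for k in range(4):
        rotated.append(np.rot90(patches, k=k, axes=(1, 2)))  # rotate the spatial axes only
        labels.append(np.full(len(patches), k, dtype=np.int64))
    return np.concatenate(rotated), np.concatenate(labels)
```

A network pre-trained to predict these labels could then be fine-tuned on the target task with fewer labeled images.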

Unsupervised learning methods are desirable because they allow models to be trained with little or no labeled data. Furthermore, because these methods are designed to disentangle relationships between samples in the dataset for grouping (or clustering), a successful unsupervised method can also improve interpretability, since one can examine how the model groups items into separate categories. While fully unsupervised methods for arbitrary tasks are still uncommon, techniques for auxiliary tasks such as self-supervised pre-training (Tellez et al., 2019b) can reduce the annotation burden on experts and thereby accelerate research.

3.4. Transfer learning

Transfer learning is the most popular and widely adopted technique in digital pathology. In transfer learning, the goal is to extract knowledge from one domain (the source) and apply it to another domain (the target), relaxing the assumption that the training and test data must be independent and identically distributed. In histopathology, transfer learning is typically done using ImageNet-pretrained models such as VGGNet (Simonyan and Zisserman, 2014), InceptionNet (Szegedy et al., 2015, 2016), ResNet (He et al., 2016), MobileNet (Howard et al., 2017), DenseNet (Huang et al., 2017) and their variants. These pre-trained models have been widely applied to various cancer grading and prognosis tasks (see Table 4 and Section 4 for more details). Section 5.1 provides a critical analysis of the best-performing methods in various Grand Challenges.

Table 4:

Overview of transfer learning models. Note: (✓) indicates that the code is publicly available and the link is provided in the respective paper.

| Reference | Cancer types | Staining | Application | Method | Dataset |
|---|---|---|---|---|---|
| Wang et al. (2016a) | Breast | H&E | Detection of breast cancer metastasis | Pre-trained GoogLeNet model | Camelyon16 (400 WSIs) |
| Liu et al. (2017) | Breast | H&E | Detection of breast cancer metastasis | Pre-trained Inception-V3 model | Camelyon16 (400 WSIs) |
| Han et al. (2017) | Breast | H&E | Breast cancer multi-classification | CNN integrated with feature-space distance constraints for identifying feature-space similarities | BreaKHis (7,909 images) |
| Lee and Paeng (2018) | Breast | H&E | Detection and pN-stage classification of breast cancer metastasis | Patch-based CNN for metastasis detection + random forest classifier for lymph node classification | Camelyon17 (1,000 WSIs) |
| Chennamsetty et al. (2018) | Breast | H&E | Breast cancer classification | Ensemble of three pre-trained CNNs + aggregation using majority voting | BACH 2018 challenge (400 WSIs) |
| Kwok (2018) | Breast | H&E | Breast cancer classification | Inception-ResNet-V2 based patch classifier | BACH 2018 challenge (400 WSIs) |
| Bychkov et al. (2018) | Colon | H&E | Outcome prediction of colorectal cancer | Pre-trained VGG-16 features + 3-layer LSTM to predict colorectal cancer outcome | Private set (420 cases) |
| Arvaniti et al. (2018) (✓) | Prostate | H&E | Predicting Gleason score | Pre-trained MobileNet architecture | Private set (886 cases) |
| Coudray et al. (2018) (✓) | Lung | H&E | Genomics prediction from pathology images | Patch-based Inception-V3 model | TCGA (1,634 WSIs) and validated on an independent private set containing frozen sections (98 slides), FFPE sections (140 slides) and lung biopsies (102 slides) |
| Kather et al. (2019) (✓) | Colon | H&E | Survival prediction of colorectal cancer | Pre-trained VGG-19 based patch classifier | TCGA (862 WSIs) and two other public datasets (25 + 86 WSIs) |
| Noorbakhsh et al. (2019) (✓) | Multi-cancer | H&E | Pan-cancer classification | Pre-trained Inception-V3 model | TCGA (27,815 WSIs) |
| Tabibu et al. (2019) (✓) | Kidney | H&E | Classification of renal cell carcinoma subtypes and survival prediction | Pre-trained ResNet based patch classifier | TCGA (2,093 WSIs) |
| Akbar et al. (2019) | Breast | H&E | Tumour cellularity (TC) scoring | Two separate InceptionNets: one for classification (healthy vs. cancerous tissue), the other outputting regression scores for TC | BreastPathQ (96 WSIs) |
| Valkonen et al. (2019) (✓) | Breast | ER, PR, Ki-67 | Cell detection | Fine-tuned, partially pre-trained CNN | DigitalPanCK (152 invasive breast cancer images) |
| Ström et al. (2020) | Prostate | H&E | Grading of prostate cancer | Ensembles of two pre-trained Inception-V3 models | Private set (8,730 WSIs) |
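Several methods in Table 4 combine multiple predictions into one, e.g., the ensemble of three pre-trained CNNs aggregated by majority voting in Chennamsetty et al. (2018). A minimal sketch of majority voting over the class predictions of several models (or, equivalently, several patches of one image) follows. Breaking ties in favour of the lowest class index is an arbitrary assumption of this sketch, not a detail taken from the cited work.

```python
import numpy as np

def majority_vote(predictions, n_classes):
    """Combine integer class predictions by majority voting.

    predictions: (n_models, n_samples) array of class indices in [0, n_classes)
    Returns (n_samples,) winning classes; ties go to the lowest class index.
    """
    predictions = np.asarray(predictions)
    counts = np.zeros((n_classes, predictions.shape[1]), dtype=int)
    for c in range(n_classes):
        counts[c] = (predictions == c).sum(axis=0)  # votes for class c, per sample
    return counts.argmax(axis=0)  # argmax returns the first maximum, i.e. the lowest tied class
```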

In digital pathology, different types of staining are used depending on the application. Immunohistochemistry (IHC) allows specific molecular targets to be visualized (e.g., Ki-67 to estimate the tumour cell proliferation rate (Valkonen et al., 2019), and cytokeratin to detect micrometastases (Ehteshami Bejnordi et al., 2017)), whilst H&E is a widely used general-purpose stain. The appearance of images varies widely depending on the stain used and on the degree of staining. This poses a unique challenge, as CNNs are highly sensitive to the characteristics of the data they were trained on (Ciompi et al., 2017). In the following sub-sections, we review two approaches used to overcome this problem.

3.4.1. Domain adaptation

Domain adaptation is a sub-field of transfer learning in which a task is learned from one or more source domains with labeled data, and the aim is to achieve similar performance on the same task in a target domain with little or no labeled data (Wang and Deng, 2018). Domain-adversarial networks are designed to learn features that are discriminative for the main prediction task whilst being insensitive to domain shift (Ganin et al., 2016; Lafarge et al., 2017; Ren et al., 2018), and this approach has been applied to digital pathology. Ciga et al. (2019) achieved state-of-the-art performance on the BACH (BreAst Cancer Histology) challenge task using a multi-level domain-adversarial network. Ren et al. (2018) performed unsupervised training with siamese networks on prostate WSIs, positing that different patches from the same WSI should be assigned the same Gleason score, thereby extracting features common to different parts of the WSI. This auxiliary task also improved adversarial domain adaptation performance on another target dataset.
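The adversarial idea of Ganin et al. (2016) can be illustrated with a toy linear model whose gradients are written out by hand. A shared feature extractor W feeds a label head a (trained on labeled source data) and a domain head b (trained to tell source from target). The domain head descends the domain loss, while the feature extractor receives the domain gradient with its sign flipped and scaled by lam, which is what a gradient reversal layer does inside an autodiff framework. Everything here (linear layers, binary labels, the values of lam and lr, the function name) is a simplifying assumption, and it is not the multi-level architecture of Ciga et al. (2019).

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def dann_step(params, Xs, ys, Xt, lam=0.1, lr=0.05):
    """One gradient step of a toy linear domain-adversarial network.

    params: dict with "W" (F, D) feature extractor, "a" (F,) label head,
            "b" (F,) domain head
    Xs, ys: labeled source batch, shapes (Ns, D) and (Ns,) with labels in {0, 1}
    Xt:     unlabeled target batch, shape (Nt, D)
    """
    W, a, b = params["W"], params["a"], params["b"]
    ns = len(Xs)
    X = np.vstack([Xs, Xt])
    d = np.concatenate([np.zeros(ns), np.ones(len(Xt))])  # domain labels: 0 = source, 1 = target
    feats = X @ W.T                                        # shared features, shape (N, F)

    # Label loss: binary cross-entropy on source samples only.
    gy = (sigmoid(feats[:ns] @ a) - ys) / ns               # dL_y / d(label logit)
    grad_a = feats[:ns].T @ gy
    grad_feats_label = np.zeros_like(feats)
    grad_feats_label[:ns] = np.outer(gy, a)

    # Domain loss: binary cross-entropy on source and target samples.
    gd = (sigmoid(feats @ b) - d) / len(X)                 # dL_d / d(domain logit)
    grad_b = feats.T @ gd
    grad_feats_domain = np.outer(gd, b)

    # Gradient reversal: the domain head descends L_d, but the feature extractor
    # receives -lam * dL_d/dfeats, i.e. it descends L_y - lam * L_d and is pushed
    # towards features that the domain head cannot separate.
    grad_feats = grad_feats_label - lam * grad_feats_domain
    grad_W = grad_feats.T @ X                              # shape (F, D)

    return {"W": W - lr * grad_W, "a": a - lr * grad_a, "b": b - lr * grad_b}
```

Setting lam = 0 recovers ordinary source-only training, in which the domain head has no influence on the learned features.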

Fake (artificially generated) images are also used in domain adaptation. Brieu et al. (2019) used semi-automatic labeling of nuclei in images with one type of staining (IHC) to reduce the need for the costlier annotation of images with another staining method (H&E), generating fake H&E images from IHC images to increase the dataset size. Similarly, Gadermayr et al. (2019a) used GAN-based artificial data generation for semantic segmentation of kidney histology images with multiple stains. Both works use adversarial models (i.e., generators and discriminators) for image-to-image translation, with a cycle-consistency loss enabling unpaired training. The translation is performed to obtain an intermediate, stain-agnostic representation (Lahiani et al., 2019), which is then fed to a network trained on this representation to perform segmentation.
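The cycle-consistency term that makes unpaired training possible penalizes a round trip through both generators. With G mapping the source stain to the target stain and F mapping back, L_cyc = E‖F(G(x)) - x‖₁ + E‖G(F(y)) - y‖₁. The sketch below evaluates this loss for arbitrary callables. The channel-swap "generator" in it is a hypothetical stand-in for a trained network, and in a full CycleGAN-style model this term is added, with a weight, to the adversarial losses of the two discriminators.

```python
import numpy as np

def cycle_consistency_loss(x, y, G, F):
    """L1 cycle-consistency loss for unpaired image-to-image translation.

    x: batch of images from domain X (e.g. IHC); y: batch from domain Y (e.g. H&E)
    G: callable mapping X -> Y; F: callable mapping Y -> X
    The mean absolute error serves as the expectation of the L1 norm.
    """
    forward_cycle = np.abs(F(G(x)) - x).mean()   # x -> G(x) -> F(G(x)) should return to x
    backward_cycle = np.abs(G(F(y)) - y).mean()  # y -> F(y) -> G(F(y)) should return to y
    return float(forward_cycle + backward_cycle)

def swap_red_blue(images):
    """Hypothetical toy generator: reversing RGB channels swaps red and blue (its own inverse)."""
    return images[..., ::-1]
```

Using swap_red_blue for both G and F gives a loss of exactly zero, whereas pairing it with an identity mapping gives a positive loss, since the round trip no longer returns the input.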

3.4.2. Stain normalization

Stain normalization, stain augmentation and stain transfer are popular image preprocessing techniques that improve the generalization of a task by modifying the staining properties of a given image, for example so that it visually matches another image. In contrast to the methods described in Section 3.4.1, which modify the features extracted from different image distributions so that they are indistinguishable from each other, stain normalization directly modifies the input images to obtain features that are invariant to staining variability.
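The simplest form of stain normalization matches the color statistics of an image to those of a reference image. The sketch below shifts and scales each RGB channel of a source image to the per-channel mean and standard deviation of a target image. This is a deliberate simplification: the well-known Reinhard method performs this matching in the lαβ color space rather than in RGB, and stain-specific methods such as Macenko's instead estimate stain vectors in optical-density space. The function name and the [0, 1] float input range are assumptions.

```python
import numpy as np

def match_channel_statistics(source, target, eps=1e-8):
    """Map each channel of `source` to the per-channel mean and std of `target`.

    source, target: float arrays of shape (H, W, 3) with values in [0, 1]
    Returns an array shaped like `source`, clipped back to [0, 1].
    """
    src = source.reshape(-1, source.shape[-1])
    tgt = target.reshape(-1, target.shape[-1])
    # Standardize each source channel, then rescale to the target channel statistics.
    out = (src - src.mean(axis=0)) / (src.std(axis=0) + eps) * tgt.std(axis=0) + tgt.mean(axis=0)
    return np.clip(out, 0.0, 1.0).reshape(source.shape)
```

Because the mapping is a per-channel affine transform, it preserves the relative intensity structure within each channel but cannot correct stain-specific effects that mix channels, which motivates the more elaborate methods reviewed in this section.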