Abstract

Active inference is a normative principle underwriting perception, action, planning, decision-making and learning in biological or artificial agents. Since its inception, its associated process theory has grown to incorporate complex generative models, enabling simulation of a wide range of complex behaviours. Due to successive developments in active inference, it is often difficult to see how its underlying principle relates to process theories and practical implementation. In this paper, we try to bridge this gap by providing a complete mathematical synthesis of active inference on discrete state-space models. This technical summary provides an overview of the theory, derives neuronal dynamics from first principles and relates these dynamics to biological processes. Furthermore, this paper provides a fundamental building block needed to understand active inference for mixed generative models, which allow continuous sensations to inform discrete representations. This paper may be used in three ways: to guide research towards outstanding challenges, as a practical guide on how to implement active inference to simulate experimental behaviour, or as a pointer towards various in-silico neurophysiological responses that may be used to make empirical predictions.

Keywords: Active inference, Free energy principle, Process theory, Variational Bayesian inference, Markov decision process, Mathematical review


Highlights

- We review active inference on discrete state-spaces, a framework thought to underwrite perception, action, planning, decision-making and learning in biological and artificial agents.
- We derive the associated process theory and discuss its biological plausibility.
- We discuss outstanding challenges for the theory, its implementation and empirical validation.

1. Introduction

Active inference is a normative principle underlying perception, action, planning, decision-making and learning in biological or artificial agents, that inherits from the free energy principle, a theory of self-organisation in the neurosciences (Buckley et al., 2017, Friston, 2019, Friston et al., 2006). Active inference postulates that these processes may all be seen as optimising two complementary objective functions; namely, a variational free energy, which measures the fit between an internal model and past sensory observations, and an expected free energy, which scores possible future courses of action in relation to prior preferences.

Active inference has been employed to simulate a wide range of complex behaviours in neuropsychology and machine learning, including planning and navigation (Kaplan & Friston, 2018a), reading (Friston et al., 2018b), curiosity and abstract rule learning (Friston, Lin et al., 2017), substance use disorder (Smith, Schwartenbeck et al., 2020), approach avoidance conflict (Smith, Kirlic et al., 2020), saccadic eye movements (Parr & Friston, 2018a), visual foraging (Mirza et al., 2016, Parr and Friston, 2017a), visual neglect (Parr & Friston, 2018c), hallucinations (Adams et al., 2013), niche construction (Bruineberg et al., 2018, Constant et al., 2018), social conformity (Constant et al., 2019), impulsivity (Mirza et al., 2019), image recognition (Millidge, 2019), and the mountain car problem (Çatal et al., 2019, Friston, Adams et al., 2012, Friston et al., 2009). The key idea that underwrites these simulations is that creatures use an internal forward (generative) model to predict their sensory input, which they use to infer the causes of these data. In addition to simulating behaviour, active inference makes it possible to answer questions about an individual’s psychological processes, by comparing the evidence for different mechanistic hypotheses in relation to behavioural data.

Active inference is very generic and allows different models of behaviour to be viewed in the same light. For example, a drift diffusion model can now be seen in relation to predictive coding, as both can be interpreted as minimising free energy through a process of evidence accumulation (Bogacz, 2017, Buckley et al., 2017, Friston and Kiebel, 2009). Similarly, a dynamic programming model of choice behaviour corresponds to minimising expected free energy under the prior preference of maximising reward (Da Costa et al., 2020). Being generic, active inference is not meant to replace any of the existing models; rather, it should be used as a tool to uncover the commitments and assumptions of more specific models.

Early formulations of active inference employed generative models expressed in continuous space and time (for an introduction see Bogacz, 2017, for a review see Buckley et al., 2017), with behaviour modelled as a continuously evolving random dynamical system. However, we know that some processes in the brain conform better to discrete, hierarchical, representations, compared to continuous representations (e.g., visual working memory (Luck and Vogel, 1997, Zhang and Luck, 2008), state estimation via place cells (Eichenbaum et al., 1999, O’Keefe and Dostrovsky, 1971), language, etc.). Reflecting this, many of the paradigms studied in neuroscience are naturally framed as discrete state-space problems. Decision-making tasks are a prime candidate for this, as they often entail a series of discrete alternatives that an agent needs to choose among (e.g., multi-arm bandit tasks (Daw et al., 2006, Reverdy et al., 2013, Wu et al., 2018), multi-step decision tasks (Daw et al., 2011)). This explains why – in active inference – agent behaviour is often modelled using a discrete state-space formulation, the particular applications of which are summarised in Table 1. More recently, mixed generative models (Friston, Parr et al., 2017) – combining discrete and continuous states – have been used to model behaviour involving discrete and continuous representations (e.g., decision-making and movement (Parr & Friston, 2018d), speech production and recognition (Friston, Sajid et al., 2020), pharmacologically induced changes in eye-movement control (Parr & Friston, 2019) or reading; involving continuous visual sampling informing inferences about discrete semantics (Friston, Parr et al., 2017)).

Table 1.

Applications of active inference (discrete state-space).

| Application | Description | References |
|---|---|---|
| Decision-making under uncertainty | Initial formulation of active inference on partially observable Markov decision processes. | Friston, Samothrakis et al. (2012) |
| Optimal control | Application of KL or risk sensitive control in an engineering benchmark, the mountain car problem. | Çatal et al. (2019) and Friston, Adams et al. (2012) |
| Evidence accumulation | Illustrating the role of evidence accumulation in decision-making through an urns task. | FitzGerald, Moran et al. (2015) and FitzGerald, Schwartenbeck et al. (2015) |
| Psychopathology | Simulation of addictive choice behaviour. | Schwartenbeck, FitzGerald, Mathys, Dolan, Wurst et al. (2015) |
| Dopamine | The precision of beliefs about policies provides a plausible description of dopaminergic discharges. | Friston et al. (2014) and FitzGerald, Dolan et al. (2015) |
| Functional magnetic resonance imaging | Empirical prediction and validation of dopaminergic discharges. | Schwartenbeck, FitzGerald, Mathys, Dolan and Friston (2015) |
| Maximal utility theory | Evidence in favour of surprise minimisation as opposed to utility maximisation in human decision-making. | Schwartenbeck, FitzGerald, Mathys, Dolan, Kronbichler et al. (2015) |
| Social cognition | Examining the effect of prior preferences on interpersonal inference. | Moutoussis et al. (2014) |
| Exploration–exploitation dilemma | Casting behaviour as expected free energy minimising accounts for epistemic and pragmatic choices. | Friston et al. (2015) |
| Habit learning and action selection | Formulating learning as an inferential process and action selection as Bayesian model averaging. | Friston et al. (2016) and FitzGerald et al. (2014) |
| Scene construction and anatomy of time | Mean-field approximation for multi-factorial hidden states, enabling high dimensional representations of the environment. | Friston and Buzsáki (2016) and Mirza et al. (2016) |
| Electrophysiological responses | Synthesising various in-silico neurophysiological responses via a gradient descent on free energy. E.g., place-cell activity, mismatch negativity, phase-precession, theta sequences, theta–gamma coupling and dopaminergic discharges. | Friston, FitzGerald et al. (2017) |
| Structure learning, curiosity and insight | Simulation of artificial curiosity and abstract rule learning. Structure learning via Bayesian model reduction. | Friston, Lin et al. (2017) |
| Hierarchical temporal representations | Generalisation to hierarchical generative models with deep temporal structure and simulation of reading. | Friston et al. (2018b) and Parr and Friston (2017b) |
| Computational neuropsychology | Simulation of visual neglect, hallucinations, and prefrontal syndromes under alternative pathological priors. | Benrimoh et al. (2018), Parr, Benrimoh et al. (2018), Parr and Friston (2018c), Parr, Rees et al. (2018) and Parr, Rikhye et al. (2019) |
| Neuromodulation | Use of precision parameters to manipulate exploration during saccadic searches; associating uncertainty with cholinergic and noradrenergic systems. | Parr and Friston (2017a), Parr and Friston (2019), Sales et al. (2018) and Vincent et al. (2019) |
| Decisions to movements | Mixed generative models combining discrete and continuous states to implement decisions through movement. | Friston, Parr et al. (2017) and Parr and Friston (2018d) |
| Planning, navigation and niche construction | Agent induced changes in environment (generative process); decomposition of goals into subgoals. | Bruineberg et al. (2018), Constant et al. (2018) and Kaplan and Friston (2018a) |
| Atari games | Active inference compares favourably to reinforcement learning in the game of Doom. | Cullen et al. (2018) |
| Machine learning | Scaling active inference to more complex machine learning problems. | Tschantz et al. (2019) |

Due to the pace of recent theoretical advances in active inference, it is often difficult to retain a comprehensive overview of its process theory and practical implementation. In this paper, we hope to provide a comprehensive (mathematical) synthesis of active inference on discrete state-space models. This technical summary provides an overview of the theory, derives the associated (neuronal) dynamics from first principles and relates these to known biological processes. Furthermore, this paper and Buckley et al. (2017) provide the building blocks necessary to understand active inference on mixed generative models. This paper can be read as a practical guide on how to implement active inference for simulating experimental behaviour, or a pointer towards various in-silico neuro- and electro-physiological responses that can be tested empirically.

This paper is structured as follows. Section 2 is a high-level overview of active inference. The following sections elucidate the formulation by deriving the entire process theory from first principles; incorporating perception, planning and decision-making. This formalises the action–perception cycle: (1) an agent is presented with a stimulus, (2) it infers its latent causes, (3) plans into the future and (4) realises its preferred course of action; the cycle then repeats. This enactive cycle allows us to explore the dynamics of synaptic plasticity, which mediate learning of the contingencies of the world at slower timescales. We conclude in Section 9 with an overview of structure learning in active inference.

2. Active inference

To survive in a changing environment, biological (and artificial) agents must maintain their sensations within a certain hospitable range (i.e., maintaining homeostasis through allostasis). In brief, active inference proposes that agents achieve this by optimising two complementary objective functions, a variational free energy and an expected free energy. The former measures the fit between the agent’s internal (generative) model of its sensations and sensory observations, while the latter scores each possible course of action in terms of its ability to reach the range of “preferred” states of being.

Our first premise is that agents represent the world through an internal model. Through minimisation of variational free energy, this model becomes a good model of the environment. In other words, this probabilistic model and the probabilistic beliefs1 that it encodes are continuously updated to mirror the environment and its dynamics. Such a world model is considered to be generative; in that it is able to generate predictions about sensations (e.g., during planning or dreaming), given beliefs about future states of being. If an agent senses a heat source (e.g., another agent) via some temperature receptors, the sensation of warmth represents an observed outcome and the temperature of the heat source a hidden state; minimisation of variational free energy then ensures that beliefs about hidden states closely match the true temperature. Formally, the generative model is a joint probability distribution over possible hidden states and sensory consequences – that specifies how the former cause the latter – and minimisation of variational free energy makes it possible to “invert” the model; i.e., determine the most likely hidden states given sensations. The variational free energy is the negative evidence lower bound that is optimised in variational Bayes in machine learning (Bishop, 2006, Xitong, 2017). Technically – by minimising variational free energy – agents perform approximate Bayesian inference (Sengupta and Friston, 2016, Sengupta et al., 2016), which enables them to infer the causes of their sensations (e.g., perception). This is the point of contact between active inference and the Bayesian brain (Aitchison and Lengyel, 2017, Friston, 2012, Knill and Pouget, 2004). Crucially, agents may incorporate an optimism bias (McKay and Dennett, 2009, Sharot, 2011) in their model; thereby scoring certain “preferred” sensations as more likely. This lends a higher plausibility to those courses of action that realise these sensations. In other words, a preference is simply something an agent (believes it) is likely to work towards.
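The heat-source example can be made concrete with a toy model. The following is a minimal sketch, not the scheme derived later in the paper: it inverts a small discrete generative model by exact Bayes rule, which is only feasible because the model is tiny. The state labels, outcome labels and all probabilities are hypothetical values chosen purely for illustration.

```python
import numpy as np

# Hypothetical hidden states: temperature of the heat source.
states = ["cold", "warm", "hot"]
# Hypothetical outcomes: what the temperature receptor reports.
outcomes = ["no warmth", "mild warmth", "strong warmth"]

# Prior beliefs over hidden states, P(s) (illustrative numbers).
prior = np.array([0.5, 0.3, 0.2])

# Likelihood P(o | s): row = outcome, column = state; each column sums to 1.
likelihood = np.array([
    [0.80, 0.20, 0.05],
    [0.15, 0.60, 0.25],
    [0.05, 0.20, 0.70],
])

def invert(observed: int) -> np.ndarray:
    """Exact Bayesian inversion: posterior P(s | o) for one observed outcome."""
    joint = likelihood[observed] * prior   # P(o, s) for the observed outcome
    return joint / joint.sum()             # normalise by the evidence P(o)

posterior = invert(outcomes.index("strong warmth"))
print(dict(zip(states, posterior.round(3))))
```

In this toy setting the posterior shifts towards the hotter states once strong warmth is sensed. For richer models the normalising evidence is not available in closed form, which is where the variational treatment becomes necessary.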

To maintain homeostasis, and ensure survival, agents must minimise surprise.2 Since the generative model scores preferred outcomes as more likely, minimising surprise corresponds to maximising model evidence.3 In active inference, this is assured by the aforementioned processes; indeed, the variational free energy turns out to be an upper bound on surprise and minimising expected free energy ensures preferred outcomes are realised, thereby avoiding surprise on average.

Active inference can thus be framed as the minimisation of surprise (Friston, 2009, Friston, 2010, Friston et al., 2006, Friston and Stephan, 2007) by perception and action. In discrete state models – of the sort discussed here – this means agents select from different possible courses of action (i.e., policies) in order to realise their preferences and thus minimise the surprise that they expect to encounter in the future. This enables a Bayesian formulation of the perception–action cycle (Fuster, 1990): agents perceive the world by minimising variational free energy, ensuring their model is consistent with past observations, and act by minimising expected free energy, to make future sensations consistent with their model. This account of behaviour can be concisely framed as self-evidencing (Hohwy, 2016).

In contrast to other normative models of behaviour, active inference is a ‘first principle’ account, which is grounded in statistical physics (Friston, 2019, Parr et al., 2020). Active inference describes the dynamics of systems that persist (i.e., do not dissipate) during some timescale of interest, and that can be statistically segregated from their environment; conditions which are satisfied by biological systems. Mathematically, the first condition means that the system is at non-equilibrium steady-state (NESS). This implies the existence of a steady-state probability density to which the system self-organises and returns after perturbation (i.e., the agent’s preferences). The statistical segregation condition is the presence of a Markov blanket (c.f., Fig. 1) (Kirchhoff et al., 2018, Pearl, 1998): a set of variables through which states internal and external to the system interact (e.g., the skin is a Markov blanket for the human body). Under these assumptions it can be shown that the states internal to the system parameterise Bayesian beliefs about external states and that their dynamics can be cast as a process of variational free energy minimisation (Friston, 2019, Parr et al., 2020). This coincides with existing approaches to approximate inference (Beal, 2003, Bishop, 2006, Blei et al., 2017, Jordan et al., 1998). Furthermore, it can be shown that the most likely courses of action taken by those systems are those which minimise expected free energy (or a variant thereof, see Appendix C), a quantity that subsumes many existing constructs in science and engineering (see Section 7).

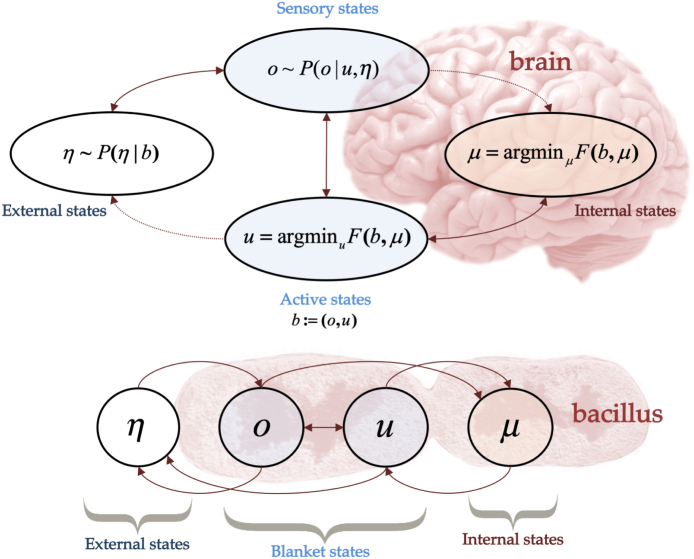

Fig. 1.

Markov blankets in active inference. This figure illustrates the Markov blanket assumption of active inference. A Markov blanket is a set of variables through which states internal and external to the system interact. Specifically, the system must be such that we can partition it into a Bayesian network of internal states, external states, sensory states and active states (internal, sensory and active states are often referred to together as particular states), with probabilistic (causal) links in the directions specified by the arrows. All interactions between internal and external states are therefore mediated by the blanket states (i.e., the sensory and active states). The sensory states represent the sensory information that the body receives from the environment and the active states express how the body influences the environment. This blanket assumption is quite generic, in that it can be reasonably assumed for a brain as well as elementary organisms. For example, when considering a bacillus, the sensory states become the cell membrane and the active states comprise the actin filaments of the cytoskeleton. Under the Markov blanket assumption – together with the assumption that the system persists over time (i.e., possesses a non-equilibrium steady state) – a generalised synchrony appears, such that the dynamics of the internal states can be cast as performing inference over the external states (and vice versa) via a minimisation of variational free energy (Friston, 2019, Parr et al., 2020). This coincides with existing approaches to inference; i.e., variational Bayes (Beal, 2003, Bishop, 2006, Blei et al., 2017, Jordan et al., 1998). This can be viewed as the internal states mirroring external states, via sensory states (e.g., perception), and external states mirroring internal states via active states (e.g., a generalised form of self-assembly, autopoiesis or niche construction). Furthermore, under these assumptions the most likely courses of action can be shown to minimise expected free energy. Note that external states beyond the system should not be confused with the hidden states of the agent’s generative model (which model external states). In fact, the internal states are exactly the parameters (i.e., sufficient statistics) encoding beliefs about hidden states and other latent variables, which model external states in a process of variational free energy minimisation. Hidden and external states may or may not be isomorphic. In other words, an agent uses its internal states to represent hidden states that may or may not exist in the external world.

By subscribing to the above assumptions, it is possible to describe the behaviour of viable living systems as performing active inference; the remaining challenge is to determine the computational and physiological processes that they implement to do so. This paper aims to summarise possible answers to this question, by reviewing the technical details of a process theory for active inference on discrete state-space generative models, first presented in Friston, FitzGerald et al. (2017). Note that it is important to distinguish active inference as a principle (presented above) from active inference as a process theory. The former is a consequence of fundamental assumptions about living systems, while the latter is a hypothesis concerning the computational and biological processes in the brain that might implement active inference. The ensuing process theory can then be used to predict plausible neuronal dynamics and electrophysiological responses that are elicited experimentally.

3. Discrete state-space generative models

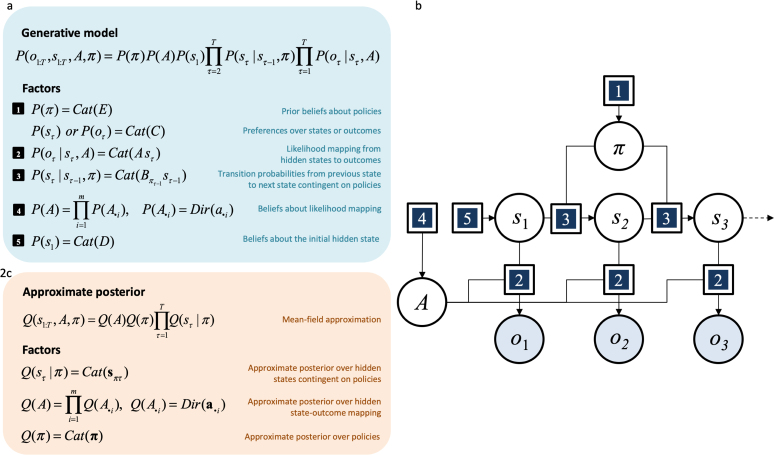

The generative model (Bishop, 2006) expresses how the agent represents the world. This is a joint probability distribution over sensory data and the hidden (or latent) causes of these data. The sorts of discrete state-space generative models used in active inference are specifically suited to represent discrete time series and decision-making tasks. These can be expressed as variants of partially observable Markov decision processes (POMDPs; Aström, 1965): from simple Markov decision processes (Barto and Sutton, 1992, Stone, 2019, White, 2001) to generalisations in the form of deep probabilistic (hierarchical) models (Allenby et al., 2005, Box and Tiao, 1965, Friston et al., 2018b). For clarity, the process theory is derived for the simplest model that facilitates understanding of subsequent generalisations; namely, a POMDP where the agent holds beliefs about the probability of the initial state (specified as $D$), the transition probabilities from one state to the next (defined as matrix $B$) and the probability of outcomes given states (i.e., the likelihood matrix $A$); see Fig. 2.

Fig. 2.

Example of a discrete state-space generative model. Panel 2a specifies the form of the generative model, which is how the agent represents the world. The generative model is a joint probability distribution over (hidden) states, outcomes and other variables that cause outcomes. In this representation, states unfold in time causing an observation at each time-step. The likelihood matrix $A$ encodes the probabilities of state–outcome pairs. The policy $\pi$ specifies which action to perform at each time-step. Note that the agent’s preferences may be specified either in terms of states or outcomes. It is important to distinguish between states (resp. outcomes) that are random variables, and the possible values that they can take in $S$ (resp. in $O$), which we refer to as possible states (resp. possible outcomes). Note that this type of representation comprises a finite number of timesteps, actions, policies, states, outcomes, possible states and possible outcomes. In Panel 2b, the generative model is displayed as a probabilistic graphical model (Bishop, 2006, Jordan et al., 1998, Pearl, 1988, Pearl, 1998) expressed in factor graph form (Loeliger, 2004). The variables in circles are random variables, while squares represent factors, whose specific forms are given in Panel 2a. The arrows represent causal relationships (i.e., conditional probability distributions). The variables highlighted in grey can be observed by the agent, while the remaining variables are inferred through approximate Bayesian inference (see Section 4) and called hidden or latent variables. Active inference agents perform inference by optimising the parameters of an approximate posterior distribution (see Section 4). Panel 2c specifies how this approximate posterior factorises under a particular mean-field approximation (Tanaka, 1999), although other factorisations may be used (Parr, Markovic et al., 2019, Schwöbel et al., 2018). A glossary of terms used in this figure is available in Table 2. The mathematical yoga of generative models is heavily dependent on Markov blankets. The Markov blanket of a random variable in a probabilistic graphical model consists of those variables that share a common factor with it. Crucially, a variable conditioned upon its Markov blanket is conditionally independent of all other variables. We will use this property extensively (and implicitly) in the text.

As mentioned above, a substantial body of work justifies describing certain neuronal representations with discrete state-space generative models (e.g., Luck and Vogel, 1997, Tee and Taylor, 2018, Zhang and Luck, 2008). Furthermore, it has long been known that – at the level of neuronal populations – computations occur periodically (i.e., in distinct and sometimes nested oscillatory bands). Similarly, there is evidence for sequential computation in a number of processes (e.g., attention Buschman and Miller, 2010, Duncan et al., 1994, Landau and Fries, 2012, visual perception Hanslmayr et al., 2013, Rolls and Tovee, 1994) and at different levels of the neuronal hierarchy (Friston, 2008, Friston et al., 2018b), in line with ideas from hierarchical predictive processing (Chao et al., 2018, Iglesias et al., 2013). This accommodates the fact that visual saccadic sampling of observations occurs at a frequency of approximately 4 Hz (Parr & Friston, 2018d). The relatively slow presentation of a discrete sequence of observations enables inferences to be performed in peristimulus time by (much) faster neuronal dynamics.

Active inference implicitly accounts for fast and slow neuronal dynamics. At each time-step the agent observes an outcome, from which it infers the past, present and future (hidden) states through perception. This underwrites a plan into the future, by evaluating (the expected free energy of) possible policies. The inferred (best) policies specify the most likely action, which is executed. At a slower timescale, parameters encoding the contingencies of the world (e.g., $A$) are inferred. This is referred to as learning. Even more slowly, the structure of the generative model is updated to better account for available observations; this is called structure learning. The following sections elucidate these aspects of the active inference process theory.

This paper will be largely concerned with deriving and interpreting the inferential dynamics that agents might implement using the generative model in Fig. 2. We leave the discussion of more complex models to Appendix A, since the derivations are analogous in those cases.
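To make the structure of such a model tangible, here is a minimal sketch of a two-state POMDP of the kind described in this section. It assumes a likelihood matrix `A`, a transition matrix `B` and an initial-state prior `D`, with a single fixed action (so there is no policy selection), and it uses exact Bayesian filtering in place of the variational scheme derived in the following sections. All numerical values, function names and the choice of two states are illustrative assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)

# Likelihood: A[o, s] = P(outcome o | state s). Columns sum to 1.
A = np.array([[0.9, 0.1],
              [0.1, 0.9]])
# Transitions: B[s_next, s] = P(s_next | s). One fixed action is assumed,
# so B is a single matrix rather than one matrix per action.
B = np.array([[0.7, 0.2],
              [0.3, 0.8]])
# Prior over the initial state.
D = np.array([0.5, 0.5])

def sample_trajectory(T: int):
    """Sample hidden states and outcomes from the generative model."""
    states, outcomes = [], []
    s = rng.choice(len(D), p=D)
    for _ in range(T):
        states.append(int(s))
        outcomes.append(int(rng.choice(A.shape[0], p=A[:, s])))
        s = rng.choice(len(D), p=B[:, s])
    return states, outcomes

def filter_states(outcomes):
    """Exact Bayesian filtering: P(s_tau | o_1, ..., o_tau) for each tau."""
    belief = D.copy()
    beliefs = []
    for o in outcomes:
        belief = A[o] * belief          # weight the prediction by the likelihood
        belief = belief / belief.sum()  # normalise to obtain the posterior
        beliefs.append(belief)
        belief = B @ belief             # predict the next hidden state
    return np.array(beliefs)

states, outcomes = sample_trajectory(T=6)
beliefs = filter_states(outcomes)
print("hidden states:", states)
print("outcomes:     ", outcomes)
print("beliefs:\n", beliefs.round(3))
```

Exact filtering is used here only because the state space is tiny; it is a stand-in for, not an instance of, the free energy minimising dynamics discussed in this paper.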

4. Variational Bayesian inference

4.1. Free energy and model evidence

Variational Bayesian inference rests upon minimisation of a quantity called (variational) free energy, which bounds the improbability (i.e., the surprise) of sensory observations, under a generative model. Simultaneously, free energy minimisation is a statistical inference technique that enables the approximation of the posterior distribution in Bayes rule. In machine learning, this is known as variational Bayes (Beal, 2003, Bishop, 2006, Blei et al., 2017, Jordan et al., 1998). Active inference agents minimise variational free energy, enabling concomitant maximisation of their model evidence and inference of the latent variables of their generative model. In the following, we consider a particular time point $t$ to be given, at which the agent has observed a sequence of outcomes $o_{1:t}$. The posterior about the latent causes of sensory data (hidden states $s_{1:T}$, likelihood parameters $A$ and policy $\pi$) is given by Bayes rule:

$$P(s_{1:T}, A, \pi \mid o_{1:t}) = \frac{P(o_{1:t} \mid s_{1:T}, A, \pi)\, P(s_{1:T}, A, \pi)}{P(o_{1:t})} \tag{1}$$

Note the policy $\pi$ is a random variable. This entails planning as inferring the best action sequence from observations (Attias, 2003, Botvinick and Toussaint, 2012). Computing the posterior distribution requires computing the model evidence $P(o_{1:t})$, which is intractable for complex generative models embodied by biological and artificial systems (Friston, 2008); a well-known problem in Bayesian statistics. An alternative to computing the exact posterior distribution is to optimise an approximate posterior distribution over latent causes $Q(s_{1:T}, A, \pi)$, by minimising the Kullback–Leibler (KL) divergence $D_{\mathrm{KL}}$ (Kullback & Leibler, 1951) between the approximate and the exact posterior; the KL divergence is a non-negative measure of discrepancy between probability distributions. We can use the definition of the KL divergence and Bayes rule to arrive at the variational free energy $F$, which is a functional of approximate posterior beliefs:

$$\begin{aligned} 0 \le D_{\mathrm{KL}}\big[Q(s_{1:T}, A, \pi) \,\|\, P(s_{1:T}, A, \pi \mid o_{1:t})\big] &= \mathbb{E}_{Q}\big[\log Q(s_{1:T}, A, \pi) - \log P(s_{1:T}, A, \pi \mid o_{1:t})\big] \\ &= \underbrace{\mathbb{E}_{Q}\big[\log Q(s_{1:T}, A, \pi) - \log P(o_{1:t}, s_{1:T}, A, \pi)\big]}_{=:\, F[Q]} + \log P(o_{1:t}) \\ \Rightarrow\; -\log P(o_{1:t}) &\le F[Q] \end{aligned} \tag{2}$$

From (2), one can see that varying $Q$ to minimise the variational free energy enables us to approximate the true posterior, while simultaneously ensuring that surprise remains low. The former offers the intuitive interpretation of the free energy as a generalised prediction error, as minimising free energy corresponds to suppressing the discrepancy between predictions, i.e., $Q(s_{1:T}, A, \pi)$, and the actual state of affairs, i.e., the posterior; and indeed for a particular class of generative models, we recover the prediction error given by predictive coding schemes (see Bogacz, 2017, Buckley et al., 2017, Friston et al., 2007). Altogether, this means that variational free energy minimising agents, simultaneously, infer the latent causes of their observations and maximise the evidence for their generative model. One should note that the free energy equals the surprise only at the global free energy minimum, when the approximate posterior $Q(s_{1:T}, A, \pi)$ equals the true posterior $P(s_{1:T}, A, \pi \mid o_{1:t})$. Outside of the global free energy minimum, the free energy upper bounds the surprise, in which case, since the true posterior is generally intractable, the tightness of the bound is generally unknowable.
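The bound can be checked numerically on a model small enough that the exact posterior is available. The sketch below assumes a single hidden variable with three possible states and one observed outcome; the probabilities and function names are hypothetical and chosen only for illustration. It evaluates the free energy for a few candidate approximate posteriors and compares it with the surprise.

```python
import numpy as np

prior = np.array([0.5, 0.3, 0.2])     # P(s), illustrative values
lik_o = np.array([0.05, 0.2, 0.7])    # P(o | s) for the one observed outcome o

joint = lik_o * prior                 # P(o, s)
evidence = joint.sum()                # P(o)
posterior = joint / evidence          # exact posterior P(s | o)
surprise = -np.log(evidence)          # -log P(o)

def free_energy(q: np.ndarray) -> float:
    """F[Q] = E_Q[log Q(s) - log P(o, s)]; assumes all entries of q are > 0."""
    return float(np.sum(q * (np.log(q) - np.log(joint))))

def kl(q: np.ndarray, p: np.ndarray) -> float:
    """KL divergence between two strictly positive categorical distributions."""
    return float(np.sum(q * (np.log(q) - np.log(p))))

candidates = {
    "prior used as Q": prior,
    "uniform Q": np.ones(3) / 3,
    "exact posterior": posterior,
}
for name, q in candidates.items():
    F = free_energy(q)
    gap = kl(q, posterior)
    print(f"{name:16s} F = {F:.4f}  surprise = {surprise:.4f}  KL gap = {gap:.4f}")
```

For every candidate, the free energy exceeds the surprise by exactly the KL divergence from the true posterior, and the gap closes only when the approximate posterior equals the exact one.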

To aid intuition, the variational free energy can be rearranged into complexity and accuracy:

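$$F[Q] = \underbrace{D_{\mathrm{KL}}\big[Q(s_{1:T}, A, \pi) \,\|\, P(s_{1:T}, A, \pi)\big]}_{\text{complexity}} - \underbrace{\mathbb{E}_{Q}\big[\log P(o_{1:t} \mid s_{1:T}, A, \pi)\big]}_{\text{accuracy}} \tag{3}$$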

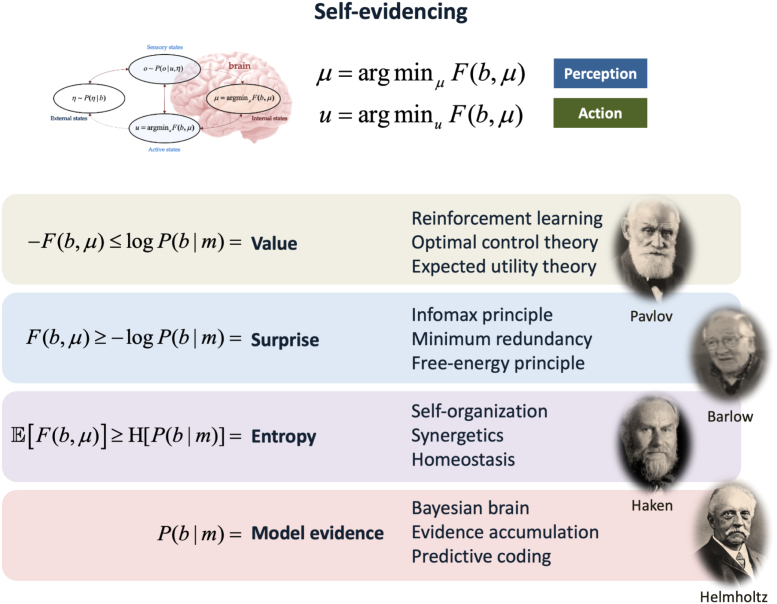

The first term of (3) can be regarded as complexity: a simple explanation for observable data $o_{1:t}$, which makes few assumptions over and above the prior (i.e., with KL divergence close to zero), is a good explanation. In other words, a good explanation is an accurate account of some data that requires minimal movement in updating prior to posterior beliefs (c.f., Occam’s principle). The second term is accuracy; namely, the (log) probability of the data expected under posterior beliefs $Q$ about latent causes and model parameters. In other words, how well the generative model fits the observed data. The idea that neural representations weigh complexity against accuracy underwrites the imperative to find the most accurate explanation for sensory observations that is minimally complex, which has been leveraged by, for instance, Horace Barlow’s principle of minimum redundancy (Barlow, 2001) and subsequently supported empirically (Dan et al., 1996, Lewicki, 2002, Olshausen and Field, 2004, Olshausen and O’Connor, 2002). Fig. 3 illustrates the various implications of minimising free energy.
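The trade-off between complexity and accuracy can be illustrated on a toy model. In the following sketch (a hypothetical three-state example with made-up probabilities, not a model from the literature), three candidate posteriors are scored: one that does not move from the prior, one that commits almost entirely to the best-fitting state, and the exact Bayesian posterior.

```python
import numpy as np

prior = np.array([0.5, 0.3, 0.2])     # P(s), illustrative values
lik_o = np.array([0.05, 0.2, 0.7])    # P(o | s) for the observed outcome o

def complexity(q: np.ndarray) -> float:
    """KL[Q(s) || P(s)]: how far beliefs have moved away from the prior."""
    return float(np.sum(q * (np.log(q) - np.log(prior))))

def accuracy(q: np.ndarray) -> float:
    """E_Q[log P(o | s)]: expected log likelihood of the observed outcome."""
    return float(np.sum(q * np.log(lik_o)))

def free_energy(q: np.ndarray) -> float:
    """E_Q[log Q(s) - log P(o, s)], computed directly from the joint."""
    return float(np.sum(q * (np.log(q) - np.log(lik_o * prior))))

candidates = {
    "stay at prior": prior,
    "overconfident": np.array([0.005, 0.005, 0.99]),
    "exact posterior": lik_o * prior / np.sum(lik_o * prior),
}
scores = {}
for name, q in candidates.items():
    scores[name] = (complexity(q), accuracy(q), free_energy(q))
    c, a, f = scores[name]
    print(f"{name:16s} complexity = {c:.3f}  accuracy = {a:.3f}  F = {f:.3f}")
```

Staying at the prior incurs no complexity but explains the outcome poorly, whereas the overconfident posterior is the most accurate but pays a large complexity cost. The exact posterior balances the two and attains the lowest free energy of the three.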

Fig. 3.

Markov blankets and self-evidencing. This schematic illustrates the various interpretations of minimising variational free energy. Recall that the existence of a Markov blanket implies a certain lack of influences among internal, blanket and external states. These independencies have an important consequence; internal and active states are the only states that are not influenced by external states, which means their dynamics (i.e., perception and action) are a function of, and only of, particular states (i.e., internal, sensory and active states); here, the variational (free energy) bound on surprise. This surprise has a number of interesting interpretations. Given it is the negative log probability of finding a particle or creature in a particular state, minimising surprise corresponds to maximising the value of a particle’s state. This interpretation is licensed by the fact that the states with a high probability are, by definition, attracting states. On this view, one can then spin-off an interpretation in terms of reinforcement learning (Barto & Sutton, 1992), optimal control theory (Todorov & Jordan, 2002) and, in economics, expected utility theory (Bossaerts & Murawski, 2015). Indeed, any scheme predicated on the optimisation of some objective function can now be cast in terms of minimising surprise – in terms of perception and action (i.e., the dynamics of internal and active states) – by specifying these optimal values to be the agent’s preferences. The minimisation of surprise (i.e., self-information) leads to a series of influential accounts of neuronal dynamics; including the principle of maximum mutual information (Linsker, 1990, Optican and Richmond, 1987), the principles of minimum redundancy and maximum efficiency (Barlow, 1961) and the free energy principle (Friston et al., 2006). Crucially, the average or expected surprise (over time or particular states of being) corresponds to entropy. 
This means that action and perception look as if they are minimising entropy. This leads us to theories of self-organisation, such as synergetics in physics (Haken, 1978, Kauffman, 1993, Nicolis and Prigogine, 1977) or homeostasis in physiology (Ashby, 1947, Bernard, 1974, Conant and Ashby, 1970). Finally, the probability of any blanket states given a Markov blanket ($m$) is, on a statistical view, model evidence (MacKay, 1995, MacKay, 2003). This means that all the above formulations are internally consistent with things like the Bayesian brain hypothesis, evidence accumulation and predictive coding; most of which inherit from Helmholtz’s notion of unconscious inference (von Helmholtz & Southall, 1962), later unpacked in terms of perception as hypothesis testing in 20th century psychology (Gregory, 1980) and machine learning (Dayan et al., 1995).

4.2. On the family of approximate posteriors

The goal is now to minimise variational free energy with respect to $Q$. To obtain a tractable expression for the variational free energy, we need to assume a certain simplifying factorisation of the approximate posterior. There are many possible forms (e.g., mean-field, marginal, Bethe, see Heskes, 2006, Parr, Markovic et al., 2019, Yedidia et al., 2005), each of which trades off the quality of the inferences with the complexity of the computations involved. For the purpose of this paper we use a particular structured mean-field approximation (see Table 2 for an explanation of the different distributions and variables in play):

$$Q(s_{1:T}, A, \pi) = Q(\pi)\, Q(A) \prod_{\tau=1}^{T} Q(s_\tau \mid \pi) \tag{4}$$

Table 2.

Glossary of terms and notation.

| Notation | Meaning | Type |
|---|---|---|
| $\mathbb{S}$ | Set of all possible (hidden) states. | Finite set of cardinality $m$. |
| $s_\tau$ | (Hidden) state at time $\tau$. In computations, if $s_\tau$ evaluates to the $i$th possible state, then interpret it as the $i$th unit vector in $\mathbb{R}^m$. | Random variable over $\mathbb{S}$. |
| $s_{1:t}$ | Sequence of hidden states $s_1, \ldots, s_t$. | Random variable over $\mathbb{S}^t$. |
| $\mathbb{O}$ | Set of all possible outcomes. | Finite set of cardinality $n$. |
| $o_\tau$ | Outcome at time $\tau$. In computations, if $o_\tau$ evaluates to the $j$th possible outcome, then interpret it as the $j$th unit vector in $\mathbb{R}^n$. | Random variable over $\mathbb{O}$. |
| $o_{1:t}$ | Sequence of outcomes $o_1, \ldots, o_t$. | Random variable over $\mathbb{O}^t$. |
| $T$ | Number of timesteps in a trial of observation epochs under the generative model. | Positive integer. |
| $\mathbb{U}$ | Set of all possible actions. | Finite set. |
| $\Pi$ | Set of all allowable policies; i.e., action sequences indexed in time. | Finite set of sequences of actions in $\mathbb{U}$. |
| $\pi$ | Policy or action sequence indexed in time. | Random variable over $\Pi$, or element of $\Pi$ depending on context. |
| $Q$ | Approximate posterior distribution over the latent variables of the generative model $s_{1:T}, A, \pi$. | Scalar valued probability distribution over the latent variables. |
| $F, F_\pi$ | Variational free energy and variational free energy conditioned upon a policy. | Functionals of $Q$ that evaluate to a scalar quantity. |
| $G$ | Expected free energy. | Function defined on $\Pi$ that evaluates to a scalar quantity. |
| $\mathrm{Cat}$ | Categorical distribution; probability distribution over a finite set assigning strictly positive probabilities. | Probability distribution over a finite set of cardinality $k$ parameterised by a real valued vector of probabilities in $\{x \in \mathbb{R}^k : x_i > 0, \sum_i x_i = 1\}$. |
| $\mathrm{Dir}$ | Dirichlet distribution (conjugate prior of the categorical distribution). Probability distribution over the parameter space of the categorical distribution, parameterised by a vector of positive reals. | Probability distribution over $\{x \in \mathbb{R}^k : x_i > 0, \sum_i x_i = 1\}$, itself parameterised by an element of $(\mathbb{R}_{>0})^k$. |
| $X_{\bullet i}, X_{ki}$ | $i$th column and $(k,i)$th element of matrix $X$. | Matrix indexing convention. |
| $\cdot, \otimes, \odot$, $\odot$ power | Respectively inner product, Kronecker product, element-wise product and element-wise power. Following existing active inference literature, we adopt the convention $X \cdot Y := X^{\mathsf{T}} Y$ for matrices. | Operation on vectors and matrices. |
| $A$ | Likelihood matrix. The probability of the state–outcome pair $o_\tau, s_\tau$, namely $P(o_\tau \mid s_\tau, A)$, is given by $o_\tau \cdot A s_\tau$. | Random variable over the subset of $n \times m$ real matrices with columns in $\{x \in \mathbb{R}^n : x_i > 0, \sum_i x_i = 1\}$. |
| $B_{\pi_\tau}$ | Matrix of transition probabilities from one state to the next state given action $\pi_\tau$. The probability of possible state $s_{\tau+1}$, given $s_\tau$ and action $\pi_\tau$, is $s_{\tau+1} \cdot B_{\pi_\tau} s_\tau$. | $m \times m$ real matrix with columns in $\{x \in \mathbb{R}^m : x_i > 0, \sum_i x_i = 1\}$. |
| $D$ | Vector of probabilities of initial state. The probability of the $i$th possible state occurring at time $1$ is $D_i$. | Vector of probabilities in $\{x \in \mathbb{R}^m : x_i > 0, \sum_i x_i = 1\}$. |
| $a, \mathbf{a}$ | Parameters of prior and approximate posterior beliefs about $A$. | $n \times m$ matrices with strictly positive entries. |
| $a_0, \mathbf{a}_0$ | Matrices of the same size as $a, \mathbf{a}$, with homogeneous columns; any of its $i$th column elements are denoted by $a_{0i}$ and defined by $a_{0i} = \sum_j a_{ji}$. | $n \times m$ matrices with strictly positive entries. |
| $\log, \Gamma, \psi$ | Natural logarithm, gamma function and digamma function. By convention these functions are taken component-wise on vectors and matrices. | Functions. |
| $\mathbb{E}_{P(X)}[X]$ | Expectation of a random variable $X$ under a probability density $P(X)$, taken component-wise if $X$ is a matrix. | Real-valued operator on random variables. |
| $\mathbf{A}$ | $\mathbf{A} = \mathbb{E}_{Q(A)}[A]$, whose $i$th column is $\mathbf{a}_{\bullet i} / \mathbf{a}_{0i}$. | $n \times m$ real matrix. |
| $\log \mathbf{A}$ | $\log \mathbf{A} = \mathbb{E}_{Q(A)}[\log A] = \psi(\mathbf{a}) - \psi(\mathbf{a}_0)$. Note that $\log \mathbf{A} \neq \log(\mathbf{A})$! | $n \times m$ real matrix. |
| $\sigma$ | Softmax function or normalised exponential. | Function $\mathbb{R}^k \to \{x \in \mathbb{R}^k : x_i > 0, \sum_i x_i = 1\}$. |
| $\mathrm{H}[P]$ | Shannon entropy of a probability distribution $P$. Explicitly, $\mathrm{H}[P] = \mathbb{E}_{P(x)}[-\log P(x)]$. | Functional over probability distributions. |

This choice is made for didactic purposes and because this factorisation has been used extensively in the active inference literature (Friston, FitzGerald et al., 2017, Friston, Parr et al., 2017, Friston et al., 2018b). However, the most recent software implementation of active inference (available in spm_MDP_VB_X.m) employs a marginal approximation (Parr, 2019, Parr, Markovic et al., 2019), which retains the simplicity and biological interpretation of the neuronal dynamics afforded by the mean-field approximation, while approximating the more accurate inferences of the Bethe approximation. For these reasons, the marginal free energy currently stands as the most biologically plausible choice.

4.3. Computing the variational free energy

The next sections focus on producing biologically plausible neuronal dynamics that perform perception and learning based on variational free energy minimisation. To enable this, we first compute the variational free energy, using the factorisations of the generative model and approximate posterior (c.f., Fig. 2):

| (5) |

where

| (6) |

is the variational free energy conditioned upon pursuing a particular policy. This is the same quantity that we would have obtained by omitting and conditioning all probability distributions in the numerators of (1) by . In the next section, we will see how perception can be framed in terms of variational free energy minimisation.

5. Perception

In active inference, perception is equated with state estimation (Friston, FitzGerald et al., 2017) (e.g., inferring the temperature from the sensation of warmth), consistent with the idea that perceptions are hypotheses (Gregory, 1980). To infer the (past, present and future) states of the environment, an agent must minimise the variational free energy with respect to $Q(s_{1:T} \mid \pi)$ for each policy $\pi$. This provides the agent’s inference over hidden states, contingent upon pursuing a given policy. Since the only part of the free energy that depends on $Q(s_{1:T} \mid \pi)$ is $F_\pi$, the agent must simply minimise $F_\pi$. Substituting each $Q(s_\tau \mid \pi)$ by its sufficient statistics (i.e., the vector of parameters $\mathbf{s}_{\pi\tau}$), $F_\pi$ becomes a function of those parameters. This enables us to rewrite (6), conveniently in matrix form (see Appendix B for details):

| (7) |

This enables us to compute the variational free energy gradients (Petersen & Pedersen, 2012):

| (8) |

The neuronal dynamics are given by a gradient descent on free energy (Friston, FitzGerald et al., 2017), with state-estimation expressed as a softmax function of accumulated (negative) free energy gradients, that we denote by $v$ (see Section 5.1 for an interpretation). The constant term is generally omitted since the softmax function removes it anyway.

$$\dot{v} = -\nabla_{\mathbf{s}_{\pi\tau}} F_\pi, \qquad \mathbf{s}_{\pi\tau} = \sigma(v) \tag{9}$$

The softmax function – a generalisation of the sigmoid to vector inputs – is a natural choice as the variational free energy gradient is a logarithm and the components of $\mathbf{s}_{\pi\tau}$ must sum to one. Note the continuous time gradient descent on the free energy (9); although we focus on active inference with discrete generative models, this does not preclude the belief updating from occurring in continuous time (this is particularly important when relating these dynamics to neurobiological processes, see below). Yet, any numerical implementation of active inference would implement a discretised version of (9) until convergence, for example by iterating $v \leftarrow v - \kappa \nabla_{\mathbf{s}_{\pi\tau}} F_\pi$ and $\mathbf{s}_{\pi\tau} \leftarrow \sigma(v)$ for a small step size $\kappa > 0$.
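As a minimal sketch of such a discretised scheme, consider a single time step with one observation, so that the free energy gradient reduces to the log belief minus the log likelihood and log prior (constant term omitted). The function `infer_state`, the step size and the toy matrices are hypothetical choices for illustration, not the SPM implementation.

```python
import math

def softmax(v):
    """Normalised exponential, shifted by max(v) for numerical stability."""
    m = max(v)
    e = [math.exp(x - m) for x in v]
    z = sum(e)
    return [x / z for x in e]

def infer_state(log_likelihood, log_prior, step=0.25, tol=1e-10, max_iter=1000):
    """Euler-discretised gradient descent on free energy for one time step.

    v accumulates negative free energy gradients; s = softmax(v) is the belief.
    """
    v = list(log_prior)
    s = softmax(v)
    for _ in range(max_iter):
        # Gradient of the free energy with respect to s (constant term omitted).
        grad = [math.log(si) - ll - lp
                for si, ll, lp in zip(s, log_likelihood, log_prior)]
        v = [vi - step * gi for vi, gi in zip(v, grad)]
        s_new = softmax(v)
        if max(abs(a - b) for a, b in zip(s_new, s)) < tol:
            return s_new
        s = s_new
    return s

A = [[0.9, 0.2],   # P(o = 0 | s) for each of two states (assumed toy values)
     [0.1, 0.8]]   # P(o = 1 | s)
D = [0.5, 0.5]     # prior over the initial state
o = 0              # index of the observed outcome

s_hat = infer_state([math.log(A[o][i]) for i in range(2)],
                    [math.log(d) for d in D])
```

In this simple case the fixed point of the belief coincides with the exact Bayesian posterior. The potential `v` may drift by a constant, which the softmax removes, so convergence is monitored on the belief rather than on `v`.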

5.1. Plausibility of neuronal dynamics

The temporal dynamics expressed in (9) unfold at a much faster timescale than the sampling of new observations (i.e., within timesteps) and correspond to fast neuronal processing in peristimulus time. This is consistent with behaviour-relevant computations at frequencies that are higher than the rate of visual sampling (e.g., working memory (Lundqvist et al., 2016), visual stimulus perception in humans (Hanslmayr et al., 2013) and macaques (Rolls & Tovee, 1994)).

Furthermore, these dynamics (9) are consistent with predictive processing (Bastos et al., 2012, Rao and Ballard, 1999) – since active inference prescribes dynamics that minimise prediction error – although they generalise it to a wide range of generative models. Note that, while also a variational free energy, this sort of prediction error (7) is not the same as that given by predictive coding schemes (which rely upon a certain kind of continuous state-space generative model, see Bogacz, 2017, Buckley et al., 2017, Friston et al., 2007).

Just as neuronal dynamics involve translation from post-synaptic potentials to firing rates, (9) involves translating from a vector of real numbers ($v$), to a vector whose elements are bounded between zero and one ($\mathbf{s}_{\pi\tau}$); via the softmax function. As a result, it is natural to interpret the components of $v$ as the average membrane potential of distinct neural populations, and $\mathbf{s}_{\pi\tau}$ as the average firing rate of those populations, which is bounded thanks to neuronal refractory periods. This is consistent with mean-field formulations of neural population dynamics, in that the average firing rate of a neuronal population follows a sigmoid function of the average membrane potential (Deco et al., 2008, Marreiros et al., 2008, Moran et al., 2013). Using the fact that a softmax function is a generalisation of the sigmoid to vector inputs – here the average membrane potentials of coupled neuronal populations – it follows that their average firing follows a softmax function of their average potential. In this context, the softmax function may be interpreted as performing lateral inhibition, which can be thought of as leading to narrower tuning curves of individual neurons and thereby sharper inferences (Von Békésy, 1967). Importantly, this tells us that state-estimation can be performed in parallel by different neuronal populations, and a simple neuronal architecture is sufficient to implement these dynamics (see Parr, Markovic et al. (2019, Figure 6)).

Lastly, interpreting the dynamics in this way has a degree of face validity, as it enables us to synthesise a wide-range of biologically plausible electrophysiological responses; including repetition suppression, mismatch negativity, violation responses, place-cell activity, phase precession, theta sequences, theta–gamma coupling, evidence accumulation, race-to-bound dynamics and transfer of dopamine responses (Friston, FitzGerald et al., 2017, Schwartenbeck, FitzGerald, Mathys, Dolan and Friston, 2015).

The neuronal dynamics for state estimation coincide with variational message passing (Dauwels, 2007, Winn and Bishop, 2005), a popular algorithm for approximate Bayesian inference. This follows, as we have seen, from free energy minimisation under a particular mean-field approximation (4). If one were to use the Bethe approximation, the corresponding dynamics coincide with belief propagation (Bishop, 2006, Loeliger, 2004, Parr, Markovic et al., 2019, Schwöbel et al., 2018, Yedidia et al., 2005), another widely used algorithm for approximate inference. This offers a formal connection between active inference and message passing interpretations of neuronal dynamics (Dauwels et al., 2007, Friston, Parr et al., 2017, George, 2005). In the next section, we examine planning, decision-making and action selection.

6. Planning, decision-making and action selection

So far, we have focused on optimising beliefs about hidden states under a particular policy by minimising a variational free energy functional of an approximate posterior over hidden states, under each policy.

In this section, we explain how planning and decision-making arise as a minimisation of expected free energy—a function scoring the goodness of each possible future course of action. We briefly motivate how the expected free energy arises from first-principles. This allows us to frame decision-making and action-selection in terms of expected free energy minimisation. Finally, we conclude by discussing the computational cost of planning into the future.

6.1. Planning and decision-making

At the heart of active inference is a description of agents that strive to attain a target distribution specifying the range of preferred states of being, given a sufficient amount of time. To work towards reaching these preferences, agents select policies such that their predicted states at some future time point (usually, the time horizon of a policy) reach the preferred states, which are specified by the generative model. These considerations allow us to show in Appendix C that the requisite approximate posterior over policies is a softmax function of the negative expected free energy:

| (10) |

By risk we mean the difference between predicted and a priori predictions in the future (e.g., the quantification of losses as in financial risk), and ambiguity is the uncertainty associated with future observations, given states. This means that the most likely (i.e., best) policies minimise expected free energy. This ensures that future courses of action are exploitative (i.e., risk minimising) and explorative (i.e., ambiguity minimising). In particular, the expected free energy balances goal-seeking and itinerant novelty-seeking behaviour, given some prior preferences or goals. Note that the ambiguity term rests on an expectation over fictive (i.e., predicted) outcomes under beliefs about future states. This means that optimising beliefs about future states during perception is crucial to accurately predict future outcomes during planning. In summary, planning corresponds to evaluating the expected free energy of different policies, which scores their goodness in relation to prior preferences, and decision-making corresponds to forming approximate posterior beliefs about policies.
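The following is a minimal sketch of policy evaluation, assuming the expected free energy of a policy is the sum of a risk term (the KL divergence between predicted and preferred states) and an ambiguity term (the expected entropy of outcomes given states), evaluated at a single future time point and omitting model parameters. The policy names, predicted beliefs and preferences are hypothetical.

```python
import math

def softmax(v):
    """Normalised exponential, shifted by max(v) for numerical stability."""
    m = max(v)
    e = [math.exp(x - m) for x in v]
    z = sum(e)
    return [x / z for x in e]

def kl(q, p):
    """KL divergence between two categorical distributions."""
    return sum(qi * math.log(qi / pi) for qi, pi in zip(q, p) if qi > 0)

def entropy(p):
    """Shannon entropy of a categorical distribution, in nats."""
    return -sum(pi * math.log(pi) for pi in p if pi > 0)

def expected_free_energy(q_states, preferred_states, A):
    """Risk + ambiguity for one policy; A[j][i] = P(o = j | s = i)."""
    risk = kl(q_states, preferred_states)
    ambiguity = sum(q_states[i] * entropy([row[i] for row in A])
                    for i in range(len(q_states)))
    return risk + ambiguity

A = [[0.9, 0.5],
     [0.1, 0.5]]                 # the second state yields ambiguous outcomes
preferred = [0.8, 0.2]           # assumed prior preference over final states
predicted = {                    # predicted final states under two hypothetical policies
    "stay": [0.2, 0.8],
    "move": [0.8, 0.2],
}

G = {pi: expected_free_energy(q, preferred, A) for pi, q in predicted.items()}
names = list(G)
q_pi = dict(zip(names, softmax([-G[name] for name in names])))
```

Here the policy `"move"` both matches the preferences (zero risk) and leads to the less ambiguous state, so it receives the higher posterior probability.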

6.2. Action selection, policy-independent state-estimation

Approximate posterior beliefs about policies allow us to obtain the most plausible action as the most likely under all policies; this can be expressed as a Bayesian model average:

$$u_t = \arg\max_{u \in \mathbb{U}} \sum_{\pi \in \Pi} \delta_{u, \pi_t}\, Q(\pi) \tag{11}$$

In addition, we obtain a policy independent state-estimation at any time point $\tau$, as a Bayesian model average of approximate posterior beliefs about hidden states under policies, which may be expressed in terms of the distribution’s parameters ($\mathbf{s}_\tau$, $\mathbf{s}_{\pi\tau}$):

$$\mathbf{s}_\tau = \sum_{\pi \in \Pi} \mathbf{s}_{\pi\tau}\, Q(\pi) \tag{12}$$

Note that these Bayesian model averages may be implemented by neuromodulatory mechanisms (FitzGerald et al., 2014).
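Both Bayesian model averages can be sketched in a few lines. This is an illustrative example only: the policy labels, posterior probabilities and the helper names `bayesian_model_average` and `most_plausible_action` are hypothetical.

```python
def bayesian_model_average(q_pi, states_under_policy):
    """Policy-independent state belief: sum over policies of Q(pi) * Q(s | pi)."""
    n = len(next(iter(states_under_policy.values())))
    return [sum(q_pi[pi] * states_under_policy[pi][i] for pi in q_pi)
            for i in range(n)]

def most_plausible_action(q_pi, next_action):
    """Action with the largest posterior mass, summed over the policies prescribing it."""
    mass = {}
    for pi, prob in q_pi.items():
        u = next_action[pi]
        mass[u] = mass.get(u, 0.0) + prob
    return max(mass, key=mass.get)

q_pi = {"pi_1": 0.4, "pi_2": 0.35, "pi_3": 0.25}          # posterior over policies
states = {"pi_1": [0.9, 0.1], "pi_2": [0.2, 0.8], "pi_3": [0.5, 0.5]}
next_action = {"pi_1": "left", "pi_2": "right", "pi_3": "right"}

s_bar = bayesian_model_average(q_pi, states)
u_star = most_plausible_action(q_pi, next_action)
```

Note that the selected action (`"right"`) differs from the action prescribed by the single most likely policy (`"left"`), because two less likely policies agree on it.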

6.3. Biological plausibility

Winner-take-all architectures of decision-making are already commonplace in computational neuroscience (e.g., models of selective attention and recognition (Carpenter and Grossberg, 1987, Itti et al., 1998), hierarchical models of vision (Riesenhuber & Poggio, 1999)). This is convenient, since the softmax function in (10) can be seen as providing a biologically plausible (Deco et al., 2008, Marreiros et al., 2008, Moran et al., 2013), smooth approximation to the maximum operation, which is known as soft winner-take-all (Maass, 2000). In fact, the generative model, presented in Fig. 2, can be naturally extended such that the approximate posterior contains an (inverse) temperature parameter multiplying the expected free energy inside the softmax function (see Appendix A.2). This temperature parameter regulates how precisely the softmax approximates the maximum function, thus recovering winner-take-all architectures for high parameter values (technically, this converts Bayesian model averaging into Bayesian model selection, where the policy corresponds to a model of what the agent is doing). This parameter, regulating precision of policy selection, has a clear biological interpretation in terms of confidence encoded in dopaminergic firing (FitzGerald, Dolan et al., 2015, Friston, FitzGerald et al., 2017, Friston et al., 2014, Schwartenbeck, FitzGerald, Mathys, Dolan and Friston, 2015). Interestingly, Daw and colleagues (Daw et al., 2006) uncovered evidence in favour of a similar model employing a softmax function and temperature parameter in human decision-making.
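The effect of the precision parameter can be illustrated with a short sketch. The expected free energy values and the name `gamma` for the (inverse) temperature are assumptions made for this example.

```python
import math

def softmax(v):
    """Normalised exponential, shifted by max(v) for numerical stability."""
    m = max(v)
    e = [math.exp(x - m) for x in v]
    z = sum(e)
    return [x / z for x in e]

def policy_posterior(G, gamma):
    """Softmax of negative expected free energy, scaled by the precision gamma."""
    return softmax([-gamma * g for g in G])

G = [1.0, 1.5, 3.0]  # hypothetical expected free energies of three policies

soft = policy_posterior(G, gamma=1.0)    # graded beliefs over policies
sharp = policy_posterior(G, gamma=50.0)  # approaches winner-take-all selection
```

With low precision the posterior spreads probability over several policies, whereas with high precision almost all probability mass falls on the policy with the lowest expected free energy.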

6.4. Pruning of policy trees

From a computational perspective, planning (i.e., computing the expected free energy) for each possible policy can be cost-prohibitive, due to the combinatorial explosion in the number of sequences of actions when looking deep into the future. There has been work in understanding how the brain finesses this problem (Huys et al., 2012), which suggests a simple answer: during mental planning, humans stop evaluating a policy as soon as they encounter a large loss (i.e., a high value of the expected free energy that renders the policy highly implausible). In active inference this corresponds to using an Occam window; that is, we stop evaluating the expected free energy of a policy if it becomes much higher than that of the best (smallest expected free energy) policy, and set its approximate posterior probability to an arbitrarily low value accordingly. This biologically plausible pruning strategy drastically reduces the number of policies one has to evaluate exhaustively.
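A minimal sketch of an Occam window is given below. It assumes that the expected free energy of a policy accumulates additively over future time steps and that a policy is discarded once it falls more than a fixed `window` (in nats) behind the current best; the window size and the per-step costs are hypothetical.

```python
def prune_policies(partial_G, window=3.0):
    """Keep policies whose accumulated expected free energy is within `window` of the best."""
    best = min(partial_G.values())
    return {pi: g for pi, g in partial_G.items() if g - best <= window}

def evaluate_with_pruning(step_costs, window=3.0):
    """Accumulate per-step expected free energy, discarding implausible policies early.

    Returns the surviving totals and the number of per-step evaluations performed.
    """
    horizon = len(next(iter(step_costs.values())))
    alive = {pi: 0.0 for pi in step_costs}
    evaluations = 0
    for t in range(horizon):
        for pi in alive:
            alive[pi] += step_costs[pi][t]
            evaluations += 1
        alive = prune_policies(alive, window)
    return alive, evaluations

step_costs = {
    ("left", "left"): [0.5, 0.4],
    ("left", "right"): [0.5, 1.0],
    ("right", "left"): [6.0, 0.1],   # large early loss: pruned after the first step
    ("right", "right"): [6.0, 0.2],
}
survivors, evaluations = evaluate_with_pruning(step_costs)
```

In this toy example, two of the four policies are abandoned after the first step, so only six per-step evaluations are needed instead of eight.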

Although effective and biologically plausible, the Occam window for pruning policy trees cannot deal with large policy spaces that ensue with deep policy trees and long temporal horizons. This means that pruning can only partially explain how biological organisms perform deep policy searches. Further research is needed to characterise the processes in which biological agents reduce large policy spaces to tractable subspaces. One explanation – for the remarkable capacity of biological agents to evaluate deep policy trees – rests on deep (hierarchical) generative models, in which policies operate at each level. These deep models enable long-term policies, modelling slow transitions among hidden states at higher levels in the hierarchy, to contextualise faster state transitions at subordinate levels (see Appendix A). The resulting (semi Markovian) process can then be specified in terms of a hierarchy of limited horizon policies that are nested over temporal scales; c.f., motor chunking (Dehaene et al., 2015, Fonollosa et al., 2015, Haruno et al., 2003).

6.5. Discussion of the action–perception cycle

Minimising variational and expected free energy are complementary and mutually beneficial processes. Minimisation of variational free energy ensures that the generative model is a good predictor of its environment; this allows the agent to accurately plan into the future by evaluating expected free energy, which in turn enables it to realise its preferences. In other words, minimisation of variational free energy is a vehicle for effective planning and reaching preferences via the expected free energy; in turn, reaching preferences minimises the expected surprise of future states of being.

In conclusion, we have seen how agents plan into the future and make decisions about the best possible course of action. This concludes our discussion of the action–perception cycle. In the next section, we examine expected free energy in greater detail. Then, we will see how active agents can learn the contingencies of the environment and the structure of their generative model at slower timescales.

7. Properties of the expected free energy

The expected free energy is a fundamental construct of interest. In this section, we unpack its main features and highlight its importance in relation to many existing theories in neurosciences and engineering.

The expected free energy of a policy can be unpacked in a number of ways. Perhaps the most intuitive is in terms of risk and ambiguity:

| (13) |

This means that policy selection minimises risk and ambiguity. Risk, in this setting, is simply the difference between predicted and prior beliefs about final states. In other words, policies will be deemed more likely if they bring about states that conform to prior preferences. In the optimal control literature, this part of expected free energy underwrites KL control (Todorov, 2008, van den Broek et al., 2010). In economics, it leads to risk sensitive policies (Fleming & Sheu, 2002). Ambiguity reflects the uncertainty about future outcomes, given hidden states. Minimising ambiguity therefore corresponds to choosing future states that generate unambiguous and informative outcomes (e.g., switching on a light in the dark).

We can express the expected free energy of a policy as a bound on information gain and expected log (model) evidence (a.k.a., Bayesian risk):

| (14) |

The first term in (14) is the expectation of log evidence under beliefs about future outcomes, while the second ensures that this expectation is maximally informed, when outcomes are encountered. Collectively, these two terms underwrite the resolution of uncertainty about hidden states (i.e., information gain) and outcomes (i.e., expected surprise) in relation to prior beliefs.

When the agent’s preferences are expressed in terms of outcomes (c.f., Fig. 2), it is useful to express risk in terms of outcomes, as opposed to hidden states. This is most useful when the generative model is not known or during structure learning, when the state-space evolves over time. In these cases, the risk over hidden states can be replaced by risk over outcomes by assuming the KL divergence between the predicted and true posterior (under expected outcomes) is small:

| (15) |

This divergence constitutes an expected evidence bound that also appears if we express expected free energy in terms of intrinsic and extrinsic value:

| (16) |

Extrinsic value is just the expected value of log evidence, which can be associated with reward and utility in behavioural psychology and economics, respectively (Barto et al., 2013, Kauder, 1953, Schmidhuber, 2010). In this setting, extrinsic value is the negative of Bayesian risk (Berger, 1985), when reward is log evidence. The intrinsic value of a policy is its epistemic value or affordance (Friston et al., 2015). This is just the expected information gain afforded by a particular policy, which can be about hidden states (i.e., salience) or model parameters (i.e., novelty). It is this term that underwrites artificial curiosity (Schmidhuber, 2006).

Intrinsic value corresponds to the expected information gain about model parameters. It is also known as intrinsic motivation in neurorobotics (Barto et al., 2013, Deci and Ryan, 1985, Oudeyer and Kaplan, 2009), the value of information in economics (Howard, 1966), salience in the visual neurosciences and (rather confusingly) Bayesian surprise in the visual search literature (Itti and Baldi, 2009, Schwartenbeck et al., 2013, Sun et al., 2011). In terms of information theory, intrinsic value is mathematically equivalent to the expected mutual information between hidden states in the future and their consequences—consistent with the principles of minimum redundancy or maximum efficiency (Barlow, 1961, Barlow, 1974, Linsker, 1990). Finally, from a statistical perspective, maximising intrinsic value (i.e., salience and novelty) corresponds to optimal Bayesian design (Lindley, 1956) and machine learning derivatives, such as active learning (MacKay, 1992). On this view, active learning is driven by novelty; namely, the information gain afforded model parameters, given future states and their outcomes. Heuristically, this curiosity resolves uncertainty about “what would happen if I did that” (Schmidhuber, 2010). Fig. 4 illustrates the compass of expected free energy, in terms of its special cases; ranging from optimal Bayesian design through to Bayesian decision theory.
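The equivalence between expected information gain about hidden states and mutual information can be illustrated numerically. The sketch below assumes a single future time point and a known likelihood matrix; the function name `information_gain` and the two toy likelihoods are hypothetical.

```python
import math

def entropy(p):
    """Shannon entropy of a categorical distribution, in nats."""
    return -sum(pi * math.log(pi) for pi in p if pi > 0)

def information_gain(q_states, A):
    """Mutual information between states and outcomes.

    Computed as the entropy of predicted outcomes minus the expected
    entropy of outcomes given states; A[j][i] = P(o = j | s = i).
    """
    n_out = len(A)
    q_out = [sum(A[j][i] * q_states[i] for i in range(len(q_states)))
             for j in range(n_out)]
    ambiguity = sum(q_states[i] * entropy([A[j][i] for j in range(n_out)])
                    for i in range(len(q_states)))
    return entropy(q_out) - ambiguity

q_states = [0.5, 0.5]
A_informative = [[1.0, 0.0], [0.0, 1.0]]  # outcomes identify the state
A_ambiguous = [[0.5, 0.5], [0.5, 0.5]]    # outcomes say nothing about the state

gain_informative = information_gain(q_states, A_informative)
gain_ambiguous = information_gain(q_states, A_ambiguous)
```

An unambiguous mapping yields the maximal gain of one bit (log 2 nats) for two equiprobable states, while a flat likelihood yields no gain at all.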

Fig. 4.

Expected free energy. This figure illustrates the various ways in which minimising expected free energy can be unpacked (omitting model parameters for clarity). The upper panel casts action and perception as the minimisation of variational and expected free energy, respectively. Crucially, active inference introduces beliefs over policies that enable a formal description of planning as inference (Attias, 2003, Botvinick and Toussaint, 2012, Kaplan and Friston, 2018a). In brief, posterior beliefs about hidden states of the world, under plausible policies, are optimised by minimising a variational (free energy) bound on log evidence. These beliefs are then used to evaluate the expected free energy of allowable policies, from which actions can be selected (Friston, FitzGerald et al., 2017). Crucially, expected free energy subsumes several special cases that predominate in the psychological, machine learning and economics literature. These special cases are disclosed when one removes particular sources of uncertainty from the implicit optimisation problem. For example, if we ignore prior preferences, then the expected free energy reduces to information gain (Lindley, 1956, MacKay, 2003) or intrinsic motivation (Barto et al., 2013, Deci and Ryan, 1985, Oudeyer and Kaplan, 2009). This is mathematically the same as expected Bayesian surprise and mutual information that underwrite salience in visual search (Itti and Baldi, 2009, Sun et al., 2011) and the organisation of our visual apparatus (Barlow, 1961, Barlow, 1974, Linsker, 1990, Optican and Richmond, 1987). If we now remove risk but reinstate prior preferences, one can effectively treat hidden and observed (sensory) states as isomorphic. This leads to risk sensitive policies in economics (Fleming and Sheu, 2002, Kahneman and Tversky, 1988) or KL control in engineering (van den Broek et al., 2010). Here, minimising risk corresponds to aligning predicted outcomes to preferred outcomes. 
If we then remove ambiguity and relative risk of action (i.e., intrinsic value), we are left with extrinsic value or expected utility in economics (Von Neumann & Morgenstern, 1944) that underwrites reinforcement learning and behavioural psychology (Barto & Sutton, 1992). Bayesian formulations of maximising expected utility under uncertainty are also known as Bayesian decision theory (Berger, 1985). Finally, if we just consider a completely unambiguous world with uninformative priors, expected free energy reduces to the negative entropy of posterior beliefs about the causes of data; in accord with the maximum entropy principle (Jaynes, 1957). The expressions for variational and expected free energy correspond to those described in the main text (omitting model parameters for clarity). They are arranged to illustrate the relationship between complexity and accuracy, which become risk and ambiguity, when considering the consequences of action. This means that risk-sensitive policy selection minimises expected complexity or computational cost. The coloured dots above the terms in the equations correspond to the terms that constitute the special cases in the lower panels.

8. Learning

In active inference, learning concerns the dynamics of synaptic plasticity, which are thought to encode beliefs about the contingencies of the environment (Friston, FitzGerald et al., 2017) (e.g., beliefs about $B$, in some settings, are thought to be encoded in recurrent excitatory connections in the prefrontal cortex (Parr, Rikhye et al., 2019)). The fact that beliefs about matrices (e.g., $A$, $B$) may be encoded in synaptic weights conforms to connectionist models of brain function, as it offers a convenient way to compute probabilities: the synaptic weights could be interpreted as performing matrix multiplication, as in artificial neural networks, to predict, for example, outcomes from beliefs about states, using the likelihood matrix $A$.

These synaptic dynamics (e.g., long-term potentiation and depression) evolve at a slower timescale than action and perception, which is consistent with the fact that such inferences need evidence accumulation over multiple state–outcome pairs. For simplicity, we will assume the only variable that is learned is $A$, but what follows generalises to more complex generative models (c.f., Appendix A.1). Learning means that approximate posterior beliefs about $A$ follow a gradient descent on variational free energy. Seeing the variational free energy (5) as a function of $\mathbf{a}$ (the sufficient statistic of $Q(A)$) we can write:

| (17) |

Here, we ignore the terms in (5) that do not depend on $\mathbf{a}$, as these will vanish when we take the gradient. The KL-divergence between Dirichlet distributions is (Kurt, 2013, Penny, 2001):

| (18) |

Incorporating (18) in (17), we can take the gradient of the variational free energy with respect to $\mathbf{a}$:

| (19) |

where $\otimes$ is the Kronecker (i.e., outer) product. This means that the dynamics of synaptic plasticity follow a descent on (19):

| (20) |

In computational terms, these are the dynamics for evidence accumulation of Dirichlet parameters at time $t$. Since synaptic plasticity dynamics occur at a much slower pace than perceptual inference, it is computationally much cheaper in numerical simulations to do a one-step belief update at the end of each trial of observation epochs. Explicitly, setting the free energy gradient to zero at the end of the trial gives the following update for Dirichlet parameters:

$$\mathbf{a} = a + \sum_{\tau=1}^{T} o_\tau \otimes \mathbf{s}_\tau \tag{21}$$

After this update, the prior beliefs are set to the approximate posterior beliefs for the subsequent trial. Note in particular that the update counts the number of times a specific mapping between states and observations has been observed. Interestingly, this is formally identical to associative or Hebbian plasticity.
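The count-based update can be sketched as follows, assuming one-hot outcome vectors and policy-averaged state beliefs for each time step of a trial. The helper names and the toy trial are hypothetical, and the flat prior counts are an assumption.

```python
def outer(o, s):
    """Outer (Kronecker) product of an outcome vector and a state vector."""
    return [[oj * si for si in s] for oj in o]

def update_dirichlet(a, outcomes, state_beliefs):
    """One-step update at the end of a trial: add the outer product for every time step."""
    a_new = [row[:] for row in a]
    for o, s in zip(outcomes, state_beliefs):
        inc = outer(o, s)
        for j in range(len(a_new)):
            for i in range(len(a_new[j])):
                a_new[j][i] += inc[j][i]
    return a_new

def expected_likelihood(a):
    """Posterior mean of the likelihood matrix: each column divided by its sum."""
    col_sums = [sum(row[i] for row in a) for i in range(len(a[0]))]
    return [[row[i] / col_sums[i] for i in range(len(row))] for row in a]

a_prior = [[1.0, 1.0],
           [1.0, 1.0]]                          # flat Dirichlet counts (assumed)
outcomes = [[1, 0], [1, 0], [0, 1]]             # one-hot outcomes over three time steps
beliefs = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]]  # state beliefs at each time step

a_post = update_dirichlet(a_prior, outcomes, beliefs)
A_mean = expected_likelihood(a_post)
```

Each state and outcome pairing increments the corresponding count in proportion to the belief that the state was occupied, which is the Hebbian character of the rule: co-active pre- and post-synaptic units strengthen their connection.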

As one can see, the learning rule concerning accumulation of Dirichlet parameters (21) means that the agent becomes increasingly confident about its likelihood matrix by receiving new observations, since the matrix which is added onto $\mathbf{a}$ at each timestep has non-negative entries. This is fine as long as the structure of the environment remains relatively constant. In the next section, we will see how Bayesian model reduction can reverse this process, to enable the agent to adapt quickly to a changing environment. Table 3 summarises the belief updating entailed by active inference, and Fig. 5 indicates where particular computations might be implemented in the brain.

Table 3.

Summary of belief updating.

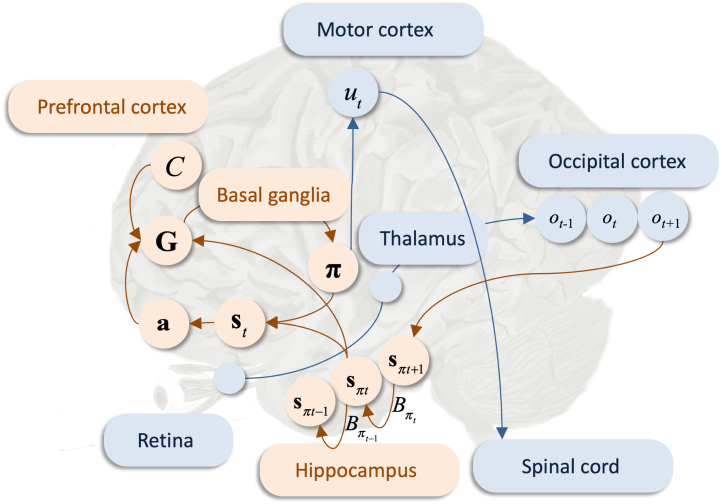

Fig. 5.

Possible functional anatomy. This figure summarises a possible (coarse-grained) functional anatomy that could implement belief updating in active inference. The arrows correspond to message passing between different neuronal populations. Here, a visual observation is sampled by the retina, aggregated in first-order sensory thalamic nuclei and processed in the occipital (visual) cortex. The green arrows correspond to message passing of sensory information. This signal is then propagated (via the ventral visual pathway) to inferior and medial temporal lobe structures such as the hippocampus; this allows the agent to go from observed outcomes to beliefs about their most likely causes in state-estimation (perception), which is performed locally. The variational free energy is computed in the striatum. The orange arrows encode message passing of beliefs. Preferences are attributed to the dorsolateral prefrontal cortex – which is thought to encode representations over prolonged temporal scales (Parr & Friston, 2017b) – consistent with the fact that these are likely to be encoded within higher cortical areas (Friston, Lin et al., 2017). The expected free energy is computed in the medial prefrontal cortex (Friston, FitzGerald et al., 2017) during planning, which leads to inferences about most plausible policies (decision-making) in the basal ganglia, consistent with the fact that the basal ganglia is thought to underwrite planning and decision-making (Berns and Sejnowski, 1996, Ding and Gold, 2013, Haber, 2003, Jahanshahi et al., 2015, Parr and Friston, 2018b, Thibaut, 2016). The message concerning policy selection is sent to the motor cortex via thalamocortical loops. The most plausible action, which is selected in the motor cortex, is passed on through the spinal cord to trigger a limb movement. Simultaneously, policy-independent state-estimation is performed in the ventrolateral prefrontal cortex, which leads to synaptic plasticity dynamics in the prefrontal cortex, where the synaptic weights encode beliefs about the likelihood matrix $A$.

9. Structure learning

In the previous sections, we have addressed how an agent performs inference over different variables at different timescales in a biologically plausible fashion, which we equated to perception, planning and decision-making. In this section, we consider the problem of learning the form or structure of the generative model.

The idea here is that agents are equipped (e.g., born) with an innate generative model that entails fundamental preferences (e.g., essential to survival), which are not updated. For instance, humans are born with prior preferences about their body temperature being around 37 °C and about their O₂, CO₂ and glucose concentrations lying within a certain range. Mathematically, this means that the parameters of these innate prior distributions – encoding the agent’s expectations as part of its generative model – have hyperpriors that are infinitely precise (e.g., a Dirac delta distribution) and thus cannot be updated in an experience-dependent fashion. The agent’s generative model then naturally evolves by minimising variational free energy to become a good model of the agent’s environment but is still constrained by the survival preferences hardcoded within it. This process of learning the generative model (i.e., the variables and their functional dependencies) is called structure learning.

Structure learning in active inference is an active area of research. Active inference proposes that the agent’s generative model evolves over time to maximise the evidence for its observations. However, a complete set of mechanisms that biological agents use to do so has not yet been laid out. Nevertheless, we use this section to summarise two complementary approaches, namely Bayesian model reduction and Bayesian model expansion (Friston, Lin et al., 2017, Friston et al., 2018a, Friston and Penny, 2011, Smith et al., 2019), which make it possible to simplify and complexify the model, respectively.

9.1. Bayesian model reduction

To explain the causes of their sensations, agents must compare different hypotheses about how their sensory data are generated—and retain the hypothesis or model that is the most valid in relation to their observations (i.e., has the greatest model evidence). In Bayesian statistics, these processes are called Bayesian model comparison and Bayesian model selection—these correspond to scoring the evidence for various generative models in relation to available data and selecting the one with the highest evidence (Claeskens and Hjort, 2006, Stephan et al., 2009). Bayesian model reduction (BMR) is a particular instance of structure learning, which formalises post-hoc hypothesis testing to simplify the generative model. This precludes redundant explanations of sensory data—and ensures the model generalises to new data. Technically, it involves estimating the evidence for simpler (reduced) priors over the latent causes and selecting the model with the highest evidence. This process of simplifying the generative model – by removing certain states or parameters – has a clear biological interpretation in terms of synaptic decay and switching off certain synaptic connections, which is reminiscent of the synaptic mechanisms of sleep (e.g., REM sleep (Hobson and Friston, 2012, Hobson et al., 2014)), reflection and associated machine learning algorithms (e.g., the wake–sleep algorithm (Hinton et al., 1995)).

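In the following, we show BMR for learning the likelihood matrix $A$. Note that BMR is generic and could be used on any other variable that may be optimised during learning (e.g., see Appendix A.1), just by replacing $A$ in the following lines. To keep things concise, we denote by $o$ the sequence of available observations. The current model has a prior $P(A)$ and we would like to test whether a reduced (i.e., less complex) prior $\tilde{P}(A)$ can provide a more parsimonious explanation for the observed outcomes. Using Bayes rule, we have the following identities:

$$P(A \mid o)\, P(o) = P(o \mid A)\, P(A) \tag{22}$$

$$\tilde{P}(A \mid o)\, \tilde{P}(o) = P(o \mid A)\, \tilde{P}(A) \tag{23}$$

where $P(o) = \int P(o \mid A)\, P(A)\, dA$ and $\tilde{P}(o) = \int P(o \mid A)\, \tilde{P}(A)\, dA$. Dividing (22) by (23) yields

$$\frac{P(A \mid o)\, P(o)}{\tilde{P}(A \mid o)\, \tilde{P}(o)} = \frac{P(A)}{\tilde{P}(A)} \tag{24}$$

We can then use (24) in order to obtain the following relations:

$$1 = \int \tilde{P}(A \mid o)\, dA = \int P(A \mid o)\, \frac{\tilde{P}(A)\, P(o)}{P(A)\, \tilde{P}(o)}\, dA = \frac{P(o)}{\tilde{P}(o)}\, \mathbb{E}_{P(A \mid o)}\!\left[\frac{\tilde{P}(A)}{P(A)}\right] \tag{25}$$

$$\ln \tilde{P}(o) - \ln P(o) = \ln \mathbb{E}_{P(A \mid o)}\!\left[\frac{\tilde{P}(A)}{P(A)}\right] \tag{26}$$

We can approximate the posterior term in the expectation of (26) with the corresponding approximate posterior $Q(A)$, which simplifies the computation. This allows us to compare the evidence of the two models (reduced and full). If the reduced model has more evidence, it implies that the current model is too complex, and redundant parameters can be removed by adopting the new priors.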
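When the full prior, the reduced prior and the approximate posterior over a column of the likelihood matrix are all Dirichlet distributions, the expectation in (26) has a closed form in terms of multivariate beta functions. The sketch below works under that assumption for a single column; the function names and the numerical values are hypothetical, and the code is an illustration rather than the implementation used in the cited work.

```python
from math import exp, lgamma


def log_beta(alpha):
    """Log of the multivariate beta function B(alpha)."""
    return sum(lgamma(x) for x in alpha) - lgamma(sum(alpha))


def bmr_log_evidence_gain(a_prior, a_post, a_reduced):
    """ln P~(o) - ln P(o) for Dirichlet counts over one column of A.

    a_prior:   counts of the full prior P(A)
    a_post:    counts of the (approximate) posterior Q(A) under the full model
    a_reduced: counts of the reduced prior P~(A)
    """
    # Counts of the posterior that the reduced model would have obtained.
    a_reduced_post = [q + r - p for q, r, p in zip(a_post, a_reduced, a_prior)]
    if min(a_reduced_post) <= 0:
        raise ValueError("reduced posterior counts must be positive")
    return (log_beta(a_prior) - log_beta(a_reduced)
            + log_beta(a_reduced_post) - log_beta(a_post))


# Hypothetical example: two outcomes, and the first one was observed 10 times.
a_prior = [1.0, 1.0]      # full (flat) prior
a_post = [11.0, 1.0]      # posterior after accumulating the 10 observations
a_reduced = [2.0, 0.5]    # reduced prior that favours the first outcome

gain = bmr_log_evidence_gain(a_prior, a_post, a_reduced)
bayes_factor = exp(gain)  # P~(o) / P(o)
print(gain > 0)           # True when the reduced prior has more evidence
```

Only the counts of the two priors and of the full posterior are needed, so the reduced model never has to be fitted to the data. A positive gain favours adopting the reduced prior.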

In conclusion, BMR allows for computationally efficient and biologically plausible hypothesis testing, to find simpler explanations for the data at hand. It has been used to emulate sleep and reflection in abstract rule learning (Friston, Lin et al., 2017), by simplifying the prior over $A$ at the end of each trial. This has the additional benefit of preventing the agent from becoming overconfident.

9.2. Bayesian model expansion

Bayesian model expansion is complementary to Bayesian model reduction. It entails adopting a more complex generative model – for example, by adding more states – if and only if the gain in accuracy in (3) is sufficient to outweigh the increase in complexity. This model expansion allows for generalisation and concept learning in active inference (Smith et al., 2019). Note that additional states need not always lead to a more complex model. It is in principle possible to expand a model in such a way that complexity decreases, as many state estimates might be able to remain close to their priors in place of a small number of estimates moving a lot. This ‘shared work’ by many parameters could lead to a simpler model.

From a computational perspective, concept acquisition can be seen as a type of structure learning (Gershman and Niv, 2010, Tervo et al., 2016) that can be emulated through Bayesian model comparison. Recent work on concept learning in active inference (Smith et al., 2019) shows that a generative model equipped with extra hidden states can engage these ‘unused’ hidden states when an agent is presented with novel stimuli during the learning process. Initially the corresponding likelihood mappings (i.e., the corresponding columns of $A$) are uninformative, but these are updated when the agent encounters new observations that cannot be accounted for by its current knowledge (e.g., observing a cat when it has only been exposed to birds). This happens naturally, during the learning process, in an unsupervised way through free energy minimisation. To allow for effective generalisation, this approach can be combined with BMR, in which any new concept can be aggregated with similar concepts, and the associated likelihood mappings can be reset for further concept acquisition, in favour of a simpler model with higher model evidence. This approach can be further extended by updating the number of extra hidden states through a process of Bayesian model comparison.
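The way a spare hidden state can be recruited by novel stimuli can be sketched in a deliberately simplified setting. The example below assumes a single hidden-state factor, a flat prior over states, exact Bayesian state estimation in place of variational message passing, and Dirichlet counts that are incremented by the posterior over states. The outcome labels, counts and function names are hypothetical.

```python
def normalise(values):
    """Scale non-negative values so that they sum to one."""
    total = sum(values)
    return [v / total for v in values]


def expected_likelihood(a):
    """Posterior mean of A: each column of counts normalised to sum to one."""
    n_states = len(a[0])
    sums = [sum(row[j] for row in a) for j in range(n_states)]
    return [[row[j] / sums[j] for j in range(n_states)] for row in a]


def infer_state(a, outcome, prior):
    """Posterior over hidden states given one observed outcome index."""
    A = expected_likelihood(a)
    return normalise([A[outcome][j] * prior[j] for j in range(len(prior))])


def learn(a, outcome, posterior):
    """Add the posterior over states to the counts in the observed row."""
    updated = [row[:] for row in a]
    for j, q in enumerate(posterior):
        updated[outcome][j] += q
    return updated


# Rows: outcome 0 = "chirp", outcome 1 = "meow".
# Columns: state 0 = "bird" (well learned), state 1 = spare (flat counts).
a = [[20.0, 0.5],
     [0.1, 0.5]]
prior = [0.5, 0.5]

# The agent repeatedly encounters the novel outcome "meow".
for _ in range(10):
    q = infer_state(a, 1, prior)
    a = learn(a, 1, q)

A_mean = expected_likelihood(a)
print(q)          # posterior over states on the last trial
print(A_mean[1])  # expected P("meow" | state) for each state
```

In this sketch the well-learned column assigns little probability to the novel outcome, so the posterior attributes it to the spare state. The spare column then acquires the new mapping, while the existing column is left almost unchanged.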

10. Discussion

Due to the various recent theoretical advances in active inference, it is easy to lose sight of its underlying principle, process theory and practical implementation. We have tried to address this by rehearsing – in a clear and concise way – the assumptions underlying active inference as a principle, the technical details of the process theory for discrete state-space generative models and the biological interpretation of the accompanying neuronal dynamics. Clarifying these results is useful as a first step towards outstanding theoretical research challenges, as a practical guide for implementing active inference to simulate experimental behaviour, and as a pointer towards various predictions that may be tested empirically.

Active inference offers a degree of plausibility as a process theory of brain function. From a theoretical perspective its requisite neuronal dynamics correspond to known empirical phenomena and extend earlier theories like predictive coding (Bastos et al., 2012, Friston, 2010, Rao and Ballard, 1999). Furthermore, the process theory is consistent with the underlying free energy principle, which biological systems are thought to abide by—namely, the avoidance of surprising states: this can be articulated formally based on fundamental assumptions about biological systems (Friston, 2019, Parr et al., 2020). Lastly, the process theory has a degree of face validity as its predicted electrophysiological responses closely resemble empirical measurements.

However, for a full endorsement of the process theory presented in this paper, rigorous empirical validation of the synthetic electrophysiological responses is needed. To pursue this, one would have to specify the generative model that a biological agent employs for a particular task. This can be done through Bayesian model comparison of alternative generative models with respect to the empirical (choice) behaviour being measured (e.g., Mirza et al., 2018). Once the appropriate generative model is formulated, the evidence for plausible but distinct implementations of active inference would need to be compared. These implementations come from various possible approximations to the free energy (Parr, Markovic et al., 2019, Schwöbel et al., 2018, Yedidia et al., 2005), each of which yields different belief updates and simulated electrophysiological responses. Note that the marginal approximation to the free energy currently stands as the most biologically plausible (Parr, Markovic et al., 2019). From this, the explanatory power of active inference can be assessed in relation to empirical measurements and contrasted with other existing theories.

This means that the key challenge for active inference – and arguably data analysis in general – is finding the generative model that best explains observable data (i.e., evidence maximising). A solution to this problem would enable one to find the generative model – entailed by an agent – by observing its behaviour. In turn, this would enable one to simulate its belief updating and behaviour accurately in-silico. It should be noted that these generative models can be specified manually for the purposes of reproducing simple behaviour (e.g., agents performing simple tasks needed for empirical validation discussed above). However, a generic solution to this problem is necessary to account for complex datasets; in particular, complex behavioural data from agents in a real environment. Moreover, a biologically plausible solution to this problem could correspond to a complete structure learning roadmap; accounting for how biological agents evolve their generative model to account for new observations. Evolution has solved this problem by selecting phenotypes with a good model of their sensory data. Therefore, understanding the processes that have selected generative models that are fit for purpose for our environment might lead to important advances in structure learning and data analysis.

Discovering new generative models corresponding to complex behavioural data will require extending the current process theory to these models, in order to provide testable predictions and reproduce the observed behaviour in-silico. Examples of generative models that are used in learning and decision-making, yet are not accommodated by the current process theory, include Markov decision trees (Jordan et al., 1998, Jordan et al., 1997) and Boltzmann machines (Ackley et al., 1985, Salakhutdinov and Hinton, 2012, Stone, 2019).

One challenge that may arise when scaling active inference to complex models with many degrees of freedom is the size of the policy trees under consideration. Although effective and biologically plausible, the current pruning strategy is unlikely to reduce the search space sufficiently to enable tractable inference in such cases. As noted above, the issue of scaling active inference may yield to the first principles of the variational free energy formulation. Specifically, generative models with a high evidence are minimally complex. This suggests that ‘scaling up’, in and of itself, is not the right strategy for reproducing more sophisticated or deep behaviour. A more principled approach would be to explore the right kind of factorisations necessary to explain structured behaviour. Key candidates here are deep temporal or diachronic generative models that have a separation of timescales. This form of factorisation (cf. mean field approximation) replaces deep decision trees with shallow decision trees that are hierarchically composed.

To summarise, we argue that some important challenges for theoretical neuroscience include finding process theories of brain function that comply with active inference as a principle (Friston, 2019, Parr et al., 2020); namely, the avoidance of surprising events. The outstanding challenge is then to explore and fine-grain such process theories, via Bayesian model comparison (e.g., using dynamic causal modelling (Friston, 2012, Friston et al., 2003)) in relation to experimental data. From a structure learning and data analysis perspective, the main challenge is finding the generative model with the greatest evidence in relation to available data. This may be achieved by understanding the processes by which evolution has selected for creatures with a good model of their environment. Finally, to scale active inference to behaviour with many degrees of freedom, one needs to understand how biological agents effectively search deep policy trees when planning into the future, when many possible policies may be entertained at separable timescales.

11. Conclusion