Abstract

Developing a clearer understanding of impairments that underlie the behavioral characteristics of aphasia is essential for the development of targeted treatments and will help inform theories of speech motor control. Impairments in sensorimotor integration of speech in individuals with conduction aphasia have previously been implicated in their repetition deficits. However, much less is known about the extent to which these integrative deficits occur outside of conduction aphasia and how they manifest behaviorally in areas other than speech repetition. In this study, we aimed to address these issues by examining the behavioral correlates of speech sensorimotor impairment under altered auditory feedback (AAF) and their relationship with the impaired ability to independently correct for online errors during picture naming in people with aphasia. We found that people with aphasia generate slower vocal compensation responses to pitch-shift AAF stimuli compared with controls. However, when the timing of responses was controlled for, no significant difference in the magnitude of vocal pitch compensation was observed between aphasia and control groups. Moreover, no relationship was found between self-correction of naming errors and the timing and magnitude of vocal compensation responses to AAF. These findings suggest that slowed compensation is a potential behavioral marker of impaired sensorimotor integration in aphasia.

Keywords: Vocal compensation, Sensorimotor integration, Auditory feedback, Aphasia, Stroke

Introduction

Sensorimotor integration is the means by which movements are guided by sensory information. Sensory input is critical in the development of speech as well as for online error monitoring and correction. This type of information not only helps define the target of an action, but it also provides feedback during the planning and execution stages of the action. For speech production, both auditory and somatosensory information play important roles (Golfinopoulos et al., 2010; Hickok, 2012; Hickok, 2014; Tourville & Guenther, 2011). This has been demonstrated in studies examining both natural (i.e. hearing loss) and artificially manipulated auditory input (Houde & Jordan, 1998; Liu et al., 2011; Perkell et al., 2000; Ryan & Van Kirk, 1974; Waldstein, 1990; Yates, 1963). Although intelligibility tends to remain relatively high, adult onset deafness has been shown to negatively impact speech over time with declines noted in articulatory precision, loudness adjustments based on the auditory environment, and phonetic contrasts (Perkell et al., 2000; Waldstein, 1990). In terms of artificially manipulated auditory input, two common manipulations are delayed auditory feedback (DAF) and altered auditory feedback (AAF). In the first case, the speech signal itself is not manipulated; it is simply returned to the listener at a short delay. In the latter case, some parameter of the speech signal (e.g. pitch, formant structure, etc.) is altered externally and returned to the listener quickly enough that no delay is usually perceived. DAF has been shown to increase disfluencies in neurologically healthy speakers but reduce disfluencies in speakers who stutter (e.g. Yates, 1963; Ryan & Van Kirk, 1974). In the case of AAF, pitch or formant shifted feedback is provided to neurologically healthy speakers, and vocal compensation in the direction opposite to the shift occurs automatically and often without conscious awareness (e.g. Houde & Jordan, 1998; Liu, et al., 2011).

Models of speech production

The importance of sensory information has also been reflected in models of speech production. Models proposed as early as the 1960s emphasized the importance of sensory targets during speech production and perception (e.g. Fant, 1960; Stevens, 1998). More recently, speech models have been influenced by models of motor control, neurolinguistics, computational modeling, and neuroimaging data. These neurocomputational models have aimed to bring together different accounts of speech production and sought to provide a basis for specific hypotheses that may be tested empirically (Guenther & Hickok, 2016). Of particular interest is the Hierarchical State Feedback Control (HSFC) model (Hickok, 2012), a neuropsychological model of speech production which emphasizes sensory targets (auditory and somatosensory) as well as sensorimotor integration as critical components of the speech production process.

The HSFC model represents a synthesis of models of motor control, especially those including internal forward models, as well as psycholinguistic models of speech production, particularly the two-stage model described by Dell (1986) and Levelt (1999) (Hickok, 2012, 2014). It builds upon an integrated state feedback control model by adding a hierarchical component. The HSFC model has built-in mechanisms to guide speech production: inverse correction and forward prediction. Inverse correction is a feedback control mechanism where mismatches between sensory expectations and sensory feedback are monitored. Conversely, forward prediction is a feedforward mechanism based upon learned motor programs that define auditory and somatosensory targets for production. During speaking, these mechanisms integrate, and the process of error detection and transformation of error signals into corrective motor commands is the mechanism of interest to the current study – sensorimotor integration. Another neurocomputational model of speech production, the Directions into Velocities of Articulators (or DIVA) model also emphasizes feedback and feedforward control mechanisms as critical for speech production (Golfinopoulos et al., 2010; Tourville & Guenther, 2011; Tourville, Reilly, & Guenther, 2008). The difference, however, is that the HSFC model includes both internal and external feedback loops whereas the feedback control mechanism is solely external in DIVA (Guenther & Hickok, 2016). In other words, the HSFC model includes a feedback component that can detect and correct errors that occur before speech is produced whereas in the DIVA model, the error must be overtly produced first to be corrected.

Both the DIVA and HSFC models largely focus on the feedback and feedforward mechanisms that are critical to examining sensorimotor integration in individuals with aphasia. They each attempt to account for a range of phenomena including aspects of speech development, fluent and non-fluent speech production, as well as a range of disorders including aphasia, apraxia of speech, dysarthria and stuttering (Golfinopoulos et al., 2010; Hickok 2012, 2014). Perhaps most relevant to the current study is the HSFC model’s account of error detection in inner speech, the rapid correction of articulated speech, and the unique speech features of conduction aphasia (Hickok 2012, 2014).

Sensorimotor integration in aphasia

Impaired sensorimotor integration has been implicated as underlying speech impairments in aphasia (e.g. Eggert, 1977; Hickok, 2012). One type of aphasia, conduction aphasia, is characterized by relatively preserved speech fluency as well as preserved auditory comprehension (Geschwind, 1965). Paraphasias may occur in spontaneous speech and are often phonological. However, errors are typically detected by the individual who, in turn, attempts to self-correct. Often these self-corrections will be repetitive and approximate the target word. This is known as the conduite d’approche (Goodglass, 1992). Despite relative strengths in fluency and comprehension, individuals with conduction aphasia tend to have marked difficulty with speech repetition (Geschwind, 1965). As mentioned previously, repetition abilities have been used as an indicator of the integrity of the sensorimotor system during speech, and critically, it distinguishes transcortical aphasias from Broca’s and Wernicke’s aphasias (Rogalsky et al., 2015). Despite the presence of speech repetition difficulties in many types of aphasia, this symptom is typically considered the hallmark impairment of conduction aphasia.

The HSFC model attempts to account for the distinctive combination of behaviors present in conduction aphasia by building upon Wernicke’s (1908) theory that they result from the disconnection of motor and sensory speech systems (Hickok 2012, 2014). While disconnection of neural pathways may not necessarily be required for impairment to occur, incoordination or miscommunication between the two systems (i.e. sensory and motor) would likely be sufficient to cause this pattern of impairment. According to this theory, paraphasias would occur because the sensory system would no longer provide guidance for speech production as a result of the incoordination between systems. What Hickok (2012, 2014) adds to Wernicke’s (1908) original ideas is an explanation of how and why the sensory system is also involved in speech production. As described above, in the HSFC model, the sensory system defines the targets of the speech act, and without access to that critical information the system is more likely to produce errors (Hickok 2012, 2014).

Historically, conduction aphasia has been attributed to damage to the arcuate fasciculus – the major neural pathway that connects motor and sensory systems (i.e. Broca’s and Wernicke’s areas) in the classical model (Geschwind, 1965; Lichtheim, 1885; Wernicke, 1908). As such, conduction aphasia was thought of as a disconnection syndrome. More recent evidence has pointed to cortical damage as the culprit – particularly a region in the left posterior temporo-parietal junction known as area Spt (Buchsbaum et al., 2011; Hickok, Houde, & Rong, 2011; Rogalsky et al., 2015). This region of auditory cortex has been shown to activate during both phonological short-term memory tasks as well as speech production tasks (e.g. Buchsbaum et al., 2011; Buchsbaum & D’Esposito, 2008; Hickok et al., 2000). Not only that, lesions to left area Spt were associated with poor non-word and real word repetition even after accounting for damage to white matter pathways (Rogalsky et al., 2015). Other lesion studies have found similar results (e.g. Baldo, Katseff, & Dronkers, 2011; Dell et al., 2013; Fridriksson et al., 2010).

Due to the overlap in findings of left Spt activation for both memory tasks and speech production tasks, some have posited that conduction aphasia occurs as the result of damage to the phonological working memory buffer (Baldo, Klostermann, & Dronkers, 2008; Caramazza, Basili, Koller, & Berndt, 1981; Shallice & Warrington, 1977). In Baddeley’s (1992) model of working memory, the phonological store serves to maintain a speech-related memory trace for a brief period of time spanning no more than approximately two seconds. When paired with the articulatory system, the phonological loop (i.e. the phonological store/buffer plus speech articulation) can maintain information via subvocal rehearsal (Baddeley, 1992).

Damage to an individual’s phonological store could certainly explain repetition deficits in conduction aphasia. Maintaining an auditory phonological trace is critical -- especially for long sequences and nonwords. However, that type of deficit cannot account for the paraphasias present in conduction aphasia because no auditory trace must be maintained in that case (Buchsbaum et al., 2011, but cf. Martin, Dell, Saffran, & Schwartz, 1994; Martin & Saffran, 1997). Instead, Buchsbaum and colleagues (2011) argue that phonological short-term memory results from the interaction between sensorimotor systems. According to this view, the phonological store is not a separate system specialized for working memory. Instead, it is the same phonological system used during comprehension to process speech. Leff and colleagues (2009) provided evidence for this view in their study of 210 stroke survivors. Individuals with aphasia were administered the Comprehensive Aphasia Test (CAT; Swinburn et al., 2004), and a digit span task was used as a measure of auditory short-term memory. They found that auditory short-term memory deficits were associated with damage to posterior portions of the superior temporal gyrus and sulcus, even after accounting for performance on repetition, verbal fluency, and picture naming tasks. They also found that integrity of that region was predictive of performance on language comprehension tasks from the CAT, suggesting a common substrate for short-term memory and comprehension (Leff et al., 2009). While this may call into question the comprehension abilities of people with conduction aphasia, it is possible that subtle deficits in sensorimotor integration can affect behavior differentially. For example, comprehension may be able to draw on other contextual resources or subtle deficits may exist there as well, despite its status as a relative strength in this population. 
The task demands of any given assessment of comprehension will vary, and it could be that comprehension deficits are only revealed in particular environments. Shallice and Warrington (1977) argue that many comprehension tasks are “semantically overdetermined” and that comprehension deficits may only be demonstrated with more complex sentence types. Results from a more recent study suggest that people with conduction aphasia perform well comprehending relative clauses that require reactivation of semantic or syntactic information but not for those requiring reactivation of phonological information (Gvion & Friedmann, 2012). However, in an early study by Caramazza and Zurif (1976) examining comprehension abilities in multiple aphasia types, they demonstrated that those with conduction aphasia performed similarly to those with Broca’s aphasia. Both groups did well when comprehension relied on semantic information but performed more poorly when they had to rely on syntactic information to derive meaning (Caramazza & Zurif, 1976). Though the relationship between working memory and comprehension has been controversial for many decades, a recent meta-analysis compiling data from 26 studies of people with aphasia showed that the two abilities were correlated (Varkanitsa & Caplan, 2018). Given these results as well as previous research from both sides of the topic, it seems that while comprehension is largely preserved in conduction aphasia there are circumstances in which factors such as syntactic complexity may impact comprehension for multiple aphasia types. However, taken together with the results from Leff and colleagues’ (2009) study as well as evidence from previously mentioned lesion and functional imaging studies, there is support for the idea that left area Spt functions as a sensorimotor interface. Furthermore, it appears that sensorimotor integration may underlie both speech repetition and phonological working memory functions.

Altered auditory feedback (AAF) to examine sensorimotor integration

Studies examining AAF in neurotypical adults indicate that given an unexpected pitch alteration in auditory feedback during vowel prolongation, participants generate an on-line compensatory vocal response to correct for the perceived error (Liu et al., 2011). Limited research has been conducted in aphasia using delayed auditory feedback (DAF), and even fewer studies have used the AAF paradigm to examine speech sensorimotor integration deficits in this population (Boller et al., 1978; Chapin et al., 1981; Jacks & Haley, 2015; Niziolek & Kiran, 2018; Behroozmand et al., 2018). Two early studies examined the effect of delayed auditory feedback on a variety of language tasks in individuals with aphasia and controls (Boller et al., 1978; Chapin et al., 1981). Speech was rated subjectively for factors including intensity, duration, and quality. Boller and colleagues (1978) found effects of DAF on speech for controls (N=10), individuals with fluent aphasia (N=10), and individuals with non-fluent aphasia (N=10). In this study, raters were only asked to report whether a change in the specified factors occurred, so the nature of those changes was not fully described. However, the authors noted that those with non-fluent aphasia were affected more than those with fluent aphasia, and individuals with conduction aphasia showed the least effect. The follow-up study aimed to reanalyze the data by more closely examining factors contributing to the original quality ratings (Chapin et al., 1981). The authors determined that lengthening of vowels was the feature that most predominantly occurred when participants were subjected to DAF and hypothesized that DAF interferes mostly with execution of articulation. 
They also attributed the performance of the participants with conduction aphasia, who were less affected by DAF than all other groups including the controls, to a disconnection between sensory and motor centers much like that described by Wernicke (1908) and Geschwind (1965) (Chapin et al., 1981).

More recently, researchers have utilized AAF in aphasia in multiple ways. Jacks and Haley (2015) used combined DAF/AAF as a control condition for auditory masking to improve fluency in individuals with aphasia and/or apraxia of speech, citing its effectiveness for individuals that stutter. They found that only 1/10 participants improved in the AAF condition (as opposed to 7/10 in the auditory masking condition), and the primary effect of AAF was slowed rate (Jacks & Haley, 2015). Behroozmand and colleagues (2018) utilized AAF to specifically examine vocal correction in response to upward and downward frequency shifts during vowel prolongation. In this case, vowel prolongation was used rather than language production because most of the participants with aphasia were able to consistently produce the sound across the hundreds of trials that were included in the task. While there was much variability in the responses of the individuals with aphasia, they did produce a vocal response opposite to the direction of the shift as was expected based on previous studies conducted with neurologically healthy controls. In other words, participants were perceiving that the pitch of their voice was shifting slightly upwards or downwards while vocalizing the vowel sound. To compensate for the perceived change, they automatically changed their vocal output in the direction opposite to the feedback shift in order to bring it back to the intended level. Again, note that corrections to changes that are this subtle often occur without conscious awareness. Though the individuals with aphasia did show a vocal adaptation, it was diminished as compared to the control group (Behroozmand et al., 2018). They also reported a negative correlation between performance on the speech repetition subtest of the Western Aphasia Battery-Revised (WAB-R; Kertesz, 2006) and the magnitude of vocal compensation in response to AAF (Behroozmand et al., 2018).

An underlying impairment in sensorimotor integration has the potential to affect an individual in varying ways. As mentioned previously, impaired speech repetition abilities have been attributed to deficits in sensorimotor integration for people with conduction aphasia (e.g. Eggert, 1977; Hickok, 2012; Hickok et al., 2011). There is evidence that lower-level motor processes can be affected in aphasia and have the potential to account for errors that are typically associated with language processing, too. For example, Kurowski and Blumstein (2016) discovered acoustic features of the target word in phonemic paraphasias produced by individuals with a variety of aphasia types. In other words, competing articulatory plans resulted in some features of the target word being preserved in the error production. This indicates that the error was not simply an incorrectly selected phoneme at the phonological level that was then correctly produced (Kurowski & Blumstein, 2016). Others have also found subtle indicators of lower-level impairment in speech production (e.g. unstable phoneme production, phoneme distortions) and auditory perception (e.g. feedback processing) that cannot be explained by a co-occurring motor speech disorder (Blumstein, 1994; Blumstein et al., 1977, 1980; Haley et al., 2013; Niziolek & Kiran, 2018). While a recent study uncovered different patterns of brain damage associated with apraxia of speech compared to aphasia, the two did not completely dissociate (Basilakos et al., 2015). This also contributes to the difficulty of clearly distinguishing between the underlying mechanisms of a given behavioral impairment.

The current study aimed to begin to fill the gap in terms of what is known about sensorimotor integration for speech processing in aphasia and its behavioral manifestation. What is not well understood is the extent to which these integrative deficits occur outside of conduction aphasia and how they might impact areas other than speech repetition. The first goal of the study was to examine the behavioral correlates of sensorimotor impairment of speech auditory feedback in individuals with aphasia using the AAF paradigm (Burnett et al., 1998). Here, we aim to replicate the results of the previous Behroozmand et al. (2018) study with a larger sample, and as such, we expected to find a smaller magnitude vocal compensation in the aphasia group as compared to the control group.

The second goal of the study was to determine whether vocal compensation in response to AAF during vowel prolongation is related to the ability to self-correct for online errors during a language task. At this point, it is not clear the extent to which integration impairments might manifest in spoken language tasks other than repetition. To that end, we planned to determine whether vocal compensatory behavior in response to AAF is correlated with self-correction behavior during a picture naming task. Naming deficits commonly occur across aphasia types and provide an opportunity to examine the relationship between sensorimotor integration and language production more broadly. Naming also has the benefit of providing a visual target so that auditory processing during the task is constrained to the individual’s processing of his or her own production. We hypothesized that weak responses to AAF would be associated with unsuccessful self-correction on the picture naming task. As a secondary analysis, we planned to determine which cortical regions, when damaged, are associated with impaired sensorimotor integration and self-correcting behavior. Left area Spt has been shown previously to play an important role in sensorimotor integration (e.g. Anderson et al., 1999; Fridriksson et al., 2010; Hickok, Buchsbaum, Humphries, & Muftuler, 2003; Rogalsky et al., 2015), and therefore, we hypothesized that behavioral performance on both the AAF and naming task would be associated with the posterior parieto-temporal regions. More specifically, individuals with damage to area Spt should be more likely to show lower levels of vocal compensation during the AAF task and fewer successful self-corrections on picture naming tasks whereas those with spared area Spt should show levels of vocal compensation more comparable with that of the control group and more successful self-corrections on the naming task.

Methods

Participants

A total of 30 individuals with chronic aphasia resulting from left hemisphere stroke were recruited to participate in this study (see Table 1). The inclusion criteria were the following: 1) Left hemisphere stroke confirmed by MRI; 2) Aphasia diagnosis; 3) At least six months post-stroke; 4) Monolingual native English speaker; 5) Ages 30 – 80; 6) Able to provide verbal or written informed consent. Exclusion criteria included self-reported (or caregiver-reported) history of 1) Dementia, traumatic brain injury, or psychiatric disorder; 2) Alcohol abuse. Note that a subset of this sample was included in Behroozmand et al. (2018). One individual who scored just above the cutoff for aphasia on the Western Aphasia Battery-Revised (WAB-R; Kertesz, 2006) was excluded. An additional 4 participants who were unable to complete the behavioral tasks were excluded, and another individual was excluded after becoming too fatigued during the AAF task. Therefore, data from a total of 24 individuals with aphasia (5 female) were included in the analyses detailed below. Of the 24 participants, 15 were included in the analyses detailed in the previous study by Behroozmand and colleagues (2018). The mean age for this group was 58.8 years (range = 38 – 78) and the mean time post-stroke was 64.9 months (range = 18 – 229). The average aphasia quotient score on the WAB-R was 61.1 (range = 31.4 – 91.1), and 5 individuals had anomic aphasia, 13 individuals had Broca’s aphasia, 5 individuals had conduction aphasia, and 1 individual had global aphasia according to the WAB-R’s classification criteria.

Table 1.

Participant characteristics.

| Aphasia | | | | | | Control | | | |
|---|---|---|---|---|---|---|---|---|---|
| Participant | Age | Sex | Ed | AQ | Aphasia Type | Participant | Age | Sex | Ed |
| A1 | 56 | M | 16 | 57.5 | Broca’s | C1 | 52 | F | 22 |
| A2 | 62 | M | 10 | 90.2 | Anomic | C2 | 58 | M | 10 |
| A3 | 65 | M | 18 | 34.4 | Conduction | C3 | 56 | M | 23 |
| A4 | 57 | F | 13 | 74.8 | Broca’s | C4 | 71 | M | 18 |
| A5 | 57 | M | 16 | 51.7 | Conduction | C5 | 68 | F | 13 |
| A6 | 68 | M | 16 | 82.9 | Conduction | C6 | 59 | F | 22 |
| A7 | 78 | M | 16 | 72.1 | Anomic | C7 | 67 | F | 14 |
| A8 | 61 | F | 14 | 55.9 | Conduction | C8 | 60 | F | 20 |
| A9 | 46 | M | 16 | 49.1 | Broca’s | C9 | 65 | M | 18 |
| A10 | 73 | M | 16 | 55.3 | Broca’s | C10 | 56 | F | 18 |
| A11 | 52 | M | 16 | 43 | Broca’s | C11 | 63 | F | 18 |
| A12 | 43 | F | 16 | 55.2 | Broca’s | C12 | 60 | F | 16 |
| A13 | 60 | M | 12 | 91.1 | Anomic | C13 | 59 | M | 17 |
| A14 | 67 | M | 16 | 38.9 | Broca’s | C14 | 75 | M | 23 |
| A15 | 62 | F | 16 | 85.8 | Anomic | C15 | 69 | F | 23 |
| A16 | 69 | M | 14 | 72.7 | Conduction | C16 | 67 | M | 14 |
| A17 | 61 | M | 12 | 52.1 | Broca’s | C17 | 56 | F | 16 |
| A18 | 38 | F | 18 | 65.5 | Broca’s | C18 | 56 | F | 13 |
| A19 | 60 | M | 18 | 72.2 | Broca’s | C19 | 65 | F | 12 |
| A20 | 72 | M | 16 | 84.2 | Anomic | C20 | 76 | M | 12 |
| A21 | 44 | M | 16 | 65.9 | Broca’s | MEAN | 62.9 | | 17.1 |
| A22 | 51 | M | 12 | 53 | Broca’s | | | | |
| A23 | 49 | M | 16 | 31.4 | Global | | | | |
| A24 | 60 | M | 12 | 31.4 | Broca’s | | | | |
| MEAN | 58.79 | | 15.04 | 61.10 | | | | | |

Ed = years of education; AQ = Aphasia Quotient score from the Western Aphasia Battery. Participants in bold font were also included in the Behroozmand et al. (2018) study.

In addition, 15 healthy controls were recruited from the University of South Carolina and the larger Columbia, SC community. The inclusion criteria were as follows: 1) Monolingual native English speaker; 2) Ages 30 – 80; 3) Able to provide verbal or written informed consent. Exclusion criteria included self-reported history of 1) Dementia, traumatic brain injury, psychiatric disorder, or neurologic disorder; 2) Alcohol abuse. An additional 5 participants were included who completed the same experimental task as part of a different study. Therefore, the analyses below included data from a total of 20 age-matched control participants (t(42) = −1.548, p = .129). Of the 20 participants, 6 were included in the analyses detailed in the previous study by Behroozmand and colleagues (2018). The mean age for this group was 62.9 years (range = 52 – 76). Note that the groups were not matched on sex (χ2(1) = 7.059, p = .008) or education (t(42) = −2.128, p = .039). However, these factors were not significant predictors of overall performance on either task (see Results for statistical confirmation of this statement), so the complete dataset from each group (minus the exclusions noted here) was included in the reported analyses.
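The group-matching statistics for age and sex can be recomputed from the values listed in Table 1. The following is a minimal sketch, not the authors' analysis code: it assumes an independent-samples t-test with pooled variance and a Pearson chi-square without continuity correction, neither of which is stated in the text, and the function names are hypothetical. Under these assumptions the statistics come out close to the reported t(42) = −1.548 and χ2(1) = 7.059. The p-values are not computed here.

```python
import math

# Ages and sex codes transcribed from Table 1 (aphasia: A1-A24, control: C1-C20).
aphasia_age = [56, 62, 65, 57, 57, 68, 78, 61, 46, 73, 52, 43,
               60, 67, 62, 69, 61, 38, 60, 72, 44, 51, 49, 60]
control_age = [52, 58, 56, 71, 68, 59, 67, 60, 65, 56, 63, 60,
               59, 75, 69, 67, 56, 56, 65, 76]
aphasia_sex = "MMMFMMMFMMMFMMFMMFMMMMMM"
control_sex = "FMMMFFFFMFFFMMFMFFFM"


def pooled_t(a, b):
    """Independent-samples t statistic with pooled variance (assumed variant).

    Returns the t statistic and its degrees of freedom.
    """
    na, nb = len(a), len(b)
    mean_a, mean_b = sum(a) / na, sum(b) / nb
    ss = sum((x - mean_a) ** 2 for x in a) + sum((x - mean_b) ** 2 for x in b)
    df = na + nb - 2
    se = math.sqrt(ss / df * (1 / na + 1 / nb))
    return (mean_a - mean_b) / se, df


def chi_square_2x2(table):
    """Pearson chi-square for a 2x2 count table, no continuity correction."""
    (a, b), (c, d) = table
    n = a + b + c + d
    return n * (a * d - b * c) ** 2 / ((a + b) * (c + d) * (a + c) * (b + d))


t_age, df_age = pooled_t(aphasia_age, control_age)
sex_table = [[aphasia_sex.count("F"), aphasia_sex.count("M")],
             [control_sex.count("F"), control_sex.count("M")]]
chi2_sex = chi_square_2x2(sex_table)

print(f"age: t({df_age}) = {t_age:.3f}")
print(f"sex: chi2(1) = {chi2_sex:.3f}")
```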

All participants underwent a hearing screening prior to behavioral testing. Of the 24 participants with aphasia, 12 had thresholds of 40 dB or less at 500, 1000, 2000, and 4000 Hz in each ear. Of the 20 control participants, 18 met the same criteria. The remaining participants had higher thresholds at 2000 to 4000 Hz. Because age-related hearing loss can reasonably be expected in a sample that includes older adults, these individuals were not excluded from the study. Instead, extra time was spent ensuring that the volume was set at a comfortable listening level prior to beginning each task.

The research was approved by the University of South Carolina Institutional Review Board and informed consent was provided by all participants prior to taking part in the study. All participants were compensated for their time.

Procedures

The experiment was conducted in a sound attenuated booth, and each participant’s speech signals were recorded during an AAF task and an object picture naming task. Before beginning each task, the investigator ensured the participant understood the instructions for the next block. Participants demonstrated understanding by responding to the relevant cues appropriately in five consecutive trials. Instructions were provided in multiple modalities (e.g. verbal, written, and picture cues) as necessary, and demonstrations and practice trials were conducted prior to recording.

Task 1: Vowel prolongation

In the first task, participants were instructed to sustain a steady /a/ vowel sound for 2–3 seconds at their conversational pitch and loudness. Since a large number of trials were required for the experiment, vowel prolongation was selected rather than production of larger speech segments because in most participants it could be reliably reproduced throughout the duration of the experimental task. Not only that, many previous studies that have utilized AAF to examine sensorimotor integration have employed vowel prolongation as well (Houde & Jordan, 1998; Liu et al., 2011). Participants viewed a computer screen, which cued the production of vocalizations with the word ‘go’ shown in black on a white background. They were given a 2–3 second break between trials. During each vocalization trial, a 200 ms pitch-shift stimulus with randomized magnitude at ±100 cents perturbed the auditory feedback. If participants expressed concern about hearing alterations in their auditory feedback, they were assured that it was okay if it sounded odd but were not explicitly told to correct for the altered feedback. The onset of pitch-shift stimuli was randomized to fall between 750 – 1250 ms after the onset of the vocalization. Previous research utilizing a similar paradigm has indicated that the magnitude of response is greater for down shifts than up shifts (Liu et al., 2011), so the two trial types were distinguished in the current experiment. Additionally, listening trials were interleaved between the vocalization trials to control for any potential task order effects. During the listening trials, a pre-recorded vocalization from earlier trials was played back to the participant, and they were instructed not to speak during these trials. The listening trials served as a control condition for analyses in which correction for the perturbation (rather than detection + correction) was examined in isolation. However, this control condition is not analyzed in the current study. 
Approximately 150 – 200 trials were collected per condition (vocalization versus listening) resulting in a total task duration of about 60 – 65 minutes. Appropriate breaks were provided throughout to address potential effects of fatigue. Additionally, testing was conducted over the course of two days so that any given day of testing did not exceed approximately three hours.
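The trial structure described above can be illustrated with a short scheduling sketch. This is not the Max patch used in the experiment. It assumes that the shift direction is chosen with equal probability and that the shift onset is drawn uniformly from the stated window; the text reports only that both were randomized. The function name and dictionary keys are hypothetical.

```python
import random


def make_trial_schedule(n_trials, seed=None):
    """Build a list of vocalization trials with randomized pitch-shift settings.

    Assumptions (not stated in the source): equal probability of up and down
    shifts, and uniform sampling of the onset within 750-1250 ms.
    """
    rng = random.Random(seed)
    trials = []
    for i in range(n_trials):
        trials.append({
            "trial": i + 1,
            # Direction of the perturbation: +100 cents (up) or -100 cents (down).
            "shift_cents": rng.choice([-100, 100]),
            # Shift onset relative to vocalization onset, in milliseconds.
            "shift_onset_ms": rng.uniform(750, 1250),
            # Fixed stimulus duration reported in the text.
            "shift_duration_ms": 200,
        })
    return trials


schedule = make_trial_schedule(150, seed=0)
n_up = sum(1 for trial in schedule if trial["shift_cents"] > 0)
print(f"{n_up} up-shift and {len(schedule) - n_up} down-shift trials")
```

Keeping the direction in each trial record makes it straightforward to separate up-shift and down-shift trials later, as the analysis requires.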

Task 2: Picture naming

During the second task, participants were presented with a series of images including 40 colored abstract pictures and 80 colored drawings of objects that depicted high-frequency nouns as characterized by Francis and Kucera (1982). The images were selected from a computer graphics database (Art Explosion; Nova Development, 1995–2001). The abstract images were included to control for lower level visual processes in other contrasts which were not included in the current study. Each image was presented for eight seconds. Participants were instructed to name the pictures of objects and remain silent for the abstract pictures. During this task, no external alterations of the participants’ auditory feedback took place. Instead, the intent was to utilize this task to see how responses to errors occurred naturally in the context of speech rather than a simple vowel prolongation. The total task duration was approximately 16 minutes.

Data Acquisition

Participants wore insert earphones (Etymotic ER1-14A) for the AAF task, and speech responses for both tasks were picked up by a head-mounted AKG microphone (Model C520), amplified using a Motu Ultralite-MK3, and recorded at a 44.1 kHz sampling rate on a laboratory computer. The Max program (Cycling 74, v.5.0) controlled the timing of the visual cues for both tasks and controlled the timing, magnitude, and direction of the pitch-shift stimuli. The Max program also generated TTL pulses to mark the onset of vocalization and pitch-shift stimuli in each trial. For the picture naming task, TTL pulses marked the onset of picture display and the onset of speech. Participants were seated in a comfortable armchair in the sound booth at a desk with a keyboard and a monitor, which was used to display the stimuli. Electroencephalography (EEG) signals were also collected from all participants, but those data were not included in the present study.

Data pre-processing and scoring

Task 1: Vocal compensation in response to AAF

Data were pre-processed by extracting the pitch frequency of the recorded speech signal in Praat (Boersma, 2001) using an autocorrelation method and exporting those data to custom MATLAB code. There, the frequencies were segmented into epochs ranging from 100 ms before to 500 ms after the onset of the pitch-shift stimuli and were converted from Hertz to cents using the following formula: Vocal Compensation [cents] = 1200 × log2(F/F_Baseline) (Behroozmand et al., 2018). F was the post-stimulus pitch frequency and F_Baseline was the baseline pitch frequency from −100 to 0 ms pre-stimulus. Artifactual responses resulting from large magnitude voluntary pitch modulations were rejected by removing trials in which speech responses exceeded ±500 cents. The remaining extracted pitch contours were averaged for each individual across trials for upward shifts and downward shifts separately. Peak latency was identified visually as the largest magnitude peak after the first major inflection (i.e. upward turn for a down shift or downward turn for an up shift) on the pitch contour. That point was marked on the curve and latency from the onset of vocalization was recorded (this was equivalent to the value on the x-axis). Speech response magnitude was extracted at each individual’s peak latency for the up and down shifts. Pitch contours were then averaged across participants to obtain grand averages for the participants with aphasia versus the control participants separately. Both latency and magnitude were included as variables in the analyses detailed below since they had the potential to provide different information about the compensation response. A disparity in latencies between groups could reflect a difference in identification or recognition of the pitch perturbation, whereas a disparity in magnitude might indicate a difference in how the corrective response is executed or calibrated once identification of the perturbation has already taken place.
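The conversion, rejection, and averaging steps described above can be sketched in a few lines of Python. This is an illustrative sketch rather than the custom MATLAB code used in the study. The function names are hypothetical, and taking the baseline as the mean pitch over the −100 to 0 ms window is an assumption, since the text does not state how the baseline window was summarized.

```python
import math


def hz_to_cents(f_hz, f_baseline_hz):
    """Vocal compensation in cents: 1200 * log2(F / F_Baseline)."""
    return 1200.0 * math.log2(f_hz / f_baseline_hz)


def epoch_to_cents(times_ms, f0_hz):
    """Convert one epoch to cents relative to its pre-stimulus baseline.

    Assumption: the baseline is the mean f0 over samples with -100 <= t < 0 ms.
    """
    baseline = [f for t, f in zip(times_ms, f0_hz) if -100 <= t < 0]
    f_base = sum(baseline) / len(baseline)
    return [hz_to_cents(f, f_base) for f in f0_hz]


def keep_trial(cents, limit=500.0):
    """Reject trials whose response exceeds +/-500 cents at any sample."""
    return all(abs(c) <= limit for c in cents)


def average_contours(contours):
    """Average equal-length pitch contours sample by sample across trials."""
    return [sum(vals) / len(vals) for vals in zip(*contours)]
```

For example, a post-stimulus pitch of 202 Hz against a 200 Hz baseline gives `hz_to_cents(202, 200)`, which is about 17 cents, the same order of magnitude as the group means reported in the Results.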

Task 2: Self-correction during picture naming

To investigate how varying degrees of sensorimotor impairment manifest in spoken language production, performance on a picture naming task was also examined. Behavioral measures of speech self-correction were extracted from the object picture naming task conducted during EEG data collection. On that task, each participant’s responses were scored offline as either correct, successful self-correction, unsuccessful self-correction, or uncorrected error. A production was scored as correct if the participant produced the target word on the first attempt. Incorrect productions were those where only a single, incorrect naming attempt was made or if the participant did not respond at all. Self-corrections were scored as successful if the participant was initially incorrect but was then able to produce the target within the eight second time window for each stimulus. Self-corrections were scored as unsuccessful if the participant made multiple naming attempts that were incorrect or if the participant initially produced the target word and then corrected to a non-target production. Note that most error types including phonological paraphasias were not considered correct productions and therefore fell into one of the other three categories. However, articulatory errors such as sound distortions were coded as correct productions. Trials during which a participant made no attempt to name the item were labeled as such (no response). Note that the control data were not used for this analysis because no errors were made in that group.

A number of metrics were derived from the scoring method used for the picture naming task including total percentage of self-corrections (total self-correct = number of self-correction attempts out of total naming errors), total percentage of successful self-corrections (total success = number of successful self-correction attempts out of total naming errors), total percentage of unsuccessful self-corrections (total no success = number of unsuccessful self-correction attempts out of total naming errors), and proportion of successful self-corrections (propsuccess = proportion of successful self-corrections out of total self-correction attempts). These metrics were chosen because it was important to identify not only when self-corrections were attempted, but also to identify whether they were successful or not. The total percentage of self-corrections provided a rough estimate of how often errors were identified with a subsequent attempt at correction. The other three metrics provided information about how often a correction was successfully executed given all opportunities (percentage of successful/unsuccessful corrections) as well as when examining only attempted corrections (proportion of successful self-corrections).
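As a worked illustration of these four metrics, the sketch below computes them from per-participant response counts. The function name is hypothetical, and one point is an assumption: "total naming errors" is taken here to be the sum of successful self-corrections, unsuccessful self-corrections, incorrect responses, and non-responses. The text does not spell out this denominator, so the values should be read as illustrative.

```python
def self_correction_metrics(sc_success, sc_unsuccess, incorrect, no_response):
    """Compute the four self-correction metrics from response counts."""
    attempts = sc_success + sc_unsuccess
    # Assumption: every non-correct trial counts as a naming error.
    errors = attempts + incorrect + no_response

    def ratio(numerator, denominator):
        # Undefined when a participant made no errors (or no attempts).
        return numerator / denominator if denominator else None

    return {
        "total_self_correct": ratio(attempts, errors),
        "total_success": ratio(sc_success, errors),
        "total_no_success": ratio(sc_unsuccess, errors),
        "propsuccess": ratio(sc_success, attempts),
    }


# Hypothetical counts: 12 successful and 4 unsuccessful self-corrections,
# 5 uncorrected errors, and no non-responses.
metrics = self_correction_metrics(12, 4, 5, 0)
```

With these counts, 16 of 21 errors were followed by a correction attempt (about 76%), and 12 of the 16 attempts succeeded (a proportion of .75). Returning `None` when a denominator is zero keeps participants with no errors or no attempts from producing a division error.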

Imaging data

Each of the participants with aphasia had taken part in a larger study in which magnetic resonance imaging (MRI) scans were collected. T1/T2 structural brain images were collected using a 3T Siemens Trio MRI system. Brain lesions were drawn on T2 MRI images and then quantified as binary lesion maps (lesion versus no lesion). The structural images were normalized to standard MNI space and the same transformation matrix was also applied to the lesion. The analysis was restricted to voxels in regions involving the left temporo-parietal junction as well as Broca’s region, which was selected as a control region. The regions selected were based on the AALCAT atlas parcellation. This atlas combines two well-known atlases: the AAL grey-matter atlas by Tzourio-Mazoyer and colleagues (2001), containing 116 regions of interest (ROIs), and the atlas of white matter tracts by Catani and Thiebaut de Schotten (2008), containing 34 areas. The two atlases were superimposed, and the resulting parcellation contained both grey- and white-matter ROIs. In cases of spatial overlap between the atlases, we used the labels from the white-matter atlas. The regions used in the current analysis were inferior parietal cortex, supramarginal gyrus, angular gyrus, and superior temporal gyrus, as well as the pars opercularis and pars triangularis regions of the inferior frontal cortex. As area Spt is not an ROI in the atlas, regions forming and adjacent to the temporal-parietal junction were included to capture that area. Additionally, pars opercularis and pars triangularis were included as control regions. These regions were identified for each individual by overlaying the AALCAT atlas onto their normalized scan.

Statistical Analyses

Prior to addressing our main research questions, we first conducted preliminary analyses to ensure that sex and education did not impact our dependent variables, as our sample was not matched on those dimensions. To do so, we first conducted two independent samples t-tests to compare vocal compensation magnitude and latency between male and female participants. Pearson correlations were also calculated to determine whether there was a relationship between education and vocal compensation response.

Task 1: Vocal compensation in response to AAF

For the first task, we planned to examine how people with aphasia respond to AAF as compared to neurologically healthy adults and hoped to replicate the behavioral results of the Behroozmand et al. (2018) study but with a larger sample. We relied on means, standard deviations, and standard scores to describe the center and spread of the data, and then utilized a two-way ANOVA and a two-way ANCOVA to determine whether the raw differences we uncovered were statistically significant. The two-way ANOVA included main factors of group and stimulus direction to identify differences in vocal response timing. The two-way ANCOVA also included main factors of group and stimulus direction to identify differences in vocal response magnitude. However, peak latency was included as a covariate in the model to account for any differences in timing of the vocal response. Generally, we expected to find reduced vocal compensation in the aphasia group as compared to the control group.

Task 2: Self-correction during picture naming

We also aimed to examine whether vocal compensation in response to AAF during vowel prolongation was related to error correction during picture naming. We hoped that this analysis would indicate whether there was a relationship between AAF response and language production at a higher level and hypothesized that smaller magnitude vocal compensation would be associated with a larger number of unsuccessful self-corrections during picture naming. We conducted a correlation analysis to examine potential relationships between picture naming scores and speech motor correction responses to AAF for the aphasia group. Spearman’s rho was calculated because participant responses were not normally distributed. Additionally, a Bonferroni correction was applied to account for multiple comparisons.
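The Bonferroni correction mentioned above simply divides the nominal alpha level by the number of comparisons. The sketch below illustrates the arithmetic. The count of 20 comparisons is an assumption (four self-correction measures crossed with five correlates: peak time and peak magnitude for each shift direction, plus lesion size); it is not stated in this section, and the function names are hypothetical.

```python
def bonferroni_alpha(alpha, n_comparisons):
    """Per-comparison significance threshold under Bonferroni correction."""
    return alpha / n_comparisons


def flag_significant(p_values, alpha=0.05):
    """Flag each p-value that survives correction for len(p_values) tests."""
    threshold = bonferroni_alpha(alpha, len(p_values))
    return [p <= threshold for p in p_values]


# Assumed family size: 4 self-correction measures x 5 correlates = 20 tests.
threshold = bonferroni_alpha(0.05, 20)
```

Under that assumption the per-comparison threshold is .0025, so a correlation with p = .002 would survive correction whereas one with p = .003 would not.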

Lesion-symptom mapping analysis

We also planned to examine which regions of brain damage were associated with impaired sensorimotor integration and self-correcting behavior. Because left Spt has been previously associated with sensorimotor integration, we hypothesized that damage to posterior parieto-temporal regions would be associated with smaller magnitude response to AAF and fewer successful self-corrections on the naming task whereas for those with spared Spt, we expected the opposite. We took a region of interest (ROI) approach to lesion-symptom mapping, and the proportion of damage in each region was calculated and used as an independent predictor of impaired compensation in AAF and impaired self-corrections during picture naming. Lesion size was included in the analysis as a co-factor and permutation thresholding was applied to control the family-wise error rate. To increase statistical power, only regions where at least 5 participants had damage were included in the LSM analysis. For each of the selected ROIs, this criterion was met.
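The two ROI-level computations described above (proportion of damage per region and the minimum-participant criterion) can be sketched as follows. This is an illustrative sketch, not the lesion-symptom mapping software used in the study. It assumes that lesion maps and atlas labels are available as flat, voxel-aligned sequences, and that a region counts as damaged in a participant whenever its damage proportion is above zero. Both are assumptions, as are the function names.

```python
def roi_damage_proportion(lesion, atlas, roi_label):
    """Fraction of an ROI's voxels that fall inside the binary lesion map.

    lesion: sequence of 0/1 values; atlas: sequence of integer ROI labels,
    voxel-aligned with the lesion map.
    """
    roi_voxels = [l for l, a in zip(lesion, atlas) if a == roi_label]
    if not roi_voxels:
        return 0.0
    return sum(roi_voxels) / len(roi_voxels)


def rois_meeting_criterion(proportions_by_participant, min_participants=5):
    """Return ROIs damaged (proportion > 0) in at least min_participants.

    proportions_by_participant: one dict per participant, ROI name -> proportion.
    """
    counts = {}
    for proportions in proportions_by_participant:
        for roi, proportion in proportions.items():
            if proportion > 0:
                counts[roi] = counts.get(roi, 0) + 1
    return sorted(roi for roi, n in counts.items() if n >= min_participants)
```

The per-ROI proportions would then serve as predictors of each behavioral measure, with lesion size entered alongside them as described above.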

Results

Because our groups were not matched on sex or education, we first determined whether either variable was related to the vocal response to AAF. Independent samples t-tests indicated that there was no difference between males and females in magnitude (t(42) = .265, p = .484) or latency (t(42) = −.832, p = .209) of vocal response. Additionally, there was no significant correlation between education and magnitude (r = −.122, p = .431) or latency (r = −.003, p = .984) of vocal response.

Task 1: Vocal compensation in response to AAF

Firstly, we aimed to uncover how people with aphasia respond to AAF while vocalizing and started by conducting descriptive analyses of the data to characterize how the groups responded to the AAF stimuli. Mean latencies for vocal compensation (i.e. the average time it took to shift one’s pitch in the direction opposite to the perceived change) are shown for each group in Table 3. The two-way ANOVA with main factors of stimulus direction and group identified significant main effects for both factors (shift direction: F(1,84) = 7.08, p = .009, partial η2 = .08; group: F(1,84) = 13.05, p = .001, partial η2 = .13; see Table 2). This indicated that for both groups, responses to the down shift occurred more quickly on average than responses to the up shift. The main effect of group indicated that on average, individuals with aphasia took longer to respond to shifts in either direction when compared to controls.

Table 2.

Results of the two-way ANOVA conducted on vocal compensation latency.

Tests of Between-Subjects Effects. Dependent Variable: peaktime

| Source | Type III Sum of Squares | df | Mean Square | F | Sig. | Partial Eta Squared |
|---|---|---|---|---|---|---|
| Corrected Model | .110a | 3 | .037 | 6.719 | .000 | .194 |
| Intercept | 7.556 | 1 | 7.556 | 1390.515 | .000 | .943 |
| stimdirection | .038 | 1 | .038 | 7.082 | .009 | .078 |
| group | .071 | 1 | .071 | 13.045 | .001 | .134 |
| stimdirection * group | .001 | 1 | .001 | .170 | .681 | .002 |
| Error | .456 | 84 | .005 | | | |
| Total | 8.320 | 88 | | | | |
| Corrected Total | .566 | 87 | | | | |

a. R Squared = .194 (Adjusted R Squared = .165)

peaktime = timing of the peak vocal compensation, stimdirection = direction of the pitch shift (up or down).
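For readers who want to check the effect sizes in Table 2, partial eta squared is the effect sum of squares divided by the sum of the effect and error sums of squares. The sketch below applies that standard formula to the rounded sums of squares printed in the table. The function name is hypothetical, and because the inputs are rounded to three decimals the results match the reported values only approximately.

```python
def partial_eta_squared(ss_effect, ss_error):
    """Partial eta squared = SS_effect / (SS_effect + SS_error)."""
    return ss_effect / (ss_effect + ss_error)


SS_ERROR = 0.456  # error sum of squares as printed in Table 2

# Effect sums of squares as printed in Table 2 (rounded to three decimals).
effects = {
    "stimdirection": 0.038,
    "group": 0.071,
    "stimdirection * group": 0.001,
}

effect_sizes = {
    name: partial_eta_squared(ss, SS_ERROR) for name, ss in effects.items()
}
```

The computed values are close to the tabled .078, .134, and .002; the small discrepancy for stimulus direction reflects rounding of the printed sums of squares.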

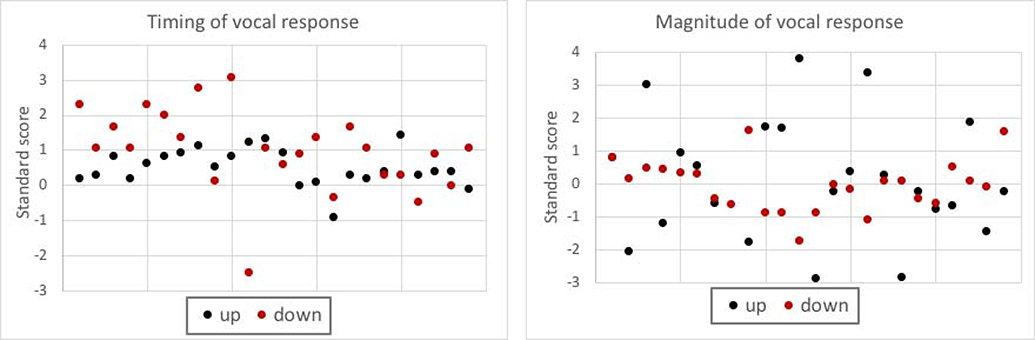

Standard scores for the aphasia group were calculated based on the control mean and standard deviation and plotted in Figure 1. Although most participants responded more slowly than the controls, approximately 88% performed within one standard deviation of the control group in at least one shift direction. However, there was little consistency in performance between shift directions. In other words, an individual who was two standard deviations slower than controls in response to the up shift may have been even slower in response to the down shift or may have performed more quickly in that condition.
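The standard scores used here express each aphasia participant's value in units of the control group's standard deviation. A minimal sketch of that calculation follows; the function names are hypothetical, and the example applies the formula to group-level latency means (aphasia up-shift mean of 341 ms against a control mean of 290 ms and standard deviation of 98 ms) purely for illustration, since individual values are not listed in the text.

```python
def standard_score(value, control_mean, control_sd):
    """Standard score relative to the control group's mean and SD."""
    return (value - control_mean) / control_sd


def within_one_sd(value, control_mean, control_sd):
    """True if the value lies within one control SD of the control mean."""
    return abs(standard_score(value, control_mean, control_sd)) <= 1.0


# Illustration only: aphasia group mean latency for the up shift (341 ms)
# scored against the control mean (290 ms) and SD (98 ms).
z_up = standard_score(341, 290, 98)
```

Here `z_up` is about 0.52, meaning the aphasia group mean for the up shift sits roughly half a control standard deviation above (slower than) the control mean.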

Figure 1.

Scatterplots of vocal responses to AAF in the aphasia group. Timing and magnitude were converted to standard scores using the respective means and standard deviations of the control group. Responses to the up shift and down shift are reported separately. Note that data points are ordered by participant number, so vertical pairs of dots are the responses from a single participant.

Means and standard deviations, together with adjusted means (which account for differences in speech response latency) and standard errors, are reported in Table 3. Adjusted means were calculated at the mean vocal response latency: the average timing of the vocal compensation response across groups was approximately 297 milliseconds, so the mean magnitude of response was estimated for each group at that latency. In our two-way analysis of covariance, group (aphasia vs. control) and stimulus direction (up vs. down) were included as main factors and timing of the vocal response was included as a covariate because it was significantly different between the groups. The goal was to determine whether there was a statistically significant difference in vocal compensation magnitude between groups after accounting for differences in timing of the vocal response. The results of the analysis were not significant (full model: F(4,83) = .446, p = .775; see Table 4). The ANCOVA indicated that after controlling for differences in the timing of the speech response, there were no significant differences in speech compensation magnitude between groups or stimulus directions.

Table 3.

Summary statistics for latency (in milliseconds (ms)) and magnitude (in cents) of vocal response for both groups and shift directions. Adjusted means represent the mean magnitude of each group at 296.8 ms to account for differences in timing of the peak vocal response.

| Latency | Aphasia M | Aphasia SD | Control M | Control SD |
|---|---|---|---|---|
| Up | 341 ms | 51 | 290 ms | 98 |
| Down | 305 ms | 76 | 242 ms | 65 |

| Magnitude | Aphasia M | Aphasia SD | Aphasia Madj | Aphasia SE | Control M | Control SD | Control Madj | Control SE |
|---|---|---|---|---|---|---|---|---|
| Up | 21.28 | 15.49 | 20.68 | 3.35 | 20.88 | 7.42 | 20.97 | 3.50 |
| Down | 24.60 | 16.02 | 24.49 | 3.19 | 25.55 | 20.36 | 26.30 | 3.72 |

M = mean, SD = standard deviation, Madj = adjusted means, SE = standard error.

Table 4.

Results of the two-way ANCOVA conducted on vocal compensation magnitude.

Tests of Between-Subjects Effects. Dependent Variable: peakcomp

| Source | Type III Sum of Squares | df | Mean Square | F | Sig. | Partial Eta Squared |
|---|---|---|---|---|---|---|
| Corrected Model | 435.090a | 4 | 108.773 | .446 | .775 | .021 |
| Intercept | 1816.050 | 1 | 1816.050 | 7.441 | .008 | .082 |
| peaktime | 82.812 | 1 | 82.812 | .339 | .562 | .004 |
| stimdirection | 418.776 | 1 | 418.776 | 1.716 | .194 | .020 |
| group | 20.582 | 1 | 20.582 | .084 | .772 | .001 |
| stimdirection * group | 12.720 | 1 | 12.720 | .052 | .820 | .001 |
| Error | 20258.061 | 83 | 244.073 | | | |
| Total | 67500.059 | 88 | | | | |
| Corrected Total | 20693.151 | 87 | | | | |

a. R Squared = .021 (Adjusted R Squared = −.026)

peakcomp = magnitude of the peak vocal compensation, peaktime = latency of the peak vocal compensation, stimdirection = direction of the pitch shift (up or down).
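The negative adjusted R squared reported for this model can look surprising, so the sketch below reproduces the model-level statistics from the values printed in Table 4 using the standard definitions. The function names are hypothetical, and the number of observations (88) is taken from the Total row of the table.

```python
def r_squared(ss_model, ss_corrected_total):
    """Proportion of variance accounted for by the corrected model."""
    return ss_model / ss_corrected_total


def adjusted_r_squared(r2, n_obs, n_predictors):
    """R squared penalized for the number of model terms."""
    return 1.0 - (1.0 - r2) * (n_obs - 1) / (n_obs - n_predictors - 1)


# Values as printed in Table 4.
r2 = r_squared(435.090, 20693.151)
adj_r2 = adjusted_r_squared(r2, n_obs=88, n_predictors=4)
f_model = 108.773 / 244.073  # mean square (model) / mean square (error)
```

Rounded to three decimals, these give R squared = .021, adjusted R squared = −.026, and F = .446. The adjusted value falls below zero because the penalty for four model terms exceeds the small amount of variance the model explains.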

While both groups demonstrated a slightly larger magnitude response for the down shift as compared to the up shift, this difference was not statistically significant (F(1,83) = 1.72, p = .19, partial η2 = .02). In other words, although corrections were numerically larger when individuals perceived their pitch going down than when they perceived it going up, this pattern was not statistically reliable. The aphasia group compensated to a similar degree to the control group in both conditions. In terms of variation in performance as measured by standard deviations, while we expected there to be more variation in the aphasia group, that was not true in all cases. In response to the down shift, there was less variation in the aphasia group. However, for the up shift there was more variation in the aphasia group.

Standard scores for the aphasia group were calculated based on the control mean and are presented in Figure 1. Though approximately 88% of individuals performed within one standard deviation of the control mean in at least one shift direction, the dispersion of the data appears greater than for the timing of the vocal response. Again, there was little correspondence between responses to the different shift directions.

In light of the lack of significant results in the ANCOVA conducted on vocal compensation magnitude, a separate post-hoc analysis was done with the aim of discovering whether vocal compensation response varied based upon aphasia type. Since there was only one individual with global aphasia, those data points were removed for the purposes of this analysis. This time, a multivariate ANOVA (MANOVA) was conducted with aphasia type and stimulus direction as main factors and timing and magnitude of vocal compensation as dependent variables. In this case, no covariate was included since the timing of vocal compensation was itself a dependent variable in the model. Results indicated that there were no significant differences based on aphasia type (anomic, Broca’s, and conduction) or stimulus direction in magnitude or timing of vocal compensation response (see Table 5).

Table 5.

Results of the MANOVA examining aphasia subtypes.

Tests of Between-Subjects Effects

| Source | Dependent Variable | Type III Sum of Squares | df | Mean Square | F | Sig. | Partial Eta Squared |
|---|---|---|---|---|---|---|---|
| Corrected Model | peaktime | .027a | 5 | .005 | 1.231 | .313 | .133 |
| | peakcomp | 1020.657b | 5 | 204.131 | .781 | .569 | .089 |
| Intercept | peaktime | 4.109 | 1 | 4.109 | 932.888 | .000 | .959 |
| | peakcomp | 18922.950 | 1 | 18922.950 | 72.407 | .000 | .644 |
| aphasia_type | peaktime | .007 | 2 | .004 | .826 | .445 | .040 |
| | peakcomp | 102.633 | 2 | 51.317 | .196 | .823 | .010 |
| stimdirection | peaktime | .008 | 1 | .008 | 1.849 | .181 | .044 |
| | peakcomp | 14.853 | 1 | 14.853 | .057 | .813 | .001 |
| aphasia_type * stimdirection | peaktime | .007 | 2 | .004 | .819 | .448 | .039 |
| | peakcomp | 750.631 | 2 | 375.316 | 1.436 | .250 | .067 |
| Error | peaktime | .176 | 40 | .004 | | | |
| | peakcomp | 10453.712 | 40 | 261.343 | | | |
| Total | peaktime | 5.044 | 46 | | | | |
| | peakcomp | 35252.770 | 46 | | | | |
| Corrected Total | peaktime | .203 | 45 | | | | |
| | peakcomp | 11474.370 | 45 | | | | |

a. R Squared = .133 (Adjusted R Squared = .025)

b. R Squared = .089 (Adjusted R Squared = −.025)

peaktime = timing of the peak vocal compensation, peakcomp = magnitude of the peak vocal compensation, stimdirection = direction of the pitch shift (up or down)

Task 2: Self-correction during picture naming

Our second aim was to determine whether vocal compensation in response to AAF during vowel prolongation was related to spontaneous self-correction of errors during a picture naming task. We expected that individuals who showed less vocal compensation in response to AAF would also demonstrate fewer attempts at self-correction and have fewer instances of successful self-correction. Performance on the picture naming task is summarized for the participants with aphasia in Table 6 (note that all control participants correctly named all items). In a first step, a correlation analysis was conducted to reveal relationships between vocal compensation behavior (timing and magnitude) and self-correction behavior on the picture naming task, and those results are listed in Table 7. No correlations between the measures of self-correction and the measures of speech compensation were statistically significant. Additionally, while one of the four measures of self-correction was moderately correlated with lesion size (proportion of successful self-corrections: r(22) = −.60, p = .002), there was no linear correlation between the measures of speech compensation and lesion size.

Table 6.

Responses by type for participants with aphasia on the picture naming task. Both raw number of items (raw) and proportion of the total items (prop) are reported for each category.

| Participant | Aphasia Type | Correct (Raw) | Correct (Prop) | SC – S (Raw) | SC – S (Prop) | SC – U (Raw) | SC – U (Prop) | Incorrect (Raw) | Incorrect (Prop) | No Response (Raw) | No Response (Prop) |
|---|---|---|---|---|---|---|---|---|---|---|---|
| A1 | Broca’s | 26 | .33 | 20 | .25 | 30 | .38 | 4 | .05 | 0 | 0 |
| A2 | Anomic | 59 | .74 | 12 | .15 | 4 | .05 | 5 | .06 | 0 | 0 |
| A3 | Conduction | 1 | .01 | 0 | 0 | 77 | .96 | 0 | 0 | 2 | .03 |
| A4 | Broca’s | 57 | .71 | 7 | .09 | 4 | .05 | 11 | .14 | 1 | .01 |
| A5 | Conduction | 35 | .44 | 14 | .18 | 25 | .31 | 4 | .05 | 2 | .03 |
| A6 | Conduction | 59 | .74 | 15 | .19 | 2 | .03 | 3 | .04 | 1 | .01 |
| A7 | Anomic | 42 | .53 | 12 | .15 | 14 | .18 | 7 | .09 | 5 | .06 |
| A8 | Conduction | 18 | .23 | 5 | .06 | 18 | .23 | 23 | .29 | 16 | .20 |
| A9 | Broca’s | 27 | .34 | 15 | .19 | 18 | .23 | 13 | .16 | 7 | .09 |
| A10 | Broca’s | 7 | .09 | 0 | 0 | 0 | 0 | 11 | .14 | 62 | .78 |
| A11 | Broca’s | 25 | .31 | 4 | .05 | 13 | .16 | 14 | .18 | 24 | .30 |
| A12 | Broca’s | 27 | .34 | 2 | .03 | 11 | .14 | 33 | .41 | 7 | .09 |
| A13 | Anomic | 68 | .85 | 3 | .04 | 1 | .01 | 5 | .06 | 3 | .04 |
| A14 | Broca’s | 1 | .01 | 0 | 0 | 0 | 0 | 79 | .99 | 0 | 0 |
| A15 | Anomic | 66 | .83 | 3 | .04 | 4 | .05 | 5 | .06 | 2 | .03 |
| A16 | Conduction | 50 | .63 | 14 | .18 | 13 | .16 | 3 | .04 | 0 | 0 |
| A17 | Broca’s | 27 | .34 | 3 | .04 | 35 | .44 | 15 | .19 | 0 | 0 |
| A18 | Broca’s | 67 | .84 | 1 | .01 | 0 | 0 | 12 | .15 | 0 | 0 |
| A19 | Broca’s | 70 | .88 | 1 | .01 | 2 | .03 | 7 | .09 | 0 | 0 |
| A20 | Anomic | 73 | .91 | 4 | .05 | 1 | .01 | 2 | .03 | 0 | 0 |
| A21 | Broca’s | 60 | .75 | 6 | .08 | 2 | .03 | 12 | .15 | 0 | 0 |
| A22 | Broca’s | 39 | .49 | 5 | .06 | 5 | .06 | 31 | .39 | 0 | 0 |
| A23 | Global | 8 | .10 | 0 | 0 | 3 | .04 | 69 | .86 | 0 | 0 |
| A24 | Broca’s | 1 | .01 | 0 | 0 | 2 | .03 | 77 | .96 | 0 | 0 |

SC – S = successful self-correction, SC – U = unsuccessful self-correction.

Table 7.

Correlation results for the relationship between vocal compensation behavior, measures of self-correction during picture naming, and lesion size in participants with aphasia.

| Measure | Statistic | Up shift: Peak time | Up shift: Peak magnitude | Down shift: Peak time | Down shift: Peak magnitude | Lesion Size |
|---|---|---|---|---|---|---|
| total self-correct | Spearman correlation | .088 | −.186 | .281 | .200 | −.285 |
| total success | Spearman correlation | .029 | .153 | −.025 | .141 | −.580 |
| total no success | Spearman correlation | .121 | −.308 | .230 | .087 | −.034 |
| propsuccess | Spearman correlation | .016 | .330 | −.209 | .120 | −.602* |

*Correlation is significant at the Bonferroni-corrected p-value of .0025. propsuccess = proportion of successful self-corrections out of total self-correction attempts.

Another post-hoc analysis was conducted to determine if there were differences in self-correction between the aphasia types. Again, the individual with global aphasia was excluded from this analysis, and a MANOVA was conducted with the self-correction metrics as the dependent variables and aphasia type as the independent variable. Significant differences were uncovered in total self-corrections (F(2,20) = 6.424, p = .007) and total successful self-corrections (F(2,20) = 4.152, p = .031) (see Table 8). Post hoc pairwise comparisons revealed that individuals with Broca’s aphasia produced significantly fewer self-corrections overall than those with conduction aphasia (mean difference = .47, p = .008), although there was no statistical difference between anomic and conduction aphasia. Also, there was a trend indicating that individuals with anomic aphasia may produce more successful self-corrections than those with Broca’s aphasia (mean difference = .252, p = .050).

Table 8.

Results of the MANOVA conducted on the self-correction measures.

Tests of Between-Subjects Effects

| Source | Dependent Variable | Type III Sum of Squares | df | Mean Square | F | Sig. | Partial Eta Squared |
|---|---|---|---|---|---|---|---|
| Corrected Model | totSC | .885a | 2 | .442 | 6.424 | .007 | .391 |
| | totsuccess | .278b | 2 | .139 | 4.152 | .031 | .293 |
| | totnosuccess | .275c | 2 | .137 | 2.709 | .091 | .213 |
| | propsuccess | .256d | 2 | .128 | 1.553 | .236 | .134 |
| Intercept | totSC | 6.105 | 1 | 6.105 | 88.653 | .000 | .816 |
| | totsuccess | 1.450 | 1 | 1.450 | 43.273 | .000 | .684 |
| | totnosuccess | 1.645 | 1 | 1.645 | 32.433 | .000 | .619 |
| | propsuccess | 3.901 | 1 | 3.901 | 47.324 | .000 | .703 |
| group | totSC | .885 | 2 | .442 | 6.424 | .007 | .391 |
| | totsuccess | .278 | 2 | .139 | 4.152 | .031 | .293 |
| | totnosuccess | .275 | 2 | .137 | 2.709 | .091 | .213 |
| | propsuccess | .256 | 2 | .128 | 1.553 | .236 | .134 |
| Error | totSC | 1.377 | 20 | .069 | | | |
| | totsuccess | .670 | 20 | .034 | | | |
| | totnosuccess | 1.014 | 20 | .051 | | | |
| | propsuccess | 1.649 | 20 | .082 | | | |
| Total | totSC | 7.603 | 23 | | | | |
| | totsuccess | 2.133 | 23 | | | | |
| | totnosuccess | 2.876 | 23 | | | | |
| | propsuccess | 5.941 | 23 | | | | |
| Corrected Total | totSC | 2.262 | 22 | | | | |
| | totsuccess | .949 | 22 | | | | |
| | totnosuccess | 1.289 | 22 | | | | |
| | propsuccess | 1.905 | 22 | | | | |

a. R Squared = .391 (Adjusted R Squared = .330)

b. R Squared = .293 (Adjusted R Squared = .223)

c. R Squared = .213 (Adjusted R Squared = .134)

d. R Squared = .134 (Adjusted R Squared = .048)

totSC = total self-corrections out of total errors, totsuccess = total successful self-corrections out of total errors, totnosuccess = total unsuccessful self-corrections, propsuccess = proportion of successful self-corrections out of total self-corrections

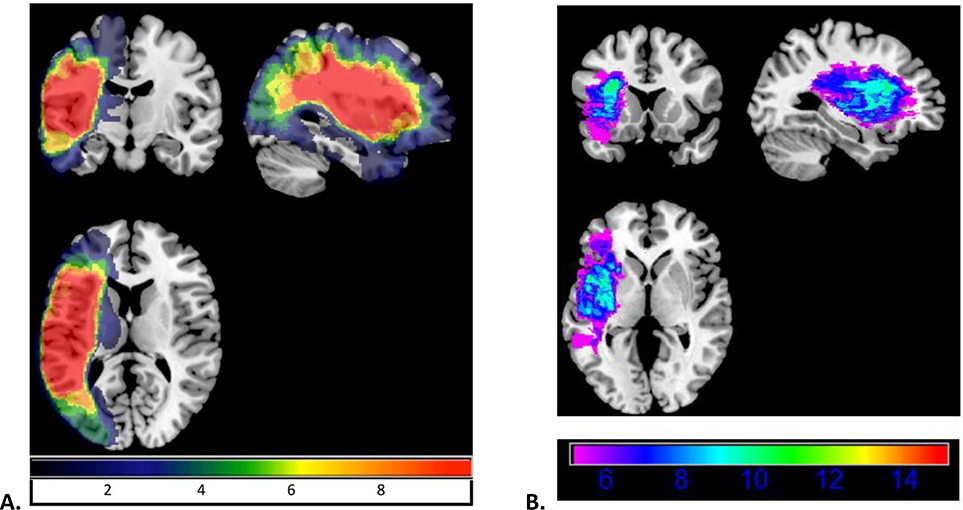

As a secondary planned analysis, we hoped to discover whether patterns of neural damage could account for differences in vocal compensation in response to AAF or self-correction on the naming task using an ROI-based lesion-symptom mapping analysis. Here, we expected to find that damage to left area Spt would be associated with diminished vocal compensation and fewer attempts at self-correction during naming. The same measures from the correlation analysis were also entered into the lesion-symptom mapping analysis as dependent variables. Figure 2 shows the overlap of damaged regions across participants. No regions reached significance at the .05 level for any of the behavioral measures, indicating that damage to the regions of interest was not associated with any of the behavioral measures.

Figure 2.

Lesion overlap map for all participants with aphasia. Panel A shows the overlap in all regions. Panel B only shows the regions in which at least 5 participants (approximately 20%) had damage. In both panels, areas highlighted in warmer colors were damaged in a greater number of participants.

Discussion

The goal of the current study was to examine sensorimotor integration impairment in aphasia and its behavioral correlates. More specifically, the aim was to study the extent to which sensorimotor deficits during speech occur in a heterogeneous sample of people with aphasia by examining behavioral correlates of the impairment as well as exploring how it may affect language function beyond speech repetition. Our analysis showed that individuals with aphasia differ from controls in the speech compensation response to AAF. We found that individuals with aphasia tend to take longer to compensate in response to pitch shifts in their auditory feedback compared with controls. However, when the difference in timing of responses was controlled for, our analysis showed that individuals with aphasia tend to compensate to a similar response magnitude as controls. For both groups, the response to the down shift was quicker than the response to the up shift and numerically, though not significantly, larger in magnitude. Additionally, there were only small differences between groups when it came to variability in magnitude of speech response.

Very few significant results were revealed in the analyses that aimed to connect speech compensation behavior with error detection and correction during naming. A correlation analysis failed to reveal a significant relationship between speech compensation latency or magnitude and self-correction during a naming task. Also, an ROI-based lesion-symptom mapping analysis did not reveal any overlap of regions predictive of speech compensation and self-correction.

Previous studies have shown that when an unexpected alteration in some dimension of the speech output (e.g. perturbation of pitch or formant frequency) occurs, participants generate an online compensatory response to correct for the perceived error (Behroozmand et al., 2015; Burnett, Freedland, & Larson, 1998; Houde & Jordan, 1998; Keough, Hawco, & Jones, 2013; Liu et al., 2011; Villacorta, Perkell, & Guenther, 2007). This response is automatic and unconscious and tends to occur in the direction opposite to the perceived change (Burnett et al., 1998; Keough et al., 2013). Our control sample consistently demonstrated this compensation response, and, as in the study by Liu and colleagues (2011), responses to the down shift were larger than responses to the up shift. This disparity in response based on shift direction also occurred in the aphasia group. However, individuals with aphasia had diminished compensation responses compared with controls, though that disparity was no longer present after accounting for the timing of the speech response. This differs from the findings of Behroozmand et al.’s (2018) study of 15 people with aphasia, likely because the current study included a larger sample and accounted for differences in the latency of the peak compensatory response. In the 2018 study, participants with aphasia and controls both produced vocal compensation in the direction opposite to the shift, but the peak magnitude of the response was diminished for the individuals with aphasia. Behroozmand et al. (2018) note that the peak occurred approximately 300 ms after the pitch shift and calculated those peaks between 250 and 350 ms. At the group level, the peaks in our sample occurred at a comparable time. However, a number of participants in the aphasia group showed delayed peak vocal compensation. After accounting for those differences in timing, we did not find a significant difference between groups in the magnitude of the response.
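The role of response timing in this comparison can be illustrated with a minimal sketch. It assumes a synthetic, Gaussian-shaped compensation trace and hypothetical helper names (`simulate_response`, `peak_in_window`); none of the values are data from this study, and the code is not the analysis pipeline used here. It only shows why a peak measured in a fixed 250 to 350 ms window can understate the magnitude of a response that peaks later.

```python
import math

def simulate_response(peak_latency_ms, peak_cents, width_ms=60.0, step_ms=5):
    """Hypothetical compensation trace: a Gaussian bump sampled from 0 to 600 ms."""
    times = list(range(0, 601, step_ms))
    trace = [
        peak_cents * math.exp(-((t - peak_latency_ms) ** 2) / (2 * width_ms ** 2))
        for t in times
    ]
    return times, trace

def peak_in_window(times, trace, start_ms, end_ms):
    """Return (latency_ms, magnitude) of the largest absolute deviation in the window."""
    candidates = [
        (abs(value), t, value)
        for t, value in zip(times, trace)
        if start_ms <= t <= end_ms
    ]
    _, latency, magnitude = max(candidates)
    return latency, magnitude

# Two made-up speakers with the same true peak magnitude but different latencies.
typical = simulate_response(peak_latency_ms=300, peak_cents=20.0)
delayed = simulate_response(peak_latency_ms=450, peak_cents=20.0)

# Fixed window: the delayed speaker's peak falls outside it and looks smaller.
fixed_typical = peak_in_window(*typical, 250, 350)
fixed_delayed = peak_in_window(*delayed, 250, 350)

# Whole-trace search: both speakers show the same peak magnitude.
free_typical = peak_in_window(*typical, 0, 600)
free_delayed = peak_in_window(*delayed, 0, 600)
```

In this toy case the fixed window recovers the full peak for the speaker who peaks at 300 ms but only the rising edge of the response for the speaker who peaks at 450 ms, whereas a search over the whole trace returns equal magnitudes for both.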

One notable aspect of these findings is the relative lack of variability, as measured by standard errors, in the speech compensation response once differences in timing were accounted for. Judged by standard error, variability within the aphasia group was largely comparable to variability within the control group, although this pattern was inconsistent when standard deviations were considered instead. In aphasia, performance typically must be viewed in light of many sources of variability, such as age, aphasia severity, lesion location, and lesion size. In this case, however, those factors appear to play a smaller role in behavioral performance.
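As a general point, standard error and standard deviation can lead to different impressions because the standard error scales the standard deviation by the square root of the group size. The sketch below uses made-up values and an assumed difference in group size purely for illustration; whether this explains the pattern in the present data is not established here.

```python
import math
import statistics

def sd_and_se(values):
    """Return (sample standard deviation, standard error of the mean)."""
    sd = statistics.stdev(values)
    se = sd / math.sqrt(len(values))
    return sd, se

# Hypothetical compensation magnitudes (cents); not data from this study.
small_group = [8, 12, 8, 12]      # n = 4, tightly clustered
large_group = [6, 14] * 8         # n = 16, more spread out

sd_small, se_small = sd_and_se(small_group)
sd_large, se_large = sd_and_se(large_group)

print(f"small group: SD = {sd_small:.2f}, SE = {se_small:.2f}")
print(f"large group: SD = {sd_large:.2f}, SE = {se_large:.2f}")
```

Here the larger group has a clearly larger standard deviation, yet its standard error is close to that of the smaller group, so the two measures would suggest different conclusions about relative variability.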

The correlation analysis we conducted failed to uncover a relationship between the measures of speech compensation and self-correcting behavior. The rationale was that individuals who have difficulty automatically and unconsciously detecting and correcting minor speech errors could also have difficulty detecting and correcting errors that originate at other levels of processing. If this were the case, it could provide evidence of a fundamental deficit in sensorimotor integration and an account that unifies behaviors probed in different ways. The present study provides no evidence in favor of that claim. Even so, it may still be worth examining this issue further, either to confirm the lack of a relationship or to elucidate the relationship using a different method. The lesion-symptom mapping analysis was also inconclusive. No regions survived correction for multiple comparisons for any of the behavioral measures at the .05 significance level or in an exploratory analysis at the .10 significance level. Considering the small sample size and the fact that many results in small samples are driven by lesion size, these results are not completely surprising. Again, further research will be needed to tease out these relationships, if they indeed exist.
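The logic of these two analyses can be sketched in a few lines. The example assumes a Pearson correlation and a Bonferroni correction, neither of which is specified in this section, and every number and region label is invented for illustration. It is not the procedure used in the study.

```python
import math

def pearson_r(x, y):
    """Pearson correlation coefficient for two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

def bonferroni_survivors(p_values, alpha):
    """Names whose p-value falls below alpha divided by the number of tests."""
    threshold = alpha / len(p_values)
    return [name for name, p in p_values.items() if p < threshold]

# Hypothetical per-participant values: peak latency (ms) and the
# proportion of naming errors that were self-corrected.
latency_ms = [280, 310, 350, 420, 460, 300]
self_correction_rate = [0.30, 0.10, 0.25, 0.15, 0.25, 0.20]
r = pearson_r(latency_ms, self_correction_rate)

# Hypothetical uncorrected p-values for four regions of interest.
roi_p = {"roi_a": 0.030, "roi_b": 0.045, "roi_c": 0.30, "roi_d": 0.60}
survivors_05 = bonferroni_survivors(roi_p, alpha=0.05)  # per-test threshold .0125
survivors_10 = bonferroni_survivors(roi_p, alpha=0.10)  # per-test threshold .025
```

With these invented numbers the correlation is near zero, and two regions that would pass an uncorrected .05 threshold fail to survive correction at either the .05 or the exploratory .10 level, which mirrors the kind of null outcome described above.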

Our results indicate that individuals with aphasia demonstrate a vocal compensation response to AAF that would suggest a preserved sensorimotor integration system in this population at least in terms of the magnitude of the response. However, it must be determined whether the slowed timing of the compensation response is a direct result of a deficiency in sensorimotor integration or, rather, if other factors such as slowed processing speed could be an underlying mechanism. In other words, a slowed response may result from delayed detection of the pitch shift, or it may result from a longer time spent developing the corrective motor plan. Regardless, it seems that given enough time, people with aphasia can compensate to a level comparable to that of neurologically healthy controls.

If sensorimotor systems subserve both working memory and comprehension, as posited by Buchsbaum and colleagues (2011), these results would be logical considering the composition of aphasia types included in this study. The sample consisted mostly of individuals with anomic, conduction, and Broca’s aphasias, all of whom would be expected to have relatively preserved comprehension abilities. However, this makes the unique combination of deficits present in conduction aphasia difficult to reconcile with a potential impairment in sensorimotor integration caused by damage to Spt. It could be that while Spt is involved in sensorimotor integration, certain patterns of damage involving the region are not sufficient to impair behavior or perhaps there are multiple sensorimotor systems at play here. It would also be of interest to determine whether this preserved magnitude of response also occurs for those with Wernicke’s and global aphasia, particularly considering the more posterior lesion sites associated with Wernicke’s aphasia and the relationship between posterior superior temporal gyrus/sulcus and comprehension and auditory short-term memory abilities (Leff et al., 2009).

The results also underscore the fact that aphasia is primarily a disorder of language, so the finding that our measurements taken at a much lower level of processing were largely comparable to those of control participants (at least in one dimension) is reasonable. We might expect that individuals with a co-occurring speech disorder such as apraxia of speech may perform differently, and that presents an opportunity for continued investigation into the topic. While we were unable to correlate performance on the AAF task to self-correction in picture naming, there are several other reasons a self-correction may occur including errors at the semantic or phonological levels of processing which, of course, would be expected in this population. The subtle vocal response to AAF also represents a much higher level of granularity than is available when measuring self-corrections. Our self-correction measures do provide a proxy of identification and correction of external errors; however, we are unable to account for corrections that occur prior to the articulation of the word.

There are several limiting factors associated with this study that should be mentioned. Sample size and sample composition appeared to be limitations, particularly for the lesion-symptom mapping analysis. The sample used here is smaller than what is typically used to obtain robust lesion-symptom mapping results, particularly if lesion size is included as a regressor (as was done here). The distribution of aphasia types within the sample was also unequal, which may have created some biases in lesion distribution. With a majority of participants with Broca’s and anomic aphasia, we would expect to see fewer lesions in left area Spt, a particular region of interest for sensorimotor integration. Future studies should aim to achieve a better balance in lesion distribution and aphasia type to guard against that type of issue. It is also possible that the scoring mechanism used to identify self-correction during the naming task was not sufficiently sensitive or that the use of only a single, relatively short naming task was insufficient to detect more subtle relationships. For example, in a study investigating self-monitoring of errors in aphasia, responses on a 615-item naming test were scored by the first response for error type but also scored for verbalizations indicating detection or repair (Schwartz, Middleton, Brecher, Gagliardi, & Garvey, 2016). This allowed for the examination of self-correction grouped by error type. Future studies should consider using longer naming tests to allow more opportunities for self-correction as well as different ways of quantifying successful and unsuccessful self-correcting behavior.

With the current study taking only a single step in examining sensorimotor deficits in speech production in aphasia, there are multiple avenues for future research. It would be useful to determine to what extent these shifts are detected overtly and how well that corresponds to compensation behavior (if at all). The differences we found in the timing of the vocal response could originate from slower initial detection of the shift, or people with aphasia may take longer to develop or select a corrective motor plan. Finding a way to differentiate between these components of the response may provide some insight into the nature of the delay. It will be important in the future to group individuals by aphasia type to examine differences and to look at a variety of language measures, including spontaneous speech tasks. Owing to the eventual goal of influencing assessment or treatment practices, it will also be important to determine whether compensation can be modified with behavioral treatment, either explicitly or indirectly, as well as to explore the effects of neurostimulation on compensation behavior. Hopefully, the current study will be part of the foundation upon which a body of research can be conducted, not only to contribute to our understanding of basic sensorimotor integrative speech functions in neurologically healthy and impaired populations but also to improve outcomes for those most impacted by deficits in these areas.

Taken together, the current data suggest that there are potential behavioral markers of sensorimotor integration in aphasia. With AAF as our proxy for sensorimotor integration, the timing of the vocal compensation response may serve as a marker of subtle deficits. Individuals with aphasia consistently respond to AAF, and although there is much variability in the degree of response, it may have the potential to become a useful tool for assessment or differential diagnosis once the relationship of those responses with other behavioral characteristics of aphasia is clarified. We were able to replicate the behavioral speech compensation patterns that have been identified in other studies with controls, and we extended this to a clinical population of persons with aphasia. Though there is little to no research regarding these markers in aphasia, our data can serve as a first step towards understanding markers of sensorimotor integration in a variety of aphasia types. While the goal was to connect these differences in sensorimotor function to higher-level language ability, namely self-correction in a picture naming task, that relationship remains largely unclear and should be explored further. It is possible that a refinement in our questions and methods will reveal the nature of that connection. There is potential to use what we are learning about these markers to detect impairment in a basic integrative function, and elucidating the relationship with language could inform treatment in the future. Uncovering and targeting those basic functions subsequently to or concomitantly with traditional treatments may expedite the recovery process or result in more positive long-term outcomes.

Highlights

People with aphasia change their pitch to compensate for altered auditory feedback

Vocal pitch compensation is slower in aphasia compared with normal speakers

Slowed compensation is not correlated with self-correction during naming in aphasia

Acknowledgments

This study was supported by the following research grants from NIH/NIDCD: DC015831 (PI: Behroozmand), DC011739 (PI: Fridriksson), DC014664 (PI: Fridriksson), DC014435 (Trainee: Johnson).

Footnotes

Publisher's Disclaimer: This is a PDF file of an unedited manuscript that has been accepted for publication. As a service to our customers we are providing this early version of the manuscript. The manuscript will undergo copyediting, typesetting, and review of the resulting proof before it is published in its final form. Please note that during the production process errors may be discovered which could affect the content, and all legal disclaimers that apply to the journal pertain.

References

- Anderson JM, Gilmore R, Roper S, Crosson B, Bauer RM, Nadeau S, Beversdorf DQ, … & Heilman KM (1999). Conduction aphasia and the arcuate fasciculus: A reexamination of the Wernicke-Geschwind model. Brain and Language, 70, 1–12. doi: 10.1006/brln.1999.2135.

- Baddeley A (1992). Working memory. Science, 255, 556–559. doi: 10.1126/science.1736359.

- Baldo JV, Katseff S, & Dronkers NF (2011). Brain regions underlying repetition and auditory-verbal short-term memory deficits in aphasia: Evidence from voxel-based lesion symptom mapping. Aphasiology, 26, 338–354. doi: 10.1080/02687038.2011.602391.

- Baldo JV, Klostermann EC, & Dronkers NF (2008). It’s either a cook or a baker: Patients with conduction aphasia get the gist but lose the trace. Brain and Language, 105, 134–140. doi: 10.1016/j.bandl.2007.12.007.

- Behroozmand R, Phillip L, Johari K, Bonilha L, Rorden C, Hickok G, & Fridriksson J (2018). Sensorimotor impairment of speech auditory feedback processing in aphasia. NeuroImage, 165, 102–111. doi: 10.1016/j.neuroimage.2017.10.014.

- Behroozmand R, Shebek R, Hansen DR, Oya H, Robin DA, Howard MA III, & Greenlee JDW (2015). Sensory-motor networks involved in speech production and motor control: An fMRI study. NeuroImage, 109, 418–428. doi: 10.1016/j.neuroimage.2015.01.040.

- Boersma P (2001). Praat, a system for doing phonetics by computer. Glot International, 5(9/10), 341–345.

- Buchsbaum BR, Baldo J, Okada K, Berman KF, Dronkers N, D’Esposito M, & Hickok G (2011). Conduction aphasia, sensory-motor integration, and phonological short-term memory: An aggregate analysis of lesion and fMRI data. Brain and Language, 119, 119–128. doi: 10.1016/j.bandl.2010.12.001.

- Buchsbaum BR, & D’Esposito M (2008). The search for the phonological store: From loop to convolution. Journal of Cognitive Neuroscience, 20, 762–778. doi: 10.1162/jocn.2008.20501.