Abstract

Artificial intelligence (AI) is a combination of different technologies that enable machines to sense, comprehend, and learn with human-like levels of intelligence. AI technology will eventually enhance human capability, provide machines genuine autonomy, reduce errors, and increase productivity and efficiency. AI seems promising, and the field is full of invention and novel applications; however, the limitations of machine learning suggest that cautious optimism is the right strategy. AI is also being incorporated into medicine to improve patient care by speeding up processes and achieving greater accuracy. AI using deep learning technology has been used to identify, differentiate, and catalog images in several medical fields including gastrointestinal endoscopy. The gastrointestinal endoscopy field involves endoscopic diagnosis and prognostication of various digestive diseases using image analysis with the help of various gastrointestinal endoscopic device systems. AI-based endoscopic systems can reliably detect and provide crucial information on gastrointestinal pathology based on their training and validation. These systems can make gastroenterology practice easier, faster, and more reliable, and can reduce inter-observer variability in the coming years. However, the thought that these systems will replace human decision making or replace gastrointestinal endoscopists does not seem plausible in the near future. In this review, we discuss AI and the associated technological terminology, its evolving role in gastrointestinal endoscopy, and future possibilities.

Keywords: Artificial intelligence, Gastrointestinal endoscopy, Machine learning, Endoscopic diagnosis, Gastric cancer, Gastrointestinal diseases

Core Tip: Artificial intelligence (AI) technology seems promising, and the field is full of invention and novel applications in gastrointestinal endoscopy. AI-based endoscopic systems can reliably detect and provide crucial information on gastrointestinal pathology based on their training and validation. These systems will make gastroenterology practice easier, faster, and more reliable, and will reduce inter-observer variability in the coming years. Gastroenterologists should welcome and embrace AI-assisted technologies in their practice as and when they become commercially available after thorough vetting in validation studies. A strong collaboration among physicians, computer scientists, and entrepreneurs is also needed to promote AI’s clinical use in medical practice.

INTRODUCTION

Artificial intelligence (AI) is a combination of advanced computational technologies that allows machines to learn and perform with intelligence similar to that of the human brain. More recently, AI has become an integral part of the practice of medicine, helping provide accurate and optimal patient care. Electronic health records have enhanced and accelerated physicians’ capabilities for treating patients and have provided crucial data for innovation and development using machine learning (ML)[1,2]. AI is probably the best available technology for delivering rapid and reliably accurate treatment to the growing patient population.

AI using deep learning (DL) technology has been used to identify, differentiate, and catalog images in several medical fields including gastrointestinal endoscopy[1,2]. The gastrointestinal endoscopy field involves endoscopic diagnosis and prognostication of various digestive diseases using image analysis with the help of various gastrointestinal endoscopic device systems[3]. The field of AI in endoscopy is going to grow, like several applications in everyday life around us. Gastroenterologists therefore need to be acquainted with the emerging applications, advantages, and limitations of AI as it pertains to gastrointestinal endoscopy and should embrace it sooner rather than later.

Despite the overwhelming initial excitement, a lot more remains to be discovered in the field of AI and endoscopy as the technology evolves and becomes more sophisticated. In this review, we discuss AI and the associated technological terminology, its evolving role in gastrointestinal endoscopy, and future possibilities. For this review, we searched PubMed, Google Scholar, and Google for related abstracts and published articles in the English language using the keywords “artificial intelligence and endoscopy”, “machine learning”, “gastric cancer”, “computer-aided diagnosis and endoscopy”, “computer-aided detection”, “CAD”, “neural networks” and “endoscopy”.

AI RELATED TERMINOLOGY

AI refers to a machine or computer’s ability to perform tasks that require cognition similar to that of humans, such as learning and problem solving, in a way that mimics the human mind[1,4]. The fundamental principle of this technology is “machine learning”, which involves teaching computer algorithms to learn and recognize patterns in data automatically[4,5]. DL, on the other hand, is based on multi-layered artificial neural networks (ANNs), which are modeled on the human brain’s biological neural networks[5,6]. DL can recognize patterns, analyze data, draw conclusions, and make decisions[6].

ML

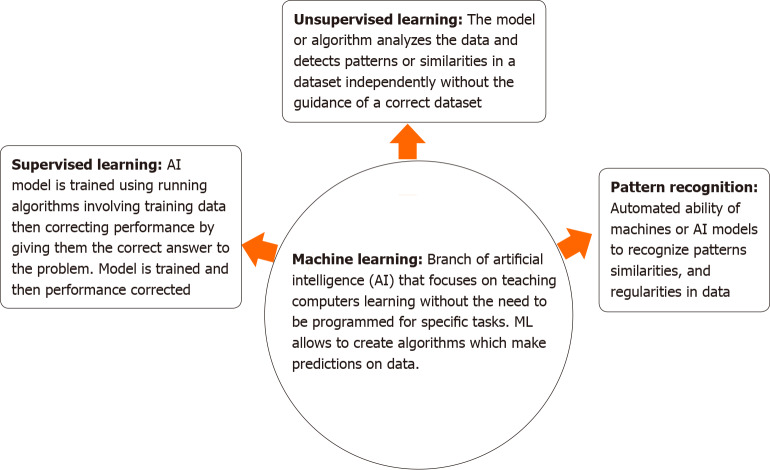

ML is a subdivision of AI that focuses on teaching computers how to learn from available data and identify similarities or patterns, so that they can make predictions without the need for external human intervention or explicit programming[4,5]. ML allows us to create algorithms (Figure 1) that learn from and make predictions on data. ML has its foundations in computational learning theory and pattern recognition[4,5,7].

Figure 1.

Schematic representation of different aspects of machine learning approach. AI: Artificial intelligence; ML: Machine learning.

DL

DL or deep structured learning is part of a broader family of ML that uses algorithms for machines to comprehend training images and speech to extract specific features. DL technology is ideal for quantifying images and providing classification. A flexible set of rules and techniques makes it possible for the neural network to teach itself to accomplish goals it was not trained to solve[4,6,7].

ANNs

A neural network usually refers to software, hardware, or both, modeled after aspects of the human brain. Neural networks consist of layers of interconnected artificial neurons. Each layer analyzes and learns from the output of the previous layer, and together the layers can perform more complex decision making[4,6]. Several studies involving AI in medicine use ANNs.
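As a rough illustration of how layers of interconnected artificial neurons pass information forward, the following minimal sketch runs a single input through a two-layer network. The layer sizes, weights, and the choice of a sigmoid activation are hypothetical values picked for illustration; they are not taken from any study cited in this review, and no training step is shown.

```python
import math


def sigmoid(x: float) -> float:
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def dense_layer(inputs, weights, biases):
    """One fully connected layer: each neuron sums its weighted inputs,
    adds its bias, and applies the sigmoid activation."""
    return [
        sigmoid(sum(w * x for w, x in zip(neuron_weights, inputs)) + bias)
        for neuron_weights, bias in zip(weights, biases)
    ]


def forward(inputs, layers):
    """Feed the inputs through each layer in turn; the output of one
    layer becomes the input of the next."""
    activations = inputs
    for weights, biases in layers:
        activations = dense_layer(activations, weights, biases)
    return activations


# Hypothetical hand-picked parameters (a trained network would learn these).
layers = [
    ([[0.5, -0.2, 0.1], [0.3, 0.8, -0.5]], [0.0, 0.1]),  # hidden layer: 3 inputs -> 2 neurons
    ([[1.0, -1.0]], [0.0]),                              # output layer: 2 inputs -> 1 neuron
]
output = forward([0.2, 0.7, 0.1], layers)
print(output)  # a single value between 0 and 1
```

In a real system the weights would be adjusted during training so that the output matches labeled examples; only the forward computation is sketched here.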

Convolutional neural networks

Convolutional neural networks (CNNs) are a specific class of deep neural networks, usually applied to visual image analysis including image recognition and classification. They require minimal preprocessing and are also known as space invariant ANNs because of their shared-weight architecture and translation invariance characteristics[4,6,7]. CNNs are designed to use large datasets to learn patterns in images. They connect inputs to outputs through pattern recognition learned from training datasets[4,6,7].
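The shared-weight idea can be sketched with a single small filter slid over an image: because the same weights are applied at every position, a pattern produces the same response wherever it appears, and shifting the pattern simply shifts the response. The example below is a minimal pure-Python sketch under that assumption; the 2 x 2 filter, the toy 6 x 6 images, and the helper names `place_blob` and `argmax2d` are hypothetical and for illustration only.

```python
def convolve2d(image, kernel):
    """Slide one shared kernel over the image (no padding, stride 1).
    As in most CNN libraries, this is technically a cross-correlation."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j]
                for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


def place_blob(size, top, left):
    """Hypothetical toy image: zeros with a bright 2 x 2 blob at (top, left)."""
    image = [[0] * size for _ in range(size)]
    for i in range(2):
        for j in range(2):
            image[top + i][left + j] = 1
    return image


def argmax2d(grid):
    """Return the (row, column) of the largest value in a 2D list."""
    best = max((value, r, c)
               for r, row in enumerate(grid)
               for c, value in enumerate(row))
    return best[1], best[2]


kernel = [[1, 1], [1, 1]]  # one filter, reused at every image position
peak_a = argmax2d(convolve2d(place_blob(6, 1, 1), kernel))
peak_b = argmax2d(convolve2d(place_blob(6, 3, 2), kernel))
print(peak_a, peak_b)  # the strongest response follows the blob
```

Real CNNs stack many such filters, learn their weights from data, and add pooling layers, which is what gives the overall network its tolerance to where a lesion sits in the frame.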

AI AND ENDOSCOPY

Over time, image recognition using AI with ML has dramatically improved and currently has multiple applications in diagnostic imaging in medicine[1-3]. AI-related software is being used in the histological classification of gastric biopsies and the characterization of colorectal lesions using endocytoscopy, as well as in diabetic retinopathy and skin cancer classification[3,8,9]. AI-based systems for endoscopy primarily improve detection rates, help predict histology (neoplastic vs non-neoplastic behavior), and subsequently guide therapy in the form of endoscopic resection vs surgical resection[8,9]. AI-based computer algorithms provide diagnostic information by analyzing surface micro topological patterns, color differences, micro-vascular and pit patterns, narrow band imaging (NBI), and high-magnification and endocytoscopy appearance from still images and video frames. The results are subsequently validated using another test database or prospective in vivo clinical trials[3,8,9].

Initial studies have demonstrated the ability of AI-based endoscopy software to diagnose Barrett’s esophagus (BE), esophageal and stomach cancer, Helicobacter pylori (H. pylori)-related gastritis, and colorectal polyps[10]. Endobrain (Olympus; Tokyo, Japan) is one of the initial AI-based endoscopic systems available for commercial use. The rapid progress of various AI systems will allow for more advanced predictions.

AI in upper endoscopy

Gastrointestinal malignancies remain a leading cause of mortality and morbidity worldwide. A high-quality endoscopic examination is essential for the gastroenterologist to enhance the detection of gastrointestinal neoplasms and optimize treatment plans. Besides neoplasms, AI has also been increasingly used in other gastrointestinal diseases, including gastroesophageal reflux disease (GERD), infection, inflammation, and hemorrhage[10-13].

AI in GERD diagnosis

Upper endoscopy is the diagnostic modality of choice for GERD and its complications including BE. AI can reliably identify the anatomical location shown in endoscopic images. Takiyama et al[14] reported a CNN model able to recognize the anatomical location of upper endoscopic images precisely, with an area under the receiver operating characteristic curve (AUROC) of 1.00 for the larynx and esophagus, and an AUROC of 0.99 for stomach and duodenum landmarks. AI can also accurately predict the diagnosis of GERD. Using an ANN model, Pace et al[15] were able to predict the diagnosis of GERD using only 45 clinical variables in 159 cases with 100% accuracy. Changes in and incremental increase of intrapapillary capillary loops, as identified by NBI using deep CNNs, have been proposed as a disease marker for GERD. An AI model incorporating near-focus NBI has been described as a novel method for the diagnosis of GERD[16].
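For readers less familiar with the AUROC figures quoted above, the metric can be read as the probability that a model assigns a higher score to a randomly chosen positive case than to a randomly chosen negative case, so 1.00 means perfect ranking and 0.50 means chance. The following minimal sketch computes it directly from that definition; the labels and scores are made-up illustrative values, not data from any cited study.

```python
def auroc(labels, scores):
    """Fraction of (positive, negative) pairs in which the positive case
    receives the higher score; tied scores count as half a win."""
    positives = [s for y, s in zip(labels, scores) if y == 1]
    negatives = [s for y, s in zip(labels, scores) if y == 0]
    if not positives or not negatives:
        raise ValueError("need at least one positive and one negative case")
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(positives) * len(negatives))


# Hypothetical model scores: 1 = image shows the target landmark, 0 = it does not.
labels = [1, 1, 1, 0, 0, 0]
scores = [0.9, 0.8, 0.4, 0.5, 0.3, 0.1]
value = auroc(labels, scores)
print(round(value, 3))  # 8 of the 9 positive/negative pairs are ranked correctly
```

The pairwise loop is quadratic in the number of cases; it is used here for clarity rather than efficiency.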

AI in BE and esophageal cancer

Early detection of neoplastic changes in BE is essential, especially because promising curative endoscopic treatments such as endoscopic mucosal resection (EMR) and radiofrequency ablation are available[10,11]. van der Sommen et al[11] developed an automated algorithm to help in the differentiation of early neoplastic lesions in BE. This algorithm combined specific texture and color filters with ML on one hundred images from upper endoscopy, and detected early neoplastic lesions with a sensitivity and specificity of 83%. Another study by a similar group used a novel computer model with 60 ex vivo volumetric laser endomicroscopy (VLE) images[10]. VLE is an advanced imaging technology utilizing an optical coherence-based probe that provides a microscopic scan of the esophageal wall layers. The study compared the performance of the computer algorithm in detecting VLE images containing BE neoplasia with VLE image interpretation by experts. The histological diagnosis, correlated with the images, served as the reference standard. The algorithm showed the ability to diagnose BE neoplasia with a sensitivity of 90% and specificity of 93%[10]. Current VLE consoles in clinical use have built-in AI for dysplasia and cancer detection.

The critical goal of Barrett’s surveillance programs is early detection of dysplasia. ML models have been used to differentiate dysplastic and non-neoplastic lesions in various studies[10,11,17]. Computer-based analysis can be used to identify malignant tissue at the cellular level by microscopic visualization of the mucosal surface using endocytoscopy images[17]. An image analysis model was able to diagnose esophageal squamous dysplasia by high-resolution microendoscopy (HRME) with a sensitivity of 87% and specificity of 97%[18]. Further cost-effective modifications of this model using tablet-interfaced HRME with full automation for real-time analysis diagnosed esophageal squamous cell carcinoma with 95% sensitivity and 91% specificity[19].

Horie et al[20] used CNN-based AI for esophageal cancer diagnosis. A total of 8428 endoscopic images were used to train the CNN, including both white-light and narrow-band images. The model was able to diagnose esophageal cancer with a sensitivity of 95%. The ability to detect cancers smaller than 10 mm was 100%. This model had a very high diagnostic accuracy of 98% in differentiating between superficial and advanced esophageal cancer[20]. AI-based models have also been used for disease prognostication. An ANN model with improved accuracy was also described by Sato et al[21]; 418 esophageal cancer cases were used to predict 1-year and 5-year survival rates. In summary, AI-based technologies and endoscopic systems show promising results in improving detection of BE-related dysplasia and esophageal carcinoma, and show current and future promise in reducing the mortality and morbidity related to these disease states.

AI in H. pylori infection diagnosis

H. pylori infection is an established risk factor for the development of gastroduodenal ulcers and gastric cancer. AI-based research in this field has focused primarily on aiding the endoscopic diagnosis of H. pylori infection[12,22]. An AI-based neural network model was used to predict H. pylori infection using related gastric histologic features. Parameters of 84 images from 30 patients were used to train this model, which showed a sensitivity of 85.4% and specificity of 90.9% for the detection of H. pylori infection[22]. The model successfully identified gastric atrophy and gastric intestinal metaplasia, and was helpful in predicting the severity of infection with above 80% accuracy[22].

A prospective pilot study with an AI-based model for detection of H. pylori infection demonstrated better yield with blue laser imaging-bright and linked color imaging training (AUROCs of 0.96 and 0.95) than with white light imaging training (0.66)[23]. Another CNN model used 596 endoscopic images to detect H. pylori infection. This model showed exciting results, with a sensitivity and specificity of 86.7%[24]. Shichijo et al[12] used endoscopic images to diagnose H. pylori infection and compared the performance of a CNN model to that of 23 endoscopists. The CNN model exhibited better sensitivity (88.9% vs 79.0%) and specificity (87.4% vs 83.2%), superior accuracy (87.7% vs 82.4%), and less time for diagnosis (194 s vs 230 s)[12,24].

AI in gastric cancer

Gastric cancer is the fifth most common form of cancer and the third leading cause of cancer-related death worldwide[8]. Early-stage endoscopic detection of gastric cancer helps us to triage patients for minimally invasive endoscopic therapy, including EMR or endoscopic submucosal dissection[8,25,26]. Esophagogastroduodenoscopy (EGD) is the current standard procedure for the diagnosis of gastric cancer. EGD in the diagnosis of gastric carcinoma carries a false-negative rate as high as 4.6%-25.8%[25]. Early gastric cancer lesions show only subtle morphologic changes, can be easily missed, and are difficult to distinguish from background mucosa with atrophic changes[8,25,26].

AI is currently being utilized primarily for the detection and characterization of gastrointestinal neoplasms. Kubota et al[26] utilized a backpropagation model after 10-time cross-validation using 902 gastric cancer endoscopic images. The diagnostic accuracy of this model was found to be limited: 77.2%, 49.1%, 51.0%, and 55.3% for T1, T2, T3, and T4 staging, respectively[26]. Hirasawa et al[8] created and trained a CNN-based model using 13584 endoscopic images and subsequently analyzed 2296 images with 92.2% sensitivity for gastric cancer detection. The model detected 98.6% of neoplasms 6 mm or larger in size[8]. This model was able to identify all invasive cancers, which can be extremely hard to distinguish from gastritis even for experts. Gastritis findings with erythema, atrophy, and intestinal metaplasia were the most common reasons for misdiagnosis[8].
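Cross-validation, as mentioned above, repeatedly holds out part of a dataset for testing while training on the rest. The sketch below assumes the common k-fold scheme with k = 10 applied to 902 items; whether this matches the exact procedure of the cited study is an assumption, and the function name `k_fold_indices` is hypothetical.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_indices, test_indices) for each of k folds.
    Every sample appears in exactly one test fold."""
    if not 2 <= k <= n_samples:
        raise ValueError("k must be between 2 and the number of samples")
    indices = list(range(n_samples))
    # Spread any remainder over the first folds so sizes differ by at most one.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        test = indices[start:start + size]
        train = indices[:start] + indices[start + size:]
        yield train, test
        start += size


folds = list(k_fold_indices(902, 10))
for train, test in folds:
    # A real pipeline would fit the model on `train` and score it on `test` here.
    print(len(train), len(test))
```

In practice the indices would usually be shuffled first, and images from the same patient kept within one fold, so that near-duplicate frames do not leak between training and test sets.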

Zhu et al[25] developed and trained a CNN model to recognize the depth of gastric cancer invasion (M/SM1 vs deeper than SM1) using 790 conventional endoscopic images and subsequently performed analysis with another 203 images. This study demonstrated superior accuracy (89.2%) and specificity (95.6%) in determining the depth of invasion of gastric cancer[25]. Another computer-aided diagnosis (CAD) system using a support vector machine (SVM) was also used to identify early gastric cancer using magnifying NBI images[27]. This study showed very high diagnostic performance [accuracy 96.3%, positive predictive value (PPV) 98.3%, sensitivity 96.7%, specificity 95%] and area concordance performance (accuracy 73.8%, PPV 75.3%, sensitivity 65.5%, specificity 80.8%)[27].
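The performance measures quoted throughout this section (sensitivity, specificity, PPV, negative predictive value, and accuracy) all derive from the same four counts in a two-by-two table of test result against reference standard. A minimal sketch of those formulas follows; the counts used are hypothetical and do not come from any cited study.

```python
def diagnostic_metrics(tp, fp, tn, fn):
    """Standard diagnostic accuracy measures from confusion-matrix counts.
    tp/fn: diseased cases called positive/negative by the model;
    tn/fp: non-diseased cases called negative/positive by the model."""
    return {
        "sensitivity": tp / (tp + fn),            # diseased cases correctly flagged
        "specificity": tn / (tn + fp),            # non-diseased cases correctly cleared
        "ppv": tp / (tp + fp),                    # positive calls that are truly diseased
        "npv": tn / (tn + fn),                    # negative calls that are truly non-diseased
        "accuracy": (tp + tn) / (tp + fp + tn + fn),
    }


# Hypothetical example: 100 cancer images and 100 non-cancer images.
metrics = diagnostic_metrics(tp=90, fp=5, tn=95, fn=10)
for name, value in metrics.items():
    print(f"{name}: {value:.1%}")
```

Sensitivity and specificity are properties of the test itself, whereas PPV and NPV also depend on how common the disease is in the tested set, which is one reason figures from enriched research datasets may not carry over to routine practice.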

AI in small intestinal endoscopic imaging

Video capsule endoscopy (VCE) is an integral part of the endoscopic evaluation of the small intestine. VCE is a subjective, time-consuming diagnostic study, and because of its low-resolution frame images, interpretation and disease diagnosis rely heavily on the image reviewer. AI has been used for VCE diagnosis of angiodysplasia, celiac disease, and intestinal hookworms[13,28,29]. Leenhardt et al[13] developed a CNN-based gastrointestinal angiodysplasia detection model using six hundred control and six hundred angiodysplasia images drawn from 4166 small bowel capsule endoscopy videos. Overall diagnostic performance was high, with a sensitivity of 100%, a specificity of 96%, a PPV of 96%, and a negative predictive value (NPV) of 100%[29]. Another study using a CNN-based model showed sensitivity and specificity of 100% for celiac disease diagnosis[29]. Seguí et al[30] described a CNN model for small-intestine motility characterization using motility events. The mean classification accuracy was 96% for different intestinal motility events (“turbid”, “bubbles”, “clear blob”, “wrinkles”, “wall”, and “undefined”). More important and recent clinical studies related to AI, VCE, and small intestinal imaging are summarized in Table 1. Currently available published studies show promising diagnostic accuracy using CNNs for small intestinal imaging. Further multicenter validation studies will provide impetus to their use in clinical practice.

Table 1.

Recent clinical studies in artificial intelligence using convolutional neural networks and capsule endoscopy for small intestinal imaging

| Ref. | Type of study | Aim of study | Images/video | Results |
| --- | --- | --- | --- | --- |
| Aoki et al[58], 2020 | Retrospective | Detection of mucosal breaks/erosion | 20 capsule endoscopy videos | Detection rate, expert 87%; trainee, 55% |
| Klang et al[59], 2020 | Retrospective | Detection of small intestinal ulcers in Crohn’s disease | 17640 images from 49 patients | Accuracy, 96.7%; sensitivity, 96.8%; specificity, 96.6% (5-fold) |
| Tsuboi et al[60], 2020 | Retrospective | Detection of small intestinal angiodysplasia | 2237 images from 141 patients | Sensitivity, 98.8%; specificity, 98.4% |
| Ding et al[61], 2019 | Retrospective | Detection of small intestinal abnormal images | 158235 images from 1970 patients | Sensitivity, 99.9%; reading time, 5.9 min |
| Saito et al[62], 2020 | Retrospective | Detection and classification of protruding lesions | 30584 images from 292 patients | Sensitivity, 90.7%; specificity, 79.8%; reading time, 530.462 s |

Improvements in capsule endoscopy may include capsules with controlled locomotion and navigation. These may enhance mucosal visualization and help better delineate small intestinal pathology. They may also provide the ability to perform real-time VCE-assisted forceps biopsy or fine needle aspiration (FNA) of the target lesion, as well as endoscopic therapy, in the future[31,32]. A few proposed improvements include a self-propelled mechanism with external control or a video capsule endoscope externally propelled by magnetic force with navigational controls. Multiple such prototypes for different indications are currently being investigated to assess their suitability in clinical practice.

AI and endoscopic ultrasound

CAD has been used to assist endosonographers in the endoscopic ultrasound (EUS) evaluation of pancreatic lesions[33,34]. The diagnosis of a pancreatic neoplasm in the setting of chronic pancreatitis (CP) is challenging. EUS imaging is unable to distinguish neoplastic from reactive changes reliably. To differentiate between pancreatic cancer (PC) and CP, CAD techniques were used to extract EUS image parameters. A total of 105 features were extracted from 262 PC and 126 CP patient EUS images. Sixteen of these features were used for classification using a SVM model with a sensitivity rate of 94%[33]. Another ANN model based on digital image analysis of EUS images accurately classified PC with an area under the curve of 0.93[34].

An ANN-based approach to differentiating PC and mass-forming CP using vascularity parameters obtained from post-processing of contrast-enhanced EUS videos suggests that these parameters can reliably differentiate between PC and CP and can be used in an automated CAD system with excellent results (94.64% sensitivity and 94.44% specificity)[35]. EUS elastography has also been used for the differential diagnosis of CP and PC, by calculating hue histograms of individual images from EUS elastography movies, followed by extended neural network analysis. Multilayer perceptron neural networks (MNNs) were initially trained to differentiate benign from malignant cases. This approach resulted in an outstanding average testing performance of 95% and a superior training performance of 97%[36]. Cytologic diagnosis of PC can be challenging at times. Attempts to integrate computer models into the cytopathologic diagnosis of PC have been studied. An MNN using segmented images of cell clusters from FNA of pancreatic tumors was able to differentiate between benign and malignant cells. The accuracy of MNN-based slide analysis was equivalent to that of cytopathologist review. Interestingly, the MNN model’s predictions showed a sensitivity of 80% for inconclusive FNA results[37]. Future advances in technology can help yield a more definitive diagnosis.
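The hue histogram step mentioned above can be pictured as follows: each pixel’s color is converted to a hue value, and the image is summarized by the fraction of pixels falling into each hue range, giving a short numeric vector that a neural network can take as input. The sketch below is only an illustration of that general idea using Python’s standard `colorsys` module; the bin count, the toy pixel list, and the function name `hue_histogram` are assumptions, and the published method is not reproduced here.

```python
import colorsys


def hue_histogram(pixels, bins=12):
    """Summarize an image as the fraction of pixels in each hue range.
    `pixels` is an iterable of (r, g, b) tuples with components in 0..255."""
    counts = [0] * bins
    total = 0
    for r, g, b in pixels:
        hue, _saturation, _value = colorsys.rgb_to_hsv(r / 255.0, g / 255.0, b / 255.0)
        index = min(int(hue * bins), bins - 1)  # hue is in [0, 1]; clamp the top edge
        counts[index] += 1
        total += 1
    if total == 0:
        raise ValueError("no pixels supplied")
    return [count / total for count in counts]


# Hypothetical four-pixel "image": one red, one green, and two blue pixels.
pixels = [(255, 0, 0), (0, 255, 0), (0, 0, 255), (0, 0, 255)]
histogram = hue_histogram(pixels, bins=4)
print(histogram)  # half of the pixels fall in the bin containing blue
```

Because elastography consoles encode tissue stiffness as color, a histogram of this kind compresses each frame into a fixed-length feature vector regardless of image size.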

AI based models also provide an emerging approach to evaluate the risk of PC in cystic neoplasms. Using computer-aided frameworks, AI algorithms can recognize pancreatic cysts with malignant potential that are at increased risk of developing into PC[38,39]. A study using ANN and personal health data was useful in predicting the risk of PC, with a sensitivity of 80.7% and a specificity of 80.7%[39]. This particular ANN based model was able to stratify patients into low, medium, and high cancer risks[39].

AI in lower endoscopy

Colorectal cancer (CRC) is the second leading cause of cancer-related deaths in the United States. Over the past three decades, the rates of colon cancer and colon cancer-related mortality have decreased due to the introduction of colonoscopy and polypectomy of adenomatous polyps[40,41]. A large cohort study conducted in the United States has also demonstrated an approximately 70% reduction in deaths after the institution of colonoscopy[42]. Colonoscopy is the best screening modality currently for diagnosis of adenomatous polyp and CRC. The CRC miss rate on screening colonoscopies is as high as 4%-5%[43], with the interval CRC reported as 7.2%-9% despite adherence to current guidelines[44]. The rate of interval CRC development is primarily due to missed lesions, newer lesions, and inadequate endoscopic treatment[45]. The overall pooled miss rate of polyps in a meta-analysis is 22% for polyps of any given size[46]. Consequently, the importance of standardizing the quality of colonoscopy and increasing the detection of adenomatous polyps remains an important goal.

Adenoma detection rate (ADR) has become a key quality measure for evaluating an endoscopist’s ability to find adenomas. However, rates of adenoma detection vary among endoscopists (7%-53%)[43]. Higher ADRs are associated with lower CRC mortality, with every 1% increase in ADR associated with a 3.0% decrease in the risk of interval CRC[43,45,46]. Given the increased focus on ADR, AI and CAD are rapidly being integrated into colonoscopy, as they are throughout modern medicine. In gastroenterology, AI is now being used to increase polyp detection rates and to distinguish between adenomatous polyps and their hyperplastic counterparts. The use of AI in colonoscopy allows for the standardization of colonoscopy regardless of the endoscopist’s skills.

The primary role of AI in colonoscopy is thought to center on increased polyp detection and histopathological polyp differentiation without modification of the colonoscope or procedure. AI allows for real-time automatic polyp detection, assisting the endoscopist in detecting adenomatous lesions by drawing attention to them. AI or CAD-based models are trained using thousands of colonoscopic images to help identify and differentiate between hyperplastic and adenomatous polyps. After its initial training, the software continues to improve its detection abilities over time without the need for reprogramming. AI was first introduced in polyp detection in 2003, with pilot studies noting > 90% accuracy. Initial models showed acceptable performance, with sensitivities of 48%-90%. However, the datasets were small, containing images of fewer than 20 polyps[3,47].

AI in colon polyp identification

CAD endoscopy allows for real-time automatic polyp detection and would assist the endoscopist in detecting lesions reliably and consistently. In the past, multiple CAD systems have been introduced for colon polyp detection. Initial systems utilized hand-crafted features and variables that allowed for the identification of polyps. However, hand-crafted algorithms presented multiple issues, including slow calculation speed, high demands on computing power, low sensitivity, and false positives resulting from the presence of artifacts such as blood vessels, stool, and folds. DL represents a considerable advance: it automatically searches for and defines important image features, resulting in better outcomes[48]. Retrospective studies of polyp detection using AI have demonstrated sensitivities higher than 90%, with NPVs of 95%-97%. However, these are considered small-scale, non-clinical investigations[49].

Recent open, non-blinded trials that randomized patients to standard colonoscopy or colonoscopy with CAD noted a significantly higher ADR in the CAD group (29.1% vs 20.3%). The mean number of adenomas detected was also higher, 0.53 in the CAD group vs 0.31 in the standard colonoscopy group, mostly due to increased detection of diminutive adenomas[50]. A meta-analysis of 2815 patients agreed with the above findings and demonstrated an ADR of 32.9% in the CAD colonoscopy population vs 20.8% in the standard colonoscopy group[51].
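To put the ADR figures above in perspective, it helps to separate the absolute difference (in percentage points) from the relative increase. The short sketch below does that arithmetic, taking 20.3% as the standard colonoscopy ADR and 29.1% as the CAD-assisted ADR; that assignment is an assumption based on the higher adenoma counts reported for the CAD group, and the function names are hypothetical.

```python
def adenoma_detection_rate(procedures_with_adenoma, total_procedures):
    """ADR: share of colonoscopies in which at least one adenoma is found."""
    return procedures_with_adenoma / total_procedures


def compare_rates(control_rate, intervention_rate):
    """Return (absolute difference, relative increase over control)."""
    absolute = intervention_rate - control_rate
    relative = absolute / control_rate
    return absolute, relative


# Assumed assignment: 20.3% standard colonoscopy, 29.1% colonoscopy with CAD.
absolute, relative = compare_rates(0.203, 0.291)
print(f"absolute difference: {absolute * 100:.1f} percentage points")
print(f"relative increase: {relative:.1%}")

# Hypothetical illustration of the ADR definition itself.
print(adenoma_detection_rate(30, 100))
```

The same two-line comparison applies to the mean adenomas per procedure (0.53 vs 0.31) or to the meta-analysis figures.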

AI in colon polyp optical pathology

Optical diagnosis of colorectal polyps may prove to be more cost-effective and time saving than traditional post-polypectomy pathology diagnosis. Optical diagnosis removes the need for pathologist review of these polyps. The application of CAD to magnifying narrow-band imaging has recently attracted the most significant interest in AI and colonoscopy. Initial studies performed by Tischendorf et al[52] and Gross et al[53] demonstrated diagnostic accuracies for neoplastic polyps of 85.3% and 93.1%, respectively. These studies utilized similar algorithms based on nine vessel features (including length, brightness, and perimeter) extracted from magnifying NBI images. The software was then able to classify these images into two pathological predictions, neoplastic vs non-neoplastic. Recent retrospective studies on newly developed CAD systems utilizing DL algorithms have demonstrated 96.3% sensitivity and 78.1% specificity in identifying neoplastic or hyperplastic polyps[54]. CAD systems have also been applied to magnifying chromoendoscopy, using quantitative analysis of pit structure or texture analysis of the entire image; studies by Takemura et al[55] have demonstrated an overall accuracy of 98.5%.

However, despite advancements in CAD and AI, there has not been much success in utilizing these modalities with white light endoscopy to identify polyp pathology. Komeda et al[56] applied a DL algorithm under white light endoscopy, with accuracy limited to 75.1%. Future developments include combination detection systems that pair a DL algorithm for polyp detection under white light with a secondary algorithm that predicts the pathology of the detected polyp; such a system has already been reported[57].

Limitations of AI in gastrointestinal endoscopy

Limitations of AI-based approaches in endoscopy stem from the fact that computers interpret data differently from humans. Significant challenges for AI-based systems and their learning algorithms include: (1) The need for an extensive training database, often thousands of images, against which imaging findings can be compared; (2) Lack of real abstract human-like thinking in challenging cases; and (3) The fact that existing systems are mostly research-based and suffer from considerable selection bias. Further, many of these systems are created in single institutions and serve a unique patient population, so results may not be entirely reproducible in everyday endoscopy practice. Limitations may also result from imaging datasets, trial designs, and outcome indicators. For example, standard endoscopic images suffer from artifacts such as bubbles, mucus, and stool, and vary with light exposure and the visualization angle of the endoscope camera.

Another limitation is the legal responsibility for a missed or incorrect diagnosis by AI-based systems. The current framework does not account for the medicolegal aspects of AI-trained computers and devices making a definitive diagnosis of gastrointestinal pathology. As the use of AI increases in day-to-day life and in medicine, the role of AI-related technology in medicine needs further clarification, especially as it pertains to medicolegal responsibility for diagnosis and therapy. The producers of AI-based medical systems should seek advice regarding liability requirements, including the possibility of multiple serial claims arising from errors or service disruption affecting an AI-based system. In addition, as with any other medical equipment, product liability would apply to hardware or software malfunction. The medical practitioner would remain liable for any negligent use of the systems and would need to make sure that the systems are in good working order with proper maintenance and that proper product liability protections are in place. In essence, for any AI-related medical system to be widely adopted for diagnosis or treatment, the system must be affordable, must make the physician’s job more precise and efficient, and must have suitable mechanisms in place for medicolegal responsibilities.

To make the existing endoscopy-based AI systems more robust, further advances in AI technology will be required to provide a reliable and accurate platform before implementation in general practice. To facilitate real-time assessment during the endoscopic examination, CNNs should be trained and tested with unprocessed video, which simulates everyday endoscopy and makes the model more realistic. In addition, patient risk stratification should be incorporated into current CNN-based models. This will further enhance the performance of AI-based models, especially in high-risk populations, and reduce misdiagnosis in the general population. Future multicenter randomized trials are required to test various AI models in gastrointestinal endoscopy. These trials should focus on answering the basic question of whether AI models can enhance physician performance, and should determine the detection rate in day-to-day clinical settings. In the end, a strong multidisciplinary collaboration among physicians, computer scientists, and entrepreneurs is required to promote AI’s clinical use in medical practice.

CONCLUSION

AI-based endoscopic systems can reliably detect and provide crucial information on gastrointestinal pathology based on their training and validation. In the coming years, these systems will make gastroenterology practice easier, faster, and more reliable, and will reduce inter-observer variability. The thought that these systems will replace human decision making or gastrointestinal endoscopists does not seem plausible in the near future.

Essential uses of AI in endoscopy will range from early identification of cancers using image enhancement with neural networks to prediction of prognosis and treatment outcomes. AI has found increasing applications in several non-medical fields. What somewhat limits its use in medicine is the inability of such systems to think in a human-like manner when faced with diagnostic dilemmas and challenging problems that do not lend themselves to an algorithmic approach.

Significant advances in technology make us wonder about the day when sophisticated and powerful AI-based systems will replace gastroenterologists. Currently, that does not seem to be the reality. Gastroenterologists should welcome and embrace AI-assisted technologies in their practice as they become commercially available, after thorough vetting in validation studies.

ACKNOWLEDGEMENTS

We are thankful to Mr. Parasher N for his excellent technical input and editorial help.

Footnotes

Conflict-of-interest statement: There is no conflict of interest associated with the senior author or any of the coauthors who contributed to this manuscript.

Manuscript source: Invited manuscript

Corresponding Author's Membership in Professional Societies: American Gastroenterological Association, 1469129.

Peer-review started: August 1, 2020

First decision: September 30, 2020

Article in press: November 29, 2020

Specialty type: Gastroenterology and hepatology

Country/Territory of origin: United States

Peer-review report’s scientific quality classification

Grade A (Excellent): A

Grade B (Very good): B, B

Grade C (Good): C, C, C, C

Grade D (Fair): 0

Grade E (Poor): 0

P-Reviewer: Cerwenka H, Hashimoto N, Liu L, Niu ZS, Zhu YL; S-Editor: Chen XF; L-Editor: A; P-Editor: Liu JH

Contributor Information

Gulshan Parasher, Division of Gastroenterology and Hepatology, Department of Internal Medicine, University of New Mexico School of Medicine, Albuquerque, NM 87131, United States.

Morgan Wong, Division of Gastroenterology and Hepatology, Department of Internal Medicine, University of New Mexico School of Medicine, Albuquerque, NM 87131, United States.

Manmeet Rawat, Division of Gastroenterology and Hepatology, Department of Internal Medicine, University of New Mexico School of Medicine, Albuquerque, NM 87131, United States. manmeetrawat@gmail.com.
