Abstract

Atypical social–emotional reciprocity is a core feature of autism spectrum disorder (ASD) but can be difficult to operationalize. One measurable manifestation of reciprocity may be interpersonal coordination, the tendency to align the form and timing of one’s behaviors (including facial affect) with others. Interpersonal affect coordination facilitates sharing and understanding of emotional cues, and there is evidence that it is reduced in ASD. However, most research has not measured this process in true social contexts, due in part to a lack of tools for measuring dynamic facial expressions over the course of an interaction. Automated facial analysis via computer vision provides an efficient, granular, objective method for measuring naturally occurring facial affect and coordination. Youth with ASD and matched typically developing youth participated in cooperative conversations with their mothers and unfamiliar adults. Time-synchronized videos were analyzed with an open-source computer vision toolkit for automated facial analysis, for the presence and intensity of facial movements associated with positive affect. Both youth and adult conversation partners exhibited less positive affect during conversations when the youth partner had ASD. Youth with ASD also engaged in less affect coordination over the course of conversations. When considered dimensionally across youth with and without ASD, affect coordination significantly predicted scores on rating scales of autism-related social atypicality, adaptive social skills, and empathy. Findings suggest that affect coordination is an important interpersonal process with implications for broader social–emotional functioning. This preliminary study introduces a promising novel method for quantifying moment-to-moment facial expression and emotional reciprocity during natural interactions.

Keywords: affect/emotion, social–emotional reciprocity, computer vision, interpersonal coordination, facial expression, synchrony

Lay Summary:

This study introduces a novel, automated method for measuring social–emotional reciprocity during natural conversations, which may improve assessment of this core autism diagnostic behavior. We used computerized methods to measure facial affect and the degree of affect coordination between conversation partners. Youth with autism displayed reduced affect coordination, and reduced affect coordination predicted lower scores on measures of broader social–emotional skills.

Introduction

A core feature of autism spectrum disorder (ASD) is atypical social–emotional reciprocity with others, including reduced interpersonal sharing of emotions and affect [American Psychiatric Association, 2013]. Despite being integral to the diagnosis of ASD, there is a lack of established methods for operationalizing emotional reciprocity objectively or granularly. This limits the ability of researchers and clinicians to reliably assess this key behavior and to measure differences across individuals, contexts, or time.

One observable, measurable manifestation of social–emotional reciprocity may be interpersonal coordination, or social partners’ tendency to align the form and timing of their behaviors [Bernieri & Rosenthal, 1991]. Interpersonal coordination is a dynamic social process involving the perception of a social cue in another person, followed by a nonconscious adaptation of one’s own behavior into a reciprocal response. This phenomenon is well studied in neurotypical individuals across behavioral modalities, including body movements [Bernieri, Davis, Rosenthal, & Knee, 1994; LaFrance & Broadbent, 1976; Paxton & Dale, 2013; Ramseyer & Tschacher, 2011], speech [Cappella & Planalp, 1981; Garrod & Pickering, 2004], and facial expressions [Dimberg, Thunberg, & Elmehed, 2000; Hess & Fischer, 2013], and has been repeatedly linked to rapport and connectedness between partners [Chartrand & Bargh, 1999; Hove & Risen, 2009; Stel & Vonk, 2010; Wiltermuth & Heath, 2009].

Interpersonal coordination is particularly well documented with respect to facial affect; when neurotypical individuals view emotional facial expressions, they automatically respond with congruent activity in their own facial musculature [Dimberg, 1982, 1988]. This “facial mimicry” is thought to facilitate sharing and understanding of emotional cues [Hatfield, Cacioppo, & Rapson, 1994]. Indeed, engaging in facial mimicry seems to improve performance on emotion perception tasks, while obstruction of mimicry worsens performance [see Hess & Fischer, 2013 for a review].

There is evidence that children and adults with ASD exhibit reduced, atypical, or delayed spontaneous mimicry responses to photographs and videos of emotional facial expressions [Beall, Moody, McIntosh, Hepburn, & Reed, 2008; McIntosh, Reichmann-Decker, Winkielman, & Wilbarger, 2006; Oberman, Winkielman, & Ramachandran, 2009; Stel, van den Heuvel, & Smeets, 2008, but see also Magnée, de Gelder, van Engeland, & Kemner, 2007]. However, it is difficult to generalize these results to real-world social functioning, because the majority of research (in both neurotypical and ASD populations) has measured one-sided mimicry responses to stimuli presented on a computer, rather than spontaneous bidirectional coordination within the context of true social interaction. While there is a significant literature on interpersonal coordination more broadly (e.g., in body movements) within interactive settings, studies on facial coordination specifically are much more limited.

Some notable exceptions have begun to explore interpersonal facial affect coordination during natural interactions, generally using either behavioral annotations or facial electromyography (EMG; facial muscle activity). In nonclinical samples, findings confirm the presence of facial affect coordination among social dyads and suggest that coordination both influences, and is influenced by, social–emotional context [Heerey & Crossley, 2013; Hess & Bourgeois, 2010; Messinger, Mahoor, Chow, & Cohn, 2009; Riehle, Kempkensteffen, & Lincoln, 2017; Stel & Vonk, 2010]. In ASD, a recent meta-analysis on facial expression production found that just five out of 37 included papers focused on bidirectional sharing of affect in naturalistic settings. This meta-analysis also implicated “social congruency” (i.e., sharing affect with someone else or aligning affect appropriately with the situation) as a particular area that distinguishes people with ASD from normative samples [Trevisan, Hoskyn, & Birmingham, 2018].

There is a need for more research on real-world social interactions, in order to better understand the nature and function of interpersonal facial coordination [Seibt, Mühlberger, Likowski, & Weyers, 2015]. One reason for the paucity of research in this area has been a lack of tools for measuring dynamic facial expressions over the course of spontaneous, live interactions. Behavioral coding is time- and training-intensive, and specialized equipment like facial EMG impacts how natural interactions feel and may not be well tolerated by individuals with ASD. However, recent advances in automated facial analysis via computer vision have the potential to provide an efficient, non-obtrusive method of measuring naturally occurring facial expressions with high spatial and temporal granularity [Cohn & Kanade, 2007; Messinger et al., 2009].

In this preliminary study, we use an open-source automated facial analysis system [Baltrusaitis, Zadeh, Lim, & Morency, 2018] to analyze interpersonal coordination of positive affect during conversations between youth with ASD and familiar and unfamiliar interaction partners. Our overarching goal was to determine whether the automated measurement of interpersonal facial affect coordination can provide insight into the nature and magnitude of social–emotional reciprocity differences in ASD. Based on previous literature, we hypothesized that youth with ASD would exhibit less coordination with social partners than typically developing peers, and that the degree of coordination would be associated with standard, cross-diagnostic measures of social–emotional functioning.

Methods

Participants

Participants were 20 youth with ASD and 16 typically developing control (TDC) youth, aged 9–16. See Table 1 for participant characterization. All participants in the ASD group met Diagnostic and Statistical Manual of Mental Disorders, Fifth Edition [DSM-5; American Psychiatric Association, 2013] criteria for ASD, and met diagnostic cutoffs on the Autism Diagnostic Observation Schedule, Second Edition [ADOS-2; Lord et al., 2012] and the Autism Diagnostic Interview—Revised [Rutter, Le Couteur, & Lord, 2003b]. All TDCs failed to meet ASD thresholds on the ADOS-2 and Social Communication Questionnaire, Lifetime Form [SCQ; Rutter, Bailey, & Lord, 2003], and based on clinician judgment by DSM-5 criteria. All participants were fluent in English and had full-scale IQs of 80 or above per the Wechsler Abbreviated Scale of Intelligence, Second Edition [Wechsler, 2011], or a corresponding short form of the Wechsler Intelligence Scale for Children, Fourth Edition [WISC-IV; Wechsler, 2003]. Groups were matched on mean age, mean verbal and nonverbal cognitive ability, and gender ratio (see Table 1).

Table 1.

Participant Characterization

| | ASD M (SD) | TDC M (SD) | t or χ² | p |
|---|---|---|---|---|
| n | 20 | 16 | | |
| Gender (M:F) | 19:1 | 14:2 | 0.66 | 0.42 |
| Age (years) | 13.80 (1.38) | 14.21 (2.03) | −0.72 | 0.48 |
| Full-scale IQ | 108.50 (14.15) | 113.94 (12.68) | −1.20 | 0.24 |
| Verbal IQ | 110.05 (17.58) | 115.94 (13.62) | −1.10 | 0.28 |
| Nonverbal IQ | 105.20 (11.92) | 109.44 (14.14) | −0.98 | 0.34 |
| ADOS-2 CSS | 6.45 (1.54) | 1.00 (0.00) | 15.85 | <0.001 |
| SCQ total | 21.90 (6.94) | 1.44 (1.68) | 12.74 | <0.001 |
| SRS-2 SCI | 71.15 (9.03) | 45.63 (7.53) | 9.06 | <0.001 |
| Vineland-II Socialization | 78.11 (14.38) | 114.33 (14.82) | −7.20 | <0.001 |
| IRI total | 48.20 (18.08) | 58.50 (15.59) | −1.80 | 0.08 |

Abbreviations: ADOS-2 CSS, Autism Diagnostic Observation Schedule, Second Edition Calibrated Severity Score; SCQ, Social Communication Questionnaire; SRS-2 SCI, Social Responsiveness Scale, Second Edition Social Communication and Interaction T-score; Vineland-II Socialization, Vineland Adaptive Behavior Scales, Second Edition Socialization standard score; IRI, Interpersonal Reactivity Index.

The biological mother of each youth participated as the interaction partner in the familiar condition. Clinically significant ASD symptoms were ruled out in mothers with the Adult Self-Report Form of the Social Responsiveness Scale, Second Edition [SRS-2; Constantino & Gruber, 2012]; all fell within the normative range (Total T-score < 60).

Visit Procedures

This research was prospectively reviewed and approved by the University of Rochester Research Subjects Review Board. Written informed consent was obtained from mothers for their child’s and own participation, and all youth provided assent.

Youth participated in two 10-min conversations, first with their mother (familiar condition) and then with a female undergraduate research assistant (RA; unfamiliar condition). RAs had experience with ASD but were not given specific guidelines for this task; instead, they were simply instructed to converse naturally. Dyads were seated facing one another four feet apart and asked to collaboratively plan a 2-week vacation, a modification of a task that has been used successfully to measure interpersonal coordination in adolescents [Bernieri et al., 1994]. The task was intended to be positive and cooperative. To alleviate potential anxiety or executive functioning burden, participants were told to have fun and not to worry about logistical constraints (e.g., money). Research staff later reviewed recordings of each conversation for outward signs of significant anxiety or disengagement. All youth in both groups were judged to complete the task without evident difficulty and to adequately participate in the conversations for the full 10 min.

Two high-definition video cameras captured a portrait view of each interaction partner. Cameras were wall-mounted to ensure that they remained stable, and the room setup and lighting were consistent across all interactions. Video data were recorded and exported at a resolution of 1,080 × 920 pixels and a sampling rate of 30 frames/sec.

Mothers completed rating scales regarding their children’s day-to-day social–emotional functioning. The School-Age Form of the SRS-2 measured the degree of social atypicality associated with ASD. We focused on the Social Communication and Interaction (SCI) T-score, which maps on to diagnostic criteria in this domain, with higher scores indicating more atypicality. The Vineland Adaptive Behavior Scales, Second Edition [Vineland-II; Sparrow, Cicchetti, & Balla, 2005] Parent/Caregiver Rating Form assessed adaptive social skills. We focused on the Socialization domain standard score, as it relates most directly to our dependent measures (facial expressions). Higher scores indicate higher social ability. Finally, the Interpersonal Reactivity Index [IRI; Davis, 1983] was completed as a measure of dispositional empathy; higher scores indicate more empathy. The IRI is typically administered as a self-report measure, so we adapted the wording for mothers to report on their children. Similar adaptations have been used with youth with ASD [Demurie, De Corel, & Roeyers, 2011; Oberman et al., 2009]. One participant from each group had incomplete Vineland-II data and was dropped from those analyses. See Table 1 for descriptive statistics for social–emotional functioning measures.

Automated Facial Analysis

Recordings from the two cameras were time-synchronized to the frame with Adobe Premiere video editing software. A time-locked visual cue of turning the lights in the room off and on again was used as a reference point for synchronization. Accuracy was then verified by visualizing and confirming overlap between the audio channels for each video pair.

Time-synchronized videos were analyzed with OpenFace, an open-source toolkit for automated facial behavior analysis [Baltrusaitis et al., 2018]. One function of OpenFace is automated facial action unit (AU) recognition, based on the Facial Action Coding System [FACS; Ekman & Friesen, 1978]. FACS is a widely used method of characterizing facial expressions in terms of activity in specific anatomical locations on the face (AUs). While FACS coding is traditionally done manually by trained raters, computer vision programs based on FACS (e.g., OpenFace) have been developed and validated for a variety of clinical applications [see Cohn & De La Torre, 2015]. We used OpenFace for this study because it is fully open source, and has demonstrated state-of-the-art performance with respect to facial landmark detection, head pose estimation, and facial AU recognition [Baltrusaitis et al., 2018].

We applied OpenFace AU recognition algorithms to analyze videos frame-by-frame for the presence and intensity of movement in AU6 and AU12, which are active when a person smiles. AU6 corresponds to the orbicularis oculi, or cheek raiser muscle, and AU12 corresponds to the zygomaticus major, or lip puller, muscle. Activity in AU6 and AU12 was highly correlated in all interaction partner groups; thus, the intensity ratings (0–5 scale) for the two AUs were averaged to yield a single index of smiling at each frame [Messinger et al., 2009].
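The per-frame averaging step described above can be illustrated with a minimal sketch. It assumes OpenFace's CSV output exposes AU intensity columns named `AU06_r` and `AU12_r` (an assumption to check against the output of the OpenFace version in use); the function name and the toy rows are hypothetical and are not study data.

```python
import csv
import io

def smile_index_from_csv(handle, au6_col="AU06_r", au12_col="AU12_r"):
    """Average AU6 and AU12 intensity at each frame to get one smile index.

    Column names are assumptions about the OpenFace CSV layout.
    skipinitialspace=True tolerates headers written as ", AU06_r".
    """
    reader = csv.DictReader(handle, skipinitialspace=True)
    return [(float(row[au6_col]) + float(row[au12_col])) / 2 for row in reader]

# Toy three-frame file standing in for one partner's OpenFace output.
toy_csv = io.StringIO(
    "frame, AU06_r, AU12_r\n"
    "1, 0.0, 0.0\n"
    "2, 1.0, 2.0\n"
    "3, 2.5, 3.5\n"
)
smile = smile_index_from_csv(toy_csv)
```

Each partner's video would yield one such series, and the two series for a dyad would then be compared frame by frame.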

OpenFace data were post-processed using the Python Biological Motion Capture toolbox, developed in-house. Overall smile magnitude was calculated for each person as the area under the curve for the averaged AU6/AU12 signal. Interpersonal smile coordination was quantified through windowed cross-correlations between time series data for youth smiling and adult (i.e., mother or RA) smiling [Boker, Rotondo, Xu, & King, 2002]. In accordance with previous literature [Riehle et al., 2017], we used a window size of 7 sec, which was moved over the time series in increments of 1/30 sec (one frame). The maximum time lag was set to 2 sec, with a lag increment of 1/30 sec. This time lag allows for the capture of coordinated affect that is exactly synchronous in time, as well as coordinated affect that occurs at a brief latency between interaction partners. Resulting correlation coefficients were Fisher z-transformed [Ramseyer & Tschacher, 2011] and cleared of all negative correlations [Riehle et al., 2017]. For each window, a single peak cross-correlation value was obtained. Peak cross-correlations from each window were then averaged across the duration of each interaction to yield a mean correlation coefficient for each dyad. This procedure of calculating windowed cross-correlations and choosing the peak correlation within each window is well established, and the most widely used method for analyzing interpersonal movement coordination in the literature across disciplines [Delaherche et al., 2012]. Change in smile coordination over the course of the interaction was then estimated by calculating the linear slope of peak cross-correlations across windows. A positive slope indicates increased coordination over time.
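The windowed cross-correlation procedure described above can be sketched in plain Python as follows. This is not the in-house toolbox: the function names, the handling of series boundaries, the treatment of flat segments as zero correlation, and the clipping of r before the Fisher z-transform are all assumptions made for illustration. At 30 frames/sec, the parameters reported above would correspond to `window=210` and `max_lag=60`; the toy example uses smaller values and synthetic data.

```python
import math
import random

def pearson(a, b):
    """Pearson r for two equal-length sequences (0.0 if either is flat)."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    if var_a < 1e-12 or var_b < 1e-12:
        return 0.0  # assumption: a flat segment counts as no coordination
    return cov / math.sqrt(var_a * var_b)

def fisher_z(r, eps=1e-6):
    """Fisher z-transform; r is clipped so that |r| = 1 stays finite."""
    return math.atanh(max(-1 + eps, min(1 - eps, r)))

def peak_windowed_xcorr(youth, adult, window, max_lag, step=1):
    """Return one peak (z-transformed, non-negative) correlation per window."""
    peaks = []
    last_start = len(youth) - window - max_lag
    for start in range(max_lag, last_start + 1, step):
        seg_youth = youth[start:start + window]
        best = 0.0  # starting at 0 clears all negative correlations
        for lag in range(-max_lag, max_lag + 1):
            seg_adult = adult[start + lag:start + lag + window]
            best = max(best, fisher_z(pearson(seg_youth, seg_adult)))
        peaks.append(best)
    return peaks

def linear_slope(values):
    """Least-squares slope of values against window index 0, 1, 2, ..."""
    n = len(values)
    mean_x, mean_v = (n - 1) / 2, sum(values) / n
    num = sum((i - mean_x) * (v - mean_v) for i, v in enumerate(values))
    den = sum((i - mean_x) ** 2 for i in range(n))
    return num / den

# Toy data (not study data): the adult series echoes the youth 3 frames later.
rng = random.Random(0)
youth = [rng.random() for _ in range(120)]
adult = youth[:3] + youth[:-3]

peaks = peak_windowed_xcorr(youth, adult, window=20, max_lag=5)
coordination = sum(peaks) / len(peaks)   # mean peak cross-correlation
change_over_time = linear_slope(peaks)   # positive = coordination increasing
```

Because the toy adult series is an exact delayed copy, every window finds a near-perfect match at a 3-frame lag, so the slope is essentially zero. For full 10-min recordings a vectorized numerical library would be far faster than these pure-Python loops.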

Global head motion and speech-related mouth movement are known challenges for automated facial expression analysis; both can add noise to face digitizations and AU estimation, particularly when measuring spontaneous behavior in naturalistic environments. We accounted for these factors by quantifying head motion and speech-related mouth movements in all interaction partners, and testing for differences across groups and conditions in order to control for such differences as necessary. Per-video metrics of head motion were estimated by averaging the frame-to-frame differences in the three rotation and three translation parameters, and then averaging the results. Per-video estimates of speech-related movement were calculated by estimating the area under the curve for AU25 (lips parting). We also accounted for missing data resulting from the face moving out of view, by calculating the proportion of frames for which AUs could not be detected in each partner.
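The three data-quality metrics described above can be sketched as follows. The details are assumptions rather than the study's implementation: absolute frame-to-frame differences are used, rotation and translation parameters are weighted equally despite their different units, the area under the curve uses the trapezoidal rule, and all function names and toy values are hypothetical.

```python
def head_motion(pose_frames):
    """Mean absolute frame-to-frame change, averaged over pose parameters.

    pose_frames: one tuple per frame, e.g. (Tx, Ty, Tz, Rx, Ry, Rz).
    """
    n_params = len(pose_frames[0])
    per_param = []
    for p in range(n_params):
        diffs = [abs(curr[p] - prev[p])
                 for prev, curr in zip(pose_frames, pose_frames[1:])]
        per_param.append(sum(diffs) / len(diffs))
    return sum(per_param) / n_params

def missing_proportion(success_flags):
    """Proportion of frames in which the face (and thus AUs) was not detected."""
    return sum(1 for ok in success_flags if not ok) / len(success_flags)

def area_under_curve(signal, dt):
    """Trapezoidal area under an AU intensity series sampled every dt seconds."""
    return sum((a + b) / 2 * dt for a, b in zip(signal, signal[1:]))

# Toy values (not study data).
poses = [(0, 0, 0, 0, 0, 0), (1, 0, 0, 0, 0, 0), (1, 2, 0, 0, 0, 0)]
motion = head_motion(poses)
missing = missing_proportion([1, 1, 0, 1])
au25_auc = area_under_curve([0, 1, 1, 0], dt=0.5)
```

The same `area_under_curve` helper could be applied to the averaged AU6/AU12 signal to approximate overall smile magnitude, although the study may normalize the result differently.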

Results

Data Quality

Independent-samples t-tests or Mann–Whitney U tests (for data with non-normal distributions) were conducted to test for group differences in missing data, speech-related movement, and head motion.

There were no group differences in missing data or speech-related movement in youth or adult interaction partners in either familiarity condition (ps ranging from 0.39 to 0.81). This finding further suggests that groups were similar in terms of active participation and speech contributions within their conversations. There also were no significant group differences in head motion for mothers, or for youth within either familiarity condition (ps ranging from 0.19 to 0.72). However, RAs exhibited significantly more head motion in their interactions with TDCs than with youth with ASD, U = 74.00, p = 0.006. We, therefore, controlled for all adult head motion (i.e., in both RAs and mothers) in relevant analyses.

Group Differences in Smile Magnitude and Interpersonal Smile Coordination

Descriptive statistics for smile magnitude and interpersonal smile coordination variables are provided in Table 2. Youth smile magnitude was analyzed in a 2 × 2 mixed model analysis of variance, with between-subjects factor of group (ASD, TDC) and within-subjects factor of familiarity (mother, RA). Adult (i.e., mothers and RAs) smile magnitude, interpersonal smile coordination, and change in smile coordination over time were analyzed in three separate linear mixed-effects models, with adult head motion included as a covariate at each level of familiarity. These models were also run without covarying adult head motion, and the pattern of the results was unchanged.

Table 2.

Descriptive Statistics for Experimental Variables

| | ASD M (SD) | TDC M (SD) |
|---|---|---|
| Youth smile magnitude | | |
| Mother | 0.68 (0.36) | 0.94 (0.49) |
| Research assistant* | 0.53 (0.49) | 1.00 (0.61) |
| Adult smile magnitude | | |
| Mother | 0.74 (0.26) | 0.84 (0.42) |
| Research assistant** | 0.86 (0.40) | 1.29 (0.42) |
| Interpersonal smile coordination | | |
| Mother | 0.53 (0.07) | 0.56 (0.07) |
| Research assistant** | 0.52 (0.08) | 0.61 (0.08) |
| Change in smile coordination over time | | |
| Mother | 0.26 (0.16) | 0.32 (0.17) |
| Research assistant** | 0.24 (0.15) | 0.40 (0.17) |

Note: Asterisks denote significant group differences; *p < 0.05; **p ≤ 0.01.

Across mother and RA familiarity conditions, TDCs smiled significantly more than youth with ASD, with a large effect size, F(1,34) = 5.77, p = 0.02, ηp2 = 0.15. Neither the interaction term, F(1,34) = 2.74, p = 0.11, ηp2 = 0.08, nor the effect of familiarity, F(1,34) = 0.50, p = 0.49, ηp2 = 0.01, was significant.

Similarly, mothers and RAs smiled significantly more in their interactions with TDCs than with youth with ASD, with a large effect size, F(1,36.17) = 5.90, p = 0.02, ηp2 = 0.14. In this case, there was also a significant main effect of familiarity with a large effect size, F(1,34.50) = 10.72, p = 0.002, ηp2 = 0.24, with RAs smiling more than mothers. The interaction term did not reach significance, F(1,34.01) = 2.89, p = 0.10, ηp2 = 0.08.

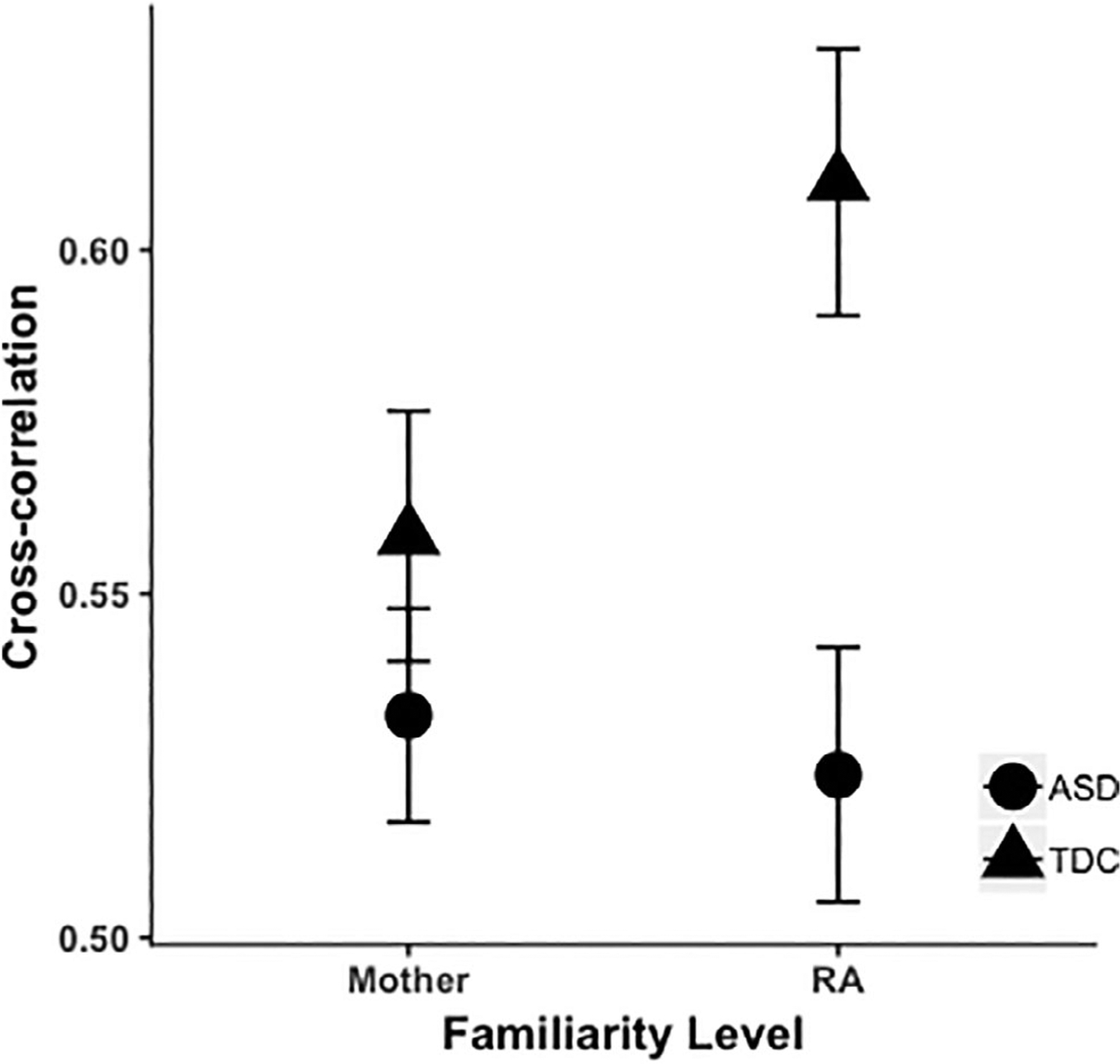

For interpersonal smile coordination (see Fig. 1), there was a main effect of group with a medium effect size, F(1,34.93) = 4.38, p = 0.04, ηp2 = 0.11. When collapsing across mother and RA familiarity conditions, interpersonal smile coordination was higher in TDCs relative to youth with ASD. Neither the interaction term, F(1,32.01) = 4.10, p = 0.05, ηp2 = 0.11, nor the effect of familiarity, F(1,32.51) = 3.71, p = 0.06, ηp2 = 0.10, reached significance.

Figure 1.

Interpersonal smile coordination by diagnostic group and familiarity level, depicted by group means (±SEM) for averaged peak windowed cross-correlations (r) between interaction partners’ smile magnitudes.

The analysis for change in smile coordination over time yielded a similar pattern. There was a significant main effect of group with a medium effect size, F(1,35.96) = 4.75, p = 0.04, ηp2 = 0.12, with more of an increase over time in smile coordination in the TDC group than the ASD group across familiarity conditions. The interaction term, F(1,33.07) = 2.06, p = 0.16, ηp2 = 0.06, and effect of familiarity, F(1,33.59) = 1.05, p = 0.31, ηp2 = 0.03, were not significant.
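As a reading aid, the partial eta squared values reported in this section can be approximately recovered from each F statistic and its degrees of freedom with the standard conversion ηp2 = (F × df_effect) / (F × df_effect + df_error). The sketch below assumes this conversion also applies to the mixed-model F tests; the function name is hypothetical, and values recomputed from rounded F statistics may differ from the reported ones in the last digit.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Convert an F statistic and its degrees of freedom to partial eta squared."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)

# Group effect on youth smile magnitude: F(1,34) = 5.77
youth_smile_group = round(partial_eta_squared(5.77, 1, 34), 2)

# Group effect on change in smile coordination: F(1,35.96) = 4.75
coordination_change_group = round(partial_eta_squared(4.75, 1, 35.96), 2)
```

Both recomputed values match the reported effect sizes (0.15 and 0.12).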

Association Between Interpersonal Smile Coordination and Social–Emotional Functioning

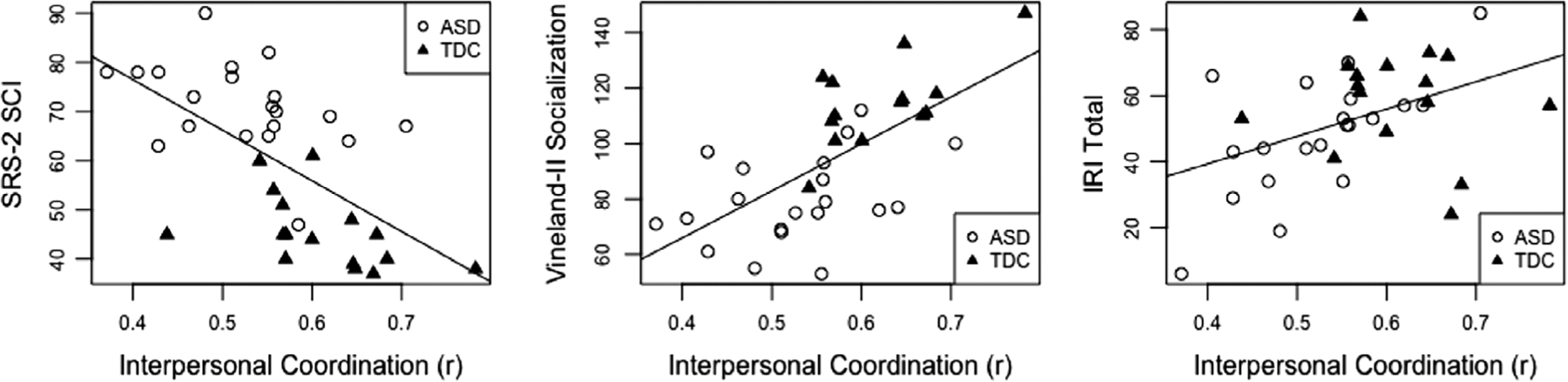

Linear regressions were conducted to evaluate relationships between interpersonal smile coordination and mothers’ ratings of social–emotional functioning. In each regression, interpersonal smile coordination and youth smile magnitude were entered as a set of independent variables. This allowed us to test the predictive significance of interpersonal smile coordination while controlling for overall youth smiling, as generally reduced affect is associated with ASD. These analyses focused on smile behavior within the youth-RA condition (as opposed to the mother condition), because behavior with an unfamiliar person was thought to be more relevant to day-to-day social ability than behavior with a highly familiar person. Regression results are both presented in Table 3 and depicted in Figure 2.
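To make the "set of two predictors" logic concrete, the sketch below computes standardized beta coefficients and adjusted R² for a two-predictor regression from pairwise correlations alone. This is the textbook closed form for two predictors, not the statistical software used in the study; the function names are hypothetical and the numbers are toy values, not study data.

```python
import math

def pearson(a, b):
    """Pearson r for two equal-length sequences."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    return cov / math.sqrt(var_a * var_b)

def two_predictor_regression(y, x1, x2):
    """Standardized betas for x1 and x2, plus adjusted R-squared."""
    r_y1, r_y2, r_12 = pearson(y, x1), pearson(y, x2), pearson(x1, x2)
    denom = 1 - r_12 ** 2
    beta1 = (r_y1 - r_y2 * r_12) / denom  # effect of x1 controlling for x2
    beta2 = (r_y2 - r_y1 * r_12) / denom  # effect of x2 controlling for x1
    r2 = beta1 * r_y1 + beta2 * r_y2
    n, k = len(y), 2
    adj_r2 = 1 - (1 - r2) * (n - 1) / (n - k - 1)
    return beta1, beta2, adj_r2

# Toy values: the outcome is an exact linear function of both predictors.
coordination = [1, 2, 3, 4, 5, 6]
smile_magnitude = [2, 1, 4, 3, 6, 8]
outcome = [3 * c - 2 * s for c, s in zip(coordination, smile_magnitude)]

beta_coord, beta_smile, adj_r2 = two_predictor_regression(
    outcome, coordination, smile_magnitude
)
```

Because each beta is adjusted for the correlation between the two predictors, a significant coefficient for coordination reflects its association with the outcome over and above overall smiling.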

Table 3.

Dimensional Relationships Between Interpersonal Smile Coordination and Social–Emotional Functioning

| | SRS-2 SCI | Vineland-II Socialization | IRI Total |
|---|---|---|---|
| Interpersonal smile coordination | −0.40* | 0.64*** | 0.48* |
| Youth smile magnitude | −0.35* | 0.04 | −0.09 |
| Model F | 13.51*** | 12.06*** | 3.82* |
| Adjusted R² | 0.42 | 0.40 | 0.14 |

Note. In three separate linear regression analyses, SRS-2 SCI, Vineland-II Socialization, and IRI Total scores were predicted by youth-RA interpersonal smile coordination and youth smile magnitude as a set of predictors. Effects are presented as standardized beta coefficients for ease of comparison across measures. SRS-2 SCI, Social Responsiveness Scale, Second Edition Social Communication and Interaction T-score; Vineland-II Socialization, Vineland Adaptive Behavior Scales, Second Edition Socialization standard score; IRI, Interpersonal Reactivity Index.

*p < 0.05; ***p ≤ 0.001.

Figure 2.

Scatterplots with regression lines relating interpersonal smile coordination (unfamiliar condition) to scores on parent rating scales of social–emotional functioning. SRS-2 SCI, Social Responsiveness Scale, Second Edition Social Communication and Interaction T-Score (higher scores = more social atypicality); Vineland-II Socialization, Vineland Adaptive Behavior Scales, Second Edition Socialization standard score (higher scores = better adaptive social skills); IRI Total, Interpersonal Reactivity Index (higher scores = more empathy).

First, SRS-2 SCI T-scores were predicted by the set of two independent variables—interpersonal smile coordination and youth smile magnitude. The model including both predictors accounted for a significant portion of the variance in SCI scores, R2adj = 0.42, p < 0.001. Interpersonal smile coordination, β = −0.40, p = 0.02, and youth smile magnitude, β = −0.35, p = 0.04, were both significant independent predictors of SCI scores. This suggests that youth’s smiling and their coordination of smiles with social partners are related to broader autism social symptomatology represented across a dimension spanning typical to atypical.

Next, the overall model predicting Vineland-II Socialization domain standard scores from the set of predictors (interpersonal smile coordination and youth smile magnitude) accounted for a significant portion of the variance in Socialization scores, R2adj = 0.40, p < 0.001. Only interpersonal smile coordination was a significant independent predictor of Socialization scores when controlling for youth smile magnitude, β = 0.64, p = 0.001, suggesting that interpersonal smile coordination is strongly related to dimensional day-to-day adaptive social functioning.

Last, the model predicting IRI Total Scores accounted for a significant portion of IRI Total Score variance, R2adj = 0.14, p = 0.03. Again, interpersonal smile coordination, but not youth smile magnitude, was independently predictive of IRI Total Scores, β = 0.48, p = 0.02, suggesting a relationship between smile coordination and a dimensional measure of empathy.

Given that regression results could be driven by group differences in the predictor and outcome variables, we repeated the above analyses to test whether the relationships held when controlling for diagnostic group. The pattern of results remained the same for Vineland-II Socialization and IRI Total scores, with interpersonal smile coordination, but not youth smile magnitude, significantly predicting adaptive social skills, β = 0.31, p = 0.048, and empathy, β = 0.43, p = 0.047. For SRS-2 SCI T-scores, only youth smile magnitude, β = −0.23, p = 0.03, and not interpersonal smile coordination, β = −0.14, p = 0.19, remained a significant independent predictor when controlling for group.

Discussion

The primary goal of this study was to use computer vision-based facial analysis to examine whether youth with ASD are less likely than typically developing peers to demonstrate interpersonal facial affect coordination with social partners during natural conversations. Results indicate that, overall, both youth and their adult conversation partners smiled less during conversations when the youth had ASD. Youth with ASD also generally showed less smile coordination with conversation partners and less growth in coordination over the time course of their conversations. This suggests that while typically developing youth became increasingly emotionally attuned with their social partners over the course of an interaction, this was not the case for youth with ASD. Given that the hypothesized function of interpersonal coordination is to foster social affiliation and meaningful relationships [Lakin, Jefferis, Cheng, & Chartrand, 2003], differences in achieving coordinated exchanges could have deleterious effects on interaction and relationship quality for youth with ASD. In addition, a lack of contingent emotional responses from a child with ASD may in turn affect the responses they receive from social partners, which could ultimately result in fewer opportunities for children with ASD to process emotional cues and links between their and others’ emotional states.

While group by familiarity interaction terms did not reach statistical significance with our sample size, group differences for all experimental variables appeared more pronounced during interactions in which partners were unfamiliar, relative to mother–youth interactions. This suggests that highly familiar social contexts may be protective for youth with ASD, fostering more effective social communication. This pattern may also reflect increased social motivation or effort on the part of typically developing youth when interacting with an unfamiliar adult. In other words, they may have been more motivated to match the RA’s positive affect in an effort to be viewed more favorably or to make the interaction more mutually comfortable, whereas this type of accommodation would not be necessary in interactions with their mothers. Youth with ASD may be less likely to make such accommodations when meeting someone new, which may then also impact the behavior of the new acquaintance.

We also sought to examine relationships between interpersonal affect coordination and broad, cross-diagnostic domains of social–emotional functioning affected in ASD. When considered dimensionally across youth with and without ASD, interpersonal smile coordination was significantly associated with established parent rating scales of autism-related social atypicality, adaptive social skills, and empathy. These associations held even when controlling for the amount that youth smiled, suggesting that these effects are not simply driven by a general reduction in facial expressiveness in youth with ASD.

It is important to note that the results of these dimensional regression analyses, conducted across both diagnostic groups, may be driven by group differences in both interpersonal smile coordination and scores on rating scales. Still, an inspection of the scatterplots (Fig. 2) suggests that the relationships between interpersonal smile coordination and each domain of social–emotional functioning exist on a linear continuum across youth with and without ASD. In addition, follow-up analyses controlling for group showed the same pattern of relationships between interpersonal smile coordination and adaptive social functioning and empathy. The relationship between interpersonal smile coordination and autism social symptomatology (SRS-2 SCI T-scores) became nonsignificant when controlling for both youth smiling and group, suggesting that this particular relationship should be interpreted more cautiously. We were not adequately powered to investigate these relationships separately by group; therefore, it cannot be concluded that facial affect coordination predicts variability within the autism spectrum. To fully understand associations between individual differences in interpersonal affect coordination and individual differences in other domains, particularly within diagnostic groups, more research with larger samples is needed.

Findings suggest that interpersonal affect coordination is an important social process with implications for both typical and atypical social–emotional functioning. This is consistent with a large literature linking facial affect coordination with emotion processing and empathy [Adolphs, Damasio, Tranel, Cooper, & Damasio, 2000; Hatfield et al., 1994; Neal & Chartrand, 2011; Niedenthal, Brauer, Halberstadt, & Innes-Ker, 2001; Oberman, Winkielman, & Ramachandran, 2007; Sonnby-Borgström, Jönsson, & Svensson, 2003; Stel & van Knippenberg, 2008; Stel & Vonk, 2010]. Some have theorized that facial coordination comes online automatically to augment processing of subtle emotional cues [Niedenthal, 2007], and may be the result of a direct link between perception and action systems [see Kinsbourne & Helt, 2011; Moody & McIntosh, 2006; Winkielman, McIntosh, & Oberman, 2009 for discussions related to ASD]. As a result, humans are able to rapidly process information gleaned from their social environment, and to automatically generate appropriate corresponding behaviors. In this way, social processes can become embodied across interaction partners, such that coordinated actions (e.g., facial expressions) are reflective of coordinated mental states.

Results also have important implications for how social interaction differences are best conceptualized and studied in ASD. Dynamic measures, taking both interaction partners’ behaviors into account, were more strongly associated with broader social–emotional functioning than measures from youth with autism alone. Furthermore, both youth and adult interaction partners demonstrated less positive affect in interactions where the youth had ASD. These findings build on previous work advocating that a complete conceptualization of social difficulties in ASD must account for factors that both social partners bring to the interaction. For example, Sasson et al. [2017] found that unfamiliar social partners view individuals with ASD less favorably, based particularly on their nonverbal cues. They are consequently less inclined to engage individuals with ASD socially, which may contribute to poorer interaction quality for people with ASD. Moreover, typically developing adults preferred to interact with typically developing (vs. autistic) partners, whereas individuals with autism showed a preference for autistic partners, further suggesting that social differences in ASD are at least partly relational and thus should be studied in interactive contexts [Morrison et al., 2020]. Smiling behavior and interpersonal affect coordination should be further explored across dyads with various diagnostic compositions.
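
As an illustration of what a dyad-level, dynamic measure can look like, the sketch below computes a windowed cross-correlation between two partners' smile-intensity time series, in the spirit of Boker et al. [2002]. It is a simplified example under stated assumptions, not the pipeline used in this study: the function name, window length, step size, and lag range are all hypothetical, and the input signals are synthetic.

```python
import numpy as np

def windowed_peak_correlation(a, b, window, step, max_lag):
    """For each window of `a`, return the largest Pearson r against `b`
    shifted by any lag in [-max_lag, max_lag] frames."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    peaks = []
    # Start at max_lag and stop early enough that every lagged window fits.
    for start in range(max_lag, len(a) - window - max_lag + 1, step):
        seg_a = a[start:start + window]
        rs = []
        for lag in range(-max_lag, max_lag + 1):
            seg_b = b[start + lag:start + lag + window]
            if seg_a.std() == 0 or seg_b.std() == 0:
                continue  # correlation is undefined for a flat segment
            rs.append(np.corrcoef(seg_a, seg_b)[0, 1])
        peaks.append(max(rs) if rs else np.nan)
    return np.array(peaks)

# Synthetic example: the "youth" signal repeats the "adult" signal 5 frames later.
t = np.arange(300)
adult = np.sin(2 * np.pi * t / 50)
youth = np.roll(adult, 5)
peaks = windowed_peak_correlation(adult, youth, window=60, step=30, max_lag=10)
print(peaks.mean())  # average peak coordination across windows
```

Averaging the per-window peaks yields a single coordination score per dyad. Because the measure depends on both partners' time series, it cannot be reduced to either individual's overall level of smiling.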

There are several limitations to this study that should be considered when interpreting results. First, this is a preliminary study with a small sample size, which limits generalization to the ASD population as a whole. Moreover, this sample had average to above-average intellectual and language ability, and is therefore not representative of the full autism spectrum. Second, computer vision facial analysis in conversational settings is subject to some methodological noise from head movement and speech. While we attempted to account for these variables in our analyses, this area of research will benefit greatly from the development of computational behavioral analysis platforms that are less sensitive to head pose variability and speech-related movement. Third, computer vision programs like OpenFace do not provide direct information on the quality of facial movements, but merely their presence and intensity. Previous studies suggest that facial expressions in people with ASD tend to be more odd or awkward relative to controls [Trevisan et al., 2018], which could also influence interpersonal affect coordination. Future studies should consider the interplay between various facial AUs, which may better capture the quality of facial expressions.

This study used a cooperative interaction task meant to elicit positive affect. Using similar methods to analyze more neutral or negatively valenced conversational contexts would offer additional insight into potential differences in emotional expression and reciprocity in ASD. Finally, we did not attempt to distinguish between voluntary and nonconscious smiling behavior, which may have different underlying mechanisms [Rinn, 1984]. In particular, previous studies suggest that spontaneous facial mimicry is specifically atypical in ASD, in the context of intact voluntary imitation of facial expressions, as well as intact unconscious mimicry when explicitly instructed to attend to facial features [Magnée et al., 2007; McIntosh et al., 2006; Oberman et al., 2009; Press, Richardson, & Bird, 2010; Southgate & Hamilton, 2008]. To better understand the mechanisms underlying reduced interpersonal affect coordination in ASD, an important future direction is to examine how the degree of visual attention to the face of the interaction partner influences smiling behavior in both partners and coordination between partners.

In conclusion, this study introduces a promising novel method for quantifying moment-to-moment facial expression and emotional reciprocity during natural interactions. Automated computational analysis methods have the potential to significantly improve the measurement of these core diagnostic behaviors in ASD, in that they are fine-grained, objective, low-resource, and deployable to a variety of natural social contexts. Methods such as these are particularly important for increasing our ability to more precisely assess individual differences, developmental trajectories, and treatment outcomes.

Acknowledgments

We are grateful to the families that participated in this research. This research was supported by grants from the McMorris Family Foundation and the National Institute on Deafness and Other Communication Disorders (R01 DC009439). We would also like to thank Laura Soskey Cubit, Jessica Keith, Kim Schauder, Kelsey Csumitta, Paul Allen, and our undergraduate research assistants for their invaluable contributions.

References

- Adolphs R, Damasio H, Tranel D, Cooper G, & Damasio AR (2000). A role for somatosensory cortices in the visual recognition of emotion as revealed by three-dimensional lesion mapping. The Journal of Neuroscience, 20(7), 2683–2690.

- American Psychiatric Association. (2013). Diagnostic and statistical manual of mental disorders (5th ed.). Arlington, VA: American Psychiatric Association.

- Baltrusaitis T, Zadeh A, Lim YC, & Morency L (2018). OpenFace 2.0: Facial behavior analysis toolkit. Proceedings of 2018 13th IEEE International Conference on Automatic Face Gesture Recognition, 15–19 May 2018. Xi’an, China, pp. 59–66.

- Beall PM, Moody EJ, McIntosh DN, Hepburn SL, & Reed CL (2008). Rapid facial reactions to emotional facial expressions in typically developing children and children with autism spectrum disorder. Journal of Experimental Child Psychology, 101(3), 206–223.

- Bernieri FJ, Davis JM, Rosenthal R, & Knee CR (1994). Interactional synchrony and rapport: Measuring synchrony in displays devoid of sound and facial affect. Personality and Social Psychology Bulletin, 20(3), 303–311.

- Bernieri FJ, & Rosenthal R (1991). Interpersonal coordination: Behavior matching and interactional synchrony. In Feldman RS & Rime B (Eds.), Fundamentals of nonverbal behavior (pp. 401–432). New York, NY: Cambridge University Press.

- Boker SM, Rotondo JL, Xu M, & King K (2002). Windowed cross-correlation and peak picking for the analysis of variability in the association between behavioral time series. Psychological Methods, 7(3), 338–355.

- Cappella JN, & Planalp S (1981). Talk and silence sequences in informal conversations III: Interspeaker influence. Human Communication Research, 7(2), 117–132.

- Chartrand TL, & Bargh JA (1999). The chameleon effect: The perception–behavior link and social interaction. Journal of Personality and Social Psychology, 76(6), 893–910.

- Cohn J, & Kanade T (2007). Automated facial image analysis for measurement of emotion expression. In Coan JA & Allen JJB (Eds.), The handbook of emotion elicitation and assessment (pp. 222–238). New York, NY: Oxford University Press.

- Cohn JF, & De La Torre F (2015). Automated face analysis for affective computing. In Calvo R, D’Mello S, Gratch J, & Kappas A (Eds.), The Oxford handbook of affective computing (pp. 131–150). New York, NY: Oxford University Press.

- Constantino JN, & Gruber CP (2012). Social responsiveness scale (2nd ed.). Torrance, CA: Western Psychological Services.

- Davis MH (1983). Measuring individual differences in empathy: Evidence for a multidimensional approach. Journal of Personality and Social Psychology, 44(1), 113–126.

- Delaherche E, Chetouani M, Mahdhaoui A, Saint-Georges C, Viaux S, & Cohen D (2012). Interpersonal synchrony: A survey of evaluation methods across disciplines. IEEE Transactions on Affective Computing, 3(3), 349–365.

- Demurie E, De Corel M, & Roeyers H (2011). Empathic accuracy in adolescents with autism spectrum disorders and adolescents with attention-deficit/hyperactivity disorder. Research in Autism Spectrum Disorders, 5(1), 126–134.

- Dimberg U (1982). Facial reactions to facial expressions. Psychophysiology, 19(6), 643–647.

- Dimberg U (1988). Facial electromyography and the experience of emotion. Journal of Psychophysiology, 2(4), 277–282.

- Dimberg U, Thunberg M, & Elmehed K (2000). Unconscious facial reactions to emotional facial expressions. Psychological Science, 11(1), 86–89.

- Ekman P, & Friesen WV (1978). Facial action coding system. Palo Alto, CA: Consulting Psychologists Press.

- Garrod S, & Pickering MJ (2004). Why is conversation so easy? Trends in Cognitive Sciences, 8(1), 8–11.

- Hatfield E, Cacioppo JT, & Rapson RL (1994). Emotional contagion. Paris, France: Cambridge University Press.

- Heerey EA, & Crossley HM (2013). Predictive and reactive mechanisms in smile reciprocity. Psychological Science, 24(8), 1446–1455.

- Hess U, & Bourgeois P (2010). You smile—I smile: Emotion expression in social interaction. Biological Psychology, 84(3), 514–520.

- Hess U, & Fischer A (2013). Emotional mimicry as social regulation. Personality and Social Psychology Review, 17(2), 142–157.

- Hove MJ, & Risen JL (2009). It’s all in the timing: Interpersonal synchrony increases affiliation. Social Cognition, 27(6), 949–960.

- Kinsbourne M, & Helt M (2011). Entrainment, mimicry, and interpersonal synchrony. In Fein DA (Ed.), The neuropsychology of autism (pp. 339–365). New York, NY: Oxford University Press.

- LaFrance M, & Broadbent M (1976). Group rapport: Posture sharing as a nonverbal indicator. Group & Organization Studies, 1(3), 328–333.

- Lakin JL, Jefferis VE, Cheng CM, & Chartrand TL (2003). The chameleon effect as social glue: Evidence for the evolutionary significance of nonconscious mimicry. Journal of Nonverbal Behavior, 27(3), 145–162.

- Lord C, Rutter ML, DiLavore PS, Risi S, Gotham K, & Bishop SL (2012). Autism diagnostic observation schedule (2nd ed.). Los Angeles: Western Psychological Services.

- Magnée MJCM, de Gelder B, van Engeland H, & Kemner C (2007). Facial electromyographic responses to emotional information from faces and voices in individuals with pervasive developmental disorder. Journal of Child Psychology and Psychiatry, 48(11), 1122–1130.

- McIntosh DN, Reichmann-Decker A, Winkielman P, & Wilbarger JL (2006). When the social mirror breaks: Deficits in automatic, but not voluntary, mimicry of emotional facial expressions in autism. Developmental Science, 9(3), 295–302.

- Messinger DS, Mahoor MH, Chow S-M, & Cohn JF (2009). Automated measurement of facial expression in infant-mother interaction: A pilot study. Infancy, 14(3), 285–305.

- Moody EJ, & McIntosh DN (2006). Mimicry and autism: Bases and consequences of rapid, automatic matching behavior. In Imitation and the social mind: Autism and typical development (pp. 71–95). New York, NY: The Guilford Press.

- Morrison KE, DeBrabander KM, Jones DR, Faso DJ, Ackerman RA, & Sasson NJ (2020). Outcomes of real-world social interaction for autistic adults paired with autistic compared to typically developing partners. Autism, 24(5), 1067–1080.

- Neal DT, & Chartrand TL (2011). Embodied emotion perception: Amplifying and dampening facial feedback modulates emotion perception accuracy. Social Psychological and Personality Science, 2(6), 673–678.

- Niedenthal PM (2007). Embodying emotion. Science, 316(5827), 1002–1005.

- Niedenthal PM, Brauer M, Halberstadt JB, & Innes-Ker ÅH (2001). When did her smile drop? Facial mimicry and the influences of emotional state on the detection of change in emotional expression. Cognition & Emotion, 15(6), 853–864.

- Oberman LM, Winkielman P, & Ramachandran VS (2007). Face to face: Blocking facial mimicry can selectively impair recognition of emotional expressions. Social Neuroscience, 2(3–4), 167–178.

- Oberman LM, Winkielman P, & Ramachandran VS (2009). Slow echo: Facial EMG evidence for the delay of spontaneous, but not voluntary, emotional mimicry in children with autism spectrum disorders. Developmental Science, 12(4), 510–520.

- Paxton A, & Dale R (2013). Frame-differencing methods for measuring bodily synchrony in conversation. Behavior Research Methods, 45(2), 329–343.

- Press C, Richardson D, & Bird G (2010). Intact imitation of emotional facial actions in autism spectrum conditions. Neuropsychologia, 48(11), 3291–3297.

- Ramseyer F, & Tschacher W (2011). Nonverbal synchrony in psychotherapy: Coordinated body movement reflects relationship quality and outcome. Journal of Consulting and Clinical Psychology, 79(3), 284–295.

- Riehle M, Kempkensteffen J, & Lincoln TM (2017). Quantifying facial expression synchrony in face-to-face dyadic interactions: Temporal dynamics of simultaneously recorded facial EMG signals. Journal of Nonverbal Behavior, 41(2), 85–102.

- Rinn WE (1984). The neuropsychology of facial expression: A review of the neurological and psychological mechanisms for producing facial expressions. Psychological Bulletin, 95(1), 52–77.

- Rutter ML, Bailey A, & Lord C (2003). Social communication questionnaire. Los Angeles, CA: Western Psychological Services.

- Rutter ML, Le Couteur A, & Lord C (2003). Autism diagnostic interview—Revised. Los Angeles, CA: Western Psychological Services.

- Sasson NJ, Faso DJ, Nugent J, Lovell S, Kennedy DP, & Grossman RB (2017). Neurotypical peers are less willing to interact with those with autism based on thin slice judgments. Scientific Reports, 7, 1–10.

- Seibt B, Mühlberger A, Likowski KU, & Weyers P (2015). Facial mimicry in its social setting. Frontiers in Psychology, 6, 1122.

- Sonnby-Borgström M, Jönsson P, & Svensson O (2003). Emotional empathy as related to mimicry reactions at different levels of information processing. Journal of Nonverbal Behavior, 27(1), 3–23.

- Southgate V, & Hamilton AFdC (2008). Unbroken mirrors: Challenging a theory of autism. Trends in Cognitive Sciences, 12(6), 225–229.

- Sparrow SS, Cicchetti DV, & Balla DA (2005). Vineland adaptive behavior scales, Second Edition (Vineland-II). Livonia, MN: Pearson.

- Stel M, van den Heuvel C, & Smeets RC (2008). Facial feedback mechanisms in autistic spectrum disorders. Journal of Autism and Developmental Disorders, 38(7), 1250–1258.

- Stel M, & van Knippenberg A (2008). The role of facial mimicry in the recognition of affect. Psychological Science, 19(10), 984–985.

- Stel M, & Vonk R (2010). Mimicry in social interaction: Benefits for mimickers, mimickees, and their interaction. British Journal of Psychology, 101(2), 311–323.

- Trevisan DA, Hoskyn M, & Birmingham E (2018). Facial expression production in autism: A meta-analysis. Autism Research, 11(12), 1586–1601.

- Wechsler D (2003). Wechsler intelligence scale for children (4th ed.). San Antonio, TX: Pearson.

- Wechsler D (2011). Wechsler Abbreviated Scale of Intelligence (2nd ed.). San Antonio, TX: Pearson.

- Wiltermuth SS, & Heath C (2009). Synchrony and cooperation. Psychological Science, 20(1), 1–5.

- Winkielman P, McIntosh DN, & Oberman L (2009). Embodied and disembodied emotion processing: Learning from and about typical and autistic individuals. Emotion Review, 1(2), 178–190.