Highlights

- We studied facial identity and expression processing in adults with and without autism.
- No group differences were observed on standard behavioural face processing tasks.
- No group differences were observed in average activity in face-related brain areas.
- No group differences were observed in neural representations or repetition suppression.
- Minor group differences were observed in functional connectivity involving the amygdala.

Keywords: fMRI, Autism, Face processing, Multivoxel pattern analysis, Adaptation, Functional connectivity

Abstract

The ability to recognize faces and facial expressions is a common human talent. It has, however, been suggested to be impaired in individuals with autism spectrum disorder (ASD). The goal of this study was to compare the processing of facial identity and emotion between individuals with ASD and neurotypicals (NTs).

Behavioural and functional magnetic resonance imaging (fMRI) data from 46 young adults (aged 17–23 years; 22 with ASD, 24 NTs) were analysed. During fMRI data acquisition, participants discriminated between short clips of a face transitioning from a neutral to an emotional expression. Stimuli included four identities and six emotions. We performed behavioural, univariate, multi-voxel, adaptation and functional connectivity analyses to investigate potential group differences.

The ASD-group did not differ from the NT-group on behavioural identity and expression processing tasks. At the neural level, we found no differences in average neural activation, neural activation patterns, or neural adaptation to faces in face-related brain regions. In terms of functional connectivity, the amygdala appeared to be more strongly connected to inferior occipital cortex and V1 in individuals with ASD. Overall, the findings indicate that neural representations of facial identity and expression are of similar quality in individuals with and without ASD, but that some of the regions containing these representations are connected differently within the extended face processing network.

1. Introduction

Face processing is a crucial and extensively used human skill. Most people are experts in face recognition and can distinguish extraordinarily well between different facial identities and expressions (Tanaka, 2001, Tanaka and Gauthier, 1997). Individuals with autism spectrum disorder (ASD), however, are characterized by atypical verbal and non-verbal social communication, in which face processing plays an important role. Individuals with ASD may exhibit difficulties in both facial identity processing (i.e. struggling to recognize someone based on facial features) and facial emotion processing (i.e. struggling to recognize and interpret facial expressions) (Barton et al., 2004). These difficulties have been proposed to belong to the core deficits of ASD (Dawson et al., 2005, Schultz, 2005, Tanaka and Sung, 2016), and even to underlie the characteristic social difficulties of individuals with ASD (Dawson et al., 2005, Nomi and Uddin, 2015, Schultz, 2005). However, when tested experimentally, the empirical evidence for atypical facial identity processing in ASD is mixed. Reviews conclude that individuals with ASD generally, but not consistently, differ from healthy controls in identity processing at a quantitative level (i.e. how well facial identity is discriminated). However, they disagree on whether there are also qualitative differences between the groups (i.e. the use of different strategies for identity recognition) (Tang et al., 2015, Weigelt et al., 2012). Weigelt and colleagues (2012) conclude that individuals with ASD have a specific difficulty remembering faces, based on the finding that group differences are more likely to emerge in studies that present facial stimuli consecutively rather than simultaneously. Likewise, the empirical evidence regarding difficulties in facial expression processing is mixed, in particular for basic emotions. Some studies suggest general problems with all emotions, others find specific difficulties (e.g. for one particular emotion, only for negative emotions, or only for especially complex emotions), and still others find no differences at all (Harms et al., 2010, Uljarevic and Hamilton, 2013).

1.1. Neural basis of face perception

The literature on the neural basis of face perception is extensive. An influential neural model was proposed by Haxby and colleagues (Haxby et al., 2000a). In this model, invariant facial features play an important role in identity recognition, while changeable facial features aid social communication (e.g. understanding what someone is feeling based on their facial expression). The authors propose a hierarchical model of face perception comprising a core system and an extended system. In the core system, the early perception of faces is carried out by inferior occipital regions (e.g. the occipital face area, OFA), which in turn provide input to the fusiform gyrus and superior temporal cortex. Processing of invariant facial features (~identity) is associated with stronger activity in inferior temporal (fusiform) and inferior occipital regions (Kanwisher and Yovel, 2006), whereas changeable aspects of the face (~expression) are processed by superior temporal cortex (Hoffman and Haxby, 2000, Puce et al., 1998, Wicker et al., 1998). More recent work has suggested a higher degree of overlap between identity and emotion processing: superior temporal cortex has been shown to respond to identity (e.g. Dobs et al., 2018, Fox et al., 2011), and fusiform gyrus is activated while processing expressions (e.g. LaBar et al., 2003, Schultz and Pilz, 2009). Some studies even suggest facilitatory interactions between identity and emotion processing (e.g. Andrews and Ewbank, 2004, Calder and Young, 2005, Winston et al., 2004, Yankouskaya et al., 2017).

The three-region core network is complemented by an extended network that supports the further processing and interpretation of visual facial information (Haxby et al., 2002). For instance, anterior temporal cortex has been implicated in biographical knowledge as part of identity processing (Gorno-Tempini et al., 1998, Leveroni et al., 2000). Furthermore, amygdala and inferior frontal cortex play a crucial role in the processing of emotion (Adolphs and Tranel, 1999, Brothers, 1990, Haxby et al., 2000b, Sprengelmeyer et al., 1998).

Importantly, Haxby and colleagues emphasize that effective face processing can only be accomplished by the coordinated interaction and collaboration between several brain regions. The idea of multiple brain regions jointly collaborating in a face processing network has also been supported by other authors (e.g. Ishai, 2008, Tovée, 1998, Zhen et al., 2013). In addition, Nomi and Uddin (2015) highlight the importance of studying distributed neural networks to understand atypical face perception in ASD.

1.2. Neural face processing in ASD across methods

In this paper, we investigated how the neural processing of faces differs between individuals with and without ASD, using several complementary methods of analysis. Group differences in face processing between individuals with ASD and neurotypicals (NTs) have been investigated with each of these approaches in previous neuroimaging studies.

First, a myriad of studies have compared the neural activity level of individuals with and without ASD. Reviews show that inferior occipital gyrus, fusiform gyrus, superior temporal sulcus, amygdala, and inferior frontal gyrus have been found to be less active in individuals with ASD when looking at faces (Di Martino et al., 2009, Dichter, 2012, Nomi and Uddin, 2015, Philip et al., 2012). Studies specific to identity recognition have shown hypo-active inferior frontal and posterior temporal cortices in individuals with ASD (Koshino et al., 2008). Regarding expressive faces, reviews and meta-analyses show that fusiform gyrus, superior temporal sulcus and amygdala are less active in individuals with ASD (Aoki et al., 2015, Di Martino et al., 2009, Dichter, 2012, Nomi and Uddin, 2015, Philip et al., 2012).

Second, the literature on the neural representations underlying facial identity and facial expression processing in ASD is sparse. In the last decade, there has been an exponential growth in studies investigating the properties of neural representations with multivariate methods. These methods are known under names such as multi-voxel pattern analysis (MVPA), brain decoding, and representational similarity analysis (RSA) (Haynes and Rees, 2006, Kriegeskorte et al., 2008, Norman et al., 2006). It has been suggested that this approach offers a more sensitive measure than univariate analyses for pinpointing how information is represented in the brain (Haxby et al., 2001, Koster-Hale et al., 2013). Multivariate methods have indeed been applied successfully to assess properties of face representations in neurotypicals (Goesaert and Op de Beeck, 2013, Kriegeskorte et al., 2007, Nemrodov et al., 2019, Nestor et al., 2011), and to compare and distinguish representations between ASD and NT-groups in other domains (Gilbert et al., 2009, Koster-Hale et al., 2013, Lee Masson et al., 2019, Pegado et al., 2020). Strikingly, however, multivariate approaches have rarely been used to study face processing in the ASD population. One study performed MVPA to study facial emotion processing using dynamic facial stimuli, finding no differences between individuals with and without ASD (Kliemann et al., 2018).

Third, the literature on adaptation to faces in ASD is again sparse. One study found that individuals with ASD show reduced neural adaptation to neutral faces in the fusiform gyrus (Ewbank et al., 2017). In addition, reduced neural habituation in the amygdala to neutral faces (Kleinhans et al., 2009, Swartz et al., 2013) and sad faces (Swartz et al., 2013) has been observed in individuals with ASD. This suggests that repeated face presentations are processed differently in individuals with ASD.

Finally, reported patterns of atypical functional connectivity in individuals with ASD are inconsistent (Kleinhans et al., 2008, Koshino et al., 2008, Murphy et al., 2012, Wicker et al., 2008). Indeed, reviews report both hypo- and hyperconnectivity, involving core as well as extended face processing regions, during the perception of both neutral and expressive faces (Dichter, 2012, Nomi and Uddin, 2015).

1.3. Current study

A myriad of studies have investigated face processing in autism, but their methodological approaches have been diverse and fragmented: for instance, studies have examined only identity processing or only emotion processing, only behavioural or only neural processing, or have relied on a single, particular analysis approach. This fragmented approach has yielded inconsistent and scattered findings. With the present study, we aimed to offer an integrative picture of face processing abilities in ASD. For this purpose, we complemented a comprehensive battery of behavioural face processing tasks with one of the most comprehensive series of fMRI analyses to date, involving univariate, multivariate, adaptation, and functional connectivity analyses.

In this study, young adults with ASD and age- and IQ-matched neurotypicals performed behavioural face processing tasks outside the scanner. In addition, they performed a one-back task with dynamic facial stimuli while fMRI data were acquired. The stimuli, presented in a variable block design, displayed a face changing from a neutral to an emotional expression. The fMRI analyses included (1) a univariate analysis to study brain activity levels along the face processing network, (2) multi-voxel pattern analysis (MVPA) to assess the quality of neural representations of identity and expression, (3) release from adaptation to the repetition of facial identity and/or expression, and (4) functional connectivity among the core and extended face processing network.

Based on the large body of literature, especially given the use of dynamic facial stimuli, we expected to find differences between the groups. Behaviourally, we expected to observe poorer face processing performance, especially in tasks involving a memory component and impeding the use of perceptual matching strategies. At a univariate level, we expected to find differences between the groups. Due to the large inconsistencies in the literature, we made no a priori predictions about the regions of interest in which we would observe these differences. At a multivariate level, due to the sparseness of previous studies in ASD, we made no strong a priori predictions. Yet, based on the model of Haxby and colleagues (2000a), we expected that facial identities would be more robustly decoded from neural responses in inferior occipital cortex, fusiform gyrus and anterior temporal cortex. On the other hand, we expected facial expressions to be more reliably decoded from neural responses in superior temporal cortex, amygdala and inferior frontal cortex. Regarding the release from adaptation, we again had no strong a priori predictions due to the sparseness of studies in ASD. Since inferior occipital cortex and amygdala were implicated in previous studies, we expected potential group differences to show up in those regions. Here, again, we expected different anatomical regions to be differently involved in facial identity versus expression processing. Finally, regarding functional connectivity among the face network, we expected to find atypical functional connectivity patterns in the ASD-group, most likely involving hypoconnectivity in the ASD-group. However, we made no strong predictions due to the largely inconsistent findings in the literature.

2. Methods

2.1. Participants

Fifty-two young adults participated in this study (all male, aged 17–23 years), including 27 men with a formal ASD diagnosis and 25 age-, gender-, and IQ-matched neurotypical (NT) participants. Participants, and participants’ parents when the participant was under 18, gave informed consent prior to scanning. The study was approved by the Medical Ethics committee of the University Hospital Leuven (UZ Leuven).

Participants with ASD were diagnosed by a multidisciplinary team following DSM-IV or DSM-5 criteria. They were diagnosed and recruited through the Autism Expertise Centre and the Psychiatric Clinic at the University Hospital Leuven. None of the participants with ASD had comorbid neurological, psychiatric or genetic conditions. Healthy adults were recruited as NT participants through online advertising. Neither the NT participants nor their first-degree relatives had a history of neurological, psychiatric, or medical conditions known to affect brain structure or function. None of the participants took psychotropic medication. All participants had normal or corrected-to-normal vision and an IQ above 80. Participants generally showed above-average intelligence, so the ASD-group consisted mainly of high-functioning individuals with ASD. In addition, participants with ASD showed high levels of social adaptive functioning (e.g., most attend high school or higher education, or have a regular job).

Due to a range of problems during acquisition (technical or instructional difficulties; too much motion, see later sections), six participants were excluded from all analyses. Five of the excluded participants were part of the ASD-group. Hence, analyses were ultimately run on 46 (22 ASD and 24 NT) participants.

2.2. IQ, screening questionnaires and matching

Intelligence quotient (IQ) was measured using the following subtests of the Wechsler Adult Intelligence Scale (WAIS-IV-NL): block design, similarities, digit span, vocabulary, symbol search, and picture completion, allowing the computation of verbal, performance, and full-scale IQ (Table 1). In addition, a Dutch version of the Social Responsiveness Scale for adults (SRS-A: Constantino and Todd, 2005; Dutch version: Noens et al., 2012) was completed both by participants and at least one significant other (mother, father, sister, or partner). The SRS is a tool to assess autistic traits in adults. Reported scores are t-scores, ranging from 20 (very high level of social responsiveness) to 80 (severe deficits in social responsiveness). Thus, the higher the t-score, the more autistic traits are present. In contrast to other measures, a difference in SRS-scores between the groups was expected. Finally, to rule out differences between the groups regarding depression and anxiety, participants completed a Dutch version of the Beck Depression Inventory (BDI-II-NL) (Beck et al., 1996; Dutch version: Van der Does, 2002) and a Dutch version of the Spielberger State-Trait Anxiety Inventory (ZBV) (Van der Ploeg, 1980).

Table 1.

Participant characteristics of the ASD-group (N = 22) and the NT-group (N = 24) (p < 0.05 shown in bold)

| | ASD Mean | ASD Min | ASD Max | ASD SD | NT Mean | NT Min | NT Max | NT SD | t | p |
|---|---|---|---|---|---|---|---|---|---|---|
| Age | 19.18 | 17 | 23 | 1.82 | 20.08 | 17 | 23 | 1.98 | 1.61 | 0.1154 |
| Verbal IQ | 113.95 | 98 | 134 | 10.49 | 113.29 | 90 | 140 | 13.41 | 0.19 | 0.8536 |
| Performance IQ | 114.45 | 81 | 153 | 16.61 | 111.54 | 85 | 141 | 16.47 | 0.60 | 0.5537 |
| Full scale IQ | 111.86 | 89 | 147 | 13.07 | 111.67 | 87 | 143 | 14.48 | 0.73 | 0.4702 |
| BDI-II-NL | 8.05 | 0 | 23 | 6.25 | 4.83 | 0 | 21 | 5.92 | 1.79 | 0.0803 |
| STAI State | 32.82 | 20 | 50 | 6.94 | 31.58 | 24 | 42 | 5.79 | 0.66 | 0.5145 |
| STAI Trait | 38.18 | 23 | 62 | 9.99 | 33.71 | 22 | 53 | 8.25 | 1.66 | 0.1037 |
| SRS self | 58.45 | 42 | 77 | 10.25 | 48.21 | 36 | 61 | 7.67 | 3.86 | **0.0004** |
| SRS other | 63.68 | 46 | 87 | 11.06 | 44.25 | 20 | 69 | 9.05 | 6.55 | **< 0.0001** |

Note. Abbreviations. BDI: Beck Depression Inventory. STAI: State-Trait Anxiety Inventory. SRS: Social Responsiveness Scale.

NT participants were recruited to match ASD participants, which was largely successful (see Table 1): we found no significant differences between the ASD-group and the NT-group on a range of control measures. As expected, SRS scores differed significantly between the groups: on both the self-report and the other-report version of the SRS questionnaire, individuals with ASD scored significantly higher than NTs. Interestingly, we found an interaction between informant (i.e. the person who filled out the SRS) and group (F(1, 44) = 5.23, p = 0.0270): individuals with ASD scored lower (i.e. more socially adaptive) when filling out the questionnaire themselves than when a significant other filled it out, whereas the opposite trend emerged in the control group.
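
The t values in Table 1 can be recomputed from the summary statistics alone. The sketch below assumes a pooled-variance (Student's) two-sample t-test with 22 + 24 - 2 = 44 degrees of freedom; the text does not name the test, but the same degrees of freedom appear in the reported F(1, 44), and Table 1 appears to list absolute t values. The function name `pooled_t` is illustrative only.

```python
import math


def pooled_t(mean_a: float, sd_a: float, n_a: int,
             mean_b: float, sd_b: float, n_b: int) -> tuple:
    """Student's two-sample t statistic (pooled variance) from summary statistics.

    Returns (t, df), with t signed as mean_a - mean_b.
    """
    df = n_a + n_b - 2
    # Pooled variance: the two sample variances weighted by their degrees of freedom.
    pooled_var = ((n_a - 1) * sd_a ** 2 + (n_b - 1) * sd_b ** 2) / df
    se = math.sqrt(pooled_var * (1 / n_a + 1 / n_b))
    return (mean_a - mean_b) / se, df


# Rows from Table 1: (ASD mean, ASD SD, NT mean, NT SD); N_ASD = 22, N_NT = 24.
rows = {
    "Age": (19.18, 1.82, 20.08, 1.98),
    "SRS self": (58.45, 10.25, 48.21, 7.67),
    "SRS other": (63.68, 11.06, 44.25, 9.05),
}
for name, (m_asd, sd_asd, m_nt, sd_nt) in rows.items():
    t, df = pooled_t(m_asd, sd_asd, 22, m_nt, sd_nt, 24)
    print(f"{name}: |t({df})| = {abs(t):.2f}")
```

The recomputed values agree with the table to within about 0.01, which is consistent with the tabulated means and SDs being rounded. If SciPy is available, a two-sided p-value follows from `2 * scipy.stats.t.sf(abs(t), df)`.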

2.3. Behavioural data acquisition

Participants performed several behavioural face processing tests, selected to avoid low-level perceptual strategies and to probe face memory abilities. The Benton Face Recognition Test (BFRT; Benton et al., 1983) was used to assess the ability to recognize individual faces. The Cambridge Face Memory Test (CFMT; Duchaine and Nakayama, 2006) was used to assess the ability to remember individual faces. The Emotion Recognition task (ERT; Kessels et al., 2014, Montagne et al., 2007) and the Emotion Recognition Index (ERI; Scherer and Scherer, 2011) were used to assess the ability to recognize facial emotions.

We used a digitized version of the BFRT (BFRT-c) (Rossion and Michel, 2018). This test required participants to match facial identities despite changes in lighting, viewpoint and size, preventing them from relying on a low-level pixel-matching strategy. The test comprised a total of 22 trials. In every trial, four grayscale photographs were presented on the screen. In the upper part of the screen, a test face was presented, while in the lower part three faces were displayed: one target face and two distractor faces. Participants were instructed to select which of the three ‘lower’ faces had the same identity as the test face. Test, target and distractor stimuli were shown simultaneously to minimize the memory load.

The CFMT also required participants to match faces across viewpoints and levels of illumination, but, unlike the BFRT, it involved a memory component. The test consisted of three stages. In the first stage, three study images of the same face (frontal, left and right viewpoint) were presented successively for three seconds each. Then, three faces were displayed, comprising one of the three study images and two distractor images. Participants were instructed to select the target identity, i.e. the identity they recognized from the study images. In the second stage, participants received the same instructions, but had to generalize the identity to a novel image, as the target image differed from the study images (i.e. a novel image of the same identity in which the illumination and/or pose varied). In the third stage, noise added to the images made the task more difficult. The test comprised 72 items: 18 items in the first stage, 30 items in the second stage, and 24 items in the third stage.

The ERT allowed us to investigate the explicit recognition of six dynamic facial expressions (i.e. anger, fear, happiness, sadness, disgust, surprise). Short video clips were presented, displaying a dynamic face in front view. These dynamic faces changed from a neutral to an emotional expression at either 40, 60, 80 or 100% intensity of the expression. Participants were instructed to select the corresponding emotion from six written labels displayed on the screen. Each intensity level comprised 24 trials, resulting in a total of 96 trials.

The ERI was also used to investigate the ability to recognize emotions. Pictures of posed expressions were presented for three seconds. Participants were instructed to select which emotion was expressed in the picture by clicking the correct label. The test included more items for difficult emotions (i.e. sadness, fear, anger) and relatively fewer items for easily recognizable emotions (happiness, disgust), with a total of 30 trials.

2.4. fMRI data acquisition

2.4.1. Stimuli

Stimuli were taken from the Emotion Recognition Task (ERT: Kessels et al., 2014, Montagne et al., 2007). They consisted of 24 two-second video clips in which a face slowly transitioned from a neutral to a 100% expressive emotional face, while keeping identity constant (Fig. 1). Six ‘basic’ (Ekman and Cordaro, 2011) facial expressions were included: anger, disgust, fear, happiness, sadness, and surprise. Each emotion was expressed by four different individuals: all Caucasian, two male and two female identities. All possible combinations between the four identities and six emotions resulted in twenty-four dynamic face stimuli.

Fig. 1.

Example of dynamic stimulus presented in the scanner. Faces gradually transitioned from a neutral to an emotional facial expression (happy in this case).

Stimuli were generated by morphing two static pictures: one showing a neutral and one showing an emotional facial expression. These stimuli were first constructed by Montagne and colleagues (2007) and used in previous (behavioural) research (Evers et al., 2015, Poljac et al., 2013). In addition, the same stimuli were already used during behavioural testing, in the Emotion Recognition Task, with the difference that we only used the clips transitioning from a neutral to a 100% expressive facial expression for fMRI data acquisition.

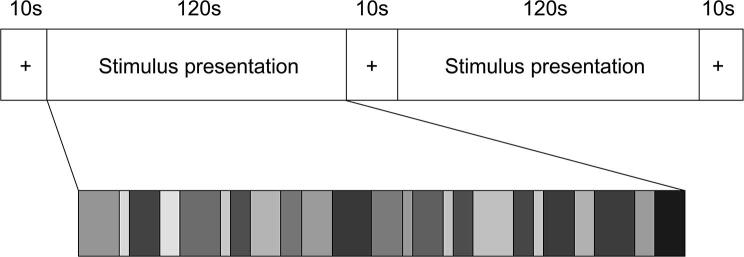

2.4.2. Design

The fMRI experiment followed a design with short blocks of variable length. Every run lasted 270 s and consisted of two two-minute periods of stimulus presentation, each preceded and followed by a ten-second fixation block, giving three fixation blocks per run (Fig. 2). Every two-minute period of stimulus presentation contained 60 trials shown in 24 blocks: one block per stimulus condition. These 24 blocks had variable lengths: every stimulus was shown for either one, two, three, or four consecutive trials. Because every stimulus lasted two seconds, block length varied between two and eight seconds. In this study, we thus used a design with short trials, variable block lengths and no inter-stimulus intervals. This kind of design is based on the ‘continuous carry-over design’ proposed and used by Aguirre (2007). A very similar design has previously been used in our research group (Bulthé et al., 2015, Bulthé et al., 2014).
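
The block structure of one run can be sketched as follows. This is a hypothetical generator, not the authors' stimulus sequence: it assumes six blocks of each length (1, 2, 3 and 4 trials), which is one way to fit 24 blocks into exactly 60 two-second trials, and it shuffles block order at random, whereas carry-over designs (Aguirre, 2007) are normally built from counterbalanced orderings.

```python
import random

EMOTIONS = ("anger", "disgust", "fear", "happiness", "sadness", "surprise")
# 4 identities x 6 emotions = 24 stimuli, each an (identity, emotion) pair.
STIMULI = [(identity, emotion) for identity in range(4) for emotion in EMOTIONS]
TRIAL_S = 2      # each clip lasts two seconds, with no inter-stimulus interval
FIXATION_S = 10  # fixation before, between and after the two stimulation periods


def make_period(rng: random.Random) -> list:
    """One stimulation period: one block per stimulus, block lengths of 1-4 trials."""
    lengths = [1, 2, 3, 4] * 6  # assumption: 6 blocks of each length -> 60 trials
    rng.shuffle(lengths)
    order = STIMULI[:]
    rng.shuffle(order)  # simplification: random rather than counterbalanced order
    trials = []
    for stimulus, n_trials in zip(order, lengths):
        trials.extend([stimulus] * n_trials)
    return trials


def count_blocks(trials: list) -> int:
    """Number of blocks = 1 + number of stimulus changes between consecutive trials."""
    return 1 + sum(a != b for a, b in zip(trials, trials[1:]))


def run_duration_s(periods: list) -> int:
    """Duration of fixation / period / fixation / period / fixation."""
    return (len(periods) + 1) * FIXATION_S + sum(len(p) for p in periods) * TRIAL_S


rng = random.Random(0)
periods = [make_period(rng), make_period(rng)]
print(len(periods[0]), count_blocks(periods[0]), run_duration_s(periods))  # 60 24 270
```

Because each stimulus occupies a single contiguous block, consecutive blocks always show different stimuli, so the number of stimulus changes within a period is fixed at 23 regardless of the shuffle.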

Fig. 2.

Design of one run. Ten-second fixation periods alternating with two two-minute periods of stimulus presentation. Within each two-minute period of stimulus presentation, all 24 stimuli were shown at least once, in blocks of different lengths (2–8 s).

Participants performed a change detection task that integrated identity and emotion processing. They were instructed to press a button whenever the current stimulus differed from the previous one, regardless of whether identity, emotion, or both changed. This one-back task ensured that participants looked at the stimuli and attended to both identity and facial expression. Between six and twelve runs were acquired for each participant, depending on how long scanning remained comfortable for the participant. The number of acquired runs differed slightly but significantly between the ASD-group (mean = 9.64 runs) and the NT-group (mean = 10.54 runs) (Mann-Whitney U test; W = 345, p = 0.0494).

2.4.3. fMRI data acquisition

fMRI data were collected using a 3 T Philips Ingenia CX scanner, with a 32-channel head coil, using a T2*-weighted echo-planar (EPI) pulse sequence (52 slices in a transverse orientation, FOV = 210 × 210 × 140 mm, reconstructed in-plane resolution = 2.19 × 2.19 mm, slice thickness = 2.5 mm, interslice gap = 0.2 mm, repetition time (TR) = 3000 ms, echo time (TE) = 30 ms, flip angle = 90 degrees). In addition, a T1-weighted anatomical scan was acquired from every participant (182 sagittal slices, FOV = 250 × 250 × 218 mm, resolution = 0.98 × 0.98 × 1.2 mm, slice thickness = 1.2 mm, no interslice gap, repetition time (TR) = 9.6 ms, echo time (TE) = 4.6 ms).
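
The acquisition parameters are internally consistent, as a quick check shows. Assuming no dummy scans are discarded, a 270 s run at TR = 3 s yields 90 functional volumes, and the slice stacks (slice thickness plus gap) match the stated field of view in the slice direction:

$$
\frac{270\ \mathrm{s}}{3\ \mathrm{s}} = 90\ \text{volumes per run}, \qquad
52 \times (2.5 + 0.2)\ \mathrm{mm} = 140.4\ \mathrm{mm} \approx 140\ \mathrm{mm}, \qquad
182 \times 1.2\ \mathrm{mm} = 218.4\ \mathrm{mm} \approx 218\ \mathrm{mm}
$$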

2.5. Analyses

2.5.1. Behavioural analyses

For each of the face processing tasks, we analysed reaction times and accuracy with repeated measures ANOVA using the afex package v0.22-1 (Singmann et al., 2018) in R v3.4.3 (R Development Core Team, 2012). ‘Accuracy’ and ‘Reaction time’ were the dependent variables, and ‘Group’ (NT vs. ASD) was a between-subject factor. For the analysis of the CFMT, we additionally included the stage of the test (intro, no noise and noise) as a within-subject factor. For analyses of reaction times, only correct trials were included. When the residuals were not normally distributed, we applied a square root transformation to the data.
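
For the CFMT, the design is a mixed (split-plot) ANOVA with Group between subjects and Stage within subjects. The following NumPy sketch shows the textbook sums-of-squares decomposition behind such a test; it is not the afex implementation. It applies no sphericity correction, and with unequal group sizes its within-subject effects (based on n-weighted means) may differ from the Type III sums of squares that afex uses by default. The function name `split_plot_anova` is hypothetical.

```python
import numpy as np


def split_plot_anova(y: np.ndarray, group: np.ndarray) -> dict:
    """Mixed ANOVA with one between-subject factor (group) and one within-subject factor.

    y: (n_subjects, n_levels) scores, e.g. CFMT accuracy per stage.
    group: (n_subjects,) group labels, e.g. "ASD" / "NT".
    """
    n, k = y.shape
    labels = np.unique(group)
    a = len(labels)
    grand = y.mean()
    sizes = {g: int((group == g).sum()) for g in labels}

    ss_total = ((y - grand) ** 2).sum()
    ss_subjects = k * ((y.mean(axis=1) - grand) ** 2).sum()
    ss_group = sum(k * sizes[g] * (y[group == g].mean() - grand) ** 2 for g in labels)
    ss_stage = n * ((y.mean(axis=0) - grand) ** 2).sum()
    ss_cells = sum(sizes[g] * ((y[group == g].mean(axis=0) - grand) ** 2).sum() for g in labels)
    ss_interaction = ss_cells - ss_group - ss_stage
    ss_subj_in_group = ss_subjects - ss_group                     # between-subject error term
    ss_error = ss_total - ss_subjects - ss_stage - ss_interaction  # stage x subject(group)

    df = {"group": a - 1, "subj_in_group": n - a, "stage": k - 1,
          "interaction": (a - 1) * (k - 1), "error": (n - a) * (k - 1)}
    ms_error = ss_error / df["error"]
    return {
        "F_group": (ss_group / df["group"]) / (ss_subj_in_group / df["subj_in_group"]),
        "F_stage": (ss_stage / df["stage"]) / ms_error,
        "F_interaction": (ss_interaction / df["interaction"]) / ms_error,
        "df": df,
        "ss": {"group": ss_group, "subj_in_group": ss_subj_in_group, "stage": ss_stage,
               "interaction": ss_interaction, "error": ss_error},
    }
```

For square-root-transformed data, the same function would be called as `split_plot_anova(np.sqrt(rt), group)`. With two groups, `F_group` equals the squared pooled t statistic computed on the subject means.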

In addition to the face processing tasks, we analysed accuracy and reaction times on the task performed during fMRI data acquisition using custom code in MATLAB. Due to technical problems, responses failed to (reliably) register in all runs of five participants (three ASD and two NT), and in at least one run of eight other participants. These runs were excluded from further behavioural analyses.
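
The in-scanner accuracy and reaction times were computed with custom MATLAB code that is not shown. A minimal Python sketch of one plausible scoring scheme follows. It assumes that a press is the correct response on change trials and withholding is correct on repetitions, that the first trial of a sequence (which has no predecessor) is not scored, and that mean RT is taken over correctly detected changes; the function name and inputs are hypothetical. Runs whose responses failed to register would be excluded before scoring.

```python
from statistics import mean
from typing import Optional, Sequence, Tuple


def score_one_back(stimuli: Sequence[Tuple[int, str]],
                   pressed: Sequence[bool],
                   rts: Sequence[Optional[float]]):
    """Accuracy and mean correct RT for the change-detection (one-back) task.

    A press is correct whenever a stimulus differs from the previous one
    (identity, emotion or both); withholding is correct on repetitions.
    """
    correct = []
    hit_rts = []
    for i in range(1, len(stimuli)):  # trial 0 has no predecessor and is skipped
        is_change = stimuli[i] != stimuli[i - 1]
        is_correct = pressed[i] == is_change
        correct.append(is_correct)
        if is_correct and is_change and rts[i] is not None:
            hit_rts.append(rts[i])  # RTs from correctly detected changes only
    accuracy = sum(correct) / len(correct)
    mean_rt = mean(hit_rts) if hit_rts else None
    return accuracy, mean_rt
```

Scoring repetitions as well as changes means that indiscriminate pressing is penalized, which a hit rate alone would not capture.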

2.5.2. fMRI pre-processing

Data were pre-processed using MATLAB with the Statistical Parametric Mapping software (SPM 8, Wellcome Department of Cognitive Neurology, London). First, functional scans were corrected for differences in slice timing. Second, the slice-timing-corrected scans were realigned to a mean scan per subject in order to correct for motion. The six motion parameters obtained during this process were used (1) as confounds in the general linear model, and (2) to check for excessive head motion, a priori defined as scan-to-scan movement exceeding one voxel in any direction (three participants excluded: two individuals with ASD, one NT) (cf. Bulthé et al., 2019, Pegado et al., 2018, Peters et al., 2016, Van Meel et al., 2019). Third, anatomical scans were co-registered with the slice-timing-corrected and realigned functional scans. Fourth, the anatomical and functional scans were normalized, resampling the scans to a voxel size of 2.5 × 2.5 × 2.5 mm. Finally, slice-timing-corrected, realigned, co-registered and normalized functional images were spatially smoothed with a Gaussian kernel with a full width at half maximum (FWHM) of 5 mm.
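
The exclusion criterion for head motion can be sketched on SPM's realignment parameters, which SPM writes to a text file prefixed `rp_` that `np.loadtxt` can read. This is an illustration under stated assumptions: only the three translations are checked, and the voxel size is left as a parameter because the text does not say whether the acquired or the resampled (2.5 mm) voxel is meant. The function name is hypothetical.

```python
import numpy as np


def exceeds_motion_threshold(rp: np.ndarray, voxel_mm) -> bool:
    """True if any scan-to-scan translation exceeds one voxel along any axis.

    rp: (n_scans, 6) realignment parameters in SPM's order: x, y, z translations (mm)
        followed by three rotations (radians).
    voxel_mm: voxel size in mm, a scalar or one value per axis (x, y, z).
    Rotations are ignored in this sketch (assumption).
    """
    translations = np.asarray(rp, dtype=float)[:, :3]
    scan_to_scan = np.abs(np.diff(translations, axis=0))  # movement between consecutive scans
    return bool((scan_to_scan > np.asarray(voxel_mm, dtype=float)).any())
```

Because the criterion is defined on scan-to-scan differences, a slow drift of several millimetres over a run does not trigger exclusion, whereas a single abrupt jump larger than one voxel does.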

2.5.3. fMRI statistics

The BOLD response for each run was modelled using a general linear model (GLM). We constructed two different GLMs using different conditions for (1) the univariate, multivariate and functional connectivity analyses and (2) the adaptation analysis. In both approaches, we followed the logic of the continuous carry-over design proposed by Aguirre (2007).

In the first approach, the responses to individual stimuli are referred to as ‘direct effects’ (Aguirre, 2007). In this general linear model, the onset and duration of each stimulus block (i.e. a face with a particular identity and a particular expression) were used to model the signal for one specific condition. The GLM therefore contained, per run, 24 regressors of interest (one for each condition, i.e. every stimulus) and six motion correction parameters. Estimating the GLM yielded a beta-value for every condition (every stimulus) and for each of the six motion regressors. These beta-values were used in the univariate, multivariate and functional connectivity analyses.
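
The logic of the ‘direct effects’ GLM can be sketched with NumPy: each condition's blocks form a boxcar that is convolved with a haemodynamic response function, sampled once per TR, and fitted together with the motion confounds by ordinary least squares. This is a simplified illustration, not SPM's implementation: the double-gamma HRF only resembles SPM's canonical shape, the regressor is sampled at the start of each TR, and the high-pass filtering and temporal autocorrelation modelling that SPM applies are omitted. All function names are hypothetical.

```python
import math

import numpy as np


def canonical_hrf(dt: float, length_s: float = 32.0) -> np.ndarray:
    """Double-gamma HRF resembling SPM's default (response shape 6, undershoot shape 16, ratio 1/6)."""
    t = np.arange(0.0, length_s, dt)
    response = t ** 5 * np.exp(-t) / math.gamma(6)
    undershoot = t ** 15 * np.exp(-t) / math.gamma(16)
    hrf = response - undershoot / 6.0
    return hrf / hrf.sum()


def block_regressor(onsets, durations, n_scans: int, tr: float, dt: float = 0.1) -> np.ndarray:
    """Boxcar for one condition (onsets/durations in s), convolved with the HRF, one value per scan."""
    n_fine = int(round(n_scans * tr / dt))
    boxcar = np.zeros(n_fine)
    for onset, duration in zip(onsets, durations):
        boxcar[int(round(onset / dt)):int(round((onset + duration) / dt))] = 1.0
    convolved = np.convolve(boxcar, canonical_hrf(dt))[:n_fine]
    return convolved[::int(round(tr / dt))]


def fit_glm(y: np.ndarray, regressors: list, confounds=None) -> np.ndarray:
    """OLS fit; returns betas for the regressors, then the confounds, then the constant."""
    columns = list(regressors)
    if confounds is not None:
        columns += list(np.asarray(confounds).T)  # e.g. the six realignment parameters
    columns.append(np.ones(len(y)))
    X = np.column_stack(columns)
    betas, *_ = np.linalg.lstsq(X, y, rcond=None)
    return betas
```

In the study's first GLM, `regressors` would hold one column per stimulus (24 per run) and `confounds` the six motion parameters of that run.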

We used a different approach to model the adaptation effects, referred to as ‘indirect effects’ or ‘carry-over effects’ (Aguirre, 2007). Instead of regressors referring to individual conditions (as in the first approach), the regressors in this model refer to the relationship between the conditions (i.e. the relation between consecutive stimuli). More specifically, we modelled four adaptation conditions to classify the relationship between the 24 original conditions (i.e. 24 stimuli). Trials were assigned to the ‘AllSame’-condition when the current stimulus was a repetition of the previous stimulus. When the current stimulus had the same identity but a different emotion than the previous one, the trial was labelled ‘DiffEmo’. When the emotional expression was repeated but the identity changed, the trial was assigned to the ‘DiffId’-condition. Finally, if both identity and emotion were different in the current trial compared to the previous one, the trial was labelled ‘AllDiff’. Additionally, to account for the presence of fixation trials, we introduced two more conditions. The first trial of stimulus presentation after a fixation trial was assigned to the ‘FixtoStim’ condition. Fixation trials themselves were labelled ‘Fix’. This resulted in a total of six conditions for the adaptation analysis. For every participant, a GLM was estimated using the onsets and durations of the newly defined adaptation conditions. Accordingly, the GLM consisted of 12 regressors per run: six regressors of interest (the six conditions described above) and six motion correction parameters. The resulting beta-values were used in the adaptation analysis.
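
The labelling rules above translate directly into code. The sketch below assumes that each trial is an (identity, emotion) pair, that fixation is represented as 2-s slots marked `None` (so a 10-s fixation block is five slots), and that onsets are counted from the start of the run; the names are hypothetical.

```python
from typing import Dict, List, Optional, Sequence, Tuple

Stimulus = Optional[Tuple[int, str]]  # (identity, emotion); None marks a fixation slot


def adaptation_label(previous: Stimulus, current: Stimulus) -> str:
    """Carry-over condition of the current trial, given the previous one."""
    if current is None:
        return "Fix"
    if previous is None:
        return "FixtoStim"
    same_identity = current[0] == previous[0]
    same_emotion = current[1] == previous[1]
    if same_identity and same_emotion:
        return "AllSame"
    if same_identity:
        return "DiffEmo"
    if same_emotion:
        return "DiffId"
    return "AllDiff"


def onsets_by_condition(trials: Sequence[Stimulus], trial_s: float = 2.0) -> Dict[str, List[float]]:
    """Onset (s) of every trial, grouped by adaptation condition; each trial lasts trial_s."""
    onsets: Dict[str, List[float]] = {}
    previous: Stimulus = None
    for i, current in enumerate(trials):
        onsets.setdefault(adaptation_label(previous, current), []).append(i * trial_s)
        previous = current
    return onsets
```

Each onset, paired with a duration of one trial, can then be entered as a regressor for its condition in the adaptation GLM; consecutive trials with the same label could equivalently be merged into a single longer event.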

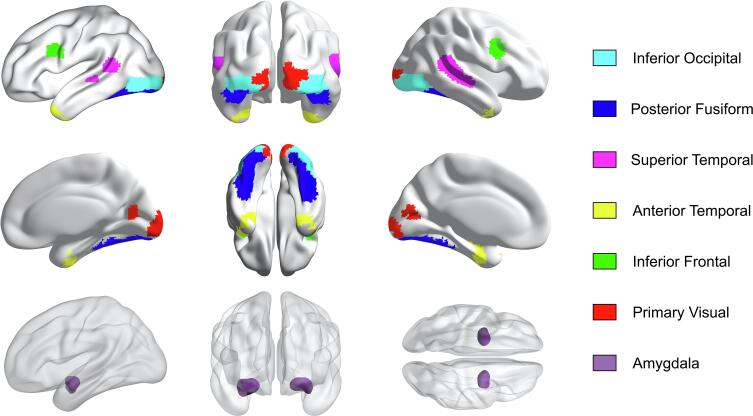

2.5.4. Regions of interest

Next, we defined our regions of interest (ROIs). We included seven ROIs covering the network of regions most involved in face processing. First, we included the regions typically considered the core face-selective regions: inferior occipital cortex (including the occipital face area, ‘OFA’), posterior fusiform cortex (including the fusiform face area, ‘FFA’), and superior temporal cortex (including the superior temporal sulcus, ‘STS’). Second, we included the regions often considered part of the extended face network: amygdala, anterior temporal cortex (including temporal pole), and inferior frontal cortex (including orbitofrontal cortex). Finally, we included V1, the region where visual information enters the cortex. The relevance of these regions is supported by a large literature (e.g. Adolphs, 2008, Avidan et al., 2014, Hoffman and Haxby, 2000, Ishai, 2008, Kanwisher et al., 1997, Pitcher et al., 2011, Rotshtein et al., 2005). Apart from V1, these regions are also activated in the automated brain mapping database Neurosynth when searching for terms such as ‘faces’ and ‘facial expressions’.

Next, we created masks for these ROIs. As all intended regions were included in the ‘aal’ atlas of the WFU Pickatlas, we used this atlas to delineate anatomical masks. To accommodate inter-subject variability, we dilated all masks by 1 voxel in three dimensions, restricted to the lobe they belonged to. In the definitions below, ‘(d1)’ denotes this one-voxel dilation, ‘+’ the union of masks and ‘*’ the intersection with a lobe mask. More specifically, regions were defined as follows: Inferior occipital cortex, (Occipital_Inf_L (d1) + Occipital_Inf_R (d1)) * Occipital Lobe; Fusiform cortex, (Fusiform_L (d1) + Fusiform_R (d1)) * Temporal Lobe; Superior temporal cortex, (Temporal_Sup_L (d1) + Temporal_Sup_R (d1)) * Temporal Lobe; Amygdala, Amygdala_L (d1) + Amygdala_R (d1); Anterior temporal cortex, (Temporal_Pole_Mid_L (d1) + Temporal_Pole_Mid_R (d1) + Temporal_Pole_Sup_L (d1) + Temporal_Pole_Sup_R (d1)) * Temporal Lobe; Inferior frontal cortex, (Frontal_Inf_Oper_L (d1) + Frontal_Inf_Oper_R (d1) + Frontal_Inf_Tri_L (d1) + Frontal_Inf_Tri_R (d1) + Frontal_Inf_Orb_L (d1) + Frontal_Inf_Orb_R (d1)) * Frontal Lobe; V1, (Calcarine_L (d1) + Calcarine_R (d1)) * Occipital Lobe.
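The mask algebra above can be illustrated by treating each atlas label as a set of voxel indices. This is a sketch under stated assumptions, not the WFU Pickatlas implementation: dilation here uses the full 26-voxel neighbourhood, which is an assumption because the connectivity used by the toolbox is not reported, and the helper names `dilate` and `build_roi` are hypothetical.

```python
from itertools import product
from typing import Optional, Set, Tuple

Voxel = Tuple[int, int, int]


def dilate(mask: Set[Voxel], shape: Tuple[int, int, int]) -> Set[Voxel]:
    """Grow a binary mask by one voxel in 3D using the 26-voxel neighbourhood."""
    offsets = [d for d in product((-1, 0, 1), repeat=3) if d != (0, 0, 0)]
    grown = set(mask)
    for x, y, z in mask:
        for dx, dy, dz in offsets:
            nx, ny, nz = x + dx, y + dy, z + dz
            # Keep only neighbours that fall inside the image volume.
            if 0 <= nx < shape[0] and 0 <= ny < shape[1] and 0 <= nz < shape[2]:
                grown.add((nx, ny, nz))
    return grown


def build_roi(left: Set[Voxel], right: Set[Voxel], shape: Tuple[int, int, int],
              lobe: Optional[Set[Voxel]] = None) -> Set[Voxel]:
    """(Left (d1) + Right (d1)) * Lobe; the lobe restriction is skipped when lobe is None."""
    roi = dilate(left, shape) | dilate(right, shape)
    return roi if lobe is None else roi & lobe
```

Passing `lobe=None` corresponds to the amygdala definition, which has no lobe restriction.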

After defining and inspecting the anatomical masks, we decided to merge the (very large) fusiform gyrus mask with the temporal pole mask, and to re-divide the result into a posterior fusiform mask (encompassing FFA) and a larger anterior temporal mask (including temporal pole). Based on the literature (Grill-Spector et al., 2004, Spiridon et al., 2006, Summerfield et al., 2008), we partitioned the merged mask at Y-coordinate 50 in a standard (91x109x91) space, and visually ensured that FFA resided in the posterior fusiform mask. In addition, we used existing face parcels of FFA, OFA and STS (Julian et al., 2012) to confirm that these regions were situated within our posterior fusiform, inferior occipital and superior temporal masks, respectively.
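The split and the parcel check can be sketched as follows. This is illustrative only, with three assumptions. First, the Y index increases from posterior to anterior, as in standard MNI-oriented images. Second, voxels at exactly Y = 50 go to the anterior mask. Third, the text does not say which side the cut-plane voxels were assigned to. The numeric overlap in the hypothetical `fraction_inside` helper stands in for the visual and parcel-based checks described above.

```python
from typing import Set, Tuple

Voxel = Tuple[int, int, int]


def split_along_y(merged: Set[Voxel], y_cut: int = 50) -> Tuple[Set[Voxel], Set[Voxel]]:
    """Split a mask into a posterior part (y < y_cut) and an anterior part (y >= y_cut)."""
    posterior = {v for v in merged if v[1] < y_cut}
    anterior = merged - posterior
    return posterior, anterior


def fraction_inside(parcel: Set[Voxel], mask: Set[Voxel]) -> float:
    """Share of a reference parcel's voxels (e.g. an FFA parcel) that fall inside a mask."""
    return len(parcel & mask) / len(parcel) if parcel else 0.0
```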

We ensured that all masks were mutually exclusive by deleting all overlapping voxels. Anatomical masks were co-registered to a functional scan (fully pre-processed except for smoothing) to bring them into the same space as the pre-processed images (63x76x55). Finally, the anatomical masks were functionally restricted by intersecting them with the face-selective voxels. For this purpose, we used a whole-brain second-level “all versus fixation” contrast across all participants. This contrast map was similar to probabilistic maps of face-sensitive regions (Engell and McCarthy, 2013). Our “all versus fixation” contrast map was thresholded at p < .005, uncorrected. With this threshold, the smallest ROI contained 174 voxels, which was large enough to later allow the reduction of all ROIs to this size. Table 2 lists the number of voxels in each ROI, both for the purely anatomical masks and for the functionally restricted masks. Fig. 3 shows the final ROIs after functional restriction, thresholded at p < .005 uncorrected.
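Both steps in this paragraph reduce to set operations on voxel indices, sketched below. The representation of the group-level contrast as a dictionary mapping each voxel to its uncorrected p-value is an assumption made for illustration; the original analysis operated on SPM images. The function names are hypothetical.

```python
from collections import Counter
from typing import Dict, Set, Tuple

Voxel = Tuple[int, int, int]


def make_exclusive(masks: Dict[str, Set[Voxel]]) -> Dict[str, Set[Voxel]]:
    """Delete every voxel that belongs to more than one mask."""
    counts = Counter(v for mask in masks.values() for v in mask)
    return {name: {v for v in mask if counts[v] == 1} for name, mask in masks.items()}


def restrict_functionally(masks: Dict[str, Set[Voxel]], p_map: Dict[Voxel, float],
                          alpha: float = 0.005) -> Dict[str, Set[Voxel]]:
    """Intersect each mask with voxels whose 'all versus fixation' p-value is below alpha."""
    active = {v for v, p in p_map.items() if p < alpha}
    return {name: mask & active for name, mask in masks.items()}
```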

Table 2.

Number of voxels in the purely anatomical and in the functionally restricted masks with a threshold of p < .005 uncorrected.

| ROI | # voxels anatomical | # voxels after restriction |
|---|---|---|
| Inferior Occipital | 1473 | 1364 |
| Posterior Fusiform | 3222 | 1384 |
| Superior Temporal | 3586 | 563 |
| Amygdala | 639 | 258 |
| Anterior Temporal | 3969 | 174 |
| Inferior Frontal | 7608 | 381 |
| V1 | 2249 | 710 |

Fig. 3.

Regions of interest after functional restriction thresholded at p < .005, uncorrected. The top and middle rows display the six cortical regions of interest; the bottom row shows the single subcortical region in this study, the amygdala.

2.5.5. Univariate analyses

We conducted an ROI-based univariate analysis using custom code in MATLAB. For every participant, the average beta-value across all runs was computed for the “all versus fixation” contrast. These values were used in a two-sample t-test to test for group differences. We corrected for multiple comparisons by controlling the false discovery rate (FDR) with q < 0.05 (Benjamini and Hochberg, 1995). Below, we will use the term ‘activation’ to refer to average beta-values.
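The group comparison and FDR correction described above can be sketched in plain Python. This is not the authors' MATLAB code. The reported degrees of freedom (t44 = 22 + 24 - 2) are consistent with Student's pooled-variance form, which is what `pooled_t` computes. Converting t to a p-value requires the t distribution, for example `scipy.stats.ttest_ind` in SciPy, so only the statistic is computed here. `benjamini_hochberg` returns step-up adjusted p-values, capped at 1, that can be compared against q = 0.05.

```python
import math
from statistics import fmean, variance
from typing import List, Sequence


def pooled_t(a: Sequence[float], b: Sequence[float]) -> float:
    """Student's two-sample t statistic with pooled variance (df = len(a) + len(b) - 2)."""
    na, nb = len(a), len(b)
    pooled = ((na - 1) * variance(a) + (nb - 1) * variance(b)) / (na + nb - 2)
    return (fmean(a) - fmean(b)) / math.sqrt(pooled * (1 / na + 1 / nb))


def benjamini_hochberg(pvals: Sequence[float]) -> List[float]:
    """Benjamini-Hochberg adjusted p-values, returned in the input order."""
    m = len(pvals)
    order = sorted(range(m), key=lambda i: pvals[i])
    adjusted = [0.0] * m
    running_min = 1.0
    # Walk from the largest p-value down, so adjusted values stay monotone and <= 1.
    for rank in range(m, 0, -1):
        i = order[rank - 1]
        running_min = min(running_min, pvals[i] * m / rank)
        adjusted[i] = running_min
    return adjusted
```

In this setting, `a` and `b` would hold one average beta-value per participant for a given ROI, and the seven resulting p-values would be passed jointly to `benjamini_hochberg`.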

2.5.6. Multivariate analyses

The multivariate analysis involved an ROI-based decoding analysis, performed in MATLAB using custom code and the LIBSVM toolbox (Chang and Lin, 2011). The classification was conducted using support vector machines (SVM) with the default parameters of the LIBSVM toolbox. The code was very similar to the code made available by Bulthé and colleagues (2019). To make sure that the size of the ROIs was not driving the decoding results, we redefined all ROIs to have an identical number of voxels, equal to the number of voxels in the smallest ROI (i.e. the anterior temporal cortex). Accordingly, we randomly selected 174 voxels from the bigger ROIs to define these new equally sized ROIs.
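The size equalisation amounts to drawing a random subset of voxels without replacement, as sketched below. The fixed seed and the sorting step only make the draw reproducible. Both are assumptions, and the text does not state whether the selection was drawn once or repeated. The function name is hypothetical.

```python
import random
from typing import Set, Tuple

Voxel = Tuple[int, int, int]


def subsample_roi(roi: Set[Voxel], n_voxels: int = 174, seed: int = 0) -> Set[Voxel]:
    """Randomly keep n_voxels voxels so that every ROI has the same size."""
    if len(roi) < n_voxels:
        raise ValueError("ROI is smaller than the target size")
    rng = random.Random(seed)
    # Sort first so that the draw is reproducible for a given seed.
    return set(rng.sample(sorted(roi), n_voxels))
```

For the smallest ROI (anterior temporal cortex, 174 voxels), this simply returns the ROI unchanged.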

The fMRI activity profiles (t-maps) in every ROI were used as input for an SVM-based decoding classification. First, we extracted a response pattern for each stimulus condition, i.e. a list containing the t-values of all voxels in the region. We did this separately for every subject, every ROI and every run. These patterns were standardized to have a mean of zero and a standard deviation of 1 across all voxels within an ROI. Subsequently, we used a repeated random subsampling cross-validation procedure, in which the data were randomly assigned to either the training set (70% of the data) or the test set (30% of the data); this subsampling was repeated 100 times. We trained the SVM to discriminate between every pair of the 24 conditions (four identities × six emotions), yielding decoding accuracies for all 276 pairwise combinations in every ROI and for every subject. To obtain decoding accuracies for identity in every ROI, we averaged the decoding accuracies across all combinations that involved different identities with the same emotion (e.g. happy identity 1 versus happy identity 2, sad identity 2 versus sad identity 4). We obtained decoding accuracies for emotion in every ROI by averaging the decoding accuracies across all combinations that involved different expressions with the same identity (e.g. happy identity 1 versus sad identity 1, sad identity 3 versus surprise identity 3). Using a two-tailed one-sample t-test, we tested whether decoding accuracy differed significantly from chance level (0.5). We tested group differences for both identity decoding and emotion decoding with a two-sample t-test, and corrected for multiple comparisons by controlling the false discovery rate (FDR, q < 0.05) (Benjamini and Hochberg, 1995). In addition, we checked for an interaction between ROI and condition (identity versus emotion).
For this purpose, we performed a repeated measures ANOVA with ‘Decoding accuracy’ as the dependent variable, ‘Group’ as between-subject factor, and ‘Condition’ (identity, emotion) and ‘ROI’ as within-subject factors.
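The bookkeeping around the classifier can be made concrete with a short sketch. It covers pattern standardization, enumeration of the 276 condition pairs, and averaging of pairwise accuracies into identity and emotion scores. The SVM itself (LIBSVM in the original) is not reproduced. The emotion labels are hypothetical, and `pstdev` gives the population standard deviation, an assumption since the text does not say which estimator was used.

```python
from itertools import combinations
from statistics import fmean, pstdev
from typing import Dict, List, Sequence, Tuple

IDENTITIES = [1, 2, 3, 4]
# Hypothetical emotion labels; the study used six expressions.
EMOTIONS = ["anger", "disgust", "fear", "happy", "sad", "surprise"]
Condition = Tuple[int, str]
CONDITIONS: List[Condition] = [(i, e) for i in IDENTITIES for e in EMOTIONS]  # 24 conditions
Pair = Tuple[Condition, Condition]


def standardize(pattern: Sequence[float]) -> List[float]:
    """z-score a voxel pattern: mean 0 and (population) SD 1 across voxels."""
    mu, sd = fmean(pattern), pstdev(pattern)
    return [(x - mu) / sd for x in pattern]


def pair_sets() -> Tuple[List[Pair], List[Pair], List[Pair]]:
    """All pairs, identity pairs (same emotion) and emotion pairs (same identity)."""
    pairs = list(combinations(CONDITIONS, 2))
    identity_pairs = [(a, b) for a, b in pairs if a[1] == b[1] and a[0] != b[0]]
    emotion_pairs = [(a, b) for a, b in pairs if a[0] == b[0] and a[1] != b[1]]
    return pairs, identity_pairs, emotion_pairs


def summarize(accuracy: Dict[Pair, float]) -> Tuple[float, float]:
    """Average pairwise accuracies into (identity decoding, emotion decoding)."""
    _, identity_pairs, emotion_pairs = pair_sets()
    return (fmean(accuracy[p] for p in identity_pairs),
            fmean(accuracy[p] for p in emotion_pairs))


pairs, id_pairs, emo_pairs = pair_sets()
print(len(pairs), len(id_pairs), len(emo_pairs))  # 276 36 60
```

Under this scheme, identity decoding averages 36 pairs (6 emotions × 6 identity pairs) and emotion decoding averages 60 pairs (4 identities × 15 emotion pairs). The remaining 180 pairs differ in both identity and emotion and enter neither average.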

2.5.7. Adaptation analysis

For this analysis, data were processed using MATLAB with the Statistical Parametric Mapping software (SPM12, Wellcome Department of Cognitive Neurology, London). To assess possible differences in adaptation to faces between ASD and NT participants, we constructed a different GLM, as described in section 2.5.3. fMRI statistics.

We ran an ROI-based analysis, in which an average (across voxels) beta-value was computed for every adaptation condition, in every participant and within every ROI. The same ROIs as in the univariate analysis were used. Based on these average beta-values, we calculated three adaptation indices to capture the release from adaptation. As a first step, we subtracted the average beta-value in the ‘AllSame’ condition (baseline) from the average beta-value in each of the three conditions of interest (i.e. ‘AllDiff’, ‘DiffId’, ‘DiffEmo’). This resulted in three adaptation indices (‘AllDiff-AllSame’, ‘DiffId-AllSame’, ‘DiffEmo-AllSame’) for every participant in every ROI. Subsequently, we computed the average adaptation indices for every group within every ROI. To assess whether the adaptation indices differed significantly from zero (which would indicate a significant release from adaptation), a two-sided one-sample t-test was applied. In addition, a two-sample t-test allowed us to assess differences in adaptation indices between the two groups.
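The index computation and the test against zero can be sketched as follows, for one participant and one ROI. The dictionary of condition betas is a hypothetical input format. `one_sample_t` returns only the t statistic with df = n - 1; the p-value would come from the t distribution, and the between-group comparison would use a two-sample test on the same indices.

```python
import math
from statistics import fmean, stdev
from typing import Dict, Sequence

CONDITIONS_OF_INTEREST = ("AllDiff", "DiffId", "DiffEmo")


def adaptation_indices(betas: Dict[str, float]) -> Dict[str, float]:
    """Subtract the 'AllSame' baseline from each condition of interest (one participant, one ROI)."""
    baseline = betas["AllSame"]
    return {f"{c}-AllSame": betas[c] - baseline for c in CONDITIONS_OF_INTEREST}


def one_sample_t(values: Sequence[float], mu0: float = 0.0) -> float:
    """t statistic testing whether the mean differs from mu0 (df = len(values) - 1)."""
    return (fmean(values) - mu0) / (stdev(values) / math.sqrt(len(values)))
```

A positive index means the response was larger after a change than after an exact repetition, i.e. a release from adaptation.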

2.5.8. Functional connectivity analysis

We investigated intrinsic functional connectivity by looking at correlations between temporal fluctuations in the BOLD signal across ROIs. These fluctuations represent neuronal activity organized into structured spatiotemporal profiles, reflecting the functional architecture of the brain. It is generally believed that the level of co-activation of anatomically separated brain regions reflects functional communication among these regions (Gillebert and Mantini, 2013). Intrinsic functional connectivity is typically studied in resting-state data but can also be investigated based on task-related data. However, for the latter, an additional pre-processing step is necessary to subtract the contribution of the stimulus-evoked BOLD response (Fair et al., 2007, Gillebert and Mantini, 2013). This approach was used here.

The functional connectivity analysis was adapted from previous work, used both within our research group (Boets et al., 2013, Bulthé et al., 2019, Pegado et al., 2020, Van Meel et al., 2019) and elsewhere (e.g., King et al., 2018). As done before, several additional pre-processing steps were performed on the already pre-processed (but non-smoothed) data: regression of head motion parameters (and their first derivatives), regression of signals from cerebrospinal fluid (i.e. ventricles) and white matter (and their first derivatives), bandpass filtering between 0.01 and 0.2 Hz (Balsters et al., 2016, Baria et al., 2013), and spatial smoothing at 5 mm FWHM. Finally, regression of stimulus-related fluctuations in the BOLD response was performed by including 24 regressors corresponding to the timing of the presented stimuli (Boets et al., 2013, Ebisch et al., 2013, Fair et al., 2007, Gillebert and Mantini, 2013).
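Several of the additional pre-processing steps above are linear regressions in which only the residuals are kept. The sketch below shows that nuisance-regression idea for a single time course in plain Python, assuming the regressors are supplied as equal-length lists. These might be motion parameters, CSF and white-matter signals, their first derivatives, or stimulus regressors. Including an intercept is an assumption. The backward-difference first derivative with a leading zero is one common convention, not necessarily the one used here. Band-pass filtering and smoothing are not shown.

```python
import math
from typing import List, Sequence


def _dot(a: Sequence[float], b: Sequence[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def first_derivative(x: Sequence[float]) -> List[float]:
    """Backward difference, padded with a leading zero to keep the length (assumed convention)."""
    return [0.0] + [x[t] - x[t - 1] for t in range(1, len(x))]


def _orthonormal_basis(columns: Sequence[Sequence[float]]) -> List[List[float]]:
    """Gram-Schmidt orthonormalization; (near-)collinear columns are dropped."""
    basis: List[List[float]] = []
    for col in columns:
        v = list(col)
        for q in basis:
            c = _dot(v, q)
            v = [vi - c * qi for vi, qi in zip(v, q)]
        norm = math.sqrt(_dot(v, v))
        if norm > 1e-10:
            basis.append([vi / norm for vi in v])
    return basis


def regress_out(signal: Sequence[float], regressors: Sequence[Sequence[float]]) -> List[float]:
    """Return the OLS residual of signal after removing an intercept and all regressors."""
    design = [[1.0] * len(signal)] + [list(r) for r in regressors]
    residual = list(signal)
    # Projecting onto an orthonormal basis of the design space gives the least-squares fit.
    for q in _orthonormal_basis(design):
        c = _dot(residual, q)
        residual = [ri - c * qi for ri, qi in zip(residual, q)]
    return residual
```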

Seed regions included in this functional connectivity analysis were the same seven equally sized regions as in the multivariate analysis. Within each ROI, an average BOLD time course was calculated across all voxels in that region. We then used these averaged BOLD time courses to obtain a functional connectivity matrix for every subject. More specifically, we computed Pearson cross-correlations between the BOLD time courses of all pairs of ROIs. These matrices were then transformed to Z-scores using Fisher’s r-to-Z transformation. Next, group-level functional connectivity matrices were calculated by performing a random-effects analysis across subjects, thresholded at pFDR < 0.001. Finally, to compare the functional connectivity values between the two groups, we conducted two-sample t-tests on the group-level functional connectivity matrices, thresholded at pFDR < 0.05.
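The per-subject connectivity matrix can be sketched as follows. It averages voxel time courses within each ROI, correlates every pair of ROI time courses, and applies Fisher's r-to-Z transformation (`math.atanh`). This is an illustration, not the authors' code, and the input formats are assumptions. The transformation is undefined for r = ±1, which does not arise with real BOLD data.

```python
import math
from itertools import combinations
from statistics import fmean
from typing import Dict, List, Sequence, Tuple


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation between two equally long time courses."""
    mx, my = fmean(x), fmean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def roi_timecourse(voxel_series: List[Sequence[float]]) -> List[float]:
    """Average BOLD time course across all voxels in an ROI."""
    return [fmean(values) for values in zip(*voxel_series)]


def connectivity_z(timecourses: Dict[str, Sequence[float]]) -> Dict[Tuple[str, str], float]:
    """Fisher r-to-Z transformed correlations for every pair of ROIs (one subject)."""
    return {(a, b): math.atanh(pearson(timecourses[a], timecourses[b]))
            for a, b in combinations(sorted(timecourses), 2)}
```

With seven ROIs this yields 21 Z-values per subject. Each value can then be tested against zero within a group, or compared between groups with a two-sample t-test, followed by FDR correction.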

3. Results

3.1. Behavioural results

Accuracy and reaction times on the behavioural tasks were examined using group (NT vs. ASD) as a between-subject factor. For the CFMT, the different stages of the test (intro, no noise and noise) were added as a within-subject factor. Results are displayed in Table 3. Across all tasks, there were no significant main effects of group on accuracy or reaction times. Both groups performed equally accurately and quickly on the Emotion Recognition Index (ERI), the Emotion Recognition Task (ERT), and the Benton Face Recognition Task (BFRT). Analysis of the Cambridge Face Memory Test (CFMT) revealed no significant effect of group on accuracy or reaction times. The interaction between group and test phase was significant neither for accuracy nor for reaction times. Post hoc comparisons between groups in the different stages of the CFMT confirmed that there were no significant group differences in any of the three stages.

Table 3.

Results behavioural analyses.

| Task | Measure | ASD mean | ASD SD | NT mean | NT SD | Difference df1, df2 | Difference F | Difference p |
|---|---|---|---|---|---|---|---|---|
| ERI | ACC (%) | 69.2 | 3.2 | 70.6 | 3.9 | 1,44 | 0.62 | 0.44 |
| | RT (s) | 1.9 | 1.9 | 1.7 | 1.6 | 1,44 | 3.28 | 0.08 |
| ERT | ACC (%) | 61.6 | 11.5 | 60.7 | 10 | 1,44 | 0.11 | 0.74 |
| | RT (s) | 2.0 | 2.1 | 1.9 | 1.9 | 1,44 | 1.93 | 0.17 |
| BFRT | ACC (%) | 80.3 | 10.5 | 82.1 | 7.5 | 1,44 | 1.39 | 0.25 |
| | RT (s) | 11.8 | 3.3 | 10.5 | 5.8 | 1,44 | 1.14 | 0.29 |
| CFMT | Total ACC (%) | 65.2 | 15.0 | 69.1 | 14.3 | 1,44 | 1.08 | 0.31 |
| | Total RT (s) | 4.1 | 4.4 | 3.9 | 2.9 | 1,44 | 0.02 | 0.90 |
| | Intro ACC (%) | 93.9 | 12.7 | 97.5 | 4.6 | 1,44 | 1.37 | 0.25 |
| | Intro RT (s) | 2.9 | 1.7 | 2.5 | 1.2 | 1,44 | 2.34 | 0.13 |
| | No noise ACC (%) | 61.8 | 18.0 | 62.5 | 18.9 | 1,44 | 0.04 | 0.84 |
| | No noise RT (s) | 4.9 | 3.0 | 4.5 | 3.0 | 1,44 | 0.24 | 0.63 |
| | Noise ACC (%) | 47.6 | 18.7 | 55.6 | 19.8 | 1,44 | 2.02 | 0.16 |
| | Noise RT (s) | 4.5 | 5.7 | 4.8 | 3.6 | 1,44 | 0.39 | 0.53 |

Accuracy and reaction times of the task in the scanner were examined and compared between groups. For analyses of accuracy, both correct responses and correct non-responses were taken into account. Both groups performed equally well (meanASD = 92.67%, meanNT = 93.70%; t44 = 0.79, p = 0.43) and equally fast (meanASD = 1.01 s, meanNT = 1.00 s; t38 = 0.18, p = 0.86). These results are consistent with the results of the ERT completed outside of the scanner. The high scores suggest the task was rather easy and that it served its purpose of keeping participants’ attention on the stimuli.

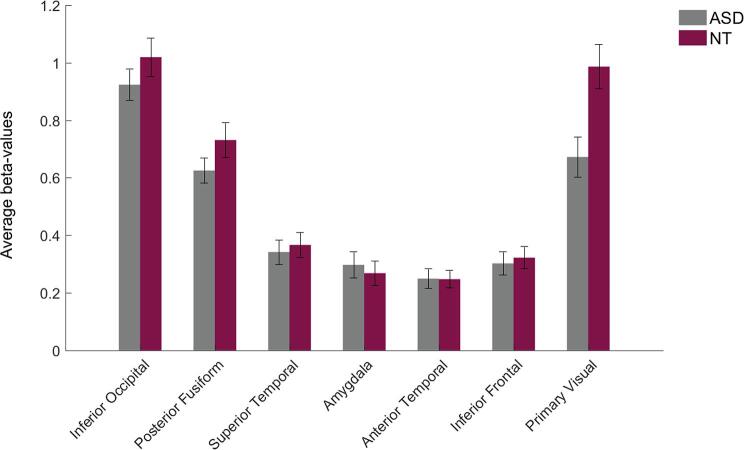

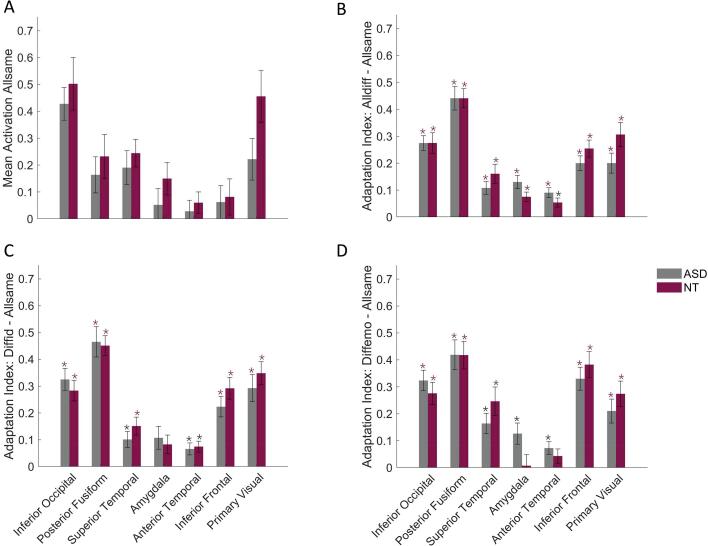

3.2. Univariate fMRI results: Neural activation while watching faces

We performed ROI-based univariate analyses to investigate the average levels of activation within the ROIs, and whether these differed between the groups. Results of the ROI-based univariate “all versus fixation” analysis are depicted in Fig. 4, displaying average neural activation levels while participants were watching faces. We found no significant difference in neural activation between the ASD-group and the NT-group (Table 4). The largest difference was found in V1 (significant only before FDR correction), which is not an area in which a difference would be expected a priori.

Fig. 4.

Average activation (mean beta-values) across all voxels within each of the ROIs for the “all faces versus fixation” contrast in both groups. No differences were found. Error bars display standard errors of the mean (SEM).

Table 4.

Results univariate fMRI analysis (p < 0.05 shown in bold).

| ROI | ASD mean beta value across voxels | NT mean beta value across voxels | Difference t44 | Difference punc. | Difference pFDR |
|---|---|---|---|---|---|
| Inferior Occipital | 0.92 | 1.02 | 1.08 | 0.2841 | > 0.5 |
| Posterior Fusiform | 0.63 | 0.73 | 1.37 | 0.1762 | > 0.5 |
| Superior Temporal | 0.34 | 0.37 | 0.40 | > 0.5 | > 0.5 |
| Amygdala | 0.30 | 0.27 | 0.46 | > 0.5 | > 0.5 |
| Anterior Temporal | 0.25 | 0.25 | 0.05 | > 0.5 | > 0.5 |
| Inferior Frontal | 0.30 | 0.32 | 0.35 | > 0.5 | > 0.5 |
| V1 | 0.67 | 0.99 | 2.94 | 0.0052 | 0.0937 |

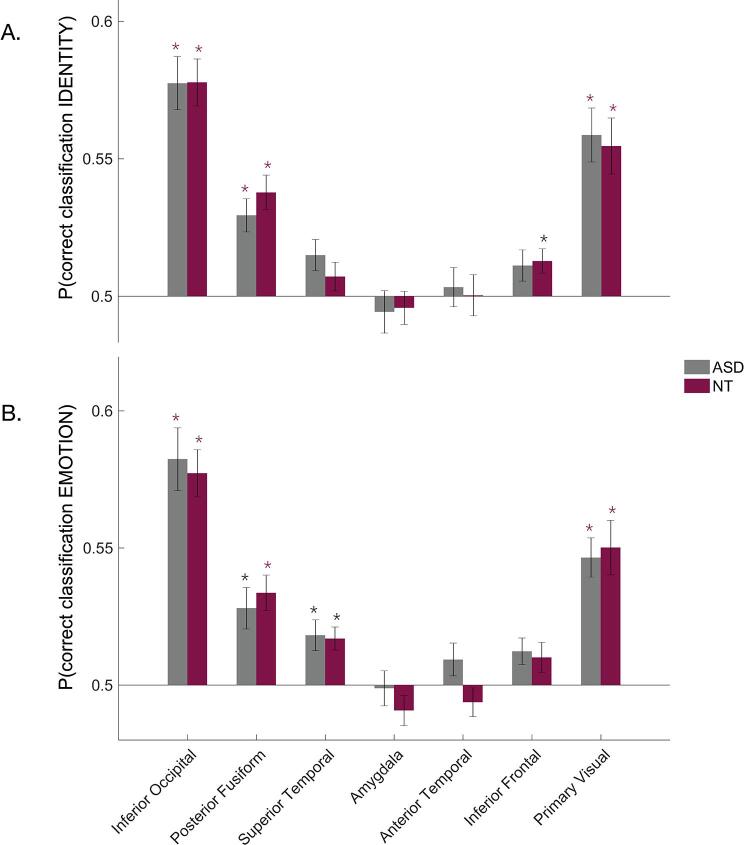

3.3. Multivariate fMRI results: Decoding facial identity and emotional expression

We performed ROI-based multi-voxel pattern analyses to investigate the quality of neural representations. In other words, we studied whether different facial emotions and identities can be decoded on the basis of the neural activation pattern in a particular ROI. In addition, we tested for group differences in the quality of these neural representations. All statistical details are given in Table 5.

Table 5.

Results multivariate fMRI analysis (p < 0.05 shown in bold).

| ROI | Decoding | ASD t21 | ASD punc. | ASD pFDR | NT t23 | NT punc. | NT pFDR | Difference t44 | Difference punc. | Difference pFDR |
|---|---|---|---|---|---|---|---|---|---|---|
| Inferior Occipital | Identity | 8.01 | <0.0001 | <0.0001 | 9.11 | <0.0001 | <0.0001 | 0.02 | > 0.5 | > 0.5 |
| | Emotion | 7.20 | <0.0001 | <0.0001 | 8.99 | <0.0001 | <0.0001 | 0.36 | > 0.5 | > 0.5 |
| Posterior Fusiform | Identity | 4.92 | 0.0001 | 0.0004 | 5.99 | <0.0001 | <0.0001 | 0.95 | 0.3453 | > 0.5 |
| | Emotion | 3.72 | 0.0013 | 0.0077 | 5.23 | <0.0001 | 0.0002 | 0.57 | > 0.5 | > 0.5 |
| Superior Temporal | Identity | 2.64 | 0.0153 | 0.0696 | 1.38 | 0.1822 | > 0.5 | 1.02 | 0.3133 | > 0.5 |
| | Emotion | 3.25 | 0.0039 | 0.0176 | 4.05 | 0.0005 | 0.0023 | 0.18 | > 0.5 | > 0.5 |
| Amygdala | Identity | 0.74 | 0.4654 | > 0.5 | −0.71 | 0.4869 | > 0.5 | 0.15 | > 0.5 | > 0.5 |
| | Emotion | 0.18 | > 0.5 | > 0.5 | 1.68 | 0.1065 | 0.3220 | 0.97 | > 0.5 | > 0.5 |
| Anterior Temporal | Identity | 0.46 | > 0.5 | > 0.5 | 0.04 | 0.9696 | > 0.5 | 0.29 | > 0.5 | > 0.5 |
| | Emotion | 1.56 | 0.1328 | 0.4018 | 1.19 | 0.2465 | > 0.5 | 1.97 | 0.0555 | > 0.5 |
| Inferior Frontal | Identity | 1.95 | 0.0643 | 0.2334 | 2.87 | 0.0086 | 0.0390 | 0.23 | > 0.5 | > 0.5 |
| | Emotion | 2.53 | 0.0195 | 0.0707 | 1.84 | 0.0787 | 0.2856 | 0.30 | > 0.5 | > 0.5 |
| V1 | Identity | 5.99 | <0.0001 | <0.0001 | 5.36 | <0.0001 | 0.0001 | 0.28 | > 0.5 | > 0.5 |
| | Emotion | 6.50 | <0.0001 | <0.0001 | 5.05 | <0.0001 | 0.0002 | 0.29 | > 0.5 | > 0.5 |

Firstly, different facial identities could be reliably distinguished based on neural responses in inferior occipital cortex, posterior fusiform cortex, and V1 in both groups. In addition, facial identity could be decoded from neural activation patterns in inferior frontal cortex in the NT-group, but not in the ASD-group (Fig. 5A). Identities could not be reliably decoded from neural responses in superior temporal cortex, amygdala or anterior temporal cortex. Secondly, different facial expressions could be decoded from neural activation patterns in inferior occipital cortex, posterior fusiform cortex, superior temporal cortex, and V1 in both groups (Fig. 5B). Emotion could not be reliably distinguished based on neural responses in the other ROIs: amygdala, anterior temporal cortex and inferior frontal cortex. Regarding differences between the groups, we found no significant group differences in neural response patterns for either identity or emotion.

Fig. 5.

Results multivariate fMRI analysis. (A) MVPA results for decoding of facial identity, FDR corrected. (B) MVPA results for decoding of facial emotional expression, FDR corrected. Red stars indicate a significance level of pFDR < 0.001, while grey stars indicate a significance level of 0.001 < pFDR < 0.05. Error bars display standard errors of the mean (SEM). (For interpretation of the references to colour in this figure legend, the reader is referred to the web version of this article.)

Finally, we wanted to check if particular regions of interest play a different role in identity versus emotion discrimination. For this purpose, we performed a repeated measures ANOVA with ‘Decoding accuracy’ as the dependent variable, ‘Group’ as between-subject factor, and within-subject factors ‘Condition’ (identity, emotion) and ‘ROI’ (restricted to the ROIs that showed significant classification for both emotion and identity: inferior occipital cortex, posterior fusiform cortex and superior temporal cortex). We found no significant interaction between ROI and Condition on decoding accuracy (F2,225 = 0.69, p = .5008), suggesting the ROIs play a similar role in the decoding of identity and emotion.

3.4. Adaptation fMRI results

We were interested in the differences in adaptation to faces between individuals with and without ASD. For this purpose, we calculated three adaptation indices by subtracting the 'AllSame' baseline from the three other conditions of interest (AllDiff, DiffId, DiffEmo). This resulted in three adaptation indices: 'AllDiff-AllSame', 'DiffId-AllSame', and 'DiffEmo-AllSame'. These adaptation indices captured the release from adaptation and were computed in both groups within each ROI. All statistical details are given in Table 6. Importantly, there were no significant differences between the groups concerning the average level of activation in the AllSame baseline condition (t44 < 1.86, punc. > 0.0697, pFDR > 0.50, Fig. 6A).

Table 6.

Results adaptation fMRI indices (p < 0.05 shown in bold).

| ROI | AI | ASD t21 | ASD punc | ASD pFDR | NT t23 | NT punc | NT pFDR | Difference t44 | Difference punc | Difference pFDR |
|---|---|---|---|---|---|---|---|---|---|---|
| Inferior Occipital | AI1 | 10.01 | <0.0001 | <0.0001 | 7.24 | <0.0001 | <0.0001 | <0.01 | > 0.5 | > 0.5 |
| | AI2 | 7.98 | <0.0001 | <0.0001 | 7.44 | <0.0001 | <0.0001 | 0.75 | 0.4575 | > 0.5 |
| | AI3 | 8.49 | <0.0001 | <0.0001 | 6.75 | <0.0001 | <0.0001 | 0.85 | 0.4003 | > 0.5 |
| Posterior Fusiform | AI1 | 10.13 | <0.0001 | <0.0001 | 12.38 | <0.0001 | <0.0001 | 0.01 | > 0.5 | > 0.5 |
| | AI2 | 8.23 | <0.0001 | <0.0001 | 12.31 | <0.0001 | <0.0001 | 0.21 | > 0.5 | > 0.5 |
| | AI3 | 7.51 | <0.0001 | <0.0001 | 8.13 | <0.0001 | <0.0001 | 0.01 | > 0.5 | > 0.5 |
| Superior Temporal | AI1 | 4.64 | 0.0001 | 0.0004 | 4.51 | 0.0002 | 0.0006 | 1.21 | 0.2336 | > 0.5 |
| | AI2 | 3.43 | 0.0025 | 0.0092 | 4.54 | 0.0002 | 0.0005 | 1.10 | 0.2733 | > 0.5 |
| | AI3 | 4.30 | 0.0003 | 0.0011 | 4.63 | 0.0001 | 0.0004 | 1.24 | 0.2220 | > 0.5 |
| Amygdala | AI1 | 5.18 | <0.0001 | 0.0001 | 4.25 | 0.0003 | 0.0009 | 1.81 | 0.0771 | > 0.5 |
| | AI2 | 2.52 | 0.0199 | 0.0516 | 2.40 | 0.0250 | 0.0649 | 0.45 | > 0.5 | > 0.5 |
| | AI3 | 3.24 | 0.0039 | 0.0119 | 0.17 | 0.8633 | > 0.5 | 2.10 | 0.0419 | > 0.5 |
| Anterior Temporal | AI1 | 4.92 | <0.0001 | 0.0002 | 3.25 | 0.0035 | 0.0091 | 1.48 | 0.1471 | > 0.5 |
| | AI2 | 2.96 | 0.0075 | 0.0225 | 3.62 | 0.0014 | 0.0043 | 0.29 | > 0.5 | > 0.5 |
| | AI3 | 3.07 | 0.0058 | 0.0152 | 1.62 | 0.1181 | 0.3573 | 0.83 | 0.4132 | > 0.5 |
| Inferior Frontal | AI1 | 7.47 | <0.0001 | <0.0001 | 8.03 | <0.0001 | <0.0001 | 1.30 | 0.2010 | > 0.5 |
| | AI2 | 5.87 | <0.0001 | <0.0001 | 7.15 | <0.0001 | <0.0001 | 1.22 | 0.2282 | > 0.5 |
| | AI3 | 7.74 | <0.0001 | <0.0001 | 7.93 | <0.0001 | <0.0001 | 0.81 | 0.4245 | > 0.5 |
| V1 | AI1 | 5.42 | <0.0001 | 0.0001 | 6.87 | <0.0001 | <0.0001 | 1.81 | 0.0768 | > 0.5 |
| | AI2 | 5.80 | <0.0001 | <0.0001 | 8.08 | <0.0001 | <0.0001 | 0.84 | 0.4043 | > 0.5 |
| | AI3 | 7.74 | 0.0001 | 0.0005 | 5.78 | <0.0001 | <0.0001 | 0.98 | 0.3348 | > 0.5 |

Note. AI1: ‘AllDiff-AllSame’, AI2: ‘DiffId-AllSame’, and AI3: ‘DiffEmo-AllSame’.

Fig. 6.

Results adaptation analysis. (A) Average level of activation (beta-values) in the baseline condition (‘AllSame’). (B) Results showing release from adaptation to changes in both facial identity and emotion (AllDiff-AllSame). (C) Results showing release from adaptation to changes in facial identity (DiffId-AllSame). (D) Results showing release from adaptation to changes in facial expression (DiffEmo-AllSame). Red stars indicate a significance level of pFDR < 0.001, while grey stars indicate a significance level of 0.001 < pFDR < 0.05. Error bars display standard errors of the mean (SEM). (For interpretation of the references to colour in this figure legend, the reader is referred to the web version of this article.)

The first adaptation index (‘AllDiff-AllSame’) was significant in all ROIs in both groups. Hence, we observed a release from adaptation when a stimulus changed regarding both identity and emotion. Additionally, we found no group differences with regard to the release from adaptation to identity and emotion simultaneously (Fig. 6B).

The second adaptation index (‘DiffId-AllSame’) was significant in the inferior occipital cortex, posterior fusiform cortex, superior temporal cortex, anterior temporal cortex, inferior frontal cortex and V1 in both groups. In the amygdala, the adaptation index was significant in neither group. Hence, in all ROIs except the amygdala, we observed a release from adaptation when a different facial identity was presented while the emotional expression remained stable. Additionally, we found no significant differences between the groups regarding the release from adaptation to identity (Fig. 6C).

The third adaptation index (‘DiffEmo-AllSame’) was significant in the inferior occipital cortex, posterior fusiform cortex, superior temporal cortex, inferior frontal cortex and V1 in both groups. In the amygdala and the anterior temporal cortex, the adaptation index was significant in the ASD-group but not in the NT-group. Thus, in most ROIs, we observed a release from adaptation when a different facial expression was presented while the identity remained stable. Additionally, we found no significant differences in these adaptation indices between the groups (Fig. 6D).

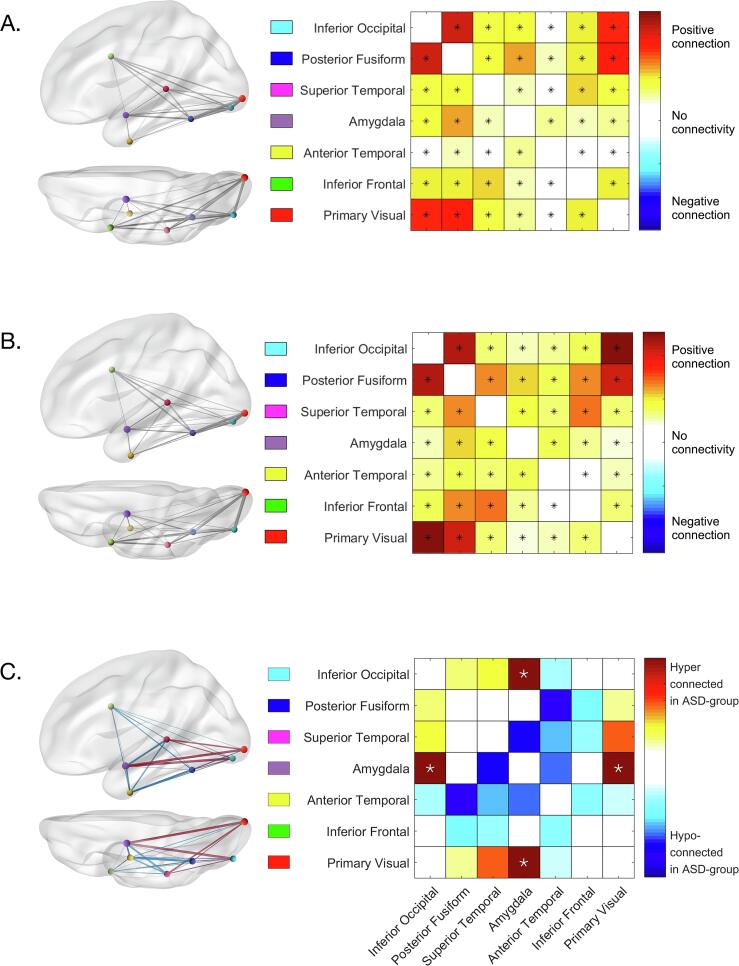

3.5. Functional connectivity

We investigated the functional connectivity among all pairs of ROIs, as well as potential group differences in connectivity. Within each group, we found that all pairwise connections between the investigated ROIs were significant with a critical pFDR of 0.001 (ASD-group: all t21 > 3.67, pFDR < 0.001, Fig. 7A; NT-group: all t23 > 3.50, pFDR < 0.001, Fig. 7B). Next, we compared the patterns of connectivity between the ASD- and NT-groups (Fig. 7C). After FDR correction, we found significantly stronger connections in the ASD-group between amygdala and inferior occipital cortex (t44 = 2.30, pFDR = 0.0132), and amygdala and V1 (t44 = 2.23, pFDR = 0.0156).

Fig. 7.

Functional connectivity levels in the ASD-group (A) and in the NT-group (B). Higher t-values (red) indicate a higher level of connectivity. Stars indicate pairs of regions that are significantly connected (pFDR < 0.001). Within the brain, the thickness of the line indicates the strength of connection. (C) Differences in functional connectivity levels between both groups. Higher t-values (red) indicate stronger connectivity in the ASD-group, while lower values (blue) indicate weaker connectivity in the ASD-group. Stars indicate significant differences between the groups at pFDR < 0.05. Within the brain, the thickness of the lines indicates the size of the group difference, with red lines signalling hyperconnectivity and blue lines signalling hypoconnectivity in the ASD-group. (For interpretation of the references to colour in this figure legend, the reader is referred to the web version of this article.)

We checked whether individual differences in the number of acquired fMRI runs affected the observed group differences by running an ANCOVA on the functional connectivity data in every ROI-combination that showed a significant difference between the groups (i.e. inferior occipital cortex – amygdala and amygdala – V1). In our model, we included ‘Connectivity’ as a dependent variable, ‘Group’ as a between-subject factor and ‘Number of runs’ as a covariate. This additional ANCOVA revealed that group differences remained significant even when adding the number of acquired runs as a covariate: main effect of group on functional connectivity between amygdala and inferior occipital (F1,43 = 5.19, p = .0277) and between amygdala and V1 (F1,43 = 5.01, p = .0304).

4. Discussion

4.1. Equal behavioural performance in ASD and NT

At the behavioural level, we did not find any differences between individuals with and without ASD in the recognition of facial identity and expression. Detailed inspection of the behavioural data shows not even a tendency towards a subthreshold group difference. This is despite the fact that we intentionally selected tasks that should yield the highest chance of revealing group differences, as the face processing tasks did not allow mere perceptual matching (cf. Weigelt et al., 2012). Likewise, for the behavioural task in the scanner, we did not observe differences between groups, similar to Kleinhans and colleagues (2009). While behavioural studies have often demonstrated atypical processing of facial identity (Tang et al., 2015, Weigelt et al., 2012) and facial expression (Harms et al., 2010, Uljarevic and Hamilton, 2013), this is certainly not a consistent finding (e.g. Adolphs et al., 2001, Kleinhans et al., 2009, Sterling et al., 2008). However, studies that do not show group differences on standardized tests typically involve children and adolescents (e.g. McPartland et al., 2011, O’Hearn et al., 2010) or familiar faces (e.g. Barton et al., 2004). Hence, although our finding seems relatively uncommon for the studied population, it is not necessarily at odds with the more general literature. It is relevant to emphasize that we studied a sample of high-functioning individuals. To the extent that they experience difficulties with face processing, they might have learned to compensate for them (Harms et al., 2010).

Note that the question of whether the groups differ at the neural level is not necessarily contingent upon performance differences at the behavioural level. Studies often report similar behavioural performance (e.g. Pierce, 2001), which may be achieved through different neural processes. Furthermore, any observed neural difference would then represent a genuine neural difference between the groups, as it cannot be a by-product of a behavioural difference. In this regard, a recent series of EEG studies in school-aged boys with ASD versus matched controls showed highly significant group differences in neural processing of facial identity and expression. These neural differences were observed even though the ASD participants were unimpaired on a large battery of behavioural facial identity and expression processing tasks (Van der Donck et al., 2020, Van der Donck et al., 2019, Vettori et al., 2019).

4.2. Similar univariate brain activity in ASD and NT

We found no significant differences in the average level of brain activity in response to dynamic face stimuli between individuals with and without ASD, in any of the ROIs. This is in line with a number of neuroimaging studies reporting equal face-evoked activity across groups (e.g. Aoki et al., 2015; Bird et al., 2006; Hadjikhani et al., 2004a, Hadjikhani et al., 2004b, Kleinhans et al., 2008). However, the bulk of previous studies did find group differences. These studies mainly reported hypoactivation of fusiform gyrus, superior temporal cortex, amygdala, and inferior frontal cortex in individuals with ASD. A few studies reported hyperactivation in superior temporal cortex, amygdala and anterior temporal cortex (for reviews and meta-analyses, see Aoki et al., 2015, Di Martino et al., 2009, Dichter, 2012, Nomi and Uddin, 2015, Philip et al., 2012).

It is not clear why we did not observe group differences, whereas other researchers did. One possible reason is our fairly coarse ROI definition at a normative anatomical level. Indeed, Kleinhans and colleagues (2008) defined their fusiform region in a similar anatomical way, and they did not observe any group differences in face-selective activation either. However, even when a strictly defined subject-specific fusiform face area is used, a difference in activity between individuals with and without ASD is not consistently reported (e.g. Hadjikhani et al., 2004a). Another explanation for the discrepancy between our findings and those of earlier studies may be the contrast we used to calculate univariate activity. Here, we contrasted neural activity while participants were looking at dynamic faces with neural activity while they were looking at a fixation cross. Most previous studies have used more specific contrasts, such as faces versus objects (e.g. Humphreys et al., 2008) or emotional faces versus neutral faces (e.g. Critchley et al., 2000). However, Pelphrey et al. (2007) applied an identical contrast, comparing brain activity during dynamic face processing with brain activity during presentation of a fixation cross. In contrast to our results, they did observe differences between adults with and without ASD in several face-sensitive regions, including hypo-activation in fusiform gyrus and amygdala. Finally, a major difference between our study and most other studies is that ours was tailored towards detecting subtle differences in the quality of facial representations via MVPA and adaptation fMRI analysis, and therefore entailed many more trials and runs than conventional subtraction-based fMRI studies. As a result, minor group differences in the activity level of the face processing network may plausibly have been eliminated, because both participant groups reached a maximal plateau due to the repeated stimulation.
Although speculative, supporting evidence for this reasoning is found in the observation that other studies pinpointing social cognition in ASD via extensive MVPA fMRI designs did not observe group differences in basic activity level even though they did observe group differences in the neural representations (e.g. Lee Masson et al., 2019).

Unexpectedly, we observed a group difference in V1 activity that was significant before correction but did not survive FDR correction. This finding contrasts with that of Hadjikhani and colleagues (2004b), who reported normal activity levels in early visual areas in ASD during face processing. Moreover, the direction of the group difference in V1 (higher activity in NT than in ASD) contrasts with the general perceptual neuroimaging literature on ASD. When group differences in brain activity are observed, individuals with ASD typically show reduced activity in higher-level social brain areas and increased activity in low-level primary visuo-perceptual areas (Samson et al., 2012). This pattern has been interpreted as evidence for impaired holistic and predictive processing, and for increased mobilization of low-level perceptual strategies in ASD (Mottron et al., 2006, Sapey-Triomphe et al., 2020, Van de Cruys et al., 2014).
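The difference between an uncorrected and an FDR-corrected result can be made concrete with a minimal sketch of the Benjamini-Hochberg procedure, the most widely used FDR method. We assume, without confirmation from this section, that this is the variant that was applied; the ROI abbreviations and p-values below are hypothetical placeholders, not the study's results.

```python
def fdr_bh(pvals):
    """Benjamini-Hochberg adjusted p-values, returned in the input order.

    Each p-value is scaled by m / rank (rank 1 = smallest p); a running minimum
    taken from the largest p downwards keeps the adjusted values monotone, and
    starting that minimum at 1.0 caps every adjusted value at 1.
    """
    m = len(pvals)
    order = sorted(range(m), key=lambda i: pvals[i])
    adjusted = [0.0] * m
    running_min = 1.0
    for rank in range(m, 0, -1):
        i = order[rank - 1]
        running_min = min(running_min, pvals[i] * m / rank)
        adjusted[i] = running_min
    return adjusted


# Hypothetical uncorrected p-values, one per ROI (illustrative only, not the study's data).
roi_p = {"V1": 0.03, "IOC": 0.41, "pFus": 0.52, "STC": 0.27, "ATC": 0.66, "AMY": 0.18, "IFC": 0.74}
q = dict(zip(roi_p, fdr_bh(list(roi_p.values()))))
```

With these illustrative values, V1 (p = 0.03 uncorrected) receives an adjusted value of about 0.21, so a result that is significant at 0.05 before correction need not survive correction across seven ROIs.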

4.3. Similar quality of multivariate neural representations in ASD and NT

We were able to consistently classify different emotions and identities based on neural activity patterns in a series of face-related brain regions. Decoding was possible for both identity and emotion in inferior occipital cortex, posterior fusiform cortex and V1, and for emotion but not identity in superior temporal cortex. Overall, we did not observe a robust anatomical dissociation between the decoding of identity and the decoding of emotion.
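The decoding analyses summarised above can be illustrated with a minimal leave-one-run-out classifier. This is a sketch under assumptions, not the study's pipeline: it uses a correlation-based nearest-centroid rule (a linear SVM would be an equally common choice) and assumes one activity pattern per label (emotion or identity) per run; the data layout and function names are hypothetical.

```python
from math import sqrt
from statistics import mean


def pearson(x, y):
    """Pearson correlation between two equal-length voxel patterns."""
    mx, my = mean(x), mean(y)
    dx = [a - mx for a in x]
    dy = [b - my for b in y]
    num = sum(a * b for a, b in zip(dx, dy))
    return num / sqrt(sum(a * a for a in dx) * sum(b * b for b in dy))


def loro_decoding(patterns):
    """Leave-one-run-out nearest-centroid decoding (hypothetical data layout).

    patterns[run][label] is one voxel pattern (list of floats). For each held-out
    run, a centroid per label is averaged over the remaining runs, and each
    held-out pattern is assigned the label whose centroid it correlates with most.
    Returns the proportion of correctly classified held-out patterns.
    """
    runs = sorted(patterns)
    correct = total = 0
    for test_run in runs:
        train_runs = [r for r in runs if r != test_run]
        labels = sorted(patterns[test_run])
        centroids = {
            lab: [mean(vals) for vals in zip(*(patterns[r][lab] for r in train_runs))]
            for lab in labels
        }
        for true_lab in labels:
            test_vec = patterns[test_run][true_lab]
            pred = max(labels, key=lambda lab: pearson(test_vec, centroids[lab]))
            correct += int(pred == true_lab)
            total += 1
    return correct / total
```

Chance level is 1 / (number of labels), for example 1/6 for the six emotions or 1/4 for the four identities; group-level inference would then test the accuracies against that value.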

Our results are largely consistent with previous fMRI studies showing significant decoding of face identity from neural responses in inferior occipital cortex, fusiform gyrus and V1 (Anzellotti et al., 2014, Goesaert and Op de Beeck, 2013, Nestor et al., 2011, Nestor et al., 2016, Nichols et al., 2010), and of facial expression in inferior occipital cortex, fusiform gyrus, superior temporal cortex and V1 (Harry et al., 2013, Wegrzyn et al., 2015). Furthermore, decoding accuracies in our study are comparable to those in previous studies, but our p-values are lower, probably because we tested a larger number of participants. In contrast to previous fMRI studies, we did not find significant identity decoding in anterior temporal cortex (Anzellotti et al., 2014, Goesaert and Op de Beeck, 2013, Kriegeskorte et al., 2007), superior temporal cortex or inferior frontal cortex (Nestor et al., 2016). Also in contrast to some findings in the literature, we did not find significant decoding of facial expressions in anterior temporal cortex or amygdala (Wegrzyn et al., 2015).

Most importantly in the present context, we tested whether the two groups differed in the quality of their neural representations. To our knowledge, only one MVPA study has investigated potential differences in facial (expression) representations in ASD, and it yielded evidence for intact facial expression representations in adults with ASD (Kliemann et al., 2018). Likewise, we found no convincing evidence for differences in the quality of neural facial representations, neither for identity nor for expression. The anterior temporal ROI was the only region showing a trend, with slightly higher decoding of facial expression in ASD (two-tailed uncorrected p = 0.0555). It could be argued that we should not rule out a small effect in that region too quickly. Nevertheless, we had no a priori hypothesis of an effect confined to the anterior temporal cortex, and the group difference resulted from the combination of non-significant above-chance decoding in ASD and non-significant below-chance decoding in NT. Furthermore, the effect was in the opposite direction to what would be expected given the literature on lower facial emotion recognition performance in ASD. For these reasons, we consider it appropriate to rely only on the p-values corrected for multiple comparisons. Hence, we found no indication of a group difference in the quality of facial identity and expression representations in any of the ROIs.

4.4. Similar neural adaptation to facial identity and expression in ASD and NT

We found significant activity in our baseline (AllSame) condition within all ROIs and significant adaptation indices in almost all ROIs (all except anterior temporal cortex and amygdala). This indicates a significant release from adaptation in almost all ROIs when the identity, the emotion or both change. Our findings are in line with reports of adaptation to repeated identity in inferior occipital cortex, fusiform gyrus and superior temporal cortex (Andrews and Ewbank, 2004, Winston et al., 2004, Xu and Biederman, 2010), and of adaptation to repeated expression in fusiform gyrus (Xu and Biederman, 2010) and STS (Winston et al., 2004). However, our findings partly contrast with those of Andrews and Ewbank (2004), who did not find adaptation to repeated identities in the superior temporal lobe.
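One simple way to express release from adaptation relative to the AllSame baseline is a difference score per participant and ROI, tested against zero across participants. This formula is an assumption for illustration, since the exact definition of the adaptation index is not given in this section, and the change-condition labels are hypothetical; only AllSame is taken from the text.

```python
from math import sqrt
from statistics import mean, stdev

# Hypothetical labels for the three change conditions; only "AllSame" comes from the text.
CHANGE_CONDITIONS = ("IdentityChange", "EmotionChange", "BothChange")


def adaptation_index(betas):
    """Release from adaptation for one participant and ROI (assumed definition).

    betas maps condition name -> response estimate. The index is the mean response
    to the change conditions minus the response to the AllSame baseline, so values
    above zero indicate a release from adaptation.
    """
    return mean(betas[c] for c in CHANGE_CONDITIONS) - betas["AllSame"]


def one_sample_t(values):
    """t statistic testing whether the mean index across participants differs from 0."""
    return mean(values) / (stdev(values) / sqrt(len(values)))
```

A group comparison of adaptation would then contrast the per-participant indices of the ASD and NT groups rather than testing each group against zero.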

Furthermore, we did not find significant differences in adaptation to faces between ASD and NT participants. These findings contrast with those of Ewbank and colleagues (2017), who found reduced adaptation to neutral faces in individuals with ASD, and with the finding of reduced neural habituation in the amygdala of individuals with ASD (Swartz et al., 2013). The discrepancy could be due to differences in the studied population, as Swartz and colleagues (2013) studied children and adolescents. The task may also account for the difference: we used an explicit task in which participants focused on the emotion and identity of the faces, whereas the two above-mentioned studies used an implicit task.

4.5. Slight differences in functional connectivity of the face processing network in ASD versus NT

We studied intrinsic functional connectivity between all seven ROIs after regressing out task-evoked activity, and assessed possible differences between individuals with and without ASD. We opted for this artificial resting-state functional connectivity because a targeted task-based functional connectivity analysis was not compatible with the design of this study. In particular, task-based functional connectivity entails a contrast between tasks or stimuli, which was not possible here because both dimensions (identity and emotion) were presented simultaneously, at the same time points.
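Regressing out task-evoked activity and then correlating the residual ROI time series can be sketched as follows. This is a minimal, stdlib-only illustration under assumptions: the task regressors are passed as plain lists (in practice they would be HRF-convolved condition regressors), nuisance regression and filtering are omitted, and the Fisher-z clipping threshold is an arbitrary choice.

```python
from math import atanh, sqrt
from statistics import mean


def dot(x, y):
    return sum(a * b for a, b in zip(x, y))


def residualize(y, regressors):
    """Return y minus its least-squares fit on an intercept plus the task regressors.

    Modified Gram-Schmidt orthonormalises the regressors; subtracting the projection
    of y onto that orthonormal basis yields the ordinary least-squares residual.
    """
    n = len(y)
    basis = []
    for reg in [[1.0] * n] + [list(r) for r in regressors]:
        v = [float(x) for x in reg]
        for b in basis:
            proj = dot(v, b)
            v = [vi - proj * bi for vi, bi in zip(v, b)]
        norm = sqrt(dot(v, v))
        if norm > 1e-10:  # drop regressors that are collinear with earlier ones
            basis.append([vi / norm for vi in v])
    resid = [float(x) for x in y]
    for b in basis:
        proj = dot(resid, b)
        resid = [ri - proj * bi for ri, bi in zip(resid, b)]
    return resid


def pearson(x, y):
    mx, my = mean(x), mean(y)
    dx = [a - mx for a in x]
    dy = [b - my for b in y]
    return dot(dx, dy) / sqrt(dot(dx, dx) * dot(dy, dy))


def residual_connectivity(roi_series, task_regressors, clip=0.999999):
    """Fisher-z transformed correlations between task-residualised ROI time series.

    r is clipped to +/- clip so that atanh stays finite for (near-)perfect correlations.
    """
    names = sorted(roi_series)
    resid = {k: residualize(roi_series[k], task_regressors) for k in names}
    conn = {}
    for i, a in enumerate(names):
        for b in names[i + 1:]:
            r = max(-clip, min(clip, pearson(resid[a], resid[b])))
            conn[(a, b)] = atanh(r)
    return conn
```

Because the task regressors are removed first, two ROIs that respond in opposite directions to the task can still show strongly positive residual coupling, which is the kind of background connectivity this analysis targets.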

We found that all ROIs implicated in the extended face processing network show a pattern of temporal co-activation, suggesting that, in both groups, these regions work closely together when processing dynamic faces. This is in line with the literature identifying these ROIs as relevant for face processing, and with previous studies showing significant connections between these regions in both groups (Kleinhans et al., 2008). Although all ROIs were significantly co-activated, the pattern of functional connections was still differentiated. Some areas, such as inferior occipital cortex, posterior fusiform cortex and V1, were very strongly co-activated in both groups. In contrast, amygdala and anterior temporal cortex showed relatively weaker overall functional connectivity with the other regions.

When comparing the intrinsic functional connectivity of the face processing network between individuals with and without ASD, we observed atypical functional connectivity for the amygdala. In particular, the ASD-group showed stronger functional connections between the amygdala and lower-level visual areas (i.e. V1 and inferior occipital cortex). To our knowledge, this specific finding has not been reported in previous studies. Earlier studies, using a variety of task paradigms, have suggested atypical functional connectivity in the ASD-group, mainly hypoconnectivity among several face-sensitive regions, but the results are not consistent. For instance, Wicker and colleagues (2008) adopted an effective functional connectivity approach using dynamic facial expression stimuli and demonstrated that occipital cortex activity had a weaker influence on activity in the fusiform gyrus in adults with ASD. Kleinhans et al. (2008) applied a task-based functional connectivity analysis contrasting faces and houses, and revealed reduced connectivity between fusiform gyrus and amygdala in the ASD-group. Koshino and colleagues (2008) also used a task-based approach, contrasting faces and fixation, and found reduced connectivity between fusiform gyrus and inferior frontal cortex in individuals with ASD. Finally, studies comparing resting-state fMRI data of individuals with and without ASD also typically reported hypoconnectivity among several face-related regions, including the fusiform gyrus, superior temporal sulcus and amygdala (e.g. Abrams et al., 2013, Alaerts et al., 2014, Anderson et al., 2011, Guo et al., 2016). For an overview of pooled resting-state fMRI studies in ASD, see the ABIDE consortium web pages (http://fcon_1000.projects.nitrc.org/indi/abide/; Di Martino et al., 2014, Di Martino et al., 2017) and recent reviews (Hull et al., 2017, Lau et al., 2019).
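A group difference in a single connection (for example amygdala-V1) could be assessed with a two-sample permutation test on per-participant Fisher-z connectivity values. The choice of test, the number of permutations and the seed are assumptions for illustration; this section does not state which test was used, and any input values would be hypothetical.

```python
import random
from statistics import mean


def permutation_test(group_a, group_b, n_perm=5000, seed=0):
    """Two-sided permutation test on the difference in group means.

    Group labels are shuffled n_perm times; the p-value is the proportion of
    shuffles whose absolute mean difference is at least the observed one, with
    +1 in numerator and denominator so that p is never exactly 0.
    """
    rng = random.Random(seed)
    observed = abs(mean(group_a) - mean(group_b))
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    count = 0
    for _ in range(n_perm):
        rng.shuffle(pooled)
        diff = abs(mean(pooled[:n_a]) - mean(pooled[n_a:]))
        if diff >= observed:
            count += 1
    return (count + 1) / (n_perm + 1)
```

Applied to every ROI pair, the resulting p-values would still need correction for multiple comparisons.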

Note that hypoconnectivity has not been unanimously observed either: some authors observed functional hyperconnectivity in occipitotemporal brain regions (e.g. Keown et al., 2013, Supekar et al., 2013), and others have emphasized the individual variability and idiosyncrasy of spontaneous connectivity patterns in the autistic brain (Hahamy et al., 2015). In this regard, our observation of functional hyperconnectivity between amygdala and inferior occipital cortex, and between amygdala and V1, in the ASD-group may be relatively uncommon but is not irreconcilable with previous research. Rather, it adds yet another way in which functional connectivity, especially of the face processing network, can be atypical in individuals with ASD. The inconsistency of results may be attributed to the use of different designs and analysis approaches and/or to the heterogeneity of individuals on the autism spectrum. Our findings suggest that the amygdala functions differently within the face processing network in the ASD-group, even though each node is, overall, activated to a similar degree. Speculatively, as the amygdala is involved in attributing salience and emotional valence to perceptual input (Adolphs, 2010), the stronger functional connectivity between amygdala and low-level visual regions may suggest that individuals with ASD attribute emotional meaning to more basic perceptual features of the face, rather than emphasizing higher-level, integrative socio-communicative cues.

4.6. Methodological considerations

In our study, we used dynamic stimuli to investigate the processing of facial identity and expression, whereas previous studies have mostly used static stimuli. Dynamic stimuli are thought to be more ecologically valid and may therefore reveal atypical activation and connectivity patterns in individuals with ASD more robustly (Sato et al., 2012, Smith et al., 2010). Indeed, in typical participants, dynamic facial stimuli have been shown to yield stronger activations than static facial stimuli in social brain regions (fusiform gyrus, superior temporal cortex, inferior frontal cortex and amygdala) (Kilts et al., 2003, LaBar et al., 2003, Sato et al., 2004, Schultz and Pilz, 2009, Trautmann et al., 2009). Individuals with ASD seem to lack this enhanced response to dynamic stimuli, as shown by studies directly comparing responses to dynamic and static facial stimuli in individuals with ASD and neurotypicals (Pelphrey et al., 2007, Sato et al., 2012). In response to dynamic facial stimuli, individuals with ASD showed hypoactivation in fusiform gyrus (Pelphrey et al., 2007), superior temporal cortex (Wicker et al., 2008) and amygdala (Pelphrey et al., 2007). For visual motion in general, decreased responses in V1 have been observed in individuals with ASD compared to neurotypicals (Robertson et al., 2014). Hence, dynamic (facial) stimuli seem to yield higher responses and larger differences between the groups. As we observed only subtle group differences, and only in functional connectivity, our findings do not support the suggestion that dynamic stimuli amplify group differences. However, our findings are in line with the only other MVPA study in this domain (Kliemann et al., 2018), which also used dynamic facial stimuli and observed no differences between the groups.