Abstract

Background

Impairments in social cognition contribute significantly to disability in schizophrenia patients (SzP). Perception of facial expressions is critical for social cognition. Intact perception requires an individual to visually scan a complex dynamic social scene for transiently moving facial expressions that may be relevant for understanding the scene. The relationship of visual scanning for these facial expressions and social cognition remains unknown.

Methods

In 39 SzP and 27 healthy controls (HC), we used eye-tracking to examine the relationship between performance on The Awareness of Social Inference Test (TASIT), which tests social cognition using naturalistic video clips of social situations, and visual scanning, measured as each individual’s eye position relative to the mean eye position of HC. We then examined the relationship of visual scanning to the specific visual features (motion, contrast, luminance, faces) within the video clips.

Results

TASIT performance was significantly impaired in SzP for trials involving sarcasm (p<10⁻⁵). Visual scanning was significantly more variable in SzP than HC (p<10⁻⁶), and predicted TASIT performance in HC (p=0.02) but not SzP (p=0.91), differing significantly between groups (p=0.04). During the visual scanning, SzP were less likely to be viewing faces (p=0.0001) and less likely to saccade to facial motion in peripheral vision (p=0.008).

Conclusions

SzP show highly significant deficits in use of visual scanning of naturalistic social scenes to inform social cognition. Alterations in visual scanning patterns may originate from impaired processing of facial motion within peripheral vision. Overall, these results highlight the utility of naturalistic stimuli in the study of social cognition deficits in schizophrenia.

Keywords: social cognition, visual search, motion, face emotion recognition, attention

Introduction

Deficits in social cognition are a major cause of psychosocial disability in schizophrenia, but the underlying mechanisms remain incompletely understood (M. F. Green, Horan, & Lee, 2015; 2019). In addition to the perception of auditory cues and cognitive processing speed, visual scanning of social scenes — the exploration of the scenes with saccadic eye-movements — is an important component of social cognition (M. F. Green et al., 2015; Zaki & Ochsner, 2009). Visual scanning patterns are driven in part by variation in low-level visual features, such as luminance, contrast, and motion speed (Kusunoki, Gottlieb, & Goldberg, 2000; Marsman et al., 2016; Shepherd, Steckenfinger, Hasson, & Ghazanfar, 2010; White et al., 2017), as well as by as-yet unexplained cognitive factors (Wilming et al., 2017). Disturbances in the processing of all of these visual features have been reported previously in SzP (Butler et al., 2009; Calderone et al., 2013; Chen et al., 1999; Javitt, 2009; Martinez et al., 2018; Taylor et al., 2012) and have been linked to social cognitive deficits (M. F. Green et al., 2015; 2019; Javitt, 2009). However, how processing of visual features affects visual scanning and in turn social cognition in SzP has not been studied.

To examine the relationship between visual processing, visual scanning, and social cognition, we used a validated naturalistic test of social cognition, The Awareness of Social Inference Test (TASIT) (McDonald, Flanagan, Rollins, & Kinch, 2003), while tracking eye movements. Unlike the static stimuli in traditional tests of social cognition, such as ER-40 or Reading the Mind in the Eyes Test (Pinkham, Penn, Green, & Harvey, 2015), TASIT contains the motion dynamics of real-world social situations, which are the strongest predictors of visual scanning in naturalistic scenarios (White et al., 2017).

The TASIT consists of a series of video-based vignettes in which 2–3 individuals interact. In each vignette the main actor is either being sarcastic or lying to another character. In the sarcasm videos, the main actor uses exaggerated facial expressions and auditory prosody to indicate that the intended meaning is counterfactual to the plain meaning of his utterance. Correctly answering the questions about the sarcasm videos, then, requires the viewer to optimally detect both the visual and auditory social cues. By contrast, lies are detected by comparing the content of what the actor is saying to information conveyed elsewhere in the video, so that auditory prosody and facial expression are not critical factors.

SzP show reliable deficits in this task and are particularly impaired in detection of sarcasm (Pinkham et al., 2015; Sparks, McDonald, Lino, O’Donnell, & Green, 2010). Furthermore, TASIT deficits correlate significantly with real-world social functioning, supporting its ecological relevance (Pinkham et al., 2015). This test thus serves as a potentially powerful platform to use for investigating underlying mechanisms related to social cognition. We hypothesized that visual scanning patterns would differ in SzP compared to HC, and that visual scanning would predict TASIT performance in both groups independent of other relevant factors, such as the detection of sarcasm in spoken sentences, recognition of facial expressions, and cognitive processing speed (Holdnack, Prifitera, Weiss, & Saklofske, 2015).

During visual scanning, each saccade represents a decision to move the eyes from the current location to another visual feature that may provide additional information about the social scene (Corbetta, Patel, & Shulman, 2008; Patel, Sestieri, & Corbetta, 2019). Given that faces are a key visual feature used to make inferences about the mental states of the people in the scene, we hypothesized that the divergent visual scanning patterns in SzP would result in decreased viewing of faces.

Sometimes the faces are near the current focus (in central vision, <5° from the fovea or center of the retina) and sometimes they are further away in peripheral vision (>5° from the fovea). In general, SzP are impaired in the processing of low-level visual features that depend on magnocellular visual processing pathways, in particular motion (Butler et al., 2005; Javitt, 2009; Martinez et al., 2018). Facial expressions in the real world involve slow subtle movements (Sowden, Schuster, & Cook, 2019), and SzP seem particularly impaired in processing moving facial expressions (Arnold, Iaria, & Goghari, 2016; Johnston et al., 2010; Kohler et al., 2008). Since magnocellular processing dominates peripheral vision, SzP may have greater deficits in the processing of peripheral versus central moving facial expressions (Dias et al., 2020; Javitt, 2009; Martinez et al., 2018). Therefore, we examined the visual scanning of faces in peripheral vision relative to low-level visual features (motion, contrast, and luminance), hypothesizing that the likelihood that SzP make saccades to moving facial expressions in peripheral vision would be reduced compared to healthy controls (HC).

However, methods for comparing visual scanning patterns between groups and linking them to the visual features in a video largely do not exist, thus requiring us to adapt or create a number of analytical techniques for this study. A key principle underlying our approach was to use the HC visual scanning pattern as the “gold standard” by which to compare SzP patterns, and then to examine the intervals during which SzP visual scanning patterns diverged from the HC. This approach takes advantage of previous observations that HC show highly convergent scan patterns of naturalistic scenes despite the lack of explicit instruction where to look (Marsman et al., 2016; Wilming et al., 2017).

We then used neurophysiologically based models of the visual field and saccade generation to examine how visual scanning related to the visual features that fell into central versus peripheral vision. Combined with automated detection and classification of visual features, these techniques allowed us to model the processing of the visual scene for each individual in a way that mimics their experience of the visual scanning of real-life social situations, thus allowing us to test our hypotheses about the link between divergent visual scanning patterns and social cognition.

Materials and Methods

Participants

42 SzP and 30 HC were recruited with informed consent in accordance with New York State Psychiatric Institute’s Institutional Review Board. 3 SzP and 3 HC were excluded from further behavioral analyses because of problems with the eye-tracking data, such as gross errors in calibration, leaving 39 SzP and 27 HC in the analyses. SzP were moderately ill, domiciled, recruited from the community, and stabilized on medication. HC were demographically matched to SzP with no history of major psychiatric disorders. See Supplementary Materials for more details.

Behavioral Testing

In multiple separate sessions, participants were evaluated on a combination of standard neuropsychological assessments, symptomology scales, and other behavioral tests, including the processing speed index (PSI) in the WAIS-III (Wechsler, 1997), Attitudinal Prosody (auditory sarcasm) (Leitman, Ziwich, Pasternak, & Javitt, 2006; Orbelo, Grim, Talbott, & Ross, 2005), Penn Emotion Recognition (ER-40) (Heimberg, Gur, Erwin, Shtasel, & Gur, 1992), and The Awareness of Social Inference Test (TASIT) (McDonald et al., 2003) with eye-tracking. PSI was chosen to represent cognitive processing speed as it best reflects full-scale cognitive capabilities in SzP and consists of visual search tasks that require visual scanning (Bulzacka et al., 2016). SzP also performed the MATRICS Cognitive Consensus Battery (MCCB) (Nuechterlein et al., 2008).

Group Comparisons and Correlations

All group and condition comparisons (except for those detailed in Saccades to Visual Features below) were performed using repeated measures ANOVAs and post-hoc t-tests with a threshold of p<0.05. All correlations between conditions were performed using linear regression, with group co-variance assessed with ANCOVAs. To assess the relative contributions of the various neuropsychological measures to predicting TASIT performance, the z-transformed measures were entered into a linear regression model predicting TASIT performance (also z-transformed) to give their partial correlations. These relative weights were then used to create a composite score to serve as a univariate predictor of TASIT performance. Since HC performance was near the maximum for TASIT, TASIT performance was arcsin transformed to perform Normal statistics.
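The transformation and composite-score steps described above can be sketched as follows. This is only an illustration under stated assumptions, not the analysis code used in the study: it assumes the common arcsine-square-root form of the arcsine transform for proportions, and the function names and any weights passed in are hypothetical.

```python
import math
from statistics import mean, stdev


def arcsine_transform(proportion_correct):
    """Variance-stabilizing transform for a proportion in [0, 1].

    Assumption: the arcsine-square-root form; the text says only
    "arcsin transformed".
    """
    if not 0.0 <= proportion_correct <= 1.0:
        raise ValueError("proportion must lie in [0, 1]")
    return math.asin(math.sqrt(proportion_correct))


def z_scores(values):
    """Standardize values to zero mean and unit sample standard deviation."""
    m, s = mean(values), stdev(values)
    return [(v - m) / s for v in values]


def composite_score(z_measures, weights):
    """Weighted sum of z-scored measures, one score per participant.

    z_measures: list of per-measure lists (one value per participant).
    weights: one weight per measure (hypothetical inputs here; in the
    text they are taken from the regression).
    """
    n_participants = len(z_measures[0])
    return [
        sum(w * measure[i] for w, measure in zip(weights, z_measures))
        for i in range(n_participants)
    ]
```

The transform stretches values near ceiling, which is why it is useful when one group performs close to the maximum score.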

Visual Scanning Measures and Analyses

To compare variability in visual scanning patterns between groups, we first calculated the mean eye-position and the standard deviation (sd) elliptical boundary on each video frame for each group. The axes of the elliptical boundary were determined by the sd of the x and y eye positions. We then compared group differences in the ellipse area averaged across frames, correcting for autocorrelation ($N^{*} = N/(2T_e)$, where $N$ is the number of frames, $N^{*}$ is the effective number of frames, and $T_e$ is the number of frames the autocorrelation takes to drop to $1/e$). We also counted, for each group, the percentage of individuals that fell outside of the HC 2 sd elliptical boundary. Each individual HC’s eye position was compared to the leave-one-out average and 2 sd elliptical boundary derived from all of the other HC.
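A minimal sketch of the two quantities in this paragraph, the ellipse area on a single frame and the autocorrelation-corrected number of frames, is given below. It assumes an axis-aligned ellipse whose semi-axes are the x and y standard deviations and reads the correction as the number of frames divided by twice the e-folding lag; all function names are hypothetical.

```python
import math
from statistics import mean, stdev


def ellipse_area(xs, ys, n_sd=1.0):
    """Area of the axis-aligned ellipse with semi-axes n_sd*sd(x) and n_sd*sd(y)."""
    return math.pi * (n_sd * stdev(xs)) * (n_sd * stdev(ys))


def autocorrelation(series, lag):
    """Autocorrelation of a frame-by-frame series at the given lag."""
    m = mean(series)
    denom = sum((v - m) ** 2 for v in series)
    if denom == 0:
        raise ValueError("series has zero variance")
    num = sum(
        (series[i] - m) * (series[i + lag] - m)
        for i in range(len(series) - lag)
    )
    return num / denom


def e_folding_lag(series):
    """First lag (in frames) at which the autocorrelation is 1/e or lower."""
    for lag in range(1, len(series)):
        if autocorrelation(series, lag) <= 1.0 / math.e:
            return lag
    return len(series)  # autocorrelation never decayed within the series


def effective_frames(series):
    """Effective number of independent frames: N / (2 * T_e)."""
    return len(series) / (2 * e_folding_lag(series))
```

The effective frame count, rather than the raw count, would then set the degrees of freedom for the group comparison of ellipse areas.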

To assess the degree of divergence of each individual’s eye position from the mean HC position, we calculated the z-transformed distance for each individual for each video frame. The z-transformed distance is the distance between the individual’s eye position and the mean HC position divided by the standard deviation of the HC eye position distribution (again individual HC were compared against a leave-one-out average of the other HC). This measure (log transformed for Normal statistics) emphasizes divergence by more heavily weighting eye positions that are distant from the HC mean during intervals when HC eye positions are relatively convergent, or when the HC standard deviation is small.
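The z-transformed distance on a single video frame could be computed along the following lines. The text does not specify how the two-dimensional standard deviation is applied, so this sketch assumes that the x and y offsets are each scaled by the reference group's standard deviation on that axis (consistent with the elliptical boundary above); the function names are hypothetical.

```python
import math
from statistics import mean, stdev


def z_distance(point, reference_points):
    """Distance of one gaze point from the reference mean, in reference sd units.

    Assumption: x and y offsets are scaled separately by the reference
    group's sd on each axis before taking the Euclidean norm.
    """
    xs = [p[0] for p in reference_points]
    ys = [p[1] for p in reference_points]
    zx = (point[0] - mean(xs)) / stdev(xs)
    zy = (point[1] - mean(ys)) / stdev(ys)
    return math.hypot(zx, zy)


def hc_leave_one_out(hc_points):
    """z-distance of each HC gaze point against all other HC on the same frame."""
    return [
        z_distance(p, hc_points[:i] + hc_points[i + 1:])
        for i, p in enumerate(hc_points)
    ]
```

For a patient, `z_distance` would be called with all HC gaze points on that frame as the reference. Because the denominator shrinks when HC gaze is tightly clustered, the same pixel offset yields a larger value on frames where HC agree on where to look.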

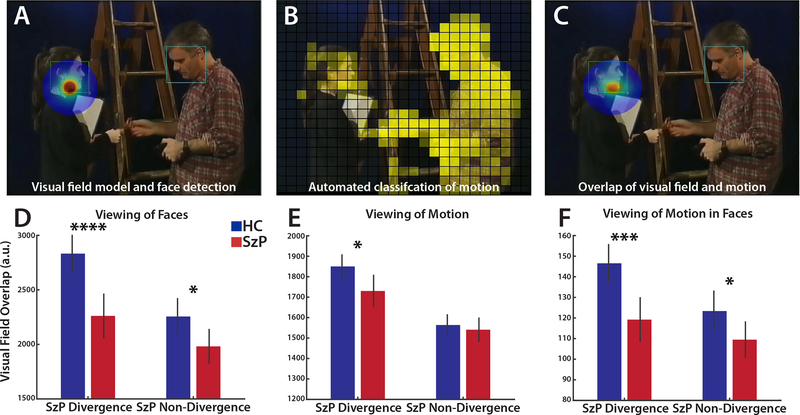

Visual Features Within the Visual Field

To quantify which visual features each individual looked at, we simulated the visual processing of the video frame using algorithms designed to mimic the processing by visual cortex. We first applied automated detection and outlining of faces to generate binary face masks (Zhu & Ramanan, 2012) (Figure 3A). Then each video frame was divided into 1°x1° cells and the strength of low-level visual features (motion speed, contrast, and luminance) was quantified for each cell in each video frame (Russ & Leopold, 2015) (Figure 3B). These visual features were then temporally smoothed and normalized to the maximum intensity to mimic processing in the visual cortex, and the low-level visual features were multiplied by the face masks to generate maps of visual features within faces.

To determine which visual features each participant was seeing, we modeled the visual field with a 2D representation of the V4 cortical magnification factor (Sereno et al., 1995) centered on the eye position for each video frame and smoothly weighted by its proximity to the center of the visual field (Figure 3A). This visual field model was then multiplied by each visual feature map to generate a salience map (Figure 3C). The surface integral of this salience map summarizes how much each visual feature is represented in the visual field for that participant on each video frame. To compare what each group was viewing, these summary measures were averaged across frames and individuals. See Supplemental Materials for further details.
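The weighting of a feature map by a gaze-centered visual field model can be illustrated with the sketch below. It does not reproduce the cortical magnification function used in the study: the inverse-linear falloff and its constant `e2` are placeholders chosen only for illustration, each grid cell is treated as 1° x 1°, and all names are hypothetical.

```python
import math


def field_weight(eccentricity_deg, e2=1.0):
    """Placeholder falloff: 1 at the center of gaze, decreasing with eccentricity.

    Assumption: stands in for the cortical magnification factor; e2 is an
    illustrative constant, not a value from the study.
    """
    return 1.0 / (1.0 + eccentricity_deg / e2)


def visual_field_overlap(feature_map, gaze_row, gaze_col, face_mask=None):
    """Sum of feature strength over cells, weighted by proximity to gaze.

    feature_map: 2D list of normalized feature strengths (one cell = 1 degree).
    face_mask: optional 2D list of booleans; cells outside faces are skipped.
    """
    total = 0.0
    for r, row in enumerate(feature_map):
        for c, strength in enumerate(row):
            if face_mask is not None and not face_mask[r][c]:
                continue
            eccentricity = math.hypot(r - gaze_row, c - gaze_col)
            total += strength * field_weight(eccentricity)
    return total
```

Calling the function once per frame and averaging the results across frames and individuals gives a per-group summary of how strongly a feature was represented near the center of gaze.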

Saccades to Visual Features

We next examined the number of saccades made to faces as a function of saccade amplitude in each group. For every saccade made to a face, saccade amplitude was binned by 0.25° intervals for each individual across sarcasm or lie trials before group averaging. To then examine which visual features (motion, contrast, luminance) drove saccades to faces in peripheral vision, we quantified the intensity of the visual feature within that face in the 133ms interval prior to the saccade. We then plotted the density of saccades as a function of both saccade amplitude and visual feature strength for each group, and then searched the group difference map for significant clusters of saccades. Saccade amplitudes ≥5° were defined as saccades to peripheral faces, as that amplitude approximates the boundary between foveal and peripheral processing (Nieuwenhuys, Voogd, & van Huijzen, 2008) (see Supplemental Materials for details). False-positive rates were determined by permutation testing, shuffling group labels, and repeating the analysis 10,000 times.
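The binning and label-shuffling logic can be sketched as follows. This is a simplified stand-in for the analysis described above: it tests a difference in group means for a single summary value per participant rather than clusters in a two-dimensional density map, and the function names, defaults, and fixed random seed are assumptions made for illustration.

```python
import random
from statistics import mean


def amplitude_bin(amplitude_deg, width=0.25):
    """Index of the 0.25-degree bin that a saccade amplitude falls into."""
    return int(amplitude_deg // width)


def is_peripheral(amplitude_deg, boundary=5.0):
    """Saccades of at least 5 degrees are treated as saccades to the periphery."""
    return amplitude_deg >= boundary


def permutation_p_value(group_a, group_b, n_permutations=10000, seed=0):
    """Two-sided permutation test on the difference in group means.

    Group labels are shuffled n_permutations times; the p-value is the
    share of shuffles whose absolute mean difference is at least as large
    as the observed one (with the usual +1 correction).
    """
    rng = random.Random(seed)
    observed = abs(mean(group_a) - mean(group_b))
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    extreme = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)
        diff = abs(mean(pooled[:n_a]) - mean(pooled[n_a:]))
        if diff >= observed:
            extreme += 1
    return (extreme + 1) / (n_permutations + 1)
```

Shuffling labels builds the null distribution from the data themselves, so no distributional assumption is needed for sparse saccade counts.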

Results

TASIT Performance

Demographically, SzP were matched to HC, with a small increase in mean age (40.6(11.0) versus 35.2(9.3), p=0.04) in SzP (Table 1). As predicted, SzP were highly impaired on comprehension of TASIT clips (group: F1,64=25.63, p<10⁻⁵, Cohen’s d=1.3), with a greater impairment in comprehension of sarcasm versus lies (group x sarcasm/lie: F1,64=9.43, p=0.003, Figure 1A).

Table 1:

Demographics

| Demographic | SzP | HC | Statistics |
|---|---|---|---|
| Age | 40.6(11.0) | 35.2(9.3) | t(61)=2.1, p=0.04 |
| Patient SES | 30.3(13.5) | 34.4(13.5) | t(56)=1.2, NS |
| Parent SES | 39.7(15.4) | 45.3(13.5) | t(59)=1.5, NS |
| Gender (F/total) | 10/39 | 11/27 | |
| Edinburgh Inventory | 17.3(3.9) | 17.5(3.4) | t(59)=0.2, NS |
| Vision (40in) (median) | 20/32 | 20/32 | Wilcoxon RS p=0.7 |
| Color Blindness (# subjects) | 0/39 | 0/27 | |
| PANSS Positive | 15.8(6.1) | | |
| PANSS Negative | 13.3(3.6) | | |
| PANSS Total | 56.5(14.5) | | |
| Antipsychotic Dose (CPZ Equivalents) | 481(671) | | |

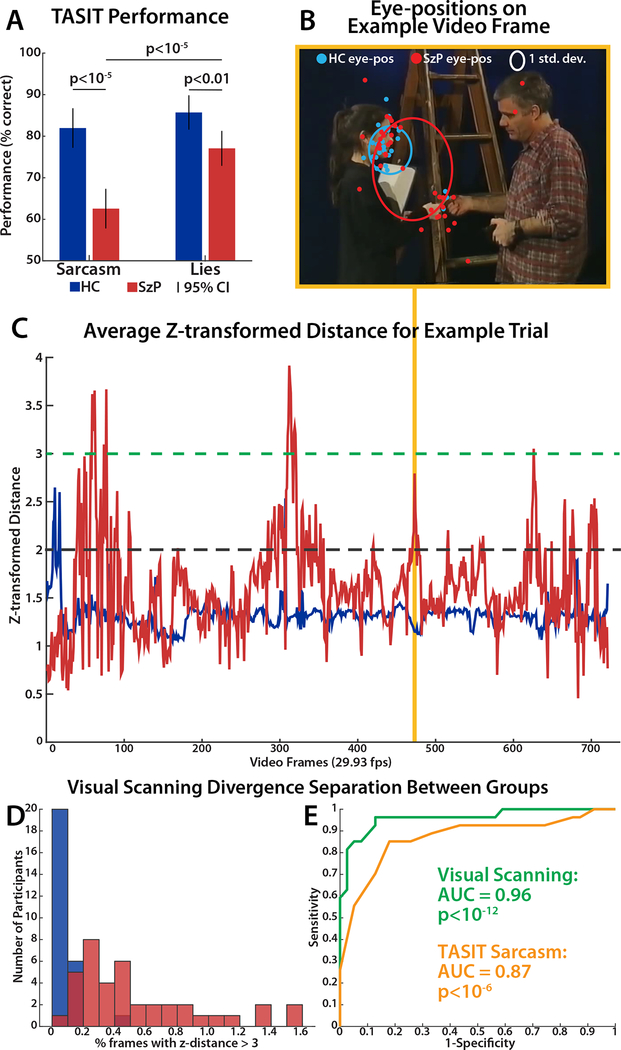

Figure 1:

TASIT and visual scanning performance. A) TASIT performance by group where 50% is chance. B) Example video frame of gaze positions of HC (blue) and SzP (red). HC eye positions in blue are tightly clustered versus the scatter of the red eye positions of the SzP, as demonstrated by the difference in the area of the elliptical boundary representing 1 standard deviation for each group. C) SzP and HC visual scanning divergence time-course. Divergence measured as average z-transformed distance. Dotted lines mark thresholds for various analyses: green for ROC analyses (Figure 1D & 1E) and black for visual feature analyses (Figure 3). D) Histogram showing distribution of participants in each group of % video frames with eye position z-transformed distance > 3. E) ROC curves for group segregation based on visual scanning (z-transformed distance > 3) and TASIT.

Eye position Variability and Visual Scanning Performance

Across trials, SzP as a group made more saccades than HC (1009.1(242.1) versus 970.8(233.2), t15=4.6, p=0.0003) with no difference between sarcasm and lie trials, resulting in a lower mean fixation duration of 536ms versus 560ms. SzP eye positions overall were also more variable across all TASIT video frames compared to HC: the average area of the ellipse representing the standard deviation of the x and y eye position across video frames was 32.5% larger in SzP versus HC (t880=5.0, p<10⁻⁶, ellipses in Figure 1B).

We further quantified visual scanning variability of each group as the percentage of participants that fell outside the HC 2 sd elliptical boundary on each video frame. SzP visual scanning variability was substantial, with an average of 18.7(10.7)% of eye positions more than 2 sd from the HC mean eye position, while the HC eye positions rarely deviated (6.1(2.3)%, consistent with a Normal distribution). Reversing the analysis (comparing all participants to the SzP mean eye position) resulted in 8.7(3.2)% of HC eye positions falling outside the SzP 2 sd elliptical boundary, compared to 6.2(5.2)% of SzP eye positions, further supporting increased SzP eye position variability. Moreover, the SzP visual scanning variability was not evenly distributed through all frames of the videos: there were 78 intervals across the 16 videos during which ≥50% of SzP were outside of the 2 sd ellipse.

SzP Visual Scanning Divergence

To quantify individual visual scanning divergence, we calculated the z-transformed distance of each individual’s eye position versus the mean HC position. Across video frames, the SzP mean z-transformed distance of 1.57(0.54) was significantly different from the HC mean z-transformed distance of 1.31(0.16), and did not differ for lie versus sarcasm trials (ANOVA, group: F2,64=5.8, p=0.02; lie/sarcasm: F2,64=0.05, p=0.83; group x lie/sarcasm: F2,64=0.2, p=0.7). Similar to the variability measure above, SzP were not divergent on every video frame. Rather, there were troughs where SzP and HC divergence was similar, and peaks where SzP were much more divergent than HC (Figure 1C). Applying a divergence threshold of a z-transformed distance of 2 (black dashed line Figure 1C), we found that SzP diverged on 11.8% of the total frames in 320 intervals.
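Identifying divergence intervals from a frame-by-frame divergence time course amounts to finding contiguous runs of frames above the threshold. A minimal sketch, with hypothetical function names and a toy time course rather than study data, is:

```python
def divergence_intervals(z_by_frame, threshold=2.0):
    """Return (start, end) frame indices (end exclusive) of runs above threshold."""
    intervals = []
    start = None
    for i, z in enumerate(z_by_frame):
        if z > threshold and start is None:
            start = i  # a new run begins on this frame
        elif z <= threshold and start is not None:
            intervals.append((start, i))  # the run ended on the previous frame
            start = None
    if start is not None:
        intervals.append((start, len(z_by_frame)))  # run extends to the last frame
    return intervals


def percent_divergent(z_by_frame, threshold=2.0):
    """Percentage of frames whose divergence exceeds the threshold."""
    return 100.0 * sum(z > threshold for z in z_by_frame) / len(z_by_frame)


# Toy example: group-mean z-transformed distance on six frames.
toy = [1.0, 2.5, 3.0, 1.0, 1.0, 2.1]
intervals = divergence_intervals(toy)
share = percent_divergent(toy)
```

Applied to the group-mean time course, the number of returned intervals and the percentage of frames correspond to the two summary figures reported above.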

We then used an ROC analysis to examine whether these SzP divergence peaks were driven by individual outliers or by a systematic deviation across the group. With a threshold of z-transformed distance > 2, SzP could be separated from HC with an ROC AUC=0.83. This AUC was greater than expected by chance (p=0.002) even given the biasing nature of the analysis, demonstrating that these peaks in SzP divergence were driven by a systematic deviation away from the HC visual scanning pattern during these intervals. Increasing the threshold to z-transformed distance > 3 (green dashed line Figure 1C) generates an ROC AUC=0.96 (p<0.0001), substantially better than TASIT sarcasm performance itself (AUC=0.87, p=0.05, Figure 1D and 1E). These results demonstrate the potential of visual scanning as a diagnostic biomarker: if an individual’s eye position is >3 z-transformed distance away from the HC mean for more than 0.55 seconds (0.2%) of the 276 seconds of TASIT sarcasm video clips, in our sample they are highly likely to have schizophrenia.
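The group-separation analysis can be illustrated with a rank-based AUC computed on each participant's fraction of frames above the divergence threshold. This sketch uses the standard equivalence between the ROC AUC and the probability that a randomly chosen patient scores higher than a randomly chosen control; it is not the study's implementation, and the function names are hypothetical.

```python
def fraction_above(z_by_frame, threshold=3.0):
    """Fraction of frames on which one participant exceeds the threshold."""
    return sum(z > threshold for z in z_by_frame) / len(z_by_frame)


def roc_auc(patient_scores, control_scores):
    """Area under the ROC curve for separating patients from controls.

    Computed as the probability that a random patient score exceeds a
    random control score, counting ties as one half.
    """
    wins = 0.0
    for p in patient_scores:
        for c in control_scores:
            if p > c:
                wins += 1.0
            elif p == c:
                wins += 0.5
    return wins / (len(patient_scores) * len(control_scores))
```

An AUC of 0.5 corresponds to chance-level separation and 1.0 to perfect separation of the two groups.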

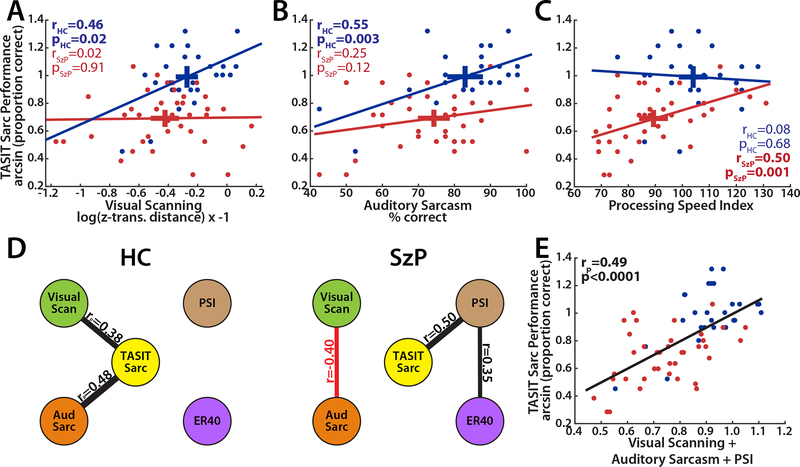

Visual Scanning Versus TASIT Performance

We next examined the relationship of visual scanning and TASIT performance (Figure 2). The slope of the relationship between scanning behavior and TASIT performance (arcsin transformed for Normal statistics) differed significantly between groups (group x z-distance: F2,64=4.6, p=0.04), with a significant relationship observed in HC (r=0.46, p=0.02) but not SzP (r=0.02, p=0.91), indicating that visual scanning performance was related to the comprehension of the TASIT sarcasm videos only in HC (Figure 2A). Visual scanning performance did not correlate with TASIT lie performance either within or between groups.

Figure 2:

A) Correlation of visual scanning with TASIT sarcasm performance. Crosses represent horizontal and vertical 95% confidence intervals. Visual scanning is measured as z-transformed distance, log transformed for Normal statistics, and inverted so that higher values represent better performance. B) Correlation of auditory sarcasm detection performance and TASIT sarcasm performance by group. C) Correlation of cognitive processing speed, as measured by the WAIS-IV Processing Speed Index (PSI), and TASIT sarcasm performance by group. D) Summary of correlations between TASIT sarcasm, visual scanning, auditory sarcasm, cognitive processing speed (PSI), and face-emotion recognition (measured by ER-40) in HC and SzP. E) Correlation of composite score representing the combination of visual scanning, auditory sarcasm, and processing speed index with TASIT sarcasm performance.

To understand the lack of correlation in SzP, we next explored the relationship of visual scanning and TASIT sarcasm performance relative to measures of other abilities critical for following these social situations: the detection of auditory sarcasm, the speed of cognitive processing (measured by PSI), and face-emotion recognition (measured by ER-40). SzP were significantly impaired in all of these measures (Table 2), each of which correlated significantly with TASIT sarcasm performance across groups (Table 3). For auditory sarcasm, the group x covariate interaction was not significant, but similar to visual scanning the correlation was significant in HC (r=0.55, p=0.003) but not SzP (r=−0.25, p=0.12) (Figure 2B). Cognitive processing speed did exhibit a significant group x covariate interaction but with the opposite pattern to visual scanning and auditory sarcasm, with a strong correlation in SzP (r=0.50, p=0.001) but not HC (r=0.08, p=0.68) (Figure 2C). Face-emotion recognition exhibited neither a group x covariate interaction nor a significant correlation within either group (HC: r=0.20, p=0.31; SzP: r=0.30, p=0.06).

Table 2:

SzP vs. HC performance

| Measure | t64 | p |
|---|---|---|
| Visual scanning | −2.3 | 0.027 |
| Auditory Sarcasm | −2.6 | 0.012 |
| General Cognition | −3.8 | 0.0004 |
| Face-emotion Recognition | −3.3 | 0.001 |

Table 3:

Predictors of TASIT Sarcasm performance

| Predictors of TASIT | Group | Covariate | Group x covariate |
|---|---|---|---|
| Visual Scanning | F2,64=32.3, p<10⁻⁶ | F2,64=1.7, p=0.2 | F2,64=4.6, p=0.036 |
| Auditory Sarcasm | F2,64=27.6, p<10⁻⁵ | F2,64=10.1, p=0.002 | F2,64=2.1, p=0.15 |
| General Cognition | F2,64=23.7, p<10⁻⁵ | F2,64=5.6, p=0.021 | F2,64=4.8, p=0.031 |
| Face-emotion Recognition | F2,64=24.0, p<10⁻⁵ | F2,64=4.6, p=0.036 | F2,64=0.1, p=0.8 |

We next examined which combinations of these measures independently predicted TASIT performance in each group and across groups. For HC, only visual scanning and auditory sarcasm remained significant in stepwise regression (visual scanning: rp=0.38, p=0.021; auditory sarcasm: rp=0.48, p=0.005) (Figure 2D). For SzP, only cognitive processing speed remained significant. Combining the three within-group significant predictors of TASIT sarcasm performance (visual scanning, auditory sarcasm, and cognitive processing speed) explained 42% of the variance in TASIT performance across both groups, or 24% of the variance in TASIT performance after accounting for group membership (composite score: F2,64=19.1, p=0.00005, group: F2,64=13.2, p=0.0006), with no difference in the relationship between groups (group x composite score: F2,64=0.9, p=0.34; Figure 2E). Exploration of the other relationships in SzP revealed a negative correlation between visual scanning and auditory sarcasm (r=−0.40, p=0.01) not present in HC (r=0.16, p=0.4), as well as a positive correlation of face-emotion recognition and cognitive processing speed in SzP (r=0.35, p=0.033) but not HC (r=0.06, p=0.8, Figure 2D).

TASIT sarcasm performance in SzP correlated with the MCCB composite score (r=0.40, p=0.014), though within MCCB only the Speed of Processing (SoP) domain score (r=0.45, p=0.006) was significant. TASIT sarcasm performance in SzP also correlated strongly with the Brief Assessment of Cognition in Schizophrenia Symbol Coding score (BACS-SC, r=0.43, p=0.007), but not category fluency (r=0.24, p=0.16). Antipsychotic medication dose did not correlate with TASIT performance, and controlling for age did not change the above results (details in Supplemental Materials).

Visual Features Missed by SzP

We next examined what visual features SzP may be missing compared to HC during the SzP peak divergence intervals (Figures 3A–C for analysis details). A strong group x interval effect (F2,64=40.2, p<10⁻⁷) demonstrated a 20% decrease in the amount of time SzP spent looking at faces during the SzP divergence intervals compared to HC (t64=4.1, p=0.0001, Figure 3D). However, for basic visual features (motion, contrast, and luminance), the SzP deficit was much weaker, with a somewhat larger effect for motion versus the other visual features. A group x interval x visual feature effect (F6,64=3.2, p=0.04) showed that SzP spent a little less time looking at motion (6.5%) during the SzP divergence intervals compared to contrast and luminance (4.1% and 4.5% respectively, Figure 3E and Supplemental Figure 1).

Figure 3:

Viewing of visual features during intervals when SzP eye positions are divergent. A) Modeling of where in the video frame each participant was looking by centering a visual field model on their eye position (rainbow halo). Green squares represent automated face detection boundaries. B) Example visual feature map for motion speed, normalized by maximum motion and averaged over previous 133ms interval. Each visual feature cell was approximately 1° x 1°, with the size rounded to represent each video frame as 25 × 21 cells. C) The motion map (3B) was filtered through the visual field model (3A), creating a map of which visual feature the participant was viewing on each frame, weighted by distance from the center of the visual field. Face-masked visual feature maps (3F) masked the non-normalized versions of these visual field feature maps with a face mask (square outlines). D) Faces viewed during SzP divergence versus non-divergence intervals (SzP mean z-transformed distance >2 or <2, respectively; black dotted line in Figure 1C). The “visual field overlap” measure represents the combination of the time spent viewing a visual feature and the proximity of that visual feature to the center of the visual field. The greater viewing of faces by HC versus SzP during the divergence intervals demonstrates that HC were often viewing faces during these intervals and SzP were not. E) Viewing of motion during SzP divergence and non-divergence intervals. F) Viewing of motion within faces during SzP divergence and non-divergence intervals. *p≤0.05, **p≤0.01, ***p≤0.001, ****p≤0.0001

We also did not find significant differences in the viewing of basic visual features within faces (Figure 3F and Supplemental Figure 1): there were no group x interval x visual feature effects (F6,64=2.3, p=0.11). In addition, the 18.6–21.0% decrease in the viewing of the three face-masked visual features more closely resembled the 20% decrease in the viewing of faces than the 4.1–6.5% decrease in viewing the three non-face-masked visual features discussed above, suggesting that the deficit was more specific to viewing faces than to any of the basic visual features.

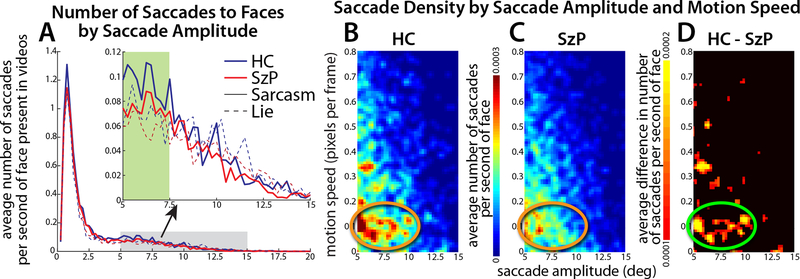

Visual Features Driving Divergent Visual Scanning Patterns

Finally, we examined what visual features may be driving the divergence in the visual scanning of the TASIT videos in the SzP. Both groups made more saccades in sarcasm trials versus lie trials (trial type: F2,64=28.2, p=10⁻⁶) with no significant difference between groups. Of all saccades to faces, 18.8% were made to faces in the periphery (>5°, or more than 5 boxes in Figure 3B). Of those saccades, HC consistently made more saccades to faces in the 5–7.5° range than SzP (permutation testing: 10/10 bins, p=0.037, green zone in Figure 4A).

Figure 4:

Saccades to faces in peripheral vision. A) Average number of saccades to faces by saccade amplitude for sarcasm and lie trials. Amplitude binned by 0.25°. Gray shaded area shows peripheral field counts highlighted in inset. Green shaded area in inset highlights the saccade amplitudes over which the number of HC saccades was significantly greater than SzP. B-C) 2D histogram showing density of saccades to the periphery (gray zone in 4A) for each group as a function of saccade amplitude and motion speed. Saccade amplitude was binned by 0.25°, and motion speed was binned by 0.0075 (1/80th of the 99.9th percentile of the motion distribution). Orange circles highlight the cluster of missing peripheral saccades in SzP versus HC. D) Group difference map. Green circle highlights the cluster of missing peripheral saccades in SzP versus HC.

We then quantified the relationship between the amplitude of the saccade to faces and the visual features present in the faces before the saccade. The number (density) of saccades to faces in the peripheral visual field made by each group was stratified by the strength of the low-level visual features in those faces (Figures 4B–D and Supplemental Figure 2). For motion, the saccade density plots showed a high-density cluster of saccades in HC to slow motion speed starting in the 5–7.5° saccade amplitude range and extending to 10° (orange circle in Figure 4B). This range of speeds (<0.4 pixels/frame) matched that of facial expressions (Sowden et al., 2019). There was no similar cluster in SzP (orange circle in Figure 4C), leading to a significant group difference (green circle in Figure 4D, p=0.008, permutation testing). No clusters survived significance in the saccade density plots for other visual features or for saccade density plots to non-face locations, indicating that the greater number of saccades to peripheral faces in HC was driven specifically by face motion as opposed to other types of motion (Supplemental Figure 3).

Discussion

To our knowledge, this is the first study to evaluate the role of visual scanning in the comprehension of dynamic naturalistic social scenes in SzP. There were five main findings. First, as expected, SzP were more impaired in the comprehension of the TASIT sarcasm versus lie videos. Second, SzP visual scanning patterns often diverged from those of HC. Third, visual scanning integrity and auditory sarcasm detection predicted TASIT sarcasm performance in HC, whereas only cognitive processing speed predicted TASIT performance in SzP. Fourth, SzP often missed viewing the faces that drew the gaze of HC. Finally, SzP oriented less often to moving facial expressions in the periphery than HC. Overall, these findings suggest that SzP are unable to rely on detection of moving facial expressions in the periphery to efficiently guide visual scanning of complex dynamic social scenes as HC do, and instead use alternate strategies that depend on cognitive abilities to overcome, at least partially, their social cognition deficits.

TASIT broadly tests the ability to use auditory and visual social cues to make inferences about the actors’ mental states, and correlates with overall social functioning (Pinkham et al., 2015). Despite the fact that detection of both lies and sarcasm requires inference of the speaker’s internal mental state, different types of information are used to make the inference in the two situations. For both lies and sarcasm, the viewer must understand that the communicated information is counterfactual to reality. However, in the case of lies (as portrayed in the TASIT), the information is communicated by comparing the information content of what the main actor is saying at different points in the video.

By contrast, in the case of sarcasm, the information is also communicated through modulation of tone of voice (attitudinal prosody; Leitman et al., 2006; 2010) and facial expressions. The TASIT was normed to be equally sensitive to impairments in sarcasm and lie detection in traumatic brain injury (McDonald et al., 2006). The differential deficit in sarcasm versus lie detection therefore suggests differential impairment in the ability to utilize sensory information and to orient to critical features of the environment. The specific deficit in the sarcasm versus lie trials also indicates that performance deficits were not caused solely by reduced vigilance due to medications or other reasons, and that SzP were generally able to both follow the dialogue and actions on screen and understand the questions.

The systematic divergence of SzP and HC visual scanning patterns suggests that SzP miss seeing certain visual features that HC spontaneously decide are important, namely faces. The naturalistic stimuli allowed us to explain why SzP may not have been looking at those faces. The failure to process motion (and specifically biological motion) in central vision is not a new finding (Butler et al., 2005; Chen, Levy, Sheremata, & Holzman, 2004; Martinez et al., 2018; Okruszek & Pilecka, 2017). However, our findings suggest that motion processing deficits in SzP may have the largest impact in peripheral vision. These peripheral deficits also appear to be independent of previously detailed deficits in face-emotion recognition, which are usually measured in central vision (Arnold et al., 2016; Johnston et al., 2010; Kohler et al., 2008). Our findings suggest that the deficits in motion processing in peripheral vision reduce the likelihood that faces are ever brought into central vision for further inspection, and that when they are, the previously detailed face-emotion recognition deficits may additionally impair understanding of the social scene.

The correlation of visual scanning and TASIT sarcasm performance in HC but not SzP suggests that most SzP are unable to rely on stimulus-driven visual scanning to locate these faces. Those who are able to mimic the HC visual scanning pattern face another problem: they are unable to detect auditory expressions of sarcasm, a negative correlation that suggests that SzP can have intact visual or auditory processing but not both. Instead, the better-performing SzP may be using an alternative strategy, as evidenced by the relationship of TASIT performance with cognitive processing speed in SzP, the correlation between face-emotion recognition and cognitive processing speed, and the specificity of TASIT sarcasm performance correlating with BACS-SC (which requires multiple fast saccades between pre-specified locations) and not category fluency (which does not require eye movements). Rather than the “bottom-up” stimulus-driven strategy employed by the HC, these SzP may be using a “top-down” strategy of targeting saccades to the known locations of the faces and then making fast decisions about the expressions in those faces. This alternative strategy may reflect either compensation or, conversely, a second deficit in the worst-performing SzP.

While this study provides a framework for how sensory processing deficits can impact social cognition and ultimately social functioning, there remain many gaps to fill. The first is to understand what factors account for the ~50% of unexplained variance in TASIT performance between groups: SzP may also have additional impairments in making inferences about mental states that are independent of the sensory deficits and cognitive deficits measured by the PSI (M. F. Green & Horan, 2010; Pinkham et al., 2015; Savla, Vella, Armstrong, Penn, & Twamley, 2013).

Another potential source of intergroup variance is the impact of the chronicity of the disease. While medications do not appear to affect social functioning (Velthorst et al., 2017), many years of limited experience with social interactions may have exaggerated intergroup differences through decreased or altered use of the underlying brain circuits. Another gap is understanding what SzP are looking at instead of faces. This will require increased use of automated visual feature detection algorithms to further classify what objects and visual features are on screen at any given time. Another gap is in understanding how these findings, especially the biomarker-like separation of SzP and HC in Figures 1D–E, generalize and replicate, not only in a larger cohort but also with other naturalistic or real-world stimuli. In particular, longer naturalistic stimuli are needed: the short videos of the TASIT prevented us from assessing the number of saccades made to peripheral stimuli in individuals and directly measuring their impact on social cognition. Lastly, these findings need to be related to measures of brain pathology in SzP, including recent models of how excitatory-inhibitory circuit disturbances in SzP may underlie visual processing and cognitive operations (Anderson et al., 2016; Murray et al., 2014) and how brain areas involved in social cognition (such as those in the temporoparietal junction/posterior superior temporal sulcus, or TPJ-pSTS) may underlie visual scanning behavior (Corbetta et al., 2008; M. F. Green et al., 2015; Patel et al., 2019).

Although this study focused on SzP, similar approaches could also be applied to other groups with known social cognitive and functioning deficits (e.g., autism spectrum disorder, ASD) (Morrison et al., 2019; Veddum, Pedersen, Landert, & Bliksted, 2019). The use of naturalistic stimuli paired with the analytical techniques described here will increasingly serve as a bridge between the basic neuroscience literature and clinical studies of patient populations by providing smoothly varying behavioral measures that can be used as regressors to search for neural correlates (Jacoby, Bruneau, Koster-Hale, & Saxe, 2016; Russ & Leopold, 2015). These methods take advantage of not only increased computational power and the associated advances in computer vision, but also the vast literature in visual neuroscience collected over the past four decades. Moreover, the simplicity of administering tests based on naturalistic stimuli promises to produce low-burden and easily deployed clinical assessment tools that directly link the symptoms each individual is experiencing with underlying cognitive and neural deficits, leading the way to individualized treatment regimens aimed at improving social functioning.

Supplementary Material

Acknowledgements:

We wish to thank Rachel Marsh, Guillermo Horga, and Chad Sylvester for their comments on this manuscript.

Funding: We wish to thank the funding agencies who supported this work: NIMH (GHP: K23MH108711 and T32MH018870; DCJ: R01MH049334; DAL and RAB: Intramural Research Program ZIA MH002898), Brain & Behavior Research Foundation (GHP), American Psychiatric Foundation (GHP), Sidney R. Baer Foundation (GHP), Leon Levy Foundation (GHP), and the Herb and Isabel Stusser Foundation (DCJ).

Footnotes

Conflict of Interest: GHP receives income and equity from Pfizer, Inc through family; DCJ has equity interest in Glytech, AASI, and NeuroRx. He serves on the board of Promentis. He holds intellectual property rights for use of NMDAR in treatment of schizophrenia, NMDAR antagonists in treatment of depression and PTSD, fMRI-based prediction of ECT response, and EEG-based diagnosis of neuropsychiatric disorders. Within the past 2 years he has received consulting payments/honoraria from Takeda, Pfizer, FORUM, Glytech, Autifony, and Lundbeck. SCA, ECJ, DRB, HD, NS, CCK, JPSP, LPB, JG, AM, RAB, KNO, and DAL reported no biomedical financial interests or potential conflicts of interest.

References

- Anderson EJ, Tibber MS, Schwarzkopf DS, Shergill SS, Fernandez-Egea E, Rees G, & Dakin SC (2016). Visual population receptive fields in people with schizophrenia have reduced inhibitory surrounds. The Journal of Neuroscience : the Official Journal of the Society for Neuroscience, 3620–15. 10.1523/JNEUROSCI.3620-15.2016

- Arnold AEGF, Iaria G, & Goghari VM (2016). Efficacy of identifying neural components in the face and emotion processing system in schizophrenia using a dynamic functional localizer. Psychiatry Research: Neuroimaging, 248, 55–63. 10.1016/j.pscychresns.2016.01.007

- Bulzacka E, Meyers JE, Boyer L, Le Gloahec T, Fond G, Szöke A, et al. (2016). WAIS-IV Seven-Subtest Short Form: Validity and Clinical Use in Schizophrenia. Archives of Clinical Neuropsychology : the Official Journal of the National Academy of Neuropsychologists. 10.1093/arclin/acw063

- Butler PD, Abeles IY, Weiskopf NG, Tambini A, Jalbrzikowski M, Legatt ME, et al. (2009). Sensory contributions to impaired emotion processing in schizophrenia. Schizophrenia Bulletin, 35(6), 1095–1107. 10.1093/schbul/sbp109

- Butler PD, Zemon V, Schechter I, Saperstein AM, Hoptman MJ, Lim KO, et al. (2005). Early-stage visual processing and cortical amplification deficits in schizophrenia. Archives of General Psychiatry, 62(5), 495–504. 10.1001/archpsyc.62.5.495

- Calderone DJ, Martinez A, Zemon V, Hoptman MJ, Hu G, Watkins JE, et al. (2013). Comparison of psychophysical, electrophysiological, and fMRI assessment of visual contrast responses in patients with schizophrenia. NeuroImage, 67, 153–162. 10.1016/j.neuroimage.2012.11.019

- Chen Y, Levy DL, Sheremata S, & Holzman PS (2004). Compromised late-stage motion processing in schizophrenia. Biological Psychiatry, 55(8), 834–841. 10.1016/j.biopsych.2003.12.024

- Chen Y, Palafox GP, Nakayama K, Levy DL, Matthysse S, & Holzman PS (1999). Motion perception in schizophrenia. Archives of General Psychiatry, 56(2), 149–154.

- Corbetta M, Patel GH, & Shulman GL (2008). The reorienting system of the human brain: from environment to theory of mind. Neuron, 58(3), 306–324. 10.1016/j.neuron.2008.04.017

- Dias EC, Van Voorhis AC, Braga F, Todd J, Lopez-Calderon J, Martinez A, & Javitt DC (2020). Impaired Fixation-Related Theta Modulation Predicts Reduced Visual Span and Guided Search Deficits in Schizophrenia. Cerebral Cortex (New York, N.Y. : 1991), 30(5), 2823–2833. 10.1093/cercor/bhz277

- Green MF, & Horan WP (2010). Social Cognition in Schizophrenia. Current Directions in Psychological Science, 19(4), 243–248. 10.1177/0963721410377600

- Green MF, Horan WP, & Lee J (2015). Social cognition in schizophrenia. Nature Reviews Neuroscience, 16(10), 620–631. 10.1038/nrn4005

- Green MF, Horan WP, & Lee J (2019). Nonsocial and social cognition in schizophrenia: current evidence and future directions. World Psychiatry, 18(2), 146–161. 10.1002/wps.20624

- Heimberg C, Gur RE, Erwin RJ, Shtasel DL, & Gur RC (1992). Facial emotion discrimination: III. Behavioral findings in schizophrenia. Psychiatry Research, 42(3), 253–265.

- Holdnack JA, Prifitera A, Weiss LG, & Saklofske DH (2015). Chapter 12. WISC-V and the Personalized Assessment Approach. WISC-V Assessment and Interpretation (pp. 373–414). Elsevier Inc. 10.1016/B978-0-12-404697-9.00012-1

- Jacoby N, Bruneau E, Koster-Hale J, & Saxe R (2016). Localizing Pain Matrix and Theory of Mind networks with both verbal and non-verbal stimuli. NeuroImage, 126(C), 39–48. 10.1016/j.neuroimage.2015.11.025

- Javitt DC (2009). When doors of perception close: bottom-up models of disrupted cognition in schizophrenia. Annual Review of Clinical Psychology, 5, 249–275. 10.1146/annurev.clinpsy.032408.153502

- Johnston PJ, Enticott PG, Mayes AK, Hoy KE, Herring SE, & Fitzgerald PB (2010). Symptom correlates of static and dynamic facial affect processing in schizophrenia: evidence of a double dissociation? Schizophrenia Bulletin, 36(4), 680–687. 10.1093/schbul/sbn136

- Kohler CG, Martin EA, Milonova M, Wang P, Verma R, Brensinger CM, et al. (2008). Dynamic evoked facial expressions of emotions in schizophrenia. Schizophrenia Research, 105(1–3), 30–39. 10.1016/j.schres.2008.05.030

- Kusunoki M, Gottlieb JP, & Goldberg ME (2000). The lateral intraparietal area as a salience map: the representation of abrupt onset, stimulus motion, and task relevance. Vision Research, 40(10–12), 1459–1468. 10.1016/S0042-6989(99)00212-6

- Leitman DI, Wolf DH, Ragland JD, Laukka P, Loughead J, Valdez JN, et al. (2010). “It’s Not What You Say, But How You Say it”: A Reciprocal Temporo-frontal Network for Affective Prosody. Frontiers in Human Neuroscience, 4, 19. 10.3389/fnhum.2010.00019

- Leitman DI, Ziwich R, Pasternak R, & Javitt DC (2006). Theory of Mind (ToM) and counterfactuality deficits in schizophrenia: misperception or misinterpretation? Psychological Medicine, 36(8), 1075–1083. 10.1017/S0033291706007653

- Marsman J-BC, Cornelissen FW, Dorr M, Vig E, Barth E, & Renken RJ (2016). A novel measure to determine viewing priority and its neural correlates in the human brain. Journal of Vision, 16(6), 3–3. 10.1167/16.6.3

- Martinez A, Gaspar PA, Hillyard SA, Andersen SK, Lopez-Calderon J, Corcoran CM, & Javitt DC (2018). Impaired Motion Processing in Schizophrenia and the Attenuated Psychosis Syndrome: Etiological and Clinical Implications. American Journal of Psychiatry, appiajp201818010072. 10.1176/appi.ajp.2018.18010072

- McDonald S, Bornhofen C, Shum D, Long E, Saunders C, & Neulinger K (2006). Reliability and validity of The Awareness of Social Inference Test (TASIT): a clinical test of social perception. Disability and Rehabilitation, 28(24), 1529–1542. 10.1080/09638280600646185

- McDonald S, Flanagan S, Rollins J, & Kinch J (2003). TASIT: A new clinical tool for assessing social perception after traumatic brain injury. The Journal of Head Trauma Rehabilitation, 18(3), 219–238.

- Morrison KE, Pinkham AE, Kelsven S, Ludwig K, Penn DL, & Sasson NJ (2019). Psychometric Evaluation of Social Cognitive Measures for Adults with Autism. Autism Research : Official Journal of the International Society for Autism Research, 12(5), 766–778. 10.1002/aur.2084

- Murray JD, Anticevic A, Gancsos M, Ichinose M, Corlett PR, Krystal JH, & Wang X-J (2014). Linking microcircuit dysfunction to cognitive impairment: effects of disinhibition associated with schizophrenia in a cortical working memory model. Cerebral Cortex (New York, N.Y. : 1991), 24(4), 859–872. 10.1093/cercor/bhs370

- Nieuwenhuys R, Voogd J, & van Huijzen C (2008). The Human Central Nervous System (4 ed.). New York: Springer-Verlag.

- Nuechterlein KH, Green MF, Kern RS, Baade LE, Barch DM, Cohen JD, et al. (2008). The MATRICS Consensus Cognitive Battery, part 1: test selection, reliability, and validity. The American Journal of Psychiatry, 165(2), 203–213. 10.1176/appi.ajp.2007.07010042

- Okruszek Ł, & Pilecka I (2017). Biological motion processing in schizophrenia - Systematic review and meta-analysis. Schizophrenia Research, 1–8. 10.1016/j.schres.2017.03.013

- Orbelo DM, Grim MA, Talbott RE, & Ross ED (2005). Impaired comprehension of affective prosody in elderly subjects is not predicted by age-related hearing loss or age-related cognitive decline. Journal of Geriatric Psychiatry and Neurology, 18(1), 25–32. 10.1177/0891988704272214

- Patel GH, Sestieri C, & Corbetta M (2019). The evolution of the temporoparietal junction and posterior superior temporal sulcus. Cortex; a Journal Devoted to the Study of the Nervous System and Behavior, 118, 38–50. 10.1016/j.cortex.2019.01.026

- Pinkham AE, Penn DL, Green MF, & Harvey PD (2015). Social Cognition Psychometric Evaluation: Results of the Initial Psychometric Study. Schizophrenia Bulletin. 10.1093/schbul/sbv056

- Russ BE, & Leopold DA (2015). Functional MRI mapping of dynamic visual features during natural viewing in the macaque. NeuroImage, 109, 84–94. 10.1016/j.neuroimage.2015.01.012

- Savla GN, Vella L, Armstrong CC, Penn DL, & Twamley EW (2013). Deficits in domains of social cognition in schizophrenia: a meta-analysis of the empirical evidence. Schizophrenia Bulletin, 39(5), 979–992. 10.1093/schbul/sbs080

- Sereno MI, Dale AM, Reppas JB, Kwong KK, Belliveau JW, Brady TJ, et al. (1995). Borders of multiple visual areas in humans revealed by functional magnetic resonance imaging. Science (New York, NY), 268(5212), 889–893.

- Shepherd SV, Steckenfinger SA, Hasson U, & Ghazanfar AA (2010). Human-monkey gaze correlations reveal convergent and divergent patterns of movie viewing. Current Biology : CB, 20(7), 649–656. 10.1016/j.cub.2010.02.032

- Sowden S, Schuster B, & Cook J (2019). The role of movement kinematics in facial emotion expression. Presented at the th International Conference on Educational Neuroscience. 10.3389/conf.fnhum.2019.229.00018/event_abstract

- Sparks A, McDonald S, Lino B, O’Donnell M, & Green MJ (2010). Social cognition, empathy and functional outcome in schizophrenia. Schizophrenia Research, 122(1–3), 172–178. 10.1016/j.schres.2010.06.011

- Taylor SF, Kang J, Brege IS, Tso IF, Hosanagar A, & Johnson TD (2012). Meta-analysis of functional neuroimaging studies of emotion perception and experience in schizophrenia. Biological Psychiatry, 71(2), 136–145. 10.1016/j.biopsych.2011.09.007

- Veddum L, Pedersen HL, Landert A-SL, & Bliksted V (2019). Do patients with high-functioning autism have similar social cognitive deficits as patients with a chronic cause of schizophrenia? Nordic Journal of Psychiatry, 73(1), 44–50. 10.1080/08039488.2018.1554697

- Velthorst E, Fett A-KJ, Reichenberg A, Perlman G, van Os J, Bromet EJ, & Kotov R (2017). The 20-Year Longitudinal Trajectories of Social Functioning in Individuals With Psychotic Disorders. American Journal of Psychiatry, 174(11), 1075–1085. 10.1176/appi.ajp.2016.15111419

- Wechsler D (1997). WAIS-III Wechsler Adult Intelligence Scale.

- White BJ, Berg DJ, Kan JY, Marino RA, Itti L, & Munoz DP (2017). Superior colliculus neurons encode a visual saliency map during free viewing of natural dynamic video. Nature Communications, 8(1), 14263. 10.1038/ncomms14263

- Wilming N, Kietzmann TC, Jutras M, Xue C, Treue S, Buffalo EA, & König P (2017). Differential Contribution of Low- and High-level Image Content to Eye Movements in Monkeys and Humans. Cerebral Cortex. 10.1093/cercor/bhw399

- Zaki J, & Ochsner KN (2009). The need for a cognitive neuroscience of naturalistic social cognition. Annals of the New York Academy of Sciences, 1167, 16–30. 10.1111/j.1749-6632.2009.04601.x

- Zhu X, & Ramanan D (2012). Face detection, pose estimation, and landmark localization in the wild. CVPR.
