Abstract

This work proposes a deep learning model for skin cancer detection from skin lesion images. In this analytic study, 3400 images from the HAM10000 dermoscopy image database were employed, including melanoma and non-melanoma lesions. The images comprised 860 melanoma, 327 actinic keratoses and intraepithelial carcinoma (AKIEC), 513 basal cell carcinoma (BCC), 795 melanocytic nevi, 790 benign keratosis, and 115 dermatofibroma cases. A deep convolutional neural network was developed to classify the images into benign and malignant classes. Transfer learning was used, with AlexNet as the pre-trained model. The proposed model takes the raw image as input and automatically learns useful features from the image for classification. It therefore eliminates the complex procedures of lesion segmentation and feature extraction. The proposed model achieved an area under the receiver operating characteristic (ROC) curve of 0.91. Using a confidence score threshold of 0.5, a classification accuracy of 84%, a sensitivity of 81%, and a specificity of 88% were obtained. The user can change the confidence threshold to adjust sensitivity and specificity if desired. The results indicate the high potential of deep learning for the detection of skin cancer, including melanoma and non-melanoma malignancies. The proposed approach can be deployed to assist dermatologists in skin cancer detection. Moreover, it can be applied in smartphones for self-diagnosis of malignant skin lesions. Hence, it may expedite cancer detection, which is critical for effective treatment.

Keywords: Skin Cancer, Deep Learning, Melanoma, Transfer Learning, Dermoscopy

Introduction

Skin cancer is one of the most common cancers worldwide. There are several types of skin cancer. Basal cell carcinoma (BCC) and squamous cell carcinoma (SCC) are by far the most prevalent forms of skin cancer [ 1 ]. BCC and SCC start in the epidermis, i.e. the outer layer of the skin, and since they are typically caused by sun exposure, they often develop in sun-exposed areas such as the face, ears, neck, head, arms, and hands. BCC rarely spreads to other parts of the body, but SCC may spread to nearby organs or lymph nodes. Since BCC and SCC originate in keratinocytes (the most common type of skin cells), they are sometimes called keratinocyte cancers [ 1 ]. Actinic keratoses (solar keratoses) and intraepithelial carcinoma (Bowen’s disease), which are referred to in this paper as AKIEC, are common non-invasive lesions that are precursors of SCC. If untreated, they may progress to invasive SCC [ 2 ].

Melanoma is another form of skin cancer that develops in melanocytes [ 1 ]. Melanocytes are skin cells in the epidermis that produce melanin, a dark pigment responsible for skin color. Melanin also acts as a natural sunscreen to protect deeper layers of the skin from sun damage. In the US, melanoma accounts for only 5% of skin cancers but is responsible for 75% of skin cancer deaths [ 3 ]. Due to the high mortality rate of melanoma, skin cancer is sometimes categorized as melanoma and non-melanoma.

It is difficult for a dermatologist to detect skin cancer from a dermoscopy image of a skin lesion [ 3 ]. In some cases, biopsy and pathology examination are needed to diagnose cancer. Previous studies have developed computer-based systems for skin cancer recognition from skin lesion images. Prior to 2016, these models [ 4 - 6 ] were based on conventional machine learning techniques, which required segmentation of the lesion from the surrounding skin in the image, followed by the extraction of useful features from the lesion area. These features describe characteristics such as the shape, texture, and color of the lesion. Finally, the features are fed into a classifier to detect cancer. This method, however, is cumbersome, because it is difficult to define and extract features that are useful for identifying cancer.

With recent advances in software and hardware technologies, deep learning has emerged as a powerful tool for feature learning. Feature engineering, i.e. the process of defining and extracting features by a human expert, is a cumbersome and time-consuming task. Deep learning eliminates the need for feature engineering because it can automatically learn and extract useful features from the raw data. Deep learning has revolutionized many fields, especially computer vision. In biomedical engineering, deep learning has demonstrated great success in recent work [ 7 - 9 ]. Since 2016, several studies have deployed deep learning for skin cancer detection. In 2017, researchers from Stanford University conducted a study [ 3 ] with a deep learning convolutional neural network (CNN) model trained with 125000 clinical images of skin lesions. This model was then validated with new skin lesion images and achieved performance on par with skilled dermatologists in identifying cancer. This work demonstrates the efficacy of deep learning in skin cancer recognition. However, the database of this study is not publicly available, which prevents other researchers from developing models that improve on it.

In 2018, Tschandl et al. published HAM10000 [ 2 ], a public dataset of 10000 dermoscopy images obtained from Austrian and Australian patients. Around 8000 of the images in this dataset are benign lesions, and the rest are malignant lesions. The ground truth for this dataset was confirmed by either pathology, expert consensus, or confocal microscopy. In the present study, a deep learning model is proposed to identify the malignancy of skin lesion images from the HAM10000 dataset.

Technical Presentation

Data Description

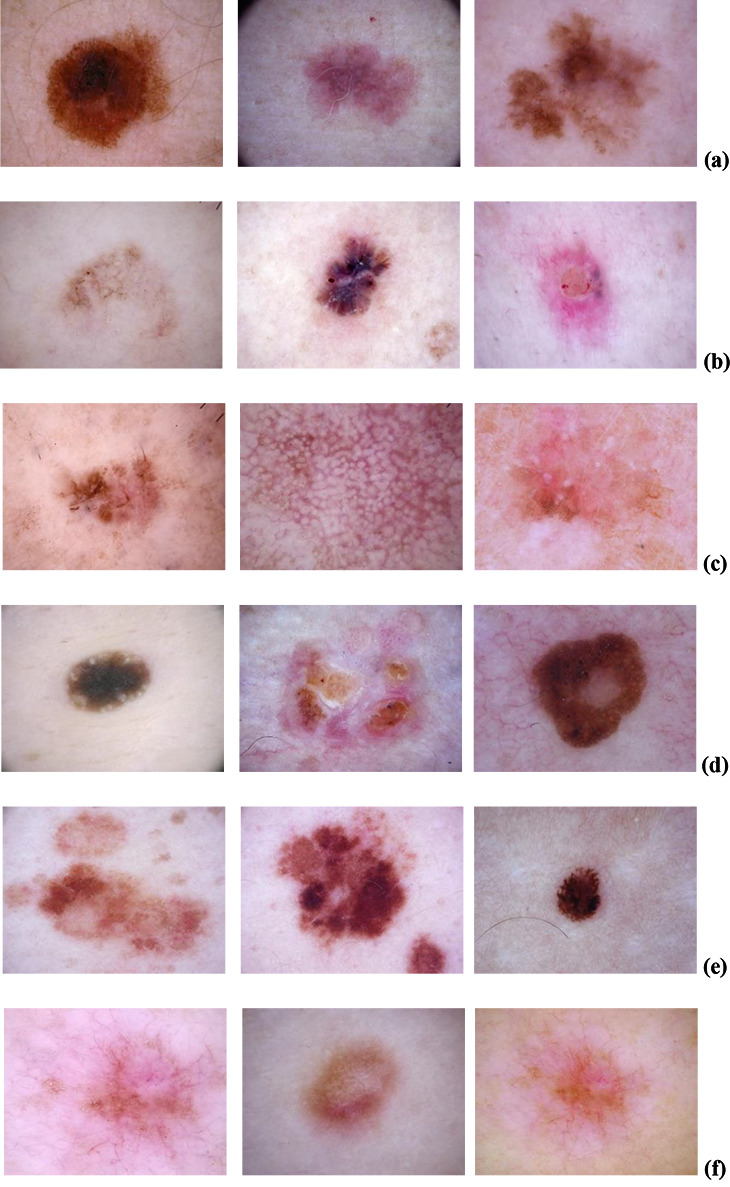

From the HAM10000 dataset, 3400 images (1700 benign and 1700 malignant) were employed in this analytic study. The benign images included 115 cases of dermatofibroma, 795 cases of melanocytic nevi, and 790 cases of benign keratosis (BK), and the malignant images included 513 cases of BCC, 327 cases of AKIEC, and 860 cases of melanoma. Figure 1 shows three representative images for each type of lesion. For each lesion type, 90% of cases were selected randomly as the training set, and the remaining images were designated as the test set. Thus, the training set size was 3060 (1530 benign and 1530 malignant), and the test set size was 340 (170 benign and 170 malignant).
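The per-type random split described above can be sketched as follows. This is a minimal illustration in Python, not the authors' code (the study used Matlab). The lesion counts come from the text; the function name `stratified_split`, the fixed seed, and the rule of rounding each per-type training count down are assumptions, since the text does not say how fractional counts were handled. With rounding down, the sketch yields 3058 training and 342 test images, close to but not identical to the reported 3060 and 340.

```python
import random

# Lesion counts per type, as reported in the text.
COUNTS = {
    "dermatofibroma": 115,
    "melanocytic_nevi": 795,
    "benign_keratosis": 790,
    "bcc": 513,
    "akiec": 327,
    "melanoma": 860,
}
MALIGNANT = {"bcc", "akiec", "melanoma"}


def stratified_split(counts, train_percent=90, seed=0):
    """Split (lesion_type, index) pairs into train and test lists, per type.

    Each pair stands in for one image file of that lesion type.
    """
    rng = random.Random(seed)  # fixed seed is an assumption, for repeatability
    train, test = [], []
    for lesion_type, n in counts.items():
        items = [(lesion_type, i) for i in range(n)]
        rng.shuffle(items)
        n_train = n * train_percent // 100  # rounds down (assumed rule)
        train.extend(items[:n_train])
        test.extend(items[n_train:])
    return train, test


train_set, test_set = stratified_split(COUNTS)
n_train_malignant = sum(1 for t, _ in train_set if t in MALIGNANT)
print(len(train_set), len(test_set), n_train_malignant)  # 3058 342 1529
```

Splitting within each lesion type, rather than over the pooled 3400 images, keeps the class proportions of the test set the same as those of the training set.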

Figure 1.

Three representative images are shown for (a) melanoma, (b) basal cell carcinoma (BCC), (c) actinic keratoses and intraepithelial carcinoma (AKIEC), (d) benign keratosis (BK), (e) melanocytic nevi, and (f) dermatofibroma.

Deep learning

To classify skin lesions into benign and malignant categories, a deep learning CNN model is proposed with AlexNet [ 10 ] as a pre-trained model. Transfer learning was used because AlexNet has been trained for object recognition, which is closely related to skin lesion classification. The last three layers of AlexNet, i.e. the last fully connected layer, the softmax layer, and the classification layer, were replaced with corresponding layers suitable for binary classification. All images were first resized to 227×227 to match the input size of AlexNet. The model was trained on the training set using stochastic gradient descent with momentum (SGDM), with an initial learning rate of 0.0001, a mini-batch size of 30, and 40 epochs. These parameters were determined empirically. The training was conducted with MATLAB R2018a on a computer with an Nvidia GTX 1050 GPU. Once the model was trained, it was evaluated on the test set, and the performance was measured using classification accuracy, the area under the ROC curve (AUC), sensitivity, and specificity.
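The training schedule implied by these settings, and the form of an SGDM update, can be illustrated with a short Python sketch. The training set size, mini-batch size, epoch count, and learning rate are taken from the text. The momentum value is not reported, so 0.9 is used here purely as an assumption, and `sgdm_step` is a hypothetical helper operating on plain lists rather than the routine used in the study.

```python
import math

# Values reported in the text.
TRAIN_SIZE = 3060
MINI_BATCH_SIZE = 30
EPOCHS = 40
LEARNING_RATE = 1e-4
# Assumption: the momentum coefficient is not reported; 0.9 is a common choice.
MOMENTUM = 0.9

# Number of mini-batch updates per pass over the data, and in total.
iterations_per_epoch = math.ceil(TRAIN_SIZE / MINI_BATCH_SIZE)
total_iterations = iterations_per_epoch * EPOCHS


def sgdm_step(weights, velocity, grads, lr=LEARNING_RATE, momentum=MOMENTUM):
    """Apply one SGD-with-momentum update to lists of floats.

    velocity accumulates a decaying sum of past gradient steps;
    the weights then move by the updated velocity.
    """
    new_velocity = [momentum * v - lr * g for v, g in zip(velocity, grads)]
    new_weights = [w + v for w, v in zip(weights, new_velocity)]
    return new_weights, new_velocity


print(iterations_per_epoch, total_iterations)  # 102 4080
```

Under these settings the network sees 102 mini-batches per epoch and 4080 weight updates over the full run.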

Results

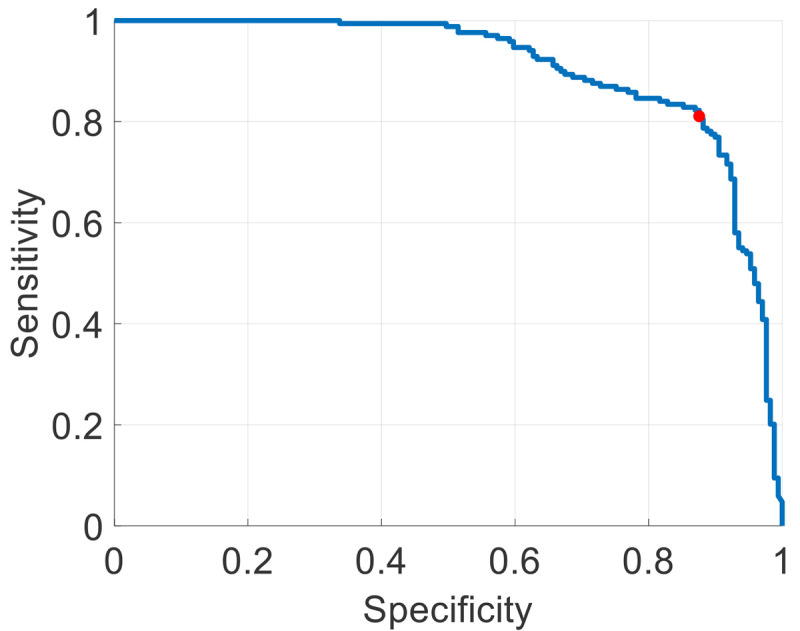

The ROC curve of the proposed CNN model is plotted in Figure 2, which shows the trade-off between sensitivity and specificity. The model achieved an AUC of 0.91. A threshold of 0.5 was used for the model confidence score, which is shown by the red point on the ROC curve in Figure 2. With this threshold, a classification accuracy of 84%, a sensitivity of 81%, and a specificity of 88% were achieved. The model training time was roughly 16 minutes.
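For reference, the three threshold-dependent metrics follow directly from the confusion matrix counts. The Python sketch below uses hypothetical counts for a test set of 170 malignant and 170 benign images; they were chosen only because they round to the reported 81%, 88%, and 84%, and they are not the confusion matrix of the study, which the text does not report.

```python
def binary_metrics(tp, fn, tn, fp):
    """Return (sensitivity, specificity, accuracy) from confusion counts.

    tp/fn count malignant images classified correctly/incorrectly;
    tn/fp count benign images classified correctly/incorrectly.
    """
    sensitivity = tp / (tp + fn)
    specificity = tn / (tn + fp)
    accuracy = (tp + tn) / (tp + fn + tn + fp)
    return sensitivity, specificity, accuracy


# HYPOTHETICAL counts (170 malignant, 170 benign), for illustration only.
sens, spec, acc = binary_metrics(tp=137, fn=33, tn=149, fp=21)
print(round(sens * 100), round(spec * 100), round(acc * 100))  # 81 88 84
```

Because the test set is balanced, accuracy equals the mean of sensitivity and specificity, which is consistent with the reported values up to rounding.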

Figure 2.

The receiver operating characteristic (ROC) curve of the proposed model is plotted, where the confidence threshold of 0.5 is shown by a red point on the curve.

Discussion

A deep learning model is proposed in this study to detect skin cancer in dermoscopy images. These images included melanoma and non-melanoma cancers. The proposed system achieved high performance (AUC=0.91) in distinguishing malignant from benign lesions. These results demonstrate the strength of deep learning in detecting cancer. Dermatologists’ performance was not measured in this work, so it could not be compared with the model's accuracy. However, previous studies [ 3 ] have shown that skin cancer detection by human experts is subject to significant error. Since performance depends strongly on the dataset, it is not possible to compare the results of this work with the performance achieved by dermatologists in other studies. Thus, in future work, we intend to collect the opinions of dermatologists in order to compare their performance with that of the model on the same dataset.

The performance of deep learning models depends strongly on the amount of training data. Since the majority of images in the HAM10000 dataset are benign, only 3400 images were used in this study so that the data comprise an equal number of benign and malignant images. Despite the limited amount of training data, the proposed model achieved high accuracy in discriminating benign and malignant lesions. Nevertheless, larger training sets are expected to enhance the performance of the model significantly. Therefore, the main challenge in this area is the lack of a large, comprehensive public database of skin lesion images.

The threshold for the confidence score of the CNN was set to 0.5 in this analysis. However, the threshold can be adjusted based on user preference. For example, if it is more important not to miss cancerous lesions than to avoid misidentifying benign lesions as malignant, then the user should reduce the threshold to improve sensitivity at the expense of specificity. On the ROC curve, this moves the operating point toward higher sensitivity and lower specificity, i.e. up and to the right when the horizontal axis is 1 − specificity.
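The effect of the threshold can be illustrated with a small Python sketch. The scores and labels below are made-up toy values, not outputs of the proposed model, and `sens_spec_at` is a hypothetical helper that labels an image malignant when its confidence score is at or above the threshold.

```python
def sens_spec_at(scores, labels, threshold):
    """Return (sensitivity, specificity) at a given confidence threshold.

    labels: 1 = malignant, 0 = benign.
    An image is predicted malignant when score >= threshold.
    """
    tp = sum(1 for s, y in zip(scores, labels) if y == 1 and s >= threshold)
    fn = sum(1 for s, y in zip(scores, labels) if y == 1 and s < threshold)
    tn = sum(1 for s, y in zip(scores, labels) if y == 0 and s < threshold)
    fp = sum(1 for s, y in zip(scores, labels) if y == 0 and s >= threshold)
    return tp / (tp + fn), tn / (tn + fp)


# TOY data for illustration only: five malignant, then five benign images.
scores = [0.95, 0.80, 0.62, 0.45, 0.35, 0.70, 0.40, 0.30, 0.20, 0.10]
labels = [1, 1, 1, 1, 1, 0, 0, 0, 0, 0]

print(sens_spec_at(scores, labels, 0.5))  # (0.6, 0.8)
print(sens_spec_at(scores, labels, 0.3))  # (1.0, 0.4)
```

In this toy example, lowering the threshold from 0.5 to 0.3 catches every malignant case but flags more benign lesions, which is the trade-off a screening-oriented user would accept.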

The proposed approach can be deployed in computer-aided detection systems to assist dermatologists in identifying skin cancer. Moreover, it can be implemented in smartphones and applied to skin lesion photographs taken by patients. This allows for early detection of cancer, especially for those without access to doctors. Early diagnosis can significantly facilitate treatment and improve the chance of survival.

Conclusion

This paper proposed a deep convolutional neural network for skin cancer identification from dermoscopy images. Data included melanoma and non-melanoma malignancies. The results indicated the strength of deep learning in skin cancer detection. This approach can be employed in smartphones to allow self-diagnosis of skin cancer. Moreover, it can be implemented in computer-aided systems to assist dermatologists in the detection of malignant lesions.

Footnotes

Conflict of Interest: None

References

- 1. Xu YG, Aylward JL, Swanson AM, Spiegelman VS, Vanness ER, Teng JM, et al. Nonmelanoma skin cancers: basal cell and squamous cell carcinomas. Abeloff’s Clinical Oncology. 2020:1052–73. doi: 10.1016/B978-0-323-47674-4.00067-0.

- 2. Tschandl P, Rosendahl C, Kittler H. The HAM10000 dataset, a large collection of multi-source dermatoscopic images of common pigmented skin lesions. Sci Data. 2018;5:180161. doi: 10.1038/sdata.2018.161.

- 3. Esteva A, Kuprel B, Novoa RA, Ko J, Swetter SM, Blau HM, et al. Dermatologist-level classification of skin cancer with deep neural networks. Nature. 2017;542(7639):115–8. doi: 10.1038/nature21056.

- 4. Masood A, Ali Al-Jumaily A. Computer aided diagnostic support system for skin cancer: a review of techniques and algorithms. Int J of Biomed Imaging. 2013:323268. doi: 10.1155/2013/323268.

- 5. Burroni M, Corona R, Dell’Eva G, Sera F, Bono R, Puddu P, et al. Melanoma computer-aided diagnosis: reliability and feasibility study. Clin Cancer Res. 2004;10(6):1881–6. doi: 10.1158/1078-0432.ccr-03-0039.

- 6. Rosado B, Menzies S, Harbauer A, Pehamberger H, Wolff K, Binder M, et al. Accuracy of computer diagnosis of melanoma: a quantitative meta-analysis. Arch of Dermatol. 2003;139(3):361–7. doi: 10.1001/archderm.139.3.361.

- 7. Ameri A. EMG-based wrist gesture recognition using a convolutional neural network. Teh Univ Med J TUMS Publications. 2019;77(7):434–9.

- 8. Ameri A, Akhaee MA, Scheme E, Englehart K. Regression convolutional neural network for improved simultaneous EMG control. J Neural Eng. 2019;16(3):036015. doi: 10.1088/1741-2552/ab0e2e.

- 9. Ameri A, Akhaee MA, Scheme E, Englehart K. Real-time, simultaneous myoelectric control using a convolutional neural network. PloS One. 2018;13(9):e0203835. doi: 10.1371/journal.pone.0203835.

- 10. Krizhevsky A, Sutskever I, Hinton GE. Imagenet classification with deep convolutional neural networks. Communications of the ACM. 2017;60(6):84–90.