Abstract

Introduction

Value-based healthcare delivery models have emerged to address the unprecedented pressure on long-term health system performance and sustainability and to respond to the changing needs and expectations of patients. Implementing and scaling the benefits from these care delivery models to achieve large-system transformation are challenging and require consideration of complexity and context. Realist studies enable researchers to explore factors beyond ‘what works’ towards a more nuanced understanding of ‘what tends to work for whom under which circumstances’. This research proposes a realist study of the implementation approach for seven large-system, value-based healthcare initiatives in New South Wales, Australia, to elucidate how different implementation strategies and processes stimulate the uptake, adoption, fidelity and adherence of initiatives to achieve sustainable impacts across a variety of contexts.

Methods and analysis

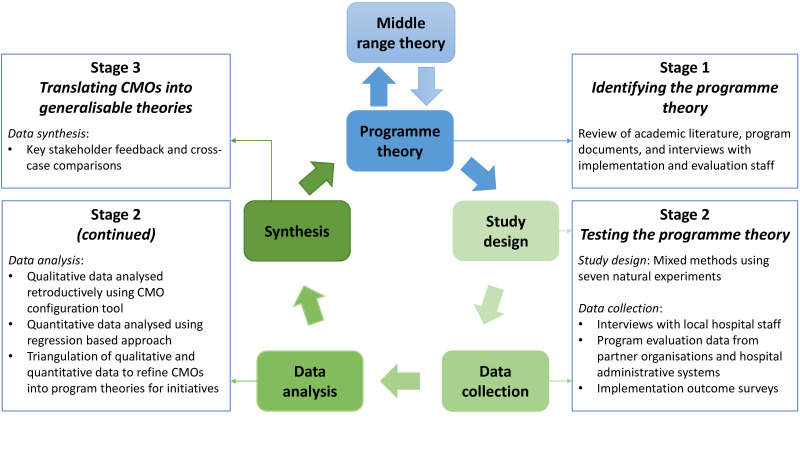

This exploratory, sequential, mixed methods realist study will follow the RAMESES II (Realist And Meta-narrative Evidence Syntheses: Evolving Standards) reporting standards for realist studies. Stage 1 will formulate initial programme theories from a review of existing literature, analysis of programme documents and qualitative interviews with programme designers, implementation support staff and evaluators. Stage 2 will test and refine these hypothesised programme theories through qualitative interviews with local hospital network staff running initiatives, and analyses of quantitative data from the programme evaluation, hospital administrative systems and an implementation outcome survey. Stage 3 will produce generalisable middle-range theories by synthesising data from context–mechanism–outcome configurations across initiatives. Qualitative data will be analysed retroductively, and quantitative data will be analysed to identify relationships between the implementation strategies and processes, and implementation and programme outcomes. Mixed methods triangulation will be performed.

Ethics and dissemination

Ethical approval has been granted by Macquarie University (Project ID 23816) and Hunter New England (Project ID 2020/ETH02186) Human Research Ethics Committees. The findings will be published in peer-reviewed journals. Results will be fed back to partner organisations, and roundtable discussions will be held with other health jurisdictions to share learnings.

Keywords: health services administration & management, heart failure, diabetic foot, general diabetes, end stage renal failure, bone diseases

Strengths and limitations of this study.

Each of the value-based healthcare initiatives is based on evidence-informed clinical models of care, allowing our investigation to focus on understanding the implementation strategies and processes that facilitate effective adoption and scaling.

These seven natural experiments span many sites and patient conditions, creating the opportunity to explore diverse implementation strategies across a variety of settings.

This research proposes a realist study, a methodological approach that provides a deep understanding of how complex implementation strategies and processes achieve a variety of outcomes for different populations, given varied circumstances and timelines.

This study uses a mixed methods design to create optimal conditions for iterative exploration and rigour when developing programme implementation theories.

Using retrospective data to complement prospectively collected data may require some assumptions, which could be a limitation depending on the direction and level of uncertainty surrounding them.

Introduction

Background

Globally, healthcare systems face unprecedented pressures on long-term performance and sustainability.1–3 Substantial challenges include the ageing of populations, growing prevalence of chronic conditions, increased rates of hospital utilisation, rising costs of new medical technologies and medicines, and inefficient allocation of scarce resources.4–6 Healthcare spending consumes an increasingly large proportion of government expenditure worldwide,7 without always delivering commensurate improvements in outcomes.8 This is evidenced by findings that only around 60% of care aligns with current evidence, while around 30% is considered low value and 10% results in harm.9 Further, patient expectations of healthcare are rising, and there is a developing understanding of how subjective patient outcomes, such as satisfaction and experience, can influence how people use the system and benefit from it.10 11 In response, governments and policy makers have turned their focus to optimising access, efficiency and quality of care through system-wide transformation.12–15

Value-based healthcare

Value-based healthcare, a concept that has received substantial attention recently, is described as a shift away from ‘volume-oriented’ systems (activity as a primary measure of performance) towards those that additionally focus on the ‘value’ of outcomes achieved.16 17 In New South Wales (NSW), Australia, the core principles of value-based healthcare expressly consider ‘what value means for patients, clinicians and the health system’ and aim ‘to provide health services that deliver value across four domains: improved health outcomes; improved experiences of receiving care; improved experiences of providing care; and better effectiveness and efficiency of care’.18 These principles are considered compatible with ensuring system sustainability while keeping patient-centred care front of mind.18 How best to generate value is expressed differently across the academic literature,19–21 and value is unlikely to be achieved entirely by realigning healthcare funding models alone. Efforts to date have largely focused on implementing evidence-based models that attempt to align delivery of the right care in the right setting at the right time, to reduce unwarranted practice variation and to promote patient-centred care.18

In NSW, Australia, the orientation towards value-based healthcare is focused on the ‘quadruple aim’ approach that prioritises resources towards improving population health, improving the experience of those receiving and providing care, and managing available resources and the per capita costs of care.22 Several state-wide programmes are designed to scale and support change through a range of system-wide approaches for targeted cohorts of people. Leading Better Value Care (LBVC) is one of those programmes; it is positioned as a value-based healthcare programme for specific conditions and commenced public health system-wide implementation across more than 100 health facilities in NSW from 2017 to 2018. The programme's principles involve clinicians, networks and organisations implementing best-practice, value-based care, treatment and management for chronic conditions.23 Seven of the eight Tranche One LBVC initiatives have been identified as having a high potential to avoid unnecessary hospitalisation (which is a focus of this work) and are supported by multiple organisations, including the NSW Ministry of Health at the macro-level, and the Agency for Clinical Innovation (ACI), Bureau of Health Information and the Clinical Excellence Commission at the meso-level. Further details regarding the aims of each initiative are provided in table 1.

Table 1.

Description of the seven Tranche One LBVC initiatives

| Initiative | Patient population | Description |
|---|---|---|
| Osteoarthritis chronic care programme | People with diagnosed osteoarthritis of the knee or hip | Aims to improve daily function and delay, avoid or improve recovery from knee or hip joint replacement surgery. The initiative involves expanding outpatient-based multidisciplinary conservative treatment options and supporting self-management strategies, including exercise, injury avoidance and encouragement of weight loss with drug therapies. |
| Osteoporosis refracture prevention | People aged 50 years and older who present to hospital with an osteoporotic fracture | Aims to prevent refractures for people with osteoporosis by improving identification of conditions underlying minimal trauma fractures and streamlining case management processes. The initiative includes case management that supports patient access to medical consultations, community-based care and refracture education. Enhanced primary care management and fewer refractures are intended to reduce hospital admissions. |
| Chronic heart failure | People aged over 18 years admitted with symptoms suggestive of chronic heart failure | Aims to reduce 28-day readmission and 30-day mortality by reducing unwarranted variation from best practice, enhancing prevention, and improving the management and mitigation of risks for people with chronic heart failure. These aims are to be achieved by improving early, accurate diagnosis, exacerbation management, transfer of care to multidisciplinary teams and palliative care. Multidisciplinary coordination of acute and primary care is leveraged to support self-management, including preventing acute exacerbation of chronic heart failure. |
| Chronic obstructive pulmonary disease | Acute admitted patients aged 40 years and older with COPD | Aims to reduce 28-day readmission and 30-day mortality by reducing unwarranted clinical variation and optimising lung function for people with COPD, with the goal of preventing deterioration that leads to acute episodes and hospital admission. The initiative involves patient education, chronic disease self-management and pulmonary rehabilitation with conventional drug therapies. |
| Inpatient management of diabetes mellitus | Acute admitted patients aged 16 years and older with diabetes requiring subcutaneous insulin management | Glycaemic instability puts inpatients with diabetes at greater risk of infections and other complications. This initiative aims to reduce the length of hospital admission for people with diabetes requiring subcutaneous insulin management by optimising glucose management. The initiative involves improving the capacity of junior medical officers and general ward staff to manage insulin and glucose, and improving access to inpatient diabetes management teams, including safe transfers and standardised identification processes. |
| Diabetes high-risk foot service | People over 15 years of age with diabetic foot-related infections and/or ulcers of the foot or lower limb, including diabetes-related foot ulceration, infection and acute Charcot’s neuropathy | Aims to improve treatment and patient outcomes and reduce complications and associated hospitalisations for people with diabetic foot-related infections and/or ulcers of the foot or lower limb by enhancing equitable access to best-practice preventative care and management. The initiative involves outpatient-based high-risk foot services that incorporate a multidisciplinary team approach and follow treatment guidelines, and aims to reduce state-wide variation in access to services. |
| Renal supportive care | People with chronic kidney disease or end-stage kidney disease who are deciding whether to pursue renal replacement therapy (RRT), are conservatively managed without RRT, are receiving RRT but experiencing symptoms that affect quality of life, or are withdrawing from dialysis | Aims to enhance patient (and carer) experience by supporting outpatient-based symptom management and palliative care for people with chronic and end-stage kidney disease. The initiative involves the Renal Supportive Care model of patient (and carer) support regardless of whether patients embark on, or cease, RRT, backed by advance care and palliative care planning. |

Source: Adapted and reproduced with permission from NSW Health, Leading Better Value Care, viewed 21 May 2020, <https://www.health.nsw.gov.au/Value/lbvc/Pages/default.aspx>.

COPD, chronic obstructive pulmonary disease.
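To make the readmission aims in table 1 concrete, the sketch below shows one simplified way a 28-day readmission rate could be computed from admission and discharge dates. It is an illustration under our own assumptions: every stay is treated as an index stay, any next admission within 28 days of discharge counts as a readmission, and the function name and records are invented. Official NSW indicator definitions may apply additional inclusion and exclusion rules that are not modelled here.

```python
from collections import defaultdict
from datetime import date


def readmission_rate(stays, window_days=28):
    """Fraction of index stays followed by another admission within `window_days` of discharge.

    `stays` is an iterable of (patient_id, admission_date, discharge_date) tuples.
    Simplifying assumptions (hypothetical, not an official definition): every stay
    is an index stay, and only the patient's next admission is checked.
    """
    by_patient = defaultdict(list)
    for patient_id, admitted, discharged in stays:
        by_patient[patient_id].append((admitted, discharged))

    index_stays = readmissions = 0
    for patient_stays in by_patient.values():
        patient_stays.sort()  # chronological order by admission date
        for i, (_, discharged) in enumerate(patient_stays):
            index_stays += 1
            if i + 1 < len(patient_stays):
                gap = (patient_stays[i + 1][0] - discharged).days
                if 0 <= gap <= window_days:
                    readmissions += 1
    return readmissions / index_stays if index_stays else 0.0


# Invented example records
stays = [
    ("P1", date(2021, 1, 1), date(2021, 1, 5)),
    ("P1", date(2021, 1, 20), date(2021, 1, 25)),  # 15 days after discharge: readmission
    ("P2", date(2021, 1, 3), date(2021, 1, 10)),
    ("P2", date(2021, 3, 1), date(2021, 3, 4)),    # 50 days after discharge: not counted
]
print(readmission_rate(stays))  # 1 of 4 index stays -> 0.25
```

A fuller analysis would also need to handle deaths and stays whose 28-day follow-up window extends beyond the end of the data extract.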

While the initiatives focus on the organisation and delivery of care for specific patient cohorts, the overarching implementation strategies are based on guiding principles that span the initiatives. The initiatives aim to create shared priorities between healthcare organisations through an alignment framework that monitors care processes and outcomes against best-practice guidelines. NSW hospital networks (referred to as Local Health Districts, which encompass public hospitals, health institutions and services for defined geographical areas of the State) are responsible for designing local improvement plans that involve executive sponsorship, governance and leadership structures. Underpinning the implementation of the LBVC programme are resources provided by the supporting organisations in the form of project management, system-level enablers, funding for local implementation and an online collaboration portal, as part of a multilevel implementation package outlined in table 2. While the LBVC projects seek to focus on best clinical practice and value-based care,18 important variations in context, and how these interact with the change mechanisms that drive implementation outcomes, present unique challenges for implementing this system-wide programme at scale. Understanding these interactions is important for guiding how similar large-system projects can be scaled up and replicated in other local contexts.

Table 2.

Summary of the multilevel implementation package for the Leading Better Value Care programme

| Level |
|---|
| Macro-level policy and system drivers |
| Meso-level implementation support agency working across health service organisations |
| Hospital-level local implementation |

Implementation and scaling of value-based care initiatives

The LBVC initiatives seek to accelerate best clinical practice and value-based care, but the challenge lies in identifying how best to implement change at scale in complex adaptive healthcare systems. Healthcare systems are complex, adaptive, dynamic webs of vast numbers of interactions between healthcare professionals, administrators, support staff, patients, their families and a multitude of associated stakeholders.24–26 There are few linear pathways, making implementation of any project a challenging endeavour. Along with the multitude of factors and interactions encountered when navigating complex healthcare systems, multiple aspects of implementation must also be considered. Much research effort is devoted to building the evidence base for particular models of care, in the form of clinical efficacy and effectiveness.27 However, less attention is paid to improving implementation outcomes, which are distinct from service system outcomes and clinical treatment outcomes and measure the effects of deliberate and purposive actions to implement new treatments, practices and services.28 These measures focus on the acceptability, adoption, appropriateness, implementation costs, feasibility, fidelity, penetration and sustainment of implementing innovations (see online supplemental Appendix 1 for implementation outcome taxonomy), and are inherently linked to concepts of cost-effectiveness (as they are closely related to process measures used in economic evaluations and to outcomes that are valued by patients). A focus on improving these outcomes is required to understand how the implementation and scaling of evidence-based models of care occur. Therefore, it is imperative that we improve our understanding of local system dynamics, generative patterns of outcomes and conditional causal mechanisms for the implementation of large-system, value-based care transformation programmes, to inform health system improvement efforts.

The evidence base for how to implement change will therefore not take the form of ‘what works’. Rather, it will be best placed to provide a broad understanding of ‘what tends to work, for whom, in what circumstances, how and why’.


Realist study

The level of complexity that is intrinsic to implementing large-system, value-based healthcare transformations presents a challenge to the use of traditional research methods. Healthcare operates in an open system, where regularities between events cannot always be probabilistically specified or measured.29 Therefore, it is important to apply methodologies that situate change as a conditional, contextually dependent and time-sensitive process that occurs across multilevel, nested and dynamic systems.30 Realist studies, which take an approach grounded in the philosophy of scientific realism,31 are based on the assumption that programmes or initiatives work under certain conditions and are heavily influenced by the way that different stakeholders respond to them (see glossary of terms; table 3). A form of theory-driven research, realist studies seek to understand how a programme works, or is expected to work, within specific contexts, and what conditions may hinder or promote successful outcomes.32–34 A realist approach is ideal for complex, large-system implementation programmes,35 as it acknowledges the non-linearity and context specificity of change processes in complex adaptive health systems.36 37 This theory-driven method is increasingly being used to study the implementation of complex interventions within health systems.38

Table 3.

Glossary of key terms for implementation science, complexity theory and realist studies

| Term | Definition |
|---|---|
| Implementation science | The scientific study of processes to translate research evidence into practice, understanding what influences translational outcomes, and evaluating the adoption of interventions.26 |
| Implementation outcome | The effects of deliberate actions to implement an innovation.28 |
| Complexity | The behaviour embedded in highly composite systems or models of systems with large numbers of interacting components;26 the complexity of a behaviour is equal to the length of its description.77 |
| Adaptation | The capacity to adjust to internal and external circumstances; usually thought of in terms of modifying behaviours over time.26 |
| Systems | A group of interacting elements that form a unified functional whole.26 |
| Complex adaptive system | A dynamic, self-similar collective of interacting, adaptive agents and their artefacts.26 |
| Realist study | A theory-driven approach based on a realist philosophy of science that is used to evaluate ‘what works, for whom, under what circumstances and how’, under the assumption that complex programmes and interventions work differently under certain circumstances.31 34 41 |
| Programme theory | Description of what is supposed to be carried out in the implementation of programmes (theory of action) and how and why that is expected to work (theory of change).78 79 |
| Context–mechanism–outcome configuration | Proposition-building set of possible explanatory relationships between the components of realist studies: (C) context or circumstances; (M) mechanisms or underlying social processes; (O) outcomes or results.80 81 |
| Middle-range theory | Consists of limited sets of assumptions from which specific hypotheses are logically derived and confirmed by empirical investigation.82 These theories are considered more abstract and generalisable than ‘programme theories’ but do not themselves constitute a ‘grand social theory’; instead, they are considered adaptive and cumulative explanations. |

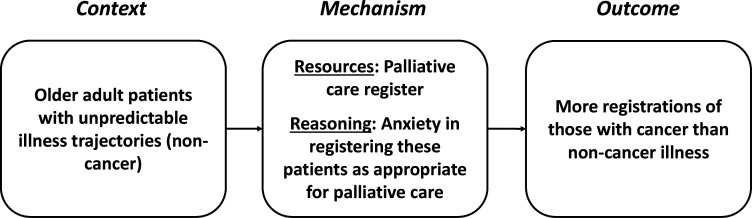

Realist studies assert that programmes to implement healthcare improvements are ‘theories incarnate’;34 that is, the roll-out of programmes always has a theoretical underpinning, whether explicit or not. Therefore, it is important to make these theories explicit, from the perspectives of many actors. These programme theories are usually described as configurations between contexts (circumstances under which programme implementation works), mechanisms (generative causes of how programme implementation contributes to benefits) and outcomes (the intended consequences of programme implementation) (see figure 1 for an example context–mechanism–outcome (CMO) configuration, adapted and reproduced from Dalkin et al39 under a Creative Commons License, http://creativecommons.org/licenses/by/4.0/). When focusing on the science of how a programme was implemented, rather than on the programme itself, questions arise as to how ‘outcomes’ should be defined, whether process ‘outputs’ should be categorised separately from outcomes, and which of these to include in a CMO configuration. Arguably, configurations of system and implementation efforts (context, actors, processes, content or object of the intervention, and implementation outcomes) could first be observed, then assessed on how they relate to endpoint patient and health outcomes. The process of theory refinement within a realist study would ideally inform the way these propositions are configured.
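As an illustration only, a CMO configuration can be recorded as a small structured object so that propositions from different initiatives can later be compared and refined. The sketch below is a minimal Python representation under our own assumptions: the class name `CMOConfiguration`, its fields and the example values are hypothetical and are not drawn from the LBVC programme theories or from figure 1.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CMOConfiguration:
    """One hypothesised context-mechanism-outcome proposition (hypothetical structure)."""

    initiative: str  # which initiative the proposition is about
    context: str     # circumstances under which implementation works
    mechanism: str   # generative cause triggered in that context
    outcome: str     # intended or unintended consequence
    sources: tuple[str, ...] = ()  # supporting evidence, e.g. interview or document IDs

    def as_statement(self) -> str:
        """Render the configuration as a single 'In C, M, leading to O' sentence."""
        return f"In {self.context}, {self.mechanism}, leading to {self.outcome}."


# Invented example; not a finding of this study
cmo = CMOConfiguration(
    initiative="Hypothetical initiative A",
    context="sites with visible executive sponsorship",
    mechanism="staff perceive the change as a local priority",
    outcome="higher adoption of the care pathway",
    sources=("interview-01", "document-03"),
)
print(cmo.as_statement())
```

Making the record immutable (`frozen=True`) means a refined theory would be stored as a new configuration, for example via `dataclasses.replace`, rather than overwriting the earlier version, which keeps the history of iterative refinement visible.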

Figure 1.

Example context–mechanism–outcome configuration for why UK primary care practices placed fewer patients with non-cancer illness on their palliative care integrated care pathway register, compared with those with cancer illness.

Within a realist paradigm, context is more than a setting or place; rather, it is anything that influences whether, how and why the mechanism will work. The epistemological assumption guiding realist studies is that programme implementation triggers ‘mechanisms’ of change differently, depending on the context. In realism, mechanisms are considered the ‘underlying entities, processes, or social structures which operate in particular contexts to generate outcomes of interest’,40 and it is these mechanisms that trigger change, rather than the programme implementation itself.15 Comparisons between CMO configurations can be used to formulate generalisable theoretical models (middle-range theories) for how and in what circumstances programme implementation achieves different outcomes. Delivering transferable models for how to implement large-system, value-based healthcare initiatives not only benefits their ongoing application within current contexts, but can also inform how improvement initiatives can be scaled to other contexts, jurisdictions and settings.

Aims

The aim of this research is to undertake a realist study of the implementation of seven value-based healthcare initiatives. Specifically, we will develop and refine programme theories that unpack how varying strategies, contextual features and change mechanisms led to different implementation and programme outcomes. From this, we will build a nuanced model of the factors, conditions and levers that stimulate and inform the spread of local, multiagency, evidence-informed improvement projects into sustainable, nationwide impacts. These aims will be achieved by answering the research question: When implementing a large-system, state-wide value-based care programme to reduce unwarranted variation and improve patient-centred care, what tends to work, how, why, for whom, to what extent, in what circumstances and over what duration? The specific objectives of the study are to:

Identify the change strategies, processes and theorised mechanisms through which implementation of the seven value-based care initiatives influenced implementation and programme outcomes.

Investigate the impact of different contextual circumstances and agents on the mechanisms of initiative implementation over varying timelines.

Develop macro-level, meso-level and micro-level implementation models for successful value-based initiatives in healthcare, based on a mix of implementation strategies and mechanisms in specific contexts that achieve desired implementation outcomes.

Methods and analysis

Study design

This study will apply an exploratory, sequential, mixed methods realist study design, following the RAMESES II (Realist And Meta-narrative Evidence Syntheses: Evolving Standards) reporting standards for realist studies.41 Data will be collated from numerous sources to posit, test and refine hypothesised theories for how implementation strategies and processes lead to intended or unintended outcomes, depending on the influence of various contextual factors (eg, structure, relations, culture).42–45 We will use both qualitative and quantitative data to uncover these patterns of outcomes in different contexts and to explicate the mechanisms for implementing value-based healthcare initiatives. Our iterative, three-stage model is depicted in figure 2 (source: adapted from Pawson et al34 and Panmunthygala et al46; CMO, context–mechanism–outcome). Table 4 shows the three stages of the study and summarises each stage’s objective, the focal outcomes, sources of data and analysis approaches. Data collection for this study will commence in November 2020 and will be completed by July 2022.

Figure 2.

Three stages of the realist study and proposed process. CMO, context–mechanism–outcome configurations.

Table 4.

Relationship between data source, analysis approaches and research objectives

| Stage | Data source | Data analysis | Objectives |
|---|---|---|---|
| 1 | Qualitative | Qualitative | (a) Identify the change strategies, processes and theorised mechanisms through which implementation of the seven value-based care initiatives influenced implementation and programme outcomes. (b) Investigate the impact of different contextual circumstances and agents on the mechanisms of initiative implementation over varying timelines. |
| 2 | Qualitative and quantitative | Qualitative and quantitative | |
| 3 | Data from stages 1, 2 and 3 | Mixed methods | (c) Develop macro-level, meso-level and micro-level implementation models for successful value-based initiatives in healthcare, based on a mix of implementation strategies and mechanisms in specific contexts that achieve desired implementation outcomes. |

Stage 1: identifying the initial programme theories

In stage 1, we will identify initial theories for how the value-based care initiatives are implemented into routine care. This stage will be informed by a realist synthesis of the literature on large-system hospital interventions (in preparation). Realist,47 48 exploratory semistructured interviews with programme implementers and evaluators, and analysis of documents from the macro-level and meso-level implementation support agencies, will be used to map the actual process of implementation as it was done (work as done), compared with how it was planned (work as imagined).49 This information will be used to refine existing programme logics and process models50 for the implementation of value-based care initiatives. Additionally, relevant aspects of the social and environmental context identified during the interviews and document review will be collated and developed into determinant frameworks50 to structure the analysis of the hypothesised CMO configurations. Through this mapping exercise, we will uncover the underlying system dynamics (relationships between agents, their attributes and rules of behaviour, network structure, feedback loops) that influence the implementation.

Stage 2: testing the hypothesised programme theories

During stage 2, the focus will shift from developing theories to testing theories and refining meta-narratives for the configuration of implementation support across initiatives. A mixed methods approach using realist, exploratory semistructured interviews with local hospital network staff, quantitative project evaluation data and implementation outcome measure surveys will be used to test and refine the CMOs constructed in stage 1. This stage will follow a convergent (complementary qualitative and quantitative datasets) model, integrating and merging separately analysed data through side-by-side comparison and contrast.51–54 The convergent model will include capturing narrative and experiential information from local hospital network staff to highlight the mechanisms of implementation in different contexts, and variations in outcomes from the value-based care initiatives. These will be tested against the hypothesised initial programme theories by eliciting comments to confirm, refute or refine aspects of the theory. Concurrently with these qualitative approaches, we will examine the quantitative relationships between implementation processes and the relevant implementation and programme outcomes for different populations, circumstances and time points, according to the programme theories. Explicating these relationships between variables will build on the structure and process models created in stage 1, to articulate generative or conditional causal models.55

Stage 3: translating CMOs into generalisable theoretical models for implementation (middle-range theories)

The process of testing programme theories in stage 2 will lead to the refinement of CMOs for the seven value-based care initiatives, which will be translated into generalisable theoretical models (middle-range theories) for implementing large-system, value-based care programmes in stage 3. The process of translation will begin by presenting and defending CMOs to key stakeholders. Further testing will be undertaken by explicitly seeking disconfirming or contradictory data and considering other interpretations that might account for the same findings. Cross-case comparisons will then be used to determine how the same mechanism could produce different outcomes in different contexts and settings. The research team will review our realist findings for each initiative (including programme theories and CMO configurations) ‘vertically’ to identify common thematic elements according to context, mechanism and outcomes. Data will also be analysed across case studies ‘horizontally’ to uncover potentially generative causal patterns or regularities between mechanisms and outcomes under certain contextual conditions. This process will eventually translate the specifics of each individual initiative into more analytically generalised abstract theories.
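The ‘vertical’ and ‘horizontal’ reviews can be pictured as two groupings of the same set of refined CMO configurations: vertically by initiative, and horizontally by mechanism across initiatives. The horizontal view flags mechanisms that appear in more than one initiative but are linked to different outcomes in different contexts, as candidates for middle-range theorising. The sketch below assumes CMOs are stored as simple (initiative, context, mechanism, outcome) tuples; the function names and records are invented placeholders, not study findings.

```python
from collections import defaultdict

# Hypothetical refined CMO records: (initiative, context, mechanism, outcome)
refined_cmos = [
    ("Initiative A", "strong executive sponsorship", "shared local ownership", "sustained adoption"),
    ("Initiative A", "high staff turnover", "shared local ownership", "partial adoption"),
    ("Initiative B", "established multidisciplinary team", "shared local ownership", "sustained adoption"),
    ("Initiative B", "limited data access", "feedback on performance", "low fidelity"),
]


def vertical(records):
    """Within-initiative view: group (context, mechanism, outcome) by initiative."""
    by_initiative = defaultdict(list)
    for initiative, context, mechanism, outcome in records:
        by_initiative[initiative].append((context, mechanism, outcome))
    return dict(by_initiative)


def horizontal(records):
    """Cross-initiative view: group (initiative, context, outcome) by mechanism."""
    by_mechanism = defaultdict(list)
    for initiative, context, mechanism, outcome in records:
        by_mechanism[mechanism].append((initiative, context, outcome))
    return dict(by_mechanism)


def candidate_regularities(records):
    """Mechanisms seen in more than one initiative whose outcome varies with context."""
    candidates = {}
    for mechanism, rows in horizontal(records).items():
        initiatives = {initiative for initiative, _, _ in rows}
        outcomes = {outcome for _, _, outcome in rows}
        if len(initiatives) > 1 and len(outcomes) > 1:
            candidates[mechanism] = sorted((context, outcome) for _, context, outcome in rows)
    return candidates


print(candidate_regularities(refined_cmos))
```

Here ‘shared local ownership’ would be flagged, because it recurs across two initiatives but is associated with sustained adoption in some contexts and only partial adoption under high staff turnover.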

Qualitative methods

Qualitative sampling and recruitment procedures

A purposive sample supported by snowballing will identify respondents best placed to cast light on the proposed programme theories. Interview invitation emails will be distributed from the investigators via the state-wide implementation support agencies and local hospital networks to identified staff involved in the implementation of initiatives. We intend to interview up to 30 implementers and evaluators from the programme implementation support agencies for stage 1, and up to 30 local hospital network staff in stage 2 (see table 4 outlining the data source for each stage). Realist studies rely less on the concept of data saturation because realist hypotheses are confirmed or abandoned using a multi-method strategy for relevance and rigour.31 56 Our iterative process of data collection will include the possibility of re-interviewing participants to further develop our programme theories as we become more knowledgeable about the programme through refinement against other data (documentary, interviews with other stakeholders and quantitative findings).34 48 57 Specific inclusion and exclusion criteria are provided in online supplemental Appendix 2.


Qualitative data collection

The realist interview builds knowledge of why what happens in natural settings varies, which helps to develop, test and refine theories. The interviewer–interviewee relationship adopts the teacher–learner cycle, beginning with the interviewer teaching ‘the particular programme theory under test’ to the interviewee; then ‘the respondent, having learnt the theory under test, is able to teach the interviewer about the components of a programme in a particularly informed way’.58 Interviews will take place either face-to-face at the interviewee’s workplace or another mutually agreed location, or via telephone or videoconference, depending on the participants’ preferences. The interview guides will be pilot tested with two stakeholders. Interviews will be approximately 30–45 min in duration and will be undertaken by three experienced qualitative researchers (MNS, EFA, CP). Information from public and internal programme documents that is relevant to the implementation of the seven value-based care initiatives will be reviewed to inform the development of programme theories.

Qualitative data analysis

All audio recordings will be transcribed verbatim and entered into NVivo V.12 for data management.59 Analysis will be undertaken by the researchers conducting the interviews, concurrently and continuously alongside data collection, which ensures early immersion in the data.33 Consistent with realist approaches, data analysis will be retroductive: it will oscillate between inductive and deductive logic across multiple data sources, and will incorporate the researchers’ own insights into generative causation for the programme theories.60 After data collection, qualitative analysts will read through and systematically analyse each transcript individually before engaging in group consensus-building around context–mechanism–outcome configurations (CMOs) and causal explanations.61 The analysts will then revisit our programme theories and refine them in light of the CMO data. Programme documents will be analysed alongside interview transcripts in NVivo to confirm or disconfirm our CMOs, adding a further, independent data source against which each CMO can be checked.
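The bookkeeping behind confirming or disconfirming CMOs against more than one data source can be sketched as follows. This is a hypothetical illustration, not an NVivo feature and not the authors' stated procedure. The `Evidence` record, the source labels and the `needs_review` rule (flag a CMO that is supported by fewer than two source types, or contradicted by any excerpt) are all assumptions introduced here. In a realist analysis, tallies like these would at most prompt the team's interpretive judgement about a CMO. They would never replace it.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Evidence:
    cmo_id: str     # hypothetical label for a candidate CMO configuration
    source: str     # data source type, e.g. "interview" or "document"
    supports: bool  # True if the excerpt supports the CMO, False if it disconfirms it


def summarise_evidence(evidence):
    """Count (supporting, disconfirming) excerpts per CMO, broken down by data source."""
    tally = defaultdict(lambda: defaultdict(lambda: [0, 0]))
    for e in evidence:
        counts = tally[e.cmo_id][e.source]
        counts[0 if e.supports else 1] += 1
    return {cmo: {src: tuple(c) for src, c in by_src.items()}
            for cmo, by_src in tally.items()}


def needs_review(summary, min_sources=2):
    """Hypothetical rule: flag CMOs supported by fewer than `min_sources` source types,
    or contradicted by any excerpt, for further group discussion."""
    flagged = []
    for cmo, by_src in summary.items():
        supporting_sources = sum(1 for sup, _ in by_src.values() if sup > 0)
        disconfirming = sum(dis for _, dis in by_src.values())
        if supporting_sources < min_sources or disconfirming > 0:
            flagged.append(cmo)
    return sorted(flagged)


evidence = [
    Evidence("CMO1", "interview", True),
    Evidence("CMO1", "document", True),
    Evidence("CMO2", "interview", True),
    Evidence("CMO3", "interview", True),
    Evidence("CMO3", "document", False),
]
print(needs_review(summarise_evidence(evidence)))  # ['CMO2', 'CMO3']
```

Here CMO1 is corroborated by both interviews and documents. CMO2 rests on interviews alone. CMO3 has a disconfirming document excerpt. So only the last two are flagged for the group to revisit.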

Quantitative methods

Programme evaluation data

Evaluation data relating to the implementation outcomes of value-based care initiatives, and to contextual factors affecting these processes, will be accessed by researchers with institutional approval from the partner organisations. These data will be sourced from programme roadmap milestone measures, performance reporting systems, fidelity audits, hospital culture surveys, patient and carer surveys, and quality of life measures. Deidentified, routinely collected administrative data related to programme implementation (eg, clinical processes indicative of implementation fidelity) will be extracted from the Enterprise Data Warehouse for Analysis, Reporting and Decisions (EDWARD), the mandated business intelligence standard that provides reports to NSW Health Local Health Districts to support their operations. The specific outcomes of interest will be identified during stage 1 of the realist study, depending on both the availability and the quality of the data.

Implementation outcome measure survey

The Acceptability of Intervention Measure (AIM), Intervention Appropriateness Measure (IAM) and Feasibility of Intervention Measure (FIM)62 will be administered via survey at a single time point across all local hospital networks. Each measure comprises four simple items written at a fifth-grade reading level and requires no specialist training to administer, score or interpret. Items are rated on a five-point Likert scale, and each measure is scored as the mean of its items. The AIM, IAM and FIM have each demonstrated strong psychometric properties.62 Local hospital network staff involved in the implementation of initiatives will be invited to take part in the survey by an email from the researchers, distributed via the programme implementation support agencies. The survey will be administered online using REDCap (Research Electronic Data Capture) tools hosted at Macquarie University.63
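The scoring rule described above (four items per measure, each rated on a five-point scale, scored as the item mean) can be sketched as below for a single respondent. This is a minimal sketch under stated assumptions. The responses are assumed to be coded 1 to 5 and complete; the handling of missing items is not specified here and is left out. The function names are illustrative only and do not come from REDCap or from the measures' developers.

```python
from statistics import mean

ITEMS_PER_MEASURE = 4
LIKERT_MIN, LIKERT_MAX = 1, 5  # assumed numeric coding of the five response options


def score_measure(items):
    """Score one measure (AIM, IAM or FIM) for one respondent as the mean of its four items."""
    if len(items) != ITEMS_PER_MEASURE:
        raise ValueError(f"expected {ITEMS_PER_MEASURE} item responses, got {len(items)}")
    if any(not LIKERT_MIN <= r <= LIKERT_MAX for r in items):
        raise ValueError(f"responses must lie between {LIKERT_MIN} and {LIKERT_MAX}")
    return mean(items)


def score_respondent(responses):
    """Map each measure name to its mean score for a single respondent."""
    return {measure: score_measure(items) for measure, items in responses.items()}


example = {"AIM": [4, 5, 4, 4], "IAM": [3, 4, 4, 3], "FIM": [5, 5, 4, 5]}
print(score_respondent(example))  # {'AIM': 4.25, 'IAM': 3.5, 'FIM': 4.75}
```

Because each score is a simple mean, higher values indicate greater perceived acceptability, appropriateness or feasibility on the same 1 to 5 metric as the items. That shared metric is part of why no specialist training is needed to interpret the scores.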

Quantitative data analysis

Statistical analyses will be conducted using commonly available software packages to explore the impact of implementation strategies and processes on a range of implementation and programme outcomes, which will be identified during stage 1 of the realist study depending on the availability and quality of data. Descriptive statistics will be used to present continuous data as mean and standard deviation (SD), median and interquartile range (IQR), or sample minimum and maximum, and categorical data as frequencies and proportions. For the purposes of this realist study, programme implementation strategies and processes will generally be treated as independent variables, and implementation and programme outcomes as dependent variables. Interaction effects will be considered where appropriate.
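The descriptive summaries and the independent/dependent variable framing described above can be sketched with the Python standard library. This is illustrative only, because the protocol specifies 'commonly available software packages' without naming them. Several elements are assumptions: the function names, and the variables `strategy` and `context`, which stand in for a hypothetical implementation strategy measure and a hypothetical contextual moderator. The choice of a single two-way interaction term is also an assumption. The model is fitted by solving the ordinary least squares normal equations directly, which keeps the mechanics visible.

```python
import statistics
from collections import Counter


def describe_continuous(values):
    """Mean (SD), median (IQR) and sample minimum/maximum for a continuous variable."""
    q1, _, q3 = statistics.quantiles(values, n=4)  # 'exclusive' method by default
    return {
        "mean": statistics.mean(values),
        "sd": statistics.stdev(values),  # sample standard deviation
        "median": statistics.median(values),
        "iqr": (q1, q3),
        "min": min(values),
        "max": max(values),
    }


def describe_categorical(values):
    """Frequency and proportion of each category, most common first."""
    counts = Counter(values)
    total = len(values)
    return {level: (n, n / total) for level, n in counts.most_common()}


def interaction_design(strategy, context):
    """Design matrix rows: intercept, strategy, context and their product (interaction term)."""
    return [[1.0, s, c, s * c] for s, c in zip(strategy, context)]


def fit_ols(rows, y):
    """Ordinary least squares: solve the normal equations (X'X)b = X'y by Gauss-Jordan elimination."""
    k = len(rows[0])
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * yi for r, yi in zip(rows, y)) for i in range(k)]
    aug = [xtx[i] + [xty[i]] for i in range(k)]  # augmented matrix [X'X | X'y]
    for col in range(k):
        pivot = max(range(col, k), key=lambda r: abs(aug[r][col]))  # partial pivoting
        aug[col], aug[pivot] = aug[pivot], aug[col]
        p = aug[col][col]
        if abs(p) < 1e-12:
            raise ValueError("design matrix is rank deficient")
        aug[col] = [v / p for v in aug[col]]
        for r in range(k):
            if r != col:
                factor = aug[r][col]
                aug[r] = [a - factor * b for a, b in zip(aug[r], aug[col])]
    return [aug[i][k] for i in range(k)]


print(describe_continuous([3.5, 4.0, 4.25, 2.75, 4.5]))
print(describe_categorical(["yes", "no", "yes", "yes"]))

# Simulated, noise-free outcome with a known interaction: y = 1 + 2s + 3c + 4sc
strategy = [0, 1, 0, 1, 0, 1, 2, 2]
context = [0, 0, 1, 1, 2, 2, 0, 1]
outcome = [1 + 2 * s + 3 * c + 4 * s * c for s, c in zip(strategy, context)]
print(fit_ols(interaction_design(strategy, context), outcome))  # approx. [1.0, 2.0, 3.0, 4.0]
```

The fourth coefficient estimates how the association between the strategy and the outcome changes with the contextual variable. That change is what 'interaction effects' refers to in the analysis plan. For real analyses, a dedicated statistics package would be preferable, since it reports standard errors and uses numerically more stable decompositions than the normal equations.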

Patient and public involvement

The views of Australian public representatives from the NSW Ministry of Health and implementation support agencies were incorporated into the design of the study, from the stage of research grant funding application. The research questions and observations of interest were co-developed in consultation with the project partners, informed by their experience implementing the initiatives of interest for this study protocol. A Project Advisory Group was established with representatives from local hospital networks to support the design and recruitment process for the study, minimise time burden for participation and identify pathways for disseminating findings across the health system.

Ethics and dissemination

Ethical approval has been granted by the Macquarie University Human Research Ethics Committee (Project ID 23816) and the Hunter New England Human Research Ethics Committee (Project ID 2020/ETH02186). All interview participants will receive written information and provide informed consent before data collection takes place (online supplemental Appendix 3). All interview participants will be assured of anonymity and confidentiality throughout data management, handling and reporting. Ethical considerations regarding the use of routinely collected administrative data, where it is impractical to obtain individual informed consent, will be managed in accordance with the National Health and Medical Research Council (NHMRC) National Statement on Ethical Conduct in Human Research (2007, updated 2018).64

Data storage and retention

Electronic data will be stored on a password-protected university server and accessible only to members of the study team. Hard copy data will be stored in locked cabinets within secure offices. Data will be destroyed seven years after the date of the final peer-reviewed publication, in accordance with ethical guidelines.

Dissemination

A series of roundtables will be conducted across Commonwealth, State and Territory health systems in Australia to extend the benefits from this programme of work. Special attention will be paid to hospitals, local hospital networks and States as health system managers. The results from this project will be submitted for publication in peer-reviewed academic journals and will be presented at conferences and seminars with identifiable information omitted.

Discussion

This realist study will support the implementation of value-based healthcare improvement initiatives by drawing on our understanding of complex adaptive systems,65 66 evidence-based medicine67 and the emerging science of implementation.68 69 Successful system-wide improvement depends on identifying, learning from and capturing the benefits of local innovation, and on recognising that, in complex systems, local contexts are critical to success.24 70–73 By investigating programme implementation across varying populations, circumstances and time points, we aim to deliver a transferable model for implementing large-system improvements in healthcare. This evidence-based ‘road map’ of implementation practices is expected to enable local successes to be translated effectively into meaningful, system-wide change.

Realist studies are based on the premise that it is not possible to create and measure entirely closed-system experiments for healthcare research in real-world conditions. Protocols for controlled trials are usually produced to reduce the risk of outcome and reporting bias in the evaluation,74 75 and to enable replication.76 However, it is not possible to describe every detail of a realist study in advance, as its iterative and exploratory nature means that important factors for examination emerge as theory develops.41 Therefore, protocol deviation in realist studies does not necessarily constitute a risk of bias, given the inherent flexibility of realist study design. This realist study protocol will ensure rigour in planning, provide transparency of approach, support ethical considerations and make explicit the realist assumptions underpinning the project.

An important consideration for our realist study is articulating the bounds of inquiry and finding a level of abstraction that allows the building of theories that are generalisable to other settings and to the implementation of similar programmes. This work focuses specifically on the implementation of seven LBVC initiatives, which share consistent overarching goals and a suite of implementation strategies. The proposed realist study will provide insights into the science and practice of implementation only, and will not necessarily articulate the overall benefit or value of the identified initiatives. A parallel investigation is being conducted by the project team to identify, measure and test an economic benefits measurement framework for value-based healthcare, using these initiatives, that articulates the value from expected health outcomes and preferences for experience and equity (Partington et al, forthcoming). It is intended that the realist study outputs will be at a level of abstraction that allows observations ‘across’ programmes, to see where mechanisms are operating and how differing contexts lead to different outcomes, thereby supporting generalisation.
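Looking ‘across’ programmes for recurring mechanisms can be sketched as a simple grouping over coded CMO configurations. The sketch is hypothetical, and its elements are illustrative assumptions rather than part of the protocol. These include the `CMO` record, the initiative, context, mechanism and outcome labels, and the `min_initiatives` threshold. The grouping keeps mechanisms observed in at least two initiatives and lists the contexts and outcomes attached to each. A middle-range theory would then need to explain that cross-case pattern.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class CMO:
    initiative: str  # hypothetical initiative label
    context: str
    mechanism: str
    outcome: str


def recurring_mechanisms(cmos, min_initiatives=2):
    """Group CMO configurations by mechanism and keep those seen in at least
    `min_initiatives` distinct initiatives, listing the sorted
    (initiative, context, outcome) triples attached to each mechanism."""
    by_mechanism = defaultdict(list)
    for c in cmos:
        by_mechanism[c.mechanism].append(c)
    recurring = {}
    for mechanism, configs in by_mechanism.items():
        if len({c.initiative for c in configs}) >= min_initiatives:
            recurring[mechanism] = sorted((c.initiative, c.context, c.outcome) for c in configs)
    return recurring


# Hypothetical coded configurations, for illustration only
cmos = [
    CMO("Initiative A", "strong clinical leadership", "staff ownership", "high fidelity"),
    CMO("Initiative B", "high staff turnover", "staff ownership", "partial adoption"),
    CMO("Initiative A", "strong clinical leadership", "data feedback", "high fidelity"),
]
print(recurring_mechanisms(cmos))
```

In this toy example only 'staff ownership' recurs across initiatives. Its two configurations pair different contexts with different outcomes, which is the kind of contrast that helps explain how context conditions whether a mechanism fires.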

Supplementary Material

Footnotes

Twitter: @JanetCLong, @jfredlevesque, @HealthDataProf, @RClaywilliams, @DrDianeWatson, @RLystad, @kimlsutherland3

Contributors: JB, J-FL, DEW, HC, ROD, RM, FR, YT, RC-W and JW initiated the project partnership, conceptualised the project and obtained funding. JB, MNS, J-FL, EFA, AP, J-FL and DEW were responsible for the overall study design. Initial drafting of the protocol was by JB, MNS, EFA, J-FL, with input from all other authors (CP, HMN, WW, JW, RC-W, JW, ROD, JF-L, RM, FR, HC, YT, DEW, GA, PDH, RL, VM, GL, KS and RH). All authors read and approved the final manuscript.

Funding: This work was supported by the Medical Research Future Fund (MRFF) (APP1178554, CI Braithwaite). The funding arrangement ensured the funder has not and will not have any role in study design, collection, management, analysis and interpretation of data, drafting of manuscripts and decision to submit for publication.

Competing interests: None declared.

Patient consent for publication: Not required.

Provenance and peer review: Not commissioned; externally peer reviewed.

Supplemental material: This content has been supplied by the author(s). It has not been vetted by BMJ Publishing Group Limited (BMJ) and may not have been peer-reviewed. Any opinions or recommendations discussed are solely those of the author(s) and are not endorsed by BMJ. BMJ disclaims all liability and responsibility arising from any reliance placed on the content. Where the content includes any translated material, BMJ does not warrant the accuracy and reliability of the translations (including but not limited to local regulations, clinical guidelines, terminology, drug names and drug dosages), and is not responsible for any error and/or omissions arising from translation and adaptation or otherwise.

References

- 1. World Health Organization. Everybody’s business: strengthening health systems to improve health outcomes: WHO’s framework for action, 2007. Available: https://www.who.int/healthsystems/strategy/everybodys_business.pdf?ua=1 [Accessed 29 June 2020].

- 2. Organisation for Economic Co-operation and Development. Tackling wasteful spending on health. Paris, France: OECD, 2017.

- 3. World Economic Forum. Sustainable health systems. Visions, strategies, critical uncertainties and scenarios. Geneva, Switzerland: World Economic Forum, 2013.

- 4. Amalberti R, Vincent C, Nicklin W, et al. Coping with more people with more illness. Part 1: the nature of the challenge and the implications for safety and quality. Int J Qual Health Care 2019;31:154–8. 10.1093/intqhc/mzy235

- 5. Braithwaite J, Vincent C, Nicklin W, et al. Coping with more people with more illness. Part 2: new generation of standards for enabling healthcare system transformation and sustainability. Int J Qual Health Care 2019;31:159–63. 10.1093/intqhc/mzy236

- 6. Crisp N. What would a sustainable health and care system look like? BMJ 2017;358:j3895. 10.1136/bmj.j3895

- 7. Chang AY, Cowling K, Micah AE, et al. Past, present, and future of global health financing: a review of development assistance, government, out-of-pocket, and other private spending on health for 195 countries, 1995–2050. Lancet 2019;393:2233–60. 10.1016/S0140-6736(19)30841-4

- 8. Mafi JN, Parchman M. Low-value care: an intractable global problem with no quick fix. BMJ Qual Saf 2018;27:333–6. 10.1136/bmjqs-2017-007477

- 9. Braithwaite J, Glasziou P, Westbrook J. The three numbers you need to know about healthcare: the 60-30-10 challenge. BMC Med 2020;18:102. 10.1186/s12916-020-01563-4

- 10. Lateef F. Patient expectations and the paradigm shift of care in emergency medicine. J Emerg Trauma Shock 2011;4:163–7. 10.4103/0974-2700.82199

- 11. Choi C-J, Hwang S-W, Kim H-N. Changes in the degree of patient expectations for patient-centered care in a primary care setting. Korean J Fam Med 2015;36:103–12. 10.4082/kjfm.2015.36.2.103

- 12. Garling P. Special Commission of inquiry into acute care services in New South Wales public hospitals: inquiry into the circumstances of the appointment of Graeme Reeves by the former southern area health service. NSW Department of Premier and Cabinet, 2008.

- 13. Levinson W, Kallewaard M, Bhatia RS, et al. 'Choosing wisely': a growing international campaign. BMJ Qual Saf 2015;24:167–74. 10.1136/bmjqs-2014-003821

- 14. Stark Z, Boughtwood T, Phillips P, et al. Australian genomics: a federated model for integrating genomics into healthcare. Am J Hum Genet 2019;105:7–14. 10.1016/j.ajhg.2019.06.003

- 15. Best A, Greenhalgh T, Lewis S, et al. Large-system transformation in health care: a realist review. Milbank Q 2012;90:421–56. 10.1111/j.1468-0009.2012.00670.x

- 16. The Economist Intelligence Unit. Value-based healthcare: a global assessment, 2016. Available: https://perspectives.eiu.com/sites/default/files/EIU_Medtronic_Findings-and-Methodology_1.pdf [Accessed 29 June 2020].

- 17. Counte MA, Howard SW, Chang L, et al. Global advances in value-based payment and their implications for global health management education, development, and practice. Front Public Health 2018;6:379. 10.3389/fpubh.2018.00379

- 18. Koff E, Lyons N. Implementing value-based health care at scale: the NSW experience. Med J Aust 2020;212:e101:104–6. 10.5694/mja2.50470

- 19. Porter ME. What is value in health care? N Engl J Med 2010;363:2477–81. 10.1056/NEJMp1011024

- 20. Porter ME, Teisberg EO. Redefining health care: creating value-based competition on results. Boston, USA: Harvard Business Press, 2006.

- 21. Gray M. Value based healthcare. BMJ 2017;356:j437. 10.1136/bmj.j437

- 22. Berwick DM, Nolan TW, Whittington J. The triple aim: care, health, and cost. Health Aff 2008;27:759–69. 10.1377/hlthaff.27.3.759

- 23. NSW Health. NSW Health strategic priorities: 2018-19. Sydney, Australia: NSW Health, 2018.

- 24. Braithwaite J, Churruca K, Ellis LA. Can we fix the uber-complexities of healthcare? J R Soc Med 2017;110:392–4. 10.1177/0141076817728419

- 25. Braithwaite J, Churruca K, Ellis LA, et al. Complexity science in healthcare: aspirations, approaches, applications and accomplishments: a white paper. Sydney, Australia: Macquarie University, 2017.

- 26. Braithwaite J, Churruca K, Long JC, et al. When complexity science meets implementation science: a theoretical and empirical analysis of systems change. BMC Med 2018;16:63. 10.1186/s12916-018-1057-z

- 27. Glasgow RE, Vinson C, Chambers D, et al. National Institutes of Health approaches to dissemination and implementation science: current and future directions. Am J Public Health 2012;102:1274–81. 10.2105/AJPH.2012.300755

- 28. Proctor E, Silmere H, Raghavan R, et al. Outcomes for implementation research: conceptual distinctions, measurement challenges, and research agenda. Adm Policy Ment Health 2011;38:65–76. 10.1007/s10488-010-0319-7

- 29. Fleetwood S. The critical realist conception of open and closed systems. J Econ Methodol 2017;24:41–68. 10.1080/1350178X.2016.1218532

- 30. Westhorp G. Using complexity-consistent theory for evaluating complex systems. Evaluation 2012;18:405–20. 10.1177/1356389012460963

- 31. Pawson R. The science of evaluation: a realist manifesto. London, England: Sage Publications, 2013.

- 32. Jagosh J, Pluye P, Macaulay AC, et al. Assessing the outcomes of participatory research: protocol for identifying, selecting, appraising and synthesizing the literature for realist review. Implement Sci 2011;6:24. 10.1186/1748-5908-6-24

- 33. Pawson R. Evidence-based policy: a realist perspective. London, England: Sage Publications, 2006.

- 34. Pawson R, Tilley N. Realistic evaluation. London, England: Sage Publications, 1997.

- 35. Clay-Williams R, Braithwaite J. Reframing implementation as an organisational behaviour problem: inside a teamwork improvement intervention. J Health Organ Manag 2015;29:670–83.

- 36. Marchal B, van Belle S, van Olmen J, et al. Is realist evaluation keeping its promise? A review of published empirical studies in the field of health systems research. Evaluation 2012;18:192–212. 10.1177/1356389012442444

- 37. Plsek PE, Greenhalgh T. The challenge of complexity in health care. BMJ 2001;323:625–8.

- 38. Randell R, Greenhalgh J, Hindmarsh J, et al. Integration of robotic surgery into routine practice and impacts on communication, collaboration, and decision making: a realist process evaluation protocol. Implement Sci 2014;9:52. 10.1186/1748-5908-9-52

- 39. Dalkin SM, Greenhalgh J, Jones D, et al. What's in a mechanism? Development of a key concept in realist evaluation. Implement Sci 2015;10:49. 10.1186/s13012-015-0237-x

- 40. Astbury B, Leeuw FL. Unpacking black boxes: mechanisms and theory building in evaluation. Am J Eval 2010;31:363–81. 10.1177/1098214010371972

- 41. Wong G, Westhorp G, Manzano A, et al. RAMESES II reporting standards for realist evaluations. BMC Med 2016;14:96. 10.1186/s12916-016-0643-1

- 42. Mukumbang FC, Van Belle S, Marchal B, et al. Realist evaluation of the antiretroviral treatment adherence club programme in selected primary healthcare facilities in the metropolitan area of Western Cape Province, South Africa: a study protocol. BMJ Open 2016;6:e009977. 10.1136/bmjopen-2015-009977

- 43. Pawson R, Greenhalgh T, Harvey G, et al. Realist review: a new method of systematic review designed for complex policy interventions. J Health Serv Res Policy 2005;10 Suppl 1:21–34. 10.1258/1355819054308530

- 44. Herepath A, Kitchener M, Waring J. A realist analysis of hospital patient safety in Wales: applied learning for alternative contexts from a multisite case study. Health Serv Deliv Res 2015;3:1–242. 10.3310/hsdr03400

- 45. Smith M. Testable theory development for small-n studies: critical realism and middle-range theory. Int J Inf Technol Syst Approach 2010;3:41–56.

- 46. Ranmuthugala G, Cunningham FC, Plumb JJ, et al. A realist evaluation of the role of communities of practice in changing healthcare practice. Implement Sci 2011;6:49. 10.1186/1748-5908-6-49

- 47. Mukumbang FC, Marchal B, Van Belle S, et al. Using the realist interview approach to maintain theoretical awareness in realist studies. Qual Res 2019.

- 48. Manzano A. The craft of interviewing in realist evaluation. Evaluation 2016;22:342–60. 10.1177/1356389016638615

- 49. Braithwaite J, Wears RL, Hollnagel E. Resilient health care, volume 3: reconciling work-as-imagined and work-as-done. Boca Raton, FL: CRC Press, Taylor & Francis Group, 2016.

- 50. Nilsen P. Making sense of implementation theories, models and frameworks. Implement Sci 2015;10:53. 10.1186/s13012-015-0242-0

- 51. Rapport F, Hogden A, Faris M, et al. Qualitative research in healthcare: modern methods, clear translation: a white paper. Sydney, Australia: Macquarie University, 2018.

- 52. Mirzoev T, Etiaba E, Ebenso B, et al. Study protocol: realist evaluation of effectiveness and sustainability of a community health workers programme in improving maternal and child health in Nigeria. Implement Sci 2016;11:83. 10.1186/s13012-016-0443-1

- 53. Ozawa S, Pongpirul K. 10 best resources on … mixed methods research in health systems. Health Policy Plan 2014;29:323–7. 10.1093/heapol/czt019

- 54. Pommier J, Guével M-R, Jourdan D. Evaluation of health promotion in schools: a realistic evaluation approach using mixed methods. BMC Public Health 2010;10:43. 10.1186/1471-2458-10-43

- 55. Greenhalgh T, Humphrey C, Hughes J, et al. How do you modernize a health service? A realist evaluation of whole-scale transformation in London. Milbank Q 2009;87:391–416. 10.1111/j.1468-0009.2009.00562.x

- 56. Mason M. Sample size and saturation in PhD studies using qualitative interviews. Forum Qual Soc Res 2010;11:3.

- 57. Emmel N. Sampling and choosing cases in qualitative research: a realist approach. London, England: Sage Publications, 2013.

- 58. Pawson R, Tilley N. Realistic evaluation. British Cabinet Office, 2004. Available: http://www.communitymatters.com.au/RE_chapter.pdf [Accessed 29 July 2020].

- 59. QSR International. NVivo qualitative data analysis software, 1999.

- 60. Gilmore B, McAuliffe E, Power J, et al. Data analysis and synthesis within a realist evaluation: toward more transparent methodological approaches. Int J Qual Methods 2019;18:1–11. 10.1177/1609406919859754

- 61. De Brún A, McAuliffe E. Identifying the context, mechanisms and outcomes underlying collective leadership in teams: building a realist programme theory. BMC Health Serv Res 2020;20:1–13. 10.1186/s12913-020-05129-1

- 62. Weiner BJ, Lewis CC, Stanick C, et al. Psychometric assessment of three newly developed implementation outcome measures. Implement Sci 2017;12:108. 10.1186/s13012-017-0635-3

- 63. Harris PA, Taylor R, Thielke R, et al. Research electronic data capture (REDCap): a metadata-driven methodology and workflow process for providing translational research informatics support. J Biomed Inform 2009;42:377–81. 10.1016/j.jbi.2008.08.010

- 64. National Health and Medical Research Council, Australian Research Council and Universities Australia. National statement on ethical conduct in human research: 2007 (updated 2018). Canberra: Commonwealth of Australia, 2018.

- 65. Braithwaite J, Runciman WB, Merry AF. Towards safer, better healthcare: harnessing the natural properties of complex sociotechnical systems. Qual Saf Health Care 2009;18:37–41. 10.1136/qshc.2007.023317

- 66. Braithwaite J. Changing how we think about healthcare improvement. BMJ 2018;361:k2014. 10.1136/bmj.k2014

- 67. Runciman WB, Hunt TD, Hannaford NA, et al. CareTrack: assessing the appropriateness of health care delivery in Australia. Med J Aust 2012;197:100–5. 10.5694/mja12.10510

- 68. Sarkies MN, Bowles K-A, Skinner EH, et al. The effectiveness of research implementation strategies for promoting evidence-informed policy and management decisions in healthcare: a systematic review. Implement Sci 2017;12:132. 10.1186/s13012-017-0662-0

- 69. Rapport F, Clay-Williams R, Churruca K, et al. The struggle of translating science into action: foundational concepts of implementation science. J Eval Clin Pract 2018;24:117–26. 10.1111/jep.12741

- 70. Braithwaite J, Mannion R, Matsuyama Y, et al. Health systems improvement across the globe: success stories from 60 countries. London, England: CRC Press, 2017.

- 71. Braithwaite J, Matsuyama Y, Johnson J. Healthcare reform, quality and safety: perspectives, participants, partnerships and prospects in 30 countries. London, England: CRC Press, 2017.

- 72. Braithwaite J, Mannion R, Matsuyama Y, et al. Healthcare systems: future predictions for global care. London, England: CRC Press, 2018.

- 73. Sarkies MN, Skinner EH, Bowles K-A, et al. A novel counterbalanced implementation study design: methodological description and application to implementation research. Implement Sci 2019;14:45. 10.1186/s13012-019-0896-0

- 74. McNamee D. Protocol reviews at the Lancet. Lancet 1997;350:6. 10.1016/S0140-6736(05)66237-X

- 75. Jones G, Abbasi K. Trial protocols at the BMJ. BMJ 2004;329:1360. 10.1136/bmj.329.7479.1360

- 76. Loscalzo J. Experimental irreproducibility: causes, (mis)interpretations, and consequences. Circulation 2012;125:1211.

- 77. Siegenfeld AF, Bar-Yam Y. An introduction to complex systems science and its applications. Complexity 2020;2020:1–16. 10.1155/2020/6105872

- 78. Funnell SC, Rogers PJ. Purposeful program theory: effective use of theories of change and logic models. Hoboken, USA: John Wiley & Sons, 2011.

- 79. RAMESES II Project. “Theory” in realist evaluation, 2017. Available: https://www.ramesesproject.org/media/RAMESES_II_Theory_in_realist_evaluation.pdf [Accessed 29 July 2020].

- 80. Jackson SF, Kolla G. A new realistic evaluation analysis method: linked coding of context, mechanism, and outcome relationships. Am J Eval 2012;33:339–49.

- 81. de Souza DE. Elaborating the context-mechanism-outcome configuration (CMOc) in realist evaluation: a critical realist perspective. Evaluation 2013;19:141–54. 10.1177/1356389013485194

- 82. Pawson R. Middle-range realism. Eur J Sociol 2000;41:283–325. 10.1017/S0003975600007050

Supplementary Materials

bmjopen-2020-044049supp001.pdf (38KB, pdf)

bmjopen-2020-044049supp002.pdf (23.2KB, pdf)

bmjopen-2020-044049supp003.pdf (687.6KB, pdf)