Abstract

Our contribution is a unified cross-modality feature disentangling approach for multi-domain image translation and multiple organ segmentation. Using CT as the labeled source domain, our approach learns to segment multi-modal (T1-weighted and T2-weighted) MRI having no labeled data. Our approach uses a variational auto-encoder (VAE) to disentangle the image content from style. The VAE constrains the style feature encoding to match a universal prior (Gaussian) that is assumed to span the styles of all the source and target modalities. The extracted image style is converted into a latent style scaling code, which modulates the generator to produce multi-modality images according to the target domain code from the image content features. Finally, we introduce a joint distribution matching discriminator that combines the translated images with task-relevant segmentation probability maps to further constrain and regularize image-to-image (I2I) translations. We performed extensive comparisons to multiple state-of-the-art I2I translation and segmentation methods. Our approach resulted in the lowest average multi-domain image reconstruction error of 1.34±0.04. Our approach produced an average Dice similarity coefficient (DSC) of 0.85 for T1w and 0.90 for T2w MRI for multi-organ segmentation, which was highly comparable to a fully supervised MRI multi-organ segmentation network (DSC of 0.86 for T1w and 0.90 for T2w MRI).

Keywords: Multi-domain translation, Disentangled networks, Unsupervised multi-modal MRI segmentation, Abdominal organs

1. Introduction

Magnetic resonance imaging guided radiation therapy treatments require accurate segmentation, which is currently done by clinicians [1]. Despite the availability of in-treatment-room imaging, the lack of fast segmentation methods means that this imaging cannot be used to adapt treatments and precisely target tumors. Deep learning methods cannot be directly applied to MRI as large expert-labeled datasets are lacking. Therefore, we developed an unsupervised multi-domain MRI (T1-weighted, T2-weighted) segmentation approach by using CT as a source domain. Our approach performs parameter-efficient multi-domain adaptation without requiring multiple one-to-one domain adaptation networks. Cross-domain adaptation has been successfully used for one-to-one domain adaptation and medical image segmentation [2-4]. These methods produce image-to-image (I2I) translations using generative adversarial networks (GANs) [5], including the cycle GAN [6]. GANs map random noise into output images by modeling the intensity distribution of a target domain. But GANs make two unrealistic assumptions for model convergence: the discriminators have infinite capacity to drive the generators, and a very large number of training samples is available [7]. The work in [8] showed that appropriate priors such as Lipschitz densities are needed to constrain GAN training and avoid mode collapse. Bijection constraints, which regularize GAN training by matching a joint distribution of an image and a latent distribution [9, 10], have shown feasibility to model diverse intensity and color variations within the same modality. However, these constraints do not specify the dependency (or correlation) structure between the image and the latent distribution and are not guaranteed to cover the different modes in the required target task [11, 12].
Prior methods that combined image and feature-level constraints as in [13, 4, 6, 3] are intrinsically one-to-one mappers, and extension to multi-domain mapping would require a separate network for every pair of domains, which is not computationally feasible for practical applications.

Disentangling methods [14, 15], which extract a domain-invariant content and a domain-specific style, have shown generalizable classification performance on target domains while reducing mode collapse. Example approaches include task-relevant losses [11], domain classifier losses [16-18], and domain generalization methods that match style features with a known prior assumed to span a number of seen and unseen domains [12, 11]. However, in addition to requiring domain-specific style encoders [16, 17], these methods use image-level matching losses, which are insufficient to model translation of multiple organs that transform differently with respect to one another across the imaging domains.

Key improvements and differences:

We used one universal content encoder and one variational auto-encoder to extract image content and style code from multiple domains. The style code is converted into a vector of latent style scales that modulate the generator filters processing content features; the target domain code is injected into the generator for synthesizing the target modality. Our approach requires no additional networks for encoding domain-specific style features, and requires a similar number of networks and parameters as a one-to-one mapper. To the best of our knowledge, ours is the first approach to perform such scalable multi cross-modality adaptation for disparate medical imaging modalities. We improve upon mode-seeking constraints [19] to reduce mode collapse by introducing a joint distribution discriminator that combines images with their generated segmentation probability maps to compute domain mismatches. This preserves organ geometry and appearance.

Contributions:

A compact cross-modality feature disentangling approach for diverse medical imaging modalities adaptation,

An end-to-end multi-domain translation and unsupervised cross-modality segmentation network, and

A new joint distribution (image, segmentation map) discriminator to force preservation of multiple organ appearance on generated images.

2. Method

2.1. Notation:

Bold letters denote matrices, e.g., x, X; mapping functions are denoted by non-bold letters, e.g., Ec : xi → c; and vectors are indicated by italicized letters, e.g., d. {X1, …, XN} is the set of N domains, with images xi ∈ Xi, where H is the height and W is the width of the images. The domain code is represented using one-hot coding, and is denoted by dj ∈ d for domain j. The source domain is indicated by i and the target domain by j; the transformed image is the generator output for an image from domain i and the target domain code dj. The domain-invariant content encoder is denoted by Ec : xi → c, where c ∈ ℝ^(H′×W′×C), and {H′, W′, C} are the height and width of the convolved feature image and the number of content features, respectively. The style encoder is denoted by Es : xi → s, where s ∈ ℝ^(Cs) and Cs is the number of style features. G is the generator. Ls is the latent scale layer that maps the style code s into a vector of latent style scales fs ∈ ℝ^F, where F is the number of filters in the generator. Dc and D are the content and multi-modality GAN discriminators. Ds is the joint distribution (image, segmentation probability map) discriminator. Si is the segmentor for modality i.

2.2. Feature disentanglement and image translation

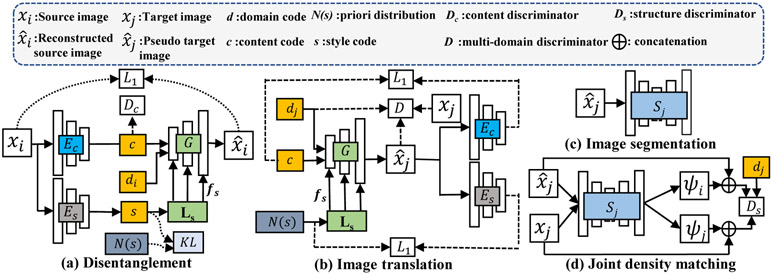

Style and content feature disentanglement

Disentangled image content and style features (Fig. 1(a)) are computed using a sequence of convolutional layers and a variational auto-encoder (VAE) [20], respectively. Assuming that a latent Gaussian prior spans the styles of all the domains, the encoder Es extracts a style code that is matched to this prior using the KL-divergence. The style code is then transformed into the latent style scale fs by a latent scale (LS) layer [21]. The latent style scale for each domain is learned. It modulates (as a multiplier) the strength of the various generator filters processing the image content features to produce the desired I2I translation. The vector of latent style scale outputs is shown as multiple outputs for LS in Fig. 1(a). These are combined with generator filters using residual blocks. The domain code is injected through channel-wise concatenation with the content code. A self-reconstruction loss is combined with the KL-divergence loss in the VAE as:

| (1) |

The domain-invariant content features are produced using adversarial training by:

| (2) |
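The KL-divergence matching and reparameterized style sampling described above can be illustrated with a minimal pure-Python sketch. It assumes a diagonal Gaussian posterior parameterized by `mu` and `logvar`, a standard normal prior, and an L1 self-reconstruction term; these choices and all function names are illustrative assumptions, not the exact formulation used in this work.

```python
import math
import random


def kl_to_standard_normal(mu, logvar):
    """KL( N(mu, diag(exp(logvar))) || N(0, I) ), summed over style dimensions."""
    return 0.5 * sum(math.exp(lv) + m * m - 1.0 - lv for m, lv in zip(mu, logvar))


def reparameterize(mu, logvar, rng=random):
    """Sample s = mu + sigma * eps with eps ~ N(0, 1) (reparameterization trick)."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, logvar)]


def l1_reconstruction(x, x_rec):
    """Mean absolute difference between an image and its self-reconstruction."""
    return sum(abs(a - b) for a, b in zip(x, x_rec)) / len(x)


def vae_loss(x, x_rec, mu, logvar, kl_weight=1.0):
    """Self-reconstruction term plus weighted KL term (kl_weight is an assumption)."""
    return l1_reconstruction(x, x_rec) + kl_weight * kl_to_standard_normal(mu, logvar)
```

The KL term is zero only when the encoded style distribution equals the prior, which is what pushes the styles of all modalities toward one shared Gaussian.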

Fig. 1:

Many-to-many domain translation and multi-domain MRI segmentation with joint distribution discriminator.

Image translation losses:

We compute content reconstruction, latent code regression, domain adversarial, and mode-seeking losses to constrain multi-domain I2I translation (Fig. 1(b)).

Content reconstruction loss forces the image content to be preserved when an image xi is transformed from domain i to domain j. This is computed as:

| (3) |

Latent code regression loss constrains the generator to produce a unique image mapping for a given latent code z. This is accomplished through a reverse mapping that produces point-wise estimates of the latent code as done in [9, 22]. The latent regression error is then computed as:

| (4) |

Domain adversarial loss is computed to distinguish the generated images from the distribution of the individual domains, using styles encoded from xj and styles sampled from the Gaussian prior. This is computed as:

| (5) |

Multi-domain translation is additionally stabilized by employing bias center instance normalization (BCIN) in both the generator and discriminators, as it has been shown to improve the consistency and diversity of the generated I2I transformations [23]. BCIN also allows the domain labels dj ∈ d to be directly injected into G and D, which eliminates additional computations otherwise needed for calculating a domain classification loss via the discriminator as done in [17, 22].

Mode seeking loss:

As proposed in [19], we compute a mode-seeking loss to prevent mode collapse, so that the chance of producing the same image from two different latent vectors is reduced. Briefly, given two random latent style codes z1, z2, an image xi ~ Xi, a target domain code dj, and a generator G, mode-seeking regularization maximizes the ratio of the distance between the generated images to the distance between the corresponding latent vectors as:

| (6) |
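The ratio maximized by the mode-seeking regularizer can be sketched as follows. This is a minimal pure-Python illustration that assumes mean absolute difference as the distance for both images and latent codes, and that turns the maximization into a minimization by taking the reciprocal of the ratio; the distance choice, the `eps` constant, and the function names are assumptions made for illustration.

```python
def mean_abs_diff(a, b):
    """Mean absolute difference between two equally sized flat sequences."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def mode_seeking_ratio(img1, img2, z1, z2):
    """Distance between generated images divided by distance between latent codes.

    z1 and z2 must differ, otherwise the latent distance is zero.
    """
    return mean_abs_diff(img1, img2) / mean_abs_diff(z1, z2)


def mode_seeking_loss(img1, img2, z1, z2, eps=1e-5):
    """Reciprocal of the ratio, so that minimizing the loss maximizes the ratio."""
    return 1.0 / (mode_seeking_ratio(img1, img2, z1, z2) + eps)
```

Two latent codes that produce nearly identical images give a small ratio and therefore a large loss, which penalizes collapsed outputs.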

Finally, a new joint distribution discriminator is employed, as described in the next subsection, to constrain the multi-domain translation for segmentation.

2.3. Segmentation

CT is the source domain containing expert segmentations, {Xi, Yi}, and the target MRI domains contain only unlabeled image sequences for training. Separate multi-organ segmentation networks Sj are trained for each target modality j (Fig. 1(c)) using CT images transformed to modality j with a randomly sampled style code z during segmentation training. Cross-entropy loss was used to optimize these networks as:

| (7) |
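As an illustration of the cross-entropy objective, the sketch below computes the mean pixel-wise negative log-likelihood from raw class scores. It is a pure-Python toy that assumes unweighted classes and flattened pixels; the function names are hypothetical and the actual training would use the framework's built-in loss.

```python
import math


def softmax(logits):
    """Numerically stable softmax over one pixel's class scores."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(logits_per_pixel, labels):
    """Mean negative log-likelihood of the true class over all pixels.

    logits_per_pixel: list of per-pixel class score lists.
    labels: list of integer class indices (0 = background).
    """
    losses = [-math.log(softmax(scores)[y]) for scores, y in zip(logits_per_pixel, labels)]
    return sum(losses) / len(losses)
```

Here the labels come from the expert CT segmentations, while the inputs are the CT images translated into the target MRI modality.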

Joint distribution structure discriminator

Prior work [4] has shown that modality hallucination occurs while transforming highly disparate modalities when GAN training is not regularized to model the structures of interest. Therefore, we introduce a new adversarially trained joint distribution structure discriminator Ds (Fig. 1(d)) that explicitly conditions image generation by focusing domain mismatch detection within the structures of interest. This is done by treating images and their segmentation probabilities as a joint distribution, implemented as channel-wise concatenation. Voxel-wise segmentation probability maps (accumulated across channels, excluding the 0-th channel that corresponds to the background label) for the CT-to-MRI translated images and for the unrelated real MRIs, ψj, are obtained from the SoftMax operation of the segmentation network Sj. Ds uses the domain code dj to compute domain mismatch as:

| (8) |
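The construction of the discriminator input described above can be sketched in a few lines. This pure-Python example assumes that the channel-wise accumulation is a sum of the organ-class softmax probabilities and that the image is single-channel and flattened; both are interpretations made for illustration, and the function names are hypothetical.

```python
def foreground_probability(class_probs):
    """Sum softmax probabilities over organ channels, skipping background (channel 0)."""
    return sum(class_probs[1:])


def joint_input(image, probs):
    """Concatenate an image with its foreground probability map along the channel axis.

    image: flat list of pixel intensities.
    probs: list of per-pixel class probability lists (index 0 = background).
    Returns [intensity_channel, probability_channel].
    """
    if len(image) != len(probs):
        raise ValueError("image and probability map must cover the same pixels")
    return [list(image), [foreground_probability(p) for p in probs]]
```

The same function would be applied to translated images and to real MRIs, so that the discriminator compares (image, segmentation probability) pairs rather than images alone.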

The total loss is computed as:

| (9) |
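A weighted sum of this kind can be sketched as below, using the weight values reported in the implementation details. The key names, the mapping of each weight to a specific loss term, and the default weight of 1 for terms without a listed weight (for example, the adversarial terms) are assumptions made for illustration.

```python
# Weight values as reported in the implementation details; the key names are
# hypothetical labels chosen for this sketch.
WEIGHTS = {"vae": 1.0, "c": 1.0, "lr": 10.0, "ms": 1.0, "st": 1.0, "seg": 5.0}


def total_loss(terms, weights=WEIGHTS, default_weight=1.0):
    """Weighted sum of named loss terms.

    terms: dict mapping a loss name to its scalar value.
    Terms without an entry in `weights` use `default_weight` (an assumption).
    """
    return sum(weights.get(name, default_weight) * value for name, value in terms.items())
```

For example, `total_loss({"vae": 0.5, "lr": 0.1, "seg": 0.2, "adv": 0.3})` weights the latent regression term by 10 and the segmentation term by 5, and leaves the unlisted `adv` term unscaled.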

2.4. Implementation details and network structure

All networks were implemented using the PyTorch library and were trained on an Nvidia Tesla V100 GPU with 16 GB memory. The Adam optimizer [24] with an initial learning rate of 2e-4 and a batch size of 1 was used during training. We set λvae=1, λc=1, λlr=10, λms=1, λst=1 and λseg=5 in the training. The learning rate was kept constant for the first 50 epochs and decayed to zero over the next 50 epochs. To ensure stable training, the encoders, G, and VAE networks are trained cooperatively and optimized at a different iteration than the discriminators and segmentors.
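The learning rate schedule can be sketched as a function of the epoch index. The example assumes zero-based epochs and a linear decay, since the shape of the decay is not stated; the function name and parameters are illustrative.

```python
def learning_rate(epoch, base_lr=2e-4, constant_epochs=50, decay_epochs=50):
    """Constant learning rate for the first phase, then linear decay to zero.

    epoch is zero-based; the linear decay shape is an assumption.
    """
    if epoch < constant_epochs:
        return base_lr
    remaining = constant_epochs + decay_epochs - epoch
    return base_lr * max(0.0, remaining / decay_epochs)
```

Under these assumptions the rate stays at 2e-4 through epoch 49, is halved by epoch 75, and reaches zero at epoch 100.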

The content encoder Ec is a fully convolutional network for projecting the images into a spatial feature map. The feature map retains the spatial structure by using a small output stride of 2. The style encoder Es is composed of several convolution and pooling layers followed by global pooling and fully connected layers, with the output layer implemented using the reparameterization trick as done in [20]. The latent scale module Ls consists of 5 fully connected layers with a tanh operation to produce style scale features within the range [−1, 1]. The generator G is composed of 5 residual blocks with BCIN used for domain code injection. The segmentation networks S are implemented using a standard U-Net [25]. The content discriminator Dc is implemented using PatchGAN. The joint density structure discriminator Ds and the multi-domain discriminator D are implemented using PatchGAN with BCIN used for domain injection. Details of all networks are included in the supplementary documents.
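The role of the latent scale module can be illustrated with a simplified sketch: a style code is mapped to one bounded scale per generator filter, and each filter response is multiplied by its scale. For brevity the example uses a single fully connected layer instead of five, and plain Python lists instead of tensors; the weights, shapes, and function names are hypothetical.

```python
import math


def latent_scale(style_code, weights, bias):
    """One fully connected layer followed by tanh.

    Maps a style code of length Cs to F scales in [-1, 1];
    weights has F rows of length Cs, bias has length F.
    """
    return [
        math.tanh(sum(w * s for w, s in zip(row, style_code)) + b)
        for row, b in zip(weights, bias)
    ]


def modulate(feature_maps, scales):
    """Multiply each of the F (flattened) feature maps by its scalar style scale."""
    return [[scale * v for v in fmap] for fmap, scale in zip(feature_maps, scales)]
```

Because tanh bounds the scales, a style code can attenuate or flip the sign of a filter response but cannot amplify it beyond its original magnitude.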

3. Experiments and Results

Dataset:

We used 20 MRIs (T1w and T2w MRI) from the Combined Healthy Abdominal Organ Segmentation (CHAOS) challenge data [26] and a completely different set of 30 patients with expert-segmented CT scans from [27] for the analysis. CHAOS CT scans only have liver segmentations. Ten MRIs were used in training (without expert segmentations) and validation (with expert segmentations) while the remaining 10 MRIs were held out for independent testing.

Networks were trained using 256×256 pixel image patches obtained from 14038 individual CT slices, and from 8000 T1w and 7872 T2w MRI slices, respectively. All MRIs were acquired on 1.5T Philips scanners, with a resolution of 256×256 pixels, pixel spacing of 1.36 mm to 1.89 mm, and slice thickness of 5.5 mm to 9 mm, and were processed with bias field correction. CTs had a resolution of 512×512 pixels, pixel spacing of 0.7 mm to 0.8 mm, and slice thickness of 3 mm to 3.2 mm. CT and MR image sets were normalized to the range −1 to +1 prior to training and testing.
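One common way to map intensities into the range −1 to +1 is min-max scaling with optional clipping, sketched below. The exact normalization used here is not specified, so the clipping bounds, the per-image minimum and maximum defaults, and the function name are assumptions.

```python
def normalize_to_unit_range(values, lo=None, hi=None):
    """Linearly map intensities to [-1, 1], clipping to [lo, hi] first.

    lo and hi default to the minimum and maximum of `values`
    (per-image scaling, an assumption).
    """
    lo = min(values) if lo is None else lo
    hi = max(values) if hi is None else hi
    if hi == lo:
        return [0.0 for _ in values]  # constant image: map everything to the midpoint
    return [2.0 * (min(max(v, lo), hi) - lo) / (hi - lo) - 1.0 for v in values]
```

Passing fixed `lo` and `hi` values applies the same intensity window to every scan, whereas the defaults rescale each image independently.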

Experiments:

Performance comparisons were done against state-of-the-art multi-domain disentanglement-based translation methods, DRIT++ [22] and StarGAN [17]. Translation accuracy was evaluated using cyclic reconstruction [8] of CT going through T1w and T2w MRI, measured by mean absolute error (MAE), and through t-SNE cluster distances between translated and real MRIs.
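The cyclic reconstruction metric can be sketched as a chain of translation steps followed by an MAE computation against the original image. The translators below are hypothetical callables standing in for the trained generator applied with different target domain codes.

```python
def mean_absolute_error(original, reconstructed):
    """Mean absolute intensity difference between two flattened images."""
    return sum(abs(a - b) for a, b in zip(original, reconstructed)) / len(original)


def cyclic_reconstruction_error(image, translators):
    """Apply a chain of translators (e.g., CT -> T1w -> CT) and compare to the input.

    translators: list of callables, each mapping an image to an image;
    the last one should map back to the source domain.
    """
    out = image
    for translate in translators:
        out = translate(out)
    return mean_absolute_error(image, out)
```

A one-step reconstruction uses two translators (to the target modality and back), and a two-step reconstruction uses three.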

Segmentation performance was compared against multiple translation-based segmentation methods, including CyCADA [13], CycleGAN [6], the variational auto-encoder based method UNIT [28], synergistic image and feature adaptation (SIFA) [29], and SynSeg [3]. Dice similarity coefficient (DSC) was used to measure accuracy. All methods were trained from scratch with identical image sets and subjected to reasonable hyperparameter optimization for equitable comparisons.
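For reference, the DSC for one organ label can be computed from two label maps as sketched below. The example assumes flattened integer label maps and returns 1.0 when the label is absent from both maps; that convention and the function name are choices made for this illustration.

```python
def dice(pred, truth, label):
    """Dice similarity coefficient for one label: 2|P ∩ T| / (|P| + |T|).

    pred, truth: flat sequences of integer labels of equal length.
    Returns 1.0 when the label is absent from both maps (a convention).
    """
    p = [v == label for v in pred]
    t = [v == label for v in truth]
    intersection = sum(1 for a, b in zip(p, t) if a and b)
    denom = sum(p) + sum(t)
    return 1.0 if denom == 0 else 2.0 * intersection / denom
```

An average DSC over organs, as reported in the tables, would be the mean of this value over the liver, spleen, and kidney labels.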

3.1. Results and discussion

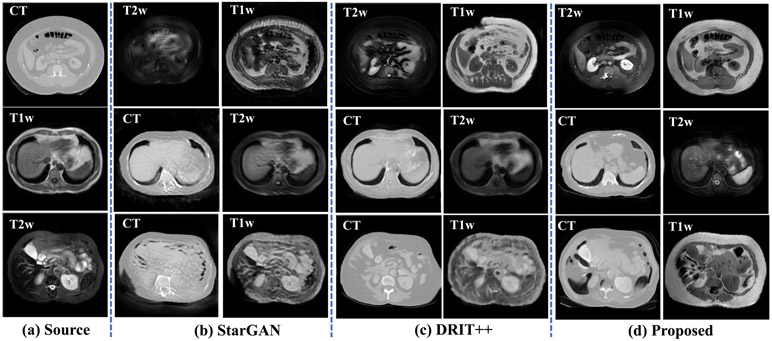

Image translation

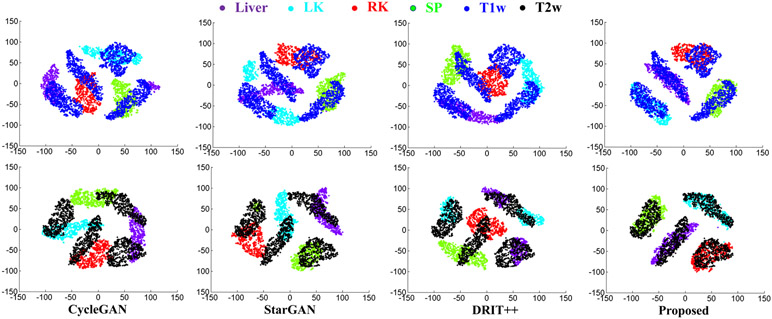

Fig. 2 shows example translations from all three modalities using our method and other methods. As shown, both StarGAN [17] and DRIT++ [22] produce less accurate translations for all three modalities compared with our method. Fig. 3 shows t-SNE clusters computed from the signal intensities of the various organs of interest using multiple methods, overlaid with the distribution of real T1w and T2w MRIs. The average cluster distance to the corresponding real MRI using our method was 5.05 and 14.00 for T2w and T1w MRIs, respectively. The average cluster distances of the comparison methods were much higher: 73.90 for T2w and 101.37 for T1w with CycleGAN [6], 73.39 for T2w and 77.49 for T1w with StarGAN [17], and 87.32 for T2w and 70.73 for T1w with DRIT++ [22]. Furthermore, as shown in Table 1, our method produced the lowest reconstruction error for one-step and two-step reconstructions.

Fig. 2:

Multi-domain many-to-many translation.

Fig. 3:

t-SNE clusters computed from the generated and real T1w, T2w MRI of organs.

Table 1:

Reconstruction Error.

| Method | One-step: CT→T1w→CT | One-step: CT→T2w→CT | Two-step: CT→T1w→T2w→CT | Two-step: CT→T2w→T1w→CT |
|---|---|---|---|---|
| StarGAN | 2.30±1.37 | 2.56±1.30 | 5.56±2.44 | 6.55±2.07 |
| DRIT++ | 2.32±1.00 | 2.53±1.08 | 6.13±1.40 | 6.04±2.23 |
| Proposed | 1.34±0.40 | 1.20±0.39 | 2.36±0.44 | 2.27±0.67 |

Segmentation

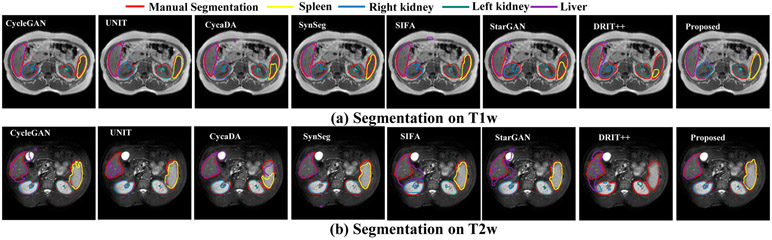

Table 2 shows the segmentation accuracies achieved by our method and the compared methods. We also evaluated the performance when a network trained on CT was directly applied to T1w and T2w MRIs. As seen, the performance worsens substantially when using the network trained on the CT dataset alone. We also evaluated our approach without the joint density structure discriminator in order to assess its contribution to segmentation performance. As shown, adding the joint density discriminator improved the segmentation performance. Overall, our approach produced an average accuracy of 0.85 for T1w and 0.90 for T2w MRI, which was higher than all other compared methods, only slightly lower than fully supervised training for T1w MRI (0.86), and equal to fully supervised training for T2w MRI (0.90). Fig. 4 shows example segmentations produced for T1w and T2w images using all the compared methods against expert segmentation.

Table 2:

Overall segmentation accuracy on CHAOS dataset (In-Phase). Liver-LV, Spleen-SP, Left kidney-LK, Right kidney-RK.

| Method | T1w LV | T1w SP | T1w LK | T1w RK | T1w Avg | T2w LV | T2w SP | T2w LK | T2w RK | T2w Avg |
|---|---|---|---|---|---|---|---|---|---|---|
| MRI supervised | 0.91 | 0.86 | 0.82 | 0.83 | 0.86 | 0.92 | 0.87 | 0.91 | 0.90 | 0.90 |
| CT only | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.01 | 0.08 | 0.29 | 0.22 | 0.15 |
| CycleGAN [6] | 0.82 | 0.83 | 0.63 | 0.61 | 0.72 | 0.86 | 0.75 | 0.88 | 0.87 | 0.84 |
| UNIT [28] | 0.89 | 0.81 | 0.64 | 0.62 | 0.74 | 0.87 | 0.76 | 0.91 | 0.88 | 0.86 |
| CyCADA [13] | 0.85 | 0.73 | 0.71 | 0.70 | 0.75 | 0.88 | 0.68 | 0.86 | 0.86 | 0.82 |
| SynSeg [3] | 0.89 | 0.85 | 0.73 | 0.70 | 0.79 | 0.88 | 0.77 | 0.89 | 0.85 | 0.85 |
| SIFA [29] | 0.90 | 0.85 | 0.77 | 0.78 | 0.83 | 0.89 | 0.77 | 0.90 | 0.89 | 0.86 |
| StarGAN [17] | 0.76 | 0.60 | 0.56 | 0.67 | 0.65 | 0.83 | 0.69 | 0.69 | 0.73 | 0.74 |
| DRIT++ [22] | 0.83 | 0.63 | 0.61 | 0.66 | 0.68 | 0.73 | 0.81 | 0.81 | 0.82 | 0.79 |
| Proposed − Ds | 0.89 | 0.79 | 0.74 | 0.73 | 0.79 | 0.87 | 0.86 | 0.91 | 0.89 | 0.88 |
| Proposed + Ds | 0.90 | 0.84 | 0.81 | 0.83 | 0.85 | 0.90 | 0.89 | 0.92 | 0.90 | 0.90 |

Fig. 4:

Segmentation results comparing the various methods to the proposed method.

Ablation tests

We evaluated the impact of the mode-seeking loss [19] and the joint distribution matching loss introduced in this work for T2w MRI segmentation. The two-step reconstruction error increased by 27% and 19%, while segmentation accuracy dropped by 8% and 7%, when removing the mode-seeking loss and the structure discriminator loss, respectively.

Discussion

We developed a multi cross-modality adaptation-based unsupervised segmentation approach that uses only a single encoder/decoder pair for producing one-to-many mappings. Prior feature disentanglement methods for medical image analysis used separate one-to-one mapping based segmentors [30, 29, 31], wherein each additional modality would require one additional encoder and decoder. Also, multi-domain adaptation was often done to handle scanner-related imaging variation within the same modality [30, 32, 31]. Our work also extends unsupervised image-level classification [14, 33] to unsupervised multi-domain, multiple organ segmentation. Our results showed improved segmentation and reconstruction performance against the compared methods.

4. Conclusion

We developed a multi-domain adversarial translation and segmentation method and applied it to unsupervised segmentation of multiple MRI sequences. We showed that universal multi-domain disentanglement using content and style extractors can produce reasonably accurate multi-domain translation and multiple organ segmentation.

Acknowledgement.

This work was supported by the MSK Cancer Center support grant/core grant P30 CA008748.

Footnotes

This paper has been accepted by MICCAI 2020.

References

- 1. Kupelian P, Sonke J: Magnetic-resonance guided adaptive radiotherapy: a solution to the future. Semin Radiat Oncol 24(3) (2014) 227–32

- 2. Chartsias A, Joyce T, Dharmakumar R, Tsaftaris SA: Adversarial image synthesis for unpaired multi-modal cardiac data. In: Intl Workshop on Simulation and Synthesis in Medical Imaging, Springer (2017) 3–13

- 3. Huo Y, Xu Z, Moon H, Bao S, Assad A, Moyo TK, Savona MR, Abramson RG, Landman BA: Synseg-net: Synthetic segmentation without target modality ground truth. IEEE Trans. Med. Imaging 38(4) (2018) 1016–1025

- 4. Jiang J, Hu YC, Tyagi N, Zhang P, Rimner A, Mageras GS, Deasy JO, Veeraraghavan H: Tumor-aware, adversarial domain adaptation from CT to MRI for lung cancer segmentation. In: Int Conf on Med Image Comput Comput Assisted Interv (MICCAI), Springer (2018) 777–785

- 5. Goodfellow I, Pouget-Abadie J, Mirza M, Xu B, Warde-Farley D, Ozair S, Courville A, Bengio Y: Generative adversarial nets. In: Advances in Neural Information Processing Systems (NeurIPS). (2014) 2672–2680

- 6. Zhu JY, Park T, Isola P, Efros A: Unpaired image-to-image translation using cycle-consistent adversarial networks. In: Int Conf Computer Vision (ICCV). (2017) 2223–2232

- 7. Arora S, Ge R, Liang Y, Ma T, Zhang Y: Generalization and equilibrium in generative adversarial nets (GANs). In: Int Conf Machine Learning (ICML). (2017) 224–232

- 8. Qi GJ: Loss-sensitive generative adversarial networks on Lipschitz densities. Intl J Computer Vision (2019) 1–23

- 9. Zhu JY, Zhang R, Pathak D, Darrell T, Efros AA, Wang O, Shechtman E: Toward multimodal image-to-image translation. In: Int Conf Neural Information Processing Systems (NeurIPS). (2017) 465–476

- 10. Donahue J, Krähenbühl P, Darrell T: Adversarial feature learning. CoRR abs/1605.09782 (2016)

- 11. Peng X, Huang Z, Sun X, Saenko K: Domain agnostic learning with disentangled representations. In: Int Conf on Machine Learning. (2019) 5102–5112

- 12. Liu A, Liu YC, Yeh YY, Wang YCF: A unified feature disentangler for multi-domain image translation and manipulation. In: Int Conf on Neural Information Processing Systems (NeurIPS). (2018) 2595–2604

- 13. Hoffman J, Tzeng E, Park T, Zhu JY, Isola P, Saenko K, Efros A, Darrell T: Cycada: Cycle-consistent adversarial domain adaptation. In: Int Conf on Machine Learning. (2018) 1989–1998

- 14. Huang X, Liu MY, Belongie S, Kautz J: Multimodal unsupervised image-to-image translation. In: Euro Conf Computer Vision (ECCV). (2018) 172–189

- 15. Liu Y, Yeh Y, Fu T, Wang S, Chiu W, Wang YF: Detach and adapt: Learning cross-domain disentangled deep representation. In: IEEE Conf on Computer Vision and Pattern Recognition (CVPR). (2018) 8867–8876

- 16. Lee HY, Tseng HY, Huang JB, Singh M, Yang MH: Diverse image-to-image translation via disentangled representations. In: Euro Conf on Computer Vision (ECCV). (2018) 35–51

- 17. Choi Y, Choi M, Kim M, Ha JW, Kim S, Choo J: Stargan: Unified generative adversarial networks for multi-domain image-to-image translation. In: IEEE Conf on Computer Vision and Pattern Recognition (CVPR). (2018) 8789–8797

- 18. Liu Y, Wang Z, Jin H, Wassell I: Multi-task adversarial network for disentangled feature learning. In: IEEE Conf on Computer Vision and Pattern Recognition (CVPR). (2018) 3743–3751

- 19. Mao Q, Lee H, Tseng H, Ma S, Yang M: Mode seeking generative adversarial networks for diverse image synthesis. In: IEEE Conf on Computer Vision and Pattern Recognition (CVPR). (2019) 1429–1437

- 20. Kingma DP, Welling M: Auto-encoding variational bayes. arXiv preprint arXiv:1312.6114 (2013)

- 21. Alharbi Y, Smith N, Wonka P: Latent filter scaling for multimodal unsupervised image-to-image translation. In: IEEE Conf on Computer Vision and Pattern Recognition (CVPR). (2019) 1458–1466

- 22. Lee HY, Tseng HY, Mao Q, Huang JB, Lu YD, Singh M, Yang MH: Drit++: Diverse image-to-image translation via disentangled representations. Intl J of Computer Vision (2020) 1–16

- 23. Yu X, Ying Z, Li T, Liu S, Li G: Multi-mapping image-to-image translation with central biasing normalization. arXiv preprint arXiv:1806.10050 (2018)

- 24. Kingma DP, Ba J: Adam: A method for stochastic optimization. Int Conf on Learning Representations (ICLR) (2014)

- 25. Ronneberger O, Fischer P, Brox T: U-net: Convolutional networks for biomedical image segmentation. In: Conf on Med Image Comput Comput Assist Interv (MICCAI), Springer (2015) 234–241

- 26. Ali E, Alper SM, Oğuz D, Mustafa B, Sinem NG: CHAOS - combined (CT-MR) healthy abdominal organ segmentation challenge data

- 27. Landman B, Xu Z, Iglesias J, Styner M, Langerak T, Klein A: MICCAI multi-atlas labeling beyond the cranial vault-workshop and challenge (2015)

- 28. Hou L, Agarwal A, Samaras D, Kurc TM, Gupta RR, Saltz JH: Unsupervised histopathology image synthesis. arXiv preprint arXiv:1712.05021 (2017)

- 29. Chen C, Dou Q, Chen H, Qin J, Heng PA: Synergistic image and feature adaptation: Towards cross-modality domain adaptation for medical image segmentation. In: Proc Conf on Artificial Intelligence (AAAI). Volume 33 (2019) 865–872

- 30. Yang J, Dvornek NC, Zhang F, Chapiro J, Lin M, Duncan JS: Unsupervised domain adaptation via disentangled representations: Application to cross-modality liver segmentation. In: Conf on Med Image Comput Comput Assist Interv (MICCAI), Springer (2019) 255–263

- 31. Chartsias A, Joyce T, Papanastasiou G, Semple S, Williams M, Newby D, Dharmakumar R, Tsaftaris SA: Factorised spatial representation learning: Application in semi-supervised myocardial segmentation. In: Intl Conf Medical Image Comput Comput Assisted Interv, Springer (2018) 490–498

- 32. Ouyang C, Kamnitsas K, Biffi C, Duan J, Rueckert D: Data efficient unsupervised domain adaptation for cross-modality image segmentation. In: Intl Conf Medical Image Comput Comput Assisted Interv, Springer (2017) 507–515

- 33. Li H, Pan SJ, Wang S, Kot AC: Domain generalization with adversarial feature learning. In: IEEE Conf on Computer Vision and Pattern Recognition. (2018) 5400–5409
