Abstract

The fate of scientific hypotheses often relies on the ability of a computational model to explain the data, quantified in modern statistical approaches by the likelihood function. The log-likelihood is the key element for parameter estimation and model evaluation. However, the log-likelihood of complex models in fields such as computational biology and neuroscience is often intractable to compute analytically or numerically. In those cases, researchers can often only estimate the log-likelihood by comparing observed data with synthetic observations generated by model simulations. Standard techniques to approximate the likelihood via simulation either use summary statistics of the data or are at risk of producing substantial biases in the estimate. Here, we explore another method, inverse binomial sampling (IBS), which can estimate the log-likelihood of an entire data set efficiently and without bias. For each observation, IBS draws samples from the simulator model until one matches the observation. The log-likelihood estimate is then a function of the number of samples drawn. The variance of this estimator is uniformly bounded, achieves the minimum variance for an unbiased estimator, and we can compute calibrated estimates of the variance. We provide theoretical arguments in favor of IBS and an empirical assessment of the method for maximum-likelihood estimation with simulation-based models. As case studies, we take three model-fitting problems of increasing complexity from computational and cognitive neuroscience. In all problems, IBS generally produces lower error in the estimated parameters and maximum log-likelihood values than alternative sampling methods with the same average number of samples. Our results demonstrate the potential of IBS as a practical, robust, and easy to implement method for log-likelihood evaluation when exact techniques are not available.

Author summary

Researchers often validate scientific hypotheses by comparing data with the predictions of a mathematical or computational model. This comparison can be quantified by the ‘log-likelihood’, a number that captures how well the model explains the data. However, for complex models common in neuroscience and computational biology, obtaining exact formulas for the log-likelihood can be difficult. Instead, the log-likelihood is usually approximated by simulating synthetic observations from the model (‘sampling’), and seeing how often the simulated data match the actual observations. To reach correct scientific conclusions, it is crucial that the log-likelihood estimates produced by such sampling methods are accurate (unbiased). Here, we introduce inverse binomial sampling (IBS), a method which differs from traditional approaches in that the number of samples drawn from the model is not fixed, but adaptively adjusted in a simple way. For each data point, IBS samples from the model until it matches the observation. We show that IBS is unbiased and has other desirable statistical properties, both theoretically and via empirical validation on three case studies from computational and cognitive neuroscience. Across all examples, IBS outperforms fixed sampling methods, demonstrating the utility of IBS as a practical, robust, and easy to implement method for log-likelihood evaluation.

This is a PLOS Computational Biology Methods paper.

Introduction

The likelihood function is one of the most important mathematical objects for modern statistical inference. Briefly, the likelihood function measures how well a model with a given set of parameters can explain an observed data set. For a data set of discrete observations, the likelihood has the intuitive interpretation of the probability that a random sample generated from the model matches the data, for a given setting of the model parameters.

In many scientific disciplines, such as computational neuroscience and cognitive science, computational models are used to give a precise quantitative form to scientific hypotheses and theories. Statistical inference then plays at least two fundamental roles for scientific discovery. First, our goal may be parameter estimation for a model of interest. Parameter values may have a significance in themselves, for example we may be looking for differences in parameters between distinct experimental conditions in a clinical or behavioral study. Second, we may be considering a number of competing scientific hypotheses, instantiated by different models, and we want to evaluate which model ‘best’ captures the data according to some criteria, such as explanation (what evidence the data provide in favor of each model?) and prediction (which model best predicts new observations?).

Crucially, the likelihood function is a key element for both parameter estimation and model evaluation. A principled method to find best-fitting model parameters for a given data set is maximum-likelihood estimation (MLE), which entails optimizing the likelihood function over the parameter space [1]. Other common parameter estimation methods, such as maximum-a-posteriori (MAP) estimation or full or approximate Bayesian inference of posterior distributions, still involve the likelihood function [2]. Moreover, almost all model comparison metrics commonly used for scientific model evaluation are based on likelihood computations, from predictive metrics such as Akaike’s information criterion (AIC; [3]), the deviance information criterion (DIC; [4]), the widely applicable information criterion (WAIC; [5]), leave-one-out cross-validation [6]; to evidence-based metrics such as the marginal likelihood [7] and (loose) approximations thereof, such as the Bayesian information criterion (BIC; [8]) or the Laplace approximation [7].

However, many complex computational models, such as those developed in computational biology [9–11], neuroscience [12, 13] and cognitive science [14], take the form of a generative model or simulator, that is an algorithm which given some context information and parameter settings returns one or more simulated observations (a synthetic data set). In those cases, the likelihood is often impossible to calculate analytically, and even when the likelihood might be available in theory, the numerical calculations needed to obtain it might be overwhelmingly expensive and intractable in practice. In such situations, the only thing one can do is to run the model to simulate observations (‘samples’). In the absence of a likelihood function, common approaches to ‘likelihood-free inference’ generally try and match summary statistics of the data with summary statistics of simulated observations [15, 16].

In this paper, we ask instead the question of whether we can use samples from a simulator model to directly estimate the likelihood of the full data set, without recurring to summary statistics, in a ‘correct’ and ‘efficient’ manner, for some specific definition of these terms. The answer is yes, as long as we use the right sampling method.

In brief, a sampling method consists of a ‘sampling policy’ (a rule that determines how long to keep drawing samples for) and an ‘estimator’ which converts the samples to a real-valued number. To estimate the likelihood of a single observation (e.g., the response of a participant on a single trial of a behavioral experiment), the most obvious sampling policy is to draw a fixed amount of samples from the simulator model, and the simplest estimator is the fraction of samples that match the observation (or is ‘close enough’ to it, for continuous observations). However, most basic applications, such as computing the likelihood of multiple observations, require one to estimate the logarithm of the likelihood, or log-likelihood (see Methods for the underlying technical reasons). The ‘fixed sampling’ method described above cannot provide unbiased estimates for the log-likelihood (see Methods). Such bias vanishes in the asymptotic limit of infinite samples, but drawing samples from the model can be computationally expensive, especially if the simulator model is complex. In practice, the bias introduced by any fixed sampling method can translate to considerable biases in estimates of model parameters, or even reverse the outcome of model comparison analyses. In other words, using poor sampling methods can cause researchers to draw conclusions about scientific hypotheses which are not supported by their data.

In this work, we introduce inverse binomial sampling (IBS) as a powerful and simple technique for correctly and efficiently estimating log-likelihoods of simulator-based models. Crucially, IBS is a sampling method that provides uniformly unbiased estimates of the log-likelihood [17, 18] and calibrated estimates of their variance, which is also uniformly bounded.

We note that the problem of estimating functions f(p) from observations of a Bernoulli distribution with parameter p has been studied for mostly theoretical reasons in the mid-20th century, with major contributions represented by [17–21]. These works have largely focused on deriving the set of functions f(p) for which an unbiased estimate exists, and demonstrating that for those functions, the inverse sampling policy (see Methods) is in a precise sense ‘efficient’. Our main contribution here is to demonstrate that inverse binomial sampling provides a practically and theoretically efficient solution for a common problem in computational modeling; namely likelihood-free inference of complex models. To back up our claims, we provide theoretical arguments for the efficiency of IBS and a practical demonstration of its value for log-likelihood estimation and fitting of simulation-based models, in particular those used in computational cognitive science. We note that [22] had previously proposed inverse binomial sampling as a method for likelihood-free inference for certain econometric models, but did not present an empirical assessment of the quality of the estimation and to our knowledge has not led to further adoption of IBS.

The paper is structured as follows. In the Methods, after setting the stage with useful “Definitions and notation”, we describe more in detail the issues with the fixed sampling method and why they cannot be fixed (“Why fixed sampling fails”). We then present a series of arguments for why IBS solves these issues, and in particular why being unbiased here is of particular relevance (“Is inverse binomial sampling really better?”). In the Results, we present an empirical comparison of IBS and fixed sampling in the setting of maximum-likelihood estimation. As case studies, we take three model-fitting problems of increasing complexity from computational cognitive science: an ‘orientation discrimination’ task, a ‘change localization’ task, and a complex sequential decision making task. In all problems, IBS generally produces lower error in the estimated parameters than fixed sampling with the same average number of samples. IBS also returns solutions that are very close in value to the true maximum log-likelihood. We conclude by discussing further applications and extensions of IBS in the Discussion. Our theoretical analyses and empirical results demonstrate the potential of IBS as a practical, robust, and easy-to-implement method for log-likelihood evaluation when exact or numerical solutions are unavailable.

Implementations of IBS with tutorials and examples are available at the following link: https://github.com/lacerbi/ibs.

Methods

Definitions and notation

The two fundamental ingredients to run IBS are:

A data set consisting of N ‘trials’ characterized by ‘stimuli’ si and discrete ‘responses’ ri.

A generative model g for the data (also known as a ‘simulator’): a stochastic function that takes as input a stimulus s and a parameter vector θ (and possibly other information) and outputs a response r.

In this section we expand on and provide motivations for the above assumptions, and introduce related definitions and notation used in the rest of the paper.

Here and in the following, for ease of reference, we use the language of behavioral and cognitive modeling (e.g., ‘trial’ for data points, ‘stimulus’ for independent or contextual variables, ‘response’ for observations or outcomes), but the statistical techniques that we discuss in the paper apply to any model and data set from any domain as long as they satisfy the fundamental assumption of IBS delineated above.

The likelihood function

We assume that we want to model a data set consisting of N ‘trials’ (data points), where

si is the stimulus (i.e., the experimental context, or independent variable) presented on the i-th trial; typically, si is a scalar or vector of discrete or continuous variables (more generally, there are no restrictions on what si can be as long as the simulator can accept it as input);

ri is the response (i.e., the experimental observations, outcomes, or dependent variables) measured on the i-th trial; ri can be a scalar or vector, but crucially we assume it takes discrete values.

The requirement that ri be discrete will be discussed below.

Given a data set , and a model parametrized by parameter vector θ, we can write the likelihood function for the responses given the stimuli and model parameters as

| (1) |

where the first line follows from the chain rule of probability, and holds in general, whereas in the second step we applied the reasonable ‘causal’ (or ‘no-time-travel’) assumption that the response at the i-th trial is not influenced by future stimuli. We also used the causality assumption that current responses are not influenced by future responses to choose a specific order to apply the chain rule in the first line.

Note that Eq 1 assumes that the researcher is not interested in statistically modeling the stimuli, which are taken to be given (i.e., on the right-hand side of the conditional probability). This choice is without loss of generality, as any variable of statistical interest can always be relabeled and become an element of the ‘response’ vector. For compactness, from now on we will denote , in a slight abuse of notation.

Eq 1 describes the most general class of models, in which the response in the current trial might be influenced by the history of both previous stimuli and previous responses. Many models commonly make a stronger conditional independence assumption between trials, such that the response on the current trial only depends on the current stimulus. Under this stronger assumption, the likelihood takes a simpler form,

| (2) |

While Eq 2 is simpler, it still includes a wide variety of models. For example, note that time-dependence can be easily included in the model by incorporating time into the ‘stimulus’ s, and including time-dependent parameters explicitly in the model specification. In the rest of this work, for simplicity we consider models that make conditional independence assumptions as in Eq 2, but our techniques apply in general also for likelihoods as per Eq 1.

Given that the likelihood of the i-th trial can be directly interpreted as the probability of observing response ri in the i-th trial (conditioned on everything else), we denote such quantity with pi ∈ [0, 1]. The value pi is a function of θ, depends on the current stimulus and response, and may or may not depend on previous stimuli or responses.

With this notation, we can simply write the likelihood as

| (3) |

Finally, we note that it is common practice to work with the logarithm of the likelihood, or log-likelihood, that is

| (4) |

The typical rationale for switching to the log-likelihood is that for large N the likelihood tends to be a vanishingly small quantity, so the logarithm makes it easier to handle numerically (‘numerical convenience’). However, we will see later that there are statistically meaningful reasons to prefer the logarithmic representation (i.e., a sum of independent terms).

Crucially, we assume that the likelihood function is unavailable in a tractable form—for example, because the model is too complex to derive an analytical expression for the likelihood. Instead, IBS provides a technique for estimating Eq 4 via simulation.

The generative model or simulator

While we assume no availability of an explicit representation of the likelihood function, we assume that the model of interest is represented implicitly by a stochastic generative model (or ‘simulator’). In the most general case, the simulator is a stochastic function g that takes as input the current stimulus si, arrays of past stimuli and responses, and a parameter vector θ, and outputs a discrete response ri, conditional on all past events,

| (5) |

As mentioned in the previous section, a common assumption for a model is that the response in the current trial only depends on the current stimulus and parameter vector, in which case

| (6) |

For example, the model g(⋅) could be simulating the responses of a human participant in a complex cognitive task; the (discrete) choices taken by a rodent in a perceptual decision-making experiment; or the spike count of a neuron in sensory cortex for a specific time bin after a stimulus presentation.

We list now the requirements that the simulator model needs to satisfy to be used in conjuction with IBS.

Discrete response space. Lacking an expression for the likelihood function, the only way to estimate the likelihood or any function thereof is by drawing samples r ∼ g(si, …; θ) on each trial, and matching them to the response ri. This approach requires that there is a nonzero probability for a random sample r to match ri, hence the assumption that the space of responses is discrete. We will see in the Discussion a possible method to extend IBS to larger or continuous response spaces.

Conditional simulation. An important requirement of the generative model, stated implicitly by Eqs 5 and 6, is that the simulator should afford conditional simulation, in that we can simulate the response ri for any trial i, given the current stimulus si, and possibly previous stimuli and responses. Note that this class of models, while large, does not include all possible simulators, in that some simulators might not afford conditional generation of responses. For example, models with latent dynamics might be able to generate a full sequence of responses given the stimuli, but it might not be easy or computationally tractable to generate the response in a given trial, conditional on a specific sequence of previous responses.

Computational cost. Finally, for the purpose of some of our analyses we assume that drawing a sample from the generative model is at least moderately computationally expensive, which limits the approximate budget of samples one is willing to use for each likelihood evaluation (in our analyses, up to about a hundred, on average, per likelihood evaluation). Number of samples is a reasonable proxy for any realistic resource expenditure since most costs (e.g., time, energy, number of processors) would be approximately proportional to it. Therefore, we also require that every response value in the data has a non-negligible probability of being sampled from the model—given the available budget of samples one can reasonably draw. In this paper, we will focus on the low-sample regime, since that is where IBS considerably outperforms other approaches.

For our analyses of performance of the algorithm, we also assume that the computational cost is independent of the stimulus, response or model parameters, but this is not a requirement of the method.

Reduction to Bernoulli sampling

Given the conditional independence structure codified by Eq 3, to estimate the log-likelihood of the entire data set, we cannot do better than estimating pi on each trial independently, and combining the results. However, combining estimates into a well-behaved estimate of is non-trivial (see “Why not an unbiased estimator of the likelihood?”). Instead, it is easier to estimate for each trial and calculate the log-likelihood

| (7) |

We can estimate this log-likelihood by simply summing estimates across trials, in which case the central limit theorem guarantees that the distribution of is normally distributed for large values of N, which is true for typical values of N of the order of a hundred or more (see later).

We can make one additional simplification, without loss of generality. The generative model specifies an implicit probability distribution ri ∼ g(si, …; θ) for each trial. However, to estimate the log-likelihood, we do not need to know the full distribution, only the probability for a random sample r from the model to match the observed response ri. Therefore, we can convert each sample r to

| (8) |

and lose no information relevant for estimating the log-likelihood. By construction, x follows a Bernoulli distribution with probability pi. Note that this holds regardless of the type of data, the structure of the generative model or the model parameters. The only difference between different models and data sets is the distribution of the likelihood pi across trials. Moreover, since pi is interpreted as the parameter of a Bernoulli distribution, we can apply standard frequentist or Bayesian statistical reasoning to it.

In conclusion, we can reduce the problem of estimating the log-likelihood of a given model by sampling to a smaller problem: given a method to draw samples (x1, x2, …) from a Bernoulli distribution with unknown parameter p, estimate log p as precisely and accurately as possible using on average as few samples as possible.

Sampling policies and estimators

A sampling policy is a function that, given a sequence of samples x ≡ (x1, x2, …, xk), decides whether to draw an additional sample or not [20]. In this work, we compare two sampling policies:

The commonly used fixed policy: Draw a fixed number of samples M, then stop.

The inverse binomial sampling policy: Keep drawing samples until xk = 1, then stop.

In our case, an estimator (of log p) is a function that takes as input a sequence of samples x = (x1, x2, …, xk) and returns an estimate of log p. We recall that the bias of an estimator of log p, for a given true value of the Bernoulli parameter p, is defined as

| (9) |

where the expectation is taken over all possible sequences x generated by the chosen sampling policy under the Bernoulli probability p. Such estimator is (uniformly) unbiased if for all 0 < p ≤ 1 (that is, the estimator is centered around the true value).

- Fixed sampling. For the fixed sampling policy, since all samples are independent and identically distributed, a sufficient statistic for estimating p from the samples (x1, x2, …, xM) is the number of ‘hits’, . The most obvious estimator for an obtained sequence of samples x is then

but this estimator has infinite bias; since as long as p ≠ 1, there is always a nonzero chance that m(x) = 0, in which case (and thus ). This divergence can be fixed in multiple ways; in the main text we use(10)

Note that any estimator based on the fixed sampling policy will always produce biased estimates of log p, as guaranteed by the reasoning in the following section. As an empirical validation, we show in S1 Appendix B.1 that our results do not depend on the specific choice of estimator for fixed sampling (Eq 11).(11) - Inverse binomial sampling. For inverse binomial sampling we note that, since x is a binary variable, the policy will always result in a sequence of samples of the form

where the length of the sequence is a stochastic variable, which we label K (a positive integer). Moreover, since each sample is independent and a ‘hit’ with probability p, the length K follows a geometric distribution with parameter 1 − p,(12)

We convert a value of K into an estimate for log p using the IBS estimator,(13)

Crucially, Eq 14 combined with the IBS policy provides a uniformly unbiased estimator of log p [18]. Moreover, we can show that is the uniformly minimum-variance unbiased estimator of log p under the IBS policy. For a full derivation of the properties of the IBS estimator, we refer to S1 Appendix A.1. Eq 14 can be written compactly as , where ψ(z) is the digamma function [23].(14)

We now provide an understanding of why fixed sampling is not a good policy, despite its intuitive appeal, and then show why IBS solves many of the problems with fixed sampling.

Why fixed sampling fails

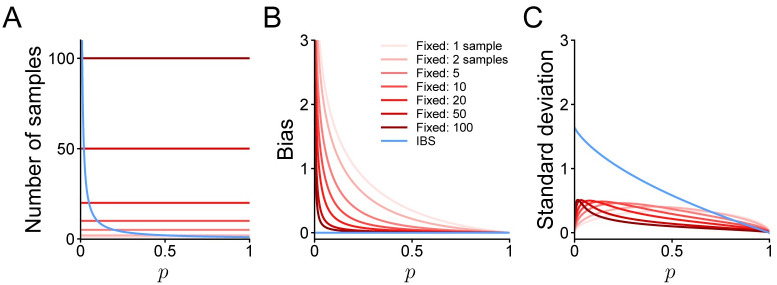

We summarize in Fig 1 the properties of the IBS estimator and of fixed sampling, for different number of samples M, as a function of the trial likelihood p. In particular, we plot the expected number of samples, the bias, and the standard deviation of the estimators.

Fig 1. Properties of estimators.

A. Number of samples used by fixed (red curves) or inverse binomial sampling (blue; expected value) to estimate the log-likelihood log p on a single trial with probability p. IBS uses on average trials. B. Bias of the log-likelihood estimate. The bias of IBS is identically zero. C. Standard deviation of the log-likelihood estimate.

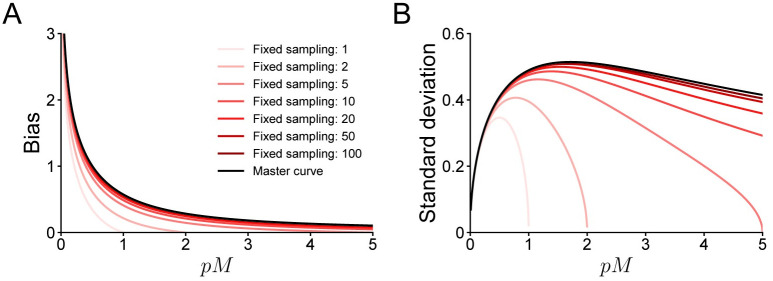

The critical disadvantage of the fixed sampling policy with M samples is that its estimates of the log-likelihood are inevitably biased (see Fig 1B). Fixed sampling is ‘inevitably’ biased because the bias decreases as one takes more samples, but for p → 0, the estimator remains biased. More precisely, in a joint limit where M → ∞, p → 0 and pM → λ for some constant λ, the bias collapses onto a single ‘master curve’ (see Fig 2; and S1 Appendix A.2 for the derivation). In particular, we observe that the bias is close to zero for λ ≫ 1 and that it diverges when λ ≪ 1, or equivalently, for and , respectively.

Fig 2. Asymptotic properties of fixed sampling.

A. Bias of fixed sampling estimators of the log-likelihood, plotted as a function of pM, where p is the likelihood on a given trial, and M the number of samples. As M → ∞, the bias converges to a master curve (Eq S3 in S1 Appendix). B. Same, but for standard deviation of the estimate.

To convey the intuition for why the bias diverges for small probabilities, we provide a gambling analogy. Imagine playing a slot machine and losing the first 100 bets you make. You can now deduce that this slot machine likely has a win rate less than 1%, but there is no way of knowing whether it is 1%, 0.1%, 0.01% or even 0% apart from any prior beliefs you may have (for example, you expect that the house has stacked the odds in their favor but not overwhelmingly so). In practice, this uncertainty is unlikely to affect your decision whether to continue playing the slot machine, since the expected value of the slot machine depends linearly on its win rate. However, if your goal is to estimate the logarithm of the win rate, the difference between these percentages becomes infinitely large as the true win rate tends to 0. We provide a more formal treatment of the bias of fixed sampling in S1 Appendix A2.

Why fixed sampling cannot be fixed

The asymptotic analyses above suggest an obvious solution to prevent fixed sampling estimators from becoming strongly biased: make sure to draw enough samples so that . Although this solution will succeed in theory, it has practical issues. First of all, choosing M requires knowledge of pi on each trial, which is equivalent to the problem we set out the solve in the first place. Moreover, even if one can derive or estimate an upper bound on (for example, in behavioral models that include a lapse rate, that is a nonzero probability of giving a uniformly random response), fixed sampling will be inefficient. As shown in Fig 2, the bias in is small when λ ≈ 1 or and increasing M even further has diminishing returns, at least for the purpose of reducing bias. If we choose M inversely proportional to the probability pi on the trial where the model is least likely to match the observed response, we will draw many more samples than necessary for all other trials.

One might hope that in practice the likelihood pi is approximately the same across trials, but the opposite is true. As an example, take a typical ‘orientation discrimination’ psychophysical task in which a participant has to detect whether a presented oriented grating is tilted clockwise or anti-clockwise from vertical, and consider a generative model for the observer’s responses that includes sensory measurement noise and lapses (see Results for details). Moreover, imagine that the experiment contains ≈ 500 trials, and the participant’s true lapse rate is 1%. The model will always assign more probability to correct responses than errors, so, for all correct trials, pi will be at least 0.5. However, there will likely be a handful of trials where the participant lapses and makes a grave error (responding incorrectly to a stimulus very far from the decision boundary), in which case pi will be 0.5 times the lapse rate. This hypothetical scenario is not exceptional, in fact it is almost inevitable in any experiment where participants occasionally make unpredictable responses, and perform hundreds or more trials.

A more sophisticated solution would relax the assumption that M needs to be constant for all trials, and instead choose M as a function of pi on each trial. However, since pi is unknown, one would need to first estimate pi by sampling, choose Mi for each trial, then re-estimate . Such an iterative procedure would create a non-fixed sampling scheme, in which Mi adapts to pi on each trial. This approach is promising, and it is, in fact, how we originally arrived at the idea of using inverse binomial sampling for log-likelihood estimation, while working on the complex cognitive model described in the Results.

Finally, a heuristic solution would be to disregard any statistical concerns, pick M based on some intuition or from similar studies in the literature, and hope that the bias turns out to be negligible. We do not intend to dissuade researchers from using such pragmatic approaches if they work in practice. Unfortunately, this one does not. As Fig 2 shows, estimating log-likelihoods with fixed sampling can cause biases of 1 or more points of model evidence if the data set contains even a single trial on which . Since differences in log-likelihoods larger than 5 to 10 points are often regarded as strong evidence for one model over another [24–26], it is well possible for such biases to reverse the outcome of a model comparison analysis. This point bears repeating; if one uses fixed sampling to estimate log-likelihoods and the number of samples is too low, one risks of drawing conclusions about scientific hypotheses that are not supported by the experimental data one has collected.

Why not an unbiased estimator of the likelihood?

In this paper, we focus on finding an unbiased estimator of the log-likelihood, but one might wonder why we do not look instead for an unbiased estimator of the likelihood. In fact, we already have such an estimator. Fixed sampling provides an unbiased estimate of pi for each trial, and since all estimates are unbiased and statistically independent, ∏i pi is also unbiased.

The critical issue is the shape of the distribution of these estimates. While a central limit theorem for products of random variables exists, it only holds if all the estimates are almost surely not zero. For estimates of pi obtained via fixed sampling, this is not the case, and we would not obtain a well-behaved (i.e., log-normal) distribution of ∏i pi. In fact, the distribution would be highly multimodal with the main peak being at ∏i pi = 0. This property makes this estimator unusable for all practical purposes (e.g., from maximum-likelihood estimation to Bayesian inference).

Instead, by switching to log-likelihood estimation, we can find an estimator (IBS) which is both unbiased and whose estimates are guaranteed to be well-behaved (in particular, normally distributed). We stress that normality is not just a desirable addition, but a fundamental feature with substantial practical consequences for how estimators are used, as we will see more in detail in the following section.

Is inverse binomial sampling really better?

While one could expect that the unbiasedness of the IBS estimator would come at a cost, such as more samples, a much higher variance, or perhaps a particularly complex implementation, we show here that IBS is not only unbiased, but it is sample-efficient, its estimates are low-variance, and can be implemented in a few lines of code.

Implementation

We present in Table 1 a description in pseudo-code of the basic IBS algorithm to estimate the log-likelihood of a given parameter vector θ for a given data set and generative model. The procedure is based on the inverse binomial sampling scheme introduced previously, generalized sequentially to multiple trials.

Table 1. Inverse binomial sampling algorithm.

| Input: Stimuli , responses , generative model , parameters θ |

|

1: for

i ← 1 … N

do ⊳ Sequential loop over all trials 2: Ki ← 1 3: while sample_from_model(, θ, si) ≠ ri do 4: Ki ← Ki + 1 5: ⊳ IBS estimator from Eq 14 6: return ⊳ Return total log-likelihood estimate |

Basic sequential implementation of the IBS estimator.

For each trial, we draw sampled responses from the generative model, given the stimulus si in that trial, using the subroutine sample_from_model, until one matches the observed response ri. This yields a value of Ki on each trial i, which IBS converts to an estimate (where we use the convention that a sum with zero terms equals 0). We make our way sequentially across all trials, returning then the summed log-likelihood estimate for the entire data set.

In practice, depending on the programming language of choice, it might be useful to take advantage of numerical features such as vectorization to speed up computations. An alternative ‘parallel’ implementation of IBS is described in S1 Appendix.

One might wonder how to choose the θ to evaluate in the algorithm in Table 1 in the first place. IBS is agnostic of how candidate θ are proposed. Most often, the function that implements the IBS algorithm will be passed to a chosen optimization or inference algorithm, and the job of proposing θ will be taken care of by the chosen method. For maximum-likelihood estimation, we recommend derivative-free optimization methods that deal effectively and robustly with noisy evaluations, such as Bayesian Adaptive Direct Search (BADS; [27]) or noise-robust CMA-ES [28, 29]. At the end of optimization, most methods will then return a candidate solution and possibly an estimate of the value of the target function at . However, since the target function is noisy and the final estimate is biased (because, by definition, it is better than the other evaluated locations), we recommend to re-estimate multiple times to obtain a higher-precision, unbiased estimate of the log-likelihood at (see also later, “Iterative multi-fidelity”).

Implementations of IBS in different programming languages can be found at the following web page: https://github.com/lacerbi/ibs.

Computational time

The number of samples that IBS takes on a trial with probability pi is geometrically distributed with mean . We saw earlier that for fixed-sampling estimators to be approximately unbiased, one needs at least samples, and IBS does exactly that in expectation. Moreover, since IBS adapts the number of samples it takes on different trials, it will be considerably more sample-efficient than fixed sampling with constant M across trials. For example, in the aforementioned example of the orientation discrimination task, when most trials have a likelihood pi ≥ 0.5, IBS will often take just 1 or 2 samples on those trials. Therefore, it will allocate most of its samples and computational time on trials where pi is low and those samples are needed.

Variance

The variance of the IBS estimator can be derived as

| (15) |

where we introduced the dilogarithm or Spence’s function Li2(z) [30]. The variance (plotted in Fig 1C as standard deviation) increases when p → 0, but it does not diverge; instead, it converges to . Therefore, IBS is not only uniformly unbiased, but its variance is uniformly bounded. The full derivation of Eq 15 is reported in S1 Appendix A.3.

We already mentioned that is the minimum-variance unbiased estimator of log p given the inverse binomial sampling policy, but it also comes close (less than ∼ 30% distance) to saturating the information inequality, which specifies the minimum variance that can be theoretically achieved by any estimator under a non-fixed sampling policy (an analogue of the Cramer-Ráo bound [18]). We note that fixed sampling eventually saturates the information inequality in the limit M → ∞, but as mentioned in the previous section, the fixed-sampling approach can be highly wasteful or substantially biased (or both), not knowing a priori how large M has to be across trials. See S1 Appendix A.4 for a full discussion of the information inequality and comparison between estimators.

Eq 15 has theoretical relevance, but requires us to know the true value of the likelihood p, which is unknown in practice. Instead, we define the estimator of the variance of a specific IBS estimate, having sampled for K times until a ‘hit’, as

| (16) |

where ψ1(z) is the trigamma function, the derivative of the digamma function [23]. We derived Eq 16 from a Bayesian interpretation of the IBS estimator, which can be found in S1 Appendix A.5. Note that Eqs 15 and 16 correspond to slightly different concepts, in that the former represents the variance of the estimator for a known p (from a frequentist point of view), while the latter is the posterior variance of for a known K (for which there is no frequentist analogue). See also later “Higher-order moments” for further discussion.

Iterative multi-fidelity

We define a multi-fidelity estimator as an estimator with a tunable parameter that affords different degrees of precision at different computational costs (i.e., from a cheaper, inaccurate estimate to a very accurate but expensive one), borrowing the term from the literature on computer simulations and surrogate models [31, 32]. IBS provides a particularly convenient way to construct an iterative multi-fidelity estimator in that we can perform R independent ‘repeats’ of the IBS estimate at θ, and combine them by averaging,

| (17) |

where denotes the r-th independent estimate obtained via IBS. For R = 1, we recover the standard (‘1-rep’) IBS estimator. The variances in Eq 17 are computed empirically using the estimator in Eq 16.

Importantly, we do not need to perform all R repeats at the same time, but we can iteratively refine our estimates whenever needed, and only need to store the current estimate, its variance and the number of repeats performed so far:

| (18) |

Crucially, while a similar procedure could be performed with any estimator (including fixed sampling), the fact that IBS is unbiased and its variance is bounded ensures that the combined iterative estimator is also unbiased and eventually converges to the true value for R → ∞, with variance bounded above by .

Finally, we note that the iterative multi-fidelity approach described in this section can be extended such that, instead of having the same number of repeats R for all trials, one could adaptively allocate a different number of repeats ri to each trial so as to minimize the overall variance of the estimated log-likelihood (see S1 Appendix C.2).

Bias or variance?

In the previous sections, we have seen that IBS is always unbiased, whereas fixed sampling can be highly biased when using too few samples. However, with the right choice of M, fixed sampling can have lower variance. We now list several practical and theoretical arguments for why bias can have a larger negative impact than variance, and being unbiased is a desirable property for an estimator of the log-likelihood.

To use IBS or fixed sampling to estimate the log-likelihood of a given data set, we sum estimates of across trials. Being the sum of independent random variables, as N → ∞, the standard deviation of will grow proportional to , whereas the bias grows linearly with N. For a concrete example, see S1 Appendix A.6.

When using the log-likelihood (or a derived metric) for model selection, it is common to collect evidence for a model, possibly hierarchically, across multiple datasets (e.g., different participants in a behavioral experiment), which provides a second level of averaging that can reduce variance but not bias.

Besides model selection, the other key reason to estimate log-likelihoods is to infer parameters of a model, for example via maximum-likelihood estimation. For this purpose, one would use an optimization algorithm that calls the routine that estimates many times with different candidate values of θ, and uses this information to estimate the value that maximizes . Powerful, sample-efficient optimization algorithms, such as those based on Bayesian optimization, work by building a statistical approximation (a surrogate) of the objective function [27, 33–35], most commonly via Gaussian processes [36]. These methods can operate successfully with noisy objectives by effectively averaging function values from nearby parameter vectors. By contrast, no optimization algorithm can handle bias. This argument is not limited to maximum-likelihood estimation, as recent methods have been proposed to use Gaussian process surrogates to perform (approximate) Bayesian inference and infer posterior distributions [37–40]; also these techniques can handle variance in the estimates but not bias.

The ability to combine unbiased estimates of known variance iteratively (as described in the previous section) is particularly useful with adaptive fitting methods based on Gaussian processes, whose algorithmic cost grows super-linearly in the number of distinct training points [36]. Thanks to iterative multi-fidelity estimation, these methods would have the opportunity to refine their estimates of the log-likelihood at a previously evaluated point, whenever deemed useful, without incurring an increased algorithmic cost.

On a conceptual level, bias is potentially more dangerous than variance. Bias can cause researchers to confidently draw false conclusions, variance, when properly accounted for, causes decreased statistical power and lack of confidence. Appropriate statistical tools can account for variance and explain seemingly conflicting findings resulting from underpowered studies [41], whereas bias is much harder to recognize or correct no matter what statistical techniques one uses.

Finally, while for the sake of argument we structured this section as an opposition between bias and variance, it is not an exact dichotomy and both properties matter, together with other considerations (see S1 Appendix A.6). For example, there are situations in which the researcher may knowingly decide to increase bias to reduce both variance and computational costs. Notably, while this trade-off is easy to achieve with the IBS estimator (see S1 Appendix C.1), there is no similarly easy technique to de-bias a biased estimator.

Higher-order moments

So far, we have considered the mean (or bias) and variance of and in detail, but ignored any higher-order moments. This is justified since to estimate the log-likelihood of a model with a given parameter vector we will sum these estimates across many trials. Therefore, the central limit theorem guarantees that the distribution of is Gaussian with mean and variance determined by the mean and variance of or on each trial, at least as long as the distribution of pi across trials satisfies a regularity condition (specifically, the Lindeberg [42] or Lyapunov conditions [43, Chapter 7.3], both of which place restrictions on the degree to which the variance of any single trial can dominate the distribution of ). A sufficient but far from necessary condition is that there exists a lower bound on pi, which is the case for example for a behavioral model with a lapse rate. Using the same argument, the total number of samples Ktot that IBS uses to estimate is also approximately Gaussian.

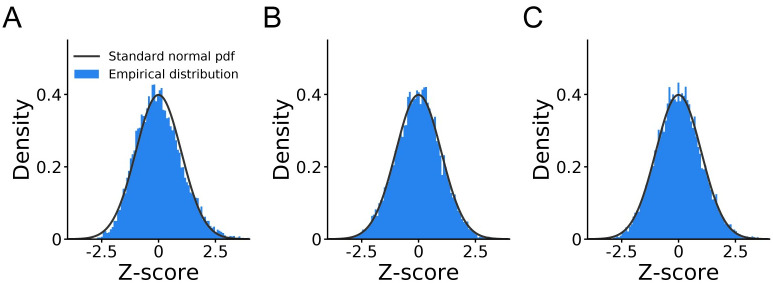

In the following, we demonstrate empirically that the distributions of the number of samples taken by IBS and of the estimates are Gaussian. Importantly, we also show that the estimate of the variance from Eq 16, , is calibrated. That is, we expect the fraction of estimates within the credible interval to be (approximately) Φ(β) − Φ(−β), where Φ(x) is the cumulative normal distribution function and β > 0.

As a realistic scenario, we consider the psychometric function model described in the Results. For each simulated data set, we estimated the log-likelihood under the true data-generating parameters θtrue (see S1 Appendix B.1 for details). Specifically, for each data set we ran IBS and recorded the estimated log-likelihood , the total number of samples Ktot taken, and a Bayesian estimate for the variance of from Eq 16. For the total number of samples Ktot and the estimate, we can compute the theoretical mean and variance by knowing the trial likelihoods pi, which we can evaluate exactly in this example.

For each obtained Ktot, we computed a z-score by subtracting the exact mean and dividing by the exact standard deviation, obtained by knowing the mean and variance of geometric random variables underlying the samples taken in each trial. If Ktot is normally distributed, we expect that the variable z across data sets should appear to be distributed as a standard normal, . If Ktot is not normally distributed, we should see deviations from normality in the distribution of z, especially in the tails. By comparing the histogram of z-scores with a standard normal in Fig 3A, we see that the total number of samples is approximately normal, with some residual skew.

Fig 3. Normality of the IBS estimator.

A.z-score plot for the total number of samples used by IBS. B.z-score plot for the estimates returned by IBS, using the exact variance formula for known probability. C. Calibration plot for the estimates returned by IBS, using the variance estimate from Eq 16. These figures show that the number of samples taken by IBS and the estimated log-likelihood are (approximately) Gaussian, and that the variance estimate from Eq 16 is calibrated.

We did the same analysis for the estimate , using the z-scored variable

| (19) |

where here is the exact variance of the estimator computed via Eq 15. The histogram of z-scores in Fig 3B is again very close to a standard normal.

Finally, in practical scenarios we do not know the true likelihoods, so the key question is whether we can obtain valid estimates of the variance of via Eq 16. If such an estimate is correctly calibrated, the distribution of z-scores should remain approximately Gaussian if we use Eq 16 for the denominator of Eq 19. Indeed, the calibration plot in Fig 3C shows an excellent match with a standard normal, confirming that our proposed estimator of the variance is well calibrated.

Results

In this section, we examine the performance of IBS and fixed sampling on several realistic model-fitting problems of increasing complexity. The example problems we consider here model tasks drawn from psychophysics and cognitive science: an Orientation discrimination experiment; a Change localization task; and playing a Four-in-a-row game that involves complex sequential decision making. For the first problem, we can derive the exact analytical expression for the log-likelihood; for the second problem, we have an integral expression for the log-likelihood that we can approximate numerically; and finally, for the third problem, we are in the true scenario in which the log-likelihood is intractable.

The rationale for our numerical experiments is that so far we have analyzed fixed sampling and IBS in terms of the bias in their log-likelihood estimates for individual parameter vectors. However, these log-likelihood estimators are often used as elements of a more complex statistical procedure, such as maximum-likelihood estimation. It is plausible that biases in log-likelihood estimates will lead to biases in parameter estimates obtained by maximizing the log-likelihood, but the exact relationship between those biases, and the role of variance in optimization is not immediate. Similarly, it is unclear how bias and variance of individual log-likelihood estimates will affect the estimate of the maximum log-likelihood, often used for model selection (e.g., unbiased estimates of the log-likelihood do not guarantee an error-free estimate of the maximum log-likelihood, which is affected by other factors; see later “Log-likelihood loss”). Therefore, we conduct an empirical study showing that, in practice, IBS leads to more accurate parameter and maximum log-likelihood estimates than fixed sampling, given the same budget of computational resources.

First, we describe the procedure used to perform our numerical experiments. Code to run all our numerical experiments and analyses is available at the following link: https://github.com/basvanopheusden/ibs-development.

Procedure

For each problem, we simulate data from the generative model given different known settings θtrue of model parameters, and we compare the accuracy (and other statistics) of both IBS and fixed sampling in recovering the true data-generating parameters through maximum-likelihood estimation. Since these methods provide noisy and possibly biased estimates of , and due to variability in the simulated datasets, the estimates that result from optimizing the log-likelihood will also be noisy and possibly biased. To explore performance in a variety of settings, and to account for variability in the data-generation process, for each problem we consider 40 ⋅ D different parameter settings, where D is the number of model parameters (that is, the dimension of θ), and for each parameter setting we generate 100 distinct synthetic datasets.

For each dataset, we compare fixed sampling with different numbers of samples M (from M = 1 to M = 50 or M = 100, depending on the problem), and IBS with different number of ‘repeats’ R, as defined in the Methods (from R = 1 to up to R = 50, depending on the problem). In each scenario, we directly compare the two methods in terms of number of samples by computing the average number of samples used by IBS for a given number of repeats R. To prevent IBS from ‘hanging’ on particularly bad parameter vectors, we adopt the ‘early stopping threshold’ technique described in S1 Appendix C.1. Finally, if available, we also test the performance of maximum-likelihood estimation using the ‘exact’ log-likelihood function (calculated either analytically or via numerical integration).

For all methods, we maximize the log-likelihood with Bayesian Adaptive Direct Search (BADS [27]; github.com/lacerbi/bads), a hybrid Bayesian optimization algorithm based on the mesh-adaptive direct search framework [44], which affords a fast, robust exploration of the function landscape via Gaussian process surrogates. Briefly, BADS works by alternating between two stages: in the Poll stage, the algorithm evaluates points in a random mesh surrounding the current point, in a fairly model-free way; in the Search stage, following the principles of Bayesian optimization [33], the algorithm builds a local Gaussian process model of the target function, and chooses the next point by taking into account both mean and variance of the surrogate model, balancing exploration of unknown but promising regions and exploitation of regions known to be high-valued (for maximization). By combining model-free and powerful model-based search, BADS has been shown to be much more effective than alternative optimization methods particularly when dealing with stochastic objective functions, and with a relatively limited budget of a few hundreds to a few thousands function evaluations [27]. We refer the interested reader to [27] and to the extensive online documentation for further information about the algorithm.

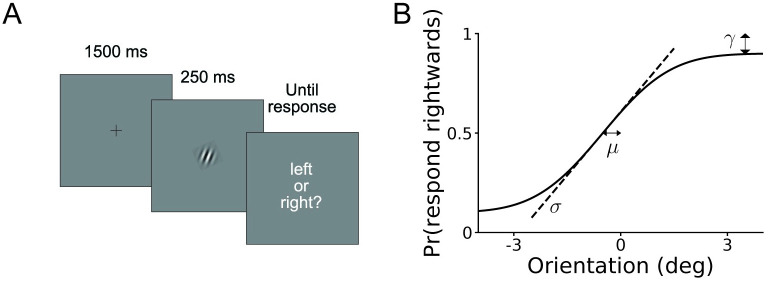

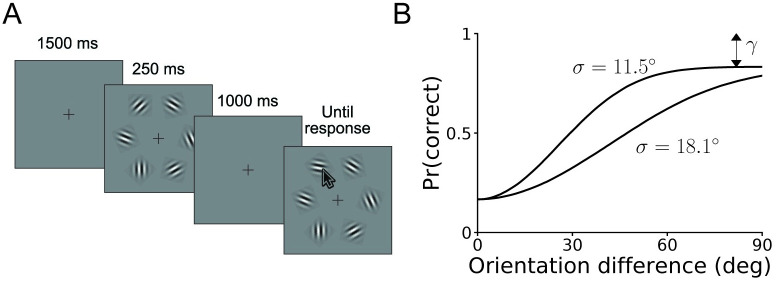

Orientation discrimination

The first task we simulate is an orientation discrimination task, in which a participant observes an oriented patch on a screen, and indicates whether they believe it was rotated leftwards or rightwards with respect to a reference line (see Fig 4A). Here, on each trial the stimulus s is the orientation of the patch with respect to the reference (in degrees), and the response r is ‘rightwards’ or ‘leftwards’.

Fig 4. Orientation discrimination task.

A. Trial structure of the simulated task. A oriented patch appears on a screen for 250 ms, after which participants decide whether it is rotated rightwards or leftwards with respect to a vertical reference. B. Graphical illustration of the behavioral model, which specifies the probability of choosing rightwards as a function of the true stimulus orientation. The three model parameters σ, μ, and γ correspond to the (inverse) slope, horizontal offset and (double) asymptote of the psychometric curve, as per Eq 20. Note that we parametrize the model with η ≡ log σ.

For each dataset, we simulated N = 600 trials, drawing on each trial the stimulus s from a Gaussian distribution with mean 0° (the reference) and standard deviation 3°. The generative model assumes that the observer makes a noisy measurement x of the stimulus, which is normally distributed with mean s and standard deviation σ, as per standard signal detection theory [45]. They then respond ‘rightwards’ if x is larger than μ (a parameter which captures response bias, or an incorrect memory of the reference line) and ‘leftward’ otherwise. However, a fraction of the time, given by the lapse rate γ ∈ (0, 1], the observer guesses randomly. We visually illustrate the model in Fig 4B. For both theoretical reasons and numerical convenience, we parametrize the slope σ as η ≡ log σ. Thus, the model has parameter vector θ = (η, μ, γ).

We can derive the likelihood of each trial analytically:

| (20) |

where Φ(x) is the cumulative normal distribution function. Eq 20 takes the form of a typical psychometric function [46]. Note that in this section we use Gaussian distributions for circularly distributed variables, which is justified under the assumption that both the stimulus distribution and the measurement noise are small. For more details about the numerical experiments, see S1 Appendix B.1.

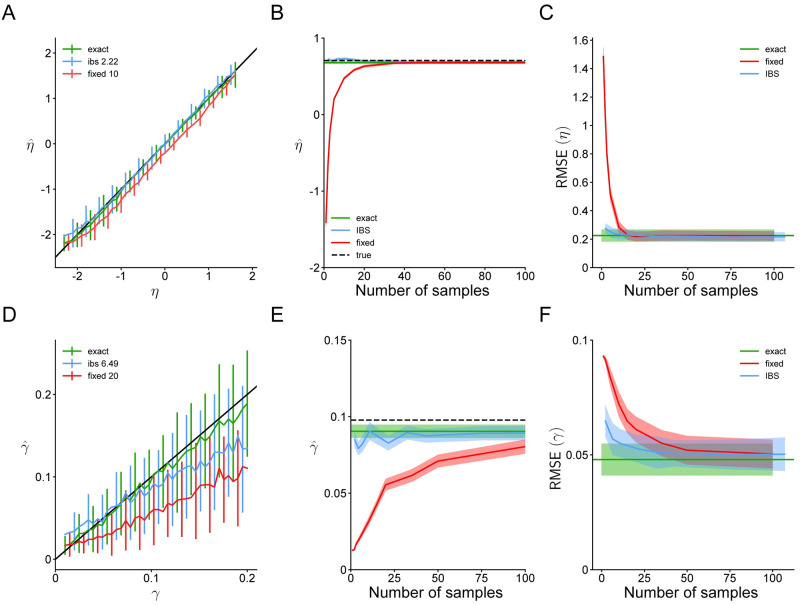

In Fig 5, we show the parameter recovery using fixed sampling, IBS and the exact log-likelihood function from Eq 20. First, we show that IBS can estimate the sensory noise parameter η and lapse rate γ more accurately than fixed sampling while using on average the same or fewer samples (Fig 5A and 5D). For visualization purposes, we show here a representative example with R = 1 or R = 3 repeats of IBS and M = 10 or M = 20 fixed samples (see Fig 6 in S1 Appendix for the plots with all tested values of R and M). As baseline, we also plot the mean and standard deviation of exact maximum-likelihood estimation, which is imperfect due to the finite data size (600 trials), and stochasticity and heuristics used in the optimization algorithm. We omit results for estimates of the response bias μ, since even fixed sampling can match the performance of exact MLE with only 1 sample per trial.

Fig 5. Parameter recovery for the orientation discrimination model.

A. Estimated values of η ≡ log σ as a function of the true η in simulated data using IBS with R = 1 repeat (blue), fixed sampling with M = 10 (red) or the exact likelihood function (green). The black line denotes equality. Error bars indicate standard deviation across 100 simulated data sets. IBS uses on average 2.22 samples per trial. B. Mean and standard error (shaded regions) of estimates of η for 100 simulated data sets with ηtrue = log 2°, using fixed sampling, IBS or the exact likelihood function. For fixed sampling and IBS, we plot mean and standard error as a function of the (average) number of samples used. C. Root mean squared error (RMSE) of estimates of η, averaged across the range of ηtrue in A, as a function of the number of samples used by IBS or fixed sampling. Shaded regions denote ±1 standard deviation across the 100 simulated data sets. We also plot the RMSE of exact maximum-likelihood estimation, which is nonzero since we simulated data sets with only 600 trials. D-F Same, for γ (with R = 3, M = 20 in panel D). These results demonstrate that IBS estimates parameters of the model for orientation discrimination more accurately than fixed sampling using equally many or even fewer samples.

Next, we fix ηtrue ≡ log σtrue = log 2°, μtrue = 0.1°, γtrue = 0.1 and plot the mean and standard deviation of the estimated and across 100 simulated data sets as a function of the (average) number of samples per trial used by IBS or fixed sampling (Fig 5B and 5E). We find that fixed sampling is highly sensitive to the number of samples, and with less than 20 samples per trial, its estimate of η is strongly biased. Estimating γ accurately remains unattainable even with 100 samples per trial. By contrast, IBS estimates η and γ reasonably accurately regardless of the number of samples per trial. IBS has a slight tendency to underestimate γ, which is a result of an interaction of the uncertainty handling in BADS with our choice of model parametrization and parameter bounds. In general, estimating lapse rates is notoriously prone to biases [47].

Finally, we measure the root mean squared error (RMSE) of IBS, fixed sampling and the exact solution, averaged across all simulated data sets, as a function of number of samples per trial (Fig 5C and 5F). This analysis confirms the same pattern: fixed sampling makes large errors in estimating η with fewer than 20 samples, and for γ it requires as many as 100 samples per trial to become approximately unbiased. IBS outperforms fixed sampling for both parameters and any number of samples, and even with as few as 2 or 3 repeats comes close to matching the RMSE of exact maximum-likelihood inference.

Change localization

The second problem we consider is a typical ‘change localization’ task (see Fig 6A), in which participants observe a display of 6 oriented patches, and after a short inter-stimulus interval, a second display of 6 patches [48]. Of these patches, 5 are identical between displays and one denoted by c ∈ {1, …, 6} will have changed orientation. The participant responds by indicating which patch they believe changed orientation. Here, on each trial the stimulus s is a vector of 12 elements corresponding to a vector of orientations (in degrees) of the six patches in the first display, concatenated with the vector of orientation of the six patches in the second display. The response r ∈ {1, …, 6} is the patch reported by the participant.

Fig 6. Change localization task.

A. Trial structure of the simulated task. While the participant fixates on a cross, 6 oriented patches appear for 250 ms, disappear and the re-appear after a delay. In the second display, one patch will have changed orientation, in this example the top left. The participant indicates with a mouse click which patch they believe changed. B. The generative model is fully characterized by the proportion correct as function of model parameters and circular distance between the orientations of the changed patch in its first and second presentation (see text). Here we plot this curve for two values of η ≡ log σ. In both curves, γ = 0.2. We can read off η from the slope and γ from the asymptote.

For each dataset, we simulated N = 400 trials. On each trial, the patches on the first display are all independently drawn from a uniform distribution Uniform[0, 360]. For the second display, we randomly select one of the patches and change its orientation by an amount drawn from a von Mises distribution centered at 0° with concentration parameter κs = 1. A von Mises distribution is the equivalent of a Gaussian distribution in circular space, and the concentration parameter is monotonically related to the precision (inverse variance) of the distribution. Note that, for mathematical convenience (but without loss of generality) we assume that patch orientations are defined on the whole circle, whereas in fact they are defined on the half-circle [0°, 180°).

The generative model assumes that participants independently measure the orientation of each patch in both displays. For each patch, the measurement distribution is a von Mises centered on the true orientation with concentration parameter κ, representing sensory precision. The participant then selects the patch for which the absolute circular difference of the measurements between the first and second display is largest. This model too includes a lapse rate γ ∈ (0, 1], the probability with which the participant guesses uniformly randomly across responses.

Since thinking in terms of concentration parameter is not particularly intuitive, we reparametrize participants’ sensory noise as , since in the limit κ ≫ 1, the von Mises distribution with concentration parameter κ tends to a Gaussian distribution with standard deviation . The model has then two parameters, θ = (η, γ).

We can express the trial likelihood for the change localization model in an integral form that does not have a known analytical solution (see S1 Appendix B.2 for a derivation). We can, however, evaluate the integral numerically, which can take a few seconds for a high-precision likelihood evaluation across all trials in a dataset. The key quantity in the computation of the trial likelihood is , the difference in orientation between the changed stimulus at position c between the first and second display. We plot the probability of a correct response, , as a function of in Fig 6B. As expected, the probability of a correct response increases monotonically with the amount of change, with the slope being modulated by sensory noise and the asymptote by the lapse rate (but also by the sensory noise, for large noise, as we will discuss later). For more details about the numerical experiments, see S1 Appendix B.2.

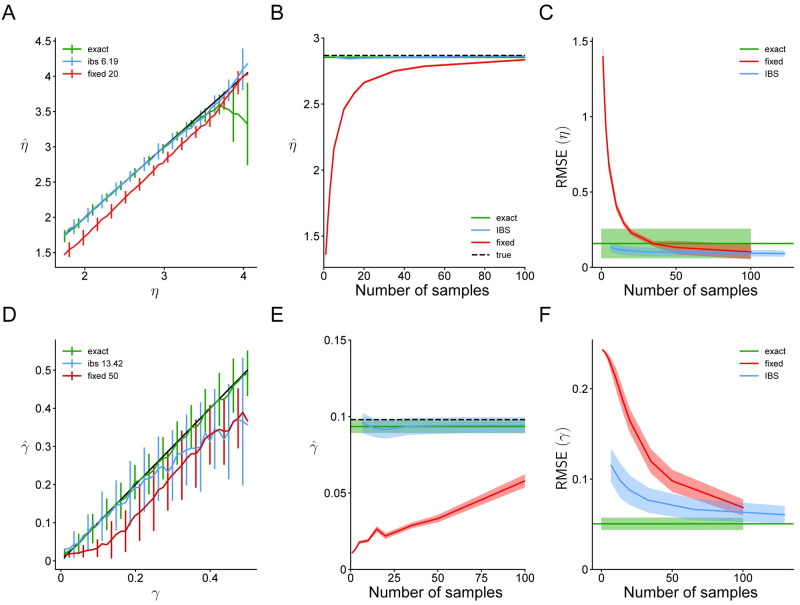

In Fig 7, we compare the performance of IBS, fixed sampling and the ‘exact’ log-likelihood evaluated through numerical integration. As before, IBS estimates both η and γ more accurately with fewer samples than fixed sampling (Fig 7A and 7D). As an example, we show IBS with R = 1 repeats and fixed sampling with M = 20 or M = 50; the full results with all tested values of R and M are reported in Fig 7 in S1 Appendix.

Fig 7. Parameter recovery for the change localization model.

Same as Fig 5, for the change localization experiment and estimates of η ≡ log σ and γ. In panel A we show results for R = 1 and M = 20; in panel D, R = 2 and M = 50.

Interestingly, maximum-likelihood estimation via the ‘exact’ method provides biased estimates of η when the noise is high. This is because sensory noise and lapse become empirically non-identifiable for large η, as large noise produces a nearly-flat response distribution, which is indistinguishable from lapse. For these particular settings of θtrue, due to the interaction between noisy log-likelihood evaluations and the optimization method, IBS and fixed sampling perform better at recovering η than the ‘exact’ method, but it does not necessarily hold true in general. Issues of identifiability can be ameliorated by using Bayesian inference instead of maximum-likelihood estimation [49].

In Fig 7B and 7E, we show the estimates of fixed sampling and IBS for simulated data with ηtrue ≡ log σtrue = log 17.2° and γtrue = 0.1, and find that fixed sampling substantially underestimates η when using less then 50 samples, and underestimates γ even with 100 samples per trial. By contrast, IBS produces parameter estimates with relatively little bias and standard deviation close to that of exact maximum-likelihood estimation. Finally, in Fig 7C and 7F we show that IBS has lower RMSE than fixed sampling for both parameters when compared on equal terms of number of samples.

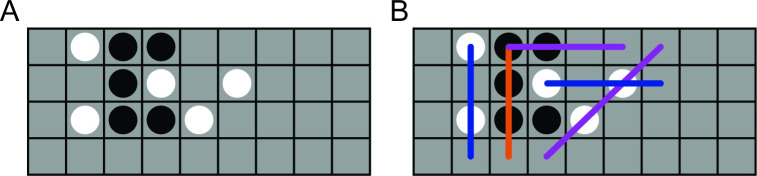

Four-in-a-row game

The third problem we examine is a complex sequential decision-making task, a variant of tic-tac-toe in which two players compete to place 4 pieces in a row, column or diagonal on a 4-by-9 board (see Fig 8A). Previous work showed that people’s decision-making process in this game can be modeled accurately as heuristic search [14]. A heuristic search algorithm makes a move in a given board state by searching through a decision tree of move sequences starting at that board state for a number of moves into the future. To decide which candidate future moves to include in the tree, the algorithm uses a value function defined as

| (21) |

in which fi denotes a set of nf features (i.e., configurations of pieces on the board, such as ‘three pieces on a row of the same color’; see Fig 8B), the corresponding feature weights, and σ > 0 is a model parameter which controls value noise. As before, we parameterize the model with η ≡ log σ.

Fig 8. Four-in-a-row task.

A. Example board configuration in the 4-in-a-row task, in which two players alternate placing pieces (white or black circles) on a 4-by-9 board (gray grid), and the first player to get 4 pieces in a row wins. In this example, the black player can win by placing a piece on the square on the bottom row, third column. B. Illustration of features used in the value function of the heuristic search model (Eq 21). For details on the model, see S1 Appendix B.3 and [14].

The interpretation of this value function is that moves which lead to a high value V(board, move) are given priority in the search algorithm, and the model is more likely to make those moves. As a heuristic to reduce the search space, any moves for which the value V(board, move) is less than that of the highest-value move minus a threshold parameter ξ > 0 are pruned from the tree and never considered as viable options. Finally, when evaluating V(board, move), the model stochastically omits features from the sum ; the probability for any feature to be omitted (or dropped) is independent with probability δ ∈ [0, 1] (the drop rate). Previous work considered various heuristic search models with different feature sets, and estimated the value of feature weights wi as well as the size (number of nodes) of the decision tree based on human data [14]. Here, we consider a reduced model in which the feature identity fi, feature weights wi and tree size are fixed (see S1 Appendix B.3 for their values). Thus, the current model has three parameters, θ = (η, ξ, δ).

Note that even though the 4-in-a-row task is a sequential game, the heuristic search model makes an independent choice on each move, with the ‘stimulus’ s on each trial being the current board state. Hence, the model satisfies the conditional independence assumptions of Eqs 2 and 6. Note also that, even though the heuristic search algorithm can be specified as a generative ‘simulator’ which we can query to make moves in any board position, we have no way of calculating the distribution over its moves, since this would require integrating over all possible trees it could build, features which may be dropped, and realizations of the value noise. Therefore, we are in the scenario in which log-likelihood estimation is only possible by simulation, and we cannot compare the performance of fixed sampling or IBS to any ‘exact’ method.

To generate synthetic data sets for the 4-in-a-row task, we first compiled a set of 5482 board positions which occurred in human-versus-human play [14]. For each data set, we then randomly sampled N = 100 board positions without replacement which we used as ‘stimuli’ for each trial, and sampled a move from the heuristic search algorithm for each position to use as ‘responses’. For more details about the numerical experiments, see S1 Appendix B.3.

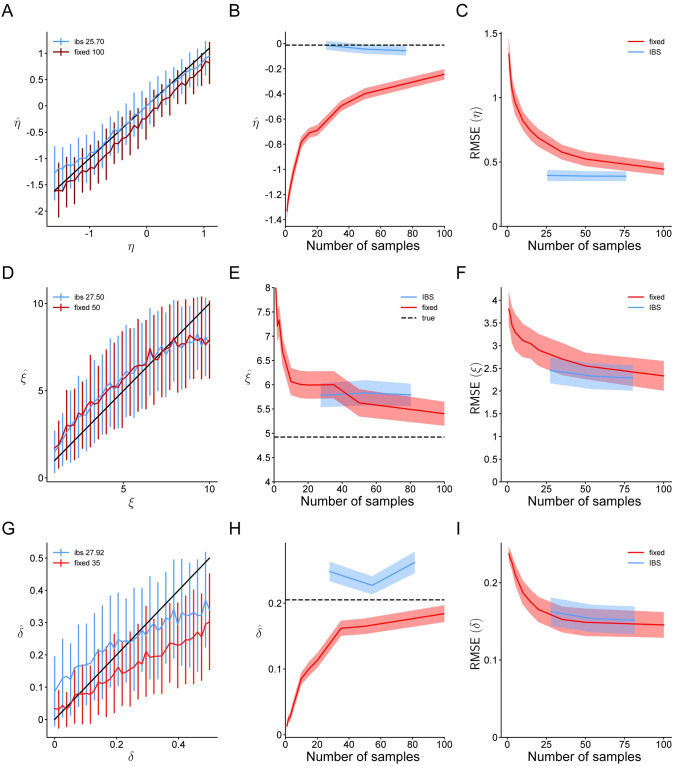

In Fig 9, we perform the same tests as before, comparing fixed sampling and IBS, but lacking any ‘exact’ estimation method. Due to the high computational complexity of the model, we only consider IBS with up to R = 3 repeats, corresponding to ∼ 80 samples. The full results with all tested values of R and M are reported in Fig 8 in S1 Appendix. As a specific example for Fig 9B, 9E and 9H we show the estimates of fixed sampling and IBS for simulated data with ηtrue ≡ log σtrue = log 1, pruning threshold ξtrue = 5 and δtrue = 0.2.

Fig 9. Parameter recovery for the four-in-a-row model.

Same as Figs 5 and 7, for the 4-in-a-row experiment and estimates of the value noise η ≡ log σ, pruning threshold ξ and feature drop rate δ. In panel A we show results for R = 1 and M = 100; in panel D, R = 1 and M = 50; in panel G, R = 1 and M = 35.

Fixed sampling underestimates the value noise η, even when using M = 100 samples, whereas IBS estimates it accurately with 4 times fewer samples (Fig 9A). This bias of fixed sampling gets worse with fewer samples (Fig 9B), and overall, IBS outperforms fixed sampling when compared on equal terms (Fig 9C). The same holds true for the pruning threshold ξ. IBS estimates ξ about equally well as fixed sampling, but with about half as many samples (Fig 9D), fixed sampling is severely biased when using too few samples (Fig 9E) and overall, IBS outperforms fixed sampling.

The results are slightly more complicated for the feature drop rate δ. As before, fixed sampling produces strongly biased estimates of δ with up to 35 samples (Fig 9G), and the bias increases when using fewer samples (Fig 9H). However, for this parameter IBS is also biased, but towards 0.25 (Fig 9G and 9H), which is the midpoint of the ‘plausible’ upper and lower bounds used as reference by the optimization algorithm (see S1 Appendix B.3 for details). This bias can be interpreted as a form of regression towards the mean; likely a by-product of the optimization algorithm struggling with a low signal-to-noise ratio for this parameter and these settings (i.e., a nearly flat likelihood landscape for the amount of estimation noise on the log-likelihood). The negative bias of fixed sampling helps to reduce its variance in the low-δ regime, and therefore in terms of RMSE, fixed sampling performs similarly to IBS for this parameter (Fig 9I).

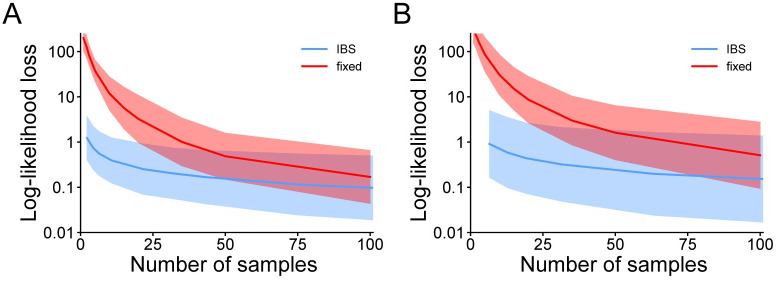

Log-likelihood loss

In the previous sections, we have analyzed the bias and error of different estimation methods when recovering the generating model parameters in various scenarios. Another important question, crucial for model selection, is how well different methods are able to recover the true maximum log-likelihood. The ability to recover the true parameters and the true maximum log-likelihood are related but distinct properties because, for example, a relatively flat likelihood landscape could yield parameter estimates very far from ground truth, but still afford recovery of a value of the log-likelihood close to the true maximum. We recall that differences in log-likelihood much greater than one point are worrisome as they might significantly affect the outcomes of a model comparison [24–26].

To compute the log-likelihood loss of a method for a given data set, we estimate the difference between the ‘exact’ log-likelihood evaluated at the ‘true’ maximum-likelihood solution (as found after multiple optimization runs) and the ‘exact’ log-likelihood of the solution returned by the multi-start optimization procedure for a given method, as described in the “Procedure” section. In terms of methods, we consider IBS and fixed-sampling with different amounts of samples. We perform the analysis for the two scenarios, orientation discrimination and change localization, for which we have access to the exact likelihood, either analytically or numerically.

The results in Fig 10 show that IBS, even with only a few repeats, is able to return solutions which are very close to the true maximum-likelihood solution in terms of log-likelihood (within 1-2 points); whereas fixed sampling remains strongly biased (log-likelihood loss ≫ 1) even with large number of samples, being thus at risk of inducing wrong inferences in model selection. Note that our analyses of the loss are based on the ‘exact’ log-likelihood values evaluated at the solution returned by the optimization procedure. In practice, we would not have access to the ‘exact’ log-likelihood at the solution; but its value can be estimated up to the desired precision with IBS, by taking multiple repeats at the returned solution.

Fig 10. Log-likelihood loss.

A. Log-likelihood loss with respect to ground truth, as a function of number of samples, for the orientation discrimination task. Lines are mean ±1 standard deviation (in log space) across 120 generating parameter values, with 100 simulated datasets each. B. Log-likelihood loss for the change localization task (80 generating parameter values).

Finally, the results in Fig 10 also display clearly that, while IBS is unbiased in estimating the log-likelihood for a given parameter setting θ, the maximum-likelihood procedure per se will have some error. Due to estimation noise and specific features of the data, model, and stochastic optimization method at hand, the returned solution will rarely be the true maximum-likelihood solution, and thus, by definition, the value of the log-likelihood at the solution will underestimate the true value of the maximum log-likelihood. Still, Fig 10 shows that the underestimation error, at least in the IBS case, tends to be acceptable, as opposed to the large errors obtained with fixed sampling.

Summary

The results in this section demonstrate that in realistic scenarios, fixed sampling with too few samples causes substantial biases in parameter and maximum log-likelihood estimates, whereas inverse binomial sampling is much more accurate and robust to the number of samples used. Across all 3 models and all parameters, IBS yields parameter estimates with little bias and RMSE, close to that of ‘exact’ maximum-likelihood estimation, even when using only a handful of repeats (R between 1 and 5). Conversely, fixed sampling yields substantially biased parameter estimates when using too few samples per trial, especially for parameters which control decision noise, such as measurement noise and lapse rates in the two perceptual decision-making tasks, and value noise in the 4-in-a-row task. Moreover, for the two models for which we have access to ‘exact’ log-likelihood estimates, we found that IBS is able to recover maximum-likelihood solutions close to the true maximum log-likelihood, whereas fixed sampling remains severely biased even for many samples.

It is true that, given a large enough number of samples, fixed sampling is eventually able to recover most parameters and maximum log-likelihood values with reasonable accuracy. However, we have seen empirically that the number of samples required for reliable estimation varies between tasks, models and parameters of interests. For tasks and models where an exact likelihood or a numerical approximation thereof is unavailable, such as the four-in-a-row problem we examined in the Results, this limitation renders fixed sampling hardly usable in practice. By contrast, IBS automatically chooses the number of samples to allocate to the problem.

Finally, for complex models with a large response space, accurate parameter estimation with fixed sampling will require many more samples per trial than are feasible given the computational time needed to generate them. Therefore, in such scenarios accurate and efficient parameter estimation is only possible with IBS.

Discussion

In this work, we presented inverse binomial sampling (IBS), a method for estimating the log-likelihood of simulation-based models given an experimental data set. We demonstrated that estimates from IBS are uniformly unbiased, their variance is uniformly bounded, and we introduced a calibrated estimator of the variance. IBS is sample-efficient and, for the purpose of maximum-likelihood estimation, combines naturally with gradient-free optimization algorithms that handle stochastic objective functions, such as Bayesian Adaptive Direct Search (BADS [27]). We compared IBS to fixed sampling and showed that the bias inherent in fixed sampling can cause researchers to draw false conclusions when performing model selection. Moreover, we showed in three realistic scenarios of increasing complexity that maximum-likelihood estimation of model parameters is more accurate with IBS than with fixed sampling with the same average number of samples.

In the rest of this section, we discuss additional applications of IBS, possible extensions, and give some practical usage recommendations.

Additional applications

We developed inverse binomial sampling for log-likelihood estimation of models with intractable likelihoods, for the purpose of model comparison or fitting model parameters with maximum-likelihood estimation, but IBS has other practical uses.

Checking analytical or numerical log-likelihood calculations

We presented IBS as a solution for when the log-likelihood is intractable to compute analytically or numerically. However, even for models where the log-likelihood could be specified, deriving it can be quite involved and time-consuming, and mistakes in the calculation or implementation of the resulting equations are not uncommon. In this scenario, IBS can be useful for:

quickly prototyping (testing) of new models, as writing the generative model and fitting it to the data is usually much quicker than deriving and implementing the exact log-likelihood;

checking for derivation or implementation mistakes, as one can compare the supposedly ‘exact’ log-likelihood against estimates from IBS (on real or simulated data);

assessing the quality of numerical approximations used to calculate the log-likelihood, for example when using methods such as adaptive quadrature for numerical integration [50].

Estimating entropy and other information-theoretic quantities

We can also use inverse binomial sampling to estimate the entropy of an arbitrary discrete probability distribution Pr(x), with x ∈ Ω, a discrete set (see [51] for an introduction to information theory). To do this, we first draw a sample x from the distribution, then use IBS to estimate log Pr(x). The first sample and the samples in IBS are independent, and therefore we can calculate the expected value of the outcome of IBS,

| (22) |

which is the definition of the negative entropy of Pr(x).