Abstract

Cycle-consistent generative adversarial network (CycleGAN) has been widely used for cross-domain medical image synthesis tasks, particularly due to its ability to deal with unpaired data. However, most CycleGAN-based synthesis methods cannot achieve good alignment between the synthesized images and data from the source domain, even with additional image alignment losses. This is because the CycleGAN generator network can encode the relative deformations and noise associated with different domains. This can be detrimental for downstream applications that rely on the synthesized images, such as generating pseudo-CT for PET-MR attenuation correction. In this paper, we present a deformation invariant cycle-consistency model that can filter out these domain-specific deformations. The deformation is globally parameterized by thin-plate-spline (TPS), and locally learned by modified deformable convolutional layers. Robustness to domain-specific deformations has been evaluated through experiments on multi-sequence brain MR data and multi-modality abdominal CT and MR data. Experimental results demonstrated that our method can achieve better alignment between the source and target data while maintaining superior signal quality compared to several state-of-the-art CycleGAN-based methods.

Keywords: Information fusion, GAN, Image synthesis

Highlights

- Novel DiCyc architecture filters out domain-specific deformations.
- The deformable layers are modified to have fewer parameters and to converge faster.
- Expectation–maximization training with 2-forward-1-backward propagation.
- New cycle-consistency and image alignment losses with less conflicting gradients.
- An ablation study visualizes the trade-off between image alignment and image quality.

1. Introduction

Multi-modal medical imaging, i.e. acquiring images of the same organ or structure using different imaging techniques (or modalities) that are based on different physical phenomena, is increasingly used to improve clinical decision-making. However, collecting data from the same patient using different imaging techniques is often impractical due to limited access to different imaging devices, the additional time needed for multiple scanning sessions, and the associated cost. This has made cross-domain medical image synthesis an increasingly popular technology. We use the term “domain” herein to refer to different imaging modalities, contrasts and parametric configurations, for example, in magnetic resonance imaging (MRI). We present a method, called DiCyc, that performs cross-domain medical image synthesis by learning from unpaired data, thus taking advantage of multiple sources of images, while its new network architecture makes it robust to the deformations inherent to some medical imaging techniques.

Cross-domain image synthesis2 has been used to impute incomplete information in standard statistical analysis [1], [2], to predict and simulate missing information [3], and to improve intermediate steps of analysis such as registration [4], information fusion [5], [6], [7], segmentation [8], [9], [10], atlas construction [11], [12] and disease classification [13], [14]. These methods map images from one domain to another among MRI, computed tomography (CT), positron emission tomography (PET) and ultrasound imaging. Our main motivation is to synthesize CT images, or a particular MR image contrast, from multi-sequence MR data. We require that the synthesized data be usable in further medical applications, for example, using synthesized or “pseudo” CT images to improve PET-MR attenuation correction [15], [16], [17], [18], [19]. Using MRI for attenuation correction of PET data is challenging because, unlike CT, the MR signal is not physically related to the attenuation of x-rays in tissue. To overcome this, pseudo-CT generated from the corresponding MR images can be used to compute a map of linear attenuation coefficients (μ-map) for attenuation correction of the PET data acquired on a PET-MRI scanner [20]. This requires an accurate mapping of the geometric correspondences between CT and MR.

Learning a contextual correspondence between domains requires training data that are not only paired but also well aligned. Such data can be generated by a reliable automatic or manual registration algorithm. As a result, the vast majority of cross-modality image synthesis methods are solely applicable to, or evaluated on, brain image data [1], [2], [4], [8], [13], [16], [17], [18], [19], [21], [22], [23], [24], [25], [26], [27], [28], [29], due to the low geometric variance across different imaging modalities for this particular organ. For other organs, most methods require that the data be aligned by affine transformations or small deformations [3], [9], [25], [30], [31], [32], [33]. However, very large geometric variations may occur among such data. Nonlinear geometric variations are often associated with different modalities, such as those caused by the shape of the imaging bed, the field of view and the axial location planning (captured in Fig. 1). We refer to these as “domain-specific deformations”; their presence can compromise the quality of the synthesis. Whether it does depends on whether the network learns the mapping while remaining invariant to the deformations (which depends on landing in a good local minimum of the loss), or whether pre-processing has removed the deformation through successful registration (which is not always feasible and cannot deal with large field-of-view differences).

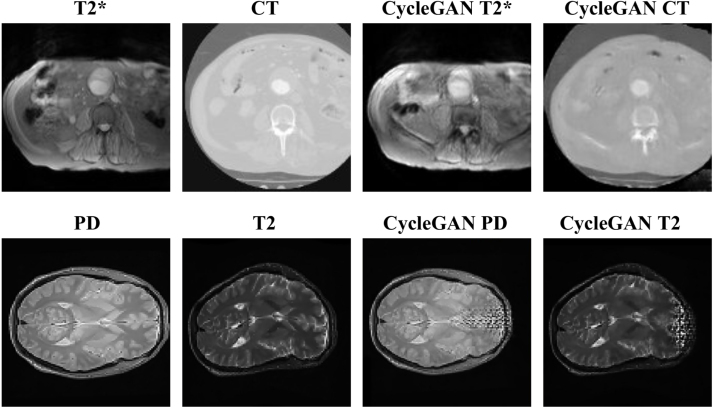

Fig. 1.

Example of cross-domain synthesis using vanilla CycleGAN. The first row shows the results obtained from cross-modality abdominal MR-CT data; the second row shows the results of multi-sequence brain data with a synthesized deformation. Both cases demonstrate a reproduction of “domain-specific deformation” in the synthesized output.

Methods that allow training with unregistered or unpaired data have recently been proposed [34]. Most state-of-the-art methods use deep convolutional neural networks (CNN) as the image generator within a generative adversarial network (GAN) framework [35]. GANs can represent sharp and even intractable probability densities through a nonparametric approach. They have been widely used in medical image analysis, especially for data augmentation and multi-modality image translation, owing to their ability to deal with domain shift [36]. A popular direction for cross-domain image synthesis is to incorporate CycleGAN [37] into the training process. Previous studies have shown that CycleGAN can be trained with unpaired brain data [22], [28]. However, CycleGAN can mistakenly encode domain-specific deformations as domain-specific features and reproduce the deformations in the synthesized output. Fig. 1 demonstrates two examples. The first row shows a synthesis performed between abdominal CT and T2*-weighted MR, while the second row gives an example of T2-weighted and proton density brain MR with a simulated deformation. In both cases, the deformations specific to the input sources are reproduced by CycleGAN in the output. For applications such as attenuation correction, where voxel-wise attenuation coefficients are computed, domain-specific deformations should be discarded while the contextual information relating to the cross-domain appearance of anatomical features and organs is retained.

Recently, several modifications of the vanilla CycleGAN have been proposed to enhance the alignment between data from the source and target domains using an additional image alignment measure [30], [32], [38]. However, the additional image alignment loss conflicts with the original CycleGAN loss function. Synthesized data in which the domain-specific deformations are reproduced lead to a lower adversarial loss (from the GAN discriminator). At the same time, the reproduced deformations harm the alignment between the source and the synthesized data, which leads to a higher alignment loss. As a result, the synthesized data cannot be aligned to the source data particularly well while maintaining good signal quality. To address this issue, we propose the deformation invariant CycleGAN model, or DiCyc. Fig. 2 presents the structural differences between the vanilla CycleGAN and the proposed DiCyc generator networks. We introduce a global transformation model and modified layers of the deformable convolutional network (DCN) into the CycleGAN image generator, and propose the use of a novel image alignment loss based on normalized mutual information (NMI). We evaluate the proposed method using both a publicly available multi-sequence brain MR dataset and our private multi-modality (CT, MR) abdominal dataset. Compared to state-of-the-art models, DiCyc showed a better ability to handle disparate imaging domains and to generate synthesized images aligned with the source data, while keeping comparable output quality. Furthermore, the ablation experiment demonstrated that, unlike in the state-of-the-art models, the image alignment loss and the GAN loss were minimized together during training without conflict in DiCyc.

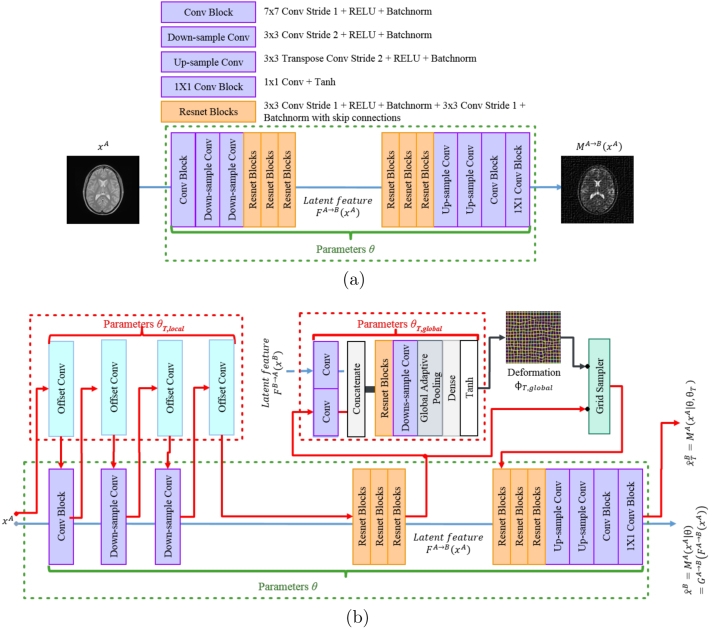

Fig. 2.

Comparison of network architectures between CycleGAN and DiCyc. (a) The generator used in the original CycleGAN model, which is a normal CNN. (b) The DiCyc generator network. A deformable convolution layer is inserted into each block preceding the stack of ResNet blocks to model the local deformation, which has its own set of parameters. The global non-linear distortion is modeled using a thin-plate-spline (TPS) generated by a spatial transformation subnetwork with a separate set of parameters. Details of the modified deformable convolution are shown in Fig. 4(c). The blue arrows represent the CycleGAN forward pass; the additional forward pass introduced by the deformable convolutional layers is represented by red arrows. (For interpretation of the references to color in this figure legend, the reader is referred to the web version of this article.)

The main contributions of this paper are as follows:

1. We propose a novel DiCyc architecture that uses a global transformation network and modified deformable convolution layers interleaved with normal convolution layers to address the problem of domain-specific deformations. The deformable layers are modified to have fewer parameters and to converge faster.

2. Rather than the classical “1 forward pass, 1 backward pass” training routine, we designed a new expectation–maximization training procedure in which each training iteration includes two distinct forward passes (shown as the blue and red arrows in Fig. 2b) and a single backward pass.

3. We designed a novel cycle-consistency loss and an image alignment loss for information fusion. Together with the new training procedure, these losses address the conflict observed between the image alignment loss and the discriminative GAN loss.

4. We visualized and quantitatively assessed the influence of domain-specific deformations. We demonstrated the negative effects of the conflict between the image alignment loss and the GAN loss in experiments using simulated brain data and realistic abdominal data, and visualized these effects on model convergence in our ablation study.

The paper is organized as follows. Section 2 reviews previous and related techniques. Section 3 gives details of the DiCyc network architecture and the associated loss function. Experiments and datasets used are described in Section 4. The results and discussion are presented in Section 5. Conclusions are given in Section 6.

2. Related works

2.1. Non-CycleGAN models

Typically, image synthesis methods build a mapping function from a source to a target domain using paired and pre-registered data. The mapping can be constructed by learning a regression or a dictionary from a collection of patches or feature examples, as in [16], [17], [19], [39], [40]. Another conventional approach, such as modality propagation [41], [42], [43], is to build an atlas for each domain using registration; the prediction is then given by the mapping between atlases. With the rise of deep learning in recent years, neural networks have been used as cross-domain regressors. For example, the Location Sensitive Deep Network (LSDN) [27] uses a CNN to map location-dependent patch information between domains. In [26], [29], a GAN framework is used to learn the mapping function with a context-aware measure based on a gradient difference loss. Similarly, [44] uses a conditional GAN to synthesize lung histology images. An early method using unpaired data was proposed in [34], where training with unpaired data was addressed as an unsupervised problem. It uses mutual information (MI) to select the best-corresponding image patches from unpaired cross-domain data, and maximizes a mixture of global MI and local spatial consistency to synthesize multi-sequence brain MR data. This work uses a preprocessing procedure [41] that includes a registration step. Another approach similar to [34] is to construct a dictionary from patches or image pairs [19], [39]. In [45], an algorithm using Weakly-coupled And Geometry (WAG) co-regularized joint dictionary learning is proposed, which learns the patch correspondence from partially unpaired data. Yet this method was only evaluated on brain images with small geometric variations. A natural strategy in current deep-learning-based medical image synthesis methods is to constrain the latent features to follow a prescribed distribution. For example, [46] assumes the latent features follow a mixed Gaussian distribution, but this method was only evaluated on multi-contrast CT images for segmentation tasks. This paper concentrates on more general synthesis problems between multi-sequence MR data or multi-modal MR and CT data.

2.2. CycleGAN-based methods

CycleGAN was first applied to cross-domain medical image synthesis in [31] and [28] for co-synthesis of CT and MR cardiac and brain data, respectively. Both works indicate that deformation affects the results, and removed such artifacts by regularizing the problem with additional information (e.g. segmentation masks) [31] or by co-registration [28]. Similarly, in [11], [25], [30], [31], [47], the performance of image synthesis networks is enhanced when they are jointly trained for segmentation tasks. However, these models require extra manual annotations or registration. To avoid this requirement, many methods integrate image similarity measures into the GAN loss to match the same structures across domains. For example, [48] introduced a structure-consistency loss based on the modality independent neighborhood descriptor (MIND) [49]. It has been demonstrated that this structure-constrained CycleGAN can deal, to some extent, with unregistered multi-modal MR and CT brain data. A similar gradient-consistency loss, based on the normalized gradient cross-correlation (GCC), is used in [32] for the same purpose. This method has been evaluated using unpaired but pre-registered multi-modal MR and CT hip images. However, as discussed in Section 1, there is a conflict between image-similarity-based losses and the CycleGAN discriminative loss. One potential solution to this problem is to factorize the latent representations into domain-independent semantic features and domain-dependent appearance features, and to explicitly filter out the relative spatial deformation between the source and target data [50], [51], [52]. This work extends this idea to larger deformations and a wider range of domains.

3. Method

3.1. Notation and background

Our goal is to generate synthesized CT or MR data that help the post-processing of the source data, for example, a pseudo-CT map applicable to PET-MR attenuation correction without registering the synthesized data to the source.

We assume that we have images $I_A$ from domain $A$ and images $I_B$ from domain $B$. For a source image $I_A$, a generator $G_A$ is trained to generate a synthesized image $\tilde{I}_B = G_A(I_A)$. Following the GAN setup, $G_A$ and a discriminator $D_A$ are trained to solve the min–max problem of the GAN loss $\mathcal{L}_{GAN}(G_A, D_A, A, B)$, which we denote $\mathcal{L}_{GAN}^{A}$ for brevity. $G_A$ maps data from $A$ to $B$, while $D_A$ is trained to distinguish whether an image is real or synthesized. Accordingly, synthesis from $B$ to $A$ involves a generator $G_B$, a discriminator $D_B$ and a GAN loss $\mathcal{L}_{GAN}^{B}$. The vanilla CycleGAN framework therefore consists of two symmetric generators, $G_A$ and $G_B$, acting as mapping functions applied to a source domain, and two discriminators, $D_A$ and $D_B$, that distinguish real from synthesized data in a target domain [37]. The cycle-consistency loss $\mathcal{L}_{cyc}$ keeps the two sets of networks cycle-consistent [37]; this is what gives CycleGAN the ability to deal with unpaired data. The loss of the whole CycleGAN framework is then $\mathcal{L}_{CycleGAN} = \mathcal{L}_{GAN}^{A} + \mathcal{L}_{GAN}^{B} + \lambda_{cyc}\mathcal{L}_{cyc}$. Recent improvements of CycleGAN [32], [48] add an image alignment term $\mathcal{L}_{align}$ to $\mathcal{L}_{CycleGAN}$, which becomes

$$\mathcal{L} = \mathcal{L}_{CycleGAN} + \lambda_{align}\,\mathcal{L}_{align}, \tag{1}$$

where $\lambda_{align}$ is the weight used to balance the effects of $\mathcal{L}_{CycleGAN}$ and $\mathcal{L}_{align}$. As discussed in Section 1, this causes a conflict between the quality of the synthesized images and source–target image alignment. The remainder of this section presents a detailed analysis of this problem and our DiCyc solution.
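The objective in Eq. (1) can be assembled as below. This is a minimal sketch, assuming an L1 cycle-consistency distance as in the original CycleGAN and treating the two GAN terms and the alignment term as precomputed scalars; the toy generators and the default weights `lam_cyc = 10` and `lam_align = 1` are illustrative assumptions, not the settings used in this work.

```python
import numpy as np


def l1(a, b):
    """Mean absolute error, the distance used for cycle consistency in CycleGAN."""
    return float(np.mean(np.abs(a - b)))


def cycle_loss(x_a, x_b, g_a, g_b):
    """L_cyc = |G_B(G_A(x_A)) - x_A|_1 + |G_A(G_B(x_B)) - x_B|_1."""
    return l1(g_b(g_a(x_a)), x_a) + l1(g_a(g_b(x_b)), x_b)


def total_loss(gan_a, gan_b, cyc, align, lam_cyc=10.0, lam_align=1.0):
    """Eq. (1)-style objective: L_CycleGAN + lam_align * L_align.

    The default weights are illustrative assumptions.
    """
    l_cyclegan = gan_a + gan_b + lam_cyc * cyc
    return l_cyclegan + lam_align * align


# Toy generators that are exact inverses of each other give zero cycle loss.
x_a = np.arange(6.0).reshape(2, 3)
x_b = np.ones((2, 3))
print(cycle_loss(x_a, x_b, lambda x: 2.0 * x, lambda x: x / 2.0))  # 0.0
print(total_loss(1.0, 1.0, 0.5, 0.2))
```

Keeping `lam_align` as an explicit argument makes the trade-off discussed next easy to probe: every value of the weight fixes a different compromise between the two terms.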

3.2. DiCyc architecture

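Adding the alignment loss makes cross-domain image synthesis a multi-task learning problem: $G_A$ is trained to synthesize images while also aligning the source and synthesized images. Because the relative deformation $\phi$ between the source and target training images is partially domain specific, this information is encoded by the discriminator $D_A$. Note that in existing methods [32], [48], $\mathcal{L}_{align}$ and $\mathcal{L}_{GAN}^{A}$ both operate on the source image $I_A$ and the synthesized image $\tilde{I}_B$. Consider two synthesized images of the same quality: $\tilde{I}_B$, which is well aligned to $I_A$, and $\tilde{I}_B^{\phi}$, which is identical to the target image (i.e. it reproduces the domain-specific deformation). Then it is always true that

$$\mathcal{L}_{GAN}^{A}\big(\tilde{I}_B^{\phi}\big) < \mathcal{L}_{GAN}^{A}\big(\tilde{I}_B\big) \tag{2}$$

for an optimal discriminator $D_A$. At the same time,

$$\mathcal{L}_{align}\big(I_A, \tilde{I}_B\big) < \mathcal{L}_{align}\big(I_A, \tilde{I}_B^{\phi}\big). \tag{3}$$

As a result, $\mathcal{L}_{GAN}^{A}$ and $\mathcal{L}_{align}$ produce gradients with respect to the network parameters $\theta$ that point in opposite directions: moving the output towards the target pose decreases the former and increases the latter. Any choice of the hyperparameter $\lambda_{align}$, or of data augmentation, will therefore cause a trade-off between image quality and data alignment.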
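The trade-off can be made concrete with a deliberately simplified model. In the sketch below, a single scalar `theta` stands for the spatial shift the generator bakes into its output and `d` for the domain-specific shift of the target domain; both losses are quadratic stand-ins chosen for illustration, not the actual GAN or alignment losses. The GAN stand-in is minimal at `theta = d` and the alignment stand-in at `theta = 0`, so their gradients have opposite signs between the two minima, and every weight `lam` only moves the compromise.

```python
def gan_loss(theta, d):
    """Quadratic stand-in for L_GAN: minimal when the output shift equals d."""
    return (theta - d) ** 2


def align_loss(theta):
    """Quadratic stand-in for L_align: minimal when the output stays at the source pose."""
    return theta ** 2


def gradients(theta, d):
    """Analytic derivatives with respect to theta of both stand-in losses."""
    return 2.0 * (theta - d), 2.0 * theta


def best_theta(d, lam):
    """Minimizer of gan_loss + lam * align_loss, i.e. theta* = d / (1 + lam)."""
    return d / (1.0 + lam)


d = 4.0  # hypothetical domain-specific shift
for lam in (0.0, 1.0, 4.0):
    t = best_theta(d, lam)
    print(f"lam={lam}: theta*={t:.2f}, L_gan={gan_loss(t, d):.2f}, L_align={align_loss(t):.2f}")
# lam=0.0: theta*=4.00, L_gan=0.00, L_align=16.00
# lam=1.0: theta*=2.00, L_gan=4.00, L_align=4.00
# lam=4.0: theta*=0.80, L_gan=10.24, L_align=0.64
```

No value of `lam` drives both losses to zero at once, which is the motivation for giving the deformation its own parameters rather than tuning the weight.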

To resolve these opposing gradients, we model the deformation using a separate set of parameters $\theta_{\phi}$. For example, in the $A \rightarrow B$ process, $G_A$ outputs two synthesized images: an undeformed image aligned to the source,

$$\tilde{I}_B = G_A(I_A;\, \theta), \tag{4}$$

and a deformed image that is identical to the target,

$$\tilde{I}_B^{\phi} = G_A(I_A;\, \theta, \theta_{\phi}). \tag{5}$$

As shown in Fig. 1, the relative deformation between the source and target domains can be seen as a combination of a global transformation $\phi_G$ and a local deformation $\phi_L$. The corresponding transformation parameters, $\theta_{\phi} = \{\theta_T, \theta_L\}$, are modeled by different subnetworks of the DiCyc generator (Fig. 2b).

We split the generator $G_A$ into three subnetworks: an encoder $E_A$, a decoder $R_A$ and a transformer $T_A$. $T_A$ estimates the global transformation $\phi_G$, parameterized by $\theta_T$. In previous CycleGAN-based methods, the encoder and decoder are parameterized only by the image synthesis parameters $\theta$. In our DiCyc model, the encoder also estimates the local deformation, parameterized by $\theta_L$, which is introduced by a series of deformable convolutional layers. As a result, $E_A$ produces two versions of latent features: an undeformed feature map and a locally deformed feature map.

3.3. Global deformation

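The global transformer $T_A$ has a structure similar to the TPS-based spatial transformer network (STN). As shown in Fig. 2b, in the $A \rightarrow B$ process, the global deformation is calculated by:

$$\phi_G = T_A\big(E_A(I_A) \oplus E_B(I_B)\big), \tag{6}$$

where $E_A(I_A)$ and $E_B(I_B)$ are the latent features given by the encoders $E_A$ and $E_B$, and $\oplus$ represents the concatenation operation. Specifically, a regular grid of 6 × 6 control points $\{\mathbf{c}_i\}_{i=1}^{n}$, with $n = 36$, is placed on the latent feature maps of $I_A$, and $T_A$ outputs the coordinates of the corresponding points on the features of $I_B$. TPS maps the deformation determined by these point correspondences using an interpolation function $f$, which has the form:

$$f(\mathbf{p}) = \mathbf{A}\mathbf{p} + \mathbf{b} + \mathbf{W}^{\top}\mathbf{s}(\mathbf{p}), \tag{7}$$

where $\mathbf{p}$ is a point of the regular image grid and $\mathbf{W}$ holds the weights assigned to the control points. $\mathbf{A}$ and $\mathbf{b}$ define the affine transformation between the two sets of control points. $\mathbf{s}(\mathbf{p})$ is defined as:

$$\mathbf{s}(\mathbf{p}) = \big(U(\lVert \mathbf{p} - \mathbf{c}_1 \rVert), \ldots, U(\lVert \mathbf{p} - \mathbf{c}_n \rVert)\big)^{\top}, \tag{8}$$

where $U$ is a radial basis kernel of the form:

$$U(r) = r^{2} \log r^{2}. \tag{9}$$

Note that the transformer operates on a normalized coordinate grid.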
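Fitting and evaluating the TPS of Eqs. (7)–(9) reduces to one linear solve. The sketch below follows the standard Bookstein formulation with $U(r) = r^2 \log r^2$; the [−1, 1] range of the normalized control grid and the random target displacements are assumptions for illustration. In DiCyc the target control-point coordinates would come from $T_A$; here they are supplied directly.

```python
import numpy as np


def tps_kernel(r):
    """U(r) = r^2 log(r^2), with U(0) = 0."""
    r2 = r ** 2
    with np.errstate(divide="ignore", invalid="ignore"):
        u = r2 * np.log(r2)
    return np.where(r2 == 0.0, 0.0, u)


def fit_tps(src, dst):
    """Solve for W (n x 2) and the affine part (3 x 2) such that f(src_i) = dst_i."""
    n = src.shape[0]
    K = tps_kernel(np.linalg.norm(src[:, None, :] - src[None, :, :], axis=-1))
    P = np.hstack([np.ones((n, 1)), src])          # rows [1, x, y]
    L = np.zeros((n + 3, n + 3))
    L[:n, :n], L[:n, n:], L[n:, :n] = K, P, P.T
    rhs = np.vstack([dst, np.zeros((3, 2))])       # side conditions P^T W = 0
    sol = np.linalg.solve(L, rhs)
    return sol[:n], sol[n:]


def apply_tps(points, src, W, A):
    """Evaluate f(p) = [1, p] A + sum_i W_i U(|p - c_i|) at each row of points."""
    U = tps_kernel(np.linalg.norm(points[:, None, :] - src[None, :, :], axis=-1))
    P = np.hstack([np.ones((points.shape[0], 1)), points])
    return P @ A + U @ W


# 6 x 6 regular control grid on normalized coordinates (range [-1, 1] assumed).
g = np.linspace(-1.0, 1.0, 6)
ctrl = np.stack(np.meshgrid(g, g, indexing="xy"), axis=-1).reshape(-1, 2)

rng = np.random.default_rng(0)
moved = ctrl + 0.05 * rng.standard_normal(ctrl.shape)  # hypothetical T_A output
W, A = fit_tps(ctrl, moved)
dense = np.stack(np.meshgrid(np.linspace(-1, 1, 64), np.linspace(-1, 1, 64)), -1).reshape(-1, 2)
warped_grid = apply_tps(dense, ctrl, W, A)  # sampling grid for the feature maps
```

The affine block `A` plays the role of $\mathbf{A}$ and $\mathbf{b}$ in Eq. (7) stacked into one 3 × 2 matrix; if the targets are themselves an affine map of the grid, the solve returns zero bending weights `W`.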

It has been proved that this form of interpolation function minimizes the bending energy of a surface [53], so it has minimal effect on image quality. Based on this analysis, for better synthesis quality, we wish to keep the local deformation small and confined to tiny spatial areas. Ignoring the local deformation, the whole DiCyc model is shown in Fig. 3.

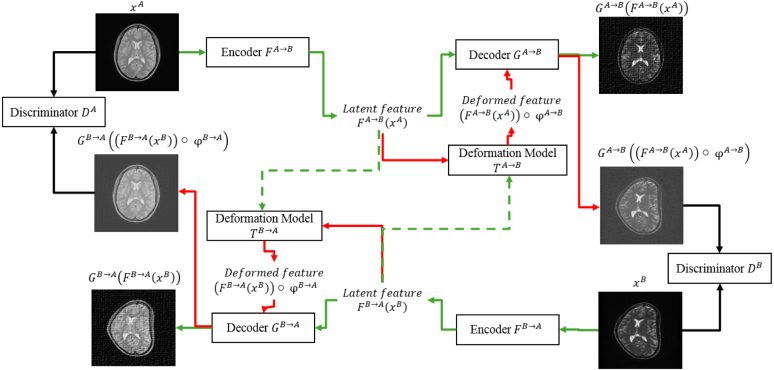

Fig. 3.

The DiCyc framework, ignoring local deformation, trained for cross-synthesis of PD-weighted and T2-weighted images. The synthesis process in one direction is shown by the green arrows and the reverse process in red. (For interpretation of the references to color in this figure legend, the reader is referred to the web version of this article.)

3.4. Local deformation

We use a modified DCN structure in the encoder to model the deformation within a local neighborhood after the latent features of the source and target images have been globally aligned. A deformable convolutional layer interpolates the input feature maps through an “offset convolution” operation, followed by a normal convolutional layer [54]. This architecture separates the information about local spatial deformation and image context into two forward passes, which further removes the conflict introduced by the image alignment loss.

As shown in Fig. 2b, we add an offset convolutional layer (displayed in cyan) before the input convolution layer, the two down-sampling convolution layers and the stack of ResNet blocks. This leads to a “lasagne-like” structure of interleaved “offset convolution” and conventional convolution operations, so that the spatial deformation is gradually encoded through each layer. The red and blue arrows in Fig. 2b display the computation flows for generating the deformed and undeformed outputs in the two forward passes.

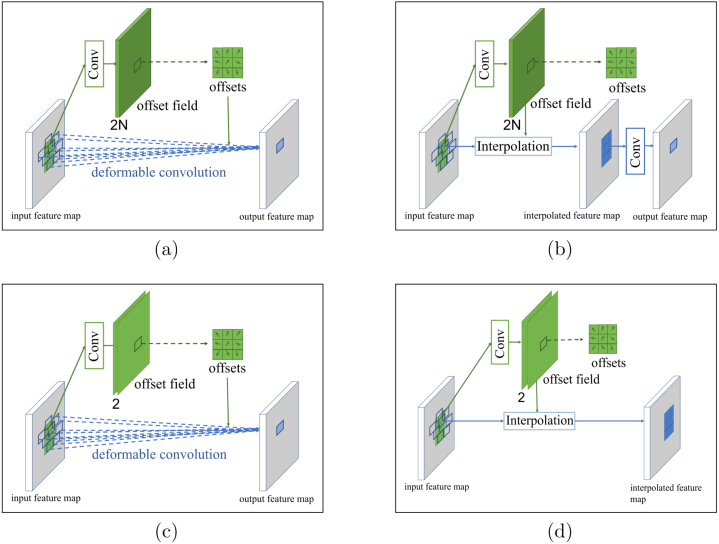

Fig. 4 shows details of the deformable convolution and of the modified version used in this work. The deformable convolution can be viewed as an atrous convolution kernel with trainable dilation rates, as shown in Fig. 4a; the dilation rate varies across locations of the input feature maps. As shown in Fig. 4b, the offset of each point in the “N-channel” input feature maps is learned by a standard convolutional operation, which outputs 2N “offset maps” (the 2-D deformation of each input feature map is represented by one “x” and one “y” offset map) [54]. The N input feature maps are then interpolated using the 2N offset maps. Together, these operations are termed “offset convolution”. A standard convolution layer is then applied to the interpolated feature maps, and the whole sequence forms a deformable convolution operation. Designed originally for object recognition tasks, the deformable convolution deforms each input feature map independently. To adapt this operation to cross-domain image synthesis, our modified deformable convolution instead generates a single 2-D deformation that is shared by all input feature maps (Fig. 4c). This is equivalent to directly applying a deformation to the input image and passing it forward through the vanilla CycleGAN generator, and it reduces the number of parameters in the DCN to a minimum. Fig. 4d shows our implementation of the “offset convolution”.

Fig. 4.

Details of the original deformable convolution and our modified version. (best viewed in color).
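The modified deformable convolution of Fig. 4c can be sketched in NumPy as an offset convolution that outputs only two maps (one shared y/x displacement field), a bilinear warp of every input channel with that shared field, and a standard convolution. This is a minimal single-image sketch, assuming "same" zero padding, no biases and border clamping during interpolation; the function names are hypothetical. `offset_params` compares offset-convolution weight counts for 2 shared maps against 2N per-channel maps, following the description above.

```python
import numpy as np


def conv2d_same(x, w):
    """Naive 'same'-padded cross-correlation. x: (C_in, H, W), w: (C_out, C_in, k, k)."""
    c_out, c_in, k, _ = w.shape
    p = k // 2
    xp = np.pad(x, ((0, 0), (p, p), (p, p)))
    H, W = x.shape[1:]
    out = np.zeros((c_out, H, W))
    for i in range(k):
        for j in range(k):
            out += np.einsum("oc,chw->ohw", w[:, :, i, j], xp[:, i:i + H, j:j + W])
    return out


def bilinear_warp(x, dy, dx):
    """Sample every channel of x at (y + dy, x + dx) using ONE shared offset field."""
    C, H, W = x.shape
    yy, xx = np.meshgrid(np.arange(H), np.arange(W), indexing="ij")
    sy, sx = np.clip(yy + dy, 0, H - 1), np.clip(xx + dx, 0, W - 1)
    y0, x0 = np.floor(sy).astype(int), np.floor(sx).astype(int)
    y1, x1 = np.minimum(y0 + 1, H - 1), np.minimum(x0 + 1, W - 1)
    wy, wx = sy - y0, sx - x0
    return ((1 - wy) * (1 - wx) * x[:, y0, x0] + (1 - wy) * wx * x[:, y0, x1]
            + wy * (1 - wx) * x[:, y1, x0] + wy * wx * x[:, y1, x1])


def modified_deformable_conv(x, w_offset, w_conv):
    """Offset conv -> one 2-D field for all channels -> warp -> standard conv."""
    offsets = conv2d_same(x, w_offset)               # (2, H, W): dy, dx
    warped = bilinear_warp(x, offsets[0], offsets[1])
    return conv2d_same(warped, w_conv)


def offset_params(n_in, k, shared=True):
    """Offset-conv weights: 2 output maps (shared field) vs 2N maps (per-channel)."""
    return k * k * n_in * (2 if shared else 2 * n_in)


rng = np.random.default_rng(0)
x = rng.standard_normal((4, 8, 8))
y = modified_deformable_conv(x, 0.1 * rng.standard_normal((2, 4, 3, 3)),
                             rng.standard_normal((3, 4, 3, 3)))
print(y.shape)  # (3, 8, 8)
print(offset_params(64, 3), offset_params(64, 3, shared=False))  # 1152 73728
```

With zero offset weights the layer collapses to an ordinary convolution, which is a convenient sanity check when initializing the offset branch.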

Combined with the global transformation, the deformed output is then passed to the corresponding discriminator to compute the GAN losses, while the undeformed output is expected to be aligned with the source image.

The DiCyc training loss combines the traditional GAN loss, the cycle-consistency loss used in the original implementation of CycleGAN [37], an image alignment loss, and an additional cycle-consistency loss introduced by the auxiliary outputs obtained from our two separate forward passes. We detail these below.

3.4.1. GAN loss

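For the GAN loss $\mathcal{L}_{GAN}^{A}$, the min–max game of $G_A$ and $D_A$ is represented as:

$$G_A^{*}, D_A^{*} = \arg\min_{G_A}\max_{D_A}\, \mathcal{L}_{GAN}^{A}, \tag{10}$$

where $G_A^{*}$ and $D_A^{*}$ represent the optimal generator and discriminator. Theoretically, in our DiCyc model, the loss function of $D_A$ is:

$$\mathcal{L}_{D_A} = \mathbb{E}_{I_B \sim p_B}\big[\log D_A(I_B)\big] + \mathbb{E}_{I_A \sim p_A}\big[\log\big(1 - D_A(\tilde{I}_B^{\phi})\big)\big], \tag{11}$$

where $p_A$ and $p_B$ represent the data distributions in domains $A$ and $B$, and $\tilde{I}_B^{\phi}$ is the deformed output of $G_A$. The GAN loss of the generator $G_A$ is:

$$\mathcal{L}_{G_A} = \mathbb{E}_{I_A \sim p_A}\big[\log\big(1 - D_A(\tilde{I}_B^{\phi})\big)\big]. \tag{12}$$

Similarly, the GAN loss of $G_B$ is:

$$\mathcal{L}_{G_B} = \mathbb{E}_{I_B \sim p_B}\big[\log\big(1 - D_B(\tilde{I}_A^{\phi})\big)\big]. \tag{13}$$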
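Given batches of discriminator outputs, Eqs. (11) and (12) reduce to a few lines. This sketch assumes the discriminator outputs probabilities in (0, 1) and that, as in DiCyc, the "fake" batch holds scores on the deformed synthesized images; the small `EPS` guard is an implementation assumption. The optional non-saturating generator loss is the common variant from the original GAN paper, not something specified here.

```python
import numpy as np

EPS = 1e-12  # guards log(0) for saturated discriminator outputs


def discriminator_objective(d_real, d_fake):
    """Eq. (11)-style value E[log D(real)] + E[log(1 - D(fake))], maximized by D.

    d_fake holds D's outputs on the *deformed* synthesized images.
    """
    return float(np.mean(np.log(d_real + EPS)) + np.mean(np.log(1.0 - d_fake + EPS)))


def discriminator_loss(d_real, d_fake):
    """Loss minimized when training D (the negated objective)."""
    return -discriminator_objective(d_real, d_fake)


def generator_loss(d_fake, non_saturating=False):
    """Eq. (12)-style E[log(1 - D(G(x)))], minimized by G.

    non_saturating=True switches to the common -E[log D(G(x))] variant,
    which gives stronger gradients when D confidently rejects the samples.
    """
    if non_saturating:
        return -float(np.mean(np.log(d_fake + EPS)))
    return float(np.mean(np.log(1.0 - d_fake + EPS)))


confused = np.array([0.5, 0.5])  # D cannot tell real from synthesized
print(discriminator_loss(confused, confused))  # approx. 2 log 2
print(generator_loss(confused))                # approx. log 0.5
```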

3.4.2. Image alignment loss

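Eq. (11) can be rewritten as:

$$\mathcal{L}_{D_A} = \mathbb{E}_{I_B \sim p_B}\big[\log D_A(I_B)\big] + \mathbb{E}_{\tilde{I}_B^{\phi} \sim p_{\tilde{B}}}\big[\log\big(1 - D_A(\tilde{I}_B^{\phi})\big)\big], \tag{14}$$

where $p_B$ is the distribution of real domain-$B$ images and $p_{\tilde{B}}$ is the distribution of synthesized domain-$B$ images. $D_A$ is then trained to discriminate between the distributions $p_B$ and $p_{\tilde{B}}$ [35]. In the min–max game of the original GAN model, it has been proved that the optimal discriminator is $D_A^{*}(x) = p_B(x) / \big(p_B(x) + p_{\tilde{B}}(x)\big)$. Substituting this into $\mathcal{L}_{D_A}$, it can be rewritten as:

$$\mathcal{L}_{D_A^{*}} = -\log 4 + \mathrm{KL}\Big(p_B \,\Big\|\, \tfrac{p_B + p_{\tilde{B}}}{2}\Big) + \mathrm{KL}\Big(p_{\tilde{B}} \,\Big\|\, \tfrac{p_B + p_{\tilde{B}}}{2}\Big) = -\log 4 + 2\,\mathrm{JSD}\big(p_B \,\|\, p_{\tilde{B}}\big), \tag{15}$$

where KL is the Kullback–Leibler divergence and JSD is the Jensen–Shannon divergence.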
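The identity in Eq. (15) can be checked numerically on discrete distributions. This sketch evaluates the discriminator objective at the optimal $D^{*} = p_B / (p_B + p_{\tilde{B}})$ and compares it with $-\log 4 + 2\,\mathrm{JSD}$; the two example distributions are arbitrary assumptions and must share a support with no index where both are zero.

```python
import numpy as np


def kl(p, q):
    """Discrete KL(p || q), summing only where p > 0."""
    m = p > 0
    return float(np.sum(p[m] * np.log(p[m] / q[m])))


def jsd(p, q):
    """Jensen-Shannon divergence via the mixture m = (p + q) / 2."""
    mix = 0.5 * (p + q)
    return 0.5 * kl(p, mix) + 0.5 * kl(q, mix)


def value_at_optimal_d(p_data, p_g):
    """E_p[log D*] + E_g[log(1 - D*)] with D* = p_data / (p_data + p_g)."""
    d_star = p_data / (p_data + p_g)
    real, fake = p_data > 0, p_g > 0
    return float(np.sum(p_data[real] * np.log(d_star[real]))
                 + np.sum(p_g[fake] * np.log(1.0 - d_star[fake])))


p_real = np.array([0.5, 0.3, 0.2])  # stands in for p_B
p_syn = np.array([0.2, 0.2, 0.6])   # stands in for the synthesized distribution
print(value_at_optimal_d(p_real, p_syn), -np.log(4) + 2 * jsd(p_real, p_syn))
```

When the two distributions coincide, the JSD vanishes and the value reaches its global minimum of $-\log 4$.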

Let and be the spatial poses of the two images, and and . For a pair of training images, the relation between and is:

| (16) |

| (17) |

where represents the inverse transformation and represents the identity transformation. With training data affected by domain-specific deformation, optimally trained and will inevitably predict that and even when has quality comparable to . As the GAN losses are calculated using and , a new discriminative loss is required to predict which distribution the data are sampled from. Based on InfoGAN [55], we can maximize the mutual information (MI) between and , as it can easily be shown that

| (18) |

MI is non-negative but not normalized to a fixed range, which makes it difficult to scale and combine with other losses. Here we propose to use an image alignment loss based on NMI:

| (19) |

Because the deformations are modeled by a separate set of parameters, this image alignment loss can be built on any similarity measure suitable for image registration, such as normalized mutual information (NMI) [56], the normalized GCC used in [32], or MIND as in [48] and [49].
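A histogram-based NMI illustrates why NMI scales well as a loss. The sketch uses the Studholme form NMI = (H(A) + H(B)) / H(A, B), which lies in [1, 2], and turns it into a loss in [0, 1] as 2 − NMI; both the NMI variant and this loss form are assumptions, since the exact form of Eq. (19) is not reproduced here. A hard-binned histogram is not differentiable, so network training would need a soft (e.g. Parzen-window) estimate instead.

```python
import numpy as np


def entropy(p):
    """Shannon entropy of a probability array, ignoring empty bins."""
    p = p[p > 0]
    return float(-np.sum(p * np.log(p)))


def nmi(a, b, bins=32):
    """Studholme NMI = (H(A) + H(B)) / H(A, B) from a joint intensity histogram."""
    joint, _, _ = np.histogram2d(a.ravel(), b.ravel(), bins=bins)
    p_ab = joint / joint.sum()
    h_a = entropy(p_ab.sum(axis=1))
    h_b = entropy(p_ab.sum(axis=0))
    return (h_a + h_b) / entropy(p_ab.ravel())


def nmi_alignment_loss(a, b, bins=32):
    """Hypothetical bounded loss: 0 for a perfect statistical match, near 1 if independent."""
    return 2.0 - nmi(a, b, bins)


rng = np.random.default_rng(0)
src = rng.random((64, 64))
print(nmi_alignment_loss(src, src))               # 0.0
print(nmi_alignment_loss(src, -3.0 * src + 1.0))  # small: NMI ignores intensity remapping
print(nmi_alignment_loss(src, rng.random((64, 64))))
```

The second call shows the property that makes NMI attractive across modalities: an inverted, rescaled contrast still counts as well aligned.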

3.4.3. Cycle-consistency losses

The cycle-consistency loss plays a critical role in the improved performance of CycleGAN compared to a single GAN network, as it forces the two generators to learn mutually recoverable information from distinct domains. Because each DiCyc generator produces both an undeformed and a deformed version of the synthesized data, and both versions should be cycle-consistent to encode an optimal representation, our DiCyc model has two cycle-consistency losses. The undeformed cycle-consistency loss is defined as:

| (20) |

and the deformation-invariant cycle consistency loss is:

| (21) |
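The two cycle terms can be sketched with generators that return an (undeformed, deformed) pair. The interface is hypothetical, and so is the form of the deformed cycle: here it assumes that the backward generator's deformed path applies the inverse deformation, so chaining the two deformed outputs should recover the source. The toy example uses circular shifts as deformations and scaling as "synthesis".

```python
import numpy as np


def l1(a, b):
    """Mean absolute error."""
    return float(np.mean(np.abs(a - b)))


def dicyc_cycle_losses(x_a, x_b, gen_a, gen_b):
    """Return (undeformed, deformed) cycle losses.

    gen_a / gen_b are hypothetical callables returning (undeformed, deformed).
    Assumption: the deformed path of the backward generator undoes the deformation.
    """
    ya_u, ya_d = gen_a(x_a)
    yb_u, yb_d = gen_b(x_b)
    undeformed = l1(gen_b(ya_u)[0], x_a) + l1(gen_a(yb_u)[0], x_b)
    deformed = l1(gen_b(ya_d)[1], x_a) + l1(gen_a(yb_d)[1], x_b)
    return undeformed, deformed


# Toy pair: "synthesis" scales by 2 or 1/2; the deformation is a circular shift of 3.
gen_a = lambda x: (2.0 * x, np.roll(2.0 * x, 3))
gen_b = lambda y: (y / 2.0, np.roll(y / 2.0, -3))
rng = np.random.default_rng(0)
print(dicyc_cycle_losses(rng.standard_normal(32), rng.standard_normal(32), gen_a, gen_b))
# (0.0, 0.0)
```

A backward generator that ignores the deformation keeps the undeformed term at zero but is penalized by the deformed term, which is the extra constraint the second loss adds.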

3.5. Training procedure

Based on the discussion above, the overall loss of our DiCyc model is3

| (22) |

Treating the cycle-consistency losses as a kind of regularization, training the DiCyc model can be seen as a maximum likelihood estimation (MLE):

| (23) |

with an unknown distribution over the image poses. Based on Jensen’s inequality, as the logarithm is a concave function,

| (24) |

which gives a lower bound of the log-likelihood. For the equality to hold, the ratio inside the expectation must equal a constant. Thus the distribution is:

| (25) |

This MLE can be performed through an expectation–maximization (EM) training procedure. The “E” step estimates the distribution by:

| (26) |

where the distribution is determined by the sampled training data. To learn optimal global transformations, we fix the parameters of the encoder and decoder and only update the STN; in other words, only the transformer parameters are updated. In the “M” step, the undeformed and deformed synthesized images are calculated through two forward passes, and the parameters are then updated based on the overall loss.
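The alternation between the two steps can be illustrated on a 1-D toy problem. In this hypothetical sketch, a circular shift `t` stands in for the transformer parameters and a scalar gain `s` for the synthesis network; the target is a scaled, shifted copy of the source. The "E" step searches `t` with `s` fixed, and the "M" step refits `s` by least squares on the deformed forward pass with `t` fixed. This is coordinate-wise optimization that only mirrors the E/M split described above, not the actual network training.

```python
import numpy as np


def e_step(x, y, s, max_shift):
    """Fix the synthesis gain s; choose the shift that best explains the target."""
    shifts = range(-max_shift, max_shift + 1)
    errs = [np.sum((np.roll(s * x, t) - y) ** 2) for t in shifts]
    return shifts[int(np.argmin(errs))]


def m_step(x, y, t):
    """Fix the shift; least-squares update of the gain using the deformed pass."""
    xt = np.roll(x, t)
    return float(xt @ y / (xt @ xt))


def em_fit(x, y, n_iter=5, max_shift=10):
    s, t = 1.0, 0
    for _ in range(n_iter):
        t = e_step(x, y, s, max_shift)
        s = m_step(x, y, t)
    undeformed = s * x            # forward pass aligned with the source
    deformed = np.roll(s * x, t)  # forward pass matched to the target pose
    return s, t, undeformed, deformed


rng = np.random.default_rng(0)
src = rng.standard_normal(128)
tgt = 1.7 * np.roll(src, 4)  # hypothetical "domain-specific" shift of 4
s, t, undeformed, deformed = em_fit(src, tgt)
print(t, round(s, 3))  # 4 1.7
```

Separating the two parameter groups lets the deformed output match the target while the undeformed output stays on the source grid.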

4. Experiments

4.1. Datasets and preprocessing

IXI dataset: We selected two datasets for the multi-sequence MR and cross-modality MR-CT synthesis tasks. The first was the Information eXtraction from Images (IXI) dataset4, which provides co-registered, skull-stripped multi-sequence 1.5T and 3T MR images collected from multiple sites. We used 66 proton density (PD-) and T2-weighted volumes, each containing 116 to 130 2D slices. For training and testing, 38 pairs and 28 pairs were used, respectively. Our image generators take 2D axial-plane slices of the volumes as inputs. All volumes were resampled to a resolution of , then cropped to a size of pixels. As each resampled volume contains 94 to 102 slices, over 6000 pairs of IXI images were used in our experiments. As the generators in both CycleGAN and DiCyc are fully convolutional, predictions are performed on uncropped images. All images were bias-field corrected and normalized by their mean and standard deviation.

dataset: We used a dataset containing 40 pairs of multi-modality abdominal T2*-weighted and CT images collected from 20 patients with abdominal aortic aneurysm. Example images are shown in Fig. 1 where domain-specific deformations can be observed. The data were collected as part of the clinical trial5 [57]. All images were resampled to a resolution of , and the axial-plane slices trimmed to pixels. We used 30 volumes for training and 10 volumes for testing. Each resampled volume contains 24 to 40 slices, which gives over 1200 pairs of slices for our experiments.

4.2. Evaluation metrics

Ideally, the alignment between data and the quality of the synthesized images would be evaluated by segmentation-based metrics such as the Dice index. However, it is difficult to generate segmentation masks on synthesized data, and doing so can introduce extra errors into the evaluation. Following the previous image synthesis works discussed above, we use three metrics typically used by other CycleGAN-based methods to evaluate synthesis performance: mean squared error (MSE), peak signal-to-noise ratio (PSNR) and the structural similarity index (SSIM). Given a synthesized volume $x$ and a target volume $y$, the MSE is computed as $\mathrm{MSE} = \frac{1}{N}\sum_{i=1}^{N}(x_i - y_i)^2$, where $N$ is the number of voxels in the volume. PSNR is calculated as $\mathrm{PSNR} = 10\log_{10}\big(\mathrm{MAX}^2/\mathrm{MSE}\big)$, where MAX is the maximum voxel value of the image. SSIM is computed as $\mathrm{SSIM} = \frac{(2\mu_x\mu_y + C_1)(2\sigma_{xy} + C_2)}{(\mu_x^2 + \mu_y^2 + C_1)(\sigma_x^2 + \sigma_y^2 + C_2)}$, where $\mu$ and $\sigma^2$ are the mean and variance of a volume, and $\sigma_{xy}$ is the covariance between $x$ and $y$. $C_1$ and $C_2$ are two variables that stabilize the division with a weak denominator [58]. Larger PSNR and SSIM, or smaller MSE, indicate better performance of a synthesis algorithm. These metrics were used to identify the best-performing CycleGAN-based method, which we subsequently refer to as the baseline method. We then evaluated the performance of the proposed DiCyc method against this baseline. A paired t-test was used to test the difference in mean MSE, PSNR and SSIM values between DiCyc and the selected baseline. For the ablation experiment, a paired t-test was performed on metrics arising from the DiCyc model and its CycleGAN-based counterpart. Differences in performance were considered statistically significant when the p-value resulting from the t-test was less than 0.05.
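The three metrics and the paired t statistic can be computed as follows. This is a sketch under stated assumptions: SSIM is evaluated as a single global window over the whole volume (as the formula above is written), the constants follow the common choice $C_1 = (0.01L)^2$ and $C_2 = (0.03L)^2$ for data range $L$, and MAX in PSNR is taken from the target volume. Only the t statistic is computed; a p-value can be obtained with `scipy.stats.ttest_rel` if SciPy is available.

```python
import numpy as np


def mse(x, y):
    """Mean squared error over all voxels."""
    return float(np.mean((x - y) ** 2))


def psnr(x, y):
    """10 log10(MAX^2 / MSE), with MAX taken from the target volume y (assumption)."""
    return float(10.0 * np.log10(np.max(y) ** 2 / mse(x, y)))


def global_ssim(x, y, data_range, k1=0.01, k2=0.03):
    """Single-window SSIM over whole volumes; C = (k * L)^2 (common choice, assumed)."""
    c1, c2 = (k1 * data_range) ** 2, (k2 * data_range) ** 2
    mx, my = x.mean(), y.mean()
    cov = np.mean((x - mx) * (y - my))
    num = (2 * mx * my + c1) * (2 * cov + c2)
    den = (mx ** 2 + my ** 2 + c1) * (x.var() + y.var() + c2)
    return float(num / den)


def paired_t_statistic(a, b):
    """t = mean(d) / (std(d, ddof=1) / sqrt(n)) for paired per-volume scores."""
    d = np.asarray(a, dtype=float) - np.asarray(b, dtype=float)
    return float(d.mean() / (d.std(ddof=1) / np.sqrt(d.size)))


rng = np.random.default_rng(0)
target = rng.random((4, 16, 16))
synth = target + 0.05 * rng.standard_normal(target.shape)
print(mse(synth, target), psnr(synth, target), global_ssim(synth, target, data_range=1.0))
```

Per-volume scores from two methods on the same test volumes form the paired samples passed to `paired_t_statistic`.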

4.3. Experimental setup

We present three experiments using the two datasets. In the first and second, the performance of our DiCyc model was compared to the vanilla CycleGAN [28] and to state-of-the-art CycleGAN models with image alignment losses [32], [48]. For all experiments, we applied random affine transformations, including translation, rotation, scaling, shearing and flipping, to the input data as augmentation in the training stage,6 and each epoch was set to 6000 iterations for better network convergence. After comparing the performance of the proposed DiCyc with the selected state-of-the-art methods, an ablation study was performed to reveal the influence of the DiCyc architecture and learning procedure.
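The random affine augmentation can be sketched as a composition of homogeneous 2-D matrices. The parameter ranges below (rotation, translation in normalized units, scale, shear, flip probability) are hypothetical, since the exact ranges are not given here, and the composition order is one reasonable choice among several. The resulting matrix can be fed to any resampling routine, for example `scipy.ndimage.affine_transform`, after converting to pixel coordinates.

```python
import numpy as np


def random_affine(rng, max_rot_deg=10.0, max_trans=0.05, scale_range=(0.9, 1.1),
                  max_shear=0.05, p_flip=0.5):
    """3 x 3 homogeneous affine: translation @ rotation @ scale @ shear @ flip.

    All ranges are hypothetical defaults for illustration.
    """
    theta = np.deg2rad(rng.uniform(-max_rot_deg, max_rot_deg))
    c, s = np.cos(theta), np.sin(theta)
    R = np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])
    sx, sy = rng.uniform(*scale_range, size=2)
    S = np.diag([sx, sy, 1.0])
    sh = rng.uniform(-max_shear, max_shear)
    Sh = np.array([[1.0, sh, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]])
    F = np.diag([-1.0 if rng.random() < p_flip else 1.0, 1.0, 1.0])  # left-right flip
    tx, ty = rng.uniform(-max_trans, max_trans, size=2)
    T = np.array([[1.0, 0.0, tx], [0.0, 1.0, ty], [0.0, 0.0, 1.0]])
    return T @ R @ S @ Sh @ F


rng = np.random.default_rng(0)
M = random_affine(rng)
corner = M @ np.array([1.0, 1.0, 1.0])  # where the normalized corner (1, 1) moves
print(np.round(M, 3))
```

Because rotation and shear have unit determinant, the area change of each sample is governed only by the two scale factors, which keeps the augmentation mild.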

Simulated IXI to identify the influence of domain-specific deformation: As brain structures are mainly rigid and rarely undergo non-linear deformations, ground truth obtained from the registered PD- and T2-weighted image pairs allows direct quantitative assessment. When trained on the registered data, all methods performed better than when trained on unaligned and unpaired data; this provides an upper limit of performance for all tested methods. To assess the ability of the selected methods to deal with domain-specific deformations, we applied a simulated nonlinear transformation to each T2-weighted image. We performed synthesis experiments using the undeformed PD-weighted images and deformed T2-weighted images to generate undeformed T2-weighted data and deformed PD-weighted data. Minibatches of the input data were sampled from randomly selected patients and slices. When using deformed T2-weighted images to generate synthesized PD data, the ground truth was generated by applying the same nonlinear deformation to the source PD images. Similarly, the ground truth for the synthesized T2-weighted data was the original undeformed T2-weighted data provided in IXI. Values of the three evaluation metrics were computed between the synthesized images and the ground truths. We also qualitatively evaluated the synthesized images using error images, as in prior works [26], [29].
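One way to simulate such a deformation and its matching ground truth is sketched below. The smooth random field (a coarse Gaussian grid upsampled by separable linear interpolation) and its amplitude are assumptions; the specific deformation model used in the experiments is not described here. The key step is that the same displacement field warps the T2-weighted input and the PD ground truth.

```python
import numpy as np


def smooth_displacement(rng, shape, coarse=4, amplitude=3.0):
    """Random (2, H, W) displacement in pixels: coarse Gaussian grid, linearly upsampled."""
    H, W = shape
    coarse_field = rng.standard_normal((2, coarse, coarse)) * amplitude
    ys, xs = np.linspace(0, coarse - 1, H), np.linspace(0, coarse - 1, W)
    knots = np.arange(coarse)
    out = np.empty((2, H, W))
    for c in range(2):
        cols = np.stack([np.interp(ys, knots, coarse_field[c, :, j]) for j in range(coarse)], axis=1)
        out[c] = np.stack([np.interp(xs, knots, cols[i]) for i in range(H)], axis=0)
    return out


def warp(img, disp):
    """Backward bilinear warp: out(p) = img(p + disp(p)), clamped at the borders."""
    H, W = img.shape
    yy, xx = np.meshgrid(np.arange(H), np.arange(W), indexing="ij")
    sy, sx = np.clip(yy + disp[0], 0, H - 1), np.clip(xx + disp[1], 0, W - 1)
    y0, x0 = np.floor(sy).astype(int), np.floor(sx).astype(int)
    y1, x1 = np.minimum(y0 + 1, H - 1), np.minimum(x0 + 1, W - 1)
    wy, wx = sy - y0, sx - x0
    return ((1 - wy) * (1 - wx) * img[y0, x0] + (1 - wy) * wx * img[y0, x1]
            + wy * (1 - wx) * img[y1, x0] + wy * wx * img[y1, x1])


rng = np.random.default_rng(0)
pd, t2 = rng.random((32, 40)), rng.random((32, 40))  # stand-ins for a registered slice pair
disp = smooth_displacement(rng, pd.shape)
t2_deformed = warp(t2, disp)  # network input for T2 -> PD synthesis
pd_truth = warp(pd, disp)     # ground truth for the synthesized PD image
```

Generating the field once per image and reusing it for both modalities is what makes the deformed PD image a valid voxel-wise reference.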

MA3RS data: After evaluation on the simulated dataset with known ground truth, the methods were further evaluated using real data from our dataset. Because of domain-specific deformations, the multi-modality images cannot be affinely registered; in particular, multiple organs in an image pair can hardly be aligned at the same time. Furthermore, as non-rigid registration remains an ill-posed problem and lacks a gold standard, we did not non-rigidly register the images to generate a ground truth for synthesis. However, some structures, such as the aorta and spine, are relatively rigid compared with surrounding soft tissues such as the lower gastrointestinal organs, and each of them can be registered separately with an affine transformation. Synthesis performance should therefore be assessed both by the alignment of multiple organs and by quantitative analysis of image quality. In this work, the aorta was manually segmented in each volume of the dataset (as described in [59]), and multi-modality data acquired from the same patient were affinely registered so that the segmented aortas were well aligned. The manual registration and segmentation were performed by 4 clinical researchers. The signal of the synthesized images was evaluated within the aorta segmentation using the three metrics described above, and the alignment between source and synthesized data was assessed visually within both the aorta and spine regions. In summary, a better-performing method should generate images that show better alignment in both the aorta and spine regions while achieving lower MSE, higher PSNR and higher SSIM. During training, each input minibatch was sampled from the same patient but with randomly selected slices, as described in [48]. The data were augmented with transformations similar to those applied to the IXI dataset.
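Evaluating the signal only inside the aorta segmentation amounts to restricting each metric to the masked pixels. The sketch below assumes intensities normalized to [0, 1] and uses a single-window SSIM computed over all masked pixels; standard SSIM averages local Gaussian-windowed statistics, so this is a simplification, and `masked_metrics` is a hypothetical helper rather than the authors' evaluation code.

```python
import numpy as np


def masked_metrics(pred, target, mask, data_range=1.0):
    """MSE, PSNR (dB) and a single-window SSIM over the pixels where `mask` is True."""
    p = pred[mask].astype(float)
    t = target[mask].astype(float)
    mse = float(np.mean((p - t) ** 2))
    psnr = float("inf") if mse == 0 else float(10.0 * np.log10(data_range ** 2 / mse))
    c1, c2 = (0.01 * data_range) ** 2, (0.03 * data_range) ** 2  # usual SSIM stabilizers
    mu_p, mu_t = p.mean(), t.mean()
    cov = np.mean((p - mu_p) * (t - mu_t))
    ssim = ((2 * mu_p * mu_t + c1) * (2 * cov + c2)) / (
        (mu_p ** 2 + mu_t ** 2 + c1) * (p.var() + t.var() + c2))
    return mse, psnr, float(ssim)


rng = np.random.default_rng(0)
target = rng.random((32, 32))
pred = np.clip(target + rng.normal(0.0, 0.05, target.shape), 0.0, 1.0)
aorta_mask = np.zeros(target.shape, dtype=bool)  # hypothetical aorta segmentation
aorta_mask[8:24, 8:24] = True
print(masked_metrics(pred, target, aorta_mask))
```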

Ablated models with different alignment losses: CycleGAN-based models do not resolve the conflict between additive image alignment losses and the discriminative GAN loss, and thus cannot achieve good data alignment without sacrificing the quality of the synthesized data. By contrast, the architecture and associated training algorithm of DiCyc handle the geometric deformation and the contextual correspondence between domains separately. This property is key to generating synthesized data that are aligned with the source data while maintaining good contextual synthesis. To test this claim, it is necessary to analyze how an image alignment loss behaves when used in the CycleGAN and DiCyc frameworks. Furthermore, current CycleGAN-based models use GCC and MIND, whereas we use an NMI-based alignment loss given in Eq. (19). To verify our proposed alignment loss, GCC, MIND and NMI must be compared under the same architecture and training procedure.

With these motivations in mind, we performed an ablation experiment on the IXI dataset in which different image alignment losses were integrated into both the CycleGAN and DiCyc models. Specifically, we replaced the NMI-based alignment loss of the proposed model with the GCC- and MIND-based alignment losses to build GCC-DiCyc and MIND-DiCyc. Similarly, our NMI-based alignment loss was added to the CycleGAN loss to build NMI-CycleGAN. The performance of the DiCyc models with different alignment losses was then compared with that of their CycleGAN-based counterparts. To evaluate any improvement introduced by our new architecture, we performed a paired t-test on the evaluation metrics for each pair of CycleGAN and DiCyc models with the same alignment loss. Improvements introduced by the NMI-based alignment loss can be seen by comparing the DiCyc models that use different alignment losses. The evolution of the loss values and synthesis results was also assessed visually throughout training.
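The paired t-test compares two models on matched samples (for example, the same slices) by testing whether the mean of the per-sample differences is zero. Section 4.4 states that SciPy was used, where `scipy.stats.ttest_rel` returns both the statistic and the p-value; the standard-library sketch below computes only the t statistic and degrees of freedom, to make explicit what is being tested. The per-slice values are invented for illustration.

```python
import math
import statistics


def paired_t_statistic(x, y):
    """Return (t, degrees_of_freedom) for a paired t-test of matched samples x and y."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("x and y must be equal-length with at least two pairs")
    diffs = [a - b for a, b in zip(x, y)]
    sd = statistics.stdev(diffs)  # sample standard deviation (n - 1 denominator)
    if sd == 0:
        raise ValueError("differences have zero variance; t is undefined")
    t = statistics.mean(diffs) / (sd / math.sqrt(len(diffs)))
    return t, len(diffs) - 1


# Hypothetical per-slice PSNR values for a DiCyc model and its CycleGAN counterpart.
dicyc_psnr = [22.1, 23.4, 21.8, 22.9, 23.0]
cyclegan_psnr = [21.0, 22.2, 21.5, 21.7, 22.1]
print(paired_t_statistic(dicyc_psnr, cyclegan_psnr))
```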

4.4. Implementation details

We used image generators with 6 ResNet blocks and 70 × 70 PatchGAN [60] discriminators. Following the default CycleGAN setup, we use the LSGAN loss to compute the adversarial loss. Experiments were implemented in PyTorch, and paired t-tests were performed using the SciPy library. All parameters of, or inherited from, the vanilla CycleGAN are taken from the PyTorch implementation of the original paper.⁷ The first convolutional layer uses kernels, all others use kernels. The first convolution outputs channels of feature maps, followed by layers with and channels. All the convolutions in the ResNet blocks have channels.
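For reference, the least-squares (LSGAN) adversarial objective mentioned above replaces the log-loss with squared errors against target labels (1 for real, 0 for fake). This NumPy sketch applies it to PatchGAN score maps, where each entry scores one overlapping patch; the 0.5 factor on the discriminator loss follows common CycleGAN practice and, like the illustrative map size, is an assumption.

```python
import numpy as np


def lsgan_discriminator_loss(d_real: np.ndarray, d_fake: np.ndarray) -> float:
    """Least-squares discriminator loss: push scores on real patches to 1 and on fake patches to 0."""
    return float(0.5 * (np.mean((d_real - 1.0) ** 2) + np.mean(d_fake ** 2)))


def lsgan_generator_loss(d_fake: np.ndarray) -> float:
    """Least-squares generator loss: push the discriminator's scores on fake patches to 1."""
    return float(np.mean((d_fake - 1.0) ** 2))


# A PatchGAN outputs a grid of scores, one per patch (this shape is illustrative only).
d_real = np.full((1, 1, 30, 30), 0.9)
d_fake = np.full((1, 1, 30, 30), 0.2)
print(lsgan_discriminator_loss(d_real, d_fake), lsgan_generator_loss(d_fake))
```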

For DiCyc, we set and . The models were trained with the Adam optimizer [61] at a fixed learning rate of 0.0002 for the first 100 epochs, followed by 100 epochs with a linearly decreasing learning rate. We apply a simple early-stopping strategy: in the first 100 epochs, when stops decreasing for 10 epochs, training moves to the learning-rate decay stage; similarly, the tolerance is set to 20 epochs in the second 100 epochs. For the selected benchmark CycleGAN-based models, unless mentioned above, hyper-parameters follow the original publications. Experiments were performed on NVIDIA Tesla K80 GPUs provided by the Amazon AWS EC2 cloud computing platform.
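The two-stage schedule and early-stopping rule can be simulated as below. The loss monitored by the rule is not recoverable from the text, so the code accepts any per-epoch loss sequence; "stops decreasing" is interpreted as "no new minimum", and restarting the linear decay from the epoch at which stage 2 begins is an assumption. `PlateauDetector`, `decayed_lr` and `simulate_schedule` are hypothetical names.

```python
class PlateauDetector:
    """Reports a plateau once the monitored loss has gone `patience` epochs without a new minimum."""

    def __init__(self, patience: int):
        self.patience = patience
        self.best = float("inf")
        self.stale_epochs = 0

    def update(self, loss: float) -> bool:
        if loss < self.best:
            self.best, self.stale_epochs = loss, 0
        else:
            self.stale_epochs += 1
        return self.stale_epochs >= self.patience


def decayed_lr(base_lr: float, epochs_into_decay: int, decay_epochs: int = 100) -> float:
    """Linear decay from base_lr towards 0 over decay_epochs epochs."""
    frac = min(max(epochs_into_decay, 0), decay_epochs) / decay_epochs
    return base_lr * (1.0 - frac)


def simulate_schedule(losses, base_lr=2e-4, stage_epochs=100, patience=(10, 20)):
    """Return the learning rate used at each epoch of the two-stage schedule.

    Stage 1 keeps base_lr; stage 2 decays it linearly. Each stage ends after
    stage_epochs epochs, or earlier if the monitored loss plateaus.
    """
    lrs, stage, k = [], 1, 0
    detector = PlateauDetector(patience[0])
    for loss in losses:
        lrs.append(base_lr if stage == 1 else decayed_lr(base_lr, k, stage_epochs))
        k += 1
        if detector.update(loss) or k >= stage_epochs:
            if stage == 2:
                break  # training finished
            stage, k, detector = 2, 0, PlateauDetector(patience[1])
    return lrs


# A loss that keeps decreasing runs both stages to completion (200 epochs).
print(len(simulate_schedule([1.0 / (e + 1) for e in range(300)])))
```

With a constant loss, stage 1 ends after 11 epochs (one improvement followed by 10 stale epochs) and stage 2 after 21, so the early-stopping tolerances, not the 100-epoch limits, determine the length of training.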

5. Results and discussion

This section presents the performance of all assessed models. For each experiment, we visualize the source-domain data and the synthesized results, and report quantitative results in terms of MSE, PSNR and SSIM.

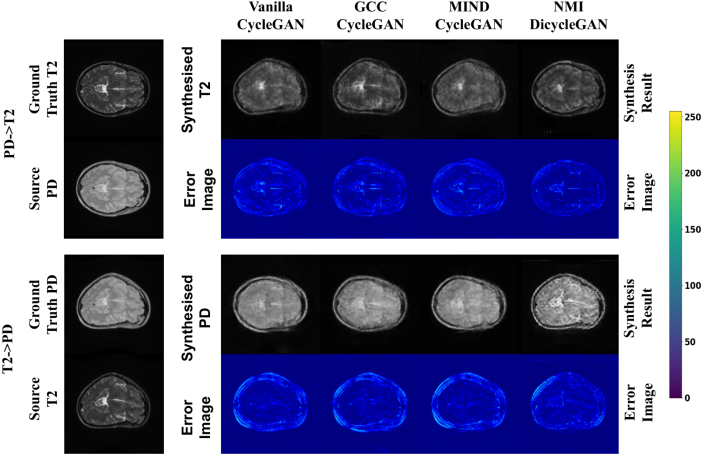

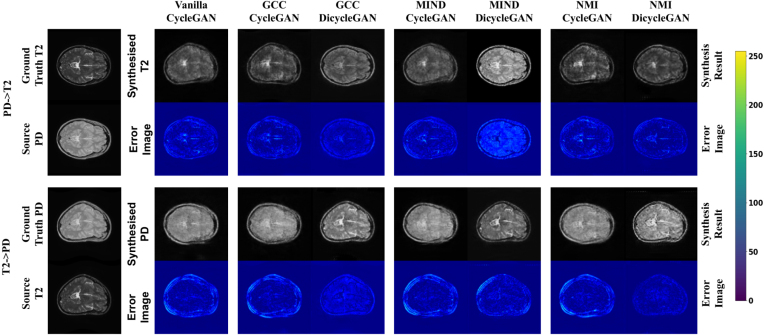

5.1. DiCyc versus CycleGAN-based models on IXI

Fig. 5 shows an example of the synthesized images generated by the tested methods, along with the error images calculated between the synthesized data and the corresponding ground truth. For a fair visual comparison, all the compared baselines are shown with the same non-linear deformation. As the simulated “domain-specific deformation” was applied to the T2-weighted data, the synthesized PD-weighted data should display the same deformation, aligned with the source data. Similarly, the synthesized T2-weighted data should be aligned with the source PD-weighted data without showing the simulated deformation. However, as shown in Fig. 5, the vanilla CycleGAN model reproduced the simulated deformation in the synthesized T2-weighted image and did not show it in the synthesized PD-weighted image. Although GCC-CycleGAN and MIND-CycleGAN reduce the misalignment caused by the simulated deformation, the synthesized and source data are still not well aligned. Furthermore, the results of the three CycleGAN-based models are blurry and show visible artifacts. In contrast, our DiCyc model gave the best alignment between the source and synthesized data and also led to better image quality when assessed visually.

Fig. 5.

Examples of synthesis from the IXI dataset: an arbitrary deformation was applied to the T2 weighted images, and the ground truth of the synthesized proton density (PD) weighted image was generated by applying the same deformation.

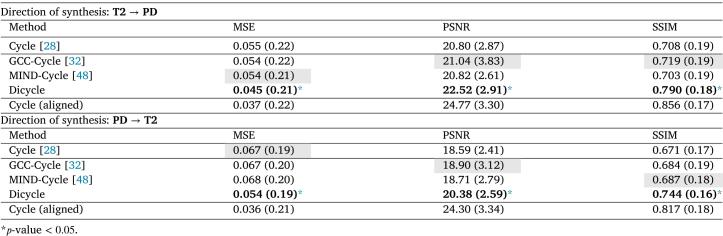

The quantitative evaluation of multi-sequence MR synthesis on the IXI dataset is shown in Table 1, where the best result for each metric is shown in bold and the best baseline method chosen for the paired t-test is highlighted with a gray background. The vanilla CycleGAN trained on paired, registered images (without simulated deformation) gave the best results in terms of PSNR, SSIM and MSE. This is considered the upper bound of synthesis performance. Trained on unpaired data with simulated deformations, the vanilla CycleGAN gave a lower-bound baseline of performance. With additive image alignment losses, GCC-CycleGAN and MIND-CycleGAN improved PSNR. However, because these two models are still affected by the simulated domain-specific deformation, their performance remained comparable to that of the vanilla CycleGAN.

In contrast, the proposed DiCyc model led to at least an 18% reduction in MSE and performance gains of 8% and 12% in PSNR and SSIM on the IXI data. These differences were statistically significant according to the paired t-tests.

Table 1.

Synthesis results on the IXI dataset using deformed T2-weighted images, given as the value of each metric. Standard deviations are shown in parentheses.

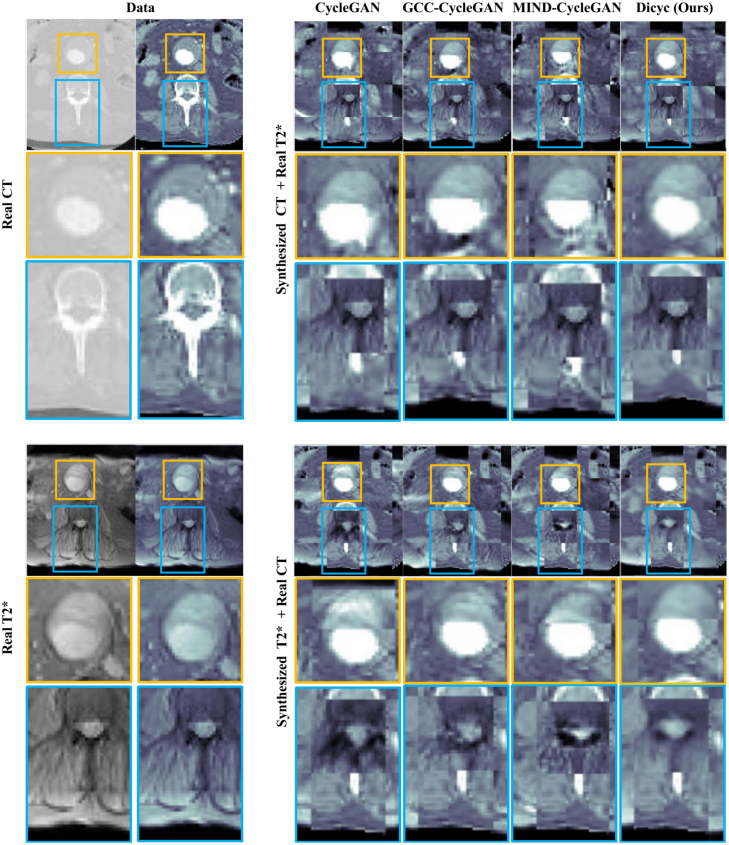

5.2. DiCyc versus CycleGAN-based models on MA3RS data

Table 2 shows the quantitative assessment of the four models using the same metrics as for the IXI data. The vanilla CycleGAN performed slightly better than the GCC- and MIND-CycleGAN models; the only exception is that MIND-CycleGAN obtained a higher PSNR in the “T2*→CT” synthesis. Our DiCyc model outperformed the other three methods on all metrics. Notably, in the “CT→T2*” synthesis, DiCyc led to a 20% improvement in MSE and a 22.8% higher SSIM. Differences between the performance of DiCyc and the best baseline methods were statistically significant.

Table 2.

Multi-modality synthesis results on the private dataset, given as the value of each metric. Standard deviations are shown in parentheses.

The quantitative results in Table 2 are affected both by the quality of the synthesized images and by the alignment between the source and synthesized data. As discussed above, some structures, for example the aorta and spine, can each be affinely registered independently. However, these two structures cannot be affinely aligned at the same time because of domain-specific deformations, which leads to lower PSNR and SSIM and higher MSE within the segmented aorta region.

To better assess the effects of the domain-specific deformation, the synthesis results of the compared baselines and our TPS-based DiCyc model are displayed in Fig. 6 using a checkerboard visualization. As shown in Fig. 6, when the aorta region is affinely aligned, the CycleGAN-based methods either achieved worse alignment in the spine area (for example, the synthesized CT produced by CycleGAN and GCC-CycleGAN, and the synthesized T2*-weighted image given by GCC-CycleGAN) or generated significant artifacts (for example, in the aorta area of the synthesized CT output by CycleGAN and MIND-CycleGAN). Our DiCyc model is the only model whose synthesized images have both the aorta and spine aligned simultaneously. Although its synthesized T2*-weighted images look slightly blurred, DiCyc generated fewer artifacts.
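A checkerboard composite like the one in Fig. 6 interleaves square tiles of two co-registered images, so misalignment shows up as broken edges at tile borders. A minimal NumPy sketch; the tile size is an arbitrary choice and `checkerboard` is a hypothetical helper.

```python
import numpy as np


def checkerboard(a: np.ndarray, b: np.ndarray, tile: int = 16) -> np.ndarray:
    """Compose two same-sized images as alternating tile x tile squares (a on even squares)."""
    if a.shape != b.shape:
        raise ValueError("images must have the same shape")
    yy, xx = np.indices(a.shape[:2])
    use_a = ((yy // tile) + (xx // tile)) % 2 == 0
    if a.ndim == 3:  # broadcast the 2-D pattern over a trailing channel axis
        use_a = use_a[..., None]
    return np.where(use_a, a, b)


source = np.zeros((64, 64))      # stand-in for the source image
synthesized = np.ones((64, 64))  # stand-in for the synthesized image
view = checkerboard(source, synthesized, tile=16)
```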

Fig. 6.

Visualization results on MA3RS data: the source and the associated synthesized images are displayed using a chessboard visualization. The regions of aorta and spine are highlighted by yellow and blue boxes. CycleGAN-based methods tend to reproduce the domain-specific deformation or suffer from significant artifacts. (For interpretation of the references to color in this figure legend, the reader is referred to the web version of this article.)

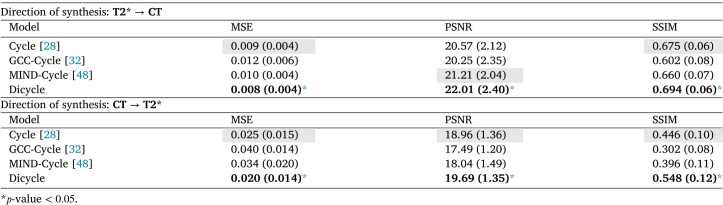

5.3. Ablation study

Fig. 7 presents the synthesized images produced by the ablated models with different alignment losses, and the quantitative results are shown in Table 3. As shown in Fig. 7, all the DiCyc-based models achieved better alignment between the source and synthesized data. This is consistent with Table 3, where in most cases the ablated DiCyc models achieved lower MSE and higher PSNR and SSIM than their CycleGAN-based counterparts. However, using GCC- and MIND-based alignment losses within the DiCyc framework caused a shift of intensities in the synthesized data. The most obvious example is the synthesized T2-weighted image produced by MIND-DiCyc, which looks more like the source PD-weighted data than the target T2-weighted data. As a result, MIND-DiCyc gave higher MSE and lower PSNR values in the “PD→T2” synthesis. By contrast, this intensity shift was not observed in the data synthesized by our proposed NMI-based DiCyc model. The proposed NMI-DiCyc model outperformed the ablated GCC-DiCyc and MIND-DiCyc models, as well as the state-of-the-art CycleGAN-based methods.

Fig. 7.

Visualization of results of ablated models with different image alignment losses. The results were obtained from the IXI dataset with the same simulated deformation applied to the PD-weighted MRI data. The difference image for each method is shown under the synthesis result it generated.

Table 3.

Synthesis results of the ablated models on the IXI dataset, given as the value of each metric. Standard deviations are shown in parentheses.

| Model | MSE | PSNR (dB) | SSIM |
|---|---|---|---|
| Direction of synthesis: PD→T2 | | | |
| GCC-Cycle [32] | 0.054 (0.021) | 21.04 (3.83) | 0.719 (0.19) |
| GCC-Dicycle | 0.047 (0.006)⁎ | 22.17 (3.05)⁎ | 0.840 (0.18)⁎ |
| MIND-Cycle [48] | 0.054 (0.21) | 20.82 (2.61) | 0.703 (0.19) |
| MIND-Dicycle | 0.090 (0.22)⁎ | 18.59 (2.04)⁎ | 0.714 (0.20)⁎ |
| NMI-Cycle | 0.055 (0.22) | 21.03 (3.06) | 0.712 (0.20) |
| Dicycle (NMI) | 0.045 (0.21)⁎ | 22.52 (2.91)⁎ | 0.790 (0.18)⁎ |
| Direction of synthesis: T2→PD | | | |
| GCC-Cycle [32] | 0.067 (0.20) | 18.90 (3.12) | 0.684 (0.19) |
| GCC-Dicycle | 0.054 (0.20)⁎ | 20.42 (3.18)⁎ | 0.740 (0.20)⁎ |
| MIND-Cycle [48] | 0.068 (0.20) | 18.71 (2.79) | 0.687 (0.18) |
| MIND-Dicycle | 0.054 (0.19)⁎ | 20.33 (2.97)⁎ | 0.740 (0.19)⁎ |
| NMI-Cycle | 0.067 (0.21) | 18.76 (3.09) | 0.684 (0.19) |
| Dicycle (NMI) | 0.054 (0.19)⁎ | 20.38 (2.59)⁎ | 0.744 (0.19)⁎ |

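⁎ Statistically significant difference from the CycleGAN-based model with the same alignment loss (paired t-test).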
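Relative gains such as those quoted for Tables 1 and 2 divide the change by the baseline value, with the sign flipped for MSE because lower is better. Applied to the PD→T2 rows of Table 3 (NMI-Cycle as baseline, Dicycle (NMI) as the new model), the hypothetical helper below gives roughly an 18% MSE reduction and gains of about 7% in PSNR and 11% in SSIM. The text does not state exactly how its own percentages were computed, so this is one plausible convention, not the authors' procedure.

```python
def relative_improvement(baseline: float, new: float, lower_is_better: bool = False) -> float:
    """Fractional improvement of `new` over `baseline` (positive means better)."""
    change = (new - baseline) / baseline
    return -change if lower_is_better else change


# PD->T2 rows of Table 3: NMI-Cycle (baseline) vs Dicycle (NMI).
mse_gain = relative_improvement(0.055, 0.045, lower_is_better=True)
psnr_gain = relative_improvement(21.03, 22.52)
ssim_gain = relative_improvement(0.712, 0.790)
print(f"MSE {mse_gain:.1%}, PSNR {psnr_gain:.1%}, SSIM {ssim_gain:.1%}")
```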

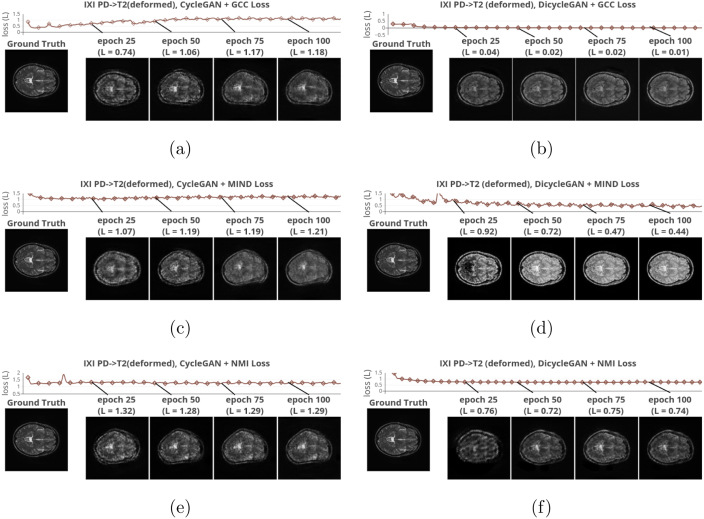

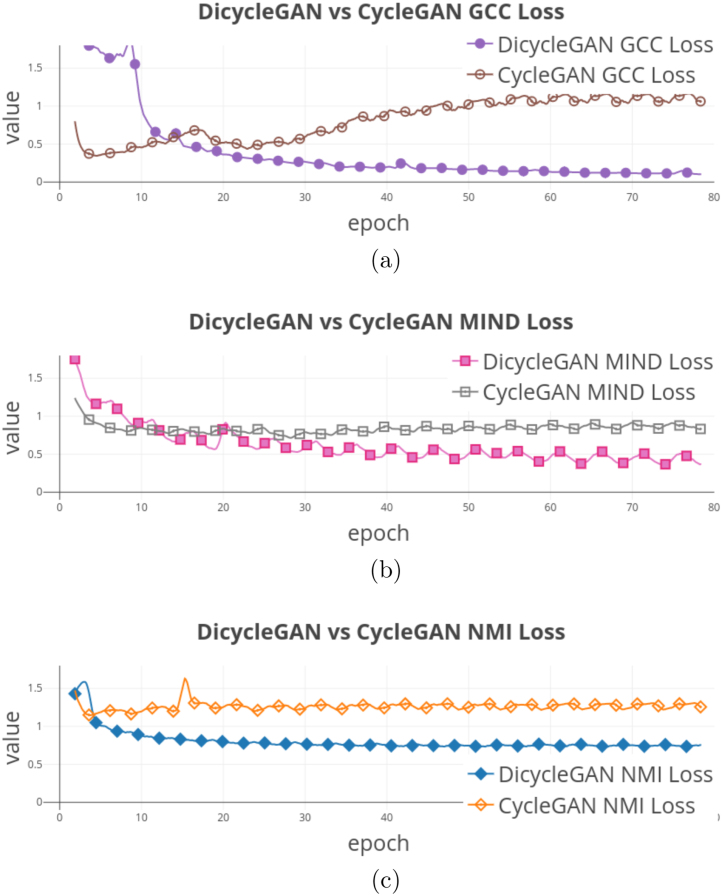

Figs. 8 and 9 show the evolution of the compared image alignment losses and of the synthesis results in the CycleGAN and DiCyc frameworks during training. Comparing the results produced by the CycleGAN-based methods (Figs. 8a, 8c and 8e) with those generated by the DiCyc models (Figs. 8b, 8d and 8f), we can see that the CycleGAN methods achieve good data alignment within the first 20 epochs of training. However, as the training algorithm continues to minimize the CycleGAN losses, the domain-specific deformation is gradually reproduced. Because the DiCyc framework trains the image alignment loss and the CycleGAN loss separately in two forward passes, the relative deformation between the source and target domains is removed. As shown in Figs. 9a, 9b and 9c, in the CycleGAN framework each alignment loss reached a minimum at some point during training and then kept increasing as it began to conflict with the discriminative GAN losses. In our DiCyc framework, the alignment losses kept decreasing throughout training.

Fig. 8.

Evolution of synthesized data during training. Panels 8a to 8f display the loss curves of GCC-CycleGAN [32], GCC-DiCyc, MIND-CycleGAN [48], MIND-DiCyc, NMI-CycleGAN and NMI-DiCyc (proposed), respectively. Synthesized T2-weighted data obtained at the 25th, 50th, 75th and 100th epochs are shown above the curves, compared with the ground truth shown at the bottom right. (Best viewed in color.)

Fig. 9.

Evolution of each alignment loss during training when used in the CycleGAN and DiCyc frameworks: 9a GCC loss, 9b MIND loss, and 9c NMI loss. (Best viewed in color.)

Comparing the results in Figs. 8b, 8d and 8f, we can see that the ablated GCC- and MIND-DiCyc models reproduced the appearance of the PD-weighted data in the synthesized T2-weighted data. This suggests that GCC and MIND are more domain-dependent measures than NMI, even though they are widely used in multi-modality registration. Computationally, however, GCC and MIND are easily vectorized, and their backward passes are easier to implement and less computationally complex.
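The contrast drawn above between correlation-type measures and NMI can be illustrated with two simplified stand-ins, both assumptions rather than the paper's definitions: a plain normalized cross-correlation (NCC) as a representative correlation-based measure (the exact GCC formulation is not reproduced here), and Studholme's histogram-based NMI, (H(A) + H(B)) / H(A, B), which is not necessarily Eq. (19). NCC reduces to a few vectorized sums, whereas NMI needs a joint histogram; this histogram version is also not differentiable, so a training loss would need a differentiable density estimate instead. On a synthetic contrast inversion, NCC reports -1 while NMI stays near its maximum of 2, showing how a correlation-based measure depends on the intensity relationship between domains.

```python
import numpy as np


def normalized_cross_correlation(a, b, eps=1e-12):
    """Pearson correlation of all pixel intensities: a few fully vectorized sums."""
    a = a.astype(float).ravel()
    b = b.astype(float).ravel()
    a = a - a.mean()
    b = b - b.mean()
    return float(np.sum(a * b) / (np.sqrt(np.sum(a * a) * np.sum(b * b)) + eps))


def normalized_mutual_information(a, b, bins=32):
    """Studholme's NMI, (H(A) + H(B)) / H(A, B), estimated from a joint intensity histogram."""
    joint, _, _ = np.histogram2d(a.ravel(), b.ravel(), bins=bins)
    p_ab = joint / joint.sum()
    p_a, p_b = p_ab.sum(axis=1), p_ab.sum(axis=0)

    def entropy(p):
        p = p[p > 0]
        return float(-np.sum(p * np.log(p)))

    return (entropy(p_a) + entropy(p_b)) / entropy(p_ab)


rng = np.random.default_rng(0)
img = rng.random((128, 128))
inverted = 1.0 - img            # a contrast inversion, as between some modalities
noise = rng.random((128, 128))  # an unrelated image
print(normalized_cross_correlation(img, inverted), normalized_mutual_information(img, inverted))
print(normalized_cross_correlation(img, noise), normalized_mutual_information(img, noise))
```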

5.4. Model complexity

For the CycleGAN-based baselines compared above, each generator network has 34.52M trainable parameters and each discriminator network has 2.76M. As a result, during training a CycleGAN-based model has 74.56M trainable parameters, and each forward pass on 128 × 128 image data consists of 37.98G multiply-add operations (MACs).⁸ For our DiCyc model, the local and global transformation modules introduce 8.15M and 4.31M trainable parameters, respectively, and each forward pass consists of 66.36G MACs. As a result, training a DiCyc model takes 75% more time and 33% more memory. However, once trained, the synthesized images are predicted by the image generator alone, without the global and local deformation modules. In other words, at test time the proposed DiCyc model has the same time and space complexity as the CycleGAN-based methods (34.52M trained parameters, 18.24G MACs per forward pass).
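The training-time figures above follow from simple arithmetic on the quoted numbers. The sketch reproduces the 74.56M total (a CycleGAN trains two generators and two discriminators) and shows that the ratio of training MACs, about 1.75, is in line with the reported 75% increase in training time; reading the time increase as driven by MACs is an interpretation, not a statement from the text. The GFLOPs conversion uses the rule of thumb in the footnote.

```python
# Figures quoted in Section 5.4 (M = millions of parameters, G = 10^9 MACs).
GENERATOR_PARAMS_M = 34.52
DISCRIMINATOR_PARAMS_M = 2.76
CYCLEGAN_TRAIN_MACS_G = 37.98
DICYC_TRAIN_MACS_G = 66.36
GENERATOR_TEST_MACS_G = 18.24

# A CycleGAN trains two generators and two discriminators.
train_params_m = 2 * GENERATOR_PARAMS_M + 2 * DISCRIMINATOR_PARAMS_M
mac_ratio = DICYC_TRAIN_MACS_G / CYCLEGAN_TRAIN_MACS_G
# Rule of thumb from the footnote: 1 GMAC is roughly 2 GFLOPs.
test_gflops = 2 * GENERATOR_TEST_MACS_G

print(f"{train_params_m:.2f}M parameters, MAC ratio {mac_ratio:.2f}, "
      f"~{test_gflops:.2f} GFLOPs per test-time forward pass")
```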

6. Conclusion

We introduced DiCyc, a cross-domain medical image synthesis model that is resilient to domain-specific deformations. We integrated a modified deformable convolutional layer into the network architecture and proposed the associated deformation-invariant cycle-consistency loss and an NMI-based alignment loss function. Experiments were performed on the synthesis of multi-sequence MRI data with simulated deformations and of multi-modality CT and MRI data affected by real domain-specific deformations. We compared our method with the vanilla CycleGAN and two state-of-the-art methods with additional alignment losses. DiCyc achieved better alignment between the source and synthesized data while maintaining the signal quality of the synthesized data, and it outperformed the state-of-the-art methods. To reveal how DiCyc separately encodes spatial deformation information during synthesis, we also performed an ablation study that integrated popular image similarity metrics into DiCyc and compared the resulting models with their CycleGAN-based counterparts. The study shows that DiCyc avoids the conflict between the CycleGAN loss and the image alignment losses. Our NMI-based image alignment loss also showed better robustness for synthesizing images from different domains.

CRediT authorship contribution statement

Chengjia Wang: Conceptualization, Methodology, Software, Data curation, Writing - original draft, Visualization, Investigation, Writing - review & editing. Guang Yang: Conceptualization, Investigation, Visualization, Writing - review & editing, Validation. Giorgos Papanastasiou: Methodology, Data curation, Writing - review & editing, Software, Investigation, Validation. Sotirios A. Tsaftaris: Methodology, Validation, Writing - review & editing. David E. Newby: Supervision, Validation. Calum Gray: Software, Data curation, Visualization, Methodology. Gillian Macnaught: Conceptualization, Data curation, Validation, Software, Writing - review & editing, Supervision. Tom J. MacGillivray: Conceptualization, Investigation, Validation, Software, Writing - review & editing, Supervision.

Declaration of Competing Interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Acknowledgments

This work is funded by the British Heart Foundation, UK (no. RG/16/10/32375). D.E. Newby is supported by the British Heart Foundation (CH/09/002, RG/16/10/32375, RE/18/5/34216) and is the recipient of a Wellcome Trust Senior Investigator Award (WT103782AIA). G. Yang is supported by IIAT Hangzhou, the British Heart Foundation (Grant Number: PG/16/78/32402), the European Research Council Innovative Medicines Initiative on Development of Therapeutics and Diagnostics Combatting Coronavirus Infections Award (H2020-JTI-IMI2 101005122), and the AI for Health Imaging Award (H2020-SC1-FA-DTS-2019-1 952172). S.A. Tsaftaris and G. Papanastasiou acknowledge support from the EPSRC, UK Grant (EP/P022928/1). S.A. Tsaftaris acknowledges the support of the Royal Academy of Engineering, UK and the Research Chairs and Senior Research Fellowships scheme.

Footnotes

This is also referred to as “image translation” in computer vision.

Here we set and .

The affine transformations are randomly generated from translation, rotation, scaling and shearing, plus random flipping with a probability of 0.2. This setup ensures that the affine transformation does not move a significant amount of the imaged object out of the field of view, so that quantitatively assessable results can be obtained.

1G multiply-add operations (MACs) correspond to roughly 2G floating-point operations (FLOPs). Results were obtained using the ptflops package at https://github.com/sovrasov/flops-counter.pytorch and the torchsummary package at https://github.com/sksq96/pytorch-summary.

References

1. van Tulder G., de Bruijne M. International Conference on Medical Image Computing and Computer-Assisted Intervention. Springer; 2015. Why does synthesized data improve multi-sequence classification? pp. 531–538.

2. Dalca A.V., Bouman K.L., Freeman W.T., Rost N.S., Sabuncu M.R., Golland P. Medical image imputation from image collections. IEEE Trans. Med. Imaging. 2018. doi: 10.1109/TMI.2018.2866692.

3. Eilertsen K., Nilsen Tor Arne Vestad L., Geier O., Skretting A. A simulation of MRI based dose calculations on the basis of radiotherapy planning CT images. Acta Oncol. 2008;47:1294–1302. doi: 10.1080/02841860802256426.

4. Iglesias J.E., Konukoglu E., Zikic D., Glocker B., Van Leemput K., Fischl B. International Conference on Medical Image Computing and Computer-Assisted Intervention. Springer; 2013. Is synthesizing MRI contrast useful for inter-modality analysis? pp. 631–638.

5. Du J., Li W., Lu K., Xiao B. An overview of multi-modal medical image fusion. Neurocomputing. 2016;215:3–20.

6. He Q., Li X., Kim D.N., Jia X., Gu X., Zhen X., Zhou L. Feasibility study of a multi-criteria decision-making based hierarchical model for multi-modality feature and multi-classifier fusion: Applications in medical prognosis prediction. Inf. Fusion. 2020;55:207–219.

7. Wang K., Zheng M., Wei H., Qi G., Li Y. Multi-modality medical image fusion using convolutional neural network and contrast pyramid. Sensors. 2020;20:2169. doi: 10.3390/s20082169.

8. Roy S., Carass A., Prince J. Biennial International Conference on Information Processing in Medical Imaging. Springer; 2011. A compressed sensing approach for MR tissue contrast synthesis; pp. 371–383.

9. Chartsias A., Joyce T., Papanastasiou G., Semple S., Williams M., Newby D., Dharmakumar R., Tsaftaris S.A. International Conference on Medical Image Computing and Computer-Assisted Intervention. Springer; 2018. Factorised spatial representation learning: application in semi-supervised myocardial segmentation; pp. 490–498.

10. Li L., Zhao X., Lu W., Tan S. Deep learning for variational multimodality tumor segmentation in PET/CT. Neurocomputing. 2020;392:277–295. doi: 10.1016/j.neucom.2018.10.099.

11. Cordier N., Delingette H., Lê M., Ayache N. Extended modality propagation: image synthesis of pathological cases. IEEE Trans. Med. Imaging. 2016;35:2598–2608. doi: 10.1109/TMI.2016.2589760.

12. Commowick O., Warfield S.K., Malandain G. International Conference on Medical Image Computing and Computer-Assisted Intervention. Springer; 2009. Using Frankenstein's creature paradigm to build a patient specific atlas; pp. 993–1000.

13. Li R., Zhang W., Suk H.-I., Wang L., Li J., Shen D., Ji S. International Conference on Medical Image Computing and Computer-Assisted Intervention. Springer; 2014. Deep learning based imaging data completion for improved brain disease diagnosis; pp. 305–312.

14. Zhou T., Thung K.-H., Liu M., Shi F., Zhang C., Shen D. Multi-modal latent space inducing ensemble SVM classifier for early dementia diagnosis with neuroimaging data. Med. Image Anal. 2020;60. doi: 10.1016/j.media.2019.101630.

15. Wagenknecht G., Kaiser H.-J., Mottaghy F.M., Herzog H. MRI for attenuation correction in PET: methods and challenges. Magn. Reson. Mater. Phys. Biol. Med. 2013;26:99–113. doi: 10.1007/s10334-012-0353-4.

16. Torrado-Carvajal A., Herraiz J.L., Alcain E., Montemayor A.S., Garcia-Cañamaque L., Hernandez-Tamames J.A., Rozenholc Y., Malpica N. Fast patch-based pseudo-CT synthesis from T1-weighted MR images for PET/MR attenuation correction in brain studies. J. Nucl. Med. 2016;57:136–143. doi: 10.2967/jnumed.115.156299.

17. Burgos N., Cardoso M.J., Thielemans K., Modat M., Pedemonte S., Dickson J., Barnes A., Ahmed R., Mahoney C.J., Schott J.M. Attenuation correction synthesis for hybrid PET-MR scanners: application to brain studies. IEEE Trans. Med. Imaging. 2014;33:2332–2341. doi: 10.1109/TMI.2014.2340135.

18. Gong K., Yang J., Kim K., El Fakhri G., Seo Y., Li Q. Attenuation correction for brain PET imaging using deep neural network based on Dixon and ZTE MR images. Phys. Med. Biol. 2018. doi: 10.1088/1361-6560/aac763.

19. Roy S., Wang W.-T., Carass A., Prince J.L., Butman J.A., Pham D.L. PET attenuation correction using synthetic CT from ultrashort echo-time MR imaging. J. Nucl. Med. 2014;55:2071–2077. doi: 10.2967/jnumed.114.143958.

20. Leynes A.P., Yang J., Wiesinger F., Kaushik S.S., Shanbhag D.D., Seo Y., Hope T.A., Larson P.E. Zero-echo-time and Dixon deep pseudo-CT (ZeDD CT): direct generation of pseudo-CT images for pelvic PET/MRI attenuation correction using deep convolutional neural networks with multiparametric MRI. J. Nucl. Med. 2018;59:852–858. doi: 10.2967/jnumed.117.198051.

- 21.Bowles C., Qin C., Ledig C., Guerrero R., Gunn R., Hammers A., Sakka E., Dickie D.A., Hernández M.V., Royle N. International Workshop on Simulation and Synthesis in Medical Imaging. Springer; 2016. Pseudo-healthy image synthesis for white matter lesion segmentation; pp. 87–96. [Google Scholar]

- 22.Chartsias A., Joyce T., Giuffrida M.V., Tsaftaris S.A. Multimodal MR synthesis via modality-invariant latent representation. IEEE Trans. Med. Imaging. 2017 doi: 10.1109/TMI.2017.2764326. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 23.Cohen J.P., Luck M., Honari S. International Conference on Medical Image Computing and Computer-Assisted Intervention. Springer; 2018. Distribution matching losses can hallucinate features in medical image translation; pp. 529–536. [Google Scholar]

- 24.Johansson A., Garpebring A., Asklund T., Nyholm T. CT substitutes derived from MR images reconstructed with parallel imaging. Med. Phys. 2014;41 doi: 10.1118/1.4886766. [DOI] [PubMed] [Google Scholar]

- 25.Joyce T., Chartsias A., Tsaftaris S.A. International Conference on Medical Image Computing and Computer-Assisted Intervention. Springer; 2017. Robust multi-modal mr image synthesis; pp. 347–355. [Google Scholar]

- 26.Nie D., Trullo R., Lian J., Petitjean C., Ruan S., Wang Q., Shen D. International Conference on Medical Image Computing and Computer-Assisted Intervention. Springer; 2017. Medical image synthesis with context-aware generative adversarial networks; pp. 417–425. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 27.Van Nguyen H., Zhou K., Vemulapalli R. International Conference on Medical Image Computing and Computer-Assisted Intervention. Springer; 2015. Cross-domain synthesis of medical images using efficient location-sensitive deep network; pp. 677–684. [Google Scholar]

- 28.Wolterink J.M., Dinkla A.M., Savenije M.H., Seevinck P.R., van den Berg C.A., Išgum I. International Workshop on Simulation and Synthesis in Medical Imaging. Springer; 2017. Deep MR to CT synthesis using unpaired data; pp. 14–23. [Google Scholar]

- 29.Nie D., Trullo R., Lian J., Wang L., Petitjean C., Ruan S., Wang Q., Shen D. Medical image synthesis with deep convolutional adversarial networks. IEEE Trans. Biomed. Eng. 2018;65:2720–2730. doi: 10.1109/TBME.2018.2814538. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 30.Huo Y., Xu Z., Bao S., Assad A., Abramson R.G., Landman B.A. Biomedical Imaging (ISBI 2018), 2018 IEEE 15th International Symposium on. IEEE; 2018. Adversarial synthesis learning enables segmentation without target modality ground truth; pp. 1217–1220. [Google Scholar]

- 31.Chartsias A., Joyce T., Dharmakumar R., Tsaftaris S.A. International Workshop on Simulation and Synthesis in Medical Imaging. Springer; 2017. Adversarial image synthesis for unpaired multi-modal cardiac data; pp. 3–13. [Google Scholar]

- 32.Hiasa Y., Otake Y., Takao M., Matsuoka T., Takashima K., Carass A., Prince J.L., Sugano N., Sato Y. International Workshop on Simulation and Synthesis in Medical Imaging. Springer; 2018. Cross-modality image synthesis from unpaired data using cyclegan; pp. 31–41. [Google Scholar]

- 33.Costa P., Galdran A., Meyer M.I., Niemeijer M., Abràmoff M., Mendonça A.M., Campilho A. End-to-end adversarial retinal image synthesis. IEEE Trans. Med. Imaging. 2017;37(3):781–791. doi: 10.1109/TMI.2017.2759102. [DOI] [PubMed] [Google Scholar]

- 34.R. Vemulapalli, H. Van Nguyen, S. Kevin Zhou, Unsupervised cross-modal synthesis of subject-specific scans, in: Proceedings of the IEEE International Conference on Computer Vision, 2015, pp. 630–638.

- 35.Goodfellow I., Pouget-Abadie J., Mirza M., Xu B., Warde-Farley D., Ozair S., Courville A., Bengio Y. Advances in Neural Information Processing Systems. 2014. Generative adversarial nets; pp. 2672–2680. [Google Scholar]

- 36.Lan L., You L., Zhang Z., Fan Z., Zhao W., Zeng N., Chen Y., Zhou X. Generative adversarial networks and its applications in biomedical informatics. Front. Public Health. 2020;8:164. doi: 10.3389/fpubh.2020.00164. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 37.J.-Y. Zhu, T. Park, P. Isola, A.A. Efros, Unpaired image-to-image translation using cycle-consistent adversarial networks, in: Proceedings of the IEEE International Conference on Computer Vision, 2017, pp. 2223–2232.

- 38.Iglesias J.E., Modat M., Peter L., Stevens A., Annunziata R., Vercauteren T., Lein E., Fischl B., Ourselin S., Initiative A.D.N. Joint registration and synthesis using a probabilistic model for alignment of MRI and histological sections. Med. Image Anal. 2018;50:127–144. doi: 10.1016/j.media.2018.09.002. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 39.Jog A., Carass A., Roy S., Pham D.L., Prince J.L. Random forest regression for magnetic resonance image synthesis. Med. Image Anal. 2017;35:475–488. doi: 10.1016/j.media.2016.08.009. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 40.Martinez-Murcia F.J., Górriz J.M., Ramírez J., Illán I.A., Segovia F., Castillo-Barnes D., Salas-Gonzalez D. Functional brain imaging synthesis based on image decomposition and kernel modeling: Application to neurodegenerative diseases. Front. Neuroinform. 2017;11:65. doi: 10.3389/fninf.2017.00065. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 41.Ye D.H., Zikic D., Glocker B., Criminisi A., Konukoglu E. International Conference on Medical Image Computing and Computer-Assisted Intervention. Springer; 2013. Modality propagation: coherent synthesis of subject-specific scans with data-driven regularization; pp. 606–613. [DOI] [PubMed] [Google Scholar]

- 42.Jog A., Roy S., Carass A., Prince J.L. Biomedical Imaging (ISBI), 2013 IEEE 10th International Symposium on. IEEE; 2013. Magnetic resonance image synthesis through patch regression; pp. 350–353. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 43. Jog A., Carass A., Roy S., Pham D.L., Prince J.L. MR image synthesis by contrast learning on neighborhood ensembles. Med. Image Anal. 2015;24(1):63–76. doi: 10.1016/j.media.2015.05.002.

- 44. Bayramoglu N., Kaakinen M., Eklund L., Heikkila J. Towards virtual H&E staining of hyperspectral lung histology images using conditional generative adversarial networks. In: Proceedings of the IEEE International Conference on Computer Vision Workshops. 2017. pp. 64–71.

- 45. Huang Y., Shao L., Frangi A.F. Cross-modality image synthesis via weakly coupled and geometry co-regularized joint dictionary learning. IEEE Trans. Med. Imaging. 2018;37(3):815–827. doi: 10.1109/TMI.2017.2781192.

- 46. Zhu Y., Tang Y., Tang Y., Elton D.C., Lee S., Pickhardt P.J., Summers R.M. Cross-domain medical image translation by shared latent Gaussian mixture model. arXiv preprint arXiv:2007.07230. 2020.

- 47. Liu M.-Y., Breuel T., Kautz J. Unsupervised image-to-image translation networks. In: Guyon I., Luxburg U.V., Bengio S., Wallach H., Fergus R., Vishwanathan S., Garnett R., editors. Advances in Neural Information Processing Systems 30. Curran Associates, Inc.; 2017. pp. 700–708.

- 48. Yang H., Sun J., Carass A., Zhao C., Lee J., Xu Z., Prince J. Unpaired brain MR-to-CT synthesis using a structure-constrained CycleGAN. In: Deep Learning in Medical Image Analysis and Multimodal Learning for Clinical Decision Support. Springer; 2018. pp. 174–182.

- 49. Heinrich M.P., Jenkinson M., Bhushan M., Matin T., Gleeson F.V., Brady M., Schnabel J.A. MIND: Modality independent neighbourhood descriptor for multi-modal deformable registration. Med. Image Anal. 2012;16(7):1423–1435. doi: 10.1016/j.media.2012.05.008.

- 50. Wang C., Papanastasiou G., Tsaftaris S., Yang G., Gray C., Newby D., Macnaught G., MacGillivray T. TPSDicyc: Improved deformation invariant cross-domain medical image synthesis. In: International Workshop on Machine Learning for Medical Image Reconstruction. Springer; 2019. pp. 245–254.

- 51. Qin C., Shi B., Liao R., Mansi T., Rueckert D., Kamen A. Unsupervised deformable registration for multi-modal images via disentangled representations. In: International Conference on Information Processing in Medical Imaging. Springer; 2019. pp. 249–261.

- 52. Chartsias A., Joyce T., Papanastasiou G., Semple S., Williams M., Newby D.E., Dharmakumar R., Tsaftaris S.A. Disentangled representation learning in cardiac image analysis. Med. Image Anal. 2019;58. doi: 10.1016/j.media.2019.101535.

- 53. Kent J., Mardia K. The link between kriging and thin-plate splines. 1994.

- 54. Dai J., Qi H., Xiong Y., Li Y., Zhang G., Hu H., Wei Y. Deformable convolutional networks. CoRR abs/1703.06211. 2017.

- 55. Chen X., Duan Y., Houthooft R., Schulman J., Sutskever I., Abbeel P. InfoGAN: Interpretable representation learning by information maximizing generative adversarial nets. In: Advances in Neural Information Processing Systems. 2016. pp. 2172–2180.

- 56. Vinh N.X., Epps J., Bailey J. Information theoretic measures for clusterings comparison: Variants, properties, normalization and correction for chance. J. Mach. Learn. Res. 2010;11(Oct):2837–2854.

- 57. Newby D., Forsythe R., McBride O., Robson J., Vesey A., Chalmers R., Burns P., Garden O.J., Semple S. Aortic wall inflammation predicts abdominal aortic aneurysm expansion, rupture, and need for surgical repair. Circulation. 2017;136(9):787–797. doi: 10.1161/CIRCULATIONAHA.117.028433.

- 58. Hore A., Ziou D. Image quality metrics: PSNR vs. SSIM. In: 2010 20th International Conference on Pattern Recognition (ICPR). IEEE; 2010. pp. 2366–2369.

- 59. Papanastasiou G., González-Castro V., Gray C., Forsythe R., Sourgia-Koutraki Y., Mitchard N., Newby D.E., Semple S. Multidimensional assessments of abdominal aortic aneurysms by magnetic resonance against ultrasound diameter measurements. In: Annual Conference on Medical Image Understanding and Analysis. Springer; 2017. pp. 133–143.

- 60. Isola P., Zhu J.-Y., Zhou T., Efros A.A. Image-to-image translation with conditional adversarial networks. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2017. pp. 1125–1134.

- 61. Kingma D.P., Ba J. Adam: A method for stochastic optimization. In: International Conference on Learning Representations (ICLR). 2015.