Abstract

Simple Summary

Cat–human communication is a core aspect of cat–human relationships and has an impact on domestic cats’ welfare. Meows are the most common human-directed vocalizations and are used in different everyday contexts to convey emotional states. This work investigates adult humans’ capacity to recognize meows emitted by cats during waiting for food, isolation, and brushing. We also assessed whether participants’ gender and level of empathy toward animals in general, and toward cats in particular, positively affect the recognition of cat meows. Participants were asked to complete an online questionnaire designed to assess their knowledge of cats and to evaluate their empathy toward animals. In addition, they listened to cat meows recorded in different situations and tried to identify the context in which they were emitted and their emotional valence. Overall, we found that, although meowing is mainly a human-directed vocalization and should represent a useful tool for cats to communicate emotional states to their owners, humans are not good at extracting precise information from cats’ vocalizations and show a limited capacity of discrimination based mainly on their experience with cats and influenced by gender and empathy toward them.

Abstract

Although the domestic cat (Felis catus) is probably the most widespread companion animal in the world and interacts in a complex and multifaceted way with humans, the human–cat relationship and reciprocal communication have received far less attention compared, for example, to the human–dog relationship. Only a limited number of studies have considered what people understand of cats’ human-directed vocal signals during daily cat–owner interactions. The aim of the current study was to investigate to what extent adult humans recognize cat vocalizations, namely meows, emitted in three different contexts: waiting for food, isolation, and brushing. A second aim was to evaluate whether the level of human empathy toward animals and cats and the participant’s gender would positively influence the recognition of cat vocalizations. Finally, some insights on which acoustic features are relevant for the main investigation are provided as a serendipitous result. Two hundred twenty-five adult participants were asked to complete an online questionnaire designed to assess their knowledge of cats and to evaluate their empathy toward animals (Animal Empathy Scale). In addition, participants had to listen to six cat meows recorded in three different contexts and specify the context in which they were emitted and their emotional valence. Less than half of the participants were able to associate cats’ vocalizations with the correct context in which they were emitted; the best recognized meow was that emitted while waiting for food. Female participants and cat owners showed a higher ability to correctly classify the vocalizations emitted by cats during brushing and isolation. A high level of empathy toward cats was significantly associated with a better recognition of meows emitted during isolation. 
Regarding the emotional valence of meows, it emerged that cat vocalizations emitted during isolation are perceived by people as the most negative, whereas those emitted during brushing are perceived as most positive. Overall, it emerged that, although meowing is mainly a human-directed vocalization and in principle represents a useful tool for cats to communicate emotional states to their owners, humans are not particularly able to extract precise information from cats’ vocalizations and show a limited capacity of discrimination based mainly on their experience with cats and influenced by empathy toward them.

Keywords: domestic cat, Felis catus, cat–human communication, meow, empathy, questionnaire

1. Introduction

Over the past two decades, scientific interest in the human–animal relationship and interaction has rapidly grown, leading to a large body of literature on both theoretical and practical aspects of this interspecific relationship. In particular, studies on domestic species have increased considerably, providing insight into the physiological, ethological, psychological, and sociocultural aspects of the multifaceted relationship between humans and nonhuman species [1,2,3,4,5,6].

Domestic species are considered interesting models for investigating interspecific relationships and communication since domestication, artificial selection, and close coexistence with humans have shaped their behavior and sociocognitive abilities, favoring the emergence of interspecific relationships based on mutual understanding, effective communication, and emotional connection (e.g., [7,8,9]). Even though the dog (Canis familiaris) is regarded as the archetype of a “companion animal” among the domestic species due to its unique sociocognitive and communicative abilities [3], a growing number of studies show that other domestic animals also have sociocommunicative abilities that allow them to interact and communicate with humans. For example, like dogs, domestic cats (Felis catus) [10,11], horses (Equus caballus) [12,13], goats (Capra hircus) [14], pigs (Sus scrofa domesticus) [15,16], and ferrets (Mustela furo) [17] are sensitive and respond to some humans’ communicative cues. In addition, cats [11], pigs [16], and horses [18,19] use communicative cues to manipulate the attention/behavior of a human recipient to obtain an unreachable resource. Last but not least, there is evidence that cats [20,21,22], horses [23,24], and goats [25] are able to recognize and respond to human emotional expressions.

Dogs and cats are the two most common nonhuman animals with which we interact. They have a long history of domestication and close association with humans [26,27], are beloved companion animals living in the human household, and are widely viewed as important social partners by their owners [28]. In some countries, cats are rapidly becoming extremely popular domestic animals not just for practical reasons, but also thanks to their flexibility in adapting to human environments and to their capacity to communicate in a complex way with humans, forming well-established relationships with them [29,30,31]. Unlike their wild ancestors (Felis silvestris), domestic cats are often described as social [32,33], as they show certain social interactions in particular circumstances (for example, around an abundant food source), and have sociocognitive and communicative abilities probably developed to maintain social groups [34,35] and to manage different social interactions with humans as well as other pets [2,36,37].

Although research on domestic cat behavior and cognition is growing [37], cat cognitive and communicative skills have been far less investigated than those of dogs, and the literature on the cat–human relationship and communication is more sparse and limited [10,38]. Only a handful of studies have investigated cat vocalizations and the characteristics of cat–human communication [39,40,41,42,43] and little is known about the human ability to recognize and classify the context and the possible emotional content of cat-to-human vocalizations [44,45,46]. However, understanding the extent of the effectiveness of the reciprocal communication between cats and humans is not only theoretically interesting but also relevant for cat welfare, since cats, like dogs, live in close contact with their human social partners and depend on them for health, care, and affection.

Domestic cats have a wide and complex vocal repertoire; it includes several different vocalizations that are emitted in different contexts and carry information about internal states and emotions, allowing pet cats to communicate with humans [2,42]. Among cat vocalizations, meows appear to be highly modulated by the context of emission, with meows produced in positive contexts differing in their pitch, duration, and melody from meows produced in negative contexts [41,42,47]. Meow vocalizations are particularly interesting for a number of reasons:

they appear to be rare in cat-to-cat interaction and in cat colonies [40], but they are typical of cat–human interactions [34,35,42];

undomesticated felids rarely meow to humans in adulthood [48];

meows emitted by feral cats and by cats raised in the human household show differences in their acoustic parameters [40], suggesting they are shaped by the close relationship with humans;

it has been suggested that the meow could be a product of domestication and socialization with humans, with a less relevant role in intraspecific communication [49,50].

Despite the fact that various studies showed that humans can correctly classify the vocalizations of different species according to their context of emission and emotional content (e.g., chimpanzees [51], pigs [52], dogs [53], and cats [44]), meows have been largely overlooked, and the few available studies have produced contrasting results [41,44,46,54].

Therefore, the main aim of the current study was to further investigate to what extent humans recognize meows emitted in three different familiar contexts (i.e., waiting for food, isolation, and brushing) that elicit different behaviors [55] and are supposed to trigger different emotional states (positive or negative).

Two previous studies [44,46] found that human classification of context-specific domestic cat meow vocalizations seems to be relatively poor. In particular, Nicastro and Owren [44] reported that humans’ classification accuracy of unfamiliar cat meows was just modestly above chance and that experience with cats had a positive effect on context classification of single calls. In addition, they reported a slight positive effect of experience and affinity for cats on the classification of the affective valence. Conversely, Ellis et al. [46] found that the classification of cat meows in different contexts was above chance only when the vocalizing cat was the owned cat and not when the vocalizations belonged to an unfamiliar cat. In another study, Schötz and van de Weijer [41] found that human listeners’ ability to classify cat meows recorded either during feeding time or while waiting at a vet clinic was significantly above chance, and listeners with cat experience performed significantly better than naive listeners. Finally, Belin et al. [54] reported that humans failed to recognize the emotional valence of cat meows recorded in affective contexts of positive or negative emotional valence (food-related and affiliative vs. agonistic and distress contexts).

The role of experience emerges in a number of studies (cats: [45]; pigs: [52]; different species: [51]). McComb and colleagues [45] found that participants with no experience of owning cats judged purrs emitted while cats were actively seeking food (i.e., solicitation purrs) as more urgent and less pleasant than those emitted in other contexts (nonsolicitation purrs). However, individuals who had owned a cat performed significantly better than nonowners. Similarly, Tallet and colleagues [52] reported that people with no experience of pigs were able to classify the context and detect the emotional content of piglet vocalizations; however, ethologists and farmers were more skilled in discriminating different emotions than naive people, and the type of experience influenced the judgment of the emotional intensity of piglets’ vocalizations. Finally, Scheumann and colleagues [51] found that, in order to recognize emotions from human, chimpanzee, dog, and tree shrew vocalizations, human listeners had to be familiar not only with the species but also with the specific sound evoked by a given context. Thus, the second purpose of the present study was to further explore the effect of experience on the identification of the context of cats’ meows, and also to evaluate the potential role of empathy and gender.

From a psychological perspective, empathy refers to the ability to perceive, understand, and share another individual’s emotional state [56,57], whereas from an evolutionary perspective empathy’s purposes are to promote prosocial, cooperative behavior and to understand or predict the behavior of others [58]. Studies on humans show that the ability to recognize the emotional states of others from vocal and/or visual cues appears to be positively influenced by both empathy and gender. More empathic individuals are more accurate in recognizing others’ emotional states [59,60,61]. Moreover, there is evidence that women are more empathic [62] and more skilled in recognizing emotions than men [63,64,65]. In the field of human–animal interaction, the link between empathy toward animals and the capacity to recognize other species’ emotional states from visual/vocal cues has been poorly investigated so far [66,67]. Furthermore, although gender differences have been reported in many studies of human–animal interactions (e.g., [4]), very little is known about potential gender differences in the capacity to recognize other species’ emotional states [44,52]. Empathy toward animals seems to be a good predictor of how dog owners and vets rate pain in dogs [66] and cattle [67]. In their study on the human ability to recognize piglets’ emotional vocalizations, Tallet et al. [52] hypothesized that ethologists performed better than farmers and naive people in identifying the context in which piglets’ vocalizations were emitted, and assigned them a more negative valence than did farmers, because they were more empathic, since they are usually interested in animal welfare. They also reported a small gender difference in the evaluation of piglets’ vocalizations. Similarly, Nicastro and Owren [44] found a significant effect of “affinity” for cats in general on the classification of the production context of single calls, and a gender difference that approached but did not reach statistical significance.

In the current study, adult human participants were asked to listen to audio recordings of single meows recorded in the home environment from 10 cats of the same breed (Maine Coon) belonging to a single private owner and thus sharing similar environmental conditions. Participants were asked to complete an online questionnaire assessing their knowledge of cats and their empathy toward animals and cats. They were also asked to listen to cat meows recorded in different familiar contexts and to specify the context in which the vocalizations were emitted and their emotional valence. Based on the available literature, we hypothesized that human participants should be able to classify meows recorded in the different behavioral contexts; we also expected them to recognize, at least to some extent, the emotional valence of the meowing cat (positive vs. negative). Moreover, we hypothesized that experience with cats, empathy toward animals and/or cats, and gender would facilitate participants’ performance in the classification task.

2. Materials and Methods

2.1. Participants

Two hundred and twenty-five adults (79 men and 146 women) ranging in age between 18 and 70 years participated in the study. Participants were recruited through personal contacts, word of mouth, and by advertising the study on the researchers’ Facebook pages; therefore, all participants were volunteers with different levels of experience with pets and with cats in particular. Potential participants were told that “the purpose of the study was to investigate humans’ understanding of cats’ vocalizations and that they would have to fill in an online questionnaire and to listen to a number of cats’ vocalizations”.

2.2. Cat Vocalizations

In the context of an interdisciplinary project involving the departments of Pathophysiology and Transplantation, Veterinary Medicine, Agricultural and Environmental Science, and Computer Science of the University of Milan, a dataset called “CatMeows” was created. This dataset, publicly available on Zenodo [68] and described in detail in [69], is composed of sounds obtained from two cat breeds: European Shorthair and Maine Coon.

In the present work, we focused only on the 10 Maine Coon cats: meows were recorded from six females and four males, ranging in age between 1 and 13 years. Three males and three females were neutered. All cats belonged to a single private owner, and thus shared similar environmental conditions. All cats were fed ad libitum with “Royal Canin Sensible” dry cat food and twice a day with “Cosma Nature” canned cat food (at about 7:00 a.m. and 7:00 p.m.). All cats had been brushed monthly since kittenhood in order to maintain healthy fur conditions. All the subjects had been used to the pet carrier since kittenhood and entered it spontaneously when it was open. They were also used to being transported outside the home by car (inside the pet carrier) about once a year to go to the vet or during holidays. Each cat was exposed for five minutes, in a random order, to three different situations that normally occur in the life of a cat, in order to stimulate the production of meows in different contexts. The exposure to each experimental context was repeated three times for each cat, at a one-month interval. Meows were recorded in the following three contexts:

Waiting for food (meows made prior to regular feeding)—The owner started the normal routine operations that precede food delivery in the home environment, but food was actually delivered with a delay of five minutes;

Isolation (meows made during a period of isolation in an unfamiliar environment)—The cat was placed in its pet carrier and transported by its owner, adopting the same routine used to transport it for any other reason, to an unfamiliar environment (e.g., a room in a different apartment or an office, not far from their home environment). On arrival, the owner opened the pet carrier and the cat was free to roam in the room (if it wanted) for 30 min, in the presence of the owner, to recover from transportation stress. Then, the cat was left alone in the room for five minutes;

Brushing (meows made while being brushed by the owner)—Cats were brushed by their owner in their home environment for a maximum of 5 min.

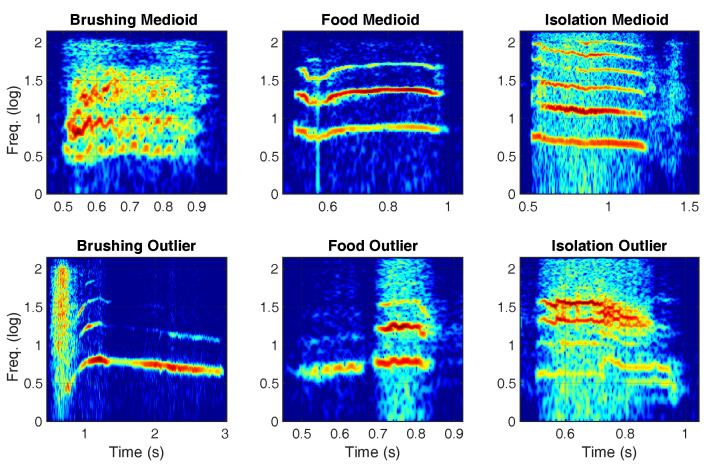

Cat meows were recorded by using the small Bluetooth microphone of a QCY Q26 Pro Bluetooth headset placed on the cats’ collar. All cats were used to wearing the collar and had previously been accustomed to the presence of such a device (Figure 1). Recordings, saved as WAV files with a sampling frequency of 8000 Hz and 16 quantization bits, were subsequently subjected to bioacoustic analyses in order to select two meows for each situation: a medioid (i.e., the most representative sound of the specific situation) and an outlier (i.e., the most different sound with respect to the typical one emitted in the specific situation). Spectrograms of the six selected sounds are visible in Figure 2.

Figure 1.

A cat provided with a Bluetooth microphone placed on the collar.

Figure 2.

Time–frequency spectrograms of the medioid and outlier meows in the three different contexts.

Medioids and outliers were identified by computing three audio features (see [70] for further information about audio features) for each recording, namely the fundamental frequency $F_0$, the roughness $R$, and the tristimulus $T_{1,2,3}$:

$F_0$ corresponds to the pitch (or note) of the vocalization. It was computed with the SWIPE′ method [71], ignoring values where the confidence reported by SWIPE′ fell below a fixed threshold;

R is defined in [72] as the sensation for rapid amplitude variations, which reduces pleasantness, and whose effect is perceived as dissonant [73]. This is a sonic descriptor which conveys information about unpleasantness. It was computed using the dedicated function of the MIRToolbox [74] and, more specifically, adopting the strategy proposed in [75];

$T_{1,2,3}$ is a set of features coming from color perception studies that has been adapted to the audio domain [76]. $T_1$ is the ratio between the energy of $F_0$ and the total energy, $T_2$ is the ratio between the energy of the second to fourth harmonics and the total energy, and $T_3$ is the ratio between the energy of all other harmonics and the total energy. Tristimulus was chosen to represent information regarding formants, since, with a band-limited signal containing only a few harmonics, the actual computation of formants with linear prediction techniques proved unreliable.
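The tristimulus definition above can be illustrated with a short sketch. It assumes that the energy of each harmonic has already been estimated for one analysis frame; the function name `tristimulus` and the toy energies are hypothetical and are not taken from the study.

```python
def tristimulus(harmonic_energies):
    """Return (T1, T2, T3) for one analysis frame.

    harmonic_energies[0] is the energy of the fundamental (F0),
    harmonic_energies[1] the energy of the second harmonic, and so on.
    """
    energies = [float(e) for e in harmonic_energies]
    total = sum(energies)
    if total <= 0:
        raise ValueError("total harmonic energy must be positive")
    t1 = energies[0] / total        # fundamental only
    t2 = sum(energies[1:4]) / total  # second to fourth harmonics
    t3 = sum(energies[4:]) / total   # all remaining harmonics
    return t1, t2, t3


# Hypothetical energies for a frame with six harmonics (illustration only).
t1, t2, t3 = tristimulus([4.0, 2.0, 1.0, 1.0, 1.0, 1.0])
```

By construction the three ratios sum to 1, so a band-limited meow with only a few harmonics concentrates its weight in the first two components.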

All the above-mentioned features are time-varying signals, extracted from the short-term Fourier transform of the sound. In order to reduce them to a set of global values, mean values and standard-deviation ranges were calculated for each feature. This led to a feature space of 10 values. Finally, a one-way ANOVA test was carried out to assess which features are meaningful in distinguishing the three situations, an operation that discarded four of the 10 values, leading to a final feature space of 6 values per sound.

By taking the mean of the feature space of each class, three centroids were found. Medioids were chosen as the sounds closest to the centroids in terms of Euclidean distance, while outliers were the most distant from the centroids. Outliers were included mainly for two reasons: first, to have at least a pair of samples for each class, but keeping them sufficiently distant from each other (i.e., to sample the data space with some criterion), and second, to assess if the chosen features were effective in grasping the informative content of meows. In particular, we expect a significant difference in the recognition scores in favor of the medioids if the features are actually effective (and no significant differences otherwise).
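The selection procedure described above can be sketched as follows. This is a minimal illustration under stated assumptions rather than the authors' implementation: the population standard deviation stands in for the "standard-deviation ranges", no feature scaling is applied before computing distances (the text does not say whether any was used), and all function names and toy vectors are hypothetical.

```python
import math
import statistics


def feature_vector(tracks):
    """Collapse each time-varying feature track into (mean, std) and concatenate."""
    vector = []
    for track in tracks:
        vector.append(statistics.fmean(track))
        vector.append(statistics.pstdev(track))  # assumption: population std
    return vector


def centroid(vectors):
    """Component-wise mean of the feature vectors of one context (class)."""
    return [statistics.fmean(column) for column in zip(*vectors)]


def medioid_and_outlier(vectors):
    """Return the indices of the sounds closest to and farthest from the centroid."""
    center = centroid(vectors)
    distances = [math.dist(vector, center) for vector in vectors]
    indices = range(len(vectors))
    medioid = min(indices, key=distances.__getitem__)
    outlier = max(indices, key=distances.__getitem__)
    return medioid, outlier


# Toy two-dimensional feature vectors for a single context (illustration only).
sounds = [[1.0, 1.0], [2.0, 2.0], [3.0, 3.0], [10.0, 10.0]]
medioid_index, outlier_index = medioid_and_outlier(sounds)
```

In the toy data the centroid is (4, 4), so the third sound is selected as the medioid and the fourth as the outlier. With real recordings, features on very different scales would dominate the Euclidean distance unless they were normalized first.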

2.3. Questionnaire

The whole questionnaire (Supplementary File S1) included three sections. The first section contained general information on participants: age, gender, education, and other background experiences which could be relevant in determining their responses (previous and present interaction/experience with animals, past or actual pet ownership, and cat ownership).

The second section was aimed at evaluating the human–cat relationship and comprised the Animal Empathy Scale (AES), designed to measure empathy toward animals [77], and the Cat Empathy Scale (CES), calculated on the basis of three additional specific questions investigating participants’ reported empathy toward cats. The AES was initially developed by Paul [77]. It was recently translated into Italian and used in two studies assessing empathy toward animals in a sample of students of veterinary medicine at the University of Milan (Italy) and in veterinary practitioners working with pets [78,79]. The scale includes a total of 22 items, 11 representing unempathic sentiments and 11 empathic sentiments. Responses to each item are requested on a 9-point Likert-type scale ranging from “very strongly agree” to “very strongly disagree”, with agreements with empathic statements scoring high (maximum 9) and agreements with unempathic statements scoring low (minimum 1). The total score, ranging from a minimum of 22 to a maximum of 198, is calculated as the sum of scores obtained in each item. Higher scores indicate a higher level of self-reported empathy. Previous studies carried out on different samples reported acceptable to good internal consistency for the AES, with Cronbach’s alpha values of 0.78 [77], 0.83 [78], and 0.68 [79].
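The AES scoring rule described above can be sketched in a few lines. The sketch assumes that responses are coded from 1 (“very strongly disagree”) to 9 (“very strongly agree”) and that unempathic items are reverse-scored as 10 minus the rating. The set of unempathic item numbers used here is a placeholder for illustration only; the real scoring key is given in [77].

```python
# Placeholder key (assumption): NOT the published AES key, illustration only.
HYPOTHETICAL_UNEMPATHIC_ITEMS = frozenset(range(2, 23, 2))  # 11 items


def score_aes(responses, unempathic_items=HYPOTHETICAL_UNEMPATHIC_ITEMS):
    """Sum the 22 item scores, reverse-scoring the unempathic items.

    responses maps item number (1..22) to an agreement rating from
    1 (very strongly disagree) to 9 (very strongly agree).
    """
    if sorted(responses) != list(range(1, 23)):
        raise ValueError("responses must cover items 1 to 22 exactly once")
    total = 0
    for item, rating in responses.items():
        if not 1 <= rating <= 9:
            raise ValueError(f"rating for item {item} must be between 1 and 9")
        # Agreement with an unempathic statement must lower the score.
        total += (10 - rating) if item in unempathic_items else rating
    return total


# A respondent who fully agrees with every empathic item and fully
# disagrees with every unempathic item reaches the maximum score.
most_empathic = {
    item: (1 if item in HYPOTHETICAL_UNEMPATHIC_ITEMS else 9)
    for item in range(1, 23)
}
max_score = score_aes(most_empathic)
```

With this coding the total necessarily falls between 22 and 198, which matches the range stated for the scale.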

Since the AES evaluates empathy toward animals in general with only two items specifically referring to cats (item 2: Often cats will meow and pester for food even when they are not really hungry; item 9: A friendly purring cat almost always cheers me up), in order to specifically investigate the level of empathy toward cats (CES) reported by participants, the following three additional questions were added: 1. I can easily understand whether a cat is trying to communicate with me; 2. I can easily and intuitively understand how a cat is feeling; 3. I am good at predicting what a cat will do. As for the AES, responses to these questions were requested on a 9-point Likert-type scale ranging from “very strongly agree” to “very strongly disagree”. The total score ranged from a minimum of 3 to a maximum of 27 and was calculated as the sum of the scores for each question. Higher scores indicated a higher level of self-reported empathy toward cats.

In the third part of the survey, participants were asked to wear earphones and listen with attention to the recordings of six meows (one medioid and one outlier per context), specifically prepared for the study, as described above. After listening to each meow (participants could replay each sound ad libitum), participants were asked to choose one out of three possible contexts in which the vocalization was emitted (i.e., waiting for food, isolation, brushing), to indicate the emotional state of the meowing cat in terms of valence (positive vs. negative), and to give a score on a 7-point Likert scale to 11 descriptors of the possible emotional state: agitated/anxious, aggressive/angry, frustrated, restless/nervous, frightened, suffering, friendly, calm/relaxed, happy, curious, and playful. This procedure was based on previous research using qualitative behavior assessment in companion animals (dogs) [80] and is reminiscent of sociolinguistic research investigating attitudes toward different aspects of language [81,82]. Between consecutive sounds, a 5-second pink noise sample was played in order to avoid direct comparison.

2.4. Procedure

The questionnaire developed for the study was made available online from March 2018 until March 2019. All subjects who voluntarily accessed the questionnaire were told that the purpose of the survey was to gain knowledge regarding the human–animal relationship and that their responses would remain anonymous and be used for scientific research only. They also signed an informed consent form and an authorization to allow us to use the data according to the National Privacy Law 675/96. Participants were also asked to fill in the entire questionnaire and to listen to the vocalizations in a quiet moment of the day, taking their time and using their earphones, and to carefully follow the guided procedure.

2.5. Ethical Statement

The present project was approved by the Animal Welfare Organisation of the University of Milan (approval n. OPBA_25_2017). The challenging situations were conceived considering potentially stressful situations that may occur in cats’ life and to which cats can usually easily adapt. In order to minimize possible stress reactions, preliminary information on the normal husbandry practices (e.g., brushing or transportation) to which the experimental cats were submitted and on their normal reactions to these practices were collected in interviews with the owners. The information collected did not point out any possibility of excessive reactions of cats in one of the planned situations; therefore, all the cats were included in the trial. No signs of excessive stress were ever recorded in any of the challenging situations, all of which could therefore be completed. Before starting to complete the questionnaire, the interviewed people were asked to sign an informed consent, stating that all data were going to be treated anonymously and used only for scientific purposes.

3. Statistical Analysis

All data were collected in a number of spreadsheets for statistical analyses. Preliminary descriptive analyses were carried out to evaluate the characteristics of the sample and the distribution of the data collected.

The accuracy rate of the responses given by the participants (percentage of correct assignment) was calculated for each context and compared between medioids and outliers using the chi-square test. Then, taking into consideration only medioids, the accuracy rate of the responses among contexts was compared using the chi-square test. The chi-square test was also used to compare, within each context, the accuracy rate of meow medioids depending on the following characteristics of the interviewed persons: gender (males vs. females), parental status (parents vs. nonparents), and level of experience with cats (cat owners vs. nonowners; grown up with cats vs. grown up without cats). The Mann–Whitney test was used to evaluate the effect of gender (males vs. females) and of the level of experience with cats (cat owners vs. nonowners) on AES and CES scores. The internal consistency of the AES was assessed using Cronbach’s alpha. Spearman correlations were calculated between AES and CES scores and the total number of correct context identifications (0 = no correct assignment; 1 = one correct assignment; 2 = two correct assignments; 3 = all assignments were correct). Within each context, AES and CES scores were compared, depending on the correct or incorrect assignment of the meow, using the Mann–Whitney test. Finally, in order to understand the type of emotions perceived by participants in response to the meows emitted in each specific context, we performed a principal component analysis (PCA) on the scores given to each descriptor. This analysis was initially performed on the whole sample, and then only using the descriptors of the situations that had been correctly assigned to their context. All the statistical analyses were carried out with SPSS Statistics 25 (IBM, Armonk, NY, USA), with alpha set at 0.05.
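The chi-square comparison of accuracy rates can be sketched as follows. This is a minimal illustration, not the SPSS procedure used in the study: it assumes a Pearson chi-square on a 2×2 table without continuity correction, and the function name `chi_square_2x2` is hypothetical. The counts are those reported in Table 1 for the waiting-for-food context (medioid vs. outlier, correct vs. incorrect).

```python
import math


def chi_square_2x2(a, b, c, d):
    """Pearson chi-square (no continuity correction) for the table [[a, b], [c, d]].

    Returns the statistic and its p-value on one degree of freedom.
    """
    n = a + b + c + d
    row1, row2 = a + b, c + d
    col1, col2 = a + c, b + d
    if 0 in (row1, row2, col1, col2):
        raise ValueError("every row and column total must be positive")
    chi2 = n * (a * d - b * c) ** 2 / (row1 * row2 * col1 * col2)
    # For one degree of freedom, P(X > chi2) = erfc(sqrt(chi2 / 2)).
    p_value = math.erfc(math.sqrt(chi2 / 2.0))
    return chi2, p_value


# Waiting for food: medioid 91 correct / 134 incorrect,
# outlier 61 correct / 164 incorrect (counts from Table 1).
chi2, p_value = chi_square_2x2(91, 134, 61, 164)
```

For these counts the statistic is roughly 8.9 on one degree of freedom, in line with the p < 0.01 reported in Table 1. A continuity-corrected or exact test, if that is what was actually run, would give a somewhat different value.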

4. Results

In all contexts, medioid meows had a significantly higher probability of being assigned to the correct context than outlier meows (Table 1). This suggests that the chosen features are related to those to which listeners are sensitive.

Table 1.

Absolute frequencies (and percentages) of correct or incorrect assignment of medioid or outlier meows in each of the three contexts. Significance levels refer to differences between the rate of correct assignment of medioid vs. outlier meows within each context.

| Context | Medioid: Correct | Medioid: Incorrect | Outlier: Correct | Outlier: Incorrect | Significance |
|---|---|---|---|---|---|
| Waiting for food | 91 (40.44%) | 134 (59.56%) | 61 (27.11%) | 164 (72.89%) | p < 0.01 |
| Isolation | 60 (26.67%) | 165 (73.33%) | 32 (14.22%) | 193 (85.78%) | p < 0.001 |
| Brushing | 74 (32.89%) | 151 (67.11%) | 30 (13.33%) | 195 (86.67%) | p < 0.001 |

However, even considering only the medioids, the accuracy rate of the responses was generally low, and it was never significantly above the chance level (33.3%, given three response options). Statistically significant differences in the accuracy rate were recorded among contexts: although still low and not above chance, waiting for food had the highest rate of correct assignment (40.44%), while isolation had the lowest (26.67%) (Table 1). Some individual characteristics of the interviewed persons significantly affected the accuracy rate (Table 2). Females showed a higher accuracy rate, with significant differences emerging in the isolation and brushing contexts, whereas parental status did not affect the accuracy rate. The level of experience with cats also had some effect on the accuracy rate: in particular, cat owners had a higher accuracy rate than nonowners in all contexts, with significant differences for isolation and brushing, whereas having grown up with cats did not affect the accuracy rate.

Table 2.

Absolute frequencies (and percentages) of correct or incorrect assignment of medioid meows in each of the three contexts, depending on individual characteristics and level of experience with cats of the interviewed persons.

| Group | Waiting for food: Correct | Waiting for food: Incorrect | Waiting for food: Sign. | Isolation: Correct | Isolation: Incorrect | Isolation: Sign. | Brushing: Correct | Brushing: Incorrect | Brushing: Sign. |
|---|---|---|---|---|---|---|---|---|---|
| Gender | | | | | | | | | |
| Male | 27 (34.2%) | 52 (65.8%) | n.s. | 14 (17.7%) | 65 (82.3%) | | 19 (24.1%) | 60 (75.9%) | |
| Female | 64 (43.8%) | 82 (56.2%) | | 46 (31.5%) | 100 (68.5%) | | 55 (37.7%) | 91 (62.3%) | |
| Parental status | | | | | | | | | |
| Parent | 24 (33.3%) | 48 (66.7%) | n.s. | 14 (19.4%) | 58 (80.6%) | n.s. | 18 (25.0%) | 54 (75.0%) | n.s. |
| Nonparent | 67 (43.8%) | 86 (56.2%) | | 46 (30.1%) | 107 (69.9%) | | 56 (36.6%) | 97 (63.4%) | |
| Cat owner | | | | | | | | | |
| Yes | 48 (44.4%) | 60 (55.6%) | n.s. | 38 (35.2%) | 70 (64.8%) | | 48 (44.4%) | 60 (55.6%) | |
| No | 43 (36.8%) | 74 (63.2%) | | 22 (18.8%) | 95 (81.2%) | | 26 (22.2%) | 91 (77.8%) | |
| Grown up with cats | | | | | | | | | |
| Yes | 51 (41.8%) | 71 (58.2%) | n.s. | 38 (31.1%) | 84 (68.9%) | n.s. | 44 (36.1%) | 78 (63.9%) | n.s. |
| No | 40 (38.8%) | 63 (61.2%) | | 22 (21.4%) | 81 (78.6%) | | 30 (29.1%) | 73 (70.9%) | |
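This section does not state which test produced the significance levels in Table 2. As a rough check, and assuming a Pearson chi-square test of independence without continuity correction (an assumption, not necessarily the authors' procedure), the gender rows can be recomputed from the reported counts. The function name `chi_square_2x2` is illustrative.

```python
import math


def chi_square_2x2(a, b, c, d):
    """Pearson chi-square (no continuity correction) for the table [[a, b], [c, d]].

    Assumed test: the section itself does not name the test that was used.
    """
    n = a + b + c + d
    chi2 = n * (a * d - b * c) ** 2 / ((a + b) * (c + d) * (a + c) * (b + d))
    # With 1 degree of freedom the chi-square survival function reduces to erfc.
    p_value = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p_value


# Counts from Table 2, gender rows: (male correct, male incorrect,
# female correct, female incorrect).
isolation_chi2, isolation_p = chi_square_2x2(14, 65, 46, 100)
food_chi2, food_p = chi_square_2x2(27, 52, 64, 82)

print(f"Isolation:        chi2 = {isolation_chi2:.2f}, p = {isolation_p:.3f}")
print(f"Waiting for food: chi2 = {food_chi2:.2f}, p = {food_p:.3f}")
```

Under that assumption, the isolation comparison gives a p-value below 0.05 and the waiting-for-food comparison does not, which agrees with the pattern described in the text.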

Both experience with cats and gender significantly affected empathy toward animals in general (AES) and, more specifically, toward cats (CES): both scores were higher in females, cat owners, and persons who had grown up with cats (Table 3).

Table 3.

Mean (±SD) of AES and CES of the participants, depending on their gender or experience with cats, and relative significance levels.

| Group | AES: Mean ± s.d. | AES: Sign. | CES: Mean ± s.d. | CES: Sign. |
|---|---|---|---|---|
| Gender | | | | |
| Male | 144.28 ± 17.95 | p < 0.001 | 15.92 ± 6.59 | p < 0.05 |
| Female | 157.82 ± 18.02 | | 17.97 ± 5.68 | |
| Parental status | | | | |
| Parent | 153.58 ± 21.08 | n.s. | 18.32 ± 5.64 | n.s. |
| Nonparent | 152.82 ± 18.14 | | 16.75 ± 6.23 | |
| Cat owner | | | | |
| Yes | 157.11 ± 17.58 | p < 0.01 | 20.06 ± 4.53 | p < 0.001 |
| No | 149.32 ± 19.73 | | 14.65 ± 6.18 | |
| Grown up with cats | | | | |
| Yes | 157.75 ± 17.20 | p < 0.001 | 19.03 ± 5.08 | p < 0.001 |
| No | 147.50 ± 19.79 | | 15.14 ± 6.50 | |
| Overall | 153.06 ± 19.09 | | 17.25 ± 6.08 | |
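Table 3 reports significance levels but not effect sizes. As a hedged illustration, a standardized mean difference (Cohen's d with a pooled standard deviation) can be derived from the summary statistics alone. The group sizes below are taken from the row totals of Table 2 (79 males, 146 females, 108 owners, 117 nonowners), on the assumption that the same participants contributed to both tables; the function name `cohens_d` is illustrative.

```python
import math


def cohens_d(mean_a, sd_a, n_a, mean_b, sd_b, n_b):
    """Standardized mean difference using the pooled standard deviation."""
    pooled_var = ((n_a - 1) * sd_a ** 2 + (n_b - 1) * sd_b ** 2) / (n_a + n_b - 2)
    return (mean_a - mean_b) / math.sqrt(pooled_var)


# AES, females vs. males (means and s.d. from Table 3; n assumed from Table 2).
aes_gender_d = cohens_d(157.82, 18.02, 146, 144.28, 17.95, 79)

# CES, cat owners vs. nonowners (same sources and assumption).
ces_owner_d = cohens_d(20.06, 4.53, 108, 14.65, 6.18, 117)

print(f"AES, female vs. male:      d = {aes_gender_d:.2f}")
print(f"CES, owner vs. nonowner:   d = {ces_owner_d:.2f}")
```

With these inputs the gender difference in AES comes out at roughly 0.75 pooled standard deviations, and the owner versus nonowner difference in CES is close to 1.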

AES and CES scores were significantly correlated (correlation coefficient = 0.316). A significant correlation was found between the total number of correct context identifications and CES (correlation coefficient = 0.145), whereas no correlation emerged between the total number of correct context identifications and AES (correlation coefficient = 0.040; n.s.). Within each context, AES and CES scores did not differ depending on the correct or incorrect assignment of the meow, except for CES in the isolation context (Table 4).

Table 4.

Mean (±SD) of AES and CES of the participants depending on the correct or incorrect assignment of meows to each of the three contexts.

| Context | AES: Correct assignment | AES: Incorrect assignment | AES: Sign. | CES: Correct assignment | CES: Incorrect assignment | CES: Sign. |
|---|---|---|---|---|---|---|
| Waiting for food | 154.02 ± 18.32 | 152.41 ± 19.63 | n.s. | 17.90 ± 5.55 | 16.81 ± 6.40 | n.s. |
| Isolation | 154.92 ± 16.48 | 152.39 ± 19.95 | n.s. | 18.75 ± 5.38 | 16.70 ± 6.24 | p < 0.05 |
| Brushing | 154.01 ± 18.92 | 152.60 ± 19.21 | n.s. | 18.31 ± 5.65 | 16.73 ± 6.23 | n.s. |

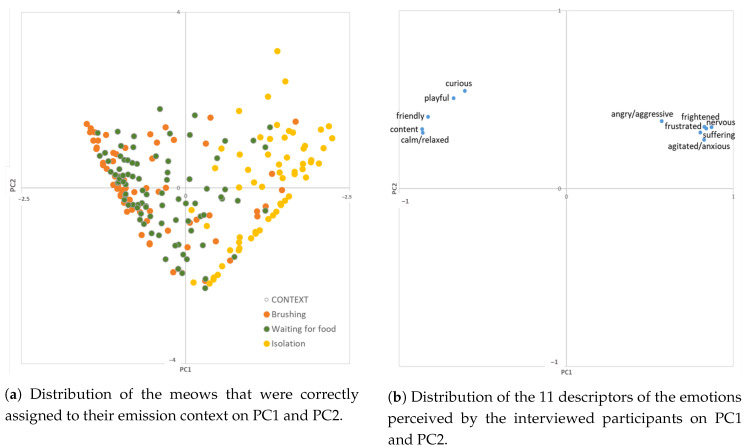

A preliminary PCA carried out on the scores that participants gave to each descriptor of the emotion perceived in the meows emitted in each context revealed no clear separation among the emission contexts (data not presented). The same analysis conducted only on the descriptors of the meows that had been correctly assigned to their context showed a clear tendency for meows emitted during brushing to cluster on the left side of PC1, whereas meows emitted in the isolation context were scattered on the right side and those emitted while waiting for food occupied an intermediate position, with a tendency to cluster on the left (Figure 3a).

Figure 3.

Two-dimensional score plot of PCA results.

The left side of PC1 is related to a positive valence, as shown by the high loadings of variables such as content, calm/relaxed, friendly, playful, and curious, whereas the right side is characterized by a negative valence, as shown by descriptors like nervous, frightened, agitated/anxious, frustrated, suffering, and angry/aggressive (Figure 3b). This means that brushing and, to a lesser extent, waiting for food are perceived by the interviewed persons as more positive situations, whereas isolation is clearly perceived as a negative situation.

The first two principal components explained 76.3% of total variance (PC1: 61.7%; PC2: 14.6%).
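To make the reading of the score plot, the loadings, and the explained variance more concrete, the following is a minimal sketch of a PCA on a small synthetic table of descriptor scores. Everything in it is an assumption for illustration: the six rows and their values are invented, only four of the descriptors named above are used, and the power-iteration routine is a generic textbook method, not the software used in the study.

```python
import math


def centre(rows):
    """Subtract the column means from every row."""
    n, k = len(rows), len(rows[0])
    means = [sum(row[j] for row in rows) / n for j in range(k)]
    return [[row[j] - means[j] for j in range(k)] for row in rows]


def covariance(centred):
    """Sample covariance matrix of already-centred rows."""
    n, k = len(centred), len(centred[0])
    return [[sum(r[i] * r[j] for r in centred) / (n - 1) for j in range(k)]
            for i in range(k)]


def mat_vec(matrix, vec):
    return [sum(m * v for m, v in zip(row, vec)) for row in matrix]


def leading_eigenpair(matrix, iterations=1000):
    """Power iteration: dominant eigenvalue and unit eigenvector."""
    vec = [float(i + 1) for i in range(len(matrix))]  # asymmetric start vector
    for _ in range(iterations):
        nxt = mat_vec(matrix, vec)
        norm = math.sqrt(sum(x * x for x in nxt))
        vec = [x / norm for x in nxt]
    value = sum(v * w for v, w in zip(vec, mat_vec(matrix, vec)))
    return value, vec


def first_two_components(rows):
    centred = centre(rows)
    cov = covariance(centred)
    k = len(cov)
    total_variance = sum(cov[i][i] for i in range(k))
    val1, vec1 = leading_eigenpair(cov)
    # Deflation: remove PC1 so that the next power iteration finds PC2.
    deflated = [[cov[i][j] - val1 * vec1[i] * vec1[j] for j in range(k)]
                for i in range(k)]
    val2, vec2 = leading_eigenpair(deflated)
    scores = [[sum(c * v for c, v in zip(row, vec)) for vec in (vec1, vec2)]
              for row in centred]
    return (val1 / total_variance, val2 / total_variance), (vec1, vec2), scores


# Synthetic descriptor scores (invented values, 1-5 scale assumed).
descriptors = ["calm/relaxed", "friendly", "nervous", "frightened"]
contexts = ["brushing", "brushing", "food", "food", "isolation", "isolation"]
ratings = [[5, 4, 1, 1], [4, 5, 1, 2], [3, 4, 2, 2],
           [4, 3, 3, 2], [1, 1, 5, 4], [2, 1, 4, 5]]

ratios, loadings, scores = first_two_components(ratings)
print("Explained variance (PC1, PC2):", [round(r, 3) for r in ratios])
for name, weight in zip(descriptors, loadings[0]):
    print(f"PC1 loading for {name}: {weight:+.2f}")
for context, (pc1, pc2) in zip(contexts, scores):
    print(f"{context:10s} PC1 = {pc1:+.2f}, PC2 = {pc2:+.2f}")
```

In this toy data the positive and negative descriptors load on PC1 with opposite signs, so the brushing and isolation rows fall on opposite sides of the axis. The sign of each component is arbitrary, which is why only the relative position of the clusters is meaningful.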

5. Discussion

The current study investigates the human–cat relationship and communication by evaluating adult humans’ ability to classify single cat meows emitted in different well-defined and familiar contexts: waiting for food, brushing, and isolation. We also evaluated the effect of factors such as experience with cats, gender, and empathy toward animals and cats on human performance in the context recognition task. Finally, we asked participants to judge the emotional state of the meowing cat by scoring different descriptors, in order to highlight the perceived emotional valence (positive vs. negative).

Meowing is a common and mainly human-directed vocalization [34,35,42]; thus, in principle, it should represent a useful means for cats to communicate their emotional states to humans. Furthermore, previous studies showed that meows emitted in these three different contexts can be successfully discriminated on the basis of a series of acoustic parameters [83]. In spite of this, our results suggest that adult humans have a limited capacity to discriminate among the production contexts of single meows (both outlier and medioid meows) in familiar contexts, and therefore seem unable to extract reliable specific information from cats’ meows.

In all contexts, the most representative meows of the specific situation (i.e., medioids) were assigned by participants to the correct context significantly more often than meows with fewer core features, and thus more different from the typical sound emitted in the specific situation (outlier meows). However, even when considering exclusively the meows most representative of a given context, the rate of correct context assignment was never significantly above chance (33%). In particular, the best recognized meow was that emitted in the context “waiting for food”, with a rate of correct assignment of 40.44% (outlier meow = 27.11%), whereas the least recognized one was that emitted in the context “isolation”, with a rate of only 26.67% (outlier meow = 14.22%).
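The distinction between a representative meow and an outlier can be illustrated with the standard definition of a medoid: the item whose total distance to all other items in its group is smallest. The sketch below assumes that definition and Euclidean distance on two invented acoustic features; the selection procedure actually used in the study is described in its methods and may differ.

```python
import math


def total_distance(index, points):
    """Sum of Euclidean distances from points[index] to every point in the set."""
    return sum(math.dist(points[index], other) for other in points)


def medoid_and_outlier(points):
    """Return the most central point (medoid) and the most peripheral one."""
    totals = [total_distance(i, points) for i in range(len(points))]
    medoid = points[totals.index(min(totals))]
    outlier = points[totals.index(max(totals))]
    return medoid, outlier


# Hypothetical two-dimensional feature vectors for meows from a single context.
meow_features = [(1.0, 1.0), (2.0, 1.0), (1.0, 2.0),
                 (2.0, 2.0), (1.5, 1.5), (6.0, 6.0)]

medoid, outlier = medoid_and_outlier(meow_features)
print("Medoid: ", medoid)
print("Outlier:", outlier)
```

Unlike a mean, a medoid is always one of the actual recordings, which is what makes it usable as a playback stimulus.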

It is worth noting that the contexts in which cat meows were recorded, in particular waiting for food and isolation, were similar to the contexts used in previous studies [44,46,54] and were chosen because they are considered common ones for cats and humans and are associated with different emotional valences. However, unlike the participants in the study by Nicastro and Owren [44], our participants did not perform above chance in the context classification task. In this respect, our results are more in line with those of Ellis et al. [46], which showed that vocalizations of unfamiliar cats are difficult to classify and that performance is above chance only when the vocalizing cat is the participant’s own cat.

The finding that cat ownership positively affected classification is in line with previous work on cats [44,45,46] and other species [51,52], showing that experience with the species allows a better recognition of animal vocalizations. Despite the overall poor performance, cat owners were more accurate than nonowners in all contexts, with significant differences emerging for isolation and brushing, whereas having grown up with cats did not affect the accuracy rate. This suggests that regular and daily interactions may be more relevant than past experience in favoring the correct attribution. However, this seems to be true for acoustic cues, but not for visual cues: in fact, Dawson et al. [84] found that personal experience with cats (i.e., having ever lived with a cat, the number of years spent living with cats, the current number of owned cats) was not important for the correct identification of feline emotions from cats’ faces, and a previous study by Schirmer et al. [85] reported that humans without previous experience with dogs discriminate dogs’ facial expressions of both negative and positive emotions.

Current findings, together with previous ones, are in contrast with a series of playback experiments with dogs’ vocalizations showing that adult humans are successful in the recognition of contextual and motivational content of dog barks [53,86] independently from previous experiences, and that even children aged 6–10 years can perform similarly to adults [87].

It has been suggested that classifying the vocalizations of unfamiliar animals exclusively on the basis of mere acoustic cues, without the associated visual cues (e.g., facial, body expression), is a difficult task [44]; moreover, the variability of cat meows, both within and between contexts and individuals, may represent a further aspect that complicates the classification task in both experimental and natural circumstances. However, there is evidence that meows are highly modulated by the context of emission, with meows produced in positive contexts differing in their pitch, duration, and melody from meows produced in negative contexts [42,47], and that they can be automatically classified with a high accuracy rate on the basis of their acoustic characteristics [83]. Schötz et al. [47], for example, reported effects of the recording context and of cat internal state on f0 and duration of cat meows; in addition, they found that positive (e.g., affiliative) contexts and internal states tended to have rising f0 contours, while meows produced in negative (e.g., stressed) contexts and mental states had predominantly falling f0 contours. These acoustic characteristics could presumably help humans in differentiating at least negative versus positive emotional states. Interestingly, Yin [88] and Pongrácz et al. [53] reported that dog barking has reliable acoustic features (e.g., peak and fundamental frequency, interbark intervals) that are specific to particular contexts or inner states and that are used by humans to detect the context and the inner state of the barking dog. In the current study, the medioid meow was obtained by keeping the more representative aspects of the meow vocalization in a given context; thus, we expected humans to perform at least moderately above chance.
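As a purely illustrative sketch of what a rising or falling f0 contour means in practice, the direction of a contour can be summarized by the slope of a least-squares line fitted to the f0 track. The f0 values, the 5 Hz tolerance, and the function name `contour_direction` are all assumptions made for this example and are not taken from the cited studies.

```python
def contour_direction(f0_hz, flat_tolerance_hz=5.0):
    """Label an f0 track as rising, falling, or level from its fitted slope."""
    n = len(f0_hz)
    mean_x = (n - 1) / 2
    mean_y = sum(f0_hz) / n
    slope = (sum((i - mean_x) * (y - mean_y) for i, y in enumerate(f0_hz))
             / sum((i - mean_x) ** 2 for i in range(n)))
    # Fitted change in f0 from the first to the last frame.
    change = slope * (n - 1)
    if change > flat_tolerance_hz:
        return "rising"
    if change < -flat_tolerance_hz:
        return "falling"
    return "level"


# Hypothetical f0 tracks in Hz, sampled at equal time steps.
meow_a = [520, 540, 565, 590, 610]
meow_b = [650, 620, 600, 570, 540]

print("Meow A:", contour_direction(meow_a))
print("Meow B:", contour_direction(meow_b))
```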

Regarding the effect of gender, our findings provide new insight into possible gender differences in human–animal interactions, suggesting a potential gender bias in the capacity to recognize other species’ emotional states from vocalizations. With regard to cats’ vocalizations, Nicastro and Owren [44] reported only a small gender effect that approached but did not reach significance; similarly, Tallet et al. [52] found small gender differences in the evaluation of piglet vocalizations and related them to the evidence of a greater empathy [89] and a greater accuracy in recognizing emotional expressions [65,90] in women than in men.

Studies on humans have shown that women have greater abilities to recognize nonverbal displays of other humans’ emotions [91], but little evidence is available with respect to other species. A recent study [84] showed that people can identify feline emotions from cats’ faces and reported that women were more successful than men in the identification of feline emotions. Similarly, Schirmer et al. [85] reported that women were more sensitive than men to dogs’ affective expressions.

These results are possibly due to the higher empathy of women toward animals recorded also in the present study, in line with our predictions and with the results of previous studies [62]. In fact, besides observing a correlation between empathy toward animals and cats, we also found that the total scores of empathy toward animals and cats were significantly higher in females. Evidence of females’ greater levels of empathy toward nonhuman animals has been reported in previous studies and is associated with more positive attitudes toward animals and a greater concern for their welfare [77,78,79].

Empathy was higher also in cat owners and persons who had grown up with cats, and a significant correlation was found between the total number of correct context identifications and empathy toward cats, but not empathy toward animals in general. Greater empathy toward cats, and not just toward animals in general, could motivate the choice of a cat as a companion animal; moreover, “being empathetic” toward cats may, for example, motivate owners to shift their attention toward them more, which can thereby increase accuracy in emotion recognition as happens in humans [60,61]. In general, feeling empathy together with familiarity can lead perceivers to focus on expressive cues that communicate information about the feelings of others. Of course, these are just hypotheses that require further investigation.

With regard to the evaluation of the emotional state of the meowing cat in terms of valence (positive vs. negative), the analysis carried out only on the descriptors of the meows that had been correctly assigned to their context showed that meows emitted during isolation were perceived by participants as more negative (the cat was described as nervous, frightened, agitated/anxious, frustrated, suffering, and/or angry/aggressive), whereas those emitted during brushing and, to a lesser extent, waiting for food were perceived as positive (the cat was described as calm/relaxed, friendly, playful, and/or curious). Since meows produced in positive contexts are different in their pitch, duration, and melody from meows produced in negative contexts [42,47], this finding suggests that the correct distinction of meows in terms of valence was probably based on the detection of these differences.

Nicastro and Owren [44], when grouping the different contexts by their presumptive affective valence, assigned the food-related context to the positive affect category, whereas calls made when the cats were placed in an unfamiliar environment (i.e., distress) were considered to be negative. However, unexpectedly, Ellis et al. [46] reported that meow vocalizations emitted while cats were alone in a room and unable to exit (i.e., negotiating a barrier context) were rated by participants as the most pleasant. Finally, Belin et al. [54] reported that humans failed to recognize the emotional valence of cat meows recorded in affective contexts of positive or negative emotional valence (food-related and affiliative vs. agonistic and distress).

The finding that meows emitted during isolation were perceived by people as negative whereas those emitted during brushing and, to a lesser extent, waiting for food were perceived as positive supports previous evidence on cats and other species showing that humans are able to differentiate negative from positive emotions conveyed through vocalizations [44,53,54]. One possible reason why waiting for food was perceived as less positive than brushing in the current study could be that cats were gently brushed and touched by their owner as usual while in the brushing condition, whereas in the waiting for food context the owner, after starting the normal routine operations that preceded food delivery, waited for 5 min before delivering it, thus possibly also inducing some distress. In fact, as reported by Cannas et al. [55], cats waiting for food showed yawning, lip licking, swallowing, and salivation. These behaviors are related to a condition of stress and frustration caused by the delay in food delivery rather than to the request for food itself. By definition, frustration arises when an individual is unable to immediately access something it wants [92].

6. Conclusions

Although cats’ popularity as pets rivals that of dogs, cats are far less studied than dogs, and cat–human communication has received less attention compared to dog–human communication. Our findings provide the first evidence of gender differences in the ability to recognize cats’ meow vocalizations and highlight the role of experience and empathy toward cats in the recognition of the context of emission of the vocalizations. However, overall, the current knowledge on human ability to decode cat meows indicates that, although meowing is a common and mainly human-directed vocalization and its acoustic characteristics vary depending on the context of emission, adult humans show a limited capacity to extract specific information from cats’ meows and poorly discriminate among the production contexts of single meows emitted in familiar contexts. Given the limited number of studies on cat-to-human vocalizations and the mixed results obtained so far, future studies should further explore human understanding of cat vocal communicative sounds and the different variables that affect it, as well as the human ability to understand the valence and arousal of emotional states elicited by different contexts.

Supplementary Materials

The following are available online at https://www.mdpi.com/2076-2615/10/12/2390/s1, File S1: Online questionnaire.

Author Contributions

Conceptualization, E.P.-P., S.M., M.B., S.C., L.A.L. and G.P.; methodology, E.P.-P.; software, L.A.L., G.P. and S.N.; formal analysis, S.M.; data collection, S.I.; data curation, S.M. and S.I.; writing–original draft preparation, E.P.-P.; writing–review and editing, S.M., M.B., S.C., C.P., L.A.L., S.N. and G.P.; supervision, S.M., L.A.L. and E.P.-P.; project administration, S.M.; funding acquisition, S.M. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the University of Milan, Italy, grant number LINEA2SMATT_2017_AZB.

Conflicts of Interest

The authors declare no conflict of interest.


References

- 1. Waiblinger S., Boivin X., Pedersen V., Tosi M.V., Janczak A.M., Visser E.K., Jones R.B. Assessing the human–animal relationship in farmed species: A critical review. Appl. Anim. Behav. Sci. 2006;101:185–242. doi: 10.1016/j.applanim.2006.02.001.

- 2. Turner D.C. A review of over three decades of research on cat-human and human-cat interactions and relationships. Behav. Process. 2017;141:297–304. doi: 10.1016/j.beproc.2017.01.008.

- 3. Miklósi Á., Topál J. What does it take to become ‘best friends’? Evolutionary changes in canine social competence. Trends Cogn. Sci. 2013;17:287–294. doi: 10.1016/j.tics.2013.04.005.

- 4. Herzog H. Biology, culture, and the origins of pet-keeping. Anim. Behav. Cogn. 2014;1. doi: 10.12966/abc.08.06.2014.

- 5. Beetz A., Uvnäs-Moberg K., Julius H., Kotrschal K. Psychosocial and psychophysiological effects of human-animal interactions: The possible role of oxytocin. Front. Psychol. 2012;3:234. doi: 10.3389/fpsyg.2012.00234.

- 6. Prato Previde E., Valsecchi P. The Social Dog. Elsevier; Amsterdam, The Netherlands: 2014. The immaterial cord: The dog–human attachment bond; pp. 165–189.

- 7. Hare B., Brown M., Williamson C., Tomasello M. The domestication of social cognition in dogs. Science. 2002;298:1634–1636. doi: 10.1126/science.1072702.

- 8. Udell M.A., Dorey N.R., Wynne C.D. What did domestication do to dogs? A new account of dogs’ sensitivity to human actions. Biol. Rev. 2010;85:327–345. doi: 10.1111/j.1469-185X.2009.00104.x.

- 9. Albiach-Serrano A., Bräuer J., Cacchione T., Zickert N., Amici F. The effect of domestication and ontogeny in swine cognition (Sus scrofa scrofa and S. s. domestica). Appl. Anim. Behav. Sci. 2012;141:25–35. doi: 10.1016/j.applanim.2012.07.005.

- 10. Miklósi Á., Pongrácz P., Lakatos G., Topál J., Csányi V. A comparative study of the use of visual communicative signals in interactions between dogs (Canis familiaris) and humans and cats (Felis catus) and humans. J. Comp. Psychol. 2005;119:179–186. doi: 10.1037/0735-7036.119.2.179.

- 11. Ito Y., Watanabe A., Takagi S., Arahori M., Saito A. Cats beg for food from the human who looks at and calls to them: Ability to understand humans’ attentional states. Psychologia. 2016;59:112–120. doi: 10.2117/psysoc.2016.112.

- 12. Maros K., Gácsi M., Miklósi Á. Comprehension of human pointing gestures in horses (Equus caballus). Anim. Cogn. 2008;11:457–466. doi: 10.1007/s10071-008-0136-5.

- 13. Proops L., McComb K. Attributing attention: The use of human-given cues by domestic horses (Equus caballus). Anim. Cogn. 2010;13:197–205. doi: 10.1007/s10071-009-0257-5.

- 14. Kaminski J., Riedel J., Call J., Tomasello M. Domestic goats, Capra hircus, follow gaze direction and use social cues in an object choice task. Anim. Behav. 2005;69:11–18. doi: 10.1016/j.anbehav.2004.05.008.

- 15. Nawroth C., Ebersbach M., von Borell E. Juvenile domestic pigs (Sus scrofa domestica) use human-given cues in an object choice task. Anim. Cogn. 2014;17:701–713. doi: 10.1007/s10071-013-0702-3.

- 16. Gerencsér L., Fraga P.P., Lovas M., Újváry D., Andics A. Comparing interspecific socio-communicative skills of socialized juvenile dogs and miniature pigs. Anim. Cogn. 2019;22:917–929. doi: 10.1007/s10071-019-01284-z.

- 17. Hernádi A., Kis A., Turcsán B., Topál J. Man’s underground best friend: Domestic ferrets, unlike the wild forms, show evidence of dog-like social-cognitive skills. PLoS ONE. 2012;7:e43267. doi: 10.1371/journal.pone.0043267.

- 18. Malavasi R., Huber L. Evidence of heterospecific referential communication from domestic horses (Equus caballus) to humans. Anim. Cogn. 2016;19:899–909. doi: 10.1007/s10071-016-0987-0.

- 19. Takimoto A., Hori Y., Fujita K. Horses (Equus caballus) adaptively change the modality of their begging behavior as a function of human attentional states. Psychologia. 2016;59:100–111. doi: 10.2117/psysoc.2016.100.

- 20. Merola I., Lazzaroni M., Marshall-Pescini S., Prato-Previde E. Social referencing and cat–human communication. Anim. Cogn. 2015;18:639–648. doi: 10.1007/s10071-014-0832-2.

- 21. Galvan M., Vonk J. Man’s other best friend: Domestic cats (F. silvestris catus) and their discrimination of human emotion cues. Anim. Cogn. 2016;19:193–205. doi: 10.1007/s10071-015-0927-4.

- 22. Quaranta A., D’Ingeo S., Amoruso R., Siniscalchi M. Emotion Recognition in Cats. Animals. 2020;10:1107. doi: 10.3390/ani10071107.

- 23. Smith A.V., Proops L., Grounds K., Wathan J., McComb K. Functionally relevant responses to human facial expressions of emotion in the domestic horse (Equus caballus). Biol. Lett. 2016;12:20150907. doi: 10.1098/rsbl.2015.0907.

- 24. Nakamura K., Takimoto-Inose A., Hasegawa T. Cross-modal perception of human emotion in domestic horses (Equus caballus). Sci. Rep. 2018;8:8660. doi: 10.1038/s41598-018-26892-6.

- 25. Nawroth C., Albuquerque N., Savalli C., Single M.S., McElligott A.G. Goats prefer positive human emotional facial expressions. R. Soc. Open Sci. 2018;5:180491. doi: 10.1098/rsos.180491.

- 26. Vigne J.D., Guilaine J., Debue K., Haye L., Gérard P. Early taming of the cat in Cyprus. Science. 2004;304:259. doi: 10.1126/science.1095335.

- 27. Driscoll C.A., Clutton-Brock J., Kitchener A.C., O’Brien S.J. The Taming of the cat. Genetic and archaeological findings hint that wildcats became housecats earlier–and in a different place–than previously thought. Sci. Am. 2009;300:68–75. doi: 10.1038/scientificamerican0609-68.

- 28. Karsh E.B., Turner D.C. The Domestic Cat: The Biology of Its Behaviour. Cambridge University Press; Cambridge, UK: 1988. The human-cat relationship; pp. 159–177.

- 29. Mertens C. Human-Cat Interactions in the Home Setting. Anthrozoös. 1991;4:214–231. doi: 10.2752/089279391787057062.

- 30. Turner D.C. The ethology of the human-cat relationship. Schweiz. Arch. Tierheilkd. 1991;133:63.

- 31. Bradshaw J.W. Sociality in cats: A comparative review. J. Vet. Behav. 2016;11:113–124. doi: 10.1016/j.jveb.2015.09.004.

- 32. Macdonald D.W., Yamaguchi N., Kerby G. The Domestic Cat: The Biology of Its Behaviour. Volume 2. Cambridge University Press; Cambridge, UK: 2000. Group-living in the domestic cat: Its sociobiology and epidemiology; pp. 95–118.

- 33. Curtis T.M., Knowles R.J., Crowell-Davis S.L. Influence of familiarity and relatedness on proximity and allogrooming in domestic cats (Felis catus). Am. J. Vet. Res. 2003;64:1151–1154. doi: 10.2460/ajvr.2003.64.1151.

- 34. Bradshaw J., Cameron-Beaumont C. The Domestic Cat: The Biology of Its Behaviour. Cambridge University Press; Cambridge, UK: 2000. The signalling repertoire of the domestic cat and its undomesticated relatives; pp. 67–93.

- 35. Bradshaw J.W., Casey R.A., Brown S.L. The Behaviour of the Domestic Cat. CABI; Wallingford, UK: 2012.

- 36. Feuerstein N., Terkel J. Interrelationships of dogs (Canis familiaris) and cats (Felis catus L.) living under the same roof. Appl. Anim. Behav. Sci. 2008;113:150–165. doi: 10.1016/j.applanim.2007.10.010.

- 37. Vitale Shreve K.R., Udell M.A. What’s inside your cat’s head? A review of cat (Felis silvestris catus) cognition research past, present and future. Anim. Cogn. 2015;18:1195–1206. doi: 10.1007/s10071-015-0897-6.

- 38. Saito A., Shinozuka K., Ito Y., Hasegawa T. Domestic cats (Felis catus) discriminate their names from other words. Sci. Rep. 2019;9:5394. doi: 10.1038/s41598-019-40616-4.

- 39. Nicastro N. Perceptual and Acoustic Evidence for Species-Level Differences in Meow Vocalizations by Domestic Cats (Felis catus) and African Wild Cats (Felis silvestris lybica). J. Comp. Psychol. 2004;118:287–296. doi: 10.1037/0735-7036.118.3.287.

- 40. Yeon S.C., Kim Y.K., Park S.J., Lee S.S., Lee S.Y., Suh E.H., Houpt K.A., Chang H.H., Lee H.C., Yang B.G., et al. Differences between vocalization evoked by social stimuli in feral cats and house cats. Behav. Process. 2011;87:183–189. doi: 10.1016/j.beproc.2011.03.003.

- 41. Schötz S., van de Weijer J. A Study of Human Perception of Intonation in Domestic Cat Meows. In: Campbell N., Gibbon D., Hirst D., editors. Proceedings of the 7th International Conference on Speech Prosody; Dublin, Ireland. 20–23 May 2014; Dublin, Ireland: International Speech Communication Association; 2014. pp. 874–878.

- 42. Tavernier C., Ahmed S., Houpt K.A., Yeon S.C. Feline vocal communication. J. Vet. Sci. 2020;21. doi: 10.4142/jvs.2020.21.e18.

- 43. Fermo J.L., Schnaider M.A., Silva A.H.P., Molento C.F.M. Only when it feels good: Specific cat vocalizations other than meowing. Animals. 2019;9:878. doi: 10.3390/ani9110878.

- 44. Nicastro N., Owren M.J. Classification of domestic cat (Felis catus) vocalizations by naive and experienced human listeners. J. Comp. Psychol. 2003;117:44. doi: 10.1037/0735-7036.117.1.44.

- 45. McComb K., Taylor A.M., Wilson C., Charlton B.D. The cry embedded within the purr. Curr. Biol. 2009;19:R507–R508. doi: 10.1016/j.cub.2009.05.033.

- 46. Ellis S.L., Swindell V., Burman O.H. Human classification of context-related vocalizations emitted by familiar and unfamiliar domestic cats: An exploratory study. Anthrozoös. 2015;28:625–634. doi: 10.1080/08927936.2015.1070005.

- 47. Schötz S., Van De Weijer J., Eklund R. Melody matters: An acoustic study of domestic cat meows in six contexts and four mental states. PeerJ Prepr. 2019;7:e27926v1. doi: 10.7287/peerj.preprints.27926v1.

- 48. Cameron-Beaumont C.L. Ph.D. Thesis. University of Southampton; Southampton, UK: 1999. Visual and Tactile Communication in the Domestic Cat (Felis silvestris catus) and Undomesticated Small Felids.

- 49. Mertens C., Turner D.C. Experimental analysis of human-cat interactions during first encounters. Anthrozoös. 1988;2:83–97. doi: 10.2752/089279389787058109.

- 50. Atkinson T. Practical Feline Behaviour: Understanding Cat Behaviour and Improving Welfare. CABI; Wallingford, UK: 2018.

- 51.Scheumann M., Hasting A.S., Kotz S.A., Zimmermann E. The voice of emotion across species: How do human listeners recognize animals’ affective states? PLoS ONE. 2014;9:e91192. doi: 10.1371/journal.pone.0091192. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 52.Tallet C., Špinka M., Maruščáková I., Šimeček P. Human perception of vocalizations of domestic piglets and modulation by experience with domestic pigs (Sus scrofa) J. Comp. Psychol. 2010;124:81. doi: 10.1037/a0017354. [DOI] [PubMed] [Google Scholar]

- 53.Pongrácz P., Molnár C., Miklósi A., Csányi V. Human listeners are able to classify dog (Canis familiaris) barks recorded in different situations. J. Comp. Psychol. 2005;119:136. doi: 10.1037/0735-7036.119.2.136. [DOI] [PubMed] [Google Scholar]

- 54.Belin P., Fecteau S., Charest I., Nicastro N., Hauser M.D., Armony J.L. Human cerebral response to animal affective vocalizations. Proc. R. Soc. B Biol. Sci. 2008;275:473–481. doi: 10.1098/rspb.2007.1460. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 55.Cannas S., Mattiello S., Battini M., Ingraffia S., Cadoni D., Palestrini C. Evaluation of cat’s behavior during three different daily management situations. J. Vet. Behav. 2020;37:93–100. doi: 10.1016/j.jveb.2019.12.004. [DOI] [Google Scholar]

- 56.Zahn-Waxler C., Radke-Yarrow M. The origins of empathic concern. Motiv. Emot. 1990;14:107–130. doi: 10.1007/BF00991639. [DOI] [Google Scholar]

- 57.Preston S.D., De Waal F.B. Empathy: Its ultimate and proximate bases. Behav. Brain Sci. 2002;25:1–20. doi: 10.1017/S0140525X02000018. [DOI] [PubMed] [Google Scholar]

- 58.Smith A. Cognitive empathy and emotional empathy in human behavior and evolution. Psychol. Rec. 2006;56:3–21. doi: 10.1007/BF03395534. [DOI] [Google Scholar]

- 59.Vellante M., Baron-Cohen S., Melis M., Marrone M., Petretto D.R., Masala C., Preti A. The “Reading the Mind in the Eyes” test: Systematic review of psychometric properties and a validation study in Italy. Cogn. Neuropsychiatry. 2013;18:326–354. doi: 10.1080/13546805.2012.721728. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 60.Zaki J. Empathy: A motivated account. Psychol. Bull. 2014;140:1608. doi: 10.1037/a0037679. [DOI] [PubMed] [Google Scholar]

- 61.Israelashvili J., Sauter D., Fischer A. Cognition and Emotion. Taylor & Francis; Abingdon, UK: 2020. Two facets of affective empathy: Concern and distress have opposite relationships to emotion recognition; pp. 1–11. [DOI] [PubMed] [Google Scholar]

- 62. Christov-Moore L., Simpson E.A., Coudé G., Grigaityte K., Iacoboni M., Ferrari P.F. Empathy: Gender effects in brain and behavior. Neurosci. Biobehav. Rev. 2014;46:604–627. doi: 10.1016/j.neubiorev.2014.09.001.

- 63. Hall J.A. Gender effects in decoding nonverbal cues. Psychol. Bull. 1978;85:845. doi: 10.1037/0033-2909.85.4.845.

- 64. Hall J.A., Matsumoto D. Gender differences in judgments of multiple emotions from facial expressions. Emotion. 2004;4:201. doi: 10.1037/1528-3542.4.2.201.

- 65. McClure E.B. A meta-analytic review of sex differences in facial expression processing and their development in infants, children, and adolescents. Psychol. Bull. 2000;126:424. doi: 10.1037/0033-2909.126.3.424.

- 66. Ellingsen K., Zanella A.J., Bjerkås E., Indrebø A. The relationship between empathy, perception of pain and attitudes toward pets among Norwegian dog owners. Anthrozoös. 2010;23:231–243. doi: 10.2752/175303710X12750451258931.

- 67. Norring M., Wikman I., Hokkanen A.H., Kujala M.V., Hänninen L. Empathic veterinarians score cattle pain higher. Vet. J. 2014;200:186–190. doi: 10.1016/j.tvjl.2014.02.005.

- 68. Ludovico L.A., Ntalampiras S., Presti G., Cannas S., Battini M., Mattiello S. CatMeows: A Publicly-Available Dataset of Cat Vocalizations. Zenodo. 2020. doi: 10.5281/zenodo.4008297.

- 69. Ludovico L.A., Ntalampiras S., Presti G., Cannas S., Battini M., Mattiello S. CatMeows: A Publicly-Available Dataset of Cat Vocalizations; Proceedings of the 27th International Conference on Multimedia Modeling; Prague, Czech Republic. 25–27 January 2021.

- 70. Alías F., Socoró J.C., Sevillano X. A review of physical and perceptual feature extraction techniques for speech, music and environmental sounds. Appl. Sci. 2016;6:143. doi: 10.3390/app6050143.

- 71. Camacho A., Harris J.G. A sawtooth waveform inspired pitch estimator for speech and music. J. Acoust. Soc. Am. 2008;124:1638–1652. doi: 10.1121/1.2951592.

- 72. Daniel P., Weber R. Psychoacoustical roughness: Implementation of an optimized model. Acta Acust. United Acust. 1997;83:113–123.

- 73. Plomp R., Levelt W.J.M. Tonal consonance and critical bandwidth. J. Acoust. Soc. Am. 1965;38:548–560. doi: 10.1121/1.1909741.

- 74. Lartillot O., Toiviainen P. A Matlab toolbox for musical feature extraction from audio; Proceedings of the International Conference on Digital Audio Effects; Bordeaux, France. 10–14 September 2007; pp. 237–244.

- 75. Vassilakis P. Auditory roughness estimation of complex spectra—Roughness degrees and dissonance ratings of harmonic intervals revisited. J. Acoust. Soc. Am. 2001;110:2755. doi: 10.1121/1.4777600.

- 76. Pollard H.F., Jansson E.V. A tristimulus method for the specification of musical timbre. Acta Acust. United Acust. 1982;51:162–171.

- 77. Paul E.S. Empathy with animals and with humans: Are they linked? Anthrozoös. 2000;13:194–202. doi: 10.2752/089279300786999699.

- 78. Colombo E., Pelosi A., Prato-Previde E. Empathy towards animals and belief in animal-human-continuity in Italian veterinary students. Anim. Welf. 2016;25:275–286. doi: 10.7120/09627286.25.2.275.

- 79. Colombo E.S., Crippa F., Calderari T., Prato-Previde E. Empathy toward animals and people: The role of gender and length of service in a sample of Italian veterinarians. J. Vet. Behav. 2017;17:32–37. doi: 10.1016/j.jveb.2016.10.010.

- 80. Stubsjøen S.M., Moe R.O., Bruland K., Lien T., Muri K. Reliability of observer ratings: Qualitative behaviour assessments of shelter dogs using a fixed list of descriptors. Vet. Anim. Sci. 2020;10:100145. doi: 10.1016/j.vas.2020.100145.

- 81. Lambert W.E., Hodgson R.C., Gardner R.C., Fillenbaum S. Evaluational reactions to spoken languages. J. Abnorm. Soc. Psychol. 1960;60:44. doi: 10.1037/h0044430.

- 82. Campbell-Kibler K. Sociolinguistics and perception. Lang. Linguist. Compass. 2010;4:377–389. doi: 10.1111/j.1749-818X.2010.00201.x.

- 83. Ntalampiras S., Ludovico L.A., Presti G., Prato Previde E., Battini M., Cannas S., Palestrini C., Mattiello S. Automatic Classification of Cat Vocalizations Emitted in Different Contexts. Animals. 2019;9:543. doi: 10.3390/ani9080543.

- 84. Dawson L., Cheal J., Niel L., Mason G. Humans can identify cats’ affective states from subtle facial expressions. Anim. Welf. 2019;28:519–531. doi: 10.7120/09627286.28.4.519.

- 85. Schirmer A., Seow C.S., Penney T.B. Humans process dog and human facial affect in similar ways. PLoS ONE. 2013;8:e74591. doi: 10.1371/journal.pone.0074591.

- 86. Pongrácz P., Molnár C., Miklósi Á. Acoustic parameters of dog barks carry emotional information for humans. Appl. Anim. Behav. Sci. 2006;100:228–240. doi: 10.1016/j.applanim.2005.12.004.

- 87. Pongrácz P., Molnár C., Dóka A., Miklósi Á. Do children understand man’s best friend? Classification of dog barks by pre-adolescents and adults. Appl. Anim. Behav. Sci. 2011;135:95–102. doi: 10.1016/j.applanim.2011.09.005.

- 88. Yin S. A new perspective on barking in dogs (Canis familiaris). J. Comp. Psychol. 2002;116:189. doi: 10.1037/0735-7036.116.2.189.

- 89. Lawrence E.J., Shaw P., Baker D., Baron-Cohen S., David A.S. Measuring empathy: Reliability and validity of the Empathy Quotient. Psychol. Med. 2004;34:911. doi: 10.1017/S0033291703001624.

- 90. Proverbio A.M., Matarazzo S., Brignone V., Del Zotto M., Zani A. Processing valence and intensity of infant expressions: The roles of expertise and gender. Scand. J. Psychol. 2007;48:477–485. doi: 10.1111/j.1467-9450.2007.00616.x.

- 91. Thayer J., Johnsen B.H. Sex differences in judgement of facial affect: A multivariate analysis of recognition errors. Scand. J. Psychol. 2000;41:243–246. doi: 10.1111/1467-9450.00193.

- 92. Mills D. The ISFM Guide to Feline Stress and Health. International Cat Care; Tisbury, UK: 2016. What are stress and distress and what emotions are involved? Feline Stress and Health—Managing negative emotions to improve feline health and wellbeing.

Associated Data
