Abstract

Background

Artificial intelligence (AI) has recently achieved considerable success in different domains including medical applications. Although current advances are expected to impact surgery, up until now AI has not been able to leverage its full potential due to several challenges that are specific to that field.

Summary

This review summarizes the data-driven methods and technologies that are prerequisites for different AI-based assistance functions in the operating room. It highlights the potential effects of AI usage in surgery and concludes with the ongoing challenges to enabling AI for surgery.

Key Messages

AI-assisted surgery will enable data-driven decision-making via decision support systems and cognitive robotic assistance. The use of AI for workflow analysis will help provide appropriate assistance in the right context. The requirements for such assistance must be defined by surgeons in close cooperation with computer scientists and engineers. Once the existing challenges have been solved, AI assistance has the potential to improve patient care by supporting the surgeon without replacing him or her.

Keywords: Surgical data science, Robot-assisted surgery, Artificial intelligence in surgery, Cognitive surgical robotics, Sensor-enhanced operating room, Workflow analysis

The Potential of Artificial Intelligence in Surgery

Artificial intelligence (AI) has recently achieved considerable success in domains such as object detection, speech recognition, and natural language processing [1]. Deep learning (DL) techniques in particular have been responsible for these breakthroughs, and they have experienced a renaissance due to the massive increase in computational power and data availability [2]. DL is a subset of machine learning, which itself is part of the all-encompassing concept of AI. DL methods are based on artificial neural networks, which are inspired by neurons in a biological brain. In DL, a model is trained for a specific task on a large amount of data; it learns from these data and then makes predictions for that task, adapting flexibly to new data.

Recently, several success stories have been published in the medical domain based on DL for image classification, such as prediction of cardiovascular risk based on retinal images [3], skin lesion classification [4], or breast cancer detection based on mammograms [5]. However, in surgery, AI has not yet leveraged its full potential, due to several challenges that are specific to this discipline. Unlike the aforementioned examples, which focus on the analysis of static images, surgery involves procedural data from a dynamic environment that includes the patient, different devices and sensors in the operating room (OR), and the OR team, as well as domain knowledge such as clinical guidelines or experience from previous procedures [6]. Furthermore, DL methods require large amounts of annotated data for training, which is especially challenging in surgery. In addition, machine learning methods must be capable of running in real time if they are to be used during an operation.

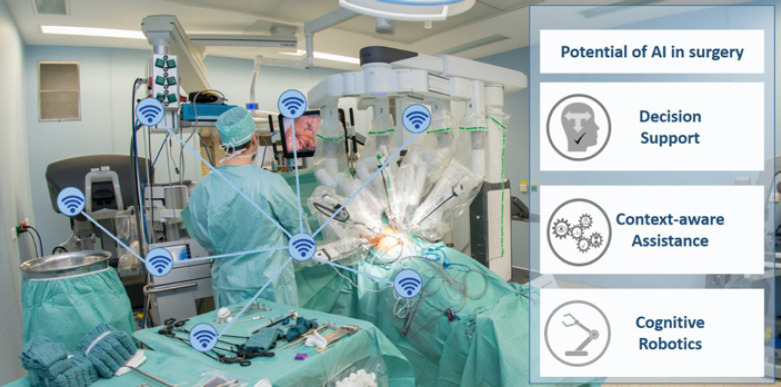

Surgical data science is a newly emerging field that has the aim of “improving the quality of interventional healthcare and its value through capturing, organization, analysis, and modeling of data” [6], especially using AI-based methods. Potential applications in surgery, such as decision support, context-aware assistance, and cognitive robotics, can be identified along the entire surgical treatment path (Fig. 1).

Fig. 1.

Potential of artificial intelligence (AI) in surgery based on a sensor-enhanced operating room (SensorOR).

The remainder of this review focuses on data-driven methods and technologies as a prerequisite for different AI-based assistance functions in the OR. It then highlights the potential effects of AI use in surgery and concludes with the ongoing challenges to enabling AI for surgery.

Enabling AI-Assisted Surgery

The following paragraphs provide a short introduction to the basic technologies and concepts needed as a prerequisite for AI-assisted surgery. This includes (1) access to comprehensive data in a sensor-enhanced OR (SensorOR) as well as (2) enrichment of those data with surgical knowledge by annotation to make them usable for (3) machine learning methods that provide AI assistance to the surgeon.

SensorOR

Today's operating theaters are characterized by a multitude of information sources. For example, preoperative image and planning data provide information about the positions of tumors and the planned course of the operation; various medical devices (e.g., a suction-irrigation system, operating light, and anesthesia monitor) provide regular status reports; and intraoperative imaging devices (endoscope, ultrasound, etc.) provide data about the patient and current processes in the OR. In their entirety, these heterogeneous sensors provide the information necessary to infer the actual course of the operation and to provide proper assistance at the right time. This is subsumed under the term “context-aware assistance” [7], which avoids information overload and decreases the cognitive load, in particular in an already stressful and complex environment such as the OR. A prerequisite for providing such assistance is a SensorOR in which all devices are connected to collect their data (Fig. 1).

Data Annotation

In order for AI to learn from collected data, the original raw data has to be enriched with additional knowledge through annotation. The most common forms of annotation are classification (e.g., which organs are visible in an image), semantic segmentation (e.g., which pixels belong to which organ in an image), and numerical regression (e.g., the size of an object). The process of data annotation is often time-consuming, especially for large data sets. Annotation of surgical data requires expert knowledge, which can be expensive to obtain and is often a bottleneck. In addition, only a fraction of the data is digitally available, and there are no standard acquisition and annotation protocols. The data has to be representative of the task to be learned, preferably from multiple centers and accessible in comprehensive open data registries, which raises questions regarding privacy and confidentiality.
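The three annotation forms named above can be made concrete with a small, purely illustrative sketch. The label vocabulary `ORGANS`, the `FrameAnnotation` container, and the toy 3x4 mask are assumptions introduced here for illustration and do not come from any of the cited data sets.

```python
from dataclasses import dataclass
from typing import List

# Hypothetical label vocabulary; a real project would take it from an ontology.
ORGANS = ["background", "liver", "gallbladder"]


@dataclass
class FrameAnnotation:
    """Three common annotation forms attached to one (toy) image."""
    visible_organs: List[str]   # classification: which organs are visible
    mask: List[List[int]]       # semantic segmentation: class index per pixel
    gallbladder_size_px: int    # numerical regression target: object size in pixels


def annotate(mask: List[List[int]]) -> FrameAnnotation:
    """Derive the image-level and numeric labels from a pixel-wise mask."""
    present = {c for row in mask for c in row if c != 0}
    visible = [ORGANS[c] for c in sorted(present)]
    size = sum(row.count(ORGANS.index("gallbladder")) for row in mask)
    return FrameAnnotation(visible, mask, size)


# Toy 3x4 "image": 0 = background, 1 = liver, 2 = gallbladder
toy_mask = [
    [0, 1, 1, 0],
    [0, 1, 2, 2],
    [0, 0, 2, 0],
]
ann = annotate(toy_mask)
print(ann.visible_organs, ann.gallbladder_size_px)
```

The sketch also illustrates why segmentation is the most expensive form to produce: the image-level and numeric labels can be derived from a pixel-wise mask, but not the other way around.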

Several approaches to reducing the annotation effort have been proposed, such as active learning [8, 9, 10], where only the most informative data points are selected for annotation, as well as crowdsourcing, where the “wisdom of the crowd” can be utilized for certain clinical tasks [11, 12]. A promising pathway for overcoming the lack of annotated data is to generate realistic synthetic images based on a simple simulation by using generative adversarial networks [13] (Fig. 2).
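As a minimal sketch of how "most informative" can be operationalized in active learning, the example below ranks unlabeled frames by the entropy of a model's predicted class probabilities and sends the most uncertain ones to the annotator. This entropy-based uncertainty sampling is one common selection strategy chosen here for simplicity; it is not the specific method of the cited works, and the frame names and probabilities are invented for illustration.

```python
import math
from typing import Dict, List


def entropy(probs: List[float]) -> float:
    """Shannon entropy of a predicted class distribution (higher = less certain)."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)


def select_for_annotation(predictions: Dict[str, List[float]], budget: int) -> List[str]:
    """Return the `budget` unlabeled items whose predictions are most uncertain."""
    ranked = sorted(predictions, key=lambda k: entropy(predictions[k]), reverse=True)
    return ranked[:budget]


# Hypothetical model outputs over three surgical phases for unlabeled frames.
unlabeled = {
    "frame_001": [0.98, 0.01, 0.01],  # confident prediction, little to learn
    "frame_002": [0.34, 0.33, 0.33],  # almost uniform, highly uncertain
    "frame_003": [0.70, 0.20, 0.10],
    "frame_004": [0.50, 0.49, 0.01],
}
selected = select_for_annotation(unlabeled, budget=2)
print(selected)
```

In a full active learning loop, the selected frames would be annotated by an expert, added to the training set, and the model retrained before the next selection round.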

Fig. 2.

Left: example of generating synthetic images based on simple laparoscopic simulations. Middle: generative adversarial networks translate these images so they look like real laparoscopic images. These images, along with their generated labels, can be used without further annotation effort. Right: machine-learning-based detection of the surgical phase and prediction of the duration of the remaining procedure during laparoscopic cholecystectomy.

Ontologies are used to ensure a consistent vocabulary for annotation. They are widely used in the medical domain, for instance for clinical terms [14], for modeling surgical knowledge [15], for identifying risks across medical processes [16], and for describing surgical processes [17, 18].

In other AI domains [19], there are many open data sets that can be used to develop, evaluate, and compare different machine learning algorithms. While access to data sources is also crucial in surgery, only a few public annotated data sets exist for applications such as surgical phase detection [20, 21], surgical training [22, 23], and segmentation [21, 24] [7]. The Endoscopic Vision Challenge [25], an initiative that supports the availability of new public data sets for the systematic comparison of algorithms, has hosted challenges in surgical vision.

Machine Learning

Machine learning algorithms can operate in a supervised or an unsupervised manner. While in both cases the algorithms rely on data to learn, in supervised learning, the data have to be annotated [26]. DL, a popular example of supervised learning [2], is the current state of the art for many applications, including surgical data analysis. Methods for DL have the benefit that they can be “pretrained,” meaning that a method used to solve one problem, e.g., transforming grayscale laparoscopic images into color images, can be retrained to solve a different task, e.g., segmenting laparoscopic tools [27]. This makes it possible to retain knowledge from the former task and apply it to improve performance on the latter.

Such methods can be used for workflow analysis through automatic segmentation of procedures into phases or surgical actions. These methods rely, for example, on data from tool usage [28, 29] or robotic kinematics [30] or, by using DL, directly on camera data, such as from an endoscope [20, 31, 32] or 3D camera [32]. DL methods for determining surgery duration (Fig. 2) [33, 34, 35] and for predicting surgical tool usage [37] have also been developed.
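To illustrate what segmenting a procedure into phases means at the data level, the following sketch turns noisy frame-wise phase predictions into contiguous phase segments. It assumes that some classifier has already produced one label per video frame; the phase names, the majority-vote smoothing, and the window size are illustrative assumptions and not the approach of any cited method.

```python
from collections import Counter
from typing import List, Tuple


def smooth(labels: List[str], window: int = 3) -> List[str]:
    """Majority vote over a centered sliding window to suppress single-frame flicker."""
    half = window // 2
    out = []
    for i in range(len(labels)):
        neighborhood = labels[max(0, i - half): i + half + 1]
        counts = Counter(neighborhood)
        top, top_count = counts.most_common(1)[0]
        # On a tie, keep the frame's own label so the result is deterministic.
        out.append(labels[i] if counts[labels[i]] == top_count else top)
    return out


def to_segments(labels: List[str]) -> List[Tuple[str, int, int]]:
    """Collapse frame-wise labels into (phase, first_frame, last_frame) segments."""
    segments: List[Tuple[str, int, int]] = []
    for i, lab in enumerate(labels):
        if segments and segments[-1][0] == lab:
            segments[-1] = (lab, segments[-1][1], i)
        else:
            segments.append((lab, i, i))
    return segments


# Hypothetical frame-wise predictions with one misclassified frame (index 2).
frames = [
    "preparation", "preparation", "dissection", "preparation", "preparation",
    "dissection", "dissection", "dissection", "clipping", "clipping",
]
segments = to_segments(smooth(frames))
print(segments)
```

Because the window is centered, this smoothing looks at future frames and is therefore only suitable for retrospective analysis; an intraoperative system would need a causal variant that uses past frames only.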

Of further importance are methods for semantic segmentation, especially surgical scene analysis, which provides information on relevant structures, such as tools and organs, during surgery. Until recently, most research has focused on surgical tools [36], though the broader topic of analyzing the entire surgical scene is becoming more popular [38]. Semantic segmentation has, for example, been applied to take measurements during surgery with a stereo-endoscope [39].

AI has the potential to enhance soft-tissue navigation, in which risk and target structures are highlighted during surgery. DL methods have been used in data-driven registration and deformation models that estimate non-rigid deformations of structures inside an organ when given only the displacement of the partial organ surface [40, 41, 44], a prerequisite for soft-tissue navigation.

Potential Effects of AI in Surgery

The abovementioned novel AI technologies developed by computer scientists will have tremendous effects on surgical practice. The effects can be categorized into the fields of decision support, context-aware assistance, and cognitive robotics [6].

Decision support systems that gather patient information in order to provide a clinical recommendation are commercially available and under scientific investigation in internal medicine [42], but they have so far brought about only marginal change in clinical practice. Novel AI-based systems can predict future acute kidney injury based on data from hundreds of thousands of patients [43]. Similarly, AI can be used to predict circulatory failure in the intensive care unit [45]. Another system for intraoperative blood pressure management has shown its benefit in supporting the anesthesiologist in a randomized controlled trial [46]. In surgery, however, we still lack this kind of assistance system. For example, in oncological liver surgery, one would like to have a system that combines patient information (laboratory results, imaging, comorbidities, and previous surgeries) with evidence-based information (scientific publications) and surgical experience (the clinical course of previously treated patients) to avoid post-hepatectomy liver failure or to choose the optimal multimodal treatment. Similarly, AI may help to improve systems of predictive analytics that estimate survival after pancreatoduodenectomy for pancreatic cancer [47] or secondary effects of surgery, such as incisional hernia [48].

Context-aware assistance systems will provide this additional information for the right patient at the right point in time. To achieve this, the surgical workflow may be optimized by modeling the process [49] to define the important steps of an operation and recognizing them using machine learning, as has been shown by Twinanda et al. [20] for laparoscopic cholecystectomy. This may also allow the detection of dangerous deviations from the optimal workflow. In addition, the remaining duration of a surgery could be predicted to optimize the organizational workflow and treat more patients. Bodenstedt et al. [34] demonstrated online prediction of procedure duration using unlabeled endoscopic video data and surgical device data in a laparoscopic setting. Relevant information may also be obtained through AI-based computer vision that detects structures at risk, such as the cystic duct and common bile duct during laparoscopic cholecystectomy, as demonstrated by Tokuyasu et al. [50].

AI may also change practice in robot-assisted surgery towards cognitive surgical robotics. Today, clinically used surgical robots are mere telemanipulators without any autonomous activity. In research, robotic systems have been developed for situation-aware automatic needle insertion [51]. Another system has shown its superiority over humans in performing bowel anastomosis on a porcine bowel [52]. However, even these robotic systems do not understand the surgical scene and do not adapt to the surgical workflow. This is why surgical workflow analysis and an understanding of the surgical scene have to be developed and validated towards a level of robustness where they can be used as an information source for cognitive surgical robots. Only then can auxiliary tasks such as control of the laparoscopic camera and stretching tissue, or even certain major surgical tasks such as an anastomosis, be performed by a cognitive robot. Using AI, such a cognitive robot will understand its environment and may even learn from experience to improve its performance over time.

Conclusions

AI-assisted surgery enables objective data-driven decision-making and will have a strong impact on how surgery is performed in the future. The clinical implications follow from the aim of assisting, not replacing, surgical expertise. Decision support systems will recommend, not make, a decision. Cognitive robots will perform autonomous actions only as requested by surgeons. Less experienced surgeons will need this support more often than experienced surgeons. However, in the end, all AI assistance has to improve patient outcomes to be effective.

While machine learning methods are evolving and further achievements are to be expected, numerous challenges in the surgical domain have hampered any significant impact so far. Challenges arise regarding data, methods, devices, and integration. Machine learning methods require large amounts of labeled training data, which are difficult and expensive to acquire considering the need for high-quality annotations. Furthermore, such methods need to be robust and accurate, as well as able to deal with heterogeneous data sources and high variability, since the course of surgery greatly depends on the patient and the OR team, which makes it difficult to predict anomalies that are not represented in the training data. To reduce the amount of training data and to address diversity, DL approaches could be combined with semantic knowledge to incorporate medical background knowledge and context. Up to now, such methods have behaved like a “black box,” which is critical for such a high-risk domain as surgery. Research towards explainable AI can overcome the black box paradigm and make decisions more transparent and traceable by humans [53]. Another important aspect is related to the devices and their integration; for instance, to enable data-driven online analysis in the OR, devices have to be connected and accessible (SensorOR), which can only be achieved in collaboration with the device manufacturers.

In summary, AI-assisted surgery has the potential to improve patient care if the aforementioned challenges are addressed by all stakeholders including clinicians, engineers, patients, and industry.

Conflict of Interest Statement

The authors have no conflicts of interest to declare.

Funding Sources

Funded by the German Research Foundation (DFG, Deutsche Forschungsgemeinschaft) as part of Germany's Excellence Strategy − EXC 2050/1 − Project ID 390696704 − Cluster of Excellence “Centre for Tactile Internet with Human-in-the-Loop” (CeTI) of TU Dresden.

Author Contributions

All authors drafted and reviewed the manuscript.

Acknowledgements

We thank Anna Kisilenko, Duc Tran, Michael Haselbeck-Köbler, Jonathan Chen, and Johanna Brandenburg for assistance in reviewing the literature.

References

- 1. Esteva A, Robicquet A, Ramsundar B, Kuleshov V, DePristo M, Chou K, et al. A guide to deep learning in healthcare. Nat Med. 2019 Jan;25(1):24–9. doi: 10.1038/s41591-018-0316-z.

- 2. LeCun Y, Bengio Y, Hinton G. Deep learning. Nature. 2015 May;521(7553):436–44. doi: 10.1038/nature14539.

- 3. Poplin R, Varadarajan AV, Blumer K, Liu Y, McConnell MV, Corrado GS, et al. Prediction of cardiovascular risk factors from retinal fundus photographs via deep learning. Nat Biomed Eng. 2018 Mar;2(3):158–64. doi: 10.1038/s41551-018-0195-0.

- 4. Esteva A, Kuprel B, Novoa RA, Ko J, Swetter SM, Blau HM, et al. Dermatologist-level classification of skin cancer with deep neural networks. Nature. 2017 Feb;542(7639):115–8. doi: 10.1038/nature21056.

- 5. McKinney SM, Sieniek M, Godbole V, Godwin J, Antropova N, Ashrafian H, et al. International evaluation of an AI system for breast cancer screening. Nature. 2020 Jan;577(7788):89–94. doi: 10.1038/s41586-019-1799-6.

- 6. Maier-Hein L, Vedula SS, Speidel S, Navab N, Kikinis R, Park A, et al. Surgical data science for next-generation interventions. Nat Biomed Eng. 2017 Sep;1(9):691–6. doi: 10.1038/s41551-017-0132-7.

- 7. Vercauteren T, Unberath M, Padoy N, Navab N. CAI4CAI: The Rise of Contextual Artificial Intelligence in Computer Assisted Interventions. Proc IEEE Inst Electr Electron Eng. 2020 Jan;108(1):198–214. doi: 10.1109/JPROC.2019.2946993.

- 8. Bodenstedt S, Rivoir D, Jenke A, Wagner M, Breucha M, Müller-Stich B, et al. Active learning using deep Bayesian networks for surgical workflow analysis. Int J CARS. 2019 Jun;14(6):1079–87. doi: 10.1007/s11548-019-01963-9.

- 9. Manoj AS, Hussain MA, Teja PS. Patient health monitoring using IoT. In: Mobile Health Applications for Quality Healthcare Delivery. IGI Global; 2019. pp. 30–45.

- 10. Lecuyer G, Ragot M, Martin N, Launay L, Jannin P. Assisted phase and step annotation for surgical videos. Int J CARS. 2020 Apr;15(4):673–80. doi: 10.1007/s11548-019-02108-8.

- 11. Lendvay TS, White L, Kowalewski T. Crowdsourcing to assess surgical skill. JAMA Surg. 2015 Nov;150(11):1086–7. doi: 10.1001/jamasurg.2015.2405.

- 12. Maier-Hein L, Ross T, Gröhl J, Glocker B, Bodenstedt S, Stock C, et al. In: Ourselin S, Joskowicz L, Sabuncu M, Unal G, Wells W, editors. Medical Image Computing and Computer-Assisted Intervention – MICCAI 2016. Lecture Notes in Computer Science, vol. 9901. Cham: Springer; pp. 616–23.

- 13. Pfeiffer M, Funke I, Robu MR, Bodenstedt S, Strenger L, Engelhardt S, et al. Generating large labeled data sets for laparoscopic image processing tasks using unpaired image-to-image translation. In: Shen D, et al., editors. Medical Image Computing and Computer Assisted Intervention – MICCAI 2019. Lecture Notes in Computer Science, vol. 11768. Cham: Springer; pp. 119–27.

- 14. Donnelly K. SNOMED-CT: the advanced terminology and coding system for eHealth. Stud Health Technol Inform. 2006;121:279–90.

- 15. Mudunuri R, Burgert O, Neumuth T. Ontological Modelling of Surgical Knowledge. GI Jahrestagung. 2009;154:1044–54.

- 16. Uciteli A, Neumann J, Tahar K, Saleh K, Stucke S, Faulbrück-Röhr S, et al. Ontology-based specification, identification and analysis of perioperative risks. J Biomed Semantics. 2017 Sep;8(1):36. doi: 10.1186/s13326-017-0147-8.

- 17. Katić D, Julliard C, Wekerle AL, Kenngott H, Müller-Stich BP, Dillmann R, et al. LapOntoSPM: an ontology for laparoscopic surgeries and its application to surgical phase recognition. Int J CARS. 2015 Sep;10(9):1427–34. doi: 10.1007/s11548-015-1222-1.

- 18. Gibaud B, Forestier G, Feldmann C, Ferrigno G, Gonçalves P, Haidegger T, et al. Toward a standard ontology of surgical process models. Int J CARS. 2018 Sep;13(9):1397–408. doi: 10.1007/s11548-018-1824-5.

- 19. Lin TY, Maire M, Belongie S, Hays J, Perona P, Ramanan D, et al. Microsoft COCO: common objects in context. In: Fleet D, Pajdla T, Schiele B, Tuytelaars T, editors. Computer Vision – ECCV 2014. Lecture Notes in Computer Science, vol. 8693. Cham: Springer; pp. 740–55.

- 20. Twinanda AP, Shehata S, Mutter D, Marescaux J, de Mathelin M, Padoy N. Endonet: A deep architecture for recognition tasks on laparoscopic videos. IEEE Trans Med Imaging. 2017 Jan;36(1):86–97. doi: 10.1109/TMI.2016.2593957.

- 21. Maier-Hein L, Wagner M, Ross T, Reinke A, Bodenstedt S, Full PM, et al. Heidelberg Colorectal Data Set for Surgical Data Science in the Sensor Operating Room. 2020 May 7. arXiv:2005.03501v1. doi: 10.1038/s41597-021-00882-2.

- 22. Gao Y, Vedula SS, Reiley CE, Ahmidi N, Varadarajan B, Lin HC, et al. JHU-ISI Gesture and Skill Assessment Working Set (JIGSAWS): a surgical activity dataset for human motion modeling. MICCAI Workshop: M2CAI. 2014 Sep;3:3.

- 23. Sarikaya D, Corso JJ, Guru KA. Detection and localization of robotic tools in robot-assisted surgery videos using deep neural networks for region proposal and detection. IEEE Trans Med Imaging. 2017 Jul;36(7):1542–9. doi: 10.1109/TMI.2017.2665671.

- 24. Flouty E, Kadkhodamohammadi A, Luengo I, Fuentes-Hurtado F, Taleb H, Barbarisi S, et al. CaDIS: cataract dataset for image segmentation. 2019 Jun 27. arXiv:1906.11586.

- 25. Endoscopic Vision Challenge MICCAI 2020 Lima. Available from: https://endovis.grandchallenge.org/

- 26. Norvig PR. Artificial Intelligence: A modern approach. Prentice Hall; 2002.

- 27. Ross T, Zimmerer D, Vemuri A, Isensee F, Wiesenfarth M, Bodenstedt S, et al. Exploiting the potential of unlabeled endoscopic video data with self-supervised learning. Int J CARS. 2018 Jun;13(6):925–33. doi: 10.1007/s11548-018-1772-0.

- 28. Padoy N, Blum T, Ahmadi SA, Feussner H, Berger MO, Navab N. Statistical modeling and recognition of surgical workflow. Med Image Anal. 2012 Apr;16(3):632–41. doi: 10.1016/j.media.2010.10.001.

- 29. Padoy N, Blum T, Feussner H, Berger MO, Navab N. On-Line Recognition of Surgical Activity for Monitoring in the Operating Room. In: IAAI'08: Proceedings of the 20th National Conference on Innovative Applications of Artificial Intelligence. 2008; vol. 3: pp. 1718–24.

- 30. Lea C, Hager GD, Vidal R. An improved model for segmentation and recognition of fine-grained activities with application to surgical training tasks. In: 2015 IEEE Winter Conf Appl Comput Vis. 2015 Jan:1123–9.

- 31. Funke I, Jenke A, Mees ST, Weitz J, Speidel S, Bodenstedt S. Temporal coherence-based self-supervised learning for laparoscopic workflow analysis. In: Stoyanov D, et al., editors. OR 2.0 Context-Aware Operating Theaters, Computer Assisted Robotic Endoscopy, Clinical Image-Based Procedures, and Skin Image Analysis. CARE 2018, CLIP 2018, OR 2.0 2018, ISIC 2018. Lecture Notes in Computer Science, vol. 11041. Cham: Springer.

- 32. Twinanda AP. Vision-based approaches for surgical activity recognition using laparoscopic and RBGD videos [doctoral dissertation]. Strasbourg: Université de Strasbourg; 2017.

- 33. Twinanda AP, Yengera G, Mutter D, Marescaux J, Padoy N. RSDNet: learning to predict remaining surgery duration from laparoscopic videos without manual annotations. IEEE Trans Med Imaging. 2019 Apr;38(4):1069–78. doi: 10.1109/TMI.2018.2878055.

- 34. Bodenstedt S, Wagner M, Mündermann L, Kenngott H, Müller-Stich B, Breucha M, et al. Prediction of laparoscopic procedure duration using unlabeled, multimodal sensor data. Int J CARS. 2019 Jun;14(6):1089–95. doi: 10.1007/s11548-019-01966-6.

- 35. Rivoir D, Bodenstedt S, von Bechtolsheim F, Distler M, Weitz J, Speidel S. Unsupervised Temporal Video Segmentation as an Auxiliary Task for Predicting the Remaining Surgery Duration. In: Zhou L, et al., editors. OR 2.0 Context-Aware Operating Theaters and Machine Learning in Clinical Neuroimaging. Cham: Springer; 2019. pp. 29–37.

- 36. Bouget D, Allan M, Stoyanov D, Jannin P. Vision-based and marker-less surgical tool detection and tracking: a review of the literature. Med Image Anal. 2017 Jan;35:633–54. doi: 10.1016/j.media.2016.09.003.

- 37. Rivoir D, Bodenstedt S, Funke I, von Bechtolsheim F, Distler M, Weitz J. Rethinking Anticipation Tasks: Uncertainty-Aware Anticipation of Sparse Surgical Instrument Usage for Context-Aware Assistance. In: Martel AL, et al., editors. Medical Image Computing and Computer Assisted Intervention – MICCAI 2020. Lecture Notes in Computer Science, vol. 12263. Cham: Springer; 2020. pp. 752–62.

- 38. Allan M, Kondo S, Bodenstedt S, Leger S, Kadkhodamohammadi R, Luengo I, et al. 2018 Robotic Scene Segmentation Challenge. 2020 Jan 30. arXiv:2001.11190.

- 39. Bodenstedt S, Wagner M, Mayer B, Stemmer K, Kenngott H, Müller-Stich B, et al. Image-based laparoscopic bowel measurement. Int J CARS. 2016 Mar;11(3):407–19. doi: 10.1007/s11548-015-1291-1.

- 40. Pfeiffer M, Riediger C, Weitz J, Speidel S. Learning soft tissue behavior of organs for surgical navigation with convolutional neural networks. Int J CARS. 2019 Jul;14(7):1147–55. doi: 10.1007/s11548-019-01965-7.

- 41. Brunet JN, Mendizabal A, Petit A, Golse N, Vibert E, Cotin S. Physics-based deep neural network for augmented reality during liver surgery. In: Brunet JN, et al., editors. MICCAI 2019 – 22nd International Conference on Medical Image Computing and Computer Assisted Intervention, Oct 2019, Shenzhen, China. Cham: Springer; 2019. pp. 137–45.

- 42. Moja L, Polo Friz H, Capobussi M, Kwag K, Banzi R, Ruggiero F, et al. Effectiveness of a Hospital-Based Computerized Decision Support System on Clinician Recommendations and Patient Outcomes: A Randomized Clinical Trial. JAMA Netw Open. 2019 Dec;2(12):e1917094. doi: 10.1001/jamanetworkopen.2019.17094.

- 43. Tomašev N, Glorot X, Rae JW, Zielinski M, Askham H, Saraiva A, et al. A clinically applicable approach to continuous prediction of future acute kidney injury. Nature. 2019 Aug;572(7767):116–9. doi: 10.1038/s41586-019-1390-1.

- 44. Pfeiffer M, Riediger C, Leger S, Kühn JP, Seppelt D, Hoffmann RT, et al. Non-Rigid Volume to Surface Registration Using a Data-Driven Biomechanical Model. In: Martel AL, et al., editors. Medical Image Computing and Computer Assisted Intervention – MICCAI 2020. Lecture Notes in Computer Science, vol. 12264. Cham: Springer; 2020.

- 45. Hyland SL, Faltys M, Hüser M, Lyu X, Gumbsch T, Esteban C, et al. Early prediction of circulatory failure in the intensive care unit using machine learning. Nat Med. 2020 Mar;26(3):364–73. doi: 10.1038/s41591-020-0789-4.

- 46. Wijnberge M, Geerts BF, Hol L, Lemmers N, Mulder MP, Berge P, et al. Effect of a machine learning–derived early warning system for intraoperative hypotension vs standard care on depth and duration of intraoperative hypotension during elective noncardiac surgery: the HYPE randomized clinical trial. JAMA. 2020 Mar;323(11):1052–60. doi: 10.1001/jama.2020.0592.

- 47. van Roessel S, Strijker M, Steyerberg EW, Groen JV, Mieog JS, Groot VP, et al. International validation and update of the Amsterdam model for prediction of survival after pancreatoduodenectomy for pancreatic cancer. Eur J Surg Oncol. 2019 Dec:105455. doi: 10.1016/j.ejso.2019.12.023.

- 48. Basta MN, Kozak GM, Broach RB, Messa CA 4th, Rhemtulla I, DeMatteo RP, et al. Can We Predict Incisional Hernia?: Development of a Surgery-specific Decision-Support Interface. Ann Surg. 2019 Sep;270(3):544–53. doi: 10.1097/SLA.0000000000003472.

- 49. Lalys F, Jannin P. Surgical process modelling: a review. Int J CARS. 2014 May;9(3):495–511. doi: 10.1007/s11548-013-0940-5.

- 50. Tokuyasu T, Iwashita Y, Matsunobu Y, Kamiyama T, Ishikake M, Sakaguchi S, et al. Development of an artificial intelligence system using deep learning to indicate anatomical landmarks during laparoscopic cholecystectomy. Surg Endosc. 2020 Apr:1–8. doi: 10.1007/s00464-020-07548-x.

- 51. Muradore R, Fiorini P, Akgun G, Barkana DE, Bonfe M, Boriero F, et al. Development of a cognitive robotic system for simple surgical tasks. Int J Adv Robot Syst. 2015 Apr;12(4):37.

- 52. Shademan A, Decker RS, Opfermann JD, Leonard S, Krieger A, Kim PC. Supervised autonomous robotic soft tissue surgery. Sci Transl Med. 2016 May;8(337):337ra64. doi: 10.1126/scitranslmed.aad9398.

- 53. Holzinger A. From machine learning to explainable AI. In: 2018 World Symposium on Digital Intelligence for Systems and Machines (DISA). Kosice; 2018. pp. 55–66.