Abstract

PURPOSE

There is increasing interest in implementing digital systems for remote monitoring of patients’ symptoms during routine oncology practice. Information is limited about the clinical utility and user perceptions of these systems.

METHODS

PRO-TECT is a multicenter trial evaluating implementation of electronic patient-reported outcomes (ePROs) among adults with advanced and metastatic cancers receiving treatment at US community oncology practices (ClinicalTrials.gov identifier: NCT03249090). Questions derived from the Patient-Reported Outcomes version of the Common Terminology Criteria for Adverse Events (PRO-CTCAE) are administered weekly by web or automated telephone system, with alerts to nurses for severe or worsening symptoms. To elicit user feedback, surveys were administered to participating patients and clinicians.

RESULTS

Among 496 patients across 26 practices, the majority found the system and questions easy to understand (95%), easy to use (93%), and relevant to their care (91%). Most patients reported that PRO information was used by their clinicians for care (70%), improved discussions with clinicians (73%), and made them feel more in control of their own care (77%), and most would recommend the system to other patients (89%). Scores for most patient feedback questions were significantly positively correlated with weekly PRO completion rates in both univariable and multivariable analyses. Among 57 nurses, most reported that PRO information was helpful for clinical documentation (79%), increased efficiency of patient discussions (84%), and was useful for patient care (75%). Among 39 oncologists, most of those who reviewed PRO information found it useful (91%), with 65% using PROs to guide patient discussions sometimes or often and 65% using PROs to make treatment decisions sometimes or often.

CONCLUSION

These findings support the clinical utility and value of implementing digital systems for monitoring PROs, including the PRO-CTCAE, in routine cancer care.

INTRODUCTION

Symptoms are commonly experienced among patients receiving cancer treatment and are a major cause of distress, functional disability, and emergency room/hospital utilization1,2 but go undetected and unaddressed by clinicians up to half the time.3-7 There is substantial interest in using digital strategies to systematically monitor patients’ symptoms in real-world clinical settings to catch problems early before they worsen or cause complications.8-10

CONTEXT

Key Objective

What are the perspectives of patients, nurses, and physicians about ongoing collection of patient-reported outcomes for symptom monitoring in their community oncology clinics?

Knowledge Generated

Almost all patients find online systems for self-reporting symptoms between visits easy to use, understandable, and valuable for communication and quality of care. Nurses and oncologists are also enthusiastic overall about patient-reported outcomes in clinic, although some are concerned about the workload added by symptom alerts.

Relevance

These findings demonstrate wide acceptance of patient-reported outcomes for symptom monitoring in routine cancer care by patients and their clinicians and pave the way for more widespread implementation. Work is needed to minimize the burden of symptom alerts on nurses.

Digital monitoring strategies include electronic patient-reported outcome (ePRO) systems in which symptom questions are loaded into online, downloadable, or automated telephone interfaces for patient self-completion, with real-time alerts triggered to clinic staff if severe or worsening symptoms are reported.11 A prior large, real-world single-center randomized controlled trial demonstrated that such an ePRO system (the “STAR” system) improved patients’ symptom control and quality of life, decreased emergency room visits, lengthened time tolerating chemotherapy, and increased overall survival.12,13

To evaluate a digital ePRO system for symptom monitoring in a multicenter real-world setting, the PRO-TECT cluster-randomized trial was initiated across community oncology practices in the US (ClinicalTrials.gov identifier: NCT03249090). All patient participants in this trial were receiving systemic treatment of advanced or metastatic cancer. Patients at 26 of the 52 participating practices were assigned to complete weekly ePRO self-reports from home for 1 year. The PRO-TECT digital ePRO system includes symptom questions derived from the National Cancer Institute’s Patient-Reported Outcomes version of the Common Terminology Criteria for Adverse Events (PRO-CTCAE) item library as well as questions about physical function (patient-reported performance status), falls, and financial toxicity.14

Although one purpose of PRO-CTCAE is evaluation of symptomatic adverse events in clinical trials, it has also been extensively tested previously in routine care settings among patients receiving cancer treatment. This includes development of the questions themselves through extensive qualitative cognitive debriefing in a national population of real-world patients,15 as well as evaluation of measurement properties (validity, reliability, sensitivity),16 recall period,17 mode equivalence,18 and software usability testing19 in populations of real-world adult patients.

Therefore, the performance of the PRO-CTCAE is already well established scientifically in routine care (real-world) populations. However, an additional step in evaluating PRO questions and digital systems for use in routine care is establishing clinical utility from the perspective of their key users: patients, nurses, and physicians.20-22 Specifically, patient perceptions can be elicited regarding comprehension, general usability, meaningfulness/relevance, communication/actionability, clinical utility, self-efficacy, and overall perceived value, whereas nurse and physician perceptions can be elicited regarding clinical utility and impact on quality and value of care.

To evaluate perceptions of the PRO-TECT digital ePRO system and PRO-CTCAE questions, surveys were administered to patients, nurses, and physicians at practices participating in the PRO-TECT real-world ePRO implementation trial.

METHODS

Setting and Participants

PRO-TECT is an ongoing multicenter, real-world, cluster-randomized trial testing implementation of ePROs in routine care at US community oncology practices (ClinicalTrials.gov identifier: NCT03249090). There are 52 practices involved in PRO-TECT, of which 26 are intervention practices in which ePROs are being implemented and 26 are control practices, where ePROs are not being implemented (ie, standard of care). Adult patients receiving chemotherapy, targeted therapy, or immunotherapy for any type of advanced or metastatic cancer at these practices are approached and complete informed consent to participate. The trial protocol was approved by central and local institutional review boards.

Digital ePRO System and Questions

At the 26 PRO-TECT ePRO intervention practices, participating patients are trained by local personnel to use the PRO-TECT ePRO digital system for symptom self-reporting.23 This system includes questions from the National Cancer Institute’s PRO-CTCAE item library for pain, nausea, vomiting, constipation, diarrhea, dyspnea, insomnia, and depression, which are scored using a 5-point ordinal verbal descriptor scale,24 as well as PRO questions about physical function (patient-reported Eastern Cooperative Oncology Group performance status), falls, and financial toxicity (item FT12 from the COST-FACIT [v2] questionnaire; Data Supplement).

Questions were loaded into the PRO-TECT digital ePRO system, which includes options for patients to complete questions via a web-based interface or via an automated telephone interface. The web-based interface is mobile responsive and allows for use on various screen sizes across computers or mobile devices, displaying a single question per page on the basis of prior usability testing and best practices for PRO visualization.19,25 The automated telephone interface is based on prior testing and best practices for interactive voice response systems.19 The digital ePRO system was built by the University of North Carolina’s Patient-Reported Outcomes Core (PRO Core).

Participating patients in the intervention arm are asked to self-report their PROs via this system weekly for 1 year or until discontinuation of all cancer treatment. On a day of the week and time of the patient’s choice, they receive a prompt by e-mail or automated call to self-report symptoms via the system. If they do not complete the self-report, they receive a reminder prompt 24 hours later, followed by a call from a clinic staff member if they have not completed the self-report after 72 hours.
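The weekly prompt-and-escalation schedule can be expressed as a small decision rule. The sketch below is illustrative only and is not the PRO Core software; the function and constant names are hypothetical, and it assumes the 72-hour staff-call threshold is measured from the original weekly prompt rather than from the reminder, which the text does not specify.

```python
from datetime import datetime, timedelta

# Hypothetical thresholds taken from the schedule described in the text.
REMINDER_AFTER = timedelta(hours=24)    # reminder prompt 24 h after the weekly prompt
STAFF_CALL_AFTER = timedelta(hours=72)  # assumption: measured from the original prompt


def outreach_step_due(prompt_sent: datetime, now: datetime, completed: bool) -> str:
    """Return the most advanced outreach step due for one weekly self-report.

    "none"            -> report already completed, no outreach needed
    "wait"            -> still within the first 24 hours
    "reminder_prompt" -> 24 hours passed without completion
    "staff_call"      -> 72 hours passed without completion
    """
    if completed:
        return "none"
    elapsed = now - prompt_sent
    if elapsed >= STAFF_CALL_AFTER:
        return "staff_call"
    if elapsed >= REMINDER_AFTER:
        return "reminder_prompt"
    return "wait"


# Example: a prompt sent Monday 09:00 and still incomplete on Thursday 10:00.
sent = datetime(2020, 1, 6, 9, 0)
print(outreach_step_due(sent, datetime(2020, 1, 9, 10, 0), completed=False))  # staff_call
```

Returning only the most advanced step keeps the rule stateless; a real scheduler would also need to record which prompts had already been sent.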

Whenever a PRO-CTCAE score is severe or very severe or when it worsens to moderate from none on the prior self-report, the patient receives an e-mail with a link to general educational materials about home self-management of that symptom. In addition, an e-mail–based alert is triggered and routed to a clinical nurse at the patient’s oncology practice with a symptom management pathway for the symptom that generated the alert. Nurse alerts are also triggered for Eastern Cooperative Oncology Group scores > 2 or worsened by 2 points and for financial distress scores > 2 (“quite a bit” or “very much”). Reports showing the longitudinal trajectory of PROs can be visualized or printed for nurses and oncologists to view at visits to guide discussions and care. The digital ePRO system is a stand-alone software platform and is not interfaced to electronic health record (EHR) systems at practices. There are no requirements for what clinical actions nurses or oncologists should take based on the ePRO or symptom management pathway information they receive.
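The alert criteria above can be summarized as a set of threshold rules. The following is a minimal sketch, not the PRO-TECT implementation: it assumes every PRO-CTCAE response is coded 0 (none) to 4 (very severe), the ECOG item 0 to 4, and the financial item 0 (not at all) to 4 (very much), and all function names and data structures are hypothetical. Because an ECOG worsening of 3 or more points always ends above 2, reading "worsened by 2 points" as "by at least 2 points" yields the same alerts.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional

# Assumed 0-4 coding (hypothetical, for illustration):
#   PRO-CTCAE: 0 none, 1 mild, 2 moderate, 3 severe, 4 very severe
#   ECOG performance status: 0 (fully active) to 4 (completely disabled)
#   Financial item: 0 not at all ... 3 quite a bit, 4 very much
NONE, MODERATE, SEVERE = 0, 2, 3


def symptom_triggers(current: int, prior: Optional[int]) -> bool:
    """Severe/very severe, or worsened from none to moderate since the prior report."""
    return current >= SEVERE or (prior == NONE and current == MODERATE)


def ecog_triggers(current: int, prior: Optional[int]) -> bool:
    """ECOG > 2, or worsened by at least 2 points since the prior report."""
    return current > 2 or (prior is not None and current - prior >= 2)


def financial_triggers(current: int) -> bool:
    """'Quite a bit' (3) or 'very much' (4)."""
    return current > 2


@dataclass
class AlertResult:
    nurse_alerts: List[str] = field(default_factory=list)
    patient_education: List[str] = field(default_factory=list)  # symptoms only


def evaluate_report(symptoms: Dict[str, int], prior_symptoms: Dict[str, int],
                    ecog: int, prior_ecog: Optional[int],
                    financial: int) -> AlertResult:
    result = AlertResult()
    for name, score in symptoms.items():
        if symptom_triggers(score, prior_symptoms.get(name)):
            result.nurse_alerts.append(name)       # nurse alert plus pathway
            result.patient_education.append(name)  # self-management e-mail
    if ecog_triggers(ecog, prior_ecog):
        result.nurse_alerts.append("performance_status")
    if financial_triggers(financial):
        result.nurse_alerts.append("financial_toxicity")
    return result


report = evaluate_report(
    symptoms={"pain": 3, "nausea": 2, "diarrhea": 2},
    prior_symptoms={"pain": 1, "nausea": 0, "diarrhea": 1},
    ecog=1, prior_ecog=0, financial=3,
)
print(report.nurse_alerts)       # ['pain', 'nausea', 'financial_toxicity']
print(report.patient_education)  # ['pain', 'nausea']
```

In this hypothetical report, mild-to-moderate diarrhea and a 1-point ECOG change do not alert, whereas the financial score of 3 produces a nurse-only alert, mirroring the distinction in the text between patient education (symptoms) and nurse alerts (all three domains).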

Surveys Evaluating Perceptions of the Digital System

Feedback surveys were administered to patients after 3 months of participation and when they completed study participation (“off-study”). The survey assessed patient comprehension by asking if PRO questions were easy to understand; general usability by asking if the digital ePRO system was easy to use; meaningfulness/relevance by asking if the PRO questions were relevant to them; and communication/actionability, clinical utility, and self-efficacy by asking, respectively, if they felt the process improved discussions with their care team, if their doctor or nurse used the symptom information, and if the system made them feel more in control of their own care. For overall perceived value of the system, they were asked if they would recommend it to other patients.

After at least 6 months of experience using the system, nurse input on clinical utility was elicited by asking if PRO information was helpful for clinical documentation in the EHR, improved quality of discussions with patients, increased efficiency of discussions with patients, and was useful for patient care. For quality and value of care, nurses were asked if they felt that using the system improved quality of care for patients and if they would recommend it to other clinics, respectively. Physician (oncologist) input on clinical utility was elicited by asking how often PRO information was used to guide discussions with patients and to make treatment decisions.

Statistical Analysis

Descriptive statistics included medians and ranges for continuous variables and frequencies and relative frequencies for categorical variables. Completion of patient self-reports was tabulated for each patient as the number of completed weekly ePRO questionnaires divided by the number of questionnaires expected to be completed. Pairwise relationships between patient survey items and patient baseline characteristics (patient-selected survey mode, age, sex, ethnicity, race, urban/rural location of clinic, educational attainment, employment status, marital status), baseline technical experience (prior internet use, prior e-mail use, prior computer use), baseline financial status (difficulty paying bills), and completion of self-reporting during trial participation were described using Spearman correlations and χ2 tests. P values for Spearman correlations were displayed graphically as a heat map. Multivariable regression with forward selection (entry criterion P < .10) was used to evaluate the relationships of patient characteristics and completion of self-reports with patient survey items. P values < .05 were considered statistically significant.
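The per-patient completion rate and the rank-based correlation described above can be sketched in a few lines of Python. This is an illustration under stated assumptions, not the study's analysis code: tied values (common with 5-point response scales) receive average ranks, Spearman's ρ is computed as the Pearson correlation of those ranks, and P values, χ2 tests, and forward selection are left to standard statistical software. The example data are hypothetical.

```python
from math import sqrt
from typing import List, Sequence


def completion_rate(completed: int, expected: int) -> float:
    """Completed weekly questionnaires divided by questionnaires expected."""
    if expected <= 0:
        raise ValueError("expected must be positive")
    return completed / expected


def average_ranks(values: Sequence[float]) -> List[float]:
    """1-based ranks; tied values share the mean of the ranks they span."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with values[order[i]].
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def spearman_rho(x: Sequence[float], y: Sequence[float]) -> float:
    """Spearman correlation = Pearson correlation of the tie-averaged ranks.

    Raises ZeroDivisionError if either variable is constant.
    """
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length sequences of length >= 2")
    rx, ry = average_ranks(x), average_ranks(y)
    mx, my = sum(rx) / len(rx), sum(ry) / len(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    vx = sum((a - mx) ** 2 for a in rx)
    vy = sum((b - my) ** 2 for b in ry)
    return cov / sqrt(vx * vy)


# Hypothetical data: (completed, expected) weekly reports and a 1-5 agreement score.
counts = [(50, 52), (30, 52), (52, 52), (10, 40)]
rates = [completion_rate(c, e) for c, e in counts]
agreement = [4, 3, 5, 2]
print(round(spearman_rho(rates, agreement), 3))  # 1.0
```

Using ranks rather than raw values suits ordinal Likert responses, because only the ordering of categories, not the distance between them, enters the coefficient.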

RESULTS

Survey Completion

Patients.

By the cutoff date of May 4, 2020, for this analysis, there were 497 patients in the PRO-TECT trial intervention arm who completed at least 3 months of participation. Among these, 496 (99.8%) of 497 completed the 3-month feedback survey. In addition, by the cutoff date, 257 patients had finished participation in the study, of whom 245 (95.3%) of 257 completed the off-study feedback survey. Reasons for missed off-study surveys were six deaths (2.3%) and six who preferred not to complete (2.3%).

Nurses and physicians.

Surveys were completed by a total of 57 clinical nurses from 23 (88.5%) of the 26 participating community practices. Surveys were not administered at the three remaining practices, because they had enrolled two or fewer patients and thus had limited experience with the PRO-TECT system and workflow. Multiple nurse surveys were completed at practices that included more than one site of service. Physician surveys were completed by 39 oncologists from 22 (84.6%) of the 26 participating practices (no physician agreed to complete the survey at one of the practices).

Survey Respondent Characteristics

Table 1 shows characteristics of patient participants. The median age was 63 years (range, 29-89 years), and 305 (61.5%) were women. Most participants were white (394 [80.1%]), whereas 84 (17.1%) were Black or African American. More than one third had high school education or less, 124 (25.0%) received treatment at a rural center, 70 (14.1%) had no prior internet experience, and 185 (37.4%) had at least some difficulty paying monthly bills. A variety of cancer types were represented. All nurses were clinically involved in symptom management and cared for patients participating in the trial, and all physicians were medical oncologists.

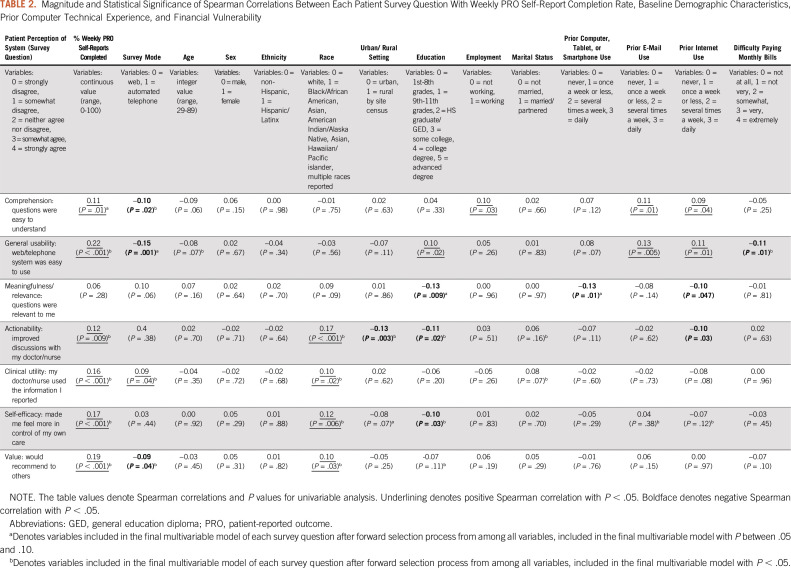

TABLE 1.

Characteristics of Patients

Patient Survey Responses

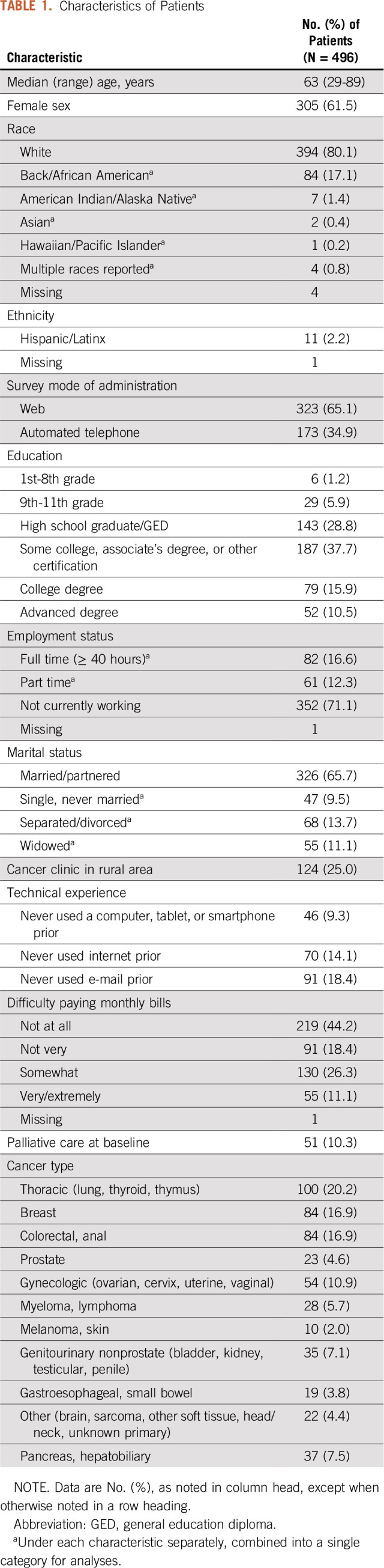

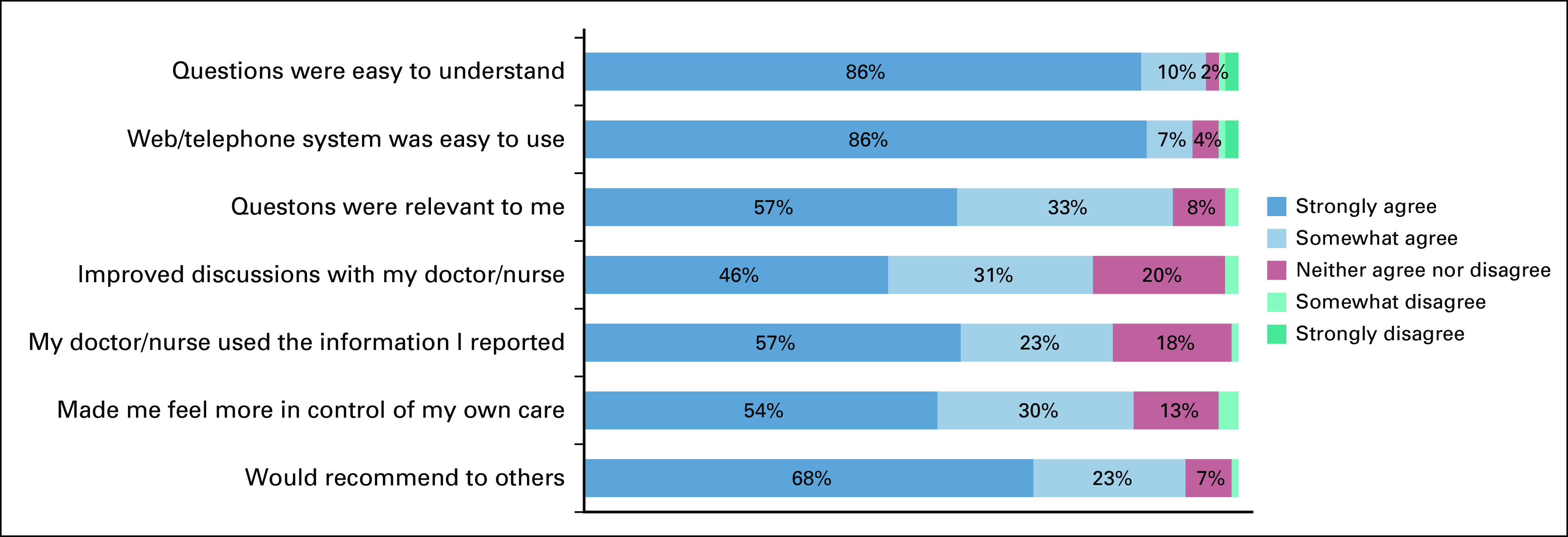

Results of the 496 patient surveys at 3 months are shown in Figure 1, and the 245 surveys at the off-study time point are shown in the Data Supplement. Comprehension of the PRO questions was high with 471 (95.0%) of 496 patients strongly or somewhat agreeing that questions were easy to understand at 3 months (and 230 [95.0%] of 242 at off study). General usability of the digital ePRO system was also high, with 463 (93.3%) of 496 strongly or somewhat agreeing that the system was easy to use at 3 months (227 [93.4%] of 243 at off study). A meaningfulness/relevance question was added to the survey partway through the trial and therefore was administered to fewer patients; 350 (91.4%) of 383 strongly or somewhat agreed that the PRO questions were relevant to them at 3 months (214 [90.3%] of 237 at off study).

FIG 1.

Patient feedback survey responses at 3 months.

Regarding communication/actionability, clinical utility, and self-efficacy, perceived benefits were higher at the off-study time point, after more experience with the system. Among participants, 359 (72.5%) of 495 felt the process improved discussions with their care team at 3 months, which increased to 188 (77.0%) of 244 at off study; 345 (70.0%) of 493 stated their doctor or nurse used the symptom information they reported at 3 months, which increased to 196 (80.7%) of 243 at off study. In addition, 381 (77.1%) of 494 noted that self-reporting symptoms weekly from home made them feel more in control of their own care at 3 months, which increased to 205 (84.0%) of 244 at off study. In terms of overall perceived value of the system, at 3 months, 443 (89.3%) of 496 strongly or somewhat agreed they would recommend the system to other patients (223 [91.4%] of 244 at off study).

The survey also assessed practical and process aspects of the system configuration. When asked about the frequency of self-reporting symptoms, 357 (94.4%) of 378 patients responded that weekly was “just about right” (n = 5 [1.3%] said “not often enough”; n = 17 [4.5%] said “too often”). The length of time to finish the weekly surveys was felt by 474 (95.6%) of 496 to be reasonable (n = 20 [4.0%], “neither agree nor disagree”; n = 2 [0.4%], “disagree”). When asked if they would like the PRO symptom questions themselves to be changed from time to time (eg, phrasing, formatting, or appearance), 52 (13.6%) of 383 strongly agreed, 117 (30.5%) somewhat agreed, 163 (42.6%) neither agreed nor disagreed, 20 (5.2%) somewhat disagreed, and 31 (8.1%) strongly disagreed. Regarding the educational materials about home symptom management triggered for severe or worsening symptoms, 302 (61.8%) of 489 felt the materials were useful.
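Throughout these results, agreement is summarized as the proportion who “strongly or somewhat agree.” A minimal sketch of that collapse, applied to the full response distribution reported above for the item on changing the questions from time to time, assuming nonrespondents are excluded from each item's denominator (consistent with the varying denominators reported); the function and variable names are hypothetical:

```python
from typing import Dict, Tuple

# Categories counted as agreement ("strongly or somewhat agree").
AGREE_CATEGORIES = ("strongly agree", "somewhat agree")


def top_two_box(counts: Dict[str, int]) -> Tuple[int, int, float]:
    """Return (n agreeing, n responding, percent agreeing to one decimal).

    Missing responses are assumed to be excluded from `counts`, so the
    denominator is the number of patients who answered the item.
    """
    n = sum(counts.values())
    agree = sum(counts.get(c, 0) for c in AGREE_CATEGORIES)
    return agree, n, round(100 * agree / n, 1)


# Response counts for the "change the questions from time to time" item.
change_item = {
    "strongly agree": 52,
    "somewhat agree": 117,
    "neither agree nor disagree": 163,
    "somewhat disagree": 20,
    "strongly disagree": 31,
}
print(top_two_box(change_item))  # (169, 383, 44.1)
```

The result matches the sum of the two reported agreement percentages (13.6% + 30.5%), which is a useful consistency check when only category-level percentages are published.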

Relationships of Patient Survey Responses With Completion of Weekly PRO Self-Reports, Technical Experience, and Baseline Patient Characteristics

Table 2 shows that virtually all patient feedback survey questions were significantly positively correlated with completion of weekly PRO self-reports in both univariable and multivariable analyses, demonstrating an association between higher rates of PRO completion and higher perceived comprehension, general usability, meaningfulness, actionability, clinical utility, and self-efficacy related to the system. Notably, the overall rate of completion of weekly PRO reports has been high to date in this ongoing trial, with 92% of expected weekly reports completed on time across all patients (85% directly by patients, 4% by caregivers, and 3% by staff on behalf of patients). Nonetheless, individual patients' compliance with weekly reporting has varied, ranging from 17% to 100%. Once the trial is complete, details of compliance rates will be published separately.
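The overall completion figure and the per-patient range answer different questions: the first pools every expected weekly report across patients, whereas the second is computed patient by patient, so a high pooled rate can coexist with individual rates as low as 17%. The sketch below illustrates both calculations, plus the split by who completed each report, on hypothetical records; the function name and record layout are assumptions, and it ignores the “on time” window, whose definition is not given here.

```python
from collections import Counter
from typing import Dict, List, Optional, Tuple


def summarize_completion(records: Dict[str, List[Optional[str]]]
                         ) -> Tuple[float, Dict[str, float], float, float]:
    """Pooled completion, pooled share by completer, and per-patient min/max.

    Each record lists one entry per expected weekly report: who completed it
    ("patient", "caregiver", or "staff"), or None if it was missed.
    """
    by_completer: Counter = Counter()
    expected_total = 0
    per_patient = []
    for weeks in records.values():
        done = [w for w in weeks if w is not None]
        by_completer.update(done)
        expected_total += len(weeks)
        per_patient.append(len(done) / len(weeks))
    pooled = sum(by_completer.values()) / expected_total
    shares = {who: n / expected_total for who, n in by_completer.items()}
    return pooled, shares, min(per_patient), max(per_patient)


# Hypothetical records for three patients, four expected weeks each.
records = {
    "A": ["patient", "patient", "patient", "patient"],
    "B": ["patient", "caregiver", None, "staff"],
    "C": ["patient", None, None, None],
}
pooled, shares, lowest, highest = summarize_completion(records)
print(f"pooled {pooled:.0%}, per-patient range {lowest:.0%}-{highest:.0%}")
# pooled 67%, per-patient range 25%-100%
```

Because the completer shares use the same pooled denominator, they add up to the pooled rate, just as 85%, 4%, and 3% add up to the 92% reported above.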

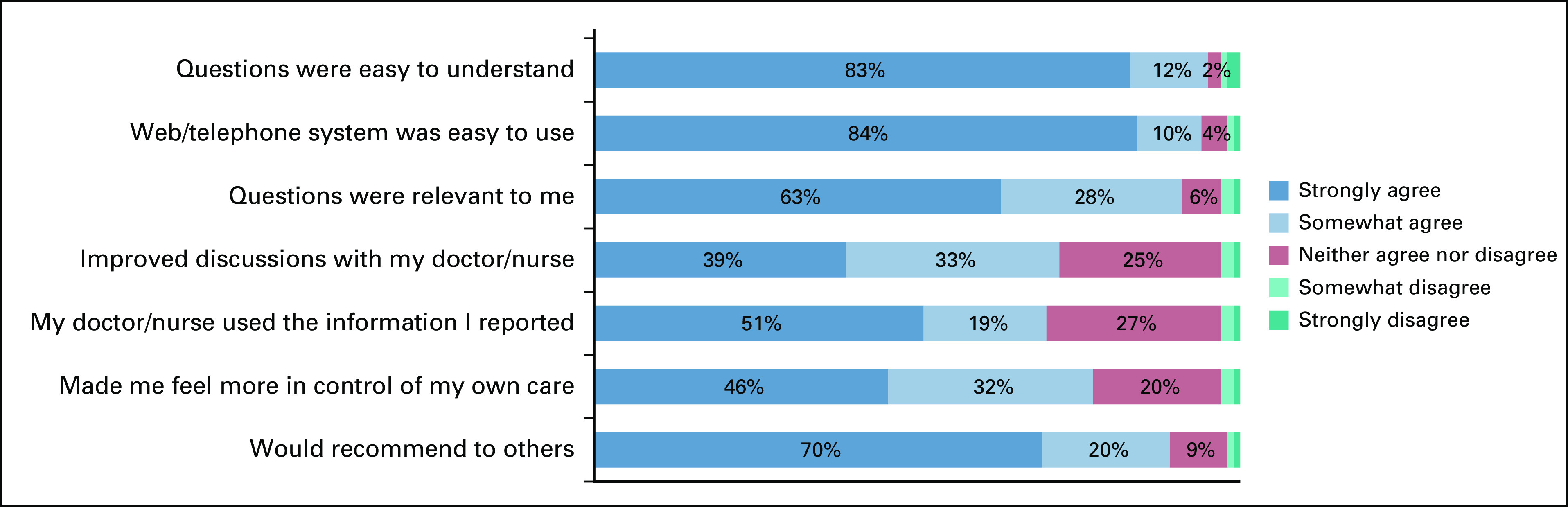

TABLE 2.

Magnitude and Statistical Significance of Spearman Correlations Between Each Patient Survey Question With Weekly PRO Self-Report Completion Rate, Baseline Demographic Characteristics, Prior Computer Technical Experience, and Financial Vulnerability

Both comprehension (“questions were easy to understand”) and general usability (“system was easy to use”) were significantly correlated with prior experience using connected technologies, specifically with experience using e-mail or the internet (Table 2). However, significant correlation was not seen with simply using a computer/tablet/smartphone previously. Notably, there was a negative correlation of prior internet use with both meaningfulness/relevance (“questions were relevant to me”) and communication/actionability (“improved discussions with my doctor/nurse”), suggesting that the system may offer particular benefits for patients lacking prior experience with connected technologies. Patient-selected survey mode was significantly related to comprehension, general usability, and value in favor of web over automated telephone, whereas clinical utility favored automated telephone. It is unclear in this analysis whether these associations were due to factors leading patients to select one mode or another, or to inherent experience using those modes.

Table 2 also shows relationships between patient survey responses and other patient baseline characteristics. White race was significantly positively correlated in univariable and multivariable analyses with perception that the PRO system improved communication/actionability, clinical utility, self-efficacy, and value, but not comprehension, usability, or meaningfulness/relevance. Higher educational attainment was significantly positively correlated with usability and was negatively correlated with meaningfulness/relevance, communication/actionability, and self-efficacy, suggesting that the added benefit of the PRO system may be greater for patients with less education. Statistical significance of these negative correlations was retained in the multivariable model.

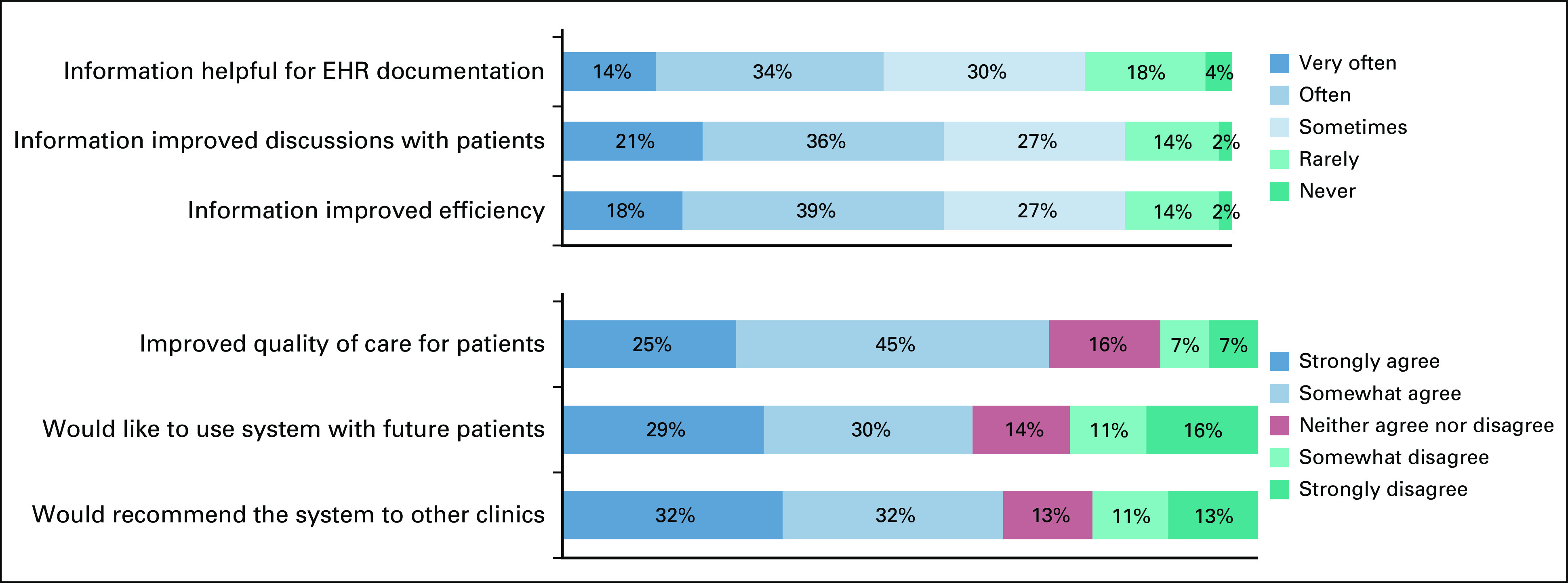

Nurse Survey Responses

Figure 2 shows results of the nurse survey. In terms of clinical utility, 44 (78.6%) of 56 nurses indicated that patient self-reported information was helpful for documentation in the electronic medical record; 47 (83.9%) noted the information improved quality of discussions with patients; and 47 (83.9%) found that the information increased efficiency of discussions with patients. In an additional clinical utility question posed as “yes/no” (not shown in the figure), 42 (75.0%) of 56 nurses felt that overall, patient self-reported information was useful for patient care. In terms of quality and value of care, 39 (69.6%) of 56 nurses felt that the system improved quality of care for patients, 33 (58.9%) would like to use it in the future, and 36 (64.3%) would recommend it to other clinics.

FIG 2.

Nurse feedback survey responses.

Because alerts are a known potential source of dissatisfaction for clinicians, nurses were asked about the number of ePRO alerts received, with 26 (47.3%) of 55 stating “too many,” 19 (34.5%) saying “just right,” one (1.8%) reporting “too few,” and nine (16.4%) stating “unsure.” When asked if they found alerts to be helpful, 40 (71.4%) of 56 agreed, seven (12.5%) neither agreed nor disagreed, and nine (16.1%) disagreed. The preferred mechanism for receiving alerts was by e-mail (38 [69.1%] of 55) followed by EHR message (11 [20.0%] of 55), although the ePRO system in this trial was not integrated with EHRs. When asked how much time it took to review each ePRO alert, 29 (52%) of 56 nurses noted < 5 minutes, 20 (36%) of 56 noted 5-10 minutes, and five (9%) of 56 noted 11-20 minutes (one noted > 20 minutes, and one said “not applicable”). Nurses were also asked how long it took to review full symptom reports at clinic visits; 22 (39%) of 56 stated < 5 minutes, 15 (27%) of 56 stated 5-10 minutes, and three (5%) of 56 stated 11-20 minutes (one noted > 20 minutes, and 15 said “not applicable”).

Regarding the symptom management pathway accompanying alerts, 28 (50.0%) of 56 nurses stated they had used the pathways, among which 23 (82.1%) of 28 agreed the pathways are useful (n = 2 [7.1%], neither agree nor disagree; n = 3 [10.7%], disagree).

Physician (oncologist) Survey Responses

Among oncologists, 34 (87.2%) of 39 noted that they had actively reviewed patient self-reported information from the study during clinical care, generally through discussions with nurses, and 31 (91.2%) of the 34 stated that they found the information to be useful. In terms of clinical utility, when asked if they used the patient-reported information to guide discussions with patients, 11 (32.4%) of 34 stated often, 11 (32.4%) of 34 said sometimes, and 10 (29.4%) of 34 said rarely. Similarly, when asked if they used the patient-reported information to make treatment decisions, seven (20.6%) of 34 stated often, 15 (44.1%) of 34 said sometimes, and nine (26.5%) of 34 said rarely. When asked about the ideal way to receive patient-reported information at clinic visits, 52% preferred to view it electronically in the EHR, 26% wanted it printed out, 20% wanted a tablet computer with the information brought to them, and 2% wanted to be told the information directly by patients.

DISCUSSION

Structured feedback from a diverse group of adult patients completing PRO questionnaires digitally in community oncology practices indicates high levels of comprehension, ease of use, perceived meaningfulness, relevance, communication, actionability, clinical utility, improvement of self-efficacy, and value. Feedback from nurses and oncologists caring for these patients demonstrates favorable perceptions of clinical utility and impact on quality and value of care.

The concept of clinical utility in the context of digital health technologies refers to a technology's relevance and usefulness in care delivery.20-22 Feedback on the PRO-TECT ePRO digital system, and the PRO-CTCAE questions within it, demonstrates that, from patient and provider perspectives, these have utility in the conduct of routine cancer care. Demonstrating this in a digital health platform is a key step in understanding its role in care and in the likelihood of ultimate successful widespread implementation.26 The findings in this study leave little doubt that the design and functionalities of the PRO-TECT digital system and PRO-CTCAE questionnaire are viewed as valuable tools to enhance delivery of quality cancer care by both patients and clinicians.

Patient endorsement was particularly high for comprehension and ease of use. Scores related to communication/actionability, clinical utility, and self-efficacy increased between the 3-month and off-study survey time points, suggesting that, as an ePRO system becomes more integrated into processes of care for a patient, its value increases. Most patients (94%) considered weekly administration of ePRO questionnaires to be about right in this context of treatment for advanced and metastatic cancers.

Scores on patient feedback survey questions were significantly positively associated with completion rates of weekly ePRO self-reports, indicating that greater patient comfort with the system and greater perceived value of the system are associated with willingness and ability to use it. Therefore, efforts should be made for future ePRO systems to optimize ease of use and to communicate to patients the clinical relevance and value of the system for their care. Notably, in this trial, almost 40% of patients chose to use an automated telephone interface rather than a web interface (particularly older patients, those living in rural areas, or those with lower educational levels),27 reflecting the importance of offering interface options to ensure that patients who prefer more traditional technologies are not excluded.

Most patients (62%) found the educational materials about home symptom management useful. In the future, integrating self-help materials within the software system, rather than providing them as a separate module, may increase their usefulness.

Although nursing perspectives were generally favorable, a consistent minority held unfavorable views, including 16% who felt the system rarely or never improved discussions with patients, 14% who felt it did not improve quality of care, 27% who would not want to use it with future patients, and 24% who would not recommend it to other clinics. An ongoing qualitative interview substudy is eliciting input from nurses on barriers to implementing ePROs and causes of dissatisfaction, which will be reported separately.

A likely underlying source of dissatisfaction for clinicians using digital systems to monitor symptoms during routine cancer care is alert fatigue. Alerts are frequently triggered by the PRO-TECT system because the participating population is ill and highly symptomatic; approximately one third of weekly ePRO questionnaires triggered an alert to nurses. Notably, 47% of nurses felt there were “too many” alerts. Yet 93% noted they would like to receive future alerts for severe symptoms. This disconnect reveals a tension common to care transformation approaches: the perceived added work of an innovation can offset its perceived benefits. Feedback in this study showed that the time nurses spent on any given alert was small (52% reported < 5 minutes and a further 36% reported 5-10 minutes per alert). Although ePROs can decrease ultimate workload by preventing downstream complications that can be highly time consuming and costly, this benefit is usually not perceived directly by clinicians. Rather, the burden of incoming alerts is felt on top of their existing workloads. For ePRO systems to be fully embraced by providers, underlying changes to workflows and personnel deployment are necessary to monitor and address incoming alerts and to prevent providers from feeling burdened by an intervention that in fact enhances their ability to manage patients effectively. Methods for refining the number of triggered alerts are under development and will be reported separately.
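The tension between low per-alert effort and perceived burden is partly arithmetic: a small time per alert is multiplied by the number of patients and the alert rate. The back-of-envelope sketch below plugs in the approximately one-third alert rate and the 92% completion rate reported in this study, but the panel size (30 patients) and minutes per alert (5) are hypothetical inputs chosen for illustration, not study data, and the function name is likewise hypothetical.

```python
def weekly_alert_minutes(patients: int, completion_rate: float,
                         alert_fraction: float, minutes_per_alert: float) -> float:
    """Expected nurse minutes per week spent reviewing ePRO alerts.

    Treats each alerting weekly questionnaire as one review, occurring with
    probability `alert_fraction` among completed questionnaires.
    """
    expected_reports = patients * completion_rate
    expected_alerts = expected_reports * alert_fraction
    return expected_alerts * minutes_per_alert


# Hypothetical panel of 30 patients; ~1/3 of reports alert; 5 minutes each.
minutes = weekly_alert_minutes(30, 0.92, 1 / 3, 5)
print(f"{minutes:.0f} minutes per week")  # 46 minutes per week
```

Under these assumptions, roughly nine alerts per week at a few minutes each add up to the better part of an hour of new work per nurse, which helps explain why short per-alert times can still be perceived as burdensome.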

In this study, the ePRO system was not integrated with EHRs at practices; such integration was not feasible given the timeline and multicenter nature of the study and the considerable time and technical effort it requires. Clinic staff members therefore forwarded alerts to nurses, replicating the functionality of an EHR in-basket. In the future, ideally, ePRO systems will be more fully integrated with EHRs to enable seamless information flow of patient-reported information into the medical record for providers to view. In summary, this study supports the clinical utility and value of integrating ePROs into routine cancer care from the perspectives of patients, nurses, and physicians.

ACKNOWLEDGMENT

The authors would like to thank Dr. Neeraj Arora for his support and commitment to this project.

FIG A1.

Patient feedback survey responses at off-study time point.

DISCLAIMER

All statements in this publication, including its findings, are solely those of the authors and do not necessarily represent the views of the Patient-Centered Outcomes Research Institute (PCORI), its Board of Governors or Methodology Committee.

SUPPORT

Supported by funding from the Patient-Centered Outcomes Research Institute (PCORI) award No. IHS-1511-33392. The study made use of technology systems provided by the Patient-Reported Outcomes Core (PRO Core; pro.unc.edu) at the Lineberger Comprehensive Cancer Center of the University of North Carolina, which is funded in part by National Cancer Institute Cancer Center Core Support Grant No. 5-P30-CA016086 and the University Cancer Research Fund of North Carolina. The trial was facilitated by the Foundation of the Alliance for Clinical Trials in Oncology (https://acknowledgments.alliancefound.org).

AUTHOR CONTRIBUTIONS

Conception and design: Ethan Basch, Angela M. Stover, Sydney Henson, Allison Deal, Patricia A. Spears, Mattias Jonsson, Antonia V. Bennett, Lauren J. Rogak, Bryce B. Reeve, Claire Snyder, Lisa A. Kottschade, Deborah Bruner, Amylou C. Dueck

Data analysis and interpretation: Ethan Basch, Angela M. Stover, Deborah Schrag, Arlene Chung, Jennifer Jansen, Sydney Henson, Brenda Ginos, Allison Deal, Mattias Jonsson, Antonia V. Bennett, Gita Mody, Gita Thanarajasingam, Lauren J. Rogak, Bryce B. Reeve, Claire Snyder, Lisa A. Kottschade, Marjory Charlot, Amylou C. Dueck

Collection and assembly of data: Ethan Basch, Angela M. Stover, Jennifer Jansen, Sydney Henson, Philip Carr, Allison Deal, Mattias Jonsson, Anna Weiss, Amylou C. Dueck

Provision of study material or patients: Ethan Basch

Administrative support: Lauren J. Rogak, Anna Weiss

Manuscript writing: All authors

Final approval of manuscript: All authors

Accountable for all aspects of the work: All authors

AUTHORS' DISCLOSURES OF POTENTIAL CONFLICTS OF INTEREST

The following represents disclosure information provided by authors of this manuscript. All relationships are considered compensated unless otherwise noted. Relationships are self-held unless noted. I = Immediate Family Member, Inst = My Institution. Relationships may not relate to the subject matter of this manuscript. For more information about ASCO's conflict of interest policy, please refer to www.asco.org/rwc or ascopubs.org/cci/author-center.

Open Payments is a public database containing information reported by companies about payments made to US-licensed physicians (Open Payments).

Ethan Basch

Consulting or Advisory Role: Sivan, Carevive Systems, Navigating Cancer, AstraZeneca

Other Relationship: Centers for Medicare and Medicaid Services, National Cancer Institute, American Society of Clinical Oncology, Journal of the American Medical Association, Patient-Centered Outcomes Research Institute

Open Payments Link: https://openpaymentsdata.cms.gov/physician/427875/summary

Angela M. Stover

Honoraria: Genentech

Deborah Schrag

Stock and Other Ownership Interests: Merck (I)

Honoraria: Pfizer

Consulting or Advisory Role: Journal of the American Medical Association

Research Funding: American Association for Cancer Research (Inst), GRAIL (Inst)

Patents, Royalties, Other Intellectual Property: PRISSMM model is trademarked, and curation tools are available to academic medical centers and government under a Creative Commons license.

Travel, Accommodations, Expenses: IMEDEX, Precision Medicine World Conference, Journal of the American Medical Association

Other Relationship: Journal of the American Medical Association

Arlene Chung

Honoraria: United Health Group R&D

Patricia A. Spears

Consulting or Advisory Role: Pfizer

Mattias Jonsson

Consulting or Advisory Role: GlaxoSmithKline (I), Epividian (I)

Other Relationship: GlaxoSmithKline, Takeda, Boehringer Ingelheim, AbbVie, UCB Bioscience and Merck (I)

Gita Mody

Consulting or Advisory Role: iRenix (I)

Bryce B. Reeve

Consulting or Advisory Role: Higgs Boson, The Learning Corporation, United Therapeutics, Takeda

Claire Snyder

Research Funding: Genentech (Inst)

Patents, Royalties, Other Intellectual Property: Royalties as a section author for UpToDate

Lisa A. Kottschade

Consulting or Advisory Role: Array BioPharma, Bristol Myers Squibb (Inst)

Research Funding: Bristol Myers Squibb (Inst), Novartis (Inst)

Anna Weiss

Research Funding: Myriad Laboratories

Amylou C. Dueck

Patents, Royalties, Other Intellectual Property: Royalties from licensing fees for a patient symptom questionnaire (MPN-SAF)

No other potential conflicts of interest were reported.

REFERENCES

- 1. Panattoni L, Fedorenko C, Greenwood-Hickman MA, et al. Characterizing potentially preventable cancer- and chronic disease-related emergency department use in the year after treatment initiation: A regional study. J Oncol Pract. 2018;14:e176–e185. doi: 10.1200/JOP.2017.028191.

- 2. Henry DH, Viswanathan HN, Elkin EP, et al. Symptoms and treatment burden associated with cancer treatment: Results from a cross-sectional national survey in the US. Support Care Cancer. 2008;16:791–801. doi: 10.1007/s00520-007-0380-2.

- 3. Cleeland CS, Zhao F, Chang VT, et al. The symptom burden of cancer: Evidence for a core set of cancer-related and treatment-related symptoms from the Eastern Cooperative Oncology Group Symptom Outcomes and Practice Patterns study. Cancer. 2013;119:4333–4340. doi: 10.1002/cncr.28376.

- 4. Fromme EK, Eilers KM, Mori M, et al. How accurate is clinician reporting of chemotherapy adverse effects? A comparison with patient-reported symptoms from the Quality-of-Life Questionnaire C30. J Clin Oncol. 2004;22:3485–3490. doi: 10.1200/JCO.2004.03.025.

- 5. Laugsand EA, Sprangers MAG, Bjordal K, et al. Health care providers underestimate symptom intensities of cancer patients: A multicenter European study. Health Qual Life Outcomes. 2010;8:104. doi: 10.1186/1477-7525-8-104.

- 6. Atkinson TM, Li Y, Coffey CW, et al. Reliability of adverse symptom event reporting by clinicians. Qual Life Res. 2012;21:1159–1164. doi: 10.1007/s11136-011-0031-4.

- 7. Fisch MJ, Lee JW, Weiss M, et al. Prospective, observational study of pain and analgesic prescribing in medical oncology outpatients with breast, colorectal, lung, or prostate cancer. J Clin Oncol. 2012;30:1980–1988. doi: 10.1200/JCO.2011.39.2381.

- 8. Wu AW, Kharrazi H, Boulware LE, et al. Measure once, cut twice: Adding patient-reported outcome measures to the electronic health record for comparative effectiveness research. J Clin Epidemiol. 2013;66:S12–S20. doi: 10.1016/j.jclinepi.2013.04.005.

- 9. Howell D, Molloy S, Wilkinson K, et al. Patient-reported outcomes in routine cancer clinical practice: A scoping review of use, impact on health outcomes, and implementation factors. Ann Oncol. 2015;26:1846–1858. doi: 10.1093/annonc/mdv181.

- 10. Snyder CF, Aaronson NK, Choucair AK, et al. Implementing patient-reported outcomes assessment in clinical practice: A review of the options and considerations. Qual Life Res. 2012;21:1305–1314. doi: 10.1007/s11136-011-0054-x.

- 11. Basch E, Mody GN, Dueck AC. Electronic patient-reported outcomes as digital therapeutics to improve cancer outcomes. JCO Oncol Pract. 2020;16:541–542. doi: 10.1200/OP.20.00264.

- 12. Basch E, Deal AM, Dueck AC, et al. Overall survival results of a trial assessing patient-reported outcomes for symptom monitoring during routine cancer treatment. JAMA. 2017;318:197–198. doi: 10.1001/jama.2017.7156.

- 13. Basch E, Deal AM, Kris MG, et al. Symptom monitoring with patient-reported outcomes during routine cancer treatment: A randomized controlled trial. J Clin Oncol. 2016;34:557–565. doi: 10.1200/JCO.2015.63.0830.

- 14. Basch E, Reeve BB, Mitchell SA, et al. Development of the National Cancer Institute’s Patient-Reported Outcomes version of the Common Terminology Criteria for Adverse Events (PRO-CTCAE). J Natl Cancer Inst. 2014;106:dju244. doi: 10.1093/jnci/dju244.

- 15. Hay JL, Atkinson TM, Reeve BB, et al. Cognitive interviewing of the US National Cancer Institute’s Patient-Reported Outcomes version of the Common Terminology Criteria for Adverse Events (PRO-CTCAE). Qual Life Res. 2014;23:257–269. doi: 10.1007/s11136-013-0470-1.

- 16. Dueck AC, Mendoza TR, Mitchell SA, et al. Validity and reliability of the US National Cancer Institute’s Patient-Reported Outcomes version of the Common Terminology Criteria for Adverse Events (PRO-CTCAE). JAMA Oncol. 2015;1:1051–1059. doi: 10.1001/jamaoncol.2015.2639.

- 17. Mendoza TR, Dueck AC, Bennett AV, et al. Evaluation of different recall periods for the US National Cancer Institute’s PRO-CTCAE. Clin Trials. 2017;14:255–263. doi: 10.1177/1740774517698645.

- 18. Bennett AV, Dueck AC, Mitchell SA, et al. Mode equivalence and acceptability of tablet computer-, interactive voice response system-, and paper-based administration of the US National Cancer Institute’s Patient-Reported Outcomes version of the Common Terminology Criteria for Adverse Events (PRO-CTCAE). Health Qual Life Outcomes. 2016;14:24. doi: 10.1186/s12955-016-0426-6.

- 19. Schoen MW, Basch E, Hudson LL, et al. Software for administering the National Cancer Institute’s Patient-Reported Outcomes version of the Common Terminology Criteria for Adverse Events: Usability study. JMIR Human Factors. 2018;5:e10070. doi: 10.2196/10070.

- 20. Singh K, Drouin K, Newmark LP, et al. Many mobile health apps target high-need, high-cost populations, but gaps remain. Health Aff (Millwood). 2016;35:2310–2318. doi: 10.1377/hlthaff.2016.0578.

- 21. Gordon WJ, Landman A, Zhang H, et al. Beyond validation: Getting health apps into clinical practice. NPJ Digit Med. 2020;3:14. doi: 10.1038/s41746-019-0212-z.

- 22. Lesko LJ, Zineh I, Huang SM. What is clinical utility and why should we care? Clin Pharmacol Ther. 2010;88:729–733. doi: 10.1038/clpt.2010.229.

- 23. Stover AM, Tompkins Stricker C, Hammelef K, et al. Using stakeholder engagement to overcome barriers to implementing Patient-Reported Outcomes (PROs) in cancer care delivery: Approaches from three prospective studies—“PRO-Cision” medicine toolkit. Med Care. 2019;55:S92–S97. doi: 10.1097/MLR.0000000000001103.

- 24. National Cancer Institute. Patient-reported outcomes version of the Common Terminology Criteria for Adverse Events. https://healthcaredelivery.cancer.gov/pro-ctcae

- 25. Snyder C, Smith K, Holzner B, et al. Making a picture worth a thousand numbers: Recommendations for graphically displaying patient-reported outcomes data. Qual Life Res. 2019;28:345–356. doi: 10.1007/s11136-018-2020-3.

- 26. Stover AM, Haverman L, van Oers H, et al. Using an implementation science approach to implement and evaluate Patient-Reported Outcome Measures (PROM) initiatives in routine care settings. Qual Life Res. doi: 10.1007/s11136-020-02564-9 [epub ahead of print on July 10, 2020].

- 27. Stover AM, Henson S, Jansen J, et al. Demographic and symptom differences in PRO-TECT trial (AFT-39) cancer patients electing to complete weekly home patient-reported outcome measures (PROMs) via an automated phone call vs. email: Implications for implementing PROs into routine care. Qual Life Res. 2019;28:S1.