Abstract

Background

Alzheimer’s disease (AD) is the most common type of dementia, typically characterized by memory loss followed by progressive cognitive decline and functional impairment. Many clinical trials of potential therapies for AD have failed, and there is currently no approved disease-modifying treatment. Biomarkers for early detection and mechanistic understanding of disease course are critical for drug development and clinical trials. Amyloid has been the focus of most biomarker research. Here, we developed a deep learning-based framework to identify informative features for AD classification using tau positron emission tomography (PET) scans.

Results

The 3D convolutional neural network (CNN)-based model for classifying AD versus cognitively normal (CN) participants yielded an average accuracy of 90.8% based on five-fold cross-validation. Layer-wise relevance propagation (LRP) identified the brain regions in tau PET images that contributed most to the classification of AD from CN. The top identified regions included the hippocampus, parahippocampus, thalamus, and fusiform. The LRP results were consistent with those from the voxel-wise analysis in SPM12, showing significant focal AD-associated regional tau deposition in the bilateral temporal lobes including the entorhinal cortex. The AD probability scores calculated by the classifier were correlated with brain tau deposition in the medial temporal lobe in MCI participants (r = 0.43 for early MCI and r = 0.49 for late MCI).

Conclusion

A deep learning framework combining 3D CNN and LRP algorithms can be used with tau PET images to identify informative features for AD classification and may have application for early detection during prodromal stages of AD.

Keywords: Alzheimer’s disease, Tau PET, Deep learning

Background

The accumulation of hyperphosphorylated and pathologically misfolded tau protein is one of the cardinal and most common features in Alzheimer’s disease (AD) [1–5]. The amount and spatial distribution of abnormal tau, seen pathologically as neurofibrillary tangles in brain, is closely related to the onset of cognitive decline and the progression of AD. The identification of morphological phenotypes of tau on in vivo neuroimaging may help to differentiate mild cognitive impairment (MCI) and AD from cognitively normal older adults (CN) and provide insights regarding disease mechanisms and patterns of progression [6–9].

Deep learning has been used in a variety of applications in response to the increasingly complex and growing amount of medical imaging data [10–12]. Significant efforts have been made to apply deep learning to AD research, but deep learning-based prediction of AD progression from neuroimaging data has focused primarily on magnetic resonance imaging (MRI) and/or amyloid positron emission tomography (PET) [10, 13]. However, MRI scans cannot visualize the molecular pathological hallmarks of AD, and amyloid PET cannot readily track the progression of AD because amyloid-β accumulates early in the disease course and plateaus in later stages [14, 15].

The presence and location of pathological tau deposition in the human brain are well established [2, 3, 5]. Braak and Braak [5] analyzed AD-related neuropathology and generated a staging algorithm to describe the anatomical distribution of tau [6, 8, 16, 17]. Their results have been confirmed by subsequent studies showing that the topography of tau corresponds with the pathological stages of neurofibrillary tangle deposition. Cross-sectional autopsy data show that AD-related tau pathology may begin with tau deposition in the medial temporal lobe (Braak stages I/II), then move to the lateral temporal cortex and part of the medial parietal lobe (stages III/IV), and eventually reach broader neocortical regions (stages V/VI).

In this study, we developed a novel deep learning-based framework that identifies the morphological phenotypes of tau deposition in tau PET images for the classification of AD from CN. Application of CNN to tau PET is novel as the spatial characteristics and interpretation are quite different compared to amyloid PET, fluorodeoxyglucose (FDG) PET, or MRI. In particular, the regional location and topography of tau PET signal is considered to be more important than for other molecular imaging modalities. This has implications for how CNN interacts with the complex inputs as well as for visualization of informative features. The deep learning-derived AD probability scores were then applied to prodromal stages of disease including early and late mild cognitive impairment (MCI).

Methods

Study participants

All individuals included in the analysis were participants in the Alzheimer’s Disease Neuroimaging Initiative (ADNI) cohort [18, 19]. A total of 300 ADNI participants (N = 300; 66 CN, 66 AD, 97 early mild cognitive impairment (EMCI), and 71 late MCI (LMCI)) with [18F]flortaucipir PET scans were available for analysis [1]. Genotyping data were also available for all participants [19]. Informed consent was obtained for all subjects, and the study was approved by the relevant institutional review board at each data acquisition site.

Alzheimer’s Disease Neuroimaging Initiative (ADNI)

ADNI is a multi-site longitudinal study investigating early detection of AD and tracking disease progression using biomarkers (MRI, PET, other biological markers, and clinical and neuropsychological assessment) [1]. Demographic information, PET and MRI scan data, and clinical information are publicly available from the ADNI data repository (https://www.loni.usc.edu/ADNI/).

Image processing

Pre-processed [18F]flortaucipir PET scans (N = 300) were downloaded from the ADNI data repository, one scan per individual. Scans were normalized to Montreal Neurological Institute (MNI) space using parameters generated from segmentation of the T1-weighted MRI scan in Statistical Parametric Mapping v12 (SPM12) (www.fil.ion.ucl.ac.uk/spm/). Standard uptake value ratio (SUVR) images were then created by intensity normalization using a cerebellar crus reference region.
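The intensity-normalization step can be illustrated with a minimal sketch. It assumes the PET volume and a reference-region mask have been flattened into plain lists; the function name `suvr_image` and the toy values are hypothetical, and this is not the ADNI or SPM12 pipeline itself.

```python
from statistics import mean

def suvr_image(uptake, reference_mask):
    """Divide every voxel by the mean uptake inside the reference region."""
    ref_values = [u for u, in_ref in zip(uptake, reference_mask) if in_ref]
    if not ref_values:
        raise ValueError("reference region is empty")
    ref_mean = mean(ref_values)
    return [u / ref_mean for u in uptake]

# Toy example: 6 voxels; the last two form the (hypothetical) reference region.
uptake = [1.8, 2.4, 1.2, 3.0, 1.0, 1.4]
reference_mask = [False, False, False, False, True, True]
suvr = suvr_image(uptake, reference_mask)
```

By construction, the mean SUVR inside the reference region is 1, so values above 1 elsewhere indicate uptake higher than in the reference tissue.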

Deep learning method for AD classification

Deep learning is a subset of machine learning that has been applied in various fields [20, 21]. Deep learning uses a back-propagation procedure [22], which uses gradient descent to compute error gradients efficiently [10, 23–26]. After the initial error value is calculated by the least squares method, the weights are updated until the derivative becomes 0, as in the following formula:

$$W_{ij}(t+1) = W_{ij}(t) - \frac{\partial\, \mathrm{Error}_{Y_{out}}}{\partial W_{ij}}$$

Here, $W_{ij}(t)$ is the current weight of neuron j in layer i, and $W_{ij}(t+1)$ is the next. $\mathrm{Error}_{Y_{out}}$ is the sum of errors that are known through the given data. The derivative with respect to $W_{ij}$ can be calculated by the chain rule as follows:

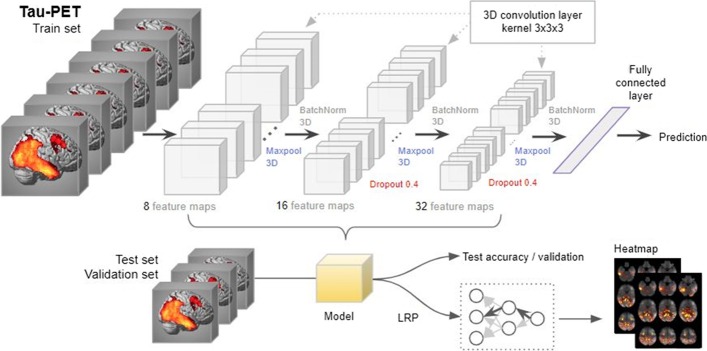
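As an illustration of the weight update described above, the following minimal sketch runs gradient descent on a single weight until the derivative is numerically zero. The toy neuron, the target value, and the step size `lr` are assumptions made for the example and are not taken from the study.

```python
def gradient_step(w, grad, lr=1.0):
    """One update: w(t+1) = w(t) - lr * dError/dw."""
    return w - lr * grad

# Toy problem (hypothetical): one neuron y = w * x with a squared error.
x, target = 2.0, 6.0
w = 0.0
lr = 0.1  # step size; an assumption, not stated in the text
for step in range(100):
    y_out = w * x
    # Chain rule: dError/dw = dError/dy * dy/dw, with Error = 0.5 * (y - target)^2
    grad = (y_out - target) * x
    if abs(grad) < 1e-9:  # stop once the derivative is numerically zero
        break
    w = gradient_step(w, grad, lr)
```

In this toy case the weight converges to 3, the value at which the output matches the target and the derivative vanishes.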

Net is the weighted sum of the inputs plus the bias, and Yoj is the output of neuron j. A convolutional neural network (CNN) inserts convolution and pooling layers into this basic neural network structure to reduce complexity. Since CNNs are widely used in the field of visual recognition, we used a CNN for the classification of AD from CN [27]. The overall architecture of the 3D CNN that we used is shown in Fig. 1. To avoid excessive epochs that can lead to overfitting, an early stopping method was applied to cease training if the model did not show improvement over 10 iterations. The model was trained using Adam, a first-order gradient-based stochastic optimization algorithm [28], with a learning rate of 0.0001 and a batch size of 4. Feature maps (8, 16, and 32 features) were extracted from three hidden layers, with Maxpool3D and BatchNorm3D applied to each layer [29]. Dropout (0.4) was applied to the second and third layers.

Five-fold cross-validation was applied to measure the classifier performance for distinguishing AD from CN. All participants were randomly partitioned into 5 subsets, each with the same ratio of CN and AD participants. One subset was selected for testing and the remaining four subsets were used for training. Among the four training subsets, one subset (validation) was used without augmentation to tune the weights of the layers without overfitting, and the remaining three subsets were augmented in three ways: flipping the image data, shifting the position within two voxels, and shifting the position simultaneously with the flip. Each fold was repeated four times as a robustness check, and the mean accuracy of the four repeats was used as the final accuracy. PyTorch 1.0.1 was used to design neural networks and load pre-trained weights, and all of the programs were run on Python 3.5.
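The partitioning scheme described above can be sketched as follows. This is a minimal illustration that assumes 66 AD and 66 CN scans; the helper `stratified_folds`, the random seed, and the choice of which folds serve as test and validation sets are hypothetical.

```python
import random

def stratified_folds(labels, k=5, seed=0):
    """Return k lists of indices with (nearly) equal class ratios in each fold."""
    rng = random.Random(seed)
    folds = [[] for _ in range(k)]
    for cls in sorted(set(labels)):
        idx = [i for i, y in enumerate(labels) if y == cls]
        rng.shuffle(idx)
        for pos, i in enumerate(idx):
            folds[pos % k].append(i)  # deal indices round-robin across folds
    return folds

labels = ["AD"] * 66 + ["CN"] * 66
folds = stratified_folds(labels)

test_fold, val_fold = 0, 1  # which fold plays which role is an assumption
test_idx = folds[test_fold]
val_idx = folds[val_fold]
train_idx = [i for f in range(5) if f not in (test_fold, val_fold) for i in folds[f]]

# Original + flip + shift + flip-and-shift gives 4 images per training scan.
n_augmented = 4 * len(train_idx)
```

With these assumptions, the three training folds hold 78 scans and 312 images after augmentation, which matches the fold1 training counts reported in Table 2.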

Fig. 1.

3D convolutional neural network (3D-CNN)-based and layer-wise relevance propagation (LRP)-based framework for the classification of Alzheimer’s disease and the identification of informative features
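A network of this general shape might be sketched in PyTorch as below. Only the feature-map counts (8, 16, 32), the use of 3D max pooling and batch normalization in each layer, the dropout rate of 0.4 on the second and third layers, the Adam optimizer, the learning rate, and the batch size come from the text; the class name `TauPETNet`, kernel and pooling sizes, the ReLU activation, the pooled linear head, and the toy input size are assumptions.

```python
import torch
from torch import nn

class TauPETNet(nn.Module):
    """Three conv blocks (8, 16, 32 feature maps) followed by a linear classifier."""

    def __init__(self, n_classes=2):
        super().__init__()

        def block(c_in, c_out, dropout=0.0):
            layers = [
                nn.Conv3d(c_in, c_out, kernel_size=3, padding=1),  # kernel size assumed
                nn.BatchNorm3d(c_out),
                nn.ReLU(),        # activation assumed
                nn.MaxPool3d(2),  # pooling size assumed
            ]
            if dropout > 0:
                layers.append(nn.Dropout(dropout))
            return nn.Sequential(*layers)

        self.features = nn.Sequential(
            block(1, 8),
            block(8, 16, dropout=0.4),
            block(16, 32, dropout=0.4),
        )
        self.pool = nn.AdaptiveAvgPool3d(1)  # classifier head design assumed
        self.classifier = nn.Linear(32, n_classes)

    def forward(self, x):
        x = self.pool(self.features(x)).flatten(1)
        return self.classifier(x)

model = TauPETNet()
optimizer = torch.optim.Adam(model.parameters(), lr=1e-4)

model.eval()
with torch.no_grad():
    batch = torch.randn(4, 1, 16, 16, 16)  # batch of 4 toy volumes, not real PET data
    probs = torch.softmax(model(batch), dim=1)
```

The global average pooling before the linear layer keeps the sketch independent of the input volume size; the authors' actual head may differ.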

Application of the AD-CN derived classification model to MCI

After an AD classification model was constructed using AD and CN groups, the model was applied to the tau PET scans from the MCI participants to calculate AD probability scores. The AD probability scores were distributed from 0 to 1, and individuals with AD probability scores closer to 1 were classified as having AD characteristics, and individuals with scores closer to 0 were classified as having CN characteristics.
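One way such a score can arise is sketched below, assuming the classifier ends in a two-class softmax; the text does not state how the score is computed, so this is an assumption. The function names, the example logits, and the 0.5 cut-off are likewise hypothetical.

```python
import math

def ad_probability(logit_cn, logit_ad):
    """Two-class softmax; returns the probability assigned to the AD class."""
    m = max(logit_cn, logit_ad)  # subtract the max for numerical stability
    e_cn, e_ad = math.exp(logit_cn - m), math.exp(logit_ad - m)
    return e_ad / (e_cn + e_ad)

def label_from_score(score, threshold=0.5):
    """Scores nearer 1 are read as AD-like, scores nearer 0 as CN-like."""
    return "AD-like" if score > threshold else "CN-like"

# Hypothetical (CN logit, AD logit) pairs for three scans.
logits = [(2.0, -1.0), (0.5, 1.0), (-3.0, 3.0)]
scores = [ad_probability(cn, ad) for cn, ad in logits]
groups = [label_from_score(s) for s in scores]
```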

Identification of informative features for AD classification

We applied a layer-wise relevance propagation (LRP) algorithm to identify informative features and visualize the classification results [30, 31]. The LRP algorithm is used to determine the contribution of a single pixel of an input image to the specific prediction in the image classification task (for full details of the LRP algorithm, see [30]).

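The output $x_j$ of a neuron j is calculated by a nonlinear activation function g and a function h such as

$$x_j = g\left(h(x_i)\right), \qquad h(x_i) = \sum_i w_{ij} x_i + b_j$$

If the relevance score R of neuron j in layer l + 1 is set to $R_j^{(l+1)}$, the relevance score R sent to neuron i in layer l is represented as $R_{i \leftarrow j}^{(l,\,l+1)}$. So, the relevance of neuron j can be expressed as the following equation:

$$R_j^{(l+1)} = \sum_{i \in (l)} R_{i \leftarrow j}^{(l,\,l+1)}$$

Bach, et al. [30] proposed the following formula for calculating $R_{i \leftarrow j}^{(l,\,l+1)}$:

$$R_{i \leftarrow j}^{(l,\,l+1)} = \left( (1+\beta)\,\frac{z_{ij}^{+}}{z_j^{+}} - \beta\,\frac{z_{ij}^{-}}{z_j^{-}} \right) R_j^{(l+1)}$$

Here, $z_{ij}^{+}$ represents the positive input that node i contributes to node j, $z_{ij}^{-}$ represents the negative input, and $z_j^{\pm} = \sum_i z_{ij}^{\pm}$. The variable β, which ranges from 0 to 1, controls the inhibition of the relevance redistribution. A larger β value (e.g. β = 1) makes the heat map clearer [31]. In this experiment, we set β = 1.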
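The redistribution step can be illustrated for a single neuron with a minimal sketch. It assumes the common β form of the rule, in which positive contributions are weighted by (1 + β) and negative contributions by β, and it ignores the bias term; the function name `lrp_beta` and the toy inputs are hypothetical.

```python
def lrp_beta(x, w, relevance_j, beta=1.0):
    """Redistribute the relevance of one neuron j onto its inputs i."""
    z = [xi * wi for xi, wi in zip(x, w)]  # contributions z_ij = x_i * w_ij
    z_pos = sum(v for v in z if v > 0)     # total positive input to neuron j
    z_neg = sum(v for v in z if v < 0)     # total negative input to neuron j
    out = []
    for v in z:
        pos = v / z_pos if v > 0 and z_pos != 0 else 0.0
        neg = v / z_neg if v < 0 and z_neg != 0 else 0.0
        out.append(((1 + beta) * pos - beta * neg) * relevance_j)
    return out

x = [1.0, 2.0, 0.5]    # toy activations of three input neurons
w = [0.5, -0.25, 1.0]  # toy weights into neuron j
r_inputs = lrp_beta(x, w, relevance_j=1.0, beta=1.0)
```

When a neuron receives both positive and negative inputs, the redistributed scores sum to the relevance of neuron j, because the weights (1 + β) and β differ by exactly 1.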

Whole-brain imaging analysis

A voxel-wise whole brain analysis to identify brain regions in the tau PET SUVR images showing significantly higher tau deposition in AD relative to CN was conducted in SPM12. The analysis was masked for grey plus white matter. The voxel-wise family-wise error (FWE) correction was applied at p < 0.05, with a cluster size of ≥ 50 voxels for adjustment for multiple comparisons.

Results

In the analysis, 300 ADNI participants (66 CN, 66 AD, 97 EMCI, and 71 LMCI) who had baseline tau PET scans were included. Sample demographics are given in Table 1.

Table 1.

Demographic information

| | AD | CN | EMCI | LMCI | Total |
|---|---|---|---|---|---|
| n | 66 | 66 | 97 | 71 | 300 |
| Age, mean (SD) | 76.6 (8.9) | 69.3 (5.4) | 73.4 (7.5) | 73.4 (8.0) | 73.2 (7.5) |
| % male | 56.1% | 40.0% | 37.9% | 66.7% | 50.2% |
| Education, mean (SD) | 15.8 (2.5) | 17.2 (2.1) | 16.3 (2.8) | 16.4 (2.5) | 16.4 (2.5) |
| % amyloid + | 90.9% | 27.3% | 40.9% | 48.5% | 51.9% |
| % ApoE4 carriers | 51.5% | 25.7% | 35.1% | 28.2% | 35.1% |

Classification of AD from CN

We developed an image classifier to distinguish AD from CN by training a 3D CNN-based deep learning model on tau PET images. As fewer individuals with AD than CN individuals had tau PET data, we randomly selected the same number of CN participants (66 CN) to train the classifier on a balanced dataset. Class imbalance is a well-known issue in binary classification: when the majority of the participants are healthy, a classifier tends to assign cases to the majority group, causing biased classification [32]. We therefore used a random under-sampling (RUS) method to reduce the number of samples from the majority group. All analyses were performed using five-fold cross-validation to reduce the likelihood of overfitting. Ultimately, validation in a novel independent data set will be important when such data become available. Our deep learning-based classification model of AD from CN yielded an average accuracy of 90.8% with a standard deviation of 2% from five-fold cross-validation (Table 2).
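Random under-sampling can be sketched as follows. This is a minimal illustration in which the size of the CN pool (200) is made up for the example, and the function name `random_under_sample` and the seed are hypothetical.

```python
import random

def random_under_sample(labels, seed=0):
    """Return sorted indices keeping an equal number of samples per class."""
    rng = random.Random(seed)
    by_class = {}
    for i, y in enumerate(labels):
        by_class.setdefault(y, []).append(i)
    n_min = min(len(idx) for idx in by_class.values())  # size of the smallest class
    kept = []
    for idx in by_class.values():
        kept.extend(rng.sample(idx, n_min))  # draw without replacement
    return sorted(kept)

# Hypothetical pool: 66 AD scans and a larger CN pool (CN pool size is made up).
labels = ["AD"] * 66 + ["CN"] * 200
kept = random_under_sample(labels)
balanced = [labels[i] for i in kept]
```

Every sample of the minority class is retained, while the majority class is reduced to the same count.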

Table 2.

All participants were partitioned into 5 subsets randomly, but every subset has the same ratio of CN and AD participants

| Fold | Train (augmented) | Val | Test | Acc. r1 | Epoch | Acc. r2 | Epoch | Acc. r3 | Epoch | Acc. r4 | Epoch | Mean acc | SD |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| fold1 | 78 (312) | 28 | 26 | 85.7 | 23 | 92.9 | 24 | 89.3 | 21 | 85.7 | 27 | 88.4 | 3.0 |
| fold2 | 78 (312) | 28 | 26 | 96.2 | 20 | 96.2 | 21 | 92.3 | 21 | 88.5 | 22 | 93.3 | 3.2 |
| fold3 | 80 (320) | 26 | 26 | 100 | 35 | 92.3 | 28 | 88.5 | 27 | 88.5 | 30 | 92.3 | 4.7 |
| fold4 | 80 (320) | 26 | 26 | 92.3 | 24 | 88.5 | 31 | 88.5 | 38 | 88.5 | 29 | 89.4 | 1.7 |
| fold5 | 80 (320) | 26 | 26 | 92.3 | 50 | 80.8 | 36 | 96.2 | 35 | 92.3 | 34 | 90.4 | 5.8 |

One subset was selected for testing and the remaining four subsets were used for training. Among the four subsets for training, one subset (validation) was used without applying augmentation for tuning the weights of the layers without overfitting and the remaining three subsets were augmented. The numbers in parentheses are the training images after applying augmentation. The experiment was repeated four times for each fold (Acc. r1 ~ r4), and the mean accuracy was considered as the final accuracy of the fold. If the accuracy for the testing subset did not improve further within up to ten iterations, the training was stopped (epoch)
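The summary accuracy can be recomputed from the per-fold means in Table 2 with a short sketch. Whether the reported standard deviation is the sample or the population form is not stated, so the use of `stdev` here is an assumption; both forms round to 2.

```python
from statistics import mean, pstdev, stdev

# Per-fold mean accuracies (%) copied from Table 2.
fold_means = [88.4, 93.3, 92.3, 89.4, 90.4]

overall_mean = mean(fold_means)
overall_sd = stdev(fold_means)       # sample SD (assumed form)
overall_sd_pop = pstdev(fold_means)  # population SD, shown for comparison
```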

Identification of informative features for AD classification

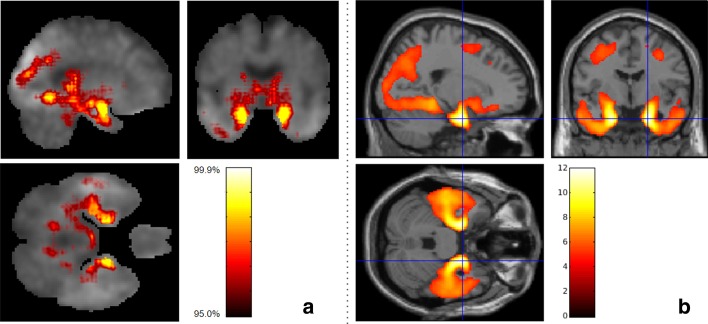

The LRP algorithm generated relevance heatmaps in the tau PET image to identify which brain regions play a significant role in a deep learning-based AD classification model. After selecting an AD classification model with the highest accuracy in each fold, we generated five heatmaps and selected the top ten regions with the highest contribution. Figure 2a shows a visualization of the relevance heatmap in three orientations of our 3D CNN-based classification of AD from CN. The heatmap displays the primary brain regions that contributed to the classification, color-coded with increasing values from red to yellow. The colored regions in the heatmap include the hippocampus, parahippocampal gyrus, thalamus, and fusiform gyrus (Fig. 2a). For comparison with our 3D CNN-based LRP results, Fig. 2b shows the results of whole brain voxel-wise analysis in SPM12 to identify brain regions where there are significant differences between AD and CN in brain tau deposition (FWE corrected p value < 0.05; minimum cluster size (k) = 50). AD had significantly higher tau deposition in widespread regions including the bilateral temporal lobes with global maximum differences in the right and left parahippocampal regions, compared to CN (Fig. 2b). The informative regions for AD classification in the LRP results are very similar to those found using SPM12, but the 3D CNN-based LRP identified smaller focal regions.
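Ranking regions by their contribution could be done along the following lines. The text does not say how voxel-level relevance was aggregated into regions, so summing positive relevance within atlas labels is an assumption, and the function name, region labels, and values are hypothetical.

```python
from collections import defaultdict

def top_regions(relevance, region_labels, n=10):
    """Sum positive relevance per atlas region and return the n highest regions."""
    totals = defaultdict(float)
    for r, region in zip(relevance, region_labels):
        if region is not None and r > 0:  # skip unlabeled voxels and negative relevance
            totals[region] += r
    return sorted(totals.items(), key=lambda kv: kv[1], reverse=True)[:n]

# Toy voxel-wise relevance values with a hypothetical atlas label per voxel.
relevance = [0.9, 0.4, 0.1, 0.7, -0.2, 0.3]
region_labels = ["hippocampus", "hippocampus", "thalamus", "fusiform", "thalamus", None]
ranking = top_regions(relevance, region_labels, n=2)
```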

Fig. 2.

Heatmaps of 3D-CNN classifications compared to voxel-wise group difference maps between AD and CN participant groups. a Relevance heatmaps of 3D-CNN classification of AD and CN. The bright areas represent the regions that most contribute to the CN/AD classification in CNN. Selected regions with the highest contribution include the hippocampus, parahippocampal gyrus, thalamus, fusiform gyrus, and diencephalon. b SPM maps show similar regions of the brain as the 3D-CNN maps where tau deposition is significantly higher in the AD group compared to the CN group (Voxel-wise FWE-corrected p value < 0.05; minimum cluster size (k) = 50)

Classification of MCI based on the AD-CN classification model

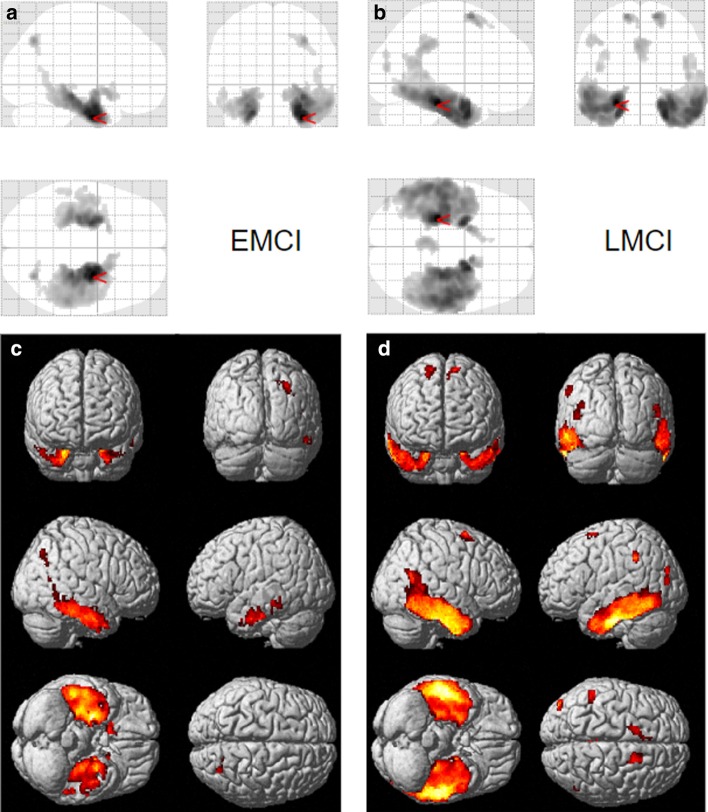

We calculated the AD probability scores of MCI participants (97 EMCI and 71 LMCI, separately) using the classification model generated above. Figure 3a and b show scatter plots of the AD probability scores of EMCI and LMCI participants, respectively, against bilateral mean tau deposition in the medial temporal lobe (which includes the entorhinal cortex, fusiform, and parahippocampal gyri). The correlation coefficients were r = 0.43 for EMCI and r = 0.49 for LMCI, with greater tau deposition levels in the medial temporal lobe associated with higher AD probability scores. Figure 3c and d show mean tau accumulation in the medial temporal cortex of EMCI and LMCI, respectively, for participants grouped by AD probability score range (0 ≤ AD probability score ≤ 0.05 versus 0.95 ≤ AD probability score ≤ 1.00, the ranges to which 65% of EMCI and 62% of LMCI belong; 0 ≤ AD probability score < 0.5 versus 0.5 < AD probability score ≤ 1.00). In EMCI (Fig. 3c), a comparison between participants with 0 ≤ AD probability score ≤ 0.05 and those with 0.95 ≤ AD probability score ≤ 1.00 yielded a difference of 0.19 SUVR in the medial temporal lobe. In LMCI (Fig. 3d), the comparison between participants with low AD probability scores (0 ≤ AD probability score ≤ 0.05) and those with high AD probability scores (0.95 ≤ AD probability score ≤ 1.00) yielded a difference of 0.26 SUVR in the medial temporal cortex. Whole brain voxel-wise analysis in SPM12 was performed to identify brain regions showing differences in tau deposition between MCI participants with low AD probability scores (0 ≤ AD probability score ≤ 0.05) and those with high AD probability scores (0.95 ≤ AD probability score ≤ 1.00). In EMCI (Fig. 4a, c) and LMCI (Fig. 4b, d), voxel-wise analysis identified significant group differences in the bilateral temporal lobes including the entorhinal cortex. In addition, the differences in tau deposition were more widespread in LMCI compared to EMCI (Fig. 4).
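The two summaries used here, the Pearson correlation between scores and regional SUVR and the difference in mean SUVR between the low-score and high-score groups, can be sketched as follows. The values are toy numbers and not ADNI data; the function and variable names are hypothetical.

```python
from math import sqrt

def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)

# Toy values (hypothetical): AD probability scores and medial temporal SUVR.
scores = [0.02, 0.10, 0.45, 0.80, 0.97, 0.99]
mtl_suvr = [1.10, 1.25, 1.20, 1.40, 1.35, 1.60]
r = pearson_r(scores, mtl_suvr)

# Mean SUVR difference between the extreme score ranges used in the text.
low = [s for p, s in zip(scores, mtl_suvr) if p <= 0.05]
high = [s for p, s in zip(scores, mtl_suvr) if p >= 0.95]
diff = sum(high) / len(high) - sum(low) / len(low)
```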

Fig. 3.

Comparison of classifier-derived AD probability scores with tau accumulation in the medial temporal lobe (MTL). The correlation of the deep learning (DL) score with the amount of tau accumulated in the MTL was r = 0.43 for EMCI (a) and r = 0.49 for LMCI (b). The red and blue bars in c and d show the mean tau level of images with DL scores in the bottom range (0 to 0.05) and in the top range (0.95 to 1.00). The light red and light blue bars in c and d show the mean tau level of images with DL scores below and above 0.5

Fig. 4.

Voxel-wise differences between MCI participants with AD-like tau patterns and CN-like tau patterns defined using the 3D-CNN classifier. Significantly greater tau was observed in EMCI (a, c) and LMCI (b, d) with high AD probability (“AD-like,” 0.95 ≤ AD probability score ≤ 1.00) relative to the low AD probability group (“CN-like,” 0 ≤ AD probability score ≤ 0.05). Voxel-wise significance maps are displayed at FWE corrected p value < 0.05; minimum cluster size (k) = 50

Discussion

We developed a deep learning framework for detecting informative features in tau PET for the classification of Alzheimer’s disease. After training a 3D CNN-based AD/CN classifier on 132 [18F]flortaucipir PET images to distinguish AD with > 90% accuracy, heatmaps were generated by an LRP algorithm to show the most important regions for the classification. This model was then applied to [18F]flortaucipir PET images from 168 MCI participants to classify them into “AD similar” and “CN similar” groups for further investigation of the morphological characteristics of the tau deposition.

Maass, et al. [7] examined the key regions of in vivo tau pathology in ADNI using a data-driven approach and determined that the major regions contributing to a high global tau signal mainly overlapped with Braak stage III ROIs (i.e., amygdala, parahippocampal gyri and fusiform). Our deep learning-based results correspond well to the pattern reported by Maass, et al. [7] on a more limited data set.

It is noteworthy that stages III / IV can be seen in both CN and AD patients, while stages I / II are common in CN and stages V / VI are common for AD patients [3]. Thus, it is difficult to predict AD by measuring tau deposition in stage III / IV ROIs, highlighting the importance of understanding the morphological characteristics of tau. Our heatmaps, which visualized the regions driving the classification of AD and CN using deep learning on tau PET images, showed a distribution pattern similar to group differences in tau deposition between AD and CN assessed using voxel-wise analysis in SPM12. This finding indicates that the deep learning classifier used the morphological characteristics of the tau distribution for classifying AD from CN. In particular, the heatmaps show that the hippocampus, parahippocampal gyrus, thalamus and fusiform gyrus were primarily used to classify AD from CN. These results support existing research showing that tau accumulation in memory-related areas plays an important role in the development of AD [33, 34].

Early, accurate and efficient diagnosis of AD is important for initiation of effective treatment. Prognostic prediction of the likelihood of conversion of MCI to AD plays a significant role in therapeutic development and ultimately will be important for effective patient care. Thus, the CN vs. AD classifier was used to generate a score showing whether the tau distribution in MCI participants was similar to or different from that seen in AD. When the AD probability score generated by the classifier was high, suggesting high similarity to AD, the MCI participants generally had the characteristic tau morphology seen in AD. In addition, we applied this method to both EMCI and LMCI participants. Pearson correlation coefficients between AD probability scores and the bilateral mean SUVR in the medial temporal lobe were r = 0.43 for EMCI and r = 0.49 for LMCI. The difference in tau deposition between participants with low (0 to 0.05) and high (0.95 to 1.00) AD probability scores was 7.1% more in LMCI than in EMCI. Thus, the classifier determined that the tau deposition of LMCI participants is more similar to that seen in AD than that of EMCI participants. This is in line with numerous reports of biomarkers in late MCI where there is considerable overlap with early stage AD pathology [18].

Conclusion

Deep learning can be used to classify tau PET images from AD patients versus controls. Furthermore, this classifier can score the tau distribution by its similarity to AD when applied to scans from older individuals with MCI. A deep learning derived AD-like tau deposition pattern may be useful for early detection of disease during the prodromal or possibly even preclinical stages of AD on an individual basis. Advances in predictive modeling are needed to develop accurate precision medicine tools for AD and related neurodegenerative disorders, and further developments can be expected with inclusion of multi-modality data sets and larger samples.

Acknowledgements

Data collection and sharing for this project was funded by the Alzheimer's Disease Neuroimaging Initiative (ADNI) and Department of Defense (DOD). ADNI is funded by the National Institute on Aging, the National Institute of Biomedical Imaging and Bioengineering, and through generous contributions from the following: AbbVie, Alzheimer’s Association; Alzheimer’s Drug Discovery Foundation; Araclon Biotech; BioClinica, Inc.; Biogen; Bristol-Myers Squibb Company; CereSpir, Inc.; Cogstate; Eisai Inc.; Elan Pharmaceuticals, Inc.; Eli Lilly and Company; EuroImmun; F. Hoffmann-La Roche Ltd and its affiliated company Genentech, Inc.; Fujirebio; GE Healthcare; IXICO Ltd.; Janssen Alzheimer Immunotherapy Research & Development, LLC.; Johnson & Johnson Pharmaceutical Research & Development LLC.; Lumosity; Lundbeck; Merck & Co., Inc.; Meso Scale Diagnostics, LLC.; NeuroRx Research; Neurotrack Technologies; Novartis Pharmaceuticals Corporation; Pfizer Inc.; Piramal Imaging; Servier; Takeda Pharmaceutical Company; and Transition Therapeutics. The Canadian Institutes of Health Research is providing funds to support ADNI clinical sites in Canada. Private sector contributions are facilitated by the Foundation for the National Institutes of Health (www.fnih.org). The grantee organization is the Northern California Institute for Research and Education, and the study is coordinated by the Alzheimer’s Therapeutic Research Institute at the University of Southern California. ADNI data are disseminated by the Laboratory for Neuro Imaging at the University of Southern California. The authors are grateful to Paula Bice, PhD for editorial assistance.

Data used in preparation of this article were obtained from the Alzheimer’s Disease Neuroimaging Initiative (ADNI) database (adni.loni.usc.edu). As such, the investigators within the ADNI contributed to the design and implementation of ADNI and/or provided data but did not participate in analysis or writing of this report. A complete listing of ADNI investigators can be found at: https://adni.loni.usc.edu/wp-content/uploads/how_to_apply/ADNI_Acknowledgement_List.pdf

About this supplement

This article has been published as part of BMC Bioinformatics Volume 21 Supplement 21 2020: Accelerating Bioinformatics Research with ICIBM 2020. The full contents of the supplement are available at https://bmcbioinformatics.biomedcentral.com/articles/supplements/volume21-supplement-21.

Abbreviations

- AD

Alzheimer’s disease

- MCI

Mild cognitive impairment

- EMCI

Early mild cognitive impairment

- LMCI

Late mild cognitive impairment

- CN

Cognitively normal

- ADNI

Alzheimer’s Disease Neuroimaging Initiative

- PET

Positron emission tomography

- MRI

Magnetic resonance imaging

- SPM

Statistical parametric mapping

- SUVR

Standard uptake value ratio

- CNN

Convolutional neural network

- LRP

Layer-wise relevance propagation

- FWE

Family-wise error

- RUS

Random under-sampling

Authors’ contributions

TJ, KN, and AS: conceptualization and study design. TJ, SR: data processing. TJ: conducted the analysis. TJ, KN: drafting manuscript. All authors contributed to, read, and approved the final manuscript.

Funding

This work was supported, in part, by grants from the National Institutes of Health (NIH) and includes the following sources: U01 AG024904, DOD W81XWH-12–2-0012, P30 AG010133, R01 AG019771, R01 LM013463, DOD W81XWH-14–2-0151, R01 AG057739, R01 CA129769, R01 LM012535, R03 AG063250, U01 AG068057, K01 AG049050, R01 AG061788. The funders played no role in the design of the study, analysis, and interpretation of the data or in writing the manuscript.

Availability of data and materials

The datasets used and analyzed during the study are available in the ADNI LONI repository, https://adni.loni.usc.edu/

Ethics approval and consent to participate

Ethics approval was not required because the human data are publicly available from the ADNI website and none of the data are identifiable.

Consent for publication

Not applicable. Secondary analysis of publicly available data.

Competing interests

The authors declare that they have no competing interests.

Footnotes

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Contributor Information

Taeho Jo, Email: tjo@iu.edu.

Kwangsik Nho, Email: knho@iupui.edu.

Shannon L. Risacher, Email: srisache@iupui.edu

Andrew J. Saykin, Email: asaykin@iupui.edu

References

- 1.Weiner MW, Veitch DP, Aisen PS, Beckett LA, Cairns NJ, Green RC, Harvey D, Jack CR, Jr, Jagust W, Morris JC. The Alzheimer's Disease neuroimaging initiative 3: continued innovation for clinical trial improvement. Alzheimer's & Dementia. 2017;13(5):561–571. doi: 10.1016/j.jalz.2016.10.006. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.Cho H, Choi JY, Hwang MS, Kim YJ, Lee HM, Lee HS, Lee JH, Ryu YH, Lee MS, Lyoo CH. In vivo cortical spreading pattern of tau and amyloid in the Alzheimer disease spectrum. Ann Neurol. 2016;80(2):247–258. doi: 10.1002/ana.24711. [DOI] [PubMed] [Google Scholar]

- 3.Schöll M, Lockhart SN, Schonhaut DR, O’Neil JP, Janabi M, Ossenkoppele R, Baker SL, Vogel JW, Faria J, Schwimmer HD. PET imaging of tau deposition in the aging human brain. Neuron. 2016;89(5):971–982. doi: 10.1016/j.neuron.2016.01.028. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.Risacher SL, Fandos N, Romero J, Sherriff I, Pesini P, Saykin AJ, Apostolova LG. Plasma amyloid beta levels are associated with cerebral amyloid and tau deposition. Alzheimer's Dement Diagnos Assess Dis Monit. 2019;11:510–519. doi: 10.1016/j.dadm.2019.05.007. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Braak H, Braak E. Neuropathological stageing of Alzheimer-related changes. Acta Neuropathol. 1991;82(4):239–259. doi: 10.1007/BF00308809. [DOI] [PubMed] [Google Scholar]

- 6.Johnson KA, Schultz A, Betensky RA, Becker JA, Sepulcre J, Rentz D, Mormino E, Chhatwal J, Amariglio R, Papp K. Tau positron emission tomographic imaging in aging and early A lzheimer disease. Ann Neurol. 2016;79(1):110–119. doi: 10.1002/ana.24546. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.Maass A, Landau S, Baker SL, Horng A, Lockhart SN, La Joie R, Rabinovici GD, Jagust WJ. Initiative AsDN: comparison of multiple tau-PET measures as biomarkers in aging and Alzheimer's disease. Neuroimage. 2017;157:448–463. doi: 10.1016/j.neuroimage.2017.05.058. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8.Villemagne VL, Fodero-Tavoletti MT, Masters CL, Rowe CC. Tau imaging: early progress and future directions. Lancet Neurol. 2015;14(1):114–124. doi: 10.1016/S1474-4422(14)70252-2. [DOI] [PubMed] [Google Scholar]

- 9.Deters KD, Risacher SL, Kim S, Nho K, West JD, Blennow K, Zetterberg H, Shaw LM, Trojanowski JQ, Weiner MW. Plasma tau association with brain atrophy in mild cognitive impairment and Alzheimer’s disease. J Alzheimer's Dis. 2017;58(4):1245–1254. doi: 10.3233/JAD-161114. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10.Jo T, Nho K, Saykin AJ. Deep learning in Alzheimer’s disease: diagnostic classification and prognostic prediction using neuroimaging data. Front Aging Neurosci. 2019;11:220. doi: 10.3389/fnagi.2019.00220. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11.Litjens G, Kooi T, Bejnordi BE, Setio AAA, Ciompi F, Ghafoorian M, Van Der Laak JA, Van Ginneken B, Sánchez CI. A survey on deep learning in medical image analysis. Med Image Anal. 2017;42:60–88. doi: 10.1016/j.media.2017.07.005. [DOI] [PubMed] [Google Scholar]

- 12.Shen D, Wu G, Suk H-I. Deep learning in medical image analysis. Annu Rev Biomed Eng. 2017;19:221–248. doi: 10.1146/annurev-bioeng-071516-044442. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13.Islam J, Zhang Y. Understanding 3D CNN Behavior for Alzheimer's Disease Diagnosis from Brain PET Scan. 2019. arXiv preprint: https://arXiv.org/191204563.

- 14.Palmqvist S, Schöll M, Strandberg O, Mattsson N, Stomrud E, Zetterberg H, Blennow K, Landau S, Jagust W, Hansson O. Earliest accumulation of β-amyloid occurs within the default-mode network and concurrently affects brain connectivity. Nat Commun. 2017;8(1):1–13. doi: 10.1038/s41467-017-01150-x. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15.Panza F, Lozupone M, Logroscino G, Imbimbo BP. A critical appraisal of amyloid-β-targeting therapies for Alzheimer disease. Nat Rev Neurol. 2019;15(2):73–88. doi: 10.1038/s41582-018-0116-6. [DOI] [PubMed] [Google Scholar]

- 16.Braak H, Alafuzoff I, Arzberger T, Kretzschmar H, Del Tredici K. Staging of Alzheimer disease-associated neurofibrillary pathology using paraffin sections and immunocytochemistry. Acta Neuropathol. 2006;112(4):389–404. doi: 10.1007/s00401-006-0127-z. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 17.Schwarz AJ, Yu P, Miller BB, Shcherbinin S, Dickson J, Navitsky M, Joshi AD, Devous MD, Sr, Mintun MS. Regional profiles of the candidate tau PET ligand 18F-AV-1451 recapitulate key features of Braak histopathological stages. Brain. 2016;139(5):1539–1550. doi: 10.1093/brain/aww023.

- 18.Veitch DP, Weiner MW, Aisen PS, Beckett LA, Cairns NJ, Green RC, Harvey D, Jack CR, Jr, Jagust W, Morris JC. Understanding disease progression and improving Alzheimer's disease clinical trials: recent highlights from the Alzheimer's Disease Neuroimaging Initiative. Alzheimer's Dementia. 2019;15(1):106–152. doi: 10.1016/j.jalz.2018.08.005.

- 19.Saykin AJ, Shen L, Yao X, Kim S, Nho K, Risacher SL, Ramanan VK, Foroud TM, Faber KM, Sarwar N. Genetic studies of quantitative MCI and AD phenotypes in ADNI: progress, opportunities, and plans. Alzheimer's Dementia. 2015;11(7):792–814. doi: 10.1016/j.jalz.2015.05.009.

- 20.LeCun Y, Bengio Y, Hinton G. Deep learning. Nature. 2015;521(7553):436–444. doi: 10.1038/nature14539.

- 21.Richiardi J, Altmann A, Milazzo A-C, Chang C, Chakravarty MM, Banaschewski T, Barker GJ, Bokde AL, Bromberg U, Büchel C. Correlated gene expression supports synchronous activity in brain networks. Science. 2015;348(6240):1241–1244. doi: 10.1126/science.1255905.

- 22.Hecht-Nielsen R. Theory of the backpropagation neural network. In: Neural networks for perception. Elsevier; 1992. pp. 65–93.

- 23.Rumelhart DE, Hinton GE, Williams RJ. Learning representations by back-propagating errors. Nature. 1986;323(6088):533–536.

- 24.Bishop CM. Neural networks for pattern recognition. Oxford: Oxford University Press; 1995.

- 25.Ripley BD. Pattern recognition and neural networks. Cambridge: Cambridge University Press; 2007.

- 26.Schalkoff RJ. Artificial neural networks. New York: McGraw-Hill Higher Education; 1997.

- 27.Krizhevsky A, Sutskever I, Hinton GE. ImageNet classification with deep convolutional neural networks. In: Advances in neural information processing systems. 2012. pp. 1097–1105.

- 28.Kingma DP, Ba J. Adam: a method for stochastic optimization. 2014. arXiv preprint arXiv:1412.6980.

- 29.Ioffe S, Szegedy C. Batch normalization: accelerating deep network training by reducing internal covariate shift. In: International conference on machine learning. 2015. pp. 448–456.

- 30.Bach S, Binder A, Montavon G, Klauschen F, Müller K-R, Samek W. On pixel-wise explanations for non-linear classifier decisions by layer-wise relevance propagation. PLoS One. 2015;10(7).

- 31.Böhle M, Eitel F, Weygandt M, Ritter K. Layer-wise relevance propagation for explaining deep neural network decisions in MRI-based Alzheimer’s disease classification. Front Aging Neurosci. 2019;11:194. doi: 10.3389/fnagi.2019.00194.

- 32.Johnson JM, Khoshgoftaar TM. Survey on deep learning with class imbalance. J Big Data. 2019;6(1):27.

- 33.De Calignon A, Polydoro M, Suárez-Calvet M, William C, Adamowicz DH, Kopeikina KJ, Pitstick R, Sahara N, Ashe KH, Carlson GA. Propagation of tau pathology in a model of early Alzheimer's disease. Neuron. 2012;73(4):685–697. doi: 10.1016/j.neuron.2011.11.033.

- 34.Hyman BT, Van Hoesen GW, Damasio AR, Barnes CL. Alzheimer's disease: cell-specific pathology isolates the hippocampal formation. Science. 1984;225(4667):1168–1170. doi: 10.1126/science.6474172.

Associated Data

Data Availability Statement

The datasets used and analyzed during the study are available in the ADNI LONI repository, https://adni.loni.usc.edu/