Abstract

The segmentation of the brain ventricle (BV) and body in embryonic mice high-frequency ultrasound (HFU) volumes can provide useful information for biological researchers. However, manual segmentation of the BV and body requires substantial time and expertise. This work proposes a novel deep learning based end-to-end auto-context refinement framework, consisting of two stages. The first stage produces a low resolution segmentation of the BV and body simultaneously. The resulting probability map for each object (BV or body) is then used to crop a region of interest (ROI) around the target object in both the original image and the probability map to provide context to the refinement segmentation network. Joint training of the two stages provides significant improvement in Dice Similarity Coefficient (DSC) over using only the first stage (0.818 to 0.906 for the BV, and 0.919 to 0.934 for the body). The proposed method significantly reduces the inference time (102.36 to 0.09 s/volume ≈1000x faster) while slightly improves the segmentation accuracy over the previous methods using slide-window approaches.

Index Terms—: Image segmentation, high-frequency ultrasound, mouse embryo, volumetric deep learning

1. INTRODUCTION

The mouse, due to its high degree of homology with human genome, is widely used for studies of embryonic mutations. The physical expression of genetic mutations can be identified in terms of variations in the shape of the brain ventricle (BV) or other parts of the body [1]. High-frequency ultrasound (HFU) is well suited for imaging embryonic mice because it is noninvasive, real-time and can still provide fine-resolution volumetric images [2]. Manual segmentation of the BV and body is time-consuming necessitating the development of fully automatic and real-time segmentation algorithms [3].

Earlier works attempted to address segmentation of volumetric embryonic data. Nested Graph Cut (NGC) [4] was developed to perform the segmentation of the BV from a HFU mouse embryo head image manually cropped from a whole-body scan, and [5] extended to perform BV segmentation in whole-body images, which worked well on a small data set but failed to generalize to a larger unseen data set.

Inspired by the success of fully convolutional networks (FCN) for semantic segmentation [6], a deep-learning based framework for BV segmentation was proposed in [7] which outperformed the NGC based framework in [5] by a large margin. Because the BV makes up a very small portion (<0.5%) of the whole volume, the algorithm [7] first applied a volumetric convolutional neural network (CNN) on a 3D sliding window over the entire volume to identify a 3D bounding box containing the entire BV, followed by a FCN to segment the detected bounding box into BV or background. However, despite high accuracy (0.904 DSC for BV), hundreds of thousands of forward passes of a classification network is required. The challenges for body segmentation are similar to those for BV segmentation, except the extreme imbalance between foreground and background is somewhat alleviated (the body makes up around 10% of the whole volume). Hence, the localization step is not necessary for body segmentation. Qiu et al. [8] used the same FCN for BV segmentation in [7] to segment the body in a sliding window based manner. This sliding window based method is inefficient for the same reason as the localization network in [7]. The BV is contained inside the head of the body, which makes it possible to segment both of them simultaneously in a unified and efficient framework.

Roth et al. [9] focused on abdominal CT image segmentation. They applied two cascaded 3D FCNs using the initial segmentation results to localize the foreground organs and reduced the size of the 3D region which was input to the second FCN. The initial segmentation was used only for localization and was not concatenated with the raw image as input to the second FCN. Tang et al. [10] cascaded four UNets and trained them in an end-to-end manner for skin lesion segmentation. However, this framework did not use the segmentation output of previous UNet to reduce the spatial input to the next UNet and was restricted to 2D binary segmentation.

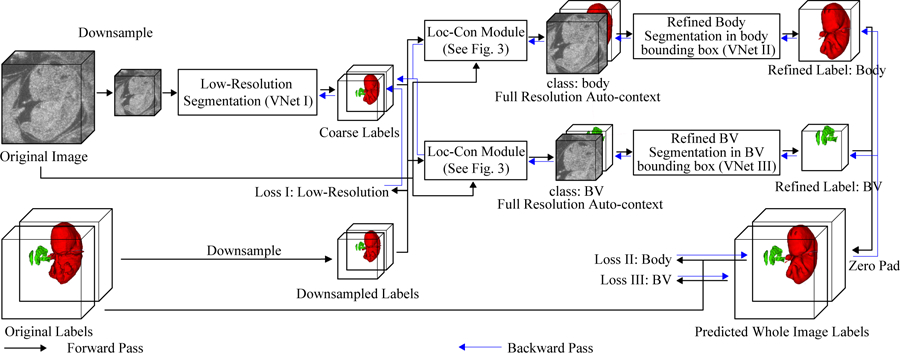

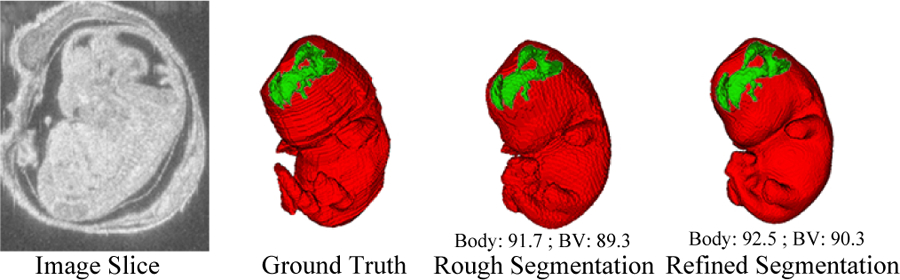

Here, we propose an efficient end-to-end auto-context refinement framework for joint BV and body segmentation from volumetric HFU images (Fig. 2). The idea behind auto-context [11] is to iteratively approach the ground truth by a sequence of models where the input and output of the previous model is concatenated to form the input for the next model such that the final segmentation is closer to the ground truth than the intermediate segmentation (Fig. 1). Specifically, a VNet like network (VNet I) [12] was first applied to a down-sampled HFU 3D image to jointly segment BV and body. The resulting low resolution BV label was then up-sampled to the original resolution, and a bounding box containing the BV was generated (Fig. 3). Next, the original image and the initial BV predicted probability map in the bounding box were concatenated as localized auto-context input and fed into another VNet (VNet III) to generate the refined BV label. A parallel process was used to generate final fine-resolution body segmentation using a third VNet (VNet II). Each VNet was trained separately and then fine-tuned in an end-to-end manner. Compared with other works, this framework has the following advantages:

The class imbalance problem posed by segmenting small structures in large volumes and the memory issue associated with large volumetric images were mitigated by cascading the networks from low resolution for the whole image to high resolution in the localized region.

The auto-context provided by concatenating the up-sampled low resolution predicted probability maps with the full resolution images allows efficient full resolution segmentation which can incorporate information from a wide field of view.

The combination of the two stages in a pipeline allows end-to-end training and efficient, real-time one-pass inference while achieving segmentation accuracy slightly better than the sliding-window based approach, which is the substantially more time consuming.

Fig. 2:

Diagram of overall pipeline.

Fig. 1:

An example of image data, ground truth label, initial rough segmentation and final refined segmentation. The numbers below the 3D segmentation are corresponding DSC.

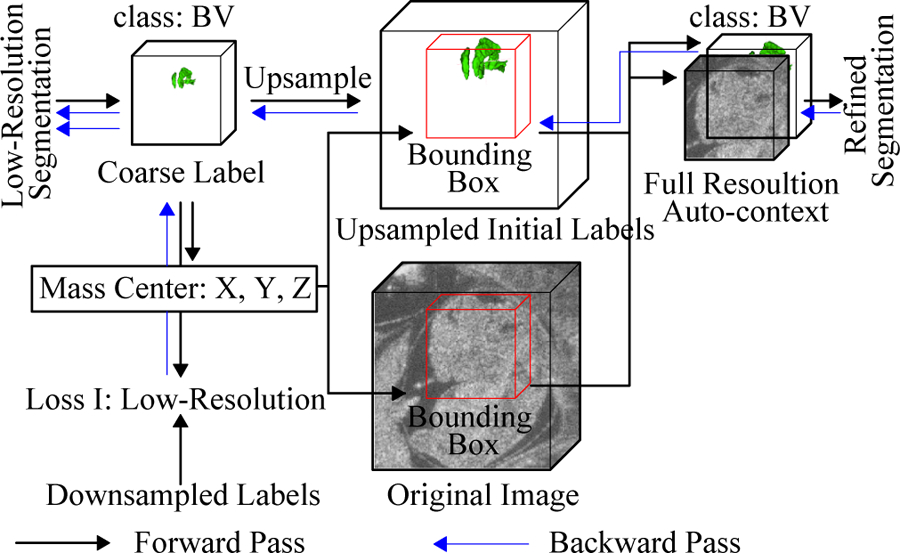

Fig. 3:

Diagram of localization-auto-context (Loc-Con) module for the BV. A similar configuration is used for the body. The gradient produced by the refinement loss can flow back to low resolution segmentation network (blue arrows).

2. METHODS

The overview of our proposed end-to-end BV and body segmentation framework is shown in Fig. 2. The pipeline consists of two stages: (1) initial segmentation and (2) segmentation refinement. The initial segmentation produces a joint low resolution segmentation maps for BV and body simultaneously. Next, the original data and the low resolution label for each object are passed to a localization-auto-context (Loc-Con) module (Fig. 3), which generates a bounding box for the object based on the centroid of the up-sampled predicted probability map, and concatenates the original (full-resolution) image and initial up-sampled predicted probability map as auto-context input for the refinement network for this object. Then, the refinement network generates a refined full resolution segmentation map within the bounding box. Finally, all the independently trained networks are jointly fine-tuned to further improve the segmentation performance.

2.1. Initial segmentation on low resolution

Because of memory constraints, the low resolution images were rescaled and processed at a size of 1603 voxels. A VNet-like [12] structure (VNet I) was trained to perform BV and body segmentation simultaneously at low resolution. The output of the VNet had 3 channels, representing the background, BV, and body. The Dice loss [12] for each class was summed and used as the training loss (loss I in Fig. 2).

2.2. Localization-auto-context module

To better utilize the information given from the low resolution segmentation result for each object, the Loc-Con module was introduced to generate the localized auto-context input for refinement. For each foreground object (BV or body), Loc-Con module steps were (Fig. 3):

Up-sample (trilinear interpolation) the initial segmentation map to original resolution.

Generate a fixed size bounding box (1603 for BV and 2563 for body) located at the center of predicted probability map for each class.

Concatenate the original resolution image and initial predicted probability map in the bounding box to create the auto-context input for the refinement network.

Going beyond the previous works [9][10], the Loc-Con module acts as an attention mechanism by leveraging the low resolution rough segmentation to crop a ROI (the bounding box) at the original resolution. It also draws on the conventional auto-context strategy [11] by providing an initial predicted probability map as a separate input channel. This initial map obtained from the whole image at the low resolution provides global context information, which helps to improve the final segmentation results. It also enables the gradient from the refinement networks (VNet II & III) to flow back to the initial segmentation network (VNet I), which makes end-to-end fine-tuning feasible (Fig. 2).

2.3. Pre-training of fine resolution refinement network

Two refinement networks (VNet II & III) were trained for BV and body, respectively. For each object, the high resolution raw image and the up-sampled initial segmentation probability map in the localized bounding box were concatenated and used as the input. The structure of the refinement network was exactly the same as the initial segmentation, except that it takes 2 channels as input and produces 1 channel as output. Using the object centroid information, the output was zero-padded back to original image size.

2.4. End-to-end refinement on fine resolution

During the pretraining of the refinement stage, the parameters of the initial segmentation network (VNet I) were frozen until the refinement network for each object (VNet II & III) converged. After that, all three networks were jointly optimized to minimize the sum of Dice losses measured on the fine resolution image (loss II & III in Fig. 2).

3. EXPERIMENTS

3.1. Data description and implementation details

The data set used in this work consisted of 231 HFU mouse embryo volumes which were acquired in utero and in vivo from pregnant mice (10–14.5 days after mating) using a 5-element 40-MHz annular array [2]. The dimensions of the HFU volumes vary from 150 × 161 × 81 to 210 × 281 × 282 voxels and the voxel size is 50 × 50 × 50 μm. For each of the 231 volumes, manual BV and body segmentations were conducted by trained research assistants using Amira, a commercial software. The data were then randomly split into 185 for training and 46 for testing.

All the neural network models were implemented in PyTorch 1.2 [13], with CUDA 9.1 using two NVIDIA Tesla P40s. To compensate for limited data, original images were randomly rotated from −180° to 180° along each of the three axes, then randomly translated −30 to 30 voxels and finally randomly flipped. During the initial pretraining step (VNet I), the Dice loss (loss I) between the predicted segmentations and the ground truth labels was averaged across the three classes (background, body and BV). During the pre-train refinement stage (VNet II & III) and end-to-end refinement stage (VNet I, II & III), the Dice loss for body and BV (loss II & III) were used to train the networks. All networks were trained with the Adam optimizer [14] with learning rate 10−2 for the initial segmentation and pre-train refinement stages. A learning rate of 10−3 was used for the end-to-end refinement stage.

3.2. Results and discussion

As shown in Table. 1, the initial segmentation achieves average DSC of 0.818 and 0.918 for BV and body respectively. The results on the body are still competitive, while the BV segmentation performance is unsatisfactory. This phenomenon is as expected due to the fact that BV is much smaller than the body. Hence, it is necessary to localize the ROI and refine the segmentation.

Table 1:

The Dice Similarity Coefficient (DSC) and inference time averaged over 46 test volumes for different methods.

In order to determine the effectiveness of the auto-context approach, refinement without concatenation only inputs the raw image cropped from the bounding box found in the localization step to the refinement network (without including the initial predicted probability map as a second channel). Compared to using the initial segmentation and the raw image (auto-context), this approach suffers from a significant degradation in DSC for the BV (from 0.894 to 0.878 DSC). Finally, end-to-end refinement improves BV DSC to 0.906 and body to 0.934. Although the performance is only slightly better than the previous sliding-window based methods [7][8], the new method has significantly shorter inference time (0.09 second for each image, compared to 102.36 second). For fair comparison, the networks from [7] and [8] were retrained using the same training set described here and evaluated on the same testing set.

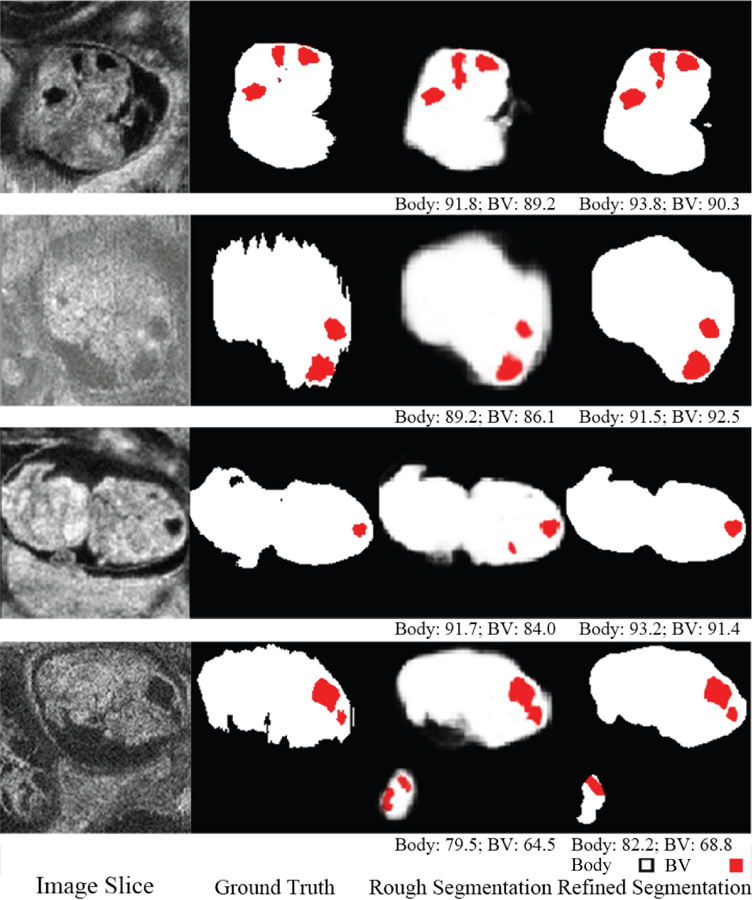

As shown in Fig. 4, the initial segmentation produces reasonable body segmentations along with very rough BV segmentations. After end-to-end refinement, the segmentation accuracy was substantially improved.

Fig. 4:

Qualitative segmentation results of end-to-end auto-context refinement framework for 4 ultrasound volumes. Red indicates BV, white indicates body and the numbers below the predicted segmentation are corresponding DSC. The second row is an image with motion artifacts so the ground truth is noisy in the body background boundary. The refined network produces a smooth boundary which is closer to the true physical structure. The last row is an image with one complete embryo and one partial embryo but only the complete one is labeled. The predicted label contained part of the incomplete embryo.

4. CONCLUSION

In this work, an end-to-end auto-context refinement framework was proposed consisting of two stages. The initial segmentation acts not only as a ROI localization module, but also provides global context information when fed as the second channel together with the original images to the network. Experiments demonstrate the necessity of this two-stage structure and the effectiveness of end-to-end fine-tuning. The proposed method achieves DSC of 0.906 and 0.934 for BV and body segmentation respectively, outperforming the previous methods in accuracy, while also being around a thousand times faster. In conclusion, the proposed algorithm could be invaluable for phenotyping studies.

Acknowledgments

The research described in this paper was supported in part by NIH grant EB022950 and HD097485.

5. REFERENCES

- [1].Kuo Jen-wei, Wang Yao, Aristizabal Orlando, Turnbull Daniel H, Ketterling Jeffrey, and Mamou Jonathan, “Automatic mouse embryo brain ventricle segmentation, gestation stage estimation, and mutant detection from 3d 40-mhz ultrasound data,” in 2015 IEEE International Ultrasonics Symposium (IUS). IEEE, 2015, pp. 1–4. [Google Scholar]

- [2].Aristizábal Orlando, Mamou Jonathan, Ketterling Jeffrey A, and Turnbull Daniel H, “High-throughput, high-frequency 3-d ultrasound for in utero analysis of embryonic mouse brain development,” Ultrasound in Medicine & Biology, vol. 39, no. 12, pp. 2321–2332, 2013. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [3].Mark Henkelman R, “Systems biology through mouse imaging centers: experience and new directions,” Annual Review of Biomedical Engineering, vol. 12, pp. 143–166, 2010. [DOI] [PubMed] [Google Scholar]

- [4].Kuo Jen-wei, Mamou Jonathan, Aristizábal Orlando, Zhao Xuan, Ketterling Jeffrey A, and Wang Yao, “Nested graph cut for automatic segmentation of high-frequency ultrasound images of the mouse embryo,” IEEE Transactions on Medical Imaging (TMI), vol. 35, no. 2, pp. 427–441, 2015. [DOI] [PubMed] [Google Scholar]

- [5].Kuo Jen-wei, Qiu Ziming, Aristizabal Orlando, Mamou Jonathan, Turnbull Daniel H, Ketterling Jeffrey, and Wang Yao, “Automatic body localization and brain ventricle segmentation in 3d high frequency ultrasound images of mouse embryos,” in 2018 IEEE 15th International Symposium on Biomedical Imaging (ISBI). IEEE, 2018, pp. 635–639. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [6].Long Jonathan, Shelhamer Evan, and Darrell Trevor, “Fully convolutional networks for semantic segmentation,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2015, pp. 3431–3440. [DOI] [PubMed]

- [7].Qiu Ziming, Langerman Jack, Nair Nitin, Aristizabal Orlando, Mamou Jonathan, Turnbull Daniel H, Ketterling Jeffrey, and Wang Yao, “Deep bv: A fully automated system for brain ventricle localization and segmentation in 3d ultrasound images of embryonic mice,” in 2018 IEEE Signal Processing in Medicine and Biology Symposium (SPMB). IEEE, 2018, pp. 1–6. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [8].Qiu Ziming, Nair Nitin, Langerman Jack, Aristizabal Orlando, Mamou Jonathan, Turnbull Daniel H, Ketterling Jeffrey A, and Wang Yao, “Automatic mouse embryo brain ventricle & body segmentation and mutant classification from ultrasound data using deep learning,” in 2019 IEEE International Ultrasonics Symposium (IUS). IEEE, 2019, pp. 12–15. [Google Scholar]

- [9].Roth Holger R, Oda Hirohisa, Zhou Xiangrong, Shimizu Natsuki, Yang Ying, Hayashi Yuichiro, Oda Masahiro, Fujiwara Michitaka, Misawa Kazunari, and Mori Kensaku, “An application of cascaded 3d fully convolutional networks for medical image segmentation,” Computerized Medical Imaging and Graphics, vol. 66, pp. 90–99, 2018. [DOI] [PubMed] [Google Scholar]

- [10].Tang Yujiao, Yang Feng, Yuan Shaofeng, et al. , “A multi-stage framework with context information fusion structure for skin lesion segmentation,” in 2019 IEEE 16th International Symposium on Biomedical Imaging (ISBI). IEEE, 2019, pp. 1407–1410. [Google Scholar]

- [11].Tu Zhuowen, “Auto-context and its application to high-level vision tasks,” in 2008 IEEE Conference on Computer Vision and Pattern Recognition (CVPR). IEEE, 2008, pp. 1–8. [Google Scholar]

- [12].Milletari Fausto, Navab Nassir, and Ahmadi Seyed-Ahmad, “V-net: Fully convolutional neural networks for volumetric medical image segmentation,” in 2016 Fourth International Conference on 3D Vision (3DV). IEEE, 2016, pp. 565–571. [Google Scholar]

- [13].Paszke Adam, Gross Sam, Chintala Soumith, Chanan Gregory, Yang Edward, Zachary DeVito Zeming Lin, Desmaison Alban, Antiga Luca, and Lerer Adam, “Automatic differentiation in pytorch,” in NIPS, 2017.

- [14].Kingma Diederik P and Ba Jimmy, “Adam: A method for stochastic optimization,” arXiv preprint arXiv:1412.6980, 2014.