Abstract

Evaluating the clinical impacts of healthcare alarm management systems plays a critical role in assessing newly implemented monitoring technology, exposing latent threats to patient safety, and identifying opportunities for system improvement. We describe a novel, accurate, rapidly implementable, and readily reproducible in-situ simulation approach to measure alarm response times and rates without the challenges and expense of video analysis. An interprofessional team consisting of biomedical engineers, human factors engineers, information technology specialists, nurses, physicians, facilitators from the hospital’s simulation center, clinical informaticians, and hospital administrative leadership worked with three units at a pediatric hospital to design and conduct the simulations. Existing hospital technology was used to transmit a simulated, unambiguously critical alarm that appeared to originate from an actual patient to the nurse’s mobile device, and discreet observers measured responses. Simulation observational data can be used to design and evaluate quality improvement efforts to address alarm responsiveness and to benchmark performance of different alarm communication systems.

Background

The Challenge of Measuring and Understanding Alarm Fatigue

Evaluating the sociotechnical impact and limitations of newly adopted biomedical technology is challenging and complex. The Joint Commission National Patient Safety Goal1 and a growing body of literature recognize alarm fatigue as a healthcare hazard2,3 and have drawn attention to the need to evaluate the effectiveness of available physiologic monitoring technology.4,5

Alarm fatigue is defined broadly as the lack of or delay in response to alarms due to excessive numbers of alarms resulting in sensory overload and desensitization of hospital workers.1,6,7 In children’s hospitals, patients generate between 42 and 155 alarms per monitored day8 and only about 0.5% of alarms on pediatric wards are actionable.3 Up to 67% of alarms heard outside a patient room are not investigated9 and there is wide variation in response time.3 Although many commercially available alarm management products promise improved alarm communication and patient safety, hospitals often lack the resources to measure the benefits and unintended adverse consequences of implementing these systems.

Analysis of the benefits (and hazards) of an alarm management system is challenging because alarm response is the result of complex interactions between physiologic monitors, the patient, the acuity of illness, the clinician’s competing tasks, and the dynamic hospital environment. Despite advances in characterizing alarm frequency and decision-making surrounding alarm response, evaluation of clinical response to truly actionable and life-threatening alarms has remained elusive. One method for assessing clinician response to alarms is through the use of video observation.10 Video observation of clinician alarm response is highly accurate, but is complicated and expensive to implement.11 In addition, given the rarity of life-threatening alarms, video recording thousands of alarms is likely to reveal only a handful of truly critical actionable alarms.10 Novel approaches are needed to measure responsiveness to rare, life-threatening alarms and identify potential latent hazards in our current alarm system.

In-Situ Simulation for Analysis and Evaluation of Critical Alarms

Simulation has been used to evaluate clinician and system performance, particularly in emergent or infrequent clinical scenarios.12–14 Kobayashi et al evaluated alarm responsiveness in the emergency department (ED) through simulation of a life-threatening arrhythmia.15 A simulator was connected to the in-room bedside monitor in an empty ED room, a life-threatening electrocardiogram tracing was generated, and clinical response time was measured by an observer. The simulation ended when any clinical provider responded to the life-threatening event or after 3 minutes. In their pre-intervention arm, only 1 of 20 simulation alarms (5%) garnered clinical response.

We developed an in-situ simulation approach to evaluate critical alarm response in a pediatric, non-intensive care setting, adapting Kobayashi et al’s simulation methodology. To our knowledge this form of in-situ simulation, in which simulated alarms appearing to come from an actual admitted patient are injected into a clinical environment to measure alarm response, has not been previously described. Our aim was to develop a method for efficient, rapid, repeated measurement of unit-level response to life-threatening events that trigger physiologic alarms and require an immediate response. Using response time and response rates to quantify alarm fatigue/responsiveness on a unit level, we sought to evaluate our current alarm management system and establish a baseline from which to measure the impact of future changes to the alarm system. The intent of the intervention was not to evaluate individual nurse performance, and individual-level response time data were not available to or shared with unit leadership to avoid the possibility of punitive actions for slow responses. This article describes reproducible methodology that may be useful to other institutions seeking to evaluate hospital alarm systems in non-intensive care, non-telemetry inpatient units.

Patient Safety Learning Lab

This work was part of a portfolio of quality improvement work undertaken by the Patient Safety Learning Laboratory (PSLL) at Children’s Hospital of Philadelphia. The PSLL is funded by the Agency for Healthcare Research and Quality, and one of its primary aims is to re-engineer the system of monitoring hospitalized children on acute care units, with a focus on reducing non-informative alarms and accelerating nurse responses to critical events. In the PSLL, our transdisciplinary team uses systems engineering approaches to proceed through a 5-step process for each aim: problem analysis, design, development, implementation, and evaluation. Evaluation of the relationship between alarm burden and nurse workload was part of the problem analysis phase of this improvement initiative.

The Committees for the Protection of Human Subjects (the IRB) at Children’s Hospital of Philadelphia determined that the problem analysis, design, and development phases of this project were consistent with quality improvement activities and did not meet criteria for human subjects research.

Setting

Hospital Environment:

Children’s Hospital of Philadelphia is an urban, tertiary care children’s hospital with a combination of private and semi-private rooms. Each inpatient bed is connected to a physiologic monitor (General Electric (GE) Dash 3000, 4000, or 5000). On general non-intensive care floors, these monitors are used for patients who require continuous physiologic monitoring, which requires a physician order. The monitors are capable of simultaneously displaying data from the following channels: electrocardiogram, respiratory rate, non-invasive blood pressure, pulse oximetry, and carbon dioxide. Each monitor can be physically connected to a private hospital network (GE Carescape Network) through a wall jack (RJ45 connection) at every patient bed. The network is monitored via a network gateway (MMG ASCOM GE Gateway) that can route alarm notifications to handheld mobile devices (ASCOM d62 Phone Device). The network gateway has a user interface (GE Unite Assign) that allows users to interact with the server, assign specific patient physiologic monitors detected on the hospital network, and route alarm messages to the handheld mobile devices carried by clinical staff.

Nurses are primarily responsible for responding to physiologic monitor alarms. Nurses on a general care unit typically care for three to five patients. Nurse/patient assignments are completed by unit charge nurses, who attempt to balance patient acuity, workload, and patient flow. Currently, continuous monitoring is not considered when evaluating workload for nursing assignment purposes.

Alarm Notifications:

Alarm “primary notification” occurs when the bedside monitor alarm sounds. Alarm “secondary notification” occurs when a notification appears on the handheld mobile device and on the centralized information center (CIC) monitor at the front desk. Each CIC displays a remote live view of up to 16 bedside monitors. When an alarm event occurs, the CIC also alarms and highlights which bed monitor is alarming. No individual is assigned to monitor the CIC. Each unit also has 1–2 remote view monitors of the main CIC screen in other locations. The remote monitors do not audibly alarm, but they do provide a live feed of the patient monitor visual display.

Currently, when a patient is connected to a physiologic monitor and a physiologic alarm is triggered, those data are transmitted to the hospital network, which is monitored at all times by the network gateway. Based on the filters set in the network gateway, the gateway routes alarm messages to the ASCOM mobile device of the nurse who is assigned to that particular hospital bed during that shift (see Figure 1). Nurse ASCOM phones alarm with a ring tone accompanied by a text message including the room location and the alarm details (e.g., “Bed 5S061 Warning SPO2 LO 76”, see Figure 2). The nurse can “Accept” the message and respond accordingly. When the alarm condition resolves, the message is automatically removed from the phone.

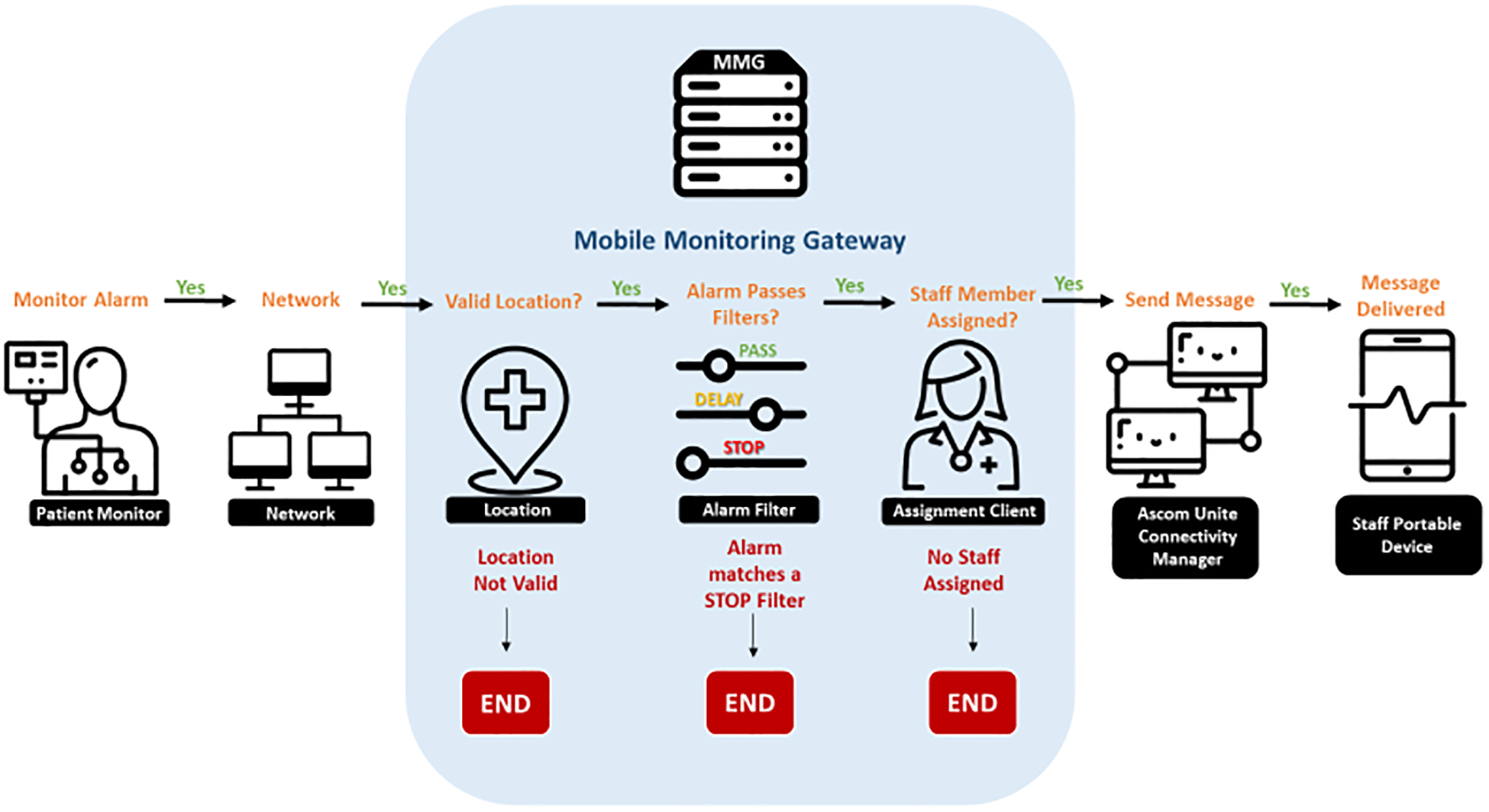

Figure 1:

The network gateway is a server with filtering capabilities that sits on the hospital network and monitors the network at all times. When a patient has a bedside monitor that is connected to the hospital network, the gateway can be programmed to detect the monitor’s alarms, determine the monitor’s location within the hospital, filter alarm messages based on alarm parameters, create an alarm message, and forward the message to a clinician’s handheld mobile device.
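The gateway behavior described above (filter an alarm, look up the device assigned to the bed, build a text message) can be illustrated with a minimal sketch. This is not the vendor's implementation or API: the `Alarm` fields, the `route_alarm` function, the device identifier `phone-17`, and the idea of filtering on a set of severities are all assumptions made for illustration. Only the message text format is taken from the example in the article.

```python
from dataclasses import dataclass
from typing import Dict, Optional, Set, Tuple


@dataclass(frozen=True)
class Alarm:
    bed: str        # bed name programmed into the monitor, e.g. "5S061"
    severity: str   # e.g. "Warning"
    parameter: str  # e.g. "SPO2 LO"
    value: int      # measured value that triggered the alarm


def format_message(alarm: Alarm) -> str:
    """Build phone text in the form shown in the article's example."""
    return f"Bed {alarm.bed} {alarm.severity} {alarm.parameter} {alarm.value}"


def route_alarm(
    alarm: Alarm,
    assignments: Dict[str, str],      # hypothetical: bed name -> device id
    forwarded_severities: Set[str],   # hypothetical filter rule
) -> Optional[Tuple[str, str]]:
    """Return (device_id, message) if the alarm should be forwarded, else None."""
    if alarm.severity not in forwarded_severities:
        return None  # filtered out by the (assumed) severity rule
    device = assignments.get(alarm.bed)
    if device is None:
        return None  # no phone is assigned to this bed
    return device, format_message(alarm)
```

In this sketch an alarm from an unassigned bed is silently dropped; how the real gateway handles that case is not described in the article.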

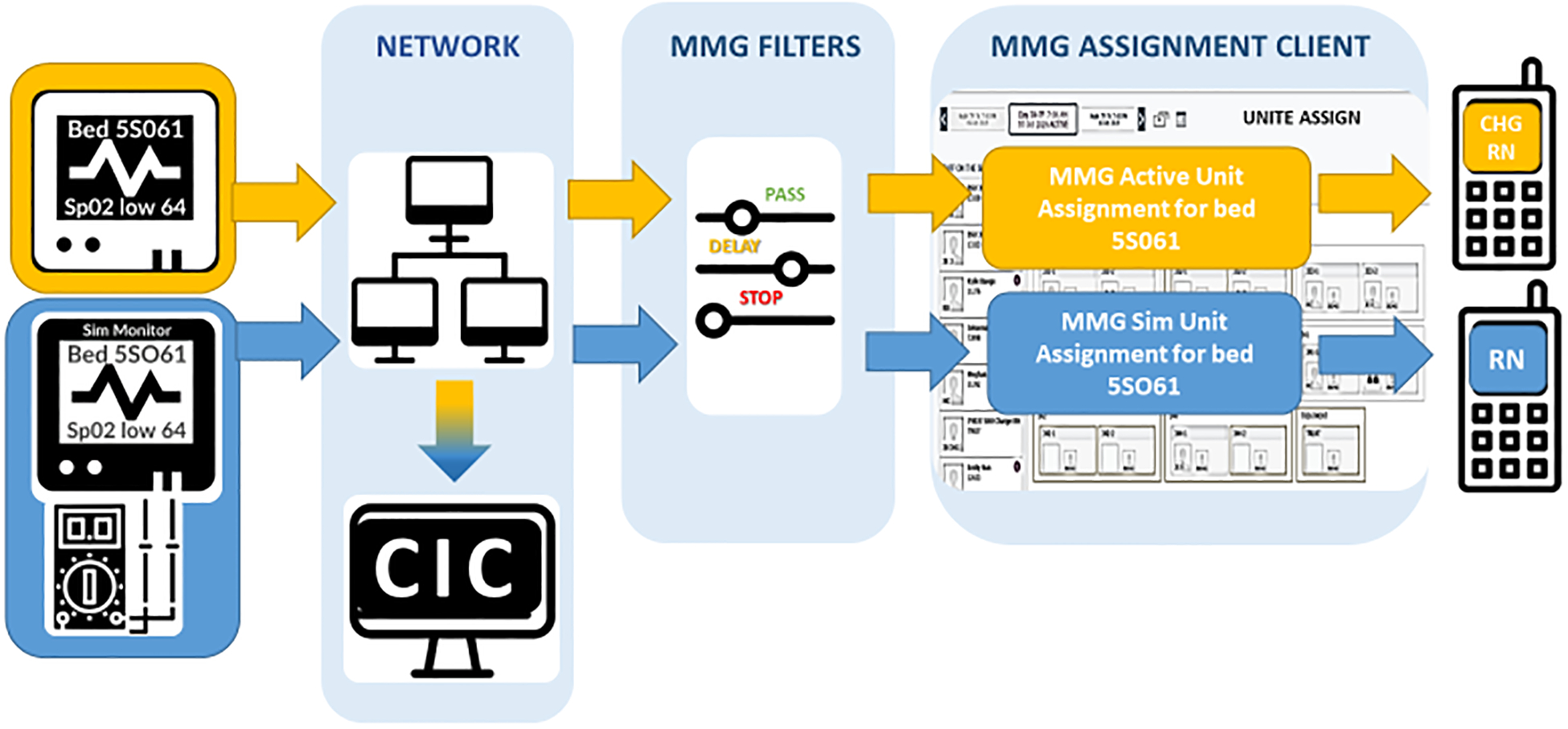

Figure 2:

Parallel design of simulation unit monitor with patient’s real monitor: Depiction of a real patient monitor and a simulated patient monitor connected in parallel without interfering with one another. The yellow flow of data shows how the real patient bedside monitor connects from the bedside to the network, where alarms are detected and filtered via the MMG Gateway filters. The hospital’s gateway network has a user interface in which users with access can assign the mobile phone device to receive all alarm messages. The blue parallel flow of data shows a simulator connected to a physiologic monitor named similarly to the true patient monitor, with the number “0” in “Bed 5S061” replaced by the letter “O”. Its flow of data is otherwise similarly connected to the hospital network, and alarm messages can be routed to the desired mobile device.

Simulation Design

Technical Goals

We sought to transmit a high-fidelity, simulated critical alarm that appeared to come from an existing, admitted, and actively monitored patient in order to observe and measure response times on 3 units. The critical alarm was sent to the mobile phone of the patient’s primary nurse and displayed on all unit CICs, enabling staff who heard or observed the alarm at the central nurse’s station or on the other unit CIC monitors to respond. To avoid disruption to patient care or patient-clinician trust, we simulated only “secondary notification” (to mobile phone and CIC display), avoided entering patient rooms, and took steps to ensure there was no delay or interference in patient care. Prior to beginning the in-situ simulations, all unit nurses were informed that alarm simulations would be taking place over the coming months as part of a quality improvement effort to better understand and combat alarm fatigue.

Simulation scenario, endpoint, and outcomes

We simulated a critical hypoxemic event, with a pulse oximeter oxygen saturation (SpO2) between 60% and 70%. SpO2 alarms were chosen because they are the most frequent in pediatric hospitals. Though <2% of SpO2 alarms are actionable,3 true and sustained low SpO2 will result in cardiac arrest if unresolved.16 Simulations ended when either a clinician responded to the alarm or 10 minutes passed without a response. We selected the 10-minute maximum based on data from animal models approximating toddler cardiac arrest, which demonstrated that sustained hypoxia progresses to pulseless electrical activity and cardiac arrest in approximately half of subjects in under 10 minutes.16

The primary outcome of each simulation was time to response by any clinical staff to the simulated critical alarm, starting from receipt of the critical alarm message on the mobile phone devices (t=0 min). An observer was discreetly positioned in the hallway with a line of sight to the door of the patient’s room to ensure no bedside alarms were silenced, confirm that alarm systems were functioning, and verify whether a clinician entered the room during the simulation. The simulation was discontinued upon any clinician entry into the patient room, even if the clinician entered to perform routine care (e.g., administer medication, check an intravenous line) and was not responding to the simulated critical alarm. This accounts for the fact that in true patient decompensation, the bedside monitor alarm (“primary notification”) would sound and staff inside the room would presumably respond accordingly. Observers recorded the simulation end time and qualitative field notes, including whether anyone interacted with the CIC, whether the CIC was making alarm noises, and whether they observed anyone silencing a CIC or phone alarm (Table 1).
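The endpoint rule described above can be written down as a small scoring function. This is a sketch under stated assumptions, not the project's data pipeline: the names `SimulationResult`, `score_simulation`, and `entry_rate` are hypothetical, and treating an entry at exactly 10 minutes as "no entry" is an assumption the article does not specify. Following the text, any clinician entry ends the simulation, whatever the reason for entering.

```python
from dataclasses import dataclass
from typing import List, Optional

MAX_DURATION_MIN = 10.0  # simulation stops after 10 minutes without a response


@dataclass
class SimulationResult:
    room_entered: bool     # True if any clinician entered before the cutoff
    minutes_to_end: float  # minutes from t=0 (message received) to end


def score_simulation(entry_minutes: Optional[float]) -> SimulationResult:
    """Score one session.

    entry_minutes is the time after t=0 at which any clinician entered the
    room, or None if nobody entered while the observer was watching.
    """
    if entry_minutes is not None and entry_minutes < 0:
        raise ValueError("entry time cannot precede t=0")
    # Assumption: an entry at or after the cutoff counts as no entry.
    if entry_minutes is None or entry_minutes >= MAX_DURATION_MIN:
        return SimulationResult(False, MAX_DURATION_MIN)
    return SimulationResult(True, entry_minutes)


def entry_rate(results: List[SimulationResult]) -> float:
    """Fraction of sessions that ended with a clinician entering the room."""
    if not results:
        raise ValueError("no simulations to summarize")
    return sum(r.room_entered for r in results) / len(results)
```

Sessions that reach the cutoff are recorded at 10 minutes rather than dropped, so unit-level summaries are not biased toward the faster responses.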

Table 1: Simulation Observations.

Simulation observational data and reflections from the debriefing interview were recorded in a REDCap survey immediately following the simulation.

| Data category |
|---|
| Session information |
| Time point metrics |
| Observer-gathered data |
| Debriefing interview with the bedside RN |

Inclusion criteria

In collaboration with hospital leadership, inclusion criteria were developed to establish an accurate and realistic representation of threats within the current alarm system. We sought to simulate alarms among patients who are particularly vulnerable in our current alarm system (rather than adopting a random sampling approach). Therefore, we defined the following inclusion criteria for patient room selection: the patient in the room must be on a continuous monitor at the time of the simulation, should be cared for by a nurse who works regularly on the unit, must not have experienced a code event or rapid response in the prior 24 hours, and must not be designated by the unit as a “watcher” (receiving additional surveillance due to heightened risk for clinical deterioration). These criteria were selected in order to evaluate response among patients at risk of harm from alarm fatigue in our current alarm system (as opposed to patients who already receive heightened surveillance within our unit). While such criteria by themselves may be subject to nurse response bias, the level of desaturation simulated (SpO2 of less than 70%) was intentionally set at a signal threshold that should have superseded existing bias. We did not repeat simulations on the same day within the same unit, to avoid priming responses.

Number of Simulations

We sought to realistically simulate what should be “never events” within a high reliability organization, to evaluate potential vulnerability within our alarm system. To determine the optimal number of simulations to conduct, we discussed with hospital and unit leadership the number of simulations required to demonstrate with sufficient statistical confidence the safety of our alarm system. We elected to conduct 20 simulations because this number provided an appropriate level of precision in reporting response rates with a feasible completion time frame of 3 months. For instance, in the case of perfect response, in which nurses respond to 20 of 20 simulations (100%), the 95% CI would be 83–100%. If a response were observed in 10 instances (50%), the 95% CI would be 27–73%.
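The article does not state which interval method was used. As a check on the precision argument, the sketch below assumes an exact binomial (Clopper-Pearson) interval, which reproduces the quoted bounds for 20 of 20 and 10 of 20. It uses only the Python standard library and finds each bound by bisection on the binomial distribution; the function names are our own.

```python
from math import comb
from typing import Callable, Tuple


def binom_cdf(k: int, n: int, p: float) -> float:
    """P(X <= k) for X ~ Binomial(n, p)."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k + 1))


def _bisect_decreasing(f: Callable[[float], float], iters: int = 100) -> float:
    """Root of a function that decreases from positive to negative on [0, 1]."""
    lo, hi = 0.0, 1.0
    for _ in range(iters):
        mid = (lo + hi) / 2
        if f(mid) > 0:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2


def clopper_pearson(k: int, n: int, alpha: float = 0.05) -> Tuple[float, float]:
    """Exact two-sided (1 - alpha) confidence interval for k successes in n trials."""
    if not 0 <= k <= n or n == 0:
        raise ValueError("need 0 <= k <= n and n > 0")
    # Lower bound: the p at which P(X >= k) equals alpha / 2.
    lower = 0.0 if k == 0 else _bisect_decreasing(
        lambda p: alpha / 2 - (1 - binom_cdf(k - 1, n, p))
    )
    # Upper bound: the p at which P(X <= k) equals alpha / 2.
    upper = 1.0 if k == n else _bisect_decreasing(
        lambda p: binom_cdf(k, n, p) - alpha / 2
    )
    return lower, upper
```

Under this assumption, `clopper_pearson(20, 20)` gives roughly (0.83, 1.00) and `clopper_pearson(10, 20)` gives roughly (0.27, 0.73), matching the intervals in the text.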

Team

We formed an interprofessional team consisting of biomedical engineers, human factors engineers, information technology (IT) specialists, clinical nurses, physicians, facilitators from the hospital simulation center, clinical informaticians, and hospital administrative leadership to create and implement the simulation. The clinical engineering and IT departments facilitated (1) acquisition of simulation and monitoring technology to replicate critical alarms, (2) reconfiguration of the gateway server to recognize the simulation equipment, and (3) routing of simulated alarm messages to the appropriate CIC and mobile devices.

The simulation team, human factors engineers, and clinical nursing leadership at the unit and hospital level sought to ensure the integrity and acceptability of in-situ simulation design. Aware that this simulation could expose systems vulnerabilities (for instance, delayed or absent response to life threatening alarms) we sought broad-based input on how to maintain psychological safety, defined as “the degree to which team members feel that their environment is supportive of asking for help, trying new ways of doing things, and learning from mistakes.”17 To this end, nurses and simulation facilitators developed pre-simulation language and post-simulation debriefing scripts to explicitly frame this simulation as systems evaluation (rather than comparative assessment of individual- or unit-level performance).

Simulation Equipment

Hospital Network and Server changes to accommodate Simulation Unit:

Our engineering and IT departments created a virtual simulation unit within the hospital’s network gateway (MMG ASCOM GE Gateway) to deliver simulated alarm notifications directly to nurses’ mobile devices and CICs. The virtual hospital unit (named “Simulation Unit”) in the gateway was created using the gateway user interface and populated with unique hospital bed names that appeared visually similar to existing hospital beds (for instance, replacing the numeric “0” with the letter “O”). When a physiologic monitor is programmed with the bed name of one of the Simulation Unit beds and is connected to the private hospital network, it can be detected by the network gateway. The monitor then appears in the gateway user interface, and a user, such as a charge nurse, can assign the simulation monitor to an active mobile phone device so that the phone receives simulation monitor alarms. Because this monitor’s bed name is unique and not programmed into any other downstream system, it is not recognized by systems outside of the network gateway, preventing downstream consequences such as abnormal data being abstracted into the electronic health record (Figure 2).
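The look-alike naming scheme can be sketched as a simple string substitution plus a uniqueness check. The function names are hypothetical, the bed `5S062` is an invented second example, and replacing every "0" in a name (rather than only one) is an assumption. The actual beds were created through the gateway user interface, not through code.

```python
from typing import Dict, Iterable


def simulation_bed_name(real_bed: str) -> str:
    """Swap the digit 0 for the letter O, e.g. '5S061' -> '5SO61'."""
    if "0" not in real_bed:
        raise ValueError(f"{real_bed!r} has no '0' to replace")
    return real_bed.replace("0", "O")


def build_simulation_unit(real_beds: Iterable[str]) -> Dict[str, str]:
    """Map each real bed name to a unique look-alike simulation bed name."""
    real = list(real_beds)
    existing = set(real)
    mapping: Dict[str, str] = {}
    for bed in real:
        sim = simulation_bed_name(bed)
        # The simulated name must not collide with any real bed or with
        # another simulated bed, so downstream systems never recognize it.
        if sim in existing or sim in mapping.values():
            raise ValueError(f"{sim!r} is not unique")
        mapping[bed] = sim
    return mapping
```

The uniqueness check mirrors the safety property described in the text: a simulation bed name that matches no real bed cannot be picked up by systems outside the gateway.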

Simulation Sessions

On the day of a simulation, unit nursing leadership identified eligible patient rooms and selected one using a random number generator. Unit leaders were notified that a simulation would take place, but not the specific room number selected.
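Room selection combines the inclusion criteria with a random draw. The sketch below is illustrative only: the `Room` fields and function names are hypothetical, and the article does not say which random number generator was used or how eligibility was recorded.

```python
import random
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Room:
    name: str
    continuously_monitored: bool       # on a continuous monitor right now
    nurse_regular_on_unit: bool        # nurse works regularly on this unit
    code_or_rapid_response_24h: bool   # code event or rapid response in prior 24 h
    watcher: bool                      # designated for heightened surveillance


def is_eligible(room: Room) -> bool:
    """Apply the inclusion criteria described in the article."""
    return (
        room.continuously_monitored
        and room.nurse_regular_on_unit
        and not room.code_or_rapid_response_24h
        and not room.watcher
    )


def pick_room(rooms: List[Room], rng: Optional[random.Random] = None) -> Optional[Room]:
    """Choose one eligible room uniformly at random, or None if none qualify."""
    eligible = [r for r in rooms if is_eligible(r)]
    if not eligible:
        return None
    return (rng or random).choice(eligible)
```

Passing a seeded `random.Random` instance makes a draw repeatable for auditing. In live use, leaving `rng` unset keeps the selection unpredictable to unit staff.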

A team including a nursing leader, biomedical engineer, simulation observer, and a simulation center facilitator/debriefer assembled with necessary equipment just outside the unit (Table 2). The biomedical engineer and nursing leader programmed the simulator to mimic the selected patient’s true vital signs and connected the simulator to the simulation bed monitor (GE Dash 3000 Series Monitor). The monitor was connected to the hospital network via the RJ45 connector in the data closet on the unit. The engineer pulled the simulation monitor into view on the central workstation display so the critical alarm would be displayed at the central nursing station and other unit central displays. The nursing leader then logged into GE Unite Assign to assign the simulation monitor to the nurse caring for the selected patient room and to a control ASCOM phone held by a team member. To ensure patient safety during the simulation, all the participating nurse’s true patient monitor alarm messages and calls were routed to the unit charge nurse.

Table 2: Equipment required and used for simulation

| Need | Equipment used |
|---|---|
| Physiologic monitor | GE Dash 3000 Series |
| Full Monitor Simulator | FLUKE ProSim 4 |
| Pulse Ox-Jack | RJ45 Connection |

| Application Processes | Applications Used |
|---|---|

At the start of the simulation, the engineer programmed the simulator to a pulse oximeter value between 61% and 69%, which triggered an alarm when detected by the simulation GE Dash 3000 monitor. The gateway identified the alarm coming from the simulation monitor and routed the message to the nurse’s mobile device and to the team’s control mobile device, signaling the start of the simulation (t=0).

An observer stood within view of the selected patient room to intercept the clinician responding to an alarm. The simulation ended when a clinician arrived at the patient bed or after 10 minutes. Observations and timing were recorded by the observer in a REDCap survey.18,19 The observer recorded interactions with the CIC and monitor, how the RN interacted with the phone while it was alarming, and how the simulation ended (Table 1). The observer also collected information about the patient and nursing assignment. Following the simulation, the debriefer reviewed the goals of the simulation with the bedside nurse and solicited suggestions on improvements to the unit alarm system.

Limitations

This methodology must be considered in light of its limitations. First, we did not design this simulation to shed light on the mechanisms that explain delayed responses to alarms. Rather, we sought an overall indicator of the clinical unit’s performance within the socio-technical environment as a whole in response to critical alarms. Second, we created the simulations only on general medical-surgical units, thus limiting the applicability of this method to ICU or telemetry patients and other types of units with different staffing models and alarm systems. Third, we developed and tested this method in a pediatric hospital. Although we believe that alarm response time is a relevant outcome measure across patient populations, some aspects of the simulation might need to be modified for use in adult settings. Lastly, our method gives information about a specific alarm notification delivered by one mechanism, so the results cannot be extrapolated to other types of alarms (e.g., primary notification or arrhythmia alarms).

Conclusion

As healthcare systems adapt to the rapidly changing landscape of communications technology, it is important to understand the effects and latent threats of these technologies. We describe a reproducible approach using existing hospital technology to test and quantify alarm response times and rates without interfering with routine patient care. We intend to use simulation observations and outcomes to design and evaluate quality improvement efforts to address alarm fatigue. In the future, such simulations could provide a framework to benchmark performance with different communication and alarm systems.

Acknowledgements:

Grace Good provided simulation debriefing support. Charlie Kovacs, Larry Villecco, and Michael Hamid provided technical support in simulation implementation.

Funding Disclosure: Effort contributing to this manuscript was supported in part by the Agency for Healthcare Research and Quality under award number R18HS026620. Funders had no role in the project design; in the collection, analysis or interpretation of data; in the decision to submit for publication; or in the writing of the manuscript.

Contributor Information

Brooke Luo, Departments of Pediatrics and Biomedical and Health Informatics, Children’s Hospital of Philadelphia, Philadelphia, PA; Perelman School of Medicine, University of Pennsylvania, Philadelphia, PA.

Melissa McLoone, Department of Nursing Practice and Education, Children’s Hospital of Philadelphia, Philadelphia, PA.

Irit R. Rasooly, Departments of Pediatrics and Biomedical and Health Informatics, Children’s Hospital of Philadelphia, Philadelphia, PA; Perelman School of Medicine, University of Pennsylvania, Philadelphia, PA.

Sansanee Craig, Departments of Pediatrics and Biomedical and Health Informatics, Children’s Hospital of Philadelphia, Philadelphia, PA; Perelman School of Medicine, University of Pennsylvania, Philadelphia, PA.

Naveen Muthu, Departments of Pediatrics and Biomedical and Health Informatics, Children’s Hospital of Philadelphia, Philadelphia, PA; Perelman School of Medicine, University of Pennsylvania, Philadelphia, PA.

James Won, Center for Healthcare Quality and Analytics, Children’s Hospital of Philadelphia, Philadelphia, PA; Perelman School of Medicine and School of Engineering, University of Pennsylvania, Philadelphia, PA.

Halley Ruppel, Division of Research, Kaiser Permanente Northern California, Oakland, CA.

Christopher P. Bonafide, Departments of Pediatrics and Biomedical and Health Informatics, Children’s Hospital of Philadelphia, Philadelphia, PA; Perelman School of Medicine, University of Pennsylvania, Philadelphia, PA.

References

- 1. The Joint Commission: Sentinel Event Alert. Medical Device Alarm Safety In Hospitals. Available at: https://www.jointcommission.org/-/media/tjc/documents/resources/patient-safety-topics/sentinel-event/sea_50_alarms_4_26_16.pdf. Accessed June 24, 2020.

- 2. ECRI Institute. Top 10 Health Technology Hazards for 2020: Expert Insights from Health Devices. Available at: https://elautoclave.files.wordpress.com/2019/10/ecri-top-10-technology-hazards-2020.pdf. Accessed June 26, 2020.

- 3. Bonafide CP, Localio AR, Holmes JH, et al. Video Analysis of Factors Associated with Response Time to Physiologic Monitor Alarms in a Children’s Hospital. JAMA Pediatrics. 2017;171(6):524–531.

- 4. Ruskin KJ, Bliss JP. Alarm Fatigue and Patient Safety. The Anesthesia Patient Safety Foundation Newsletter. 2019;34(1):1–5.

- 5. Gaines K. Alarm Fatigue is Way Too Real (and Scary) for Nurses. Available at: https://nurse.org/articles/alarm-fatigue-statistics-patient-safety/. Published August 19, 2019. Accessed June 26, 2020.

- 6. Johnson KR, Hagadorn JI, Sink DW. Alarm Safety and Alarm Fatigue. Clinics in Perinatology. 2017;44(3):713–728.

- 7. Cvach M. Monitor Alarm Fatigue: An Integrative Review. Biomedical Instrumentation and Technology. 2012;46(4):268–277.

- 8. Schondelmeyer AC, Brady PW, Goel VV, et al. Physiologic Monitor Alarm Rates At 5 Children’s Hospitals. Journal of Hospital Medicine. 2018;13(6):396–398.

- 9. Schondelmeyer AC. Nurse Responses to Physiologic Monitor Alarms on a General Pediatric Unit. J Hosp Med. 2019;14(10):602–606.

- 10. Bonafide CP, Zander M, Graham CS, et al. Video Methods for Evaluating Physiologic Monitor Alarms and Alarm Responses. Biomedical Instrumentation & Technology. 2014;48(3):220–230.

- 11. MacMurchy M, Stemler S, Zander M, Bonafide CP. Acceptability, Feasibility, and Cost of Using Video to Evaluate Alarm Fatigue. Biomedical Instrumentation & Technology. 2017;51(1):25–33.

- 12. Becker D. Using Simulation in Academic Environments to Improve Learner Performance. In: Ulrich B, Mancini, eds. Mastering Simulation: A Nurse’s Handbook for Success. 1st ed. Indianapolis, IN: Sigma Theta Tau International, 2014. Available at: http://site.ebrary.com/id/10775127. Accessed October 22, 2018.

- 13. Robert Wood Johnson Foundation Initiative on the Future of Nursing, at the Institute of Medicine. The Future of Nursing: Leading Change, Advancing Health. Washington, DC: National Academies Press, 2011. Available at: http://www.ncbi.nlm.nih.gov/books/NBK209880/. Accessed October 22, 2018.

- 14. Schubert CR. Effect of Simulation on Nursing Knowledge and Critical Thinking in Failure to Rescue Events. The Journal of Continuing Education in Nursing. 2012;43(10):467–471.

- 15. Kobayashi L, Parchuri R, Gardiner FG, et al. Use of In-Situ Simulation and Human Factors Engineering to Assess and Improve Emergency Department Clinical Systems for Timely Telemetry-Based Detection of Life-Threatening Arrhythmias. BMJ Quality and Safety. 2013;22(1):72–83.

- 16. Marquez AM, Morgan RW, Ko T, et al. Oxygen Exposure During Cardiopulmonary Resuscitation is Associated with Cerebral Oxidative Injury in a Randomized, Blinded, Controlled, Preclinical Trial. JAHA. 2020;9(9).

- 17. Agency for Healthcare Research and Quality. Creating Psychological Safety in Teams: Handout. Available at: https://www.ahrq.gov/evidencenow/tools/psychological-safety.html. Accessed June 12, 2020.

- 18. Harris PA, Taylor R, Thielke R, Payne J, Gonzalez N, Conde JG. Research Electronic Data Capture (REDCap)--A Metadata-Driven Methodology and Workflow Process for Providing Translational Research Informatics Support. Journal of Biomedical Informatics. 2009;42(2):377–381.

- 19. Harris PA, Taylor R, Minor BL, et al. The REDCap Consortium: Building an International Community of Software Platform Partners. Journal of Biomedical Informatics. 2019;95:103208.