INTRODUCTION

In today’s era of evidence-based medicine, scientific breakthroughs should guide medical care. Unfortunately, it takes approximately 17 years for knowledge gained from research to reach widespread practice.1 Consequently, patients may receive outdated care for nearly 2 decades. Implementation science (IS) is the study of methods to promote the adoption and integration of evidence-based practices into routine utilization (Table 1). IS is the next great global health care research frontier to bridge the crucial gap between knowledge and practice. The World Health Organization identifies the process of implementing proven interventions as one of the greatest challenges facing the global health community.2

Table 1.

Implementation science and related research/process definitions

| Type of Research/Process | Definition |
|---|---|
| Clinical effectiveness research | The study of which clinical or public health interventions work best for improving health |
| Quality improvement | The systematic approach to the analysis of practice patterns to improve performance in health care |
| Implementation science | The study of the timely uptake of evidence into routine practice; a broad term that includes implementation research, dissemination research, and deimplementation research |
| Implementation research | The study and use of strategies to integrate best practice, evidence-based interventions into specific settings |
| Dissemination research | The study of diffusion of materials and information to a particular audience |
| Deimplementation research | The study of the processes to remove outdated, incorrect, or low-value care from practice |

The goals of this review are to

Define implementation science,

Discuss key outcomes,

Review commonly used frameworks,

Describe research methods, and

Provide an overview of deimplementation.

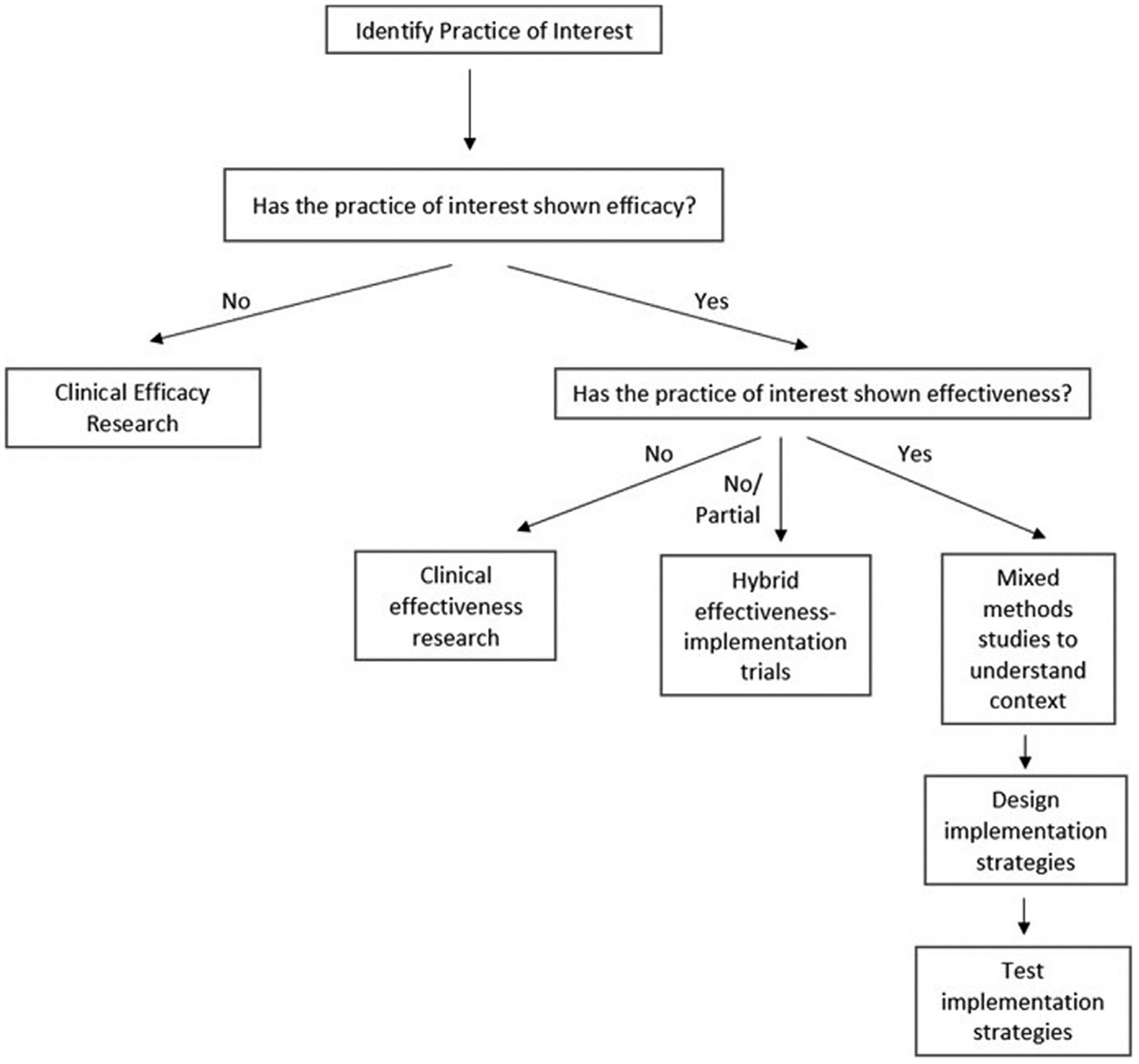

Implementation research is typically salient after an intervention is known to be clinically efficacious and effective (Fig. 1).3 In many ways, IS is an extension of familiar processes of clinical efficacy or effectiveness research and quality improvement (QI). Clinical efficacy/effectiveness research aims to establish best clinical practices to improve outcomes at the patient level. With implementation research, however, the best practice is already established, and it focuses on the next steps of improving utilization of effective interventions. Interventions can target policy, organizations, systems, clinical settings such as inpatient floors and outpatient clinics, or providers. QI examines effects of process changes related to health care quality and safety and is typically performed in a local setting. IS research expands on QI principles to allow extrapolation to other settings. IS also includes the study of deimplementation or systematic removal of outdated or low-value care from practice.

Fig. 1.

Process to assist with research efforts. Adapted from Lane-Fall MB, Curran GM, Beidas RS. Scoping implementation science for the beginner: locating yourself on the “subway line” of translational research. BMC Med. Res. Methodol. 2019;19(1):133; with permission.

IMPLEMENTATION OUTCOMES

Just as clinical research typically examines outcomes such as mortality, key outcomes of IS help researchers to know if an intervention is successful. Proctor and colleagues4 proposed 8 commonly used and distinct implementation outcomes (Table 2):

Acceptability

Adoption

Appropriateness

Feasibility

Fidelity

Penetration

Sustainability

Cost

Table 2.

Implementation outcomes

| Implementation Outcome | Definition |
|---|---|
| Acceptability | The extent to which a treatment, practice, service, etc. is agreeable or satisfactory |
| Adoption | The intention to use the treatment, practice, service, etc. |
| Appropriateness | The perceived relevance or fit of a treatment or intervention in a particular setting and to address a certain issue/problem |
| Cost | The cost impact of the implementation effort, which depends on the complexity of the intervention, the cost of the strategy, and the location where it is deployed |
| Feasibility | The degree to which a treatment, practice, service, etc. can be carried out in a certain setting |
| Fidelity | The extent to which an intervention is implemented as originally proposed |
| Penetration | The uptake or integration of an intervention into practice |
| Sustainability | The degree to which an intervention is maintained within a setting |

Data from Proctor E, Silmere H, Raghavan R. Outcomes for implementation research: conceptual distinctions, measurement challenges, and research agenda. Adm Policy Ment Health. 2011;38(2):65–76.

These outcomes can be used first as indicators of implementation success, second as indicators of implementation processes, and third as intermediate outcomes in relation to clinical outcomes for effectiveness or quality research.5

Acceptability

Acceptability is the belief among stakeholders that a given treatment, process, device, standard, etc. is agreeable or respectable. The complexity and content of the intervention and a stakeholder’s familiarity or comfort with it often play a role in that intervention’s acceptability. Acceptability is often measured at the stakeholder level and is important at nearly all phases of the implementation process, as it affects adoption, penetration, and sustainability. It can be measured quantitatively with surveys or qualitatively with interviews.

Adoption

Adoption is defined as the decision or action to try to put into effect an intervention or evidence-based practice. It is usually measured at the level of the individual provider or entire organization and is most pertinent at the early to middle stages of implementation, as it refers to the uptake of an intervention into practice. It can be studied with surveys, observation, interviews, or administrative data.

Appropriateness

Appropriateness refers to the perceived fit or relevance of an intervention to a given setting and how well it addresses a specific issue or problem. This differs from acceptability in that an intervention may be considered a good fit for a particular setting or audience yet still be unacceptable to an individual provider. Appropriateness is central early in the implementation process, before the adoption phase. It is often analyzed at the level of the individual provider, consumer, or organization and can be studied using surveys, qualitative interviews, or focus groups.

Feasibility

Feasibility is the degree to which an intervention can be implemented within a certain setting.6 It is important early in the implementation process but is often studied retrospectively as an explanation to why an intervention may have been successful or not. It is analyzed at both the provider and organizational level and is often examined using surveys or administrative data.

Fidelity

Fidelity is defined as the extent to which an intervention was carried out as it was originally intended.7,8 It is, perhaps, the most commonly studied implementation outcome, as it comments on the integrity to which an evidence-based practice is adhered to through quality of a treatment protocol or adherence to a program. Fidelity represents the achievement of successful translation of treatments from the clinical laboratory to the real-world delivery systems. There are multiple dimensions of fidelity including adherence, quality of delivery, program component differentiation, exposure to the intervention, and participant responsiveness or involvement.9,10 Fidelity is analyzed at the provider level with observation, ratings, checklists, or self-report and is important at the early to middle stages of the implementation process.

Penetration

Penetration refers to the successful integration of an intervention into a setting or practice. Penetration can be synonymous with “spread” or “reach.” One example is the number of providers who deliver a certain service divided by the total number of providers who are expected or qualified to provide that service. It is usually analyzed at the level of the institution in the middle to later stages of the implementation process and can be measured with case audits or checklists.
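The provider-level example of penetration can be expressed as a simple proportion. The sketch below is illustrative only: the function name `penetration` and the audit counts are hypothetical and are not drawn from any cited study.

```python
def penetration(delivering: int, eligible: int) -> float:
    """Share of eligible providers who actually deliver the service."""
    if eligible <= 0:
        raise ValueError("eligible must be positive")
    if not 0 <= delivering <= eligible:
        raise ValueError("delivering must be between 0 and eligible")
    return delivering / eligible


# Hypothetical case audit: 18 of 45 qualified providers deliver the service.
rate = penetration(18, 45)
print(f"Penetration: {rate:.0%}")
```

The denominator matters as much as the numerator: counting only providers who were trained, rather than all who are expected or qualified to deliver the service, would overstate penetration.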

Sustainability

Sustainability is defined as the degree to which an intervention is maintained or integrated throughout a system over time. In order to have successful sustainability, an evidence-based practice may require saturation throughout all levels of an organization. Sustainability is targeted in the later stages of the implementation process and is often studied at the administrator or organizational level. Sustainability can be measured quantitatively with case audits and checklists or subjectively using interviews and questionnaires.

Cost

Cost is the financial implication of an intervention. The cost often depends on the complexity of the intervention, what strategy is used for implementation, and the target setting or location. Cost is analyzed at the provider and institution level and is important at each stage of the implementation process because it affects other outcomes such as adoption, feasibility, penetration, and sustainability. It is often studied with administrative data.

OUTCOME CONSIDERATIONS: TIMELINE, STAKEHOLDERS

Stakeholders and time frames over which the outcome of interest is salient should be carefully considered while planning implementation research. Ideally, the measurement of these outcomes will allow implementation scientists to determine which factors of a treatment or strategy allow for the greatest success of implementation of an intervention over time. For example, fidelity is usually evaluated during initial implementation, whereas adoption can be studied later.11–14 Feasibility may be important when a new treatment is first used but may be inconsequential once the treatment becomes routine.

Outcomes of interest can be interrelated, can affect each other over time, and can be studied together or sequentially. For example, as an intervention becomes increasingly acceptable within an institution, penetration can increase over time. In this scenario, acceptability may be considered a leading indicator or a future predictor of implementation success. A lagging indicator such as sustainability may only be observed after the implementation process is mostly complete. Furthermore, the relationship between outcomes can be dynamic and complex. For example, feasibility, appropriateness, and cost will affect an intervention’s acceptability. Acceptability will affect adoption, penetration, and sustainability of the intervention.4 Also, if providers do not have to implement an intervention or treatment with exact fidelity, they may view the intervention as more acceptable.15

Depending on the setting, stakeholders can include physicians, patient representatives, nursing staff, administrators, and governmental officials. Stakeholders with varying expertise will bring important perspectives to overcome different barriers. Stakeholders may also value outcomes differently: providers may be most concerned about feasibility, policy makers may be interested in cost, and treatment developers may value fidelity the most. Integrated involvement of key stakeholders throughout the entire planning process is essential to success.

IMPLEMENTATION THEORIES, FRAMEWORKS, AND MODELS

Numerous implementation theories, frameworks, and models exist to help researchers direct and evaluate implementation efforts. Tabak and colleagues16 identified 61 theories and frameworks and categorized them according to construct flexibility, dissemination and/or implementation activities, and socioecological framework level. Similarly, Nilsen developed a catalog of implementation theories, frameworks, and models but organized them based on 3 aims: (1) describing and/or guiding the process of translating research into practice, (2) understanding and/or explaining what influences implementation outcomes, and (3) evaluating implementation.17 The frameworks themselves provide a starting point for developing a study design or implementation project by highlighting potential obstacles or catalysts in the actual implementation and dissemination of the research outcomes, especially with regard to how they may need to be adapted to fit local needs. Three commonly used frameworks or models are presented here: Translating Evidence into Practice (TRIP), Consolidated Framework for Implementation Research (CFIR), and Reach, Effectiveness, Adoption, Implementation, and Maintenance (RE-AIM). A catalog of implementation strategies called Expert Recommendations for Implementing Change (ERIC) is also introduced.

Translating Evidence into Practice

The TRIP model is an integrated approach to improve the quality and reliability of care that is often used in both QI processes and dissemination research. TRIP has 4 main steps: (1) summarizing the evidence, (2) identifying local barriers to implementation, (3) measuring performance, and (4) ensuring all patients reliably receive the intervention. The fourth step is a circular process involving 4 components: engaging stakeholders to explain why interventions are important; educating them by sharing evidence in support of the intervention; executing the intervention by designing a toolkit directed toward barriers, implementation, and learning from prior mistakes; and evaluating performance and inadvertent consequences through regular assessment.18 The model is designed for use in large-scale collaborative projects.

Consolidated Framework for Implementation Research

The CFIR model19 suggests 5 domains—intervention characteristics (complexity), outer setting (policies or incentives), inner setting (cultural readiness and resources), individual characteristics (knowledge and belief about the intervention), and process (planning)—with multiple constructs under each domain for identifying implementation barriers and facilitators. In addition, CFIR provides a framework to report on the findings of the implementation process and offers sample interview guides to assess each domain. Further information can be found at https://cfirguide.org/.

Reach, Effectiveness, Adoption, Implementation, and Maintenance

RE-AIM is a commonly used implementation science framework developed in 1999 that defines 5 key domains: reach, effectiveness, adoption, implementation, and maintenance.20,21 Each dimension is an opportunity for intervention, and all dimensions can be addressed within a study. Comprehensive information about the framework and its use is available at their Web site (http://re-aim.org/). The goal of RE-AIM is to allow multidisciplinary stakeholders to pay attention to programmatic elements that will translate into practice. Definitions of each dimension of RE-AIM and key trigger questions to answer for each dimension are presented in Table 3.

Table 3.

Reach, effectiveness, adoption, implementation, and maintenance dimensions

| Dimension | Definition | Key Pragmatic Priorities |
|---|---|---|
| Reach | The absolute number, proportion, and representativeness of individuals who are willing to participate in a given initiative, intervention, or program. | WHO is (was) intended to benefit and who actually participates or is exposed to the intervention? |
| Effectiveness | The impact of an intervention on important outcomes, including potential negative effects, quality of life, and economic outcomes. | WHAT is (was) the most important benefit you are trying to achieve and what is (was) the likelihood of negative outcomes? |
| Adoption | The absolute number, proportion, and representativeness of settings and intervention agents who are willing to initiate a program. | WHERE is (was) the program or policy applied and WHO applied it? |
| Implementation | At the setting level, implementation refers to the intervention agents’ fidelity to the various elements of an intervention’s protocol. This includes consistency of delivery as intended, adaptations made, and the time and cost of the intervention. | HOW consistently is (was) the program or policy delivered, HOW will (was) it be adapted, HOW much will (did) it cost, and WHY will (did) the results come about? |
| Maintenance | The extent to which a program or policy becomes institutionalized or part of the routine organizational practices and policies. Maintenance in the RE-AIM framework also has referents at the individual level. At the individual level, maintenance has been defined as the long-term effects of a program on outcomes after 6 or more months after the most recent intervention contact. | WHEN will (was) the initiative become operational, how long will (was) it be sustained (setting level), and how long are the results sustained (individual level)? |

Data from Glasgow RE, Harden SM, Gaglio B et al. RE-AIM planning and evaluation framework: adapting to new science and practice with a 20-year review. Front Public Health. 2019;7:64 and Glasgow RE, Vogt TM, Boles SM. Evaluating the public health impact of health promotion interventions: the RE-AIM framework. Am J Public Health. 1999;89(9):1322–1327.

Expert Recommendations for Implementing Change

The ERIC project used a Delphi method to identify 73 discrete implementation strategies and categorized them into 9 distinct clusters.22–24 The clusters are as follows: use evaluative and iterative strategies, provide interactive assistance, adapt and tailor to context, develop stakeholder interrelationships, train and educate stakeholders, support clinicians, engage consumers, use financial strategies, and change infrastructure. The ERIC project subsequently rated the relative importance and feasibility of each strategy, with the intention of providing knowledge about their perceived applicability and general ratings by experts.25

IMPLEMENTATION RESEARCH: HOW TO DO IT

Planning the Study

Implementation studies must be designed appropriately to reflect the outcome of interest and use process-, institution-, or policy-level data outcomes instead of patient-level outcomes. Topics to consider when planning the study include the following:

Choosing an appropriate framework and key implementation domains

Establishing the intervention target

Defining data elements and data sources

Involving major stakeholders

Addressing anticipated barriers

Some important questions to consider include: how strong are efficacy data? What is the specific implementation barrier being studied, and how should it best be measured? How many settings should be included? How should time-based biases be addressed? What is the internal and external context? What are ideal data sources (prospective data collection, qualitative data, existing medical or administrative data, or a combination)?

Context

Context is the “set of circumstances or unique factors that surround a particular implementation effort” and is a key factor that influences implementation success.19 For example, after the introduction of the World Health Organization (WHO) Surgical Safety Checklist (SSC) across 8 countries worldwide, morbidity and mortality declined across all sites, but the decline in morbidity was statistically significant at only 3 sites and the decline in mortality at only 2 sites.26 Contextual factors likely affected the implementation process, as well as downstream implementation at other sites, leading to subsequent controversy about the generalizability and overall efficacy of the SSC.27 Several tools can quantitatively depict contextual factors, such as the Organizational Readiness for Implementing Change tool or the Implementation Climate Scale.28

STUDY DESIGNS

Qualitative and Mixed Methods

Qualitative research uses focus groups, interviews, and observations to explain phenomena. Mixed methods research thoughtfully uses both quantitative and qualitative research to approach different aspects of a research question. Qualitative studies can be used simultaneously or sequentially with quantitative research and can help researchers understand how an intervention functions in specific settings or contexts. Qualitative research performed before quantitative research can help researchers develop mathematically testable hypotheses. Qualitative research can be used after quantitative studies to explain numeric findings or help explain why an intervention succeeded or failed.

Randomized Controlled Trials

Randomized controlled trials (RCTs) are the gold standard to study clinical intervention efficacy. Randomization can occur at the patient-level or at the level of groups (such as a cluster-randomized study). An RCT minimizes bias by spreading confounding variables evenly across groups. RCTs are often costly and time consuming and can be so tightly controlled for internal validity that the findings are not reflective of real-life situations. Pragmatic RCTs are designed to occur in real-world settings by removing some restrictions designed to measure efficacy only. RCTs exist along a spectrum of explanatory (higher internal validity) to pragmatic (higher external validity). The PRagmatic-Explanatory Continuum Indicator Summary tool (http://precis-2.org/), designed in 2009 and updated in 2013, can help investigators determine where a trial falls within this spectrum.29,30

Alternative Approaches: Parallel Group, Pre-/Postintervention, Interrupted Time Series, Stepped-Wedge

Other study designs have been developed as RCT alternatives. The parallel group design randomly allocates groups to one or more interventions; otherwise, the groups are treated as similarly as possible, and outcomes are then compared between groups. There are biases inherent to this design, as the groups may differ in unmeasured ways, and temporal factors can affect the treatment and control groups in ways that accentuate or minimize differences.

Pre- and postintervention studies examine data before and after an intervention. These studies are useful for single-center studies where an intervention occurs at a specified time point. A short washout period without data collection can allow time for the intervention to take effect. These studies are subject to time-based biases such as changes in the population over time (eg, influenza in the winter vs the summer), other changes in practice (unrelated interventions that have an effect on the outcome of interest), and increasing knowledge about a topic that occurs concurrently with, or even as a result of, the ongoing study. Because of these confounders, it is difficult to attribute causation even if a statistical effect exists, although these studies can be feasible for single centers and can provide pilot data for prospective or multicenter studies.

Interrupted time-series (ITS) is a quasi-experimental design that builds on the pre-post concept. ITS uses longitudinal data with multiple time points before and after the intervention to examine temporal trends and support causal interpretations.31 Because the preintervention trend is measured explicitly, the analysis can adjust for temporal trends that existed before the intervention. This methodology can be useful to examine effects after a policy is put into place.
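One common way to analyze ITS data is segmented regression, which estimates the baseline level and trend along with the change in level and the change in trend after the intervention. The sketch below is a minimal illustration under stated assumptions, not the method of any cited study: the function names, the model form y_t = b0 + b1*t + b2*post_t + b3*(t - start)*post_t, and the monthly values are all hypothetical, and the fit uses plain least squares without adjusting for autocorrelation or seasonality.

```python
def solve(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        tail = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - tail) / m[i][i]
    return x


def segmented_regression(y, start):
    """Least-squares fit of y_t = b0 + b1*t + b2*post + b3*(t - start)*post.

    `start` is the index of the first postintervention time point.
    """
    if start < 2 or len(y) - start < 2:
        raise ValueError("need at least 2 time points before and after")
    rows = []
    for t in range(len(y)):
        post = 1.0 if t >= start else 0.0
        rows.append([1.0, float(t), post, post * (t - start)])
    k = 4
    # Normal equations: (X'X) b = X'y
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * v for r, v in zip(rows, y)) for i in range(k)]
    b0, b1, b2, b3 = solve(xtx, xty)
    return {
        "baseline_level": b0,
        "baseline_trend": b1,
        "level_change": b2,
        "trend_change": b3,
    }


# Invented monthly outcome values: 6 months before and 6 months after a policy.
monthly = [50, 51, 52, 53, 54, 55, 46, 46.5, 47, 47.5, 48, 48.5]
fit = segmented_regression(monthly, start=6)
for name, value in fit.items():
    print(f"{name}: {value:.2f}")
```

Because the invented series is noise-free, the fit should recover a rising baseline trend of about 1 unit per month, an immediate drop of about 10 units at the intervention, and a slope that flattens by about 0.5 units per month afterward. Real data would require attention to autocorrelation, seasonality, and the number of time points, typically with a dedicated statistical package.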

The stepped-wedge cluster randomized design is a newer alternative to parallel group designs and requires multiple groups (clusters that can be at the level of a nursing floor, hospital, etc).32 In a stepped-wedge design, interventions are delivered at the cluster level. At the beginning of the trial, none of the clusters receive the intervention (controls). As the trial continues, a randomly selected cluster or group of clusters crosses to the intervention. At regular intervals, more clusters cross to the intervention, so by the end of the study all clusters are exposed. Each cluster contributes observations from both the control and intervention periods, with exposure beginning at a different time for each cluster, so each cluster serves as its own control. This methodology removes bias from baseline center-level imbalance. A widespread trend that temporally affects all sites over the study period could be a confounder with this method, but statistical modeling can help adjust for temporal effects.
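The rollout described above can be sketched as a randomized schedule in which each cluster receives a crossover step. This is an illustrative sketch only: the function name `stepped_wedge_schedule`, the ward labels, and the choice to deal clusters evenly across steps are assumptions, and an actual trial would follow its own prespecified randomization procedure.

```python
import random


def stepped_wedge_schedule(clusters, steps, seed=0):
    """Randomly assign clusters to crossover steps.

    Returns {cluster: [0 or 1 for each period]} for periods 0..steps,
    where 0 means control and 1 means intervention. Period 0 is
    all-control and the final period is all-intervention.
    """
    if steps < 1 or steps > len(clusters):
        raise ValueError("steps must be between 1 and the number of clusters")
    order = list(clusters)
    random.Random(seed).shuffle(order)  # seeded so the schedule is reproducible
    schedule = {}
    for position, cluster in enumerate(order):
        # Deal clusters out round-robin so step sizes differ by at most one.
        crossover = position % steps + 1
        schedule[cluster] = [
            1 if period >= crossover else 0 for period in range(steps + 1)
        ]
    return schedule


# Hypothetical example: 6 wards crossing over in 3 steps.
wards = ["Ward A", "Ward B", "Ward C", "Ward D", "Ward E", "Ward F"]
for ward, row in sorted(stepped_wedge_schedule(wards, steps=3, seed=42).items()):
    print(ward, row)
```

Each row reads left to right as time: a cluster stays at 0 until its crossover step and remains at 1 afterward, which is what lets every cluster contribute both control and intervention observations.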

Limitations and Reporting Quality

As with any newer science, measurement issues can affect the ability to collect and report data. Standardized and validated instruments are not always available, and terminology is not always consistent. Variation in outcome measures can hinder a study’s ability to be generalizable and adaptable to other settings. Implementation processes that address one barrier to effectiveness may not overcome a different barrier, so the outcome measure must be chosen correctly to reflect the intervention of interest. Lastly, implementation research assumes an intervention is effective; if an intervention is not truly effective, a poor result may be incorrectly attributed to the implementation process rather than to the intervention itself. As with any other research, the research reporting quality should be held to accepted standards. Reporting guidelines for different study types can be accessed through the EQUATOR Network (Enhancing the QUAlity and Transparency Of health Research, available at https://www.equator-network.org/).

DEIMPLEMENTATION

An indispensable, yet often overlooked component of implementation science is the study of deimplementation. Deimplementation is the discontinuation of interventions that should be stopped because they are (1) harmful or ineffective, (2) not the most efficient or effective, or (3) unnecessary. Although it would be natural to assume that ineffective practices are discontinued when effective practices are discovered, this process is not guaranteed. Intuitively, there are psychological barriers to removing practices that were previously acceptable. In addition, factors such as organizational priorities, cost-benefit analyses, and perceived benefit to the patient can affect both implementation and deimplementation.33

Unlearning of old ideas must occur when learning new ideas. Implementation and deimplementation are hence inherently coupled, and transition requires deliberate intellectual and operational effort at an individual and organizational level. One hypothesis is that this coupling allows for the switch to be effort-neutral over time and positively affects change by avoiding overburdening the system when abolishing archaic practices to make room for novel and evidence-based practices.34 There are 4 types of change by which outdated practices are discarded: partial reversal, complete reversal, reversal with related replacement, and reversal with unrelated replacement.

Partial Reversal

Partial reversal refers to a reduction in the frequency, scale, or breadth of a current outmoded intervention such that it is offered less frequently or to only a subset of the patients for whom it has been most useful. One example from general surgery can be seen with the treatment of severe perforated diverticulitis. Historically, the gold-standard management of perforated diverticulitis was an end colostomy, which subsequently led to a second morbid operation for many patients. Comparable outcomes have been seen with primary anastomoses in selected populations, and practices have evolved such that end colostomy procedures are used more selectively.35

Complete Reversal

Deimplementation is a core process of medical evolution. Thankfully, as a result, processes such as “icepick lobotomies” for psychiatric disease and rectal feeding for patients who are nil per os are of historical interest only. These are examples of complete reversal, which implies entirely abolishing a certain intervention that has failed to show any benefit for any group of patients in any timespan or circumstance. A more recent example from critical care is that the common practice of routinely changing ventilator circuits was shown to increase the risk of infection and hence is no longer practiced as the standard of care.36 Complete removal of a practice implies that the practice was not replaced with a better option but simply was no longer used because it was determined to be ineffective or cause harm.

Reversal with Related Replacement

Reversal with related replacement suggests removing an outdated practice while substituting it with one that is closely related but more evidence based or effective. One excellent example of reversal with related replacement is demonstrated by the use of laparoscopy for surgery as new knowledge, skill, and technology became available. Almost 150 years after the open appendectomy was described, laparoscopic appendectomy is now the gold-standard operation for appendicitis.37

Reversal with Unrelated Replacement

Reversal with unrelated replacement refers to replacing a practice with one that is different.38 Currently, some patients with uncomplicated appendicitis do not receive laparoscopic appendectomy but are treated with antibiotics alone, which is an example of unrelated replacement.39 As another example from trauma surgery, splenectomy was the standard of care for splenic lacerations; however, angioembolization has now replaced surgery as the initial intervention, which allows for both the preservation of the spleen and avoidance of open surgery.40 Challenges in this type of change are that 2 separate collaborator groups need to be committed to and help facilitate the transition of practice in clinical scenarios.34

Widespread lack of deimplementation of outdated therapies contributes to substantial financial waste in the health care system and has a negative impact on patients and organizations. The execution of deimplementation has led to the development of different frameworks to guide the process. A positive feedback loop, or virtuous cycle of deimplementation before implementation, has been described and involves 9 phases: identification of practice for deimplementation, documenting prevalence of current pattern, investigating context and beliefs that maintain the practice, reviewing relevant methods, choosing matched extinction methods, conducting a deimplementation experiment, evaluating consequences, collecting evidence of saved time and resources, and finally, proposing the next practice to be implemented. This framework effectively removes an outdated practice before introducing a new evidence-based one.41

Several national initiatives support deimplementation. The Choosing Wisely campaign (https://www.choosingwisely.org/), an initiative of the American Board of Internal Medicine Foundation, encourages a dialogue between clinicians and patients about which interventions are truly essential, not duplicated, and free from harm. Similarly, the United States Preventive Services Task Force makes regular recommendations on altering screening modalities and frequencies, with the intention to encourage evidence-based methods and remove those that are no longer best practice.42

Deimplementation is increasingly recognized as an important and deliberate component of improving health care delivery. However, in practice, even strong science can be met with implementation resistance due to deep-seated belief in existing treatment paradigms. A recent editorial proposed a framework where evidence-based deimplementation was approached via 3 broad categories: (1) interventions known not to work, (2) interventions where evidence is uncertain, and (3) interventions that are in development where early discussion of evidence can pave the way for eventual deadoption. Deimplementation reflects a commitment to evidence-based practices and deserves greater attention and study, as it is central to the goal of giving patients the best proved therapies.43

SUMMARY

Implementation science will be necessary to improve the delivery of care to patients in a systematic and efficient manner, as well as remove unnecessary and ineffective treatments from being used routinely. Understanding the key outcomes of interest, frameworks, and research methods can help researchers effectively perform and communicate implementation findings to bring modern and effective care to all our patients.

KEY POINTS

Implementation science is the study of the translation of evidence-based best practices to real-world clinical environments.

Outcomes of implementation research include acceptability, adoption, appropriateness, feasibility, fidelity, penetration, sustainability, and implementation costs.

Key frameworks and models include Translating Evidence into Practice; Consolidated Framework for Implementation Research; Reach, Effectiveness, Adoption, Implementation, and Maintenance; and Expert Recommendations for Implementing Change. These outline domains and strategies that help researchers identify barriers and facilitate implementation.

Research methods may include qualitative studies, mixed methods, parallel group, pre-/postintervention, interrupted time series, and cluster or stepped-wedge randomized trials.

Deimplementation is the study of how to remove outdated, unnecessary, or ineffective practices from the clinical setting and is an equally important component of implementation science.

CLINICS CARE POINTS

Use implementation science research methods when a clinical intervention is known to be effective. Implementation research is used to learn about the best way to deliver the intervention to patients.

When planning an implementation study, take care to discuss barriers to implementation.

Select the outcomes of interest to collect. Commonly used outcomes include acceptability, adoption, appropriateness, feasibility, fidelity, penetration, sustainability, and cost.

Choose a study design and a framework that fits well with your research question.

ACKNOWLEDGMENTS

This publication was made possible by the Clinical and Translational Science Collaborative of Cleveland, KL2TR002547 from the National Center for Advancing Translational Sciences (NCATS) component of the National Institutes of Health and NIH Roadmap for Medical Research. Its contents are solely the responsibility of the authors and do not necessarily represent the official views of the NIH.

DISCLOSURES

A.M. Lasinski and P. Ladha have no disclosures. V.P. Ho is supported by the Clinical and Translational Science Collaborative of Cleveland, KL2TR002547 from the National Center for Advancing Translational Sciences (NCATS) component of the National Institutes of Health and NIH Roadmap for Medical Research. V.P. Ho's spouse is a consultant for Atricure, Sig Medical, Zimmer Biomet, and Medtronic.

REFERENCES

- 1. Balas EA, Boren SA. Managing clinical knowledge for health care improvement. Yearb Med Inform 2000;(1):65–70.

- 2. Peters DH, Tran NT, Adam T. Implementation research in health: a practical guide. Alliance for Health Policy and Systems Research. Geneva (Switzerland): World Health Organization; 2013.

- 3. Lane-Fall MB, Curran GM, Beidas RS. Scoping implementation science for the beginner: locating yourself on the “subway line” of translational research. BMC Med Res Methodol 2019;19(1):133.

- 4. Proctor E, Silmere H, Raghavan R, et al. Outcomes for implementation research: conceptual distinctions, measurement challenges, and research agenda. Adm Policy Ment Health 2011;38(2):65–76.

- 5. Rosen A, Proctor EK. Distinctions between treatment outcomes and their implications for treatment evaluation. J Consult Clin Psychol 1981;49(3):418–25.

- 6. Karsh BT. Beyond usability: designing effective technology implementation systems to promote patient safety. Qual Saf Health Care 2004;13(5):388–94.

- 7. Dusenbury L, Brannigan R, Falco M, et al. A review of research on fidelity of implementation: implications for drug abuse prevention in school settings. Health Educ Res 2003;18(2):237–56.

- 8. Rabin BA, Brownson RC, Haire-Joshu D, et al. A glossary for dissemination and implementation research in health. J Public Health Manag Pract 2008;14(2):117–23.

- 9. Carroll C, Patterson M, Wood S, et al. A conceptual framework for implementation fidelity. Implement Sci 2007;2:40.

- 10. Dane AV, Schneider BH. Program integrity in primary and early secondary prevention: are implementation effects out of control? Clin Psychol Rev 1998;18(1):23–45.

- 11. Adily A, Westbrook J, Coiera E, et al. Use of on-line evidence databases by Australian public health practitioners. Med Inform Internet Med 2004;29(2):127–36.

- 12. Cooke M, Mattick RP, Campbell E. A description of the adoption of the ‘Fresh start’ smoking cessation program by antenatal clinic managers. Aust J Adv Nurs 2000;18(1):13–21.

- 13. Fischer MA, Vogeli C, Stedman MR, et al. Uptake of electronic prescribing in community-based practices. J Gen Intern Med 2008;23(4):358–63.

- 14. Waldorff FB, Steenstrup AP, Nielsen B, et al. Diffusion of an e-learning programme among Danish general practitioners: a nation-wide prospective survey. BMC Fam Pract 2008;9:24.

- 15. Rogers EM. Diffusion of innovations. 5th edition. New York (NY): Free Press; 2003.

- 16. Tabak RG, Khoong EC, Chambers DA, et al. Bridging research and practice: models for dissemination and implementation research. Am J Prev Med 2012;43(3):337–50.

- 17. Nilsen P. Making sense of implementation theories, models and frameworks. Implement Sci 2015;10:53.

- 18. Pronovost PJ, Berenholtz SM, Needham DM. Translating evidence into practice: a model for large scale knowledge translation. BMJ 2008;337:a1714.

- 19. Damschroder LJ, Aron DC, Keith RE, et al. Fostering implementation of health services research findings into practice: a consolidated framework for advancing implementation science. Implement Sci 2009;4:50.

- 20. Glasgow RE, Harden SM, Gaglio B, et al. RE-AIM planning and evaluation framework: adapting to new science and practice with a 20-year review. Front Public Health 2019;7:64.

- 21. Glasgow RE, Vogt TM, Boles SM. Evaluating the public health impact of health promotion interventions: the RE-AIM framework. Am J Public Health 1999;89(9):1322–7.

- 22. Powell BJ, McMillen JC, Proctor EK, et al. A compilation of strategies for implementing clinical innovations in health and mental health. Med Care Res Rev 2012;69(2):123–57.

- 23. Powell BJ, Waltz TJ, Chinman MJ, et al. A refined compilation of implementation strategies: results from the expert recommendations for implementing change (ERIC) project. Implement Sci 2015;10:21.

- 24. Waltz TJ, Powell BJ, Chinman MJ, et al. Expert recommendations for implementing change (ERIC): protocol for a mixed methods study. Implement Sci 2014;9:39.

- 25. Waltz TJ, Powell BJ, Matthieu MM, et al. Use of concept mapping to characterize relationships among implementation strategies and assess their feasibility and importance: results from the expert recommendations for implementing change (ERIC) study. Implement Sci 2015;10:109.

- 26. Haynes AB, Weiser TG, Berry WR, et al. A surgical safety checklist to reduce morbidity and mortality in a global population. N Engl J Med 2009;360(5):491–9.

- 27. Urbach DR, Dimick JB, Haynes AB, et al. Is WHO’s surgical safety checklist being hyped? BMJ 2019;366:l4700.

- 28. Ehrhart MG, Aarons GA, Farahnak LR. Assessing the organizational context for EBP implementation: the development and validity testing of the Implementation Climate Scale (ICS). Implement Sci 2014;9:157.

- 29. Loudon K, Zwarenstein M, Sullivan F, et al. Making clinical trials more relevant: improving and validating the PRECIS tool for matching trial design decisions to trial purpose. Trials 2013;14:115.

- 30. Thorpe KE, Zwarenstein M, Oxman AD, et al. A pragmatic-explanatory continuum indicator summary (PRECIS): a tool to help trial designers. CMAJ 2009;180(10):E47–57.

- 31. Kontopantelis E, Doran T, Springate DA, et al. Regression based quasi-experimental approach when randomisation is not an option: interrupted time series analysis. BMJ 2015;350:h2750.

- 32. Hemming K, Haines TP, Chilton PJ, et al. The stepped wedge cluster randomised trial: rationale, design, analysis, and reporting. BMJ 2015;350:h391.

- 33. van Bodegom-Vos L, Davidoff F, Marang-van de Mheen PJ. Implementation and de-implementation: two sides of the same coin? BMJ Qual Saf 2017;26(6):495–501.

- 34. Wang V, Maciejewski ML, Helfrich CD, et al. Working smarter not harder: coupling implementation to de-implementation. Healthc (Amst) 2018;6(2):104–7.

- 35. Acuna SA, Wood T, Chesney TR, et al. Operative strategies for perforated diverticulitis: a systematic review and meta-analysis. Dis Colon Rectum 2018;61(12):1442–53.

- 36. Han J, Liu Y. Effect of ventilator circuit changes on ventilator-associated pneumonia: a systematic review and meta-analysis. Respir Care 2010;55(4):467–74.

- 37. Korndorffer JR Jr, Fellinger E, Reed W. SAGES guideline for laparoscopic appendectomy. Surg Endosc 2010;24(4):757–61.

- 38. Ho VP, Dicker RA, Haut ER. Dissemination, implementation, and de-implementation: the trauma perspective. Trauma Surg Acute Care Open 2020;5(1):e000423.

- 39. Harnoss JC, Zelienka I, Probst P, et al. Antibiotics versus surgical therapy for uncomplicated appendicitis: systematic review and meta-analysis of controlled trials (PROSPERO 2015: CRD42015016882). Ann Surg 2017;265(5):889–900.

- 40. Stassen NA, Bhullar I, Cheng JD, et al. Selective nonoperative management of blunt splenic injury: an Eastern Association for the Surgery of Trauma practice management guideline. J Trauma Acute Care Surg 2012;73(5 Suppl 4):S294–300.

- 41. Davidson KW, Ye S, Mensah GA. Commentary: de-implementation science: a virtuous cycle of ceasing and desisting low-value care before implementing new high value care. Ethn Dis 2017;27(4):463–8.

- 42. McKay VR, Morshed AB, Brownson RC, et al. Letting go: conceptualizing intervention de-implementation in public health and social service settings. Am J Community Psychol 2018;62(1–2):189–202.

- 43. Prasad V, Ioannidis JP. Evidence-based de-implementation for contradicted, unproven, and aspiring healthcare practices. Implement Sci 2014;9:1.