Abstract

Different regions of the striatum regulate different types of behavior. However, how dopamine signals differ across striatal regions and how dopamine regulates different behaviors remain unclear. Here, we compared dopamine axon activity in the ventral, dorsomedial, and dorsolateral striatum, while mice performed a perceptual and value-based decision task. Surprisingly, dopamine axon activity was similar across all three areas. At a glance, the activity multiplexed different variables such as stimulus-associated values, confidence, and reward feedback at different phases of the task. Our modeling demonstrates, however, that these modulations can be inclusively explained by moment-by-moment changes in the expected reward, that is the temporal difference error. A major difference between areas was the overall activity level of reward responses: reward responses in dorsolateral striatum were positively shifted, lacking inhibitory responses to negative prediction errors. The differences in dopamine signals put specific constraints on the properties of behaviors controlled by dopamine in these regions.

Research organism: Mouse

Introduction

Flexibility in behavior relies critically on an animal’s ability to alter its choices based on past experiences. In particular, the behavior of an animal is greatly shaped by the consequences of specific actions – whether a previous action led to positive or negative experiences. One of the fundamental questions in neuroscience is how animals learn from rewards and punishments.

The neurotransmitter dopamine is thought to be a key regulator of learning from rewards and punishments (Hart et al., 2014; Montague et al., 1996; Schultz et al., 1997). Neurons that release dopamine (hereafter, dopamine neurons) are located mainly in the ventral tegmental area (VTA) and substantia nigra pars compacta (SNc). These neurons send their axons to various regions including the striatum, neocortex, and amygdala (Menegas et al., 2015; Yetnikoff et al., 2014). The striatum, which receives the densest projection from VTA and SNc dopamine neurons, is thought to play particularly important roles in learning from rewards and punishments (Lloyd and Dayan, 2016; O'Doherty et al., 2004). However, what information dopamine neurons convey to the striatum, and how dopamine regulates behavior through its projections to the striatum, remain elusive.

A large body of experimental and theoretical studies have suggested that dopamine neurons signal reward prediction errors (RPEs) – the discrepancy between actual and predicted rewards (Bayer and Glimcher, 2005; Cohen et al., 2012; Hart et al., 2014; Schultz et al., 1997). In particular, the activity of dopamine neurons resembles a specific type of prediction error, called temporal difference error (TD error) (Montague et al., 1996; Schultz et al., 1997; Sutton, 1988; Sutton, 1987). Although it was widely assumed that dopamine neurons broadcast homogeneous RPEs to a swath of dopamine-recipient areas, recent findings indicated that dopamine signals are more diverse than previously thought (Brown et al., 2011; Kim et al., 2015; Matsumoto and Hikosaka, 2009; Menegas et al., 2017; Menegas et al., 2018; Parker et al., 2016). For one, recent studies have demonstrated that a transient (‘phasic’) activation of dopamine neurons occurs near the onset of a large movement (e.g. locomotion), regardless of whether these movements are immediately followed by a reward (Howe and Dombeck, 2016; da Silva et al., 2018). These phasic activations at movement onsets have been observed in somatic spiking activity in the SNc (da Silva et al., 2018) as well as in axonal activity in the dorsal striatum (Howe and Dombeck, 2016). Another study showed that dopamine axons in the dorsomedial striatum (DMS) are activated when the animal makes a contralateral orienting movement in a decision-making task (Parker et al., 2016). Other studies have also found that dopamine axons in the posterior or ventromedial parts of the striatum are activated by aversive or threat-related stimuli (de Jong et al., 2019; Menegas et al., 2017). An emerging view is that dopamine neurons projecting to different parts of the striatum convey distinct signals and support different functions (Cox and Witten, 2019).

Previous studies have shown that different parts of the striatum control distinct types of reward-oriented behaviors (Dayan and Berridge, 2014; Graybiel, 2008; Malvaez and Wassum, 2018; Rangel et al., 2008). First, the ventral striatum (VS) has often been associated with Pavlovian behaviors, where the expectation of reward triggers relatively pre-programmed behaviors (approaching, consummatory behaviors, etc.) (Dayan and Berridge, 2014). Psychological studies suggest that these behaviors are driven by stimulus-outcome associations (Kamin, 1969; Pearce and Hall, 1980; Rescorla and Wagner, 1972). Consistent with this idea, previous experiments have shown that dopamine in VS conveys canonical RPE signals (Menegas et al., 2017; Parker et al., 2016) and supports learning of values associated with specific stimuli (Clark et al., 2012). In contrast, the dorsal part of the striatum has been linked to instrumental behaviors, where animals acquire an arbitrary action that leads to a reward (Montague et al., 1996; Suri and Schultz, 1999). Instrumental behaviors are further divided into two distinct types: goal-directed and habit (Dickinson and Weiskrantz, 1985). Goal-directed behaviors are ‘flexible’ reward-oriented behaviors that are sensitive to a causal relationship (‘contingency’) between action and outcome, and can quickly adapt to changes in the value of the outcome (Balleine and Dickinson, 1998). After repetition of a goal-directed behavior, the behavior can become a habit, which is characterized by insensitivity to changes in the outcome value (e.g. devaluation) (Balleine and O'Doherty, 2010). According to psychological theories, goal-directed and habitual behaviors are supported by distinct internal representations: action-outcome and stimulus-response associations, respectively (Balleine and O'Doherty, 2010). Lesion studies have indicated that goal-directed behaviors and habit are controlled by DMS and the dorsolateral striatum (DLS), respectively (Yin et al., 2004; Yin et al., 2005).

Instrumental behaviors are shaped by reward, and it is generally thought that dopamine is involved in their acquisition (Gerfen and Surmeier, 2011; Montague et al., 1996; Schultz et al., 1997). However, how dopamine is involved in distinct types of instrumental behaviors remains unknown. A prevailing view in the field is that habit is controlled by ‘model-free’ reinforcement learning, while goal-directed behaviors are controlled by ‘model-based’ mechanisms (Daw et al., 2005; Dolan and Dayan, 2013; Rangel et al., 2008). In this framework, habitual behaviors are driven by ‘cached’ values associated with specific actions (action values) which animals learn through direct experiences via dopamine RPEs. In contrast, goal-directed behaviors are controlled by a ‘model-based’ mechanism whereby action values are computed by mentally simulating which sequence of actions lead to which outcome using a relatively abstract representation (model) of the world. Model-based behaviors are more flexible compared to model-free behaviors because a model-based mental simulation may allow the animal to compute values in novel or changing circumstances. Although these ideas account for the relative inflexibility of habit over model-based, goal-directed behaviors, they do not necessarily explain the most fundamental property of habit, that is, its insensitivity to changes in outcome, as cached values can still be sensitive to RPEs when the actual outcome violates expectation, posing a fundamental limit in this framework (Dezfouli and Balleine, 2012; Miller et al., 2019). Furthermore, the idea that habits are supported by action value representations does not necessarily match with the long-held view of habit based on stimulus-response associations.

Until recently an implicit assumption across many studies was that dopamine neurons broadcast the same teaching signals throughout the striatum to support different kinds of learning (Rangel et al., 2008; Samejima and Doya, 2007). However, as mentioned before, more recent studies revealed different dopamine signals across striatal regions, raising the possibility that different striatal regions receive distinct teaching signals. In any case, few studies have directly examined the nature of dopamine signals across striatal regions in instrumental behaviors, in particular, between DLS and other regions. As a result, it remains unclear whether different striatal regions receive distinct dopamine signals during instrumental behaviors. Are dopamine signals in particular areas dominated by movement-related signals? Are dopamine signals in these areas still consistent with RPEs or are they fundamentally distinct? How are they different? Characterizing dopamine signals in different regions is a critical step toward understanding how dopamine may regulate distinct types of behavior.

In the present study, we sought to characterize dopamine signals in different striatal regions (VS, DMS and DLS) during instrumental behaviors. We used a task involving both perceptual and value-based decisions in freely-moving mice – a task that is similar to those previously used to probe various important variables in the brain such as values, biases (Rorie et al., 2010; Wang et al., 2013), confidence (Hirokawa et al., 2019; Kepecs et al., 2008), belief states (Lak et al., 2017), and response vigor (Wang et al., 2013). In this task, the animal goes through various movements and mental processes – self-initiating a trial, collecting sensory evidence, integrating the sensory evidence with reward information, making a decision, initiating a choice movement, committing to an option and waiting for reward, receiving an outcome of reward or no reward, and adjusting internal representations for future performance using RPEs and confidence. Compared to Pavlovian tasks, which have been more commonly used to examine dopamine RPEs, the present task has various factors with which to contrast dopamine signals between different areas.

Contrary to our initial hypothesis, dopamine signals in all three areas showed similar dynamics, going up and down in a manner consistent with TD errors, reflecting moment-by-moment changes in the expected future reward (i.e. state values). Notably, although we observed correlates of accuracy and confidence in dopamine signals, consistent with previous studies (Engelhard et al., 2019; Lak et al., 2017), the appearance of these variables was timing- and trial type-specific. In stark contrast with these previous proposals, our modeling demonstrates that these apparently diverse dopamine signals can be inclusively explained by a single variable – TD error, that is moment-by-moment changes in the expected reward in each trial. In addition, we found consistent differences between these areas. For instance, DMS dopamine signals were modulated by contralateral orienting movements, as reported previously (Parker et al., 2016). Furthermore, DLS dopamine signals, while following TD error dynamics, were overall more positive, compared to other regions. Based on these findings, we present novel models of how these distinct dopamine signals may give rise to distinct types of behavior such as flexible versus habitual behaviors.

Results

A perceptual decision-making task with reward amount manipulations

Mice were first trained in a perceptual decision-making task using olfactory stimuli (Figure 1; Uchida and Mainen, 2003). To vary the difficulty of discrimination, we used two odorants mixed with different ratios (Figure 1A). Mice were required to initiate a trial by poking their nose into the central odor port, which triggered a delivery of an odor mixture. Mice were then required to move to the left or right water port depending on which odor was dominant in the presented mixture. Odor-water side (left or right) rule was held constant throughout training and recording in each animal. In order to minimize temporal overlaps between different trial events and underlying brain processes, we introduced a minimum time required to stay in the odor port (for 1 s) and in the water port (for 1 s) to receive a water reward.

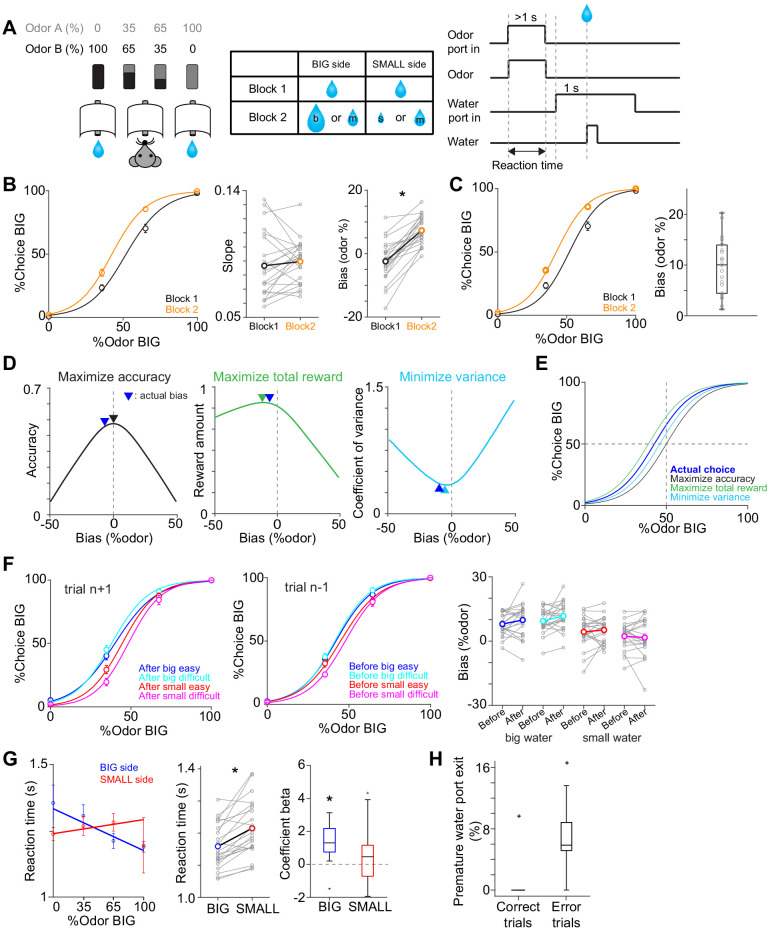

Figure 1. Perceptual choice paradigm with probabilistic reward conditions.

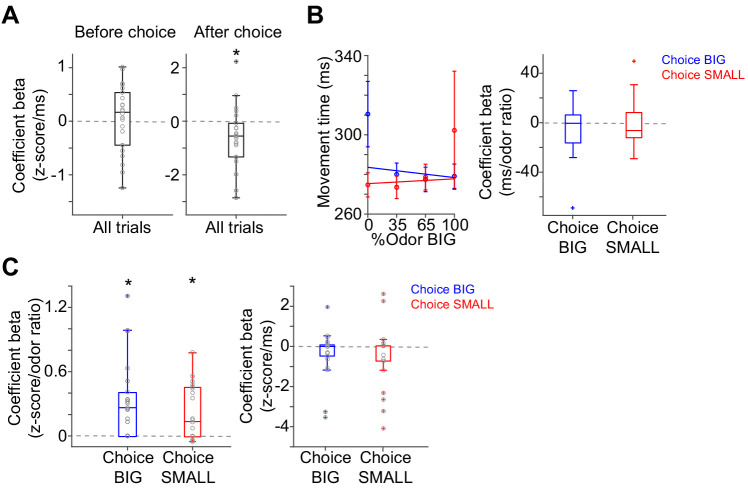

(A) A mouse discriminated a dominant odor in odor mixtures that indicates water availability in either the left or right water port. Correct choice was rewarded by a drop of water. In each session, an equal amount of water was assigned at both water ports in the first block, and in the second block, big/medium water (50%–50%, randomized) was assigned at one water port (BIG side) and medium/small water (50%–50%, randomized) was assigned at another port (SMALL side). The BIG or SMALL side was assigned to a left or right water port in a pseudorandom order across sessions. (B) Left, % of choice of the BIG side in block 1 and 2 (mean ± SEM) and the average psychometric curve for each block. Center, slope of the psychometric curve was not different between blocks (t(21) = 0.75, p=0.45, paired t-test). Right, choice bias at 50/50 choice, expressed as 50 - odor (%). Choice was biased toward the BIG side in block 2 (t(21) = 8.5, p=2.8 × 10⁻⁸, paired t-test). (C) Left, % of choice of the BIG side in block 1 and 2 (mean ± SEM) and the average psychometric curve with a fixed slope across blocks. Right, all the animals showed choice bias toward the BIG side in block 2 compared to block 1 (z = 4.1, p=4.0 × 10⁻⁵, Wilcoxon signed rank test). The choice bias was expressed by a lateral shift of a psychometric curve with a fixed slope across blocks. (D) Average reward amounts, accuracy, and coefficients of variance were examined with different levels of choice bias with a fixed slope (average slope of all animals). (E) Optimal choice patterns with different strategies in D (bias −11, 0, and −4, respectively) and the actual average choice pattern (mean bias −7.3). (F) Trial-by-trial choice updating was examined by comparing choice bias before (center, trial n−1) and after (left, trial n+1) specific trial types. Choice updating in one trial was not significant for reward acquisition of either small or big water in easy or difficult trials (right, big easy, z = −1.1, p=0.24; big difficult, z = −1.6, p=0.10; small easy, z = −0.95, p=0.33; small difficult, z = 0.081, p=0.93, Wilcoxon signed rank test). (G) Left, animal's reaction time was modulated by odor types. Center, for easy trials (pure odors, correct choice), reaction time was shorter when animals chose the BIG side (t(21) = −5.0, p=4.9 × 10⁻⁵, paired t-test). Right, the reaction time was negatively correlated with sensory evidence for choice of the BIG side (t(21) = −4.7, p=1.2 × 10⁻⁴, one sample t-test), whereas the modulation was not significant for choice of the SMALL side (t(21) = −1.5, p=0.13, one sample t-test). (H) Animals showed more premature exit of water port (<1 s) in trials with error choice than trials with correct choice (t(21) = −7.9, p=9.5 × 10⁻⁸, paired t-test). n = 22 animals.

Figure 1—figure supplement 1. Average psychometric curve in odor manipulation blocks.

After mice learned the task, the water amounts at the left and right water ports were manipulated (Lak et al., 2017; Rorie et al., 2010; Wang et al., 2013) in a probabilistic manner. In our task, one of the reward ports was associated with a big or medium size of water (BIG side) while another side was associated with a small or medium size of water (SMALL side) (Figure 1A). In a daily session, there were two blocks of trials, the first with equal-sized water and the second with different distributions of water sizes on the two sides (BIG versus SMALL side). The reward ports for BIG or SMALL conditions stayed unchanged within a session and were randomly chosen for each session. In each reward port (BIG or SMALL side), which of the two reward sizes was delivered was randomly assigned in each trial. Note that the medium-sized reward is delivered with the probability of 0.5 for every correct choice at either side. This design was used to facilitate our ability to characterize RPE-related responses even after mice were well trained (Tian et al., 2016). First, the responses to the medium-sized-reward allowed us to characterize how ‘reward expectation’ affects dopamine reward responses because we can examine how different levels of expectation, associated with the BIG and SMALL side, affect dopamine responses to reward of the same (medium) amount. Conversely, for a given reward port, two sizes of reward allowed us to characterize the effect of ‘actual reward’ on dopamine responses, by comparing the responses when the actual reward was smaller versus larger than expected.

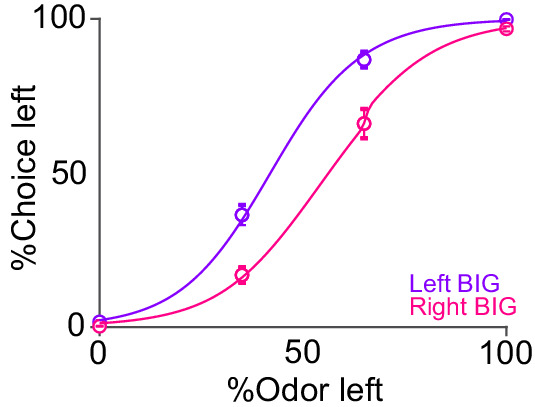

We first characterized the choice behavior by fitting a psychometric function (a logistic function). Compared to the block with equal-sized water, the psychometric curve was shifted laterally to the BIG side (Figure 1B, Figure 1—figure supplement 1). The fitted psychometric curves were laterally shifted whereas the slopes were not significantly different across blocks (t(21) = 0.75, p=0.45, n = 22, paired t-test) (Figure 1B). We, therefore, quantified a choice bias as a lateral shift of the psychometric curve with a fixed slope in terms of the % mixture of odors for each mouse (Figure 1C; Wang et al., 2013). All the mice exhibited a choice bias toward the BIG side (22/22 animals). Because a ‘correct’ choice (i.e. whether a reward is delivered or not) was determined solely by the stimulus in this task, biasing their choices away from the 50/50 boundary inevitably lowers the choice accuracy (or equivalently, the probability of reward). For ambiguous stimuli, however, mice could go for a big reward, even sacrificing accuracy, in order to increase the long-term gain; choice bias is potentially beneficial if the gain from occasionally obtaining a big reward outweighs the more frequent loss of a small reward. Indeed, the observed biases yielded an increase in total reward (1.016 ± 0.001 times the reward obtained with no bias, mean ± SEM, slightly less than the optimal bias, which yields 1.022 times the reward obtained with no bias), rather than maximizing the accuracy (= reward probability, i.e. no bias) or solely minimizing the risk (the variance of reward amounts) (Figure 1D and E).
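The trade-off between accuracy and total reward can be illustrated with a minimal sketch. It assumes a logistic psychometric function whose lateral shift `bias` is measured in % mixture, with positive values meaning a shift toward the BIG side (a sign convention chosen here for illustration only). The stimulus set and the slope are hypothetical. The reward amounts (2.2, 0.8, and 0.2) follow the values given in the legend of Figure 4, where units are not specified.

```python
import math

# Mean reward per correct choice on each side (50/50 mix of two sizes).
# Amounts 2.2 / 0.8 / 0.2 follow the Figure 4 legend; units are not given there.
MEAN_BIG = (2.2 + 0.8) / 2
MEAN_SMALL = (0.8 + 0.2) / 2

# Hypothetical stimulus set: % of the odor that instructs the BIG side.
STIMULI = [0, 35, 45, 55, 65, 100]


def p_choose_big(stim, bias, slope=10.0):
    """Logistic psychometric function; positive `bias` shifts choices toward BIG."""
    return 1.0 / (1.0 + math.exp(-(stim - 50.0 + bias) / slope))


def accuracy(bias):
    """Probability of a correct (rewarded) choice, averaged over stimuli."""
    total = 0.0
    for s in STIMULI:
        p = p_choose_big(s, bias)
        total += p if s > 50 else 1.0 - p
    return total / len(STIMULI)


def expected_reward(bias):
    """Mean reward per trial, averaged over stimuli."""
    total = 0.0
    for s in STIMULI:
        p = p_choose_big(s, bias)
        total += p * MEAN_BIG if s > 50 else (1.0 - p) * MEAN_SMALL
    return total / len(STIMULI)


for b in (0.0, 5.0):
    print(b, round(accuracy(b), 3), round(expected_reward(b), 3))
```

Under these assumptions, a moderate shift toward the BIG side lowers accuracy while raising the expected reward, which is the qualitative pattern described above.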

Previous studies have shown that animals shift their decision boundary even without reward amount manipulations in perceptual decision tasks (Lak et al., 2020a). These shifts occur on a trial-by-trial basis, following a win-stay strategy, choosing the same side when that side was associated with reward in the previous trial, particularly when the stimulus was more ambiguous (Lak et al., 2020a). In the current task design, however, the optimal bias is primarily determined by the sizes of reward (more specifically, which side delivered a big or small reward), which stay constant across trials within a block. To determine whether the animal adopted short-time scale updating or a more stable bias, we next examined how receipt of reward affected the choice in the subsequent trials. To extract trial-by-trial updating, we compared the psychometric curves one trial before (n−1) and after (n+1) the current trials (n). This analysis was performed separately for the rewarded side in the current (n) trials. We found that choice biases before and after a specific reward location (choice in (n+1) trials minus choice in (n−1) trials) were not significantly different in any trial types (Figure 1F), suggesting that trial-by-trial updating was minimal, contrary to a previous study (Lak et al., 2020b). Notably, the previous study (Lak et al., 2020b) only examined the choice pattern in (n+1) trials to measure trial-by-trial updates, which was potentially overestimated because of global bias already seen in (n−1) trials. Instead, our results indicate that the mice adopted a relatively stable bias that lasts longer than one trial in our task.
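The logic of the (n+1) minus (n−1) comparison can be sketched as follows. This is a simplified illustration that uses raw choice rates rather than fitted psychometric curves. The function name `choice_update` and the example session are hypothetical.

```python
def mean(xs):
    return sum(xs) / len(xs)


def choice_update(chose_big, is_target):
    """Change in BIG-side choice rate from trial n-1 to trial n+1.

    chose_big: list of 0/1, one entry per trial (1 = BIG side chosen).
    is_target: list of bools marking the trials n of interest
               (e.g. trials rewarded on the BIG side).
    Returns (after - before, after); `after` alone is the n+1-only
    measure, which does not correct for a pre-existing bias.
    """
    idx = [n for n in range(1, len(chose_big) - 1) if is_target[n]]
    before = mean([chose_big[n - 1] for n in idx])
    after = mean([chose_big[n + 1] for n in idx])
    return after - before, after


# Hypothetical session with a stable bias: BIG is chosen on most trials,
# regardless of what happened on the preceding trial.
choices = [1, 1, 0, 1, 1, 1, 0, 1, 1, 1]
targets = [False, True, False, False, True, False, False, True, False, False]
update, after_only = choice_update(choices, targets)
print(update, after_only)
```

In this toy session the n+1-only measure is well above 0.5 even though the difference between (n+1) and (n−1) is zero, which illustrates how a stable global bias could be mistaken for trial-by-trial updating.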

Although we imposed a minimum time required to stay in the odor port, the mice showed different reaction times (the duration between odor onset and odor port exit) across different trial types (Figure 1G). First, reaction times were shorter when animals chose the BIG side compared to the SMALL side in easy, but not difficult, trials. Second, reaction times were negatively correlated with the level of sensory evidence for choice (as determined by odor % for the choice) when mice chose the BIG side. However, this modulation was not evident when mice chose the SMALL side.

Animals were required to stay in a water port for 1 s to obtain water reward. However, in rare cases, they exited a water port early, within 1 s after water port entry. We examined the effects of choice accuracy (correct or error) on the premature exit (Figure 1H). We found that while animals seldom exited a water port in correct trials, they occasionally exited prematurely in error trials, consistent with a previous study (Kepecs et al., 2008).

Overall activity pattern of dopamine axons in the striatum

To monitor the activity of dopamine neurons in a projection-specific manner, we recorded dopamine axon activity in the striatum using a calcium indicator, GCaMP7f (Dana et al., 2019), with fiber fluorometry (fiber photometry; Kudo et al., 1992) (Figure 2A) (‘dopamine axon activity’ hereafter). We targeted a wide range of the striatum including the relatively dorsal part of VS, DMS and DLS (Figure 2B). Calcium signals were monitored from mice both before and after introducing water amount manipulations (n = 9, 7, and 6 mice for VS, DMS, and DLS, respectively).

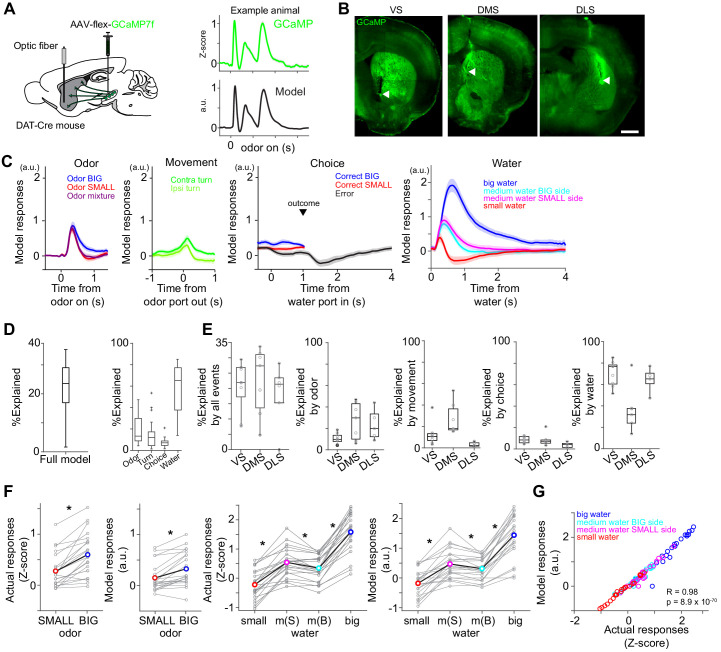

Figure 2. Dopamine axons in the striatum show characteristics of RPE.

(A) AAV-flex-GCaMP7f was injected in VTA and SNc, and dopamine axon activity was measured with an optic fiber inserted in the striatum. Right top, dopamine axon activity in all the valid trials (an animal chose either water port after staying in the odor port for the required time, >1 s) in an example animal, aligned at odor onset (mean ± SEM). Right bottom, average responses using predicted trial responses in a fitted model of the same animal (mean ± SEM). (B) Location of an optic fiber in example animals. Arrow heads, tips of fibers. Green, GCaMP7f. Bar = 1 mm. (C) Odor-, movement-, choice-, and water-locked components in the model of all the animals (mean ± SEM). (D) Contribution of each component in the model was measured by reduction of deviance in the full model compared to a reduced model excluding the component. (E) Contribution of each component in the model in each animal group. (F) Left, comparison of dopamine axon responses to an odor cue that instructs to choose BIG and SMALL side in easy trials (pure odor, correct choice, −1–0 s before odor port out). t(21) = 5.8, p=8.1 × 10⁻⁶ for actual signals and t(21) = 4.8, p=9.5 × 10⁻⁵ for models. Paired t-test, n = 22 animals. Right, comparison of dopamine axon responses to different sizes of water (big versus medium water with BIG expectation, and medium versus small water with SMALL expectation) and to medium water with different expectation (BIG versus SMALL expectation) (0.3–1.3 s after water onset). t(21) = 12.9, p=1.6 × 10⁻¹¹, t(21) = 9.7, p=2.9 × 10⁻⁹, and t(21) = −3.8, p=9.3 × 10⁻⁴, respectively for actual signals, and t(21) = 10.3, p=1.0 × 10⁻⁹, t(21) = 7.9, p=9.2 × 10⁻⁸, and t(21) = −3.3, p=0.0033, respectively for models. Paired t-test, n = 22 animals. m(B), medium water with BIG expectation; m(S), medium water with SMALL expectation. (G) Comparison between actual dopamine axon responses and model responses to water. Arbitrary unit (a.u.) was determined by model-fitting with z-score of GCaMP signals.

Figure 2—figure supplement 1. Dopamine axon activity outside of the task.

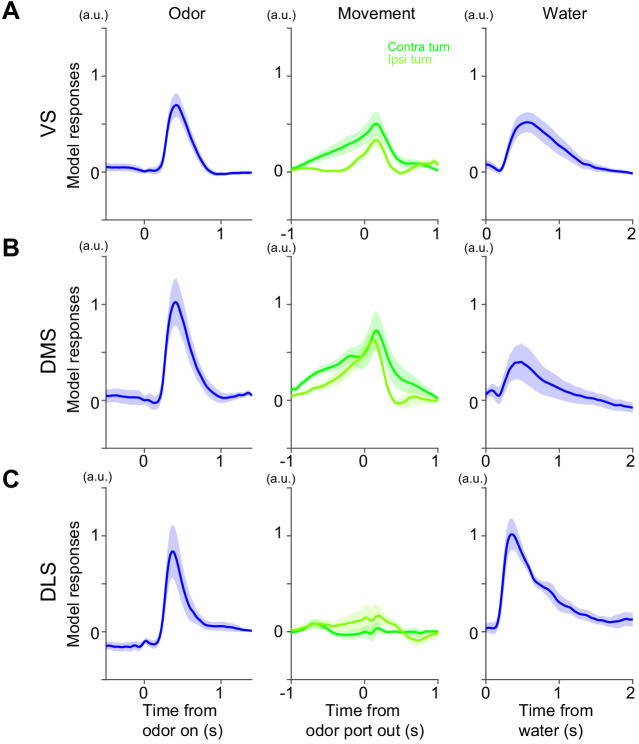

The main analysis was performed using the calcium signals obtained in the presence of water amount manipulations. To isolate responses that are time-locked to specific task events but with potentially overlapping temporal dynamics, we first fitted dopamine axon activity in each animal with a linear regression model using multiple temporal kernels (Park et al., 2014) with Lasso regularization with 10-fold cross validation (Figure 2). We used kernels that extract stereotypical time courses of dopamine axon activity locked to four different events: odor onset (odor), odor port exit (movement), water port entry (choice commitment or ‘choice’ for short), and reward delivery (water) (Figure 2C–F). Even though we imposed a minimum 1 s delay, calcium signals associated with these events could still overlap. In our task, the time course of events such as reaction time (odor onset to odor port exit) and movement time (odor port exit to water port entry) varied across trials. The model-fitting procedure finds kernels that best explain individual trial data in entire sessions, assuming that calcium responses follow specific patterns upon each event and sum up linearly.
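The linear-summation assumption behind such a kernel model can be sketched with a small design matrix. This is an illustrative forward model only, not the fitting procedure used in the study. The function names, kernel length, event times, and kernel weights are all hypothetical, and the regularized fit that would estimate the weights from recorded data is omitted.

```python
def design_matrix(n_samples, events, kernel_len):
    """Build a lagged-indicator design matrix.

    events: dict mapping an event name to a list of sample indices.
    Each event type gets `kernel_len` columns, one per time lag.
    Returns (names, X) where X is a list of rows.
    """
    names = sorted(events)
    X = [[0.0] * (len(names) * kernel_len) for _ in range(n_samples)]
    for k, name in enumerate(names):
        for t0 in events[name]:
            for lag in range(kernel_len):
                t = t0 + lag
                if 0 <= t < n_samples:
                    X[t][k * kernel_len + lag] += 1.0
    return names, X


def predict(X, weights):
    """Predicted signal: event-locked kernels summed linearly."""
    return [sum(x * w for x, w in zip(row, weights)) for row in X]


# Two events whose kernels overlap in time (hypothetical values).
names, X = design_matrix(6, {"odor": [0], "water": [1]}, kernel_len=2)
weights = [1.0, 2.0, 10.0, 20.0]  # odor kernel, then water kernel
signal = predict(X, weights)
print(names, signal)
```

Because each column corresponds to one event type at one lag, overlapping responses are separated by the regression as long as the intervals between events vary across trials, as they do for reaction and movement times in this task.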

The constructed model captured modulations of dopamine axon activity time-locked to different events (Figure 2C). On average, the magnitude of the extracted odor-locked activity was modulated by odor cues. Dopamine axons were more excited by a pure odor associated with the BIG side than a pure odor associated with the SMALL side (Figure 2C and F). The movement-locked activity was stronger for a movement toward the contra-lateral (the opposite direction to the recorded hemisphere), compared to the ipsi-lateral side, which was most evident in DMS (Parker et al., 2016) but much smaller in VS or DLS (Figure 2E, % explained by movement). The choice-locked activity showed two types of modulations (Figure 2C). First, it exhibited an inhibition in error trials at the time of reward (i.e. when it has become clear that reward is not going to come). Second, dopamine axon activity showed a modulation around the time of water port entry, an excitation when the choice was correct, and an inhibition when the choice was incorrect, even before the mice received a feedback. These ‘choice commitment’-related signals will be further analyzed below. Finally, delivery of water caused a strong excitation which was modulated by the reward size (Figure 2C and F). Furthermore, the responses to medium-sized water were slightly but significantly smaller on the BIG side compared to the SMALL side (Figure 2C and F). The contribution of water-locked kernels was larger than other kernels except in DMS, where odor, movement and water kernels contributed similarly (Figure 2D and E).

In previous studies, RPE-related signals have typically been characterized by phasic responses to reward-predictive cues and a delivery or omission of reward. Overall, the above results demonstrate that observed populations contain the basic response characteristics of RPEs. First, dopamine axons were excited by reward-predicting odor cues, and the magnitude of the response was stronger for odors that instructed the animal to go to the side associated with a higher value (i.e. BIG side). Responses to water were modulated by reward amounts, and the water responses were suppressed by higher reward expectation. These characteristics were also confirmed by using the actual responses, instead of the fitted kernels (Figure 2F and G). Finally, in error trials, dopamine axons were inhibited when the time passed beyond the expected time of reward, as the negative outcome becomes certain (Figure 2C). In the following sections, we will investigate each striatal area in more detail.
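These response characteristics follow from the standard TD error, δ = r + γV(s′) − V(s). The sketch below is a toy illustration, not the model fitted in this study. It assumes no discounting within a trial, easy trials in which the choice is always correct, and a pre-odor value equal to the average of the two sides. The reward amounts follow the legend of Figure 4, where units are not specified.

```python
GAMMA = 1.0  # assumption: no temporal discounting within a trial

# Reward amounts from the Figure 4 legend (units not given there).
BIG, MEDIUM, SMALL = 2.2, 0.8, 0.2

# State value after committing to a side on an easy trial, assuming the
# choice is always correct: the mean of the two equally likely rewards.
V_SIDE = {"BIG": (BIG + MEDIUM) / 2, "SMALL": (MEDIUM + SMALL) / 2}


def td_error(reward, v_next, v_current, gamma=GAMMA):
    """Temporal difference error: delta = r + gamma * V(s') - V(s)."""
    return reward + gamma * v_next - v_current


def water_response(side, amount):
    """TD error at outcome; the trial ends, so the next-state value is 0."""
    return td_error(amount, 0.0, V_SIDE[side])


def cue_response(side, v_before_cue):
    """TD error at odor onset: value jumps from baseline to V(side)."""
    return td_error(0.0, V_SIDE[side], v_before_cue)


# Assumption: before the odor, both sides are equally likely.
v_baseline = (V_SIDE["BIG"] + V_SIDE["SMALL"]) / 2
for side in ("BIG", "SMALL"):
    print(side, cue_response(side, v_baseline), water_response(side, MEDIUM))
print("omission on BIG side:", water_response("BIG", 0.0))
```

In this toy model the cue response is larger for the BIG side, the response to the same medium reward is smaller when the expectation is higher, and reward omission yields a negative error, matching the three characteristics listed above.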

Dopamine activity is also modulated while a mouse moves without obvious reward (Coddington and Dudman, 2018; Howe and Dombeck, 2016; da Silva et al., 2018). To examine movement-related dopamine activity, we analyzed videos recorded during the task with DeepLabCut (Mathis et al., 2018; Figure 2—figure supplement 1). An artificial deep network was trained to detect six body parts: nose, both ears and three points along the tail – base, midpoint, and tip. To evaluate tracking in our task, we examined the stability of the nose location detected by DeepLabCut while a mouse kept its nose in a water port, as detected with the infra-red photodiode. After training on a total of 400 frames in 10 videos from 10 animals, the error rate, calculated as the fraction of frames with disconnected tracking of nose position (50 pixel/frame), was 4.6 × 10⁻⁴ ± 1.5 × 10⁻⁴ (mean ± SEM, n = 43 videos), and nose tracking stayed within 2 cm when a mouse poked its nose into a water port for >1 s in 96.0 ± 0.3% of trials (mean ± SEM, n = 43 sessions) (Figure 2—figure supplement 1B). We examined dopamine axon activity when a mouse started or stopped locomotion (body speed faster than 3 cm/s) outside of the odor/water port area. In either case, we observed a slightly but significantly lower dopamine axon activity level when a mouse moved, consistent with previous studies showing that some dopamine neurons show inhibition with movement (Coddington and Dudman, 2018; Dodson et al., 2016; da Silva et al., 2018; Figure 2—figure supplement 1C,E). We did not observe a difference in modulation across the striatal areas (Figure 2—figure supplement 1F).
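Detecting locomotion starts and stops from tracked positions can be sketched as a simple threshold crossing on body speed. Only the 3 cm/s threshold comes from the text. The sketch assumes positions already converted to cm, a hypothetical frame rate, and no smoothing of the speed trace, and the function names are illustrative.

```python
import math

SPEED_THRESHOLD_CM_S = 3.0  # threshold stated in the text


def speeds_cm_per_s(positions_cm, fps):
    """Frame-to-frame speed from a list of (x, y) positions in cm."""
    return [math.dist(a, b) * fps for a, b in zip(positions_cm, positions_cm[1:])]


def locomotion_events(positions_cm, fps, threshold=SPEED_THRESHOLD_CM_S):
    """Return (starts, stops) as indices into the speed trace."""
    moving = [s > threshold for s in speeds_cm_per_s(positions_cm, fps)]
    starts, stops = [], []
    for i in range(1, len(moving)):
        if moving[i] and not moving[i - 1]:
            starts.append(i)
        elif not moving[i] and moving[i - 1]:
            stops.append(i)
    return starts, stops


# Hypothetical track: still, then 1 cm per frame at 10 frames/s, then still.
track = [(0, 0), (0, 0), (0, 0), (1, 0), (2, 0), (3, 0), (3, 0), (3, 0)]
starts, stops = locomotion_events(track, fps=10)
print(starts, stops)
```

Photometry signals could then be aligned to the returned indices, after excluding events that fall inside the odor or water port area.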

Shifted representation of TD error in dopamine axon activity across the striatum

Although excitation to unpredicted reward is one of the signatures of dopamine RPE, recent studies found that the dopamine axon response to water is small or undetectable in some parts of the dorsal striatum (Howe and Dombeck, 2016; Parker et al., 2016; da Silva et al., 2018). Therefore, the above observation that all three areas (VS, DMS, and DLS) exhibited modulation by reward may appear at odds with previous studies.

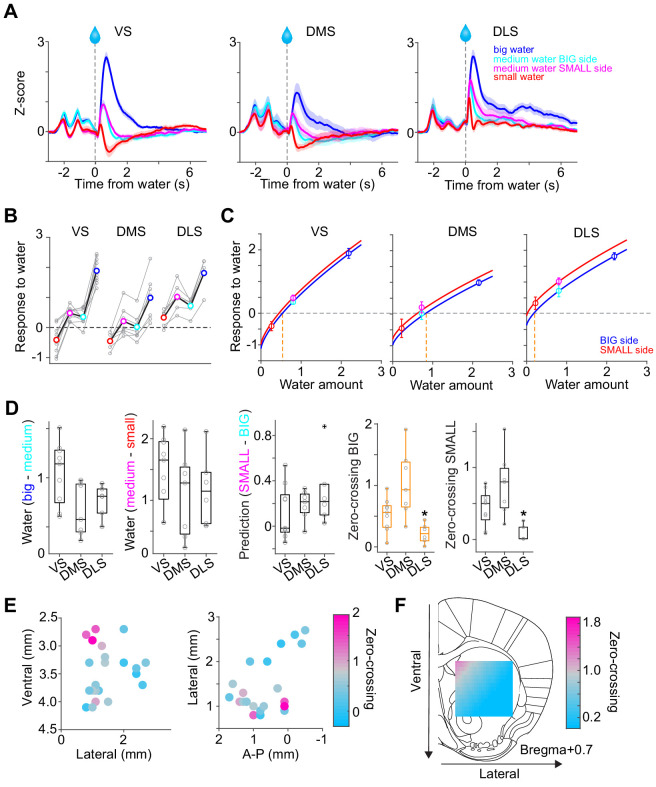

We noticed greatly diminished water responses when the reward amount was not manipulated, that is, when dopamine axon signals were monitored during training sessions before introducing the reward amount manipulations (Figure 3, Figure 3—figure supplement 1). In these sessions, dopamine axons in some animals did not show significant excitation to water rewards (Figure 3A and D). This ‘lack’ of reward response was found in DMS, consistent with previous studies (Parker et al., 2016), but not in VS or DLS (Figure 3G). Surprisingly, however, DMS dopamine axons in the same animals showed clear excitation when reward amount manipulations were introduced, responding particularly strongly to a big reward (Figure 3B and E). Indeed, the response patterns were qualitatively similar across different striatal areas (Figure 4); the reward responses in all the areas were modulated by reward size and expectation, although the whole responses seem to be shifted higher in DLS, and lower in DMS (Figure 4A and B). These results indicate that the stochastic nature of reward delivery in our task enhanced or ‘rescued’ reward responses in dopamine axons in DMS.
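One way to express such an upward or downward shift is the zero-crossing point, defined in the legend of Figure 4 as the water amount at which the dopamine response is zero, estimated from a fitted response function. The form of that function is not given here, so the sketch below assumes a straight line through two (amount, response) points. The response values are hypothetical.

```python
def zero_crossing(amount_lo, resp_lo, amount_hi, resp_hi):
    """Water amount at which a straight line through two
    (amount, response) points crosses zero response."""
    slope = (resp_hi - resp_lo) / (amount_hi - amount_lo)
    if slope == 0:
        raise ValueError("flat response function has no unique zero-crossing")
    return amount_lo - resp_lo / slope


# Hypothetical responses to medium (0.8) and big (2.2) water on the BIG side.
baseline = zero_crossing(0.8, -0.5, 2.2, 1.5)
shifted_up = zero_crossing(0.8, 0.0, 2.2, 2.0)  # same slope, +0.5 offset
print(baseline, shifted_up)
```

With the slope held constant, a uniformly more positive response moves the zero-crossing to a smaller water amount, so the zero-crossing captures an overall shift separately from the sensitivity to reward size.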

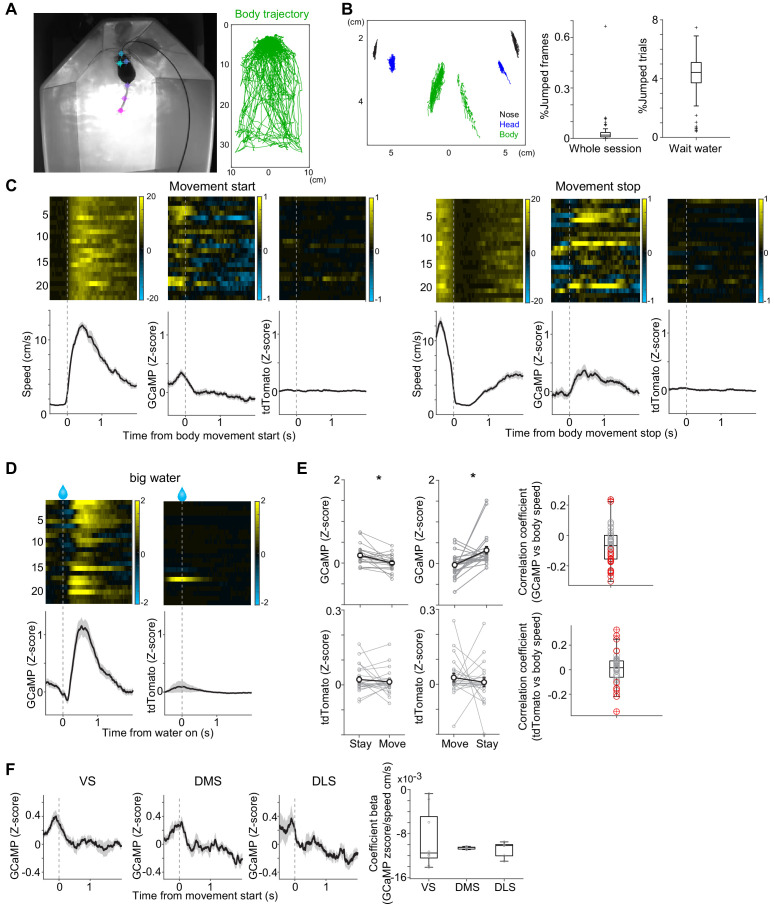

Figure 3. Small responses to fixed amounts of water in dopamine axons in DMS.

(A, D) Dopamine axon responses to water in a fixed reward amount task (pure odor, correct choice). (B, E) Dopamine axon responses to a big amount of water in a variable reward amount task (pure odor, correct choice). (C, F) Dopamine axon responses to a small amount of water in a variable reward amount task (pure odor, correct choice). A-C, dopamine axon activity in an example animal; D-F, another example animal. (G) Responses to water (0.3–1.3 s after water onset) were significantly modulated with striatal location (F(2,19) = 5.1, p=0.016, ANOVA; t(11) = 2.9, p=0.013, DMS versus DLS; t(14) = 1.2, p=0.21, VS versus DMS; t(13) = −2.6, p=0.021, VS versus DLS, two sample t-test; t = 2.4, p=0.023, dorsal-ventral; t = −1.3, p=0.18, anterior-posterior; t = 1.6, p=0.10, medial-lateral, linear regression). The water responses were significantly positive in VS (t(8) = 4.7, p=0.0015) and in DLS (t(5) = 9.7, p=1.9 × 10⁻⁴), but not in DMS (t(6) = 1.2, p=0.26). One sample t-test, n = 9, 7, and 6 animals for VS, DMS, and DLS, respectively.

Figure 3—figure supplement 1. Model responses in a task with fixed amounts of reward.

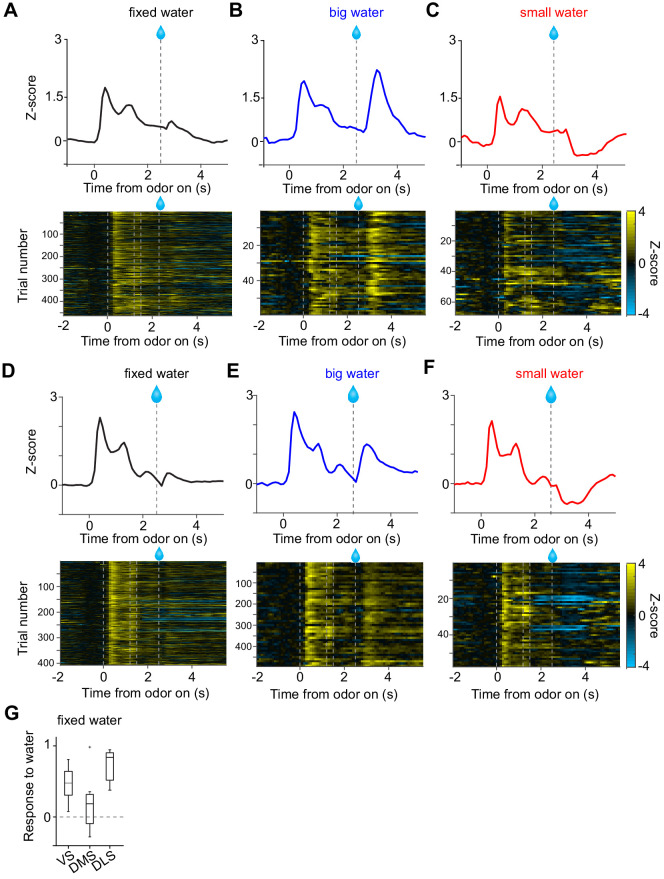

Figure 4. Responses to water in dopamine axons in the striatum.

(A) Activity patterns per different striatal location, aligned at water onset (mean ± SEM, n = 9 for VS, n = 7 for DMS, n = 6 for DLS). (B) Average responses to each water condition in each animal grouped by striatal areas. (C) Average response functions of dopamine axons in each striatal area. (D) Comparison of parameters for each animal grouped by striatal areas. ‘Water big-medium’ is responses to big water minus responses to medium water at the BIG side and ‘Water medium-small’ is responses to medium water minus responses to small water at the SMALL side, normalized with difference of water amounts (2.2 minus 0.8 for BIG and 0.8 minus 0.2 for SMALL). ‘Prediction SMALL-BIG’ is responses to medium water at the SMALL side minus responses to medium water at the BIG side. ‘Zero-crossing BIG’ is the water amount when the dopamine response is zero at the BIG side, which was estimated by the obtained response function. ‘Zero-crossing SMALL’ is the water amount when the dopamine response is zero at the SMALL side, which was estimated by the obtained response function. Response changes by water amounts (BIG or SMALL) or prediction were not significantly modulated by the striatal areas (F(2,19) = 4.33, p=0.028, F(2,19) = 0.87, p=0.43, F(2,19) = 1.11, p=0.34, ANOVA), whereas zero-crossing points (BIG or SMALL) were significantly modulated (F(2,19) = 8.6, p=0.0021, F(2,19) = 8.5, p=0.0023, ANOVA; t(11) = 3.6, p=0.0039, DMS versus DLS; t(14) = −2.4, p=0.028, VS versus DMS; t(13) = 2.4, p=0.030, VS versus DLS for BIG side; t(14) = −1.8, p=0.085, VS versus DMS; t(13) = 3.1, p=0.0076, VS versus DLS; t(11) = 3.88, p=0.0026, DMS versus DLS for SMALL side, two sample t-test). (E) Zero-crossing points were plotted along anatomical location in the striatum. Zero-crossing points were correlated with medial-lateral positions (t = −2.8, p=0.011) and with dorsal-ventral positions (t = −2.7, p=0.014) but not with anterior-posterior positions (t = −0.3, p=0.72). Linear regression. 
(F) Zero-crossing points were fitted with recorded location, and the estimated values in the striatal area were overlaid on the atlas for visualization (see Materials and methods). Trials with all odor types (pure and mixture) were used in this figure. t-test, n = 9, 7, 6 animals for VS, DMS, DLS.

Figure 4—figure supplement 1. Zero-crossing points across the striatum with different methods.

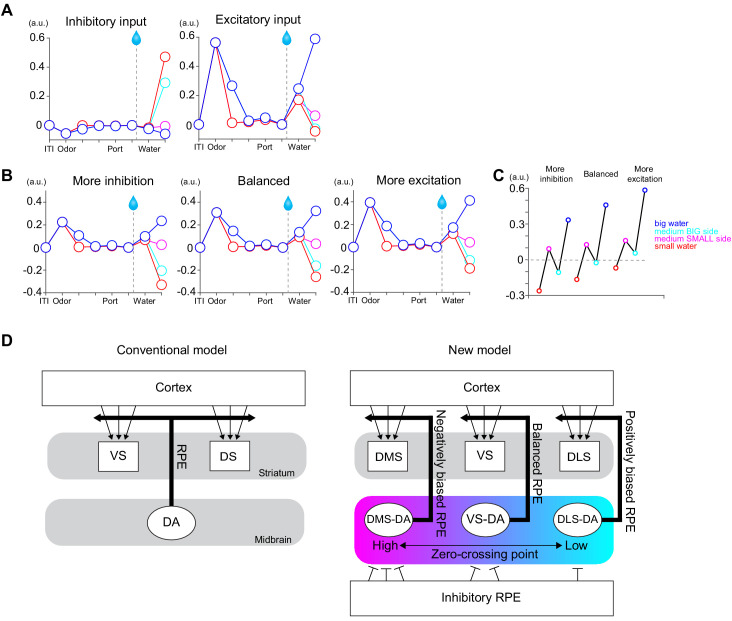

The above results emphasized the overall similarity of reward responses across areas, but some important differences were also observed. Most notably, although a delivery of a small reward caused an inhibition of dopamine axons below baseline in VS and DMS, the activity remained non-negative in DLS. The overall responses tended to be higher in DLS.

In order to understand the diversity of dopamine responses to reward, we examined modulation of dopamine axon activity by different parameters (Figure 4D). First, the effect of the amount of ‘actual’ reward was quantified by comparing responses to different amounts of water for a given cue (i.e. the same expectation). The reward responses in all areas were modulated by reward amounts, with a slightly higher modulation by water amounts in VS (Figure 4D Water big-medium, Water medium-small). Next, the effect of expectation was quantified by comparing the responses to the same amounts of water with prediction of different amounts. Effects of reward size prediction were not significantly different across areas, although VS showed slightly less modulation with more variability (Figure 4D, prediction SMALL-BIG).

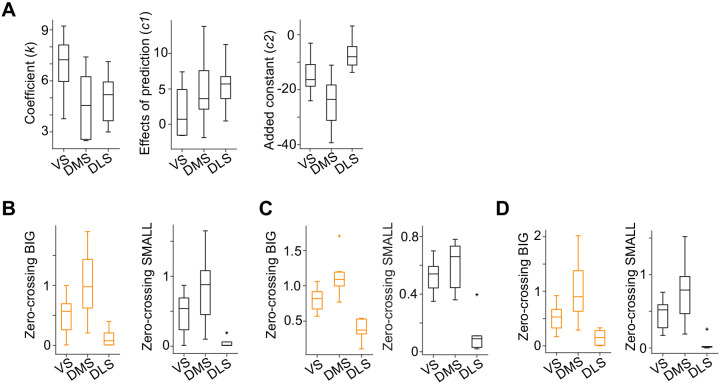

Next, we sought to characterize these differences between areas in simpler terms by fitting response curves (response functions). Previous studies that quantified responses of dopamine neurons to varied amounts of reward under different levels of expectation indicated that their reward responses can be approximated by a common function, with different levels of expectation just shifting the resulting curves up and down while preserving the shape (Eshel et al., 2016). We, therefore, fitted dopamine axon responses with a common response function (a power or linear function) for each expectation level (i.e. separately for BIG and SMALL) while fixing the shape of the function (i.e. the exponent of the power function or the slope of the linear function was fixed, respectively) (Figure 4C, Figure 4—figure supplement 1A). The obtained response functions for the three areas recapitulated the main difference between VS, DMS, and DLS, as discussed above. For one, the response curves of DLS are shifted overall upward. This can be characterized by estimating the amount of water that does not elicit a change in dopamine responses from baseline firing (‘zero-crossing point’ or reversal point). The zero-crossing points, obtained from the fitted curves, were significantly lower in DLS (Figure 4C and D). The results were similar regardless of whether the response function was a power function or a linear function (Figure 4—figure supplement 1B). Similar results were obtained using the aforementioned kernel models in place of the actual activity (Figure 4—figure supplement 1D).
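The zero-crossing estimate can be illustrated with a minimal sketch of the linear variant. It assumes, for illustration only, that the slope is shared between the BIG and SMALL expectation levels and that each level has its own offset; the function name `fit_zero_crossings` and the response values are hypothetical and are not data from this study.

```python
import numpy as np

def fit_zero_crossings(amounts_big, resp_big, amounts_small, resp_small):
    """Fit response = slope * water + offset with one shared slope and one
    offset per expectation level (BIG, SMALL) by ordinary least squares.
    Returns the slope and the water amount at which each fitted line is zero."""
    w = np.concatenate([amounts_big, amounts_small]).astype(float)
    r = np.concatenate([resp_big, resp_small]).astype(float)
    is_big = np.concatenate([np.ones(len(amounts_big)), np.zeros(len(amounts_small))])
    # Design matrix columns: water amount, BIG-side offset, SMALL-side offset.
    X = np.column_stack([w, is_big, 1.0 - is_big])
    slope, off_big, off_small = np.linalg.lstsq(X, r, rcond=None)[0]
    return slope, -off_big / slope, -off_small / slope

# Hypothetical responses, generated from lines with slope 2 that cross zero
# at 1.5 (BIG side) and 0.5 (SMALL side).
slope, zc_big, zc_small = fit_zero_crossings(
    [0.8, 2.2], [-1.4, 1.4],   # medium and big water at the BIG side
    [0.2, 0.8], [-0.6, 0.6],   # small and medium water at the SMALL side
)
print(slope, zc_big, zc_small)
```

In this parameterization, an upward shift of the whole response curve appears as a larger offset and therefore as a smaller zero-crossing point, while the sensitivity to water amount (the slope) is unchanged.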

Since the recording locations varied across animals, we next examined the relationship between recording locations and the zero-crossing points (Figure 4E and F). The zero-crossing points varied both along the medial-lateral and the dorsal-ventral axes (linear regression coefficient; β = −50.8 [zero-crossing point water amounts/mm], t = −2.8, p=0.011 for medial-lateral axis; β = −43.1, t = −2.7, p=0.014 for the dorsal-ventral axis). Examination of each animal confirmed that DMS showed higher zero-crossing points (upper-left in Figure 4E left) whereas DLS showed lower zero-crossing points (upper-right cluster in Figure 4E right).

We next examined whether the difference in zero-crossing points manifested specifically during reward responses or whether it might be explained by recording artifacts; upward and downward shifts in the response function can be caused by a difference in baseline activity before trial start (odor onset), and/or lingering activity of pre-reward activity owing to the relatively slow dynamics of the calcium signals (a combination of calcium concentration and the indicator). To examine these possibilities, the same analysis was performed after subtracting the pre-reward signals (Figure 4—figure supplement 1C). We observed similar or even bigger differences in zero-crossing points (F(2,19) = 20.5, p=1.7 × 10⁻⁵, analysis of variance [ANOVA]). These results indicate that the elevated or decreased responses, characterized by different zero-crossing points, were not due to a difference in ‘baseline’ but were related to the difference that manifests specifically in responses to reward.

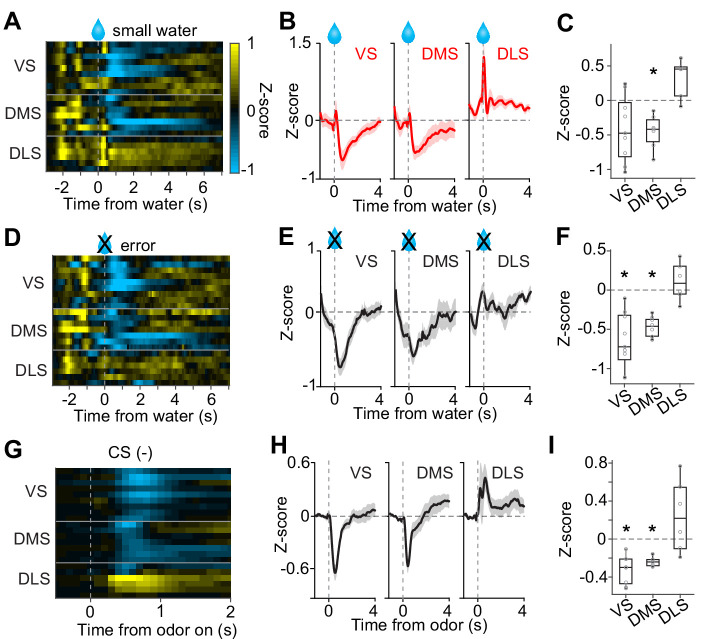

Considerably small zero-crossing points in dopamine axons in DLS were not due to a poor sensitivity to reward amounts nor a poor modulation by expected reward (Figure 4D). Different zero-crossing points, that is shifts of the boundary between excitation and inhibition at reward, suggest biased representation of TD error in dopamine axons across the striatum. In TD error models, difference in zero-crossing points may affect not only water responses but also responses to other events. Thus, the small zero-crossing points in dopamine axons in DLS should yield almost no inhibition following an event that is worse than predicted. To test this possibility, we examined responses to events with lower value than predicted (Figure 5): small water (Figure 5A–C), water omission caused by choice error (Figure 5D–F), and a cue that was associated with no outcome (Figure 5G–I). Consistent with our interpretation of small zero-crossing points, dopamine axons in DLS did not show inhibition in response to outcomes that were worse than predicted while being informative about water amounts.

Figure 5. No inhibition by negative prediction error in dopamine axons in DLS.

(A) Activity pattern in each recording site aligned at small water. (B) Average activity pattern in each brain area (mean ± SEM). (C) Mean responses to small water (0.3–1.3 s after water onset) were negative in VS and DMS (t(8) = −2.3, p=0.044; t(6) = −4.5, p=0.0040, responses versus baseline, one sample t-test), but not in DLS (t(5) = 3.3, p=0.020, responses versus baseline, one sample t-test). The responses were different across striatal areas (F(2,19) = 9.62, p=0.0013, ANOVA; t(13) = −3.4, p=0.0041, VS versus DLS; t(11) = −5.5, p=1.8 × 10⁻⁴, DMS versus DLS; t(14) = 0.20, p=0.83, VS versus DMS, two sample t-test). (D) Activity pattern aligned at water timing in error trials. (E) Average activity pattern in each brain area (mean ± SEM). (F) Mean responses in error trials (0.3–1.3 s after water timing) were negative in VS and DMS (t(8) = −5.4, p=6.2 × 10⁻⁴; t(6) = −10.9, p=3.5 × 10⁻⁵, responses versus baseline, one sample t-test), but not in DLS (t(5) = 1.1, p=0.30, responses versus baseline, one sample t-test). The responses were different across striatal areas (F(2,19) = 14.7, p=1.3 × 10⁻⁴, ANOVA; t(13) = −4.5, p=5.6 × 10⁻⁴, VS versus DLS; t(11) = −5.7, p=1.2 × 10⁻⁴, DMS versus DLS; t(14) = −1.1, p=0.25, VS versus DMS, two sample t-test). (G) Activity pattern aligned at CS(-) in a fixed reward amount task. (H) Average activity pattern in each brain area (mean ± SEM). (I) Mean responses at CS(-) (−1–0 s before odor port out) were negative in VS and DMS (t(8) = −6.7, p=1.4 × 10⁻⁴, VS; t(6) = −13.4, p=1.0 × 10⁻⁵, DMS, responses versus baseline, one sample t-test), but not in DLS (t(5) = 1.5, p=0.17, responses versus baseline, one sample t-test). Responses were different across striatal areas (F(2,19) = 13.1, p=2.5 × 10⁻⁴, ANOVA; t(13) = −4.1, p=0.0012, VS versus DLS; t(11) = −3.3, p=0.0065, DMS versus DLS; t(14) = −1.4, p=0.16, VS versus DMS, two sample t-test). n = 9, 7, 6 animals for VS, DMS, DLS.

Taken together, these results demonstrate that dopamine reward responses in all three areas exhibited characteristics of RPEs. However, relative to canonical responses in VS, the responses were shifted more positively in the DLS and more negatively in the DMS.

TD error dynamics in signaling perceptual uncertainty and cue-associated value

The analyses presented so far mainly focused on phasic dopamine responses time-locked to cues and reward. However, dopamine axon activity also exhibited richer dynamics between these events, which need to be explained. For instance, the signals diverged between correct and error trials even before the actual outcome was revealed (a reward delivery versus a lack thereof) (Figure 2C Choice). This difference between correct and error trials, which is dependent on the strength of sensory evidence (or stimulus discriminability), was used to study how neuronal responses are shaped by ‘confidence’. Confidence is defined as the observer’s posterior probability that their decision is correct given their subjective evidence and their choice (Hangya et al., 2016). A previous study proposed a specific relationship between stimulus discriminability, choice and confidence (Hangya et al., 2016), although the generality of the proposal is not supported (Adler and Ma, 2018; Rausch and Zehetleitner, 2019). Additionally, in our task, the mice combined the information about reward size with the strength of sensory evidence to select an action (confidence, or uncertainty) (Figure 1). The previous analyses did not address how these different types of information affect dopamine activity over time. We next sought to examine the time course of dopamine axon activity in greater detail, and to determine whether a simple model could explain these dynamics.

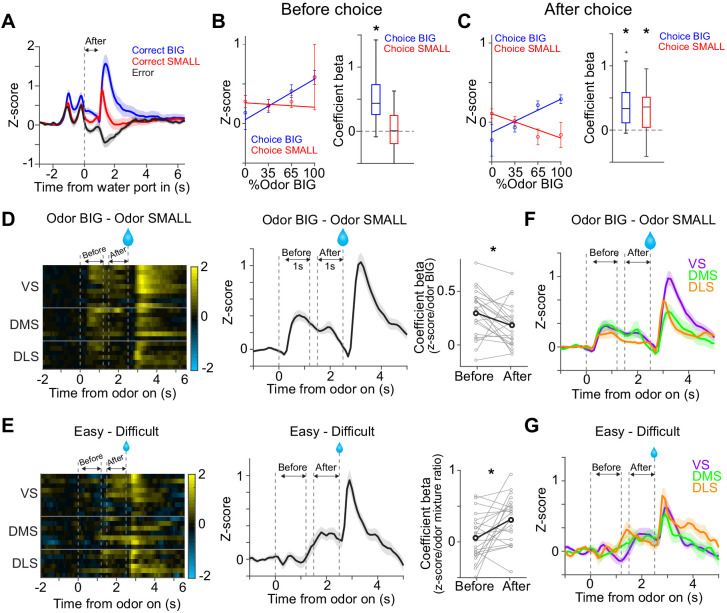

Our task design included two delay periods, imposed before choice movement and water delivery, to improve our ability to separate neuronal activity associated with different processes (Figure 1A). The presence of stationary moments before and after the actual choice movement allows us to separate time windows before and after the animal’s commitment to a certain option. We examined how the activity of dopamine neurons changed before choice movement and after the choice commitment (Figure 6).

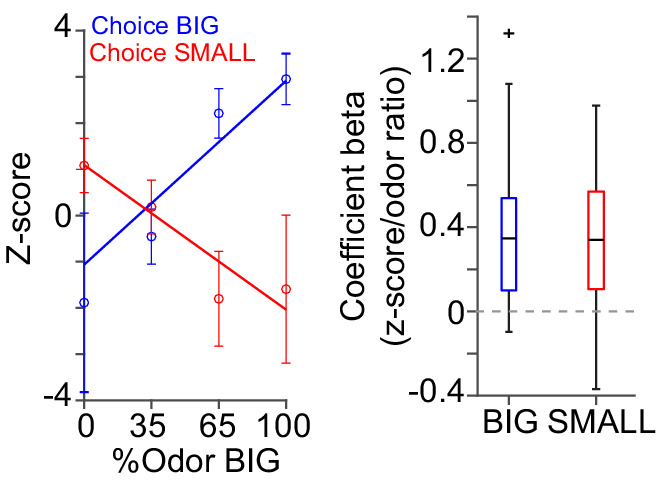

Figure 6. Dopamine signals stimulus-associated value and sensory evidence with different dynamics.

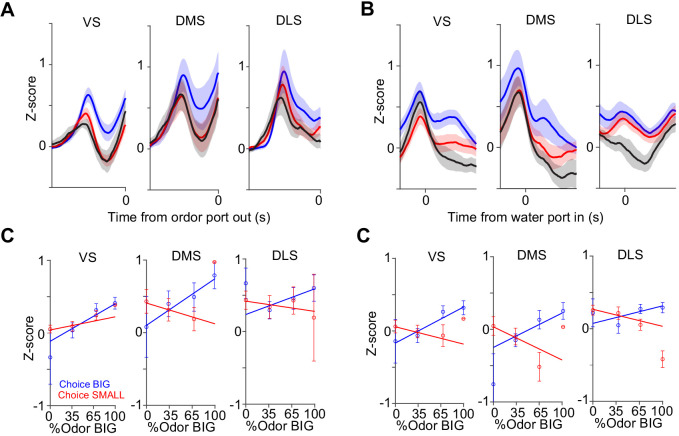

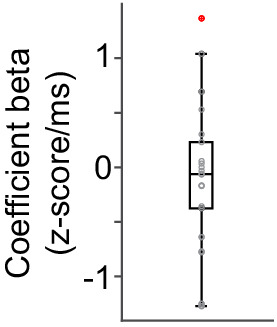

(A) Dopamine axon activity pattern aligned to time of water port entry for all animals (mean ± SEM). (B) Responses before choice (−1–0 s before odor port out) were fitted with linear regression with odor mixture ratio, and coefficient beta (slope) for all the animals are plotted. Correlation slopes were significantly positive for choice of the BIG side (t(21) = 6.0, p=5.6 × 10⁻⁶, one sample t-test), but not significant for choice of the SMALL side (t(21) = −0.8, p=0.42, one sample t-test). (C) Responses after choice (0–1 s after water port in) were fitted with linear regression with stimulus evidence (odor %) and coefficient beta (slope) for all the animals are plotted. Correlation slopes were significantly positive for both choice of the BIG side (t(21) = 5.6, p=1.4 × 10⁻⁵, one sample t-test) and of the SMALL side (t(21) = 4.4, p=2.2 × 10⁻⁴, one sample t-test). (D) Dopamine axon activity with an odor that instructed to choose BIG side (pure odor, correct choice) minus activity with odor that instructed to choose SMALL side (pure odor, correct choice) in each recording site (left), and the average difference in activity was plotted (mean ± SEM, middle). Correlation slopes between responses and stimulus-associated value (water amounts) significantly decreased after choice (t(21) = 2.3, p=0.026, before choice (−1–0 s before odor port out) versus after choice (0–1 s after water port in), pure odor, correct choice, paired t-test). (E) Dopamine axon activity when an animal chose SMALL side in easy trials (pure odor, correct choice) minus activity in difficult trials (mixture odor, wrong choice) in each recording site (left), and the average difference in activity was plotted (mean ± SEM, center). Coefficient beta between responses to odors and sensory evidence (odor %) significantly increased after choice (t(21) = −2.9, p=0.0078, before choice versus after choice, paired t-test). 
(F) Average difference in activity (odor BIG minus odor SMALL) before and after choice in each striatal area. The difference of coefficient (before versus after choice) was not significantly different across areas (F(2,19) = 0.15, p=0.86, ANOVA). (G) Average difference in activity (easy minus difficult) in each striatal area. The difference of coefficient (before versus after choice) was not significantly different across areas (F(2,19) = 1.46, p=0.25, ANOVA). n = 22 animals.

Figure 6—figure supplement 1. Dopamine axon responses before and after choice in each striatal area.

We first examined dopamine axon activity after water port entry (0–1 s after water port entry). In this period, the animals have committed to a choice and are waiting for the outcome to be revealed. Responses following different odor cues were plotted separately for trials in which the animal chose the BIG or SMALL side. The vevaiometric curve (a plot of responses against sensory evidence) followed the expected ‘X-pattern’ with a modulation by reward size (Hirokawa et al., 2019), which matches the expected value for these trial types, or the size of reward multiplied by the probability of receiving a reward, given the presented stimulus and choice (Figure 6C). The latter has been interpreted as the decision confidence (Lak et al., 2017; Lak et al., 2020b). The crossing point of the two lines forming an ‘X’ is shifted to the left in our data because of the difference in the reward size (Figure 6C).

When this analysis was applied to the time period before choice movement (0–1 s before odor port exit), the pattern was not as clear; the activity was monotonically modulated by the strength of sensory evidence (%Odor BIG) only for the BIG choice trials, but not for the SMALL choice trials (Figure 6B). This result is contrary to a previous study that suggested that the dopamine activity reflecting confidence develops even before a choice is made (Lak et al., 2017). We note, however, that the previous study only examined the BIG choice trials, and the results were shown by ‘folding’ the x-axis, that is, by plotting the activity as a function of the stimulus contrast (which would correspond to |%Odor BIG – 50| in our task), with the result matching the so-called ‘folded X-pattern’. We would have gotten the same result, had we plotted our results in the same manner excluding the SMALL choice trials. Our results, however, indicate that a full representation of ‘confidence’ only becomes clear after a choice commitment, leaving open the question what the pre-choice dopamine axon activity really represents.

The aforementioned analyses, using either the kernel regression or actual activity, showed that cue responses were modulated by whether the cue instructed a choice toward the BIG or SMALL side (Figure 2C and F). These results indicate that the information about stimulus-associated values (BIG versus SMALL) affected dopamine neurons earlier than the strength of sensory evidence (or confidence). We next examined the time course of how these two variables affected dopamine axon activity more closely. We computed the difference in dopamine axon activity between trials in which a pure odor instructed the animal to go to the BIG versus the SMALL side. Consistent with the above result, the difference was evident during the cue period, and then gradually decreased after choice movement (Figure 6D). We performed a similar analysis, contrasting between easy and difficult trials (i.e. the strength of sensory evidence). We computed the difference between dopamine axon activity in trials when the animal chose the SMALL side after the strongest versus weaker stimulus evidence (a pure odor that instructs to choose the SMALL side versus an odor mixture that instructs to choose the BIG side). In stark contrast to the modulation by the stimulus-associated value (BIG versus SMALL), the modulation by the strength of stimulus evidence in SMALL trials fully developed only after a choice commitment (i.e. water port entry) (Figure 6E). Across striatal regions, the magnitude and the dynamics of modulation due to stimulus-associated values and the strength of sensory evidence were similar (Figure 6F and G), although we noticed that dopamine axons in DMS showed slightly higher correlation with sensory evidence before choice (Figure 6—figure supplement 1).

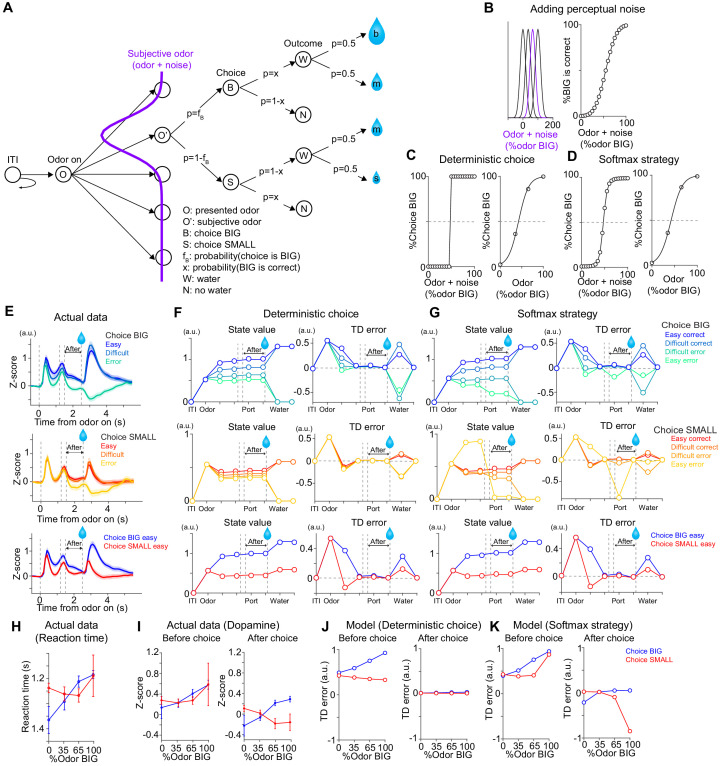

As discussed above, a neural correlate of ‘confidence’ appears at a specific time point (after choice commitment and before reward delivery) or in a specific trial type (when an animal would choose BIG side) before choice. We, therefore, next examined whether a simple model can account for dopamine axon activity more inclusively (Figure 7). To examine how the value and RPE may change within a trial, we employed a Monte-Carlo approach to simulate an animal’s choices assuming that the animal has already learned the task. We used a Monte-Carlo method to obtain the ground truth landscape of the state values over different task states, without assuming a specific learning algorithm.

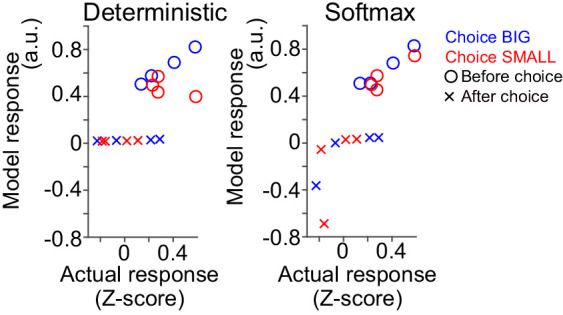

Figure 7. TD error dynamics capture emergence of sensory evidence after stimulus-associated value in dopamine axon activity.

(A) Trial structure in the model. Some repeated states are omitted for clarity. (B–D) Models were constructed by adding perceptual noise with normal distribution to each experimenter's odor (B left, subjective odor), calculating correct choice for each subjective odor (B right), and determining choice for each subjective odor (C or D left) according to choice strategy in the model. The final choice for each objective odor by experimenters (odor %) was calculated as the weighted sum of choice for subjective odors (C or D right). (E) Dopamine axon activity in trials with different levels of stimulus evidence: easy (pure odor, correct choice), difficult (mixture odor, correct choice), and error (mixture odor, error), when animals chose the BIG side (top) and when animals chose the SMALL side (middle). Bottom, dopamine axon activity when animals chose the BIG or SMALL side in easy trials (pure odor, correct choice). (F, G) Time course in each trial of value (left) and TD error (right) of a model. (H) Line plots of actual reaction time from Figure 1G. Y-axes are flipped for better comparison with models. (I) Line plots of actual dopamine axon responses before and after choice from Figure 6B and C. (J, K) Model responses before and after choice were plotted with sensory evidence (odor %). Arbitrary unit (a.u.) was determined by value of standard reward as 1 (see Materials and methods).

Figure 7—figure supplement 1. TD errors with stochastic choice strategies.

Figure 7—figure supplement 2. Comparison of model and actual responses.

Figure 7—figure supplement 3. Correlation between dopamine axon signals and reaction time.

Figure 7—figure supplement 4. Correlation between dopamine axon signals and movement time.

Figure 7—figure supplement 5. Dopamine axon responses while animals stayed at water port.

Figure 7—figure supplement 6. Dopamine axon signals and body movement when a mouse waits for water.

The variability and errors in choice in psychophysical performance are thought to originate in the variability in the process of estimating sensory inputs (perceptual noise) or in the process of selecting an action (decision noise). We first considered a simple case where the model contains only perceptual noise (Green and Swets, 1966). In this model, an internal estimate of the stimulus or a ‘subjective odor’ was obtained by adding Gaussian noise to the presented odor stimulus on a trial-by-trial basis (Figure 7B left). In each trial, the subject chooses deterministically the better option (Figure 7C left) based on the subjective odor and the reward amount associated with each choice (Figure 7B right). The model had different ‘states’ considering N subjective odors (N = 60 and 4 were used and yielded similar results), the available options (left versus right), and a sequence of task events (detection of odor, recognition of odor identity, choice movement, water port entry [choice commitment], Water/No-water feedback, inter-trial interval [ITI]) (Figure 7A). The number of available choices is two after detecting an odor but reduced to 1 (no choice) after water port entry. In each trial, the model receives one of the four odor mixtures, makes a choice, and obtains feedback (rewarded or not). After simulating trials, the state value for each state was obtained as the weighted sum of expected values of the next states, which was computed by multiplying expected values of the next states with probability of transitioning into the corresponding state. After learning, the state value in each state approximates the expected value of future reward, which is the sum of the amount of reward multiplied by probability of the reward (for simplicity, we assumed no temporal discounting of value within a trial). 
After obtaining state values for each state, state values for each odor (‘objective’ odor presented by experimenters) were calculated as the weighted sum of state values over subjective odors. After obtaining state values for each state for each objective odor, we then computed TD errors using a standard definition of TD error which is the difference between the state values at consecutive time points plus received rewards at each time step (Sutton, 1987).
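The procedure described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the simulation code used in the study: the reward sizes, the noise width `SIGMA`, the four mixture ratios in `odors`, and all variable names are hypothetical. Subjective odors are binned into N = 60 states, the probability that the BIG side is correct is estimated in each state by Monte-Carlo sampling, the better option is chosen deterministically, and TD errors are taken as the reward plus the difference between consecutive state values with no temporal discounting.

```python
import numpy as np

rng = np.random.default_rng(0)
R_BIG, R_SMALL = 2.0, 1.0                     # hypothetical reward sizes (a.u.)
SIGMA = 25.0                                  # hypothetical perceptual noise (% odor)
odors = np.array([0.0, 35.0, 65.0, 100.0])    # assumed %Odor BIG of the four mixtures
n_trials, n_states = 200_000, 60              # N = 60 subjective-odor states

# Simulate trials: subjective odor = presented odor + Gaussian noise.
objective = rng.choice(odors, size=n_trials)
subjective = objective + rng.normal(0.0, SIGMA, size=n_trials)
big_correct = (objective > 50.0).astype(float)

# Discretize subjective odors into N states.
edges = np.linspace(subjective.min(), subjective.max(), n_states + 1)
state = np.clip(np.digitize(subjective, edges) - 1, 0, n_states - 1)

# Monte-Carlo estimate of P(BIG side is correct | subjective state).
counts = np.bincount(state, minlength=n_states)
p_big = np.bincount(state, weights=big_correct, minlength=n_states) / np.maximum(counts, 1)

# Expected value of each option and the deterministic (greedy) choice.
v_big, v_small = p_big * R_BIG, (1.0 - p_big) * R_SMALL
choose_big = v_big >= v_small
v_state = np.where(choose_big, v_big, v_small)   # state value per subjective state

# State value for each objective odor: average over its subjective odors.
v_trial = v_state[state]
v_objective = np.array([v_trial[objective == o].mean() for o in odors])

def td_errors(values, rewards):
    """delta[t] = rewards[t] + values[t + 1] - values[t], no temporal discounting."""
    values = np.asarray(values, dtype=float)
    return np.asarray(rewards, dtype=float) + values[1:] - values[:-1]

# Easy BIG trial: trial start -> odor recognized -> choice committed -> after water.
# Simplification: within a subjective state the greedy choice is fixed, so the
# value is repeated at commitment (conditioning on the choice is ignored here).
values = [v_trial.mean(), v_objective[-1], v_objective[-1], 0.0]
deltas = td_errors(values, rewards=[0.0, 0.0, R_BIG])
print(np.round(v_objective, 2), np.round(deltas, 2))
```

Because each state value is the in-sample average of the reward obtained from that state, the mean of `v_trial` equals the mean reward actually collected under the greedy policy, which is the sense in which the state value approximates the expected future reward.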

We first simulated the dynamics of state values and TD errors when the model made a correct choice in easy trials, choosing either the BIG or SMALL side (Figure 7F bottom, blue versus red). As expected, the state values for different subjective odors diverged as soon as an odor identity was recognized, and the differences between values stayed constant as the model received no further additional information before acquisition of water. TD errors, which are the derivative of state values, exhibited a transient increase after odor presentation, and then returned to their baseline levels (near zero), remaining there until the model received a reward. Next, we examined how the strength of sensory evidence affected the dynamics of value and TD errors (Figure 7F and J). Notably, after choice commitment, TD error did not exhibit the additional modulation by the strength of sensory evidence, or a correlate of confidence (Figure 7F right and 7J right), contrary to our data (Figure 7E and I right). Thus, this simple model failed to explain aspects of dopamine axon signals that we observed in the data.

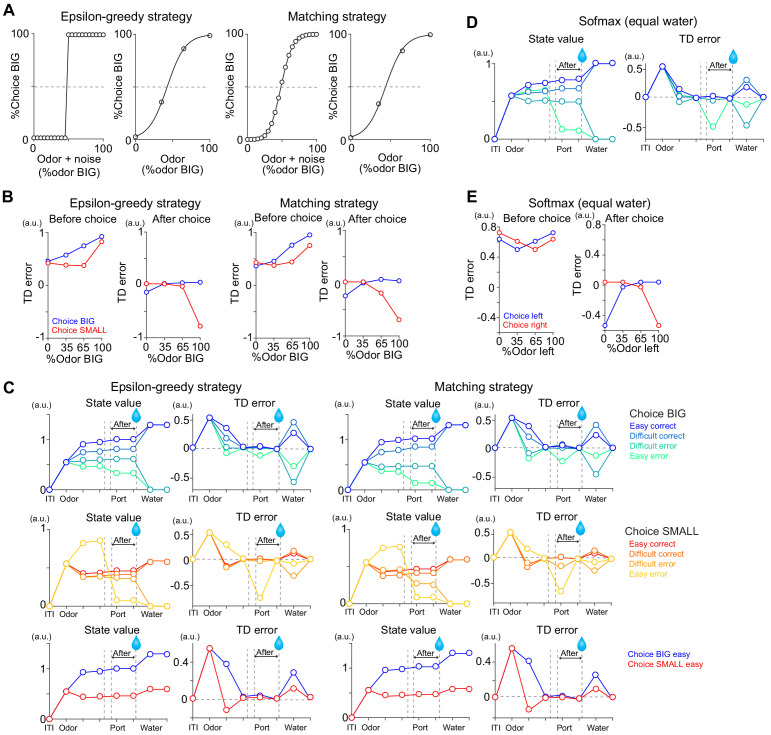

In the first model, we assumed that the model picks the best option given the available information in every trial (Figure 7C). In this deterministic model, all of the errors in choice are attributed to perceptual noise. We next considered a model that included decision noise in addition to the perceptual noise (Figure 7D). Here decision noise refers to some stochasticity in the action selection process, and may arise from errors in an action selection mechanism or exploration of different options, and can be modeled using different methods depending on rationale behind the noise. Here, we present results based on a ‘softmax’ decision rule, in which a decision variable (in this case, the difference in the ratio of the expected values at the two options) was transformed into the probability of choosing a given option using a sigmoidal function (e.g. Boltzmann distribution) (Sutton and Barto, 1998). We also tested other stochastic decision rules such as Herrnstein’s matching law (Herrnstein, 1961) or ε-greedy exploration (randomly selecting an action in a certain fraction [ε] of trials) (Sutton and Barto, 1998; Figure 7—figure supplement 1A–C).
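The three stochastic decision rules can be written compactly as functions that return the probability of choosing the BIG side. This is a sketch for illustration: the function names, the inverse temperature `beta`, and the value of `epsilon` are hypothetical, and the softmax here acts on the raw difference in expected values, which may differ from the exact decision variable used in the model.

```python
import numpy as np

def p_big_softmax(v_big, v_small, beta=2.0):
    """Logistic (two-option Boltzmann) rule on the value difference."""
    return 1.0 / (1.0 + np.exp(-beta * (np.asarray(v_big) - np.asarray(v_small))))

def p_big_matching(v_big, v_small):
    """Matching: choice probability proportional to the expected value."""
    v_big, v_small = np.asarray(v_big, dtype=float), np.asarray(v_small, dtype=float)
    return v_big / (v_big + v_small)

def p_big_epsilon_greedy(v_big, v_small, epsilon=0.1):
    """Pick the better option, except for a random pick in a fraction epsilon of trials."""
    greedy = (np.asarray(v_big) >= np.asarray(v_small)).astype(float)
    return (1.0 - epsilon) * greedy + epsilon / 2.0

# Hypothetical expected values for three subjective odors (SMALL-like to BIG-like).
v_big = np.array([0.2, 1.0, 1.8])
v_small = np.array([0.9, 0.5, 0.1])
for rule in (p_big_softmax, p_big_matching, p_big_epsilon_greedy):
    print(rule.__name__, np.round(rule(v_big, v_small), 2))
```

All three rules leave a nonzero probability of choosing the option with the lower expected value, which is the only property the subsequent argument relies on.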

Interestingly, we were able to explain various peculiar features of dopamine axon signals described above simply by adding some stochasticity in action selection (Figure 7G and K). Note that the main free parameter of the above models is the width of the Gaussian noise, which determines the ‘slope’ of the psychometric curve, and was chosen based merely on the behavioral performance, but not the neural data. When the model chose the BIG side, state value at odor presentation was roughly monotonically modulated by the strength of sensory evidence similar to the above model (Figure 7G top left). When the model chose the SMALL side, however, the relationship between the strength of sensory evidence and value was more compromised (Figure 7G middle left). As a result, TD error did not show a monotonic relationship with sensory evidence before choice (Figure 7G middle right and 7K left), similar to actual dopamine axon responses (Figure 7E middle and 7I left), which was reminiscent of the reaction time pattern (Figure 7H). On the other hand, once a choice was committed, the model exhibited interesting dynamics very different from the above deterministic model. After choice commitment, expected value was monotonically modulated by the strength of sensory evidence for both BIG and SMALL side choices (Figure 7G top and middle left, After). Further, because of the introduced stochasticity in action selection, the model sometimes chose a suboptimal option, resulting in a drop in the state value. This, in turn, caused TD error to exhibit an ‘inhibitory dip’ once the model ‘lost’ a better option (Figure 7G right), similar to the actual data (Figure 7E and I). This effect was strong particularly when the subjective odor instructed the BIG side but the model ended up choosing the SMALL side. For a similar reason, TD error showed a slight excitation when the model chose a better option (i.e. lost a worse option). 
The observed features in TD dynamics were not dependent on exact choice strategy: softmax, matching, and ε-greedy, all produced similar results (Figure 7—figure supplement 1B,C). This is because, with any strategy, after commitment of choice, the model loses another option with a different value, which results in a change in state value. These results are in stark contrast to the first model in which all the choice errors were attributed to perceptual noise (Figure 7—figure supplement 2, difference of Pearson's correlation with actual data p=0.0060, n = 500 bootstrap, see Materials and methods).
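The origin of the dip and the slight excitation at choice commitment can be checked with a short worked example. It is a sketch under assumed numbers: the reward sizes, the inverse temperature `BETA`, and the function name `commitment_td_errors` are hypothetical. Before commitment, the state value is the average of the two option values weighted by the choice probabilities; at commitment it jumps to the value of the chosen option, and the TD error is that jump.

```python
import numpy as np

R_BIG, R_SMALL = 2.0, 1.0      # hypothetical reward sizes (a.u.)
BETA = 1.0                     # hypothetical softmax inverse temperature

def commitment_td_errors(p_big_correct):
    """TD error at choice commitment for a subjective odor with the given
    probability that the BIG side is correct, under a softmax choice rule."""
    v_big = p_big_correct * R_BIG                    # value after committing to BIG
    v_small = (1.0 - p_big_correct) * R_SMALL        # value after committing to SMALL
    p_choose_big = 1.0 / (1.0 + np.exp(-BETA * (v_big - v_small)))
    # Pre-choice state value: both options are still available.
    v_before = p_choose_big * v_big + (1.0 - p_choose_big) * v_small
    return p_choose_big, v_big - v_before, v_small - v_before

# A subjective odor that favors the BIG side.
p, delta_big, delta_small = commitment_td_errors(0.9)
print(f"P(choose BIG)={p:.2f}, TD error if BIG={delta_big:+.2f}, if SMALL={delta_small:+.2f}")
```

With these numbers the TD error is about +0.26 when the model commits to the BIG side and about −1.44 when it commits to the SMALL side: losing the better option produces a large dip, whereas losing the worse option produces only a slight excitation. The two TD errors average to zero when weighted by the choice probabilities, as expected for a prediction error.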

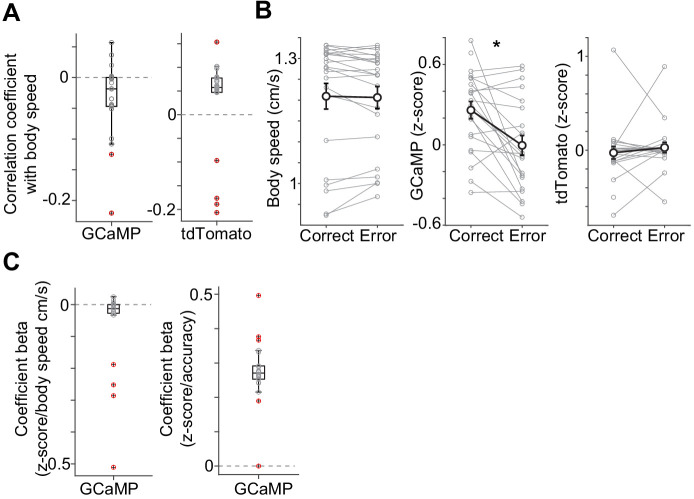

The observed activity pattern in each time window is potentially caused by physical movement. For example, the qualitative similarity of reaction time and dopamine axon activity before choice (Figures 1G, 6B and 7H,I) suggests some interaction. However, we did not observe trial-to-trial correlation between reaction time and dopamine axon activity (Figure 7—figure supplement 3). On the other hand, we observed a weak correlation between movement time (from odor port exit to water port entry) and dopamine axon activity after choice (Figure 7—figure supplement 4). However, movement time did not show modulation by sensory evidence (Figure 7—figure supplement 4B) contrary to dopamine axon activity (Figure 6C), and dopamine axon activity was correlated with sensory evidence even after normalizing with movement time (Figure 7—figure supplement 4C). Since animals occasionally exit the water port prematurely in error trials (Figure 1H), we performed the same analyses as Figure 6C excluding trials where animals exited the water port prematurely. The results, however, did not change (Figure 7—figure supplement 5). While waiting for water after choice, the animal's body occasionally moved while the head stayed in a water port. We observed very small correlation between body displacement distances (body speed) and dopamine axon signals (Figure 7—figure supplement 6). However, this is potentially caused by motion artifacts in fluorometry recording, because we also observed significant correlation between dopamine axon signals and control fluorescence signals in each animal, although the direction was not consistent (Figure 7—figure supplement 6A). Importantly, neither body movement nor control signals showed modulation by choice accuracy (correct versus error) (Figure 7—figure supplement 6B). 
We performed linear regression of dopamine axon signals with body movement and accuracy with elastic net regularization, and dopamine axon signals were still correlated with accuracy (Figure 7—figure supplement 6C). These results indicate that the dopamine axon activity pattern we observed cannot be explained by gross body movement per se.

In summary, we found that a standard TD error, computing the moment-by-moment changes in state value (or, the expected future reward), can capture various aspects of dynamics in dopamine axon activity observed in the data, including the changes that occur before and after choice commitment, and the detailed pattern of cue-evoked responses. These results were obtained as long as we introduced some stochasticity in action selection (decision noise), regardless of how we did it. The state value dynamically changes during the performance of the task because the expected value changes according to an odor cue (i.e. strength of sensory evidence and stimulus-associated values) and the changes in potential choice options. A drop of the state value and TD error at the time of choice commitment occurs merely because the state value drops when the model chose an option that was more likely to be an error. Further, a correlate of ‘confidence’ appears after committing a choice, merely because at that point (and only at that point), the state value becomes equivalent to the reward size multiplied with the confidence, that is the probability of reward given the stimulus and the choice. This means that, as long as the animal has appropriate representations of states, a representation of ‘confidence’ can be acquired through a simple associative process or model-free reinforcement learning without assuming other cognitive abilities such as belief states or self-monitoring (meta-cognition). In total, not only the phasic responses but also some of the previously unexplained dynamic changes can be explained by TD errors computed over the state value, provided that the model contains some stochasticity in action selection in addition to perceptual noise. Similar dynamics across striatal areas (Figure 6) further support the idea that dopamine axon activity follows TD error of state values in spite of the aforementioned diversity in dopamine signals.

Discussion

In the present study, we monitored dopamine axon activity in three regions of the striatum (VS, DMS and DLS) while mice performed instrumental behaviors involving perceptual and value-based decisions. In addition to phasic responses associated with reward-predictive cues and reward, we also analyzed more detailed temporal dynamics of the activity within a trial. We present three main conclusions. First, contrary to the current emphasis on diversity in dopamine signals (and therefore, to our surprise), we found that dopamine axon activity in all three areas exhibited similar dynamics. Overall, dopamine axon dynamics can be explained approximately by the TD error, which calculates moment-by-moment ‘changes’ in the expected future reward (i.e. state value) in our choice paradigm. Second, although previous studies propose confidence as an additional variable in dopamine signals (Engelhard et al., 2019; Lak et al., 2017), correlates of confidence/choice accuracy naturally emerge in the dynamics of the TD error. Thus, mere observation of correlates of confidence in dopamine activity does not necessarily indicate that dopamine neurons multiplex information. Third, interestingly, however, our results showed consistent deviations from what the TD model predicts. As reported previously (Parker et al., 2016), during choice movements, contra-lateral orienting movements caused a transient activation in the DMS, whereas this response was negligible in VS and DLS. As pointed out in a previous study (Lee et al., 2019), this movement-related activity in DMS is unlikely to be a part of RPE signals. Nonetheless, dopamine axon signals overall exhibited temporal dynamics that are predicted by TD errors, yet the representation of TD errors was biased depending on striatal areas. The activity during the reward period was biased toward positive responses in the DLS, compared to other areas; dopamine axon signals in DLS did not exhibit a clear inhibitory response (‘dopamine dip’) even when the actual reward was smaller than expected, or even when the animal did not receive a reward, despite our observations that dopamine axons in VS and DMS exhibited clear inhibitory responses in these conditions.

The positively or negatively biased reward responses in DLS and DMS can be regarded as important departures from TD errors as they were originally formulated (Sutton and Barto, 1998). However, activation of dopamine neurons in both VTA and SNc is known to reinforce preceding behaviors (Ilango et al., 2014; Keiflin et al., 2019; Lee et al., 2020; Saunders et al., 2018), indicating that they share, at least, the ability to function as reinforcement. Given the overall similarity between dopamine axon signals in the three areas of the striatum, these signals can be regarded as modified TD error signals. It is of note that our analyses are agnostic to how TD errors or underlying values are learned or computed: it may involve a model-free mechanism, as the original TD learning algorithm was formalized, or other mechanisms (Akam and Walton, 2021; Langdon et al., 2018; Starkweather et al., 2017). In any case, the different baselines in TD error-like signals that we observed in instrumental behaviors can provide specific constraints on the behaviors learned through dopamine-mediated reinforcement in these striatal regions.

Confidence and TD errors

Recent studies reported that dopamine neurons are modulated by various variables (Engelhard et al., 2019; Watabe-Uchida and Uchida, 2018). One such important variable is confidence or choice accuracy (Engelhard et al., 2019; Lak et al., 2017; Lak et al., 2020b). Distinct from ‘certainty’ that approximates probability broadly over sensory and cognitive variables, confidence often implies a metacognition process that specifically validates an animal's own decision (Pouget et al., 2016). Confidence can affect an animal's decision-making by modulating both decision strategy and learning. While there are different ways to compute confidence (Fleming and Daw, 2017), a previous study concluded that dopamine neurons integrate decision confidence and reward value information, based on the observation that dopamine responses were correlated with levels of sensory evidence (Lak et al., 2017). However, the interpretation is controversial since the results can be explained in multiple ways, for instance, with simpler measurements of ‘difficulty’ in a signal detection theory (Adler and Ma, 2018; Fleming and Daw, 2017; Insabato et al., 2016; Kepecs et al., 2008). More importantly, previous studies are limited in that (1) they focused on somewhat arbitrarily chosen trial types to demonstrate confidence-related activity in dopamine neurons (Lak et al., 2017), and that (2) they did not consider temporal dynamics of dopamine signals within a trial. Our analysis revealed that dopamine axon activity was correlated with sensory evidence only in a specific trial type and/or in a specific time window. At a glance, dopamine axon activity patterns may appear to be signaling distinct variables at different timings. However, we found that the apparently complex activity pattern across different trial types and time windows can be inclusively explained by a single quantity (TD error) in one framework (Figure 7). Importantly, the dynamical activity pattern became clear only if all the trial types were examined. We note that state value and sensory evidence roughly covary in a limited trial type (trials with BIG choice), while previous studies mainly focused on trials with BIG choice and responses in a later time window (Lak et al., 2017). Our results indicate that the mere existence of correlates of confidence or choice accuracy in dopamine activity is not evidence for coding of confidence, belief state or metacognition, as claimed in previous studies (Lak et al., 2017; Lak et al., 2020b) using a task similar to ours.

Whereas our model takes a primitive strategy to estimate state value, state value can also be estimated with different methods. The observed dopamine axon activity resembled TD errors in our model if the agent's choice strategy is not deterministic (i.e. there is decision noise). However, confidence measurements in previous models (Hirokawa et al., 2019; Kepecs et al., 2008; Lak et al., 2017) used a fixed decision variable, and hence did not consider dynamics or the probability that the animal's choice does not follow sensory evidence. A recent study proposed a different way of computing confidence by dynamically tracking states independent of decision variables (Fleming and Daw, 2017). A dynamical decision variable in a drift diffusion model also predicts occasional dissociation of confidence from choice (van den Berg et al., 2016; Kiani and Shadlen, 2009). While such dynamical measurements of confidence might be useful to estimate state value, confidence itself cannot be directly converted to state value because state value considers reward size and other choices as well. Interestingly, it was also proposed that a natural correlate of choice accuracy in primitive TD errors would be useful information in other brain areas to detect action errors (Holroyd and Coles, 2002). Together, our results and these models underscore the importance of considering moment-by-moment dynamics and the underlying computation.

Similarity of dopamine axon signals across the striatum

Accumulating evidence indicates that dopamine neurons are diverse in many respects including anatomy, physiological properties, and activity (Engelhard et al., 2019; Farassat et al., 2019; Howe and Dombeck, 2016; Kim et al., 2015; Lammel et al., 2008; Matsumoto and Hikosaka, 2009; Menegas et al., 2015; Menegas et al., 2017; Menegas et al., 2018; Parker et al., 2016; da Silva et al., 2018; Watabe-Uchida and Uchida, 2018). Our study is one of the first to directly compare dopamine signals in three different regions of the striatum during an instrumental behavior involving perceptual and value-based decisions. We found that dopamine axon activity in the striatum is surprisingly similar, following TD error dynamics in our choice paradigm.

Our observation of similarity across striatal areas (Figure 4A) may give the impression that these results are different from previous reports. We note, however, that our ability to observe similarities in dopamine RPE signals depended on parametric variations of experimental parameters. For instance, if we only had sessions with equal reward amount on both sides (i.e. our training sessions), we might have concluded that DMS is unique in greatly lacking reward responses. However, this was not true: the use of probabilistic reward with varying amounts allowed us to reveal similar response functions across these areas as well as the specific difference (overall activation level). We also note that our results included movement-related activity which cannot readily be explained by TD errors (Lee et al., 2019), in particular, contra-lateral turn-related activity in DMS, consistent with a previous study (Parker et al., 2016). However, systematic examination of striatal regions showed that such movement-related activity was negligible in other areas such as DLS and VS. The turning movement is one of the most gross task-related movements in our task, yet dopamine signals representing this movement were not widespread, unlike TD error-like activity. Taken together, although we cannot exclude the possibility that dopamine activity in DLS is modulated by a specific movement in particular conditions, our results do not support the idea that TD error-like activity in DLS is generated by a completely different mechanism or based on other types of information than other dopamine neuron populations.

Our results in DMS are consistent with previous studies that reported small and somewhat mysterious responses to reward (Brown et al., 2011; Parker et al., 2016). We noticed that while animals were trained with fixed amounts of water, some of the dopamine axon signals in DMS did not exhibit clear responses to water, and on average water responses were smaller than in other areas (Figure 3, Figure 3—figure supplement 1). Once reward amounts became probabilistic, dopamine axons in DMS showed clear responses according to RPE (Figure 3, Figure 4), similar to the previous observation that dopamine responses to reward in DMS emerged after contingency change (Brown et al., 2011). Why are reward responses in DMS sometimes observed and sometimes not? We found that the response function for water delivery in dopamine axons in different striatal areas showed different zero-crossing points, the boundary between excitatory and inhibitory responses (Figure 4). The results suggested that dopamine axons in DMS use a higher standard (requiring larger amounts of reward to excite). In other words, dopamine signals in DMS use a strict criterion to be excited. Higher criteria in DMS may partly explain the observation that some dopamine neurons do not show a clear excitation by reward, such as in the case of our recording without reward amount modulations (Figure 3A,D,G). However, considering that dopamine responses to free water were also negligible in DMS in some studies (Brown et al., 2011; Howe and Dombeck, 2016), whether dopamine neurons respond to reward likely depends critically on task structures and training history. One potential idea is that dopamine in DMS has a higher excitation threshold because the system predicts upcoming reward optimistically, along not only size but also time, causing smaller RPE (predicting away) easily with little evidence. Optimistic expectation echoes the idea of Watkins' Q-learning algorithm (Watkins, 1989; Watkins and Dayan, 1992), where an agent uses the maximum value among values of potential actions to compute RPEs, although we did not explore action values explicitly in this study. Future studies are needed to find the functional meaning of optimism in dopamine neurons and to examine whether the optimism is responsible for a specific learning strategy in DMS. We also have to point out that because fluorometry in our study only recorded average activity of dopamine axons, we likely missed diversity within dopamine axons in a given area. It will be important to further examine in what conditions these dopamine neurons lose responses to water, or whether there are dopamine neurons which do not respond to reward in any circumstances.
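The relationship between an optimistic prediction and a higher zero-crossing point can be sketched as follows. This is a one-step simplification loosely inspired by the max operator in Q-learning, not the full Q-learning update and not a model fitted in this study; the function names and the numerical values are hypothetical. The prediction of upcoming reward is either the maximum over action values (optimistic) or the average weighted by choice probabilities, and the RPE is the received reward minus that prediction, so the prediction itself is the reward amount at which the response crosses zero.

```python
def predicted_value(action_values, choice_probs=None):
    """Prediction of upcoming reward from the values of available actions.

    With choice_probs omitted, the prediction is the maximum action value
    (optimistic). Otherwise it is the average weighted by choice_probs.
    """
    if choice_probs is None:
        return max(action_values)
    return sum(p * q for p, q in zip(choice_probs, action_values))


def reward_prediction_error(reward, prediction):
    """RPE: positive above the prediction, negative below it."""
    return reward - prediction


# Hypothetical values of two actions and an undecided (50/50) policy.
action_values = [1.0, 3.0]
choice_probs = [0.5, 0.5]
reward = 2.5

optimistic = predicted_value(action_values)                 # max over actions
averaged = predicted_value(action_values, choice_probs)     # policy-weighted

rpe_optimistic = reward_prediction_error(reward, optimistic)
rpe_averaged = reward_prediction_error(reward, averaged)
```

In this example the same reward yields a negative RPE under the optimistic prediction and a positive RPE under the averaged prediction, that is, the optimistic system requires a larger reward to be excited.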

In contrast to DMS, we observed reliable excitation to water reward in dopamine axons in DLS. However, because we only recorded population activity of dopamine axons, our results do not exclude the possibility that some dopamine neurons that do not respond to reward also project to DLS. Alternatively, the previous observation that some dopamine neurons in the substantia nigra show small or no excitation to reward (da Silva et al., 2018) may mainly come from DMS-projecting dopamine neurons or another subpopulation of dopamine neurons that project to the tail of the striatum (TS) (Menegas et al., 2018), but not DLS. Notably, the study (da Silva et al., 2018) also used predictable reward (fixed amounts of water with 100% contingency) to examine dopamine responses to reward. In contrast, we found that dopamine axons in DLS show strong modulation by reward amounts and prediction, and their dynamics resemble TD errors in our task. Our observation suggests that the lack of reward omission responses and excitation by even small rewards in instrumental tasks is key for the function of dopamine in DLS.

Positively biased reinforcement signals in DLS dopamine

It has long been observed that the activity of many dopamine neurons exhibits a phasic inhibition when an expected reward is omitted or when the reward received is smaller than expected (Hart et al., 2014; Schultz et al., 1997). This inhibitory response to negative RPEs is one of the hallmarks of dopamine RPE signals. Our finding that dopamine axon signals in DLS largely lack these inhibitory dips (Figure 4 and Figure 5) has profound implications for what types of behaviors are learned through DLS dopamine signals as well as what computational principles underlie reinforcement learning in DLS.