Abstract

Objectives

We investigated the ability of single-sided deaf listeners implanted with a cochlear implant (SSD-CI) to (i) determine the front-back and left-right location of sound sources presented from loudspeakers surrounding the listener and (ii) use small head rotations to further improve their localization performance. The resulting behavioral data were used for further analyses investigating the value of so-called “monaural” spectral shape cues for front-back sound source localization.

Design

Eight SSD-CI patients were tested with their CI on and off. Eight NH listeners, with one ear plugged during the experiment, and another group of eight NH listeners, with neither ear plugged, were also tested. Gaussian noises of three-second duration were bandpass filtered to 2–8 kHz and presented from one of six loudspeakers surrounding the listener, spaced 60° apart. Perceived sound source localization was tested under conditions where the listeners faced forward with the head stationary, and under conditions where they rotated their heads between ±30°.

Results

(i) Under stationary listener conditions, unilaterally-plugged NH listeners and SSD-CI listeners (with their CIs both on and off) were nearly at chance in determining the front-back location of high-frequency sound sources. (ii) Allowing rotational head movements improved performance in both the front-back and left-right dimensions for all listeners. (iii) For SSD-CI patients with their CI turned off, head rotations substantially reduced front-back reversals, and the combination of turning on the CI with head rotations led to near-perfect resolution of front-back sound source location. (iv) Turning on the CI also improved left-right localization performance. (v) As expected, NH listeners with both ears unplugged localized to the correct front/back and left-right hemifields both with and without head movements.

Conclusions

Although SSD-CI listeners demonstrate a relatively poor ability to distinguish the front-back location of sound sources when their head is stationary, their performance is substantially improved with head movements. Most of this improvement occurs when the CI is off, suggesting that the NH ear does most of the “work” in this regard, though some additional gain is introduced with turning the CI on. During head turns, these listeners appear to primarily rely on comparing changes in head position to changes in monaural level (ML) cues produced by the direction-dependent attenuation of high-frequency sounds that results from acoustic head shadowing. In this way, SSD-CI listeners overcome limitations to the reliability of monaural spectral and level cues under stationary conditions. SSD-CI listeners may have learned, through chronic monaural experience before CI implantation, or with the relatively impoverished spatial cues provided by their CI-implanted ear, to exploit the ML cue. Unilaterally-plugged NH listeners were also able to use this cue during the experiment to realize approximately the same magnitude of benefit from head turns just minutes after plugging, though their performance was less accurate than that of the SSD-CI listeners, both with and without their CI turned on.

I. INTRODUCTION

Sound source localization is important in real-world scenarios for two primary reasons. First, when a listener can perceptually distinguish the location of a talker (s)he wants to listen to from the locations of other talkers (s)he wishes to ignore, speech comprehension is often improved (e.g., Bronkhorst (2015); Freyman et al. (1999), but see Edmonds and Culling (2005); Middlebrooks and Onsan (2012)). Thus, communication in busy auditory environments may become easier (cf. Firszt et al., 2017). Second, audition allows listeners to localize salient objects that are outside the field of vision, such as a bus coming from behind. For a general review of auditory localization, see Middlebrooks and Green (1991).

A. Auditory spatial ambiguities and listener head movements

The majority of what we know about sound source localization, regardless of the population, is from laboratory conditions where both the listener and sound source remain stationary, yet both listeners and sound sources move quite often in real-world scenarios. Listeners must localize sound sources in the context of the surrounding environment and distinguish sound source movements from listener movements. Both require the integration of an estimate of the listener’s head position, relative to the surrounding environment, with an auditory estimate of the sound source’s location relative to the listener’s head (Wallach, 1940; Yost et al., 2019). While listener movement may make this integration more complex, it also introduces opportunities to resolve auditory spatial ambiguities.

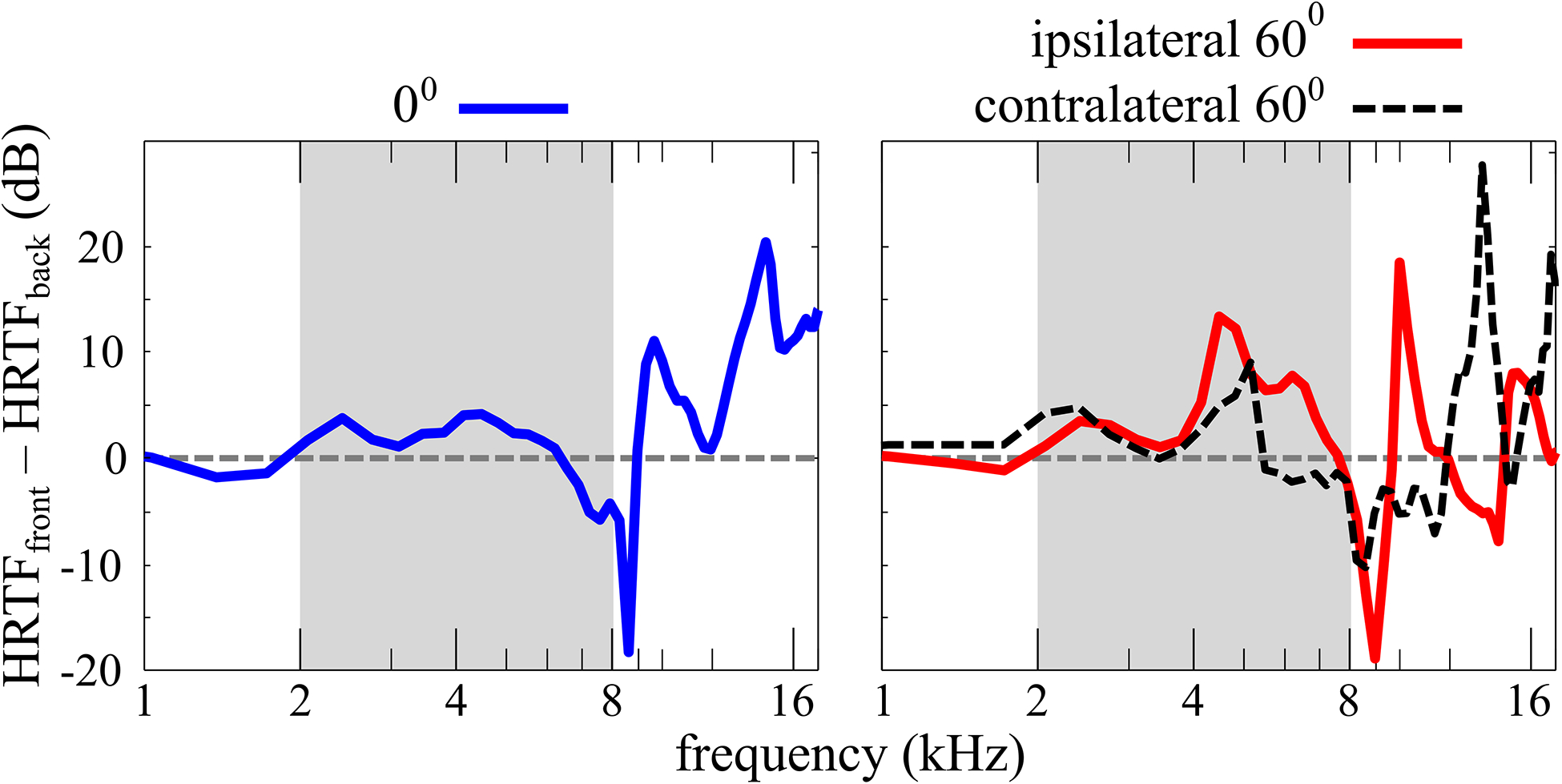

One such ambiguity arises in determining the front-back location of sound sources. Interaural differences of time (ITD) and level (ILD) specify a “cone-shaped” locus of positions that all have roughly the same angular relation to the listener’s two ears and therefore roughly the same interaural difference cues, especially for ITDs (Mills, 1972; Wallach, 1939). Therefore, interaural differences do not, on their own, provide enough information to determine where on the “cone of confusion” a sound source lies. However, the pinnae filter high frequencies differently depending on the location of the sound source, and this information is included in the head-related transfer function (HRTF). The resulting spectral shape at each ear may provide a cue to the location of a sound source on the relevant cone of confusion. This spectral shape cue is especially implicated in the judgment of sound source elevation, which includes the front-back dimension (e.g., Makous and Middlebrooks, 1990; Oldfield and Parker, 1984) and can therefore be used to resolve the front-back ambiguity, provided there is enough high-frequency energy of sufficient bandwidth in the sound stimulus and the listener has some familiarity with the spectral shape of the stimulus itself. Figure 1 shows the difference in energy for sound stimuli presented from the front v. the back of a KEMAR manikin. Below approximately 2 kHz, the magnitude of the difference in energy between front and back is quite small, and is unlikely to provide a useful cue. Up to approximately 4 kHz, the magnitude of the cue is still relatively small – an amplitude of roughly 5 dB or less. From approximately 7 kHz and up, the magnitude of the front-back cue increases to levels that may be more useful, especially for signals that are only roughly familiar to the listener. 
Unfortunately for CI listeners, there is often little transduced signal and little frequency resolution in what is transduced for frequencies higher than approximately 8 kHz (Wilson and Dorman, 2008).

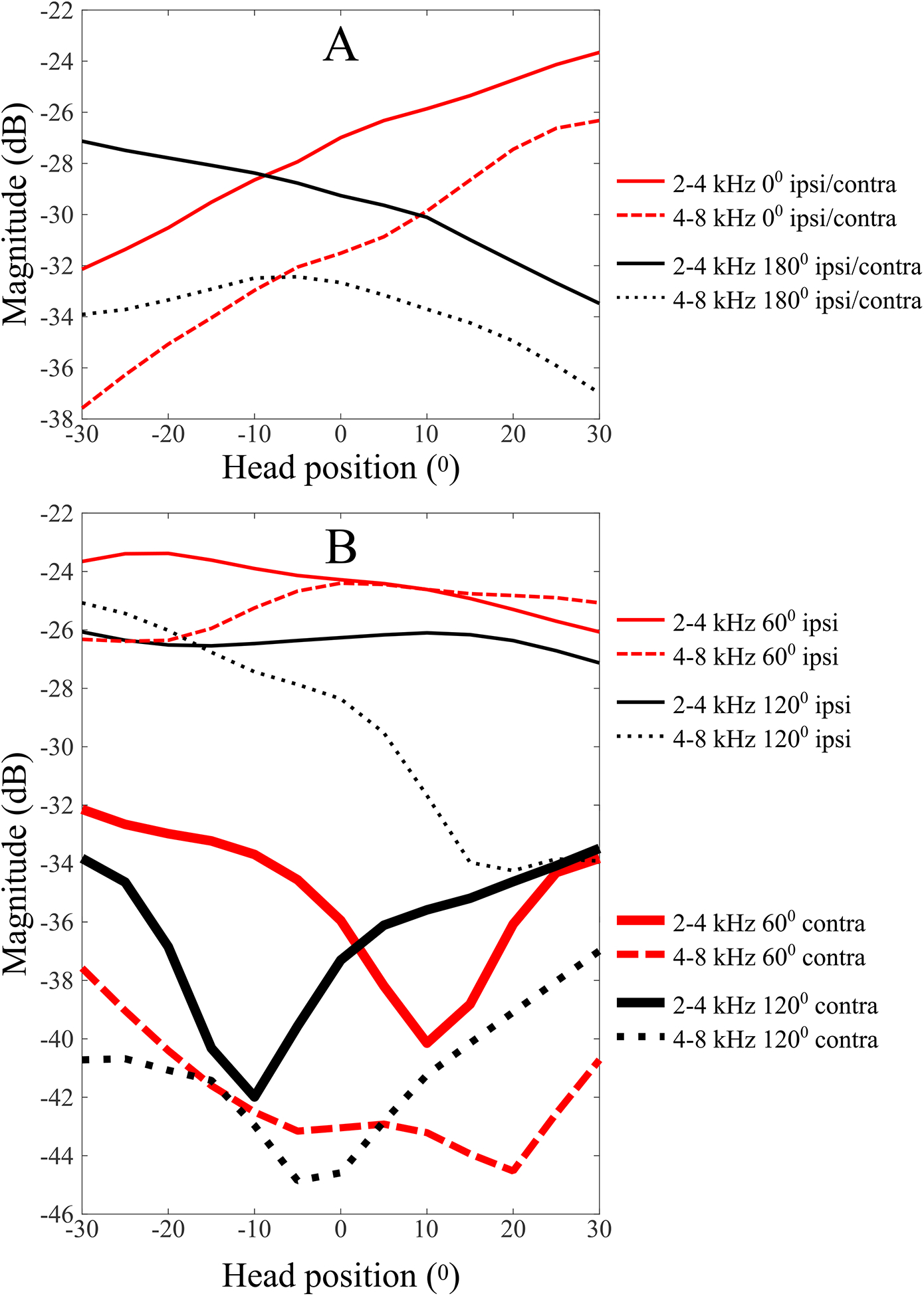

Fig. 1.

The frequency magnitude difference between front and back KEMAR HRTFs measured by Gardner and Martin (2005) for sound source angles of 0° and 60°, relative to the listener, at the ears ipsilateral and contralateral to the sound source. At 0° the HRTF, and therefore the difference between front and back HRTFs, is the same at both ears. For NH listeners with asymmetric pinnae, this may not be exactly the case. The frequency range of the stimuli presented in this study is highlighted in gray in each figure panel.

In cases where the sound source does not have enough high-frequency energy for a useful spectral shape cue, NH listeners can use head rotations to compare the direction of change in the head-related auditory estimate with the world-related change in head position estimated from proprioceptive cues, efference copy cues, vestibular cues, etc. For example, for a stationary sound source located in front of a listener, a clockwise head turn will result in binaural difference cues that increasingly favor the left ear, while a sound source behind the listener will result in the opposite trend, where binaural cues increasingly favor the right ear. This information can then be used to determine the front-back location of the sound source (see also Wallach, 1939; Wightman and Kistler, 1999).
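The head-turn logic described above can be illustrated with a minimal sketch. It assumes a simple sine-law lateral cue (proportional to the sine of the source azimuth relative to the head) and a sign convention in which positive azimuths and positive head turns are clockwise, toward the right ear. The function names `lateral_cue` and `front_or_back` are hypothetical and are not taken from the study.

```python
import math


def lateral_cue(source_az_deg, head_az_deg):
    """Signed lateral cue: positive favors the right ear, negative the left.

    Assumption: a sine law, cue proportional to sin(source azimuth
    relative to the head). Real ITDs and ILDs are more complicated.
    """
    relative = math.radians(source_az_deg - head_az_deg)
    return math.sin(relative)


def front_or_back(cue_before, cue_after, head_turn_deg):
    """Infer the hemifield from the cue change relative to the head turn.

    For a clockwise (positive) turn, a cue that shifts toward the left
    ear (negative change) implies a source in front; the opposite shift
    implies a source behind.
    """
    change = cue_after - cue_before
    if change == 0 or head_turn_deg == 0:
        return "ambiguous"
    return "front" if change * head_turn_deg < 0 else "back"


# Sources at 60 and 120 degrees lie on the same cone of confusion:
# their static cues match, but a 10-degree clockwise turn separates them.
for source in (0, 60, 120, 180):
    before = lateral_cue(source, 0)
    after = lateral_cue(source, 10)
    print(source, round(before, 3), front_or_back(before, after, 10))
```

In this toy model the static cue is identical for 60° and 120°, so only the direction of change during the turn distinguishes front from back.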

B. Single-sided deaf listeners with one cochlear implant

For single-sided deaf (SSD) patients, the most recent population to receive a cochlear implant, identifying the front-back location of sound sources may be difficult. SSD listeners, without a CI, have no access to binaural difference information (ITDs and ILDs). For SSD listeners fit with a CI in the deaf ear (SSD-CI), ITDs and ILDs are not well-encoded. The stimulus envelope, not the fine-structure used for ITDs, is transmitted by the CI, so localization using low-frequency ITD cues is very poor, if possible at all (Dirks et al., 2019). Instead, SSD-CI listeners tend to rely on ILDs (Dorman et al., 2015), but these too may be distorted and unreliable, in part because of automatic gain control (AGC) processing in the cochlear implant (e.g., see Archer-Boyd and Carlyon, 2019, for an in-depth discussion of the effects of AGC on ILDs). As such, SSD-CI listeners might resort to using monaural level (ML) cues produced by the direction-dependent attenuation of high-frequency sounds that results from acoustic head shadowing in their NH ear. If so, head movements during longer duration stimuli with relatively stable amplitude could be used to compensate for a lack of prior knowledge of the stimulus intensity, because changes in the ML cue during the head movement, instead of the absolute ML cue, could be assessed.

C. Learning and plasticity

The primary cause of the deficit in auditory spatial acuity after unilateral hearing loss is the disassociation between sound source location and auditory cues based on interaural differences of time and intensity (ITDs and ILDs). Earlier studies have revealed that learning/plasticity mechanisms can help recover spatial functions in days after simulated unilateral hearing loss with monaural ear plugging (Bauer et al., 1966; Florentine, 1976; McPartland et al., 1997). A growing body of studies proposes “cue re-weighting” as one candidate restoration mechanism underlying the adaptive nature of auditory spatial perception (for reviews, see Keating and King, 2013; van Opstal, 2016). This hypothesis proposes that the impaired auditory system learns to minimize the role of distorted localization cues and emphasizes other unaffected cues. A primary alternative to distorted or unavailable ITDs and ILDs is monaural cues from the intact ear (Agterberg et al., 2014; Irving and Moore, 2011; Kumpik et al., 2010; Shub et al., 2008; Slattery and Middlebrooks, 1994; Van Wanrooij and Van Opstal, 2004, 2007). More recently, Venskytis et al. (2019) investigated how the duration and severity of unilateral hearing loss (UHL) affect auditory localization. They compared the performance of four hearing groups–normal hearing, acute UHL (minutes, by ear-plugging), chronic moderate UHL, and chronic severe UHL (more than 10 years, no hearing aid experience) using time-delay-based stereophony. This stereophony technique allowed Venskytis et al. (2019) to manipulate ITD cues while keeping ILDs near zero and the monaural spectral information relatively unchanged so that ITDs were essentially isolated as the cue for sound source localization in the horizontal plane. They found that the chronic, moderate UHL group performed near normal while the severe and acute groups could not do the task. These results suggest that the neural mechanisms of ITDs are adaptable with long-term experience. 
What is unknown from these previous studies, however, is the role learning might play in using dynamic monaural cues through head movement and whether its contribution differs between static and moving conditions.

D. Listeners with bilateral cochlear implants

Cochlear implant (CI) listeners usually have little or no access to the high-frequency pinna cues that could be used to distinguish sounds in front from those in back, because the implant microphone is usually on the head instead of in the ear canal. Also, CI listeners experience poor high-frequency spectral resolution because the number of independent sites of stimulation along the implantable length of the scala tympani is currently limited to ≈4–8, even with arrays that have as many as 22 electrodes (Wilson and Dorman, 2008). This limitation is brought about because the electrodes are bathed in highly conductive perilymph and are relatively far from the neurons in the spiral ganglia they stimulate. For example, for Med-El CIs, the “upper desired frequency” for E12 is 8.5 kHz, as measured at 3 dB down along the filter skirt. This further limits the usefulness of spectral shape cues. Additionally, speech processing algorithms often only encode frequencies between 0.1 and 8 kHz, thereby eliminating potentially useful information at frequencies ≥ 8 kHz. All of these conditions lead to elevated rates of front-back reversals (FBRs), even for stimuli that would pose no challenge to NH listeners (Mueller et al., 2014; Pastore et al., 2018). Furthermore, Senn et al. (2005) compared localization for a single CI to bilateral CIs and found that front-back discrimination remained poor with the additional CI, despite the improvement in spatial discrimination in the frontal horizontal plane.

Mueller et al. (2014) presented speech signals of 0.5, 2, and 4.5 seconds in noise to bilaterally-implanted listeners. When listeners remained still, their rate of FBRs was high, but head movements allowed them to reduce FBRs considerably for the two longer stimuli. Head movements did not, however, lead to any improvement in angular accuracy.

Pastore et al. (2018) showed that patients with bilateral CIs experience high rates of FBRs for high-pass filtered noises. Patients who use implants with relatively gentle AGC could successfully reduce their rate of FBRs using rotational head movements (cf. Archer-Boyd and Carlyon, 2019). It may be that listeners monitored the change in ILDs during head movements, or that they instead monitored the change in amplitude at one cochlear implanted ear or the other. To probe this, patients in Pastore et al. (2018) turned off one of their CIs and repeated the experiment using the ear that they felt was their “best” ear. Now the head-movement strategy failed to reduce front-back reversals and localization was, in general, heavily biased toward the ear with the CI turned on and poor overall. Pastore et al. (2018) surmised that the bilateral CI listeners determined front-back sound source location by using changes in ILDs that result from head turns, and not changes in ML cues. It may be that the automatic gain control (AGC) in the implant processor rendered this ML cue, which would be smaller in magnitude than an ILD cue and therefore more vulnerable to the effects of AGC, unusable.

E. The focus of this report

We investigated the ability of single-sided deaf patients fit with a cochlear implant (SSD-CI) and normal hearing (NH) listeners acutely blocked in one ear to distinguish the front-back and left-right location of sound sources both with and without head movements. Comparison of these two groups allows us to test for learning/plasticity effects, as well as to study the utility of monaural spatial cues. Comparisons of SSD-CI listener performance with the CI on v. off allow us to determine if monaural spectral shape cues can be used by non-trained, monaurally experienced listeners to determine sound source location, and whether the inclusion of ILD cues with the CI turned on improves this ability. Finally, comparisons of performance for listeners under stationary v. head turn conditions allow us to assess listeners’ ability to use dynamic monaural cues and the degree to which the addition of a CI improves the use of dynamic localization cues. The data reported here have a bearing on the real-world functioning of CI patients, but also have important implications for basic questions about the mechanisms underlying sound source localization. While both aspects of the data are considered in this paper, most literature review and analyses relating to the relevant underlying mechanisms are presented in the Discussion section.

II. METHODS

All procedures reported in this study were approved by the Arizona State University Institutional Review Board (IRB).

A. Single-sided deaf listeners with a cochlear implant (SSD-CI)

Eight listeners with normal hearing in one ear and a cochlear implant in the deaf ear participated (1 male, 7 females, between the ages of 17.4 and 74, mean = 50.4 years). The oldest listener (identified as Listener 2464 in this study), while within the 20 dB HL bounds that qualify “normal hearing,” had, by far, the poorest hearing of all subjects in the NH ear. Her air conduction threshold was 10 dB HL at 2 kHz and 20 dB HL at 8 kHz. The average duration of deafness (before implantation) in the one ear was 4.1 years and the average time since implantation of that ear was 1.3 years; four listeners were tested less than one year after implantation. See Table I for further details.

TABLE I.

Overview of single-sided deaf listeners. All listeners are implanted in the deaf ear. The other ear is normal-hearing (NH).

| Listener | setup (left/right) | CI brand | AGC | processor | processing strategy | age | sex | yrs deaf | yrs with CI | implanted ear |
|---|---|---|---|---|---|---|---|---|---|---|
| 2327 | NH/CI | Med-EL | 3:1/75% default sensitivity | Sonnet | FS4-P | 17.4 | F | 1.6 | 2.3 | R |
| 2465 | CI/NH | AB | 2-Dual Loop | Naida Q90 | — | 37.9 | F | 1.7 | 0.2 | L |
| 2461 | CI/NH | AB | 2-Dual Loop | Naida Q90 | — | 59.8 | F | 10.3 | 0.6 | L |
| 2463 | CI/NH | Med-EL | 3:1/75% default sensitivity | Sonnet | FS4 | 64.6 | M | 6.3 | 2.0 | L |
| 2458 | CI/NH | Cochlear | — | Nucleus | — | 59 | F | 2.6 | 0.5 | L |
| 2460 | CI/NH | AB | 2-Dual Loop | Naida Q90 | — | 49.2 | F | 3.5 | 0.5 | L |
| 2464 | CI/NH | Med-EL | 3:1/75% default sensitivity | Sonnet | FS4 | 74 | F | 4.8 | 2.3 | L |
| 2509 | NH/CI | Cochlear | — | Nucleus | — | 41 | F | 1.8 | 2.1 | R |

B. Normal hearing listeners (NH)

Eight normal hearing listeners were tested with one ear plugged (four male, four female, mean age = 27.6 yrs); these listeners are subsequently referenced as “unilaterally-plugged NH listeners,” or “plugged + NH” in figure labels. Normal hearing sensitivity was verified on all listeners prior to experiment participation using standard audiometric techniques under insert earphones. Earplug attenuation was measured in the sound field with stimuli presented from the loudspeaker directly in front of the listener at 0°. Measurement stimuli were pulsed-warble tones presented at octave frequencies from 250–8000 Hz with a narrowband noise masker (see Table V in ANSI S3.6–2010) presented at 50 dB effective masking (EM) to the unblocked ear using an insert earphone. The following steps were taken: (1) Unplugged hearing thresholds were determined by testing octave frequencies from 250–8000 Hz (this was done under insert earphones to verify normal hearing). (2) One ear was plugged (randomly determined) and step one was repeated in free field with masking, via a single earphone used to isolate the non-test ear. This was done to determine the attenuation provided by the earplug at all audiometric frequencies. (3) The lowest threshold between 2–8 kHz was identified. (4) The 2–8 kHz noise stimulus used in the main experiment was presented to the listener at 4 dB below the lowest tone threshold to verify that the stimulus was below threshold in the plugged ear. Mean presentation level in the main experiment was 50.4 dBA. Half the listeners were plugged in one ear and the other half in the opposite ear. Details for individual listeners are in Table II. Listeners did not remove or adjust the ear plug during the experiment once it was fitted by the audiologist.
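Steps (1) to (4) above amount to a small calculation, sketched here under stated assumptions: thresholds are stored per octave frequency, all values share a common dB scale, and the example numbers are invented for illustration rather than taken from any listener. The names `plug_attenuation` and `presentation_level` are hypothetical.

```python
def plug_attenuation(unplugged_db, plugged_db):
    """Earplug attenuation per frequency: plugged minus unplugged threshold."""
    return {freq: plugged_db[freq] - unplugged_db[freq]
            for freq in unplugged_db if freq in plugged_db}


def presentation_level(plugged_db, margin_db=4.0, band_hz=(2000, 8000)):
    """Return (lowest in-band plugged threshold, stimulus level).

    The stimulus level sits `margin_db` below the lowest plugged-ear
    threshold inside the stimulus band, so the noise should remain
    inaudible in the plugged ear.
    """
    in_band = [level for freq, level in plugged_db.items()
               if band_hz[0] <= freq <= band_hz[1]]
    if not in_band:
        raise ValueError("no thresholds measured inside the stimulus band")
    lowest = min(in_band)
    return lowest, lowest - margin_db


# Hypothetical thresholds (dB) at octave frequencies from 250 to 8000 Hz.
unplugged = {250: 5, 500: 5, 1000: 0, 2000: 5, 4000: 10, 8000: 10}
plugged = {250: 30, 500: 35, 1000: 40, 2000: 45, 4000: 55, 8000: 50}

attenuation = plug_attenuation(unplugged, plugged)
lowest, level = presentation_level(plugged)
print(attenuation[2000], lowest, level)
```

With these invented values the lowest plugged threshold in the 2–8 kHz band is 45 dB, so the noise would be presented at 41 dB.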

TABLE V.

Statistics for all comparisons made in this experiment. All data were transformed using a rationalized arcsine function (i.e., rau correction), to account for the non-Gaussian distribution of a bounded range of possible proportion correct (0–1) or bias (0–60°). Where appropriate, the significance criterion, α, is Bonferroni corrected for multiple comparisons.

| Test | Independent Variables | Dependent Variables | F ratio | p-value | α |
|---|---|---|---|---|---|
| (1a) | SSD-CI Listeners | front/back | | | |
| Dependent samples ANOVA | Subjects | front/back | F(7,7) = 1.17 | 0.394 | 0.05 |
| | head turns | front/back | F(1,7) = 69.05 | 0.000 | 0.05 |
| | CI on/off | front/back | F(1,7) = 1.12 | 0.326 | 0.05 |
| | Subjects × head turns | front/back | F(7,7) = 4.75 | 0.029 | 0.05 |
| | Subjects × CI on/off | front/back | F(7,7) = 3.41 | 0.064 | 0.05 |
| | head turns × CI on/off | front/back | F(1,7) = 6.91 | 0.034 | 0.05 |
| post-hoc paired t-test | head turns × CI off | front/back | — | 0.000 | 0.05 |
| post-hoc paired t-test | head turns × CI on | front/back | — | 0.000 | 0.05 |
| planned paired t-test | SSD CI on v. SSD CI off | improvement front/back | | 0.084 | 0.05 |
| (1b) | SSD-CI Listeners | left/right | | | |
| Dependent samples ANOVA | Subjects | left/right | F(7,7) = 3.00 | 0.059 | 0.05 |
| | head turns | left/right | F(1,7) = 13.42 | 0.008 | 0.05 |
| | CI on/off | left/right | F(1,7) = 5.84 | 0.046 | 0.05 |
| | Subject × head turns | left/right | F(7,7) = 2.80 | 0.099 | 0.05 |
| | Subject × CI on/off | left/right | F(7,7) = 5.21 | 0.022 | 0.05 |
| | head turns × CI on/off | left/right | F(1,7) = 1.84 | 0.217 | 0.05 |
| (1c) | SSD-CI Listeners | bias | | | |
| Dependent samples ANOVA | Subjects | bias | F(7,7) = ∞ | 0.000 | 0.05 |
| | head turns | bias | F(1,7) = 9.31 | 0.019 | 0.05 |
| | CI on/off | bias | F(1,7) = 5.74 | 0.048 | 0.05 |
| | Subject × head turns | bias | F(7,7) = 0.12 | 0.995 | 0.05 |
| | Subject × CI on/off | bias | F(7,7) = 0.24 | 0.959 | 0.05 |
| | head turns × CI on/off | bias | F(1,7) = 0.24 | 0.641 | 0.05 |
| planned paired t-test, 1-tail | SSD CI off vs. SSD CI on | bias | | 0.019 | 0.05 |
| (2) | Unilaterally Plugged NH Listeners | | | | |
| planned paired t-test | head turns | front/back | | 0.002 | 0.05 |
| planned paired t-test | head turns | left/right | | 0.019 | 0.05 |
| planned paired t-test | head turns | bias | | 0.022 | 0.05 |
| (3) | Unilaterally Plugged NH v. SSD CI off | | | | |
| planned t-test | NH plugged v. SSD CI off | improvement front/back | | 0.783 | 0.05 |
| planned t-test, 1-tail | NH plugged vs. SSD CI off | bias | | 0.035 | 0.05 |
| (4) | Near Center Far for SSD-CI Listeners | | | | |
| Dependent samples ANOVA | SSD CI off v. SSD CI on | front/back | F(1,51) = 0.57 | 0.476 | 0.05 |
| | Near/Center/Far | front/back | F(2,51) = 7.18 | 0.007 | 0.05 |
| | head turns | front/back | F(1,51) = 84.53 | 0.000 | 0.05 |
| | Near/Center/Far × SSD CI off v. SSD CI on | front/back | F(2,51) = 0.55 | 0.578 | 0.05 |
| | Near/Center/Far × head turns | front/back | F(2,51) = 1.26 | 0.291 | 0.05 |
| post-hoc paired t-test | SSD CI off Near v. Far | front/back | — | 0.258 | 0.025 |
| post-hoc paired t-test | SSD CI off Near v. Center | front/back | — | 0.429 | 0.025 |
| post-hoc paired t-test | SSD CI off Center v. Far | front/back | — | 0.0230 | 0.025 |
| post-hoc paired t-test | SSD CI on Near v. Far | front/back | — | 0.028 | 0.025 |
| post-hoc paired t-test | SSD CI on Near v. Center | front/back | — | 0.959 | 0.025 |
| post-hoc paired t-test | SSD CI on Center v. Far | front/back | — | 0.006 | 0.025 |
| (5) | Unilaterally Plugged NH Listeners | | | | |
| post-hoc paired t-test | NH plugged Near v. Far | front/back | — | 0.490 | 0.025 |
| post-hoc paired t-test | NH plugged Near v. Center | front/back | — | 0.239 | 0.025 |
| post-hoc paired t-test | NH plugged Center v. Far | front/back | — | 0.837 | 0.025 |
| (6) | Near Center Far for SSD-CI v. Unilaterally Plugged NH Listeners | | | | |
| Independent samples ANOVA | Subjects | front/back | F(7,51) = 5.77 | 0.000 | 0.05 |
| | NH plugged v. SSD CI off | front/back | F(1,51) = 7.63 | 0.008 | 0.05 |
| | Near/Center/Far | front/back | F(2,51) = 4.71 | 0.013 | 0.05 |
| | head turns | front/back | F(1,51) = 119.35 | 0.000 | 0.05 |
| | Near/Center/Far × NH plugged v. SSD CI off | front/back | F(2,51) = 2.20 | 0.121 | 0.05 |
| | Near/Center/Far × head turns | front/back | F(2,51) = 0.14 | 0.867 | 0.05 |
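The rationalized arcsine transform and the Bonferroni correction named in the Table V caption can be sketched as follows. This assumes the commonly used formulation of the rationalized arcsine unit for a score of X correct out of N trials; the exact variant applied in the study, and how it was adapted to the bias measure, are not specified in the text. The choice of two comparisons is also an assumption, picked only because it reproduces the 0.025 criterion listed for the post-hoc tests.

```python
import math


def rau(correct, total):
    """Rationalized arcsine units for `correct` out of `total` trials.

    Assumed formulation:
        theta = asin(sqrt(X / (N + 1))) + asin(sqrt((X + 1) / (N + 1)))
        RAU   = (146 / pi) * theta - 23
    """
    theta = (math.asin(math.sqrt(correct / (total + 1)))
             + math.asin(math.sqrt((correct + 1) / (total + 1))))
    return (146.0 / math.pi) * theta - 23.0


def bonferroni_alpha(alpha, n_comparisons):
    """Per-comparison significance criterion after Bonferroni correction."""
    return alpha / n_comparisons


# 72 trials per condition (6 loudspeakers x 12 presentations).
for n_correct in (0, 36, 72):
    print(n_correct, round(rau(n_correct, 72), 2))

print(bonferroni_alpha(0.05, 2))
```

The transform stretches scores near 0 and 1 so that the variance is roughly uniform across the range, while scores near the middle stay close to percent correct.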

TABLE II.

Overview of normal hearing listeners who had one ear acutely plugged.

| Listener | plugged ear | sex | age (yrs) | presentation level (dBA) |
|---|---|---|---|---|
| 1 | R | F | 22 | 49 |
| 2 | L | M | 26 | 50 |
| 3 | R | F | 32 | 45 |
| 4 | L | F | 25 | 52 |
| 5 | L | M | 25 | 50 |
| 6 | L | M | 47 | 55 |
| 7 | R | F | 22 | 50 |
| 8 | R | M | 22 | 52 |

The same experiment was carried out for eight other normal hearing listeners with no ear plugging – these are simply referenced as “NH listeners,” or “NH + NH” in figure labels. Data for seven of these listeners were also included in Pastore et al. (2018).

C. Stimuli

All stimuli were created and presented using Matlab, and were the same as in Pastore et al. (2018). Three-second duration Gaussian noise bursts were bandpass filtered to 2–8 kHz with a 3-pole Butterworth filter, and then windowed with 20-ms cosine-squared onset and offset ramps. Sounds were presented at 60 dBA to SSD-CI listeners, as measured at the center of the room where the listener’s head would be. For the unilaterally-plugged NH listeners, sound stimuli were presented at 4 dB below the monaural detection thresholds measured when earplugs were fitted, as detailed in the Listeners section above. For each listener, the level of the long-duration stimuli was fixed, giving them their best chances of using a high-frequency “pinna cue” if it was useful for determining the front-back sound source location.
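The windowing step can be sketched in a few lines. This is a standard-library Python illustration, not the Matlab code used in the study: it generates a Gaussian noise burst and applies 20-ms cosine-squared onset and offset ramps at the 44.1 kHz rate mentioned for the DA converter. The 2–8 kHz Butterworth band-pass stage and the level calibration are deliberately omitted, and the function name `ramped_noise` is hypothetical.

```python
import math
import random


def ramped_noise(duration_s=3.0, ramp_s=0.020, fs=44100, seed=None):
    """Gaussian noise burst with cosine-squared onset and offset ramps.

    The band-pass filter described in the text is NOT applied here;
    in a full pipeline it would precede the ramps.
    """
    rng = random.Random(seed)
    n = int(round(duration_s * fs))
    n_ramp = int(round(ramp_s * fs))
    samples = [rng.gauss(0.0, 1.0) for _ in range(n)]
    for i in range(n_ramp):
        # Gain rises smoothly from 0 toward 1 over the ramp:
        # sin^2(pi * t / (2 * T)), which equals cos^2 of the complementary phase.
        gain = math.sin(0.5 * math.pi * i / n_ramp) ** 2
        samples[i] *= gain            # onset ramp
        samples[n - 1 - i] *= gain    # offset ramp (mirror image)
    return samples


burst = ramped_noise(duration_s=3.0, ramp_s=0.020, fs=44100, seed=1)
print(len(burst), burst[0], burst[-1])
```

The ramps prevent the abrupt onset and offset transients that would otherwise spread energy outside the intended band.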

D. Test environment for localization

The Spatial Hearing Laboratory, used in Pastore et al. (2018), was also used for this group of experiments. The room is 12′ × 15′ × 10′, covered on all six surfaces by 4″ thick acoustic foam for a broadband reverberation time (RT60) of 102 ms. Twenty-four loudspeakers (Boston Acoustics 100x, Peabody, MA) are equidistantly spaced 15° apart from each other on a five-foot radius circle at roughly the height of listeners’ pinnae. Stimuli were only presented from loudspeakers at 0°, ±60°, ±120°, and 180° relative to the listeners’ midline – see the top of Fig. 2 for an illustration. The average RMS error of localization for CI listeners, using all 24 loudspeakers spaced 15° apart, has been measured at ≈ 29° in the frontal hemifield – in contrast to ≈ 6° for NH listeners in the same setup (Dorman et al., 2016). The 60° spacing between the utilized loudspeakers was chosen to simplify the task of distinguishing front-back confusions from other localization errors. Those loudspeakers presenting stimuli were clearly labeled 1–6, while the remaining loudspeakers were unlabeled. An intercom and camera enabled the experimenter to monitor the listener’s head position and communicate with the listener from a remote control room. Listeners entered their response on a number keypad. All sounds were presented via a 24-channel Digital-to-Analog (DA) converter (two Echo Gina 12 DAs, Santa Barbara, CA) at a rate of 44.1 kHz/channel.

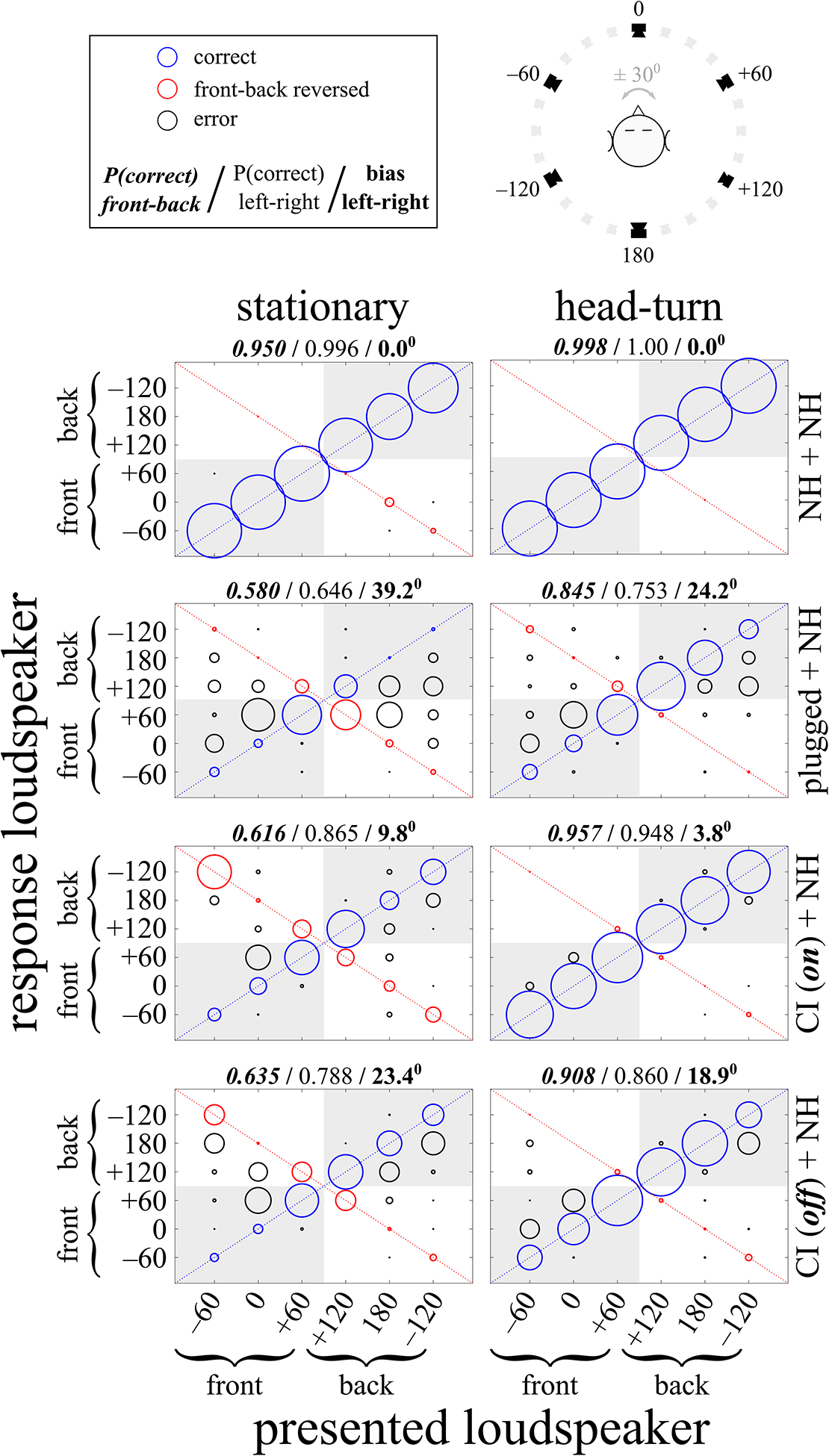

Fig. 2.

Group data, pooled across listeners. See Fig. 7 for individual data. The horizontal axis shows the loudspeaker positions (in degrees) from which stimuli were presented, and the vertical axis shows the loudspeaker positions (also in degrees) listeners identified in response to the stimulus presentations. The radius of each circle is proportional to the number of responses at that location. Correct responses, indicated with blue circles, are along the positive diagonal. Front-back reversed responses, indicated with red circles, are along the negative diagonal. All other errors are represented with black circles. Responses to the correct front-back hemifield are located in the shaded areas of each figure panel. The left column shows data for the stationary head condition and the right column shows data for when listeners turned their heads. The top 2 rows show data for NH listeners with either both ears unblocked (NH + NH) or with one ear acutely plugged (plugged + NH). Note that the 2 groups of NH listeners are not the same, and that seven of the eight NH + NH bilateral listeners’ data were previously included in Pastore et al. (2018). The bottom 2 rows show data for SSD-CI listeners with cochlear implants either on (CI(on) + NH) or off (CI(off) + NH). Above each figure panel, from left-to-right, are the proportion of responses in the correct front-back hemifield (bold italic font), the proportion of responses in the correct left-right hemifield (normal font), and the mean bias toward the NH ear in degrees (bold font). All figures are plotted so the unoccluded NH ear is on the right side (positive degrees) and the occluded ear or implanted ear is on the left (negative degrees). See Methods section for further details.

E. Procedure

Listeners sat in a stationary chair, facing toward the loudspeaker directly in front of them at 0°. For the stationary condition, listeners were asked to keep their heads fixed, facing loudspeaker 1 (see Fig. 2), focused on a red dot on loudspeaker 1. For the head movement condition, listeners were asked to make whatever rotational head movements they wished, between approximately ±30° (the corresponding loudspeakers were marked so listeners could have a visual estimate), in order to aid them in localizing the sound source. Listeners were asked to keep their movements relatively slow and continuous, as opposed to simply moving to one position and comparing it to another. This consistent range of head motion allowed subsequent estimation of the cues that might be available to the listeners. Continuous observation, via webcam, confirmed that all listeners’ head movements took the form of a “sweep” between a maximum of ±30°. No head-tracking was used, as details of listeners’ head movements were not a focus of the investigation. The 3-second stimulus duration afforded listeners ample time to rotate their heads several times. For both conditions, listeners were not naïve to the purpose of the experiment, but were rather encouraged to do their best to distinguish the location of sounds located in front of them from those located behind them.

Upon presentation of a noise stimulus, listeners were asked to indicate which of the 6 labeled loudspeakers corresponded best with the sound source location they perceived. For both the stationary and head movement conditions, the stimulus was presented randomly from the 6 loudspeaker locations for a total of 12 times at each location. The CI off condition was presented to SSD-CI listeners before the CI on condition.
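The trial structure described above (6 loudspeakers, 12 presentations each, random order) can be sketched as a shuffled list of 72 stimulus angles. The text says only that presentation was random, so a single full shuffle per condition is an assumption, as are the names `SPEAKER_ANGLES` and `make_trials`.

```python
import random

# Loudspeaker azimuths used in the experiment, in degrees re: midline.
SPEAKER_ANGLES = (0, 60, 120, 180, -120, -60)


def make_trials(reps=12, seed=None):
    """Return a randomly ordered list with `reps` trials per loudspeaker.

    Assumption: one full shuffle of all trials; the study may have used
    a different randomization scheme.
    """
    rng = random.Random(seed)
    trials = [angle for angle in SPEAKER_ANGLES for _ in range(reps)]
    rng.shuffle(trials)
    return trials


trials = make_trials(reps=12, seed=0)
print(len(trials), trials[:6])
```

Building the full list first and then shuffling guarantees exactly 12 presentations per location, which independent random draws would not.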

F. Data Analysis

In the data figures (2 and 7), front-back reversals (FBRs), where the listener responds with the correct left-right location but at the wrong front-back location, are indicated in red, along the negative-slope diagonal. However, listeners demonstrated considerable difficulties in left-right localization, so FBRs may not be the most useful metric for listeners’ ability to distinguish front v. back sound source location. Therefore, the proportion of responses in the correct front-back hemifield (i.e., loudspeakers at −60°, 0°, +60° v. −120°, 180°, +120°) was calculated for each listener. The data in Figures 2 and 7 are plotted so the unoccluded NH ear is on the right side (positive degrees) and the occluded ear or implanted ear is on the left (negative degrees). Therefore, the data of Listeners 2327, 2509, 1, 3, 7, and 8 were flipped left-to-right, for both the individual and group data analyses and figures, because their unoccluded or non-implanted NH ear was on their left.
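The two front-back measures described above, and the left-to-right flip, can be sketched as follows for a list of (stimulus, response) angle pairs. The helper names are hypothetical, and the example trials are invented for illustration.

```python
FRONT = {-60, 0, 60}
BACK = {-120, 180, 120}


def hemifield(angle):
    """Front-back hemifield of a loudspeaker angle in degrees."""
    if angle in FRONT:
        return "front"
    if angle in BACK:
        return "back"
    raise ValueError(f"unexpected loudspeaker angle: {angle}")


def mirror_front_back(angle):
    """Mirror across the interaural axis, e.g. 60 -> 120 and 0 -> 180."""
    mirrored = 180 - angle
    return mirrored - 360 if mirrored > 180 else mirrored


def flip_left_right(angle):
    """Mirror across the midline so the NH ear plots on the right."""
    return angle if angle in (0, 180) else -angle


def score_front_back(trials):
    """Return (proportion in correct hemifield, proportion of FBRs)."""
    if not trials:
        raise ValueError("no trials to score")
    n = len(trials)
    correct = sum(hemifield(s) == hemifield(r) for s, r in trials)
    # An FBR keeps the left-right position but swaps front and back.
    reversals = sum(r == mirror_front_back(s) for s, r in trials)
    return correct / n, reversals / n


example = [(0, 0), (0, 180), (60, 120), (60, -60), (-120, -60), (180, 120)]
print(score_front_back(example))
print(score_front_back([(flip_left_right(s), flip_left_right(r))
                        for s, r in example]))
```

The response of −60° to a 60° stimulus counts toward the correct front-back hemifield but is not an FBR, which shows why the hemifield proportion is the more forgiving metric when left-right errors are common. Flipping left to right leaves both scores unchanged.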

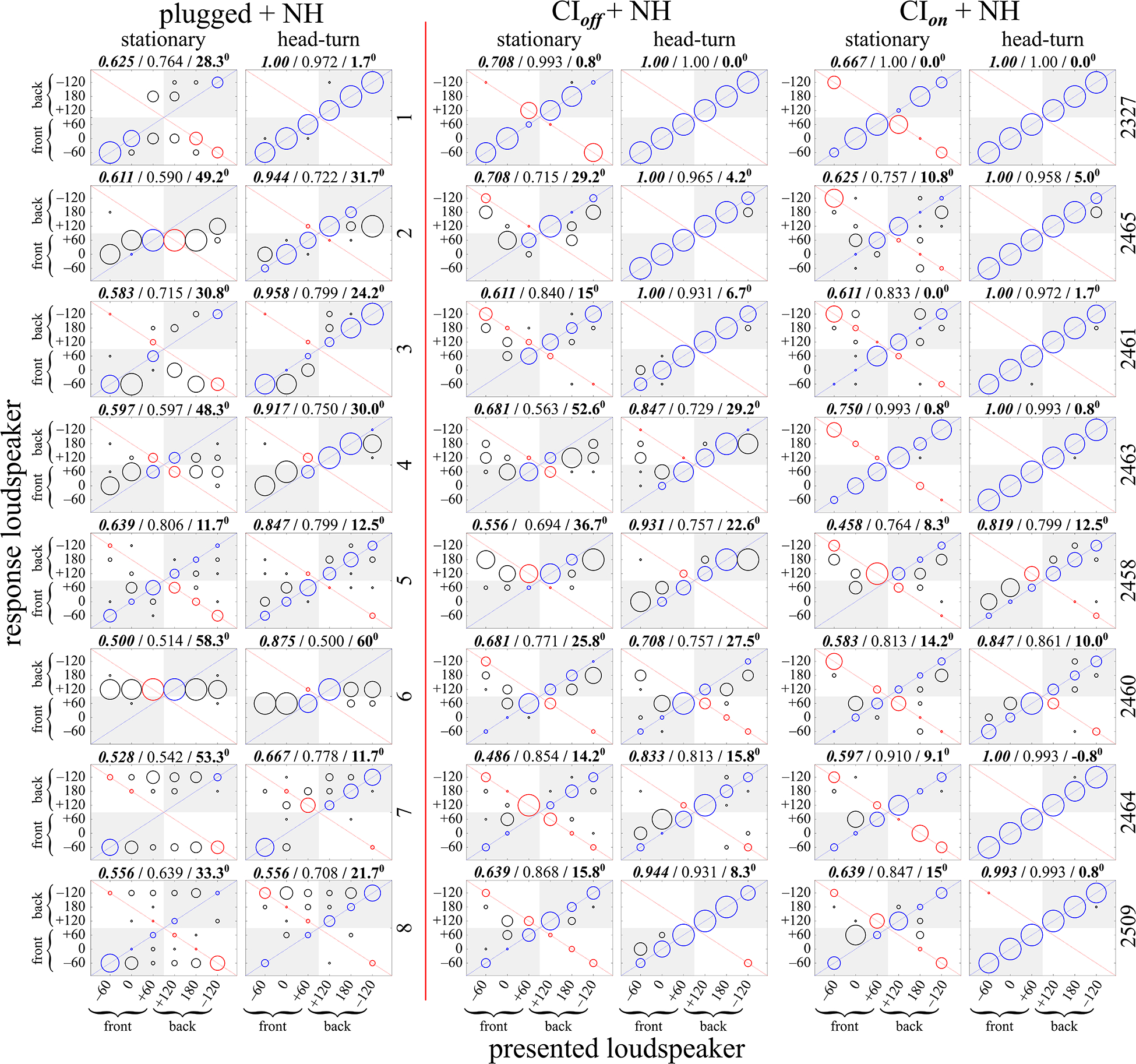

Fig. 7.

Individual listeners’ sound source localization, measured as loudspeaker identification. Stimuli were 3-s duration Gaussian noises filtered to between 2–8 kHz. Data to the left of the vertical line are for normal hearing (NH) with one plugged ear (see details in Methods). Data to the right of the vertical line are for single-sided deaf listeners implanted in the deaf ear. See Tables I and II for further details. Correct responses are along the positive diagonal and are indicated with blue circles. Front-back reversed responses are along the negative diagonal and are indicated with red circles. All other errors are represented with black circles. Responses to the correct front-back hemifield are located in the shaded areas of each figure panel. The radius of each circle is proportional to the number of responses at that location. Above each figure panel, from left-to-right, are the proportion of responses in the correct front-back hemifield (bold italic font), the proportion of responses in the correct left-right hemifield (normal font), and the mean bias toward the NH ear in degrees (bold font). All figures are plotted so the unoccluded NH ear is on the right side (positive degrees) and the occluded ear or implanted ear is on the left (negative degrees). Therefore, the data of CI Listeners 2327 and 2509 and NH monaurally-plugged listeners 1, 3, 7, and 8 are flipped left-to-right. The listener number is indicated to the right of each listener’s row of data. The individual data for the NH bilateral condition are not shown as there is essentially no variability in those data (i.e., all listeners were correct for almost all responses across all stimulus conditions, as shown in Fig. 2 above).

Additionally, two metrics for left-right acuity were calculated. First, the proportion of listener responses to the correct left-right hemifield was calculated. When listeners reported that the perceived sound source location was at one of the center loudspeakers (0°, front, or 180°, back) and the stimulus was presented from either of the sides (±60° and ±120°), the trial was recorded as “half correct,” as were responses to the center for stimuli presented from one side or the other. Responses at the center loudspeakers to stimuli presented from the center loudspeakers were counted as correct.

Lateral bias toward the unoccluded or unimplanted NH ear is a common feature of monaural localization. Lateral bias was calculated as the average signed left-right error across all trials, in degrees. A bias toward the unaffected NH ear is represented as a positive value, whereas a bias towards the plugged or implanted ear would be indicated by a negative value. Note that the maximum possible average lateral bias across all trials was 60°. Note also that lateral bias and the proportion of responses to the correct left-right hemifield are not equivalent measures. For example, a listener could localize every stimulus to the wrong left-right hemifield while demonstrating no lateral bias. Table III shows a summary of the independent and dependent variables, analyses, and potential cues for localization in this experiment.

TABLE III.

Listing of the independent and dependent variables, analyses, and primary potential cues for localization in this experiment.

| Category | Listener group | Entry |
|---|---|---|
| Listener hearing configurations | (SSD) | CIon + NH |
| | (SSD) | CIoff + NH |
| | (NH) | NH + NH |
| | (NH acute) | plugged + NH |
| Conditions | | head stationary |
| | | head rotating |
| Calculated metrics | | P(correct front/back hemifield) |
| | | P(correct left/right hemifield) |
| | | left/right bias (max 60°) |
| Potential available cues | | monaural level (ML) |
| | | interaural level difference (ILD) |
| | | monaural/binaural spectral shape from head-related transfer function (HRTF) |
| | | interaural spectral difference (ISD) |
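The two left-right metrics listed in Table III can be sketched as below. This is an illustration under stated assumptions rather than the authors' code: the `lateral` mapping (front and back loudspeakers on the same side share one lateral angle of 60°) is an assumption chosen because it reproduces the stated 60° maximum bias, and half credit is given whenever exactly one of stimulus and response lies on the midline, which is one reading of the scoring rule. All names are hypothetical.

```python
def lateral(az):
    """Left-right position in degrees (assumed mapping).

    Loudspeakers at +60 and +120 share a lateral angle of +60; 0 and 180
    are on the midline (0). Positive = side of the NH ear.
    """
    if az in (0, 180):
        return 0
    side = 1 if az > 0 else -1
    magnitude = abs(az) if abs(az) <= 90 else 180 - abs(az)
    return side * magnitude


def left_right_score(stim, resp):
    """1 = correct hemifield, 0.5 = "half correct", 0 = wrong hemifield."""
    s, r = lateral(stim), lateral(resp)
    if s == 0 and r == 0:
        return 1.0  # center stimulus, center response
    if s == 0 or r == 0:
        return 0.5  # exactly one of the two is on the midline
    return 1.0 if (s > 0) == (r > 0) else 0.0


def p_correct_left_right(trials):
    """Mean left-right score over (stimulus_az, response_az) pairs."""
    return sum(left_right_score(s, r) for s, r in trials) / len(trials)


def lateral_bias(trials):
    """Mean signed left-right error in degrees; positive = toward the NH ear."""
    return sum(lateral(r) - lateral(s) for s, r in trials) / len(trials)


SPEAKERS = [-120, -60, 0, 60, 120, 180]

# Extreme case 1: every response goes to the NH side.
always_nh_side = [(s, 60) for s in SPEAKERS]
print(lateral_bias(always_nh_side))  # 60.0, the maximum average bias

# Extreme case 2: every lateral stimulus is heard on the opposite side.
swapped = [(s, s if s in (0, 180) else -s) for s in SPEAKERS]
print(lateral_bias(swapped), p_correct_left_right(swapped))
```

The second case shows why the two measures are not equivalent: the signed errors cancel, giving zero bias, even though no lateral stimulus is assigned to the correct hemifield.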

The large number of comparisons and outcome variables would make reporting statistical outcomes in the main text cumbersome to read, so statistical analyses are gathered in Table V and referenced by number in the text. For dependent samples (repeated measures) analyses of variance (ANOVA), subjects were treated as a random variable. Therefore, if Subjects was a significant main factor, it means that inter-subject performance was highly variable.

III. RESULTS

Figure 2 shows the group data pooled across all listeners, partitioned by group (NH and SSD-CI) and whether both ears or only one NH ear was used. Results for conditions where the listeners were stationary are shown in the left column and results for the conditions where listeners were allowed to rotate their heads are shown in the right column. In each panel, the horizontal axis shows the location, relative to the listeners’ frontal midline, of the loudspeaker that presented the stimulus while the vertical axis shows the position of the loudspeaker that the listener indicated as most closely matching his/her perceived sound source location. Data along the positive diagonal (blue circles) represent correct responses, data along the negative diagonal (red circles) represent front-back reversals, and all other data (black circles) represent all other localization errors. The size of each circle indicates the relative frequency of responses for each combination of stimulus presentation and listener response. Individual data for the unilaterally-plugged NH listeners and SSD-CI listeners, with their CI on and off, are shown in Fig. 7. Note that the

Looking first at the top row of panels of Fig. 2, we see the responses of eight NH listeners with neither ear plugged, labeled “NH + NH.” As one might expect, these listeners had few, if any, difficulties in localizing the sound stimuli to the correct location, and suffered almost no front-back reversals, regardless of whether head movement was allowed or not.

A. Responses with head stationary - left column

The second row from the top of Fig. 2 shows responses for NH listeners when one ear was plugged (“plugged + NH”). Front-back localization was near chance, with only 58% of all responses in the correct front-back hemifield. Localization along the left-right dimension was also quite poor, with only 64.6% of all responses in the correct left-right hemifield and an overall bias of 39.2° towards the unplugged ear. This lateral bias is comparable to the 30.9° bias found by Slattery and Middlebrooks (1994). See Fig. 3 for further analyses of front-back sound source localization and Fig. 4 for further analyses of bias towards the unoccluded/unimplanted NH ear, as well as the related subsections III.B and III.C below. Overall, these results confirm similar results found in a number of other studies (e.g., Wightman and Kistler, 1997) showing poor localization, including in the front-back dimension, for acutely monaural listeners.

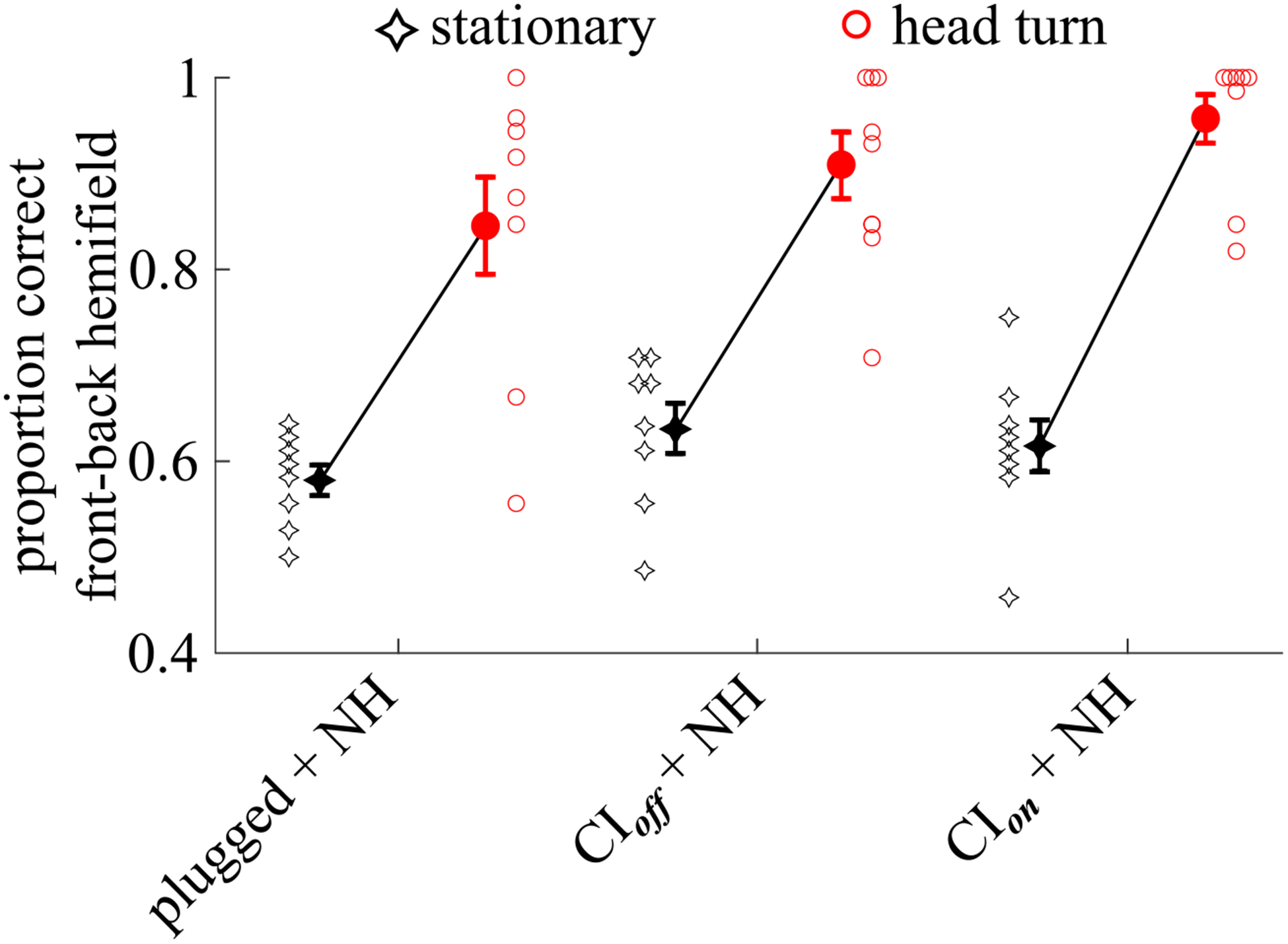

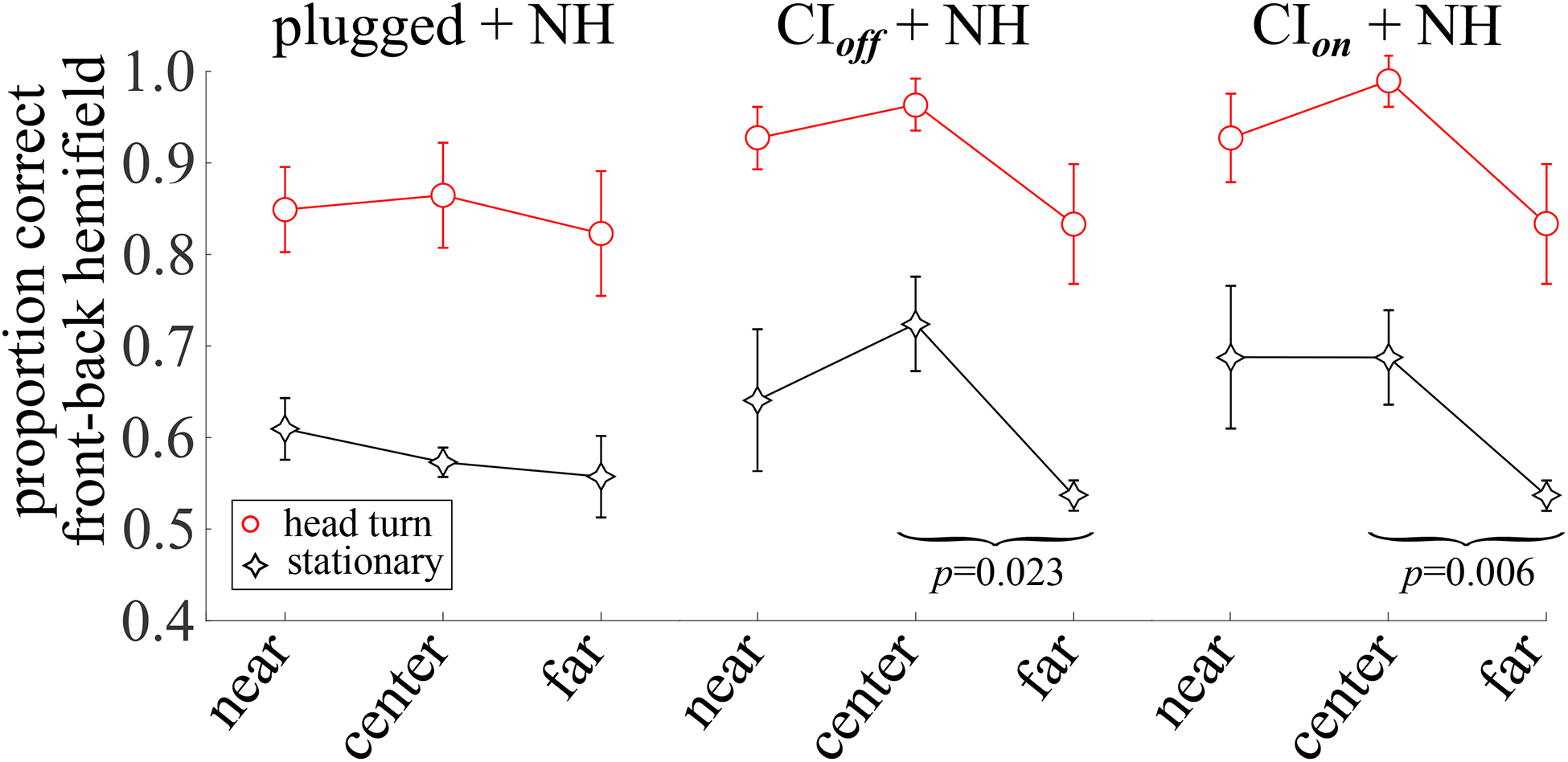

Fig. 3.

Individual and group data showing the proportion of responses that were in the correct front-back hemifield, collapsed across all presenting loudspeaker locations. Circles show data for the condition where listeners rotate their head. Diamond-like symbols show data for the condition where listeners keep their head stationary. Note that all individual listeners either improved or remained the same when allowed to move their head. Filled symbols, next to the individual data, show the group mean ± 1 standard error of the mean.

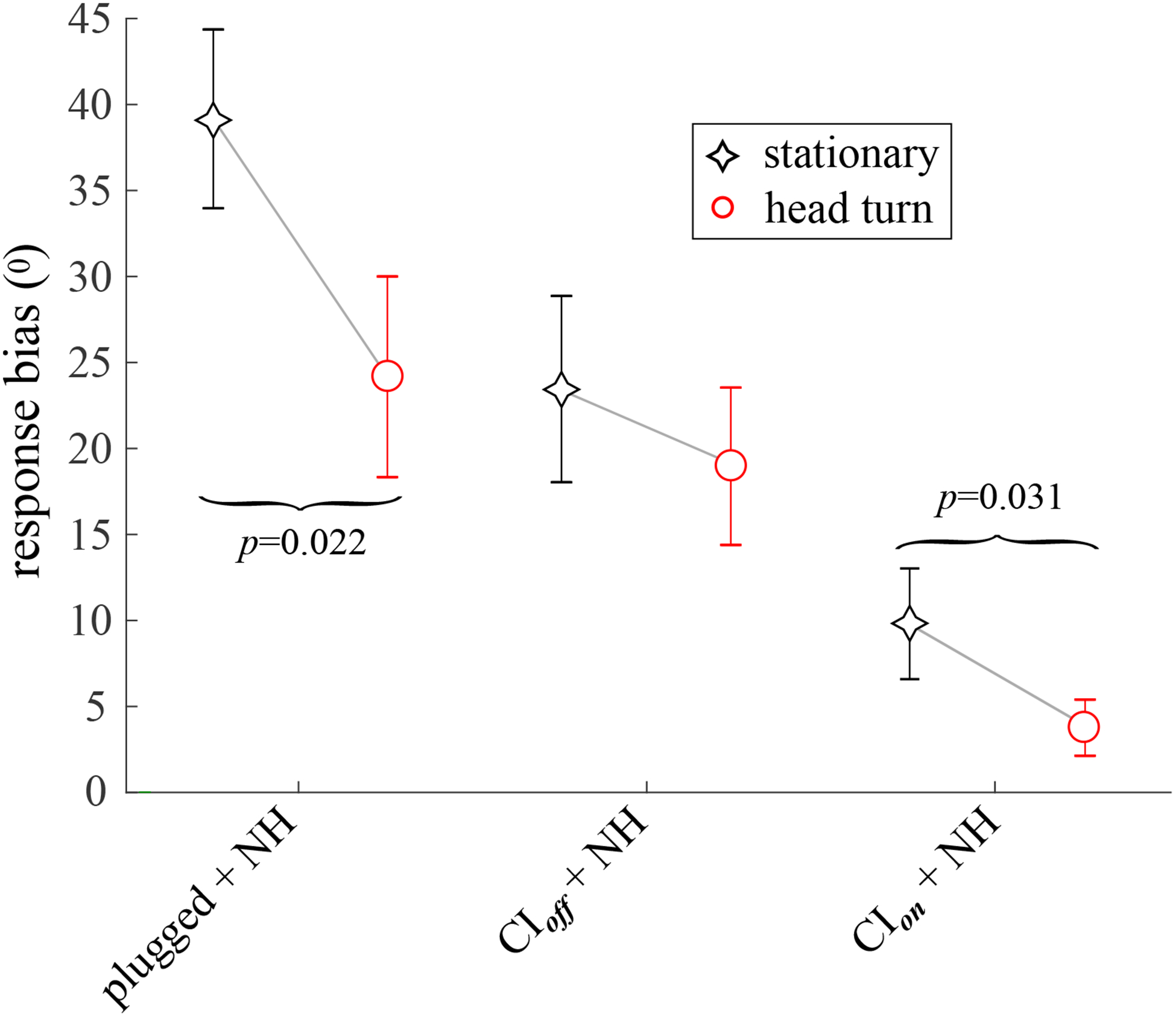

Fig. 4.

The mean lateral response bias, calculated across listeners. Positive bias indicates a lateral shift of responses toward the NH ear and away from the plugged or implanted ear. Error bars indicate ±1 standard error of the mean.

The bottom row shows data for when the SSD-CI listeners turned off their CI so that they could use only their NH ear to localize, labeled “CI(off) + NH.” This condition is somewhat analogous to the NH unilaterally-plugged condition. Localization in the front-back dimension is only marginally different from that of unilaterally-plugged NH listeners, with 63.5% of all responses in the correct front-back hemifield. However, lateral errors and lateral bias are both considerably lower than for unilaterally-plugged NH listeners, at 78.8% of all responses in the correct left-right hemifield (v. 64.4%) and 23.4° bias towards the NH ear (v. 39.2°).

The third row from the top shows the responses of SSD-CI listeners with their CI turned on, labeled “CI(on) + NH.” The proportion of these listeners’ responses to the correct front-back hemifield, at 61.6% of all responses, was essentially the same as for both the unilaterally-plugged NH listeners and these same SSD-CI listeners with their CI turned off. Interestingly, with turning the CI on, the proportion of responses to the correct left-right hemifield rose modestly to 86.5%, but the left-right bias fell to less than half of what it was with the CI off, from 23.4° to 9.8°. Also, SSD-CI listeners with their CI on compared favorably, on average, to unilaterally-plugged NH listeners in terms of lateral localization, and, to a smaller degree, in terms of front-back localization. A large difference was the much smaller bias for SSD-CI listeners with their CI on as compared to the unilaterally-plugged NH listeners (9.8° v. 39.2°).

B. Responses with head rotation - right column

Figure 3 shows individual and group data for the proportion of responses that were in the correct front-back hemifield, without regard to any lateral localization errors. Head movement leads to a clear, statistically significant (see Table V, 1a) reduction in front-back reversals (compare red circles to black diamonds) for all three listener conditions. Looking across listener conditions, the slope and magnitude of the improvement in front-back localization are quite similar, suggesting that the magnitude of the improvement gained with head movements did not depend on listener condition. A planned, paired t-test revealed that the improvement resulting from head rotation in localizing to the correct front-back hemifield was not significantly different for SSD-CI listeners with their CI turned on v. off (see Table V, 1a). Independent samples comparisons of SSD-CI listeners with their CI turned off and unilaterally-plugged NH listeners also showed no statistically significant difference in the reduction in FBRs brought about by head turns (see Table V, 3).

For the SSD-CI subjects, a two-way repeated-measures analysis of variance (ANOVA) showed that the main effect of head turns on the proportion of responses to the correct front-back hemifield was statistically significant, while the main effect of having the cochlear implant turned on or off was not. However, there was a significant interaction between subjects and head turns but not between subjects and cochlear implant on/off. This pattern of interactions suggests that the utility of head turns in avoiding front-back reversals varied considerably between listeners, while the lack of an effect of having the CI on v. off was relatively consistent across listeners. See Table V (1a) for further statistical details.

A dependent samples ANOVA showed that head turns had a significant effect on the percentage of responses in the correct left/right hemifield (see Table V, 1b), as did having the CI on v. off. However, the interaction of Subjects with CI on v. off was also significant, suggesting that not all SSD-CI listeners benefited to the same degree when they turned on their CI.

C. Bias toward NH ear

Figure 4 shows the mean bias towards the unoccluded, NH ear with and without head movements. Looking first at the stationary condition (black diamonds), SSD-CI listeners with CIs turned off exhibited a significantly lower response bias toward the side of the NH ear than did unilaterally-plugged NH listeners (≈ 23° v. ≈ 40°, see also Table V, 3), though inter-subject variability was high in both groups. Turning on their CI approximately halved SSD-CI listeners’ bias to about 10°; this change was statistically significant (see Table V, 1c). Head turns reduced lateral bias for SSD-CI listeners both with their CIs on and off, but the greatest improvement was for the unilaterally-plugged NH listeners. A dependent samples ANOVA showed that head turns had a significant effect on left/right bias. A planned comparison of bias with the CI turned on v. off was also significant. See Table V, 1c, for further details.

D. Correlation between SSD CI on v. CI off

Both at the group level (see Fig. 2, bottom two rows), and for most individual SSD-CI listeners (see Fig. 7), visual inspection of the two-dimensional data pattern for SSD-CI listeners suggests their behavior is quite similar with the CI turned on v. off. That is, with the head stationary, turning on the CI does not radically change the overall patterning of the data, nor does turning on the CI radically change the response pattern when listeners turn their heads. Table IV evaluates this observation, showing the sample 2-dimensional Pearson correlation coefficient (r) for each SSD-CI listener’s data (confusion matrices), comparing their localization data with their CI turned on to when the CI was off. Excepting for Listener 2463, the correlation is quite high for performance with or without the CI. The mean value across all listeners, when they kept their heads stationary, was r = 0.682 (or r = 0.765 if we exclude Listener 2463 as an outlier). Performance was also highly correlated between conditions where the CI was on v. off when listeners rotated their heads, with a mean of r = 0.806 (or r = 0.847 if we again exclude Listener 2463). See Table IV for further details. Note this does not imply that there was no benefit from turning on the cochlear implant, only that sound source localization behavior was similar (but not the same) with and without the implant.

TABLE IV.

The Pearson correlation r between listener performance, calculated across all responses, between the conditions where the cochlear implant was turned off and turned on.

| Listener | Stationary (r) | Head Turn (r) |
|---|---|---|
| 2327 | 0.943 | 1.000 |
| 2465 | 0.823 | 0.999 |
| 2461 | 0.777 | 0.962 |
| 2463 | 0.105 | 0.521 |
| 2458 | 0.769 | 0.732 |
| 2460 | 0.731 | 0.739 |
| 2464 | 0.507 | 0.595 |
| 2509 | 0.803 | 0.899 |
| mean (SD) | 0.682 (0.246) | 0.806 (0.175) |
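A two-dimensional Pearson correlation of the kind reported in Table IV can be sketched as below. This assumes that r is computed cell by cell over two stimulus-by-response count matrices; the exact procedure is not spelled out in the text. The function name and the small example matrices are hypothetical. The second half of the sketch recomputes the group means from the per-listener values in Table IV.

```python
from math import sqrt


def pearson_2d(a, b):
    """Pearson r between two equally sized matrices, taken over all cells."""
    x = [v for row in a for v in row]
    y = [v for row in b for v in row]
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((p - mx) * (q - my) for p, q in zip(x, y))
    sxx = sum((p - mx) ** 2 for p in x)
    syy = sum((q - my) ** 2 for q in y)
    return sxy / sqrt(sxx * syy)


def mean(values):
    return sum(values) / len(values)


def drop_2463(values):
    """Remove Listener 2463, the fourth row of Table IV."""
    return values[:3] + values[4:]


# Made-up 2x2 response counts, for illustration only (real matrices are 6x6).
ci_off = [[10, 2], [3, 9]]
ci_on = [[11, 1], [2, 10]]
print(pearson_2d(ci_off, ci_on))

# Per-listener r values copied from Table IV.
stationary = [0.943, 0.823, 0.777, 0.105, 0.769, 0.731, 0.507, 0.803]
head_turn = [1.000, 0.999, 0.962, 0.521, 0.732, 0.739, 0.595, 0.899]
print(round(mean(stationary), 3), round(mean(drop_2463(stationary)), 3))
print(round(mean(head_turn), 3), round(mean(drop_2463(head_turn)), 3))
```

The recomputed means agree with the values quoted in the text, both with and without Listener 2463.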

IV. DISCUSSION

A. Comparison to previous related results

Taken together, the previous analyses and correlations suggest that the SSD-CI listeners we tested rely considerably, though not exclusively, on their NH ear when localizing. Nevertheless, turning on the CI did improve localization in the lateral dimension if not in the front-back dimension. Head turns improved localization regardless of whether the CI was turned on or off. A similar reduction in front-back reversals with head turns was found by Pastore et al. (2018) for bilaterally-implanted listeners under the same stimulus and laboratory conditions. Head movements also improved SSD-CI listeners’ left-right acuity whereas no such improvement was discernable for the bilateral CI listeners tested by Pastore et al. (2018).

Dorman et al. (2015) found that SSD-CI listeners generally localize with greater acuity than SSD listeners without a cochlear implant, but nevertheless more poorly than NH listeners. Zeitler et al. (2015) tested SSD-CI patients in localization in the frontal hemifield and found that they performed as well as bilaterally-implanted CI patients. In a few cases, SSD-CI patients performed almost as well as NH listeners. Similarly, Dillon et al. (2018) found that only 2 out of 20 SSD patients demonstrated localization that was correlated with sound source position. After the implantation of the deaf ear, 15 of these listeners could localize as well or almost as well as normal hearing listeners, and even the 5 remaining listeners’ responses were correlated with the position of the sound source. Overall, the root-mean-squared error for SSD-CI listeners in their experiment was ≈ 25° whereas it was ≈ 7.5° for NH listeners. Buss et al. (2018) also demonstrated numerous benefits that come with implantation of the deaf ear in SSD patients, including improved localization acuity with reduced lateral bias in localization in the frontal hemifield, and improved masked sentence recognition at the normal hearing side. Improvement in these metrics generally asymptoted after 3 months.

Overall, the performance of SSD-CI listeners in the current study seems comparable to that of SSD-CI listeners in these other studies. That is, while there is considerable variability between listeners, the general effect of implantation of the deaf ear is to improve localization performance, sometimes to levels that approach the accuracy of NH listeners. It is worth noting that, given the complexity of the underlying dynamic auditory and multisensory processing required during listener movement (e.g., see Yost et al., 2020), the improvements accrued with cochlear implantation for SSD listeners could reasonably have been expected to be lost with listener movement. The results presented here suggest that these benefits do indeed carry over into localization when listeners move, as listeners often do in everyday life.

B. Lateral error and lateral response bias

Martin et al. (2004) and also Wightman and Kistler (1997) have shown that, in order for high-frequency spectral cues to be useful for front-back discrimination, binaural cues for the lateral displacement of the sound source are probably necessary. Also, Janko et al. (1997) implemented a neural net model and found that monaural spectral cues only contained enough information to produce localization results on a par with human listeners if the ITD that would specify lateral sound source location was also provided. Intuitively, it appears the auditory system can only determine the location of a sound source on a cone of confusion if it first has determined which “cone” the sound source is on – the estimate of the lateral displacement of the sound source provides the necessary information for this determination. We might therefore reasonably expect the implantation of a CI in the deaf ear to improve front-back localization, at least for high-frequency stimuli that offer ILDs. That is, with the addition of a CI, lateral position may be estimated accurately enough to facilitate the use of monaural spectral cues in the NH ear. On the other hand, SSD-CI listeners’ ILD estimate would be distorted by the automatic gain control (AGC) in the CI processor, and so may be less precise than is required to support a useful monaural spectral cue.

Considering these competing hypotheses in light of the results reported here prompts the question, does the rate of lateral error or bias predict the rate of FBRs? The reduction in left-right errors and lateral bias when SSD-CI listeners turned on their CI (see Figs. 2 & 4) offers a chance to answer this question for these data. The correlation between (1) the proportion of responses to the correct left/right hemifield and (2) the proportion of responses to the correct front/back hemifield was high for stationary, unilaterally-plugged NH listeners (r = 0.796). However, the same correlation for stationary SSD-CI listeners with their CI turned off was very low (r = −0.067).

One might expect that improvements in lateral accuracy brought about by implantation of the deaf ear with a CI would lead to a reduction in FBRs for SSD-CI listeners. However, the change in the proportion of responses to the correct front-back hemifield that came about when their CIs were turned on was very small at −0.0175, meaning that front-back resolution was very similar with the CI on or off. The change in the proportion of responses to the correct left/right hemifield that came with turning on the CI was also quite modest at +0.0774. Finally, the correlation of these two changes in localization performance (front/back and left/right) was only moderate at r = 0.402. Overall, it appears that the benefit in the acuity of lateral resolution that came with turning on the CI, though welcome, was insufficient to translate into a sizable benefit in front-back resolution when the SSD-CI listeners were stationary. Rather, it seems head movements, with or without the CI, were most helpful in resolving the front-back location of sound sources.

C. The effect of sound source proximity to the NH ear

Morimoto (2001) showed data suggesting that, at azimuths of 60° or more, perceived elevation is essentially based on the monaural spectral cue at the ear ipsilateral to the sound source, but relies on both HRTFs as the sound source approaches the median plane (also see Hofman and van Opstal, 2003; Humanski and Butler, 1988; Jin et al., 2004). Supporting this finding, Hofman and van Opstal (2003) showed evidence that HRTFs at both ears are somehow weighted with each other since elevation localization in the midline is impacted by a unilateral ear mold even though, presumably, the same information is available from either ear for midline sound sources (though it is not clear why listeners would be expected to favor the HRTF in the unaffected ear over the other). Since listeners in the present study have one ear that cannot offer high-frequency spectral cue information (the plugged + NH, CI(on) + NH, and CI(off) + NH conditions), one might expect fewer front-back errors for presentations from the side of the NH/unplugged ear as compared to presentations from the center or the side contralateral to the NH ear.

Figure 5 shows the results of an analysis of the proportion of responses to the correct front-back hemifield, irrespective of any lateral error, as a function of whether the stimulus was presented from the side of the NH ear (near), from the center (center), or from the side opposite the NH ear (far), for both stationary and rotating head conditions. For the unilaterally-plugged NH listeners, the proportion of responses in the correct front-back hemifield does not appear related to whether the sound source was ipsi- or contra-lateral to the unplugged ear, regardless of whether the listener remained stationary or turned the head.

Fig. 5.

The mean, across listeners, of the proportion of responses in the correct front-back hemifield as a function of whether the sound stimulus was presented from a loudspeaker at 60° on the same side as the normal hearing ear (near), was presented from 0° at the center (center), or from a loudspeaker at 60° on the side opposite the normal hearing ear (far). Error bars indicate ±1 standard error of the mean.

A repeated measures ANOVA (see Table V, 4) compared performance as a function of the proximity of the sound source to the NH ear for the SSD-CI subjects with the CI turned on v. off. Having the CI on or off did not lead to a significant difference in this measure. Both main effects of head turns and sound source proximity to the NH ear were significant. Post-hoc t-tests, Bonferroni-corrected for two comparisons for each measure (α = 0.05/2), showed that only the difference in responses to the correct front-back hemifield between the center and far sound source locations was significant for the stationary SSD-CI listeners, regardless of whether their CI was off or on. That is, although the mean proportion correct was somewhat higher for stimuli presented to the NH ear for SSD-CI listeners (see Fig. 5, center and right columns), the difference in front/back proportion correct for stimuli presented from the side ipsilateral versus contralateral to the NH ear was neither large nor statistically significant when corrected for family-wise error.

An independent samples ANOVA compared performance, again with regard to the proximity of the sound source to the NH ear, between unilaterally-plugged NH listeners and SSD-CI listeners with their CI turned off (see Table V, 6). The main factors of group, head turns, and sound source proximity to the NH ear were all significant, but no interactions were significant.

D. Monaural spectral and level cues

Notwithstanding the work of Martin et al. (2004), Wightman and Kistler (1997), and others, the degree to which spectral shape cues can be used monaurally, without binaural difference cues to provide an estimate of laterality, is still the subject of considerable debate and research (e.g., Hofman and van Opstal, 2003; Jin et al., 2004; Searle et al., 1975).

Studies show that SSD listeners (Agterberg et al., 2014; Shub et al., 2008; Slattery and Middlebrooks, 1994) and listeners with simulated monaural hearing loss (Irving and Moore, 2011; Kumpik et al., 2010; Shub et al., 2008; Van Wanrooij and Van Opstal, 2004, 2007) utilize ML cues and monaural spectral shape cues to compensate for the loss of binaural hearing for horizontal localization. However, neither ML nor spectral shape cues are strong or reliable directional cues. Monaural spectral cues are inherently confounded with the spectral variations of a sound source (Wightman and Kistler, 1997). Similarly, the ML cue is confounded by changes in sound source intensity. For both, at least under conditions where listener and sound source are stationary, prior knowledge of source information is needed for these two monaural cues to be reliable. Unsurprisingly, these cues appear to play a minor role in horizontal localization for NH listeners when ITDs and ILDs are available (Shub et al., 2008; Van Wanrooij and Van Opstal, 2004).

Also, not all listeners appear to learn to use monaural spectral cues to the same extent. For example, Agterberg et al. (2014) demonstrated that inter-subject variability in the localization performance of SSD listeners can be partly explained by high-frequency hearing loss in their hearing ear. Slattery and Middlebrooks (1994) showed that some monaurally-deaf listeners appear to learn to use monaural spectral cues to localize equally well on both sides of the head without any sizable lateral bias, though their performance was not quite as good as NH listeners. Three out of five single-sided deaf listeners localized with greater acuity than acutely-plugged NH control subjects, while two were essentially the same as the controls.

Interestingly, Listener 2426, the patient in the present study with, by far, the poorest hearing in the NH ear (see Subjects in the Methods section), demonstrated localization performance that was quite similar to most other listeners, both in terms of front-back and left-right localization. In other words, high frequency hearing loss in the NH ear, which would most impact the use of spectral shape cues, did not lead to “outlier” performance for the tasks tested in this experiment.

Taken together, the high correlation between SSD-CI listeners’ responses with the CI on and off, and their significant localization improvement with head turns regardless of the on/off state of the CI suggest that most (but not all) of the localization “work” is done by the NH ear, even when the CI is on. Given the poor front/back localization of stationary SSD-CI listeners, both with CI on or off, it seems likely that they monitored the change in the attenuation of high frequencies due to head shadowing as the listeners turned their heads (a dynamic ML cue) to improve their front/back localization. It is possible that monaural listeners may have used some kind of dynamic monaural spectral shape cue, but, given their lack of success in using the stationary monaural spectral shape cue without any binaural information to specify the lateral position of the sound source, successfully monitoring the change in spectral shape across a wide range of frequencies during head turns would seem computationally daunting. By contrast, simply monitoring the change in ML cue within a few auditory filters or across several would provide the same information, and therefore seems the more parsimonious (though by no means conclusive) explanation. The observation that unilaterally-plugged NH listeners showed essentially the same improvement in front-back resolution as SSD-CI off listeners, despite having no time to “learn” to use either dynamic monaural cue (see Fig. 3), also argues for listeners’ use of the simpler ML cue. However, discussion in the next two sections below suggests it may not be so simple as a choice between one cue or the other.

The high correlation in SSD-CI results with the CI on and off is all the more striking when we consider the performance of the bilaterally implanted listeners reported by Pastore et al. (2018), where head motion markedly improved front-back discrimination when both implants were turned on, and yet listeners were unable to distinguish front from back with or without head movement when only one implant was turned on. These results suggest that dynamic ML cues may be useful for NH ears but not for implanted ears.

Figure 6 shows simulated dynamic ML cues extracted from KEMAR HRTFs (Gardner and Martin, 2005) that would result for changes in head position as indicated along the x-axis. The analysis reveals several trends, especially in light of the analysis shown in Fig. 5. For sound sources located near midline, the dynamic ML cue (see top panel A in Fig. 6) varies monotonically with the rotational position of the head. Sound sources behind the listener create a similar, but opposite, pattern of change. This would therefore appear to be a potentially useful cue. Looking at the pattern of change in ML for sound sources at 60° (see bottom panel B in Fig. 6), however, reveals a cue that changes very little over the course of a head turn at the ipsilateral ear (though the slope of the cue across head positions is considerable for the 4–8 kHz filter for sound sources behind the listener). The change is sizable at the contralateral ear, especially within the octave band from 2–4 kHz, but the shape is non-monotonic (double-sloped, up and down) and nearly the same, but shifted right or left, for sound sources in front v. back. However, for head turns between ±10°, this pattern yields a monotonic cue with a relatively steep slope across a relatively small head turn that goes in the opposite direction for sound sources in front v. behind, thereby providing a cue that potentially could be used for distinguishing front from back sound source location. See also Tollin (2003), Fig. 6A, which shows evidence that a monaural level cue could potentially provide useful information in the (cat) lateral superior olive (LSO) throughout the azimuth plane. It is also worth noting that, with an intact auditory nerve in the deafened ear, the ML cue might be effectively an ILD cue in that the level of neural activity from the auditory nerve on the NH side could be compared to the spontaneous firing rate of the deaf or plugged ear in, presumably, LSO.

Fig. 6.

Simulated dynamic monaural level (ML) cues generated by listener head movements relative to the midline, for sound sources at 0° and 180° (top figure panel) and 60° and 120° (bottom figure panel) using measured KEMAR impulse responses (Gardner and Martin, 2005). A fourth-order digital Butterworth filter was used to bandpass filter the head-related impulse responses. The level, 20 log10 of the RMS amplitude of the resulting bandpass-filtered impulse responses, was calculated and is shown for 2–4 kHz (solid lines) and 4–8 kHz (dotted lines). Note that, for sound sources presented from the midline, either ear is ipsilateral to the sound source for half the head turn and contralateral for the other half of the head turn. Therefore, while the top panel shows magnitude for the left ear, magnitude at the right ear would just be the same curve flipped symmetrically about the midline. To simplify the figure, only magnitude at the left ear is shown in the top panel.
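The band-level computation described in the caption of Fig. 6 can be sketched with NumPy and SciPy as follows. This is a sketch under assumptions, not the authors' script: the function name, the 44.1-kHz sampling rate, and the stand-in impulse response are hypothetical, and no measured KEMAR data are loaded. Note also that "fourth-order" is ambiguous for a bandpass design; the sketch passes `N=4` to `scipy.signal.butter`, which for a bandpass filter yields a final filter order of 2N.

```python
import numpy as np
from scipy.signal import butter, sosfilt


def band_level_db(hrir, low_hz, high_hz, fs):
    """20*log10 of the RMS amplitude of a band-passed impulse response."""
    # Butterworth bandpass as second-order sections for numerical stability.
    # Assumption: N=4 per band edge (SciPy's bandpass order is then 2N).
    sos = butter(4, [low_hz, high_hz], btype="bandpass", fs=fs, output="sos")
    filtered = sosfilt(sos, hrir)
    rms = np.sqrt(np.mean(filtered ** 2))
    return 20.0 * np.log10(rms)


# Stand-in impulse response (a unit impulse), NOT a measured KEMAR HRIR.
fs = 44100  # assumed sampling rate in Hz
hrir = np.zeros(512)
hrir[0] = 1.0

# The two octave bands shown in Fig. 6.
for low_hz, high_hz in [(2000, 4000), (4000, 8000)]:
    print(low_hz, high_hz, band_level_db(hrir, low_hz, high_hz, fs))
```

To trace a dynamic ML curve like those in Fig. 6, the same function would be applied to the impulse response measured at each source azimuth relative to the head, and the resulting levels plotted against head position.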

Looking again at Fig. 5, while head turns increased the proportion of correct front-back judgments, the pattern across sound sources near/center/far relative to the unblocked ear remained the same with head turns. This leads to a conundrum that these analyses reveal but do not solve. Monaural spectral cues appear to be inadequate for localization both front/back and left/right, at least without reasonably accurate binaural difference cues to locate the correct “cone of confusion.” Monaural level cues are a relatively poor cue for stationary listeners, but would appear to be somewhat more useful when the listener rotates the head. However, given the pattern of dynamic head shadow cues for the ipsilateral 60° condition, one would expect a relative improvement with head turns for the contralateral 60° condition, and yet that is not what we see – the pattern of correct front/back responses v. near/center/far remains essentially the same with or without head turns. It may be that both dynamic ML cues and dynamic monaural spectral shape cues are used during head turns, and that their relative weighting shifts with their relative reliability, though such a hypothesis is neither supported nor refuted by the results and analyses in this report.

E. Interaural spectral difference cues

One difficulty posed by a reliance on a monaural spectral cue is that the listener would need to have familiarity with the signal spectrum, in order to disentangle the direction-dependent filtering of the HRTF from the stimulus spectrum. Searle et al. (1975) showed evidence that using a comparison of the left and right pinna cues, an interaural spectral difference cue (ISD), may provide an elevation cue that is less vulnerable to the effects of spectral uncertainty in the sound stimulus. Indeed, Hofman and van Opstal (2003) have shown that listeners unilaterally-fitted with ear molds that disturb ISD cues, while leaving the monaural spectral cue in one ear and low-frequency binaural cues intact, were less successful at elevation localization even in the midline, where monaural spectral cues are nearly identical between the two ears. Hofman and van Opstal (2003) interpreted these results to suggest that the monaural spectral cues at the two ears are somehow weighted and combined with each other in the calculation of elevation.

Jin et al. (2004) studied the relative weighting of monaural spectral cues and ISD cues in NH listeners using a modified, virtual stimulus and determined that ISD cues were not weighted heavily enough to overcome a conflicting monaural spectral cue. Jin et al. (2004) also made a theoretical analysis of the mutual information shared by the ISD cue and the monaural spectral cue as a function of the angle of sound source incidence. They found that “…within 20° of the midline, the monaural spectral cues carry more information for resolving directions within a cone of confusion than the ISD cue, but as one moves more laterally there is a substantial increase in the information associated with the ISD cue.” Such an analysis seems to be supported by our findings for SSD-CI listeners that the proximity of the sound source to the NH ear only weakly reduced the incidence of front-back reversals (see Section IV C). Yet no such improvement in front-back resolution can be found for unilaterally-plugged NH listeners, and bilaterally NH listeners perform at ceiling, so any such improvement, if present, could not emerge. Nevertheless, as Jin et al. (2004) suggest, it appears as though binaural and monaural inputs (e.g., ITDs, ILDs, monaural spectral cues, interaural spectral difference cues) could be used and evaluated together such that their relative weighting may be fluid, perhaps driven by the relative variability/reliability of each estimate.
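One common way to formalize weighting that is driven by the reliability of each estimate is inverse-variance weighting, in which each cue's weight is proportional to the reciprocal of its variance. The sketch below illustrates that general scheme only; it is not claimed to be the model of Jin et al. (2004) or of the present study, and the estimates, variances, and function name are hypothetical.

```python
def combine_cues(estimates_deg, variances):
    """Reliability-weighted average of location estimates.

    Each weight is proportional to 1/variance, so noisier cues count less.
    Returns the combined estimate and the normalized weights.
    """
    raw_weights = [1.0 / v for v in variances]
    total = sum(raw_weights)
    weights = [w / total for w in raw_weights]
    combined = sum(w * e for w, e in zip(weights, estimates_deg))
    return combined, weights


# Hypothetical example: a reliable cue pointing to 10 degrees (variance 1)
# and a less reliable cue pointing to 30 degrees (variance 4).
combined_estimate, cue_weights = combine_cues([10.0, 30.0], [1.0, 4.0])
```

Here the more reliable cue receives a weight of 0.8 and the less reliable cue 0.2, so the combined estimate (14°) lies much closer to the reliable cue. If the variance of a cue grows, for example when a cue is distorted or removed, its weight shrinks accordingly, which is the sense in which the weighting is "fluid."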

F. Learning and localization strategy after SSD

With hearing impairment, the auditory system uses the information that is available, not the information that would be optimal. The “cue reweighting” hypothesis proposes that the impaired auditory system learns to minimize the role of distorted localization cues and to emphasize other, unaffected cues; see reviews by Keating and King (2013) and van Opstal (2016), and also Venskytis et al. (2019).

Irving and Moore (2011) tested whether learning could improve horizontal localization performance for SSD listeners. They also introduced simulated SSD using monaural ear-plugging. In addition, the level of the stimulus (pink noise) was roved between 50 and 70 dB SPL to prevent the use of any loudness cue introduced by head shadowing. During a four-day period in which subjects wore their earplugs at all times (even when sleeping), and which included approximately hour-long training sessions where listeners were given feedback, monaural localization improved, suggesting that SSD listeners could learn to use the spectral shape information related to their pinnae in horizontal localization. It should be noted, however, that Wightman and Kistler (2002) showed that even the small amount of low-frequency energy that passes through an ear plug is sufficient to provide an ITD that facilitates the use of monaural spectral cues (see also Martin et al., 2004), whereas when all low-frequency information was removed, monaural localization was essentially impossible. It is, in practice, very difficult to achieve full attenuation of low-frequency energy with earplugs, and even as much as 30 dB of attenuation does not remove a usable ITD cue (Wightman and Kistler, 2002).
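The level roving described above can be illustrated with a short sketch. Only the 50–70 dB SPL range comes from the text; the uniform distribution, the 60 dB SPL reference level, and the function names are assumptions made for illustration and may differ from the procedure of Irving and Moore (2011).

```python
import random


def draw_roved_level_db(rng, low_db=50.0, high_db=70.0):
    """Draw one presentation level; the uniform distribution is an assumption."""
    return rng.uniform(low_db, high_db)


def gain_for_level(target_db, reference_db):
    """Linear amplitude gain that moves a stimulus from reference_db to target_db."""
    return 10.0 ** ((target_db - reference_db) / 20.0)


rng = random.Random(0)  # fixed seed only to make the sketch repeatable
trial_levels = [draw_roved_level_db(rng) for _ in range(5)]
# Hypothetical calibration: the unscaled stimulus is assumed to play at 60 dB SPL.
trial_gains = [gain_for_level(level, reference_db=60.0) for level in trial_levels]
```

Because the source level changes unpredictably from trial to trial over a 20 dB range, the overall level at the open ear no longer indicates reliably how much head shadow attenuation a stationary listener is receiving.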

Firszt et al. (2015) also tested the ability of SSD listeners to improve their localization performance after training, and found considerable variability in listener outcomes, but at least some benefit for all listeners. It is not clear that everyday experience would serve well as “localization training.” In any event, the results of the present experiment did suggest at least a small benefit of experience in the SSD-CI listeners with their CIs off as compared to acutely, unilaterally-plugged NH listeners.

More specifically, listeners have been shown to be able to learn to use monaural spectral cues in the lab for both elevation and azimuth (Hebrank and Wright, 1974), but the degree to which listeners can do this in the real world without formal training and pre-existing knowledge of the stimulus is less clear. Also, Kumpik et al. (2010) showed that randomizing stimulus spectral information prevented listeners from learning pinna spectral cues. The results shown in this paper suggest that, at least for determining the front-back location of sound sources, most listeners had not learned how to use the monaural spectral cue effectively (see Fig. 7). While the SSD-CI listeners did demonstrate a decreased left-right bias as compared with unilaterally-plugged NH listeners, it seems likely, given their poor front-back resolution, that they had learned to use an ML cue more so than a monaural spectral cue, but this is not conclusive (see Sections IV D and IV E above). The hypothesis that ML cues are primarily used over monaural spectral shape cues is supported by the results of Shub et al. (2008), who found no improvement in azimuthal localization for chronic monaurally-impaired listeners over acutely-monaural NH listeners when they were presented with spectrally consistent but amplitude-roved stimuli with feedback on every trial.

Listeners were tested in the stationary-head condition before they were introduced to the head movement condition and its underlying rationale, so as to avoid any use of even very small head movements while listeners were supposed to remain stationary. It is therefore possible that some portion of the improvement seen in sound source localization performance for the head movement conditions, especially for the NH plugged listeners, may have resulted from learning during the preceding stationary-head condition. Though it cannot be ruled out, several factors argue against any such learning accounting for more than a very small portion of the improvement. First, as Irving and Moore (2011) showed, improved monaural localization of broadband pink noise stimuli (as opposed to the high-frequency, bandpass-filtered stimuli presented in the present experiment) required listeners to train with feedback in the lab for five days while wearing their earplugs at all times. By contrast, in the present experiments each condition only lasted approximately twenty minutes, no feedback was given, and listeners had been fitted with their earplug minutes before the session. Listeners would therefore have had comparatively little time and no feedback with which to learn monaural localization while stationary, and so it seems likely that very little learning was accomplished. Second, it would seem that whatever learning listeners acquired while performing at roughly chance levels in the stationary condition (the average percent localization to the correct front-back hemifield was 58% for the monaurally-plugged NH listeners in the stationary condition) would be unlikely to provide the basis for much of an improvement in the subsequent head movement condition. Finally, during informal pilot testing, two of the authors and one lab assistant did the stationary, monaurally-plugged conditions several times in succession, interleaved with head motion conditions as well. To our surprise, none of the three of us was able to improve our stationary monaurally-plugged sound source localization performance. Nevertheless, Firszt et al. (2015) showed that variability between listeners was very high under their conditions, where listeners trained with feedback to localize sound sources with one ear, and so the experience of these three pilot listeners may not apply to all other listeners.

V. CONCLUSION

The present study extends the investigation of localization strategies during head movement. Our previous results (Pastore et al., 2018) showed that bilaterally-implanted listeners with only one CI turned on did not benefit from head turning. In the current study, however, SSD-CI listeners, who have one normal ear, showed improved performance in front-back judgments with head turns both with and without a CI in the other ear. In light of this contrasting result, we may speculate that a monaural preference for the normal ear is strengthened through learning, inasmuch as listeners did not reveal a substantial benefit from using the ILDs (which are distorted by automatic gain control in the implant processor) generated between the normal-hearing and CI ears. It further seems that SSD listeners may learn to rely more on ML cues than on high-frequency spectral cues, and that spectral cues are processed, in NH listeners, in a way that relies on having two ears and is highly integrated with the processing of binaural difference cues.

ACKNOWLEDGMENTS

Research was supported by grants from the National Institute on Deafness and Other Communication Disorders (NIDCD 5R01DC015214) and Facebook Reality Labs (both awarded to W.A. Yost) and NIDCD F32DC017676 (awarded to M. Torben Pastore). M. Torben Pastore conceived of and designed the experiments, analyzed the data, and wrote the manuscript. Sarah Natale and Colton Clayton screened and tested SSD-CI and NH listeners, respectively. Yi Zhou analyzed aspects of the data concerning listener plasticity and assisted in writing. William Yost is director of the lab and provided valuable input on the experimental design, data analysis, and editing of the paper. Michael Dorman provided access to the CI patients and expert advice on making the psychoacoustical measures on CI patients. We have no conflicts of interest to report.

VI. REFERENCES

- Agterberg MJ, Hol MK, Van Wanrooij M, Van Opstal AJ, and Snik AF (2014). “Single-sided deafness and directional hearing: contribution of spectral cues and high-frequency hearing loss in the hearing ear,” Front Neurosci 8, 188, https://www.ncbi.nlm.nih.gov/pubmed/25071433, doi: 10.3389/fnins.2014.00188.

- Archer-Boyd AW, and Carlyon RP (2019). “Simulations of the effect of unlinked cochlear-implant automatic gain control and head movement on interaural level differences,” J Acoust Soc Am 145(3), 1389–1400, doi: 10.1121/1.5093623.

- Bauer RW, Matuza JL, and Blackmer RF (1966). “Noise localization after unilateral attenuation,” J Acoust Soc Am 40(2), 441–444.

- Bronkhorst AW (2015). “The cocktail-party problem revisited: early processing and selection of multi-talker speech,” Atten Percept Psychophys http://link.springer.com/10.3758/s13414-015-0882-9, doi: 10.3758/s13414-015-0882-9.

- Buss E, Dillon MT, Rooth MA, King ER, Deres EJ, Buchman CA, Pillsbury HC, and Brown KD (2018). “Effect of Cochlear Implantation on Quality of Life in Adults with Unilateral Hearing Loss,” Trends in Hearing 22, 1–15, doi: 10.1159/000484079.

- Dillon MT, Buss E, Rooth MA, King ER, Deres EJ, Buchman CA, Pillsbury HC, and Brown KD (2018). “Effect of Cochlear Implantation on Quality of Life in Adults with Unilateral Hearing Loss,” Audiology and Neurotology 22(4–5), 259–271.

- Dirks C, Nelson PB, Sladen DP, and Oxenham AJ (2019). “Mechanisms of Localization and Speech Perception with Colocated and Spatially Separated Noise and Speech Maskers Under Single-Sided Deafness with a Cochlear Implant,” Ear and Hearing 40(6), 1293–1306, doi: 10.1097/aud.0000000000000708.

- Dorman MF, Loiselle LH, Cook SJ, Yost WA, and Gifford RH (2016). “Sound source localization by normal hearing listeners, hearing-impaired listeners and cochlear implant listeners,” Audio and Neuro 21, 127–131.

- Dorman MF, Zeitler DM, Cook SJ, Loiselle LH, Yost WA, Wanna GB, and Gifford RH (2015). “Interaural level difference cues determine sound source localization by single-sided deaf patients fit with a cochlear implant,” Audiol Neurootol 20(3), 183–188, doi: 10.1159/000375394.

- Edmonds BA, and Culling JF (2005). “The spatial unmasking of speech: evidence for within-channel processing of interaural time delay,” The Journal of the Acoustical Society of America 117(5), 3069–3078, doi: 10.1121/1.1880752.

- Firszt JB, Reeder RM, Dwyer NY, Burton H, and Holden LK (2015). “Localization training results in individuals with unilateral severe to profound hearing loss,” Hearing Research 319, 48–55, doi: 10.1016/j.heares.2014.11.005.

- Firszt JB, Reeder RM, and Holden LK (2017). Unilateral Hearing Loss: Understanding Speech Recognition and Localization Variability - Implications for Cochlear Implant Candidacy, 38, pp. 159–173.

- Florentine M (1976). “Relation between lateralization and loudness in asymmetrical hearing losses,” Journal of the American Audiology Society 1(6), 243–251, https://www.ncbi.nlm.nih.gov/pubmed/931759.