Abstract

This paper proposes machine learning approaches to support dentistry researchers in integrating imaging modalities to analyze the morphology of tooth crowns and roots. One of the challenges in jointly analyzing crowns and roots with precision is that two different imaging modalities are needed. Precision in dentistry is mainly driven by the characteristics of dental crown surfaces, but information on tooth root shape and position is of great value for successful root canal preparation, pulp regeneration, planning of orthodontic movement, and restorative and implant dentistry. An innovative approach is to use image processing and machine learning to combine crown surfaces, obtained with intraoral scanners, with three-dimensional volumetric images of the jaws and tooth root canals, obtained with cone-beam computed tomography. In this paper, we propose a widely applicable, patient-specific workflow for the classification and shape analysis of dental root canals and crowns.

Keywords: Deep learning, Shape analysis, Dentistry

1. Introduction

In dental imaging, machine learning techniques are becoming important for automatically isolating areas of interest in the dental crowns and roots [1]. Root resorption susceptibility has been associated with root morphology [2–4], and interest in the variability of root morphology has increased recently [5–7]. Analysis of root canal and crown shape and position has numerous clinical applications, such as root canal treatment, regenerative endodontic therapies, restorative crown shape planning to avoid inadequate forces on roots, and planning of orthodontic tooth movement. External apical root resorption (RR) is present in 7% to 15% of the population and in 73% of individuals who have undergone orthodontic treatment [8, 9].

Here, we propose automated root canal and crown segmentation methods based on image processing, machine learning, shape analysis and geometric learning in medical imaging. The proposed methods are implemented as open-source software in two pipelines. The first pipeline is based on U-Net for automatic root canal segmentation from cone-beam computed tomography (CBCT) images, and the second is based on the ResNet architecture for automatic segmentation of digital dental models (DDM) acquired with intraoral scanners. The proposed analysis has three main phases: (i) feature extraction from raw volumetric images and surface meshes, (ii) identification of representative anatomic regions, and (iii) voxel-by-voxel and mesh-vertex classification. The proposed automatic segmentation methods provide clinicians with labels of the crown and root morphologies.

The paper is organized as follows. Sect. 2 describes the materials and methods of the proposed approach. The experiments and results are described in Sect. 3, and Sect. 4 is dedicated to the discussion and conclusion.

2. Materials and Methods

2.1. Material

This retrospective study was approved by the Institutional Review Board. The present study was a secondary data analysis, and no CBCT scan was acquired specifically for this research. The data consisted of 40 mandibular digital dental models (DDM) and CBCT scans of the same subjects; all subjects were imaged with both imaging modalities. The mandibular CBCT scans were obtained using the Veraviewepocs 3D R100 (J Morita Corp.) with the following acquisition protocol: FOV 100 × 80 mm; 0.16 mm³ voxel size; 90 kVp; 3 to 5 mA; and 9.3 s. DDM of the mandibular arch were acquired by intraoral scanning with the TRIOS 3D intraoral scanner (3Shape; software version: TRIOS 1.3.4.5). The TRIOS intraoral scanner (IOS) uses “ultrafast optical sectioning” and confocal microscopy to generate 3D images from multiple two-dimensional images with an accuracy of 6.9 ± 0.9 μm. All scans were obtained by one trained operator according to the manufacturer’s instructions.

2.2. Methods

Two open-source software packages, ITK-SNAP version 3.8 [10] and Slicer version 4.11 [11], were used to perform interactive manual segmentation of the volumetric images and to bring the mandibular dental arches into a common orientation for training the learning models. All IOS and CBCT scans were registered to each other using the validated protocol described by Ioshida et al. [12]. In this paper, we propose two machine learning pipelines to improve the segmentation of root canals in volumetric images and of dental crowns in surface scans. For the root canals, we first perform pre-processing, in which we enhance image quality and increase the ratio of root canal pixels to background pixels. Then, we train a deep learning model to segment the root canals. Because the results may contain outliers and the images may be over- or under-segmented, we perform post-processing to address these issues.

Automatic Root Canal Segmentation.

One of the main issues in many machine learning applications is class imbalance. In the first pipeline, root canal pixels make up only a small percentage of the entire scan; to deal with this imbalance and improve accuracy, we performed slice cropping to increase the ratio of root canal pixels to background pixels. All 3D volumetric scans were cropped, depending on their size, to keep only the region of interest containing the root canal pixels. Cross-sectional images without root canals were automatically removed so that the neural network was fed almost exclusively with images of interest. The algorithm selected the same anatomic cropping region for every 3D scan in the dataset, split it into 2D cross-sections, and resized every cross-section to 512 × 512 pixels. Contrast adjustment was performed because the original 3D scans had low contrast. After image pre-processing, 150 cross-sectional images were obtained for each patient.
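The slice-based pre-processing described above can be sketched in Python with NumPy. This is a minimal illustration under stated assumptions, not the authors' code: the fixed box `roi`, slicing along the first array axis, nearest-neighbour resizing and the percentile contrast stretch are all hypothetical choices, and the root canal label mask is assumed to be the criterion used to discard slices without root canals.

```python
import numpy as np


def resize_nearest(img, out_shape=(512, 512)):
    """Nearest-neighbour resize of a 2D array (keeps label maps binary)."""
    rows = (np.arange(out_shape[0]) * img.shape[0] / out_shape[0]).astype(int)
    cols = (np.arange(out_shape[1]) * img.shape[1] / out_shape[1]).astype(int)
    return img[np.ix_(rows, cols)]


def stretch_contrast(img, low_pct=1.0, high_pct=99.0):
    """Percentile-based contrast stretch to [0, 1] (one possible adjustment)."""
    lo, hi = np.percentile(img, [low_pct, high_pct])
    if hi <= lo:
        return np.zeros(img.shape, dtype=np.float32)
    return np.clip((img - lo) / (hi - lo), 0.0, 1.0).astype(np.float32)


def preprocess(volume, mask, roi, out_shape=(512, 512)):
    """Crop a CBCT volume to a fixed ROI, split it into 2D slices along axis 0,
    drop slices with no root canal voxels, then contrast-adjust and resize.

    roi = (z0, z1, y0, y1, x0, x1) is a hypothetical fixed anatomic box.
    """
    z0, z1, y0, y1, x0, x1 = roi
    vol = volume[z0:z1, y0:y1, x0:x1]
    lab = mask[z0:z1, y0:y1, x0:x1]
    images, labels = [], []
    for k in range(vol.shape[0]):
        if not lab[k].any():  # slice contains no root canal: skip it
            continue
        images.append(resize_nearest(stretch_contrast(vol[k]), out_shape))
        labels.append(resize_nearest(lab[k], out_shape))
    if not images:
        return (np.empty((0, *out_shape), np.float32),
                np.empty((0, *out_shape), mask.dtype))
    return np.stack(images), np.stack(labels)
```

Nearest-neighbour resizing is used here only so that label maps stay binary; intensity images would more commonly be resized with an interpolating method.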

UNet Model Training.

This dataset was then used to train a U-Net model [13, 14]. This network was first developed for biomedical image segmentation and has since been used in other applications, such as field boundary extraction from satellite images [15]. The encoder first hierarchically extracts low-level features and recombines them into higher-level features. The decoder then performs pixel-wise classification from these multiple features. The encoder–decoder architecture consists of down-sampling blocks that extract features and up-sampling blocks that infer the segmentation at the same resolution as the input. Training was run for 100 epochs with 400 steps per epoch and a learning rate of 1e-5.
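To make the encoder–decoder description concrete, the following is a deliberately tiny, untrained NumPy sketch of the U-Net data flow: down-sampling, a bottleneck, then up-sampling with concatenated skip connections. It is not the network of [13, 14]: the 1 × 1 channel-mixing layers with random weights stand in for learned 3 × 3 convolution blocks, and all helper names are hypothetical.

```python
import numpy as np


def max_pool2(x):
    """2x2 max pooling on a (C, H, W) array; H and W must be even."""
    c, h, w = x.shape
    return x.reshape(c, h // 2, 2, w // 2, 2).max(axis=(2, 4))


def upsample2(x):
    """Nearest-neighbour 2x up-sampling of a (C, H, W) array."""
    return x.repeat(2, axis=1).repeat(2, axis=2)


def pointwise(x, rng, c_out):
    """1x1 'convolution' (channel mixing) with random weights, then ReLU."""
    w = rng.normal(size=(c_out, x.shape[0])) / np.sqrt(x.shape[0])
    return np.maximum(np.einsum("oc,chw->ohw", w, x), 0.0)


def toy_unet(x, depth=3, width=8, seed=0):
    """Untrained encoder-decoder with skip connections; returns a (1, H, W)
    per-pixel probability map at the input resolution."""
    rng = np.random.default_rng(seed)
    skips = []
    for _ in range(depth):  # encoder: extract features, keep skip, down-sample
        x = pointwise(x, rng, width)
        skips.append(x)
        x = max_pool2(x)
        width *= 2
    x = pointwise(x, rng, width)  # bottleneck
    for skip in reversed(skips):  # decoder: up-sample, concatenate skip, mix
        x = upsample2(x)
        x = np.concatenate([x, skip], axis=0)
        x = pointwise(x, rng, skip.shape[0])
    w_out = rng.normal(size=(1, x.shape[0])) / np.sqrt(x.shape[0])
    logits = np.einsum("oc,chw->ohw", w_out, x)
    return 1.0 / (1.0 + np.exp(-logits))  # sigmoid: root canal vs background
```

The output has the same spatial size as the input provided height and width are divisible by 2^depth (512 is). For scale, the reported schedule of 100 epochs × 400 steps per epoch amounts to 40,000 optimization steps.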

We also performed 10-fold cross-validation in which each fold contained 4 scans. Therefore, 10 models were trained, and for each model, 9 folds (36 scans) were used for training and 1 fold (4 scans) was used as the validation set. To assess the results, the area under the ROC curve (AUC), F1 score, accuracy, sensitivity and specificity were computed between the machine learning segmentation and the semi-manual segmentation. We did not find a model for which all of these measurements were the highest. Therefore, the trained model with the highest AUC and adequate F1 score, accuracy, sensitivity and specificity was selected and used as the reference model for segmentation.
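The evaluation protocol (a scan-level 10-fold split and pixel-wise metrics) can be sketched as follows. The random fold assignment, the 0.5 threshold and the rank-based (Mann–Whitney) AUC are assumptions made for illustration; the paper does not state how folds were drawn or how AUC was computed.

```python
import numpy as np


def kfold_splits(n_scans=40, n_folds=10, seed=0):
    """Yield (train_idx, val_idx) for scan-level k-fold cross-validation:
    40 scans / 10 folds -> 36 training scans and 4 validation scans per model."""
    rng = np.random.default_rng(seed)
    folds = np.array_split(rng.permutation(n_scans), n_folds)
    for k in range(n_folds):
        train = np.concatenate([f for j, f in enumerate(folds) if j != k])
        yield train, folds[k]


def auc_mann_whitney(y_true, y_score):
    """ROC AUC via the Mann-Whitney U statistic, with average ranks for ties.
    y_true must be a boolean array."""
    _, inv, counts = np.unique(y_score, return_inverse=True, return_counts=True)
    avg_rank = np.cumsum(counts) - (counts - 1) / 2.0  # 1-based mean rank per value
    ranks = avg_rank[inv]
    n_pos = int(y_true.sum())
    n_neg = y_true.size - n_pos
    return (ranks[y_true].sum() - n_pos * (n_pos + 1) / 2.0) / (n_pos * n_neg)


def segmentation_metrics(y_true, y_score, threshold=0.5):
    """Pixel-wise metrics between a reference mask and predicted probabilities."""
    y_true = np.asarray(y_true).ravel().astype(bool)
    y_score = np.asarray(y_score, dtype=float).ravel()
    y_pred = y_score >= threshold
    tp = np.sum(y_pred & y_true)
    tn = np.sum(~y_pred & ~y_true)
    fp = np.sum(y_pred & ~y_true)
    fn = np.sum(~y_pred & y_true)
    return {
        "auc": float(auc_mann_whitney(y_true, y_score)),
        "f1": float(2 * tp / (2 * tp + fp + fn)),
        "accuracy": float((tp + tn) / y_true.size),
        "sensitivity": float(tp / (tp + fn)),
        "specificity": float(tn / (tn + fp)),
    }
```

Selecting the reference model by highest AUC, as described above, would then amount to `max(results, key=lambda m: m["auc"])` over the per-fold metric dictionaries.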

Automatic Dental Crowns Segmentation.

The data analytics of the 3D dental model surfaces requires extracting shape features of the dental crowns. The approach consists of rendering 2D images of the 3D dental surface and extracting their associated shape features plus the corresponding labels (background, gum, boundary between teeth and gum, teeth). The ground truth labeling of the dental surface is done with a region growing algorithm that uses the minimum curvature as its stopping criterion, followed by manual correction of misclassified regions.
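A minimal sketch of curvature-limited region growing on a mesh graph is shown below. The vertex adjacency lists, the per-vertex minimum principal curvature and the threshold are hypothetical inputs; curvature estimation and the subsequent manual correction are outside its scope, and the direction of the stopping test (growth stops where the minimum curvature drops below a threshold, as at the concave tooth–gingiva margin) is our assumption.

```python
from collections import deque

import numpy as np


def region_grow(adjacency, min_curvature, seed, threshold):
    """Breadth-first region growing over mesh vertices.

    adjacency: list of neighbour-index lists (one per vertex).
    min_curvature: per-vertex minimum principal curvature.
    Growth stops at vertices whose minimum curvature is below `threshold`
    (assumed stopping rule: strongly concave creases bound the region).
    """
    in_region = np.zeros(len(adjacency), dtype=bool)
    if min_curvature[seed] < threshold:
        return in_region
    in_region[seed] = True
    queue = deque([seed])
    while queue:
        v = queue.popleft()
        for u in adjacency[v]:
            if not in_region[u] and min_curvature[u] >= threshold:
                in_region[u] = True
                queue.append(u)
    return in_region
```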

The 2D training samples are then generated by centering and scaling the mesh inside a sphere of radius one. The surface of the sphere is sampled regularly using icosahedron subdivision, and each sample point is used to create a plane tangent to the sphere. The tangent plane serves as the starting point of a ray-casting algorithm, i.e., the dental surface is probed by rays cast from the tangent plane. When an intersection is found, the surface normal and the distance to the intersection are used as image features. The corresponding ground truth label map is also extracted at this step.
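The sampling geometry can be sketched as follows: normalize the mesh into the unit sphere, place viewpoints on an icosahedron subdivided with frequency `freq` (each face split into `freq`² triangles), and build a grid of ray origins on each tangent plane. This is an illustrative sketch, not the DentalModelSeg implementation [18]; the function names and the tangent-plane extent [-1, 1]² are assumptions. This kind of subdivision yields 10·`freq`² + 2 viewpoints, so `freq = 5` would give 252, which matches the 252 images per scan reported for DentalModelSeg, but we have not confirmed that this is the setting used.

```python
import numpy as np

_T = (1.0 + 5.0 ** 0.5) / 2.0  # golden ratio
ICO_VERTS = np.array([
    (-1, _T, 0), (1, _T, 0), (-1, -_T, 0), (1, -_T, 0),
    (0, -1, _T), (0, 1, _T), (0, -1, -_T), (0, 1, -_T),
    (_T, 0, -1), (_T, 0, 1), (-_T, 0, -1), (-_T, 0, 1)], dtype=float)
ICO_FACES = [
    (0, 11, 5), (0, 5, 1), (0, 1, 7), (0, 7, 10), (0, 10, 11),
    (1, 5, 9), (5, 11, 4), (11, 10, 2), (10, 7, 6), (7, 1, 8),
    (3, 9, 4), (3, 4, 2), (3, 2, 6), (3, 6, 8), (3, 8, 9),
    (4, 9, 5), (2, 4, 11), (6, 2, 10), (8, 6, 7), (9, 8, 1)]


def normalize_to_unit_sphere(points):
    """Center mesh vertices at their centroid and scale them into radius 1."""
    centered = points - points.mean(axis=0)
    return centered / np.linalg.norm(centered, axis=1).max()


def icosphere_points(freq):
    """Split each icosahedron face into freq**2 triangles and project the
    vertices onto the unit sphere; returns 10 * freq**2 + 2 unique points."""
    points = {}
    for a, b, c in ICO_FACES:
        for i in range(freq + 1):
            for j in range(freq + 1 - i):
                weights = ((a, freq - i - j), (b, i), (c, j))
                # integer barycentric key: identical for points shared by faces
                key = tuple(sorted((idx, wt) for idx, wt in weights if wt > 0))
                if key not in points:
                    p = sum(wt * ICO_VERTS[idx] for idx, wt in key) / freq
                    points[key] = p / np.linalg.norm(p)
    return np.array(list(points.values()))


def tangent_plane_rays(s, res=512):
    """Ray origins on the plane tangent to the unit sphere at s, spanning
    [-1, 1] x [-1, 1], and the common ray direction -s (towards the object)."""
    helper = np.array([1.0, 0.0, 0.0]) if abs(s[0]) < 0.9 else np.array([0.0, 1.0, 0.0])
    u = np.cross(s, helper)
    u /= np.linalg.norm(u)
    v = np.cross(s, u)
    g = np.linspace(-1.0, 1.0, res)
    gu, gv = np.meshgrid(g, g, indexing="ij")
    origins = s + gu[..., None] * u + gv[..., None] * v
    return origins.reshape(-1, 3), -s
```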

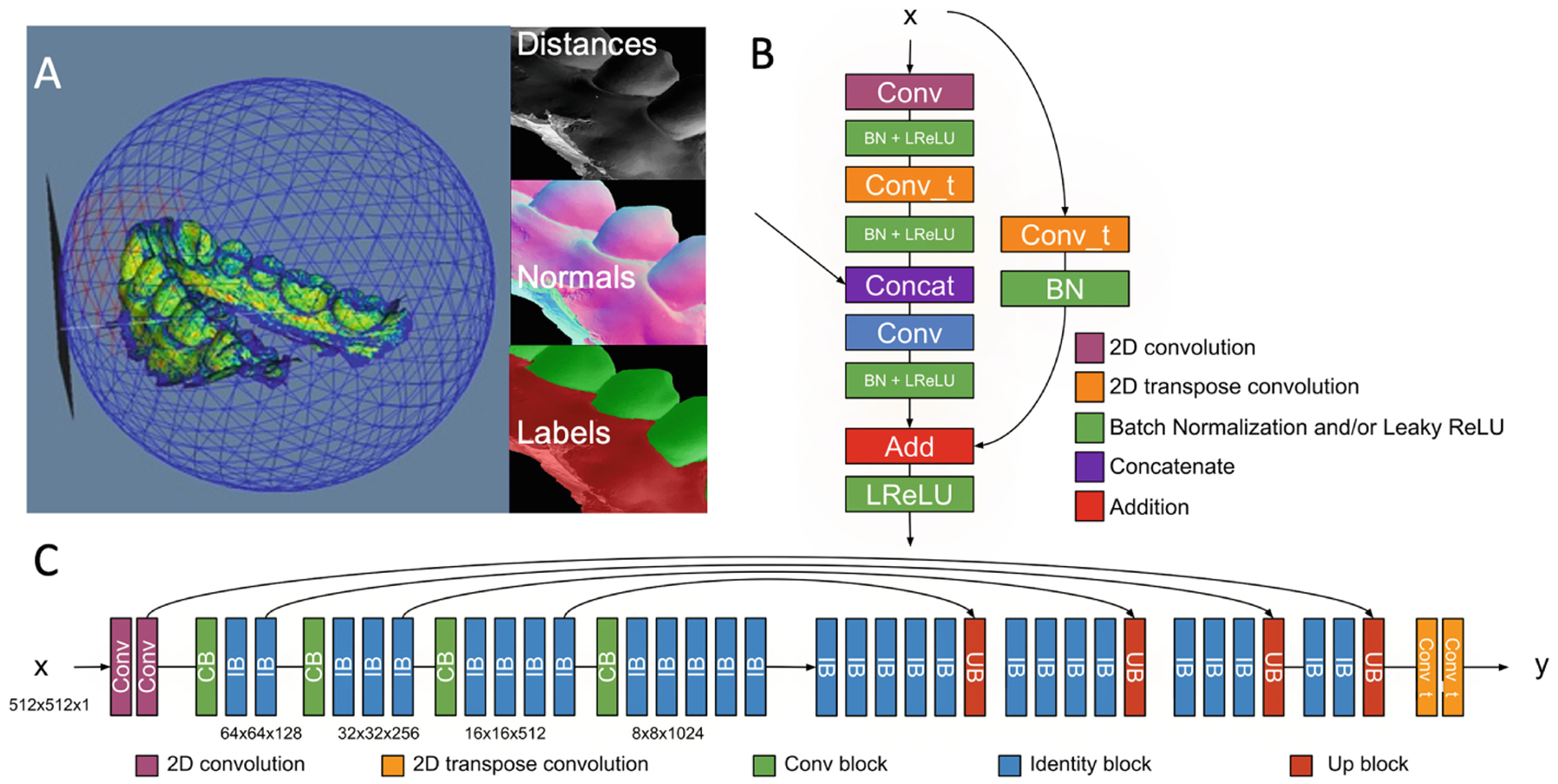

Figure 1A shows the wireframe mesh of the sphere, the object inside the sphere, the plane tangent to the sphere and a perpendicular ray starting at the tangent plane. The plane resolution is set to 512 × 512. These purely geometric features were chosen because they led to higher classification accuracy in our preliminary study [16] and depend neither on the position nor on the orientation of the model, which may vary across the population. The images are then used to train a modified U-Net model with residual connections similar to those of ResNet [17]. The modified architecture is shown in Fig. 1B and C. Specifically, the up-sampling block shown in Fig. 1B is modified to mirror the down-sampling blocks of ResNet in Fig. 1C; this architecture is referred to as RU-Net.

Fig. 1.

PSCP mesh segmentation. A - Sphere and tangent plane created around the dental surface mesh, and the extracted shape features; B - Up-sampling block; C - RU-Net neural network architecture.
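The per-ray features (unit surface normal plus distance to the hit) can be illustrated with a brute-force caster built on the Möller–Trumbore ray/triangle test. This is a sketch under stated assumptions: each pixel gets a 4-channel feature vector, rays that miss are left at zero, and the normal orientation follows the triangle winding. Its cost grows with rays × triangles, so a practical pipeline would rely on an accelerated ray caster instead.

```python
import numpy as np


def ray_triangle(origin, direction, v0, v1, v2, eps=1e-12):
    """Moller-Trumbore ray/triangle intersection; returns distance t or None."""
    e1, e2 = v1 - v0, v2 - v0
    p = np.cross(direction, e2)
    det = e1 @ p
    if abs(det) < eps:
        return None  # ray parallel to the triangle plane
    inv = 1.0 / det
    s = origin - v0
    u = (s @ p) * inv
    if u < 0.0 or u > 1.0:
        return None
    q = np.cross(s, e1)
    v = (direction @ q) * inv
    if v < 0.0 or u + v > 1.0:
        return None
    t = (e2 @ q) * inv
    return t if t > eps else None


def cast_features(origins, direction, vertices, faces):
    """For each ray, store the closest hit's unit face normal (3 channels) and
    hit distance (1 channel); misses stay zero (background)."""
    feats = np.zeros((len(origins), 4))
    for r, o in enumerate(origins):
        best = np.inf
        for a, b, c in faces:
            t = ray_triangle(o, direction, vertices[a], vertices[b], vertices[c])
            if t is not None and t < best:
                n = np.cross(vertices[b] - vertices[a], vertices[c] - vertices[a])
                feats[r, :3] = n / np.linalg.norm(n)
                feats[r, 3] = t
                best = t
    return feats
```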

Labels on a new dental model are predicted with a majority-vote scheme: a single point on the dental surface may be captured by several tangent planes, and the label with the greatest number of votes is set as the final label of that point. A post-processing step is then applied to remove islands, i.e., if a region has fewer than 1000 points, its label is set to that of the closest labeled region. The final output of the algorithm is a labeled mesh with label 0 for gingiva, 1 for boundary and 2 for dental crown. Finally, the computed boundary helps provide individual labels for each crown. The final learning model, DentalModelSeg [18], was trained on 40 scans × 252 images/scan = 10,080 images. The DentalModelSeg tool, part of the pipelines of the patient-specific classification and prediction (PSCP) tool, has been deployed in an open web system for Data Storage, Computation and Integration, the DSCI [19], for execution of the automated tasks [20].
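The voting and island-removal steps can be sketched as below. Two choices are assumptions: ties in the vote go to the lowest label index (the behaviour of NumPy `argmax`), and a small island is relabeled with the label most frequent along its border, which approximates, but is not necessarily identical to, the "closest labeled region" rule described above. The vertex ids, labels and adjacency lists are hypothetical inputs.

```python
from collections import Counter, deque

import numpy as np


def majority_vote(vertex_ids, labels, n_vertices, n_labels=3):
    """Combine (vertex, label) votes from all tangent planes into one label per
    vertex; vertices never hit by any ray get -1."""
    counts = np.zeros((n_vertices, n_labels), dtype=int)
    np.add.at(counts, (np.asarray(vertex_ids), np.asarray(labels)), 1)
    out = counts.argmax(axis=1)
    out[counts.sum(axis=1) == 0] = -1
    return out


def remove_islands(labels, adjacency, min_size=1000):
    """Relabel connected same-label regions smaller than min_size with the
    label most common on their border (assumed stand-in for 'closest region')."""
    labels = np.array(labels, copy=True)
    seen = np.zeros(len(labels), dtype=bool)
    for start in range(len(labels)):
        if seen[start]:
            continue
        comp, border = [start], Counter()
        seen[start] = True
        queue = deque([start])
        while queue:  # breadth-first search over vertices with the same label
            v = queue.popleft()
            for u in adjacency[v]:
                if labels[u] == labels[start]:
                    if not seen[u]:
                        seen[u] = True
                        comp.append(u)
                        queue.append(u)
                else:
                    border[labels[u]] += 1
        if len(comp) < min_size and border:
            labels[comp] = border.most_common(1)[0][0]
    return labels
```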

3. Results

3.1. Automatic Root Canal Segmentation

Quantitative measurements of AUC, F1 score, accuracy, sensitivity and specificity are presented in Table 1, which shows the average and standard deviation of each measurement over the 10 trained models. The F1 scores of the 10 folds had a standard deviation of 0.077. As can be seen, the specificity values are higher than 0.99 with a standard deviation close to zero, whereas the sensitivity values have a higher standard deviation. The lower and more variable sensitivity could be due to class imbalance and the small number of samples. Fig. 2 shows an example of manual and automatic root canal segmentation.

Table 1.

AUC, F1 Score, accuracy, sensitivity and specificity of the proposed approach

| | F1 Score | AUC | Sensitivity | Specificity | Accuracy |
|---|---|---|---|---|---|
| Average | 0.7324 | 0.9174 | 0.8271 | 0.9997 | 0.9996 |
| SD | 0.0774 | 0.0548 | 0.1087 | 0.0001 | 0.0001 |

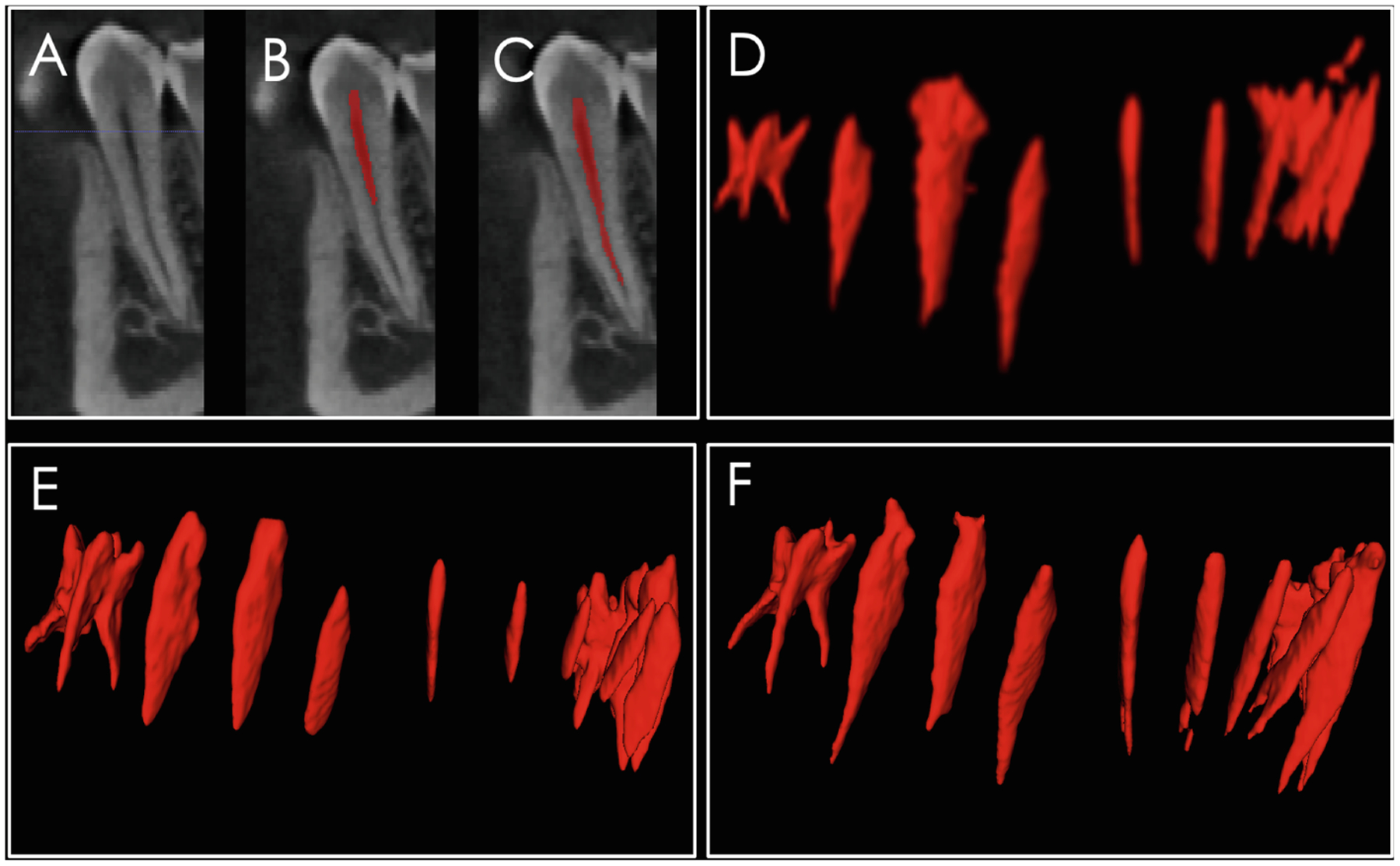

Fig. 2.

A, CBCT scan gray level sagittal image at the premolar region; B, CBCT scan with manually labeled root canal; C, automatic segmentation combining image processing and machine learning approach; D, rendering of the root canals from molar to molar showing the initial automatic segmentation using image processing; E, manual segmentation, which often misses the apical portions of the root canal; and F, the combined image processing and machine learning segmentation that clearly identifies the root canal morphology for each tooth.

3.2. Automatic Dental Crowns Segmentation

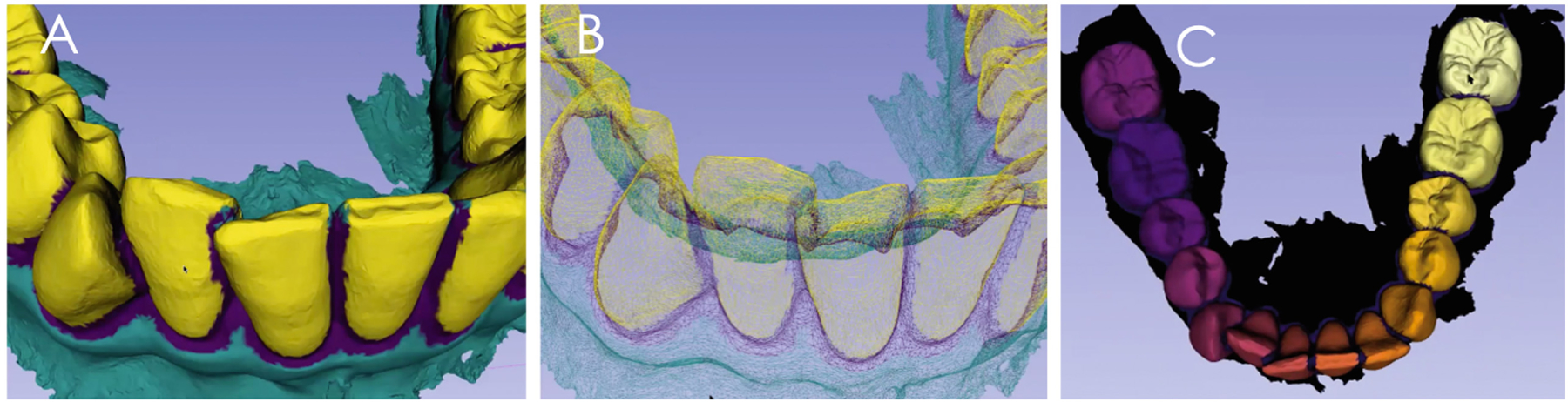

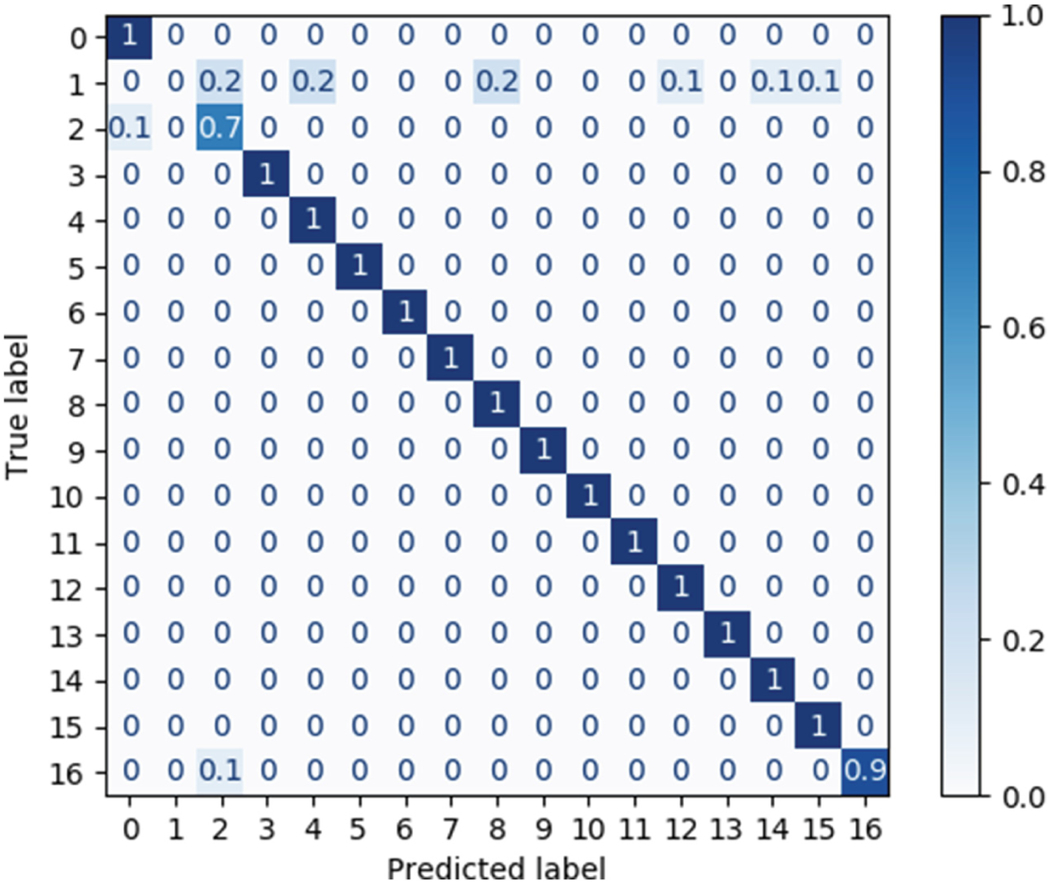

The trained model detects a continuous boundary between the crown and the gingiva and segments the individual dental crowns for the 40 scans in the dataset, as shown in Fig. 3. The accuracy of the model is shown as a confusion matrix for 7 randomly selected scans (Table 2). Each diagonal entry of the confusion matrix gives the fraction of points with a given true label that received that same predicted label; the closer it is to one, the better that label was predicted. The second label (the boundary) showed the worst performance (0.7) due to the dilation performed to detect boundaries.
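Assuming Table 2 is row-normalized (each row divided by the number of points with that true label), it can be computed as follows; the function name and the label order (0 gingiva, 1 boundary, 2 crown) are illustrative.

```python
import numpy as np


def normalized_confusion(y_true, y_pred, n_labels=3):
    """Row-normalized confusion matrix: entry [i, j] is the fraction of points
    with true label i predicted as j, so the diagonal is per-label recall."""
    cm = np.zeros((n_labels, n_labels), dtype=float)
    np.add.at(cm, (np.asarray(y_true), np.asarray(y_pred)), 1.0)
    row_sums = cm.sum(axis=1, keepdims=True)
    # rows with no true points are left as zeros instead of dividing by zero
    return np.divide(cm, row_sums, out=np.zeros_like(cm), where=row_sums > 0)
```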

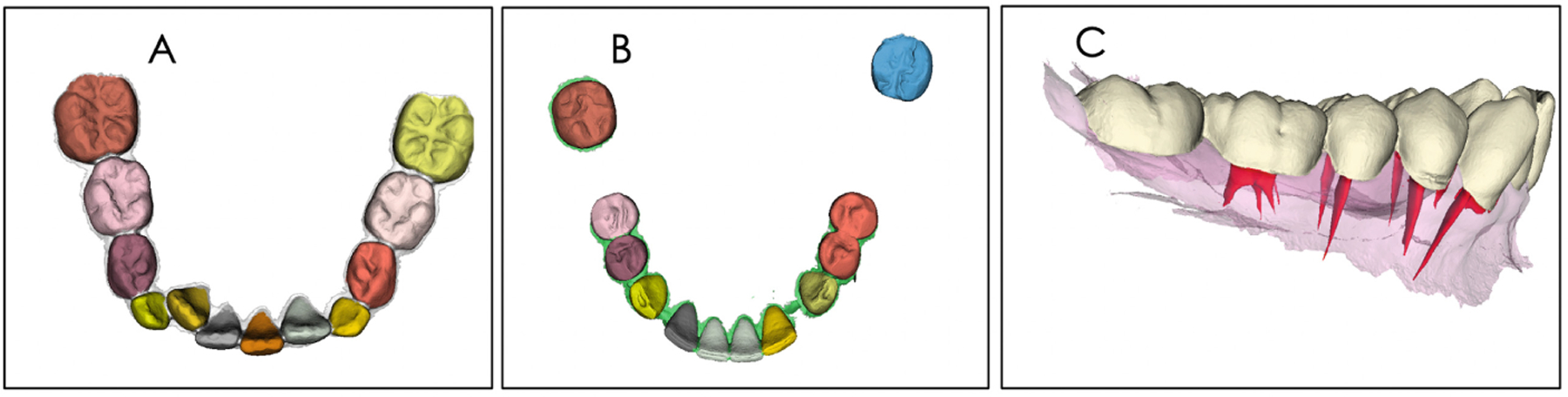

Fig. 3.

A, the identification of shape parameters segments the dental crowns; B, errors in the boundaries are post-processed with region growing; and C, the machine learning segmentation clearly identifies the dental crown morphology for each tooth.

Table 2.

Confusion matrix of the true and predicted labels for 7 randomly selected scans


4. Discussion and Conclusions

A better understanding of root canal morphology has the potential to increase the chance of successful root canal preparation, improve the treatment of pulpally involved teeth, and indicate the root position for planning orthodontic movement and/or implant dentistry. In this paper, we proposed automated image processing and machine learning based methods to segment both root canals and digital dental models automatically. The two approaches presented in this paper facilitate the integration of the two imaging modalities, which provide complementary information to the dental clinician. The first approach uses U-Net for automated root canal segmentation from CBCT images. The second approach uses the ResNet architecture for automatic segmentation of crowns from digital dental models. Because the patients imaged with CBCT were also imaged with the intraoral scanner, multi-modal registration with a validated protocol enabled multi-modal fusion, combining the two approaches for each individual patient.

In the automated root canal algorithm, we performed pre-processing to deal with class imbalance and then trained deep learning models. While image processing performs well for in vitro root canal segmentation [21], as shown in Fig. 2D, image processing alone often fails to segment the root apex anatomy. The first model was based on the U-Net architecture and the second on the RU-Net architecture. We performed 10-fold cross-validation, evaluated the 10 models using several metrics, including F1 score, AUC, sensitivity and specificity, and achieved very high specificity. However, the sensitivity values need improvement. The lower sensitivity could be due to variations in the field of view of the CBCT scans (e.g., some scans include only the lower jaw, while others include both jaws), class imbalance and the small sample size. In future work, to obtain more training samples and improve the performance of the models, we will first use the trained models to segment new scans; clinician users can then interactively correct the segmented results, as it is easier to edit segmented images than to segment them manually from scratch.

Interestingly, the results shown in Fig. 2 reveal that the automatic segmentation identifies the root canal apex better than the manual segmentation does. Because the manual reference misses them, these segmented pixels in the deeper parts of the root canals are counted as false positives, even though they belong to the root canals. Since manual segmentation often fails to capture the root canal apex and other anatomic details, the automatic segmentation may in fact be more anatomically precise.

The automatic dental crown segmentation in the DentalModelSeg tool was trained with digital dental models of the full permanent dentition. All dental models were stored and processed in the DSCI open web system [19] for execution of the automated tasks. Preliminary testing of the trained model included segmentation of dental crowns in digital dental models of the primary and mixed dentition stages, as well as cases with unerupted, missing or ectopically positioned teeth. Future work will require individual labeling of the primary and/or permanent teeth, because the current individual labels do not follow a specific order; they are assigned based on the internal ordering of the mesh points. Providing specific, unique labels for each crown is desirable for the generalizability of the proposed approaches (Fig. 4).

Fig. 4.

The trained model was tested on A, a mixed-dentition DDM, and B, a DDM with missing teeth; C shows the integration of root canal and crown morphology. Note that the trained model properly segmented the individual teeth of the mixed dentition in A, but segmentation of the DDM with missing teeth in B still requires further learning.

The automatic segmentation algorithms proposed in this study allow shape processing and analysis with precise learning and classification of whole-tooth data. Both intraoral surface scans and gray-level volumetric images can be segmented accurately with the methods presented in this paper. Analyzing and understanding 3D shapes for segmentation and classification remains challenging due to the varied geometric shapes of teeth, complex tooth arrangements, differing dental model quality, and varying degrees of crowding. Clinical applications of the proposed algorithms will benefit from future work comparing the performance of state-of-the-art neural networks and from quantitative shape analysis of root and crown morphology and position.

Acknowledgments.

Supported by NIH DE R01DE024450, R21DE025306 and R01 EB021391.

References

- 1. Ko CC, et al.: Machine Learning in Orthodontics: Application Review. Craniofacial Growth Series, vol. 56, pp. 117–135 (2020). http://hdl.handle.net/2027.42/153991

- 2. Xu X, Liu C, Zheng Y: 3D tooth segmentation and labeling using deep convolutional neural networks. IEEE Trans. Vis. Comput. Graph. 25(7), 2336–2348 (2019). 10.1109/TVCG.2018.2839685

- 3. Elhaddaoui R, et al.: Resorption of maxillary incisors after orthodontic treatment: clinical study of risk factors. Int. Orthod. 14, 48–64 (2016). 10.1016/j.ortho.2015.12.015

- 4. Marques LS, Ramos-Jorge ML, Rey AC, Armond MC, Ruellas AC: Severe root resorption in orthodontic patients treated with the edgewise method: prevalence and predictive factors. Am. J. Orthod. Dentofac. Orthop. 137, 384–388 (2010). 10.1016/j.ajodo.2008.04.024

- 5. Marques LS, Chaves KC, Rey AC, Pereira LJ, Ruellas AC: Severe root resorption and orthodontic treatment: clinical implications after 25 years of follow-up. Am. J. Orthod. Dentofac. Orthop. 139, S166–S169 (2011). 10.1016/j.ajodo.2009.05.032

- 6. Kamble RH, Lohkare S, Hararey PV, Mundada RD: Stress distribution pattern in a root of maxillary central incisor having various root morphologies: a finite element study. Angle Orthod. 82, 799–805 (2012). 10.2319/083111-560.1

- 7. Oyama K, Motoyoshi M, Hirabayashi M, Hosoi K, Shimizu N: Effects of root morphology on stress distribution at the root apex. Eur. J. Orthod. 29, 113–117 (2007). 10.1093/ejo/cjl043

- 8. Lupi JE, Handelman CS, Sadowsky C: Prevalence and severity of apical root resorption and alveolar bone loss in orthodontically treated adults. Am. J. Orthod. Dentofac. Orthop. 109(1), 28–37 (1996). 10.1016/s0889-5406(96)70160-9

- 9. Ahlbrecht CA, et al.: Three-dimensional characterization of root morphology for maxillary incisors. PLoS ONE 12(6), e0178728 (2017). 10.1371/journal.pone.0178728

- 10. ITK-SNAP. www.itksnap.org (2020). Accessed 30 June 2020

- 11. Slicer, version 4.11. www.slicer.org. Accessed 30 June 2020

- 12. Ioshida M, et al.: Accuracy and reliability of mandibular digital model registration with use of the mucogingival junction as the reference. Oral Surg. Oral Med. Oral Pathol. Oral Radiol. 127(4), 351–360 (2019). 10.1016/j.oooo.2018.10.003

- 13. Ronneberger O, Fischer P, Brox T: U-net: convolutional networks for biomedical image segmentation. In: Navab N, Hornegger J, Wells W, Frangi A (eds.) International Conference on Medical Image Computing and Computer-Assisted Intervention. Lecture Notes in Computer Science, vol. 9351, pp. 234–241. Springer, Cham (2015). 10.1007/978-3-319-24574-4_28

- 14. https://github.com/zhixuhao/unet. Accessed 30 June 2020

- 15. Waldner F, Diakogiannis FI: Deep learning on edge: extracting field boundaries from satellite images with a convolutional neural network. Remote Sens. Environ. 245, 111741 (2020)

- 16. Ribera NT: Shape variation analyzer: a classifier for temporomandibular joint damaged by osteoarthritis. Proc. SPIE Int. Soc. Opt. Eng. 10950, 1095021 (2019). 10.1117/12.2506018

- 17. He K, Zhang X, Ren S, Sun J: Deep residual learning for image recognition. In: Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, vol. 7780459, pp. 770–778 (2016)

- 18. DentalModelSeg source code and documentation. https://github.com/DCBIA-OrthoLab/flyby-cnn. Accessed 30 June 2020

- 19. Data Storage Computation and Integration, DSCI. www.dsci.dent.umich.edu. Accessed 30 June 2020

- 20. Michoud L, et al.: A web-based system for statistical shape analysis in temporomandibular joint osteoarthritis. Proc. SPIE Int. Soc. Opt. Eng. 10953, 109530T (2019). 10.1117/12.250603

- 21. Michetti J, Basarab A, Diemer F, Kouame D: Comparison of an adaptive local thresholding method on CBCT and μCT endodontic images. Phys. Med. Biol. 63(1), 015020 (2017). 10.1088/1361-6560/aa90ff