Abstract

Background

Timely recognition of patient deterioration remains challenging. Ambulatory monitoring systems (AMSs) may provide support to current monitoring practices; however, they need to be thoroughly tested before implementation in the clinical environment for early detection of deterioration.

Objective

The objective of this study was to assess the wearability of a selection of commercially available AMSs to inform a future prospective study of ambulatory vital sign monitors in an acute hospital ward.

Methods

Five pulse oximeters (4 with finger probes and 1 wrist-worn only, collecting pulse rates and oxygen saturation) and 2 chest patches (collecting heart rates and respiratory rates) were selected for this study. The 2 chest-worn patches were VitalPatch (VitalConnect) and Peerbridge Cor (Peerbridge); the 4 wrist-worn devices with finger probe were Nonin WristOx2 3150 (Nonin), Checkme O2+ (Viatom Technology), PC-68B, and AP-20 (both from Creative Medical); and the 1 solely wrist-worn device was Wavelet (Wavelet Health). Adult participants wore each device for up to 72 hours while performing usual “activities of daily living” and were asked to score perceived exertion and perception of pain or discomfort using the Borg CR-10 scale, to rate thoughts and feelings caused by the AMS using the Comfort Rating Scale (CRS), and to provide general free text feedback. Medians and IQRs were reported, and nonparametric tests were used to assess differences between the devices’ CRS scores.

Results

Quantitative scores and feedback were collected in 70 completed questionnaires from 20 healthy volunteers, with each device tested approximately 10 times. The Wavelet seemed to be the most wearable device (P<.001) with an overall median (IQR) CRS score of 1.00 (0.88). There were no statistically significant differences in wearability between the chest patches in the CRS total score; however, the VitalPatch was superior in the Attachment section (P=.04) with a median (IQR) score of 3.00 (1.00). General pain and discomfort scores and the total percentage of time worn were consistent with these findings.

Conclusions

Our results suggest that adult participants prefer to wear wrist-worn pulse oximeters without a probe compressing the fingertip and they prefer to wear a smaller chest patch. A compromise between wearability, reliability, and accuracy should be made for successful and practical integration of AMSs within the hospital environment.

Keywords: wearables, pulse oximeter, chest patch, wearability, vital signs, ambulatory monitoring

Introduction

Background

Failure to recognize and act on deteriorating signs of acute illness has been documented previously [1,2]. The National Institute for Health and Care Excellence [3] recommends the use of an early warning score, which is designed to quantify the severity of abnormal vital signs and trigger an appropriately graded clinical response. A limitation of early warning score systems is the requirement for clinical staff to measure vital signs at the correct frequency. Several factors can affect monitoring frequency, such as clinical shift duration [4], ward staff levels [5], and the workload associated with vital sign measurements [6]; hence, the ideal frequency is often not achieved [7-9].

Research has shown that current ward-based vital sign monitoring of patients is time consuming, as several processes can be involved in addition to manual measurement, such as explaining the process to patients and obtaining their consent, documenting vital signs in patient records, and calculating the early warning score [6,10]. Additionally, even if the ideal frequency of measurement is achieved, patients might deteriorate between observation sets [11].

To address this, patients could be continuously monitored with the aim of increasing early detection of deterioration [12]. In the United Kingdom, continuous monitoring is undertaken in clinical practice but is not commonly performed in wards [13]. It has also been suggested that the most frequent reason for nonuse of continuous monitoring systems is restriction of patient movement and that, to maximize clinical integration, continuous monitoring should be comfortable and less restrictive [13,14].

Ambulatory monitoring systems (AMSs) may provide an alternative to either intermittent measurement of manual vital signs or wired continuous monitoring, affording the patients more mobility and comfort while supporting clinical staff by providing regular vital signs data [15]. There is an increased focus on the development of wireless vital sign monitors for use in the health care setting; however, their reliability and efficiency are still uncertain and need to be tested [16]. Additionally, it has been suggested that introducing AMSs may have physical or psychological effects that should be assessed [17-19] in order to maximize patient compliance and data retrieval. Previous studies have shown that AMSs are removed prematurely owing to patient irritation, discomfort, feeling unwell, or equipment failure [20].

This is the first study of our virtual high dependency unit project, with the overall aim of testing the feasibility of deploying ambulatory vital sign monitoring in the hospital environment. To achieve this, and considering the nonadoption, abandonment, scale-up, spread, and sustainability framework [21], this study will support the initial AMS selection to move into further testing within the virtual high dependency unit project. This will include ward locational testing as well as tests of device accuracy during patient movement and in the detection of hypoxia [22]. Once the final devices have been selected, we will integrate these within our user interface and test its clinical deployment.

Objective

The aim of this study was to assess the wearability of a selection of commercially available AMSs to inform a future prospective study of wearable vital sign monitors in an acute hospital ward.

Methods

Device Selection

The research team conducted a preliminary review of commercially available ambulatory vital sign monitors. To be considered for this study, the devices were required to have wireless connectivity, to measure at least two of the target parameters (ie, heart rate, oxygen saturation, respiratory rate) and to provide third-party permission to access raw data. Based on these requirements, we selected the following monitors: 2 chest-worn patches, that is, VitalPatch (VitalConnect) and Peerbridge Cor (Peerbridge); 4 wrist-worn devices with finger probe, that is, Nonin WristOx2 3150 (Nonin), Checkme O2+ (Viatom Technology), PC-68B, and AP-20 (both from Creative Medical); and 1 solely wrist-worn device, that is, Wavelet (Wavelet Health). Nonin WristOx2 3150 is named Nonin hereafter (Figure 1).

Figure 1.

Devices included in this study.

Study Design and Participants

This study used a prospective observational cohort design. It was reviewed and approved by the Oxford University Research and Ethics Committee and Clinical Trials and Research Governance teams (R55430/RE003). This study is compliant with the cohort checklist of the STROBE (STrengthening the Reporting of OBservational studies in Epidemiology) statement (Multimedia Appendix 1).

Adult participants were in-house research staff based in the Kadoorie Research Centre (Level 3, John Radcliffe Hospital) and the Oxford Institute of Biomedical Engineering (University of Oxford, Old Road Campus Research Building), who were recruited through posters placed in target locations such as office common spaces. We also used the internal departmental newsletter and distributed the participant information sheet within the departments. Once a volunteer expressed interest in the study via email/telephone, a study session was organized. All participants were healthy adult volunteers, with the only exclusion criteria being known allergies to adhesive stickers or the presence of an intracardiac device (permanent pacemaker).

Following informed consent, participants had at least one of the AMSs fitted and were guided through the device practicalities (eg, how to remove and reattach, waterproofing advice). Participants were required to wear up to 4 different AMSs for up to 72 hours each to mimic in-hospital use. They were advised that they could remove the device if desired; they were then requested to log the time and reason for removal (eg, not being able to wear the finger probe while cooking). Participants were also asked to score various activities that patients are likely to perform during their hospital stay by using a validated questionnaire. No incentives (monetary or otherwise) were given to the participants.

Measurements

All participants completed 1 “Ambulatory Monitoring Wearability Assessment Questionnaire” for each device tested. Data collected included participant demographics and device details (eg, sex, age, device used); the perceived exertion while performing “activities of daily living” (ADLs) using the Borg CR-10 scale [23]; the perception of pain or discomfort in specific body areas using body maps with the Borg CR-10 scale [23]; thoughts and feelings about emotions, anxiety, harm, etc, caused by the AMS, using the Comfort Rating Scale (CRS) [19], as described in Multimedia Appendix 2; and an open comment section for participants to share general feedback.

The CRS [19] uses a 21-point scale across 14 statements split into 6 categories: 3 statements for emotion, 4 for attachment, 1 for harm, 2 for perceived change, 1 for movement, and 3 for anxiety. All but one of the 14 statements are negatively worded, such that strongly disagreeing with a statement (lower score) is a positive outcome [19]. For the 1 positively worded statement, the answers were preprocessed (ie, inverted) to make them homogeneous with the other answers, as previously described in another study [17]. For each participant, the median score was first determined for each questionnaire section. The median score of all the sections was then computed to determine the participant’s overall median CRS score. For better interpretation, we also calculated the percentage of responses within each question/category and colored it according to positive or negative outcome (further information in Multimedia Appendix 2).
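The scoring steps described above can be sketched in a few lines of Python. This is an illustrative sketch only, not the authors' analysis code (the study reports using R). The function names, the 0 to 20 coding of the 21-point scale, and the position of the positively worded statement are all assumptions made for the example.

```python
from statistics import median


def invert(score, scale_min=0, scale_max=20):
    """Reverse-code a response so that lower always means a better outcome.

    Assumption: the 21-point scale is coded scale_min..scale_max (0..20 here).
    """
    return scale_min + scale_max - score


def crs_scores(responses, positive_items=(), scale_min=0, scale_max=20):
    """Return (median per section, overall median) for one questionnaire.

    responses: dict mapping section name -> list of raw statement scores.
    positive_items: (section, index) pairs for positively worded statements
    (hypothetical positions; the source does not say which statement it is).
    """
    section_medians = {}
    for section, scores in responses.items():
        adjusted = [
            invert(s, scale_min, scale_max) if (section, i) in positive_items else s
            for i, s in enumerate(scores)
        ]
        section_medians[section] = median(adjusted)
    # Overall score: median of the six section medians.
    overall = median(section_medians.values())
    return section_medians, overall


# Hypothetical questionnaire with the 3/4/1/2/1/3 statement split.
responses = {
    "emotion": [1, 2, 1],
    "attachment": [3, 4, 2, 18],  # last item treated as positively worded
    "harm": [1],
    "perceived_change": [2, 4],
    "movement": [1],
    "anxiety": [1, 1, 3],
}
section_medians, overall = crs_scores(responses, positive_items={("attachment", 3)})
print(section_medians, overall)
```

In this made-up example, the inverted attachment response (18 becomes 2) gives a section median of 2.5, and the overall score is the median of the six section medians.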

To minimize the risk of missing data, the clinical researchers double-checked all the received questionnaires with the participants when collecting them. To minimize wearability bias between devices, we mixed them and documented the order/combination in which they were used by participants, introduced a washout period of at least one week before testing another device, and checked for any clear bias in the free-text feedback section of the questionnaire.

Data Analysis

Sample Size

Owing to the exploratory pragmatic nature of this study, no sample size calculation was performed. We recruited a convenience sample of 20 healthy volunteers to offer a wide range of experiences with wearability of the test devices.

Data Preprocessing

For comparisons, we grouped the chest patches (Peerbridge Cor and VitalPatch) and the pulse oximeters (AP-20, Checkme O2+, Nonin, PC-68B, and Wavelet). This grouping allowed us to conduct separate comparisons, as the selected main measurements from the chest patches are heart rate and respiratory rate, while those from the pulse oximeters are pulse rate and peripheral capillary oxygen saturation (SpO2). It is expected that these 2 types of monitors will be part of the same AMS.

Statistical Analysis

Due to the limited sample size and data skewness (normality was assessed using the Shapiro-Wilk test), median and IQRs were reported. Nonparametric tests were used to assess differences between the devices’ CRS scores. The Wilcoxon test was used to compare the median CRS scores of the chest patches. Finally, the Kruskal-Wallis test, followed by post hoc Dunn tests [24] (with Bonferroni correction [25]), was used to compare the median CRS scores of the pulse oximeters. All statistical tests were conducted using R v3.6.1 [26] and the tidyverse package [27].
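As a rough illustration of two building blocks of this analysis, the sketch below computes a median with an IQR expressed as a single width (Q3 minus Q1, the form used in the tables) and applies a Bonferroni correction across all pairwise comparisons of the 5 pulse oximeters. It is a stdlib-only Python sketch, not the authors' R code. The score list and the unadjusted P values are invented, and the choice of inclusive quartiles is an assumption, since the source does not state how quartiles were computed.

```python
from itertools import combinations
from statistics import median, quantiles


def median_iqr(values):
    """Return (median, IQR width) using inclusive quartiles (an assumption)."""
    q1, _, q3 = quantiles(values, n=4, method="inclusive")
    return median(values), q3 - q1


def bonferroni(p_values):
    """Multiply each P value by the number of comparisons, capped at 1."""
    m = len(p_values)
    return {pair: min(1.0, p * m) for pair, p in p_values.items()}


devices = ["AP-20", "Checkme O2+", "Nonin", "PC-68B", "Wavelet"]
pairs = list(combinations(devices, 2))  # all pairwise post hoc comparisons

# Hypothetical CRS scores for one device.
med, iqr = median_iqr([1, 1, 2, 3, 5])

# Hypothetical unadjusted P values for the pairwise comparisons.
raw = {pair: 0.2 for pair in pairs}
raw[("AP-20", "Wavelet")] = 0.004
adjusted = bonferroni(raw)
print(len(pairs), med, iqr, adjusted[("AP-20", "Wavelet")])
```

With 5 devices there are 10 pairwise comparisons, so each unadjusted P value is multiplied by 10 under this correction.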

Free Text Analysis

Participants were also encouraged to write free text to describe the problems and challenges they encountered with each device. NVivo 12 (NVivo qualitative data analysis software, QSR International Pty Ltd Version 12, 2018) was used to analyze and collate feedback into common categories. For each category, we included the number of participants with at least one negative comment (eg, for disrupted ADLs, the number of participants who took off the device for ADLs or mentioned that these were disruptive when performing daily tasks is reported). This number was reviewed and agreed by 2 researchers from the study team.
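The counting rule described above (each participant counted once per category, however many negative comments they made) can be sketched as follows. The study used NVivo for the coding itself; this Python sketch only illustrates the tally, and the participant identifiers and coded comments are hypothetical.

```python
from collections import defaultdict


def participants_per_category(coded_comments):
    """Count distinct participants with at least one negative comment per category.

    coded_comments: iterable of (participant_id, category) pairs, one per
    negative comment (hypothetical structure for illustration).
    """
    seen = defaultdict(set)
    for participant, category in coded_comments:
        seen[category].add(participant)  # a set ignores repeat comments
    return {category: len(people) for category, people in seen.items()}


# Hypothetical coded comments for one device.
coded_comments = [
    ("P01", "disrupted ADLs"),
    ("P01", "disrupted ADLs"),  # second comment by the same participant
    ("P02", "disrupted ADLs"),
    ("P02", "affected sleep"),
]
counts = participants_per_category(coded_comments)
print(counts)
```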

Results

Participant Characteristics

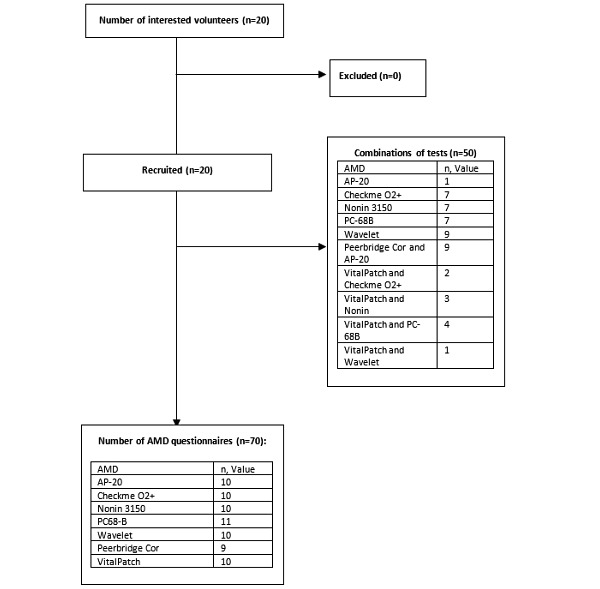

Twenty in-house volunteers (13 women and 7 men) were recruited between May 4, 2018 and October 30, 2018, with a median (IQR) age of 34 (32-40) years. For each session, participants wore either a pulse oximeter, a chest patch, or both for up to 72 hours before completing the wearability questionnaire. All participants wore at least one device, with a median (IQR) washout period of 31 (13-58) days before wearing another one, and they all completed 1 questionnaire per device (Figure 2).

Figure 2.

The study participant flowchart. AMD: ambulatory monitoring device.

Wearability Questionnaire Outcomes

The total wear duration and total number of removals per device are presented in Table 1. Wavelet and Checkme O2+ were the most used pulse oximeters (644/720, 89.4%) and the VitalPatch was the most used chest patch (663/720, 92.1%). These devices also had the lowest pain/discomfort and median exertion scores in the included activities as described in Table 2.

Table 1.

Device testing duration, removal duration, and reasons for removal.

| Measurements | AP-20 (n=10) | Checkme O2+ (n=10) | Nonin (n=10) | PC-68B (n=11) | Wavelet (n=10) | Peerbridge Cor (n=9) | VitalPatch (n=10) |
| --- | --- | --- | --- | --- | --- | --- | --- |
| Device type | Pulse oximeter | Pulse oximeter | Pulse oximeter | Pulse oximeter | Pulse oximeter | Chest patch | Chest patch |
| Testing duration (hours:minutes) | | | | | | | |
| Total planned duration<sup>a</sup> | 720:00 | 720:00 | 720:00 | 792:00 | 720:00 | 648:00 | 720:00 |
| Used duration<sup>b</sup> (% of total planned duration) | 522:31 (72.6) | 643:35 (89.4) | 590:11 (82.0) | 558:43 (70.6) | 640:45 (90.0) | 491:19 (75.8) | 662:52 (92.1) |
| Median session duration (IQR) | 70:45 (51:50-72:00) | 70:40 (58:17-72:00) | 64:42 (53:46-69:55) | 67:50 (36:58-70:44) | 72:00 (55:11-72:00) | 70:45 (45:10-71:55) | 69:58 (68:12-72:00) |
| Removals | | | | | | | |
| Number of participants (total removals) | 8 (36) | 10 (45) | 9 (49) | 8 (31) | 3 (4) | 4 (8) | 0 |
| Median removal duration<sup>c</sup> (IQR) | 1:06 (0:30-5:37) | 1:21 (0:50-3:30) | 2:01 (1:04-5:05) | 1:20 (0:35-3:15) | 1:50 (1:33-3:00) | 0:10 (0:10-0:45) | 0:00 (0:00-0:00) |
| Reasons for removal (n) | | | | | | | |
| Hygiene | 10 | 6 | 6 | 1 | 0 | 6 | 0 |
| Discomfort | 3 | 3 | 7 | 6 | 0 | 0 | 0 |
| Cooking or eating | 6 | 6 | 11 | 10 | 0 | 0 | 0 |
| Exercise | 0 | 3 | 3 | 4 | 0 | 0 | 0 |
| Work/social | 2 | 5 | 4 | 3 | 0 | 0 | 0 |
| Battery/hardware failure | 10 | 17 | 2 | 2 | 3 | 0 | 0 |
| Other/unknown | 5 | 5 | 16 | 5 | 1 | 2 | 0 |

<sup>a</sup>Total planned duration refers to the total amount of time if all participants wore the respective device for the full 72 hours (Total=72 × n). Values are shown as hours:minutes.

<sup>b</sup>Used duration reflects the actual time that the devices were worn by the participants, with missing times representing a combination of device removal periods and differences between the actual end of the session and 72 hours (ie, when the full 72 hours are not achieved). Values are shown as hours:minutes.

<sup>c</sup>Values are shown as hours:minutes.
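The relationship between the planned duration (72 × n hours) and the percentage of time worn can be checked with a short calculation. The Python sketch below is illustrative only; the helper names are hypothetical, and the inputs are the hours:minutes values reported in Table 1 for the Checkme O2+, VitalPatch, and Peerbridge Cor.

```python
def to_minutes(hhmm):
    """Convert an 'hours:minutes' string such as '643:35' to minutes."""
    hours, minutes = hhmm.split(":")
    return int(hours) * 60 + int(minutes)


def planned_minutes(n_sessions, hours_per_session=72):
    """Total planned duration: 72 hours for each of the n sessions."""
    return n_sessions * hours_per_session * 60


def used_percent(used_hhmm, n_sessions):
    """Used duration as a percentage of the total planned duration."""
    return round(100 * to_minutes(used_hhmm) / planned_minutes(n_sessions), 1)


checkme = used_percent("643:35", 10)     # Checkme O2+, n=10
vitalpatch = used_percent("662:52", 10)  # VitalPatch, n=10
peerbridge = used_percent("491:19", 9)   # Peerbridge Cor, n=9
print(checkme, vitalpatch, peerbridge)   # prints 89.4 92.1 75.8
```

For a device tested in 11 sessions, the same rule gives a planned duration of 792 hours, matching the PC-68B column.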

Table 2.

Pain/discomfort and exertion scores per device, body part, and activity by using Borg CR-10 scale [23].

| Device | AP-20 | Checkme O2+ | Nonin | PC-68B | Wavelet | Peerbridge Cor | VitalPatch |
| --- | --- | --- | --- | --- | --- | --- | --- |
| Device type | Pulse oximeter | Pulse oximeter | Pulse oximeter | Pulse oximeter | Pulse oximeter | Chest patch | Chest patch |
| Median pain/discomfort score per body part<sup>a</sup> (IQR) | 3.00 (5.00) | 1.50 (2.38) | 3.50 (2.50) | 3.00 (2.50) | 0.75 (0.50) | 2.00 (6.00) | 1.00 (0.88) |
| Median exertion score per activity | | | | | | | |
| Walking | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| Eating | 3 | 2 | 3 | 4 | 0 | 0 | 0 |
| Drinking | 1 | 0 | 1 | 3 | 0 | 0 | 0 |
| Dressing | 5 | 4 | 5 | 8 | 1 | 0 | 0 |
| Writing | 1 | 0 | 3 | 5 | 0 | 0 | 0 |
| Using phone/tablet | 0 | 0 | 3 | 3 | 0 | 0 | 0 |
| Reading | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| Hand washing | 9 | 6 | 7 | 10 | 0 | 0 | 0 |
| Sleeping | 4 | 2 | 3 | 4 | 0 | 3 | 0 |

<sup>a</sup>For the pulse oximeters, the body part of interest was the nondominant wrist and for the chest patches, it was the chest.

Common problems identified in free text sections of the questionnaires are presented in Table 3. We grouped free text comments into 5 categories: (1) device size (eg, device being too big or bulky), (2) disrupted ADLs (eg, limited daily tasks, so needed to be removed), (3) skin irritation (eg, some concerns of wrist strap/finger-probe becoming itchy), (4) finger probe uncomfortable (eg, sweaty and annoying), and (5) affected sleep (eg, participants kept waking up and unable to sleep with it on).

Table 3.

Device specifications and participant-identified problems.

| Measurements and problems | AP-20 | Checkme O2+ | Nonin | PC-68B | Wavelet | Peerbridge Cor | VitalPatch |
| --- | --- | --- | --- | --- | --- | --- | --- |
| Device type | Pulse oximeter | Pulse oximeter | Pulse oximeter | Pulse oximeter | Pulse oximeter | Chest patch | Chest patch |
| Device measurements | SpO2<sup>a</sup>, PR<sup>b</sup>, RR<sup>c</sup>, perfusion index | SpO2, PR, steps | SpO2, PR | SpO2, PR, perfusion index | SpO2, PR | HR<sup>d</sup>, RR, ECG<sup>e</sup> | HR, RR, ECG, body position |
| Participant-identified problems (n)<sup>f</sup> | | | | | | | |
| Device size | 2 | 0 | 3 | 10 | 0 | 16 | 0 |
| Disrupted ADLs<sup>g</sup> | 8 | 6 | 5 | 6 | 0 | 0 | 0 |
| Skin irritation | 0 | 2 | 1 | 0 | 2 | 3 | 3 |
| Finger probe uncomfortable | 6 | 0 | 5 | 8 | N/A<sup>h</sup> | N/A | N/A |
| Affected sleep | 1 | 0 | 3 | 5 | 0 | 5 | 1 |

<sup>a</sup>SpO2: oxygen saturation.

<sup>b</sup>PR: pulse rate.

<sup>c</sup>RR: respiratory rate. For the AP-20, respiratory rate is only possible using a nasal cannula.

<sup>d</sup>HR: heart rate.

<sup>e</sup>ECG: electrocardiogram. Peerbridge Cor uses a 2-lead ECG and VitalPatch a single-lead ECG.

<sup>f</sup>The cells show the number of participants identifying at least one problem in each category.

<sup>g</sup>ADLs: activities of daily living.

<sup>h</sup>N/A: not applicable.

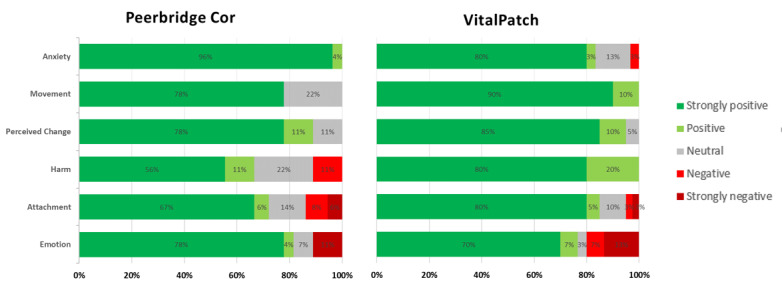

Significant differences for most sections were found between the CRS scores of the pulse oximeters as well as between the overall median CRS scores (Table 4 and Table 5). Figure 3 and Figure 4 represent the percentage of positive/negative CRS score outcomes per section and per device.

Table 4.

Comparison of the Comfort Rating Scale scores across different pulse oximeters.

| CRS<sup>a</sup> section, median (IQR) score | AP-20 (n=10) | Checkme O2+ (n=10) | Nonin (n=10) | PC-68B (n=11) | Wavelet (n=10) | P value |
| --- | --- | --- | --- | --- | --- | --- |
| Overall score | 7.50 (4.00)<sup>b</sup> | 3.25 (8.63)<sup>b</sup> | 6.00 (6.38)<sup>b</sup> | 9.00 (4.75)<sup>b</sup> | 1.00 (0.88)<sup>c,d,e,f</sup> | <.001 |
| Emotion | 4.00 (6.00) | 4.00 (7.25) | 6.50 (12.25)<sup>b</sup> | 7.00 (5.50)<sup>b</sup> | 1.00 (1.00)<sup>e,f</sup> | .02 |
| Attachment | 10.75 (5.13)<sup>b</sup> | 8.50 (9.75) | 10.75 (2.00)<sup>b</sup> | 14.00 (3.25)<sup>b</sup> | 2.50 (3.00)<sup>c,e,f</sup> | .001 |
| Harm | 1.00 (1.75) | 1.50 (6.75) | 1.00 (0.00) | 2.00 (5.00) | 1.00 (1.50) | .30 |
| Perceived change | 13.75 (4.25)<sup>b</sup> | 6.75 (9.13) | 10.50 (3.88) | 15.00 (6.50)<sup>b</sup> | 1.25 (1.38)<sup>c,f</sup> | <.001 |
| Movement | 17.00 (6.00)<sup>b,d</sup> | 7.00 (6.75)<sup>g</sup> | 10.50 (8.00)<sup>b</sup> | 16.00 (7.00)<sup>b</sup> | 1.00 (1.00)<sup>c,e,f</sup> | <.001 |
| Anxiety | 2.50 (7.75) | 1.50 (2.75) | 5.00 (6.50) | 4.00 (5.50) | 1.00 (1.00) | .25 |

<sup>a</sup>CRS: Comfort Rating Scale.

<sup>b</sup>Statistically significant difference from Wavelet.

<sup>c</sup>Statistically significant difference from AP-20.

<sup>d</sup>Statistically significant difference from Checkme O2+.

<sup>e</sup>Statistically significant difference from Nonin.

<sup>f</sup>Statistically significant difference from PC-68B.

Table 5.

Comparison of the Comfort Rating Scale scores between the chest patches.

| CRS<sup>a</sup> section, median (IQR) score | Peerbridge Cor (n=9) | VitalPatch (n=10) | P value |
| --- | --- | --- | --- |
| Overall score | 1.50 (1.50) | 1.25 (2.00) | .60 |
| Emotion | 1.00 (2.00) | 1.00 (1.00) | .92 |
| Attachment | 4.50 (2.50) | 3.00 (1.00) | .04 |
| Harm | 3.00 (10.00) | 2.50 (3.50) | .48 |
| Perceived change | 2.50 (3.00) | 1.00 (3.13) | .17 |
| Movement | 3.00 (3.00) | 1.00 (1.50) | .06 |
| Anxiety | 1.00 (0.00) | 1.50 (3.50) | .22 |

<sup>a</sup>CRS: Comfort Rating Scale.

Figure 3.

Comfort Rating Scale scores for each pulse oximeter. Green represents the percentage of positive outcomes and red represents the percentage of negative outcomes from the Comfort Rating Scale scores (Multimedia Appendix 2).

Figure 4.

Comfort Rating Scale scores for each chest patch device. Green represents the percentage of positive outcomes and red represents the percentage of negative outcomes from the Comfort Rating Scale scores (Multimedia Appendix 2).

A post hoc analysis showed that the Wavelet had significantly better scores than the other pulse oximeters in most sections of the CRS (P<.05). For example, in the CRS total score, the Wavelet was statistically superior to AP-20 (P=.004), Checkme O2+ (P=.048), Nonin (P=.02), and PC-68B (P<.001). The Checkme O2+ also showed a significantly better score for Movement than the PC-68B (P=.048) and was close to statistical significance against the AP-20 (P=.05) (Multimedia Appendix 3).

For the chest patches, although there was a statistically significant difference in the Attachment section, no difference was found in the overall median CRS scores between the VitalPatch and Peerbridge Cor (Table 5). In the open feedback, the main difference reported by participants was related to sleep, with the Peerbridge Cor more frequently reported to be uncomfortable.

Discussion

Main Results

Twenty participants were recruited for this study, with 70 questionnaires completed (approximately 10 per device) to assess AMS wearability. The Wavelet was found to be the most comfortable wearable pulse oximeter, with the absence of a finger probe contributing to its better wearability score. Having a probe on the fingertip seemed to negatively impact a device’s wearability, with participants reporting a feeling of tightness and sweatiness after prolonged and continuous use. The finger probe was also often reported as hindering function, requiring its removal to perform activities and affecting the total time the device was worn. Among the pulse oximeters with a finger probe, the Checkme O2+ was preferred, probably due to its smaller, ring-shaped finger probe, which is placed away from the fingertip. For the remaining pulse oximeters (AP-20, PC-68B, and Nonin), no significant differences were found; however, PC-68B received the most negative comments, as participants consistently described this device as bulky and disruptive to ADLs. In addition, its finger probe received more negative feedback than those of the AP-20 and Nonin.

For the chest patches, the only significant difference between the 2 selected devices was in the Attachment section of the CRS, in favor of the VitalPatch. Participants noted that they found it difficult to sleep on their front or side with the Peerbridge Cor.

Preference for the Wavelet, Checkme O2+, and VitalPatch was also reflected in the pain/discomfort score, activity exertion score, and free text feedback given. Participants also reported preference for smaller devices, both for the pulse oximeters and the chest patches. To our knowledge, this is the first study comparing wearability for a number of wearable devices.

Study Limitations

A clear limitation of this study was the recruitment of in-house healthy volunteers; thus, our results may not reflect the hospitalized population. However, this was the first step within our project to provide evidence on the wearability and to select feasible devices to be further tested. As there was a limited number of devices available, the order was not randomly assigned; these were allocated to participants as they were available, with the order being documented.

Another limitation was that not all participants were able to test all 7 devices, with an average of 3.5 devices used per participant (1 being a chest patch) between all sessions. To avoid bias, participants were encouraged to assess each device individually and not by comparison with previous devices worn. Additionally, although a log of temporary removals was provided, not all participants were fully compliant with it, which may explain the variability in the device removal numbers.

Furthermore, no specific instruction was given to participants regarding finger probe placement for any of the pulse oximeters (we note that Viatom Technology recommends using the Checkme O2+ on the thumb [28]), and participants were not asked to indicate which finger they used. Recording this could provide an additional comparison between pulse oximeters that use finger probes, and such a comparison will be included in future wearability studies.

We have used the CRS scale [18,19] for the main assessment of device wearability in healthy volunteers; however, the 6 domains evaluated and their distribution might not be the most appropriate method to evaluate wearability for clinical monitoring devices, as it does not necessarily ensure a correct representation of and applicability to the clinical environment.

Comparison With Prior Work

Our questionnaire methodology for wearability evaluation has been described in previous studies [17,18] and adapted here to compare our selected devices using similar outcomes such as the CRS scale, which is designed to assess comfort over a range of dimensions [29]. Wearability has a direct impact on system usability and its clinical implementation, as patients will be more likely to wear the AMS if they feel comfortable, thus improving data availability and quality [30]. A recent review analyzed the validation, feasibility, clinical outcomes, and costs of 13 different wearable devices and concluded that these were predominantly in the validation and feasibility testing phases [31]. Despite the exponential growth in wearable technology, little evidence is available regarding wearability and acceptance in the clinical setting [30,32,33]. We note that, for all the devices under test, only the VitalPatch had indexed wearability studies available [34,35].

This exploratory study is embedded in a comprehensive research project, which aims to test, refine, and deploy these devices in clinical practice. This project follows a human-centered design process that requires a full exploration of the environment into which the technology is to be placed and understanding the eventual end users of the technology [21]. Although this was not tested on end users, our study was the most ethically sound surrogate, as it did not expose patients to equipment that does not work or that they would find intolerable because of discomfort.

The findings of this study will support the initial selection of wearable devices for the next phases of our project, which will test the reliability, accuracy, and functionality of the selected devices [22], as it is not yet known which AMSs are the most reliable for use in the hospital environment. Once the initial devices have been selected, tested, and refined, patients will also have the opportunity to provide both qualitative and quantitative data on the wearability of the devices.

Conclusions and Future Research

Our results suggest that traditional pulse oximeter finger probes hinder function, as participants preferred the wrist-worn (Wavelet) and ring-style pulse oximeters (Checkme O2+). The smaller chest patch (VitalPatch) was found to be less noticeable and more comfortable. These preferences were reflected in the total time participants wore the device. These results help to inform which wearable device designs are more likely to be deployed successfully within the hospital environment.

Acknowledgments

The research was funded by the National Institute for Health Research (NIHR) Oxford Biomedical Research Centre. PW and LT are supported by the NIHR Biomedical Research Centre, Oxford. The views expressed are those of the author(s) and not necessarily those of the NHS, the NIHR, or the Department of Health. We thank Delaram Jarchi for performing the initial review that supported our device selection as part of this study. We would also like to thank Philippa Piper, Dario Salvi, and Marco Pimentel for their support in the design of the study.

Abbreviations

- ADLs: activities of daily living
- AMS: ambulatory monitoring system
- CRS: Comfort Rating Scale
- NIHR: National Institute for Health Research
- SpO2: oxygen saturation

Appendix

Multimedia Appendix 1: STROBE checklist.

Multimedia Appendix 2: Comfort Rating Scale information.

Multimedia Appendix 3: Post hoc Dunn tests with Bonferroni correction for pulse oximeters.

Footnotes

Authors' Contributions: CA, LY, SV, JE, LT and PW made significant contributions to the conception and design or acquisition of data. CA, LY, and MS analyzed the data. CA and LY wrote the first draft of the manuscript, which was reviewed and approved by all authors.

Conflicts of Interest: PW and LT report significant grants from the NIHR, UK, and the NIHR Biomedical Research Centre, Oxford, during the conduct of the study. PW and LT report modest grants and personal fees from Sensyne Health, outside the submitted work. LT works part-time for Sensyne Health and has share options in the company. PW holds shares in the company. All other authors have no conflicts to declare.

References

- 1.McQuillan P, Pilkington S, Allan A, Taylor B, Short A, Morgan G, Nielsen M, Barrett D, Smith G, Collins C H. Confidential inquiry into quality of care before admission to intensive care. BMJ. 1998 Jun 20;316(7148):1853–8. doi: 10.1136/bmj.316.7148.1853. http://europepmc.org/abstract/MED/9632403. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.Watkinson PJ, Barber VS, Price JD, Hann A, Tarassenko L, Young JD. A randomised controlled trial of the effect of continuous electronic physiological monitoring on the adverse event rate in high risk medical and surgical patients. Anaesthesia. 2006 Nov;61(11):1031–9. doi: 10.1111/j.1365-2044.2006.04818.x. doi: 10.1111/j.1365-2044.2006.04818.x. [DOI] [PubMed] [Google Scholar]

- 3.Acutely ill adults in hospital: recognising and responding to deterioration. The National Institute for Health and Care Excellence. 2007. Jul 25, [2019-12-17]. https://www.nice.org.uk/guidance/cg50. [PubMed]

- 4.Dall'Ora Chiara, Griffiths P, Redfern O, Recio-Saucedo A, Meredith P, Ball J, Missed Care Study Group Nurses' 12-hour shifts and missed or delayed vital signs observations on hospital wards: retrospective observational study. BMJ Open. 2019 Feb 01;9(1):e024778. doi: 10.1136/bmjopen-2018-024778. https://bmjopen.bmj.com/lookup/pmidlookup?view=long&pmid=30782743. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Griffiths P, Recio-Saucedo A, Dall'Ora C, Briggs J, Maruotti A, Meredith P, Smith GB, Ball J, Missed Care Study Group The association between nurse staffing and omissions in nursing care: A systematic review. J Adv Nurs. 2018 Jul;74(7):1474–1487. doi: 10.1111/jan.13564. http://europepmc.org/abstract/MED/29517813. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.Dall'Ora Chiara, Griffiths P, Hope J, Barker H, Smith GB. What is the nursing time and workload involved in taking and recording patients' vital signs? A systematic review. J Clin Nurs. 2020 Jul;29(13-14):2053–2068. doi: 10.1111/jocn.15202. [DOI] [PubMed] [Google Scholar]

- 7.Jansen JO, Cuthbertson BH. Detecting critical illness outside the ICU: the role of track and trigger systems. Curr Opin Crit Care. 2010 Jun;16(3):184–90. doi: 10.1097/MCC.0b013e328338844e. [DOI] [PubMed] [Google Scholar]

- 8. Clifton DA, Clifton L, Sandu D, Smith GB, Tarassenko L, Vollam SA, Watkinson PJ. 'Errors' and omissions in paper-based early warning scores: the association with changes in vital signs--a database analysis. BMJ Open. 2015 Jul 03;5(7):e007376. doi: 10.1136/bmjopen-2014-007376. https://bmjopen.bmj.com/lookup/pmidlookup?view=long&pmid=26141302.

- 9. Downey C, Tahir W, Randell R, Brown J, Jayne D. Strengths and limitations of early warning scores: A systematic review and narrative synthesis. Int J Nurs Stud. 2017 Nov;76:106–119. doi: 10.1016/j.ijnurstu.2017.09.003.

- 10. Cardona-Morrell M, Prgomet M, Turner RM, Nicholson M, Hillman K. Effectiveness of continuous or intermittent vital signs monitoring in preventing adverse events on general wards: a systematic review and meta-analysis. Int J Clin Pract. 2016 Oct;70(10):806–824. doi: 10.1111/ijcp.12846.

- 11. Tarassenko L, Hann A, Young D. Integrated monitoring and analysis for early warning of patient deterioration. Br J Anaesth. 2006 Jul;97(1):64–8. doi: 10.1093/bja/ael113. https://linkinghub.elsevier.com/retrieve/pii/S0007-0912(17)35184-X.

- 12. Prgomet M, Cardona-Morrell M, Nicholson M, Lake R, Long J, Westbrook J, Braithwaite J, Hillman K. Vital signs monitoring on general wards: clinical staff perceptions of current practices and the planned introduction of continuous monitoring technology. Int J Qual Health Care. 2016 Sep;28(4):515–21. doi: 10.1093/intqhc/mzw062.

- 13. Bonnici T, Tarassenko L, Clifton DA, Watkinson P. The digital patient. Clin Med (Lond). 2013 Jun;13(3):252–7. doi: 10.7861/clinmedicine.13-3-252. http://europepmc.org/abstract/MED/23760698.

- 14. Downey C, Chapman S, Randell R, Brown J, Jayne D. The impact of continuous versus intermittent vital signs monitoring in hospitals: A systematic review and narrative synthesis. Int J Nurs Stud. 2018 Aug;84:19–27. doi: 10.1016/j.ijnurstu.2018.04.013.

- 15. Weenk M, van Goor H, Frietman B, Engelen L, van Laarhoven CJHM, Smit J, Bredie S, van de Belt TH. Continuous Monitoring of Vital Signs Using Wearable Devices on the General Ward: Pilot Study. JMIR Mhealth Uhealth. 2017 Jul 05;5(7):e91. doi: 10.2196/mhealth.7208. https://mhealth.jmir.org/2017/7/e91/

- 16. Appelboom G, Camacho E, Abraham ME, Bruce SS, Dumont EL, Zacharia BE, D'Amico R, Slomian J, Reginster JY, Bruyère O, Connolly ES. Smart wearable body sensors for patient self-assessment and monitoring. Arch Public Health. 2014;72(1):28. doi: 10.1186/2049-3258-72-28. https://archpublichealth.biomedcentral.com/articles/10.1186/2049-3258-72-28.

- 17. Cancela J, Pastorino M, Tzallas AT, Tsipouras MG, Rigas G, Arredondo MT, Fotiadis DI. Wearability assessment of a wearable system for Parkinson's disease remote monitoring based on a body area network of sensors. Sensors (Basel). 2014 Sep 16;14(9):17235–55. doi: 10.3390/s140917235. https://www.mdpi.com/resolver?pii=s140917235.

- 18. Knight J, Deen-Williams D, Arvanitis T, Baber C, Sotiriou S, Anastopoulou S, Gargalakos M. Assessing the wearability of wearable computers. Proceedings - International Symposium on Wearable Computers, ISWC; 11-14 Oct 2006; Montreux, Switzerland. IEEE; 2007. pp. 75–82.

- 19. Knight JF, Baber C. A tool to assess the comfort of wearable computers. Hum Factors. 2005;47(1):77–91. doi: 10.1518/0018720053653875.

- 20. Jeffs E, Vollam S, Young JD, Horsington L, Lynch B, Watkinson PJ. Wearable monitors for patients following discharge from an intensive care unit: practical lessons learnt from an observational study. J Adv Nurs. 2016 Aug;72(8):1851–62. doi: 10.1111/jan.12959.

- 21. Greenhalgh T, Wherton J, Papoutsi C, Lynch J, Hughes G, A'Court C, Hinder S, Fahy N, Procter R, Shaw S. Beyond Adoption: A New Framework for Theorizing and Evaluating Nonadoption, Abandonment, and Challenges to the Scale-Up, Spread, and Sustainability of Health and Care Technologies. J Med Internet Res. 2017 Nov 01;19(11):e367. doi: 10.2196/jmir.8775. https://www.jmir.org/2017/11/e367/

- 22. Areia C, Vollam S, Piper P, King E, Ede J, Young L, Santos M, Pimentel MAF, Roman C, Harford M, Shah A, Gustafson O, Rowland M, Tarassenko L, Watkinson PJ. Protocol for a prospective, controlled, cross-sectional, diagnostic accuracy study to evaluate the specificity and sensitivity of ambulatory monitoring systems in the prompt detection of hypoxia and during movement. BMJ Open. 2020 Jan 12;10(1):e034404. doi: 10.1136/bmjopen-2019-034404. https://bmjopen.bmj.com/lookup/pmidlookup?view=long&pmid=31932393.

- 23. Borg G. Borg's perceived exertion and pain scales. Illinois: Human Kinetics; 1998.

- 24. Daniel W. Applied nonparametric statistics. 2nd revised edition. Boston: Cengage Learning; 1990.

- 25. Neyman J, Pearson ES. On the use and interpretation of certain test criteria for purposes of statistical inference: Part I. Biometrika. 1928;20A(1-2):175–240. doi: 10.1093/biomet/20a.1-2.175.

- 26. A Language and Environment for Statistical Computing. Vienna, Austria. 2019. [2020-03-01]. https://www.r-project.org/

- 27. Wickham H, Averick M, Bryan J, Chang W, McGowan L, François R, Grolemund G, Hayes A, Henry L, Hester J, Kuhn M, Pedersen T, Miller E, Bache S, Müller K, Ooms J, Robinson D, Seidel D, Spinu V, Takahashi K, Vaughan D, Wilke C, Woo K, Yutani H. Welcome to the Tidyverse. JOSS. 2019 Nov;4(43):1686. doi: 10.21105/joss.01686.

- 28. Shenzhen VTC. O2 Vibe Wrist Pulse Oximeter Quick Start Guide. 2016. [2019-08-01]. https://fccid.io/2ADXK-1600/User-Manual/Users-Manual-3119531.pdf.

- 29. Knight J, Baber C, Schwirtz A, Bristow H. The comfort assessment of wearable computers. Proceedings Sixth Int Symp Wearable Comput; 10 Oct 2002; Seattle, WA, USA. IEEE; 2003. pp. 65–72.

- 30. Baig MM, GholamHosseini H, Moqeem AA, Mirza F, Lindén M. A Systematic Review of Wearable Patient Monitoring Systems - Current Challenges and Opportunities for Clinical Adoption. J Med Syst. 2017 Jul;41(7):115. doi: 10.1007/s10916-017-0760-1.

- 31. Leenen J, Leerentveld C, van Dijk JD, van Westreenen HL, Schoonhoven L, Patijn G. Current Evidence for Continuous Vital Signs Monitoring by Wearable Wireless Devices in Hospitalized Adults: Systematic Review. J Med Internet Res. 2020 Jun 17;22(6):e18636. doi: 10.2196/18636. https://www.jmir.org/2020/6/e18636/

- 32. Botros A, Schütz N, Camenzind M, Urwyler P, Bolliger D, Vanbellingen T, Kistler R, Bohlhalter S, Müri RM, Mosimann UP, Nef T. Long-Term Home-Monitoring Sensor Technology in Patients with Parkinson’s Disease: Acceptance and Adherence. Sensors. 2019 Nov 26;19(23):5169. doi: 10.3390/s19235169.

- 33. Pavic M, Klaas V, Theile G, Kraft J, Tröster G, Guckenberger M. Feasibility and Usability Aspects of Continuous Remote Monitoring of Health Status in Palliative Cancer Patients Using Wearables. Oncology. 2020;98(6):386–395. doi: 10.1159/000501433. https://www.karger.com?DOI=10.1159/000501433.

- 34. Tonino R, Larimer K, Eissen O, Schipperus M. Remote Patient Monitoring in Adults Receiving Transfusion or Infusion for Hematological Disorders Using the VitalPatch and accelerateIQ Monitoring System: Quantitative Feasibility Study. JMIR Hum Factors. 2019 Dec 02;6(4):e15103. doi: 10.2196/15103. https://humanfactors.jmir.org/2019/4/e15103/

- 35. Selvaraj N. Long-term remote monitoring of vital signs using a wireless patch sensor. IEEE Healthc Innov Conf; 8-10 Oct 2014; Seattle, WA, USA. 2015 Feb 12. pp. 83–86.

Associated Data

Supplementary Materials

STROBE checklist.

Comfort Rating Scale information.

Post hoc Dunn tests with Bonferroni Correction for Pulse Oximeters.