Abstract

Artificial intelligence (AI) has received widespread and growing interest in healthcare as a means of saving time and cost and improving efficiency. The high performance statistics and diagnostic accuracies reported for AI algorithms (with respect to predefined reference standards), particularly in image pattern recognition studies, have led to many proposed applications in clinical radiology, especially for enhanced image interpretation. Whilst certain sub-speciality areas in radiology, such as cancer screening, have received widespread attention in the media and scientific community, children’s imaging has hitherto been neglected.

In this article, we discuss a variety of possible ‘use cases’ in paediatric radiology from a patient pathway perspective where AI has either been implemented or shown early-stage feasibility, while also taking inspiration from the adult literature to propose potential areas for future development. We aim to demonstrate how a ‘future, enhanced paediatric radiology service’ could operate and to stimulate further discussion with avenues for research.

Introduction

Despite the hype surrounding artificial intelligence (AI) in radiology, paediatric imaging has been neglected compared to other sub-specialties such as breast, oncology or neuroimaging.1 This may be partly due to the comparatively larger workload in adult medicine, which conveniently provides large training datasets and thereby potentially greater opportunities to automate routine tasks (e.g., cancer screening applications). Paediatric radiology also has intrinsically challenging aspects, such as the need for a more ‘hands-on/human’ approach in many cases (e.g., fluoroscopy and ultrasound studies, keeping children calm during examinations), and greater heterogeneity in data due to wide variation in normal findings at different stages of childhood development. Nevertheless, AI could still help enhance children’s imaging services, particularly given current radiology workforce shortages (only 38.5% of institutions in the UK have 24/7 access to a paediatric radiology opinion)2 and national economic hardship, which could lead to a vicious cycle of fewer job and training opportunities and even less access to specialist opinion.

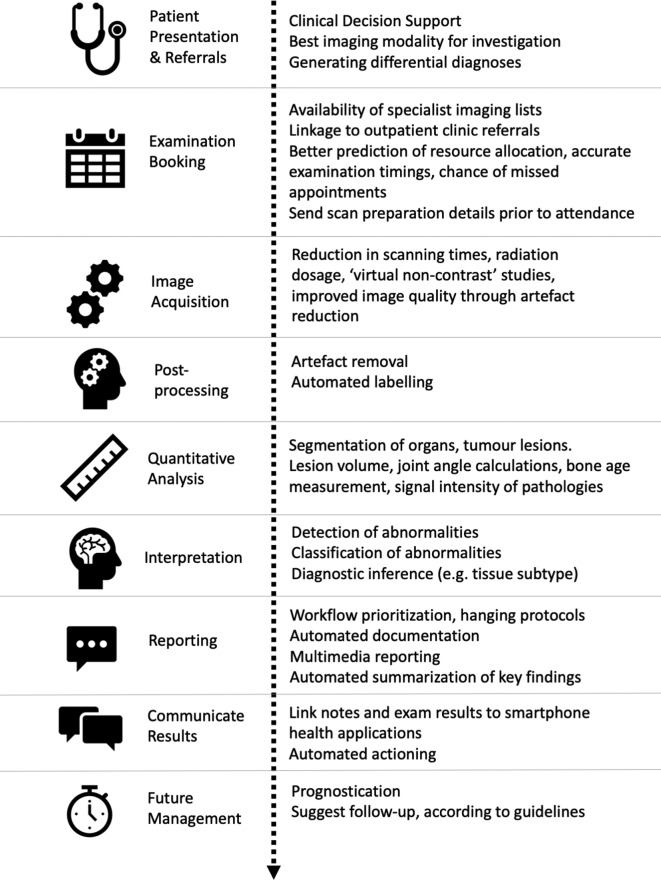

In this article, we discuss a variety of possible ‘use cases’ in paediatric radiology where AI has either been implemented already or shown early-stage feasibility, while also taking inspiration from the adult literature to propose areas for future development. This review is broadly structured around the patient imaging pathway from ‘request to report’ (Figure 1), but also touches upon uses relating to clinical governance (e.g., training, audit). Basic terminologies and definitions used in AI, machine- and deep-learning techniques have already been described elsewhere,1,3–5 and will not be repeated here. Our primary aim is to demonstrate how a future, enhanced paediatric radiology service could operate, and to stimulate further discussion with avenues for research.

Figure 1.

Diagram depicting the patient pathway from hospital admission to radiology report and follow-up, with summary of how artificial intelligence tools may enhance clinical practice and patient experience.

Referrals

Clinical decision support (CDS)

Despite numerous ‘best practice’ guidelines, it is estimated that 10–40% of all imaging procedures are performed with little or no patient benefit.6,7 An AI-enhanced CDS tool could rapidly synthesise all patient information held within electronic health records (EHR), match it against national referral guidelines, and suggest the most appropriate next course of action to clinicians (e.g., a 2-year-old child with recurrent urinary tract infections would generate a suggestion for a renal ultrasound referral within 6 weeks, rather than a CT abdomen).8,9
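A rules-based CDS of this kind can be pictured as a set of guideline rules evaluated against structured EHR fields. The minimal Python sketch below encodes only the example given above; the `Patient` fields, the `Rule` structure and the under-3 age cut-off are illustrative assumptions, not the actual NICE or iRefer logic, which a real system would need to encode in full.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional

@dataclass
class Patient:
    # Hypothetical structured fields, assumed to be pre-extracted from the EHR
    age_years: float
    recurrent_uti: bool

@dataclass
class Rule:
    name: str
    applies: Callable[[Patient], bool]  # condition tested against the patient record
    suggestion: str                     # recommended next imaging step

# Illustrative rule paraphrasing the example in the text (age cut-off is an assumption)
RULES: List[Rule] = [
    Rule(
        name="recurrent UTI in a young child",
        applies=lambda p: p.recurrent_uti and p.age_years < 3,
        suggestion="Renal ultrasound within 6 weeks (CT abdomen not indicated)",
    ),
]

def suggest_imaging(patient: Patient) -> Optional[str]:
    """Return the suggestion of the first matching rule, or None if no rule applies."""
    for rule in RULES:
        if rule.applies(patient):
            return rule.suggestion
    return None
```

For example, `suggest_imaging(Patient(age_years=2, recurrent_uti=True))` returns the renal ultrasound suggestion, while a patient matching no rule returns `None` and would fall back to the referrer's own judgement.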

Information from the EHR could be pre-populated into the imaging request, ensuring that the most relevant information is available to the radiologist. This is one of the stages at which AI could most help patient care, produce readily auditable results, and allow more integrated and efficient service delivery models to be developed from local population data.

Where imaging referrals involve ionising radiation, the CDS could automatically present potential radiation risks, expected doses and radiation safety information sheets to parents and patients, helping to alleviate parental concerns. Details of patient preparation before a study (e.g., fasting instructions) could also be generated. An AI-assisted CDS could potentially reduce overexposure from repeated (and potentially unnecessary) imaging (e.g., repeated CT KUBs for renal stones) and could be used to compile a cumulative dose profile from the EHR.
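In its simplest form, a cumulative dose profile could be a per-patient sum of the effective dose recorded for each exposure, combined with a check for repeated examinations of the same type. The sketch below assumes that the EHR already stores a per-examination effective dose estimate; the record layout, the `max_repeats` threshold and the function names are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import date

@dataclass
class Exposure:
    patient_id: str
    exam: str                   # e.g. "CT KUB"
    performed: date
    effective_dose_msv: float   # hypothetical per-exam estimate stored in the EHR

def cumulative_dose(exposures):
    """Sum recorded effective dose (mSv) per patient."""
    totals = defaultdict(float)
    for e in exposures:
        totals[e.patient_id] += e.effective_dose_msv
    return dict(totals)

def repeat_count(exposures, patient_id, exam, since):
    """Number of times the patient has had `exam` on or after `since`."""
    return sum(
        1 for e in exposures
        if e.patient_id == patient_id and e.exam == exam and e.performed >= since
    )

def flag_request(exposures, patient_id, exam, since, max_repeats=2):
    """Flag a new request if the same exam has already been done max_repeats times."""
    return repeat_count(exposures, patient_id, exam, since) >= max_repeats
```

A flagged request would not be blocked; it would prompt the referrer and radiologist to review whether another CT KUB is justified.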

This automation may reduce inappropriate referrals and help answer simple enquiries, improving efficiency for administrative staff both before and at the point of attendance. Integration of referral guidelines (e.g., the RCR iRefer guidance)9 within CDS software is already being piloted across several hospitals and GP surgeries in London, with studies planned to assess its clinical impact.10

These scenarios, however, describe a CDS following a ‘rules-based system’: replicating a human following standard protocols, rather than ‘thinking for itself’. A more advanced feature of future applications would use machine learning to integrate information from imaging reports, patient demographics, and biochemical and blood markers for risk stratification, suggesting probabilities of certain diagnoses or predicting patient outcomes.11 In one example, Hale et al12 used a deep-learning neural network to predict the likelihood of clinically relevant paediatric traumatic brain injury by combining clinical information with radiologist-interpreted CT head reports. The resulting tool provided evidence-based, automated risk stratification, encouraging early, safe discharge of low-risk patients from the emergency department and reducing unnecessary hospital occupancy.
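To contrast this with the rules-based approach, the sketch below shows how a learned model turns several inputs into a probability and then into a triage band. Hale et al used a deep neural network; here a logistic regression, the simplest probabilistic classifier, is swapped in for clarity. The feature names, coefficients and band thresholds are all hypothetical and were not fitted to any data.

```python
import math

# Hypothetical coefficients for illustration only; a real model would be fitted
# to, and externally validated on, paediatric data.
INTERCEPT = -4.0
WEIGHTS = {
    "gcs_below_15": 1.5,        # reduced Glasgow Coma Scale score on arrival (0/1)
    "ct_report_abnormal": 2.5,  # radiologist-interpreted CT head report positive (0/1)
    "age_under_2": 0.5,         # age under 2 years (0/1)
}

def predicted_risk(features: dict) -> float:
    """Logistic model: p = 1 / (1 + exp(-(b0 + sum of w_i * x_i)))."""
    z = INTERCEPT + sum(w * features.get(name, 0) for name, w in WEIGHTS.items())
    return 1.0 / (1.0 + math.exp(-z))

def risk_band(p: float, low: float = 0.05, high: float = 0.30) -> str:
    """Map a probability to a triage band; the thresholds are assumptions."""
    if p < low:
        return "low: consider early discharge"
    if p < high:
        return "intermediate: observe"
    return "high: admit for specialist review"
```

Unlike a fixed rule, the output is a graded probability, so the discharge threshold can be audited and tuned locally.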

Booking

Resource allocation

AI-supported predictive modelling could help with resource allocation, particularly within NHS trusts that include a mixture of tertiary referral and district general hospitals. Expected numbers of outpatient clinics per day (and subsequent ‘walk-in’ imaging referrals), the type of local radiological expertise, staff rotas, and prior knowledge of cases requiring additional attention (e.g., children with complex developmental or learning difficulties) could be combined to predict the likelihood of delays, or to flatten acute service variation over a normal working day.13 Routine, non-urgent cases could then be allocated appointments at alternative centres or during predicted ‘lulls’ in the service, avoiding unnecessary delays, while complex and urgent cases are given specialist access and time.14
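The simplest version of such forecasting is sketched below: demand for each hour of the working day is predicted as the mean for that hour on previous days, and hours where predicted demand falls below capacity are treated as ‘lulls’ suitable for routine bookings. This historical-average baseline is a deliberately simple stand-in for the machine-learning models the text envisages, and the capacity figure is hypothetical.

```python
from statistics import mean

def forecast_hourly_demand(history):
    """history: one list per past day of referral counts for each hour (equal lengths).
    Forecast for each hour = mean count for that hour across past days."""
    n_hours = len(history[0])
    return [mean(day[h] for day in history) for h in range(n_hours)]

def predicted_lulls(forecast, capacity_per_hour):
    """Hour indices where forecast demand leaves spare capacity for routine bookings."""
    return [h for h, demand in enumerate(forecast) if demand < capacity_per_hour]
```

A learned model would add the other inputs listed above (clinic schedules, rotas, complex cases) as features, but the output used for booking, a per-hour demand estimate, would be the same shape.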

Imaging resources could also be better managed by integrating AI-supported scheduling software with the local PACS. For example, where interval MRI is recommended and protocolled by the radiologist, the software could use this information, together with the DICOM metadata from baseline imaging, to suggest which scanner the patient should be booked on (rather than simply the first available appointment). This would be particularly helpful where a department has several scanners from different vendors using different protocols, and could ensure that high-quality, comparable images are acquired. It could avoid unnecessary repeat studies and improve a radiologist’s confidence in reporting subtle imaging changes at follow-up, which might otherwise be confused with artefact and potentially affect management decisions.
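One way such a suggestion could work is to score each scanner by how closely it matches the baseline study's header. `Manufacturer`, `ManufacturerModelName` and `MagneticFieldStrength` are standard DICOM attribute keywords (readable, for example, as attributes of a pydicom dataset). The scanner inventory, the vendor names and the scoring weights below are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class Scanner:
    # Hypothetical department inventory entry
    name: str
    manufacturer: str
    model: str
    field_strength_t: float

def suggest_scanner(baseline: dict, scanners: list) -> Scanner:
    """Pick the scanner that best matches the baseline study's DICOM metadata.

    `baseline` maps DICOM keywords to values read from the baseline study header.
    Weights are assumptions: same field strength is treated as most important.
    """
    def score(s: Scanner) -> int:
        points = 0
        if s.field_strength_t == float(baseline.get("MagneticFieldStrength", -1)):
            points += 4
        if s.manufacturer == baseline.get("Manufacturer"):
            points += 2
        if s.model == baseline.get("ManufacturerModelName"):
            points += 1
        return points
    return max(scanners, key=score)
```

In practice the booking system would offer the highest-scoring scanner first and only fall back to the next available slot if that scanner had no capacity.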

Safeguarding

Missed hospital appointments are not only costly to healthcare systems; repeated missed appointments in children also raise safeguarding issues. AI-supported predictive modelling within the EHR can identify habitually missed appointments,15,16 may even identify factors that predict the likelihood of missing the next appointment, and could automatically highlight patterns that may require further action by hospital child protection teams or social services.

Patient waiting times

Delayed appointments pose a challenge for many parents, who struggle to keep young children entertained in the radiology waiting room before their study, particularly those with wider families to consider. An AI-supported notification system, delivered via a smartphone application that communicates with parents in real time, could give them the option of bringing an appointment forward after a last-minute cancellation, or of arriving slightly later if their appointment is delayed (without spending extra time in hospital). Where consent is provided, location tracking could allow administrative staff to check how far a patient is from the hospital before their appointment, reassign them to a different time slot, and reallocate their original appointment to a ‘walk-in’ patient. This could help manage the radiology workload across the working day and avoid parental frustration caused by inefficient service delivery.

Another way to enhance the patient experience is a ‘chatbot’ (also known as an Artificial Conversational Entity) delivered via a smartphone application.17 These programs use natural language processing (NLP) and deep learning to interpret human queries and generate a spoken or text-based response. Where a patient is unsure how to prepare for a radiological study, what to expect, or why the examination is being performed, a chatbot could provide answers in an easy-to-understand, age-appropriate way. One UK-based children’s hospital is already developing such a tool to handle patient queries,18 and a few chatbots already exist in healthcare, where they have been shown to be helpful for monitoring mental health.19,20

Image acquisition & Post-processing

Decreasing image acquisition time and radiation dose, and improving image quality (through reduction of noise and motion/metal artefacts), have been major areas of research in CT and MRI technology since their invention. While hardware solutions have previously improved scanning efficiency (e.g., increasing the number of detectors in multi-detector CT scanners), AI-supported deep-learning tools are now being used to reduce scanning times21 and, in some cases, the need for intravenous contrast.22

MRI scanning & image quality

In paediatric imaging, reduced MRI scanning times would not only allow more studies to be performed per day, but would also reduce motion artefacts from less co-operative children23 and could reduce the need for general anaesthesia and its associated risks.24 A variety of AI-assisted techniques are being assessed.25,26 Most involve training a neural network to learn the relationship between zero-filled (undersampled) k-space data and fully sampled k-space data for a particular study type, so that missing data can be interpolated in future, unseen studies (e.g., in adult brain imaging).21,27 Another approach uses neural networks to remove aliasing from undersampled real-time MR data to speed up image reconstruction (e.g., in MRI of congenital heart disease in children).28 In this technique, deep-learning artefact suppression reconstructions were reportedly over five times faster than conventional (compressed sensing) reconstruction. Nevertheless, such reconstructions can sometimes introduce blurring, or add or remove findings relative to the original ‘ground truth’ images, highlighting the importance of rigorously testing these tools before routine clinical implementation.
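To make ‘zero-filled k-space’ concrete, the NumPy sketch below simulates regular Cartesian undersampling of a 2D image: k-space is obtained with a 2D FFT, unacquired phase-encode lines are set to zero, and the image is reconstructed with an inverse FFT. This produces the aliased input that a reconstruction network would learn to correct; the network itself is not shown. The sketch assumes a real-valued image with an even number of rows and simple regular undersampling (clinical schemes usually keep the central k-space lines fully sampled).

```python
import numpy as np

def zero_filled_recon(image: np.ndarray, keep_every: int = 2) -> np.ndarray:
    """Keep every `keep_every`-th phase-encode line (row) of k-space, zero the rest,
    and reconstruct with an inverse 2D FFT."""
    kspace = np.fft.fft2(image)
    mask = np.zeros(image.shape, dtype=bool)
    mask[::keep_every, :] = True  # acquired lines
    # With a symmetric mask and a real input image, the imaginary part is ~0.
    return np.fft.ifft2(kspace * mask).real
```

With `keep_every=2`, the result equals the average of the image and a copy shifted by half the field of view: the classic fold-over (aliasing) artefact that the network must learn to remove.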

Post-processing techniques can also help improve MRI quality. In one study, the removal of ‘ghost’ artefact from diffusion tensor imaging of paediatric spinal cord MRIs was achieved through a multi-stage process of computer-aided detection, segmentation, feature extraction, texture analysis and subsequent subtraction, with an accuracy of 84% in separating true cord from artefact.29

CT image quality & radiation reduction

Reducing CT radiation dose while maintaining image quality can also be achieved with AI-based tools that reconstruct high-quality images from reduced amounts of raw data. This has been done by showing an AI model examples of normal and abnormal appearances at both low and standard radiation doses, then assessing the model’s ability to produce extrapolated ‘standard-dose’ images when given only noisy, low-dose images from ‘unseen’ cases.30 Alternatively, teaching an AI model the typical appearances of low-dose CT artefacts and then subtracting these from other low-dose CT images could enhance image quality without increasing radiation dose.31,32

In paediatric imaging, MacDougall et al33 demonstrated a 31% reduction in image noise after training a neural network to create iteratively reconstructed CT images from the filtered back projection data of low-dose abdominal CTs, with radiologists reportedly preferring the AI-reconstructed images over the iteratively reconstructed images. AI algorithms could therefore potentially be used in non-specialist centres to reduce doses while still achieving diagnostic imaging in children, and in specialist centres to reduce CT doses even further. Few other studies have yet been published on this topic specifically for children, although it is likely to play a larger role in future image processing research.
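Image noise in such comparisons is commonly quantified as the standard deviation of Hounsfield unit (HU) values within a uniform region of interest (ROI), with the reduction expressed as a percentage. The sketch below shows that arithmetic; whether the cited study used exactly this metric is an assumption, and the noise values in the usage note are illustrative, not taken from the study.

```python
from statistics import pstdev

def image_noise(roi_hu):
    """Noise estimated as the standard deviation of HU values in a uniform ROI."""
    return pstdev(roi_hu)

def percent_noise_reduction(noise_reference: float, noise_new: float) -> float:
    """Relative noise reduction of a new reconstruction versus a reference, in %."""
    return 100.0 * (noise_reference - noise_new) / noise_reference
```

For example, `percent_noise_reduction(16.0, 11.0)` returns 31.25, i.e. a reduction of about 31% if noise fell from 16 HU to 11 HU.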

Contrast usage

Deep learning techniques have been reported to help produce high-quality post-contrast MRI images when only a fraction of the usual dose of gadolinium-based contrast agent has been administered.22 In one adult brain MRI study (including healthy volunteers and patients with gliomas), a deep learning architecture was able to generate ‘virtual contrast’ MRI images from unenhanced MRI sequences with a structural similarity index of 0.872 (±0.031). Although radiologist raters scored the virtual contrast images highly for image quality, the virtual contrast maps were noted to be blurrier, with less nodular ring enhancement around brain tumours, than the ground truth contrast-enhanced images.34 Future adaptations of this technique for MRI in children, and potentially for multiphase CT, could help reduce contrast dose (and potential renal damage) as well as radiation burden. Again, however, any potential clinical impact from misdiagnosis of underlying pathology (due to differences in its appearance on reconstructed images) should be carefully assessed before routine use.
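The structural similarity index (SSIM) compares two images by their means, variances and covariance, and equals 1 only for identical images. The NumPy sketch below computes the standard SSIM formula once over the whole image; the usual definition averages it over local sliding windows, so this global version is a simplification for illustration. The constants `k1=0.01` and `k2=0.03` are the commonly used defaults.

```python
import numpy as np

def global_ssim(x, y, data_range, k1=0.01, k2=0.03):
    """SSIM = ((2*mu_x*mu_y + c1) * (2*cov_xy + c2)) /
              ((mu_x^2 + mu_y^2 + c1) * (var_x + var_y + c2)),
    evaluated over the whole image as a single window."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    c1 = (k1 * data_range) ** 2   # stabilises the luminance term
    c2 = (k2 * data_range) ** 2   # stabilises the contrast/structure term
    mu_x, mu_y = x.mean(), y.mean()
    var_x, var_y = x.var(), y.var()
    cov_xy = ((x - mu_x) * (y - mu_y)).mean()
    return ((2 * mu_x * mu_y + c1) * (2 * cov_xy + c2)) / (
        (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    )
```

For reported values such as 0.872, a windowed implementation (for example `skimage.metrics.structural_similarity` in scikit-image) is what would normally be used.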

Quantitative analysis & prognostication

Quantification

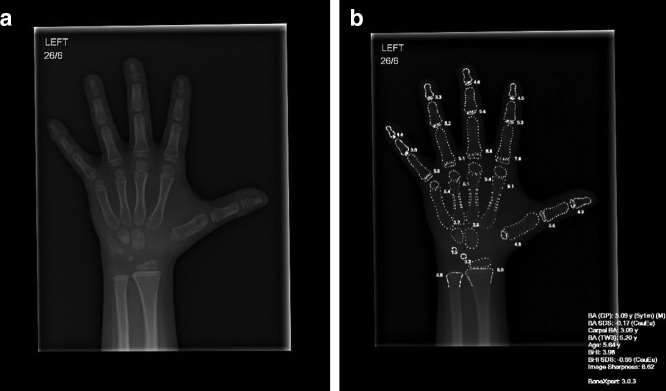

Computer-supported software (e.g., BoneXpert™, Figure 2) and several other recently developed algorithms35,36 are already widely used in paediatric imaging for automated segmentation of hand radiographs and subsequent calculation of bone age,37 replacing the traditional, time-consuming manual Greulich-Pyle or Tanner-Whitehouse assessments.

Figure 2.

An example of how artificial intelligence software (here, BoneXpert™ v3.0.3) is already being used in some radiology departments for rapid, automated assessment of bone age. (a) A plain radiograph of the left hand of a male child with short stature, aged 5 years and 7 months. (b) After assessment by the BoneXpert™ software, a duplicate image is produced with an image overlay (white text and outlines), providing details in the bottom right of the image of the bone age according to Greulich and Pyle (5 years 1 month) and its estimated standard deviation score (−0.17). Additional details are also provided for the estimated bone age according to Tanner-Whitehouse 3 (TW3: 5.2 years) and a bone health index (BHI).

Quantification of imaged volumes has been demonstrated in areas of paediatric imaging such as the measurement of pneumothorax volume on chest CT38 and the segmentation of brain tissue and cerebrospinal fluid (CSF) to determine the degree of hydrocephalus on infant brain CT.39 These measurements could provide rapid, objective parameters for treatment decisions embedded within future radiology reports, for example by hyperlinking approved measurements into interactive reports. Future automated image quantification may include assessment of paediatric tumour burden across different cross-sectional studies,11 measurement of leg lengths on limb radiographs after orthopaedic intervention,40 or even scoliosis angles from spine radiographs (as demonstrated in one study of adult imaging).41
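Once a model has produced a segmentation or landmarks, the measurements themselves are simple geometry. The sketch below assumes a binary 3D mask (e.g., of pneumothorax or CSF) and voxel spacing taken from the DICOM header (for example `PixelSpacing` and `SliceThickness`). It computes volume as voxel count times voxel volume, and a Cobb angle as the acute angle between two endplate lines whose end points are assumed to have been located by a model. The segmentation and landmark-detection models are not shown, and the function names are hypothetical.

```python
import math
import numpy as np

def segmented_volume_ml(mask: np.ndarray, spacing_mm: tuple) -> float:
    """Volume of a binary segmentation: voxel count x voxel volume (mm^3 -> mL)."""
    voxel_mm3 = spacing_mm[0] * spacing_mm[1] * spacing_mm[2]
    return float(mask.sum()) * voxel_mm3 / 1000.0

def cobb_angle_deg(upper_endplate, lower_endplate):
    """Acute angle (degrees) between two endplate lines, each ((x1, y1), (x2, y2))."""
    def direction(line):
        (x1, y1), (x2, y2) = line
        return math.atan2(y2 - y1, x2 - x1)
    angle = abs(math.degrees(direction(upper_endplate) - direction(lower_endplate))) % 180
    return min(angle, 180 - angle)  # the order in which end points are given does not matter
```

Reporting these values from a validated pipeline, rather than from manual calliper measurements, is what would make them reproducible across follow-up studies.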

Predictive modelling

Radiogenomic studies in adults have commonly used AI techniques to prognosticate clinical outcomes and determine optimal treatment regimens.42–44 Several pipelines and registries are now being created for similar work in children. For example, Weiss et al45 have created a multicentre clinical and imaging dataset to develop machine-learning frameworks for detection and outcome prediction in neonatal hypoxic ischaemic encephalopathy. Similarly, in paediatric oncology, the recently established multicentre ‘PRIMAGE’ project aims to phenotype, guide treatment decisions and prognosticate disease outcomes for two paediatric cancers: neuroblastoma and diffuse intrinsic pontine glioma (DIPG).46 Further multisite data-mining and predictive modelling could facilitate prognoses for other paediatric tumour types (e.g., Wilms tumour), or predict patient outcomes from patterns identified across non-oncological serial images (e.g., the likely neurological outcome in children with post-haemorrhagic hydrocephalus or after vein of Galen embolisation).

Perhaps most excitingly, AI-enabled predictive modelling could potentially determine the likelihood of a disorder developing even before significant clinical signs are apparent. For example, Chen et al47 identified neuroimaging biomarkers on brain MRI that could distinguish children with autism spectrum disorder from healthy controls. When testing this algorithm on four different datasets across different institutions, they reported an area under the receiver operating characteristic curve (AUC) of >0.75. AI-assisted tools such as these could aid early identification of certain diseases, including in patients who are difficult to examine or take a history from.
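An AUC can be read as the probability that a randomly chosen child with the condition receives a higher model score than a randomly chosen control, so an AUC of 0.75 means the model ranks such a pair correctly three times out of four. The sketch below computes AUC directly from that pairwise (Mann-Whitney) definition; the scores in the usage note are hypothetical.

```python
def auc_mann_whitney(scores_pos, scores_neg):
    """AUC as the fraction of (case, control) pairs in which the case scores higher.
    Ties count as half a correct ranking. Equivalent to the area under the ROC curve."""
    wins = 0.0
    for p in scores_pos:
        for n in scores_neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(scores_pos) * len(scores_neg))
```

For instance, cases scored `[0.8, 0.4]` and controls scored `[0.6, 0.2]` give three correctly ordered pairs out of four, an AUC of 0.75. The pairwise loop is quadratic, which is fine for illustration; library implementations compute the same value from sorted ranks.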

In the future, such models could be used to predict neurological deficits and timings of developmental milestones in children with delayed myelination on brain imaging, and thus identify those who require greatest clinical support. This would enable better planning for speech and language therapy and schooling requirements for the child as they grow, with patient-specific expected growth and development trajectories.

Image interpretation

Detecting and classifying abnormalities

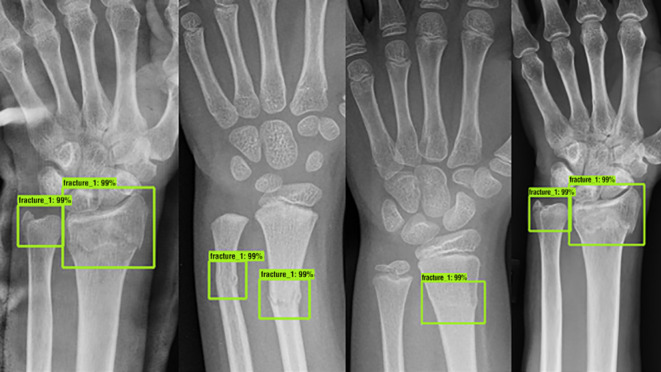

Several studies of detection and classification algorithms (alone or in combination) for medical images in adults (e.g., dermatology photographs, radiographs and retinal scans) have shown equivalent or superior performance compared with trained healthcare professionals.48 In paediatric radiology, few large-scale multicentre studies have been published; however, early work has demonstrated feasibility in the detection of a wide spectrum of diseases, including paediatric pneumonia,49–52 elbow effusions,53 developmental dysplasia of the hip,54 wrist fractures on radiography55 (Figure 3), and interval changes in bone marrow signal on MRI in children with chronic non-bacterial osteitis (CNO).56 Zheng et al57 developed a feature extraction algorithm that performed automated classification of congenital abnormalities of the kidney and urinary tract on paediatric ultrasound with a sensitivity of over 80%.

Figure 3.

Fracture detection using artificial intelligence on frontal wrist radiographs. These examples are from different patients, all with distal radius fractures, with or without additional ulnar fractures, assessed by a deep-learning neural network (Faster R-CNN, a region-based convolutional neural network) trained to detect and localise fractures. Green boxes denote the locations of suspected abnormalities, with percentages giving the network’s confidence score for a fracture within each box. Reproduced with permission from Thian YL et al. Radiology: Artificial Intelligence. 2019;1(1):e180001.55
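Object detectors such as Faster R-CNN output candidate boxes with confidence scores, as in Figure 3. The sketch below shows only the post-processing: keeping detections above a display threshold and judging a predicted box correct if its intersection over union (IoU) with a reference box meets a threshold. Both thresholds are assumptions (0.5 is a common convention), and the detector itself is not shown.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x_min, y_min, x_max, y_max)."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0

def shown_detections(detections, min_confidence=0.5):
    """Keep detections (dicts with a 'score' key) that meet the display threshold."""
    return [d for d in detections if d["score"] >= min_confidence]

def is_true_positive(pred_box, reference_boxes, iou_threshold=0.5):
    """A prediction is correct if it overlaps any reference box by >= iou_threshold."""
    return any(iou(pred_box, ref) >= iou_threshold for ref in reference_boxes)
```

Raising `min_confidence` shows fewer, more certain boxes at the cost of missing subtler fractures, which is the sensitivity trade-off a department would need to set deliberately.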

In neuro-oncology,58 a more advanced classification system achieved 86% accuracy in distinguishing between medulloblastoma, ependymoma and astrocytoma on 3T MR spectroscopy. There is now also evidence that medulloblastoma subgroups can be differentiated using a combination of texture analysis, clinical biomarkers and imaging characteristics, potentially obviating tissue biopsy in the future and allowing better treatment stratification.59

Further applications already used in adults that could benefit children’s imaging include identifying malpositioned support lines and tubes, such as nasogastric tubes, umbilical arterial or venous catheters and central lines,60 detecting osteoporotic vertebral fractures on spine CT61 and detecting pulmonary nodules on chest imaging.62

Reporting

Workflow prioritisation

Workflow prioritisation with AI tools to facilitate urgent radiology reporting has been explored in the adult literature, predominantly for urgent findings on chest radiography63 and CT head.64,65 These tools ensure that images most likely to contain a significant abnormality are flagged on the reporting worklist and reported first. Although report prioritisation has not been widely described specifically in children, the image detection and classification tools described above could be incorporated into a reporting framework to help streamline workflow and flag potential findings.
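At its simplest, AI-driven worklist prioritisation re-sorts pending studies by a model's abnormality score. The sketch below assumes each study carries a hypothetical `ai_score` between 0 and 1 from some detection model, breaks ties by waiting time, and flags studies above a hypothetical urgency cut-off.

```python
URGENT_THRESHOLD = 0.8  # hypothetical cut-off for flagging a study as likely abnormal

def reporting_order(studies):
    """Order studies so higher AI abnormality scores come first; ties go to the longest wait."""
    return sorted(studies, key=lambda s: (-s["ai_score"], -s["minutes_waiting"]))

def flagged(studies, threshold=URGENT_THRESHOLD):
    """Accession numbers of studies the AI tool would flag for urgent reporting."""
    return [s["accession"] for s in studies if s["ai_score"] >= threshold]
```

Including waiting time in the sort key keeps low-scoring studies from being deferred indefinitely, since a purely score-based order could leave them at the bottom of the list.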

Image labelling

Most imaging studies are currently assigned an examination label (e.g., ‘US abdomen’) based on the referral booking request, manually at the time of scanning, or as a generic label without study details (e.g., ‘External Imaging’). Automated identification and labelling of images imported into PACS by modality and body part coverage could have many benefits. First, it would ensure that appropriate hanging protocols are assigned for reporting;66 second, it could help assign each study to the appropriate reporting list (e.g., ultrasound knee versus CT chest); and third, combining imaging appearances with report findings could generate more accurate coding, both for billing and for future research and audit (as trialled in adult and veterinary clinical records).67
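A routing step of this kind could use the standard DICOM attributes `Modality` and `BodyPartExamined` when they are informative, and fall back to an image classifier when the label is missing or generic. In the sketch below, `predict_body_part` is a hypothetical wrapper around such a classifier (for example, one trained as Yi et al describe), and the set of ‘generic’ values is an assumption.

```python
from typing import Callable

GENERIC_LABELS = {"", "EXTERNAL IMAGING", "OTHER"}  # hypothetical uninformative values

def reporting_list(header: dict, predict_body_part: Callable[[object], str], pixels=None) -> str:
    """Route a study to a reporting list from DICOM Modality and BodyPartExamined.

    If BodyPartExamined is missing or generic, ask the image classifier instead.
    """
    modality = header.get("Modality", "UNKNOWN")
    body_part = (header.get("BodyPartExamined") or "").upper()
    if body_part in GENERIC_LABELS:
        body_part = predict_body_part(pixels).upper()
    return f"{modality} {body_part}"
```

Trusting the header when it is informative keeps the classifier's errors confined to the studies that actually need it, such as imported external imaging.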

Yi et al68 have shown that AI can automatically label paediatric musculoskeletal radiographs by body part (e.g., pelvis, shoulder, elbow) with perfect accuracy, using a relatively small training dataset (250 radiographs, 50 in each body part category). This methodology could be adapted for labelling other imaging, such as chest radiographs69 and MRI,70 and could also be used for internal audit, to check quickly whether all sequences from a particular MRI protocol have been performed and sent to PACS before reporting.

Communication of results & management

Significant findings

Although automated emails and non-clinician-led ‘significant findings’ pathways exist in many radiology services, AI-driven prioritisation alert systems could improve on these. Paediatricians could then prioritise clinical management, and findings above a certain level of urgency could be automatically assigned to the next multidisciplinary team (MDT) meeting simply by adding the term “add to MDT” to the radiology report.

Enhanced reports

Clinical colleagues may misunderstand a lengthy radiology report or find its key findings unclear. An AI tool could help distil radiology reports down to their most important findings. In one example, Gálvez et al71 reported an NLP tool that identified the presence of deep vein thrombosis (DVT) in children from free-text ultrasound reports and generated a clinical alert. In adults, work has been done to categorise chest CT findings as ‘normal/insignificant’ or ‘significant’, with further sub-classification of whether the findings were stable, worsening or improving compared with previous reports.72 To prioritise treatment and follow-up of patients at higher risk of stroke, Mowery et al73 devised an NLP model to filter and highlight ultrasound reports describing significant carotid artery stenosis.
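A key difficulty in mining free-text reports is negation: “no evidence of DVT” mentions DVT but does not assert it. The sketch below is a simple NegEx-style, rule-based stand-in that raises an alert only when a sentence mentions the finding without a preceding negation cue. It is not the method of Gálvez et al, and the term and negation lists are hypothetical and far from complete.

```python
import re

# Hypothetical term lists; a clinical tool would use a validated NLP pipeline.
TARGET = re.compile(r"\b(deep vein thrombosis|dvt|thrombus|thrombosis)\b", re.IGNORECASE)
NEGATION = re.compile(r"\b(no|without|negative for)\b", re.IGNORECASE)

def sentence_positive(sentence: str) -> bool:
    """True if the sentence mentions the finding with no negation cue before it."""
    match = TARGET.search(sentence)
    if not match:
        return False
    return NEGATION.search(sentence[: match.start()]) is None

def report_alert(report: str) -> bool:
    """Alert if any sentence of the free-text report asserts the finding."""
    sentences = re.split(r"(?<=[.!?])\s+", report)
    return any(sentence_positive(s) for s in sentences)
```

Rules like these are transparent but brittle (for example, “thrombus has resolved” would still trigger an alert), which is one reason trained NLP models are preferred in practice.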

In paediatric imaging, NLP software could also be developed to highlight specific important findings in reports (e.g., misplaced lines or tubes) or findings that raise safeguarding concerns (e.g., metaphyseal corner fractures). Categorisation of worsening appearances on oncological imaging studies could also trigger referral for further MDT discussion, and incorporate tumour dimensions across previous studies to visualise trends in the disease process. This would be aided by routine multimedia reporting, with embedded report hyperlinks to prior key images helping the AI algorithm to locate the same tumour on the follow-up examination74 or readily verify like-for-like measurements.
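Once tumour dimensions have been extracted from successive reports, a trend rule can decide whether appearances are worsening. The sketch below mirrors the RECIST 1.1 thresholds for progression of target lesions (an increase in the sum of diameters of at least 20% over the smallest previous sum, and at least 5 mm in absolute terms). The choice of RECIST is an assumption: paediatric tumours are often assessed with other, disease-specific criteria, and new lesions are not modelled here.

```python
def sum_of_diameters(lesions_mm):
    """Sum of target-lesion diameters (mm) for one study."""
    return sum(lesions_mm)

def flag_progression(history_mm, min_relative=0.20, min_absolute_mm=5.0):
    """history_mm: sums of diameters per study, oldest first.

    Flags progression if the latest sum exceeds the smallest previous sum (nadir)
    by at least 20% and at least 5 mm.
    """
    if len(history_mm) < 2:
        return False
    latest = history_mm[-1]
    nadir = min(history_mm[:-1])
    increase = latest - nadir
    return increase >= min_absolute_mm and increase >= min_relative * nadir
```

For example, sums of 50, 40 and then 49 mm would be flagged (9 mm, 22.5% above the 40 mm nadir), whereas 50, 40 and 45 mm would not (12.5%).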

Where a differential diagnosis includes rare or unfamiliar diseases, or no follow-up guideline is readily established, advanced data-mining software linked to the internet could rapidly ‘read’ millions of manuscripts in real time to suggest current expert opinion on the topic.75 This type of software is already gaining popularity in universities and academia for literature searching,76 and the most relevant results could be integrated into a clinical radiology report for radiologist and clinician education and information.

Clinical governance

Aside from direct patient care, clinical governance activities could be enhanced using AI-supported methods. For example, using NLP to mine information from the EHR, radiology reports and DICOM metadata could result in faster search and potentially larger datasets for audit and research projects,77 finding interesting cases for a teaching library (potentially with digital pathology correlation, and information of patient outcomes), as well as providing direct feedback for trainees on their reporting skills.78

For example, instead of using an AI tool as a ‘first read’ for certain studies, trainees could work through a case list (e.g., neonatal chest radiographs from intensive care), form their own differential diagnoses, and then check whether the diagnosis assigned the highest probability by the AI tool matches their own impression.79 In a proof-of-principle study by Hegdé,80 this technique showed early success in training lay subjects (with no medical qualifications) to detect certain cancers on mammograms. The AI algorithm could further support education by displaying the commonest errors made by trainees next to the correct diagnosis, without the need for senior input.
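The feedback loop described above could be tallied as in the sketch below: for each case, the trainee's diagnosis is compared with the AI tool's highest-probability diagnosis, and disagreements are counted to surface the commonest error patterns. The input format is a hypothetical model output. The AI answer is treated as the comparator only for illustration; in practice, discrepancies should be checked against confirmed diagnoses, since the AI can also be wrong.

```python
from collections import Counter

def compare_with_ai(trainee_answers, ai_probabilities, top_n=3):
    """trainee_answers: {case_id: trainee's diagnosis}
    ai_probabilities: {case_id: {diagnosis: probability}} (hypothetical model output)
    Returns the agreement rate and the commonest (trainee, AI) disagreements."""
    mismatches = Counter()
    agree = 0
    for case_id, answer in trainee_answers.items():
        probs = ai_probabilities[case_id]
        ai_top = max(probs, key=probs.get)  # highest-probability diagnosis
        if answer == ai_top:
            agree += 1
        else:
            mismatches[(answer, ai_top)] += 1
    return agree / len(trainee_answers), mismatches.most_common(top_n)
```

A pattern such as repeatedly answering transient tachypnoea of the newborn where the tool and confirmed diagnosis favour respiratory distress syndrome would point to a specific teaching need.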

Alternatively, where the differential diagnosis for an unusual imaging pattern (e.g., honeycombing on chest CT) is uncertain, the region of interest could be marked on the image and a ‘reverse image search’ run through the entire PACS, looking for other studies with confirmed diagnoses in patients with similar imaging patterns. One AI company has already developed such a tool for adult chest CT findings (contextflow SEARCH).81 This could help trainees learn descriptive terminology and avoid manually retrieving inappropriate examples from pictorial reviews or textbooks.
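A common generic approach to content-based image retrieval is to convert each archived region of interest into a feature vector (an ‘embedding’) and return the archived regions whose vectors are most similar to the query. The sketch below ranks by cosine similarity. It assumes that embeddings have already been produced by some image feature extractor (not shown). It is not a description of how contextflow SEARCH works, which is not documented here.

```python
import numpy as np

def most_similar(query_embedding, library_embeddings, diagnoses, k=3):
    """Return the k archived regions most similar to the query, as (diagnosis, similarity).

    library_embeddings: one row per archived region of interest;
    diagnoses: confirmed diagnosis for each row (assumed available from the archive).
    """
    q = np.asarray(query_embedding, dtype=float)
    lib = np.asarray(library_embeddings, dtype=float)
    sims = lib @ q / (np.linalg.norm(lib, axis=1) * np.linalg.norm(q))  # cosine similarity
    order = np.argsort(-sims)[:k]
    return [(diagnoses[i], float(sims[i])) for i in order]
```

At PACS scale, an approximate nearest-neighbour index would replace the brute-force comparison, but the ranking principle is the same.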

Challenges and pitfalls

This article has highlighted several areas where AI could improve clinical paediatric radiology practice and, taken together, the many research studies suggest an optimistic and exciting future. Nevertheless, readers should remain cautious of the dangers that expedited implementation of AI tools could bring. While many of these are generic and affect all aspects of healthcare (e.g., data security, legal, ethical and implementation considerations, and standardisation of clinical terminologies),82–85 there are two areas of caution specific to paediatric imaging: the temptation to apply AI software designed for adults to children unmodified, and the potential lack of acceptability among parents and carers.

The first area of caution relates to the dangers of inadequate external validation of algorithms in children, and a lack of awareness among healthcare professionals of the intended use of an AI tool. In one study,86 two different automated vertebral fracture detection software packages designed for adults were applied to paediatric spine radiographs. Sensitivity dropped markedly to 26–36% and specificity to 95–98% (compared with 98% and 99%, respectively, in adults). Such low sensitivity means a high false-negative rate, making the software largely useless as a screening tool. It is unclear whether entirely new paediatric datasets would be needed to train such an algorithm, or whether adaptations of existing ‘adult’ algorithms could give the desired result.
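The link between low sensitivity and missed diagnoses follows directly from the definitions: sensitivity is the proportion of true positives detected, so the false-negative rate is one minus sensitivity. The sketch below computes these metrics from confusion-matrix counts; the counts in the usage note are hypothetical, chosen only to fall within the ranges reported above.

```python
def diagnostic_metrics(tp, fn, tn, fp):
    """Sensitivity, specificity and false-negative rate from confusion-matrix counts."""
    sensitivity = tp / (tp + fn)   # proportion of true fractures detected
    specificity = tn / (tn + fp)   # proportion of normal spines correctly cleared
    return {
        "sensitivity": sensitivity,
        "specificity": specificity,
        "false_negative_rate": 1 - sensitivity,  # proportion of true fractures missed
    }
```

With 30 of 100 fractured vertebrae detected and 95 of 100 normal ones correctly cleared, `diagnostic_metrics(30, 70, 95, 5)` gives a sensitivity of 0.30 and a specificity of 0.95: the high specificity looks reassuring, yet 70 of the 100 fractures would be missed.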

A national archive (imaging biobank) of multicentre paediatric cases may help objectively and independently assess the performance of novel AI tools intended for paediatric use within the NHS. However, even this may not provide sufficient reassurance, given that there is no ‘one size fits all’ solution: individual hospitals may need mechanisms to audit errors and identify potential improvements for AI solutions according to their local case mix and demographics.

The second issue relates to patient and carer acceptability of AI solutions. While Goldberg et al87 found that the public were overwhelmingly positive about the transformative impact of AI in radiology, there remained an underlying mistrust of autonomous computer systems. Notably, the views of parents and children have yet to be specifically addressed and may differ from what the general adult public perceives as acceptable for themselves. Engagement with end-service users (e.g., paediatricians, patients, carers) is therefore vital during any change to services, with acceptability likely to be linked to more ‘explainable’ AI tools,88 greater familiarity and evidence of improved patient outcomes.

Conclusion

The current climate represents an opportunity for early adopters to better determine how AI solutions could, and should, be used to enhance the workflow of paediatric radiology, potentially providing a more individualised, patient- and referrer-centred approach. Several aspects of the patient pathway, patient experience and clinical governance could be enhanced to provide time- and cost-saving improvements. Paediatric radiologists are well placed to, and should, maintain a central role in determining how these AI tools are evaluated, implemented and embedded to provide better and safer clinical care.

Footnotes

Funding: SCS is supported by an RCUK/UKRI Innovation Fellowship and a Medical Research Council (MRC) Clinical Research Training Fellowship (Grant Ref: MR/R002118/1). This award is jointly funded by the Royal College of Radiologists (RCR). OJA is supported by an NIHR Career Development Fellowship (NIHR-CDF-2017-10-037).

Funding: This article presents independent research and the views expressed are those of the author(s) and not necessarily those of the NHS, the NIHR, or the Department of Health. None of the funders were involved in the design or interpretation of the results.

Contributor Information

Natasha Davendralingam, Email: natasha.davendralingam2@nhs.net.

Neil J Sebire, Email: neil.sebire@gosh.nhs.uk.

Owen J Arthurs, Email: owen.arthurs@gosh.nhs.uk.

Susan C Shelmerdine, Email: susie.shelmerdine@gmail.com.

REFERENCES

- 1. LIT L, Kanthasamy S, Ayyalaraju RS, Ganatra R. The current state of artificial intelligence in medical imaging and nuclear medicine. BJR Open 2019; 1: 20190037.

- 2. Royal College of Radiologists. National Audit of Paediatric Radiology Services in Hospitals. 2015. Available from: https://www.rcr.ac.uk/sites/default/files/auditreport_paediatricrad.pdf [accessed 24 May 2020].

- 3. Letzen B, Wang CJ, Chapiro J. The role of artificial intelligence in interventional oncology: a primer. Journal of Vascular and Interventional Radiology 2019; 30: 38–41. doi: 10.1016/j.jvir.2018.08.032

- 4. Moore MM, Slonimsky E, Long AD, Sze RW, Iyer RS. Machine learning concepts, concerns and opportunities for a pediatric radiologist. Pediatr Radiol 2019; 49: 509–16.

- 5. Razavian N, Knoll F, Geras KJ. Artificial intelligence explained for nonexperts. Semin Musculoskelet Radiol 2020; 24: 3–11. doi: 10.1055/s-0039-3401041

- 6. Gray M. Value based healthcare. BMJ 2017; 356: j437. doi: 10.1136/bmj.j437

- 7. European Society of Radiology (ESR). ESR concept paper on value-based radiology. Insights into Imaging 2017; 8: 447–54.

- 8. NICE Guidelines. Urinary tract infection - children. 2019. Available from: https://cks.nice.org.uk/urinary-tract-infection-children#!scenario [accessed 27 July 2020].

- 9. Royal College of Radiologists. iRefer: Radiological Investigation Guidelines. 2019. Available from: https://www.rcr.ac.uk/clinical-radiology/being-consultant/rcr-referral-guidelines/about-irefer [accessed 25 July 2020].

- 10. Remedios D, Herman S, Williams CJ, Taiwo R, Johnson P. RCR iRefer and MedCurrent clinical decision support for appropriate imaging: the NW London pilot project. EPOS 2018 [accessed 25 June 2020].

- 11. Daldrup-Link H. Artificial intelligence applications for pediatric oncology imaging. Pediatr Radiol 2019; 49: 1384–90. doi: 10.1007/s00247-019-04360-1

- 12. Hale AT, Stonko DP, Lim J, Guillamondegui OD, Shannon CN, Patel MB. Using an artificial neural network to predict traumatic brain injury. J Neurosurg Pediatr 2018; 23: 219–26. doi: 10.3171/2018.8.PEDS18370

- 13. Klute B, Homb A, Chen W, Stelpflug A. Predicting outpatient appointment demand using machine learning and traditional methods. J Med Syst 2019; 43: 288. doi: 10.1007/s10916-019-1418-y

- 14. NHS Improvement. Transforming imaging services in England: a national strategy for imaging networks. 2019. Available from: https://improvement.nhs.uk/resources/transforming-imaging-services-in-england-a-national-strategy-for-imaging-networks/ [accessed 20 June 2020].

- 15. AlMuhaideb S, Alswailem O, Alsubaie N, Ferwana I, Alnajem A. Prediction of hospital no-show appointments through artificial intelligence algorithms. Ann Saudi Med 2019; 39: 373–81. doi: 10.5144/0256-4947.2019.373

- 16. Kurasawa H, Hayashi K, Fujino A, Takasugi K, Haga T, Waki K, et al. Machine-learning-based prediction of a missed scheduled clinical appointment by patients with diabetes. J Diabetes Sci Technol 2016; 10: 730–6. doi: 10.1177/1932296815614866

- 17. Tudor Car L, Dhinagaran DA, Kyaw BM, Kowatsch T, Joty S, Theng Y-L, et al. Conversational agents in health care: scoping review and conceptual analysis. J Med Internet Res 2020; 22: e17158. doi: 10.2196/17158

- 18. UKRI Science and Technology Facilities Council (STFC). “Ask Oli” chatbot starts an AI revolution in children’s healthcare. 2019. Available from: https://stfc.ukri.org/about-us/our-impacts-achievements/case-studies/ask-oli-chatbot-starts-an-ai-revolution-in-childrens-healthcare/ [accessed 25 July 2020].

- 19. Fitzpatrick KK, Darcy A, Vierhile M. Delivering cognitive behavior therapy to young adults with symptoms of depression and anxiety using a fully automated conversational agent (Woebot): a randomized controlled trial. JMIR Mental Health 2017; 4: e19. doi: 10.2196/mental.7785

- 20. Fulmer R, Joerin A, Gentile B, Lakerink L, Rauws M. Using psychological artificial intelligence (Tess) to relieve symptoms of depression and anxiety: randomized controlled trial. JMIR Mental Health 2018; 5: e64. doi: 10.2196/mental.9782

- 21. Chea P, Mandell JC. Current applications and future directions of deep learning in musculoskeletal radiology. Skeletal Radiol 2020; 49: 183–97. doi: 10.1007/s00256-019-03284-z

- 22. Gong E, Pauly JM, Wintermark M, Zaharchuk G. Deep learning enables reduced gadolinium dose for contrast-enhanced brain MRI. J Magn Reson Imaging 2018; 48: 330–40. doi: 10.1002/jmri.25970

- 23. Thukral B. Problems and preferences in pediatric imaging. Indian Journal of Radiology and Imaging 2015; 25: 359–64. doi: 10.4103/0971-3026.169466

- 24. Jung SM. Drug selection for sedation and general anesthesia in children undergoing ambulatory magnetic resonance imaging. Yeungnam University Journal of Medicine 2020; 37: 159–68. doi: 10.12701/yujm.2020.00171

- 25. Nguyen XV, Oztek MA, Nelakurti DD, Brunnquell CL, Mossa-Basha M, Haynor DR, et al. Applying artificial intelligence to mitigate effects of patient motion or other complicating factors on image quality. Topics in Magnetic Resonance Imaging 2020; 29: 175–80. doi: 10.1097/RMR.0000000000000249

- 26. Kromrey M-L, Tamada D, Johno H, Funayama S, Nagata N, Ichikawa S, et al. Reduction of respiratory motion artifacts in gadoxetate-enhanced MR with a deep learning–based filter using convolutional neural network. Eur Radiol 2020; 266. doi: 10.1007/s00330-020-07006-1

- 27. Wang S, Su Z, Ying L, et al. Accelerating magnetic resonance imaging via deep learning. Proceedings IEEE International Symposium on Biomedical Imaging 2016; 2016: 514–7.

- 28. Hauptmann A, Arridge S, Lucka F, Muthurangu V, Steeden JA. Real-time cardiovascular MR with spatio-temporal artifact suppression using deep learning: proof of concept in congenital heart disease. Magnetic Resonance in Medicine 2019; 81: 1143–56. doi: 10.1002/mrm.27480

- 29. Alizadeh M, Conklin CJ, Middleton DM, Shah P, Saksena S, Krisa L, et al. Identification of ghost artifact using texture analysis in pediatric spinal cord diffusion tensor images. Magn Reson Imaging 2018; 47: 7–15. doi: 10.1016/j.mri.2017.11.006

- 30. Chen H, Zhang Y, Kalra MK, Lin F, Chen Y, Liao P, et al. Low-dose CT with a residual encoder-decoder convolutional neural network. IEEE Trans Med Imaging 2017; 36: 2524–35. doi: 10.1109/TMI.2017.2715284

- 31. Takam CA, Samba O, Kouanou AT, Tchiotsop D. Spark architecture for deep learning-based dose optimization in medical imaging. Informatics in Medicine Unlocked 2020; 19.

- 32. Xie S, Zheng X, Chen Y, Xie L, Liu J, Zhang Y, et al. Artifact removal using improved GoogLeNet for sparse-view CT reconstruction. Sci Rep 2018; 8: 6700. doi: 10.1038/s41598-018-25153-w

- 33. MacDougall RD, Zhang Y, Callahan MJ, Perez-Rossello J, Breen MA, Johnston PR, et al. Improving low-dose pediatric abdominal CT by using convolutional neural networks. Radiology: Artificial Intelligence 2019; 1: e180087. doi: 10.1148/ryai.2019180087

- 34. Kleesiek J, Morshuis JN, Isensee F, Deike-Hofmann K, Paech D, Kickingereder P, et al. Can virtual contrast enhancement in brain MRI replace gadolinium? Invest Radiol 2019; 54: 653–60. doi: 10.1097/RLI.0000000000000583

- 35. Dallora AL, Anderberg P, Kvist O, Mendes E, Diaz Ruiz S, Sanmartin Berglund J. Bone age assessment with various machine learning techniques: a systematic literature review and meta-analysis. PLoS One 2019; 14: e0220242. doi: 10.1371/journal.pone.0220242

- 36. Mutasa S, Chang PD, Ruzal-Shapiro C, Ayyala R. MABAL: a novel deep-learning architecture for machine-assisted bone age labeling. J Digit Imaging 2018; 31: 513–9. doi: 10.1007/s10278-018-0053-3

- 37. Booz C, Yel I, Wichmann JL, Boettger S, Al Kamali A, Albrecht MH, et al. Artificial intelligence in bone age assessment: accuracy and efficiency of a novel fully automated algorithm compared to the Greulich-Pyle method. European Radiology Experimental 2020; 4: 6. doi: 10.1186/s41747-019-0139-9

- 38. Cai W, Lee EY, Vij A, Mahmood SA, Yoshida H. MDCT for computerized volumetry of pneumothoraces in pediatric patients. Acad Radiol 2011; 18: 315–23. doi: 10.1016/j.acra.2010.11.008

- 39. Cherukuri V, Ssenyonga P, Warf BC, Kulkarni AV, Monga V, Schiff SJ. Learning based segmentation of CT brain images: application to postoperative hydrocephalic scans. IEEE Trans Biomed Eng 2018; 65: 1871–84.

- 40. Zheng Q, Shellikeri S, Huang H, Hwang M, Sze RW. Deep learning measurement of leg length discrepancy in children based on radiographs. Radiology 2020; 296: 152–8. doi: 10.1148/radiol.2020192003

- 41. Horng M-H, Kuok C-P, Fu M-J, Lin C-J, Sun Y-N. Cobb angle measurement of spine from X-ray images using convolutional neural network. Comput Math Methods Med 2019; 2019: 1–18. doi: 10.1155/2019/6357171

- 42. Gore S, Chougule T, Jagtap J, Saini J, Ingalhalikar M. A review of radiomics and deep predictive modeling in glioma characterization. Acad Radiol 2020. pii: S1076-6332(20)30366-4.

- 43. Trivizakis E, Papadakis G, Souglakos I, Papanikolaou N, Koumakis L, Spandidos D, et al. Artificial intelligence radiogenomics for advancing precision and effectiveness in oncologic care (review). Int J Oncol 2020; 57: 43–53. doi: 10.3892/ijo.2020.5063

- 44. Hu W, Yang H, Xu H, Mao Y. Radiomics based on artificial intelligence in liver diseases: where are we? Gastroenterology Report 2020; 8: 90–7. doi: 10.1093/gastro/goaa011

- 45. Weiss RJ, Bates SV, Song Y, Zhang Y, Herzberg EM, Chen Y-C, et al. Mining multi-site clinical data to develop machine learning MRI biomarkers: application to neonatal hypoxic ischemic encephalopathy. J Transl Med 2019; 17: 385. doi: 10.1186/s12967-019-2119-5

- 46. Martí-Bonmatí L, Alberich-Bayarri Á, Ladenstein R, Blanquer I, Segrelles JD, Cerdá-Alberich L, et al. PRIMAGE project: predictive in silico multiscale analytics to support childhood cancer personalised evaluation empowered by imaging biomarkers. European Radiology Experimental 2020; 4: 22. doi: 10.1186/s41747-020-00150-9

- 47. Chen T, Chen Y, Yuan M, Gerstein M, Li T, Liang H, et al. The development of a practical artificial intelligence tool for diagnosing and evaluating autism spectrum disorder: multicenter study. JMIR Medical Informatics 2020; 8: e15767. doi: 10.2196/15767

- 48. Liu X, Faes L, Kale AU, Wagner SK, Fu DJ, Bruynseels A, et al. A comparison of deep learning performance against health-care professionals in detecting diseases from medical imaging: a systematic review and meta-analysis. The Lancet Digital Health 2019; 1: e271–97. doi: 10.1016/S2589-7500(19)30123-2

- 49. Rajpurkar P, Irvin J, Ball RL, Zhu K, Yang B, Mehta H, et al. Deep learning for chest radiograph diagnosis: a retrospective comparison of the CheXNeXt algorithm to practicing radiologists. PLoS Med 2018; 15: e1002686. doi: 10.1371/journal.pmed.1002686

- 50. Behzadi-khormouji H, Rostami H, Salehi S, Derakhshande-Rishehri T, Masoumi M, Salemi S, et al. Deep learning, reusable and problem-based architectures for detection of consolidation on chest X-ray images. Comput Methods Programs Biomed 2020; 185: 105162. doi: 10.1016/j.cmpb.2019.105162

- 51. Yates EJ, Yates LC, Harvey H. Machine learning “red dot”: open-source, cloud, deep convolutional neural networks in chest radiograph binary normality classification. Clin Radiol 2018; 73: 827–31. doi: 10.1016/j.crad.2018.05.015

- 52. Mahomed N, van Ginneken B, Philipsen RHHM, Melendez J, Moore DP, Moodley H, et al. Computer-aided diagnosis for World Health Organization-defined chest radiograph primary-endpoint pneumonia in children. Pediatr Radiol 2020; 50: 482–91. doi: 10.1007/s00247-019-04593-0

- 53. England JR, Gross JS, White EA, Patel DB, England JT, Cheng PM. Detection of traumatic pediatric elbow joint effusion using a deep convolutional neural network. American Journal of Roentgenology 2018; 211: 1361–8. doi: 10.2214/AJR.18.19974

- 54. Li Q, Zhong L, Huang H, Liu H, Qin Y, Wang Y, et al. Auxiliary diagnosis of developmental dysplasia of the hip by automated detection of Sharpʼs angle on standardized anteroposterior pelvic radiographs. Medicine 2019; 98: e18500. doi: 10.1097/MD.0000000000018500

- 55. Thian YL, Li Y, Jagmohan P, Sia D, Chan VEY, Tan RT. Convolutional neural networks for automated fracture detection and localization on wrist radiographs. Radiology: Artificial Intelligence 2019; 1: e180001.

- 56. Bhat CS, Chopra M, Andronikou S, Paul S, Wener-Fligner Z, Merkoulovitch A, et al. Artificial intelligence for interpretation of segments of whole body MRI in CNO: pilot study comparing radiologists versus machine learning algorithm. Pediatric Rheumatology 2020; 18: 47. doi: 10.1186/s12969-020-00442-9

- 57. Zheng Q, Furth SL, Tasian GE, Fan Y. Computer-aided diagnosis of congenital abnormalities of the kidney and urinary tract in children based on ultrasound imaging data by integrating texture image features and deep transfer learning image features. J Pediatr Urol 2019; 15: 75.e1–75.e7. doi: 10.1016/j.jpurol.2018.10.020

- 58. Zarinabad N, Abernethy LJ, Avula S, Davies NP, Rodriguez Gutierrez D, Jaspan T, et al. Application of pattern recognition techniques for classification of pediatric brain tumors by in vivo 3T 1H-MR spectroscopy: a multi-center study. Magnetic Resonance in Medicine 2018; 79: 2359–66. doi: 10.1002/mrm.26837

- 59. Iv M, Zhou M, Shpanskaya K, Perreault S, Wang Z, Tranvinh E, et al. MR imaging-based radiomic signatures of distinct molecular subgroups of medulloblastoma. AJNR Am J Neuroradiol 2019; 40: 154–61. doi: 10.3174/ajnr.A5899

- 60. Yi X, Adams SJ, Henderson RDE, Babyn P. Computer-aided assessment of catheters and tubes on radiographs: how good is artificial intelligence for assessment? Radiology: Artificial Intelligence 2020; 2.

- 61. Tomita N, Cheung YY, Hassanpour S. Deep neural networks for automatic detection of osteoporotic vertebral fractures on CT scans. Comput Biol Med 2018; 98: 8–15. doi: 10.1016/j.compbiomed.2018.05.011

- 62. Baldwin DR, Gustafson J, Pickup L, Arteta C, Novotny P, Declerck J, et al. External validation of a convolutional neural network artificial intelligence tool to predict malignancy in pulmonary nodules. Thorax 2020; 75: 306–12. doi: 10.1136/thoraxjnl-2019-214104

- 63. Annarumma M, Withey SJ, Bakewell RJ, Pesce E, Goh V, Montana G. Automated triaging of adult chest radiographs with deep artificial neural networks. Radiology 2019; 291: 196–202. doi: 10.1148/radiol.2018180921

- 64. Ginat DT. Analysis of head CT scans flagged by deep learning software for acute intracranial hemorrhage. Neuroradiology 2020; 62: 335–40. doi: 10.1007/s00234-019-02330-w

- 65. Arbabshirani MR, Fornwalt BK, Mongelluzzo GJ, Suever JD, Geise BD, Patel AA, et al. Advanced machine learning in action: identification of intracranial hemorrhage on computed tomography scans of the head with clinical workflow integration. NPJ Digit Med 2018; 1: 9. doi: 10.1038/s41746-017-0015-z

- 66. Luo H, Hao W, Foos DH, Cornelius CW. Automatic image hanging protocol for chest radiographs in PACS. IEEE Transactions on Information Technology in Biomedicine 2006; 10: 302–11. doi: 10.1109/TITB.2005.859872

- 67. Venkataraman GR, Pineda AL, Bear Don’t Walk IV OJ, Zehnder AM, Ayyar S, Page RL, et al. FasTag: automatic text classification of unstructured medical narratives. PLoS One 2020; 15: e0234647. doi: 10.1371/journal.pone.0234647

- 68. Yi PH, Kim TK, Wei J, et al. Automated semantic labeling of pediatric musculoskeletal radiographs using deep learning. Pediatr Radiol 2019; 49: 1066–70.

- 69. Kim TK, Yi PH, Wei J, Shin JW, Hager G, Hui FK, et al. Deep learning method for automated classification of anteroposterior and posteroanterior chest radiographs. J Digit Imaging 2019; 32: 925–30. doi: 10.1007/s10278-019-00208-0

- 70. Lee YH. Efficiency improvement in a busy radiology practice: determination of musculoskeletal magnetic resonance imaging protocol using deep-learning convolutional neural networks. J Digit Imaging 2018; 31: 604–10. doi: 10.1007/s10278-018-0066-y

- 71. Gálvez JA, Pappas JM, Ahumada L, Martin JN, Simpao AF, Rehman MA, et al. The use of natural language processing on pediatric diagnostic radiology reports in the electronic health record to identify deep venous thrombosis in children. J Thromb Thrombolysis 2017; 44: 281–90. doi: 10.1007/s11239-017-1532-y

- 72. Hassanpour S, Bay G, Langlotz CP. Characterization of change and significance for clinical findings in radiology reports through natural language processing. J Digit Imaging 2017; 30: 314–22. doi: 10.1007/s10278-016-9931-8

- 73. Mowery DL, Chapman BE, Conway M, South BR, Madden E, Keyhani S, et al. Extracting a stroke phenotype risk factor from veteran health administration clinical reports: an information content analysis. J Biomed Semantics 2016; 7: 26. doi: 10.1186/s13326-016-0065-1

- 74. Beesley SD, Patrie JT, Gaskin CM. Radiologist adoption of interactive multimedia reporting technology. Journal of the American College of Radiology 2019; 16: 465–71. doi: 10.1016/j.jacr.2018.10.009

- 75. Schoeb D, Suarez-Ibarrola R, Hein S, Dressler FF, Adams F, Schlager D, et al. Use of artificial intelligence for medical literature search: randomized controlled trial using the Hackathon format. Interact J Med Res 2020; 9: e16606. doi: 10.2196/16606

- 76. IRIS AI. Research Discovery with Artificial Intelligence. 2020. Available from: https://iris.ai/ [accessed 30 July 2020].

- 77. Shelmerdine SC, Singh M, Norman W, Jones R, Sebire NJ, Arthurs OJ. Automated data extraction and report analysis in computer-aided radiology audit: practice implications from post-mortem paediatric imaging. Clin Radiol 2019; 74: 733.e11–733.e18. doi: 10.1016/j.crad.2019.04.021

- 78. Slanetz PJ, Daye D, Chen PH, Salkowski LR. Artificial intelligence and machine learning in radiology education is ready for prime time. J Am Coll Radiol 2020. pii: S1546-1440(20)30418-X.

- 79. Duong MT, Rauschecker AM, Rudie JD, Chen P-H, Cook TS, Bryan RN, et al. Artificial intelligence for precision education in radiology. Br J Radiol 2019; 92: 20190389. doi: 10.1259/bjr.20190389

- 80. Hegdé J. Deep learning can be used to train naïve, nonprofessional observers to detect diagnostic visual patterns of certain cancers in mammograms: a proof-of-principle study. Journal of Medical Imaging 2020; 7: 022410.

- 81. contextflow. SEARCH: 3D image search engine. 2020. Available from: https://contextflow.com/solution/search/ [accessed 25 July 2020].

- 82. Mazurowski MA. Artificial intelligence in radiology: some ethical considerations for radiologists and algorithm developers. Acad Radiol 2020; 27: 127–9. doi: 10.1016/j.acra.2019.04.024

- 83. Safdar NM, Banja JD, Meltzer CC. Ethical considerations in artificial intelligence. Eur J Radiol 2020; 122: 108768. doi: 10.1016/j.ejrad.2019.108768

- 84. Brady AP, Neri E. Artificial intelligence in radiology: ethical considerations. Diagnostics 2020; 10.

- 85. van Assen M, Lee SJ, De Cecco CN. Artificial intelligence from A to Z: from neural network to legal framework. Eur J Radiol 2020; 129: 109083. doi: 10.1016/j.ejrad.2020.109083

- 86. Alqahtani FF, Messina F, Offiah AC. Are semi-automated software program designed for adults accurate for the identification of vertebral fractures in children? Eur Radiol 2019; 29: 6780–9. doi: 10.1007/s00330-019-06250-4

- 87. Goldberg JE, Rosenkrantz AB. Artificial intelligence and radiology: a social media perspective. Curr Probl Diagn Radiol 2019; 48: 308–11.

- 88. Ghosh A, Kandasamy D. Interpretable artificial intelligence: why and when. American Journal of Roentgenology 2020; 214: 1137–8. doi: 10.2214/AJR.19.22145