Significance

Best-subset selection is a benchmark optimization problem in statistics and machine learning. Although many optimization strategies and algorithms have been proposed to solve this problem, our splicing algorithm, under reasonable conditions, enjoys the following properties simultaneously with high probability: 1) its computational complexity is polynomial; 2) it can recover the true subset; and 3) its solution is globally optimal.

Keywords: best-subset selection, splicing, high dimensional

Abstract

Best-subset selection aims to find a small subset of predictors, so that the resulting linear model is expected to have the most desirable prediction accuracy. It is not only important and imperative in regression analysis but also has far-reaching applications in every facet of research, including computer science and medicine. We introduce a polynomial algorithm, which, under mild conditions, solves the problem. This algorithm exploits the idea of sequencing and splicing to reach a stable solution in finite steps when the sparsity level of the model is fixed but unknown. We define an information criterion that helps the algorithm select the true sparsity level with a high probability. We show that when the algorithm produces a stable optimal solution, that solution is the oracle estimator of the true parameters with probability one. We also demonstrate the power of the algorithm in several numerical studies.

Subset selection is a classic topic of model selection in statistical learning and is encountered whenever we are interested in understanding the relationship between a response and a set of explanatory variables. Naturally, this problem has been pursued in statistics and mathematics for decades. The classic methods that are commonly described in statistical textbooks include stepwise regression with the Akaike information criterion (1), the Bayesian information criterion (BIC) (2), and Mallows’s $C_p$ (3).

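Consider $n$ independent observations $(x_i, y_i)$, $i = 1, \ldots, n$, where $x_i \in \mathbb{R}^p$, $y_i \in \mathbb{R}$. Let $y = (y_1, \ldots, y_n)^\top$ and $X = (x_1, \ldots, x_n)^\top$. For convenience, we centralize the columns of $X$ to have zero mean. The following is the classic multivariable linear model with regression coefficient vector $\beta$ and error vector $\varepsilon$:

| $y = X\beta + \varepsilon.$ | [1] |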

Parsimony is desired when we consider a subset of the explanatory variables in Model 1 with comparable prediction accuracy. When the regression coefficient vector is sparse, we want to identify this subset of nonzero coefficients. This is the commonly known problem of the best-subset selection that minimizes the empirical risk function, e.g., the sum of residual squares, under the cardinality constraint in Model 1,

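| $\min_{\beta} \frac{1}{2n}\|y - X\beta\|_2^2, \quad \text{subject to } \|\beta\|_0 \le s,$ | [2] |

where $\|\beta\|_0 = \sum_{j=1}^{p} I(\beta_j \neq 0)$ is the $\ell_0$ norm of $\beta$, and the sparsity level $s$ is usually an unknown nonnegative integer.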

The Lagrangian of Eq. 2 represents a balance between goodness of fit and parsimony. The latter is characterized by model complexity that is generally defined as an increasing function of the number of nonzero values. Thus, this Lagrangian is not continuous and, of course, not smooth. Greedy methods are usually applied to solve such a Lagrangian but suffer from computational difficulties even for a reasonably large $p$. Alternatively, some relaxation methods, e.g., Least-Absolute Shrinkage and Selection Operator (LASSO) (4), Adaptive LASSO (5), Smoothly Clipped Absolute Deviation Penalty (SCAD) (6), and Minimax Concave Penalty (MCP) (7), have been proposed and investigated to ameliorate the computational issue by replacing the nonsmooth penalty function with a smooth approximation. These recently developed methods are computationally feasible and provide near-optimal solutions even for large $p$. However, their solutions do not lead to the best subset and are known to lack important statistical properties (8).

There was little progress on how to solve the best-subset selection problem until recently because such a nonsmooth optimization problem is generally nondeterministic polynomial-time (NP)–hard (9). Recently, to make the best-subset selection problem computationally tractable, optimization strategies and algorithms have been proposed, including the Iterative Hard Thresholding (IHT) algorithm (10), primal-dual active set (PDAS) methods (11), and the Mixed Integer Optimization (MIO) approach (12). However, their solutions may converge to a local minimizer, and IHT and PDAS may also cycle periodically between iterates. More importantly, these methods do not determine the sparsity level adaptively, and their statistical properties remain unclear.

In this paper, we directly deal with Eq. 2 and solve the best-subset selection problem with two critical ideas: a splicing algorithm and an information criterion. Our contribution is threefold. First, we propose “splicing,” a technique to improve the quality of subset selection, and derive an efficient iterative algorithm based on splicing, Adaptive Best-Subset Selection (ABESS), to tackle problem 2. The ABESS algorithm is applicable to high-dimensional datasets with tens of thousands of observations and variables. Second, we prove that the ABESS algorithm consistently selects important variables and that its computational complexity is polynomial; that is, the algorithm is rigorously shown to solve problem 2 in polynomial time. Finally, to determine the most suitable sparsity level, we design an information criterion (special information criterion [SIC]) whose best-subset selection consistency is rigorously proven.

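We define some useful notation for the content below. For $\beta = (\beta_1, \ldots, \beta_p)^\top \in \mathbb{R}^p$, we define the $\ell_q$ norm of $\beta$ by $\|\beta\|_q = (\sum_{j=1}^{p} |\beta_j|^q)^{1/q}$, where $q \in [1, \infty)$. Let $\mathcal{S} = \{1, \ldots, p\}$; for any set $\mathcal{A} \subseteq \mathcal{S}$, denote $\mathcal{A}^c = \mathcal{S} \setminus \mathcal{A}$ as the complement of $\mathcal{A}$ and $|\mathcal{A}|$ as its cardinality. We define the support set of vector $\beta$ as $\mathrm{supp}(\beta) = \{j : \beta_j \neq 0\}$. For an index set $\mathcal{A} \subseteq \{1, \ldots, p\}$, $\beta_{\mathcal{A}} = (\beta_j, j \in \mathcal{A}) \in \mathbb{R}^{|\mathcal{A}|}$. For matrix $X \in \mathbb{R}^{n \times p}$, define $X_{\mathcal{A}} = (X_j, j \in \mathcal{A}) \in \mathbb{R}^{n \times |\mathcal{A}|}$. For any vector $t$ and any set $\mathcal{A}$, $t^{\mathcal{A}}$ is defined to be the vector whose $j$th entry $(t^{\mathcal{A}})_j$ is equal to $t_j$ if $j \in \mathcal{A}$ and zero otherwise. For instance, $\hat\beta^{\mathcal{A}}$ is the vector whose $j$th entry is $\hat\beta_j$ if $j \in \mathcal{A}$ and zero otherwise. $t^{\{j\}}$ is the vector whose $j$th entry is $t_j$ and zero otherwise.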

Method

Splicing.

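In this section, we describe the splicing method. Consider the $\ell_0$ constraint minimization problem,

$$\min_{\beta} L_n(\beta), \quad \text{subject to } \|\beta\|_0 \le s,$$

where $L_n(\beta) = \frac{1}{2n}\|y - X\beta\|_2^2$. Without loss of generality, we consider $\|\beta\|_0 = s$. Given any initial set $\mathcal{A} \subset \mathcal{S} = \{1, \ldots, p\}$ with cardinality $|\mathcal{A}| = s$, denote $\mathcal{I} = \mathcal{A}^c$ and compute

$$\hat\beta = \arg\min_{\beta_{\mathcal{I}} = 0} L_n(\beta).$$

We call $\mathcal{A}$ and $\mathcal{I}$ the active set and the inactive set, respectively.

Given the active set $\mathcal{A}$ and $\hat\beta$, we can define the following two types of sacrifices:

1) Backward sacrifice: For any $j \in \mathcal{A}$, the magnitude of discarding variable $j$ is

| $\xi_j = L_n(\hat\beta^{\mathcal{A} \setminus \{j\}}) - L_n(\hat\beta^{\mathcal{A}}) = \frac{X_j^\top X_j}{2n}(\hat\beta_j)^2.$ | [3] |

2) Forward sacrifice: For any $j \in \mathcal{I}$, the magnitude of adding variable $j$ is

| $\zeta_j = L_n(\hat\beta^{\mathcal{A}}) - L_n(\hat\beta^{\mathcal{A}} + \hat t^{\{j\}}) = \frac{X_j^\top X_j}{2n}\left(\frac{\hat d_j}{X_j^\top X_j / n}\right)^2,$ | [4] |

where $\hat t = \arg\min_{t} L_n(\hat\beta^{\mathcal{A}} + t^{\{j\}})$ and $\hat d_j = X_j^\top (y - X\hat\beta)/n$.

Intuitively, for $j \in \mathcal{A}$ (or $j \in \mathcal{I}$), a large $\xi_j$ (or $\zeta_j$) implies that the $j$th variable is potentially important. Unfortunately, these two sacrifices are not directly comparable because they are computed on support sets of different sizes. However, if we exchange some “irrelevant” variables in $\mathcal{A}$ and some “important” variables in $\mathcal{I}$, it may result in a higher-quality solution. This intuition motivates our splicing method.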
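The two sacrifices can be checked numerically. The following is a minimal sketch, not the authors' implementation: it assumes the loss $L_n(\beta) = \|y - X\beta\|_2^2/(2n)$, and the function names `loss` and `sacrifices` are hypothetical. It computes closed-form backward and forward sacrifices and compares them with the direct loss differences they are meant to represent.

```python
import numpy as np

def loss(X, y, beta):
    """Assumed loss L_n(beta) = ||y - X beta||^2 / (2n)."""
    n = X.shape[0]
    r = y - X @ beta
    return r @ r / (2 * n)

def sacrifices(X, y, active):
    """Least-squares fit on `active`, then closed-form sacrifices for every column."""
    n, p = X.shape
    active = np.asarray(active)
    beta = np.zeros(p)
    beta[active] = np.linalg.lstsq(X[:, active], y, rcond=None)[0]
    d = X.T @ (y - X @ beta) / n            # correlation of each column with the residual
    col_sq = np.einsum("ij,ij->j", X, X)    # X_j^T X_j for every column
    xi = col_sq * beta**2 / (2 * n)         # backward sacrifice (meaningful for active j)
    zeta = n * d**2 / (2 * col_sq)          # forward sacrifice (meaningful for inactive j)
    return beta, xi, zeta

# Toy data (hypothetical): variables 0 and 4 carry signal.
rng = np.random.default_rng(0)
X = rng.standard_normal((50, 6))
X -= X.mean(axis=0)
y = 3.0 * X[:, 0] + 2.0 * X[:, 4] + rng.standard_normal(50)

beta, xi, zeta = sacrifices(X, y, active=[0, 1])

# Backward check: zero out variable 1 without refitting the others.
b_drop = beta.copy()
b_drop[1] = 0.0
backward_direct = loss(X, y, b_drop) - loss(X, y, beta)

# Forward check: add variable 4 with its optimal one-dimensional step.
b_add = beta.copy()
b_add[4] = X[:, 4] @ (y - X @ beta) / (X[:, 4] @ X[:, 4])
forward_direct = loss(X, y, beta) - loss(X, y, b_add)
```

The backward identity relies on the residual being orthogonal to every active column after the least-squares fit, which is why no refit is needed when a single active coefficient is set to zero.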

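Specifically, given any splicing size $k \le s$, and writing $\xi_j$ and $\zeta_j$ for the backward and forward sacrifices of Eqs. 3 and 4, define

$$\mathcal{A}_k = \Big\{ j \in \mathcal{A} : \sum_{i \in \mathcal{A}} I(\xi_j \ge \xi_i) \le k \Big\}$$

to represent the $k$ least relevant variables in $\mathcal{A}$ and

$$\mathcal{I}_k = \Big\{ j \in \mathcal{I} : \sum_{i \in \mathcal{I}} I(\zeta_j \le \zeta_i) \le k \Big\}$$

to represent the $k$ most relevant variables in $\mathcal{I}$. Then, we splice $\mathcal{A}$ and $\mathcal{I}$ by exchanging $\mathcal{A}_k$ and $\mathcal{I}_k$ and obtain a new active set

$$\tilde{\mathcal{A}} = (\mathcal{A} \setminus \mathcal{A}_k) \cup \mathcal{I}_k.$$

Let $\tilde{\mathcal{I}} = \tilde{\mathcal{A}}^c$, $\tilde\beta = \arg\min_{\beta_{\tilde{\mathcal{I}}} = 0} L_n(\beta)$, and $\tau_s > 0$ be a threshold. If $\tau_s < L_n(\hat\beta) - L_n(\tilde\beta)$, then $\tilde{\mathcal{A}}$ is preferable to $\mathcal{A}$. The active set can be updated iteratively until the loss function cannot be improved by splicing. Once the algorithm recovers the true active set, splicing in some irrelevant variables may still decrease the loss function slightly. The threshold avoids this unnecessary calculation. Typically, $\tau_s$ is relatively small.

The remaining problem is to determine the initial set. Typically, we select the first $s$ variables that are most correlated with the response $y$ as the initial set $\mathcal{A}$. Let $k_{\max}$ be the maximum splicing size, $k_{\max} \le s$. In the following, we summarize the above arguments: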

| Algorithm 1: BESS.Fix(): Best-Subset Selection with a given support size . | |

| 1) Input: , , a positive integer , and a threshold . | |

| 2) Initialize , | |

| and (, ): | |

| , | |

| , | |

| , | |

| 3) For , do | |

| If , then stop | |

| end for | |

| 4) Output . |

Note that splicing size k is an important parameter in splicing. Typically, we can try all possible values of k≤s.

| Algorithm 2: Splicing (, , , , , ). |

| 1) Input: , , , , , and . |

| 2) Initialize , and set |

| . |

| 3) For , do |

| , |

| Let , and solve |

| If , then |

| , |

| End for |

| 4) If , then . |

| 5) Output (). |
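The two algorithms above can be sketched in a few lines of NumPy. This is an illustrative sketch under stated assumptions rather than the authors' implementation: the loss is taken to be $\|y - X\beta\|_2^2/(2n)$, the initial set is chosen by marginal correlation with the response, and the names `fit`, `splice_once`, and `bess_fix`, the default threshold `tau=0.0`, and the toy data are all hypothetical.

```python
import numpy as np

def fit(X, y, active):
    """Least squares restricted to `active`; returns (beta, loss)."""
    n, p = X.shape
    idx = np.array(sorted(active))
    beta = np.zeros(p)
    beta[idx] = np.linalg.lstsq(X[:, idx], y, rcond=None)[0]
    r = y - X @ beta
    return beta, r @ r / (2 * n)

def splice_once(X, y, active, k_max, tau):
    """Try exchange sizes k = 1..k_max and keep the best swap if it beats tau."""
    n, p = X.shape
    beta, L = fit(X, y, active)
    A = np.array(sorted(active))
    I = np.setdiff1d(np.arange(p), A)
    col_sq = np.einsum("ij,ij->j", X, X)
    d = X.T @ (y - X @ beta) / n
    xi = col_sq[A] * beta[A] ** 2 / (2 * n)    # backward sacrifices on the active set
    zeta = n * d[I] ** 2 / (2 * col_sq[I])     # forward sacrifices on the inactive set
    drop_order = A[np.argsort(xi)].tolist()    # least relevant active variables first
    add_order = I[np.argsort(-zeta)].tolist()  # most relevant inactive variables first
    best_set, best_L = set(active), L
    for k in range(1, min(k_max, len(A), len(I)) + 1):
        cand = (set(active) - set(drop_order[:k])) | set(add_order[:k])
        _, L_cand = fit(X, y, cand)
        if L_cand < best_L:
            best_set, best_L = cand, L_cand
    if L - best_L > tau:                       # accept only a worthwhile improvement
        return best_set, best_L
    return set(active), L

def bess_fix(X, y, s, k_max=None, tau=0.0, max_iter=100):
    """Fixed-support-size search: screen, then splice until the active set is stable."""
    k_max = s if k_max is None else k_max
    score = np.abs(X.T @ y) / np.sqrt(np.einsum("ij,ij->j", X, X))
    active = set(np.argsort(-score)[:s].tolist())
    for _ in range(max_iter):
        new_active, _ = splice_once(X, y, active, k_max, tau)
        if new_active == active:
            break
        active = new_active
    beta, L = fit(X, y, active)
    return sorted(active), beta, L

# Toy example (hypothetical data): three strong signals among 20 variables.
rng = np.random.default_rng(1)
X = rng.standard_normal((100, 20))
X -= X.mean(axis=0)
beta_true = np.zeros(20)
beta_true[[2, 7, 11]] = [3.0, -2.0, 2.5]
y = X @ beta_true + 0.5 * rng.standard_normal(100)

support, beta_hat, final_loss = bess_fix(X, y, s=3)
swapped, _ = splice_once(X, y, {0, 1, 2}, k_max=3, tau=0.0)
```

Because an exchange is accepted only when it lowers the loss by more than the threshold, the loss decreases strictly along the iterations and the loop must stop, since there are finitely many subsets of size $s$.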

ABESS.

In practice, the support size $s$ is usually unknown. We use a data-driven procedure to determine $s$. Information criteria such as high-dimensional BIC (HBIC) (13) and extended BIC (EBIC) (14) are commonly used for this purpose. Specifically, HBIC (13) can be applied to select the tuning parameter in penalized likelihood estimation. To recover the support size $s$ for the best-subset selection, we introduce a criterion that is a special case of HBIC (13). While HBIC aims to tune the parameter for a nonconvex penalized regression, our proposal is used to determine the size of the best subset. For any active set $\mathcal{A}$, define an SIC as follows:

$$\mathrm{SIC}(\mathcal{A}) = n \log L_{\mathcal{A}} + |\mathcal{A}| \log(p) \log\log n,$$

where $L_{\mathcal{A}} = \min_{\beta_{\mathcal{I}} = 0} L_n(\beta)$ and $\mathcal{I} = \mathcal{A}^c$. To identify the true model, the model complexity penalty is $\log p$, and the slow diverging rate $\log\log n$ is set to prevent underfitting. Theorem 4 states that the following ABESS algorithm selects the true support size via SIC.

Let $s_{\max}$ be the maximum support size. Theorem 4 suggests $s_{\max} = o(n/\log p)$ as the maximum possible recovery size. Typically, we set $s_{\max} = [n/(\log p \log\log n)]$, where $[x]$ denotes the integer part of $x$.

| Algorithm 3: ABESS. |

| 1) Input: , , and the maximum support size . |

| 2) For , do |

| End for |

| 3) Compute the minimum of SIC: |

| 4) Output . |
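The adaptive step can be illustrated as a loop over candidate support sizes followed by a minimization of the criterion. The sketch below makes several assumptions that are not taken from the paper: the criterion is coded as $n \log L_{\mathcal{A}} + |\mathcal{A}| \log(p) \log\log n$ with $L_{\mathcal{A}}$ the restricted least-squares loss $\|y - X\beta\|_2^2/(2n)$, and, to keep the example self-contained, the fixed-size solver is an exhaustive search (feasible only for small $p$) standing in for Algorithm 1. All function names are hypothetical.

```python
import itertools
import numpy as np

def restricted_loss(X, y, active):
    """Minimum of ||y - X beta||^2 / (2n) over beta supported on `active`."""
    n = X.shape[0]
    idx = list(active)
    if not idx:
        return y @ y / (2 * n)
    coef = np.linalg.lstsq(X[:, idx], y, rcond=None)[0]
    r = y - X[:, idx] @ coef
    return r @ r / (2 * n)

def sic(X, y, active):
    """Assumed criterion: n log L_A + |A| log(p) log(log n)."""
    n, p = X.shape
    penalty = len(active) * np.log(p) * np.log(np.log(n))
    return n * np.log(restricted_loss(X, y, active)) + penalty

def exhaustive_fixed_size(X, y, s):
    """Stand-in for the fixed-size solver: brute force over all size-s subsets."""
    p = X.shape[1]
    return min(itertools.combinations(range(p), s),
               key=lambda A: restricted_loss(X, y, A))

def select_support(X, y, s_max, solver=exhaustive_fixed_size):
    """Solve for each s = 1..s_max, then return the active set with the smallest SIC."""
    candidates = [solver(X, y, s) for s in range(1, s_max + 1)]
    return min(candidates, key=lambda A: sic(X, y, A))

# Toy example (hypothetical data): signals on variables 0, 1, and 4.
rng = np.random.default_rng(0)
X = rng.standard_normal((200, 8))
X -= X.mean(axis=0)
beta_true = np.array([3.0, 1.5, 0.0, 0.0, 2.0, 0.0, 0.0, 0.0])
y = X @ beta_true + rng.standard_normal(200)

selected = select_support(X, y, s_max=5)
```

Passing the solver as an argument keeps the model-size selection independent of how each fixed-size problem is solved, so a splicing-based solver could be substituted for the brute-force stand-in.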

Theoretical Results

We establish the computational complexity and the consistency of the best subset recovery from the ABESS algorithm.

Conditions.

Let be the true regression coefficient with the sparsity level in Model 1. Denote the true active set by and the minimal signal strength by . Without loss of generality, assume the design matrix has -normalized columns, i.e., . We say that satisfies the Sparse Restricted Condition (SRC) (15) with order and spectrum bound if ,

We denote this condition by . The SRC gives the range of the spectrum of the diagonal submatrices of the Gram matrix . The spectrum of the off-diagonal submatrices of can be bounded by the sparse orthogonality constant a,b, defined as the smallest number such that

for and . For any , denote

| [5] |

To prove the theoretical properties of the estimator, we assume the following conditions:

-

1)

The random errors are i.i.d with mean zero and sub-Gaussian tails; that is, there exists a such that , for all .

-

2)

.

-

3)

, where is defined in Eq. 5.

-

4)

.

-

5)

.

-

6)

.

-

7)

and .

Remark 1:

The sub-Gaussian condition is often assumed in the related literature and slightly weaker than the standard normality assumption. Condition 2 imposes bounds on the -sparse eigenvalues of the design matrix. As a typical condition in modeling involving high-dimensional data, it restricts the correlation among a small number of variables and thus guarantees the identifiability of the true active set. For example, the SRC has been assumed in existing methods (15–17). Sufficient conditions are provided for a design matrix to satisfy the SRC in propositions 4.1 and 4.2 in ref. 15.

Remark 2:

To verify condition 3, let , which is closely related to the restricted isometry property (RIP) (18) constant for . By lemma 20 in ref. 19, a sufficient condition for condition 3 is , i.e., , which is weaker than the condition in ref. 19.

Remark 3:

Condition 4 ensures that the threshold can control random errors. Condition 6 is the minimal magnitude of the signal for the best subset recovery. To discriminate between the signal and threshold, the signal needs to be stronger than the threshold. The condition is slightly stronger than the condition in ref. 20.

Remark 4:

For the recovery of the true active set, the true sparsity level and the maximum model size cannot be too large. Condition 7 is weaker than the condition in ref. 13 as we consider the least-squares loss function without concave penalty. As shown in the SI Appendix, condition 5 can be removed.

Computational Theory.

Firstly, we show that the splicing method converges in finite steps.

Theorem 1.

Algorithm 1 terminates in a finite number of iterations.

This follows immediately from the fact that . Furthermore, the next theorem delineates the polynomial complexity of the ABESS algorithm.

Theorem 2.

Suppose conditions 1 and 4 hold. Assume conditions 2, 3, and 6 hold with . The computational complexity of ABESS for a given is

If $s \ge s^*$, where $s^*$ denotes the true sparsity level, Algorithm 1 will find the true active set with high probability under conditions 1–4 (Lemma 1). Furthermore, by splicing, the loss function decreases drastically in the first several iterations, and the convergence rate of Algorithm 1 is presented in Theorem 3. However, if $s < s^*$, we can control the number of iterations of Algorithm 1 by using thresholding to exclude useless splicing. Thus, we can show that the number of iterations of Algorithm 1 is polynomial.

Statistical Theory.

Let , where is some constant depending on . The following lemma gives an interesting property of the active set output by Algorithm 1.

Lemma 1.

Suppose is the solution of Algorithm 1 for a given support size and conditions 1–4 hold. Then, we have

Furthermore, if conditions 5 and 6 hold,

Especially, if , we have

Lemma 1 indicates that our estimator of the active set will eventually include the true active set. The next theorem characterizes the number of iterations and the bound error of the splicing method.

Theorem 3.

Suppose is the th iteration of Algorithm 1 for a given support size . Suppose conditions 1–4 hold. Then, with probability , we have

-

1)

where , is the minimal signal strength, and is defined in condition 3;

-

2)

where .

With the threshold , Theorem 3 suggests that our splicing method terminates at a logarithm number of iterations. The estimation error decays geometrically.

The next theorem guarantees that the splicing method can recover the true active set with a high probability.

Theorem 4 (Consistency of Best-Subset Recovery).

Suppose conditions 1, 4, and 7 hold. Assume conditions 2, 3, and 6 hold with . Then, under the information criterion SIC, with probability , for some positive constant and a sufficiently large , the ABESS algorithm selects the true active set, that is, .

Theorem 4 implies that the solution of the splicing method is the same as the oracle least-squares estimator with an unknown sparsity level. Since our approach can recover the true active set, we can directly deduce the asymptotic distribution of .

Corollary 1 (Asymptotic Properties).

Suppose the assumptions and conditions in Theorem 4 hold. Then, with a high probability, the solution of ABESS is the oracle estimator, i.e.,

where and is the least-squares estimator given the true active set . Furthermore,

where .

Simulation

In this part, we compare the proposed ABESS algorithm with other variable selection algorithms under Model 1, where the rows of the design matrix $X$ are sampled i.i.d. from the multivariate normal distribution with mean $0$ and covariance matrix $\Sigma$. The error terms are drawn i.i.d. from the normal distribution $\mathcal{N}(0, \sigma^2)$.

We consider four criteria to assess the methods. The first two criteria, true-positive rate (TPR) and true-negative rate (TNR), are used to evaluate the performance of variable selection. The estimation accuracy for $\beta$ is measured by the relative error (ReErr): $\|\hat\beta - \beta^*\|_2 / \|\beta^*\|_2$, where $\beta^*$ denotes the true coefficient vector. We also examine the dispersion between the sparsity level estimation and the ground truth, which is measured by the sparsity-level error (SLE): $|\hat{\mathcal{A}}| - |\mathcal{A}^*|$, where $\hat{\mathcal{A}}$ and $\mathcal{A}^*$ are the estimated and true active sets. All simulation results are based on 100 synthetic datasets.
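A short sketch of how these four criteria could be computed for a single fitted coefficient vector is given below. The function name `selection_metrics` and the example vectors are hypothetical, and the formulas coded here (relative $\ell_2$ error, and SLE as the signed difference between the estimated and true support sizes) are assumptions about the exact definitions.

```python
import numpy as np

def selection_metrics(beta_hat, beta_true):
    """TPR, TNR, relative l2 error, and signed sparsity-level error."""
    est = beta_hat != 0
    true = beta_true != 0
    tpr = (est & true).sum() / true.sum()        # selected among truly nonzero
    tnr = (~est & ~true).sum() / (~true).sum()   # excluded among truly zero
    re_err = np.linalg.norm(beta_hat - beta_true) / np.linalg.norm(beta_true)
    sle = int(est.sum()) - int(true.sum())       # positive means overestimated size
    return {"TPR": tpr, "TNR": tnr, "ReErr": re_err, "SLE": sle}

# Hypothetical estimate that misses one signal and picks up one noise variable.
beta_true = np.array([3.0, 1.5, 0.0, 0.0, 2.0, 0.0, 0.0, 0.0])
beta_hat = np.array([2.9, 0.0, 0.0, 0.3, 2.1, 0.0, 0.0, 0.0])
metrics = selection_metrics(beta_hat, beta_true)
```

In this example one of three signals is missed and one of five noise variables is selected, so the estimated model has the correct size even though its support is wrong; this is why SLE is reported alongside TPR and TNR rather than instead of them.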

Low-Dimensional Case.

We begin with a low-dimensional setting and compare ABESS and all-subsets regression (ASR), which exhaustively searches for the best subsets of the explanatory variables to predict the response via an efficient branch-and-bound algorithm (21). We use SIC (ASR-SIC) to select a model size for ASR.

We adopt a simulation model from ref. 6. Specifically, the coefficient $\beta$ is fixed at $(3, 1.5, 0, 0, 2, 0, 0, 0)^\top$, and the covariance matrix $\Sigma$ has a decayed structure, i.e., $\Sigma_{ij} = 0.5^{|i - j|}$ for all $i, j \in \{1, \ldots, p\}$. The pair of sample size and noise level $(n, \sigma)$ varies as $(40, 3)$, $(40, 1)$, and $(60, 1)$. It can be seen from Table 1 that when the noise level is large but the sample size is small, the performance of ABESS and ASR is close, although ASR is slightly better. When the noise level decreases, the slight advantage of ASR-SIC disappears. The fact that ABESS performs as well as the exhaustive ASR algorithm, when the setting is simple enough for the latter to be computationally feasible, demonstrates the power of ABESS in selecting the best subset.

Table 1.

Simulation results in the low-dimensional setting

| $(n, \sigma)$ | Method | TPR | TNR | ReErr | SLE |

| (40, 3) | ABESS | 0.90 (0.17) | 0.86 (0.15) | 0.20 (0.19) | 0.40 (0.89) |

| (40, 3) | ASR-SIC | 0.91 (0.17) | 0.87 (0.15) | 0.14 (0.13) | 0.38 (0.85) |

| (40, 1) | ABESS | 1.00 (0.00) | 0.87 (0.14) | 0.02 (0.02) | 0.63 (0.72) |

| (40, 1) | ASR-SIC | 1.00 (0.00) | 0.87 (0.14) | 0.02 (0.02) | 0.63 (0.72) |

| (60, 1) | ABESS | 1.00 (0.00) | 0.90 (0.13) | 0.01 (0.01) | 0.48 (0.64) |

| (60, 1) | ASR-SIC | 1.00 (0.00) | 0.90 (0.13) | 0.01 (0.01) | 0.49 (0.64) |

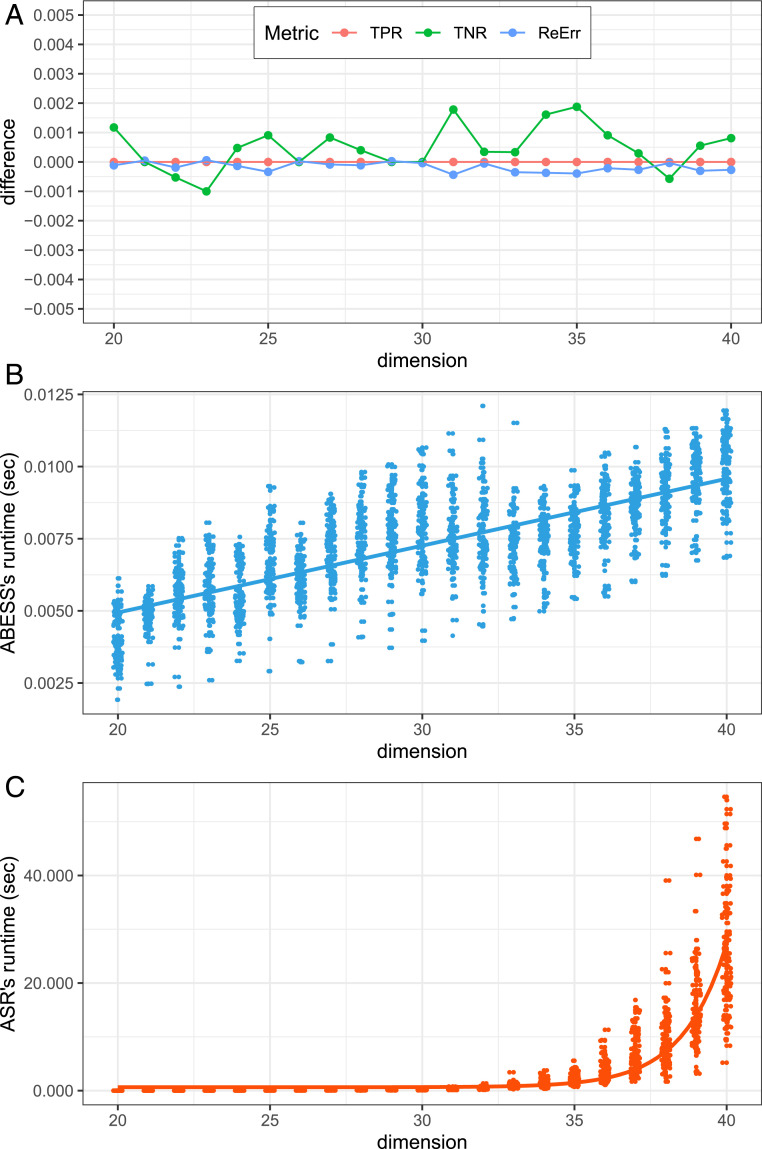

Next, we study the computational time and computational complexity of the ASR and ABESS algorithms by adding zeros to $\beta$ in the previous experiment to form a new $\beta$ with a total of $p$ coefficients. Without loss of generality, we consider the runtime of the algorithms when $p$ increases from 20 to 40 with step size 1. Fig. 1 presents the simulation results. On the one hand, from Fig. 1A, we can see that the differences between ASR-SIC and ABESS in the three criteria all stay within a narrow interval, and, hence, we can conclude that ABESS and ASR have a negligible difference in this setting. On the other hand, from Fig. 1 B and C, the computational time of ASR is 20 s when the dimensionality reaches 40, while that of ABESS is less than 0.03 s. More importantly, from Fig. 1B, the computational time of ABESS grows linearly as the dimension increases, in line with Theorem 2. In contrast, from Fig. 1C, the runtime of ASR increases exponentially. In summary, ABESS not only recovers the support but also is computationally fast.

Fig. 1.

(A) For each of the three metrics (TPR, TNR, and ReErr), the difference ($y$ axis) is calculated by subtracting an ABESS metric from its corresponding ASR metric. Different colors correspond to different metrics. (B) Dimension ($x$ axis) versus ABESS’s runtime ($y$ axis) scatterplot. The blue straight line is a fitted linear function of the dimension. (C) Dimension ($x$ axis) versus ASR’s runtime ($y$ axis) scatterplot. The red curve is a fitted exponential function of the dimension. In B and C, the coefficients of the fitted line and curve are estimated by ordinary least squares.

High-Dimensional Case.

We consider the case when the dimension is in the hundreds or even thousands, for which an exhaustive search is computationally infeasible. It is of interest to compare ABESS and modern variable selection algorithms, including LASSO (4), SCAD (6), and MCP (7). The solutions of the three algorithms are given by the coordinate descent algorithm (22, 23) implemented in R packages glmnet and ncvreg. For all of these methods, we use SIC to select the optimal regularization parameters. We also consider cross-validation (CV), a widely used method, to select the tuning parameter. For MCP/SCAD/LASSO, the regularization parameters to be selected are 100 prespecified values following the default setting in R packages glmnet and ncvreg. For a fair comparison, the input argument of the ABESS algorithm, $s_{\max}$, is also set as 100. Here, is set to be . Note that the concavity parameter of the SCAD and MCP penalties is fixed at 3.7 and 3, respectively (6, 7).

The dimension, $p$, of the explanatory variables increases as 500, 1,500, and 2,500, but only 10 randomly selected variables among them affect the response. Among the 10 effective variables, 3 have a strong effect, 4 have a moderate effect, and the rest have a weak effect. Here, a strong/moderate/weak effect means that a coefficient is sampled from a zero-mean normal distribution with SD 10/5/2. We consider two structures of $\Sigma$. The first one is the uncorrelated structure $\Sigma = I_p$, and the second one is a constant structure, $\Sigma_{ij} = 0.8$ for all $i \neq j$, corresponding to the case that any two explanatory variables are highly correlated. The sample size $n$ is fixed at 500, and the noise level $\sigma$ is fixed at 1.
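For readers who want to reproduce a dataset of this shape, the following sketch generates one replicate. It is an assumption-laden illustration, not the authors' simulation code: the function name `simulate` is hypothetical, the constant-correlation design is produced with a shared latent factor (one of several equivalent ways to obtain pairwise correlation `rho`), and the random seed handling is arbitrary.

```python
import numpy as np

def simulate(n=500, p=500, rho=0.0, sigma=1.0, seed=0):
    """One synthetic dataset: 10 effective variables with strong/moderate/weak effects."""
    rng = np.random.default_rng(seed)
    # Shared-factor construction: unit variances and pairwise correlation rho.
    shared = rng.standard_normal((n, 1))
    own = rng.standard_normal((n, p))
    X = np.sqrt(rho) * shared + np.sqrt(1.0 - rho) * own
    X -= X.mean(axis=0)                        # center the columns
    support = rng.choice(p, size=10, replace=False)
    sds = np.array([10.0] * 3 + [5.0] * 4 + [2.0] * 3)   # strong / moderate / weak
    beta = np.zeros(p)
    beta[support] = rng.normal(0.0, sds)       # one draw per effective variable
    y = X @ beta + sigma * rng.standard_normal(n)
    return X, y, beta

X_ind, y_ind, beta_ind = simulate(rho=0.0)     # uncorrelated structure
X_cor, y_cor, beta_cor = simulate(rho=0.8)     # constant correlation 0.8
sample_corr = np.corrcoef(X_cor[:, 0], X_cor[:, 1])[0, 1]
```

Because weak effects are drawn from a zero-mean distribution, some coefficients can land very close to zero in a given replicate, which is one reason the true-positive rate is hard to push to 1 in this design.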

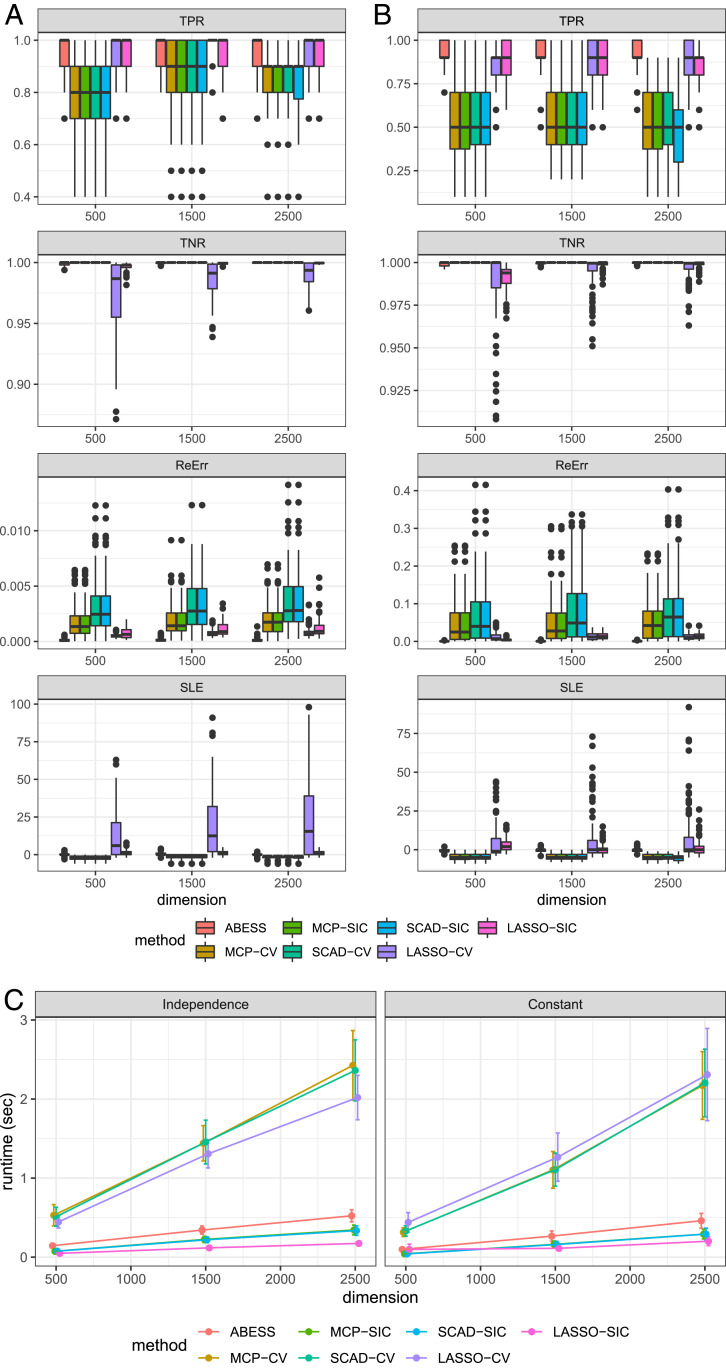

The simulation results are presented in Fig. 2 A and B. A few observations are noteworthy. First, among all of the methods, ABESS and the CV-based LASSO estimator have the best performance in correctly identifying the true effective variables; moreover, ABESS keeps the false-positive rate at a low level, as SCAD and MCP do. Second, SIC helps ABESS efficiently detect the true model size, and its SLE approaches 0. In conjunction with the first point, the empirical results are consistent with the behavior of ABESS established in Theorem 4. In contrast, MCP and SCAD underestimate the model size, whereas LASSO overestimates it. Also, we see that, like BIC (24), SIC avoids overfitting (see additional simulation studies in SI Appendix). Finally, the parameter estimation of ABESS is superior to that of the other algorithms because ABESS not only effectively recovers the support set but also yields an unbiased parameter estimate. Fig. 2C compares the runtime. We see that ABESS is computationally efficient. Furthermore, as expected, ABESS is much faster than the CV-based LASSO/SCAD/MCP methods.

Fig. 2.

(A and B) The boxplots of TPR, TNR, ReErr, and SLE of different algorithms in the high-dimensional setting when any two covariates have no correlation (Left) and constant correlation 0.8 (Right). (C) Average runtime comparison under two correlation structure settings: uncorrelated and constant. The runtime ($y$ axis) is measured in seconds.

Summary

We present an iterative splicing method that distinguishes the active set from the inactive set iteratively in variable selection. The estimated active set is shown to contain the true active set when the given support size is no less than the true size, or to be included in the true active set when the given support size is less than the true size. We also introduce a special information criterion (SIC) to adaptively determine the sparsity level, which is guaranteed to choose the true active set with a high probability. We show that our solution is globally optimal for the Lagrangian of Eq. 2 with SIC and has the oracle properties with a high probability. Numerical results are consistent with the theoretical properties of ABESS. However, when there are a large number of weak effects, the ambiguity makes it challenging to detect signals. ABESS, as well as other methods such as LASSO, SCAD, and MCP, faces a similar difficulty. How to perform an effective subset selection with many weak effects warrants further research.

Acknowledgments

X.W.’s research is partially supported by National Key Research and Development Program of China Grant 2018YFC1315400, Natural Science Foundation of China (NSFC) Grants 71991474 and 11771462, and Key Research and Development Program of Guangdong, China Grant 2019B020228001. H.Z.’s research is supported in part by US NIH Grants R01HG010171 and R01MH116527 and NSF Grant DMS1722544. C.W.’s research is partially supported by NSFC Grant 11801540, Natural Science Foundation of Anhui Grant BJ2040170017, and Fundamental Research Funds for the Central Universities Grant WK2040000016.

Footnotes

The authors declare no competing interest.

This article is a PNAS Direct Submission.

R.L. is a guest editor invited by the Editorial Board.

This article contains supporting information online at https://www.pnas.org/lookup/suppl/doi:10.1073/pnas.2014241117/-/DCSupplemental.

Data Availability.

All study data are included in the article and SI Appendix.

References

- 1. Akaike H., “Information theory and an extension of the maximum likelihood principle” in Selected Papers of Hirotugu Akaike, Parzen E., Tanabe K., Kitagawa G., Eds. (Springer, 1998), pp. 199–213.

- 2. Schwarz G., Estimating the dimension of a model. Ann. Stat. 6, 461–464 (1978).

- 3. Mallows C. L., Some comments on Cp. Technometrics 15, 661–675 (1973).

- 4. Tibshirani R., Regression shrinkage and selection via the lasso. J. Roy. Stat. Soc. B 58, 267–288 (1996).

- 5. Zou H., The adaptive lasso and its oracle properties. J. Am. Stat. Assoc. 101, 1418–1429 (2006).

- 6. Fan J., Li R., Variable selection via nonconcave penalized likelihood and its oracle properties. J. Am. Stat. Assoc. 96, 1348–1360 (2001).

- 7. Zhang C., Nearly unbiased variable selection under minimax concave penalty. Ann. Stat. 38, 894–942 (2010).

- 8. Hazimeh H., Mazumder R., Fast best subset selection: Coordinate descent and local combinatorial optimization algorithms. Oper. Res., in press.

- 9. Natarajan B. K., Sparse approximate solutions to linear systems. SIAM J. Comput. 24, 227–234 (1995).

- 10. Blumensath T., Davies M. E., Iterative hard thresholding for compressed sensing. Appl. Comput. Harmon. Anal. 27, 265–274 (2009).

- 11. Hintermüller M., Ito K., Kunisch K., The primal-dual active set strategy as a semismooth Newton method. SIAM J. Optim. 13, 865–888 (2002).

- 12. Bertsimas D., King A., Mazumder R., Best subset selection via a modern optimization lens. Ann. Stat. 44, 813–852 (2016).

- 13. Wang L., Kim Y., Li R., Calibrating non-convex penalized regression in ultra-high dimension. Ann. Stat. 41, 2505–2536 (2013).

- 14. Chen J., Chen Z., Extended Bayesian information criteria for model selection with large model spaces. Biometrika 95, 759–771 (2008).

- 15. Zhang C., Huang J., The sparsity and bias of the lasso selection in high-dimensional linear regression. Ann. Stat. 36, 1567–1594 (2008).

- 16. Bickel P. J., Ritov Y., Tsybakov A. B., Simultaneous analysis of Lasso and Dantzig selector. Ann. Stat. 37, 1705–1732 (2009).

- 17. Raskutti G., Wainwright M. J., Yu B., Restricted eigenvalue properties for correlated Gaussian designs. J. Mach. Learn. Res. 11, 2241–2259 (2010).

- 18. Candes E. J., Tao T., Decoding by linear programming. IEEE Trans. Inf. Theor. 51, 4203–4215 (2005).

- 19. Huang J., Jiao Y., Liu Y., Lu X., A constructive approach to penalized regression. J. Mach. Learn. Res. 19, 1–37 (2018).

- 20. Zheng Z., Bahadori M. T., Liu Y., Lv J., Scalable interpretable multi-response regression via seed. J. Mach. Learn. Res. 20, 1–34 (2019).

- 21. Miller A., Subset Selection in Regression (CRC Press, 2002).

- 22. Friedman J., Hastie T., Tibshirani R., Regularization paths for generalized linear models via coordinate descent. J. Stat. Software 33, 1–22 (2010).

- 23. Breheny P., Huang J., Coordinate descent algorithms for nonconvex penalized regression, with applications to biological feature selection. Ann. Appl. Stat. 5, 232–253 (2011).

- 24. Zhang Y., Li R., Tsai C. L., Regularization parameter selections via generalized information criterion. J. Am. Stat. Assoc. 105, 312–323 (2010).
