Abstract

Semantic retrieval is flexible, allowing us to focus on subsets of features and associations that are relevant to the current task or context: for example, we use taxonomic relations to locate items in the supermarket (carrots are a vegetable), but thematic associations to decide which tools we need when cooking (carrot goes with peeler). We used fMRI to investigate the neural basis of this form of semantic flexibility; in particular, we asked how retrieval unfolds differently when participants have advance knowledge of the type of link to retrieve between concepts (taxonomic or thematic). Participants performed a semantic relatedness judgement task: on half the trials, they were cued to search for a taxonomic or thematic link, while on the remaining trials, they judged relatedness without knowing which type of semantic relationship would be relevant. Left inferior frontal gyrus showed greater activation when participants knew the trial type in advance. An overlapping region showed a stronger response when the semantic relationship between the items was weaker, suggesting that this structure supports both top-down and bottom-up forms of semantic control. Multivariate pattern analysis further revealed that the neural response in left inferior frontal gyrus reflects goal information related to different conceptual relationships. Top-down control specifically modulated the response in visual cortex: when the goal was unknown, there was greater deactivation to the first word and greater activation to the second word. We conclude that top-down control of semantic retrieval is primarily achieved through the gating of task-relevant ‘spoke’ regions.

Keywords: Semantic cognition, Control, Executive, Top-down, Left inferior frontal gyrus, Hub and spoke model

1. Introduction

Although meaningful items are often presented in isolation in psychology experiments, in the real world we encounter concepts in rich contexts which allow us to anticipate the type of information we need to retrieve at a given moment. Moment-to-moment flexibility is critical for efficient semantic cognition, since we know many features and associations for any given concept, yet only a subset of this information will be relevant at any given time. For example, we know that carrots are brightly coloured, juicy and crunchy and have a sweet flavour: these physical features are typically shared with other fruits and vegetables, and such commonalities contribute to our taxonomic knowledge of hierarchical relations (Hashimoto, McGregor, & Graham, 2007; Murphy, 2010; Springer & Keil, 1991). We also know that carrots are associated with soup and are prepared with a peeler; this thematic knowledge is based on temporal, spatial, causal, or functional relations (Estes, Golonka, & Jones, 2011), and usually involves items which have complementary roles in episodes or events (e.g., dog and leash; Estes et al., 2011; Goldwater et al., 2011). Contexts can cause one of these types of semantic relation to be prioritised over another: for example, in the supermarket, taxonomic knowledge helps us to organise and locate items on our shopping list (carrots are vegetables), yet while cooking with carrots in the kitchen, we focus on thematic associations relating to recipes and utensils. In these situations, we know something about the kind of knowledge we will need to retrieve, even before we encounter the word “carrot” on the shopping list or in our recipe book.

Semantic retrieval is inextricably linked to the context or current task goals (e.g., Damian, Vigliocco, & Levelt, 2001; Hsu, Kraemer, Oliver, Schlichting, & Thompson-Schill, 2011; Kalénine, Shapiro, Flumini, Borghi, & Buxbaum, 2014; Witt, Kemmerer, Linkenauger, & Culham, 2010), and this influences the extent to which particular conceptual features are activated (Yee, Chrysikou, Hoffman, & Thompson-Schill, 2013). In sentence comprehension and production, context constrains access to relevant semantic information (Damian et al., 2001; Glucksberg, Kreuz, & Rho, 1986; Moss & Marslen-Wilson, 1993), and patients with semantic control deficits benefit from constraining cues but also show negative effects of miscueing contexts (Jefferies, Patterson, & Lambon Ralph, 2008; Lanzoni et al., 2019; Noonan, Jefferies, Corbett, & Lambon Ralph, 2010). Furthermore, conceptual activation can be influenced by task cues: for example, Abdel Rahman et al. (2011) found that providing ad hoc contexts for thematic relations (e.g., fishing trip) elicited interference amongst a set of thematically-related items to be named, an effect otherwise found only for taxonomically-related sets. Yet the neurobiological mechanisms that allow us to flexibly focus retrieval on particular semantic relationships, according to the context, are largely unknown.

Semantic representations are thought to be highly distributed, involving both unimodal ‘spoke’ regions, which represent visual, auditory and motor features, and heteromodal ‘hub’ regions, which integrate these diverse features (Buxbaum & Saffran, 2002; Goldberg, Perfetti, & Schneider, 2006; Hoffman, Jones, & Lambon Ralph, 2012; Lambon Ralph, Jefferies, Patterson, & Rogers, 2017; Patterson, Nestor, & Rogers, 2007; Reilly, Garcia, & Binney, 2016; Thompson-Schill, Aguirre, D'Esposito, & Farah, 1999; Visser, Jefferies, Embleton, & Lambon Ralph, 2012). By this account, conceptual retrieval occurs through interactive activation between hub and spokes (Binder et al., 2011; Clarke & Tyler, 2014; Moss, Rodd, Stamatakis, Bright, & Tyler, 2004; Murphy, Rueschemeyer, Smallwood, & Jefferies, 2019; Rogers et al., 2006; Tyler et al., 2013). Both ventral anterior temporal lobe (ATL) and angular gyrus (AG) are candidate semantic hubs: they are well situated for the convergence of sensorimotor information across modalities, and they overlap with the default mode network (DMN) (Caspers et al., 2011; Margulies et al., 2016; Murphy et al., 2017; Turken & Dronkers, 2011; Uddin et al., 2010; Visser et al., 2010, 2012). Some accounts have suggested that ATL is specialised for taxonomic relations, while AG supports thematic relations (de Zubicaray, Hansen, & McMahon, 2013; Geng & Schnur, 2016; Lewis et al., 2015; Schwartz et al., 2011). However, ATL shows activation for both types of relationship when difficulty is matched (Jackson, Hoffman, Pobric, & Lambon Ralph, 2015; Sass et al., 2009; Teige et al., 2019). Consequently, ATL might underpin a wide range of semantic decisions, dynamically forming distinct long-range networks with different cortical spoke regions depending on the task (Chiou & Lambon Ralph, 2019; Mollo, Cornelissen, Millman, Ellis, & Jefferies, 2017).

The flexible retrieval of taxonomic and thematic relations might involve interactions between hub and/or spoke regions and control processes. The Controlled Semantic Cognition framework (Lambon Ralph et al., 2017) proposes that retrieval is ‘shaped’ to suit the circumstances by a semantic control network (Addis & McAndrews, 2006; Badre & Wagner, 2007; Chiou, Humphreys, Jung, & Lambon Ralph, 2018; Davey et al., 2016; Jefferies, 2013; Lambon Ralph et al., 2017; Moss et al., 2005; Thompson-Schill et al., 1997). Recruitment of this network is maximised when non-dominant information or weak associations must be retrieved, or when there is competition or ambiguity (Davey et al., 2015, 2016; Demb et al., 1995; Noonan, Jefferies, Visser, & Lambon Ralph, 2013; Noppeney & Price, 2004; Wagner, Paré-Blagoev, Clark, & Poldrack, 2001; Whitney, Kirk, O'Sullivan, Lambon Ralph, & Jefferies, 2010; Zhang et al., 2004). In these circumstances, a strong left-lateralised response is seen within left inferior frontal gyrus (LIFG) and posterior temporal cortex (Badre, Poldrack, Paré-Blagoev, Insler, & Wagner, 2005; Gold & Buckner, 2002; Noonan et al., 2013; Thompson-Schill et al., 1997) – regions which partially overlap with, and yet are distinct from, domain-general executive regions (Davey et al., 2016; Dobbins & Wagner, 2005; Fedorenko, Duncan, & Kanwisher, 2013; Whitney et al., 2010; Whitney, Kirk, O'Sullivan, Lambon Ralph, & Jefferies, 2012). LIFG changes its pattern of connectivity according to the task, connecting more to visual colour regions during demanding colour-matching trials, and to ATL during easier, globally-related semantic trials (Chiou et al., 2018).
Controlled semantic retrieval is also impaired in patients with damage to LIFG (Harvey, Wei, Ellmore, Hamilton, & Schnur, 2013; Noonan et al., 2010; Robinson, Blair, & Cipolotti, 1998; Stampacchia et al., 2018), while inhibitory stimulation of this region disrupts semantic control (Hoffman, Jefferies, & Lambon Ralph, 2010; Whitney et al., 2010, 2012).

Although previous studies have described this semantic control network, we still lack a detailed mechanistic account of how semantic control operates. In many tasks manipulating semantic control (for example, those involving ambiguous words or weak associations), there are few contextual cues and no pre-specified goal for retrieval, so the semantic connection between the items is not known in advance (Davey et al., 2015; Hallam, Whitney, Hymers, Gouws, & Jefferies, 2016; Morris, 2006; Moss et al., 2005; Rodd, Johnsrude, & Davis, 2011; Swinney, 1979; Thompson et al., 2017; Wagner et al., 2001). In these circumstances, semantic control cannot be engaged proactively: for example, switching between taxonomic and thematic trials is effortful (Landrigan & Mirman, 2018), presumably because the interaction of semantic hub(s) and spokes must be dynamically altered. In other tasks (particularly feature matching, within the existing literature), there is a pre-specified goal (e.g., to match concepts on colour or shape), and consequently semantic control can be applied in a top-down manner (see Badre et al., 2005).¹ Since it is not possible to match semantically-unrelated items on a specific feature without being told this feature in advance, proactive and retroactive aspects of semantic control are not easily compared in existing studies – they are tested by different paradigms. We overcame this limitation by manipulating task knowledge within a semantic judgement paradigm: on half of the trials, a cue specified the type of conceptual link (taxonomic or thematic) that would be relevant in the forthcoming trial, while a non-specific cue was presented on the other trials.
Using fMRI, we were able to investigate the impact of manipulating prior task knowledge on retrieval within the semantic network, and compare this to the effect of strength of association, which has been shown to modulate responses within semantic control regions in previous studies (Badre et al., 2005; Teige et al., 2019; Wagner et al., 2001).

There are several alternative hypotheses about the neural basis of top-down semantic control that are consistent with previous literature. First, top-down semantic control may draw on the same semantic control mechanisms highlighted by earlier studies (Badre et al., 2005; Davey et al., 2015; Teige et al., 2019; Wagner et al., 2001). This view predicts substantial overlap in activation between the effects of task knowledge (allowing top-down control, since the information to focus on is specified in advance) and associative strength (involving stimulus-driven control, in which the information to focus on can only be determined after the concepts are presented). This overlap might be seen in key semantic control regions, such as LIFG, even though manipulations of stimulus-driven control are accompanied by strong differences in task difficulty (e.g., weak associations are harder to retrieve than strong associations), while the provision of task knowledge is not expected to make semantic decisions more difficult. Alternatively, top-down and stimulus-driven semantic control might involve partially-distinct control mechanisms, such as greater reliance on the multiple-demand network for top-down control. This network is implicated in goal-directed behaviour (Crittenden, Mitchell, & Duncan, 2016; Woolgar, Hampshire, Thompson, & Duncan, 2011; Woolgar & Zopf, 2017), and consequently the recruitment of this network might be crucial when a goal for semantic retrieval must be maintained and effortfully implemented.

To allow conceptual retrieval to be shaped to suit the circumstances, it is also necessary for top-down control processes to interact with semantic representations, and there are several hypotheses about how this is achieved. First, it has been suggested that semantic control processes interact with ‘spoke’ representations in sensory and motor regions (Jackson, Rogers, & Lambon Ralph, 2019). This interaction could “gate” conceptual retrieval, for example, by modulating the extent to which visually-presented inputs activate the ventral visual stream; control processes could also shape the flow of activation through the semantic network by regulating the extent to which particular sensorimotor features, such as colour, shape and size, are instantiated during retrieval (via imagery). In addition (or alternatively), semantic control processes may directly modulate the activation of heteromodal conceptual representations in ‘hub’ regions like ATL.

In light of these alternative accounts, we first asked whether top-down semantic control would activate semantic control and domain-general executive regions. In an exploratory analysis, we also asked whether this response overlapped with the effect of strength of association (a classic manipulation of semantic control: weak associations require more controlled retrieval than strong associations). Second, we used multivoxel pattern analyses to examine where information about semantic goals could be classified prior to semantic decisions, and characterised the intrinsic connectivity of this region (which fell within LIFG) in an independent dataset. Third, we investigated the level at which control processes are applied to conceptual representations – i.e. whether control is applied to the heteromodal hub (e.g., in ATL), or to the spokes in order to bias the pattern of semantic retrieval in task-appropriate ways. These analyses allowed us to test mechanistic accounts of top-down semantic retrieval.

2. Methods

2.1. Participants

Thirty-two undergraduate students were recruited for this study (age-range 18–23 years, mean age = 20.6 ± 1.52 years, 5 males). All were right-handed native English speakers, and had normal or corrected-to-normal vision. None of them had any history of neurological impairment, diagnosis of learning difficulty or psychiatric illness. All provided written informed consent prior to taking part and received monetary compensation for their time. One participant was excluded from data analysis due to chance-level accuracy on the semantic decision task (Mean ± SD = 57.5% ± 5.69%). Consequently, 31 participants were included in the final analysis. We also used a separate sample of 211 participants (age-range 18–31 years, mean age = 20.85 ± 2.44 years, 82 males; 5 participants overlapped between the two datasets) who completed resting-state fMRI, to examine the intrinsic connectivity of regions identified in task contrasts. Ethical approval was obtained from the Research Ethics Committees of the Department of Psychology and York Neuroimaging Centre, University of York.

2.2. Materials

In the semantic relatedness judgement task, participants decided whether the probe and target were semantically related. Related items were linked by one of two types of semantic relationship: taxonomic (i.e. participants judged whether the items were in the same category) or thematic (i.e. participants judged whether the items were commonly found or used together; see Procedure for task instructions). On half of the trials, participants were told which relationship would be probed before the word pair was presented. On the other half, there was no advance information about which type of semantic relationship to expect; instead, participants decided about semantic relatedness based on the two items presented. Overall, 120 related and 60 unrelated word pairs were included in this task. Our study used a fully-factorial within-subjects design manipulating (i) Task Knowledge (Known Goal vs. Unknown Goal) and (ii) Semantic Relation (Taxonomic relation vs. Thematic relation) to create four conditions, each including 30 related trials. Unrelated word pairs were generated without repeating words from the related pairs (i.e., each of the 180 word pairs was unique and there was no overlap across conditions). The trials were then evenly divided into two sets corresponding to the Known Goal (60 related, 30 unrelated trials) and Unknown Goal (60 related, 30 unrelated trials) conditions.

Items were selected for taxonomically and thematically-related trials based on existing definitions of these relations. Taxonomic relationships provide hierarchical similarity structures, based primarily on common features shared across same-category items (Hampton, 2006), while thematic relations are largely built around events or scenarios (Estes et al., 2011; Lin & Murphy, 2001), which become conventionalised through the frequent co-occurrence of particular types of objects in real-life situations and in linguistic descriptions of them. We selected a subset of the related items used by Teige et al. (2019). The words in each pair were related either taxonomically or thematically, but not in both ways: this was confirmed using subjective ratings from an independent sample of 30 participants, who were asked about: (1) Thematic relatedness (Co-occurrence): “How associated are these items? For example, are they found or used together regularly?”; (2) Taxonomic relatedness (Physical similarity): “Do these items share similar physical features?”; and (3) Difficulty: “How easy overall is it to identify a connection between the words?”. Ratings were made on a 7-point Likert scale (with a score of 7 indicating strongest agreement). Two-by-two repeated-measures ANOVAs examined differences in these ratings between the trials selected for each condition. There were significant main effects of Semantic Relation for Co-occurrence, F(1,29) = 231.67, p < .001, ηp² = .89, and Physical similarity, F(1,29) = 318.98, p < .001, ηp² = .92: thematically-related word pairs were rated as having higher co-occurrence than taxonomically-related word pairs, while taxonomically-related word pairs had higher physical similarity than thematically-related word pairs. Rated difficulty did not differ significantly between the taxonomic and thematic conditions, F(1,29) = 2.39, p = .13, ηp² = .08.
Importantly, there were no significant differences between Known Goal and Unknown Goal trials (Co-occurrence: F(1,29) = .34, p = .56, ηp² = .01; Physical similarity: F(1,29) = .54, p = .47, ηp² = .02; Difficulty: F(1,29) = .01, p = .93, ηp² < .001), and no interactions between Task Knowledge and Semantic Relation for ratings of thematic relatedness, F(1,29) = .73, p = .40, ηp² = .03, physical similarity, F(1,29) = .07, p = .79, ηp² = .002, or difficulty, F(1,29) = .01, p = .92, ηp² < .001, showing that the trials were well matched across the Known and Unknown Goal conditions (see Table 1).
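
In a 2 × 2 fully within-subjects design, each main effect and the interaction has one numerator degree of freedom, so each F(1, n − 1) equals the squared one-sample t statistic computed on per-rater contrast scores. The sketch below illustrates this equivalence, assuming each of the n raters contributes one mean rating per cell; the cell ordering, contrast names and function names are hypothetical, and this is not the analysis code used for the study. It also recovers ηp² from an F statistic and its degrees of freedom, which offers a quick consistency check on reported effect sizes.

```python
import math
from statistics import mean, stdev

# Hypothetical cell order for each rater's mean rating:
# (known-taxonomic, known-thematic, unknown-taxonomic, unknown-thematic)
TASK_KNOWLEDGE = (1, 1, -1, -1)
SEMANTIC_RELATION = (1, -1, 1, -1)
INTERACTION = (1, -1, -1, 1)


def contrast_f(scores, weights):
    """Return (F, df_error) for one 1-df effect in a 2x2 within-subjects design.

    Each rater's four cell means are collapsed into a single contrast score;
    F(1, n - 1) is the square of the one-sample t statistic on those scores.
    """
    contrast_scores = [sum(w * x for w, x in zip(weights, row)) for row in scores]
    n = len(contrast_scores)
    t = mean(contrast_scores) / (stdev(contrast_scores) / math.sqrt(n))
    return t * t, n - 1


def partial_eta_sq(f, df_effect, df_error):
    """Partial eta squared recovered from an F statistic and its degrees of freedom."""
    return (f * df_effect) / (f * df_effect + df_error)


toy_ratings = [(5, 5, 3, 3), (6, 6, 3, 3), (7, 7, 3, 3)]  # invented data, 3 raters
print(contrast_f(toy_ratings, TASK_KNOWLEDGE))
print(round(partial_eta_sq(231.67, 1, 29), 2))
```

Because the contrast scores are rescaled uniformly, weights of ±1 and ±0.5 give the same F. For example, `partial_eta_sq(231.67, 1, 29)` rounds to .89, matching the value reported for Co-occurrence. The word2vec comparison reported below treats word pairs as items (F(1,111)), so it is a between-items analysis to which this within-subjects shortcut does not apply.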

Table 1.

Ratings of Co-occurrence, Physical similarity, Difficulty, and word2vec values for the four Related conditions and the Unrelated trials.

| Conditions | Co-occurrence | Physical similarity | Difficulty | Word2vec* |
|---|---|---|---|---|
| Known goal taxonomic relation | 3.11 ± .84 | 4.94 ± .69 | 4.68 ± .79 | .36 ± .09 |
| Unknown goal taxonomic relation | 3.05 ± .76 | 4.8 ± .99 | 4.69 ± .80 | .33 ± .13 |
| Known goal thematic relation | 5.25 ± .88 | 1.67 ± .75 | 5.03 ± 1.31 | .25 ± .10 |
| Unknown goal thematic relation | 5.48 ± .86 | 1.61 ± .83 | 5 ± 1.28 | .21 ± .11 |
| Known goal unrelated condition | 1.23 ± .17 | 1.07 ± .10 | 6.70 ± .18 | — |
| Unknown goal unrelated condition | 1.18 ± .27 | 1.08 ± .12 | 6.77 ± .19 | — |

*The word2vec values were only available for 115 of the 120 semantically related word pairs: 1 value was missing for each of Known Goal Taxonomic, Known Goal Thematic and Unknown Goal Thematic Relations, and 2 values were missing for Unknown Goal Taxonomic Relations.

To allow an exploratory analysis of the overall effects of semantic relatedness across both thematic and taxonomic trials, taking into account physical similarity as well as thematic relations, we extracted word2vec values for each trial. Word2vec is a measure of semantic similarity/distance based on the assumption that words with similar meanings occur in similar contexts (Mikolov, Chen, Corrado, & Dean, 2013). It can therefore capture both taxonomic and thematic relationships. Taxonomically related words share features and so occur in similar contexts: for example, dog and sheep can both be described in feeding and running contexts. Thematically related words are frequently found or used together and so also occur in similar contexts: for example, cabbage and bowl both occur in kitchen contexts. Word2vec is correlated with subjective ratings of semantic distance (Wang et al., 2018); however, word2vec may be more sensitive to taxonomic similarity than human volunteers, who tend to be strongly influenced by thematic relationships.²
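
Word2vec similarity between two words is usually computed as the cosine of the angle between their embedding vectors. The sketch below shows that computation with toy four-dimensional vectors invented purely for illustration; real word2vec embeddings typically have a few hundred dimensions and would be loaded from a pretrained model (for instance with gensim's `KeyedVectors`, whose `similarity` method returns the same cosine). Which pretrained vectors were used here is not stated in this section, so none is assumed.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two equal-length vectors (1 = same direction)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


# Toy 4-d vectors invented for illustration; they are NOT real word2vec embeddings.
toy = {
    "dog":     [0.9, 0.1, 0.3, 0.0],
    "sheep":   [0.8, 0.2, 0.4, 0.1],
    "cabbage": [0.1, 0.9, 0.0, 0.5],
    "bowl":    [0.0, 0.8, 0.1, 0.6],
}

for a, b in [("dog", "sheep"), ("cabbage", "bowl"), ("dog", "bowl")]:
    print(f"{a}-{b}: {cosine_similarity(toy[a], toy[b]):.2f}")
```

With these toy vectors, the "taxonomic" pair (dog-sheep) and the "thematic" pair (cabbage-bowl) both score far higher than the unrelated pair (dog-bowl), mirroring the point that a single distributional measure can index both kinds of relation.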

Word2vec has been shown to predict human behaviour better than other approaches, such as latent semantic analysis (Pereira, Gershman, Ritter, & Botvinick, 2016). We opted to use this metric because previous research has shown that semantic similarity, as measured by word2vec, is negatively correlated with the strength of activation in the semantic control network: weakly related trials require more controlled retrieval to identify a semantic link (e.g., Hoffman, 2018; Teige et al., 2019). Consequently, we included word2vec as a parametric regressor across taxonomic and thematic trials to establish whether effects of task knowledge, thought to modulate top-down control processes, occur within the same brain regions as effects of the strength of semantic relations, thought to modulate stimulus-driven control demands. For word2vec, there was a significant main effect of Semantic Relation, F(1,111) = 31.37, p < .001, ηp² = .22 (taxonomic trials were more related on this metric than thematic trials²), but no main effect of Task Knowledge, F(1,111) = 2.50, p = .12, ηp² = .02, and no interaction between these two variables, F(1,111) = .12, p = .73, ηp² = .001 (see Table 1). To avoid confounding effects of linguistic properties, the probe and target words in the four conditions were also matched for word frequency (CELEX database; Baayen, Piepenbrock, & Van Rijn, 1993), length, and imageability using the N-Watch database (Davis, 2005; p > .1, see Table 2).
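
A parametric regressor of this kind is often implemented by giving each trial's event a weight equal to its mean-centred word2vec value, so that the modulator captures trial-by-trial variation around the condition mean. The sketch below writes rows in FSL's custom three-column EV format (onset, duration, weight). The onsets, the choice of the second-word event, the number formatting and the handling of the five pairs without word2vec values are all illustrative assumptions, not a description of the model actually fitted.

```python
from statistics import mean


def parametric_ev_rows(trials):
    """Build three-column EV rows (onset, duration, weight) as tab-separated text.

    trials: list of (onset_s, duration_s, word2vec) tuples; word2vec may be None.
    Weights are mean-centred word2vec values.
    """
    available = [w for _, _, w in trials if w is not None]
    centre = mean(available)
    rows = []
    for onset, duration, w in trials:
        if w is None:
            # Assumption: pairs without a word2vec value are left out of the modulator.
            continue
        rows.append(f"{onset:.2f}\t{duration:.2f}\t{w - centre:.4f}")
    return rows


# Hypothetical onsets (s) for second-word presentations lasting 3 s
example = [(12.5, 3.0, 0.41), (27.0, 3.0, 0.22), (41.8, 3.0, None), (55.1, 3.0, 0.30)]
print("\n".join(parametric_ev_rows(example)))
```

Mean-centring keeps the modulator from simply duplicating the unmodulated condition regressor. An alternative would be to let the modelling software demean or orthogonalise the EV instead.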

Table 2.

Linguistic properties of the Probe and Target words (M ± SD).

| Probe | Frequency | Length | Imageability* |
|---|---|---|---|
| Known goal taxonomic relation | .90 ± .44 | 5.57 ± 1.96 | 579 ± 36.14 |
| Unknown goal taxonomic relation | .94 ± .56 | 5.63 ± 1.52 | 576.98 ± 55.55 |
| Known goal thematic relation | 1.09 ± .63 | 5.77 ± 1.77 | 562 ± 65.82 |
| Unknown goal thematic relation | 1.14 ± .61 | 5.57 ± 1.55 | 571.21 ± 52.73 |
| Known goal unrelated condition | .96 ± .41 | 5.77 ± 1.92 | 570.28 ± 53.54 |
| Unknown goal unrelated condition | .97 ± .50 | 5.80 ± 1.61 | 581.78 ± 49.24 |

| Target | Frequency | Length | Imageability* |
|---|---|---|---|
| Known goal taxonomic relation | 1.04 ± .54 | 5.77 ± 1.89 | 578.22 ± 44.64 |
| Unknown goal taxonomic relation | 1.01 ± .38 | 5.3 ± 1.68 | 566.59 ± 89.65 |
| Known goal thematic relation | 1.19 ± .61 | 5.43 ± 2.24 | 567.79 ± 54.11 |
| Unknown goal thematic relation | 1.11 ± .46 | 5.53 ± 1.68 | 561.32 ± 77.29 |
| Known goal unrelated condition | 1.05 ± .66 | 5.70 ± 2.00 | 580.38 ± 53.95 |
| Unknown goal unrelated condition | 1.00 ± .54 | 5.80 ± 1.71 | 578.11 ± 49.87 |

*Imageability ratings were only available for 91 probes and 101 targets of the 120 semantically related word pairs, and for 59 probes and 59 targets of the 60 semantically unrelated word pairs.

As word order influences semantic processing (Popov, Zhang, Koch, Calloway, & Coutanche, 2019), we analysed the frequency of usage for each word pair when the words appeared in either order (word 1 → word 2; word 2 → word 1), using the Sketch Engine corpus (Kilgarriff, Rychly, Smrz, & Tugwell, 2004). Paired-samples t-tests revealed no significant differences in the frequency of word use across the two possible word orders in any experimental condition (t values < 1.65, p values > .11). We then analysed the frequency of word pairs in the order in which they were presented in the experiment: there were no significant main effects of Task Knowledge (F(1,29) = 2.52, p = .12, ηp² = .08; Known Goal: Mean ± SD = 185.17 ± 88.81 occurrences; Unknown Goal: Mean ± SD = 40.03 ± 12.90 occurrences) or Semantic Relation (F(1,29) = 3.47, p = .07, ηp² = .11; Taxonomic: Mean ± SD = 27.28 ± 17.69 occurrences; Thematic: Mean ± SD = 197.92 ± 88.08 occurrences), and no significant interaction, F(1,29) = 1.44, p = .24, ηp² = .05. These findings indicate that word-pair frequency did not differ significantly across conditions, irrespective of word order.

For the unrelated word pairs, another 12 participants who did not take part in the fMRI experiment rated each word pair on Co-occurrence, Physical similarity and Difficulty, using a 7-point Likert scale as described above; the Difficulty question was adapted to ask: “How easy is it to decide there is no relationship between the words?”. Paired t-tests revealed no significant differences between the unrelated word pairs presented in the Known Goal and Unknown Goal conditions in Co-occurrence (t(29) = .83, p = .41), Physical similarity (t(29) = −.10, p = .92), or Difficulty (t(29) = −1.46, p = .16; see Table 1). The unrelated probes and targets in the Known Goal and Unknown Goal conditions were matched for frequency (CELEX database; Baayen et al., 1993), length and imageability in the N-Watch database (Davis, 2005; p > .1, see Table 2). In addition, comparisons between related and unrelated words, for both probes and targets in each condition, revealed no significant differences in frequency, length, or imageability (p > .10).

We also included a non-semantic baseline task which matched the presentation and response format of the semantic task and provided a means of focussing the analysis on the semantic response. Meaningless letter strings were presented on the screen one after another and participants were asked to decide whether the two letter strings contained the same number of letters. There were 30 matching strings and 15 mismatching strings (i.e., different number of letters between the two).

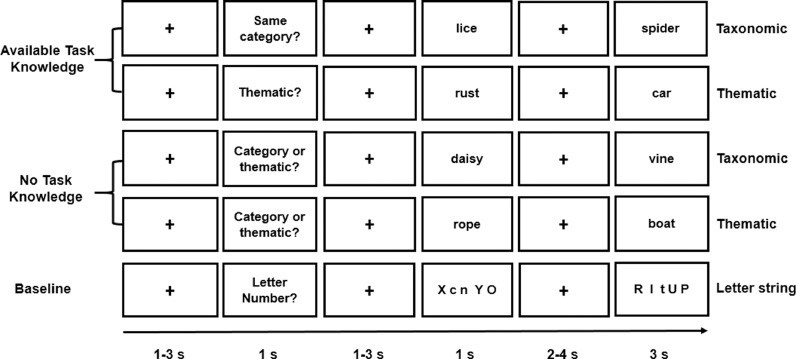

2.3. Procedure

We employed a semantic relatedness judgement task in which participants were asked to decide if the words in each pair were related or unrelated. This basic task was unchanged across conditions, but the nature of the semantic relationship changed across semantically-related trials – some words were from the same category, while others were thematically related. We manipulated the opportunity for top-down controlled semantic retrieval by changing the specificity of the instructions. On half of the trials, a specific task instruction, ‘Category?’ or ‘Thematic?’, was presented so that participants knew in advance which type of semantic relationship would be relevant on the trial (Known Goal). On the other trials, the semantic decision about the word pair was preceded by a non-specific instruction, ‘Category or Thematic?’ (Unknown Goal). This allowed us to compare the brain's response to meaningful items during a semantic task when participants either knew the kind of information that would be relevant to a subsequent decision, or did not have this information in advance and simply decided based on the words that were presented. As a baseline condition, meaningless letter strings were presented and participants were asked to decide whether the number of letters in the two strings was the same or not. For these trials, a ‘Letter number?’ task instruction was presented (see Fig. 1).

Fig. 1.

Illustration of Known and Unknown Taxonomic, Known and Unknown Thematic, and Letter string trials.

As Fig. 1 indicates, each trial started with a fixation cross presented in the centre of the screen for a jittered interval of 1–3 s. The task instruction slide then appeared for 1 s, followed by a jittered inter-stimulus fixation of 1–3 s. The probe item was then presented on the screen for 1 s. After a longer jittered fixation interval lasting 2–4 s, the target was presented for 3 s. This was the response period, during which participants made their judgements (i.e. decisions about the semantic relationship between the words, or about whether the number of letters was the same) and responded as quickly and accurately as possible. They pressed buttons on a response box with their right index and middle fingers to indicate YES and NO responses. The proportion of YES and NO responses was the same across conditions (two YES trials for every NO trial).

Stimuli were presented in five runs, each containing 45 trials: 6 related and 3 unrelated trials in each of the four experimental conditions, and 9 letter-string trials (6 “same number” and 3 “different number” trials). Each run lasted 9 minutes, and trials were presented in a random order. The runs were separated by a short break, and each started with a 9-second alerting slide (‘Experiment starts soon’).
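
The trial timing and run composition above can be turned into a randomised run schedule. The sketch below is a minimal illustration that assumes uniformly distributed jitter and the same event timing for letter-string trials; neither assumption is stated explicitly here. The constant names and trial-label scheme are hypothetical, and the actual stimulus-presentation script is not reproduced.

```python
import random

# Event durations (s) from the Procedure; tuples are jitter ranges.
# Assumption: jitter is drawn uniformly within each range.
FIXATION = (1.0, 3.0)
CUE = 1.0
ISI = (1.0, 3.0)
PROBE = 1.0
DELAY = (2.0, 4.0)
TARGET = 3.0  # response window
ALERT = 9.0   # 'Experiment starts soon' slide at the start of each run

CONDITIONS = [f"{goal}-{rel}" for goal in ("known", "unknown") for rel in ("taxonomic", "thematic")]

# One run: 6 related + 3 unrelated trials per condition, plus 9 letter-string trials.
RUN_TRIALS = (
    [(c, "related") for c in CONDITIONS for _ in range(6)]
    + [(c, "unrelated") for c in CONDITIONS for _ in range(3)]
    + [("letters", "same")] * 6
    + [("letters", "different")] * 3
)


def make_run(trials, seed=None):
    """Return (list of per-trial event onsets, run end time) for one shuffled run."""
    rng = random.Random(seed)
    order = list(trials)
    rng.shuffle(order)
    t = ALERT
    schedule = []
    for condition, kind in order:
        t += rng.uniform(*FIXATION)
        cue = t
        t += CUE + rng.uniform(*ISI)
        probe = t
        t += PROBE + rng.uniform(*DELAY)
        target = t
        t += TARGET
        schedule.append({"condition": condition, "kind": kind,
                         "cue": cue, "probe": probe, "target": target})
    return schedule, t


schedule, end = make_run(RUN_TRIALS, seed=1)
print(len(schedule), round(end, 1))
```

Under the uniform-jitter assumption, the expected trial length is 2 + 1 + 2 + 1 + 3 + 3 = 12 s, so 45 trials take about 540 s on average, consistent with the 9-minute runs. Adding the 9-s alerting slide gives roughly 549 s, which fits within the 185 × 3 s = 555 s acquired per run.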

Before entering the scanner, participants received detailed instructions, and the experimenter explained the distinction between taxonomic and thematic relationships. Participants were told that taxonomically-related items are from the same category and share physical features and were given the example of Kangaroo and Hedgehog. They were told thematically-related items are found or used together and were given the example of Leaves and Hedgehog. To ensure participants fully understood this distinction, as well as to increase their familiarity with the task format, they completed a 15-trial practice block containing all types of judgements. They were given feedback about their performance and, if accuracy was less than 75%, they repeated the practice trials (this additional training was only needed for one participant who pressed the wrong response buttons).

2.4. Neuroimaging data acquisition

Structural and functional data were acquired using a 3T GE HDx Excite Magnetic Resonance Imaging (MRI) scanner with an eight-channel phased-array head coil at the York Neuroimaging Centre, University of York. Structural MRI acquisition in all participants was based on a T1-weighted 3D fast spoiled gradient echo sequence (repetition time (TR) = 7.8 ms, echo time (TE) = minimum full, flip angle = 20°, matrix size = 256 × 256, 176 slices, voxel size = 1.13 mm × 1.13 mm × 1 mm). Task-based activity was recorded using a single-shot 2D gradient-echo-planar imaging sequence with TR = 3 s, TE = minimum full, flip angle = 90°, matrix size = 64 × 64, 60 slices, and voxel size = 3 mm × 3 mm × 3 mm. Data were acquired in a single session. The task was presented across 5 functional runs, each containing 185 volumes.

In a different sample of participants, a 9-minute resting-state fMRI scan was recorded using single-shot 2D gradient-echo-planar imaging (TR = 3 s, TE = minimum full, flip angle = 90°, matrix size = 64 × 64, 60 slices, voxel size = 3 mm × 3 mm × 3 mm, 180 volumes). The participants were instructed to focus on a fixation cross with their eyes open and to keep as still as possible, without thinking about anything in particular. For both the task-based and the resting-state scanning, a fluid-attenuated inversion-recovery (FLAIR) scan with the same orientation as the functional scans was collected to improve co-registration between subject-specific structural and functional scans. The resting-state data were collected alongside task data, with the resting-state sequence presented first, so that measures of intrinsic connectivity could not be influenced by task performance.

2.5. Pre-processing of task-based fMRI data

All functional and structural data were pre-processed using a standard pipeline and analysed with the FMRIB Software Library (FSL version 6.0, www.fmrib.ox.ac.uk/fsl). Individual FLAIR and T1-weighted structural brain images were extracted using FSL's Brain Extraction Tool (BET). Structural images were linearly registered to the MNI152 template using FMRIB's Linear Image Registration Tool (FLIRT). The first three volumes of each functional scan (i.e., the 9-second task reminder ‘Experiment starts soon’) were removed to minimise the effects of magnetic saturation, leaving 182 volumes per functional scan. The functional neuroimaging data were analysed using FSL's FMRI Expert Analysis Tool (FEAT). We applied motion correction using MCFLIRT (Jenkinson, Bannister, Brady, & Smith, 2002), slice-timing correction using Fourier-space time-series phase-shifting (interleaved), spatial smoothing using a Gaussian kernel of FWHM 6 mm (for the univariate analyses only), and high-pass temporal filtering (sigma = 100 s) to remove temporal signal drift. The spatial smoothing step was omitted for multivariate pattern analysis to preserve local voxel information. In addition, motion scrubbing (using the fsl_motion_outliers tool) was applied to exclude volumes that exceeded a framewise displacement threshold of 0.9 mm. One run from each of two participants showed high head motion (absolute displacement > 2 mm); these runs were excluded from the univariate analysis, and these two participants were removed entirely from the multivariate pattern analysis. Mean temporal signal-to-noise ratio (tSNR; the ratio of the mean signal in each voxel to the standard deviation of the residual error time series in that voxel) across all acquisitions is shown in Supplementary Fig. S1.
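The motion-scrubbing and tSNR steps can be sketched in a few lines of Python. This is a minimal illustration, not the FSL implementation: it assumes the six MCFLIRT parameters are ordered as three rotations (radians) followed by three translations (mm), converts rotations to displacement on an assumed 50 mm head radius (a common framewise-displacement convention), and flags volumes above the 0.9 mm threshold. The tSNR helper follows the definition given in the text.

```python
import numpy as np


def framewise_displacement(params, head_radius_mm=50.0):
    """Framewise displacement (mm) for each volume.

    `params` is an (n_volumes, 6) array. ASSUMPTION: columns 0-2 are
    rotations in radians and columns 3-5 are translations in mm.
    """
    params = np.asarray(params, dtype=float)
    deltas = np.abs(np.diff(params, axis=0))
    # Convert rotations to arc length on a sphere of the assumed head radius.
    deltas[:, :3] *= head_radius_mm
    # The first volume has no predecessor, so its displacement is set to 0.
    return np.concatenate([[0.0], deltas.sum(axis=1)])


def volumes_to_keep(params, threshold_mm=0.9):
    """Boolean mask: True for volumes at or below the FD threshold."""
    return framewise_displacement(params) <= threshold_mm


def temporal_snr(mean_signal, residuals):
    """Voxelwise tSNR: mean signal divided by the SD of the residual time series.

    `residuals` has time on the last axis.
    """
    return np.asarray(mean_signal, dtype=float) / np.std(residuals, axis=-1, ddof=1)
```

In FSL, flagged volumes are usually handled by adding the confound matrix written by fsl_motion_outliers to the FEAT model rather than by deleting volumes; the boolean mask here only shows which volumes would be affected.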

2.6. Univariate analysis of task-based fMRI data

The analysis examined the controlled retrieval of semantic information at different stages of the task. We separated the response to the first word, when participants either knew in advance what type of semantic relationship to retrieve (Specific goal: ‘Category?’ or ‘Thematic?’) or did not (Non-specific goal: ‘Category or Thematic?’), from the response to the second word, when participants linked the two words either according to the specific instruction or without a specific instruction about the nature of the semantic relationship. Consequently, the model included three factors: (1) Word Position (First word vs. Second word), (2) Task Knowledge (Known goal vs. Unknown goal), and (3) Semantic Relation (Taxonomic relation vs. Thematic relation).

In addition, in an exploratory analysis, we examined effects of the semantic distance between the probe and target words during semantic decision-making. This analysis was designed to establish whether the effects of having specific instructions prior to semantic retrieval, seen in LIFG (see below), would overlap with the well-established effect of identifying semantic links between distantly related as opposed to closely related items, which elicits activation within the semantic control network (Davey et al., 2015; Noonan et al., 2010; Noonan et al., 2013; Whitney et al., 2010). We included a parametric regressor in the model, across all taxonomic and thematic trials, to characterise the semantic distance for each trial. This regressor was derived using the word2vec algorithm (Mikolov et al., 2013), which uses word co-occurrence patterns in a large language corpus to derive vector representations of words; these vectors can then be compared to determine the similarity of two items. The inclusion of this analysis allowed us to establish whether the brain regions that support the top-down control of retrieval (i.e., the comparison of Known Goal vs. Unknown Goal trials) overlap with those sensitive to stimulus-driven control demands. Since thematic and taxonomic trials differed in word2vec similarity, this additional regressor also statistically controls for differences between these two types of semantic relation, although examining overall effects of semantic relation was not the key aim of the current study (see Supplementary Analysis 5).
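As an illustration of how a word2vec-based similarity score can be computed for a probe-target pair, the sketch below takes the cosine of the angle between two word vectors. It assumes the vectors have already been obtained from a pretrained word2vec model (loading a model is omitted). The three-dimensional toy vectors are hypothetical and chosen only to show the computation; real word2vec vectors typically have a few hundred dimensions. Lower similarity corresponds to greater semantic distance.

```python
import numpy as np


def cosine_similarity(u, v):
    """Cosine similarity between two word vectors (1 = same direction)."""
    u = np.asarray(u, dtype=float)
    v = np.asarray(v, dtype=float)
    return float(u @ v / (np.linalg.norm(u) * np.linalg.norm(v)))


# HYPOTHETICAL toy vectors standing in for pretrained word2vec embeddings;
# the values are illustrative and not taken from the study's stimuli.
toy_vectors = {
    "carrot": [0.9, 0.1, 0.3],
    "potato": [0.8, 0.2, 0.35],
    "peeler": [0.2, 0.9, 0.4],
}

pair_a = cosine_similarity(toy_vectors["carrot"], toy_vectors["potato"])
pair_b = cosine_similarity(toy_vectors["carrot"], toy_vectors["peeler"])
print(round(pair_a, 3), round(pair_b, 3))
```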

The pre-processed time-series data were modelled using a general linear model, using FMRIB's Improved Linear Model (FILM) with correction for local autocorrelation (Woolrich, Ripley, Brady, & Smith, 2001). Ten explanatory variables (EVs) of interest and five of no interest were modelled using a double-gamma hemodynamic response function (probe and target were modelled separately, i.e., two EVs for each condition). The 10 EVs of interest were: (1) Probe and (2) Target for Known Goal Taxonomic Relations, (3) Probe and (4) Target for Unknown Goal Taxonomic Relations, (5) Probe and (6) Target for Known Goal Thematic Relations, (7) Probe and (8) Target for Unknown Goal Thematic Relations, and (9) Probe and (10) Target for the same-number letter string baseline condition. Our EVs of no interest were: (11) Probe and (12) Target for unrelated word pairs (when participants should decide that the two words are not semantically linked), (13) Other inputs of no interest (including the instruction slide, the period after the response on each trial when the word was still on the screen, and different-number letter string trials), (14) Fixation (including the inter-stimulus fixations between instructions and first item, as well as between first item and second item, when some retrieval or task preparation was likely to be occurring), and (15) Incorrect responses (including all incorrect trials across conditions). We also included an EV to model word2vec as a parametric regressor, giving 16 EVs in total. EVs for the first item in each pair commenced at the onset of the word or letter string, with EV duration set as the presentation time (1 s). EVs for the second item in each pair commenced at the onset of the word or letter string and ended with the participant’s response (i.e., a variable epoch approach was used to remove effects of time on task).
The remainder of the second item's presentation time was modelled in the inputs-of-no-interest EV (e.g., word 2 was on screen for 3 s, so if a participant responded after 2 s, the remaining 1-second post-response period was removed and placed in the inputs-of-no-interest EV). The parametric word2vec EV had the same onset time and duration as the EVs corresponding to the second word in the semantic trials, but additionally used the demeaned word2vec value of each trial as a weight. The fixation period between trials provided the implicit baseline.
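The variable-epoch and parametric EVs described above can be expressed in FSL's three-column custom EV format (onset, duration, weight; one row per event). The sketch below is a hypothetical helper, not the authors' script: it assumes that second-word onsets, response times and word2vec scores are available as arrays for the semantic trials, that demeaning is done over the trials passed in, and that word 2 stays on screen for 3 s, as stated in the text.

```python
import numpy as np

WORD2_DISPLAY_S = 3.0  # on-screen duration of the second word (from the text)


def second_word_evs(onsets, rts, word2vec_scores, display_s=WORD2_DISPLAY_S):
    """HYPOTHETICAL helper returning three (n_trials, 3) arrays in FSL format.

    task_ev:   onset, duration = RT (variable epoch), weight = 1
    param_ev:  same timing, weight = demeaned word2vec score
    post_resp: leftover display time after the response, weight = 1
               (destined for the inputs-of-no-interest EV)
    """
    onsets = np.asarray(onsets, dtype=float)
    rts = np.asarray(rts, dtype=float)
    scores = np.asarray(word2vec_scores, dtype=float)
    ones = np.ones_like(onsets)

    task_ev = np.column_stack([onsets, rts, ones])
    # ASSUMPTION: scores are demeaned across the trials supplied here.
    param_ev = np.column_stack([onsets, rts, scores - scores.mean()])
    post_resp = np.column_stack([onsets + rts, display_s - rts, ones])
    return task_ev, param_ev, post_resp


task_ev, param_ev, post_resp = second_word_evs(
    onsets=[12.0, 30.0], rts=[2.0, 1.5], word2vec_scores=[0.4, 0.2]
)
# e.g. np.savetxt("ev_word2vec.txt", param_ev, fmt="%.3f")  # hypothetical file name
```

The first trial reproduces the example in the text: a response after 2 s leaves a 1-second post-response period that is moved to the inputs-of-no-interest EV.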

In addition to contrasts examining the main effects of Word Position (Word 1 vs. Word 2), Task Knowledge (Known Goal vs. Unknown Goal), and Semantic Relation (Taxonomic Relation vs. Thematic Relation), we included all two-way and three-way interaction terms, and comparisons of each semantic condition with the non-semantic (baseline) letter task. The five sequential runs were combined using fixed-effects analyses for each participant. In the higher-level analysis at the group level, the combined contrasts were analysed using FMRIB's Local Analysis of Mixed Effects (FLAME1), with automatic outlier de-weighting (Woolrich, 2008). A 50% probabilistic grey-matter mask was applied. Clusters were thresholded using Gaussian random-field theory, with a cluster-forming threshold of z = 3.1 and a familywise-error-corrected significance level of p = .05.
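Because EVs (1)-(8) cross three two-level factors, the main-effect and interaction contrasts can be generated as Kronecker products of per-factor weight vectors. This sketch assumes the EV order given in the design description (Semantic Relation varying slowest, then Task Knowledge, then Word Position) and covers only the eight semantic EVs; comparisons with the letter-string baseline and padding to the full 16-EV design are omitted.

```python
import numpy as np

# Per-factor weights for a two-level factor.
SUM = np.array([1, 1])    # collapse across the factor
DIFF = np.array([1, -1])  # first level minus second level

# ASSUMED EV order (1)-(8): Relation (Taxonomic, Thematic) varies slowest,
# then Task Knowledge (Known, Unknown), then Word Position (Word 1, Word 2).


def contrast(relation, knowledge, position):
    """Contrast weights over EVs (1)-(8) as a Kronecker product."""
    return np.kron(np.kron(relation, knowledge), position)


contrasts = {
    "Known > Unknown": contrast(SUM, DIFF, SUM),
    "Word 1 > Word 2": contrast(SUM, SUM, DIFF),
    "Taxonomic > Thematic": contrast(DIFF, SUM, SUM),
    "Knowledge x Position": contrast(SUM, DIFF, DIFF),
    "Relation x Knowledge": contrast(DIFF, DIFF, SUM),
    "Relation x Position": contrast(DIFF, SUM, DIFF),
    "Three-way interaction": contrast(DIFF, DIFF, DIFF),
}

for name, weights in contrasts.items():
    print(f"{name:24s}", weights)
```

Every vector sums to zero, so each contrast is insensitive to the overall level of activation.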

2.7. ROI-based multivariate pattern analysis

To examine whether regions in the semantic control network (Noonan et al., 2013) contained information about the semantic goal specified by the instructions, we performed ROI-based MVPA using the PyMVPA toolbox (Hanke et al., 2009). This network, including LIFG, posterior middle temporal gyrus, dorsal angular gyrus, and pre-supplementary motor area, was defined by a formal meta-analysis of 53 studies that contrasted semantic tasks with high over low executive control demands (Noonan et al., 2013). We examined the neural response to the first word, as this allowed us to capture the process of tailoring ongoing retrieval to suit the task demands before the presentation of different semantic relationships could elicit different neural patterns. We used a linear support vector machine (SVM) classifier (LinearCSVMC; Chang & Lin, 2011) to decode the neural patterns elicited by Known Goal vs. Unknown Goal trials. We also examined decoding of the type of semantic relationship in two separate analyses: Known Goal Taxonomic Relation vs. Known Goal Thematic Relation, where semantic control regions might reflect the distinction between these trial types, and Unknown Goal Taxonomic Relation vs. Unknown Goal Thematic Relation, as a control analysis, since nothing distinguishes the Unknown Goal trial types at the first word and semantic control regions should therefore not be able to decode them.

To derive condition-specific beta estimates, we repeated the univariate first-level GLM analysis on spatially unsmoothed fMRI data. Beta images for each condition were estimated separately in each of the five runs, including only trials with correct responses. Consequently, the spatial pattern entered into the classification analysis represented the average neural pattern for each condition in each run. This approach is consistent with previous studies investigating semantic and goal representations (Murphy et al., 2017; Peelen & Caramazza, 2012; van Loon, Olmos-Solis, Fahrenfort, & Olivers, 2018). We employed a searchlight method (Kriegeskorte, Goebel, & Bandettini, 2006) to reveal local activity patterns within the semantic control network that carry goal information, using a spherical searchlight with a radius of 3 voxels within a leave-one-run-out cross-validation scheme across all 5 runs (i.e., five training-testing iterations). Each run acted in turn as the testing set, and the remaining 4 runs were used to train the SVM. Z-score normalisation of each voxel per run and per condition was performed to control for global variations of the hemodynamic response across runs and subjects, and to remove any contribution of mean activation so that only pattern information was retained.
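The cross-validation scheme for a single searchlight sphere can be sketched with scikit-learn, used here as a stand-in for PyMVPA's LinearCSVMC. The example uses simulated patterns: one beta pattern per condition per run (five runs), 123 voxels (the number of voxel centres within a sphere of radius 3 voxels), and an artificial condition difference. The normalisation step is an interpretation and an assumption: each pattern is z-scored across voxels, which removes its mean activation level.

```python
import numpy as np
from sklearn.model_selection import LeaveOneGroupOut, cross_val_score
from sklearn.svm import SVC


def zscore_patterns(X):
    """ASSUMPTION: z-score each pattern (row) across voxels to remove its mean activation."""
    X = np.asarray(X, dtype=float)
    return (X - X.mean(axis=1, keepdims=True)) / X.std(axis=1, keepdims=True)


def leave_one_run_out_accuracy(X, y, runs):
    """Per-fold accuracy of a linear SVM (scikit-learn stand-in), one run held out per fold."""
    clf = SVC(kernel="linear", C=1.0)
    return cross_val_score(clf, zscore_patterns(X), y,
                           groups=runs, cv=LeaveOneGroupOut())


# Simulated data: 5 runs x 2 conditions, one beta pattern each.
rng = np.random.default_rng(0)
n_runs, n_voxels = 5, 123
runs = np.repeat(np.arange(n_runs), 2)   # [0, 0, 1, 1, ..., 4, 4]
y = np.tile([0, 1], n_runs)              # 0 = Known Taxonomic, 1 = Known Thematic
condition_pattern = rng.normal(size=n_voxels)
X = rng.normal(size=(2 * n_runs, n_voxels))
X += np.outer(np.where(y == 1, 1.0, -1.0), condition_pattern)

fold_accuracies = leave_one_run_out_accuracy(X, y, runs)
print(fold_accuracies, fold_accuracies.mean())
```

In a searchlight analysis this computation is repeated for every sphere, and the mean accuracy is written to the sphere's central voxel.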

Classifiers were trained and tested on individual subject data transformed into MNI standard space. Leave-one-run-out cross-validation was carried out separately for Known vs. Unknown Goal and for Known Goal Thematic vs. Known Goal Taxonomic relations, with classification accuracy (i.e., the proportion of test samples whose predicted label matched the true label) calculated within each fold. Because the classifier was tested on two samples in each fold, one per condition (for example, Known Goal Taxonomic Relation and Known Goal Thematic Relation), the accuracy for each iteration could be 0% (no correct predictions), 50% (one of the two samples correctly classified), or 100% (both correctly classified). Classification accuracies were then averaged across folds and assigned to the central voxel of each searchlight sphere to produce participant-specific information maps; individual accuracies could therefore take any value from 0 to 1 in steps of 0.1 (i.e., 0, 0.1, 0.2, ..., 1). To identify whether any region within the ROI contained significant pattern information (i.e., accuracy above the chance level of 50%), individual information maps from all participants were submitted to a nonparametric one-sample t-test at each voxel, based on permutation methods implemented in FSL's randomise tool (5000 permutations). Threshold-free cluster enhancement (TFCE; Smith & Nichols, 2009) was used to identify significant clusters from the permutation tests. The final results were thresholded at a TFCE-corrected p-value < 0.05, controlling the family-wise error rate.
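Two details of this procedure can be checked numerically. First, with five folds and two test samples per fold, mean accuracy can only take the eleven values 0, 0.1, ..., 1. Second, the group-level test against chance can be illustrated at a single voxel with a sign-flipping permutation test on accuracy minus 0.5, the kind of null distribution used for a one-sample test in randomise. TFCE and family-wise correction across voxels are omitted, so this is a simplified sketch rather than a reimplementation of randomise; the example accuracies are hypothetical.

```python
import itertools

import numpy as np

# Possible mean accuracies over 5 folds, each fold scoring 0, 0.5 or 1.
possible_means = sorted({round(float(np.mean(folds)), 1)
                         for folds in itertools.product((0.0, 0.5, 1.0), repeat=5)})


def one_sample_t(x):
    """One-sample t statistic against zero."""
    return x.mean() / (x.std(ddof=1) / np.sqrt(len(x)))


def sign_flip_pvalue(accuracies, chance=0.5, n_perm=5000, seed=0):
    """One-sided permutation p-value for mean accuracy > chance at one voxel.

    Simplified illustration: no TFCE and no correction across voxels.
    """
    d = np.asarray(accuracies, dtype=float) - chance
    observed = one_sample_t(d)
    rng = np.random.default_rng(seed)
    signs = rng.choice([-1.0, 1.0], size=(n_perm, len(d)))
    null = np.array([one_sample_t(s * d) for s in signs])
    # Count the observed statistic as one member of the null distribution.
    return (np.sum(null >= observed) + 1) / (n_perm + 1)


# HYPOTHETICAL participant accuracies at one voxel.
above_chance = [0.7, 0.6, 0.8, 0.5, 0.7, 0.6, 0.9, 0.6, 0.7, 0.8]
p = sign_flip_pvalue(above_chance)
print(possible_means, p)
```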

2.8. Resting-state neuroimaging data analysis

Resting-state functional connectivity analyses in an independent dataset were used to establish patterns of intrinsic connectivity for the clusters derived from the task contrasts. First, we examined whether the LIFG clusters linked to task knowledge (derived from the contrast Known > Unknown Goal), to effects of word2vec, and to classification of specific goal information showed connectivity with the broader semantic control network described by Noonan et al. (2013). Secondly, we assessed patterns of intrinsic connectivity for sites sensitive to the type of semantic relationship, to establish whether these brain regions showed relatively strong connectivity with each other and with other default mode network regions. Lastly, we characterised the intrinsic connectivity of a site implicated in the application of semantic control (a site in visual cortex which showed an interaction between task knowledge and word position). This resting-state dataset, collected from more than 200 healthy students at the University of York, has been used in multiple previous publications (e.g., Sormaz et al., 2018; Vatansever et al., 2017; Vatansever, Karapanagiotidis, Margulies, Jefferies, & Smallwood, 2019; Wang et al., 2018).

Resting-state functional connectivity analyses were conducted using the CONN-fMRI functional connectivity toolbox, version 18a (http://www.nitrc.org/projects/conn) (Whitfield-Gabrieli & Nieto-Castanon, 2012), based on Statistical Parametric Mapping 12 (http://www.fil.ion.ucl.ac.uk/spm/). First, we performed spatial pre-processing of functional and structural images. This included slice-timing correction (bottom-up, interleaved), motion realignment, skull stripping, co-registration to the high-resolution structural image, functional indirect segmentation, spatial normalisation to Montreal Neurological Institute (MNI) space, and smoothing with an 8 mm FWHM Gaussian filter. In addition, a temporal band-pass filter of 0.009–0.08 Hz was applied to constrain analyses to low-frequency fluctuations. A strict nuisance regression approach removed motion artefacts, scrubbed volumes, a linear detrending term, and CompCor components attributable to the signal from white matter and cerebrospinal fluid (Behzadi, Restom, Liau, & Liu, 2007), eliminating the need for global signal normalisation (Chai, Castañón, Öngür, & Whitfield-Gabrieli, 2012; Murphy, Birn, Handwerker, Jones, & Bandettini, 2009). ROIs were taken from the task-based fMRI results (see Results section) and binarised using fslmaths. In the first-level analysis, we extracted the time series from these seeds and used them as explanatory variables in whole-brain connectivity analyses at the single-subject level. At the group level, a whole-brain analysis was conducted to identify the functional connectivity of each ROI, with a cluster-forming threshold of z = 3.1 and correction for multiple comparisons (p < .05, FDR corrected). Connectivity maps were uploaded to Neurovault (Gorgolewski et al., 2015, https://neurovault.org/collections/3509/).
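The core of a seed-based analysis after pre-processing can be sketched as nuisance regression, band-pass filtering to 0.009–0.08 Hz, correlation of the mean seed time series with every voxel, and Fisher's r-to-z transform. This is a simplified stand-in for CONN rather than its implementation: the Butterworth filter, the order of regression and filtering, and the simulated data are all assumptions made for illustration. TR = 3 s matches the resting-state acquisition described above.

```python
import numpy as np
from scipy.signal import butter, filtfilt

TR_S = 3.0  # repetition time of the resting-state scan


def regress_out(ts, confounds):
    """Remove an intercept plus confound regressors from (time x voxel) data."""
    X = np.column_stack([np.ones(len(ts)), confounds])
    beta, *_ = np.linalg.lstsq(X, ts, rcond=None)
    return ts - X @ beta


def bandpass(ts, low_hz=0.009, high_hz=0.08, tr_s=TR_S, order=2):
    """ASSUMPTION: zero-phase Butterworth band-pass along the time axis."""
    b, a = butter(order, [low_hz, high_hz], btype="bandpass", fs=1.0 / tr_s)
    return filtfilt(b, a, ts, axis=0)


def seed_connectivity(ts, seed_mask, confounds):
    """Fisher-z connectivity between the mean seed signal and every voxel."""
    clean = bandpass(regress_out(ts, confounds))
    seed = clean[:, seed_mask].mean(axis=1)
    seed = (seed - seed.mean()) / seed.std()
    vox = (clean - clean.mean(axis=0)) / clean.std(axis=0)
    r = vox.T @ seed / len(seed)  # Pearson r for each voxel
    return np.arctanh(np.clip(r, -0.999999, 0.999999))


# Simulated data: 180 volumes, 40 voxels; voxels 0-4 form the seed and
# voxel 10 shares their slow fluctuation, the rest are noise.
rng = np.random.default_rng(1)
t = np.arange(180) * TR_S
shared = np.sin(2 * np.pi * 0.03 * t)
ts = rng.normal(scale=0.5, size=(180, 40))
ts[:, :5] += shared[:, None]
ts[:, 10] += shared
seed_mask = np.zeros(40, dtype=bool)
seed_mask[:5] = True
z = seed_connectivity(ts, seed_mask, confounds=rng.normal(size=(180, 6)))
```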

2.9. ROI selection for “Hub” and “Spoke” analysis

Additional ROI analyses of heteromodal hub sites and spoke sites were conducted to further characterise the effects of semantic relation and task knowledge. We identified coordinates for hub ROIs from meta-analyses of neuroimaging studies on semantic cognition. We examined (i) left ventral ATL (MNI coordinates −41, −15, −31), from an average of peaks across eight studies that included a semantic > non-semantic contrast (Rice, Hoffman, Binney, & Lambon Ralph, 2018), (ii) left lateral ATL (MNI coordinates −54, 6, −28), from an activation likelihood estimation (ALE) meta-analysis of 97 studies (Rice, Lambon Ralph, & Hoffman, 2015), and (iii) left AG (MNI coordinates −48, −68, 28), from an ALE meta-analysis of 386 studies (Binder, Desai, Graves, & Conant, 2009; Humphreys & Lambon Ralph, 2014). We created these ROIs by placing a binarised spherical mask with a radius of 3 mm centred on the MNI coordinates of each site. For spoke ROIs, masks corresponding to primary auditory, motor and visual cortex were generated from the Juelich histological atlas in standard space in FSLview, with each mask thresholded at 25% probability and binarised using fslmaths.
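A spherical ROI of this kind can be built by converting every voxel index to MNI millimetre coordinates and keeping voxels whose centres lie within the radius. The sketch assumes FSL's 2 mm MNI152 grid (91 × 109 × 91 voxels) with the affine shown; this grid is an assumption, since the resolution of the masks is not stated above.

```python
import numpy as np

# ASSUMED grid: FSL's 2 mm MNI152 template (91 x 109 x 91 voxels).
MNI_2MM_AFFINE = np.array([[-2.0, 0.0, 0.0, 90.0],
                           [0.0, 2.0, 0.0, -126.0],
                           [0.0, 0.0, 2.0, -72.0],
                           [0.0, 0.0, 0.0, 1.0]])
MNI_2MM_SHAPE = (91, 109, 91)

HUB_COORDS_MNI = {
    "left ventral ATL": (-41, -15, -31),
    "left lateral ATL": (-54, 6, -28),
    "left AG": (-48, -68, 28),
}


def sphere_mask(center_mm, radius_mm=3.0, affine=MNI_2MM_AFFINE, shape=MNI_2MM_SHAPE):
    """Binary mask of voxels whose centres lie within radius_mm of center_mm."""
    ijk = np.indices(shape).reshape(3, -1)
    homogeneous = np.vstack([ijk, np.ones((1, ijk.shape[1]))])
    xyz = (affine @ homogeneous)[:3]  # voxel centres in mm
    dist = np.linalg.norm(xyz - np.asarray(center_mm, float)[:, None], axis=0)
    return (dist <= radius_mm).reshape(shape)


masks = {name: sphere_mask(c) for name, c in HUB_COORDS_MNI.items()}
for name, mask in masks.items():
    print(name, int(mask.sum()), "voxels")
```

On a 2 mm grid a 3 mm-radius sphere centred on a voxel contains only 19 voxels, so these hub ROIs are small. The masks could be written to disk with nibabel's `Nifti1Image` using the same affine.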

2.10. Data and code availability statement

Neuroimaging data at the group level are openly available in Neurovault at https://neurovault.org/collections/3509/. The semantic stimuli and the task script are available in the Open Science Framework at https://osf.io/stmv5/. The conditions of our ethical approval do not permit public archiving of the raw data because participants did not provide sufficient consent. Researchers who wish to access the data and analysis scripts should contact the Research Ethics and Governance Committee of the York Neuroimaging Centre, University of York, or the corresponding authors, Meichao Zhang or Beth Jefferies. Data will be released to researchers when this is possible under the terms of the GDPR (General Data Protection Regulation).

3. Results

3.1. Results outline

First, we describe the behavioural results. Second, we report univariate analyses which identified brain regions showing effects of (i) Word Position, i.e., differences in the response to the first and second words of each pair, (ii) Task Knowledge, i.e., differences in semantic retrieval when the relationship was or was not known in advance, and (iii) any interactions between these factors. Given that the focus of this study was on the effect of prior task knowledge, and not on understanding the neural basis of taxonomic and thematic judgements, we present main effects of Semantic Relation in the Supplementary Materials (see Supplementary Analysis 5; there were no significant interaction effects involving this factor). To anticipate our key results, we found that a region in LIFG showed a stronger response for trials with a known goal. In addition, a region of visual cortex showed an interaction between task knowledge and word position, consistent with the modulation of spoke-related activity to allow proactive control of semantic retrieval. We then conducted resting-state functional connectivity analyses in a separate dataset to examine the intrinsic connectivity of these regions and to characterise their overlap with established brain networks. Third, we compared effects of Task Knowledge (i.e., top-down control) with the parametric effect of semantic distance between the words in each pair, using word2vec scores to characterise this well-established semantic control manipulation, in line with previous studies (e.g., Badre et al., 2005; Teige et al., 2019; Wagner et al., 2001). This analysis tested whether a shared region of LIFG supported semantic control both when retrieval could be controlled in a top-down fashion and when the inputs themselves determined the control demands. Next, we performed multivariate pattern analysis of the task-based fMRI data to establish how goal information is maintained within the semantic control network. 
We tested the hypothesis that the semantic control site in LIFG would be able to distinguish between different kinds of semantic relation following the task instructions, and prior to these relations being presented. Finally, we employed an ROI approach to further examine effects of control demands in both “Hub” and “Spoke” regions, to test hypotheses about which aspects of conceptual knowledge might be modulated by control processes.

3.2. Behavioural results

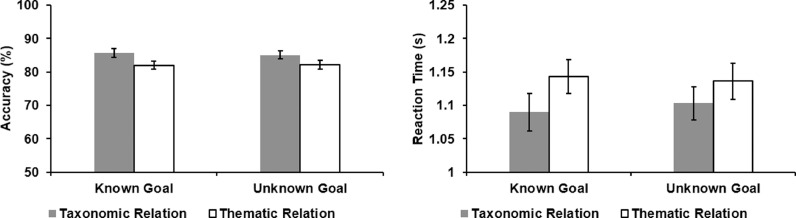

We obtained behavioural data from a total of 54 participants (31 tested in the scanner and 23 tested in the lab; data from the scanner sample are presented separately in Supplementary Analysis 2). Fig. 2 shows task accuracy (left panel) and reaction time (RT; right panel). Trials with incorrect responses (16.3%) were excluded from the RT analysis. We performed repeated-measures ANOVAs on both RT and accuracy, examining the effects of Task Knowledge (Known Goal vs. Unknown Goal) and Semantic Relation (Taxonomic relation vs. Thematic relation). For both accuracy and RT, there was a main effect of Semantic Relation (Accuracy: F(1,53) = 7.19, p = .01, ηp2 = .12; RT: F(1,53) = 15.82, p < .001, ηp2 = .23), as the thematic trials were more difficult than the taxonomic trials. There was no main effect of Task Knowledge (Accuracy: F(1,53) = .05, p = .82, ηp2 = .001; RT: F(1,53) = .07, p = .79, ηp2 = .001), and no interaction (Accuracy: F(1,53) = .27, p = .61, ηp2 = .005; RT: F(1,53) = 1.00, p = .32, ηp2 = .02).
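The effect sizes can be cross-checked from the F statistics: for an effect in a repeated-measures ANOVA, partial eta squared equals F·df_effect / (F·df_effect + df_error). The short sketch below applies this identity to some of the reported values; it is a consistency check, not the analysis software used in the study.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F statistic and its degrees of freedom."""
    return f_value * df_effect / (f_value * df_effect + df_error)


# F values reported above, all with df = (1, 53).
reported = {
    "Semantic Relation, accuracy": 7.19,
    "Semantic Relation, RT": 15.82,
    "Interaction, RT": 1.00,
}
for label, f in reported.items():
    print(label, round(partial_eta_squared(f, 1, 53), 2))
```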

Fig. 2.

Behavioural results. Accuracy (percentage correct, left panel) and reaction times (in seconds, right panel) for the target words in each semantic condition (Known Goal Taxonomic Relation, Unknown Goal Taxonomic Relation, Known Goal Thematic Relation, and Unknown Goal Thematic Relation). Error bars represent the standard error.

We also performed paired-samples t-tests (Bonferroni-corrected for four comparisons) comparing each semantic condition with the letter string baseline (Mean RT = 1.16 s; Mean Accuracy = 92.0%). For RT, there were no significant differences (Known Taxonomic vs. Letter Baseline: t(53) = −2.28, p = .10; Unknown Taxonomic vs. Letter Baseline: t(53) = −2.06, p = .18; Known Thematic vs. Letter Baseline: t(53) < 1; Unknown Thematic vs. Letter Baseline: t(53) < 1). Accuracy on the letter string task was higher than in the semantic conditions (Known Taxonomic vs. Letter Baseline: t(53) = −3.09, p = .012; Unknown Taxonomic vs. Letter Baseline: t(53) = −4.29, p < .004; Known Thematic vs. Letter Baseline: t(53) = −6.06, p < .004; Unknown Thematic vs. Letter Baseline: t(53) = −5.87, p < .004).
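The Bonferroni adjustment multiplies each two-tailed p-value by the number of comparisons (four) and caps the result at 1. A minimal SciPy sketch, applied to two of the reported t statistics, is shown below; small discrepancies in the last digit can arise from rounding of the reported t values.

```python
from scipy import stats


def bonferroni_p_from_t(t_value, df, n_comparisons=4):
    """Two-tailed p-value for a t statistic, Bonferroni-adjusted and capped at 1."""
    p = 2 * stats.t.sf(abs(t_value), df)
    return min(1.0, p * n_comparisons)


for t_value in (-2.06, -3.09):
    print(t_value, round(bonferroni_p_from_t(t_value, df=53), 3))
```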

3.3. Univariate analysis

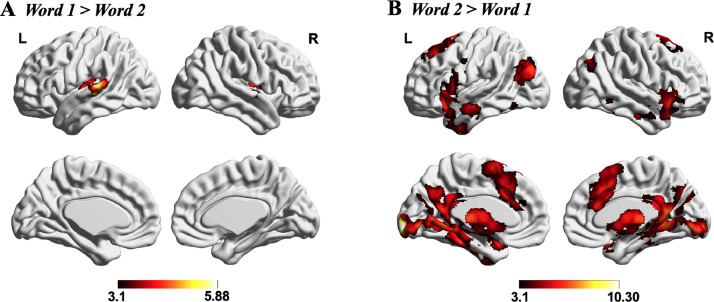

3.3.1. Main effects in task-based fMRI

Effects of word position: First, we examined the brain regions involved in different stages of the task in a whole-brain analysis. This analysis characterised the baseline effects of task structure prior to investigating how task knowledge might interact with these effects. We compared each experimental condition with the letter string baseline at each word position (providing a basic level of control for visual input and button presses), before performing contrasts between different word positions. Brain regions showing a stronger response to the first than to the second word included left superior temporal gyrus and right central opercular cortex (see Fig. 3A). In contrast, left inferior frontal gyrus, bilateral lateral occipital cortex, bilateral inferior/middle temporal gyrus, paracingulate/cingulate gyrus, parahippocampal gyrus and primary visual areas showed a stronger response to the second word (i.e., when the semantic decision was made; see Fig. 3B). Similar effects were observed when Word 1 and Word 2 were contrasted directly, without subtraction of the letter string baseline (see Supplementary Fig. S4).

Fig. 3.

Effects of word position. (A) Word 1 (experimental conditions - letter string baseline) > Word 2 (experimental conditions - letter string baseline). (B) Word 2 (experimental conditions - letter string baseline) > Word 1 (experimental conditions - letter string baseline). Both maps were cluster-corrected with a voxel inclusion threshold of z > 3.1 and family-wise error rate using random field theory set at p < .05. L = Left hemisphere; R = Right hemisphere.

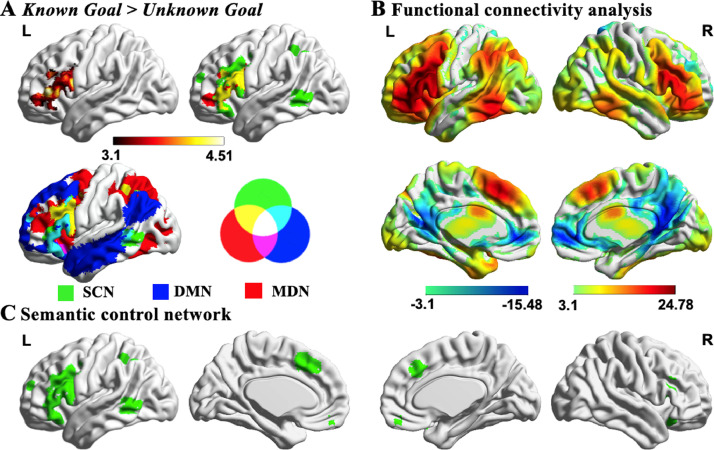

Effects of task knowledge: The next stage of our analysis sought to capture activation relating to the effect of task knowledge, regardless of semantic relation, by comparing all Known Goal trials with all Unknown Goal trials. Activation of LIFG was stronger when the goal was known than when it was not known (see Fig. 4A). This cluster largely fell within the semantic control network defined by Noonan et al. (2013) in a formal meta-analysis of 53 studies that manipulated executive semantic demands (in green, with overlap in yellow, at the top right-hand corner of Fig. 4A). This semantic control network partially overlaps with the default mode network (DMN; blue regions at the bottom left-hand corner of Fig. 4A), as defined by Yeo et al. (2011) in a 7-network parcellation of whole-brain functional connectivity, particularly in the anterior and ventral parts of LIFG (cyan regions). There is also partial overlap with the multiple demand network (MDN; in red), as defined by the response to difficulty across a diverse set of demanding cognitive tasks (Fedorenko et al., 2013), particularly in the posterior and dorsal parts of LIFG (yellow regions at the bottom left-hand corner of Fig. 4A). The semantic control network is therefore intermediate between the DMN and MDN (Davey et al., 2016). Of the voxels within this LIFG cluster, 66.85% fell within the semantic control network, 25.7% within the DMN, and 25.0% within the MDN (some voxels fell in more than one of these networks). To understand the intrinsic connectivity of this region, we seeded it in an independent resting-state fMRI dataset, revealing strong connectivity with posterior temporal cortex, intraparietal sulcus and anterior cingulate cortex/pre-supplementary motor area (see Fig. 4B), including the key regions of the semantic control network (see Fig. 4C; Chiou et al., 2018; Noonan et al., 2013; Whitney et al., 2010). 
This analysis confirmed that the LIFG cluster responding to task knowledge forms a network at rest with other brain areas associated with semantic control.
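The overlap percentages are computed independently for each network mask, which is why they can sum to more than 100%. A minimal sketch with hypothetical toy masks is shown below; real masks would be 3-D images resampled to the same grid as the cluster.

```python
import numpy as np


def overlap_percentages(cluster, networks):
    """Percentage of cluster voxels falling inside each network mask."""
    cluster = np.asarray(cluster, dtype=bool)
    n_cluster = cluster.sum()
    return {name: 100.0 * np.logical_and(cluster, np.asarray(mask, dtype=bool)).sum() / n_cluster
            for name, mask in networks.items()}


# HYPOTHETICAL 1-D toy masks over 8 voxels.
cluster = np.array([1, 1, 1, 1, 0, 0, 0, 0], dtype=bool)
networks = {
    "SCN": np.array([1, 1, 1, 0, 0, 1, 0, 0], dtype=bool),
    "DMN": np.array([1, 0, 0, 0, 1, 1, 0, 0], dtype=bool),
    "MDN": np.array([0, 0, 1, 0, 0, 0, 1, 0], dtype=bool),
}
percentages = overlap_percentages(cluster, networks)
print(percentages)
```

Here one cluster voxel lies in both SCN and DMN and another in both SCN and MDN, so the three percentages (75, 25, 25) sum to 125%.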

Fig. 4.

Effects of task knowledge. A) Significant activation in left inferior frontal gyrus defined using the contrast Known Goal > Unknown Goal. This cluster overlapped with the semantic control network (SCN; in green) from Noonan et al. (2013; overlap in yellow). This semantic control network is intermediate between default mode network (DMN; in blue with overlap in cyan with SCN) and multiple demand network (MDN; in red with overlap in yellow with SCN). The network maps are fully saturated to emphasize the regions of overlap. B-C) The functional connectivity of this region with the rest of the brain revealed a large-scale brain network commonly associated with regions of the semantic control network. All maps are thresholded at z > 3.1 (p < .05). L = Left hemisphere; R = Right hemisphere.

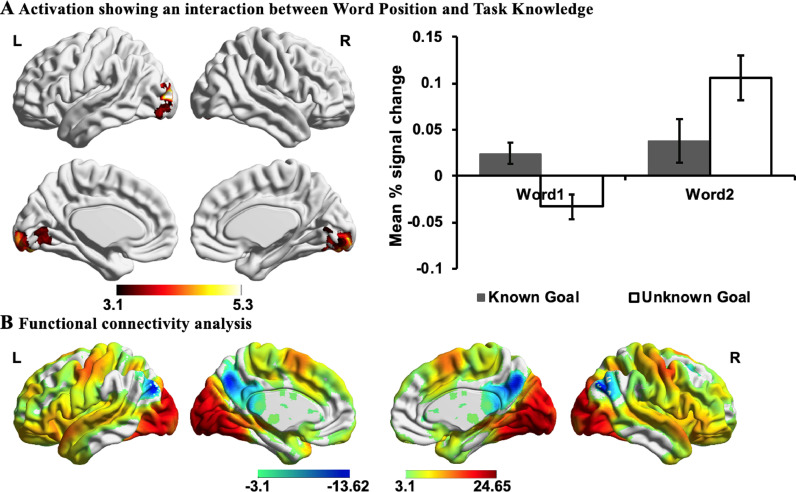

3.3.2. Interaction between task knowledge and word position

Next, we examined the interaction between task knowledge (Known Goal vs. Unknown Goal) and word position (Word 1 vs. Word 2). An interaction effect was observed in visual regions, including lingual gyrus, occipital pole, occipital fusiform gyrus, and lateral occipital cortex. To understand the nature of this effect, we extracted parameter estimates for each condition (see Fig. 5A). When the goal for semantic retrieval was unknown, this region showed greater deactivation to the first word and stronger activation to the second word than when the goal was known; in other words, the responsiveness of visual cortex to written words was modulated by goal information. These findings are consistent with the gating of visual processing by task knowledge, such that the engagement of visual ‘spoke’ regions is suppressed when the required pattern of retrieval is not yet known, preventing the unfolding of a semantic response that might not suit the pattern of retrieval required by the task. This visual site was used as a seed region in an analysis of intrinsic connectivity in an independent dataset, revealing high levels of connectivity with other visual regions, moderate connectivity with motor and auditory sites, and low levels of connectivity with angular gyrus and posterior cingulate cortex (see Fig. 5B).
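The direction of this interaction can be summarised as a difference of differences on the ROI estimates. The sketch below uses hypothetical values chosen only to mirror the qualitative pattern described (greater deactivation to Word 1 and stronger activation to Word 2 when the goal is unknown); they are not the measured data. The percent-signal-change helper follows one common convention (scaling a parameter estimate by the height of its regressor and by the mean signal), which is an assumption here rather than the exact procedure used.

```python
def percent_signal_change(beta, regressor_height, mean_signal):
    """ASSUMED convention: 100 * beta * regressor height / mean signal."""
    return 100.0 * beta * regressor_height / mean_signal


def interaction_index(psc):
    """(Unknown - Known at Word 2) - (Unknown - Known at Word 1)."""
    word2 = psc[("Unknown", "Word 2")] - psc[("Known", "Word 2")]
    word1 = psc[("Unknown", "Word 1")] - psc[("Known", "Word 1")]
    return word2 - word1


# HYPOTHETICAL ROI values (% signal change), not the study's data.
psc = {
    ("Known", "Word 1"): -0.10,
    ("Unknown", "Word 1"): -0.30,
    ("Known", "Word 2"): 0.40,
    ("Unknown", "Word 2"): 0.60,
}
print(round(interaction_index(psc), 2))  # positive: Unknown goal amplifies the Word 1/Word 2 difference
```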

Fig. 5.

Interaction effects. A) Activation showing an interaction between Word Position (Word 1 vs. Word 2) and Task Knowledge (Known Goal vs. Unknown Goal). The bar charts plot the mean percentage (%) change in the visual cluster for each condition relative to implicit baseline (i.e., the first fixation interval), revealing differences in activity between Known Goal and Unknown Goal at the first and the second words. Error bars depict the standard error of the mean. B) The functional connectivity of this region with the rest of the brain revealed a large-scale brain network commonly associated with the processing of visual input. All maps are cluster-corrected with a voxel inclusion threshold of z > 3.1 and family-wise error rate using random field theory set at p < .05. L = Left hemisphere; R = Right hemisphere.

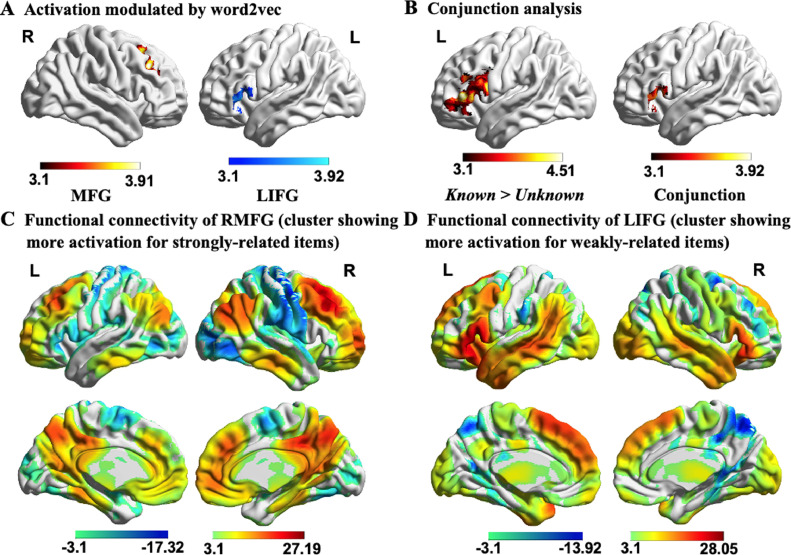

3.3.3. Effects of the strength of semantic relations

In order to establish whether the LIFG cluster that responded to Task Knowledge also responded to a commonly used manipulation of semantic control demands, we examined, in an exploratory analysis, the parametric effect of the strength of the conceptual relationship between the two words across thematic and taxonomic trials3. The whole-brain parametric modelling revealed that word2vec, a measure of the strength of the semantic connection between words, modulated activation in two regions: right middle frontal gyrus (MFG) responded more strongly when the words were easier to link (i.e., higher word2vec scores), whereas anterior LIFG responded more strongly when the words were harder to link (i.e., lower word2vec scores; see Fig. 6A). We computed a formal conjunction of the word2vec effect associated with harder trials and the Known Goal > Unknown Goal contrast, and established that these two independently derived maps overlapped in anterior LIFG (see Fig. 6B). These results indicate that anterior LIFG is involved both in (i) goal-directed semantic retrieval (top-down semantic control) and in (ii) the retrieval of weak semantic links between distantly related word pairs, even when there are no specific task instructions and the semantic information itself must therefore establish which aspects of meaning should be focussed on (bottom-up semantic control). Given that word2vec was not matched between taxonomic and thematic trials, we also extracted the parameter estimates from the LIFG cluster modulated by word2vec to examine whether the effects of this variable were similar across these conditions. A paired-samples t-test revealed no significant difference between taxonomic (Mean ± SD = 120.94 ± 304.34) and thematic trials (Mean ± SD = 141.88 ± 264.46; t(30) = −.30, p = .77).
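A formal conjunction of two statistical maps can be sketched as a voxelwise minimum statistic: a voxel survives only if both maps exceed the threshold. In the sketch, the "harder trials" word2vec map is represented by the negated parametric z-map, since lower word2vec scores drove the anterior LIFG response. This is a simplified voxelwise illustration with hypothetical z-values; FSL's easythresh_conj additionally applies cluster-based thresholding, which is omitted here.

```python
import numpy as np


def min_statistic_conjunction(z_a, z_b, z_thresh=3.1):
    """Voxelwise minimum-statistic conjunction (no cluster correction)."""
    z_min = np.minimum(np.asarray(z_a, dtype=float), np.asarray(z_b, dtype=float))
    return z_min, z_min > z_thresh


# HYPOTHETICAL z-values for four voxels.
z_known_vs_unknown = np.array([4.2, 3.5, 1.0, 5.0])
z_word2vec = np.array([-3.8, -2.0, -4.0, 1.5])  # parametric effect of word2vec
z_harder = -z_word2vec                          # lower word2vec = harder trials
z_conj, in_conjunction = min_statistic_conjunction(z_known_vs_unknown, z_harder)
print(z_conj, in_conjunction)
```

Only the first voxel exceeds z = 3.1 in both maps, so it alone survives the conjunction.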

Fig. 6.

Brain activation modulated by semantic distance between words (measured by word2vec). A) Right MFG in red showed stronger responses to the word pairs with higher word2vec values, while anterior LIFG in blue showed greater activation for the word pairs with lower word2vec values. B) The anterior LIFG site which is sensitive to word2vec fully overlapped with the LIFG cluster identified in the contrast Known Goal > Unknown Goal, with this conjunction identified using FSL's ‘easythresh_conj’ tool. C-D) The functional connectivity of right MFG and anterior LIFG (identified from the parametric effects of word2vec) with the rest of the brain. These analyses revealed large-scale brain networks associated with the default mode network and semantic control network, respectively. All maps are cluster corrected at a threshold of z > 3.1 (p < .05). LIFG = Left inferior frontal gyrus; RMFG = Right middle frontal gyrus; L = Left hemisphere; R = Right hemisphere.

To investigate the intrinsic connectivity of these regions at rest, we seeded them in an independent dataset. Right MFG showed connectivity to default mode network regions that typically show a stronger response during more automatic memory retrieval (Philippi, Tranel, Duff, & Rudrauf, 2014; Spreng, Mar, & Kim, 2009), including medial and inferolateral temporal regions, middle frontal gyrus, medial prefrontal cortex, angular gyrus and posterior cingulate cortex (red regions in Fig. 6C). In contrast, anterior LIFG showed stronger intrinsic connectivity with regions in the semantic control network, implicated in shaping semantic retrieval when dominant aspects of meaning are not suited to the current goal or context (Chiou et al., 2018; Noonan et al., 2013; Whitney et al., 2010); these sites included inferior frontal gyrus, posterior middle temporal gyrus, anterior cingulate cortex, and dorsal angular gyrus (red regions in Fig. 6D), alongside anterior temporal lobe and primary visual areas.

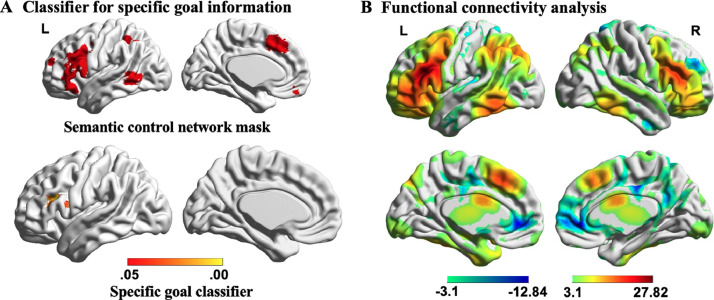

3.4. Multivariate pattern analysis

Next, we investigated whether the pattern of activation across voxels within the semantic control network (Noonan et al., 2013) carried information about the type of semantic relation to be retrieved. Specifically, we examined whether regions within this network could discriminate between taxonomic and thematic trials when the goal for retrieval was known. We predicted that brain regions supporting proactive aspects of semantic control should be able to distinguish the type of semantic relation being probed even during presentation of the first word, before a specific taxonomic or thematic link was presented⁴. We therefore focussed this analysis on the neural response to the first word in each pair. The classification analysis revealed a cluster within LIFG that could decode taxonomic versus thematic relations when the goal was known (decoding accuracy: Mean ± SD = 60 ± 10%, FWE-corrected p < .05; see Fig. 7A). An additional MVPA examining the classification of taxonomic and thematic relations when the goal was not known in advance found no significant clusters at FWE-corrected p < .05. This provides a useful control analysis, since no classification effects are expected when there is no known goal to maintain. The LIFG site that classified the current goal showed high intrinsic connectivity with other areas of the semantic control network implicated in shaping semantic retrieval (Chiou et al., 2018; Noonan et al., 2013; Whitney et al., 2010), including posterior middle temporal gyrus, dorsal angular gyrus, and anterior cingulate/pre-supplementary motor area (red regions in Fig. 7B).
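The classifier and cross-validation scheme are not specified in this section, so the following is a stand-in, not necessarily the method used here: a correlation-based nearest-centroid classifier with leave-one-run-out cross-validation, applied to first-word response patterns. In a searchlight, a function like this would be run on the voxels in a small sphere around each voxel of the mask, with the resulting accuracy (chance = 50% for two classes) assigned to the centre voxel. The function name and simulated patterns are hypothetical.

```python
import numpy as np

def loro_centroid_accuracy(patterns, labels, runs):
    """Leave-one-run-out decoding accuracy with a correlation-based nearest-centroid classifier.

    patterns: (n_trials, n_voxels) response patterns to the first word.
    labels: trial labels, e.g. 0 = taxonomic, 1 = thematic.
    runs: run index for each trial; each run is held out once as the test set.
    """
    patterns = np.asarray(patterns, dtype=float)
    labels = np.asarray(labels)
    runs = np.asarray(runs)
    classes = np.unique(labels)
    correct = 0
    for test_run in np.unique(runs):
        train, test = runs != test_run, runs == test_run
        # Class centroids are estimated from training runs only
        centroids = [patterns[train & (labels == c)].mean(axis=0) for c in classes]
        for pattern, label in zip(patterns[test], labels[test]):
            # Assign the class whose centroid correlates most strongly with the test pattern
            r = [np.corrcoef(pattern, centroid)[0, 1] for centroid in centroids]
            correct += int(classes[int(np.argmax(r))] == label)
    return correct / len(labels)

# Simulated data (hypothetical): 4 runs, 10 trials per class per run, 50 voxels.
rng = np.random.default_rng(1)
n_runs, n_per_class, n_vox = 4, 10, 50
signal = rng.standard_normal((2, n_vox))  # class-specific patterns
patterns, labels, runs = [], [], []
for run in range(n_runs):
    for label in (0, 1):
        for _ in range(n_per_class):
            patterns.append(0.5 * signal[label] + rng.standard_normal(n_vox))
            labels.append(label)
            runs.append(run)
accuracy = loro_centroid_accuracy(patterns, labels, runs)
```

Holding out whole runs keeps training and test trials from sharing run-specific noise, which would otherwise inflate accuracy.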

Fig. 7.

A) The semantic control network, used as a mask for the classification analysis, is shown in the upper left panel. Results of the group-level searchlight analysis for goal classification (Known Taxonomic vs. Known Thematic at the first word; FWE-corrected p < .05) are shown in the bottom left panel. The colour bar indicates p value. B) Functional connectivity of this region with the rest of the brain revealed a large-scale network resembling the semantic control network. L = Left hemisphere; R = Right hemisphere.

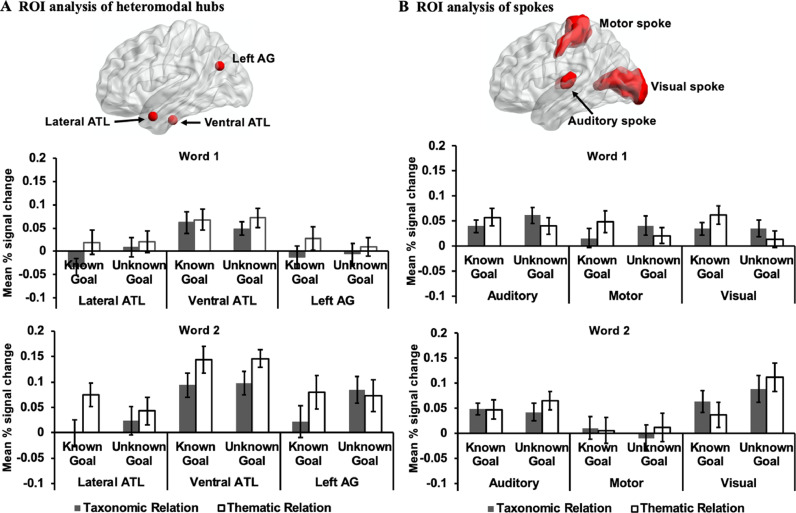

3.5. Additional analysis to characterise effects of control demands in “Hub” and “Spoke” regions

The findings so far suggest that an interaction between LIFG and visual cortex may underpin proactive semantic control. In a final set of analyses, we used an ROI approach to check for further effects of theoretical significance and to guard against Type II errors. We examined the clusters that emerged from the main analysis for additional effects of interest, and we examined spherical ROIs defined from meta-analyses (for hub sites) and atlases (for spoke sites) for effects that may have been missed in the whole-brain analyses.
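Spherical ROIs of the kind used here can be built directly in voxel space. In this minimal sketch, the radius (6 mm), voxel size (2 mm isotropic), grid size and centre are hypothetical values, not the ROI parameters of this study, and the mapping from MNI coordinates to voxel indices through the image affine is omitted. The function names are also hypothetical.

```python
import numpy as np

def sphere_mask(shape, centre_vox, radius_mm, voxel_size_mm=2.0):
    """Boolean mask of voxels whose centres lie within radius_mm of centre_vox.

    Assumes isotropic voxels; centre_vox is given in voxel indices (i, j, k).
    """
    grid = np.indices(shape)  # shape (3, X, Y, Z): the i, j, k index of every voxel
    centre = np.asarray(centre_vox, dtype=float).reshape(3, 1, 1, 1)
    dist_mm = np.sqrt(((grid - centre) ** 2).sum(axis=0)) * voxel_size_mm
    return dist_mm <= radius_mm

def roi_mean(volume, mask):
    """Mean of a 3D volume (e.g. percent signal change for one condition) within the ROI."""
    return float(np.asarray(volume)[mask].mean())

# Hypothetical example: a 6 mm sphere (3 voxels at 2 mm) in a 20 x 20 x 20 grid.
mask = sphere_mask((20, 20, 20), centre_vox=(10, 10, 10), radius_mm=6.0)
```

Averaging each condition's map within the same mask gives one value per participant and condition, which is the input to the repeated-measures ANOVAs reported below.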

First, we report results for several putative heteromodal hub sites (i.e., lateral ATL, ventral ATL, and left AG; see Fig. 8A) to establish whether brain regions linked to heteromodal conceptual representation show effects of control demands similar to those seen in visual cortex, or whether the effects of top-down control are restricted to ‘spoke’ regions in this dataset. Next, we examined whether the visual cortex cluster that showed an interaction between Task Knowledge and Word Position in the whole-brain analysis was also influenced by bottom-up control demands (word2vec), to establish whether this cluster was specifically sensitive to prior knowledge of goals for semantic retrieval or supported semantic control processes more generally. Finally, we examined additional spoke sites (primary visual, auditory and motor cortex; see Fig. 8B) for evidence of further interactions between Task Knowledge and Word Position beyond visual cortex. Together, these analyses tested whether the effects of top-down control are restricted to visual regions in our data.

Fig. 8.

A) ROI analysis of heteromodal hubs and B) spokes. The bar charts plot the mean percentage signal change extracted from these ROIs for each experimental condition relative to the implicit baseline (i.e., the first fixation interval), at the first and second words. Error bars depict the standard error of the mean. ATL = anterior temporal lobes; AG = angular gyrus.

3.5.1. Heteromodal hub sites

We examined spherical ROIs centred on heteromodal hub sites defined from the literature. A 2 (Word 1 vs. Word 2) by 2 (Known Goal vs. Unknown Goal) by 2 (Taxonomic vs. Thematic) repeated-measures ANOVA for each hub site revealed a significant main effect of Semantic Relation in both ventral ATL (F(1,30) = 12.46, p = .001, ηp² = .29) and lateral ATL (F(1,30) = 7.09, p = .012, ηp² = .19), with thematic trials eliciting greater activation than taxonomic trials (see also Supplementary Analysis 5 in Supplementary Materials). This effect was not significant in left AG (F(1,30) = 2.60, p = .12, ηp² = .08). The interaction between Task Knowledge and Word Position was not significant at any hub site (ventral ATL: F(1,30) = .08, p = .78, ηp² = .003; lateral ATL: F(1,30) = .37, p = .55, ηp² = .01; left AG: F(1,30) = 1.45, p = .24, ηp² = .05). However, there was a significant interaction between Semantic Relation and Task Knowledge in left AG, F(1,30) = 5.92, p = .021, ηp² = .17. Tests of simple effects revealed that this region responded more strongly to thematic than to taxonomic trials when the goal was known, F(1,30) = 6.53, p = .016, ηp² = .18, whereas activation was comparable between these conditions when the goal was unknown, F(1,30) = .013, p = .91, ηp² < .001. These results are presented in Fig. 8A.
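The partial eta squared values reported above can be recovered from each F statistic and its degrees of freedom, since ηp² = SS_effect / (SS_effect + SS_error) and F = (SS_effect / df_effect) / (SS_error / df_error), which together give ηp² = F·df_effect / (F·df_effect + df_error). The short check below applies this identity to a few of the effects reported in this section; the function name is ours, and agreement is only expected to the two decimal places reported, because the F values themselves are rounded.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F statistic: F*df1 / (F*df1 + df2)."""
    return f_value * df_effect / (f_value * df_effect + df_error)

# (F, df_effect, df_error, reported partial eta squared), taken from the text above
reported = {
    "ventral ATL, Semantic Relation": (12.46, 1, 30, 0.29),
    "lateral ATL, Semantic Relation": (7.09, 1, 30, 0.19),
    "left AG, Semantic Relation": (2.60, 1, 30, 0.08),
    "left AG, simple effect (known goal)": (6.53, 1, 30, 0.18),
}
for effect, (f, df1, df2, eta) in reported.items():
    print(f"{effect}: recomputed {partial_eta_squared(f, df1, df2):.2f}, reported {eta:.2f}")
```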

3.5.2. Unimodal spoke sites

The visual cortex cluster defined by the interaction between Word Position and Task Knowledge showed no effect of word2vec in a one-sample t-test (mean signal change: Mean ± SD = .02 ± .13, t(30) < 1), indicating that this visual area is not strongly modulated by bottom-up control demands.
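The bound t(30) < 1 follows directly from the reported summary statistics, because a one-sample t statistic against zero is mean / (SD / √n). The sketch below assumes n = 31 participants, inferred from the reported degrees of freedom (df = n − 1 = 30); since the mean and SD are rounded, the recomputed value is approximate.

```python
import math

def one_sample_t(mean, sd, n):
    """One-sample t statistic against zero from summary statistics: mean / (sd / sqrt(n))."""
    return mean / (sd / math.sqrt(n))

# n = 31 is inferred from t(30); mean and SD are the rounded values reported above.
t_value = one_sample_t(0.02, 0.13, 31)
print(f"t(30) = {t_value:.2f}")  # t(30) = 0.86
```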

For the spoke regions, a series of 2 (Word 1 vs. Word 2) by 2 (Known Goal vs. Unknown Goal) by 2 (Taxonomic vs. Thematic) repeated-measures ANOVAs revealed that the interaction between Word Position and Task Knowledge was significant only in visual cortex, F(1,30) = 11.93, p = .002, ηp² = .29. This interaction was not significant in auditory cortex, F(1,30) = .04, p = .84, ηp² = .001, or motor cortex, F(1,30) = .10, p = .76, ηp² = .003. The interaction was also significantly larger in visual cortex than in the other two spokes, as indicated by a significant three-way interaction between Spoke, Word Position, and Task Knowledge, F(2,60) = 9.17, p < .001, ηp² = .23. The results are summarised in Fig. 8B.
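The logic of these spoke tests can be sketched with per-participant contrast scores. This is an illustration, not the analysis code used here, and it rests on stated assumptions. First, the data are averaged over Semantic Relation, which leaves effects not involving that factor unchanged in a balanced design. Second, a one-degree-of-freedom within-subject effect has F equal to the squared one-sample t of its contrast scores. Third, the Spoke × Word Position × Task Knowledge interaction equals a one-way repeated-measures ANOVA on those contrast scores across the three spokes, before any sphericity correction. With 31 participants, this gives the F(2,60) degrees of freedom reported above. The function names and simulated effect sizes are hypothetical.

```python
import numpy as np

def interaction_scores(data):
    """Per-participant Word Position x Task Knowledge interaction contrast.

    data: array (n_subjects, 2, 2) indexed [subject, word_position, task_knowledge], with
    word_position 0 = Word 1, 1 = Word 2 and task_knowledge 0 = Known, 1 = Unknown,
    assumed already averaged over Semantic Relation.
    """
    data = np.asarray(data, dtype=float)
    return (data[:, 0, 0] - data[:, 1, 0]) - (data[:, 0, 1] - data[:, 1, 1])

def one_df_f(scores):
    """F(1, n-1) for a one-degree-of-freedom within-subject effect: the squared one-sample t."""
    scores = np.asarray(scores, dtype=float)
    n = len(scores)
    t = scores.mean() / (scores.std(ddof=1) / np.sqrt(n))
    return t ** 2

def rm_anova_oneway(scores):
    """One-way repeated-measures ANOVA on an (n_subjects, k_conditions) array.

    Returns (F, df_effect, df_error); no sphericity correction is applied.
    """
    scores = np.asarray(scores, dtype=float)
    n, k = scores.shape
    grand = scores.mean()
    ss_cond = n * ((scores.mean(axis=0) - grand) ** 2).sum()
    ss_subj = k * ((scores.mean(axis=1) - grand) ** 2).sum()
    ss_error = ((scores - grand) ** 2).sum() - ss_cond - ss_subj
    df_cond, df_error = k - 1, (k - 1) * (n - 1)
    return (ss_cond / df_cond) / (ss_error / df_error), df_cond, df_error

# Simulated data (hypothetical): only the visual spoke carries the interaction.
rng = np.random.default_rng(2)
n_subjects = 31
spokes = {}
for name, effect in [("visual", 0.3), ("auditory", 0.0), ("motor", 0.0)]:
    data = rng.normal(0.0, 0.2, size=(n_subjects, 2, 2))
    data[:, 0, 1] -= effect  # Unknown Goal, Word 1: deactivation
    data[:, 1, 1] += effect  # Unknown Goal, Word 2: activation
    spokes[name] = interaction_scores(data)

for name, scores in spokes.items():
    print(f"{name}: F(1,{n_subjects - 1}) = {one_df_f(scores):.2f}")

# Spoke x Word Position x Task Knowledge = one-way RM ANOVA on the contrast scores
f_three_way, df1, df2 = rm_anova_oneway(np.column_stack(list(spokes.values())))
print(f"three-way interaction: F({df1},{df2}) = {f_three_way:.2f}")
```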

Taken together, these results suggest that heteromodal hub regions, especially in ATL, are sensitive to the type of semantic relation, while semantic goal information primarily modulates activation in visual cortex.

4. Discussion

We examined how control is applied to conceptual representations within the hub and spoke framework. The Controlled Semantic Cognition account (Lambon Ralph et al., 2017) proposes that concepts emerge from the interaction of a heteromodal hub in ATL with modality-specific ‘spoke’ regions such as visual cortex. Consequently, semantic control processes can be thought of as biasing activation in the hub and/or spoke regions. A key semantic control site, LIFG, showed greater activation when participants knew in advance what aspect of semantic knowledge they should retrieve (top-down control), and also when they identified links between weakly related words (bottom-up control in the absence of a goal). MVPA revealed that LIFG could classify goal information related to different semantic relationships (i.e., taxonomic and thematic relations) during presentation of the first word in the pair, and therefore before the association indicated by the words could be retrieved. Heteromodal hub sites linked to memory representation were also sensitive to the type of semantic relationship but did not show effects of control demands. In addition, goal information modulated activation in visual cortex in a way that suggested task knowledge could gate the recruitment of visual ‘spoke’ regions. When the goal was unknown, and extensive semantic retrieval for the first item was therefore of little benefit to task performance (and potentially a hindrance), visual cortex deactivated to the first word and responded strongly to the second word. When the goal was known in advance, visual cortex showed an increased response to the first word, while the response to the second word was greatly reduced. These findings are consistent with the view that LIFG proactively controls semantic retrieval for written words based on current goals, and that the consequences of this top-down control can be seen in visual regions.
In this paradigm, semantic control processes appear to be applied to spoke rather than hub representations. A recent computational model of top-down semantic control made this prediction: efficient semantic cognition was achieved when control processes were applied to peripheral layers (corresponding to unimodal spoke regions) rather than deep layers (corresponding to heteromodal semantic regions) of a semantic network (Jackson et al., 2019).

Our results confirmed that LIFG supports both goal-directed semantic retrieval (i.e., top-down control) and stimulus-driven controlled retrieval (i.e., bottom-up control), since we found overlapping responses for the proactive application of task knowledge and for the retrieval of weak semantic relationships (as assessed by word2vec). LIFG is widely implicated in the controlled retrieval of semantic knowledge across paradigms, including bottom-up manipulations of control demands, such as weak vs. strong associations and trials with strong vs. weak distractors (Badre et al., 2005; Krieger-Redwood, Teige, Davey, Hymers, & Jefferies, 2015; Noonan et al., 2013; Thompson-Schill et al., 1997; Wagner et al., 2001; Whitney et al., 2010), and top-down manipulations that instruct participants to focus on specific features, such as colour, shape or size, as opposed to global semantic relationships (Badre et al., 2005; Chiou et al., 2018; Davey et al., 2016). However, the feature and global matching tasks used in previous studies required retrieval to be focussed on different types of information, so the opportunity for proactive control was confounded with the type of retrieval. We separated the capacity for proactive control from the type of semantic retrieval required by the task by informing participants about the nature of the semantic relationship on half of the trials. These results show that LIFG shapes semantic retrieval in a top-down way, even when there is no difference in difficulty between trials that can be controlled and trials that lack prior task knowledge.

LIFG is not functionally homogeneous: different subdivisions within LIFG are thought to have dissociable functions in supporting controlled semantic retrieval. Specifically, anterior-to-mid LIFG has been implicated in “controlled retrieval”, needed when the subset of knowledge required by a task cannot be brought to the fore through automatic retrieval processes, whereas posterior LIFG is thought to support selection by resolving competition among active representations (Badre et al., 2005; Badre & Wagner, 2007). Our task manipulations were not designed to investigate functional distinctions within LIFG, and differences in the locations of the responses we observed in this region might reflect differences in methodology. Nevertheless, our findings are broadly consistent with previous studies: activation in anterior LIFG was modulated by semantic distance, with increased engagement for weakly linked word pairs, similar to the controlled retrieval induced by the contrast of weak and strong associations used by Badre et al. (2005), while MVPA revealed that dorsal and posterior portions of LIFG contained information about the semantic relationship needed on the current trial. This site might support the selection of goal-relevant information by prioritising particular aspects of conceptual knowledge during retrieval. Since taxonomic and thematic relations are thought to be based on different organisational principles (Estes et al., 2011; Rogers & McClelland, 2004), with taxonomic relations relying on perceptual similarity processing, and thematic relations activating hippocampus and visuo-motor regions involved in global scene processing (Burgess et al., 2002; Hoscheidt et al., 2010; Kalénine et al., 2009; Nielson et al., 2015; Ryan et al., 2008), LIFG might shape semantic retrieval to suit the task by influencing the kind of information that is prioritised.
The ability of posterior LIFG to decode the distinction between thematic and taxonomic trials even at the first word (before the specific taxonomic or thematic link was presented) suggests that participants may have been able to focus retrieval selectively on task-relevant features.