Abstract

Objective. To examine the effects of student demographics, prior academic performance, course engagement, and time management on pharmacy students’ performance on course examinations and objective structured clinical examinations (OSCEs).

Methods. Study participants were one cohort of pharmacy students enrolled in a five-year combined Bachelor and Master of Pharmacy degree program at one institution. Variables included student demographics, baseline factors (language assessment and situational judgement test scores), prior academic performance (high school admission rank), course engagement, and student time management of pre-class online activities. Data were collected from course, learning management system, and institutional databases. Univariate and bivariate analyses were conducted, and multivariate associations between explanatory factors and outcome variables were examined with four linear regression models.

Results. Three years of data were obtained for 159 pharmacy students. Significant positive predictors of OSCE communication performance included domestic (ie, Australian) student designation, higher baseline written English proficiency, and pre-class online activity completion. Positive predictors of OSCE problem-solving included workshop attendance and low empathy as measured by a baseline situational judgment test (SJT). Positive predictors of performance on year 2 end-of-course examinations included the Australian Tertiary Admission Rank (ATAR), completing pre-class online activities before lectures, and high integrity as measured by the SJT.

Conclusion. Several explanatory factors predicted pharmacy students’ examination and OSCE performance in the regression models. Future research should continue to study additional contexts, explanatory factors, and outcome variables.

Keywords: OSCE, flipped classroom, blended learning

INTRODUCTION

The focus of curricula in health professions education is shifting from knowledge acquisition to knowledge application and skill development.1 The methods by which educators teach future pharmacists are also changing. As part of this wider movement, educational leaders are studying both what they teach and how they teach it. Discussion of what to teach centers on the development of 21st century skills, ie, skills related to the emerging competencies that contemporary healthcare workers worldwide need to contribute to better outcomes for people with chronic conditions.2 Discussion of how to teach includes adaptations of the flipped classroom, a type of blended learning in which students complete pre-class activities before in-class active learning.3

There is a lack of education research investigating which student factors predict success in flipped classrooms and skill development curricula.4 In particular, much of the research on flipped classrooms focuses on the effectiveness of these models or of their individual elements.4 As instruction increasingly requires self-direction and self-regulated learning,4 student factors may become more important. Pharmacy students, educators, and workplace leaders have a vested interest in understanding the student factors that may improve academic performance.

The purpose of this study was to examine the extent to which student demographics, baseline factors (eg, English proficiency, situational judgment test scores),5 course engagement, and time management of pre-class online activities predicted performance on objective structured clinical examination (OSCE) communication, OSCE problem solving, and year 2 examination grades in one cohort enrolled in a five-year combined Bachelor and Master of Pharmacy degree program. Because the degree program emphasized 21st century skills and every didactic course in the program followed the same flipped classroom model, the study was situated in a contemporary educational context.

The literature is replete with studies investigating potential predictors of standardized test scores, including Pharmacy Curriculum Outcomes Assessment (PCOA) test scores,6,7 pre-North American Pharmacist Licensure Examination (NAPLEX) test scores,8 and NAPLEX test scores.9 There has been limited work in pharmacy education10 and health professions education11,12 on predicting OSCE performance. For OSCE and examination outcomes, researchers have investigated the predictive value of demographics, preadmission performance, academic performance, and other factors. However, to our knowledge, no researchers have explored the relationship between pharmacy student academic outcomes and baseline English proficiency, situational judgment tests, course engagement, and time management of pre-class online activities. Therefore, we conducted a comprehensive investigation into what predicts pharmacy student OSCE and examination performance.

METHODS

In Australia, students must complete a Bachelor of Pharmacy degree program, a yearlong internship placement, and pass two regulatory examinations to become eligible for registration as a pharmacist. Students in this study were enrolled in a five-year combined Bachelor and Master of Pharmacy degree program at the Monash Faculty of Pharmacy and Pharmaceutical Sciences.

A fundamental underpinning of the degree program was an evidence-based, curriculum-wide flipped classroom approach.13,14 The topic cycle in each course followed a discover, explore, apply, and reflect model, otherwise known as the DEAR model. For each content topic (lasting one week), students were provided with pre-class online activities called “discoveries.” Each week of the semester included one day, called a discovery day, with no on-campus instruction so that students had time to complete the pre-class online activities (ie, the discover component of the DEAR model). On the day after each discovery day, students attended interactive lectures. Rather than presenting new content, educators clarified content from the discoveries and engaged students in patient- or medicine-focused problem-based learning activities (ie, the explore component). Lectures were followed by two days of workshops, which fulfilled the apply component. Each course had a two-hour workshop scheduled during these two days. In apply workshops, students engaged in small-group learning to further develop their professional, clinical, and technical skills. Each workshop had a ratio of 25-30 students to one facilitator. Workshop activities varied by course but generally included patient cases, role plays, and group discussions. The day after workshops, students attended close-the-loop lectures to reflect on their grasp of the material for each topic and on their skill development (ie, the reflect component).

Another crucial aspect of the degree program was professional skill development through skills coaching. Students (typically in groups of 10) met with a skills coach (an academic or practitioner) at least three times a semester. Prior to the meeting, students documented in their e-portfolio a reflection and plan for developing one of the following professional skills: communication, teamwork, empathy, integrity, inquiry, or problem-solving. They then received feedback from their skills coach via the same e-portfolio.

Data from one pharmacy student cohort that commenced in 2017 were used in this study. For most explanatory factors and outcome variables, cohort data from the first and second years of the program (2017 and 2018) were included. We also included one third-year (2019) variable: OSCE results. The cohort commencing in 2017 started with 200 students. Thirty-nine students subsequently withdrew from the degree program and were not included in this analysis. This withdrawal rate (about 20%) is typical in Australian pharmacy schools because students begin the degree after high school and some decide early on to switch to an alternative major. Of the 161 students enrolled in this cohort in 2019, two opted out of the research study. Therefore, the final sample included 159 participants (99% of the study cohort). The dataset for this study included the outcome variables and the following categories of explanatory factors: demographic and baseline factors, course engagement factors, and student time management of pre-class online activities.

Demographic factors included student date of birth, gender, and domestic (ie, Australian) or international designation. Student date of birth was used to calculate the cohort average age and then to identify students who were at least two years older than the cohort average. Baseline factors included student scores on the Australian Tertiary Admission Rank (ATAR), the Diagnostic English Language Assessment (DELA) writing test, and a situational judgment test (SJT). The ATAR is a rank from 0 to 100 based on domestic students’ year 12 high school grades. The DELA writing test is a 45-minute written assessment that asks students to write an argumentative piece in support of or against a provided statement. Based on raters’ judgments of grammar, organization, logical reasoning, language use, and other factors, students are deemed proficient (score of 3), borderline (score of 2), or at risk (score of 1).15 Finally, the SJT was a 90-minute test developed specifically for pharmacy students, consisting of scenarios with scoring based on the performance of practicing pharmacists.5 The development of the SJT and the testing of its validity and reliability have been reported previously.5 The test had four scales: teamwork, integrity, empathy, and critical thinking and problem solving. Students received a score of 1 (low), 2 (medium), or 3 (high) on each scale. Students completed both the DELA and the SJT during the first few weeks of the program.
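A minimal sketch of the age flag described above, assuming age is counted in completed years on a single reference date (for example, the program start date; the paper does not state which date was used). The helper names are hypothetical and the dates in the usage lines are made up for illustration.

```python
from datetime import date


def age_in_years(dob: date, on: date) -> int:
    """Completed years of age on the reference date `on`."""
    had_birthday = (on.month, on.day) >= (dob.month, dob.day)
    return on.year - dob.year - (0 if had_birthday else 1)


def flag_older_students(dobs: list[date], on: date, margin: float = 2.0):
    """Return the cohort mean age and, per student, whether that student is
    at least `margin` years older than the mean (hypothetical helper)."""
    ages = [age_in_years(d, on) for d in dobs]
    mean_age = sum(ages) / len(ages)
    return mean_age, [age >= mean_age + margin for age in ages]


# Usage with made-up dates of birth and an assumed reference date.
mean_age, flags = flag_older_students(
    [date(1999, 3, 1), date(1999, 6, 1), date(1998, 12, 1), date(1995, 1, 1)],
    on=date(2017, 3, 1),
)
print(mean_age, flags)  # 18.75 [False, False, False, True]
```

Using completed years keeps the flag stable within a birthday year; a fractional-year age (days divided by 365.25) would be an equally reasonable assumption.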

Students took five courses in the first year of their program and seven in their second year. Lecture attendance was quantified as the percentage of sessions students attended from a sample of 131 randomly selected lectures, representing approximately 73% of all lectures over the two years. Attendance data were collected both electronically and manually: for classes that used an audience response system, data were collected from that system; for other classes, an administrative assistant attended randomly selected lectures and recorded student attendance. At least three attendance data points were collected for each course.

Workshop attendance was measured as the percentage of the 176 workshops held over two years that a student attended. Attendance was recorded in Moodle, the learning management system (LMS) used by our institution. We measured skills coaching participation as a composite of attendance at 16 small-group skills coaching sessions and completion of 16 personal learning plans uploaded to an electronic portfolio system.

Completion of pre-class online activities was the proportion of pre-class activities that a student completed over the two-year study period, measured from LMS log data. Pre-class online activities consisted of readings, figures, videos, links to websites, and discussion forums, and concluded with a multiple-choice quiz to assess learning. Completion of the online quiz served as a proxy measure for completion of the pre-class online activity, so completion was measured for each student across all 245 pre-class online quizzes over two years. Students were expected to complete the pre-class activities before each in-class lecture, but no incentive (eg, course credit) was given for doing so.

In addition to measuring whether students completed the pre-class online activities at any time, we created three factors based on when and how often students completed the pre-class online activities. These factors were calculated from LMS trace data for five randomly selected pre-class online activities in students’ second year of the program. We labeled the first factor “prepared.” A student was classified as prepared if they completed at least four of the five randomly selected pre-class online activities before the time of the related lecture. The second factor, “catching-up,” included students who took an average of 10 days or more after the lecture time to complete the pre-class online activities. The third factor, “revisiting,” was created to capture students who used the study strategy of self-testing.16 Students were classified as revisiting if they completed the five randomly selected discoveries an average of 1.5 times or more. Students classified as either prepared or catching-up could also be classified as revisiting.
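The three timing factors can be expressed as a small classification routine. This is a sketch under stated assumptions, not the study's code: it uses each activity's first quiz completion as the completion time, ignores activities a student never completed when computing lags, and treats prepared and catching-up as mutually exclusive (the shares reported in the Results, 51.6%, 21.4%, and 27%, sum to 100%). The function name `classify`, its input layout, and the timestamps are hypothetical.

```python
from datetime import datetime
from statistics import mean


def classify(attempts: list[list[datetime]], lectures: list[datetime]) -> dict:
    """Classify one student from the five sampled pre-class activities.

    attempts[i]: datetimes at which the student completed quiz i (may be empty)
    lectures[i]: datetime of the lecture linked to activity i
    """
    # First completion of each activity the student completed at all.
    firsts = [(min(a), lec) for a, lec in zip(attempts, lectures) if a]
    on_time = sum(1 for first, lec in firsts if first < lec)
    lags_days = [(first - lec).total_seconds() / 86400 for first, lec in firsts]

    # Prepared: at least four of the five activities completed before the lecture.
    prepared = on_time >= 4
    # Catching-up: mean lag of 10+ days after the lecture (assumed exclusive of prepared).
    catching_up = not prepared and bool(lags_days) and mean(lags_days) >= 10
    # Revisiting: activities completed 1.5 times on average; can co-occur with either.
    revisiting = mean([len(a) for a in attempts]) >= 1.5
    return {"prepared": prepared, "catching_up": catching_up, "revisiting": revisiting}


# Usage with made-up timestamps: five activities, each completed twice, 12 days late.
lecture = datetime(2018, 3, 10, 9, 0)
late = [[datetime(2018, 3, 22, 9, 0), datetime(2018, 3, 23, 9, 0)]] * 5
print(classify(late, [lecture] * 5))
# {'prepared': False, 'catching_up': True, 'revisiting': True}
```

A student whose mean lag falls between the lecture and 10 days afterward is neither prepared nor catching-up, which corresponds to the in-between group described in the Results.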

Two of the outcome variables were based on students’ performance on the objective structured clinical examination (OSCE): average grades for OSCE communication and OSCE problem-solving. Students in the degree program completed an OSCE at the end of year 1, at the end of year 2, and at the midpoint of year 3. The OSCE-related outcome variables were a composite of all OSCE grades across the three years. The OSCEs included two stations in year 1, four stations in year 2, and four stations in year 3, and the breadth of topics across the three years was extensive. Year 1 OSCEs covered community pharmacy topics (eg, tinea, headache, gastroesophageal reflux disease). Year 2 OSCEs covered hospital pharmacy topics (eg, medication history taking, blood pressure, evidence-based practice). Year 3 OSCEs covered several therapeutics topics (eg, anemia, infectious diseases, pain).

OSCE communication grades were determined by an examiner who followed a rubric with criteria for oral communication. OSCE problem-solving grades were determined by an examiner using case-specific criteria (eg, did the student recommend an appropriate treatment?). The Monash pharmacy program has included OSCEs for about a decade, and we have invested substantial time in developing and sharing our overarching framework and robust training materials for assessors.17 OSCE assessors complete an online training program that involves reviewing and assessing video examples: they complete oral communication checklists and then compare their answers to a standard. All assessors also retrain in face-to-face sessions by reviewing video cases together before each OSCE session. OSCE sessions are video recorded, and videos of all failed stations and any irregularities are reviewed by at least two additional academics, the simulation lead, and the course coordinator.

The third outcome variable was the average end-of-course examination grade for year 2, calculated by averaging each student’s end-of-course examination grades in the seven year 2 courses. The end-of-course examinations included multiple-choice and open-ended questions and counted for approximately 40%-50% of the course grade.

We examined the effects of the explanatory factors on three outcome variables. Prior to building the linear regression models, univariate and bivariate aspects of the data were examined. There were no missing data points. We evaluated all variables for violations of normality, linearity, collinearity, and homoscedasticity. Univariate analyses included means, standard deviations, proportions, and ranges.

For the bivariate analyses between the explanatory factors and the outcome variables, we calculated t tests, analysis of variance with contrasts, and Pearson correlation coefficients. Correlations between explanatory factors, reported on a scale of -1 to 1, were assessed using phi coefficients, Spearman rho, and Pearson correlation coefficients.18 A correlation coefficient above .50 (in absolute value) was considered a large association, and one above .70 was considered collinear.19 Multicollinearity was further investigated during regression diagnostics using the variance inflation factor. For continuous explanatory factors, a simple linear regression was also calculated between the explanatory factor and each outcome variable.
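The correlation cut-offs and the variance inflation factor (VIF) check can be sketched in plain Python. The sketch assumes Pearson correlations only (applied to 0/1-coded variables, the same formula gives the phi coefficient; Spearman rho would require ranking the data first) and computes each VIF as the matching diagonal element of the inverse correlation matrix, which equals 1/(1 - R²) from regressing that factor on all the others. Function names are illustrative; the analyses reported here were run in Stata.

```python
import math


def pearson(x, y):
    """Pearson correlation; on 0/1-coded variables this equals the phi coefficient."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def label_association(r):
    """Cut-offs from the Methods: |r| > .70 collinear, |r| > .50 large."""
    if abs(r) > 0.70:
        return "collinear"
    if abs(r) > 0.50:
        return "large"
    return "not flagged"


def invert(m):
    """Gauss-Jordan inversion with partial pivoting for a small square matrix."""
    n = len(m)
    a = [list(row) + [1.0 if i == j else 0.0 for j in range(n)] for i, row in enumerate(m)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(a[r][col]))
        a[col], a[pivot] = a[pivot], a[col]
        p = a[col][col]
        a[col] = [v / p for v in a[col]]
        for r in range(n):
            if r != col:
                f = a[r][col]
                a[r] = [vr - f * vc for vr, vc in zip(a[r], a[col])]
    return [row[n:] for row in a]


def vifs(columns):
    """VIF per explanatory factor: diagonal of the inverse correlation matrix."""
    k = len(columns)
    corr = [[pearson(columns[i], columns[j]) for j in range(k)] for i in range(k)]
    inv = invert(corr)
    return [inv[j][j] for j in range(k)]


# Made-up data: r = 0.8 here, so each VIF is 1 / (1 - 0.64), about 2.78.
x = [1, 2, 3, 4, 5]
y = [2, 1, 4, 3, 5]
print(pearson(x, y), label_association(pearson(x, y)), vifs([x, y]))
```

With two factors the VIF reduces to 1/(1 - r²), so the .70 "collinear" cut-off corresponds to a VIF of roughly 1.96 in the two-variable case.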

For the multivariate analyses, we computed four multiple linear regression models for three outcome variables: OSCE communication performance, OSCE problem-solving performance, and year 2 examination performance. Because 45 students did not have a metric for prior high school performance (ie, an ATAR score), separate regressions for year 2 examination performance were run with and without this explanatory factor. The regression models included only explanatory factors that were associated with the outcome variable in bivariate analyses at p≤.20. All variables were entered into the models as fixed effects. Statistical significance was set at α=.05.
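The screen-then-regress workflow can be sketched as follows. This is an illustration under assumptions, not the study's procedure: bivariate p-values come from Pearson correlations (for a 0/1 predictor this is equivalent to a pooled-variance two-sample t test) using a normal approximation to the t distribution, which is reasonable near n=159 but not exact, and the regression is ordinary least squares solved through the normal equations. Names such as `screen` and `ols` are hypothetical.

```python
import math
from statistics import NormalDist


def pearson(x, y):
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def screen(predictors, outcome, alpha=0.20):
    """Names of predictors whose two-sided bivariate p-value is <= alpha.

    Uses t = r * sqrt((n - 2) / (1 - r^2)) with a normal approximation (assumption).
    """
    n = len(outcome)
    kept = []
    for name, x in predictors.items():
        r = pearson(x, outcome)
        if abs(r) >= 1.0:
            p = 0.0
        else:
            t = r * math.sqrt((n - 2) / (1 - r * r))
            p = 2 * (1 - NormalDist().cdf(abs(t)))
        if p <= alpha:
            kept.append(name)
    return kept


def ols(columns, y):
    """Fit y = b0 + b1*x1 + ... by solving the normal equations X'X b = X'y."""
    X = [[1.0] + [col[i] for col in columns] for i in range(len(y))]
    k = len(X[0])
    # Augmented matrix [X'X | X'y].
    A = [[sum(row[i] * row[j] for row in X) for j in range(k)]
         + [sum(row[i] * yi for row, yi in zip(X, y))] for i in range(k)]
    # Gauss-Jordan elimination with partial pivoting.
    for col in range(k):
        piv = max(range(col, k), key=lambda r: abs(A[r][col]))
        A[col], A[piv] = A[piv], A[col]
        for r in range(k):
            if r != col:
                f = A[r][col] / A[col][col]
                A[r] = [a - f * b for a, b in zip(A[r], A[col])]
    return [A[i][k] / A[i][i] for i in range(k)]


# Made-up data: a completion proportion (x1) and a 0/1 designation (x2).
x1 = [0.0, 0.2, 0.4, 0.6, 0.8, 1.0]
x2 = [0, 1, 0, 1, 1, 0]
y = [0.5 + 0.1 * a - 0.03 * b for a, b in zip(x1, x2)]
print([round(b, 4) for b in ols([x1, x2], y)])  # [0.5, 0.1, -0.03]
```

To mirror models 3 and 4, the same routine would be fit twice for year 2 examination grades: once without an ATAR column on all students, and once with it on the subset of students who have an ATAR score.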

All analyses were conducted in Stata version 16 (StataCorp, College Station, TX). The study was approved by the Monash University Human Research Ethics Committee.

RESULTS

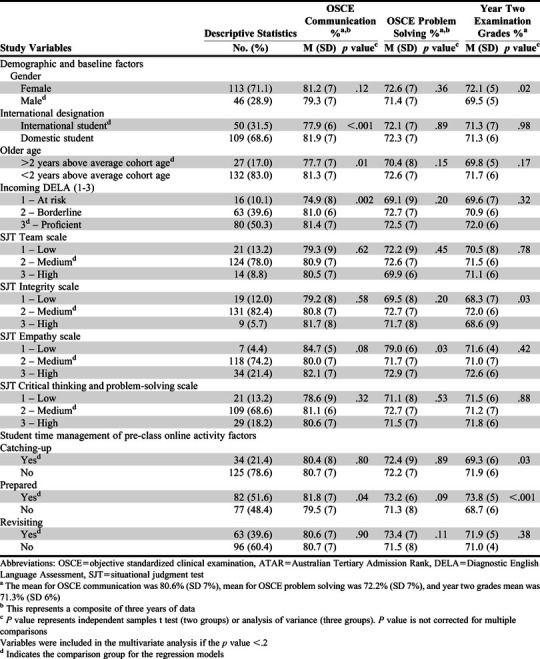

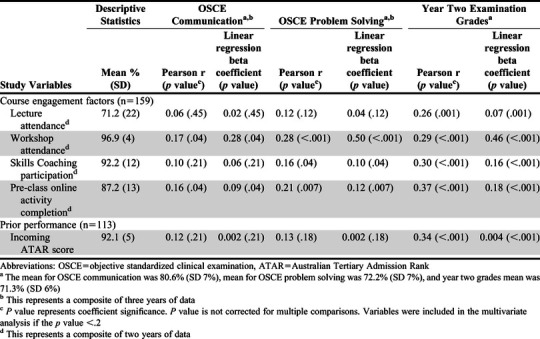

The overall sample included 159 pharmacy students. Continuous data are presented as means with standard deviation (SD). Most students were female (71.1%) and Australian (68.5%), and their mean age at program entry was 18.5 (SD=1.4) years (range=16-27 years). The averages and variation for each outcome variable and explanatory factor are summarized in Tables 1 and 2.

Table 1.

Analysis of Variables Included in a Study of Predictors of Pharmacy Student Performance in a Flipped Classroom Curriculum (n=159)

Table 2.

Analysis of Course Engagement and Prior Performance Included in a Study of Predictors of Pharmacy Student Performance in a Flipped Classroom Curriculum

We calculated additional descriptive statistics for student time management of pre-class online activities. In this dataset, 51.6% of students were classified as prepared, 21.4% as catching-up, and the remaining 27% fell between these two categories; that is, they often completed their pre-class online activities after attending the lecture but within 10 days. Students classified as prepared completed their pre-class online activities an average of 3.1 (SD=2.9) days before the lecture, whereas students classified as catching-up completed them an average of 16.1 (SD=6.5) days after the lecture. Thirty-four percent of students classified as prepared used revisiting, compared with 44.2% of students classified as catching-up.

Most correlations between explanatory factors were small (less than 0.50), with three exceptions. As expected, given their definitions, the variables catching-up and prepared had a large negative association (phi coefficient=-0.54). Workshop attendance rate and skills coaching attendance rate had a large positive association (Pearson correlation coefficient=0.53). Estimated average lecture attendance rate and workshop attendance rate had a collinear positive association (Pearson correlation coefficient=0.74). Rather than excluding variables from the regressions because of these large associations, we calculated the variance inflation factor for each model as a check for multicollinearity; none of the regression models showed multicollinearity.

We calculated bivariate associations between explanatory factors and outcome variables. All explanatory factors that had a bivariate association with the outcome variables (p value of 0.2 or less) were included in the multivariate models. The associations between the explanatory factors and the three outcome variables are summarized in Tables 1 and 2.

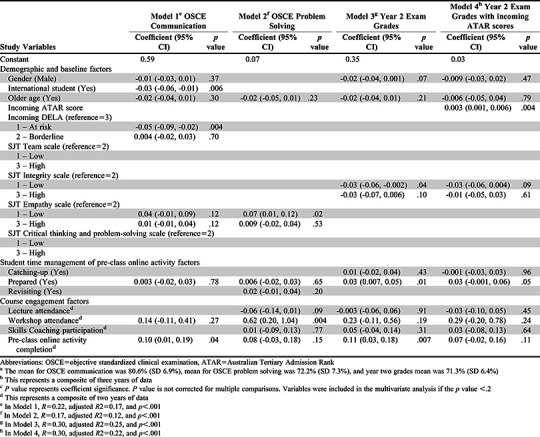

Model 1 was the result of a multiple linear regression for OSCE communication. Model 1 accounted for 16.5% of the variation in three-year OSCE communication grades (Table 3, p<.001). In this model, international student designation (β=-0.03, p=.01) and an incoming DELA score of 1 (ie, at risk) (β=-0.05, p=.004) were significant negative predictors of OSCE communication grades. Controlling for other variables, international student designation was associated with OSCE communication scores 3 percentage points lower, and a DELA score of 1 was associated with scores 5 percentage points lower than a DELA score of 2 or 3. Pre-class online activity completion was a positive predictor of OSCE communication grades (β=0.1, p=.04); the model predicts a 10-percentage-point difference in OSCE communication scores between students who completed 0% and those who completed 100% of pre-class online activities. No other included explanatory factors were significant.

Table 3.

Model 1, 2, 3 Results (n=159) and Model 4 Results (n=133)

Model 2 was the result of a multiple linear regression for OSCE problem-solving. Model 2 accounted for 12.2% of the variation in three-year OSCE problem-solving grades (Table 3, p<.001). Controlling for other variables, OSCE problem-solving grades were positively predicted by an incoming SJT empathy scale score of 1 (ie, low empathy) (β=0.07, p=.02) and by higher workshop attendance (β=0.62, p=.004). Thus, an SJT empathy score of 1 was associated with OSCE problem-solving scores 7 percentage points higher than an SJT empathy score of 2 or 3. The workshop attendance coefficient was particularly large: the model predicts a 62-percentage-point difference in OSCE problem-solving scores between theoretical students with workshop attendance of 0% vs 100%. No other included explanatory factors were significant in model 2.
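The coefficient interpretations in models 1 and 2 follow from multiplying each β by the change in the explanatory factor, with outcomes and proportion-type factors on a 0-1 scale (as the 10% and 62% figures above imply). For a binary factor such as international designation, the change is 1, so the predicted difference equals β. The last line is an illustrative 10-point change in workshop attendance, not a reported result.

```latex
\begin{aligned}
\Delta\hat{y} &= \hat{\beta}\,\left(x_{\text{high}} - x_{\text{low}}\right) \\
\text{Model 1, pre-class completion:}\quad 0.10 \times (1.00 - 0.00) &= 0.10 \quad (10 \text{ percentage points}) \\
\text{Model 2, workshop attendance:}\quad 0.62 \times (1.00 - 0.00) &= 0.62 \quad (62 \text{ percentage points}) \\
\text{Model 2, attendance } 80\% \text{ to } 90\%\text{:}\quad 0.62 \times (0.90 - 0.80) &= 0.062 \quad (6.2 \text{ percentage points})
\end{aligned}
```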

Model 3 was the result of a multiple linear regression for year 2 examination grades that excluded ATAR scores. Because ATAR scores were not available for most of the international students (28.9%), separate models were run without ATAR scores (model 3) and with ATAR scores (model 4). Model 3 accounted for 37.9% of the variation in year 2 course examination grades (Table 3, p<.001). In model 3, year 2 examination grades were positively predicted by being prepared (β=0.03, p=.01) and by completion of pre-class online activities (β=0.11, p=.007). Thus, controlling for other variables, being prepared was associated with year 2 examination scores 3 percentage points higher than not being prepared, and the model predicts an 11-percentage-point difference in year 2 examination scores between students who completed none and those who completed 100% of pre-class online activities. An incoming SJT integrity scale score of 1 (ie, low integrity) negatively predicted year 2 examination grades (β=-0.03, p=.04); controlling for other variables, it was associated with scores 3 percentage points lower than an SJT integrity score of 2 or 3. No other included explanatory factors were significant in model 3.

Model 4 was the result of a multiple linear regression for year 2 examination grades that included ATAR scores. Model 4 included all the explanatory factors in model 3 plus incoming students’ ATAR scores, and it omitted students without an ATAR score. Model 4 accounted for 31.5% of the variation in year 2 course examination grades (Table 3, p<.001). In model 4, incoming ATAR score was the only explanatory factor significantly associated with year 2 examination grades (β=0.003, p=.004); each one-point increase in ATAR predicted a 0.3-percentage-point higher year 2 examination score.

DISCUSSION

This study examined the extent to which demographics, baseline factors, course engagement, and time management predicted pharmacy students’ performance on OSCEs and course examinations. Different sets of factors predicted different outcomes: the factors that predicted OSCE performance were not the same as those that predicted course examination performance. For example, the ATAR predicted examination scores but not OSCE scores. Course engagement factors were positively associated with performance, and attendance at apply workshops, in particular, predicted OSCE problem-solving. We had expected that more frequent participation in activities requiring communication (eg, workshops, skills coaching) would predict students’ OSCE communication scores. Instead, completion of pre-class online activities was a better predictor. One hypothesis is that acquiring knowledge gave students greater confidence in communicating during the OSCE. Alternatively, completion of pre-work may have been highly correlated with factors not included in the analyses (eg, motivation, study strategies). Finally, international student designation and low baseline English proficiency were negative predictors of OSCE communication scores but not of the other outcome variables.

The timing of pre-work also mattered: being prepared (ie, completing flipped classroom pre-work before the lecture) predicted year 2 examination performance in model 3. In a flipped classroom, success may be moderated by students’ self-regulation strategies while completing pre-class learning material and during in-class active learning.4,20,21 Distributed practice and cognitive load theory support the flipped classroom approach, in which students are first exposed to content and self-test before class.22,23 These results contribute to a growing body of research highlighting the importance of students completing pre-class activities and doing so before lectures.24,25

There is a need for further investigation into the role that empathy plays in learning outcomes. One surprising finding was that low empathy, as measured on the SJT, predicted higher OSCE problem-solving scores. The finding may have been a chance occurrence in which seven students with low SJT empathy scores performed consistently well on OSCE problem-solving. However, because empathy is essential to the pharmacist establishing a positive patient-provider relationship,26 researchers should continue to explore whether low empathy benefits students on assessments and therapeutic problem solving. We plan to investigate whether this effect persists in future cohorts.

Pharmacy schools need to support international students, who face the added challenge of adjusting to a new culture and, in some cases, a new language. As globalization continues, more pharmacy schools will recruit and admit international students.27 Currently, more than 30% of our students are international students. In this study, international student designation predicted lower OSCE communication scores but did not predict OSCE problem-solving or examination performance, suggesting that our international students are otherwise succeeding academically.

Further research and support are required to improve students’ communication performance. The DELA, in particular, was predictive of students’ future communication performance. As a school, we will continue to provide additional English language support (eg, small-group conversation classes) to students who score 1 on their DELA. This study adds to previously reported mixed results on international students and communication performance. While another study found results similar to ours28 (ie, international students fared worse on OSCE communication performance), a multi-institutional study did not find a significant relationship between student ethnicity and communication performance.29 Future researchers should seek to understand whether this reflects the actual communication performance of international students or rater bias in communication performance scores.28

This study is one of the most comprehensive to date to investigate the predictors of health professions students’ grades and OSCE performance. Well-established and novel factors, including SJT scales and student time management in completing pre-class online activities, were compared across three distinct outcome variables. However, future researchers could include a larger number of higher-quality factors in these types of models. Both the outcome variables and the explanatory factors were also subject to measurement error. Ceiling effects, in particular, limited the exploration of course engagement factors. Although it would require substantial time, greater sampling of the pre-class online activity factors would improve the reliability of those measures. Because we found the time management of pre-class online activities to be predictive, we plan to invest in a solution that automates this measure.

The results of this study should be considered in context, as they come from a single cohort at one institution in one region of the world. Further, despite some suggestions that the early didactic curricula of bachelor’s, master’s, and doctoral pharmacy programs are similar, the type of degree program could influence the generalizability of the results.30

Our explanatory models highlight the importance of pharmacy students completing pre-work before lectures and attending workshops, and of schools measuring students’ communication skills. These findings may be used to build targeted interventions that support students in developing optimal learning behaviors. For example, researchers could study approaches to promote timely completion of pre-work. Also, international students and incoming students with lower English proficiency could be referred early to support services that would better prepare them for OSCE communication (and future experiential placements).

In addition to the areas already noted, future researchers should consider longitudinal studies, other outcome variables and explanatory factors, and further contexts. For example, although OSCE performance has mixed evidence for predicting experiential placement and postgraduate performance,31,32 these performance metrics could also be measured and modeled directly. Other outcome variables could include other types of skill-based measurements, workplace outcomes, and patient outcomes. Model 1 accounted for 16.5% of the variation in OSCE communication performance, an improvement over previous models (4.2%-7.2%).10 However, because much of the variability in the examined outcome variables remains unexplained, future researchers could seek to explain more of the variance in students’ outcomes, for example by including motivational beliefs, learning strategies, and self-regulated learning processes in their models, especially given that these are hypothesized to be important moderators of success in a flipped classroom.4 Researchers should also explore further contexts, including different institutions, curricula, degree programs, and student populations.

CONCLUSION

Our institution and others should continue to model how different student factors explain and predict outcomes, especially in the context of flipped classrooms and professional skill development. Students, educators, and workplace leaders have a vested interest in understanding how student factors may explain academic performance. Ultimately, modeling these factors is one means of further developing students’ learning, skill development, and future performance.

ACKNOWLEDGMENTS

The authors acknowledge Zoe Ord from the Monash Faculty of Pharmacy and Pharmaceutical Sciences for her substantial assistance in verifying and aggregating data. We also thank Conan MacDougall from the University of California, San Francisco, for assisting with final revisions to this manuscript.

REFERENCES

1. Irby DM, Cooke M, O'Brien BC. Calls for reform of medical education by the Carnegie Foundation for the Advancement of Teaching: 1910 and 2010. Acad Med. 2010;85(2):220-227.

2. Pruitt SD, Epping-Jordan JE. Preparing the 21st century global healthcare workforce. BMJ. 2005;330(7492):637-639.

3. White PJ, Naidu S, Yuriev E, Short JL, McLaughlin JE, Larson IC. Student engagement with a flipped classroom teaching design affects pharmacology examination performance in a manner dependent on question type. Am J Pharm Educ. 2017;81(9):Article 5931.

4. van Alten DC, Phielix C, Janssen J, Kester L. Effects of flipping the classroom on learning outcomes and satisfaction: a meta-analysis. Educ Res Rev. 2019.

5. Patterson F, Galbraith K, Flaxman C, Kirkpatrick CM. Evaluation of a situational judgement test to develop non-academic skills in pharmacy students. Am J Pharm Educ. 2019;83(10):7074.

6. Daugherty KK, Malcom DR. Assessing the relationship among PCOA performance, didactic academic performance, and NAPLEX scores. Am J Pharm Educ. 2020;84(8):847712.

7. Medina MS, Neely S, Draugalis JR. Predicting pharmacy curriculum outcomes assessment performance using admissions, curricular, demographics, and preparation data. Am J Pharm Educ. 2019;83(10):Article 7526.

8. Chisholm-Burns MA, Spivey CA, McDonough S, Phelps A, Byrd D. Evaluation of student factors associated with Pre-NAPLEX scores. Am J Pharm Educ. 2014;78(10):Article 181.

9. Spivey CA, Chisholm-Burns MA, Johnson JL. Factors associated with academic progression and NAPLEX performance among student pharmacists. Am J Pharm Educ. 2019;83(10):Article 7561.

10. Williams JS, Metcalfe A, Shelton CM, Spivey CA. Examining the association of GPA and PCAT scores on objective structured clinical examination scores. Am J Pharm Educ. 2019;83(4):Article 6608.

11. Kulatunga-Moruzi C, Norman GR. Validity of admissions measures in predicting performance outcomes: the contribution of cognitive and non-cognitive dimensions. Teach Learn Med. 2002;14(1):34-42.

12. Peskun C, Detsky A, Shandling M. Effectiveness of medical school admissions criteria in predicting residency ranking four years later. Med Educ. 2007;41:57-64.

13. White PJ, Larson I, Styles K, et al. Adopting an active learning approach to teaching in a research-intensive higher education context transformed staff teaching attitudes and behaviours. High Educ Res Dev. 2016;35(3):619-633.

14. Freeman S, Eddy SL, McDonough M, et al. Active learning increases student performance in science, engineering, and mathematics. Proc Natl Acad Sci. 2014;111(23):8410-8415.

15. Read J. Assessing English Proficiency for University Study. London: Palgrave Macmillan; 2015.

16. Dunlosky J, Rawson KA, Marsh EJ, Nathan MJ, Willingham DT. Improving students' learning with effective learning techniques: promising directions from cognitive and educational psychology. Psychol Sci Public Interest. 2013;14(1):4-58.

17. Hussainy SY, Crum MF, White PJ, et al. Developing a framework for objective structured clinical examinations using the nominal group technique. Am J Pharm Educ. 2016;80(9):Article 158.

18. Agresti A, Kateri M. Categorical Data Analysis. Berlin, Germany: Springer; 2011.

19. Bonate PL. The effect of collinearity on parameter estimates in nonlinear mixed effect models. Pharm Res. 1999;16(5):709-717.

20. Lim DH, Morris ML. Learner and instructional factors influencing learning outcomes within a blended learning environment. J Educ Technol Soc. 2009;12(4):282-293.

21. Tune JD, Sturek M, Basile DP. Flipped classroom model improves graduate student performance in cardiovascular, respiratory, and renal physiology. Adv Physiol Educ. 2013;37(4):316-320.

22. Talley CP, Scherer S. The enhanced flipped classroom: increasing academic performance with student-recorded lectures and practice testing in a "flipped" STEM course. J Negro Educ. 2013;82(3):339-347.

23. Abeysekera L, Dawson P. Motivation and cognitive load in the flipped classroom: definition, rationale and a call for research. High Educ Res Dev. 2015;34(1):1-14.

24. Ahmad Uzir Na, Gašević D, Matcha W, Jovanović J, Pardo A. Analytics of time management strategies in a flipped classroom. J Comput Assist Learn. 2019.

25. McLaughlin JE, Gharkholonarehe N, Khanova J, Deyo ZM, Rodgers JE. The impact of blended learning on student performance in a cardiovascular pharmacotherapy course. Am J Pharm Educ. 2015;79(2):24.

26. Ratka A. Empathy and the development of affective skills. Am J Pharm Educ. 2018;82(10):7192.

27. Teodorczuk A, Morris C. Time to CHAT about globalisation. Med Educ. 2019;53(1):3-5.

28. Fernandez A, Wang F, Braveman M, Finkas LK, Hauer KE. Impact of student ethnicity and primary childhood language on communication skill assessment in a clinical performance examination. J Gen Intern Med. 2007;22(8):1155-1160.

29. Hauer KE, Boscardin C, Gesundheit N, Nevins A, Srinivasan M. Impact of student ethnicity and patient-centredness on communication skill performance. Med Educ. 2010;44(7):653-661.

30. Arakawa N, Bruno-Tome A, Bates I. A global comparison of initial pharmacy education curricula: an exploratory study. Innov Pharm. 2020;11(1):Article 18.

31. Terry R, Hing W, Orr R, Milne N. Do coursework summative assessments predict clinical performance? A systematic review. BMC Med Educ. 2017;17(1):40.

32. McLaughlin JE, Khanova J, Scolaro K, Rodgers PT, Cox WC. Limited predictive utility of admissions scores and objective structured clinical examinations for APPE performance. Am J Pharm Educ. 2015;79(6):Article 84.