Abstract

The purpose of the study was to examine the quality of high school programs for students with Autism Spectrum Disorder (ASD) in the United States. The Autism Program Environment Rating Scale-Middle/High School (APERS-MHS) was used to rate the quality of programs for students with ASD in 60 high schools located in three geographic locations in the US (CA, NC, WI). Findings indicated that the total quality rating across schools was slightly above the adequate criterion. Higher quality ratings occurred for program environment, learning climate, family participation, and teaming domains. However, quality ratings for intervention domains related to the characteristics of ASD (e.g., communication, social, independence, functional behavior, transition) were below the adequate quality rating level. Also, quality ratings for transition were significantly higher for modified (primarily self-contained) programs than standard diploma (primarily served in general education) programs. School urbanicity was a significant predictor of program quality, with suburban schools having higher quality ratings than urban or rural schools, controlling for race, school enrollment size, and Title 1 eligibility status. Implications for working with teachers and school teams that support high school students with ASD include a targeted focus on transition programming that includes a breadth of work-based learning experiences and activities that support social-communication domains.

Students with autism spectrum disorder (ASD) are entering high schools in the United States at an increasing rate. A recent report from the Centers for Disease Control and Prevention indicated that the prevalence rate of 10 year olds with ASD had increased 150% in the last 5 years (Baio et al., 2018). The reported data were from 2014, so those children are now entering high school programs. Confirming these numbers, the 38th Office of Special Education Programs Report to Congress (U.S. Department of Education, Office of Special Education and Rehabilitative Services, Office of Special Education Programs, 2017) indicated that, between 2006 and 2015, the percentage of students with ASD in special education increased 189% and 209% for the 12–17 and 19–21 years age groups, respectively. ASD is manifested by challenges in social communication and presence of restrictive and repetitive behavior (Volkmar, Reichow, Westphal, & Mandell, 2014). It affects males three to four times as often as females, and is present among racial/ethnic and socioeconomic groups in the United States (Baio et al., 2018). The “spectrum” feature of this disability reflects the range of severity of the condition, and the criteria from the Diagnostic and Statistical Manual of Mental Disorders (5th ed.; DSM-5; American Psychiatric Association, 2013) indicate that different levels of support are needed by individuals with ASD. Approximately 60% of children and youth with ASD do not have intellectual disability, are often included in the general education curriculum, and may graduate with a diploma. The Office of Special Education Programs (U.S. Department of Education, Office of Special Education and Rehabilitative Services, Office of Special Education Programs, 2017) confirms that 62.7% of students with ASD qualifying for special education services receive them primarily in general education settings (80% or more of their time spent in general education). 
At the other end of the spectrum, students may have limited verbal skills (Tager-Flusberg & Kasari, 2013), possibly have intellectual disability, participate in a special education class usually with some opportunity for inclusion, and finish high school with a modified diploma or certificate of completion. The OSEP 2017 report indicates that approximately 32% receive special education outside of the general education setting.

For this heterogeneous group of students, public schools are required by the Individuals with Disabilities Education Act (IDEA) and also the Every Student Succeeds Act (ESSA, 2015) to provide an educational experience that is based on scientifically proven practices. The cumulative set of practices, procedures, and policies employed by school personnel represents the quality of the school program (ACT, 2012). It is a legitimate expectation of parents, as well as the public, that school programs are of sufficient quality to meet the needs of students with ASD. Public secondary schools are required to meet standards established by the state and/or municipality in which they are located. However, to judge if schools are meeting the program quality expectation for students with ASD, it is necessary to have a process for assessing autism program quality.

The issue of program quality for high school students with ASD is especially important because this group of students has among the poorest outcomes of any disability group when they leave public school. In a recently published report of the National Longitudinal Transition Study 2012, Lipscomb et al. (2017) found that, relative to other students with Individualized Education Programs (IEPs), students with ASD had significantly more trouble completing activities of daily living, had a lower sense of self direction, had fewer planned activities and social engagement with friends, and were less likely to have had paid employment outside of school. From an analysis of the National Longitudinal Transition Study 2 (NLTS2), Roux, Shattuck, Rast, Rava, and Anderson (2015) reported that outcomes for young adults with ASD and their families are among the worst of any disability group. Other longitudinal studies have documented that about one-third of young adults with ASD are unemployed, and those who are employed often fail to maintain employment or struggle with employment over time (Taylor, Henninger, & Mailick, 2015). Young adults with ASD are more likely to live at home after high school and less likely to live independently, in comparison to individuals from other disability groups (Anderson, Shattuck, Cooper, Roux, & Wagner, 2014). Confirming these findings, Roux, Rast, Anderson, and Shattuck (2017) reported from their analysis of the National Core Indicators Adult Survey (involving 3520 respondents with ASD) that 50% of the adult respondents live at home with a family member, 54% reported some form of mental health condition, and only 14% held a paying job in the community.

Until recently, there have been few measures for assessing program quality for students with ASD. To assess quality of residential environments for adolescents and adults with ASD, Van Bourgondien, Reichle, Campbell, and Mesibov (1998) developed the Environmental Rating Scale (ERS), and Hubel, Hagell, and Sivberg (2008) designed a questionnaire version of the scale to be completed by program staff. Although the scale has some positive psychometric evidence, it focused on out-of-school settings rather than school program quality. To examine school program quality for students with ASD in Belgium, Renty and Roeyers (2005) developed a school staff questionnaire that provided information about services, school environment modifications, staff knowledge of ASD, and parent involvement. No information was available about psychometric qualities of their questionnaire. Also, the European school context is quite different from the United States (Bejnö et al., 2019) and to date there have been no reports of its use in the United States. In the United States, however, a number of states (e.g. New York, New Jersey, Colorado, and Kansas) have developed program quality measures (e.g. the New York Autism Program Quality Indicators developed by Crimmins, Durand, Theurer-Kaufman, and Everett (2001)). While useful for formative evaluation when completed by program staff, these measures generally lack information about the psychometric quality of the instrument.

In 2008, the National Professional Development Center on Autism Spectrum Disorders (NPDC) began developing the Autism Program Environment Rating Scale (APERS). The APERS has Preschool/Elementary and Middle School/High School forms. The scale consists of multiple domains that assess dimensions of program ecology, interdisciplinary teaming, and family participation. In a previous paper, Odom et al. (2018) described a psychometric analysis of the NPDC data, revealing the measure to have evidence of high internal consistency (as measured by Cronbach’s alpha), construct validity (i.e. factor analysis generating a single primary quality factor), and criterion-related validity (i.e. scores sensitive to changes across time demonstrating treatment effects).

The purpose of this study was to use the APERS to examine the quality of high school programs in the United States for students with ASD and demographic factors that might influence quality. The authors recruited 60 high schools from three nationally representative sites, conducted the middle/high school version of the APERS at each school, and gathered associated information about program types in high schools (i.e. standard diploma or modified diploma), urbanicity of school community, and general socioeconomic status of schools (percentage of free lunch for all students in the schools). The specific research questions addressed were as follows: (1) What is the overall program quality for students with ASD enrolled in high schools in the United States? (2) Are there relative strengths and challenges in specific domains of quality? (3) Are there differences between standard diploma and modified programs in overall quality and on specific domains of quality? (4) Are community and school characteristics associated with program quality?

Method

This study was a part of a randomized clinical trial (RCT) conducted by the Center on Secondary Education for Students with Autism Spectrum Disorders (CSESA). CSESA was funded by the Institute of Education Sciences to develop and evaluate a multi-component intervention for high school students with ASD. All data reported herein are from the initial pretest collected before any intervention occurred.

Participants and setting

The study took place in 60 high schools located in central North Carolina; southeast, central, and northern Wisconsin; and southern California. On average, nine students with ASD, functioning across the spectrum, participated at each school. All of the students met their state’s criteria for eligibility for special education services under the autism category. The 547 student participants were racially/ethnically diverse, with 48% of the sample identified by parents or school staff in categories other than White, non-Hispanic (see Table 1).

Table 1.

School and Student Characteristics

| Characteristic | Mean or % (SD) | Range |
|---|---|---|
| Urbanicity | | |
| Rural/Town | 15.0 | |
| Suburb | 45.0 | |
| City | 40.0 | |
| School Size | 1890 (70.1) | |
| SES (% Title 1 Eligible) | 56.7 | |
| Ethnicity | | |
| White, non-Hispanic | 51.3 | |
| Hispanic | 24.1 | |
| Black, non-Hispanic | 13.9 | |
| Asian | 6.22 | |
| More than 2 races | 3.75 | |
| American Indian/Alaskan | .520 | |
| Native Hawaiian | .290 | |
| Leiter-3 (Nonverbal IQ) | 85.8 (27.2) | 30–141 |
| Vineland Adaptive Behavior Composite SS | 75.7 (16.7) | 20–131 |
| Social Responsiveness Scale (ASD Severity) | | |
| Severe | 33.9 | |
| Moderate | 31.7 | |
| Mild | 16.9 | |
| No ASD or Missing | 18.6 | |

All schools were publicly funded (i.e. no charter schools), and none were solely special education schools (i.e. no schools only had classes for students with disabilities). School programs began in either Grade 9 or 10, depending on the local education agency. Participation was voluntary and approval was sought from district administrators and then at least three key personnel in each school before the school was included in the study. Schools were located in Rural/Towns, Suburban areas, and Cities within each of the three sites and classified based on the National Center for Education Statistics data from the 2012 to 2013 school years (Keaton, 2014). The rationale for recruiting from three regional sites and different communities within regional sites was to provide a representative sample for the United States. To assess this representativeness, the authors used the Generalizer software (Tipton & Miller, 2015). The Generalizer was designed to analyze the extent to which samples from experimental studies reflect the demographics of the United States (Tipton & Miller, 2015). The tool yields a coefficient that ranges from 0 to 1.0, with scores in the range of 0.90–1.0 identified as having very high generalizability, 0.89–0.70 as high generalizability, 0.69–0.50 as medium generalizability, and <0.50 as low generalizability. For the CSESA sample, the generalizability index was 0.71, which indicated high generalizability.
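
The interpretation bands for the generalizability index can be written out as a small lookup. The sketch below is only an illustration of the thresholds quoted above; the function name `generalizability_label` is hypothetical and is not part of the Generalizer software.

```python
def generalizability_label(index: float) -> str:
    """Map a generalizability index in [0, 1] to the verbal band quoted in the text.

    Hypothetical helper for illustration; it is not the Generalizer tool's API.
    """
    if not 0.0 <= index <= 1.0:
        raise ValueError("index must lie between 0 and 1")
    if index >= 0.90:
        return "very high"
    if index >= 0.70:
        return "high"
    if index >= 0.50:
        return "medium"
    return "low"


# The index reported for the CSESA sample falls in the "high" band.
print(generalizability_label(0.71))
```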

Instrument

As noted, the Autism Program Environment Rating Scale–Middle/High School version (APERS-MHS) assesses quality of high school programs serving learners with ASD who are 11 to 22 years of age (i.e. through the end of high school) (Odom et al., 2018). The APERS-MHS includes 66 items in 10 domains that focus on interdisciplinary teaming, learning environment, positive classroom climate, assessment and IEP, curriculum and instruction, communication, social competence, personal independence, functional behavior, and family participation. The transition domain is a composite of transition-relevant items embedded in other domains.

The APERS items are organized on a 5-point rating continuum, with the 1 rating indicating poorest quality and 5 indicating high quality. A rating of 3 indicated minimally acceptable quality. Item ratings 1, 3, and 5 include behavioral anchors consisting of 2–3 descriptive indicators. To score a 1, the rater codes any indicator in the 1 rating. For a score of 2, the rater codes all of the indicators listed for a 1 rating, plus at least one, but not all of the 3 rating indicators. For a score of 3, the rater codes all 3 rating indicators. Similarly, for a score of 4, the rater codes all 3 rating indicators, and at least one (but not all) 5 rating indicators. For a score of 5, the rater codes all 3 and all 5 rating indicators (Odom et al., 2018). The coding format is computer-based. When the rater codes the individual indicators for a specific item, the software program generates the score for the item, and the program also tabulates the domain (e.g. Assessment and IEP, Teaming, and Curriculum and Instruction) and total mean item ratings.

Procedures

CSESA staff at each project site participated in an APERS training regimen in which they reviewed items in each domain, discussed interpretation of the items, and practiced observation and item rating from video samples with feedback from the trainer, who was one of the original developers of the APERS or was trained by a developer. They then conducted a complete APERS assessment with a trained rater, examined agreement between ratings, and reached consensus on items scored separately. Consensus was defined as agreement within one point on the majority of items, together with agreement with the trained coder on the interpretation of any items not within one point. When the trained coder and coder in training established consensus, the coder in training then conducted the APERS-MHS independently. APERS coders generated summary reports that were sent to the trainer for review and feedback before continuing with additional evaluations.

APERS data collection

The CSESA project measured “whole school” quality of programs for (1) students seeking a high school diploma who were often in classes with their peers in general education and for (2) non-diploma-bound students following a modified curriculum who received instruction in self-contained classes for most or all of the school day. Across the three states, different criteria for obtaining a diploma existed. In California and Wisconsin, students in high school could be in one of the two completion pathways: standard diploma or modified diploma. Students seeking a standard diploma completed courses that met state requirements for high school graduation. Students seeking a modified diploma did not meet requirements for a high school diploma, but could earn certificates of completion. North Carolina has standard and modified diploma pathways, in addition to an Occupational Course of Study (OCS). Students on the OCS path complete a set of academic courses, as well as a prescribed number of hours of work-based learning experiences. Students earn a high school diploma (not a certificate); however, it does not allow for entry directly into a community college or college. For the purpose of the APERS-MHS, these students were grouped into the modified diploma group.

To gather information for scoring the APERS-MHS, trained coders observed classes and contexts in programs (lunch period, transitions across campus buildings) for learners with ASD for 4–6 h distributed across 2 days. Three students with ASD served as the focus of observations in the school (e.g. one from the modified program, one from the standard diploma program, and the third from either based on school context). These students were nominated by the school staff as being representative of the students receiving services in the program (e.g. if the program were self-contained and most students in the class had an intellectual disability and had limited communication skills, then the focal student had these characteristics). The function of the focal students was to guide the observer/rater in their observations in specific aspects of the programs (i.e. by following and observing the focal student, the observer was able to see how the program worked for other students as well).

Coders also completed between five and nine interviews to gather information about aspects of the program that they could not observe directly (e.g. team decision-making process, families’ involvement in the assessment and IEP process, and participation of community service providers in transition plan meetings). The interviewees included the school principal, special and general education teachers, related service personnel, paraprofessionals, and selected family members. Coders also reviewed students’ Individual Education and Transition Plans, as well as any other relevant documentation (e.g. behavior intervention plans). The coders used the entire set of information (i.e. observations, interviews, and document review) to inform their ratings. Coders completed APERS-MHS separately for the diploma-bound program and the modified program when both types of programs existed within one school.

The APERS-MHS was scored for 60 programs for diploma-bound students at each of the participating high schools and for 47 programs with students following a modified curriculum at the start of the academic year. There were only 47 programs with students following a modified curriculum because, at some participating high schools, there were no student participants with ASD enrolled in a non-diploma-bound program.

Psychometric features of APERS-MHS rating scale

The internal consistency of the APERS-MHS has been previously reported in Odom et al. (2018). To summarize, Cronbach’s alpha for the high school APERS was 0.95 and 0.96 for inclusive and self-contained programs, respectively. The mean coefficient for the individual domains was 0.77 with a range of 0.60–0.89.

Although not specifically a measure of reliability, a second assessment of consistency is interobserver agreement. To calculate inter-rater agreement, two research staff members simultaneously collected APERS-MHS information and completed APERS-MHS ratings in schools at each regional site. One rater was a staff member from the respective regional site who did not have an association with the school being assessed (i.e. did not serve as a CSESA contact for that school or a coach). The second “reliability” rater traveled to each regional site to collect inter-rater agreement APERS data. Rating by two researchers occurred for 21 of the 60 APERS collected, distributed evenly across regional sites. The inter-rater agreement calculation was the number of exact agreements divided by the total number of items coded. The mean percentage agreement was 76.5%. As a second illustration of agreement, the mean item ratings for the total APERS scores were averaged across all schools for the two raters. The mean scores were 3.31 (SD = 0.55) and 3.33 (SD = 0.53) for the two raters. Item ratings were also examined at the individual item level, and the difference between coders was calculated. For example, if one coder scored 3 and the other scored 5, the difference was 2; if they both scored the same rating, the difference was 0. The mean item rating difference on this 5-point scale (i.e. calculated by subtracting the rating by one coder on an individual item from the rating by the second observer and dividing by the number of items) was 0.37 (SD = 0.35) (Odom et al., 2018).
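
The two agreement indices described above can be sketched as follows. This is a minimal illustration, not the study's scoring software: the function names and the eight item ratings are hypothetical, and the item difference is assumed to be taken as an absolute value (consistent with the example in which ratings of 3 and 5 differ by 2).

```python
from statistics import mean


def exact_agreement(rater_a, rater_b):
    """Proportion of items on which two raters gave exactly the same rating."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must rate the same non-empty set of items")
    matches = sum(a == b for a, b in zip(rater_a, rater_b))
    return matches / len(rater_a)


def mean_item_difference(rater_a, rater_b):
    """Mean absolute difference between the two raters' item ratings (assumed absolute)."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must rate the same non-empty set of items")
    return mean(abs(a - b) for a, b in zip(rater_a, rater_b))


# Hypothetical ratings on a 5-point scale, invented purely for illustration.
ratings_a = [3, 5, 2, 4, 3, 1, 4, 3]
ratings_b = [3, 3, 2, 4, 4, 1, 4, 2]

print(exact_agreement(ratings_a, ratings_b))       # 5 of 8 items match
print(mean_item_difference(ratings_a, ratings_b))  # differences sum to 4 over 8 items
```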

Data analysis

To address the first research question, descriptive statistics are provided about mean program quality and then quality ratings for each domain. To address the second research question, a repeated-measures multivariate analysis of variance (MANOVA) was conducted to compare the APERS weighted mean differences among domains. Analyses were conducted in SPSS version 24. Because this analysis addressed the question of “whole” school quality, for schools that had both diploma and modified programs, authors calculated a weighted school mean, with the weighting reflecting the proportion of students in each program. For example, a school with nine students in the standard diploma program and three students in the modified program would have their mean scores weighted at 0.75 and 0.25, respectively, for the total mean score. Post hoc t-tests were completed using a Bonferroni correction of p < 0.001 to correct for multiple analyses. To address the third research question, hierarchical linear models (HLMs) were conducted in SAS 9.3 to examine the effect of program type (diploma and modified) on APERS scores. HLMs were conducted to account for the clustering of the individual programs within schools. Standardized effect sizes were calculated for each domain using the equation suggested by Raudenbush and Liu (2000, 2001). According to Cohen (1988), effect size values of 0.20, 0.50, and 0.80 are interpreted as small, medium, and large, respectively.
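
The weighted school mean described above can be illustrated with a short sketch. The function name and the two program means (3.2 and 2.8) are hypothetical; only the enrollment counts of nine and three students, and the resulting weights of 0.75 and 0.25, come from the example in the text.

```python
def weighted_school_mean(diploma_mean, diploma_n, modified_mean, modified_n):
    """Combine two program means, weighting each by its share of enrolled students."""
    total = diploma_n + modified_n
    if total <= 0:
        raise ValueError("at least one student is required")
    diploma_weight = diploma_n / total    # 9 / 12 = 0.75 in the text's example
    modified_weight = modified_n / total  # 3 / 12 = 0.25 in the text's example
    return diploma_weight * diploma_mean + modified_weight * modified_mean


# Nine diploma-track and three modified-track students; the means are invented.
school_mean = weighted_school_mean(3.2, 9, 2.8, 3)
print(round(school_mean, 2))
```

A school with only one program type reduces to that program's mean, because the other weight is zero.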

To address the fourth research question, a multiple regression was performed to examine the extent to which school characteristics predicted overall school quality. The total APERS weighted mean was regressed onto location of school (Rural/Town, Suburb, and City); percentage of White, non-Hispanic students; Title 1 eligibility; and school size.

Results

The results of this study are organized by the following research questions.

What is the overall program quality for students with ASD enrolled in high schools in the United States?

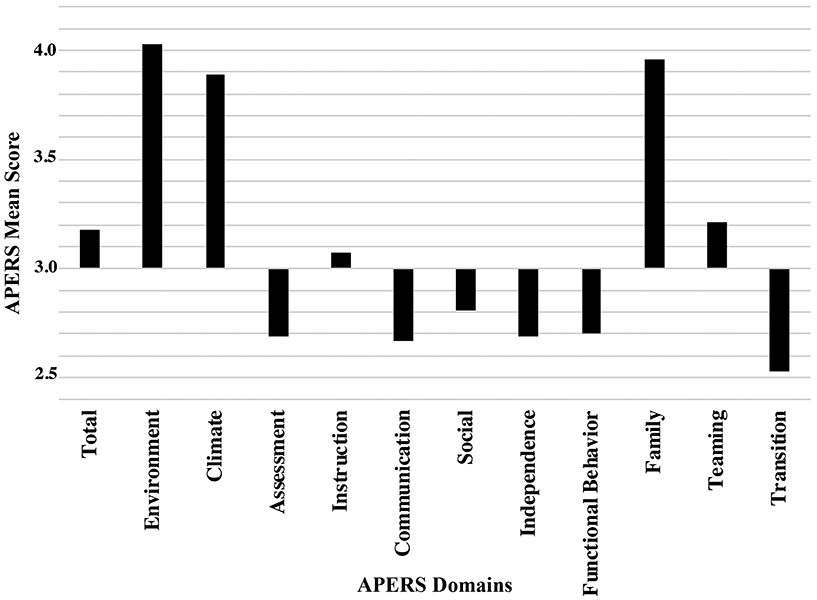

Overall, the mean item rating for the total APERS-MHS was slightly above the adequate rating score (3.0) for the 60 schools in the sample (M = 3.18, SD = 0.45; see Figure 1). The Environment (M = 4.03, SD = 0.57), Family Participation (M = 3.96, SD = 0.72), and Climate (M = 3.89, SD = 0.64) domains all had mean item ratings substantially above the adequate quality rating of 3.0. The mean item ratings for the Teaming (M = 3.21, SD = 0.50) and Instruction (M = 3.07, SD = 0.63) domains were near or slightly above the adequate quality rating. The Social (M = 2.81, SD = 0.65), Functional Behavior (M = 2.70, SD = 0.72), Assessment (M = 2.69, SD = 0.54), Independence (M = 2.69, SD = 0.57), Communication (M = 2.67, SD = 0.65), and Transition composite (M = 2.53, SD = 0.55) domains had mean item ratings below the adequate quality rating.

Figure 1.

Mean Total APERS and Domain Scores

Are there relative strengths and challenges in specific domains of quality?

A repeated-measures MANOVA was conducted to compare the APERS weighted mean differences among domains. Overall, there were significant between-domain differences, F(9, 531) = 93.2, p < 0.001. Post hoc t-tests showed that the Environment, Family Participation, and Climate domains were significantly higher than the minimally adequate domains (Teaming and Instruction, p < 0.001; see Supplemental material 1) and the below minimally adequate domains (Social, Functional Behavior, Assessment, Independence, and Communication, p < 0.001). The Teaming and Instruction domains were significantly higher than the below minimally adequate domains.

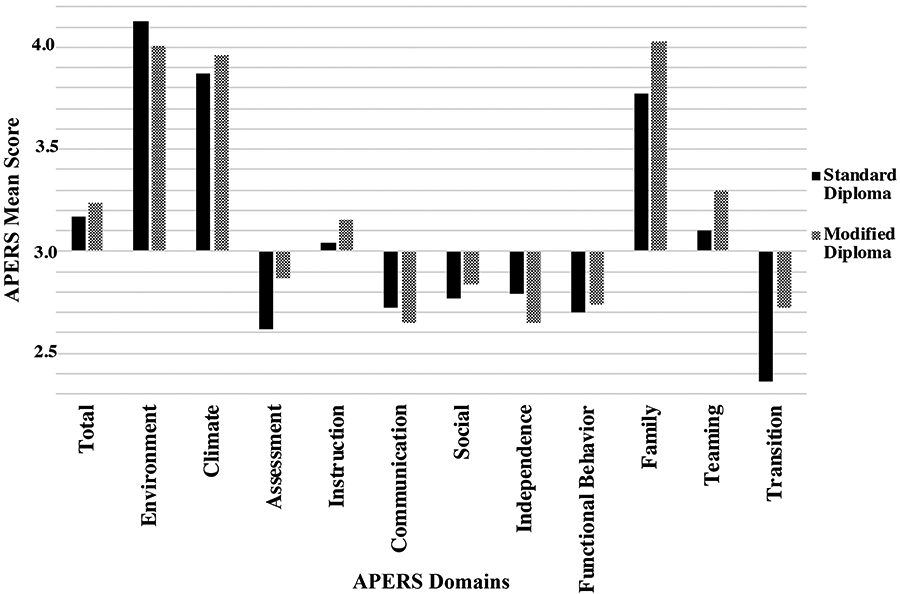

Are there differences between standard diploma and modified programs in overall quality and on specific domains of quality?

In order to determine if there were differences in program quality for the standard diploma and modified diploma programs, HLMs were conducted to examine the effect of program type on APERS-MHS scores (see Figure 2). The mean item rating for the total APERS was not significantly different for the standard diploma and modified diploma programs. To examine the differences in mean item ratings for domains, a Bonferroni adjusted alpha of p < 0.005 (0.05/10 domains) was used. Table 2 lists the significance levels and effect sizes. Of all the domains, only the Transition Composite revealed a significant effect, β = 0.36, SE = 0.08, t(46) = 4.44, p < 0.001, ES = 0.58. Both programs had mean item ratings below the minimal quality item rating criterion of 3.0, but the mean item rating for the diploma program was significantly lower than that for the modified program. Teaming (β = 0.22, SE = 0.08, t(46) = 2.88, p = 0.006, ES = 0.40) was trending toward significance, with higher mean item ratings occurring for the modified diploma program. Also, the Family Participation and Assessment domains had modest effect sizes, with differences favoring the modified diploma program, but their alpha levels did not reach the adjusted level of significance.

Figure 2.

Mean Total APERS and Domain Scores by Diploma Type

Table 2.

Group Differences in APERS Means by Program

| APERS Domain | Standard Diploma Program Mean (SD) (N = 60) | Modified Diploma Program Mean (SD) (N = 47) | Group Parameter Estimate (SE) | p-value | Effect size |
|---|---|---|---|---|---|
| Total | 3.17 (.46) | 3.24 (.54) | .03 (.07) | .63 | .07 |
| Environment | 4.13 (.62) | 4.01 (.67) | −.16 (.10) | .13 | .25 |
| Climate | 3.87 (.80) | 3.96 (.78) | .09 (.10) | .55 | .12 |
| Assessment | 2.62 (.53) | 2.87 (.65) | .20 (.09) | .03 | .34 |
| Instruction | 3.04 (.67) | 3.15 (.73) | .07 (.10) | .47 | .10 |
| Communication | 2.72 (.81) | 2.65 (.77) | −.09 (.12) | .45 | .12 |
| Social | 2.77 (.65) | 2.84 (.72) | .10 (.09) | .29 | .14 |
| Independence | 2.79 (.62) | 2.65 (.74) | −.18 (.11) | .10 | .27 |
| Functional Behavior | 2.70 (.81) | 2.74 (.72) | .004 (.11) | .97 | .01 |
| Family | 3.77 (.88) | 4.03 (.82) | .27 (.13) | .05 | .33 |
| Teaming | 3.10 (.54) | 3.30 (.53) | .22 (.08) | .006 | .40 |
| Transition | 2.36 (.53) | 2.72 (.68) | .36 (.08) | <.001 | .58 |
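
The Bonferroni screen applied to the domain comparisons can be reproduced from the p-values in Table 2. The sketch below is an illustration under stated assumptions: the divisor of 10 follows the adjusted alpha given in the text (0.05/10), and the Transition p-value, reported only as < .001, is entered as its upper bound of 0.001.

```python
FAMILYWISE_ALPHA = 0.05
N_TESTS = 10  # divisor stated in the text for the adjusted alpha

# Domain p-values as reported in Table 2.
# Transition is reported as "< .001"; 0.001 is used here as an upper bound (assumption).
p_values = {
    "Environment": 0.13,
    "Climate": 0.55,
    "Assessment": 0.03,
    "Instruction": 0.47,
    "Communication": 0.45,
    "Social": 0.29,
    "Independence": 0.10,
    "Functional Behavior": 0.97,
    "Family": 0.05,
    "Teaming": 0.006,
    "Transition": 0.001,
}

adjusted_alpha = FAMILYWISE_ALPHA / N_TESTS
significant = [domain for domain, p in p_values.items() if p < adjusted_alpha]

print(adjusted_alpha)
print(significant)
```

Under these assumptions only the Transition composite clears the adjusted threshold, while Teaming (p = .006) falls just short of it.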

Are community and school characteristics associated with program quality?

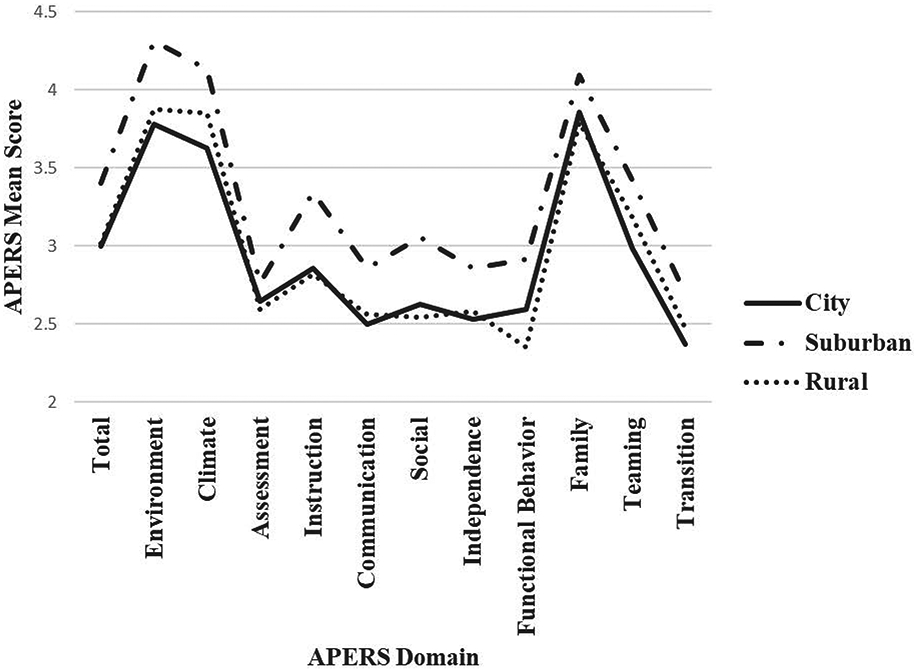

A multiple regression was performed to examine the extent to which school characteristics predicted overall school quality (see Table 1 for descriptive statistics). The total APERS-MHS weighted mean was regressed onto location of school (Rural/Town, Suburb, and City), percentage of non-White and Hispanic students, Title 1 eligibility, and school size. Overall, the model was significant, F(5, 54) = 3.61, p = 0.007, adjusted R² = 0.181. School characteristics accounted for 18.1% of the variance in overall school quality. The only significant predictor in the model was suburban location. Suburban schools had significantly higher overall quality than city schools, controlling for rural schools, number of total students, race, and Title 1 eligibility (see Supplemental material 2). Figure 3 shows the mean APERS-MHS scores per domain for school location. As can be seen, the pattern of scores across the domains was similar for City, Suburban, and Rural schools, with schools performing best on the domains of Environment, Climate, and Family Participation. Overall, Suburban schools had higher APERS means when compared to Rural and City schools.
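
As a consistency check on the reported model statistics, the unadjusted R² and the overall F statistic can be recovered from the adjusted R² using the standard textbook formulas. This is a sketch rather than the authors' analysis: the sample size of 60 schools and the 5 predictor terms are inferred from the reported degrees of freedom, F(5, 54).

```python
def r_squared_from_adjusted(adj_r2, n, k):
    """Invert adj_R2 = 1 - (1 - R2) * (n - 1) / (n - k - 1) to recover R2."""
    return 1.0 - (1.0 - adj_r2) * (n - k - 1) / (n - 1)


def overall_f(r2, n, k):
    """Overall F statistic for a regression with k predictors and n observations."""
    return (r2 / k) / ((1.0 - r2) / (n - k - 1))


N_SCHOOLS = 60    # inferred: residual df of 54 plus 5 predictors plus 1
N_PREDICTORS = 5  # numerator df in F(5, 54)

r2 = r_squared_from_adjusted(0.181, N_SCHOOLS, N_PREDICTORS)
f_stat = overall_f(r2, N_SCHOOLS, N_PREDICTORS)

print(round(r2, 3))
print(round(f_stat, 2))
```

The recovered F statistic rounds to the reported value of 3.61, so the adjusted R² and the F test are mutually consistent.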

Figure 3.

APERS Total and Weighted Mean Scores for City, Suburban, and Rural Schools

Discussion

The purpose of this study was to examine the quality of high school programs for students with ASD in the United States. On average, public schools are providing programs of minimally adequate quality for students with ASD. Although such programs meet the letter of the law, it is also important to determine which features of programs are relatively strong and, conversely, which features dip below minimal quality. The domains that had the highest levels of quality were Learning Environment, Learning Climate, and Family Participation. These domains reflect the structure and safety of the learning environment, the atmosphere of the school in regard to interacting with students in positive and respectful ways, and opportunities to involve families in meaningful ways in the educational process. The domains for which ratings suggest challenges were Assessment, Communication, Social, Independence, Functional Behavior, and Transition. The mean domain scores for these areas were all below 3 (minimum level of acceptable quality). Items in these domains reflect the team’s ability to collect data on IEP goals, develop behavior plans specific to target areas, provide multiple opportunities for social engagement and communication, and implement opportunities for independence and self-regulation into the curriculum for students with ASD. Importantly, these are the key features of schooling that are likely to lead to more positive learning experiences in school and more positive life outcomes when students leave school. When planning for quality improvement of high school programs for students with ASD, these are the areas that should be the focus of professional development of high school personnel.

When comparing standard diploma programs for students with ASD to modified programs, for most domains, these programs were similar in quality. However, there was a significant difference in the Transition composite, with the quality of transition programming being significantly higher for students in the modified program versus the diploma-bound program, although it must be noted that the scores for both types of programs were below 3.0, reflecting inadequate quality. The transition items include implementing transition assessments and parent involvement in transition planning, in addition to students having regular and frequent access to work-based learning activities and self-advocacy instruction. It is concerning that the quality of transition programming is low for these high school students on the autism spectrum, especially considering what we know about outcomes for these students when they leave high school (Roux et al., 2017; Taylor & Seltzer, 2011). Research shows that early transition planning in high school that includes career exploration, paid or unpaid work experiences, self-determination instruction, and family involvement is associated with better outcomes for individuals with autism in adulthood (Landmark, Ju, & Zhang, 2010; Test et al., 2009). For diploma-bound high school youth on the autism spectrum, the access to this critical instruction is even more lacking. This group in particular needs instruction that focuses on successful life outcomes early on, as they are exiting the school system at 18 years and, thus, have less time for transition-based programming than do students in modified diploma programs who may stay until age 22 years. Moreover, adult outcome data indicate that this group is often the most at risk of having no formal programming in adulthood (Taylor & Seltzer, 2011).

When predictors of school programming quality were examined, location of the school was the only significant predictor, with suburban schools having higher APERS-MHS scores compared to rural or city schools. Suburban schools could have higher APERS scores because of their access to services, resources, and supports for the school team, which may include more opportunities to collaborate with colleagues and attend professional development and trainings. Interestingly, even though suburban location predicted higher quality, the pattern of scores across school location was similar, with all schools having the same relative strengths (e.g. strong learning environment, positive classroom climate, and family participation) and needs (e.g. assessment and IEP, communication, social competence, personal independence, functional behavior, and transition). Thus, in terms of professional development and pre-service teacher preparation, areas of focus and need are relatively similar. Teachers need supports in assessment of skills, writing quality IEPs, developing functional programs addressing social competence, promoting self-advocacy, including opportunities for work-based learning, creating effective communication systems, and providing supports to increase independence, regardless of whether they work in cities, suburban towns, or more rural communities.

Implications for practice

Based on the findings of this study, there are several implications for practice. In general, high school programs for students with ASD need more emphasis on transition programming. This should include a breadth of work-based learning activities, such as career exploration, job shadowing, service learning, volunteer work, and paid employment, in addition to activities that focus on self-advocacy. Although this is an area of need for all high school students with ASD, it is of particular importance for diploma-bound high school students, who have even less opportunity to participate in such programming while in school. In-service training for school personnel aimed at increasing knowledge about the characteristics of ASD, and awareness that students in general education programs who are performing well with academic content could exit high school without the skills to be successful in postsecondary and employment settings, may provide the needed rationale for a focus on areas typically unaddressed by high school programs such as organizational skills, collaborating, and social competence (Hall & Odom, 2019). In addition, in order to incorporate such programming into the curriculum, administrators and school teams will need to be flexible in their scheduling to allow for courses/periods/advisory to address some of this content.

Clear areas to target for high school students with ASD include social and communication behaviors. This was an area of need regardless of school location or diploma type. Because time and resources are limited, and it is important to minimize teacher burden, resources that target multiple domains should be chosen. This can include both peer-mediated interventions and group social skills training. Free resources exist to support teachers and school teams in implementing such practices including the Center on Secondary Education for Students with Autism (CSESA; www.csesa.fpg.unc.edu) and the National Technical Assistance Center on Transition (NTACT; www.transitionta.org).

Implications for district-level administrators include the careful consideration of course offerings that focus on Career and Technical Education and the provision of work-based learning experiences (e.g. running a coffee service, completing office assistance internships, and coordinating recycling efforts) within those offerings. In terms of the overarching need for social communication skills for the population, links can be made to Common Core and 21st Century skills (Partnership for 21st Century Learning, 2007), which focus on communication and collaboration skills for all students.

Implications for research

This study examined program quality for high school students with ASD at one point in time. It will be important for future research to examine changes in program quality over time and as a function of targeted interventions. It will also be important to examine the relationship between the quality of school programs and specific outcomes for students including employment, community living, social networks, and other adult life outcomes.

Limitations

Several limitations exist for this study. First, all of the APERS data were collected during the first semester of the schools’ academic year. It is quite possible that quality might change over the course of a school year, which could be a focus of future research. Second, the exact inter-rater agreement at the item level was at the lower end of acceptability for quantitative observational measures with discretely defined categories. However, the APERS-MHS is a rating scale that depends on three sources of information (i.e. observations, interviews, and record review) from which raters make subjective judgments about an item rating. Rating judgments may vary to a small degree between raters on individual items and still yield summary rating scores that are consistent between raters and that reflect internal consistency of the instrument, as occurred for the APERS-MHS. Third, in recruiting schools in different regional and demographically diverse communities, the authors attempted to secure a sample that represented public schools in the United States, and the analysis of generalizability (Tipton & Miller, 2015) indicated that the goal of this recruitment was successful. However, the schools recruited were a sample of convenience and were not randomly selected from across the United States. Such random selection could possibly have yielded different results, although random selection is difficult to achieve in whole school-based research in authentic settings. Finally, it is important to note that the conceptualization of quality represented in the APERS and the findings of this study are contextually bound to the United States. A recent study of the use of the APERS in Scandinavia indicates that cultural adaptations are necessary to “fit” the Swedish school system (Bejnö et al., 2019).

Conclusion

The primary conclusions from this study are that, on average, high schools in the United States are providing safe environments for students with ASD that have a positive social climate, connections to families, and teaming. However, it appears that the features of high school programs that focus on the areas of most need for students with ASD (e.g. communication, social competence, independence, and challenging behavior) fall below expectations of adequate quality. Of particular concern is instruction and preparation for transition, which consistently fell below adequate quality, especially for students in diploma-granting (i.e. inclusive) programs. These data suggest that future research and program development should focus on building the instructional quality of high schools in the United States for students with ASD.

Acknowledgements

The authors thank the many schools, staff members, adolescents on the autism spectrum, and their families who made this study possible.

Funding

The author(s) disclosed receipt of the following financial support for the research, authorship, and/or publication of this article: This work was supported by the Institute of Education Sciences, US Department of Education (grant R324C120006) awarded to University of North Carolina at Chapel Hill. The opinions expressed represent those of the authors and do not represent views of the Institute or the US Department of Education. B.T. was supported by the US National Institutes of Health (grant T32HD040127).

References

- ACT. (2012). A first look at higher performing high schools: School qualities that educators believe contribute most to college and career readiness (ERIC Reproduction No. ED541867). Iowa City, IA: Author. Retrieved from https://files.eric.ed.gov/fulltext/ED541867.pdf

- American Psychiatric Association. (2013). Diagnostic and statistical manual of mental disorders (DSM-5). Washington, DC: Author.

- Anderson KA, Shattuck PT, Cooper BP, Roux AM, Wagner M (2014). Prevalence and correlates of postsecondary residential status among young adults with an autism spectrum disorder. Autism, 18, 562–570.

- Baio J, Wiggins L, Christensen DL, Maenner MJ, Daniels J, Warren Z, . . . Dowling NF (2018). Prevalence of autism spectrum disorder among children aged 8 years—Autism and Developmental Disabilities Monitoring Network, 11 sites, United States, 2014. Morbidity and Mortality Weekly Report, 67, 1–23.

- Bejnö H, Roll-Pettersson L, Klintwall L, Långh U, Odom S, Bölte S (2019). Cross-cultural content validity of the Autism Program Environment Rating Scale in Sweden. Journal of Autism and Developmental Disorders, 49, 1853–1862.

- Cohen J (1988). Statistical power analysis for the behavioral sciences (2nd ed.). Hillsdale, NJ: Lawrence Erlbaum.

- Crimmins DB, Durand VM, Theurer-Kaufman K, Everett J (2001). Autism program quality indicators. Albany: New York State Education Department.

- ESSA. (2015). Every Student Succeeds Act of 2015, Pub. L. No. 114–95 § 114 Stat. 1177 (2015–2016).

- Hall LJ, Odom SL (2019, May). Deepening supports for teens with autism. Educational Leadership, 76(8). Available from www.ascd.org

- Hubel M, Hagell P, Sivberg B (2008). Brief report: Development and initial testing of a questionnaire version of the Environment Rating Scale (ERS) for assessment of residential programs for individuals with autism. Journal of Autism and Developmental Disorders, 38, 1178–1183.

- Keaton P (2014). Documentation to the NCES common core of data public elementary/secondary school universe survey: School year 2012–13 provisional version 1a (NCES 2015–009). Washington, DC: National Center for Education Statistics, U.S. Department of Education. Retrieved from https://nces.ed.gov/ccd/pdf/sc122a_documentation_052716.pdf

- Landmark LJ, Ju S, Zhang D (2010). Substantiated best practices in transition: Fifteen plus years later. Career Development for Exceptional Individuals, 33, 165–176.

- Lipscomb S, Haimson J, Liu AY, Burghardt J, Johnson DR, Thurlow ML (2017). Preparing for life after high school: The characteristics and experiences of youth in special education. Findings from the National Longitudinal Transition Study 2012. Volume 1: Comparisons with other youth: Executive summary (NCEE 2017–4017). Washington, DC: U.S. Department of Education, Institute of Education Sciences, National Center for Education Evaluation and Regional Assistance. Retrieved from https://ies.ed.gov/ncee/projects/evaluation/disabilities_nlts2012.asp

- Odom S, Cox A, Sideris J, Hume K, Hedges S, Kucharczyk S, . . . Neitzel J (2018). Assessing quality of program environments for children and youth with autism: Autism Program Environment Rating Scale (APERS). Journal of Autism and Developmental Disorders, 48, 913–924.

- Partnership for 21st Century Learning. (2007). Framework for 21st century learning. Washington, DC: Retrieved from http://www.p21.org/our-work/p21-framework

- Raudenbush SW, Liu XL (2000). Statistical power and optimal design for multisite randomized trials. Psychological Methods, 5, 199–213. doi: 10.1037//1082-989X.5.2.199

- Raudenbush SW, Liu XL (2001). Effects of study duration, frequency of observation, and sample size on power of studies of group differences in polynomial change. Psychological Methods, 6, 387–401. doi: 10.1037//1082-989X.6.4.387

- Renty J, Roeyers H (2005). Students with autism spectrum disorder in special and general education schools in Flanders. The British Journal of Developmental Disabilities, 51, 27–39. doi: 10.1179/096979505799103795

- Roux AM, Rast JE, Anderson KA, Shattuck PT (2017). National autism indicators report: Developmental disability services and outcomes in adulthood. Philadelphia, PA: Life Course Outcomes Program, A.J. Drexel Autism Institute, Drexel University.

- Roux AM, Shattuck PT, Rast JE, Rava JA, Anderson KA (2015). National Autism Indicators Report: Transition into young adulthood. Philadelphia, PA: Life Course Outcomes Research Program, A. J. Drexel Autism Institute, Drexel University.

- Tager-Flusberg H, Kasari C (2013). Minimally verbal school-aged children with autism spectrum disorder: The neglected end of the spectrum. Autism Research, 6, 468–478.

- Taylor JL, Henninger NA, Mailick MR (2015). Longitudinal patterns of employment and postsecondary education for adults with autism and average-range IQ. Autism, 19, 785–793.

- Taylor JL, Seltzer MM (2011). Employment and post-secondary educational activities for young adults with autism spectrum disorders during the transition to adulthood. Journal of Autism and Developmental Disorders, 41, 566–574. doi: 10.1007/s10803-010-1070-3

- Test D, Mazzotti VL, Mustian AL, Fowler CH, Kortering L, Kohler P (2009). Evidence-based secondary transition predictors for improving postschool outcomes for students with disabilities. Career Development for Exceptional Individuals, 32, 160–181. doi: 10.1177/0885728809346960

- Tipton E, Miller K (2015). Generalizer [Web-tool]. Retrieved from http://www.generalizer.org

- U.S. Department of Education, Office of Special Education and Rehabilitative Services, Office of Special Education Programs. (2017). 39th annual report to Congress on the implementation of the Individuals with Disabilities Education Act. Washington, DC: Author.

- Van Bourgondien ME, Reichle NC, Campbell DG, Mesibov GB (1998). The Environmental Rating Scale (ERS): A measure of the quality of the residential environment for adults with autism. Research in Developmental Disabilities, 19, 381–394. doi: 10.1016/S0891-4222(98)00012-2

- Volkmar FR, Reichow B, Westphal A, Mandell DS (2014). Autism and the autism spectrum: Diagnostic concepts. In Volkmar F, Rogers S, Paul R, Pelphrey K (Eds.), Handbook of autism and developmental disorders, 4th ed., Vol. 1 (pp. 3–27). Hoboken, NJ: Wiley & Sons.
