Abstract

There is a need to better identify impaired cognitive processes to increase our understanding of cognitive dysfunction caused by cancer and cancer treatment and to improve interventions. The Trail Making Test is frequently used for evaluating information-processing speed (part A) and executive function (part B), but interpretation of its outcomes is challenging because performance depends on many cognitive processes. To disentangle processes, we collected high-resolution data from 192 non–central nervous system cancer patients who received systemic therapy and 192 cancer-free control participants and fitted a Shifted-Wald computational model. Results show that cancer patients were more cautious than controls (Cohen d = 0.16). Patients were cognitively slower than controls when the task required task switching (Cohen d = 0.16). Our results support the idea that cancer and cancer treatment accelerate cognitive aging. Our approach allows more precise assessment of cognitive dysfunction in cancer patients and can be extended to other instruments and patient populations.

Neuropsychological assessment is a key component of care for cancer patients facing cognitive dysfunction. The choice of an intervention to diminish cognitive problems is based on neuropsychological assessment (1,2), so care can be improved by enhancing precision of assessment instruments. One of the most used tests (3,4), and part of the core battery recommended by the International Cognition and Cancer Task Force (5), is the Trail Making Test (TMT) (6). Cancer and cancer treatment affect performance on the TMT, although results are mixed (7–9), and impairments may differ between patients (10). The TMT has two parts. Part A requires patients to connect circles labeled 1 and 2, 2 and 3, and so on. Part B requires connecting circles labeled 1 and A, A and 2, 2 and B, and so on (11). Traditional outcomes include the time to complete part A and part B, and the ratio or difference between the two.

Although the primary goal of part A is to measure information-processing speed, individual differences in performance can also reflect differences in motor slowing (12), numerical ability, decisiveness, and attention (6). Although the primary goal of part B is to measure executive functioning, differences in performance can also reflect differences in working memory, alphabetism, motor slowing, numerical ability, decisiveness, and attention (13). The influence of these secondary processes decreases the interpretability and diagnosticity of test results (14). From traditional outcomes, primary and secondary processes cannot be disentangled.

To disentangle cognitive processes, we obtained data (15,16) using a computerized version of the TMT. This test is part of the Amsterdam Cognition Scan, a test battery designed to be completed without supervision and on the participant’s own computer (15). The Amsterdam Cognition Scan stores high-resolution data, that is, data per mouse click, allowing more sophisticated analyses of the processes underlying participants’ performance. After outlier removal and matching on age (see Supplementary Methods, available online), participants included 192 patients (112 women, mean [SD] age = 52.4 [11.9] years) with non–central nervous system (non-CNS) cancer who received systemic therapy (chemotherapy, hormonal therapy, immunotherapy, or a combination) and 192 controls (123 women, mean [SD] age = 51.1 [11.4] years) without a history of cancer, who were recruited via participants. The institutional review board of the Netherlands Cancer Institute approved the study. Written informed consent was obtained from all participants before the assessments. The reaction time was defined as the time to transition from one circle to the next, resulting in 24 reaction times per part and 48 reaction times in total per person (18 432 reaction times overall).
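As an illustration of how per-click data translate into reaction times, the following minimal sketch derives transition times from a list of click timestamps and reproduces the reaction time counts reported above. The function name, the millisecond timestamps, and the figure of 25 circles per part are assumptions made for the example; the actual data format of the Amsterdam Cognition Scan is not described here.

```python
from typing import List


def transition_times(click_times_ms: List[float]) -> List[float]:
    """Return the time between each pair of consecutive clicks.

    click_times_ms is a hypothetical list of timestamps (in ms),
    one per circle clicked, in the order the circles were connected.
    """
    return [later - earlier
            for earlier, later in zip(click_times_ms, click_times_ms[1:])]


# Assumption: 25 circles per part, which yields the 24 transitions per part
# reported in the text.
N_CIRCLES_PER_PART = 25
N_PARTS = 2                # part A and part B
N_PARTICIPANTS = 192 + 192  # patients + controls

per_part = N_CIRCLES_PER_PART - 1
per_person = per_part * N_PARTS
overall = per_person * N_PARTICIPANTS

print(per_part, per_person, overall)
```

With these assumptions the counts match the text: 24 reaction times per part, 48 per person, and 18 432 overall.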

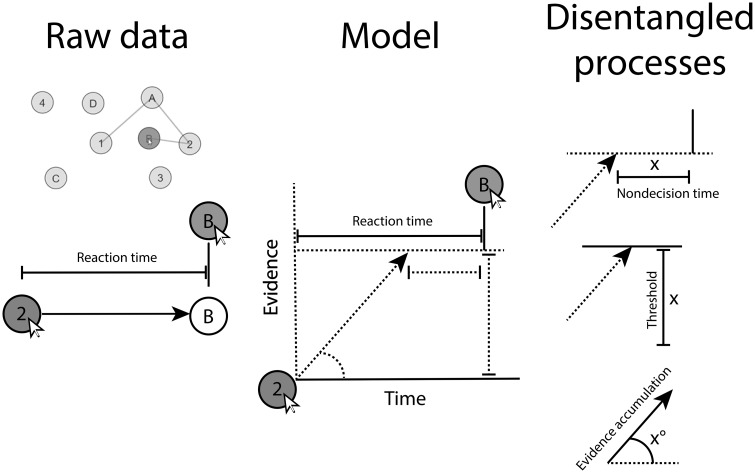

We fitted a hierarchical Bayesian cognitive model (17). Hierarchical Bayesian models provide the optimal balance between estimating parameters at the group level and estimating interindividual heterogeneity (18) and are well suited for cognitive modeling (19). Cognitive models allow disentanglement of processes by requiring the specification of a mathematical model and its assumptions (20). We used a Shifted-Wald model to relate the time participants required to connect circles to three parameters: evidence accumulation, threshold, and nondecision time (21,22).

Each parameter defines an aspect of the response time distribution (21) and represents a distinct process [see Figure 1; for a review, see (23), and for further explanation, see Supplementary Methods, available online]. Evidence accumulation can be interpreted as cognitive speed. If the evidence accumulation parameter is high, participants gather evidence quickly and respond quickly. The threshold can be interpreted as response caution. If the threshold is low, participants require little evidence and respond quickly. Nondecision time can be interpreted as the time required for noncognitive parts of the task. If nondecision time is low, participants spend little time on noncognitive parts, such as physically moving and clicking the mouse, and respond quickly.

Figure 1.

Illustration of how raw reaction times are decomposed into three cognitive processes in the Shifted-Wald model.
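To make the role of the three parameters concrete, the following minimal sketch implements the shifted Wald density in its common drift/threshold/shift parameterization (21), together with its mean and a simple sampler. It is not the hierarchical Stan model fitted in this study. The function names and the parameter values are illustrative assumptions, and the sampler uses the standard transformation method for the inverse Gaussian distribution.

```python
import math
import random


def shifted_wald_pdf(t, drift, threshold, shift):
    """Density of a reaction time t under the shifted Wald model.

    drift     -- evidence accumulation rate (cognitive speed)
    threshold -- amount of evidence required (response caution)
    shift     -- nondecision time
    """
    x = t - shift
    if x <= 0:
        return 0.0
    return (threshold / math.sqrt(2 * math.pi * x ** 3)
            * math.exp(-((threshold - drift * x) ** 2) / (2 * x)))


def shifted_wald_mean(drift, threshold, shift):
    """Expected reaction time: nondecision time plus threshold / drift."""
    return shift + threshold / drift


def sample_shifted_wald(rng, drift, threshold, shift):
    """Draw one reaction time (inverse Gaussian draw plus the shift)."""
    mu = threshold / drift   # mean of the decision time
    lam = threshold ** 2     # shape of the decision time
    y = rng.gauss(0.0, 1.0) ** 2
    x = (mu + (mu ** 2 * y) / (2 * lam)
         - (mu / (2 * lam)) * math.sqrt(4 * mu * lam * y + (mu * y) ** 2))
    if rng.random() <= mu / (mu + x):
        return shift + x
    return shift + mu ** 2 / x


# Illustrative values only (seconds); not estimates from this study.
baseline = shifted_wald_mean(drift=4.0, threshold=2.0, shift=0.3)
slower_accumulation = shifted_wald_mean(drift=3.0, threshold=2.0, shift=0.3)
more_caution = shifted_wald_mean(drift=4.0, threshold=2.5, shift=0.3)
print(baseline, slower_accumulation, more_caution)
```

In this sketch, a lower drift and a higher threshold both lengthen the expected reaction time, but they change the shape of the distribution differently, which is what allows the model to separate cognitive speed from response caution.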

We fitted the model in Stan (24) (see Supplementary Methods, available online, for model code and convergence diagnostics). For each parameter and comparison, we computed the 95% highest posterior density interval (25,26) to quantify statistical significance, corresponding to an α level of .05 with two-sided testing. Findings are depicted in Figure 2 (numbers are given in Supplementary Tables, available online; observed and estimated distributions for assessing model fit are shown in Supplementary Figure 10, available online).

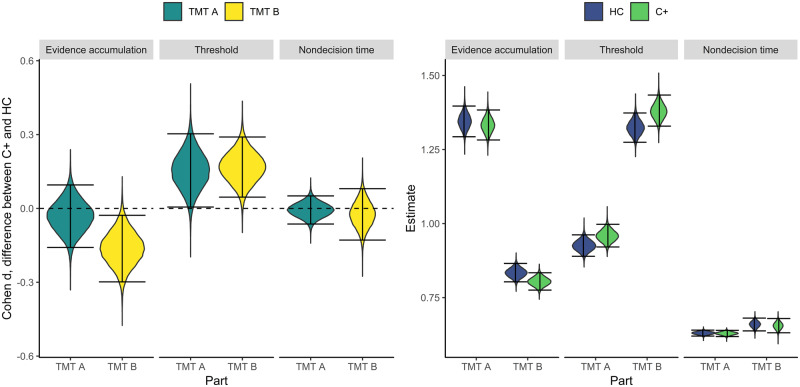

Figure 2.

Distributions of effect sizes and parameter estimates on three parameters and parts A and B of the TMT. Intervals denote 95% highest posterior density intervals. C+ = non–central nervous system cancer patients; HC = controls; TMT = Trail Making Test.
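For readers unfamiliar with highest posterior density intervals, the following minimal sketch shows one common way to estimate such an interval from posterior samples: sort the samples and take the narrowest window that contains the requested share of them. The function names are hypothetical, and this is not necessarily the routine used for the analyses reported here.

```python
import math


def hpd_interval(samples, mass=0.95):
    """Narrowest interval containing `mass` of the posterior samples."""
    ordered = sorted(samples)
    n = len(ordered)
    # Number of samples the interval must contain; the small epsilon
    # guards against floating-point overshoot in mass * n.
    k = math.ceil(mass * n - 1e-9)
    if k < 2 or k > n:
        raise ValueError("need enough samples and 0 < mass <= 1")
    # Slide a window of k consecutive samples and keep the narrowest one.
    start = min(range(n - k + 1),
                key=lambda i: ordered[i + k - 1] - ordered[i])
    return ordered[start], ordered[start + k - 1]


def excludes_zero(interval):
    """True if a group difference of zero lies outside the interval."""
    lower, upper = interval
    return lower > 0 or upper < 0


# Toy, skewed "posterior" used only to show the mechanics.
toy_samples = [0, 1, 2, 3, 4, 5, 6, 7, 8, 100]
print(hpd_interval(toy_samples, mass=0.8))
```

Under this reading, a group difference is treated as statistically significant when its 95% interval excludes zero.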

Evidence accumulation was slower for patients than for controls during part B but not during part A; the task that requires switching between letters and numbers is more difficult for cancer patients than for controls. Thresholds were higher for patients than for controls for both parts A and B, indicating that cancer patients are more cautious. Patients and controls did not differ in time needed to complete noncognitive parts of the test. This suggests that motor speed is intact in cancer patients.

The observed differences between patients and controls on evidence accumulation and threshold were both small (d = 0.16). These differences will most probably not be of immediate concern to patients undergoing chemotherapy. Instead, they inform us on the mechanisms of cognitive dysfunction. Because these mechanisms can affect performance on more domains than the TMT measures, the impact of these differences may be more widespread than the small effect sizes initially suggest.
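To give a sense of what an effect of d = 0.16 means, the following minimal sketch computes Cohen d with a pooled standard deviation and converts an effect size into the probability that a randomly chosen member of one group scores higher than a randomly chosen member of the other. The effect sizes in this study were derived from the model posterior, so this classical formula is an illustration only. The function names are hypothetical, and the conversion assumes normal distributions with equal variances.

```python
import math
from statistics import NormalDist, mean, variance


def cohens_d(group_a, group_b):
    """Standardized mean difference using the pooled standard deviation."""
    n_a, n_b = len(group_a), len(group_b)
    pooled_var = (((n_a - 1) * variance(group_a)
                   + (n_b - 1) * variance(group_b))
                  / (n_a + n_b - 2))
    return (mean(group_a) - mean(group_b)) / math.sqrt(pooled_var)


def probability_of_superiority(d):
    """P(random member of group A > random member of group B).

    Assumes both groups are normally distributed with equal variance.
    """
    return NormalDist().cdf(d / math.sqrt(2))


print(cohens_d([2, 4, 6], [1, 3, 5]))
print(probability_of_superiority(0.16))
```

Under these assumptions, d = 0.16 corresponds to a probability of superiority of roughly 0.55, only slightly above the 0.50 expected when the groups do not differ.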

First, cancer patients showed more caution in their responses. This “conservative” response is frequently observed when comparing older and younger participants using the Shifted-Wald model (27) and related models and tasks (28,29) and is associated with age-related reductions in white matter integrity between cortex and striatum (29). Second, patients’ cognitive speed was slower when the task required task switching. Age-related slowing in task switching accompanies decreases in frontoparietal white matter integrity (30). White matter integrity has been found to be decreased in cancer patients as well (31). That the pattern of cognitive results converges between cancer patients and aging participants supports the idea that cancer treatment may accelerate cognitive aging (32–34).

The Shifted-Wald model can be extended to other timed tasks and is applicable beyond non-CNS cancer to psychiatric and neurological conditions associated with cognitive decline. A potential limitation of our approach is the assumption that reaction times are homogeneously composed of the same three processes. This assumption may be violated in participants who strategically pause between circles to map out the next clicks. Although such strategies are not obvious given task instructions, a modeling approach that statistically separates strategies may be a fruitful extension (35).

Currently, behavioral and pharmacological interventions for cognitive decline in cancer patients lean on findings from outside the non-CNS cancer literature, because information on the mechanistic nature of cognitive dysfunction in cancer patients is sparse. More such knowledge improves information for patients and caretakers on the cognitive phenotype and will guide the search for more effective interventions that target the basis of decline.

Funding

This work was supported by the Dutch Cancer Society, KWF Kankerbestrijding (grant number KWF 2010–4876).

Notes

The funder had no role in the design of the study; the collection, analysis, and interpretation of the data; the writing of the manuscript; or the decision to submit the manuscript for publication.

The authors have no conflicts of interest to disclose.

References

- 1. Kessels RP. Improving precision in neuropsychological assessment: bridging the gap between classic paper-and-pencil tests and paradigms from cognitive neuroscience. Clin Neuropsychol. 2019;33(2):357–368.

- 2. Schagen SB, Klein M, Reijneveld JC, et al. Monitoring and optimising cognitive function in cancer patients: present knowledge and future directions. EJC Suppl. 2014;12(1):29–40.

- 3. Lezak MD, Howieson DB, Bigler ED, Tranel D. Neuropsychological Assessment. Oxford: Oxford University Press; 2012.

- 4. Strauss E, Sherman EM, Spreen O. A Compendium of Neuropsychological Tests: Administration, Norms, and Commentary. Oxford: Oxford University Press; 2006.

- 5. Wefel JS, Vardy J, Ahles T, Schagen SB. International cognition and cancer task force recommendations to harmonise studies of cognitive function in patients with cancer. Lancet Oncol. 2011;12(7):703–708.

- 6. Reitan RM. Validity of the trail making test as an indicator of organic brain damage. Percept Mot Skills. 1958;8(3):271–276.

- 7. Ganz PA, Kwan L, Castellon SA, et al. Cognitive complaints after breast cancer treatments: examining the relationship with neuropsychological test performance. J Natl Cancer Inst. 2013;105(11):791–801.

- 8. Schagen SB, Dam FV, Muller MJ, Boogerd W, Lindeboom J, Bruning PF. Cognitive deficits after postoperative adjuvant chemotherapy for breast carcinoma. Cancer. 1999;85(3):640–650.

- 9. Tager FA, McKinley PS, Schnabel FR, et al. The cognitive effects of chemotherapy in post-menopausal breast cancer patients: a controlled longitudinal study. Breast Cancer Res Treat. 2010;123(1):25–34.

- 10. Castellon SA, Silverman DH, Ganz PA. Breast cancer treatment and cognitive functioning: current status and future challenges in assessment. Breast Cancer Res Treat. 2005;92(3):199–206.

- 11. Bowie CR, Harvey PD. Administration and interpretation of the trail making test. Nat Protoc. 2006;1(5):2277–2281.

- 12. Crowe SF. The differential contribution of mental tracking, cognitive flexibility, visual search, and motor speed to performance on parts A and B of the trail making test. J Clin Psychol. 1998;54(5):585–591.

- 13. Arbuthnott K, Frank J. Trail making test, part B as a measure of executive control: validation using a set-switching paradigm. J Clin Exp Neuropsychol. 2000;22(4):518–528.

- 14. Ahles TA, Hurria A. New challenges in psycho-oncology research IV: cognition and cancer: conceptual and methodological issues and future directions. Psychooncology. 2018;27(1):3–9.

- 15. Feenstra HE, Murre JM, Vermeulen IE, Kieffer JM, Schagen SB. Reliability and validity of a self-administered tool for online neuropsychological testing: The Amsterdam Cognition Scan. J Clin Exp Neuropsychol. 2018;40(3):253–273.

- 16. Feenstra HE, Vermeulen IE, Murre JM, Schagen SB. Online self-administered cognitive testing using the Amsterdam cognition scan: establishing psychometric properties and normative data. J Med Internet Res. 2018;20(5):e192.

- 17. Lee MD, Wagenmakers E-J. Bayesian Cognitive Modeling: A Practical Course. Cambridge: Cambridge University Press; 2014.

- 18. Gelman A, Hill J. Data Analysis Using Regression and Multilevel/Hierarchical Models. New York: Cambridge University Press; 2006.

- 19. Lee MD. How cognitive modeling can benefit from hierarchical Bayesian models. J Math Psychol. 2011;55(1):1–7.

- 20. Shankle WR, Hara J, Mangrola T, Hendrix S, Alva G, Lee MD. Hierarchical Bayesian cognitive processing models to analyze clinical trial data. Alzheimers Dement. 2013;9(4):422–428.

- 21. Anders R, Alario F, Van Maanen L. The shifted Wald distribution for response time data analysis. Psychol Methods. 2016;21(3):309–327.

- 22. Heathcote A. Fitting Wald and Ex-Wald distributions to response time data: an example using functions for the s-plus package. Behav Res Methods. 2004;36(4):678–694.

- 23. Matzke D, Wagenmakers E-J. Psychological interpretation of the ex-Gaussian and shifted Wald parameters: a diffusion model analysis. Psychon Bull Rev. 2009;16(5):798–817.

- 24. Carpenter B, Gelman A, Hoffman MD, et al. Stan: a probabilistic programming language. J Stat Softw. 2017;76(1):1–32.

- 25. Kruschke J. Doing Bayesian Data Analysis: A Tutorial with R, JAGS, and Stan. London: Academic Press; 2014.

- 26. McElreath R. Statistical Rethinking: A Bayesian Course with Examples in R and Stan. Boca Raton: Chapman & Hall/CRC; 2016.

- 27. Anders R, Hinault T, Lemaire P. Heuristics versus direct calculation, and age-related differences in multiplication: an evidence accumulation account of plausibility decisions in arithmetic. J Cogn Psychol. 2018;30(1):18–34.

- 28. Ratcliff R, Thapar A, McKoon G. Aging and individual differences in rapid two-choice decisions. Psychon Bull Rev. 2006;13(4):626–635.

- 29. Forstmann BU, Tittgemeyer M, Wagenmakers E-J, Derrfuss J, Imperati D, Brown S. The speed-accuracy tradeoff in the elderly brain: a structural model-based approach. J Neurosci. 2011;31(47):17242–17249.

- 30. Gold BT, Powell DK, Xuan L, Jicha GA, Smith CD. Age-related slowing of task switching is associated with decreased integrity of frontoparietal white matter. Neurobiol Aging. 2010;31(3):512–522.

- 31. Deprez S, Amant F, Smeets A, et al. Longitudinal assessment of chemotherapy-induced structural changes in cerebral white matter and its correlation with impaired cognitive functioning. J Clin Oncol. 2012;30(3):274–281.

- 32. Ahles TA, Root JC, Ryan EL. Cancer- and cancer treatment–associated cognitive change: an update on the state of the science. J Clin Oncol. 2012;30(30):3675–3686.

- 33. Mandelblatt JS, Hurria A, McDonald BC, et al. Cognitive effects of cancer and its treatments at the intersection of aging: what do we know; what do we need to know? Semin Oncol. 2013;40(6):709–725.

- 34. Carroll JE, Van Dyk K, Bower JE, et al. Cognitive performance in survivors of breast cancer and markers of biological aging. Cancer. 2019;125(2):298–306.

- 35. Steingroever H, Jepma M, Lee MD, Jansen BR, Huizenga HM. Detecting strategies in developmental psychology. Comput Brain Behav. 2019;2(2):128–140.
