Abstract

Background

The specialty-registration of independent prescribing (IP) was introduced for optometrists in 2008, which extended their roles including into acute ophthalmic services (AOS). The present study is the first since IP’s introduction to test concordance between IP optometrists and consultant ophthalmologists for diagnosis and management in AOS.

Methods

The study ran prospectively for 2 years at Manchester Royal Eye Hospital (MREH). Each participant was individually assessed by an IP optometrist and then by the reference standard of a consultant ophthalmologist; diagnosis and management were recorded on separate, masked proformas. IP optometrists were compared to the reference standard in stages. Cases of disagreement were arbitrated by an independent consultant ophthalmologist. Cases where disagreement persisted after arbitration underwent consensus-review. Agreement was measured with percentages, and where possible kappa (Κ), for: diagnosis, prescribing decision, immediate management (interventions during assessment) and onward management (review, refer or discharge).

Results

A total of 321 participants presented with 423 diagnoses. Agreement between all IP optometrists and the staged reference standard was as follows: ‘almost perfect’ for diagnosis (Κ = 0.885 ± 0.016), ‘substantial’ for prescribing decision (Κ = 0.745 ± 0.034) and ‘almost perfect’ for onward management (Κ = 0.822 ± 0.032). Percentage agreement for immediate management between all IP optometrists and the staged reference standard was 82.0% per diagnosis (CI 78.1–85.4%) and, with stepwise weighting, 85.7% per participant (CI 81.4–89.1%).

Conclusions

Clinical decision-making in MREH’s AOS by experienced and appropriately trained IP optometrists is concordant with consultant ophthalmologists. This is the first study to explore and validate IP optometrists’ role in the high-risk field of AOS.

Subject terms: Health occupations, Diagnosis, Outcomes research, Health services, Therapeutics

Background

The National Health Service’s (NHS) emergency services are experiencing increasing demand [1] compounded by a growing and ageing population [2]. Attendance to acute ophthalmic services (AOS) increased by 7.9% year-on-year at Moorfields Eye Hospital from 2001–2011 [3]. Growing clinical demand, constraints on the number of training posts in ophthalmology [4], a deficit of £960 million for the financial year 2017/2018 across the NHS [5], plus limited capacity and resources, are factors driving strategies to maximise the efficiency of services [6], including extending optometrists’ role into AOS.

Optometrists within hospitals have seen their roles and scope of practice extend [7], which has followed legislative developments, notably the Crown Report [8], and the introduction of independent prescribing (IP) for optometrists in 2008. Agreement studies have shown optometrists to be effective in extended roles, including diabetes [9], appraisal of new referrals [10], glaucoma [11–18], cataract [19, 20] and minor eye conditions services [21].

Limited research exists on optometrists’ clinical decision-making in AOS, with only one agreement-study from 2007 by Hau et al. at Moorfields Eye Hospital before IP was introduced [22]. Good agreement for diagnosis and management plan was found between two senior optometrists and an ophthalmologist, which led the authors to conclude that optometrists have the potential to work safely in the AOS of a busy eye hospital.

Now that IP optometrists can prescribe, further work is needed to validate optometrists’ extended roles in AOS given the lack of evidence and the potential for risk in AOS. The present study is the first one since the introduction of IP to measure agreement in clinical decision-making in AOS between IP optometrists and consultant ophthalmologists. The study tested the hypothesis of concordance (or effective consistency) between four IP optometrists (A–D) and nine consultant ophthalmologists for the diagnosis and management of patients at Manchester Royal Eye Hospital’s (MREH) AOS, which accepts primary and secondary referrals.

Subjects and method

Clinical decision-making by IP optometrists was compared to the reference standard of consultant ophthalmologists; the ophthalmologists, given their experience and qualifications, provided the highest benchmark to compare against. Concordance between each IP optometrist and the reference standard was tested by measuring agreement in diagnosis (defined with reference to local and national guidelines [23]) and management under the three following criteria: prescribing decision (prescribed medication or not), immediate management (any intervention during the assessment) and onward management (review, refer or discharge from AOS).

The study adopted a novel method of a ‘staged reference standard’, which meant that the comparison of each IP optometrist to the reference standard included stages of arbitration and consensus-review where necessary. Arbitration was introduced to address disagreement or partial agreement between the assessing IP optometrist’s and ophthalmologist’s diagnosis and management. The arbitrator was a consultant ophthalmologist independent of the case who had access to both assessing clinicians’ proformas, results of investigations (e.g., blood tests and swabs), and letters from follow-ups. The arbitrator decided whether they agreed with the IP optometrist, the ophthalmologist, either, both or neither. Partial agreement required the arbitrator to judge how many steps away from agreement the IP optometrist was; each step away was subjectively determined by the arbitrator given the complexity of each case. Cases where disagreement persisted after arbitration proceeded to consensus-review at the study’s end; assessing clinicians and arbitrators were forced to reach a consensus for these equivocal cases.

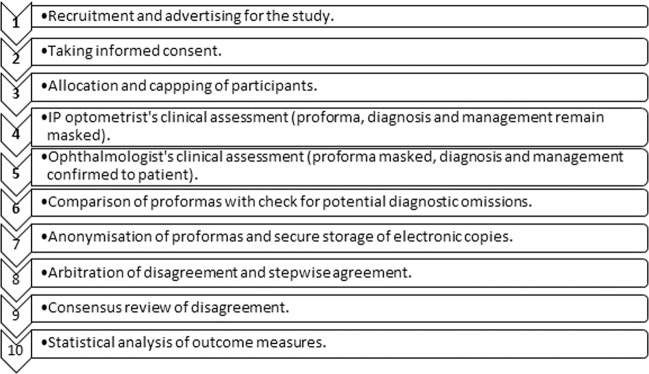

The study’s method is summarised in Fig. 1. Research ethics approval was granted by the NHS and Aston University: REC reference 15/NW/0433, ClinicalTrials.gov Identifier NCT02585063. All applicable institutional and governmental regulations regarding the ethical use of human volunteers were followed.

Fig. 1. Flow chart outlining the method.

Participants and their data passed linearly through each step.

A sample of 300 was required in order to detect the true rate of disagreement with suitably narrow confidence intervals (CI). With a minimum target disagreement rate of 3.0%, the binomial 95% CI was 1.6–5.6%. Recruitment from MREH’s AOS took place from December 2015 to January 2018 during sessions where an IP optometrist and consultant ophthalmologist were present. Posters and leaflets advertised the study. Individuals were approached in consecutive order and invited to participate following nurse-led triage in AOS; MREH’s nurses triage by urgency and redirect out-of-area and non-urgent patients. Informed consent was obtained by the research team within the standard wait of 4 h. Consultant ophthalmologists were able to cap recruitment; this control prevented waiting times being adversely affected.
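The interval quoted for the sample-size calculation can be checked with a short sketch. The text does not name the interval method; the Wilson score interval is assumed here because it reproduces the quoted 1.6–5.6% for nine disagreements in 300. The function name `wilson_interval` is illustrative only.

```python
from math import sqrt
from statistics import NormalDist


def wilson_interval(successes: int, n: int, confidence: float = 0.95) -> tuple[float, float]:
    """Wilson score interval for a binomial proportion (assumed method)."""
    if n <= 0:
        raise ValueError("n must be positive")
    # Two-sided critical value of the standard normal distribution.
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    p = successes / n
    denom = 1 + z**2 / n
    centre = (p + z**2 / (2 * n)) / denom
    half_width = z * sqrt(p * (1 - p) / n + z**2 / (4 * n**2)) / denom
    return centre - half_width, centre + half_width


# A 3.0% disagreement rate in a sample of 300 corresponds to 9 disagreements.
low, high = wilson_interval(9, 300)
print(f"{low:.1%} to {high:.1%}")
```

A larger sample narrows the interval, which is the sense in which 300 participants gave "suitably narrow" bounds around the target rate.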

Participants were allocated to be individually assessed by one IP optometrist and then by one consultant ophthalmologist. The IP optometrist and the consultant ophthalmologist recorded their individual assessments on a separate masked proforma; masking prevented the IP optometrist’s findings from influencing the consultant ophthalmologist. Each clinician had space to record their diagnosis, management and opinion on whether the case was independently manageable by an IP optometrist.

Proformas were compared by the research co-ordinator straight after the ophthalmologist’s clinical assessment for diagnostic omissions (diagnoses missed by one of the clinicians but not the other) and confusions (where clinicians’ diagnoses differed). The assessing ophthalmologist was unmasked for potential diagnostic omissions and confusions so that they could be addressed if needed. Proformas were then anonymized and scanned. Electronic copies were stored on NHS computers adhering to the legal requirements of data protection and encryption. Privacy and confidentiality were respected throughout.

Microsoft Excel and IBM’s Statistical Package for the Social Sciences (SPSS) Version 23 were used for the analyses. Agreement was measured with percentages, including 95% CIs, and where possible with the inter-rater reliability statistic kappa (Κ). Κ adjusts the observed agreement for the agreement expected by chance and provides an index that may be interpreted as follows: Κ < 0.00 as poor, 0.00 ≤ Κ ≤ 0.20 as slight, 0.20 < Κ ≤ 0.40 as fair, 0.40 < Κ ≤ 0.60 as moderate, 0.60 < Κ ≤ 0.80 as substantial and 0.80 < Κ ≤ 1.00 as almost perfect [24]. Acceptable agreement with Κ was set as ≥0.6, which follows similar studies of agreement [13, 17]. Acceptable percentage agreement was arbitrarily set at ≥75% to allow differences between IP optometrists, diagnoses and subspecialties to be evaluated.
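As an illustration of the statistic described above, the following sketch computes an unweighted kappa for two raters and maps it to the interpretation bands quoted from [24]. It assumes Cohen's formulation, which the text does not name explicitly; the function names and the toy data are hypothetical and are not study data.

```python
from collections import Counter


def cohen_kappa(rater_a: list[str], rater_b: list[str]) -> float:
    """Unweighted Cohen's kappa for two raters labelling the same cases."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must label the same non-empty set of cases")
    n = len(rater_a)
    # Observed agreement: proportion of cases given identical labels.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: product of the raters' marginal proportions per label.
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    expected = sum(counts_a[label] * counts_b[label] for label in counts_a) / n**2
    if expected == 1.0:
        raise ValueError("kappa is undefined when chance agreement equals 1")
    return (observed - expected) / (1 - expected)


def interpret_kappa(kappa: float) -> str:
    """Map kappa to the bands listed in the text."""
    if kappa < 0.0:
        return "poor"
    bands = [(0.20, "slight"), (0.40, "fair"), (0.60, "moderate"),
             (0.80, "substantial"), (1.00, "almost perfect")]
    for upper, label in bands:
        if kappa <= upper:
            return label
    raise ValueError("kappa cannot exceed 1")


# Hypothetical prescribing decisions for four cases (not study data).
optometrist = ["prescribe", "prescribe", "none", "none"]
reference = ["prescribe", "prescribe", "none", "prescribe"]
kappa = cohen_kappa(optometrist, reference)
print(round(kappa, 3), interpret_kappa(kappa))
```

In the toy data, raw agreement is 75% but half of that is expected by chance, so Κ is lower than the percentage suggests.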

Specific diagnoses were identified and totalled once data collection ended; agreement in diagnosis with percentages and Κ was then calculable. Agreement in the management criteria of prescribing decision and onward management was also calculable with percentages and Κ. Agreement in immediate management could not be calculated with Κ because the reference standard’s immediate management was assumed to be correct following arbitration and, if needed, consensus-review, and was therefore constant. Agreement in immediate management was, however, calculable as a percentage analysed per individual diagnosis, and as a percentage with stepwise weighting analysed per participant. Stepwise agreement per participant had the following linear weighting applied for each step away from agreement: 0.75 for one step, 0.50 for two steps, 0.25 for three steps and 0 for ≥ four steps.
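The stepwise weighting can be sketched as follows. The weights for one to four or more steps are those stated above; treating complete agreement (zero steps) as a weight of 1.00 is an assumption that follows from the linear scheme. The function names and the example list of steps are hypothetical.

```python
def step_weight(steps: int) -> float:
    """Linear weight: 1.00 at zero steps, falling by 0.25 per step, floored at 0."""
    if steps < 0:
        raise ValueError("steps away from agreement cannot be negative")
    return max(0.0, 1.0 - 0.25 * steps)


def weighted_percentage_agreement(steps_per_participant: list[int]) -> float:
    """Mean weight across participants, expressed as a percentage."""
    if not steps_per_participant:
        raise ValueError("at least one participant is required")
    weights = [step_weight(s) for s in steps_per_participant]
    return 100 * sum(weights) / len(weights)


# Hypothetical arbitration outcomes for five participants (not study data):
# two in full agreement, then one, two and four steps away from agreement.
print(weighted_percentage_agreement([0, 0, 1, 2, 4]))
```

Under this scheme a participant one step from agreement still contributes 0.75, so the weighted figure sits above the proportion in complete agreement.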

Results

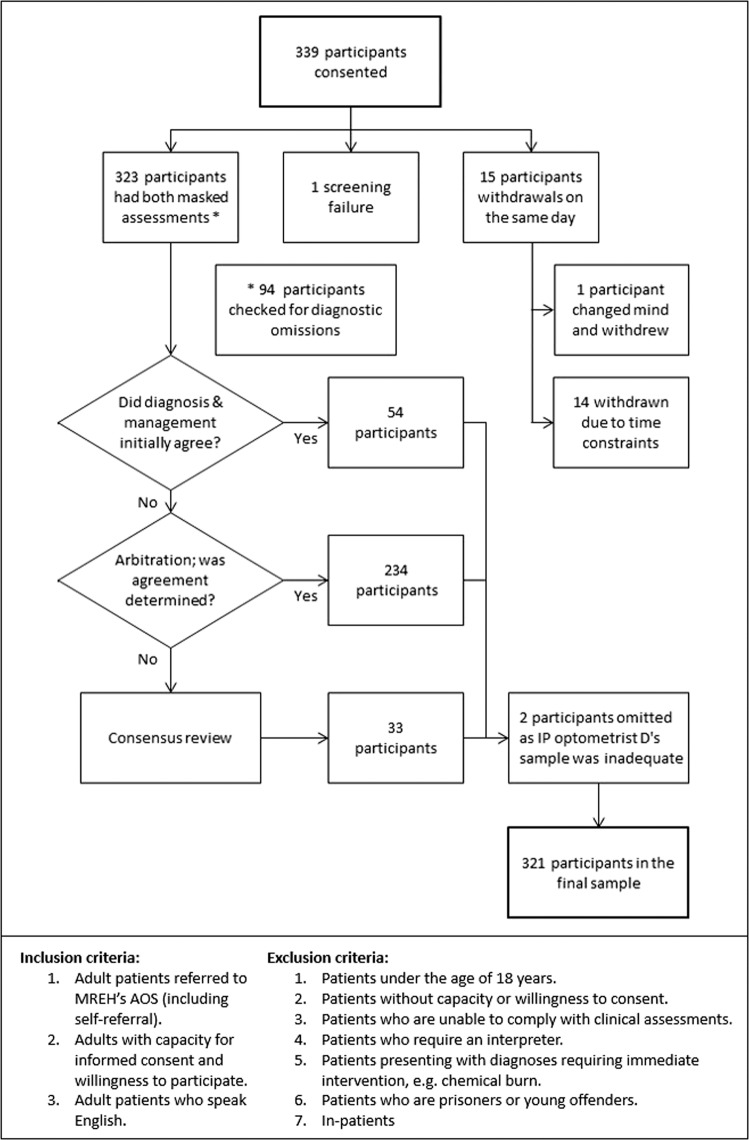

Data were analysed from 321 of the 339 participants recruited; there were 15 withdrawals, one screening failure (participant unable to undergo both masked assessments) and two participants omitted owing to IP optometrist D’s small sample. Figure 2 shows participation in arbitration and consensus-review, plus the inclusion and exclusion criteria.

Fig. 2. Flow chart outlining participation.

The flow chart shows the participants’ involvement in comparison to the staged reference standard, plus the inclusion and exclusion criteria.

The results for agreement in diagnosis and in the three criteria of management between IP optometrists A–C and the staged reference standard are summarised in Table 1. Initial agreement for both diagnosis and management between the IP optometrists and assessing ophthalmologists occurred for only 54 participants. The remaining 267 participants showed differences in diagnostic terms or management plans requiring arbitration; consensus-review was required for 33 of these participants.

Table 1.

Overview of agreement between each IP optometrist and the staged reference standard for diagnosis and management.

| Clinical decision | Measure | All IP optometrists | IP optometrist A | IP optometrist B | IP optometrist C |
|---|---|---|---|---|---|
| Diagnosis | Percentage agreement | 88.9 | 88.3 | 94.9 | 83.3 |
| | Number of diagnoses | 423 | 334 | 59 | 30 |
| | (95% CI) | (85.5–91.5) | (84.4–91.3) | (86.1–98.3) | (66.4–92.7) |
| | Kappa, Κ | 0.885 | 0.882 | 0.930 | 0.821 |
| | [Κ ± SE] | [0.869–0.901] | [0.864–0.900] | [0.896–0.964] | [0.749–0.893] |
| | Interpretation of Κ | Almost perfect | Almost perfect | Almost perfect | Almost perfect |
| Prescribing decision | Percentage agreement | 88.2 | 87.7 | 94.9 | 80.0 |
| | Number of diagnoses | 423 | 334 | 59 | 30 |
| | (95% CI) | (84.8–90.9) | (83.8–90.8) | (86.1–98.3) | (62.7–90.5) |
| | Kappa, Κ | 0.745 | 0.733 | 0.897 | 0.534 |
| | [Κ ± SE] | [0.711–0.779] | [0.694–0.772] | [0.839–0.955] | [0.374–0.694] |
| | Interpretation of Κ | Substantial | Substantial | Almost perfect | Moderate |
| Immediate management, per diagnosis | Percentage agreement | 82.0 | 82.3 | 88.1 | 66.7 |
| | Number of diagnoses | 423 | 334 | 59 | 30 |
| | (95% CI) | (78.1–85.4) | (77.9–86.1) | (77.5–94.1) | (48.8–80.8) |
| Immediate management, per participant | Percentage agreement, weighted | 85.7 | 86.2 | 88.6 | 75.0 |
| | Number of participants | 321 | 251 | 42 | 28 |
| | (95% CI) | (81.4–89.1) | (81.4–89.9) | (76.0–95.1) | (53.3–90.2) |
| Onward management | Percentage agreement | 88.3 | 89.7 | 88.9 | 73.7 |
| | Number of participants | 240 | 194 | 27 | 19 |
| | (95% CI) | (83.7–91.8) | (84.6–93.2) | (71.9–96.2) | (51.2–88.2) |
| | Kappa, Κ | 0.822 | 0.840 | 0.820 | 0.597 |
| | [Κ ± SE] | [0.790–0.854] | [0.806–0.874] | [0.724–0.916] | [0.451–0.743] |
| | Interpretation of Κ | Almost perfect | Almost perfect | Almost perfect | Moderate |

SE standard error, CI confidence interval.

P < 0.001 for all kappa values.

Agreement in diagnosis

Percentage agreement in diagnosis with the reference standard was ≥75% for IP optometrists A and B and for the IP optometrists together; IP optometrist C’s small sample generated wide CIs. Strong inter-rater reliability (Κ) was observed, with ‘almost perfect’ agreement in diagnosis for the IP optometrists collectively and for IP optometrists A and B individually. IP optometrist C also showed ‘almost perfect’ agreement; however, owing to the smaller sample, the range of Κ ± SE (0.749–0.893) spanned the ‘substantial’ and ‘almost perfect’ categories.

Agreement in prescribing decision

Percentage agreement in prescribing decision with the reference standard was ≥75% for IP optometrists A and B and for the IP optometrists together; IP optometrist C’s small sample generated wide CIs. Strong inter-rater reliability was observed, with ‘substantial’ agreement for IP optometrist A and for all IP optometrists collectively, and ‘almost perfect’ agreement for IP optometrist B. IP optometrist C notably showed ‘moderate’ agreement; owing to the small sample, the range of Κ ± SE (0.374–0.694) spanned the ‘fair’ to ‘substantial’ categories.

Immediate management

Percentage agreement in immediate management per diagnosis was ≥75% for IP optometrists A and B and for the IP optometrists together; IP optometrist C showed weaker agreement than A and B, as shown by the smaller overlap of the CIs. Percentage agreement in immediate management per participant was ≥75% for IP optometrists A, B and C and for the IP optometrists together; the total number of participants was smaller than the total number of diagnoses, which widened the CIs and increased their overlap between the IP optometrists.

The IP optometrists were in complete agreement with the consultant ophthalmologists for immediate management per participant in 71.7% of cases and in complete disagreement in 10.3% of cases. Stepwise agreement was seen in 16.5% of cases; most of this group (92.4%) were one step from agreement and the remainder (8.6%) were two steps from agreement.

Onward management

Percentage agreement in onward management between IP optometrists and the reference standard was ≥75% for IP optometrists A and B and for all IP optometrists collectively. Percentage agreement in onward management for IP optometrist C (73.7%, CI 51.2–88.2%) straddled 75%. Inter-rater reliability showed ‘almost perfect’ agreement for IP optometrists A and B and for all IP optometrists together, while IP optometrist C showed ‘moderate’ agreement that was significantly lower than that of IP optometrist A. Recording of onward management by the IP optometrists was incomplete; the percentage of each IP optometrist’s participants with onward management recorded was 77.3% for A, 64.3% for B, and 67.9% for C.

Sub-group analysis of diagnoses

A total of 423 diagnoses were made across the 321 participants. Table 2 shows the frequency of these diagnoses grouped by anatomical structure. Two-thirds (64.9%) of diagnoses related to the anterior segment (cornea, eyelid, conjunctiva, lacrimal system, anterior chamber and sclera), 31.2% related to the posterior segment (uvea, vitreous, retina, choroid and lens) and 4.6% related to the wider visual system (orbit and visual pathway). The most prevalent diagnoses in order of decreasing frequency were blepharitis (33, 7.8%), dry eye (31, 7.3%), posterior vitreous detachment (29, 6.9%), acute anterior uveitis (28, 6.6%) and corneal abrasion (22, 5.2%).

Table 2.

Frequency and percentage of all presenting diagnoses grouped anatomically.

| Anatomical structure [list of diagnoses] | Frequency | % |
|---|---|---|
| Cornea [band keratopathy; contact lens (CL) keratitis; CL over-wear<sup>a</sup>; corneal abrasion; corneal epitheliopathy<sup>a</sup>; corneal foreign body; Fuchs’ endothelial dystrophy<sup>a</sup>; herpes simplex keratitis; keratoconus; marginal keratitis; microbial keratitis; recurrent corneal erosion syndrome<sup>a</sup>; unspecified keratitis] | 96 | 22.7 |
| Eyelid [blepharitis/meibomian gland dysfunction<sup>a</sup>; chalazion<sup>a</sup>; ectropion; eczema<sup>a</sup>; herpes simplex on eyelid; herpes zoster virus/shingles; hordeolum; trichiasis] | 60 | 14.2 |
| Conjunctiva [conjunctival laceration; conjunctivitis: allergic<sup>a</sup>, bacterial<sup>a</sup>, giant papillary<sup>a</sup>, infective unspecified, medicamentosa, non-infective unspecified, viral<sup>a</sup>; subconjunctival haemorrhage; subtarsal foreign body<sup>a</sup>] | 57 | 13.5 |
| Uvea [acute anterior uveitis (AAU); Fuchs’ heterochromic cyclitis; herpetic uveitis; intermediate uveitis; pan-uveitis; post-operative uveitis; traumatic uveitis] | 46 | 10.9 |
| Vitreous [posterior vitreous detachment (PVD); vitreous haemorrhage; vitreous liquefaction/synaeresis<sup>a</sup>] | 35 | 8.3 |
| Lacrimal system [dry eye] | 31 | 7.3 |
| Retina and choroid [choroidal neovascularisation<sup>a</sup>; benign choroidal pigmentation; central serous retinopathy; commotio retinae<sup>a</sup>; diabetic maculopathy/retinopathy; epiretinal membrane; peripheral retinal degeneration; retinal artery occlusion; retinal break/detachment/tear; retinal vein occlusion; retinoschisis; sickle cell retinopathy; Valsalva retinopathy<sup>a</sup>] | 27 | 6.4 |
| Optic nerve and neurological [amaurosis fugax/transient ischaemic attack (TIA); asymmetric optic discs<sup>a</sup>; arteritic anterior ischaemic optic neuropathy (AAION); crowded optic disc; headache/migraine; glaucoma; optic disc drusen; optic neuritis; papilloedema] | 24 | 5.7 |
| Anterior chamber (AC) and aqueous [angle recession; hyphaema; narrow AC angles; non-endogenous AC activity<sup>a</sup>; non-compliance to hypotensive drops<sup>a</sup>; raised intraocular pressure (IOP)] | 19 | 4.5 |
| Sclera [episcleritis; scleritis] | 8 | 1.9 |
| Orbit [orbital cellulitis; orbital fracture; orbital myositis; pre-septal cellulitis] | 6 | 1.4 |
| Refractive and orthoptic [refractive error/asthenopia] | 6 | 1.4 |
| Lens [cataract; posterior capsule opacification] | 6 | 1.4 |
| No pathology | 2 | 0.4 |

<sup>a</sup> Diagnoses considered independently manageable for MREH’s IP optometrists.

Local guidelines were used to classify each diagnosis by urgency: emergency (as soon as possible) 1.2%, emergency (within 24 h) 26.5%, urgent (may wait overnight or the weekend) 25.3% and routine 47.0%. Diagnoses were also classifiable by ophthalmological subspecialty under which their care would fall: general ophthalmology (37.6%), emergency (24.1%), uveitis (10.6%), cornea (10.6%), surgical/vitreo-retina (5.2%), glaucoma (3.8%), medical retina (3.1%), neuro-ophthalmology (3.1%) and oculoplastics (1.9%).

Diagnostic omissions

IP optometrists missed 15 diagnoses whilst assessing ophthalmologists missed 23; again, masking was suspended after the ophthalmologist’s assessment as a safeguard to address all omissions.

IP optometrists missed five acute diagnoses: conjunctival laceration, hyphaema, intermediate uveitis, post-operative uveitis and TIA. The remainder was non-acute: four cases of blepharitis, two cases of dry eye, and single cases of refractive error, corneal epitheliopathy, bacterial conjunctivitis and unspecified infective conjunctivitis.

Ophthalmologists omitted the following urgent diagnoses: two cases of angle recession, a case of narrow AC angles and a case of marginal keratitis. In several cases the ophthalmologist omitted a diagnosis secondary to a chief complaint: a case of orbital fracture from blunt trauma (traumatic uveitis omitted), a case of cataract (PVD omitted), a case of recurrent corneal erosion syndrome (RCES; AC activity omitted), a case of blunt trauma (commotio retinae omitted), a case of retinal tear (vitreous haemorrhage and PVD omitted) and a case of haemorrhagic PVD (retinal tear omitted). Ophthalmologists omitted the following non-acute diagnoses: six cases of dry eye, and single cases of blepharitis, ectropion, eczema, epiretinal membrane, posterior capsule opacification, and benign choroidal pigmentation.

Diagnostic confusions

IP optometrists confused 32 diagnoses, whilst the assessing ophthalmologists confused 38. Table 3 shows the cases of confused diagnoses ordered first by frequency then alphabetically. A mix of urgent and routine diagnoses was confused by both assessing clinicians. Inflammatory conditions were confused for common differential diagnoses: blepharitis, conjunctivitis, episcleritis, keratitis and uveitis.

Table 3.

Diagnostic confusions made by the IP optometrists and assessing consultant ophthalmologists.

| IP optometrists: cases | IP optometrists: diagnosis | IP optometrists: confused for | Ophthalmologists: cases | Ophthalmologists: diagnosis | Ophthalmologists: confused for |
|---|---|---|---|---|---|
| 2 | Conjunctivitis, infective | Conjunctivitis, viral | 3 | Uveitis, acute anterior | AC activity |
| 2 | Keratitis, CL | CL over-wear | 1 | Corneal abrasion | Keratitis, microbial |
| 1 | AAION | Non-AAION | 3 | Conjunctivitis, viral | Unspecified infective conjunctivitis |
| 1 | AC activity | Uveitis, acute anterior | 2 | Conjunctivitis, bacterial | Unspecified infective conjunctivitis |
| 1 | Blepharitis | Conjunctivitis, viral | 2 | Corneal abrasion | Corneal epitheliopathy |
| 1 | Blepharitis | Episcleritis | 2 | Corneal abrasion | Keratitis, CL or microbial |
| 1 | Blepharitis | Conjunctivitis, infective | 1 | AAION | Non-AAION |
| 1 | Chalazion | Conjunctivitis, bacterial | 1 | AC angles, narrow | Dry eye |
| 1 | CL over-wear | Dry eye | 1 | Blepharitis | Dry eye |
| 1 | Conjunctivitis, allergic | Conjunctivitis, bacterial | 1 | Blepharitis | Allergic conjunctivitis |
| 1 | Conjunctivitis, bacterial | Conjunctivitis, viral | 1 | Conjunctivitis, allergic | Conjunctivitis, giant papillary |
| 1 | Conjunctivitis, non-infective | AAU and episcleritis | 1 | Conjunctivitis, allergic | Eczema |
| 1 | Conjunctivitis, viral | Episcleritis | 1 | Conjunctivitis, allergic | Conjunctivitis, viral |
| 1 | Corneal abrasion | Corneal epitheliopathy | 1 | Conjunctivitis, giant papillary | Conjunctivitis, allergic |
| 1 | Corneal FB | RCES | 1 | Conjunctivitis, infective | Conjunctivitis, bacterial |
| 1 | Dry eye | Blepharitis | 1 | Conjunctivitis, infective | Conjunctivitis, viral |
| 1 | Episcleritis | Conjunctivitis, viral | 1 | Conjunctivitis medicamentosa | Conjunctivitis, infective |
| 1 | Episcleritis | Scleritis | 1 | Conjunctivitis, viral | Conjunctivitis, bacterial |
| 1 | Headache | No pathology | 1 | Conjunctivitis, viral | Conjunctivitis medicamentosa |
| 1 | HSV on eyelid | Blepharitis | 1 | Corneal abrasion | RCES |
| 1 | Episcleritis | Blepharitis | | | |
| 1 | Keratitis, marginal | Conjunctivitis, viral | 1 | Episcleritis | Scleritis |
| 1 | Keratitis, unspecified | Corneal abrasion | 1 | Foreign body, subtarsal | Conjunctivitis, bacterial |
| 1 | Orbital cellulitis | Conjunctivitis, bacterial | 1 | Headache | TIA |
| 1 | Pre-septal cellulitis | AC activity | 1 | Herpes simplex on eyelid | Blepharitis |
| 1 | Scleritis | Episcleritis | 1 | Hordeolum | Chalazion |
| 1 | Trichiasis | Subtarsal FB | 1 | Keratitis, marginal | Keratitis, unspecified |
| 1 | Uveitis, acute anterior | Error in laterality | 1 | Keratitis, microbial | Uveitis, acute anterior |
| 1 | Uveitis, acute anterior | Scleritis | 1 | Optic disc asymmetry | Glaucoma |
| 1 | Uveitis, post-operative | AC activity | 1 | Papilloedema | Crowded optic disc |
| | | | 1 | RCES | Corneal abrasion |
| | | | 1 | Uveitis, post-operative | Uveitis, acute anterior |

Total of diagnostic confusions: IP optometrists (32), assessing consultant ophthalmologists (38).

Notable diagnoses confused by IP optometrists included scleritis, episcleritis and uveitis. Notable diagnoses confused by the ophthalmologists included a case of narrow AC angles confused for dry eye, and papilloedema confused for crowded optic discs. Both clinicians confused a case of AAION as atypical non-AAION; however, both planned tests to check for giant cell arteritis, which was later confirmed to be positive changing the diagnosis to AAION.

Diagnoses considered independently manageable for MREH’s IP optometrists

Opinion on independent management by IP optometrists was recorded by both assessing IP optometrist and ophthalmologist for 225 participants. Assessing clinicians’ opinion matched for over half of this sample, which translated to 19 diagnoses (denoted by superscript a on Table 2) mutually considered as independently manageable; some straightforward secondary diagnoses like dry eye were absent from this subset as they presented alongside difficult chief complaints. The IP optometrists’ immediate management for 12.8% of this subset notably disagreed with the ophthalmologists.

Discussion

The present agreement-study is the first in an AOS setting to compare optometrists’ clinical decision-making with that of consultant ophthalmologists since the introduction of IP for optometrists; the number of participants was more than double that of the closest comparable study [22]. The participating IP optometrists and consultant ophthalmologists from MREH’s specifically organised AOS showed concordance in diagnosis, and for two of the three IP optometrists in management, as demonstrated by ‘almost perfect’ inter-rater reliability and high percentage agreement.

Diagnosis

IP optometrists and the staged reference standard diagnosed concordantly; this finding concurs with Hau’s study [22], and extends the range of conditions optometrists have been shown to diagnose effectively. Disagreement in the form of diagnostic omissions and confusions was observed for both IP optometrists and consultant ophthalmologists; analysis of this disagreement provided useful insight for local service improvement.

One factor affecting agreement in diagnosis was the Hawthorne effect [25], which relates to awareness of participation within a study artificially improving or lowering performance from normal clinical practice. Masking may have led to lower agreement than would occur in practice as it prevented assessing clinicians conferring with colleagues; IP optometrists were forced to reach a tentative diagnosis for cases potentially beyond their scope of practice where they would have usually sought ophthalmological opinion.

Inability to confer affected both IP optometrists and ophthalmologists. For example, an ophthalmologist (specialised in medical retina) initially missed a case of narrow AC angles due to omitting gonioscopy, unlike the IP optometrist (specialised in glaucoma). The same scenario occurred for two angle recessions; omitting gonioscopy was a trend also observed in a survey of glaucoma from 2010 [15]. These examples demonstrate the value of multidisciplinary teams sharing expertise, plus the importance in AOS of gonioscopy and auditing clinical practice.

No serious diagnoses were missed by the two optometrists in Hau’s study [22]; however, the IP optometrists in the present study made two potentially serious diagnostic errors: a case of visual migraine had an associated TIA omitted, and a case of orbital cellulitis was confused for bacterial conjunctivitis. The IP optometrists considered these cases independently manageable, which raised debate. IP optometrists need a clear scope of practice in AOS, as outlined in the Royal College of Ophthalmologists’ (RCOphth) Level 3 Common Clinical Competency Framework [26]. This scope helps with triage of complex cases and helps IP optometrists to know when to seek ophthalmological opinion. Access to appropriate supervision is also needed in order to address over-confidence and safeguard against diagnostic omissions and confusions.

Non-urgent diagnoses

The number of non-urgent, routinely manageable diagnoses that presented was remarkable. Non-urgent diagnoses continue to present within AOS, which has been evidenced in the United Kingdom since the 1980s [27, 28]. Addressing the complex challenge of patients’ inefficient health-seeking behaviour requires several strategies, from the traditional ones of legislation, regulation and providing information to the more novel behaviour-change techniques described in nudge theory [29]. One strategy to improve health-seeking behaviour in hospitals is using social media as an authoritative, influential messenger that encourages patients to attend the local optometrist for routine problems and to attend AOS for urgent problems. Improving communication between primary and secondary care was another strategy shown to improve the quality of referrals in the first direct referral scheme from optometrist to hospital eye service [30]; communication may be enhanced through feedback letters, training events and shared guidelines.

Management

Concordance for prescribing decision, immediate management and onward management between IP optometrists A and B, plus the IP optometrists collectively, and the staged reference standard was confirmed by high measures of agreement; they managed as effectively as the consultant ophthalmologists with whom they were compared. IP optometrist C’s smaller sample prevented the same conclusion about concordance from being confidently made for prescribing decision and immediate management; IP optometrist C was unexpectedly absent for 6 months during the study. The poor recording of onward management by the IP optometrists appeared to have arisen from the ordering of the assessments; unlike the IP optometrists, the assessing ophthalmologists were forced to confirm whether the participants were discharged, reviewed or referred.

Management guidelines

The stages of arbitration and consensus-review revealed that there were often several acceptable ways to manage a diagnosis; consequently, there was variation in management, including prescribing, between ophthalmologists. Consistent management tended to occur where local guidelines and protocols were in place, such as for AAU. IP optometrists tended to initiate conservative management before pharmacological management, which is in line with the College of Optometrists’ guidelines [23]; for example, for chalazia the IP optometrists advised warm compresses whilst the ophthalmologists tended to prescribe Occ Maxitrol®. Revisiting and updating local guidelines to incorporate the current evidence would likely lead to more consistent management across clinicians.

Training

The RCOphth’s Common Clinical Competency Framework describes the nationally agreed competencies required by non-medical professionals [26]. Whilst the framework aims to achieve competence through standardised training, training in AOS is arguably still a variable, bespoke process given the wide range of challenging diagnoses that present. The present research’s method provides a way to audit performance and to check that training is effective; agreement with a staged reference standard can be measured over time and compared against a set benchmark.

Discussion of the implications of the present study for IP training at the undergraduate stage is important and relevant given the General Optical Council’s current Education Strategic Review [31]. The results and conclusions of this study apply to the high-risk field of AOS at one site with highly experienced IP optometrists, and do not generalise to new optometry graduates or newly qualified optometrists by virtue of their lack of experience. Care should be taken before extending undergraduate courses to cover IP given the lack of evidence of their clinical effectiveness at graduation and given the lack of a validated formal framework for training in AOS.

Service improvement

Aside from the potential improvement of updating MREH’s local guidelines, the cases of disagreement from this study have provided information on how to target training, to improve triage, and to make prescribing more efficient and cost-effective. Local implementation of these improvements can be the focus of future work.

Limitations

There are inherent limitations of agreement studies, the most salient being the assumptions made about the reference standard, but also bias from ordering, sampling and the Hawthorne effect. The reference standard involved the most qualified and experienced clinicians; however, it cannot be assumed infallible. The safeguard of checking for potential diagnostic omissions and the method of arbitration addressed this fallibility and minimised the effect of human error. Bias from sampling is ideally controlled through stratified random sampling, and bias from ordering is preferably controlled through randomisation with the assessing clinicians’ assessments crossed over. Neither method was practical in this study due to capping and a pragmatic need to have the ophthalmologist see the participant last to confirm the diagnosis and management. A method to overcome the Hawthorne effect has yet to be established [32].

How the conclusions generalise to AOS in other hospitals is a further limitation given the study’s single site, the specific organisation of MREH’s AOS (other departments may not be staffed by consultant ophthalmologists) and the limited sample (prolonged absence of two assessing clinicians reduced the samples for IP optometrists C and D). The current study compared IP optometrists to a gold standard rather than a real-world standard; further work on intra-group and inter-group agreement between consultants, trainee ophthalmologists and IP optometrists would address this limitation.

Conclusions

Appropriately trained and experienced IP optometrists can diagnose and manage patients as effectively as consultant ophthalmologists in AOS. The method of comparing to a ‘staged reference standard’, including stages of arbitration and consensus-review, provides scrutiny of disagreement and clarification of diagnostic omissions and confusions, and allows consensus to be reached regarding observed variations in clinical decision-making. Cases of disagreement provided direction for improvement to local acute guidelines, training and triage. The present study develops the evidence base required to validate IP optometrists’ extended role in the high-risk field of AOS.

Summary

What was known before

Acute ophthalmic services (AOS) are under increasing demand at a time of limited resources.

Agreement studies enable validation of optometrists’ extended roles.

One study of AOS, prior to IP’s introduction, showed good agreement between two senior optometrists and a consultant ophthalmologist in diagnosis and management plans.

What this study adds

This is the first agreement study validating optometrists’ extended role in AOS carried out since IP was introduced.

The study is the largest of its kind, evidencing concordant clinical decision-making between three trained and experienced IP optometrists and eight consultant ophthalmologists, including novel data on prescribing decisions.

The method of comparison against a staged reference standard enables scrutiny of disagreement and allows consensus to be reached on variation in clinical decision-making.

Acknowledgements

The authors would like to acknowledge the participants, and the research teams at MREH and Aston University.

Funding

The study was awarded a Small Grant Scheme by the College of Optometrists.

Compliance with ethical standards

Conflict of interest

The authors declare that they have no conflict of interest.

Footnotes

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1. Blunt I, Bardsley M, Dixon J. Trends in emergency admissions in England 2004–2009: is greater efficiency breeding inefficiency? London: Nuffield Trust; 2010. https://www.kingsfund.org.uk/publications/how-nhs-performing-june-2018. Accessed 5 Feb 2020.

- 2. Office for National Statistics. Population estimates for the UK, England and Wales, Scotland and Northern Ireland: mid-2017. 2018. https://webarchive.nationalarchives.gov.uk/20180904135853/https://www.ons.gov.uk/releases/populationestimatesforukenglandandwalesscotlandandnorthernirelandmid2017?:uri=releases/populationestimatesforukenglandandwalesscotlandandnorthernirelandmid2017. Accessed 5 Feb 2020.

- 3. Smith HB, Daniel CS, Verma S. Eye casualty services in London. Eye. 2013;27:320–8. doi: 10.1038/eye.2012.297.

- 4. Royal College of Ophthalmologists. Workforce Census 2016. 2016. https://www.rcophth.ac.uk/wp-content/uploads/2017/03/RCOphth-Workforce-Census-2016.pdf. Accessed 5 Feb 2020.

- 5. Anandaciva S, Jabbal J, Maguire D, Ward D. How is the NHS performing? June 2018 quarterly monitoring report. London: The King’s Fund; 2018. https://www.kingsfund.org.uk/publications/how-nhs-performing-june-2018. Accessed 5 Feb 2020.

- 6. Ismail SA, Gibbons DC, Gnani S. Reducing inappropriate accident and emergency department attendances: a systematic review of primary care service interventions. Br J Gen Pract. 2013;63:e813–20. doi: 10.3399/bjgp13X675395.

- 7. Harper R, Creer R, Jackson J, Erlich D, Tompkin A, Bowen M, et al. Scope of practice of optometrists working in the UK Hospital Eye Service: a national survey. Ophthalmic Physiol Opt. 2015;36:197–206. doi: 10.1111/opo.12262.

- 8. Crown J. Review of prescribing, supply and administration of medicines. Final Report (Crown II Report). Department of Health, Editor. 1999. London.

- 9. Hammond CJ, Shackleton J, Flanagan DW, Herrtage J, Wade J. Comparison between an ophthalmic optician and an ophthalmologist in screening for diabetic retinopathy. Eye. 1996;10:107–12. doi: 10.1038/eye.1996.18.

- 10. Oster J, Culham LE, Daniel R. An extended role for the hospital optometrist. Ophthalmic Physiol Opt. 1999;19:351–6. doi: 10.1046/j.1475-1313.1999.00427.x.

- 11. Spry PG, Spencer IC, Sparrow JM, Peters TJ, Brookes ST, Gray S, et al. The Bristol Shared Care Glaucoma Study: reliability of community optometric and hospital eye service test measures. Br J Ophthalmol. 1999;83:707–12. doi: 10.1136/bjo.83.6.707.

- 12. Gray SF, Spry PG, Brookes ST, Peters TJ, Spencer IC, Baker IA, et al. The Bristol shared care glaucoma study: outcome at follow up at 2 years. Br J Ophthalmol. 2000;84:456–63. doi: 10.1136/bjo.84.5.456.

- 13. Banes MJ, Culham LE, Bunce C, Xing W, Viswanathan A, Garway-Heath D. Agreement between optometrists and ophthalmologists on clinical management decisions for patients with glaucoma. Br J Ophthalmol. 2006;90:579–85. doi: 10.1136/bjo.2005.082388.

- 14. Azuara-Blanco A, Burr J, Thomas R, Maclennan G, McPherson S. The accuracy of accredited glaucoma optometrists in the diagnosis and treatment recommendation for glaucoma. Br J Ophthalmol. 2007;91:1639–43. doi: 10.1136/bjo.2007.119628.

- 15. Vernon SA, Adair A. Shared care in glaucoma: a national study of secondary care lead schemes in England. Eye. 2010;24:265–9. doi: 10.1038/eye.2009.118.

- 16. Ho S, Vernon SA. Decision making in chronic glaucoma-optometrists vs ophthalmologists in a shared care service. Ophthalmic Physiol Opt. 2011;31:168–73. doi: 10.1111/j.1475-1313.2010.00813.x.

- 17. Marks JR, Harding AK, Harper RA, Williams E, Haque S, Spencer AF, et al. Agreement between specially trained and accredited optometrists and glaucoma specialist consultant ophthalmologists in their management of glaucoma patients. Eye. 2012;26:853–61. doi: 10.1038/eye.2012.58.

- 18. Roberts HW, Rughani K, Syam P, Dhingra S, Ramirez-Florez S. The Peterborough scheme for community specialist optometrists in glaucoma: results of 4 years of a two-tiered community-based assessment and follow-up service. Curr Eye Res. 2015;40:690–6. doi: 10.3109/02713683.2014.957326.

- 19. Voyatzis G, Roberts HW, Keenan J, Rajan MS. Cambridgeshire cataract shared care model: community optometrist-delivered postoperative discharge scheme. Br J Ophthalmol. 2014;98:760–4. doi: 10.1136/bjophthalmol-2013-304636.

- 20. Bowes OMB, Shah P, Rana M, Farrell S, Rajan MS. Quality indicators in a community optometrist led cataract shared care scheme. Ophthalmic Physiol Opt. 2018;38:183–92. doi: 10.1111/opo.12444.

- 21. Konstantakopoulou E, Edgar DF, Harper RA, Baker H, Sutton M, Janikoun S, et al. Evaluation of a minor eye conditions scheme delivered by community optometrists. BMJ Open. 2016;6:e011832. doi: 10.1136/bmjopen-2016-011832.

- 22. Hau S, Ehrlich D, Binstead K, Verma S. An evaluation of optometrists’ ability to correctly identify and manage patients with ocular disease in the accident and emergency department of an eye hospital. Br J Ophthalmol. 2007;91:437–40. doi: 10.1136/bjo.2006.105593.

- 23. College of Optometrists. How to use the Clinical Management Guidelines. 2020. https://www.college-optometrists.org/guidance/clinical-management-guidelines/what-are-clinical-management-guidelines.html. Accessed 5 Feb 2020.

- 24. Landis JR, Koch GG. The measurement of observer agreement for categorical data. Biometrics. 1977;33:159–74. doi: 10.2307/2529310.

- 25. Adair JG. The Hawthorne effect: a reconsideration of the methodological artefact. J Appl Psychol. 1984;69:334–45. doi: 10.1037/0021-9010.69.2.334.

- 26. Royal College of Ophthalmologists. The Common Clinical Competency Framework for Non-medical Ophthalmic Healthcare Professionals in Secondary Care. 2016. https://www.rcophth.ac.uk/wp-content/uploads/2017/01/CCCF-Acute-Emergency-Care.pdf. Accessed 5 Feb 2020.

- 27. Jones NP, Hayward JM, Khaw PT, Claoue CM, Elkington AR. Function of an ophthalmic “accident and emergency” department: results of a six month survey. Br Med J. 1986;292:188–90. doi: 10.1136/bmj.292.6514.188.

- 28. Vernon SA. Analysis of all new cases seen in a busy regional centre ophthalmic casualty department during 24-week period. J R Soc Med. 1983;76:279–82. doi: 10.1177/014107688307600408.

- 29. Vlaev I, King D, Dolan P, Darzi A. The theory and practice of ‘nudging’: changing health behaviors. Public Adm Rev. 2016;76:550–61. doi: 10.1111/puar.12564.

- 30. Dhalmann-Noor AH, Gupta N, Hay GR, Cates AC, Galloway G, Jordan K, et al. Streamlining the patient journey; The interface between community- and hospital-based eye care. Clin Gov. 2007;13:185–91. doi: 10.1108/14777270810892593.

- 31. General Optical Council. Education Strategic Review. 2019. https://www.optical.org/en/Education/education-strategic-review-est/index.cfm. Accessed 5 Feb 2020.

- 32. Sedgwick P, Greenwood N. Understanding the Hawthorne effect. BMJ. 2015;351:h4672. doi: 10.1136/bmj.h4672.