Abstract

Background

Clinical genomic implementation studies pose challenges for informed consent. Consent forms often include complex language and concepts, which can be a barrier to diverse enrollment, and these studies often blur traditional research-clinical boundaries. There is a move toward self-directed, web-based research enrollment, but more evidence is needed about how these enrollment approaches work in practice. In this study, we developed and evaluated a literacy-focused, web-based consent approach to support enrollment of diverse participants in an ongoing clinical genomic implementation study.

Methods

As part of the Cancer Health Assessments Reaching Many (CHARM) study, we developed a web-based consent approach that featured plain language, multimedia, and separate descriptions of clinical care and research activities. CHARM offered clinical exome sequencing to individuals at high risk of hereditary cancer. We interviewed CHARM participants about their reactions to the consent approach. We audio recorded, transcribed, and coded interviews using a deductively and inductively derived codebook. We reviewed coded excerpts as a team to identify overarching themes.

Results

We conducted 32 interviews, including 12 (38%) in Spanish. Most (75%) enrolled without assistance from study staff, usually on a mobile phone. Those who completed enrollment in one day spent an average of 12 minutes on the consent portion. Interviewees found the information simple to read but comprehensive, were neutral to positive about the multimedia support, and identified increased access to testing in the study as the key difference from clinical care.

Conclusions

This study showed that interviewees found our literacy-focused, web-based consent approach acceptable; did not distinguish the consent materials from other online study processes; and valued getting access to testing in the study. Overall, conducting empirical bioethics research in an ongoing clinical trial was useful to demonstrate the acceptability of our novel consent approach but posed practical challenges.

Keywords: informed consent, interview, qualitative research, multimedia, understanding, bioethics

Introduction

Implementing genomic technologies in clinical practice and evaluating their use among diverse patient populations is an important step in the translational research process (Hindorff et al. 2017). An effective informed consent process can support individual decision-making about participation (Dickert et al. 2017). However, several features of clinical genomic implementation studies pose challenges for informed consent as traditionally imagined.

Research participant understanding of consent forms is broadly recognized to be limited, especially among individuals with limited literacy or education (Flory & Emanuel 2004; Nishimura et al. 2013; Montalvo & Larson 2014). The consent process for genomics research often includes complex concepts (e.g., genetic risk), scientific language (e.g., gene mutation), and legal jargon (e.g., Genetic Information Nondiscrimination Act), which may impede decision-making and serve as a barrier to enrollment of diverse and limited literacy populations (Yu et al. 2019; Tomlinson et al. 2017; de Vries et al. 2011; Hughson et al. 2016). To improve access to research participation and facilitate individual control over the information reviewed, some genomics researchers have moved toward obtaining consent via web-based platforms (Cadigan et al. 2017; Yuen et al. 2019). While one study found that many participants are hypothetically willing to provide consent online (Gaieski et al. 2019), little is known about how well these platforms support decision-making among diverse populations.

Another challenge for clinical genomic implementation studies is that they blur the boundary between research and clinical care, which raises concerns that prospective participants may have trouble distinguishing clinical care options from research interventions (Wolf et al. 2018; Largent et al. 2012). Even if participants can distinguish their options, being offered a clinical service through a research study may raise questions about the voluntariness of consent (Henderson 2011; Yearby 2016).

Because of these challenges, it is important to evaluate non-traditional approaches to informing prospective research participants and supporting their decisions. To this end, we developed a literacy-focused, web-based consent approach for the Cancer Health Assessments Reaching Many (CHARM) study, a clinical genomic implementation study with a goal of recruiting patients from medically underserved populations. Our approach used plain language, multimedia, and separate descriptions of clinical care and research-specific activities. This manuscript describes findings from interviews with study participants about their reactions to our approach and examines the ethical implications of these reactions.

Methods

CHARM study overview

The CHARM study was part of the Clinical Sequencing Evidence-Generating Research (CSER) consortium funded by the National Human Genome Research Institute, National Cancer Institute, and National Institute on Minority Health and Health Disparities (Amendola et al. 2018). CHARM’s overarching goal was to increase access to evidence-based genetic testing for hereditary cancer in low-income, low-literacy, and minority populations by evaluating key laboratory, clinical, and behavioral interventions and modifications in a diverse primary care setting. CHARM enrolled English- and Spanish-speaking patients age 18 to 49 years at Kaiser Permanente Northwest (KPNW), an integrated healthcare delivery system in the Portland, Oregon metropolitan area, and Denver Health (DH), an integrated safety-net health system in Denver County, Colorado. Prospective participants completed a web-based family history risk assessment application, which comprised literacy-adapted versions of two clinically validated cancer risk assessment applications (Kastrinos et al. 2017; Bellcross et al. 2019), to evaluate their risk for Lynch syndrome and/or hereditary breast and ovarian cancer syndrome. Those at risk, or with insufficient family history information to evaluate risk, were invited to enroll in CHARM (NCCN 2019a; NCCN 2019b). Participants submitted a saliva kit for clinical exome sequencing for cancer risk and optional additional findings (medically actionable secondary findings and carrier findings unrelated to hereditary cancer). All participants interviewed in this study received results by phone from a study genetic counselor. The genetic counselor’s note and the test results were added to the participant’s health record, high-risk referrals were made as appropriate, and primary care providers were notified about relevant results. Outcomes were measured through health record review, baseline and follow-up surveys, and qualitative interviews.

English- and Spanish-speaking patient advisors reviewed and provided detailed feedback on study procedures and materials (Kraft et al., 2020). CHARM, including this interview sub-study, was approved with a waiver of documentation of consent by the KPNW IRB acting as a single IRB.

CHARM recruitment and consent

Prospective CHARM participants were invited into the study via email, text, postcard, phone call, provider referral, or in-person recruitment. All recruitment and consent materials were available in both English and Spanish and bilingual study staff were available. Materials were translated by a co-investigator who is a native Spanish speaker and a certified translator. The risk assessment and consent materials were on a self-directed, web-based application that could be completed at home, although prospective participants could opt to have a study staff member complete the application with them. The web-based application was developed with user-centered design principles, including responsive design and a mobile-first approach intended to allow prospective participants to easily complete it on mobile devices (Marcotte 2011; Wroblewski 2011). All participant responses and response times to the risk assessment and consent were recorded in an automated tracking system.

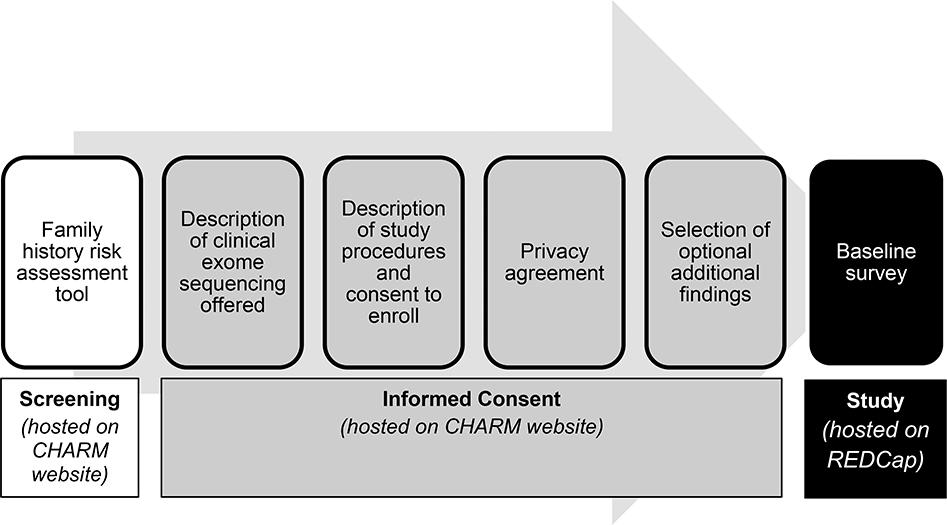

The risk assessment application, which displayed the logos of the clinically validated applications, asked about one’s family history of cancer and then presented a lay-language summary of risk results; it took those who screened eligible (n=1252) an average of 4 minutes (median 3, range 0–53). Eligible individuals proceeded to an introduction to the CHARM study and a consent approach with four sections on sequential web pages: (1) description of the clinical exome sequencing offered and information about its clinical availability, (2) description of study procedures and consent to enroll, including a reminder that the reader could ask their doctor about sequencing outside of the study, (3) privacy agreement (HIPAA authorization), and (4) selection of optional additional findings. The sequential order was intended to emphasize the clinical availability of sequencing first, then offer research participation as one option for those interested in sequencing. The information within each section was further divided into subsections and spaced to allow for ease of reading. After consenting, participants provided contact information and received a link to complete the study’s baseline survey in REDCap (Harris et al. 2019; Harris et al. 2009). Of 1252 eligible individuals, 966 (77%) consented to join CHARM. Figure 1 illustrates the stages of the enrollment process.

Figure 1.

CHARM screening and enrollment process

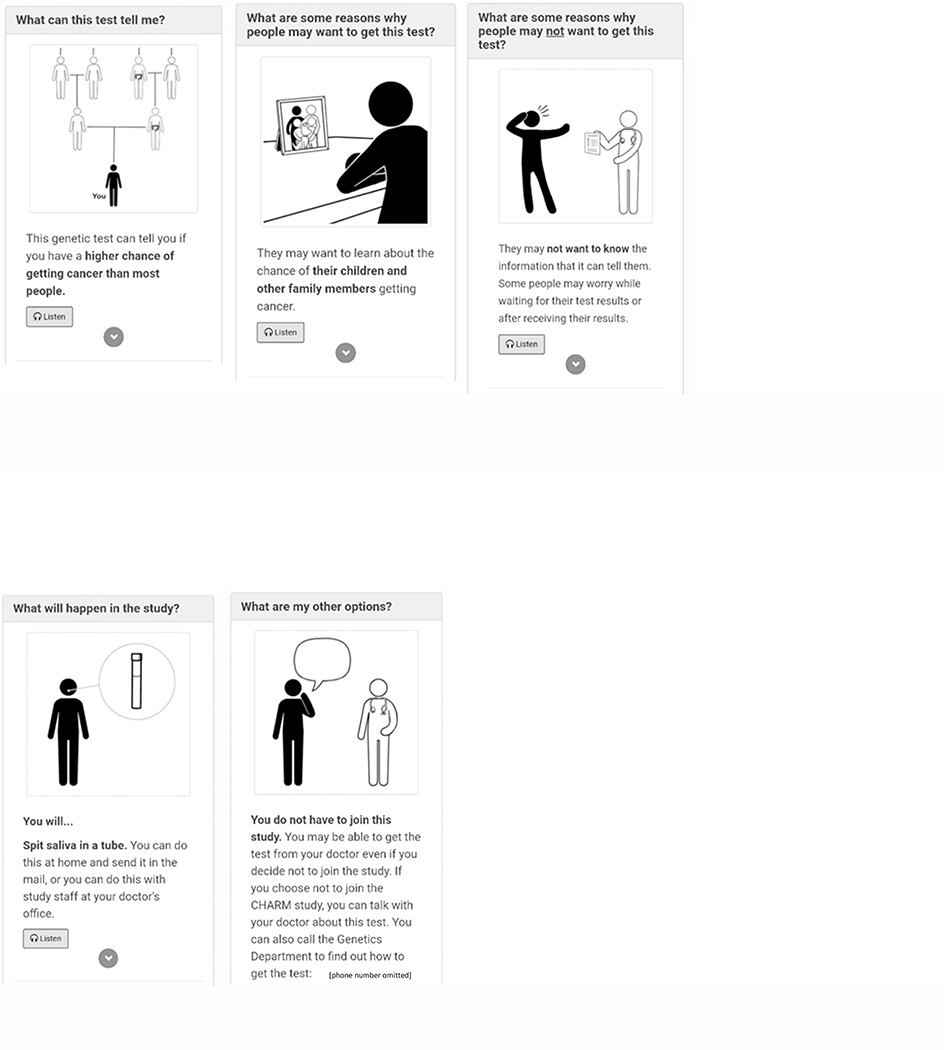

The language in the consent materials was written at an overall Flesch-Kincaid grade 6.3 reading level (section 1=grade 5.7, section 2=grade 6.3, section 3=grade 7.4, section 4=grade 6.7) and was iteratively reviewed by our patient advisory committees, as well as health literacy and content area experts on the study team (Kraft et al., 2020). It also included cartoon illustrations depicting each key point, which were developed with health communications experts at Booster Shot Media (Figure 2). The web pages also included audio recordings of the text in English and Spanish for the first three consent sections; these were added in two stages, at two and six months after CHARM recruitment began. A PDF version of the consent form is available at ClinicalTrials.gov (NCT03426878).
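For readers unfamiliar with the metric, the standard Flesch-Kincaid grade-level (FKGL) formula is reproduced below. This section does not say which software produced the scores, so this is the textbook formula rather than a description of the study team's exact tool. Because the grade is computed from pooled word, sentence, and syllable counts, the overall grade for a multi-section document need not equal the unweighted mean of the section grades (here about 6.5); longer sections and the ratio terms both influence the pooled result.

$$
\text{FKGL} = 0.39\left(\frac{\text{total words}}{\text{total sentences}}\right) + 11.8\left(\frac{\text{total syllables}}{\text{total words}}\right) - 15.59
$$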

Figure 2.

a-c. Screenshots from part 1: “A Test That Can Tell You More About Your Chance of Getting Cancer”

d-e. Screenshots from part 2: “Cancer Health Assessments Reaching Many (CHARM) Study”

Post-consent interviews

To obtain in-depth feedback about how our consent approach worked in practice, we conducted semi-structured interviews with CHARM enrollees after they enrolled but before they received clinical results, so that their responses were not influenced by their results. We anticipated that interviewing up to 36 enrollees, with about half at each clinical site and a quarter Spanish speakers, would suffice to achieve thematic saturation, even if we identified differences between groups (Guest 2006), but planned to conduct ongoing analysis and stop enrollment once saturation was achieved (O’Reilly & Parker 2013; Glaser & Strauss 1967). We began our analysis after completing about half of our interviews and used purposive sampling to ensure we reached saturation among subgroups of interviewees, at which point we stopped enrollment. We initially intended to interview individuals who declined to enroll in CHARM as well, but because very few people who were eligible for CHARM declined to join the study, we were unable to recruit decliners for interviews.

We developed a semi-structured interview guide [Appendix] by reviewing the relevant literature about informed consent and decision-making for genomics research and identifying novel aspects of our consent approach. The study team reviewed the interview guide, and we pilot-tested the guide for length and clarity.

We emailed eligible CHARM participants, followed by up to three outreach attempts by phone. Trained interviewers (four English speakers and one native Spanish speaker) conducted and audio recorded interviews by phone. English recordings were transcribed professionally and reviewed for accuracy. Spanish recordings were transcribed professionally in Spanish then translated into English by certified translators, reviewed for accuracy, and re-translated if necessary. De-identified transcripts were uploaded to the online qualitative analysis platform Dedoose for coding and analysis (www.dedoose.com).

Analysis

We developed a qualitative codebook using iterative inductive and deductive techniques (Charmaz 2006). We drafted an initial codebook based on the topic areas of the questions in our interview guide. Through high-level review of transcripts and discussion among the qualitative analysis team, we incorporated emerging interview data into the codebook. Three coders completed two rounds of test coding and proposed codebook modifications to be reviewed by the qualitative analysis team, until the coders consistently reached consensus.

Each transcript was coded independently by two coders, then reviewed until both coders reached consensus. If consensus could not be reached, a third coder served as tiebreaker. Members of the qualitative analysis team then produced summaries of codes related to novel aspects of our consent approach, which the author team iteratively discussed to identify emerging themes (Saldaña 2016). Findings related to other topics in the interview guide are reported elsewhere.

Results

Interviewee characteristics

We interviewed 32 enrollees, out of 58 invited (55% completion rate), at a mean of 21.9 days (standard deviation 11.8, range 7–63) after the date they completed the consent. Approximately half each were from KPNW (n=15, 47%) and DH (n=17, 53%). Twelve interviews (38%) were conducted in Spanish. Most interviewees were female (n=27, 84%), reported annual household income under $60,000 (n=23, 72%), and had less than a Bachelor’s degree (n=18, 56%). Fifteen (47%) identified as Hispanic/Latino(a) and nine (28%) as non-Hispanic white. Ten (31%), including eight Spanish speakers and two English speakers, had limited health literacy, as measured through self-reported answers to the BRIEF Health Literacy Screening Tool on the CHARM baseline survey (Haun et al. 2009). Complete demographics are shown in Table 1. We did not identify notable differences between groups and therefore report most of our results collectively, except as noted below with regard to individuals who received assistance.

Table 1.

Interviewee characteristics (N=32)

| Characteristic | Number | (%)¹ |
|---|---|---|
| Recruitment site | | |
| DH | 17 | (53) |
| KPNW | 15 | (47) |
| Preferred language | | |
| English | 20 | (62) |
| Spanish | 12 | (38) |
| Mean age (range) | 34 (22–48) | |
| Sex | | |
| Female | 27 | (84) |
| Male | 5 | (16) |
| Gender identity | | |
| Female | 26 | (81) |
| Male | 3 | (9) |
| Non-binary | 1 | (3) |
| No response | 2 | (6) |
| Race/ethnicity | | |
| Asian | 2 | (6) |
| Black or African American | 4 | (13) |
| Hispanic/Latino(a) | 15 | (47) |
| White or European American | 9 | (28) |
| Multiple responses | 2 | (6) |
| Highest level of education | | |
| Less than high school | 3 | (9) |
| Some high school, no diploma | 2 | (6) |
| High school graduate or equivalent | 4 | (13) |
| Some post-high school training, no degree or certificate | 6 | (19) |
| Associate college or occupational, technical, or vocational program, received degree or certificate | 3 | (9) |
| Bachelor’s degree | 10 | (31) |
| Graduate or professional degree | 2 | (6) |
| No response | 2 | (6) |
| Annual household income | | |
| Less than $20,000 | 7 | (22) |
| $20,000 to $39,999 | 7 | (22) |
| $40,000 to $59,999 | 9 | (28) |
| $60,000 to $79,999 | 2 | (6) |
| $80,000 to $99,999 | 2 | (6) |
| $100,000 to $139,999 | 1 | (3) |
| $140,000 or more | 1 | (3) |
| No response | 3 | (9) |
| Limited health literacy² | 10 | (31) |

¹ Percentages may add up to less than 100 due to rounding.

² Based on responses to the BRIEF Health Literacy Screening Tool (Haun et al. 2009).

Assistance and time to completion

Most interviewees (n=24, 75%) did not receive assistance to complete the risk assessment, consent, and baseline survey. The rest, all of whom were Spanish speakers, received assistance in person (n=6, 19%), by phone (n=1, 3%), or both (n=1, 3%). Most (n=22, 69%) completed the risk assessment, consent, and baseline survey in one day; those completing it in one day took an average of 47 minutes to complete all three parts (median 32, range 14–268) and 12 minutes to complete the consent portion (median 7.5, range 3–56). In comparison, all CHARM participants who completed the risk assessment, consent, and baseline survey in one day (n=532) took an average of 55 minutes (median 33, range 10–670) and 18 minutes to complete the consent portion (median 8, range 1–621). Times are based on when the individual advanced to the next page and do not necessarily indicate they were actively interacting with the application for that whole time.

Format of completion

Interviewees most often reported having viewed the information on a mobile phone, followed by laptop or desktop computer. A few said they had switched to a computer so they could better read the text or because they thought the formatting might not work as well on a phone. Some interviewees commented that the study set-up made participation convenient:

“You can email, you can call, you can set up when to call, you can answer the questions. You can save the questionnaires and come back to it later if you’re in a hurry. So it was a really convenient set-up. It was pretty straightforward and very easy to maneuver and the information was great.” (102)

However, several interviewees had a hard time remembering the consent materials in detail, other than making the decision to join CHARM:

“[What do you remember about the consent form?] Nothing other than I accepted participation in the study. I think that’s the only thing that I remember.” (168)

Some Spanish-speaking participants said they had not actually seen the consent materials because a researcher read the information to them and recorded their answers.

Information

Most interviewees said the information was simple and easy to understand:

It was just clear. It…wasn’t broad. It was defined and it was easy to understand everything that was being said. You didn’t have to read in between the lines. There weren’t words that you didn’t know, that kind of thing. (111)

I think it was clear and direct so that you knew what– if you were going to sign up for the study, you knew what it entailed. (121)

Most interviewees were satisfied with the amount of information they received and did not think anything was either missing or extraneous:

It’s very thorough. You know exactly what the test is for. It tells you what they expect of you, and it even tells you, ‘If you, for some reason, don’t want to know anything, then let us know, and we won’t share that information with you.’ And it also tells you, ‘There is going to be something that might be a little more serious that you’re going to find out that might be devastating.’ So, I mean, it kind of covered everything. (145)

It wasn’t, let’s say, too much. I like to read because sometimes I’m a little bit ashamed to not be understanding something and asking. So it seemed very good to me. It wasn’t exhausting. It wasn’t too much and repeating over and over the same thing. And it seemed to me that it was complete. (171)

Suggestions for improvement included adding optional additional information in hyperlinks or an FAQ section at the end. While many interviewees commented that there was a lot of information, only a few said there was too much or that it was repetitive:

I thought it was kind of a lot and kind of repetitive in some respects. I wish I could tell you exactly because I would love to but I don’t [remember]. (115)

I was like, ‘Oh, I’m not going to sit down to read all this. I’m just moving on [laughter].’ So, I mean, to me, being the type of person I am, it was a lot of information. I mean, if it would’ve just said, ‘Your information’s being used for this, this, and this,’ and leave out all the mumbo jumbo of all the legal stuff, I guess I would’ve been okay with that. (110)

A few said they wondered about some specific concepts, such as results sharing and research methods, where they felt there was not enough detail:

I definitely was curious about additional details that weren’t available, and there was no option to see more information. But I would have if there had been that option or if it was clear or evident. (109)

Some interviewees made statements suggesting they may not have read the information closely, and a few explicitly stated they had skipped ahead in the process:

I was kind of in a hurry because, like I said, I was on my lunch break. So I just kind of got straight to the meat of the stuff that they wanted answers for and answered the questions as best I could. (110)

Further conversation revealed that interviewees were not always focusing their answers on the information in the consent materials, but rather on the entire online enrollment process, including the risk assessment and baseline survey. These were often subtle comments suggesting, but not explicitly stating, a lack of distinction, making it difficult to count exactly how frequently this blurring occurred. For example, in response to a question about the information in the consent, one interviewee described how an image of a family tree in the risk assessment application had helped them to understand the family history questions:

It had, let’s say, like a little tree. I mean, it had the little branches in order to understand it all. If someone had a doubt, it explained about each step. (171)

Those who had assistance going through the information tended to focus on how the research staff member answered questions and explained things, rather than on the content of the written information:

I don’t even, honestly, remember what I read. I just know that what my doctor said was more important to me than what was in the pamphlet. (117)

Cartoon images

While some interviewees noticed and appreciated the cartoon images, others did not notice them at all. Those who did not notice them had either focused on the text, so that, when asked, they said the images had not registered, or had not seen them because a researcher read the information to them:

I didn’t pay much attention [to the cartoons]…. I was looking at the text anyway, I was focused on the text not so much on the images. (171)

Those who noticed the images said they helped clarify the information and break up the text into manageable modules:

I feel like any time there’s illustration, it’s always easier to understand and see the bigger picture and things. Because it’s one thing to see or hear something or read something, it’s another thing to see it and feel it. (102)

I did think they were really helpful because, skimming for information, you sometimes overlook what’s what. (106)

A couple of interviewees added that they found the images engaging and felt they would be helpful for visual learners.

Audio

Many interviewees did not remember seeing the option to play audio versions of the text. Some early interviewees completed interviews before the audio option was available, but some later interviewees also did not remember seeing the option. As with the images, in some cases this was because they had received assistance from research staff and therefore did not look at the screen themselves. Of those who recalled seeing the audio option, most did not listen to it but commented that they could see the value for other people, particularly for auditory learners or non-native English speakers:

Reading—and I’m guilty of this too—on a screen, sometimes I scan more than I read, so if you have an audio option that would actually be helpful to some people. Especially auditory learners who when they read it doesn’t really compute. (101)

People that are not strong readers may like that option. That would be good. (163)

One interviewee said they had listened to see if there was additional information in the audio, and another listened to some of it, but none used the audio for the entire process.

Clinical versus research distinction

When asked about the multipart structure and sequential ordering of the consent materials, many interviewees said they had not noticed the order, but none raised any concerns about it. Several said the order didn’t matter, and most of the rest said it seemed “fine.” A few commented that it helped to review background information about clinical sequencing first, before deciding whether to participate:

I kind of read it all within, like, this is introducing me to it and then this is what we’re doing.… It kind of gave me a basis to like, ‘Okay, this is what they’re going to do with that kind of stuff.’ (101)

Just under half of interviewees could not identify a difference between getting testing in CHARM versus from their doctor. Of those who drew distinctions, nearly all identified benefits from the study that would not be present clinically, most commonly related to access. Several interviewees said they did not think they could get testing from their doctor:

The difference is that my doctor doesn’t offer it to me. He has never offered it. And well, the difference [is] that CHARM has given me the opportunity.… I am very persistent, like telling them, ‘Look. My family has cancer. I have this little lump and I’m worried.’ And so on. But, they’ve never told me anything about genetics. … So with this study, I imagine, I feel like more-- like they gave me another opportunity to know more about me. (157)

Relatedly, several also said the test in CHARM would likely offer them more thorough information than if they got it from their doctor:

I feel like you guys are going to take more care and more in-depth and more in detail look into the DNA or the sample because you guys are studying it. … I feel like with a doctor, everything is based off of symptoms. In order for us to do that test, we need these symptoms. In order for us to look into even deeper, we need you to be pretty symptomatic. Just like as a preventive measure, they don’t necessarily … look into something, unless it’s something that’s symptomatic and bothers them. Where in a study you don’t necessarily have to be symptomatic or have an actual condition and you guys still look into it and with detail because you guys are studying it. So I feel like there is a huge difference. (102)

I think with the study, there’s going to be no bias. And I think that it’s going to give you, I think, more of a thorough answer. Because I don’t want any biased information, and I think with a doctor, I don’t think it’s going to be as-- I think it’s going to be more specific to just cancers or whatever. And I think with the study, it’s a wider range of what’s going on with you. (145)

Multiple interviewees also noted that the study was free or that they could participate from home without needing to visit the clinic, and one said the study would return results faster than clinical care. Two pointed to the study’s contribution to broader research questions in comparison to clinical care, and two identified privacy differences, although one perceived the study as more protective of privacy because it did not go through the clinical team, while the other perceived the study’s data sharing as less protective.

Discussion

We identified three key findings based on interviewees’ reactions to our novel consent approach: (1) our literacy-focused, web-based consent approach was acceptable to participants; (2) participants did not distinguish the consent materials from other online study processes; and (3) increased access to testing in the study was the biggest perceived difference from clinical care.

First, overall, interviewees were satisfied with the consent approach and did not have major criticisms or concerns about it. Interviewees did not report significant challenges with the web-based format, in some cases noting its convenience, which aligns with results of another study of a streamlined, web-based consent approach for hereditary cancer testing (Yuen et al. 2019). Interviewees were also neutral to positive about the simplified language, cartoon images, audio recordings, and multipart structure. This indicates that our consent approach was satisfactory to at least this subset of CHARM participants and did not impose significant barriers on this group. However, the availability of study staff to walk through the enrollment process with those who needed support may have mitigated some potential barriers. While our results would be strengthened by the perspective of decliners, the low rate of eligible individuals who did not consent suggests that the consent process was not a notable barrier to enrollment.

Second, although our interview guide was designed to focus on the information presented in the consent materials, interviewees did not always distinguish between the risk assessment, consent, and baseline survey. This illustrates that, to prospective participants, the entire enrollment process—and even post-enrollment study activities—may blur together into their overall impression of the study. As more studies begin using self-directed, web-based enrollment approaches (All of Us 2019; Rayes et al. 2019; Pang et al. 2018), it may be increasingly difficult for individuals to distinguish between different study processes that flow together in an online process and may share similar web design features. This highlights the importance of ensuring all study materials are accessible and understandable, not just the consent materials. More broadly, it reflects the reality that consent is a process and, to a prospective participant, neither begins nor ends on the page the research team designates as the “consent form.”

Third, increased access in the study was the key difference interviewees highlighted when we asked about the distinction between clinical care and research. Although the consent materials sought to distinguish between clinical care and research activities and stated that patients could seek testing from their doctors, there was a sense of skepticism among at least some interviewees that they would have meaningful clinical access. This highlights a perception among our study population that clinical access to genetic testing was an unmet need and raises a critical question for study design in settings where, outside the study, patients might have difficulty accessing the non-experimental test or treatment being investigated: How should we balance the potential for increased access against concerns about voluntary decision-making? On one hand, increasing access to proven clinical sequencing can reduce health disparities, both through research participation itself and by providing evidence to improve clinical implementation (Hindorff et al. 2017). On the other, some have raised concerns about individuals’ ability to make a voluntary decision in this setting (Yearby 2016). We suggest that overcoming access barriers may outweigh concerns about voluntariness in some contexts, particularly in this study where the intervention offered was recommended care for patients at risk of hereditary cancer (Joffe & Miller 2008; NCCN 2019a; NCCN 2019b), provided there is sufficient research ethics oversight (Wolf et al. 2018; Wolf et al. 2015).

This evaluation of our novel consent approach benefited from being part of an ongoing clinical trial; interviewees’ responses reflected not only the content of the consent materials, but the overall study context, making our findings more applicable to real-world settings. However, this approach also posed challenges for interpretation. For example, participants who enrolled with researcher assistance did not all see the multimedia support options and thus could only comment on these elements hypothetically. These aspects of our study reflect the limitations of conducting empirical bioethics research in the context of an ongoing clinical trial but also illustrate the reality of clinical research and thus are important contextual factors to consider for those designing consent approaches.

Limitations

Because few eligible individuals declined to enroll in CHARM, there were only a small number of study decliners, so we were unable to evaluate their perspectives. Additionally, our sample may be subject to selection bias: CHARM’s use of email/text recruitment may have biased our study population toward people who are more comfortable with web-based consent, and those who declined to be interviewed may have had different perspectives than those who agreed. Some research questions could have been better addressed if CHARM had included a comparison group, allowing us to directly compare the experience of individuals who went through our novel consent approach with that of individuals who went through a traditional approach. However, because the CHARM target population included many individuals with limited health literacy, we chose to use a literacy-focused approach that is supported in the literature to improve accessibility for our entire study population (Yu et al. 2019). Finally, recall may have been limited for some interviewees, especially those who were interviewed several weeks after they consented to join the study. CHARM’s structure did not allow us to interview participants immediately after consent, when recall would likely be greatest, but by conducting interviews later we were able to generate data reflecting interviewees’ overall experiences with the consent process, not just short-term recall.

Conclusion

Our literacy-focused, web-based consent approach was acceptable to our diverse group of interviewees, and their responses highlighted access barriers among this population. Empirical bioethicists should continue to build on our approach and develop and evaluate novel approaches to improve the informed consent process and ensure accessibility and acceptability across diverse patient populations.

Supplementary Material

Acknowledgments

The authors would like to thank the many members of the CHARM study team who contributed to this project. The authors also thank Gary Ashwal and Alex Thomas of Booster Shot Media for their work conceptualizing and creating the cartoon illustrations included in the consent materials. This work was funded as part of the Clinical Sequencing Evidence-Generating Research (CSER) consortium funded by the National Human Genome Research Institute with co-funding from the National Institute on Minority Health and Health Disparities and the National Cancer Institute. This work was supported by grant U01HG007292 (MPIs: Wilfond, Goddard), with additional support from U24HG007307 (Coordinating Center). The CSER consortium represents a diverse collection of projects investigating the application of genome-scale sequencing in different clinical settings including pediatric and adult subspecialties, germline diagnostic testing and tumor sequencing, and specialty and primary care. The contents of this paper are solely the responsibility of the authors and do not necessarily represent the official views of the NIH. The CSER consortium thanks the staff and participants of all CSER studies for their important contributions. More information about CSER can be found at https://cser-consortium.org/.

Contributor Information

Stephanie A. Kraft, Treuman Katz Center for Pediatric Bioethics, Seattle Children’s Hospital and Research Institute, and Department of Pediatrics, University of Washington School of Medicine, Seattle, WA

Kathryn M. Porter, Treuman Katz Center for Pediatric Bioethics, Seattle Children’s Hospital and Research Institute, Seattle, WA

Devan M. Duenas, Treuman Katz Center for Pediatric Bioethics, Seattle Children’s Hospital and Research Institute, Seattle, WA

Claudia Guerra, Department of Anthropology, History and Social Medicine, University of California, San Francisco, CA

Galen Joseph, Department of Anthropology, History and Social Medicine, University of California, San Francisco, CA

Sandra Soo-Jin Lee, Division of Ethics, Department of Medical Humanities and Ethics, Columbia University, New York, NY

Kelly J. Shipman, Treuman Katz Center for Pediatric Bioethics, Seattle Children’s Hospital and Research Institute, Seattle, WA

Jake Allen, IT (Information Technology) Department, Kaiser Permanente Northwest, Center for Health Research, Portland, OR

Donna Eubanks, IT (Information Technology) Department, Kaiser Permanente Northwest, Center for Health Research, Portland, OR

Tia L. Kauffman, Department of Translational and Applied Genomics, Kaiser Permanente Northwest, Center for Health Research, Portland, OR

Nangel M. Lindberg, Department of Translational and Applied Genomics, Kaiser Permanente Northwest, Center for Health Research, Portland, OR

Katherine Anderson, Denver Health Ambulatory Care Services, Denver, CO

Jamilyn M. Zepp, Department of Translational and Applied Genomics, Kaiser Permanente Northwest, Center for Health Research, Portland, OR

Marian J. Gilmore, Department of Translational and Applied Genomics, Kaiser Permanente Northwest, Center for Health Research, Portland, OR

Kathleen F. Mittendorf, Department of Translational and Applied Genomics, Kaiser Permanente Northwest, Center for Health Research, Portland, OR

Elizabeth Shuster, Research Data and Analysis Center, Kaiser Permanente Northwest, Center for Health Research, Portland, OR

Kristin R. Muessig, Department of Translational and Applied Genomics, Kaiser Permanente Northwest, Center for Health Research, Portland, OR

Briana Arnold, Department of Translational and Applied Genomics, Kaiser Permanente Northwest, Center for Health Research, Portland, OR

Katrina A.B. Goddard, Department of Translational and Applied Genomics, Kaiser Permanente Northwest, Center for Health Research, Portland, OR

Benjamin S. Wilfond, Treuman Katz Center for Pediatric Bioethics, Seattle Children’s Hospital and Research Institute, and Department of Pediatrics, University of Washington School of Medicine, Seattle, WA

References

- All of Us Research Program Investigators, Denny JC, Rutter JL, Goldstein DB, Philippakis A, Smoller JW, Jenkins G, and Dishman E. 2019. The “All of Us” Research Program. New England Journal of Medicine 381: 668–676.

- Amendola LM, Berg JS, Horowitz CR, Angelo F, Bensen JT, Biesecker BB, Biesecker LG, et al. 2018. The Clinical Sequencing Evidence-Generating Research Consortium: Integrating genomic sequencing in diverse and medically underserved populations. American Journal of Human Genetics 103: 319–327.

- Bellcross C, Hermstad A, Tallo C, and Stanislaw C. 2019. Validation of version 3.0 of the Breast Cancer Genetics Referral Screening Tool (B-RST). Genetics in Medicine 21: 181–184.

- Cadigan RJ, Butterfield R, Rini C, Waltz M, Kuczynski KJ, Muessig K, Goddard KAB, and Henderson GE. 2017. Online education and e-consent for GeneScreen, a preventive genomic screening study. Public Health Genomics 20: 235–246.

- Charmaz K 2006. Constructing Grounded Theory: A Practical Guide through Qualitative Analysis. London: Sage.

- de Vries J, Bull SJ, Doumbo O, Ibrahim M, Mercereau-Puijalon O, Kwiatkowski D, and Parker M. 2011. Ethical issues in human genomics research in developing countries. BMC Medical Ethics 12: 5.

- Dickert NW, Eyal N, Goldkind SF, Grady C, Joffe S, Lo B, Miller FG, et al. 2017. Reframing consent for clinical research: A function-based approach. American Journal of Bioethics 17(12): 3–11.

- Flory J, and Emanuel E. 2004. Interventions to improve research participants’ understanding in informed consent for research: A systematic review. Journal of the American Medical Association 292: 1593–1601.

- Gaieski JB, Patrick-Miller L, Egleston BL, Maxwell KN, Walser S, DiGiovanni L, Brower J, et al. 2019. Research participants’ experiences with return of genetic research results and preferences for web-based alternatives. Molecular Genetics and Genomic Medicine 7: e898.

- Glaser B, and Strauss A. 1967. The Discovery of Grounded Theory: Strategies for Qualitative Research. New York: Aldine Publishing Company.

- Guest G, Bunce A, and Johnson L. 2006. How many interviews are enough? An experiment with data saturation and variability. Field Methods 18: 59–82.

- Harris PA, Taylor R, Minor BL, Elliott V, Fernandez M, O’Neal L, McLeod L, et al. 2019. The REDCap consortium: Building an international community of software partners. Journal of Biomedical Informatics [Epub 2019 May 9]. doi: 10.1016/j.jbi.2019.103208.

- Harris PA, Taylor R, Thielke R, Payne J, Gonzalez N, and Conde JG. 2009. Research electronic data capture (REDCap) – A metadata-driven methodology and workflow process for providing translational research informatics support. Journal of Biomedical Informatics 42: 377–381.

- Haun J, Noland-Dodd V, Varnes J, Graham-Pole J, Rienzo B, and Donaldson P. 2009. Testing the BRIEF health literacy screening tool. Federal Practitioner Dec 2009: 24–31.

- Henderson GE 2011. Is informed consent broken? American Journal of the Medical Sciences 342: 267–272.

- Hindorff LA, Bonham VE, Brody LC, Ginoza MEC, Hutter CM, Manolio TA, and Green ED. 2017. Prioritizing diversity in human genomics research. Nature Reviews Genetics 89: 1–11.

- Hughson JA, Woodward-Kron R, Parker A, Hajek J, Bresin A, Knoch U, Phan T, and Story D. 2016. A review of approaches to improve participation of culturally and linguistically diverse populations in clinical trials. Trials 17: 263.

- Joffe S, and Miller FG. 2008. Bench to bedside: Mapping the moral terrain of clinical research. Hastings Center Report 38: 30–42.

- Kastrinos F, Uno H, Ukaegbu C, Alvero C, McFarland A, Yurgelun MB, Kulke MH, et al. 2017. Development and validation of the PREMM5 model for comprehensive risk assessment of Lynch syndrome. Journal of Clinical Oncology 35: 2165–2172.

- Kraft SA, McMullen C, Lindberg NM, Bui D, Shipman K, Anderson K, Joseph G, et al. 2020. Integrating stakeholder feedback in translational genomics research: An ethnographic analysis of a study protocol’s evolution. Genetics in Medicine 22: 1094–1101.

- Largent EA, Joffe S, and Miller FG. 2012. Can research and care be ethically integrated? Hastings Center Report 41: 37–46.

- Marcotte E 2011. Responsive Web Design. New York: A Book Apart.

- Montalvo W, and Larson E. 2014. Participant comprehension of research for which they volunteer: A systematic review. Journal of Nursing Scholarship 46: 423–431.

- National Comprehensive Cancer Network (NCCN). 2019a. Clinical Practice Guidelines in Oncology: Genetic/Familial high-risk assessment: breast and ovarian V3. In: NCCN; 2019.

- National Comprehensive Cancer Network (NCCN). 2019b. Clinical Practice Guidelines in Oncology: Genetic/Familial high-risk assessment: colorectal V1. In: NCCN; 2019.

- Nishimura A, Carey J, Erwin PJ, Tilburt JC, Murad MJ, and McCormick JB. 2013. Improving understanding in the research informed consent process: a systematic review of 54 interventions tested in randomized control trials. BMC Medical Ethics 14: 28.

- O’Reilly M, and Parker N. 2013. ‘Unsatisfactory saturation’: A critical exploration of the notion of saturated sample sizes in qualitative research. Qualitative Research 13: 190–197.

- Pang PC, Chang S, Verspoor K, and Clavisi O. 2018. The use of web-based technologies in health research participation: Qualitative study of consumer and researcher experiences. Journal of Medical Internet Research 20: e12094.

- Rayes N, Bowen DJ, Coffin T, Nebgen D, Peterson C, Munsell MF, Gavin K, et al. 2019. MAGENTA (Making Genetic testing accessible): a prospective randomized controlled trial comparing online genetic education and telephone genetic counseling for hereditary cancer genetic testing. BMC Cancer 19: 648.

- Saldaña J 2016. The Coding Manual for Qualitative Researchers. London: Sage.

- Tomlinson AN, Skinner D, Perry DL, Scollon SR, Roche MI, and Bernhardt BA. 2016. “Not tied up neatly with a bow”: Professionals’ challenging cases in informed consent for genomic sequencing. Journal of Genetic Counseling 25: 62–72.

- Wolf SM, Amendola LM, Berg JS, Chung WK, Clayton EW, Green RC, Harris-Wai J, et al. 2018. Navigating the research-clinical interface in genomic medicine: analysis from the CSER Consortium. Genetics in Medicine 20: 545–553.

- Wolf SM, Burke W, and Koenig BA. 2015. Mapping the ethics of translational genomics: Situating return of results and navigating the research-clinical divide. Journal of Law, Medicine & Ethics 43: 486–501.

- Wroblewski L 2011. Mobile First. New York: A Book Apart.

- Yearby RA 2016. Involuntary consent: conditioning access to health care on participation in clinical trials. Journal of Law, Medicine & Ethics 44: 445–461.

- Yu JH, Appelbaum PS, Brothers KB, Joffe S, Kauffman TL, Koenig BA, Prince AE, et al. 2019. Consent for clinical genome sequencing: considerations from the Clinical Sequencing Exploratory Research Consortium. Personalized Medicine 16: 325–333.

- Yuen J, Cousens N, Barlow-Stewart K, O’Shea R, and Andrews L. 2019. Online BRCA1/2 screening in the Australian Jewish community: a qualitative study. Journal of Community Genetics [Epub 2019 Dec 26]. doi: 10.1007/s12687-019-00450-7.
