Abstract

Some environmental influences, including intentional interventions, have shown persistent effects on psychological characteristics and other socially important outcomes years and even decades later. At the same time, it is common to find that the effects of life events or interventions diminish and even disappear completely, a phenomenon known as fadeout. We review the evidence for persistence and fadeout, drawing primarily on evidence from educational interventions. We conclude that 1) fadeout is widespread, and often co-exists with persistence; 2) fadeout is a substantive phenomenon, not merely a measurement artefact; and 3) persistence depends on the types of skills targeted, the institutional constraints and opportunities within the social context, and complementarities between interventions and subsequent environmental affordances. We discuss the implications of these conclusions for research and policy.

Keywords: fadeout and persistence, interventions, education

INTRODUCTION

The possibility that time-limited experiences can alter a person’s life trajectory has interested psychological researchers and the public for generations. Prominent examples include Freud’s (1924) writings on the hypothesized role of adverse childhood experiences in the etiology of hysteria; Bowlby’s (1973) research on the consequences of separation and attachment; adoption studies that compared adult siblings who had been reared in different homes (Bouchard et al., 1990); studies of the influence of early childhood educational experiences on adult labor market outcomes (Heckman, 2006); and research on the contribution of childhood home and contextual experiences to the intergenerational transmission of inequality (Chetty & Hendren, 2018; Felitti et al., 1998).

Despite this long-standing interest, psychology lacks a strong general scientific framework for understanding the circumstances under which the effects of a childhood experience persist (with a child continuing to do better or worse than they would have done had they not had the experience), or fade out (with a child’s longer-run outcomes not depending on whether or not they had the experience). This is evident from seemingly contradictory ideas in the field. For example:

Some argue that our default expectation should be that the effects of treatments (e.g., higher-quality schooling) that change psychological characteristics are transitory because the underlying traits (Costa & McCrae, 1980; Jensen, 1998) and environmental experiences (Campbell & Frey, 1970) that produce individual differences in the first place remain unchanged. Others argue for persistence as the default expectation, because earlier levels of psychological characteristics tend to be strongly correlated with, and likely affect, later levels of psychological characteristics (Duncan et al., 2007).

The temporal stability of many psychological characteristics has been taken both as evidence that potential treatments that change those characteristics will have persistent effects (Roisman & Fraley, 2013) and as evidence that treatment effects will likely fade out (Bailey, Watts, Littlefield, & Geary, 2014).

Some have argued that the benefits from childhood interventions will fade out if, after the intervention ends, children enter environments that fail to support the skills developed by the intervention (Currie & Thomas, 2000), but others have argued that the most learning-conducive environments will produce rapid fadeout of the effects of prior interventions because they give students who did not receive the intervention a chance to be “treated” (Campbell & Frey, 1970; Bailey, Duncan, Odgers, & Yu, 2017).

These contradictions reflect, in part, variation in definitions of persistence and fadeout, the nature of the interventions, the developmental periods in which they occur, the psychological domains studied by these researchers, and, of course, the differing training, prior beliefs, experiences, and biases of the researchers themselves.

The purpose of this review is to describe the current state of research on the persistence and fadeout of the effects generated by interventions. The primary focus of this review is on educational interventions, although we will also discuss some non-educational interventions when they help elucidate the potential mechanisms through which specific kinds of educational interventions might operate, or might help us understand why the effects of educational interventions persist or fade out. Our goal is to develop a set of concepts, terms, and hypotheses that can be applied across domains and tested empirically.

We begin by providing more precise definitions of fadeout and persistence and reviewing empirical research on their patterns. We conclude that some degree of fadeout is the norm in interventions targeting psychological outcomes, such as skills, interests, tendencies, capacities, and beliefs, but that the relatively small number of longer-run follow-ups of initially effective interventions often show at least some degree of persistence.

We then review explanations for fadeout in interventions targeting skills, capacities, and opportunities of children and youth. Some researchers consider fadeout to be a mere measurement artefact; others attribute fadeout to substantive psychological and social processes. We conclude that while its precise magnitude and timing can be sensitive to measurement, fadeout is real and in need of explanation. However, how fadeout is shaped by substantive psychological and social processes is likely to differ across kinds of interventions, populations, and subsequent experiences.

Next, we discuss mechanisms for persistence, which include: 1) intervening on the kinds of skills, capacities, and beliefs likely to generate persistent effects; 2) the removal of post-intervention constraints and/or the realization of opportunities afforded by institutions; and 3) the features of post-intervention environments most likely to support persistence.

Finally, we discuss implications of this literature for future research into persistence and fadeout, as well as implications for policy. Most notably, a strong model-based theory of persistence and fadeout would be useful for both scientific and applied purposes, but can only be constructed by increasing the number of intervention studies that include long-term follow-up assessments of outcomes and assessments of the mechanisms listed above.

Terminology

Intervention “impacts” or “effects” are typically defined as the differences in outcomes among the group of people who were offered (or actually received) some treatment relative to a group of people who were not offered that treatment. Researchers have used the term “fadeout” to refer to a variety of associated but distinct phenomena related to the time course of effects following the completion of an intervention. For some, fadeout is a dichotomous characterization of whether or not the longer-run effects of initially effective interventions are essentially null across a range of longer-run outcomes (Heckman, 2017). In other cases, and for the purposes of the current review, it refers to a pattern of diminishing intervention impacts following the end of treatment (Elango, García, Heckman, & Hojman, 2015). This latter definition allows for fadeout and persistence to co-exist for the same intervention, for the same sample, and even across measurement occasions for the same outcome.

Co-existence occurs when fadeout-generating processes such as forgetting co-occur with persistence-generating processes such as remembering and transfer of learning (Kang et al., 2018). For example, the process of beginning to learn a new language often involves a sometimes frustrating combination of learning, forgetting recently learned words, using previously acquired knowledge to make inferences about new knowledge (e.g., conjugating new verbs and spelling new words), and hopefully some remembering. Despite the setbacks, educators hope that students will develop more of a command of the new language than if they had not attempted to learn it in the first place.

This co-occurrence-based conceptualization of persistence and fadeout also has implications for the distinction between two important concepts in the fadeout literature – “emergence” and “reemergence.” We restrict our use of “reemergence” to cases in which intervention impacts are initially favorable, then fade out, but subsequently reemerge within the same domain. A version of reemergence for which there is strong evidence is “savings,” the phenomenon in memory research whereby fewer trials are required to learn a list of items to mastery after the list has already been mastered a first time (for review, see Murre & Dros, 2015).

We argue that in the case of intensive multifaceted interventions, it is more common to find “emergence”; in other words, some degree of fadeout within one set of domains (e.g., academic and social skills) is followed by the emergence of impacts in related but distinct domains (e.g., high school graduation, adult earnings, or physical and mental health). Emergence may occur for a variety of reasons, including persistent impacts on key, often unmeasured, constructs directly affected by the intervention, and indirect effects of an intervention on later constructs via targeted and measured skills whose own impacts may fade out completely.

Although impacts are typically defined as the differences in outcomes between groups of people who did or did not receive a treatment, experts in causal inference propose a different, individual-specific, definition. In their view, the effect of an intervention is the difference between a treated person’s outcomes relative to an imaginary (i.e. “counterfactual”) world in which that same person did not receive the treatment (or received a different kind of treatment). Since we cannot observe imaginary worlds, we generally use the outcomes of a comparison group as a stand-in for a person’s own counterfactual outcomes (Rubin, 2004).

It is useful to think about fadeout in the context of a randomized experiment. While most intervention research reports provide careful descriptions of the treatment, they often fail to provide an equally thorough description of counterfactual conditions (e.g., the experiences of the control groups). This can be a problem, because the nature of counterfactual conditions has important implications for understanding the magnitude and time path of intervention impacts in the real world. When conditions in the absence of the intervention benefit control-group members, impact estimates (i.e., differences between the treatment and control groups) are smaller than would otherwise be the case. Examples of factors benefiting control-group members include maturation (e.g., typically developing children in the control group for an intervention to increase self-regulation would have developed similar levels of self-regulation skills in any case); the availability of alternative interventions (e.g., if children who are eligible for a reading tutoring program but are not chosen for that program receive other additional services from the school); and, in the case of an active control group, exposure to the key ingredients responsible for the program’s success.

Fadeout may even occur when treatment-group individuals enjoy rapid improvement on an outcome of interest following the end of an intervention, as long as members of the control group improve more rapidly. As we will see in the next section, this “catch-up” pattern of fadeout stemming from more rapid post-treatment improvement for control-group, as opposed to treatment-group, children is probably the norm in the case of early childhood educational interventions targeting academic skills.
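The catch-up pattern can be made concrete with a small numerical sketch. The group means below are hypothetical values on an arbitrary scale, chosen only for illustration and not taken from any study; the function names are likewise our own. The sketch shows the impact (the treatment-minus-control difference) shrinking after the end of treatment even though the treatment group keeps improving at every wave.

```python
# Hypothetical group means on an arbitrary scale; NOT data from any study.
waves = ["pretest", "end of treatment", "1 year later", "2 years later"]
treatment = [10.0, 18.0, 24.0, 29.0]
control = [10.0, 14.0, 22.0, 28.5]


def impacts(treated, comparison):
    """Treatment-minus-control difference at each wave."""
    return [t - c for t, c in zip(treated, comparison)]


def gains(scores):
    """Wave-to-wave change within a single group."""
    return [later - earlier for earlier, later in zip(scores, scores[1:])]


effect = impacts(treatment, control)
treatment_gains = gains(treatment)
control_gains = gains(control)

for wave, diff in zip(waves, effect):
    print(f"{wave:>18}: impact = {diff:.1f}")
print("treatment gains:", treatment_gains)
print("control gains:  ", control_gains)
```

In this illustration the treatment group gains at every interval, but the control group gains more in each post-treatment interval, so the impact declines without any loss of skill in the treatment group.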

HOW WIDESPREAD IS FADEOUT?

Fadeout and persistence are observable at several timescales: minutes in the case of memory (Ebbinghaus, 1885); months and years in the case of many academic interventions; and even generations, as with the introduction of the food stamp program in the United States in the 1960s and 1970s (Hoynes et al., 2016) and famous early childhood education programs such as Perry Preschool. We divide our review into interventions focused on specific skills, capacities, and beliefs, and then on more general education and other environmental interventions. Basic descriptive knowledge on the temporal pattern of impacts with these kinds of interventions is hindered by the fact that relatively few evaluations measure outcomes beyond the end of their treatments.

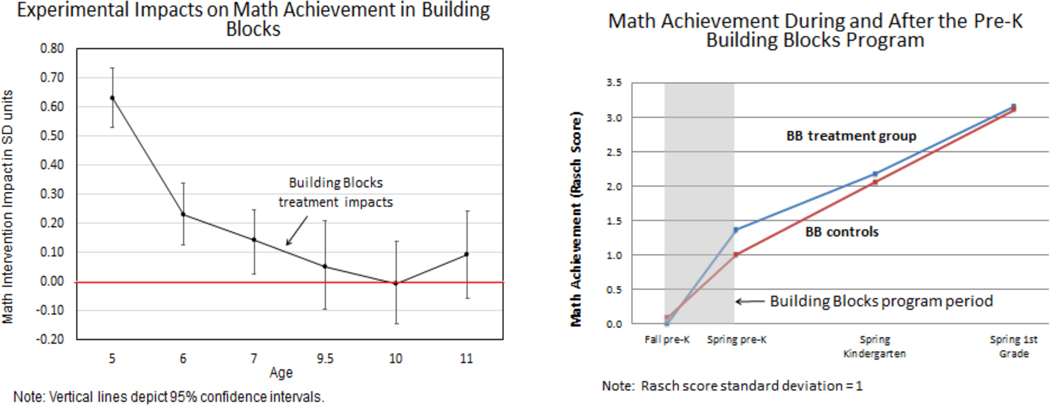

We begin our look at skill-targeted interventions with data from an evaluation of one implementation of an unusually well-developed pre-K mathematics curriculum called Building Blocks (Clements et al., 2011, 2013). One of Building Blocks’s evaluations involved random assignment of the curriculum at the school level to 834 students in 30 elementary schools serving low-income neighborhoods in either Buffalo, New York or Boston, Massachusetts.1 Students’ mathematics achievement was measured at the beginning and end of the preschool year and at the end of kindergarten, first grade and fifth grade, and during the fourth-grade year. Drawn from Clements et al. (2013) and Bailey et al. (2016), Figure 1a shows that treatment/control differences at the end of the pre-K year amounted to .63 SD – a large impact.

Figures 1a and 1b.

Treatment/control differences (1a) and Treatment and control group trajectories (1b) in the Building Blocks/TRIAD intervention

But Figure 1a also shows that this large initial treatment effect at the end of preschool quickly faded, to about 40% of its initial value by the end of first grade and almost completely by fourth grade. Figure 1b recasts Building Blocks data through age 7 by showing vertically scaled math scores separately for treatment and control groups. The more rapid growth in math skills across the pre-K year for the treatment relative to the control group produces the large end-of-treatment effect shown in Figure 1a. Between the end of treatment and the end of first grade, math scores grow for both groups but they grow faster, on average, for control- than treatment-group children. Thus, the fadeout observed in Figure 1a appears to be the result of control-group catch-up.

By and large, meta-analyses of interventions targeting specific skills also show that end-of-treatment impacts begin to fade out quickly and, in some cases, disappear completely. The case of educational and environmental interventions is more difficult to summarize succinctly, in part because differential fadeout is observed from one domain to the next (e.g., academic vs. socioemotional skills) and in part because long-run outcomes such as completed schooling, labor market success and health in adulthood lack closely-associated counterpart domains in childhood.

Widespread fadeout in skill-focused interventions?

A pattern of declining treatment effects has been observed in many experiments testing the effects of interventions designed to boost children’s academic skills:

In a meta-analysis of 51 studies of interventions that attempted to boost student learning, Hattie and colleagues (1996) estimated an average end of treatment impact of .45 SD. However, in studies that followed students up after the end of treatment (with follow-up intervals averaging between 3 and 4 months after the end of treatment), the average impact was .10 SD.

Of the 36 evaluations assessing impacts on phonological awareness included in Bus & van IJzendoorn’s (1999) study of phonological awareness training programs, only seven assessed “long-term” effects, which, on average, occurred only 8 months after the end of the programs. Effect sizes fell by about half of their end-of-treatment values in these follow-up assessments. In the case of reading outcomes, the measurement period was longer (averaging 18 months), but so too was the amount of fadeout (nearly 80%).

Protzko (2015) conducted a meta-analysis of 23 different evaluations of interventions targeting IQ. End-of-treatment effect sizes averaged .37 SD, but these effects declined by about .10 SD per year. Limiting the sample to evaluations of interventions that could reasonably be expected to raise IQ increases the end-of-treatment impact estimate to .52 SD, but also the amount of fadeout (.13 SD per year). Protzko finds that IQ fadeout is caused mainly by declines in the IQs of treatment-group children across the follow-up period. However, because IQ scores are age normed, it is not clear whether treatment groups experienced a net loss of skill, on average, following the interventions included in this meta-analysis.

Takacs and Kassai (2019) summarize the large literature on interventions designed to promote children’s executive function skills. As with the other literature reviews, only a small fraction of studies (15 of 90) included follow-up assessments, which were conducted, on average, 22 weeks after the end of treatment. In contrast to end-of-treatment effect sizes, which were substantial, the authors did not find convincing evidence that impacts persisted on these follow-up assessments.

All of these meta-analyses of a diverse set of skill-targeted interventions suggest that some degree of fadeout is the norm. Although it is difficult to generalize to the universe of skill-based interventions, we know of no meta-analyses of interventions targeting specific skills in children, with substantial variation in follow-up period, in which full persistence prevails.
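The magnitudes reported in these meta-analyses can be put on a common footing with simple arithmetic. The sketch below is illustrative only: the helper names are our own, the Hattie et al. comparison is between averages that may come from different sets of studies, and the "years until zero" calculation assumes a constant linear decline, which is a simplifying assumption rather than a claim made by Protzko (2015).

```python
def share_faded(end_of_treatment_sd, follow_up_sd):
    """Proportion of the end-of-treatment impact no longer present at follow-up."""
    return 1.0 - follow_up_sd / end_of_treatment_sd


def years_until_zero(end_of_treatment_sd, decline_sd_per_year):
    """Years for an impact to reach zero IF the decline were constant and linear.

    The linearity is an assumption made here purely for illustration.
    """
    return end_of_treatment_sd / decline_sd_per_year


# Hattie et al. (1996): .45 SD at end of treatment, .10 SD at follow-up.
hattie_share = share_faded(0.45, 0.10)

# Protzko (2015): .37 SD declining about .10 SD per year (all evaluations);
# .52 SD declining about .13 SD per year (restricted sample).
protzko_all = years_until_zero(0.37, 0.10)
protzko_restricted = years_until_zero(0.52, 0.13)

print(f"Hattie et al.: {hattie_share:.0%} of the average impact absent at follow-up")
print(f"Protzko, all evaluations: about {protzko_all:.1f} years to zero (linear assumption)")
print(f"Protzko, restricted sample: about {protzko_restricted:.1f} years to zero (linear assumption)")
```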

Persistence in some intensive environmental interventions

In contrast to the ubiquity of fadeout in interventions that target specific skills, large bodies of literature document substantial persistent effects of some interventions that explicitly target or unintendedly affect a broader set of skills and capacities. Adoption in childhood is a dramatic example, with adoption into relatively more advantaged families associated with improvements across a number of domains, including cognitive skills (Kendler et al., 2015), health-related behavior, educational attainment, and earnings (Sacerdote, 2007). It should be noted that, relative to typical education interventions, adoption is remarkably intensive, both in terms of duration and ubiquity.

Less intensive environmental interventions can also generate persistent socially significant effects. Positive early life treatments, such as iodine fortification (Adhvaryu et al., 2016), and negative early life shocks, such as pneumonia exposure in infancy (Bhalotra & Venkataramani, 2015; for review, see Almond, Currie, & Duque, 2018), are associated with changes in adult labor market outcomes in studies based on strong quasi-experimental designs, a finding that has appeared in various forms at least since Elder’s (1974) classic study of children affected by the Great Depression. Differences between identical twins on a variety of psychological characteristics show stability across several years (Bailey & Littlefield, 2016; Tucker-Drob & Briley, 2014; von Stumm & Plomin, 2018), which is consistent with the notion that changes induced by environments that differ between children raised together can persist across time.

Some economic interventions appear to generate long-run benefits as well. Hoynes et al. (2016) take advantage of the fact that the U.S. food stamp program was rolled out on a county-by-county basis between the early 1960s and mid-1970s to show that children conceived or born into counties with the program already in place reported lower levels of cardiovascular symptoms in their 30s and 40s than children living in counties that adopted the food stamp program later in childhood. In Bouguen and colleagues’ (2018) review of cash transfers and health interventions in developing countries, several conditional cash transfer programs were identified as producing long-term impacts on cognitive, educational, and labor market outcomes. Moreover, some unconditional cash transfer programs generated long-term impacts only when they were combined with intensive training and support.

A set of clinical interventions presents a notable potential exception to the regular pattern of fadeout described above. These interventions include preventive and therapeutic clinical interventions for antisocial behavior (Farrington & Welsh, 2003; Sawyer, Borduin, & Dopp, 2015), and social-emotional learning interventions based in schools (Taylor et al., 2017). Often involving both skill building and environmental changes, they have sometimes generated impacts persisting for months or years, with many impacts remaining stable in magnitude from the end of treatment to long-run follow-ups. Similar patterns of strong persistence have been found in adulthood for cognitive behavioral therapy (van Dis et al., 2019) and for (largely clinical) interventions that have estimated impacts on components of adult personality (Roberts et al., 2017).

Persistence in education interventions

Some interventions designed to improve cognition and behavior appear to generate persistent benefits. For example, evaluations have shown that some early childhood education programs promote educational attainment in early adulthood (for reviews, see Elango et al., 2015; McCoy et al., 2017), while additional years of education at the end of schooling appear to improve cognitive test scores (Ritchie & Tucker-Drob, 2018) and earnings (Card, 2001) in adulthood.

Some of the best-known instances of persistence of educational interventions show an interesting pattern of fadeout, followed by the emergence of long-term effects, although not always on the same kinds of developmental outcomes (e.g., Campbell et al., 2014; Heckman, 2006; Schweinhart et al., 2005). In the case of teacher effects, Jacob, Lefgren and Sims (2010) concluded that teacher-induced (value-added) learning has low persistence, with three-quarters or more of teacher effects on achievement test scores fading out within one year. However, Chetty, Friedman and Rockoff (2011, 2013) found longer-run impacts on both attainment and behavior when the same children were tracked through adulthood via administrative records.

A pattern of fadeout and reemergence in young adulthood has also been documented for early social skills training. The Fast Track program provided a range of behavioral and academic services to a random subset of 1st grade boys exhibiting conduct problems. Impacts in elementary school were uniformly positive, producing improvements in the boys’ prosocial behaviors and classroom social competence and reductions in their aggressive and oppositional behaviors (Conduct Problems Prevention Research Group, 1999a, 1999b). By middle or high school, most of these effects had disappeared for all but the highest-risk boys (CPPRG, 2011), although impacts on some of these outcomes reappeared when the participants were assessed in their mid-20s (Dodge et al., 2015).

All in all, it appears that some well-designed and implemented cognitive, social and emotional interventions produce immediate positive impacts on child and adolescent outcomes. Sharp reductions in subsequent intervention effects are typically observed among the regrettably small fraction of interventions where follow-up data are available. And, in a handful of the most rigorously implemented and evaluated early childhood interventions, this pattern of rapid intervention-effect fadeout has been followed by the detection of impacts in other domains in adulthood, such as attainment, behavior, and sometimes health.

METHODOLOGICAL AND SUBSTANTIVE EXPLANATIONS OF FADEOUT AND PERSISTENCE

Explanations of the pattern of diminishing treatment effects after the end of an initially successful intervention fall along a continuum (Bailey, 2019). At one end is the idea that fadeout is an artefact of measurement and requires no substantive explanation. At the other end are purely substantive explanations (e.g., fadeout is a result of forgetting and might be mitigated by teaching children skills to mastery). In the middle are explanations that view fadeout as either artefactual or “real” depending on how the explanation is framed. For example, some observed fadeout may result as an artefact of teaching to the test – impacts on children’s test-specific knowledge might persist following the end of an effective intervention, but that increase in knowledge might not be useful in children’s later academic pursuits. We begin our discussion by highlighting some of the methodological problems associated with tracking patterns of fadeout and persistence. Brief descriptions of these explanations, and why we conclude that each of them is insufficient to provide a full account of fadeout, appear in Table 1.

Table 1.

Proposed explanations of fadeout as a methodological artefact.

| Explanation | Description | Why likely insufficient |
|---|---|---|
| Artefactual Explanations | | |
| Misleading effect size reporting | Changes in standardized effect sizes over time can be misleading, particularly when variance on the underlying construct increases with age. | Fadeout has been observed on a variety of measures, scaling decisions, constructs, and age ranges. Effects sometimes reverse in sign. |
| Publication bias | If follow-up assessments are more likely to be conducted in the case of evaluations showing larger end-of-treatment impacts, then the end-of-treatment impacts in studies with follow-up assessments would be positively selected on sampling error and thus upwardly biased. | Publication bias can make fadeout look more or less severe. Fadeout is observable in quasi-experimental designs for which all outcome waves have been collected pre-analysis. |
| Part-Artefactual Explanations | | |
| Over-alignment | Initial over-alignment between treatments and outcomes creates a spuriously large estimate of end-of-treatment impacts. | Fadeout has been observed for combinations of broad treatments and measures (including measures other than cognitive tests), where a strong degree of alignment is unlikely. |
| Multidimensionality | Interventions may meaningfully affect some psychological attribute, but at follow-up, longer-run impacts are misestimated because a different construct is measured. | Fadeout has been observed on outcome measures other than psychological attributes with straightforward interpretations such as employment and earnings. |

Fadeout as an Artefact of Misleading Effect Size Reporting

Because fadeout is defined as a temporal pattern of diminishing effect sizes following the end of an effective intervention, a first question is whether fadeout is robust to the types of measures used to calculate effect sizes. For example, for various reasons, children progress far more rapidly on vertically-scaled standardized achievement tests in the early grades than in the later grades when progress is measured in standard deviation units. Using national norming data on seven major standardized tests in reading and six tests in math, Hill, Bloom, Black, and Lipsey (2007) calculate annual growth rates of over a full standard deviation per year in kindergarten but less than one third of a standard deviation per year in the middle-school years. Thus, when expressed as months of schooling, a treatment effect of x standard deviations in kindergarten would be the same as a treatment effect of x/3 standard deviations in middle school. Moreover, test score variance also increases with age on some tests, which means that a standard deviation group difference is fewer raw points on a test in a given year than in the following year. Thus, treatment impacts reported in standard deviation units could in principle fade over time, even as months of schooling or unstandardized group differences on some policy-relevant scale (e.g., predicted future earnings, rank in the distribution of effect sizes of interventions at some later age) remain the same or increase.
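The rescaling argument can be illustrated with a short calculation. This is a minimal sketch under stated assumptions: the growth rates are round illustrative values consistent with the norms described above (one SD per year in kindergarten, one third of an SD per year in middle school), the 12-month year is our own convention, and the function name is hypothetical.

```python
import math

MONTHS_PER_YEAR = 12.0  # assumption: annual growth norms span 12 months


def months_of_schooling(effect_sd, annual_growth_sd):
    """Express an effect in SD units as months of typical growth at that age."""
    return effect_sd / annual_growth_sd * MONTHS_PER_YEAR


# Illustrative round numbers, not the exact published norms.
growth_kindergarten = 1.0        # SD per year
growth_middle_school = 1.0 / 3   # SD per year

x = 0.30  # hypothetical end-of-treatment effect in kindergarten, in SD units
kindergarten_months = months_of_schooling(x, growth_kindergarten)
middle_school_months = months_of_schooling(x / 3, growth_middle_school)

# Apparent fadeout in SD units if the effect is x in kindergarten and x/3 later.
apparent_fadeout_sd = 1 - (x / 3) / x
same_in_months = math.isclose(kindergarten_months, middle_school_months)

print(f"kindergarten: {kindergarten_months:.1f} months")
print(f"middle school: {middle_school_months:.1f} months")
print(f"apparent fadeout in SD units: {apparent_fadeout_sd:.0%}")
print("equal in months of schooling:", same_in_months)
```

Under these assumptions, an effect that falls by two thirds in standard deviation units is unchanged when expressed in months of schooling, which is why the choice of scale matters when describing fadeout.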

Changes in effect sizes across time are notoriously difficult to study. Bond and Lang (2013, 2017) show that apparent growth in black-white gaps on achievement tests in the early school years is sensitive to how the tests are scaled and may in large measure be an artefact of measurement error in test scores in the early grades. Although a pattern of decreasing measurement error with age would bias end-of-treatment impacts downward, making fadeout look less dramatic, the more general idea that changes in group differences can be highly sensitive to scaling decisions should be considered in interpreting the literature on fadeout and persistence.

Although the influence of test score construction and reporting on fadeout and persistence is not fully understood, there are good reasons to think that these explanations are insufficient to account for fadeout. A first reason is that test score fadeout expressed in standard deviations tends to be far more rapid, on average, than year-to-year fluctuations in growth rates, falling by 50% or more in the first year after the end of treatment in reviews of educational interventions targeting children in early childhood and the early school years (Bailey et al., 2018; Li et al., 2017). Second, within the set of interventions measured with vertically-scaled achievement tests, changes in test score variance over time are too small to fully explain fadeout (Cascio & Staiger, 2012). Third, fadeout can be readily observed on measures that are not vulnerable to the problems of vertically-scaled achievement tests, such as tasks designed to measure cognitive processes (Bailey et al., 2019; Roberts et al., 2016), life satisfaction surveys (Lucas, 2007), and economic consumption (Bouguen et al., 2018). Finally, a recent evaluation of a preschool program reported estimated effects on achievement test scores that flipped from positive at the end of pre-K to negative by second grade (Lipsey, Farran, & Durkin, 2018), a pattern difficult to reconcile with the scaling-based explanations for fadeout above. Still, the possibility that fadeout may be sensitive to the use of different scales, and that different scales may be more useful for researchers with different goals (e.g., researchers interested in the development of skill-building for its own sake vs. researchers interested in long-run impacts on socially important outcomes influenced by the accumulation of human capital), is an important reason to consider a variety of scales when comparing earlier impacts to later impacts.

Fadeout as an Artefact of Publication Bias

Protzko (2015) notes that the temporal pattern of impacts following the end of an intervention may be sensitive to publication practices. In particular, publication bias will reduce the apparent magnitude of fadeout if positive impacts are more likely than null impacts to be published following the end of the evaluation of some intervention. On the other hand, other kinds of publication selection could lead to an over-estimation of fadeout. For example, follow-up studies showing that an initially promising intervention faded out over time could be surprising, and more likely to be viewed as novel and publishable. Furthermore, if follow-up assessments are more likely to be conducted in the case of evaluations showing larger end-of-treatment impacts (e.g., if funding agencies support follow-ups only after knowing that a treatment worked initially), then the end-of-treatment impacts in studies with follow-up assessments would be positively selected on sampling error and thus upwardly biased. Subsequent effect sizes could regress to smaller magnitudes, creating the illusion of fadeout, even if the true impacts of interventions remained stable following the end of the intervention.
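The selection mechanism described in this paragraph can be illustrated with a small simulation. This is a sketch under assumptions of our own: every parameter value (true effect, standard error, follow-up threshold, number of studies) is hypothetical, the true effect is held constant over time by construction, and estimation error is assumed to be independent and normally distributed at each wave.

```python
import random
import statistics


def simulate(n_studies=20000, true_effect=0.20, se=0.15,
             followup_threshold=0.30, seed=0):
    """Mean estimated impacts among studies selected for follow-up.

    The true impact is identical at both waves. A study receives a follow-up
    only if its end-of-treatment ESTIMATE exceeds the threshold (hypothetical
    funding rule). All parameter values are illustrative assumptions.
    """
    rng = random.Random(seed)
    selected_post, selected_followup = [], []
    for _ in range(n_studies):
        post_estimate = rng.gauss(true_effect, se)      # true effect + noise
        followup_estimate = rng.gauss(true_effect, se)  # same true effect
        if post_estimate > followup_threshold:
            selected_post.append(post_estimate)
            selected_followup.append(followup_estimate)
    return statistics.mean(selected_post), statistics.mean(selected_followup)


post_mean, followup_mean = simulate()
print(f"mean end-of-treatment estimate (selected studies): {post_mean:.2f}")
print(f"mean follow-up estimate (same studies):            {followup_mean:.2f}")
```

Because selection operates on the noisy end-of-treatment estimate, the selected studies overstate the initial impact, while their follow-up estimates center on the unchanged true effect. The resulting gap resembles fadeout even though no true impact has declined.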

Alternatively, if researchers selectively report positive relative to null follow-up impacts, this would upwardly bias long-term impact estimates and could lead to an underestimate of fadeout. This may be a concern in the clinical literature described above, for which persistence is commonly observed, but long-run outcome measures are quite heterogeneous. For example, in long-term evaluations of studies of cognitive-behavioral therapy in adults, the combination of high rates of uncertainty about whether outcomes are fully reported and the regularity of intervention developers co-authoring intervention evaluations is potentially worrisome (van Dis et al., 2019).

Nor can we discount the possibility of publication bias in meta-analytic estimates of fadeout and persistence. This is particularly true for interventions with small impacts, imprecisely measured relative to their magnitudes, where the difference between complete fadeout and complete persistence is very small, and both of these outcomes can be obtained within a range of plausible model specifications. Various methods are also available to probe whether the average role of publication bias in any particular literature is sufficient to create illusory fadeout or persistence (e.g., McShane et al., 2016).

That said, our confidence that fadeout effects are not fully artefacts of publication bias is bolstered by the fact that observed instances of fadeout come from designs with properties that make publication bias unlikely. For example, some field RCTs of educational programs contain a single, pre-specified outcome measure at any given wave (e.g., Clements et al., 2013). Others have measured the same set of outcomes several times following the end of an intervention, finding corresponding diminishing treatment impacts on all of them (e.g., Bailey et al., 2019).

Also inconsistent with a publication bias explanation is the fact that fadeout has been observed in many retrospective quasi-experimental studies estimating the causal effect of some quasi-randomly assigned treatment on a set of outcomes all measured by the time the analysis is conducted. In these cases, positive selection of reported long-term effect sizes is plausibly at least as severe as positive selection of reported end of treatment effect sizes. A small subset of educational interventions that have been evaluated years after the end of treatment and shown to produce fadeout on achievement test scores over time include Head Start attendance (Deming, 2009), high-quality teachers (Jacob, Lefgren, & Sims, 2010), and taking two periods of sixth grade math (Taylor, 2014).

Baseline or Post-Treatment Imbalance

Baseline imbalance between groups in RCTs (i.e., “unhappy randomization”) or quasi-experimental designs, or imbalance on post-treatment experiences not caused by the treatment, may lead groups to diverge or converge following the end of treatment in ways that will deviate from the population-level long-run effects of the treatment. However, because these factors likely vary randomly and not systematically (at least in the absence of the other artefactual explanations discussed in greater detail above), they are just as likely to lead to inflated estimates of persistence as to inflated estimates of fadeout. Attrition may systematically bias findings toward fadeout or persistence, depending on the processes through which individuals select out of the study. If relatively more disadvantaged control group members attrit, this may create the illusion of fadeout. Perhaps consistent with this pattern, Taylor and colleagues (2017) estimated that, across studies of the impacts of social-emotional learning interventions, higher levels of attrition were associated with smaller longer-run impacts. However, if disadvantaged treatment group members drop out, this may create the illusion of persistence. For example, Ou and colleagues (2019) estimated larger long-run impacts of the Chicago Child-Parent Center program for children who spent longer in the program, but these children were also less disadvantaged, on average. Finally, being in the treatment group may cause individuals to have subsequently different environmental experiences, but these would be mediators through which fadeout and persistence would be observed, rather than measurement artefacts (we discuss this possibility as a substantive explanation for impact persistence under MECHANISMS FOR PERSISTENCE).

All of the fadeout-as-artefact explanations discussed above deserve careful consideration in individual studies and across specific bodies of intervention research. Notably, different practices related to multiple testing and decisions to collect follow-up data make different predictions about patterns of publication bias, and we encourage researchers to test these systematically in future meta-analytic work. However, we doubt that these explanations can fully account for the fadeout effect, because the conditions under which they apply (namely, increasing test score variance over time, the use of vertically scaled tests on which participants are rapidly improving, and the sort of publication bias in which inflated initial effects are reported and more realistic follow-up effects are reported) do not characterize all of the cases in which fadeout has been observed.

One notable body of research to which none of these explanations applies is the study of the forgetting curve (Ebbinghaus, 1885; Murre & Dros, 2015; Pashler et al., 2007), in which participants are taught information they would never otherwise learn and repeatedly tested on the same items. This work matters because it points to a substantive explanation for some instances of fadeout: forgetting, which we discuss below.

Fadeout as Substantively Meaningful but Misleading

We turn now to explanations positing that fadeout results from a lack of correspondence between constructs influenced by or measured at the end of interventions and constructs measured during the follow-up period. Depending on the nature of these constructs, these explanations might be viewed as characterizing fadeout as an artefact or as a substantive phenomenon.

Over-alignment between treatments and outcomes.

One important explanation for fadeout is that initial over-alignment between treatments and outcomes creates a spuriously large estimate of end-of-treatment impacts. Over-alignment occurs when an intervention is evaluated with measures closely tied to the treatment itself. An example might be in the evaluation of an early math curriculum that stresses geometry using an assessment containing a preponderance of items testing for understanding of shapes and sizes. Using terminology from experimental psychology, over-alignment might be conceptualized as using a manipulation check measure to estimate program impacts.

For tests measuring skills that accumulate with training, over-alignment can result from what is sometimes called teaching to the test. And when outcomes are measured by self-reported behavior rather than performance-based assessment, participants may know or guess what the experimenters’ hypotheses are and respond or behave in a way consistent with those hypotheses.

There are some reasons to believe that teaching to the test contributes to some of the observed patterns of fadeout. First, everyday instruction appears to involve some amount of teaching to tests. Linn and colleagues (1990, 2000) have documented instances in which a test used for accountability purposes is replaced within a state or district. Standardized scores for the presumably otherwise comparable cohorts in the following year drop substantially on the new test, and then slowly rebound over time as teachers align their instruction with the new test, resulting in what the researchers call a “sawtooth” pattern.

Second, a large meta-analysis of educational interventions found that impacts estimated with experimenter-designed measures were roughly twice as large as impacts estimated with independent measures (Cheung & Slavin, 2016). Apparent fadeout could therefore be observed even if impacts on all tests remained the same following an intervention, provided that a researcher-created outcome was measured at the end of treatment and grades or standardized test scores were measured at a follow-up assessment.

Teaching to the test is a common explanation for fadeout following cognitive training interventions. Jensen (1998) referred to gains following cognitive training as “hollow.” However, the precise links between teaching to the test and fadeout are rarely articulated and may not be as direct as many psychologists assume (Protzko, 2015, 2016). We discuss some of the relevant ideas using the case of cognitive training interventions for which the goal is often to transfer to some general causes of individual differences in cognitive ability.

In one sense, fadeout following overly-aligned treatments and posttest measures as well as teaching to the test is real – performance or self-reported psychological characteristics did indeed change relative to some counterfactual, and at some later point, this difference was no longer detectable. In another sense, fadeout on overly-aligned outcome measures is artefactual if the targeted underlying psychological characteristics were never changed at all. Completely artefactual cases are better conceptualized as instances in which there was no initial impact rather than cases in which an initial impact diminished after the end of treatment.

Importantly, explanations based on over-alignment likely do not apply to the same degree across all interventions that raise children’s test scores and show some degree of fadeout after the end of treatment. Although it is sensible that short-term task-specific coaching interventions will lead to shallow knowledge that may be forgotten, many educational interventions are designed to build children’s conceptual understanding within some underlying domain (e.g., Clements et al., 2011; Gonzalez et al., 2011) and may look quite different in duration of treatment, amount of contact with an instructor, and depth of content than experiments in which participants receive repeated test practice (e.g., Estrada et al., 2015). Still other interventions change children’s contexts intensively for a substantial amount of time, as when children attend preschool for several hours a day rather than staying home. In such cases, the idea that participant benefits are fully captured by a narrow assessment is unlikely. Yet some degree of fadeout on cognitive test scores is the norm in both kinds of interventions.

Multidimensionality.

A broader set of possible explanations of fadeout, of which over-alignment may be considered a subset, is that the appearance of fadeout is a predictable consequence when an intervention meaningfully affects some psychological attribute, but at follow-up, longer-run impacts are misestimated because a different construct is measured. For example, Clarke and colleagues (2016) found substantial impacts of a kindergarten mathematics intervention at the end of kindergarten on a variety of tests focused primarily on children’s number sense. At the end of first grade, researchers observed no impact on a novel broad math achievement test. In this case, one cannot rule out the possibility that, while impacts never emerged on that broad math achievement test, impacts may have persisted on number sense. If number sense continues to influence performance or learning during the follow-up period, it would be misleading to interpret the null follow-up impact on the broad math achievement test as indicative of complete fadeout.

Multidimensionality of assessment content across waves is most clearly a concern in skill-building domains, in which different tests are administered at different points during development or in which the same test includes different kinds of items for individuals who are at different points in their development. In such cases, impacts on skills targeted by an intervention might diminish on subsequent assessments in part because those assessments are no longer measuring the content targeted during the initial intervention.

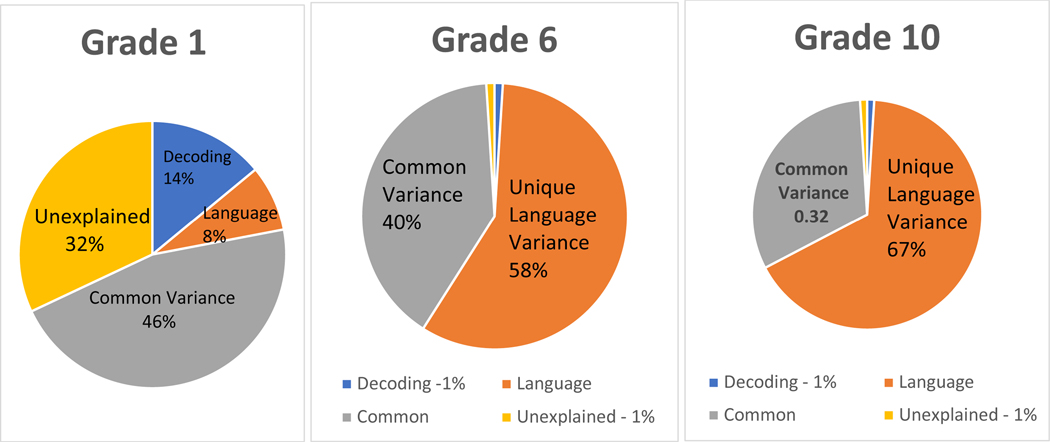

For example, children’s reading skills follow a predictable skill-building sequence: Throughout development, correlations between decoding and reading comprehension decrease while correlations between linguistic comprehension and reading comprehension increase (Hoover & Gough, 1990). Foorman, Petscher, and Herrera (2018) examined the prediction of reading comprehension by decoding and linguistic comprehension in a large sample of students in grades 1–10 and found that their contributions to reading achievement were dynamic: As can be seen in the left panel of Figure 2, decoding explained more unique variance than linguistic comprehension in grade 1 reading achievement, with the majority of the explained variance shared between the two predictors. As can be seen in the center panel of Figure 2, this pattern changed dramatically by grade 6, such that more than half of the total variance in reading achievement was explained by the unique contribution of linguistic comprehension and nearly all the remaining variance explained by the shared variance between decoding and linguistic comprehension. By grade 10 (Figure 2, right panel), unique variance due to linguistic comprehension explained an even larger proportion of the variance, with the shared variance between decoding and linguistic comprehension continuing to account for almost all of the remaining variance. By high school, reading comprehension and written language understanding at the word and discourse level were nearly psychometrically indistinguishable.

Figure 2. Total percent of variance explained in reading comprehension, decomposed into unique and common effects of language and decoding and unexplained variance, in grade 1, grade 6, and grade 10.

Thus, consider an intervention generating a persistent impact on decoding. If the heightened decoding skills did not transfer to linguistic comprehension, then the intervention would appear to have a diminishing impact on a reading comprehension test from one grade to the next. If reading comprehension scores are considered to be a good index of the intervention’s long-run success, then one might consider this to be a clear case of fadeout. Indeed, many literacy researchers have noted that the skills required for success vary dynamically based on what an individual needs to learn or do to be successful (e.g., Ackerman, 2017; Cunha & Heckman, 2007).

Alternatively, one might consider fadeout in this hypothetical case to be a measurement artefact, as treatment impacts on the trained cognitive skill (decoding) do not diminish after the end of treatment. Our view is that, in such cases, both persistence on the decoding measure and fadeout on the reading comprehension measure are real, and their relative importance will depend on the importance of 10th grade decoding and 10th grade reading comprehension for children’s later life outcomes.
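The hypothetical decoding case can be made concrete with a short numerical sketch. It assumes, purely for illustration, that the impact on a reading comprehension test is a weighted sum of the impacts on decoding and on linguistic comprehension, with the weight on decoding falling across grades. The weights below are hypothetical and are not taken from Foorman, Petscher, and Herrera (2018); the 0.30 SD decoding impact is likewise an assumed value.

```python
# Hypothetical weight of decoding in reading comprehension at each grade.
# These values are illustrative only and are not estimates from any study.
DECODING_WEIGHT = {1: 0.60, 6: 0.20, 10: 0.05}

def comprehension_impact(grade, decoding_impact=0.30, language_impact=0.0):
    """Impact on reading comprehension (in SD) implied by a weighted-sum model.

    decoding_impact is held constant across grades (full persistence on the
    trained skill); language_impact is zero (no transfer)."""
    weight = DECODING_WEIGHT[grade]
    return weight * decoding_impact + (1.0 - weight) * language_impact

impacts = {grade: comprehension_impact(grade) for grade in (1, 6, 10)}
for grade, impact in impacts.items():
    print(f"grade {grade:>2}: impact on reading comprehension = {impact:.3f} SD")
```

In this sketch the impact on decoding never changes, yet the measured impact on reading comprehension shrinks across grades simply because decoding contributes less to the later test.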

The importance of the multidimensionality problem for understanding fadeout depends on the answers to two questions: 1) how important are variations in basic skills, above and beyond some limited amount of transfer to intermediate measured skills, for advanced skill development and other socially important outcomes? and 2) if variations in basic skills continue to causally influence these long-term outcomes, do impacts on basic skills persist in cases in which basic skills are manipulated and more advanced skills are measured at follow-up?

The answer to the first question likely varies substantially across interventions, populations, and outcomes: for example, increasing children’s decoding skills may influence their educational success primarily because they transfer to early reading skills. In contrast, if educational interventions improve children’s socioemotional skills, these skills may improve academic achievement in the short-term and directly reduce high school dropout in the long-term by improving socioemotional behaviors. However, as we will argue in the section on Mechanisms for Persistence, skills that are no longer measured after the end of the posttest may often develop very quickly under counterfactual conditions, leading to catchup by the control group, and thus our hypothetical example of persistence on decoding may not be realistic in many cases. For example, in a number knowledge tutoring intervention that gave first graders a substantial amount of practice with speeded arithmetic, children from the control group fully caught up to the level of performance of the treatment group by two years after the end of treatment on the same measure of speeded arithmetic given at the end of treatment (Bailey et al., 2019).

To summarize our discussion of multidimensionality, understanding changes in measures and constructs over time may be useful for developing a process-based understanding of fadeout in some cases. Further, a variant of the multidimensionality problem, whereby treatment impacts on some construct are substantial and persistent but unmeasured, may be an important route to the emergence of long-term effects of interventions.

Fadeout as a Predictable Consequence of Psychological Processes

Some explanations for fadeout invoke psychological and/or social processes that occur following the end of interventions. Each explanation makes predictions about what factors will promote persistence.

Forgetting.

As noted above, research on forgetting has provided some of the clearest and most robust evidence for fadeout. Moreover, the shape of fadeout curves and the shape of forgetting curves are often very similar. Might forgetting play an important role in fadeout from skill-building interventions? Forgetting is likely under conditions in which individuals practice learned information less and new information more (for review, see Wixted, 2004). Both of these conditions are likely to prevail when children receive a one-time skill-building intervention and then return to the same environment as children who did not.

A simplistic interpretation of the hypothesis that forgetting accounts for fadeout, whereby children forget everything they learned immediately following the end of an effective educational intervention, can be ruled out: As described above, the most common pattern of fadeout observed in early childhood educational interventions is one of control-group catch-up rather than absolute declines in the skill of treatment-group children. However, control-group catch-up does not rule out a role for forgetting: Campbell and Frey (1970) presented a very simple model of simultaneous learning and forgetting to account for fadeout following effective early childhood educational intervention. Briefly, for some subset of related skills and equal environmental inputs in the period following an effective intervention, if forgetting is steeper for individuals who have acquired more skill, and learning is steeper for individuals with less skill, then skill levels of the higher skill treatment group and the lower skill control group will converge.
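A simple way to see how simultaneous learning and forgetting can produce control-group catch-up is sketched below. This is not Campbell and Frey's (1970) own formulation. It is a minimal illustration that assumes learning is proportional to the distance from a skill ceiling and forgetting is proportional to current skill; the rates, ceiling, and starting values are hypothetical.

```python
def step(skill, learn_rate=0.3, forget_rate=0.1, ceiling=100.0):
    """Advance skill by one period under equal environmental inputs."""
    learned = learn_rate * (ceiling - skill)  # steeper for children with less skill
    forgotten = forget_rate * skill           # steeper for children with more skill
    return skill + learned - forgotten

def trajectories(control_start=20.0, treatment_start=30.0, periods=5):
    """Skill paths for a control group and a treatment group with an initial boost."""
    control, treatment = [control_start], [treatment_start]
    for _ in range(periods):
        control.append(step(control[-1]))
        treatment.append(step(treatment[-1]))
    return control, treatment

control, treatment = trajectories()
gaps = [t - c for c, t in zip(control, treatment)]
for period, (c, t, g) in enumerate(zip(control, treatment, gaps)):
    print(f"period {period}: control={c:.1f} treatment={t:.1f} gap={g:.2f}")
```

Under these assumptions both groups keep improving in absolute terms, while the gap between them shrinks each period, so convergence does not require the treatment group's skills to decline.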

Evidence for the role of forgetting in fadeout comes from a study of the Building Blocks pre-kindergarten mathematics intervention (Kang et al., 2018). One year following the end of treatment, children in the treatment group were more likely than children in the control group to answer an item incorrectly on a subsequent math achievement test that they had correctly answered on the end-of-treatment assessment. This difference, which does not capture instances in which children in the treatment group forgot and then re-learned items, was approximately one quarter the size of the total fadeout effect during this period.

Modest transfer.

Although transfer of learning, whereby learning one skill influences performance or the learning of another, likely plays an enormous role in children’s academic development, the striking degree of fadeout following initially-effective skill-building interventions suggests that transfer of learning may play a smaller role than is often assumed. We argue that both of these statements can be true, particularly when 1) the set of possible skills on which one might intervene is very large, 2) when development under counterfactual conditions is rapid, and 3) when one-time skill-building interventions are constrained by time and resources to focus on a narrow subset of these skills.

The degree of fadeout observed in studies of academic skill-building casts doubt on the hypothesis that transfer of learning within a single domain (e.g., literacy or math) primarily accounts for the large correlations between children’s early and much later academic achievement. A common and influential finding in developmental psychology has been that children’s early academic achievement strongly predicts their much later academic achievement, statistically controlling for a comprehensive set of covariates (e.g., Duncan et al., 2007). When interpreted causally, these findings imply that the impacts of an early math intervention will persist at approximately 40% of the initial magnitude of the treatment effect approximately 5 years after the end of treatment – a degree of persistence obviously at odds with the kind of data presented in Figure 1.

Moreover, the kinds of partial correlations presented in Duncan et al. (2007) show some odd patterns that are difficult to reconcile with cognitive and educational psychological theory. For example, if interpreted causally, they imply that early math interventions will have persistent effects on reading skills many years later – effects comparable in size to those of early reading interventions on later reading achievement (Bailey et al., 2018). In contrast, patterns of fadeout following the end of an effective early math intervention imply that treatment effects decay by approximately 60% each year following the end of an intervention (whether they approach an asymptote at 0 or at some small positive value is not easily known, although it is perhaps of theoretical importance). These results imply that transfer of learning within the math domain and across domains accounts for some of the longitudinal stability in children’s early to later academic skills, but far from all of it, and perhaps only a trivial part of the stability across multi-year time lags and across domains2.
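The size of the discrepancy between the two implied persistence rates can be seen with simple arithmetic. The sketch below assumes a constant geometric decay of 60% per year with an asymptote at 0, which is a simplification, since the asymptote is not known; the 40% figure is the five-year persistence implied by a causal reading of the correlational findings discussed above.

```python
annual_decay = 0.60                 # approximate yearly decay in experimental effects
retention = 1.0 - annual_decay      # share of the effect carried into the next year

# Share of the initial treatment effect remaining after 0..5 years,
# assuming constant geometric decay toward zero (an assumption of this sketch).
remaining_by_year = [retention ** year for year in range(6)]

experimental_year5 = remaining_by_year[5]
correlational_year5 = 0.40          # persistence implied by a causal reading of correlations

for year, share in enumerate(remaining_by_year):
    print(f"year {year}: {share:.1%} of the initial effect remains")
print(f"implied by correlations at year 5: {correlational_year5:.0%}")
```

Under this assumption roughly 1% of the initial effect would remain after five years, far below the 40% implied by the correlational estimates.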

MECHANISMS FOR PERSISTENCE

Bailey et al. (2017) propose three distinct processes that might sustain the benefits of interventions for children and adolescents: skill building, sustaining environments, and institutional opportunities and constraints.3

Key to the skill building process is the idea that simpler skills support the learning of more sophisticated ones and, in some economic models, that skills acquired prior to a given skill- or capacity-building intervention increase the productivity of that investment. Skill-building processes can be readily seen in both math and literacy learning. In math, counting serves as a cognitive basis for children’s early addition problem solving, and addition is often employed as a subroutine of children’s multiplication problem solving (Baroody, 1987; Lemaire & Siegler, 1995). In reading, children’s ability to match letters to sounds supports their learning to recognize written words, which in turn supports their vocabulary learning, which then supports their reading comprehension (LaBerge & Samuels, 1974). It is important to note that “skills” in skill-building models are conceived to be much broader than conventional academic skills such as literacy and math. Indeed, they encompass any skill, behavior, capacity or psychological resource that helps individuals attain successful outcomes.

In the case of skill-building interventions, Bailey et al. (2017) point to the potential importance of targeting what they call “trifecta” skills – ones that are malleable, fundamental, and would not have developed in the absence of the intervention. In this framework, malleability is not an absolute property of any skill but is defined relative to what can be changed across the range of currently available interventions. Fundamentality is the extent to which a skill affects positive life outcomes in adulthood or affects other, intermediate, skills that reliably affect such outcomes. Bailey and colleagues argue that all three conditions are needed to generate long-run effects, and that the third “trifecta” condition – eventual skill development in counterfactual conditions – is particularly problematic for long-term impacts of interventions focused on building early literacy and math skills because most children are likely to eventually acquire at least minimal levels of these skills soon after entering school. This kind of “catch-up” driven explanation of fadeout may explain the widespread fadeout reported in our above review of interventions targeting specific skills.

A second approach to understanding fadeout is termed the “sustaining environments” perspective by Bailey et al. (2017). It recognizes the importance of interventions that build important skills and capacities, but views the quality of environments subsequent to the completion of the intervention as crucial for maintaining initial skill advantages.

An example of unsustaining environmental processes is the “constraining content” hypothesis, which is based on the idea that fadeout is a consequence of high achieving students’ limited opportunities to build on learning from an effective educational intervention after it ends. This might be the case for intervention gains among the highest achieving students if subsequent instruction is aimed at lower-achieving students. Support for this idea comes from studies showing fadeout following Head Start attendance, which has been hypothesized to result from Head Start children attending lower quality elementary schools (Currie & Thomas, 2000), and the finding that kindergarten teachers in the U.S. have been observed to dedicate little instructional time, on average, to advanced academic skills that might be most likely to build on skills learned in high-quality, skill-building preschools (Engel, Claessens, & Finch, 2013).

Despite this kind of supportive evidence, more general tests of the constraining content hypothesis have yielded mixed results. One important prediction is that fadeout will be largest for children with the highest levels of achievement and thus the least opportunity to be exposed to content beyond their level of mastery. However, similar degrees of fadeout for higher and lower achieving students are often found following early educational interventions (Bailey et al., 2016; Bitler, Hoynes, & Domina, 2014). Also relevant to the constraining content hypothesis are studies that have estimated statistical interactions between early and later educational quality. We will review this literature in the section on Sustaining Environments, below.

As explained in the “gateway” sections below, interactions between children’s development and schools are key to the institutional opportunities and constraints perspective. In this case, successful interventions equip a child with the right skills or capacities at the right time to avoid imminent risks (e.g., grade failure, teen drinking or teen childbearing) or to seize emerging opportunities (e.g., entry into honors classes, SAT prep) associated with the organization of schools or other social structures. The skill or capacity boosts need not be permanent, as with SAT prep that boosts chances of acceptance into a higher-resourced college, a key step in a positive cascade that might influence human capital and labor market outcomes. For SAT prep, it is the enriched college resources, rather than any lingering test prep knowledge, that might lead to a higher-paying job.

A Formal Model of Skill Building

Our discussion of fadeout and persistence thus far has been inductive and presented in narrative form rather than as formal theory. Formal models such as the one presented below can be very helpful in clarifying concepts and making explicit assumptions that are often hidden and sometimes unintended (Lave & March, 1993). Formal models are best developed in a cyclical process. First, the theorist studies data from sound empirical studies to inform model assumptions. Then, she derives model predictions that can inform empirical studies designed to reject (or not) such predictions. Then, given rejections, the new data are used to update model assumptions, thus resuming the cycle in which formal models are created.

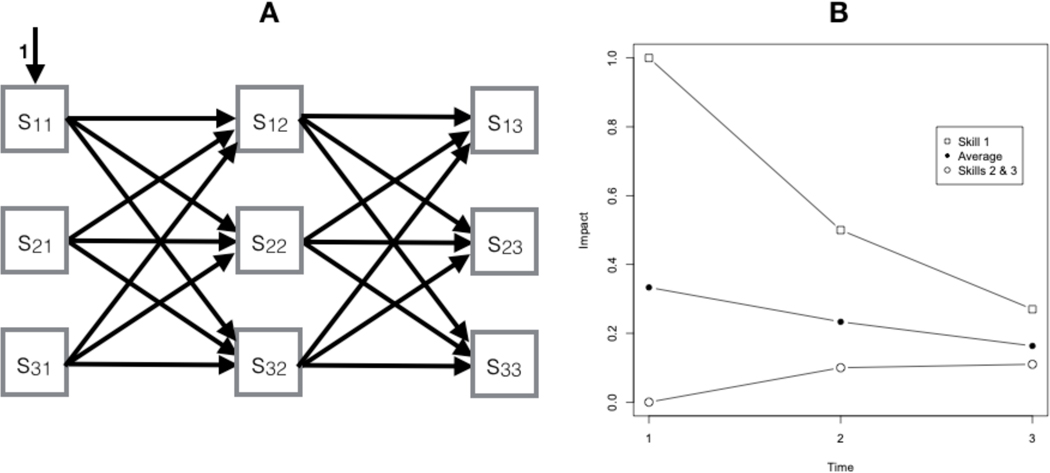

With the details provided in an Appendix, in this section, we extend the kinds of skill-building models developed by Cunha and Heckman (2007) by showing the conditions under which these models can explain the patterns of fadeout or persistence of intervention effects. We also describe conditions under which skill-building models can produce the emergence of long-term effects in adult outcomes reported in some studies in the literature.

Skill-building models developed by economists view skill building by children as a sequential process of combining time and money investments (specific to these skills) with the stock of children’s skills developed at earlier stages of the child’s lifecycle. Economists call the process by which more complex skills are built upon simpler skills “self-productivity.” A second key component of skill-building models focuses on the nature of the interactions between the level of incoming foundational skills developed prior to the intervention (period t − 1) and the levels of time and money investments undertaken in the current stage (period t). A key issue is whether higher foundational skill levels increase or decrease the productivity of period-t investments. Sustaining environments are possible when the productivity of investments undertaken in the time period following the end of an intervention (period t + 1) is higher for individuals whose skills have been boosted by the period-t intervention than for otherwise similar individuals not exposed to the period-t intervention. Cases in which these investments yield greater benefits to individuals with lower as opposed to higher levels of incoming skills will lead to faster fadeout.

The nature of these interactions can be framed in the following fashion: consider two children, A and B, who differ in their levels of counting knowledge, with A having higher foundational skills than B. If both receive the same amount of teaching time and effort to learn addition and subtraction, which child will profit the most from the instruction? If A, we say that the skill-building model features dynamic complementarity – the teaching investment complements a child’s incoming level of foundational skills and produces a Matthew Effect, where the rich get richer. On the other hand, if teaching investments are more productive for child B, then we say that the model features dynamic substitutability – the teaching investment is compensatory by raising skills already mastered by child A but not child B.
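The comparison between children A and B can be expressed with a toy production function. This is a sketch only and not the functional form used in the Appendix: it assumes the gain from teaching is linear in the investment, with an interaction term whose sign determines whether incoming skill raises or lowers the return. The skill levels and coefficients are hypothetical.

```python
def gain_from_teaching(incoming_skill, investment, base_return=1.0, interaction=0.0):
    """Skill gain from a teaching investment in a toy linear-interaction model.

    interaction > 0: dynamic complementarity (higher incoming skill, larger gain)
    interaction < 0: dynamic substitutability (higher incoming skill, smaller gain)
    """
    return (base_return + interaction * incoming_skill) * investment

skill_a, skill_b = 8.0, 4.0   # child A starts with more counting knowledge than child B
investment = 1.0              # identical teaching time and effort for both children

for label, interaction in [("complementarity", 0.1), ("substitutability", -0.1)]:
    gain_a = gain_from_teaching(skill_a, investment, interaction=interaction)
    gain_b = gain_from_teaching(skill_b, investment, interaction=interaction)
    print(f"{label}: gain for A = {gain_a:.2f}, gain for B = {gain_b:.2f}")
```

With a positive interaction child A gains more from the same instruction, and with a negative interaction child B gains more, matching the two cases described above.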

In the case of sequential interventions, different skills may exhibit dynamic complementarity or dynamic substitutability with different interventions. Dynamic substitutability is more likely to occur when two skill investments are redundant in their content. An example that is consistent with dynamic substitutability is Clements et al.’s (2013) TRIAD evaluation of the Building Blocks pre-K math intervention (see Figures 1a and 1b). The results of the evaluation show that, although the math skills of children in the treatment group grew faster (relative to the math skills of children in the control group) during the intervention period, the math skills of the treatment group actually grew more slowly than the skills of children in the control group in the years after the end of the intervention. Key to dynamic substitutability in this case is that what determines the importance of math skills targeted by the pre-K Building Blocks intervention are not the investments in the preschool years but the total sequence of investments across a number of periods. Dynamic substitutability is, thus, closely related to the notion of “development under counterfactual conditions” as proposed by Bailey et al. (2017). We return to this issue below.

Dynamic substitutability also has implications for the composition of the target population for early childhood interventions. Such interventions (say Head Start at age 4) usually target children who are at risk for having experienced relatively low levels of investments (e.g., by parents or programs like Early Head Start) prior to age 4. But if Early Head Start and Head Start investments are dynamically substitutable, then the highest returns to Head Start would be for children who had not received services from Early Head Start. An example of dynamic substitution is Rossin-Slater and Wüst’s (2017) study of a Danish preschool program and nurse-visiting program, which were rolled out across communities in a haphazard way during the same period. Although each produced long-term effects on educational attainment, they were almost completely dynamic substitutes for one another: receiving both programs yielded no larger effects than receiving just one of them.

Dynamic complementarity presents difficulties for policymakers concerned with educational equity. It implies that skill-building interventions in, say, adolescence may be less productive if early investment levels are low (Cunha & Heckman, 2007). This argument is used by advocates of early childhood investments who identify low levels of early investments as a significant bottleneck for the development of human capital once children growing up in disadvantaged circumstances reach school age. If, say, educational investments in the K-12 schooling years are dynamic complements with kindergarten-entry skill levels, then preschool investments in children’s school readiness will pay learning dividends across all of the years of formal schooling. The opposite would be the case if the two sets of investments are dynamic substitutes.

There is less discussion of a second public policy implication of dynamic complementarity: If the skill-formation process features dynamic complementarity, then the rate of return to early investments is greater, the higher the expected level of late investments. Therefore, when dynamic complementarity is in place, interventions that boost early skill formation will generate larger long-term impacts when accompanied by higher levels of later investments. These ideas support a direct linkage between dynamic complementarity and the concept of “sustaining environments,” as described in Bailey et al. (2017), and elaborated on below.

Another important result from skill-building models is that the effects of early interventions will fade out if the sequence of investments post-intervention is the same between control and treatment groups. The intuition is as follows: while it is true that skills produced in the early stages of the lifecycle are an input into the production of skills produced at later stages, early skills are not the only input. Indeed, in skill-building models that feature malleability, skills require investments as well. If later investments are equated across groups (and are below the initial boost in the treatment condition), fadeout will generally happen regardless of whether the model features dynamic complementarity or substitutability.4
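The fadeout result can be illustrated with a small simulation. The sketch below is not taken from the studies reviewed here: it assumes a constant-elasticity-of-substitution (CES) skill technology of the kind commonly used in this literature, and the function names, the self-productivity weight gamma, the substitution parameter phi, and all numeric values are hypothetical choices made only for illustration. Both groups receive identical post-intervention investments, and the treated group starts with a skill boost that exceeds that investment level.

```python
def next_skill(theta, invest, phi, gamma=0.7):
    """One period of an assumed CES skill technology.

    theta  : current skill level
    invest : investment received this period
    phi    : substitution parameter (1.0 = perfect substitutes,
             negative values = strong complements); must be nonzero
    gamma  : weight on existing skill (self-productivity), assumed 0.7
    """
    return (gamma * theta ** phi + (1 - gamma) * invest ** phi) ** (1 / phi)


def gap_path(phi, theta_control=1.0, boost=0.5, post_invest=1.0, periods=10):
    """Treatment-control skill gap when both groups get the same later investments."""
    control = theta_control
    treated = theta_control + boost  # skill advantage at the end of the intervention
    gaps = [treated - control]
    for _ in range(periods):
        control = next_skill(control, post_invest, phi)
        treated = next_skill(treated, post_invest, phi)
        gaps.append(treated - control)
    return gaps


if __name__ == "__main__":
    # Compare strong complements, imperfect substitutes, and perfect substitutes.
    for phi in (-2.0, 0.5, 1.0):
        gaps = gap_path(phi)
        print(f"phi={phi:+.1f}  initial gap={gaps[0]:.3f}  final gap={gaps[-1]:.3f}")
```

Under these assumptions the gap shrinks in every period for all three values of phi, because the initial boost is not renewed by later investments. The speed of fadeout depends on the assumed parameters and should not be read as an empirical estimate.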

As highlighted in the previous paragraph, a crucial condition for skill building models to generate fadeout is that there are no differences between control and treatment groups in the amount of investments in post-intervention periods. Unfortunately, there are very few studies that systematically investigate the differences in post-intervention investments. Two studies that do focus on the Head Start Program. First, Currie and Thomas (2000) use data from the Children of the National Longitudinal Study 1979 to compare the quality of post-intervention environments between children who attended and did not attend the Head Start Program. They find that black Head Start children go on to attend schools of lower quality than other black children. This finding suggests that treated children experienced lower levels of investments during post-intervention years than control children. Gelber and Isen (2013) use data from the Head Start Impact Study and find a small but positive difference in post-intervention home investments between treated children and controls, between 3% and 8% of a standard deviation.

Given these empirical findings, the puzzle, from the point of view of economic models of skill formation, is not why there is fadeout or persistence, but why there is convergence or divergence of investments in post-intervention periods between control and treatment groups. An exogenous increase in the levels of investments in period t − 1 induces two forces on investments in period t that operate in opposite directions if there is dynamic complementarity and in the same direction if there is dynamic substitutability. The first force works through the returns to investment. If there is dynamic complementarity, an exogenous increase in investments in period t − 1 raises the returns to investments in period t because of the synergistic nature of dynamic complementarity. If there is dynamic substitutability, then an exogenous increase in period t − 1 lowers the returns to investments in period t.

On the other hand, an exogenous increase in investments in period t − 1 raises skill levels in period t − 1 and, via self-productivity, it also indirectly raises skill levels in period t (everything else constant). Thus, this second force reduces the incentives for higher levels of investments in period t. If there is dynamic substitutability, both forces predict that an exogenous increase in investments in period t − 1 would reduce investments in post-intervention years. If there is dynamic complementarity, the forces work in opposite directions, and which force prevails depends on the relative strength of dynamic complementarity and self-productivity. The stronger the dynamic complementarity and the weaker the self-productivity of skills, the stronger the incentives for investments to be higher in period t.
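The two forces can be made explicit in a stylized derivation. The setup below is an assumption introduced only for illustration and is not drawn from the studies cited: an investor chooses period-t investment I_t to maximize a concave payoff u from next-period skill, net of a constant unit cost c, where skill evolves as θ_{t+1} = f(θ_t, I_t) and the earlier intervention has raised θ_t.

```latex
% Assumed setup (illustrative only): u' > 0, u'' < 0, f_\theta > 0, f_I > 0.
\begin{align*}
&\max_{I_t}\; u\bigl(f(\theta_t, I_t)\bigr) - c\,I_t
  \quad\Longrightarrow\quad u'(f)\,f_I = c
  && \text{(first-order condition)} \\[4pt]
&\frac{\partial I_t^{*}}{\partial \theta_t}
  = -\,\frac{\overbrace{u'(f)\,f_{\theta I}}^{\text{returns to investment}}
            \;+\;\overbrace{u''(f)\,f_\theta\,f_I}^{\text{self-productivity}}}
           {u''(f)\,f_I^{2} + u'(f)\,f_{II}}
  && \text{(implicit differentiation)}
\end{align*}
% The denominator is negative by the second-order condition, so the sign of
% \partial I_t^* / \partial \theta_t equals the sign of the numerator.
% First term:  positive under dynamic complementarity (f_{\theta I} > 0),
%              negative under dynamic substitutability (f_{\theta I} < 0).
% Second term: always negative, and larger in magnitude when f_\theta is large.
```

In this sketch, later investment rises after an early boost only if the complementarity term outweighs the self-productivity term, which matches the verbal argument above. Under substitutability both terms are negative and later investment falls.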

We now return to the discussion concerning the concept of “not developed under counterfactual conditions” by Bailey et al. (2017). Skill building models predict that the proficiency in skills that have sensitive periods of development is more closely determined by the total amount of investments during sensitive periods than by how investments are distributed within sensitive periods of development. This prediction automatically implies fadeout of intervention effects. To see why, consider a skill whose sensitive period of development lasts two years, which we denote by year t and year t + 1. Assume that we randomly allocate individuals to an intervention or control group. In the control group, the individuals receive two units of investment in each period. In the treatment group, the individuals receive three units of investment in the first period and one unit of investment in the second period. Both groups receive four units of investment, but these investment units are distributed differently across groups. If there is equal malleability and a high degree of dynamic substitutability, skill building models predict the treatment group will surge during year t, but that the control group will eventually catch up by the end of year t + 1. Thomas and colleagues (2018) call the frustrating implication of malleability for fadeout the “double-edged sword of plasticity”. When sensitive periods of development are long, the final proficiency of skills is determined by the total amount of investment within the sensitive period, and not by how investments are allocated across its years.
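The two-year example can be written out under one simple functional form. The additive technology and the common productivity parameter δ below are assumptions chosen to represent equal malleability and perfect substitutability across the two years. They are not a specification taken from the cited work.

```latex
% Illustrative assumption: investments within the sensitive period are perfect
% substitutes and equally productive, so skill accumulates additively at rate \delta > 0.
\begin{align*}
\theta_{t+1} &= \theta_{t} + \delta I_{t}, &
\theta_{t+2} &= \theta_{t+1} + \delta I_{t+1} = \theta_{t} + \delta\,(I_{t} + I_{t+1}) \\[4pt]
\text{Control } (I_t, I_{t+1}) = (2, 2):\quad
\theta^{C}_{t+1} &= \theta_{t} + 2\delta, &
\theta^{C}_{t+2} &= \theta_{t} + 4\delta \\
\text{Treatment } (I_t, I_{t+1}) = (3, 1):\quad
\theta^{T}_{t+1} &= \theta_{t} + 3\delta, &
\theta^{T}_{t+2} &= \theta_{t} + 4\delta \\[4pt]
\theta^{T}_{t+1} - \theta^{C}_{t+1} &= \delta > 0, &
\theta^{T}_{t+2} - \theta^{C}_{t+2} &= 0
\end{align*}
```

The treatment group leads by δ at the end of year t, and the gap is exactly zero at the end of year t + 1 because both groups received four units in total.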

Ideally, we would be able to use these implications of skill building models to inform public policy. From the point of view of skill building models, the design should start by answering the question: what is the long-term outcome of interest the intervention is supposed to improve? Once the answer is determined, the second question is: what are the most fundamental skills that determine this outcome? The answer to this question is very important because not all outcomes are equally affected by different skills (e.g., Heckman, Stixrud, and Urzua, 2006). The third question is: at what stage of the lifecycle are these skills most malleable? How long is the sensitive period of development? Is it a long period or is it short-lived? The answer to this question determines the age range that is the target for the intervention and whether the timing of investment is crucial or not. The fourth question is, what constitutes, in practice, investments in the formation of these fundamental skills during the sensitive periods of development? And, finally, who are the individuals who are at risk for low levels of investments during the sensitive periods of development of these fundamental skills?

In summary, skill building models describe a rich process of human capital formation. There are many families of skills, each of these families has multiple members, and each of these members has a different degree of fundamentality, a distinct level of malleability, and a different duration of its sensitive period of development.

There are two ways that skill building models can explain why impacts fade out over time. First, impacts will fade out because of malleability. Each member in a family of skills requires investments. So, interventions that take place in only certain segments of an individual’s life may impact the members of a skill family that are malleable during those segments, but this advantage can only be sustained over time if later skills also receive higher levels of investments from sustaining environments or if self- or cross-productivity is reliably large. Second, skill building models predict that impacts will fade out over time if interventions merely bring investments forward to the early stages of sensitive periods of development.

There are also two ways in which skill building models predict that interventions will not lead to long-term impacts. First, interventions will not have impacts on long-term outcomes if the interventions do not focus on fundamental skills. Second, interventions that merely bring investments forward to the early stages of sensitive periods will also not lead to long-term impacts because long-term outcomes are affected by final performance in fundamental skills and not by performance at each point in time.

What Skills to Target?

Bailey and colleagues (2017) highlighted the potential tradeoffs among the criteria for trifecta skills. Malleable and fundamental skills (e.g., basic math and reading skills) may develop quickly under counterfactual conditions because educators and other interventionists know they are malleable and foundational and have designed early-grade curricula accordingly. Analogously, psychological factors with known limited sensitive periods are often those for which the required inputs will be predictably present and are the subject of pediatrician screening. Examples include basic visual input and exposure to the sounds that appear in one’s first language; for a review on mechanisms underlying sensitive periods in cognitive development, see Knudsen (2004). Under such conditions, increased investment in early skills that are both malleable and fundamental would be a dynamic substitute for schooling and other counterfactual environments, resulting in fadeout. Exceptions would include cases in which these inputs are predictably absent, most notably cases of extreme deprivation, but also, more broadly, cases such as second language acquisition. Skills that are foundational and do not develop quickly under counterfactual conditions may be difficult to change (this may apply most to broad domains that are influenced by a large number of inputs, such as working memory capacity or conscientiousness). Skills that are malleable but do not develop under normal conditions may not be taught because educators have decided they are not foundational (e.g., Aramaic).

Because of these tradeoffs, Bailey and colleagues (2017) argued that the list of potential trifecta skills may be short. Their list of potential trifecta skills included advanced academic and vocational skills and social cognitive factors, such as children’s implicit theories of intelligence. Their list of trifecta skills also included additional potential targets for children in particularly adverse environments, such as nutrition, toxic stress, parenting, and basic problem-solving skills. We think the list is plausible, but also that the binary category of trifecta skill probably does not reflect the underlying distribution of promise of all of the possible skills that could yield long-run benefits. Malleability, fundamentality, and development under counterfactual conditions are all difficult to measure quantitatively, are continuously distributed, and vary across populations as well as across time within individuals.

Measures of skills omitted from this list due to a hypothesized lack of malleability, such as personality traits and general cognitive ability, appear to change in response to environmental inputs (Bleidorn et al., 2019; Ritchie & Tucker-Drob, 2018) in ways that are plausibly consequential for long-run outcomes. We think that more formal models of skill development, along with increased use of cost-benefit analysis, are better sources of evidence for deciding which skills to target; both tend to rely on long-term follow-up studies from causally informative evaluations of interventions, when available. We discuss these later in a section on Implications for Research. However, in Box 2, we describe an example of how reasoning about these criteria might be used to make judgments about what kinds of skills to target.

Box 2: A Case Study from Effects of Promising and Unpromising Targets of Reading Intervention.