Abstract

Purpose:

The outbreak of the novel coronavirus disease 2019 (COVID-19) pandemic has seriously endangered human health and life. In fighting COVID-19, effective diagnosis of infected patients is critical for preventing the spread of the disease. Due to the limited availability of test kits, the need for auxiliary diagnostic approaches has increased. Recent research has shown that radiography of COVID-19 patients, such as CT and X-ray, contains salient information about the COVID-19 virus and could be used as an alternative diagnostic method. Chest X-ray (CXR), owing to its faster imaging time, wide availability, low cost, and portability, has gained much attention and is very promising. To reduce intra- and inter-observer variability during radiological assessment, computer-aided diagnostic tools have been used to supplement medical decision making and subsequent management. Computational methods with high accuracy and robustness are required for rapid triaging of patients and for aiding radiologists in the interpretation of the collected data.

Method:

In this study, we design a novel multi-feature convolutional neural network (CNN) architecture for improved multi-class classification of COVID-19 from CXR images. CXR images are enhanced using a local phase-based image enhancement method. The enhanced images, together with the original CXR data, are used as input to our proposed CNN architecture. Using ablation studies, we show the effectiveness of the enhanced images in improving the diagnostic accuracy. We provide quantitative evaluation on two datasets and qualitative results for visual inspection. Quantitative evaluation is performed on data consisting of 8851 normal (healthy), 6045 pneumonia, and 3323 COVID-19 CXR scans.

Results:

On Dataset-1, our model achieves 95.57% average accuracy for three-class classification and 99% precision, recall, and F1-score for COVID-19 cases. On Dataset-2, we obtain 94.44% average accuracy and 95% precision, recall, and F1-score for detection of COVID-19.

Conclusions:

Our proposed multi-feature-guided CNN achieves improved results compared to a single-feature CNN, demonstrating the importance of the local phase-based CXR image enhancement. Future work will involve further evaluation of the proposed method on larger COVID-19 datasets as they become available.

Keywords: Chest X-ray, COVID-19 diagnosis, Image enhancement, Image phase, Multi-feature CNN

Introduction

Coronavirus disease 2019 (COVID-19) is an infectious disease caused by severe acute respiratory syndrome coronavirus 2 (SARS-CoV-2), a newly discovered coronavirus [1, 2]. In March 2020, the World Health Organization (WHO) declared the COVID-19 outbreak a pandemic. Up to now, more than 9.23 million cases have been reported across 188 countries and territories, resulting in more than 476,000 deaths [3]. Early and accurate screening of the infected population and isolation from the public is an effective way to prevent and halt the spread of the virus. Currently, the gold standard method used for diagnosing COVID-19 is real-time reverse transcription polymerase chain reaction (RT-PCR) [4]. The disadvantages of RT-PCR include its complexity and problems associated with its sensitivity, reproducibility, and specificity [5]. Moreover, the limited availability of test kits makes it challenging to provide sufficient diagnosis for every suspected patient in hyper-endemic regions or countries. Therefore, a faster, reliable, and automatic screening technique is urgently required.

In clinical practice, easily accessible imaging, such as chest X-ray (CXR), provides important assistance to clinicians in decision making. Compared to computed tomography (CT), the main advantages of CXR are that it enables fast screening of patients, is portable, and is easy to set up (it can be set up in isolation rooms). However, the sensitivity and specificity (radiographic assessment accuracy) of CXR for diagnosing COVID-19 are low compared to CT. This is especially problematic for identifying early-stage COVID-19 patients with mild symptoms, and it causes larger intra- and inter-observer variability when radiologists read the collected data, since qualitative indicators can be subtle. Therefore, there is increased demand for computer-aided diagnostic methods to aid the radiologist during decision making for improved management of COVID-19 disease.

In view of these advantages and motivated by the need for accurate and automatic interpretation of CXR images, a number of studies based on deep convolutional neural networks (CNNs) have shown quite promising results. Ozturk et al. [6] proposed a CNN architecture, termed DarkCovidNet, and achieved 87.02% three-class classification accuracy. The method was evaluated on 127 COVID-19, 500 healthy, and 500 pneumonia CXR scans. COVID-19 data were obtained from 125 patients. Wang et al. [7] built a public dataset named COVIDx, which comprises a total of 13975 CXR images from 13870 patient cases, and developed COVID-Net, a deep learning model. Their dataset had 358 COVID-19 images obtained from 266 patients. Their model achieved 93.3% overall accuracy in classifying normal, pneumonia, and COVID-19 scans. In [8], a ResNet-50 architecture was utilized to achieve a 96.23% overall accuracy in classifying four classes, where pneumonia was split into bacterial pneumonia and viral pneumonia. However, only eight COVID-19 CXR images were used for testing. In [9], 76.37% overall accuracy was reported on a dataset including 1583 normal, 4290 pneumonia, and 76 COVID-19 scans. COVID-19 data were collected from 45 patients. In order to improve the performance of the method, data augmentation was performed on the COVID-19 dataset, bringing the total COVID-19 data size to 1,536. With data augmentation, the overall accuracy improved to 97.2%. In [10], contrast limited adaptive histogram equalization (CLAHE) was used to enhance the CXR data. The authors proposed a depth-wise separable convolutional neural network (DSCNN) architecture. Evaluation was performed on 668 normal, 619 pneumonia, and 536 COVID-19 CXR scans. The average reported multi-class accuracy was 96.43%. The number of patients for the COVID-19 dataset was not available. In [11], a stacked CNN architecture achieved an average accuracy of 92.74%. The evaluation dataset had 270 COVID-19 scans from 170 patients, 1139 normal scans from 1015 patients, and 1355 pneumonia scans from 583 patients. In [12], the reported multi-class average classification accuracy was 94.2%. The evaluation dataset included 5000 normal, 4600 pneumonia, and 738 COVID-19 CXR scans. The data were collected from various sources and patient information was not specified. In [13], transfer learning was investigated for training the CNN architecture. The evaluation dataset included 224 COVID-19, 504 normal, and 700 pneumonia images. An average accuracy of 93.48% was reported for three-class classification. The average accuracy increased to 94.72% if viral pneumonia was included in the evaluation. In [14], the performance of three different, previously proposed CNN architectures was evaluated for multi-class classification. With 2265 COVID-19 images, the study used the largest COVID-19 dataset reported so far. The average area under the curve (AUC) for classification of COVID-19 from regular pneumonia was 0.73 [14].

Although numerous studies have shown the capability of CNNs in effective identification of COVID-19 from CXR images, none of these studies investigated local phase CXR image features as multi-feature input to a CNN architecture for improved diagnosis of COVID-19 disease. Furthermore, except [7, 14], most of the previous work was evaluated on a limited number of COVID-19 CXR scans. In this work, we show how local phase CXR feature-based image enhancement improves the accuracy of CNN architectures for COVID-19 diagnosis. Specifically, we extract three different CXR local phase image features which are combined as a multi-feature image. We design a new CNN architecture for processing multi-feature CXR data. We evaluate our proposed methods on large-scale CXR images obtained from healthy subjects as well as subjects who are diagnosed with community acquired pneumonia and COVID-19. Quantitative results show the usefulness of local phase image features for improved diagnosis of COVID-19 disease from CXR scans.

Material and methods

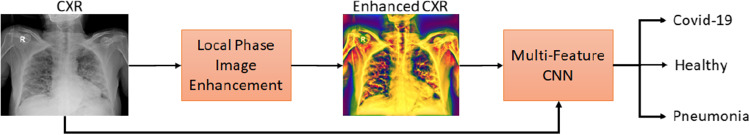

Our proposed method is designed for processing CXR images and consists of two main stages, as illustrated in Fig. 1: (1) we enhance the CXR images (CXR(x, y)) using a local phase-based image processing method in order to obtain a multi-feature CXR image (MF(x, y)), and (2) we classify the images using a deep learning approach in which the multi-feature CXR images (MF(x, y)), together with the original CXR data (CXR(x, y)), are used to improve the classification performance. Next, we describe how these two major processes are achieved.

Fig. 1.

Block diagram of the proposed framework for improved COVID-19 diagnosis from CXR

Image enhancement

In order to enhance the collected CXR images, denoted as CXR(x, y), we use local phase-based image analysis [15]. Three different image phase features are extracted: (1) the local weighted mean phase angle (LwPA(x, y)), (2) the LwPA(x, y) weighted local phase energy, and (3) the enhanced local energy attenuation image. The local phase energy and LwPA(x, y) image features are extracted using monogenic signal theory, where the monogenic signal image is obtained by combining the band-pass-filtered CXR(x, y) image with the Riesz filtered components as:
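For reference, the standard monogenic signal construction of [16] has the general form sketched below. The symbols $f_B$ (band-pass-filtered image), $f_M$ (monogenic signal image), and $h_1$, $h_2$ (the two Riesz kernels) are placeholder notation assumed for this sketch and may differ from the notation used by the authors; $*$ denotes 2D convolution.

```latex
\begin{aligned}
f_M(x,y) &= \bigl[\, f_B(x,y),\; (f_B * h_1)(x,y),\; (f_B * h_2)(x,y) \,\bigr], \\
h_1(x,y) &= \frac{x}{2\pi\,(x^2+y^2)^{3/2}}, \qquad
h_2(x,y) = \frac{y}{2\pi\,(x^2+y^2)^{3/2}}
\end{aligned}
```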

Here, the Riesz filtered components are computed with the vector-valued odd filter (Riesz filter) [16]. α-scale space derivative (ASSD) quadrature filters are used for band-pass filtering due to their superior edge detection [17]. The LwPA(x, y) image is calculated using:

We do not employ noise compensation during the calculation of the LwPA(x, y) image in order to preserve the important structural details of CXR(x, y). The local phase energy image is obtained by averaging the phase sum of the response vectors over many scales using:

In the above equation, sc represents the number of scales. The local phase energy image extracts the underlying tissue characteristics by accumulating the local energy of the image along several filter responses. It is also used to extract the third local phase image, the enhanced local energy attenuation image, by serving as the input to an L1 norm-based contextual regularization method. The image model, denoted as the CXR image transmission map, enhances the visibility of lung tissue features inside a local region and assures that the mean intensity of the local region is less than the echogenicity of the lung tissue. The scattering and attenuation effects in the tissue are combined as: . Here, is a constant value representative of echogenicity in the tissue. In order to calculate the enhanced local energy attenuation image, the transmission map is estimated first by minimizing the following objective function [15]:

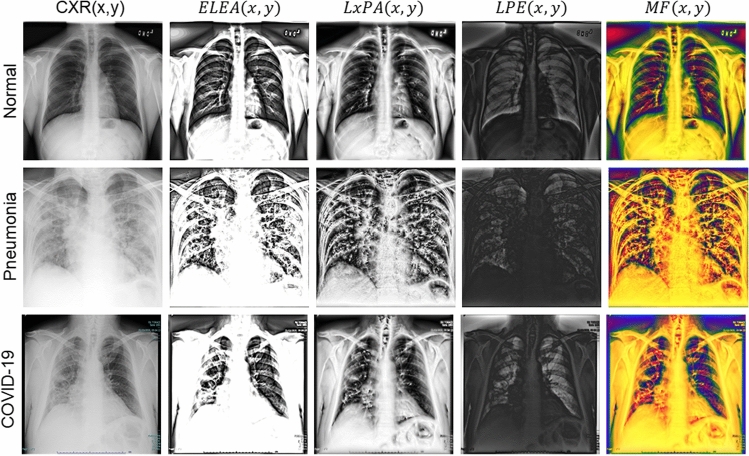

In the above equation, represents element-wise multiplication, is an index set, and is convolution operator. is calculated using a bank of high-order differential filters [18]. The filter bank enhances the CXR tissue features inside a local region while attenuating the image noise. is a weighting matrix calculated using: . In the above equation, the first part measures the dependence of on and the second part models the contextual constraints of [15]. These two terms are balanced using a regularization parameter [15]. After estimating , image is obtained using: . is related to tissue attenuation coefficient ) and is a small constant used to avoid division by zero [15]. Combining these three types of local phase images as a three-channel input creates a new multi-feature image, denoted as MF(x, y). Qualitative results corresponding to the enhanced local phase images are displayed in Fig. 2. In Fig. 2, we can observe that the enhanced local phase images extract new lung features that are not visible in the original CXR(x, y) images. Since local phase image processing is intensity-invariant, the enhancement results will not be affected by intensity variations due to patient characteristics or X-ray machine acquisition settings. The multi-feature image MF(x, y) and the original CXR(x, y) image are used as input to our proposed deep learning architecture, which is explained in the next section.

Fig. 2.

Local phase enhancement of CXR(x, y) images

Network architecture

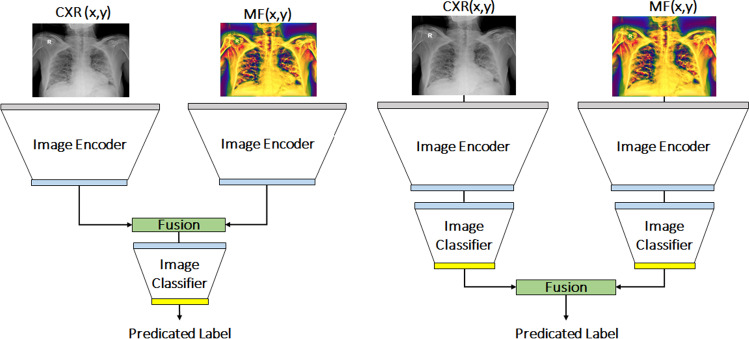

Our proposed multi-feature CNN architecture consists of two identical convolutional network streams for processing CXR(x, y) images and the corresponding MF(x, y) images, respectively. Strategies for the optimal fusion of features from multimodal images are an active area of research. Generally, data are fused earlier when the image features are correlated and later when they are less correlated [19]. Depending on the dataset, different fusion strategies outperform one another [20]. In [21], our group investigated early-, mid-, and late-fusion operations in the context of bone segmentation from ultrasound data, and the late-fusion operation outperformed the others. In [22], the authors also used a late-fusion network for segmenting brain tumors from MRI data, which outperformed other fusion operations. In this work, we design mid-fusion and late-fusion architectures (Fig. 3). We have also investigated several fusion operations: sum fusion, max fusion, averaging fusion, concatenation fusion, and convolution fusion. Based on the performance of the fusion operations and fusion architectures in a preliminary experiment, we use the concatenation fusion operation for both of our architectures. We use the following network architectures as the encoder network: pretrained AlexNet [23], ResNet50 [24], SonoNet64 [25], XNet (Xception) [26], InceptionV4 (Inception-Resnet-V2) [27], and EfficientNetB4 [28]. Pretrained AlexNet [23] and ResNet50 [24] have been incorporated into various medical image analysis tasks [29]. SonoNet64 achieved excellent performance in both classification and localization tasks [25]. XNet (Xception) [26], InceptionV4 (Inception-Resnet-V2) [27], and EfficientNetB4 [28] were chosen due to their outstanding performance on recent medical data classification tasks as well as classification of COVID-19 from chest CT data [30, 31].
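To make the fusion operations named above concrete, the following minimal sketch applies sum, max, averaging, and concatenation fusion to two small feature vectors, one per stream. It uses plain Python lists rather than network tensors, and the function and variable names (`fuse`, `cxr_features`, `mf_features`) are hypothetical. Convolution fusion is omitted because it requires learned weights. This is an illustration of the operations only, not the authors' implementation.

```python
from typing import Callable, Dict, List

Vector = List[float]


def sum_fusion(a: Vector, b: Vector) -> Vector:
    """Element-wise sum of two equally sized feature vectors."""
    return [x + y for x, y in zip(a, b)]


def max_fusion(a: Vector, b: Vector) -> Vector:
    """Element-wise maximum of two equally sized feature vectors."""
    return [max(x, y) for x, y in zip(a, b)]


def average_fusion(a: Vector, b: Vector) -> Vector:
    """Element-wise mean of two equally sized feature vectors."""
    return [(x + y) / 2.0 for x, y in zip(a, b)]


def concat_fusion(a: Vector, b: Vector) -> Vector:
    """Concatenation: the fused vector keeps every feature of both streams."""
    return list(a) + list(b)


FUSION_OPS: Dict[str, Callable[[Vector, Vector], Vector]] = {
    "sum": sum_fusion,
    "max": max_fusion,
    "average": average_fusion,
    "concat": concat_fusion,
}


def fuse(cxr_features: Vector, mf_features: Vector, mode: str = "concat") -> Vector:
    """Fuse the features of the two streams with the selected operation."""
    if mode not in FUSION_OPS:
        raise ValueError(f"unknown fusion mode: {mode}")
    # Element-wise operations need matching sizes; concatenation does not.
    if mode != "concat" and len(cxr_features) != len(mf_features):
        raise ValueError("element-wise fusion needs vectors of equal length")
    return FUSION_OPS[mode](cxr_features, mf_features)
```

Concatenation doubles the feature dimension seen by the following layer, whereas the element-wise operations keep it unchanged, which is one practical difference between the options.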

Fig. 3.

Our proposed multi-feature mid-level (left) and late-level (right) fusion architectures

Dataset

We use the following datasets to evaluate the performance of the proposed fusion network models: BIMCV [32], COVIDx [7], and COVID-CXNet [12]. COVID-19 CXR scans from the BIMCV [32] and COVIDx [7] datasets were combined to generate the ‘Evaluation Dataset’ (Table 1). For the Normal and Pneumonia datasets, we randomly selected a subset of 2567 images (from 2567 subjects) from the evaluation dataset (Table 1). In total, 2567 images from each class (normal, pneumonia, COVID-19) were used during fivefold cross-validation. Table 2 shows the data split for COVID-19 data only. A similar split was also performed for the Normal and Pneumonia datasets. In order to provide additional testing for our proposed networks, we designed a new test dataset, which we call ‘Test Dataset-2’ (Table 3). The images from Normal and Pneumonia cases that were not included in the ‘Evaluation Dataset’ were part of ‘Test Dataset-2.’ Furthermore, we included all the COVID-19 scans from COVID-CXNet [12].

Table 1.

Data distribution of the evaluation dataset

Table 2.

Distribution of the fivefold cross-validation dataset split for training, validation, and testing for COVID-19 data only. The same split was also performed for the Normal and Pneumonia datasets

| | | k1 | k2 | k3 | k4 | k5 |
|---|---|---|---|---|---|---|
| Training data | # images | 1555 | 1560 | 1541 | 1547 | 1529 |
| | # subjects | 890 | 890 | 890 | 890 | 891 |
| Validation data | # images | 494 | 504 | 512 | 511 | 515 |
| | # subjects | 297 | 297 | 297 | 297 | 297 |
| Test data | # images | 518 | 503 | 514 | 509 | 523 |
| | # subjects | 297 | 297 | 297 | 297 | 296 |
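The near-constant subject counts in Table 2 suggest that the folds are formed per subject rather than per image, so that all scans of one patient stay in a single partition. The sketch below shows one way such a split could be built. The rotation scheme (fold k for testing, the next fold for validation, the remaining three for training), the function name, and the seed are assumptions made for illustration; the sketch does not claim to reproduce the exact counts in Table 2.

```python
import random
from typing import Dict, List, Tuple

Split = Tuple[List[str], List[str], List[str]]


def subject_level_folds(
    image_subjects: Dict[str, str], n_folds: int = 5, seed: int = 0
) -> List[Split]:
    """Return (train, val, test) image-id lists for each fold.

    image_subjects maps an image id to the id of the subject it came from.
    Subjects, not images, are assigned to folds, so no subject appears in
    more than one partition of the same split.
    """
    subjects = sorted(set(image_subjects.values()))
    random.Random(seed).shuffle(subjects)
    # Deal subjects round-robin into n_folds groups of near-equal size.
    groups = [subjects[i::n_folds] for i in range(n_folds)]

    splits: List[Split] = []
    for k in range(n_folds):
        test_subjects = set(groups[k])
        val_subjects = set(groups[(k + 1) % n_folds])  # assumed rotation
        train: List[str] = []
        val: List[str] = []
        test: List[str] = []
        for image_id, subject in image_subjects.items():
            if subject in test_subjects:
                test.append(image_id)
            elif subject in val_subjects:
                val.append(image_id)
            else:
                train.append(image_id)
        splits.append((train, val, test))
    return splits
```

With five folds this gives an approximate 3:1:1 ratio of training, validation, and test subjects, while the number of images per partition varies with how many scans each subject contributed.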

Table 3.

Data distribution of Test Dataset-2

| | Normal | Pneumonia | COVID-19 (COVID-CXNet) [12] |
|---|---|---|---|
| # images | 6284 | 3478 | 756 |
| # subjects | 6284 | 3464 | Unknown |

In order to show the improvements achieved using our proposed multi-feature CNN architecture, we also trained the same CNN architectures using only CXR(x, y) or MF(x, y) images. We refer to these architectures as mono-feature CNNs. Quantitative performance was evaluated by calculating average accuracy, precision, recall, and F1-scores for each class [7, 9].
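As a reminder of how these metrics relate to a confusion matrix, the following sketch computes overall accuracy and per-class precision, recall, and F1-score. The function name and the toy matrix in the usage note are hypothetical and are not results from this study.

```python
from typing import Dict, List, Tuple


def classification_report(
    confusion: List[List[int]], labels: List[str]
) -> Tuple[float, Dict[str, Dict[str, float]]]:
    """Compute accuracy and per-class precision, recall, and F1-score.

    confusion[i][j] is the number of samples whose true class is labels[i]
    and whose predicted class is labels[j].
    """
    n = len(labels)
    total = sum(sum(row) for row in confusion)
    correct = sum(confusion[i][i] for i in range(n))
    accuracy = correct / total if total else 0.0

    per_class: Dict[str, Dict[str, float]] = {}
    for i, name in enumerate(labels):
        true_positive = confusion[i][i]
        predicted_as_class = sum(confusion[r][i] for r in range(n))  # column sum
        actually_class = sum(confusion[i])  # row sum
        precision = true_positive / predicted_as_class if predicted_as_class else 0.0
        recall = true_positive / actually_class if actually_class else 0.0
        denom = precision + recall
        f1 = 2 * precision * recall / denom if denom else 0.0
        per_class[name] = {"precision": precision, "recall": recall, "f1": f1}
    return accuracy, per_class
```

For example, calling `classification_report([[8, 1, 1], [2, 7, 1], [0, 0, 10]], ["normal", "pneumonia", "covid19"])` on this made-up matrix treats each row as the true class and each column as the predicted class.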

Results

The experiments were implemented in Python using the PyTorch framework. All models were trained using the stochastic gradient descent (SGD) optimizer, the cross-entropy loss function, a learning rate of 0.001 for the first epoch, and a learning rate decay of 0.1 every 15 epochs, with mini-batches of size 16. For local phase image enhancement, we have used and the rest of the ASSD filter parameters were kept the same as reported in [15]. For calculating images, we used , , , and , the constant related to tissue echogenicity, was chosen as the mean intensity value of . These values were determined empirically and kept constant during qualitative and quantitative analysis.
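The learning rate schedule described above can be read as a step decay, sketched below in plain Python. The interpretation is an assumption: 0.001 is taken as the initial learning rate and it is multiplied by 0.1 after every 15 completed epochs. The function name `step_decay_lr` and the 0-indexed epoch convention are hypothetical.

```python
def step_decay_lr(
    epoch: int, base_lr: float = 0.001, gamma: float = 0.1, step_size: int = 15
) -> float:
    """Learning rate for a 0-indexed epoch under step decay.

    The rate starts at base_lr and is multiplied by gamma once for every
    step_size epochs that have been completed.
    """
    if epoch < 0:
        raise ValueError("epoch must be non-negative")
    return base_lr * gamma ** (epoch // step_size)


if __name__ == "__main__":
    # Print the rate at the start of training and right after each decay step.
    for epoch in (0, 14, 15, 29, 30):
        print(epoch, step_decay_lr(epoch))
```

Under this reading, epochs 0 to 14 use 0.001, epochs 15 to 29 use roughly 0.0001, and so on. In PyTorch the same behaviour is available through the `StepLR` scheduler with `step_size=15` and `gamma=0.1`, although the text does not state which scheduler was used.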

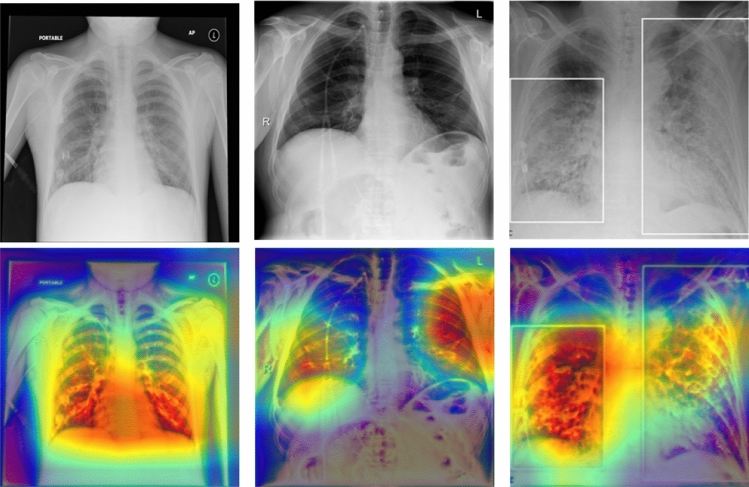

Qualitative analysis: Gradient-weighted class activation mapping (Grad-CAM) [33] visualizations of normal, pneumonia, and COVID-19 cases are presented as qualitative results in Fig. 4. In Fig. 4, we can see the discriminative regions of interest localized in the normal, pneumonia, and COVID-19 data.

Fig. 4.

Top row: From left to right CXR(x, y) image of normal, pneumonia, and COVID-19 subjects. Bottom row: Grad-CAM images [33] obtained by late-fusion ResNet50 architecture

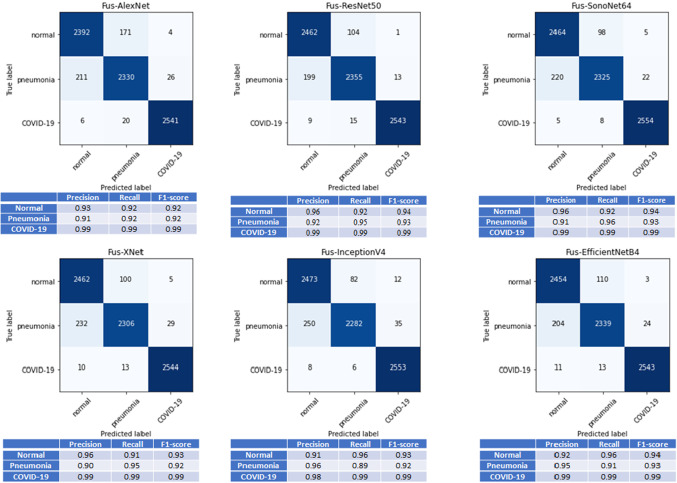

Quantitative analysis of Evaluation Dataset: Table 4 shows the average accuracy of the fivefold cross-validation on the ‘Evaluation Dataset’ for mono-feature CNN architectures as well as the proposed multi-feature CNN architectures. In most of the investigated network designs, MF(x, y)-based mono-feature CNN architectures outperform CXR(x, y)-based mono-feature CNN architectures. The best average accuracy is obtained when using our proposed multi-feature ResNet50 [24] architecture. All multi-feature CNNs with mid- and late-fusion operation, compared with mono-feature CNNs with original CXR(x, y) images as input, achieved a statistically significant difference in classification accuracy (p<0.05 using a paired t-test at a 5% significance level). Except SonoNet64 [25], XNet (Xception) [26], and InceptionV4 (Inception-Resnet-V2) [27], all multi-feature CNNs with mid-fusion operation compared with mono-feature CNNs with MF(x, y) images as input show a statistically significant difference in classification accuracy (p<0.05 using a paired t-test at a 5% significance level). We did not find any statistically significant difference in the average accuracy results between the middle-level and late-fusion networks (p>0.05 using a paired t-test at a 5% significance level). Figure 5 presents confusion matrix results together with average precision, recall, and F1-scores for all multi-feature late-fusion CNN architectures. One important observation from the presented results is that almost all the investigated multi-feature networks achieved very high precision, recall, and F1-scores for COVID-19 data, indicating that very few cases were misclassified as COVID-19 from other infected types.
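The paired t-test used for these comparisons can be sketched as follows, assuming it is applied to per-fold accuracies of two models evaluated on the same five folds (the text does not spell out the pairing). The accuracy values below are invented for illustration and are not results from this study. The critical value 2.776 is the two-sided 5% threshold of Student's t distribution with 4 degrees of freedom.

```python
import math
from typing import Sequence

# Two-sided critical t value for alpha = 0.05 and 4 degrees of freedom (5 folds).
T_CRITICAL_DF4 = 2.776


def paired_t_statistic(a: Sequence[float], b: Sequence[float]) -> float:
    """t statistic of the paired differences a[i] - b[i]."""
    if len(a) != len(b) or len(a) < 2:
        raise ValueError("need two samples of equal length >= 2")
    diffs = [x - y for x, y in zip(a, b)]
    n = len(diffs)
    mean = sum(diffs) / n
    variance = sum((d - mean) ** 2 for d in diffs) / (n - 1)  # sample variance
    if variance == 0.0:
        raise ValueError("differences have zero variance")
    return mean / math.sqrt(variance / n)


# Hypothetical per-fold accuracies (%), invented for illustration only.
fusion_acc = [95.3, 95.9, 95.4, 95.8, 95.5]
mono_acc = [94.1, 94.9, 94.6, 94.3, 95.0]

t_stat = paired_t_statistic(fusion_acc, mono_acc)
significant = abs(t_stat) > T_CRITICAL_DF4
print(f"t = {t_stat:.3f}, significant at 5%: {significant}")
```

Because both models are scored on identical folds, the test works on the fold-wise differences, which removes the variation between folds from the comparison.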

Table 4.

Mean overall accuracy after fivefold cross-validation on ‘Evaluation Data’ using mono-feature CNNs and multi-feature CNNs. Bold denotes the best results obtained

| | AlexNet | ResNet50 | SonoNet64 | Xception | InceptionV4 | EfficientNetB4 |
|---|---|---|---|---|---|---|
| CXR(x, y) | 91.9±0.55 | 94.58±0.43 | 93.59±0.7 | 93.38±0.38 | 93.43±0.31 | 93.47±0.62 |
| MF(x, y) | 93.51±0.39 | 94.82±0.58 | 94.70±0.4 | 93.83±0.47 | 94.17±0.59 | 94.19±0.45 |
| Middle fusion | 94.27±0.64 | 95.44±0.28 | 95.30±0.42 | 94.47±0.76 | 94.89±0.36 | 95.26±0.61 |
| Late-fusion | 94.32±0.27 | **95.57±0.3** | 95.35±0.4 | 94.95±0.52 | 94.90±0.46 | 95.26±0.43 |

Fig. 5.

Confusion matrix, and average precision, recall, and F1-scores obtained from fivefold cross-validation on ‘Evaluation Data’ using all multi-feature network models

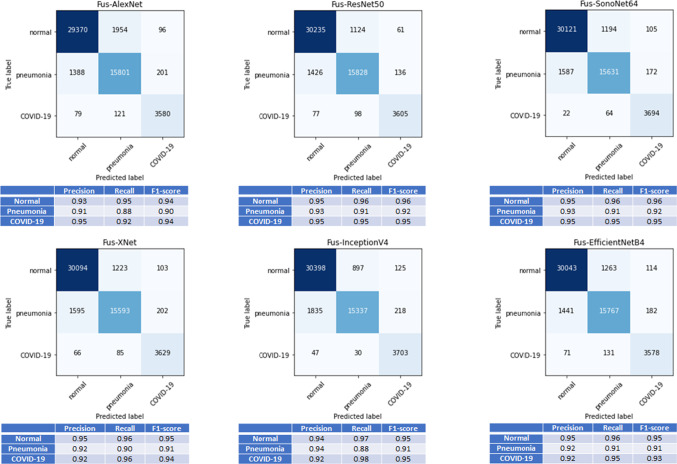

Quantitative analysis of Test Dataset-2: Multi-feature ResNet50 provides the highest overall accuracy, as shown in Table 5, which is consistent with the quantitative result achieved with the ‘Evaluation Dataset.’ All multi-feature CNNs with mid- and late-fusion operation, compared with mono-feature CNNs with original CXR(x, y) images as input, achieved a statistically significant difference in classification accuracy (p<0.05 using a paired t-test at a 5% significance level). Except XNet (Xception) [26], all the multi-feature CNNs with mid-fusion operation compared with mono-feature CNNs with original CXR(x, y) images as input achieved a statistically significant difference in classification accuracy (p<0.05 using a paired t-test at a 5% significance level). Except XNet (Xception) [26], all multi-feature CNNs with mid-fusion operation compared with mono-feature CNNs with MF(x, y) images as input show a statistically significant difference in classification accuracy (p<0.05 using a paired t-test at a 5% significance level). Similar to the ‘Evaluation Dataset’ results, there was no statistically significant difference in the average accuracy results between the middle-level and late-fusion networks (p>0.05 using a paired t-test at a 5% significance level), except for the ResNet50 [24] and XNet (Xception) [26] architectures. Confusion matrix results, together with average precision, recall, and F1-score values, for all multi-feature late-fusion CNN architectures evaluated are presented in Fig. 6. Similar to the results presented for the ‘Evaluation Dataset,’ high precision, recall, and F1-score values are obtained for the COVID-19 data.

Table 5.

Mean overall accuracy after fivefold cross-validation on ‘Test Dataset-2’ using mono-feature CNNs and multi-feature CNNs. Bold denotes the best results obtained

| | AlexNet | ResNet50 | SonoNet64 | Xception | InceptionV4 | EfficientNetB4 |
|---|---|---|---|---|---|---|
| CXR(x, y) | 90.59±0.21 | 93.4±0.17 | 91.1±0.8 | 92.28±0.46 | 92.99±0.2 | 92.16±0.49 |
| MF(x, y) | 91.97±0.24 | 93.17±0.3 | 93.46±0.15 | 92.61±0.19 | 92.89±0.27 | 93.1±0.17 |
| Middle fusion | 92.52±0.32 | 94.26±0.19 | 93.94±0.13 | 92.89±0.12 | 93.8±0.27 | 93.54±0.29 |
| Late-fusion | 92.72±0.17 | **94.44±0.2** | 94.02±0.14 | 93.77±0.15 | 94.01±0.09 | 93.91±0.07 |

Fig. 6.

Confusion matrix, and average precision, recall, and F1-scores obtained from fivefold cross-validation on ‘Test Dataset-2’ using all multi-feature network models

Discussion and conclusion

Development of new computer-aided diagnostic methods for robust and accurate diagnosis of COVID-19 disease from CXR scans is important for improved management of this pandemic. To address this need, we present a multi-feature deep learning model for classification of CXR images into three classes: COVID-19, pneumonia, and normal healthy subjects. Our work was motivated by the need for enhanced representation of CXR images for achieving improved diagnostic accuracy. To this end, we proposed a local phase-based CXR image enhancement method. We have shown that by using the enhanced CXR data, denoted as MF(x, y), in conjunction with the original CXR data, the diagnostic accuracy of CNN architectures can be improved. Our proposed multi-feature CNN architectures were trained on a large dataset in terms of the number of COVID-19 CXR scans and have achieved improved classification accuracy across all classes. One very encouraging result is that the proposed models show high precision, recall, and F1-scores on the COVID-19 class for both testing datasets. Finally, compared to previously reported results, our work achieves the highest three-class classification accuracy on a significantly larger COVID-19 dataset (Table 6). This should help ensure few false positive cases for COVID-19 detected from CXR images and help alleviate the burden on the healthcare system by reducing the number of CT scans performed. While the obtained results are very promising, more evaluation studies are required, specifically for diagnosing early-stage COVID-19 from CXR images. Our future work will involve the collection of CXR scans from early-stage or asymptomatic COVID-19 patients. We will also investigate the design of a CXR-based patient triaging system.

Table 6.

Comparison of proposed method with recent state-of-the-art methods for COVID-19 detection using CXR images

| Study | Method | Dataset | Acc (%) |
|---|---|---|---|
| Wang et al. [7] | COVID-Net | Training data: 7966 Normal, 5438 Pneumonia, 258 COVID-19. Testing data: 100 Normal, 100 Pneumonia, 100 COVID-19 | 93.3 |
| Ozturk et al. [6] | DarkCovidNet | 500 Normal, 500 Pneumonia, 127 COVID-19 | 87.02 |
| Haghanifar et al. [12] | UNet+DenseNet | Training data: 3000 Normal, 3400 Pneumonia, 400 COVID-19. Testing data: 724 Normal, 672 Pneumonia, 144 COVID-19 | 87.21 |
| Siddhartha and Santra [10] | COVIDLite | 668 Normal, 619 Viral Pneumonia, 536 COVID-19 | 96.43 |
| Apostolopoulos and Mpesiana [13] | VGG19 | Testing data 1: 504 Normal, 700 Bacterial Pneumonia, 224 COVID-19. Testing data 2: 504 Normal, 714 Viral & Bacterial Pneumonia, 224 COVID-19 | 93.48 & 94.72 |
| Proposed Method | Fus-ResNet50 | Testing data 1: 2567 Normal, 2567 Pneumonia, 2567 COVID-19. Testing data 2: 6284 Normal, 3478 Pneumonia, 756 COVID-19 | 95.57 & 94.44 |

Acknowledgements

The authors are thankful to all the research groups, and national agencies worldwide who provided the open-source X-ray images.

Compliance with ethical standards

Conflict of interest

The authors declare that they have no conflict of interest.

Ethical approval

The article uses open-source datasets.

Funding

Nothing to declare.

Footnotes

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Contributor Information

Xiao Qi, Email: xq53@scarletmail.rutgers.edu

Lloyd G. Brown, Email: brownl8@njms.rutgers.edu

David J. Foran, Email: foran@cinj.rutgers.edu

John Nosher, Email: nosher@rwjms.rutgers.edu

Ilker Hacihaliloglu, Email: ilker.hac@soe.rutgers.edu

References

- 1. Singhal T. A review of coronavirus disease-2019 (covid-19). Indian J Pediatr. 2020;5:1–6. doi: 10.1007/s12098-020-03263-6.

- 2. Zu ZY, Jiang MD, Xu PP, Chen W, Ni QQ, Lu GM, Zhang LJ. Coronavirus disease 2019 (covid-19): a perspective from china. Radiology. 2020;3:200490. doi: 10.1148/radiol.2020200490.

- 3. Dong E, Du H, Gardner L. An interactive web-based dashboard to track covid-19 in real time. Lancet Infect Dis. 2020;20(5):533–534. doi: 10.1016/S1473-3099(20)30120-1.

- 4. Wang W, Xu Y, Gao R, Lu R, Han K, Wu G, Tan W. Detection of sars-cov-2 in different types of clinical specimens. JAMA. 2020;323(18):1843–1844. doi: 10.1001/jama.2020.3786.

- 5. Bleve G, Rizzotti L, Dellaglio F, Torriani S. Development of reverse transcription (rt)-pcr and real-time rt-pcr assays for rapid detection and quantification of viable yeasts and molds contaminating yogurts and pasteurized food products. Appl Environ Microbiol. 2003;69(7):4116–4122. doi: 10.1128/AEM.69.7.4116-4122.2003.

- 6. Ozturk T, Talo M, Yildirim EA, Baloglu UB, Yildirim O, Acharya UR. Automated detection of covid-19 cases using deep neural networks with x-ray images. Comput Biol Med. 2020;2:103792. doi: 10.1016/j.compbiomed.2020.103792.

- 7. Wang L, Wong A (2020) Covid-net: a tailored deep convolutional neural network design for detection of covid-19 cases from chest x-ray images. arXiv preprint arXiv:2003.09871

- 8. Farooq M, Hafeez A (2020) Covid-resnet: A deep learning framework for screening of covid19 from radiographs. arXiv preprint arXiv:2003.14395

- 9. Ucar F, Korkmaz D. Covidiagnosis-net: Deep bayes-squeezenet based diagnostic of the coronavirus disease 2019 (covid-19) from x-ray images. Med Hypotheses. 2020;3:109761. doi: 10.1016/j.mehy.2020.109761.

- 10. Siddhartha M, Santra A (2020) Covidlite: a depth-wise separable deep neural network with white balance and clahe for detection of covid-19. arXiv preprint arXiv:2006.13873

- 11. Gour M, Jain S (2020) Stacked convolutional neural network for diagnosis of covid-19 disease from x-ray images. arXiv preprint arXiv:2006.13817

- 12. Haghanifar A, Majdabadi MM, Ko S (2020) Covid-cxnet: Detecting covid-19 in frontal chest x-ray images using deep learning. https://github.com/armiro/COVID-CXNet

- 13. Apostolopoulos ID, Mpesiana TA. Covid-19: automatic detection from x-ray images utilizing transfer learning with convolutional neural networks. Phys Eng Sci Med. 2020;6:1. doi: 10.1007/s13246-020-00865-4.

- 14. González G, Bustos A, Salinas JM, de la Iglesia-Vaya M, Galant J, Cano-Espinosa C, Barber X, Orozco-Beltrán D, Cazorla M, Pertusa A (2020) Umls-chestnet: a deep convolutional neural network for radiological findings, differential diagnoses and localizations of covid-19 in chest x-rays. arXiv preprint arXiv:2006.05274

- 15. Hacihaliloglu I (2017) Localization of bone surfaces from ultrasound data using local phase information and signal transmission maps. In: International workshop and challenge on computational methods and clinical applications in musculoskeletal imaging, pp 1–11. Springer

- 16. Felsberg M, Sommer G. The monogenic signal. IEEE Trans Signal Process. 2001;49(12):3136–3144. doi: 10.1109/78.969520.

- 17. Belaid A, Boukerroui D (2014) scale spaces filters for phase based edge detection in ultrasound images. In: 2014 IEEE ISBI, pp 1247–1250. IEEE

- 18. Meng G, Wang Y, Duan J, Xiang S, Pan C (2013) Efficient image dehazing with boundary constraint and contextual regularization. In: IEEE ICCV, pp 617–624

- 19. Ngiam J, Khosla A, Kim M, Nam J, Lee H, Ng AY (2011) Multimodal deep learning. In: ICML

- 20. Zhou T, Ruan S, Canu S. A review: deep learning for medical image segmentation using multi-modality fusion. Array. 2019;3:100004. doi: 10.1016/j.array.2019.100004.

- 21. Alsinan AZ, Patel VM, Hacihaliloglu I. Automatic segmentation of bone surfaces from ultrasound using a filter-layer-guided cnn. Int J Comput Assist Radiol Surg. 2019;14(5):775–783. doi: 10.1007/s11548-019-01934-0.

- 22. Aygün M, Şahin YH, Ünal G (2018) Multi modal convolutional neural networks for brain tumor segmentation. arXiv preprint arXiv:1809.06191

- 23. Krizhevsky A, Sutskever I, Hinton GE (2012) Imagenet classification with deep convolutional neural networks. In: Advances in neural information processing systems, pp 1097–1105

- 24. He K, Zhang X, Ren S, Sun J (2016) Deep residual learning for image recognition. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 770–778

- 25. Baumgartner CF, Kamnitsas K, Matthew J, Fletcher TP, Smith S, Koch LM, Kainz B, Rueckert D. Sononet: real-time detection and localisation of fetal standard scan planes in freehand ultrasound. IEEE Trans Med Imaging. 2017;36(11):2204–2215. doi: 10.1109/TMI.2017.2712367.

- 26. Chollet F (2017) Xception: Deep learning with depthwise separable convolutions. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 1251–1258

- 27. Szegedy C, Ioffe S, Vanhoucke V, Alemi A (2016) Inception-v4, inception-resnet and the impact of residual connections on learning

- 28. Tan M, Le QV (2019) Efficientnet: Rethinking model scaling for convolutional neural networks. arXiv preprint arXiv:1905.11946

- 29. Litjens G, Kooi T, Bejnordi BE, Setio AAA, Ciompi F, Ghafoorian M, Van Der Laak JA, Van Ginneken B, Sánchez CI. A survey on deep learning in medical image analysis. Med Image Anal. 2017;42:60–88. doi: 10.1016/j.media.2017.07.005.

- 30. Ha Q, Liu B, Liu F (2020) Identifying melanoma images using efficientnet ensemble: Winning solution to the siim-isic melanoma classification challenge. arXiv preprint arXiv:2010.05351

- 31. Kassani SH, Kassasni PH, Wesolowski MJ, Schneider KA, Deters R (2020) Automatic detection of coronavirus disease (covid-19) in x-ray and ct images: a machine learning-based approach. arXiv preprint arXiv:2004.10641

- 32. de la Iglesia Vayá M, Saborit JM, Montell JA, Pertusa A, Bustos A, Cazorla M, Galant J, Barber X, Orozco-Beltrán D, García-García F, Caparrós M, González G, Salinas JM (2020) Bimcv covid-19+: a large annotated dataset of rx and ct images from covid-19 patients. arXiv preprint arXiv:2006.01174

- 33. Selvaraju RR, Cogswell M, Das A, Vedantam R, Parikh D, Batra D. Grad-cam: visual explanations from deep networks via gradient-based localization. Int J Comput Vis. 2019;128(2):336–359. doi: 10.1007/s11263-019-01228-7.