Keywords: Digital behavior change interventions, Evaluation methods, Intervention development, Intervention optimization, Intervention personalization

Abstract

The rapid expansion of technology promises to transform the behavior science field by revolutionizing the ways in which individuals can monitor and improve their health behaviors. To fully deliver on this promise, the behavior science field must address distinct challenges, including: building interventions that are not only scientifically sound but also engaging; using evaluation methods to precisely assess intervention components for intervention optimization; and building personalized interventions that acknowledge and adapt to the dynamic ecosystem of individual and contextual variables that impact behavior change. The purpose of this paper is to provide a framework to address these challenges by leveraging behavior science, human-centered design, and data science expertise throughout the cycle of developing and evaluating digital behavior change interventions (DBCIs). To define this framework, we reviewed current models and practices for intervention development and evaluation, as well as technology industry models for product development. The framework promotes an iterative process, aiming to maximize outcomes by incorporating faster and more frequent testing cycles into the lifecycle of a DBCI. We describe each phase of the framework, from development to evaluation, including optimal practices, necessary stakeholders, and proposed evaluation methods. The proposed framework may inform practices in both academia and industry, and it highlights the need for collaborative platforms that enable successful partnerships, which can lead to more effective DBCIs that reach broad and diverse populations.

Implications.

Practice: The paper provides guidance for practitioners on collaborating with researchers and digital health intervention developers by contributing subject matter expertise to inform intervention development.

Policy: The paper highlights the need to consider interdisciplinary collaboration platforms to ensure successful partnerships that can lead to more effective digital behavior change interventions (DBCIs) toward better health outcomes.

Research: The paper provides a guideline for developing and testing DBCIs by using an iterative, interdisciplinary, and collaborative process, describing the stakeholders that need to be involved, as well as the methodologies to be used.

INTRODUCTION AND BACKGROUND

The rapid expansion of technology promises to transform the behavior science field by revolutionizing the ways in which individuals can monitor and improve their health behaviors and ultimately their health. Wearables, smartphone apps, voice technology, and social media are permeating the health care space, bringing the promise that such technology can foster behavior change across a wide spectrum of health and well-being goals, from smoking cessation and chronic disease management to improved physical activity and nutrition behaviors [1–4].

Digital behavior change interventions (DBCIs) have been defined by Yardley et al. (2016) as interventions that employ digital technologies to encourage and support behavior change, with the goal of promoting and maintaining health [5]. Various technologies can be used to support such interventions, from smartphones to personal computers, tablets, wearables, voice-assisted technologies, environmental sensors, and other emerging technologies. Smartphone applications, patient–provider portals, or texting interventions are a few examples of DBCIs. They can be automated or include interactions with human facilitators [5]. While DBCIs can focus on the patient or the individual seeking care as an end user, they can also focus on care providers and other key actors of the health care process. At the same time, in order to impact behavior change, depending on their scope and theoretical approach, DBCIs can address individual-level variables, as well as variables at the interpersonal (e.g., social network) [6] or environmental level [7].

A growing body of research indicates that the widespread, everyday use of technology, along with the variety of data it captures about user behavior (e.g., accelerometer and GPS data), can make DBCIs increasingly feasible and can minimize implementation barriers that nondigital behavioral interventions encounter (e.g., affordability and lack of time) [8–10]. Additionally, advances in data technology and analytics enable researchers to harness these comprehensive streams of behavioral data in more sophisticated ways than previously possible, to meaningfully improve health outcomes across diverse populations.

With increased reach, DBCIs also have the potential to reduce health disparities in a variety of ways. First, most digital technology products (mobile phones, smartphones, etc.) have shown an exponential reduction in cost and are rapidly becoming more affordable [11], which enables DBCIs to have a wider socioeconomic reach [12]. Additionally, digital interventions are less constrained by geographic location, which can help reduce disparities in access to health care. Second, technology’s wider reach comes with data capture and analysis capabilities, which can allow a better understanding of health disparities and provide a stronger basis for personalization. The rich stream of DBCI data (i.e., about intervention exposure, health behaviors and their psychosocial determinants, and health outcomes) creates an opportunity to uncover behavioral phenotypes (i.e., observable behavioral characteristics that differentiate one individual from another) as they are influenced by individual-level determinants, as well as by determinants within the social and built environments. These phenotypes can, in turn, support more precise and personalized interventions toward improved health outcomes across wider and more diverse populations.
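The paragraph does not name a method for uncovering behavioral phenotypes. Clustering users on behavioral features is one common starting point, so the following is a minimal k-means sketch under stated assumptions: the two features (mean daily steps in thousands, share of active days) and the user data are hypothetical, and centroids are seeded with the first k points purely to keep the example deterministic.

```python
import math
from typing import List, Optional, Sequence, Tuple

Point = Tuple[float, ...]


def kmeans(points: Sequence[Point], k: int, max_iters: int = 100) -> List[int]:
    """Plain k-means clustering; returns one cluster label per user.

    Centroids are seeded with the first k points so the result is deterministic.
    """
    centroids = [list(p) for p in points[:k]]
    labels: Optional[List[int]] = None
    for _ in range(max_iters):
        # Assignment step: each user joins the nearest centroid (Euclidean distance).
        new_labels = [
            min(range(k), key=lambda c: math.dist(p, centroids[c])) for p in points
        ]
        if new_labels == labels:
            break  # assignments stopped changing, so the clustering has converged
        labels = new_labels
        # Update step: move each centroid to the mean of its current members.
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                centroids[c] = [sum(dim) / len(members) for dim in zip(*members)]
    return labels if labels is not None else []


# Hypothetical users: (mean daily steps in thousands, share of active days).
users = [(2.0, 0.2), (2.5, 0.3), (9.0, 0.8), (10.0, 0.9), (3.0, 0.25), (9.5, 0.85)]
print(kmeans(users, k=2))  # [0, 0, 1, 1, 0, 1]
```

In practice, features on different scales would be standardized first, and the number of phenotypes would be chosen and validated rather than fixed at two.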

To leverage the benefits of technology and develop, evaluate, and implement more effective DBCIs, the behavior science field must first acknowledge and address its challenges, amongst which the most notable are: building interventions that are not only scientifically sound but also engaging; using evaluation methods to precisely assess discrete components for intervention optimization; and building personalized interventions that can acknowledge and adapt to the dynamic fluctuation of contextual variables, such as social and physical environment, that ultimately influence individual behavior [9,13].

PARTICIPANT ENGAGEMENT

One of the main challenges that the field faces is participant engagement. Engagement with health interventions is a precondition for their effectiveness, and this holds true for DBCIs as well. To paraphrase the quote attributed to U.S. Surgeon General C. Everett Koop, “Drugs don’t work in patients who don’t take them”: DBCIs will not work if users do not engage with them. While technology provides unprecedented opportunities to develop DBCIs that people can use in real time and in the real world, users who do not engage with them will not be exposed to the “scientific ingredients” embedded in them, which makes the DBCI unlikely to achieve its behavioral or health objective [14].

Overall, there is evidence that people very often download an app (health or nonhealth) and never use it again, with usage declining rapidly after the first download for apps that are not connected to other devices [15,16]. In digital health, DBCIs that adhere to scientific rigor and include evidence-based components can still fail to engage users due to poor design, while other solutions that do not necessarily include a scientific backbone can achieve high user engagement due to the compelling user experience they provide [17]. In both scenarios, the opportunity to have a meaningful impact on health behaviors and outcomes is missed.

To respond to the engagement challenge, an important consideration in designing DBCIs is their usability. Usability is defined by the International Organization for Standardization (ISO) as “the extent to which a user can use a product to achieve specific goals with effectiveness, efficiency and satisfaction in a specified context” [18]. One of the most widely known approaches for increasing product usability has been a design process called user-centered design, in which end users influence how a design takes shape [19]. In recent years, the ISO broadened the definition of user-centered design to address the impact on other stakeholders as well, not just end users, and refers to this design approach as human-centered design [20].

The human-centered design approach currently serves as a foundation for designing numerous consumer-facing technology products. A review of the design processes used by top digital technology companies [21] shows that, although nomenclature varies [22–24], the human-centered design approach consists of a learning phase focused on understanding users’ needs and the individual and environmental context in which the product will be used. Following the learning phase, design opportunities are identified and prototypes are developed, engaging stakeholders for feedback and testing the prototypes with end users. Lastly, the solution is developed and tested with users [25].

While focusing on human-centered design, technology companies often adhere to what is called an Agile approach [26–28]. This approach emerged as a challenge to the traditional “waterfall” software development model, in which entire projects are preplanned and then fully built before being tested with users. Exhausting a project’s resources before obtaining the results of any testing effort is cited among the critical shortcomings of the waterfall approach. An example would be developing an intervention with no prior testing before placing it in a clinical trial, only to potentially conclude that it is not effective, without a clear understanding of the mechanism responsible for the results or any insight into how discrete components of the intervention performed. In contrast, the Agile approach emphasizes iterative flexibility, testing “minimum viable products” (MVPs) to gather feedback from stakeholders instead of building the full solution and testing it only after its completion [26]. Testing early and often would allow intervention developers to incorporate users’ and stakeholders’ (e.g., health care providers’) feedback into the proposed DBCI early on and would ensure a progressive effort toward optimization and validation testing.

Since DBCIs rely heavily on software development capabilities, it is useful to understand Agile models of human-centered design for software development [27–29], which emphasize iterative testing and rely on close collaboration between end users, designers, and other relevant stakeholders to develop engaging solutions [30]. It is important to emphasize that the end goal is to create more effective interventions for improved behavior and health outcomes, not simply more engaging solutions. In the context of health care, engagement serves intervention efficacy; it is not an end goal in and of itself.

INTERVENTION OPTIMIZATION

Another challenge that DBCIs face stems from the larger behavior science field. Traditionally, most studies investigate the efficacy or effectiveness of a whole behavior change intervention package, offering little insight into the effectiveness of its individual, discrete components [31]. Given the wealth of available theories and the intervention constructs stemming from them, identifying the right combination of components to include in a DBCI can seem obscure and daunting for many researchers and intervention developers. For example, a recent review of digital interventions targeting physical activity [32] showed that they tend to include various behavior change techniques, including information on the health consequences of the behavior, goal setting, action planning, modeling, framing, feedback and monitoring, graded tasks, incentives, rewards, and social support. Are all of these behavior change techniques necessary? Are they necessary for every user and under all circumstances? To help answer such questions, approaches such as intervention mapping (IM) have been proposed [33]. IM serves as a blueprint for designing, implementing, and evaluating an intervention based on a foundation of theoretical, empirical, and practical information. While IM provides a very useful and rigorous series of steps for developing an intervention, it may not identify which components are necessary and sufficient within the specific individual and environmental context of the user. When DBCI development resources are constrained and users’ time and interest in staying engaged with an intervention are limited, which combination of components offers the most bang for the intervention’s buck?
To answer that question, we need to measure continuously and systematically, so that we can identify the most promising intervention components and optimize the intervention to deliver the most impact at the least cost (i.e., time and resources), both for the user and for the entity developing or funding the intervention. Rather than measuring only after intervention development, once all resources have been spent, early and continuous evaluation enables more rational resource planning and increases the likelihood that the intervention will achieve the results it is designed to accomplish.
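The “most impact at the least cost” question can be framed as picking the subset of components with the largest expected effect that fits a resource budget. The sketch below is illustrative only: it assumes component effects are additive (no interactions) and that per-component effect estimates and costs already exist; the component names, effects, costs, and budget are all hypothetical.

```python
from itertools import combinations
from typing import Dict, Tuple


def best_package(
    effects: Dict[str, float], costs: Dict[str, float], budget: float
) -> Tuple[Tuple[str, ...], float]:
    """Exhaustively search component subsets for the largest summed effect within budget.

    Assumes additive effects; visits 2**n subsets, so it suits a handful of components.
    """
    names = sorted(effects)
    best: Tuple[Tuple[str, ...], float] = ((), 0.0)
    for r in range(1, len(names) + 1):
        for subset in combinations(names, r):
            cost = sum(costs[n] for n in subset)
            gain = sum(effects[n] for n in subset)
            if cost <= budget and gain > best[1]:
                best = (subset, gain)
    return best


# Hypothetical per-component effect estimates and delivery costs (arbitrary units).
effects = {"goal_setting": 0.30, "feedback": 0.25, "social_support": 0.10, "incentives": 0.20}
costs = {"goal_setting": 2, "feedback": 3, "social_support": 4, "incentives": 5}
print(best_package(effects, costs, budget=6))  # (('feedback', 'goal_setting'), ~0.55)
```

If components interact, the additive assumption breaks down and the subset effects would instead need to be estimated directly, for example from a factorial experiment.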

To address this challenge, a particular advancement in the field has been the multiphase optimization strategy (MOST) framework [34]. MOST establishes an optimization process for behavior change interventions by evaluating the efficacy of intervention components. Ideally, an iterative process of intervention optimization would be repeated as many times as necessary to achieve a complete set of highly effective components for the broadest population of users. However, while MOST provides a very robust framework for optimizing interventions, in reality, intervention developers and researchers need to make decisions on the level of granularity of the tested components and it is very likely that resources, time limitations, and funding mechanisms will constrain the number of optimization cycles [35], leading them to focus on the higher-level components rather than more specific details (e.g., the UI/UX design elements for behavior change techniques) of a DBCI.
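MOST commonly relies on factorial experiments to screen components, because every participant contributes to the estimate of every component’s effect. As a minimal sketch (the component names and condition means are hypothetical, constructed so the true effects are known), the code below enumerates a full 2^k factorial design with effect coding and estimates each main effect as the mean outcome with the component on minus the mean outcome with it off.

```python
from itertools import product
from statistics import mean
from typing import Dict, List, Sequence


def full_factorial(components: Sequence[str]) -> List[Dict[str, int]]:
    """All 2**k on/off combinations, effect-coded as -1 (off) and +1 (on)."""
    return [dict(zip(components, levels)) for levels in product((-1, 1), repeat=len(components))]


def main_effects(design: List[Dict[str, int]], outcomes: Sequence[float]) -> Dict[str, float]:
    """Mean outcome with each component on minus mean outcome with it off."""
    effects = {}
    for comp in design[0]:
        on = [y for cond, y in zip(design, outcomes) if cond[comp] == 1]
        off = [y for cond, y in zip(design, outcomes) if cond[comp] == -1]
        effects[comp] = mean(on) - mean(off)
    return effects


design = full_factorial(["goal_setting", "feedback", "reminders"])  # 8 conditions
# Hypothetical condition means: baseline 10, goal setting adds 3, feedback adds 1.
outcomes = [10.0 + 3.0 * (c["goal_setting"] == 1) + 1.0 * (c["feedback"] == 1) for c in design]
print(main_effects(design, outcomes))  # {'goal_setting': 3.0, 'feedback': 1.0, 'reminders': 0.0}
```

A real screening experiment would randomize participants to conditions and fit a regression or ANOVA model that also estimates interactions; this sketch only shows how the design and the main-effect contrast are structured.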

Since DBCI technology affords a unique view into how end users engage with intervention components in real-life contexts, down to the UI/UX design elements that support them, this rich stream of data should also be considered in intervention optimization efforts. To fully leverage the ability to analyze how end users engage with intervention components (e.g., behavior change techniques) and to assess which of these components, in what format (e.g., UI/UX design elements), lead to better health behavior outcomes, DBCIs need to be built with a configurable system architecture that allows “editing” intervention components (e.g., turning behavior change techniques on or off, swapping them, and offering optimal design options). In addition to the system architecture, advanced data science methods and computational capabilities are needed to support optimization. Such capabilities will allow optimization not only at the user experience level (e.g., features that drive engagement) but also at the level of scientific components (e.g., behavior change techniques).
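One way to read “configurable system architecture” is that components are driven by data rather than hard-coded, so a behavior change technique can be switched off or its design variant swapped without a new app release. The following is a hypothetical sketch of that idea, not a prescribed design: the class names, the JSON shape, and the component and variant labels are all assumptions.

```python
import json
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class ComponentConfig:
    enabled: bool = True
    variant: str = "default"  # which UI/UX design option delivers the technique


@dataclass
class InterventionConfig:
    components: Dict[str, ComponentConfig] = field(default_factory=dict)

    @classmethod
    def from_json(cls, text: str) -> "InterventionConfig":
        # Configuration lives outside the code, e.g. fetched from a server per study arm.
        raw = json.loads(text)
        return cls({name: ComponentConfig(**opts) for name, opts in raw.items()})

    def toggle(self, name: str, enabled: bool) -> None:
        self.components[name].enabled = enabled

    def swap_variant(self, name: str, variant: str) -> None:
        self.components[name].variant = variant

    def active(self) -> List[str]:
        """Components to render for this user, as 'name:variant' strings."""
        return [f"{n}:{c.variant}" for n, c in sorted(self.components.items()) if c.enabled]


cfg = InterventionConfig.from_json(
    '{"goal_setting": {}, "feedback": {"variant": "chart"}, "social_support": {}}'
)
cfg.toggle("social_support", False)
cfg.swap_variant("feedback", "text_summary")
print(cfg.active())  # ['feedback:text_summary', 'goal_setting:default']
```

Keeping the configuration external is what lets an optimization experiment assign different component combinations to different users from the same build.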

INTERVENTION PERSONALIZATION

Lastly, perhaps the most daunting challenge that DBCIs face is the complexity of human behavior change. Individual variables, such as personal preference, state of well-being, demographics, or presence of comorbidities, matter [36,37]. Contextual variables matter as well, from cultural norms and social support to geographical location or access to exercise or fitness facilities [38–40]. Moreover, many of these variables are not stable over time. In other words: People are different. Context matters. Things change [41]. There is a dynamic ecosystem of variables that impact health behavior, including social factors, cultural norms, physical environment, and individual factors (ranging from beliefs and attitudes to comorbidities or acute health episodes). Evidence has shown that tailored and individualized interventions are successful in improving health behaviors and health outcomes [42]. The “one size fits all” approach fails to accommodate the complexities of health behavior across diverse populations; we need interventions that offer a personalized approach, one that provides context-specific, relevant, personalized tools at the moment the individual needs them to make healthier choices [43,44]. One of the most significant advantages of DBCIs, their accessibility, poses its own challenge: attracting a very diverse and potentially global group of users. Nondigital interventions were typically bound by geographic location and other sociodemographic factors. Digital interventions are not constrained by such factors, and if they boast accessibility as an advantage, then they should be able to serve a wide and very diverse audience, evolving from a one size fits all approach to a more personalized one.

Just-in-time adaptive interventions (JITAIs) have been proposed to address this challenge. JITAIs are suites of interventions that adapt over time to an individual’s changing status and circumstances, with the goal of addressing the individual’s need for support whenever this need arises [45]. While JITAIs are a significant step forward, one key challenge they face is that, for most current interventions, the decision rules for tailoring (e.g., the “if/then” rules necessary to set up the tailoring) are set a priori and limited by the existing published evidence [45]. Depending on the behavior or health outcome, the existing evidence may be insufficient to formulate comprehensive decision rules that consider the most relevant individual and contextual variables [45,46].
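To make the a priori “if/then” rules concrete, the sketch below evaluates an ordered list of rules at a decision point: each rule checks one tailoring variable against a threshold and, if it fires, names an intervention option. The variable names, thresholds, and options are hypothetical placeholders, not evidence-based values.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional


@dataclass(frozen=True)
class DecisionRule:
    tailoring_variable: str
    condition: Callable[[float], bool]
    intervention_option: str


def decide(rules: List[DecisionRule], state: Dict[str, float]) -> Optional[str]:
    """Return the option of the first rule whose condition holds; None means 'provide nothing'."""
    for rule in rules:
        value = state.get(rule.tailoring_variable)
        if value is not None and rule.condition(value):
            return rule.intervention_option
    return None


# Hypothetical a priori rules, checked in priority order.
RULES = [
    DecisionRule("steps_last_3h", lambda v: v < 500, "walking_prompt"),
    DecisionRule("stress_score", lambda v: v >= 7, "breathing_exercise"),
]

print(decide(RULES, {"steps_last_3h": 120, "stress_score": 3}))  # walking_prompt
print(decide(RULES, {"steps_last_3h": 900, "stress_score": 8}))  # breathing_exercise
print(decide(RULES, {"steps_last_3h": 900, "stress_score": 2}))  # None
```

Because the thresholds here are fixed in advance, this structure inherits exactly the limitation described above: the rules are only as good as the evidence used to write them.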

DBCI technologies, such as wearable sensors and advances in passive data capture, offer a significant volume of information that can be used to build on the existing body of evidence. Data science methods have been proposed to analyze the ongoing, real-time information obtained through passive data collection and to “train” JITAI decision rules through dynamic feedback loops, so that the rules truly adapt to continuously changing individual and contextual variables [45,47–49].
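The text does not specify which data science method should “train” the decision rules. One technique often discussed for this purpose is a contextual bandit; the sketch below uses Beta-Bernoulli Thompson sampling, kept separately per context, as a stand-in. The contexts, options, and true success rates in the simulation are hypothetical and exist only to show the feedback loop learning a different best option for each context.

```python
import random
from collections import defaultdict
from typing import DefaultDict, List, Sequence, Tuple


class ThompsonRuleLearner:
    """Beta-Bernoulli Thompson sampling kept separately for each context."""

    def __init__(self, options: Sequence[str], seed: int = 0) -> None:
        self.options = list(options)
        self.rng = random.Random(seed)
        # (context, option) -> [alpha, beta]; starts at a uniform Beta(1, 1) prior.
        self.params: DefaultDict[Tuple[str, str], List[int]] = defaultdict(lambda: [1, 1])

    def choose(self, context: str) -> str:
        # Sample a plausible success rate per option and act on the highest draw.
        draws = {o: self.rng.betavariate(*self.params[(context, o)]) for o in self.options}
        return max(draws, key=draws.__getitem__)

    def update(self, context: str, option: str, success: bool) -> None:
        # A success increments alpha; a failure increments beta.
        self.params[(context, option)][0 if success else 1] += 1

    def times_chosen(self, context: str, option: str) -> int:
        alpha, beta = self.params[(context, option)]
        return alpha + beta - 2


# Hypothetical success rates (e.g., "user walks within 30 minutes of the prompt").
TRUE_RATES = {
    ("weekday", "walk_prompt"): 0.6, ("weekday", "plan_reminder"): 0.2,
    ("weekend", "walk_prompt"): 0.2, ("weekend", "plan_reminder"): 0.6,
}
env = random.Random(42)
learner = ThompsonRuleLearner(["walk_prompt", "plan_reminder"], seed=1)
for t in range(2000):
    ctx = "weekday" if t % 2 == 0 else "weekend"
    option = learner.choose(ctx)
    learner.update(ctx, option, env.random() < TRUE_RATES[(ctx, option)])
```

After the simulated feedback loop, the learner chooses `walk_prompt` far more often on weekdays and `plan_reminder` on weekends, which is the data-driven analogue of an if/then rule keyed on context.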

A FRAMEWORK FOR DBCIS: AN ITERATIVE, INTERDISCIPLINARY AND COLLABORATIVE APPROACH COMBINING BEHAVIOR SCIENCE, HUMAN-CENTERED DESIGN, AND DATA SCIENCE

In reviewing the existing challenges and the current attempts to address them, a common emerging theme is that behavior scientists can no longer rely solely on their own subject matter expertise to develop effective DBCIs. To address the challenge of building engaging interventions, human-centered design expertise is needed to inform DBCI design. To address the DBCI optimization and personalization challenges, modern analytics methods and data science expertise are needed to advance the science of behavior change, by providing opportunities to understand how end users engage with an intervention and to utilize the massive volume of data afforded by mobile technologies, connected wearables, and sensor technologies [47,48,50].

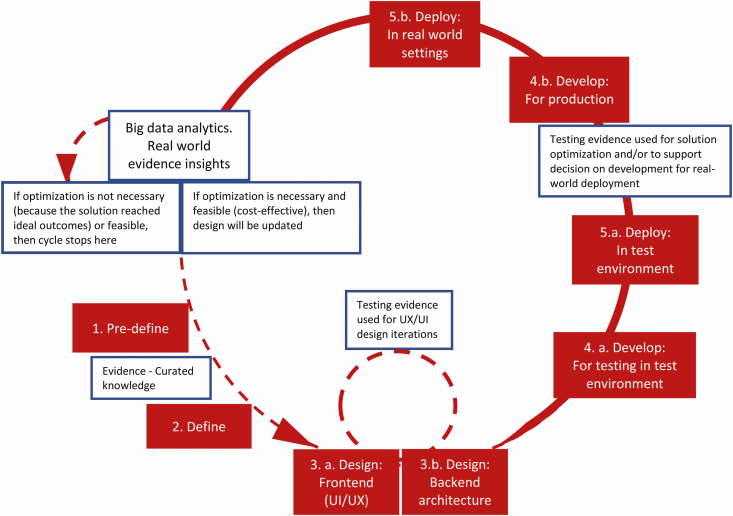

We propose an iterative, interdisciplinary, collaborative framework for DBCIs—an approach that can provide prospective DBCI stakeholders with a collaborative methodological blueprint to develop, implement, and evaluate them (Figure 1).

Fig 1.

An iterative, interdisciplinary, collaborative framework for digital behavior change interventions.

The proposed iterative, interdisciplinary, and collaborative framework builds upon existing models of behavior change intervention development, evaluation, and optimization, as well as on models and frameworks employed by the information technology industry (e.g., Agile approach, human-centered design). The framework’s five phases (i.e., predefine, define, design, develop, and deploy) are built upon the human-centered design approach. While the phases of the human-centered design approach are well suited for most consumer technology products, the content, approach, and outcomes of each phase required tailoring to serve DBCIs meant to improve or maintain health outcomes by changing behavior. For example, as described below, the define phase incorporates methodologies used for intervention development, whereas the deploy phase incorporates and references intervention evaluation and optimization methodologies. In its typical use, the human-centered design approach informs an optimal user experience, toward better engagement and usability. However, an optimal user experience and high levels of engagement are not the end goals of DBCIs; rather, they are a means to an end, which is behavior change and improved health outcomes. Adhering to the five phases without the specified behavior science methodologies may produce engaging interventions, but it will not yield effective interventions capable of changing health behavior.

The framework also rests upon an Agile approach, emphasizing testing early and often in two of its phases (i.e., design and deploy). In the design phase, testing can focus solely on user experience and usability, whereas in the deploy phase, testing should also address impact on behavior change. To that end, it is important to acknowledge that although the Agile notion of the MVP may make perfect sense in developing software for other purposes (e.g., entertainment and transportation), in health care there is an ethical responsibility to ensure that an intervention is safe and at least equivalent to standard or usual care before making it available to patients or end users [51]. As such, instead of an MVP, we propose striving for a “minimum viable intervention” (MVI), capable of achieving the intended behavior change, in order to adhere to the ethics, scientific rigor, and regulatory frameworks of the health care sector. When collaborating on a DBCI, behavior scientists are responsible for specifying and prioritizing the scientific requirements that the DBCI must meet to achieve MVI status for early validation testing en route to optimizing the intervention. In other words, the Agile approach focuses on rapid iterations to meet proximal success criteria. According to the Agile Manifesto, “Working software is the primary measure of progress” [26]. Proximal success criteria, such as users being able to log in, schedule an appointment with their care provider through a care portal, or watch a video, are certainly relevant. However, DBCIs are aimed at changing health behavior; focusing on proximal success criteria will yield a usable product, but that product becomes an intervention only once it is capable of changing behavior.
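The requirement that an intervention be “at least equivalent to standard or usual care” is commonly operationalized as a non-inferiority comparison. The sketch below is one simplified way to express that check, not a procedure the framework prescribes: it assumes a continuous outcome where higher is better, uses a normal approximation with a Welch-style standard error (a t-based or regulatory-grade analysis would be preferable for small samples), and uses a hypothetical margin; the sample values are invented for illustration.

```python
import math
from statistics import NormalDist, mean, stdev
from typing import Sequence


def noninferior(
    treatment: Sequence[float], control: Sequence[float], margin: float, alpha: float = 0.025
) -> bool:
    """One-sided non-inferiority check on a difference in means (higher is better).

    Declares non-inferiority when the lower confidence bound of
    mean(treatment) - mean(control) lies above -margin.
    """
    diff = mean(treatment) - mean(control)
    se = math.sqrt(stdev(treatment) ** 2 / len(treatment) + stdev(control) ** 2 / len(control))
    z = NormalDist().inv_cdf(1 - alpha)  # about 1.96 for alpha = 0.025
    lower_bound = diff - z * se
    return lower_bound > -margin


# Hypothetical outcome scores for a DBCI arm and a usual-care arm.
dbci = [10, 12, 11, 13, 12, 11, 12, 10]
usual_care = [11, 12, 12, 11, 13, 11, 12, 12]
print(noninferior(dbci, usual_care, margin=1.5))  # True  (lower bound is about -1.26)
print(noninferior(dbci, usual_care, margin=1.0))  # False
```

The choice of margin is a clinical and ethical judgment, which is why it belongs among the scientific requirements that behavior scientists specify rather than a default in code.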
To address the identified challenges, we propose the iterative, interdisciplinary, collaborative framework described in Table 1 and in the text below.

Table 1.

An iterative, interdisciplinary, collaborative framework for digital behavior change interventions

| Phase | 1. Predefine | 2. Define | 3. Design: (a) front-end (UI/UX); (b) back-end architecture | 4. Develop: (a) for testing in test environment; (b) for production | 5. Deploy: (a) in test environment; (b) in real-world settings |
|---|---|---|---|---|---|
| Aim | Understanding the health outcome, behaviors, user population, and context of usage | Defining the intervention strategy and its conceptual model | (a) Ideating and creating wireframes and low/high-fidelity prototypes; (b) designing a system architecture with computational capabilities | (a) Developing a low-fidelity, minimally viable intervention in a test environment (e.g., mobile testing platform); (b) developing for real-world settings (e.g., app store) | (a) Deploying in a test environment to a test audience; (b) deploying in real-world settings |
| Type of research | Empirical research; formative research if empirical evidence is lacking | Systematic reviews, market research including stakeholders, and competitive analysis to validate the concept | User testing | Demo reviews | (a) Optimization and validation testing; (b) big data analytics and real-world evidence (i.e., evaluation focuses on both engagement and behavioral/health outcomes) |
| Stakeholders | End users, stakeholders, behavior scientists, product designers | Behavior scientists, subject matter experts, solution stakeholders (health care, commercial, etc.) | Engineers (data architects), behavior scientists | Product leads, engineers (software, data architects), scrum team, designers, data scientists, behavior scientists | Data scientists, behavior scientists, solution stakeholders, end users |

Predefine phase

The goal of this phase is to understand the end-user population, define the health outcomes and related behaviors, and identify the general intervention parameters, intended use, and context of usage. Formative research may be necessary in this phase. For example, learning about end users can be done through methodologies such as interviews and focus groups (e.g., what end users believe they need in order to change a certain behavior) [52]. A better understanding of the health outcomes, the behaviors to be targeted toward those outcomes (empirical support for the behavior-health outcome connection is needed to justify the selection of targeted behaviors), and the implementation context can be gained through ethnographic research, interviews with stakeholders, and observational studies (e.g., how do end users perform these behaviors in their daily lives; how will the intervention fit with users’ health and daily routines; how will end users access the intervention; and who are the other relevant actors for the intervention’s success?). This phase should set the foundation for addressing the engagement and intervention design challenges. It should also lay the foundation for addressing health disparities by building a better understanding of the outcomes, behaviors, implementation context, and overall needs across a socioeconomically diverse population.

Although describing a circular and iterative process, this framework does not imply that every new iteration needs to start with a predefine phase. New insights from one iteration can shape the define phase of a subsequent one.

Define phase

The goal of this phase is to define the intervention strategy and its conceptual model. The knowledge gained in the previous steps should inform the scientific areas of inquiry, and in this phase, systematic reviews of existing evidence should be performed to inform the development of an intervention strategy, a conceptual model (i.e., the hypothesized pathway toward the desired outcomes or the mechanisms of action), as well as the selection of behavior change techniques and other intervention components.

The process of defining a behavior change intervention usually involves determining the overall approach that will be used and then identifying the components of the intervention. Approaches such as the COM-B model of behavior [53] or IM [54] can be used to guide the intervention’s conceptual model and the identification of its unique components (i.e., behavior change techniques). The COM-B model draws from a single unifying theory of motivation, specifying the variables (i.e., determinants) that need to be influenced to change behavior [53]. On the other hand, the IM approach is a tool for planning and developing interventions, drawing from a wider range of theoretical models for identifying the intervention components. It maps the path from recognition of a need or problem to the identification of a solution. Depending on the solution’s scope, focus, and available paths for intervention, broader theoretical models, such as the socioecological model [55], can also be used for mapping out the intervention components by understanding the variables with impact on the behavior to be changed. To facilitate replication and ensure a common language across interventions and domains, behavior change technique taxonomies such as the one proposed by Michie (2008) should also be used [56,57].

While the expertise of behavior scientists is leveraged to conduct the systematic reviews and to inform the conceptual model and the components of the intervention, other key stakeholders’ expertise (e.g., subject matter experts on the health topic) is folded into the process to inform this stage. This phase should build on the results obtained in the previous phase and specify the scientific backbone of the intervention. By the end of this phase, the intervention should have a conceptual or logic model, along with specifications for the proposed components (e.g., what are the proposed behavior change techniques, and what is the hypothesized mechanism of change through which they will impact the target behavior?). The scientific requirements are agnostic of technology and design: if a certain behavior change technique is required for an intervention (i.e., there is evidence indicating it is necessary), then the technology and design should be in its service.

Additionally, when such evidence exists, this phase should also develop the a priori (i.e., based on previous evidence) decision rules needed for adaptive interventions. When supported by previous evidence, these preliminary rules should specify what component of the intervention should be offered for what users (e.g., personas) based on what criteria and at what threshold. To summarize, the outcome of this phase should be a comprehensive set of behavioral science requirements, based on evidence, that will inform the design and development of an effective DBCI.
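Because the requirements are meant to be technology-agnostic yet binding on design, it can help to record them as structured data and check later design work against them. The sketch below assumes a simple, hypothetical schema (technique label, hypothesized mechanism, required flag) and a hypothetical mapping from design features to the techniques they deliver; the technique labels are written in the style of a behavior change technique taxonomy but are illustrative only.

```python
from dataclasses import dataclass
from typing import Dict, List, Set


@dataclass(frozen=True)
class Requirement:
    bct: str               # technique label, ideally taken from a published BCT taxonomy
    mechanism: str         # hypothesized determinant the technique targets
    required: bool = True  # True when evidence marks the technique as necessary


def unmet_requirements(reqs: List[Requirement], design_map: Dict[str, Set[str]]) -> List[str]:
    """Required techniques that no design feature has been mapped to yet."""
    covered: Set[str] = set().union(*design_map.values())
    return [r.bct for r in reqs if r.required and r.bct not in covered]


# Hypothetical requirements from the define phase.
REQS = [
    Requirement("goal setting (behavior)", "motivation"),
    Requirement("self-monitoring of behavior", "capability"),
    Requirement("social support (unspecified)", "opportunity", required=False),
]
# Hypothetical design features and the techniques each one delivers.
DESIGN = {"weekly_goal_screen": {"goal setting (behavior)"}, "step_dashboard": {"feedback on behavior"}}

print(unmet_requirements(REQS, DESIGN))  # ['self-monitoring of behavior']
```

A check like this makes the define-to-design handoff auditable: a required technique cannot silently drop out of the design while optional ones can be traded off.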

Design phase

Front-end design

The documentation produced in the previous phase should enable designers to develop intervention flows or user journeys, as well as to map the user interactions and design features against the specific scientific requirements for the behavior change techniques embedded within the intervention. This step leverages a human-centered design process, with an emphasis on rapid prototyping and testing. Beyond designers’ expertise, close collaboration with behavior scientists ensures that the behavior science requirements are met in the design assets. As wireframes and prototypes are designed, testing with users and stakeholders should be an ongoing process [58]. Usability and feasibility testing should be performed by sharing prototypes with relevant stakeholders to receive feedback. The key is testing early and often, with design iterations for improved usability made after each test. Methods such as card sorting, click-stream analysis, focus groups, and interviews can be employed to gather both quantitative and qualitative data [59]. It is important to note that the role of this research is not to validate any scientific claims about the DBCI but rather to improve its usability. The goal of testing is to identify an optimal way of bringing the behavior science requirements to life through design. The iterative, collaborative frame for conducting this phase should ensure the design of a highly usable solution, addressing the engagement challenge. Although the description above fits the front-end design of a smartphone app, the same steps can be taken for other types of DBCIs. For example, text-based interventions may not require the same type of design assets, but they require content creation and decision rules, which can be tested and improved in the same manner.
Depending on the technology and aim of the intervention, this phase typically focuses on iteratively improving usability and engagement, whether through visual, written, audio, haptic, or other emerging modalities.

Through iterative testing, the voice of the user is integrated into the design, informing and shaping the solution for better engagement. To help ensure broad accessibility and usability, the study design and sample selection for usability testing should ensure that designs are tested with representative and inclusive sociodemographic samples. Moreover, to reduce the likelihood of design flaws that disproportionately affect participants or patients from populations affected by health disparities, disparities-oriented use case scenarios should be included to help identify skewed embedded assumptions [60].

Back-end design

This step should leverage the expertise of data and systems engineers to allow for thoughtful consideration of the decision rules embedded in the user experience. Data capture (e.g., type of data, level of granularity, and formatting) and integration processes need to be considered (e.g., the DBCI could leverage data captured by a passive data collection device, such as a wearable, which could require data integration with a smartphone app), as well as the computational capabilities needed to deliver the designed user experience.
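As a small, hypothetical example of the data capture and granularity decisions described above, the sketch below assumes a wearable that exports timestamped step counts and aggregates them to daily totals for use by the app's decision rules. The record format, the daily granularity, and the choice to drop negative counts are all assumptions made for illustration.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import datetime
from typing import Dict, Iterable


@dataclass(frozen=True)
class WearableSample:
    """Hypothetical raw wearable record: ISO-8601 timestamp and a step count."""
    timestamp: str
    steps: int


def to_daily_steps(samples: Iterable[WearableSample]) -> Dict[str, int]:
    """Aggregate fine-grained wearable samples to one total per calendar day.

    Timestamps are treated as naive local times (an assumption); a production
    pipeline would need an explicit time-zone policy.
    """
    daily: Dict[str, int] = defaultdict(int)
    for s in samples:
        if s.steps < 0:
            continue  # discard malformed records rather than corrupt the totals
        day = datetime.fromisoformat(s.timestamp).date().isoformat()
        daily[day] += s.steps
    return dict(daily)


samples = [
    WearableSample("2019-01-14T08:15:00", 1200),
    WearableSample("2019-01-14T17:40:00", 2300),
    WearableSample("2019-01-15T09:05:00", 800),
]
print(to_daily_steps(samples))  # → {'2019-01-14': 3500, '2019-01-15': 800}
```

Whether daily totals are sufficient, or whether a just-in-time rule needs hourly data and explicit time-zone handling, is exactly the kind of question this back-end design step should settle before development begins.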

Develop phase

Developing for testing in test environments

In keeping with Agile principles, instead of developing first for real-world settings (e.g., an app store), it is important to pilot-test the intervention in a test environment (e.g., a mobile testing platform). This should be a low-cost endeavor: developing a minimally viable intervention, with a focus on gathering data and evidence for intervention optimization (e.g., improved decision rules). Depending on the goals and research designs employed, this evidence can also support decisions about developing for real-world settings.

Developing for production

In the development stage, systematic collaboration among software developers, data and systems engineers, behavior scientists, and designers is necessary to ensure that the solution is built to accurately reflect the design specifications. Although a description of the scrum process [61] used when applying an agile approach to software development [62–64] is beyond the scope of this paper, it is important for behavior scientists to be familiar with the team structure and roles, and to understand the phases in which their input is needed (e.g., sprint review meetings, in which they, as stakeholders, review and provide input on what the development team has accomplished; demo testing to ensure adherence to the scientific requirements of the intervention). Beyond front-end development, building an integration layer (e.g., interventions may require apps and connected devices) and a back-end architecture is essential. Adaptive interventions rely on modern data science methodologies, such as machine learning, which require specific architecture specifications. Data science, data architecture, and product analytics expertise should also be folded into the development process to ensure that the data infrastructure supports the computational capabilities that will be employed in the implementation and evaluation phase. The interdisciplinary nature of this approach should lay the foundation for a solution whose back end can sustain personalization and optimization capabilities.

Deployment/implementation and evaluation phase

Deployment in test environments

Beta versions (i.e., versions made available for testing in a lab-like environment, typically to a limited number of users, before being released to their intended audience) are deployed for testing and product optimization [65]. Usability and feasibility pilots may precede a traditional randomized controlled trial, and specific designs, such as microrandomized trials, may provide a more resource-efficient way to evaluate and optimize the DBCI [66,67]. It is important to use this phase to assess the DBCI's impact on behavior change. Whereas the iterative testing in the design phase focuses keenly on engagement with the DBCI, the deployment phase must move beyond that to also assess engagement with the health behaviors (see the description of MVP versus MVI above).
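To illustrate the core mechanic of a microrandomized trial [67], the sketch below randomizes delivery of a single intervention component (for example, a prompt) at each decision point where the user is available, and logs the randomization probability with every decision. The 0.5 probability, the availability flags, and the record fields are illustrative assumptions; a real trial would define its decision points, availability criteria, and proximal outcomes in its protocol.

```python
import random
from dataclasses import dataclass
from typing import List


@dataclass
class DecisionPointRecord:
    user_id: str
    decision_point: int
    available: bool
    randomization_prob: float  # probability of treatment used at this point
    treated: bool  # True if the component (e.g., a prompt) was delivered


def run_microrandomization(user_id: str, availability: List[bool],
                           prob: float = 0.5, seed: int = 0) -> List[DecisionPointRecord]:
    """Randomize a component at every decision point where the user is available.

    Recording the randomization probability with each decision keeps the
    information that later effect analyses rely on.
    """
    if not 0.0 < prob < 1.0:
        raise ValueError("prob must be strictly between 0 and 1")
    rng = random.Random(seed)  # seeded so the sketch is reproducible
    log = []
    for t, is_available in enumerate(availability):
        # Unavailable users (e.g., currently driving) are not randomized and receive nothing.
        treated = is_available and rng.random() < prob
        log.append(DecisionPointRecord(user_id, t, is_available,
                                       prob if is_available else 0.0, treated))
    return log


log = run_microrandomization("user_01", availability=[True, True, False, True, True])
available = [r for r in log if r.available]
print(sum(r.treated for r in available), "of", len(available), "available decision points treated")
```

The resulting log, joined with a proximal outcome measured after each decision point, is the kind of data an analysis of the component's effect would start from.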

Deployment in real-world settings

After beta validation and learning, once the DBCI is offered to its intended population, real-world evidence and advanced analytics should continue to be used to improve the intervention and maximize outcomes. While the specific methodologies may be dictated by the context of an intervention, DBCIs afford a coveted stream of real-world evidence: objective data on interaction with the DBCI (e.g., product analytics, such as Google Analytics for web or mobile apps), on the behavior (e.g., wearable data), and on users' context (e.g., weather and geolocation data). Data science methods such as machine learning, data mining, and other modern analytic methods are needed to capitalize on these intensive longitudinal data and identify factors that can inform intervention optimization [45,68]. Additionally, given the limited evidence available for setting complex and comprehensive a priori decision rules, modern data analytic methods can now be used to refine and continuously adjust the decision rules to reflect in-context behavior and DBCI usage. More specifically, methods such as machine learning are needed to analyze the ongoing data captured (e.g., about interaction with the DBCI, real-time behavior, and/or users' context) and to “train” the decision rules to accommodate the complexity of human behavior change [45,49]. Machine learning involves training algorithms to find patterns in data, make predictions, and then learn from those predictions to improve on their own [69]. By collaborating with data scientists and using advanced analytics methods, behavior scientists can devise more efficient interventions.
They can also advance the theoretical understanding of behavior change, helping to answer the big question in behavior science: “What works, how well, for whom, in what settings, for what behaviors or outcomes, and why?” [70]. In doing so, the field can evolve from a one-size-fits-all approach to a more personalized one that is better suited to the complexities of behavior change across large and sociodemographically diverse populations.
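The framework does not prescribe a particular algorithm for “training” decision rules. As one illustrative option, and our substitution rather than the authors' method, the sketch below uses Beta-Bernoulli Thompson sampling, a simple multi-armed bandit that gradually shifts delivery toward whichever component is more often followed by the target behavior. The component names, the binary outcome, and the simulated success rates are hypothetical.

```python
import random
from typing import Dict, List


class ThompsonSamplingRule:
    """Beta-Bernoulli Thompson sampling over candidate intervention components.

    Each component keeps a Beta(alpha, beta) posterior on the probability that
    delivering it is followed by the target behavior (uniform Beta(1, 1) prior).
    """

    def __init__(self, components: List[str], seed: int = 0) -> None:
        self.alpha: Dict[str, float] = {c: 1.0 for c in components}
        self.beta: Dict[str, float] = {c: 1.0 for c in components}
        self.rng = random.Random(seed)

    def choose(self) -> str:
        # Draw a plausible success rate for each component; deliver the highest draw.
        draws = {c: self.rng.betavariate(self.alpha[c], self.beta[c]) for c in self.alpha}
        return max(draws, key=draws.get)

    def update(self, component: str, behavior_observed: bool) -> None:
        # Fold the observed outcome back into that component's posterior.
        if behavior_observed:
            self.alpha[component] += 1
        else:
            self.beta[component] += 1

    def posterior_mean(self, component: str) -> float:
        return self.alpha[component] / (self.alpha[component] + self.beta[component])


def simulate(true_rates: Dict[str, float], rounds: int = 2000, seed: int = 1) -> Dict[str, int]:
    """Run the rule against simulated users with hypothetical true success rates."""
    rule = ThompsonSamplingRule(list(true_rates), seed=seed)
    env = random.Random(seed + 1)  # separate random stream for simulated outcomes
    counts = {c: 0 for c in true_rates}
    for _ in range(rounds):
        component = rule.choose()
        counts[component] += 1
        rule.update(component, env.random() < true_rates[component])
    return counts


print(simulate({"reminder": 0.1, "social_prompt": 0.6}))
```

This version ignores context; a contextual variant would condition the choice on variables such as time of day or recent activity, which is closer to the in-context adaptation described above.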

In the implementation and evaluation phase, leveraging data science capabilities in addition to, or in parallel with, traditional research methods (i.e., for robust generalized causal inferences via effect size estimates) allows faster and more nimble learning cycles, in which dynamic algorithms are created and looped back into the solution, addressing the personalization and optimization challenge.

To summarize, our framework describes an iterative, interdisciplinary, and collaborative process for developing, implementing, and evaluating DBCIs, highlighting the close partnership among behavior scientists, designers, data engineers, software developers, and data scientists, as well as a continuous feedback loop from end users.

CONCLUSIONS

The proposed framework is meant to provide an approach for leveraging the expertise of behavior scientists, UI/UX designers, data scientists, and data engineers, and for guiding the process of defining an intervention from its scientific backbone through its implementation and evaluation stages. It describes the steps of an ongoing, dynamic learning loop that responds to findings from the various rounds of testing and analysis conducted throughout the five proposed stages.

If DBCIs are to deliver on their promise, they can no longer treat behavior science as a source of static truth, a pool of knowledge from which to borrow; rather, DBCIs have to use their own capabilities to contribute to the behavior science field. In addition to engaging interfaces and appealing user experiences, DBCIs can provide a continuous stream of data about users' real-time behavior, their context, and their DBCI usage [71,72]. It is these data, along with the computational capabilities to analyze them, that can advance the science of behavior change by providing opportunities to better understand it [47,48,50] and, in turn, to develop engaging, optimized, and personalized interventions.

Developing effective DBCIs depends on strong interdisciplinary partnerships among behavior scientists, designers, software developers, systems engineers, and data scientists, as no single group has sufficient expertise and resources to develop successful, effective behavioral health technologies on its own [73]. Previously, most behavioral health research was conducted exclusively in academic or medical settings, at a pace dictated by government research funding. We are now witnessing the emergence of new interdisciplinary collaborations among behavior scientists, data scientists, and software and data engineers. One example is the Human Behavior Change Project, which aims to develop an artificial intelligence (AI) system that can extract relevant information from intervention evaluations and build a knowledge base that can be used and improved as new information becomes available [74].

Several limitations of this model need to be considered. First, the proposed framework is intended to be generalizable across various professional settings, from academia to industry; therefore, it stays at a high level instead of providing granular, specific methodology and content. It is intended as a general model that can and should be modified and elaborated to fit the needs of a specific DBCI and its product iteration cycles, which are largely shaped by budget and scope constraints. We acknowledge that implementing such a framework may require certain collaboration platforms or funding mechanisms. One such example is the Small Business Innovation Research (SBIR) program [75], which supports high-tech innovation by funding small-business research and development (R&D) efforts.

A second limitation is that the proposed framework assumes a DBCI ecosystem that can be costly. Building platforms that support machine learning and predictive analytics capabilities for just-in-time adaptive DBCIs requires considerable resources, which may exceed typical government agency funding opportunities and even many industry R&D budgets. Moreover, “real-time” individual personalization may not be feasible to implement or sustain at a large scale with current computational capabilities. Currently, personalization does not mean creating a treatment that is unique to an individual patient. That could be possible if a DBCI were to target tens of users (if the costs of its development and deployment are justified by the limited population it reaches). For DBCIs that could potentially reach millions of people, personalizing at the individual level is not feasible with current computational capabilities. Rather, as with personalized medicine [76], current personalization capabilities rely on classifying individuals into subgroups that are uniquely responsive to a specific intervention component or instantiation of a component (e.g., the UI/UX through which certain techniques are instantiated).
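To make the subgroup idea concrete, the sketch below assumes outcomes have been logged as (subgroup, component, outcome) records, for example from a trial in which components were randomly assigned within each subgroup, and maps each subgroup to the component with the highest mean outcome. The subgroup labels and the `min_n` cutoff are hypothetical, and comparing raw means is only a reasonable proxy when assignment was randomized.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def best_component_per_subgroup(
    records: Iterable[Tuple[str, str, float]], min_n: int = 30
) -> Dict[str, str]:
    """Map each subgroup to the component with the highest mean outcome.

    Subgroup-component pairs with fewer than `min_n` observations are skipped,
    so that a handful of users cannot drive the assignment.
    """
    totals: Dict[Tuple[str, str], float] = defaultdict(float)
    counts: Dict[Tuple[str, str], int] = defaultdict(int)
    for subgroup, component, outcome in records:
        totals[(subgroup, component)] += outcome
        counts[(subgroup, component)] += 1

    best: Dict[str, Tuple[str, float]] = {}
    for (subgroup, component), n in counts.items():
        if n < min_n:
            continue  # too little data to trust this pair's mean
        mean = totals[(subgroup, component)] / n
        if subgroup not in best or mean > best[subgroup][1]:
            best[subgroup] = (component, mean)
    return {subgroup: component for subgroup, (component, _) in best.items()}
```

The output is a lookup table from subgroup to component, which is the subgroup-level (rather than individual-level) personalization described above; the `min_n` guard is an arbitrary choice that a real analysis would replace with proper uncertainty estimates.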

With these considerations in mind, and as with any scientific endeavor, the true value of such a framework has to be determined by assessing its results—the health impact it renders considering the resources it requires.

To conclude, while more complex partnership models and collaboration platforms are very likely to emerge as technology and data science evolve, the behavior science field no longer has to rely on siloed settings to produce, investigate, and implement its digital interventions [73]. The proposed framework may inform practices in both academia and industry, as well as highlight the need to offer collaboration platforms that enable successful partnerships leading to more effective DBCIs and better health outcomes.

Acknowledgment

The team would like to acknowledge the contribution of Kelsey Ford, MPH, who contributed to the evidence review for this paper during her 2018 internship with JJHWS.

Compliance with Ethical Standards

Conflicts of Interest: All authors are employees of Johnson and Johnson, Health and Wellness Solutions Inc.

Authors’ Contributions: All authors had an equal contribution in the conception and design of this work.

Ethical Approval: This article does not contain any studies with human participants performed by any of the authors. This article does not contain any studies with animals performed by any of the authors.

Informed Consent: This study does not involve human participants and informed consent was therefore not required.

References

- 1. Mohr DC, Burns MN, Schueller SM, Clarke G, Klinkman M. Behavioral intervention technologies: evidence review and recommendations for future research in mental health. Gen Hosp Psychiatry. 2013;35(4):332–338.

- 2. Nilsen W, Kumar S, Shar A, et al. Advancing the science of mHealth. J Health Commun. 2012;17(suppl 1):5–10. doi: 10.1080/10810730.2012.677394.

- 3. Marsch LA, Gustafson DH. The role of technology in health care innovation: a commentary. J Dual Diagn. 2013;9(1):101–103.

- 4. Mohr DC, Schueller SM, Riley WT, et al. Trials of intervention principles: evaluation methods for evolving behavioral intervention technologies. J Med Internet Res. 2015;17(7):e166.

- 5. Yardley L, Choudhury T, Patrick K, Michie S. Current issues and future directions for research into digital behavior change interventions. Am J Prev Med. 2016;51(5):814–815. doi: 10.1016/j.amepre.2016.07.019.

- 6. Laranjo L, Arguel A, Neves AL, et al. The influence of social networking sites on health behavior change: a systematic review and meta-analysis. J Am Med Inform Assoc. 2015;22(1):243–256.

- 7. Mohr DC, Schueller SM, Montague E, Burns MN, Rashidi P. The behavioral intervention technology model: an integrated conceptual and technological framework for eHealth and mHealth interventions. J Med Internet Res. 2014;16(6):e146.

- 8. Wang CJ, Huang AT. Integrating technology into health care: what will it take? JAMA. 2012;307(6):569–570.

- 9. Schueller SM, Muñoz RF, Mohr DC. Realizing the potential of behavioral intervention technologies. Curr Dir Psychol Sci. 2013;22(6):478–483. doi: 10.1177/0963721413495872.

- 10. Teyhen DS, Aldag M, Edinborough E, et al. Leveraging technology: creating and sustaining changes for health. Telemed J E Health. 2014;20(9):835–849.

- 11. Kumar RK. Technology and healthcare costs. Ann Pediatr Cardiol. 2011;4(1):84–86.

- 12. Peek ME. Can mHealth interventions reduce health disparities among vulnerable populations. Divers Equal Health Care. 2017;14(2):44–45. doi: 10.21767/2049-5471.100091.

- 13. Michie S, Yardley L, West R, Patrick K, Greaves F. Developing and evaluating digital interventions to promote behavior change in health and health care: recommendations resulting from an international workshop. J Med Internet Res. 2017;19(6):e232.

- 14. Laing BY, Mangione CM, Tseng CH, et al. Effectiveness of a smartphone application for weight loss compared with usual care in overweight primary care patients: a randomized, controlled trial. Ann Intern Med. 2014;161(10 suppl):S5–12.

- 15. Spear Z. (2009, February 19). Average iPhone app usage declines rapidly after first download. Available at https://appleinsider.com/articles/09/02/19/iphone_app_usage_declining_rapidly_after_first_downloads. Accessibility verified February 2, 2019.

- 16. Rodde T. (2018, April 16). 21% of users abandon an app after one use. Available at http://info.localytics.com/blog/21-percent-of-users-abandon-apps-after-one-use. Accessibility verified January 2019.

- 17. Foster EC. User interface design. In: Software Engineering: A Methodological Approach. Berkeley, CA: Apress; 2014:187–206.

- 18. International Organization for Standardization. ISO 9241-11:1998, Ergonomic Requirements for Office Work with Visual Display Terminals (VDTs) -- Part 11: Guidance on Usability. Geneva, Switzerland: International Organization for Standardization; March 15, 1998. Available at https://www.iso.org/obp/ui/#iso:std:iso:9241:-11:ed-1:v1:en. Accessibility verified January 2019.

- 19. Norman DA, Draper SW. User Centered System Design: New Perspectives on Human-computer Interaction. CRC Press; 1986. doi: 10.1201/b15703.

- 20. International Organization for Standardization. ISO 9241-210, Ergonomics of Human-System Interaction -- Part 210: Human-Centered Design for Interactive Systems. Geneva, Switzerland: International Organization for Standardization; March 15, 2010. Available at https://www.iso.org/standard/52075.html. Accessibility verified January 2019.

- 21. Forbes. (n.d.). Top 100 Digital Companies. Available at https://www.forbes.com/top-digital-companies/list/2/#tab:rank. Accessibility verified January 2019.

- 22. Google. (n.d.). What is a design sprint? Available at https://designsprintkit.withgoogle.com/introduction/overview. Accessibility verified January 2019.

- 23. Design Kit. (n.d.). What is human-centered design? Available at http://www.designkit.org/human-centered-design. Accessibility verified January 2019.

- 24. Microsoft Design. (n.d.). Inclusive design. Available at https://www.microsoft.com/design/inclusive/. Accessibility verified January 2019.

- 25. Harte R, Glynn L, Rodríguez-Molinero A, et al. A human-centered design methodology to enhance the usability, human factors, and user experience of connected health systems: a three-phase methodology. JMIR Hum Factors. 2017;4(1):e8.

- 26. Agile Alliance. (n.d.). Manifesto for Agile software development. Available at https://www.agilealliance.org/agile101/the-agile-manifesto/. Accessibility verified January 2019.

- 27. Glomann L. Introducing ‘human-centered agile workflow’ (HCAW) – an agile conception and development process model. 2018;646–655. doi: 10.1007/978-3-319-60492-3_61.

- 28. Da Silva TS, Martin A, Maurer F, Silveira M. (2011, August). User-centered design and agile methods: a systematic review. In: Proceedings of the 2011 Agile Conference (AGILE ‘11). IEEE Computer Society, Washington, DC, USA: 77–86. doi: 10.1109/AGILE.2011.24.

- 29. Memmel T, Gundelsweiler F, Reiterer H. (2007, September). Agile human-centered software engineering. In: Proceedings of the 21st British HCI Group Annual Conference on People and Computers: HCI... but not as we know it-Volume 1. Swinton, UK: British Computer Society:167–175. ISBN: 978-1-902505-94-7.

- 30. Agile Alliance. (n.d.). What is agile? Available at https://www.agilealliance.org/agile101/. Accessibility verified January 2019.

- 31. Collins LM, Baker TB, Mermelstein RJ, et al. The multiphase optimization strategy for engineering effective tobacco use interventions. Ann Behav Med. 2011;41(2):208–226.

- 32. Duff OM, Walsh DM, Furlong BA, O’Connor NE, Moran KA, Woods CB. Behavior change techniques in physical activity eHealth interventions for people with cardiovascular disease: systematic review. J Med Internet Res. 2017;19(8):e281.

- 33. Bartholomew LK, Parcel GS, Kok G. Intervention mapping: a process for developing theory- and evidence-based health education programs. Health Educ Behav. 1998;25(5):545–563.

- 34. Collins LM, Murphy SA, Strecher V. The multiphase optimization strategy (MOST) and the sequential multiple assignment randomized trial (SMART): new methods for more potent eHealth interventions. Am J Prev Med. 2007;32(5 suppl):S112–S118.

- 35. Wyrick DL, Rulison KL, Fearnow-Kenney M, Milroy JJ, Collins LM. Moving beyond the treatment package approach to developing behavioral interventions: addressing questions that arose during an application of the Multiphase Optimization Strategy (MOST). Transl Behav Med. 2014;4(3):252–259.

- 36. Stults-Kolehmainen MA, Sinha R. The effects of stress on physical activity and exercise. Sports Med. 2014;44(1):81–121.

- 37. Borodulin K, Sipilä N, Rahkonen O, et al. Socio-demographic and behavioral variation in barriers to leisure-time physical activity. Scand J Public Health. 2016;44(1):62–69.

- 38. Sallis JF, Owen N, Fisher E. Ecological models of health behavior. In: Glanz K, Rimer BK, Viswanath K, eds. Health Behavior: Theory, Research, and Practice. 5th ed. San Francisco, CA: Jossey-Bass; 2015:43–64. ISBN: 978-1-118629-00-0.

- 39. Fan JX, Wen M, Kowaleski-Jones L. Rural-urban differences in objective and subjective measures of physical activity: findings from the national health and nutrition examination survey (NHANES) 2003-2006. Prev Chronic Dis. 2014;11:E141.

- 40. Moran M, Van Cauwenberg J, Hercky-Linnewiel R, Cerin E, Deforche B, Plaut P. Understanding the relationships between the physical environment and physical activity in older adults: a systematic review of qualitative studies. Int J Behav Nutr Phys Act. 2014;11(1):79.

- 41. Pagoto S. (2017, November 17). How do you change behavior? Available at https://www.pchalliance.org/news/how-do-you-change-behavior. Accessibility verified January 2019.

- 42. Lustria ML, Noar SM, Cortese J, Van Stee SK, Glueckauf RL, Lee J. A meta-analysis of web-delivered tailored health behavior change interventions. J Health Commun. 2013;18(9):1039–1069.

- 43. Nahum-Shani I, Smith SN, Spring BJ, et al. Just-in-time adaptive interventions (JITAIs) in mobile health: key components and design principles for ongoing health behavior support. Ann Behav Med. 2017;52(6):446–462. doi: 10.1007/s12160-016-9830-8.

- 44. Almirall D, Nahum-Shani I, Sherwood NE, Murphy SA. Introduction to SMART designs for the development of adaptive interventions: with application to weight loss research. Transl Behav Med. 2014;4(3):260–274.

- 45. Nahum-Shani I, Hekler EB, Spruijt-Metz D. Building health behavior models to guide the development of just-in-time adaptive interventions: a pragmatic framework. Health Psychol. 2015;34(S):1209–1219.

- 46. Hekler EB, Rivera DE, Martin CA, et al. Tutorial for using control systems engineering to optimize adaptive mobile health interventions. J Med Internet Res. 2018;20(6):e214.

- 47. Nilsen WJ, Pavel M. Moving behavioral theories into the 21st century: technological advancements for improving quality of life. IEEE Pulse. 2013;4(5):25–28.

- 48. Spruijt-Metz D, Hekler E, Saranummi N, et al. Building new computational models to support health behavior change and maintenance: new opportunities in behavioral research. Transl Behav Med. 2015;5(3):335–346.

- 49. Dallery J, Kurti A, Erb P. A new frontier: integrating behavioral and digital technology to promote health behavior. Behav Anal. 2015;38(1):19–49.

- 50. Rivera DE, Jimison HB. Systems modeling of behavior change: two illustrations from optimized interventions for improved health outcomes. IEEE Pulse. 2013;4(6):41–47.

- 51. London AJ. Equipoise in research: integrating ethics and science in human research. JAMA. 2017;317(5):525–526.

- 52. Richey RC, Klein JD. Design and Development Research: Methods, Strategies, and Issues. New York, NY: Routledge; 2014. doi: 10.4324/9780203826034.

- 53. Michie S, van Stralen MM, West R. The behaviour change wheel: a new method for characterising and designing behaviour change interventions. Implement Sci. 2011;6:42.

- 54. Bartholomew LK, Parcel GS, Kok G, Gottlieb NH, Fernández ME. Planning Health Promotion Programs: An Intervention Mapping Approach. 3rd ed. San Francisco, CA: Jossey-Bass; 2011. ISBN: 978-0-470528-51-8.

- 55. McLeroy KR, Bibeau D, Steckler A, Glanz K. An ecological perspective on health promotion programs. Health Educ Q. 1988;15(4):351–377.

- 56. University College London. (n.d.). BCT taxonomy. Available at https://www.ucl.ac.uk/health-psychology/bcttaxonomy. Accessibility verified January 2019.

- 57. Abraham C, Michie S. A taxonomy of behavior change techniques used in interventions. Health Psychol. 2008;27(3):379–387.

- 58. Fulton E, Kwah K, Wild S, Brown K. Lost in translation: transforming behaviour change techniques into engaging digital content and design for the StopApp. Healthcare. 2018;6(3):75. doi: 10.3390/healthcare6030075.

- 59. Rohrer C. When to Use Which User-Experience Research Methods. Available at https://www.nngroup.com/articles/which-ux-research-methods/. Accessibility verified January 2019.

- 60. Gibbons MC, Lowry SZ, Patterson ES. Applying human factors principles to mitigate usability issues related to embedded assumptions in health information technology design. JMIR Hum Factors. 2014;1(1):e3.

- 61. Agile Alliance. (n.d.). Scrum. Available at https://www.agilealliance.org/glossary/scrum. Accessibility verified January 2019.

- 62. Dingsøyr T, Nerur S, Balijepally V, Moe NB. A decade of agile methodologies: towards explaining agile software development. J Syst Softw. 2012;85(6):1213–1221. doi: 10.1016/j.jss.2012.02.033.

- 63. Dybå T, Dingsøyr T. Empirical studies of agile software development: a systematic review. Inf Softw Technol. 2008;50(9–10):833–859. doi: 10.1016/j.infsof.2008.01.006.

- 64. Barlow JB, Giboney J, Keith MJ, et al. Overview and guidance on agile development in large organizations. Commun Assoc Inf Syst. 2011;29(2):25–44. doi: 10.2139/ssrn.1909431.

- 65. Beta version. (2019, January 24). Wiktionary. Available at https://en.wiktionary.org/wiki/beta_version. Accessibility verified January 2019.

- 66. Hekler EB, Klasnja P, Riley WT, et al. Agile science: creating useful products for behavior change in the real world. Transl Behav Med. 2016;6(2):317–328.

- 67. Klasnja P, Hekler EB, Shiffman S, et al. Microrandomized trials: an experimental design for developing just-in-time adaptive interventions. Health Psychol. 2015;34(S):1220–1228.

- 68. Smith DM, Walls TA. mHealth analytics. In: Marsch LA, Lord SE, Dallery J, eds. Behavioral Health Care and Technology: Using Science-Based Innovations to Transform Practice. New York, NY: Oxford University Press; 2014:153–167.

- 69. SAS. Machine learning. Available at https://www.sas.com/en_us/insights/analytics/machine-learning.html. Accessibility verified January 2019.

- 70. West R, Michie S. A Guide to Development and Evaluation of Digital Behaviour Interventions in Healthcare. Great Britain: Silverback Publishing; 2016.

- 71. Wood AD, Stankovic JA, Virone G, et al. Context-aware wireless sensor networks for assisted living and residential monitoring. IEEE Netw. 2008;22(4):26–33. doi: 10.1109/MNET.2008.4579768.

- 72. Dunton GF, Liao Y, Intille SS, Spruijt-Metz D, Pentz M. Investigating children’s physical activity and sedentary behavior using ecological momentary assessment with mobile phones. Obesity (Silver Spring). 2011;19(6):1205–1212.

- 73. Sucala M, Nilsen W, Muench F. Building partnerships: a pilot study of stakeholders’ attitudes on technology disruption in behavioral health delivery and research. Transl Behav Med. 2017;7(4):854–860.

- 74. Michie S, West R, Johnston M, et al. Human Behavior Change Project: Digitising the knowledge base on effectiveness of behavior change interventions (n.d.). Available at https://www.frontiersin.org/10.3389/conf.FPUBH.2017.03.00008/event_abstract. Accessibility verified January 2019.

- 75. SBIR. (n.d.). About SBIR. Available at https://www.sbir.gov/about/about-sbir. Accessibility verified January 2019.

- 76. Redekop WK, Mladsi D. The faces of personalized medicine: a framework for understanding its meaning and scope. Value Health. 2013;16(6 Suppl):S4–S9.