Summary

Technology advancement demands energy storage devices (ESDs) and systems (ESSs) with better performance, longer life, higher reliability, and smarter management strategies. Designing such systems involves a trade-off among a large set of parameters, whereas advanced control strategies need to rely on the instantaneous status of many indicators. Machine learning can dramatically accelerate calculations, capture complex mechanisms to improve the prediction accuracy, and make optimized decisions based on comprehensive status information. Its computational efficiency makes it applicable for real-time management. This paper reviews recent progress in this emerging area, especially new concepts, approaches, and applications of machine learning technologies for commonly used energy storage devices (including batteries, capacitors/supercapacitors, fuel cells, and other ESDs) and systems (including battery ESS, hybrid ESS, grid and microgrid-containing energy storage units, pumped-storage systems, and thermal ESS). A perspective on future directions is also discussed.

Subject areas: Applied Computing, Energy Storage, Materials Design


Introduction and overviews

With economic growth, global energy consumption has increased significantly over the last decade. For instance, global electricity and fossil fuel consumption increased by 19.44% and 9.14%, respectively, from 2010 to 2017, according to statistics from the International Energy Agency. These increases have aggravated the issues of energy shortage and CO2 emission. The amount of CO2 emission increased by 22.2% from 2010 to 2019. Improving the efficiency of energy usage and promoting renewable energy have become crucial. The increasing use of consumer electronics and electrified mobility drives the demand for mobile power sources, which stimulates the development and management of energy storage devices (ESDs) and energy storage systems (ESSs). The increasing complexity of ESDs and ESSs and the large amount of front-end data pose significant challenges to traditional models and algorithms. New state-of-the-art technology is needed to overcome the limitations of traditional approaches and to deliver higher accuracy, higher efficiency, and better optimization.

Machine learning (ML), coupled with big data, has been flourishing in recent years. Integrating human knowledge into machine learning (Deng et al., 2020) has achieved functions and performance not available before and facilitated the interaction between human beings and machine learning systems, making machine learning decisions understandable to humans. Beyond computer and data science applications such as computer vision, natural language processing, image recognition, and search engines, machine learning is increasingly used in the fields of physics (Carleo et al., 2019; Dunjko and Briegel, 2018), chemistry (Goh et al., 2017; Panteleev et al., 2018), biology (Silva et al., 2019; Zitnik et al., 2019), engineering (Flah et al., 2020; Kim et al., 2018; McCoy and Auret, 2019), and materials science (Morgan and Jacobs, 2020). Machine learning technologies also have great potential for addressing the development and management of energy storage devices and systems by significantly improving the prediction accuracy and computational efficiency. Several recent reviews have highlighted the trend. The work in (Zhang et al., 2019a) reviewed the use of deep learning technologies for prognostics and health management (PHM), which includes fault detection, diagnosis, and prognosis, in application domains including batteries. The work in (Hu et al., 2020; Meng and Li, 2019) reviewed the application of different approaches, including physics-based (model-based) approaches, data-driven approaches, and hybrid approaches, to the PHM and lifetime prognosis of batteries. The work in (Ng et al., 2020) reviewed the application of machine learning to the estimation of state of charge and state of health for batteries. The work in (Erick and Folly, 2020) reviewed the application of reinforcement learning to the management of grid-tied microgrid energy systems, specifically aiming at control problems.
The work in (Chen et al., 2020; Gu et al., 2019) reviewed the application of machine learning in the field of energy storage and renewable energy materials for rechargeable batteries, photovoltaics, catalysis, superconductors, and solar cells, specifically focusing on how machine learning can assist the design, development, and discovery of novel materials. These reviews mainly focus on the application of certain types of machine learning algorithms in a specific subarea. Because the fields of energy storage devices and systems and of machine learning are both broad, a more comprehensive review is needed to better represent and guide the relevant state-of-the-art research and development. A unique aspect of this review is its coverage of machine learning in both device-level and system-level applications.

In this paper, we provide a comprehensive review of recent advances and applications of machine learning in ESDs and ESSs. These include state estimation, lifetime prediction, fault and defect diagnosis, property and behavior analysis, modeling, design and optimization for ESDs, as well as modeling and optimization of the control strategy for ESSs. The paper is organized as follows: first, we discuss the status and challenges that current ESDs and ESSs are facing. Then, we introduce the major machine learning technologies that have been used in the field of energy storage. Next, we present how machine learning is applied to ESDs. After that, we introduce the application of machine learning to ESSs. Finally, we provide a summary and perspective on future directions.

Development and challenges of current energy storage devices and systems

ESDs can store energy in various forms (Pollet et al., 2014). Examples include electrochemical ESDs (such as batteries, flow batteries, capacitors/supercapacitors, and fuel cells), physical ESDs (such as superconducting magnetic energy storage, compressed air, pumped storage, and flywheels), and thermal ESDs (such as sensible heat storage and latent heat storage based on phase change materials). Batteries alone have various types, such as lithium-ion, sodium-ion, lead-acid, nickel–metal hydride, and zinc-air batteries. Representative flow batteries are vanadium flow, zinc-based flow, and polysulfide-halide flow batteries. Commonly used fuel cells include alkaline, polymer electrolyte membrane, solid oxide, and microbial fuel cells (Mejia and Kajikawa, 2020). Batteries are widely used in automobiles, consumer electronics, mobile power, aeronautics and astronautics, medical equipment, and security systems. Supercapacitors are used in applications that require high current and frequent charge/discharge cycles, such as automobiles, energy harvesting, and consumer electronics. Fuel cells are attractive for medium- to heavy-duty transport and are used in automobiles, electricity generation, aeronautics, and astronautics. Flywheels are mainly used as uninterruptible power supplies in the power grid to provide backup power instantaneously, as well as in some types of vehicles. Both flywheels and compressed air can store surplus electricity and supply it during peak demand. Pumped storage is mainly used for generating electricity. Thermal ESDs are mainly used for heat storage and reuse in buildings and industrial processes and for storing solar energy for electricity generation. Typical ESD parameters include specific energy, specific power, storage capacity, response time, efficiency, charge-discharge rate, lifetime, capital/operational cost, heat sensitivity, maintenance, etc. (Pollet et al., 2014).

The goals of current ESD development can be grouped into five categories: (1) lowering the cost, (2) improving the performance and efficiency, (3) ensuring the usage safety, (4) promoting the reliability and durability, and (5) reducing the environmental impact. Achieving these goals relies on accurate ESD modeling that guides the performance analysis and design. One associated challenge is the identification of model parameters. Appropriate ESD design, including the choice of structural parameters, material selection, and the design of operational strategies, is critical in ensuring the target cost, performance, efficiency, durability, and safety. A challenge is to systematically optimize the design for various conditions. In addition, monitoring and predicting the ESD status, such as the state of charge, state of health, and capacity of a battery, is vital for the management system to perform and adjust its control strategy to maximize the performance, prolong the lifetime, and ensure the safety of an ESD. The status is often difficult to measure directly and to predict. A challenge is to distill useful information from a large measured dataset.

Appropriate design and optimization of an ESS is critical to achieve high efficiency in energy storage and harvesting. An ESS is typically in the form of a grid or a microgrid containing energy storage units (a single ESD or multiple ESDs), monitoring units, and scheduling management units. Representative systems include electric ESS and thermal ESS. Specifically, the electric ESS mainly includes battery ESS, battery/supercapacitor/fuel cell hybrid ESS, hydraulic ESS, flywheel ESS, and compressed air ESS. ESSs are widely used in transportation (especially pure electric vehicles and hybrid electric vehicles), consumer electronics, grids and microgrids, buildings, aeronautics and astronautics applications, etc. The goal of ESS development is to achieve high energy storage capacity, high power distribution ability, high operation and energy usage efficiency, long durability, and low system cost. A main challenge for current ESSs is the selection and adjustment of the control strategy based on the status of each unit and the energy demand.

Overview of machine learning technologies

Representative types of machine learning algorithms

Unsupervised learning

Unsupervised learning operates on unlabeled datasets and is typically used for clustering problems. A commonly used algorithm is k-means clustering (not to be confused with the k-nearest neighbor algorithm (k-NN), which is a supervised method). This algorithm first randomly sets k initial centroids for k clusters, assigns each sample to the closest cluster centroid, and then moves the centroids to minimize the cost function (e.g., the distance between the samples and the centroids). The learning process is terminated when the cost function is minimized or the maximum number of iterations is reached. Hierarchical clustering is another widely used algorithm, which calculates the similarity between two data points (quantified by the Euclidean distance), between two datasets (quantified by a distance calculated by the single linkage, complete linkage, or average linkage method), or between a data point and a dataset, and merges the two units having the highest similarity. The learning process is terminated when the number of clusters decreases to a given value. Density-based spatial clustering of applications with noise (DBSCAN), proposed in the work of (Ester et al., 1996), is also a commonly used clustering algorithm. This algorithm groups the data points that are closely packed (described by the scanning radius, ε, and the minimum number of points required to form a dense region, MinPts) and marks the data points located in the low-density regions as outliers. The learning process is terminated when all the data points are scanned. Other clustering algorithms include the Gaussian mixture model and the mean shift algorithm.
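The assignment and update steps of centroid-based clustering described above can be sketched in a few lines. This is a minimal illustration using NumPy, not an implementation taken from any of the reviewed works; the function name `kmeans`, the synthetic two-blob data, and the random seeds are assumptions made for the example.

```python
import numpy as np

def kmeans(points, k, max_iter=100, seed=0):
    """Plain k-means clustering: returns (centroids, labels)."""
    rng = np.random.default_rng(seed)
    # Initialise the centroids with k distinct samples chosen at random.
    centroids = points[rng.choice(len(points), size=k, replace=False)]
    for _ in range(max_iter):
        # Assignment step: each sample joins its closest centroid.
        dists = np.linalg.norm(points[:, None, :] - centroids[None, :, :], axis=2)
        labels = dists.argmin(axis=1)
        # Update step: move each centroid to the mean of its members.
        new_centroids = np.array([
            points[labels == j].mean(axis=0) if np.any(labels == j) else centroids[j]
            for j in range(k)
        ])
        if np.allclose(new_centroids, centroids):
            break  # centroids stopped moving, so the cost no longer decreases
        centroids = new_centroids
    return centroids, labels

# Two well-separated synthetic blobs (illustrative data only).
rng = np.random.default_rng(1)
blob_a = rng.normal(loc=[0.0, 0.0], scale=0.1, size=(20, 2))
blob_b = rng.normal(loc=[5.0, 5.0], scale=0.1, size=(20, 2))
data = np.vstack([blob_a, blob_b])
centroids, labels = kmeans(data, k=2)
```

The loop stops either when the centroids no longer move or when `max_iter` is reached, mirroring the two termination criteria mentioned in the text.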

Supervised learning

Supervised learning operates on labeled data and is widely used for classification and regression problems. Linear regression (LR), polynomial regression, and exponential regression are the fundamental regression algorithms to construct a direct relationship between the independent and dependent variables. In addition, Gaussian process regression (GPR) is a type of regression algorithm that is gaining increasing attention. GPR assumes that the samples follow a Gaussian process (GP) rather than a fixed parametric form; the GP is determined by its mean and covariance functions (as indicated in Equations (1), (2), and (3), where x and x' denote two different input vectors, m(x) is the mean function, and κ(x, x') is the covariance function). Specifically, the covariance function (kernel function) can take multiple forms, such as a linear kernel, squared exponential kernel, Matérn kernel, periodic kernel, or a compound of multiple types of kernel functions. During training with a labeled sample dataset, the hyperparameters of the mean and covariance functions are optimized. GPR typically achieves a higher estimation accuracy than the LR algorithm when dealing with non-linear relationships.

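| $f(x) \sim \mathcal{GP}\big(m(x), \kappa(x, x')\big)$ | (Equation 1) |

| $m(x) = \mathbb{E}\big[f(x)\big]$ | (Equation 2) |

| $\kappa(x, x') = \mathbb{E}\big[(f(x) - m(x))(f(x') - m(x'))\big]$ | (Equation 3) |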
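To make the role of the mean and covariance functions concrete, the sketch below computes the standard GP posterior mean and variance with a squared exponential kernel. It assumes a zero mean function, fixed (not optimized) hyperparameters, and one-dimensional inputs; the names `sq_exp_kernel`, `gpr_predict`, `length_scale`, `signal_var`, and `noise_var` are illustrative choices, not notation from the reviewed works.

```python
import numpy as np

def sq_exp_kernel(xa, xb, length_scale=1.0, signal_var=1.0):
    """Squared exponential covariance kappa(x, x') for 1-D inputs."""
    diff = xa[:, None] - xb[None, :]
    return signal_var * np.exp(-0.5 * (diff / length_scale) ** 2)

def gpr_predict(x_train, y_train, x_test, noise_var=1e-6):
    """Posterior mean and variance of a zero-mean GP (m(x) = 0 is assumed)."""
    k_tt = sq_exp_kernel(x_train, x_train) + noise_var * np.eye(len(x_train))
    k_st = sq_exp_kernel(x_test, x_train)
    k_ss = sq_exp_kernel(x_test, x_test)
    alpha = np.linalg.solve(k_tt, y_train)
    mean = k_st @ alpha
    cov = k_ss - k_st @ np.linalg.solve(k_tt, k_st.T)
    return mean, np.diag(cov)

# Synthetic training data (illustrative only): samples of sin(x).
x_train = np.arange(6.0)
y_train = np.sin(x_train)
mean, var = gpr_predict(x_train, y_train, x_train)
```

Evaluated at the training inputs, the posterior mean reproduces the training targets almost exactly and the posterior variance collapses toward zero, which is the behavior expected of a nearly noise-free GP. In practice the kernel hyperparameters would be fitted, for example by maximizing the marginal likelihood.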

Support vector machine (SVM) is a representative kernel-based supervised learning algorithm. For solving classification problems on a linearly separable dataset (such as (x, y), where x denotes the input sample vector and y denotes the labeled target vector), SVM uses two parallel hyperplanes (the margin) to clearly separate the data. The decision boundary in the middle of the two parallel hyperplanes has the form shown in Equation (4), where w and b denote the weight vector and the bias parameter, respectively. The distance between the decision boundary and each hyperplane is $1/\lVert w \rVert$ for a normalized or standardized dataset. For solving classification problems on a linearly inseparable dataset, SVM introduces a hinge loss function to reduce the classification errors, and the problem changes to minimizing the function L in Equation (5), where n denotes the number of samples and λ denotes a regularization parameter. SVM can also apply the kernel method (the decision boundary has the form shown in Equations (6) and (7), where φ is a mapping function) by using a kernel function such as those in Table 1 to transform the input vectors to a higher dimensional feature space (Hilbert space) and then using hyperplanes to separate the data. SVM can also be used to solve regression problems (called SVR, or support vector regression). The interior point method (IPM), sequential minimal optimization (SMO), and stochastic gradient descent (SGD) are often employed for solving the parameters of the hyperplanes. Other commonly used SVM forms include support vector clustering (SVC) and Bayesian SVM. The relevance vector machine (RVM) is also a kernel-based model and is based on the sparse Bayesian learning theory (Tipping, 2001, 2003). RVM is similar to SVM and has been used in the field of energy storage.

| $w^{T} x + b = 0$ | (Equation 4) |

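| $L = \frac{1}{n} \sum_{i=1}^{n} \max\big(0,\ 1 - y_i (w^{T} x_i + b)\big) + \lambda \lVert w \rVert^{2}$ | (Equation 5) |

| $w^{T} \varphi(x) + b = 0$ | (Equation 6) |

| $\kappa(x_i, x_j) = \varphi(x_i)^{T} \varphi(x_j)$ | (Equation 7) |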
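As a rough illustration of the soft-margin formulation, the sketch below minimizes a commonly used objective, the mean hinge loss plus an L2 penalty on the weights, by full-batch subgradient descent. The exact objective, the learning rate, the toy data, and the names `hinge_objective` and `train_linear_svm` are assumptions made for this example; production solvers typically rely on SMO or interior point methods instead.

```python
import numpy as np

def hinge_objective(w, b, x, y, lam):
    """Assumed soft-margin objective: mean hinge loss plus lam * ||w||^2."""
    margins = y * (x @ w + b)
    return np.mean(np.maximum(0.0, 1.0 - margins)) + lam * np.dot(w, w)

def train_linear_svm(x, y, lam=0.01, lr=0.1, epochs=200):
    """Full-batch subgradient descent on the objective above."""
    w = np.zeros(x.shape[1])
    b = 0.0
    for _ in range(epochs):
        margins = y * (x @ w + b)
        active = margins < 1.0  # samples that violate the margin
        grad_w = -(y[active, None] * x[active]).sum(axis=0) / len(x) + 2.0 * lam * w
        grad_b = -y[active].sum() / len(x)
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

# Toy linearly separable data with labels in {-1, +1} (illustrative only).
x = np.array([[2.0, 2.0], [3.0, 3.0], [2.5, 3.5],
              [-2.0, -2.0], [-3.0, -1.5], [-2.5, -3.0]])
y = np.array([1.0, 1.0, 1.0, -1.0, -1.0, -1.0])
w, b = train_linear_svm(x, y)
pred = np.sign(x @ w + b)
```

The sign of `x @ w + b` gives the predicted class, and only samples inside the margin contribute to the gradient, which is the defining property of the hinge loss.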

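Table 1.

Commonly used kernel functions for vector machine algorithms

| Kernel function name | Expression |
|---|---|
| Linear kernel | $\kappa(x_i, x_j) = x_i^{T} x_j$ |
| Polynomial kernel | $\kappa(x_i, x_j) = (x_i^{T} x_j)^{n}$ |
| Laplacian kernel | $\kappa(x_i, x_j) = \exp\big(-\lVert x_i - x_j \rVert / \sigma\big)$ |
| Radial basis kernel (Gaussian kernel) | $\kappa(x_i, x_j) = \exp\big(-\lVert x_i - x_j \rVert^{2} / (2\sigma^{2})\big)$ |
| Sigmoid kernel | $\kappa(x_i, x_j) = \tanh\big(a\, x_i^{T} x_j + b\big)$ |

Note: $x_i$ and $x_j$ denote two sample vectors; n, a, b, and σ denote the parameters in the kernel function.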

Decision tree (DT) is another supervised learning algorithm. DT firstly chooses features to form a root node and calculates the information gain (rate) of the features. The feature with the largest information gain is selected as the node feature, whereas child nodes are built based on different values of the feature. Further child nodes are generated in the same way for each child node until the information gain is small or there are no features to choose from. The DT commonly uses the ID3, C4.5, or CART algorithm to calculate and optimize the information gain, which is based on information entropy. The random forest (RF) algorithm, which is a type of ensemble learning containing multiple DT models, can be used to increase the robustness of a tree-based algorithm. The RF algorithm surveys the result of each DT model and chooses the result with the most votes as its result.
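The entropy-based feature selection described above can be illustrated with a short calculation. The sketch below computes the information gain of two candidate features and picks the one with the larger gain as the root node, in the spirit of ID3; the toy labels, the feature names, and the helper functions are hypothetical.

```python
import numpy as np

def entropy(labels):
    """Shannon entropy (in bits) of a label array."""
    _, counts = np.unique(labels, return_counts=True)
    probs = counts / counts.sum()
    return float(-np.sum(probs * np.log2(probs)))

def information_gain(feature, labels):
    """Entropy reduction obtained by splitting on each distinct feature value."""
    total = entropy(labels)
    weighted = 0.0
    for value in np.unique(feature):
        mask = feature == value
        weighted += mask.mean() * entropy(labels[mask])
    return total - weighted

# Hypothetical toy data: which feature should become the root node?
labels = np.array([1, 1, 0, 0, 1, 0])
feature_a = np.array(["hi", "hi", "lo", "lo", "hi", "lo"])  # separates the classes
feature_b = np.array(["x", "y", "x", "y", "x", "y"])        # weakly informative
gains = {
    "feature_a": information_gain(feature_a, labels),
    "feature_b": information_gain(feature_b, labels),
}
root = max(gains, key=gains.get)
```

Here `feature_a` splits the labels perfectly, so its gain equals the full label entropy of 1 bit and it is selected as the root; the same calculation is then repeated on each child node.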

Deep learning

Deep learning builds upon artificial neural networks (ANNs) and can be either unsupervised or supervised. It establishes a relation between the input and target parameters through non-linear functions and uses optimization methods to calculate the function parameters. Deep learning has received increasing attention along with the development of big data mining and advanced computational technologies. A standard ANN model is the single-layer feedforward neural network (SLFNN), which contains an input layer, a hidden layer, and an output layer, as shown in Figure 1A. An SLFNN can be described by Equation (8), where x denotes the input sample, f(x) denotes the SLFNN output, fa denotes the activation function (e.g., Table 2), W is the weight, and b is the bias. A deep neural network (DNN) contains more than one hidden layer (Figure 1B). A DNN can be described by Equation (9). SLFNN and DNN are trained through backpropagation (an optimization algorithm based on gradient descent that optimizes the weights and biases in the neural network). The deep belief network (DBN) is another widely used form of ANN, proposed in the work of (Hinton et al., 2006).

| (Equation 8) |

| (Equation 9) |

Figure 1.

A schematic of commonly used machine learning models

(A) Single-layer feed-forward neural network (SLFNN).

(B) Deep neural network (DNN).

(C) Auto encoder (AE).

(D) Convolution neural network (CNN).

(E) Recurrent neural network (RNN).

(F) Reinforcement learning architecture.

(G) Generative adversarial network (GAN).

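Table 2.

Commonly used activation functions in deep learning

| Name (fa) | Expression |
|---|---|
| Sigmoid function | $f_a(x) = \dfrac{1}{1 + e^{-x}}$ |
| ReLU function | $f_a(x) = \max(0, x)$ |
| Tanh function | $f_a(x) = \dfrac{e^{x} - e^{-x}}{e^{x} + e^{-x}}$ |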

The extreme learning machine (ELM) proposed by (Huang et al., 2011) is a type of neural network with a single hidden layer. The weights and the biases are randomly defined, whereas the output layer contains no bias. During training, the weights of the output layer are transformed to a linear system for solving by a pseudo-inverse method. This is different from the typical ANN-based model, where the weight and bias parameters are obtained by the gradient descent algorithm.
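The difference between ELM training and gradient-based training is easiest to see in code. The sketch below draws the hidden-layer weights and biases at random, keeps them fixed, and solves for the output weights with the Moore-Penrose pseudo-inverse. The tanh activation, the number of hidden units, the sine-wave data, and the function names are assumptions made for this illustration.

```python
import numpy as np

def train_elm(x, y, n_hidden=30, seed=0):
    """Fit an ELM: random fixed hidden layer, least-squares output weights."""
    rng = np.random.default_rng(seed)
    w_in = rng.normal(size=(x.shape[1], n_hidden))  # random, never trained
    b_in = rng.normal(size=n_hidden)                # random, never trained
    hidden = np.tanh(x @ w_in + b_in)
    # Output weights from the pseudo-inverse (the output layer has no bias).
    w_out = np.linalg.pinv(hidden) @ y
    return w_in, b_in, w_out

def predict_elm(x, params):
    """Evaluate the trained ELM on new inputs."""
    w_in, b_in, w_out = params
    return np.tanh(x @ w_in + b_in) @ w_out

# Synthetic regression task (illustrative only).
x = np.linspace(-1.0, 1.0, 50).reshape(-1, 1)
y = np.sin(3.0 * x).ravel()
params = train_elm(x, y)
train_error = float(np.max(np.abs(predict_elm(x, params) - y)))
```

Because training reduces to a single linear least-squares solve, no iterative gradient updates are needed, which is the source of the short training time usually attributed to ELM.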

Auto encoder (AE) is a neural network-based unsupervised learning algorithm, which contains an encoder and a decoder as shown in Figure 1C. The encoder maps the input dataset to the code (also known as latent variables or latent representation), whereas the decoder maps the code to the reconstructed original input. There are three types of commonly used AE variants, including the sparse AE, the denoising AE, and the contractive AE. Besides, multiple AE models combined with other algorithms (such as the clustering algorithms) can act as a deep learning model for machine learning.

The convolutional neural network (CNN) is a deep learning model with a strong capability for feature distillation and representation learning. CNN is widely used for image recognition and object recognition. A typical CNN model contains convolutional layers, pooling layers, fully connected layers, and loss layers, as shown in Figure 1D. The convolutional layer uses convolutional kernels to sweep the input tensor or the tensor of previous convolutional layers to distill the feature information to form feature maps. The convolutional layer has the form shown in Equations (10) and (11) (Goodfellow et al., 2016), where $Z^{l+1}(i,j)$ denotes the (ith, jth) pixel in the output from the (l+1)th convolutional layer (feature map), $Z^{l}_{k}$ denotes the input to the (l+1)th convolutional layer (the kth channel), K denotes the total number of channels in the lth convolutional layer, $L_{l+1}$ denotes the size of $Z^{l+1}$, $w^{l+1}_{k}(x,y)$ denotes the weight value of the (xth, yth) element in the convolutional kernel in the (l+1)th convolutional layer, b denotes the bias vector (determined by the convolutional kernel), f denotes the size of the convolutional kernel, s0 denotes the stride number, and p denotes the padding number. The pooling layer selects features and filters the information, and usually has the form in Equation (12) (Estrach et al., 2014), where $A^{l}_{k}(i,j)$ is the output and a denotes the parameter that determines the pooling strategy (a = 1 denotes the average pooling; a→∞ denotes the max pooling). The max and average pooling strategies are often used. The convolutional layer and the pooling layer often appear alternately to provide better data mining performance. The fully connected (FC) layer combines the distilled features non-linearly and sends them to the output, and has a form similar to that shown in Equations (8) and (9). Similar to SLFNN and DNN, CNN is typically trained by the backpropagation algorithm.
To improve the training efficiency and model robustness, transfer learning (TL) and ensemble learning (EL) strategies are sometimes used for DNN and CNN models. TL reuses knowledge from a network trained on a related task so that a complex neural network can converge on small sets of training data. EL increases the accuracy and robustness of a neural network by combining multiple learning systems.

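| $Z^{l+1}(i,j) = \sum_{k=1}^{K} \sum_{x=1}^{f} \sum_{y=1}^{f} \big[ Z^{l}_{k}(s_0 i + x,\ s_0 j + y)\, w^{l+1}_{k}(x,y) \big] + b$ | (Equation 10) |

| $(i,j) \in \{0, 1, \ldots, L_{l+1}\}, \quad L_{l+1} = \dfrac{L_{l} + 2p - f}{s_0} + 1$ | (Equation 11) |

| $A^{l}_{k}(i,j) = \Big[ \sum_{x=1}^{f} \sum_{y=1}^{f} A^{l}_{k}(s_0 i + x,\ s_0 j + y)^{a} \Big]^{1/a}$ | (Equation 12) |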
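The interplay of kernel size, stride, and padding can be checked with a small numerical example. The sketch below implements a single-channel convolutional layer with explicit loops, followed by non-overlapping pooling. It assumes a square input, uses the standard output-size relation (input size + 2 × padding − kernel size) / stride + 1, and the averaging kernel, test image, and function names are hypothetical choices for illustration.

```python
import numpy as np

def conv2d(image, kernel, bias=0.0, stride=1, padding=0):
    """Single-channel cross-correlation, as used in CNN convolutional layers."""
    padded = np.pad(image, padding)  # zero padding on every side
    f = kernel.shape[0]
    out_size = (image.shape[0] + 2 * padding - f) // stride + 1
    out = np.zeros((out_size, out_size))
    for i in range(out_size):
        for j in range(out_size):
            patch = padded[i * stride:i * stride + f, j * stride:j * stride + f]
            out[i, j] = np.sum(patch * kernel) + bias
    return out

def pool2d(feature_map, size=2, mode="max"):
    """Non-overlapping max or average pooling over size x size blocks."""
    n = feature_map.shape[0] // size
    blocks = feature_map[:n * size, :n * size].reshape(n, size, n, size)
    return blocks.max(axis=(1, 3)) if mode == "max" else blocks.mean(axis=(1, 3))

image = np.arange(36, dtype=float).reshape(6, 6)
kernel = np.ones((3, 3)) / 9.0  # hypothetical averaging kernel
feature_map = conv2d(image, kernel, stride=1, padding=1)
pooled = pool2d(feature_map, size=2, mode="max")
```

With a 6 × 6 input, a 3 × 3 kernel, stride 1, and padding 1, the feature map keeps the 6 × 6 size, and 2 × 2 pooling then halves each dimension. Real CNN libraries vectorize these loops and handle multiple channels.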

Recurrent neural network (RNN), as shown in Figure 1E, is widely used for processing time-series data. The input for each RNN block is a variable x(t) at each moment t. Each block has a hidden state h(t) and an output y(t). The hidden state h(t) is passed to the next block and combined with x(t+1) to produce h(t+1) as well as the output y(t+1). This process gives an RNN a time-series memory. A trained RNN can predict the patterns of input time-series variables. Long short-term memory (LSTM), originally proposed by (Hochreiter, 1997), is a representative RNN architecture. Each LSTM block contains a forget gate, which can “forget” the “unimportant” inputs to the block while strengthening the “important” inputs, and thereby mitigates the problem of vanishing and exploding gradients. This leads to better training performance for long time-series scenarios than the traditional RNN algorithm. Other RNN architectures include the gated recurrent unit (GRU), which is a variant of LSTM (Cho et al., 2014), stacked RNN, bidirectional RNN, and reservoir computing (RC).
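The recurrence that gives an RNN its memory can be written as a short loop. The sketch below is a plain (non-LSTM) RNN forward pass with a tanh hidden activation and a linear output; the weight names `w_xh`, `w_hh`, `w_hy`, the layer sizes, and the random inputs are assumptions made for the example, and no training is performed.

```python
import numpy as np

def rnn_forward(inputs, w_xh, w_hh, w_hy, b_h, b_y):
    """Run a vanilla RNN over a sequence; returns (outputs, final hidden state)."""
    h = np.zeros(w_hh.shape[0])  # h(0): empty memory
    outputs = []
    for x_t in inputs:
        # h(t) combines the current input x(t) with the previous state h(t-1).
        h = np.tanh(w_xh @ x_t + w_hh @ h + b_h)
        outputs.append(w_hy @ h + b_y)  # y(t) is read out from h(t)
    return np.array(outputs), h

rng = np.random.default_rng(0)
n_in, n_hidden, n_out, n_steps = 3, 4, 2, 5
w_xh = rng.normal(size=(n_hidden, n_in))
w_hh = rng.normal(size=(n_hidden, n_hidden))
w_hy = rng.normal(size=(n_out, n_hidden))
b_h, b_y = np.zeros(n_hidden), np.zeros(n_out)
sequence = rng.normal(size=(n_steps, n_in))
outputs, h_last = rnn_forward(sequence, w_xh, w_hh, w_hy, b_h, b_y)
```

Because the same weights are reused at every time step and `h` is carried forward, each output depends on the whole input history up to that moment.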

Reinforcement learning

Reinforcement learning (RL) is mostly used for an intelligent agent to choose actions that give the maximum cumulative reward during its interaction with the environment, building on the principle of the Markov decision process. A typical RL model contains an environment and an agent. The agent learns actions in response to the environment based on the state of the environment, whereas the environment sends back a reward to the agent, as shown in Figure 1F. RL can be divided into two categories, model-based and model-free RL, depending on whether explicit modeling of the environment is required. Common RL algorithms include the following: (1) Q-learning, which uses the quality values Q(s, a) stored in the Q-table to generate the action for the next step and then updates the quality value based on Equation (13), where α denotes the learning rate, γ denotes the discount factor, R denotes the reward, a and s denote the action and state in the current step, and a' and s' denote the action and state in the next step; (2) the deep Q-network (DQN), which applies learning algorithms (e.g., DNN, CNN, DT) to generate a continuous Q quality value in order to overcome the exponentially increasing computational cost of Q-learning; (3) the policy gradient algorithm, which generates the next-step action based on the policy function (which is the quantification of state and action values at the current step) instead of a quality value such as Q; and (4) the actor-critic algorithm, in which the actor generates the next-step action based on the current-step state and then adjusts its policy based on the score from the critic, whereas the critic uses the critic function to score the actor at the current step and then adjusts its scoring policy.

| $Q(s, a) \leftarrow Q(s, a) + \alpha \big[ R + \gamma \max_{a'} Q(s', a') - Q(s, a) \big]$ | (Equation 13) |
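A single tabular Q-learning update, in its standard textbook form, can be worked through numerically. The sketch below assumes a small Q-table indexed by integer states and actions; the table size, the pre-filled values, and the function name `q_update` are hypothetical.

```python
import numpy as np

def q_update(q_table, s, a, reward, s_next, alpha=0.1, gamma=0.9):
    """One tabular Q-learning step with learning rate alpha and discount gamma."""
    # Target: immediate reward plus discounted best value of the next state.
    td_target = reward + gamma * np.max(q_table[s_next])
    q_table[s, a] += alpha * (td_target - q_table[s, a])
    return q_table

# Hypothetical 3-state, 2-action table; state 2 already has learned values.
q = np.zeros((3, 2))
q[2] = [1.0, 2.0]
q_update(q, s=0, a=1, reward=1.0, s_next=2)
updated_value = q[0, 1]
```

With these numbers the target is 1.0 + 0.9 × 2.0 = 2.8, so the entry moves from 0 to 0.1 × 2.8 = 0.28. Since the table grows with the number of state-action pairs, function approximators such as those in DQN are used for large problems.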

Data pre-processing

Data pre-processing is needed in many cases in order to achieve high training accuracy for ML models. This is because the amount of data for different types of datasets can vary significantly, causing an ML model to fail to capture the features of datasets with less data. Data compaction is one of the widely used pre-processing methods. Principal component analysis (PCA) is often used to compact the data. The idea is to reduce the dimension of the dataset while keeping the main features within the dataset. PCA first calculates the correlation coefficient matrix (or the covariance matrix) of the data matrix to be processed, then calculates the eigenvectors of the correlation coefficient matrix (or the covariance matrix), and finally projects the data onto the space spanned by the leading eigenvectors. Besides PCA, AE is also widely used for data compaction. Other algorithms, such as undersampling algorithms based on clustering, have also been used to compact the dataset.
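The three PCA steps described above (covariance matrix, eigen-decomposition, projection) translate directly into code. The sketch below uses the covariance matrix and NumPy's symmetric eigen-solver; the synthetic three-column dataset and the function name `pca` are assumptions made for the illustration.

```python
import numpy as np

def pca(data, n_components):
    """Project data onto the leading eigenvectors of its covariance matrix."""
    centered = data - data.mean(axis=0)
    cov = np.cov(centered, rowvar=False)
    eigvals, eigvecs = np.linalg.eigh(cov)  # eigenvalues in ascending order
    order = np.argsort(eigvals)[::-1][:n_components]
    components = eigvecs[:, order]
    explained = eigvals[order] / eigvals.sum()
    return centered @ components, components, explained

# Synthetic data: two strongly correlated columns plus a small noise column.
rng = np.random.default_rng(0)
t = rng.normal(size=200)
data = np.column_stack([
    t,
    2.0 * t + 0.01 * rng.normal(size=200),
    0.01 * rng.normal(size=200),
])
projected, components, explained = pca(data, n_components=1)
```

Because the first two columns are almost perfectly correlated, a single principal component retains nearly all of the variance, so the three-dimensional dataset is compacted to one dimension with little loss.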

In some application scenarios, the amount of data for certain types of datasets is limited, which makes it difficult to train an ML model to achieve high learning accuracy (especially for datasets containing complex features or ML models with complex structures such as a DNN with multiple hidden layers or a CNN with multiple convolutional and pooling layers). This is because the parameters of an ML model can hardly reach their optimized values during training (for ML models using optimization algorithms to acquire the model parameters) or be solved explicitly (for ML models that solve the model parameters analytically). It is important to have a large dataset with similar features but different details to train an ML model. To solve this problem, the generative adversarial network (GAN), an unsupervised learning method proposed by (Goodfellow et al., 2014), is widely used. The GAN can generate or reconstruct datasets with features similar to the original inputs, as well as repair or enhance the quality of the dataset. A GAN typically contains a generator and a discriminator (two neural networks, as shown in Figure 1G). The input to the generator is the noise vector (containing information of the real dataset), whereas the output from the generator is the fabricated dataset. The input to the discriminator is the fabricated dataset and the real dataset, whereas the output from the discriminator is the result showing whether the fabricated dataset is fake or real. The training principle for the GAN is to optimize the game function in Equation (14), which is quantified based on the theory of cross-entropy. Specifically, the generator first fabricates a dataset that the discriminator can easily identify as generated rather than real.
The generator keeps on training to reduce the difference between the fabricated and the real datasets based on the loss function ℓG in Equation (15), where z ∼ p(z) denotes the random noise samples obeying a random probability distribution p(z), G(z) denotes the generated datasets based on the random noise samples (the output datasets from the generator), and D(G(z)) denotes the judgment result for the datasets fabricated by the generator (the output scalar from the discriminator). At the same time, the discriminator keeps on training to distinguish the fabricated dataset from the real dataset based on the loss function ℓD in Equation (16), where x ∼ pdata denotes the real datasets obeying a certain distribution pdata, and D(x) denotes the judgment result for the real datasets (the output scalar from the discriminator). The training of a GAN ends when the discriminator cannot distinguish whether the dataset fabricated by the generator is fake or real (i.e., the system optimization reaches the Nash equilibrium, where the discriminator output approaches 0.5 for both real and fabricated data). In addition to GAN, other types of algorithms such as the oversampling algorithm based on SMOTE (Chawla et al., 2002) are also widely used to regenerate datasets with similar features.

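| $\min_{G} \max_{D} V(D, G) = \mathbb{E}_{x \sim p_{\mathrm{data}}}\big[\log D(x)\big] + \mathbb{E}_{z \sim p(z)}\big[\log\big(1 - D(G(z))\big)\big]$ | (Equation 14) |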

| (Equation 15) |

| (Equation 16) |

Besides data compaction and dataset construction, data normalization and data smoothing are also commonly used before training an ML model. Data normalization scales the values of the data to be within a certain range (typically within the range of [0, 1]), which can accelerate the convergence of model parameters during training and increase the learning accuracy. Typical data normalization methods include the min-max normalization calculated by x'=(x-min(x))/(max(x)-min(x)) and the mean normalization calculated by x'=(x-mean(x))/(max(x)-min(x)). Data smoothing reduces the noise in the signal, which is important in reflecting the characteristics of the dataset and avoiding overfitting during training. Commonly used data smoothing algorithms include the moving average, exponential moving average, Laplacian smoothing, kernel smoother, Savitzky-Golay filter, and Kalman filtering.
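The two normalization formulas and the simplest smoothing method can be verified on a handful of numbers. The sketch below applies min-max normalization, mean normalization, and a three-point moving average to a short series; the voltage readings are hypothetical values chosen for illustration.

```python
import numpy as np

def min_max_normalize(x):
    """x' = (x - min(x)) / (max(x) - min(x)); result lies in [0, 1]."""
    return (x - x.min()) / (x.max() - x.min())

def mean_normalize(x):
    """x' = (x - mean(x)) / (max(x) - min(x)); result is centered on zero."""
    return (x - x.mean()) / (x.max() - x.min())

def moving_average(x, window):
    """Simple moving average; returns len(x) - window + 1 smoothed points."""
    kernel = np.ones(window) / window
    return np.convolve(x, kernel, mode="valid")

voltage = np.array([3.0, 3.2, 3.1, 3.6, 3.4, 4.0])  # hypothetical readings
scaled = min_max_normalize(voltage)
centered = mean_normalize(voltage)
smoothed = moving_average(voltage, window=3)
```

Note that min-max normalization maps the data onto [0, 1], whereas mean normalization yields zero-mean values within [-1, 1]. In a training pipeline, the scaling constants would normally be computed on the training set and reused unchanged for validation and test data.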

Approaches and applications of machine learning for ESDs

In this section, the application of machine learning for the development and management of energy storage devices is reviewed. We first introduce the three most commonly used types of ESDs, including batteries, capacitors/supercapacitors, and fuel cells. The problems that machine learning mainly focuses on are state estimation and prediction, lifetime prediction, property analysis and classification, fault discovery and diagnosis, as well as modeling, design, and optimization. The commonly used ML algorithms include unsupervised learning, supervised learning, and deep learning. For each application, the problem description, setup of the ML model, and resulting performance are discussed. Finally, the application of machine learning for flow batteries and flywheels is introduced.

Application of machine learning for batteries

Battery state estimation

The battery state mainly includes state of charge (SOC), capacity, and state of health (SOH). Specifically, SOC is quantified by the ratio of the releasable capacity of a battery over its rated capacity. SOH is quantified by the ratio of the maximum releasable capacity over the rated capacity. SOC and SOH are important parameters for the battery management system (BMS) to choose appropriate controlling strategies to improve the performance and to ensure the safety and lifetime of the battery system (Murnane and Ghazel, 2017).
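The two ratio definitions above can be made concrete with a small calculation. The sketch below assumes capacities expressed in ampere-hours; the cell values (a 2.0 Ah rated capacity, 1.8 Ah maximum releasable capacity after aging, and 0.9 Ah currently releasable) are hypothetical.

```python
def state_of_charge(releasable_capacity_ah, rated_capacity_ah):
    """SOC: currently releasable capacity divided by rated capacity."""
    return releasable_capacity_ah / rated_capacity_ah

def state_of_health(max_releasable_capacity_ah, rated_capacity_ah):
    """SOH: maximum releasable capacity divided by rated capacity."""
    return max_releasable_capacity_ah / rated_capacity_ah

# Hypothetical aged cell.
rated_ah = 2.0
max_releasable_ah = 1.8
releasable_ah = 0.9
soc = state_of_charge(releasable_ah, rated_ah)
soh = state_of_health(max_releasable_ah, rated_ah)
```

For this cell the SOC is 45% and the SOH is 90%. Neither capacity can be read directly from a sensor during operation, which is why the estimation methods reviewed below infer them from voltage, current, and temperature measurements.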

We first show the application of supervised learning for battery state estimation. For the application of regression methods, the work in (Richardson et al., 2017) applied GPR to forecast the SOH of batteries. The GPR model contains a compound kernel function composed of two types of Matérn kernel functions with ν of 5/2 and 3/2 (Ma5+Ma3). Specifically, they used the integer cycle number as the input to the GPR model and the corresponding measured capacity as the output. Their results showed that the compound kernel function has the advantage of capturing complex behaviors. Using multi-output GPs, the correlation between the data from different cells can be effectively explored. This can improve the forecasting performance, although the calculation efficiency may be reduced as a result of the need to handle a large amount of output. They also applied GPR to estimate the in-situ capacity of Li-ion batteries (Richardson et al., 2018). The GPR model contains a Matérn (ν of 5/2) kernel function. The work in (Sahinoglu et al., 2017) applied GPR models (including a regular GPR model, a recurrent GPR model, and an autoregressive recurrent GPR model) to estimate the SOC of Li-ion batteries. The GPR models contain a squared exponential kernel function. They set the voltage, current, and temperature at the moment k to be the input to the regular GPR model; the voltage, current, and temperature at the moment k and the SOC at the moment (k −1) to be the input to the recurrent GPR model; and the voltage, current, and temperature at the moment k and the voltage, current, temperature, and SOC at the moment (k −1) to be the input to the autoregressive recurrent GPR model. They set the SOC to be the output of the three GPR models and used a dataset of 2000 data points to train their models. The results show that the recurrent GPR model has a better estimation accuracy than the regular GPR model.

Besides the above-mentioned regression approaches, a random forest regression model is applied to estimate on-line SOH (Li et al., 2018). Specifically, features are extracted (including the relative capacity values with ΔV intervals in a specific voltage region) from the voltage-capacity curves. The associated SOH values are used to construct the training dataset. The RF model contains 500 decision trees and provides high accuracy in SOH prediction with a mean squared error (MSE) below 1.3%. In another work (Andre et al., 2013), a dual filter consisting of a standard Kalman filter (SKF) (Kalman, 1960) and an unscented Kalman filter (UKF) (Julier and Uhlmann, 1997) is employed to estimate the SOC and SOH for Li-ion batteries. An SVR is used to couple with the dual filter. High accuracy in SOC estimation with an error below 1% is achieved.
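The feature extraction described above (capacity values associated with ΔV intervals inside a voltage region) can be sketched as follows. This is a simplified illustration rather than the cited procedure: the linear interpolation, the function names, and the example curve are assumptions.

```python
def interpolate(x, xs, ys):
    """Piecewise-linear interpolation; xs must be strictly increasing."""
    if x <= xs[0]:
        return ys[0]
    if x >= xs[-1]:
        return ys[-1]
    for i in range(1, len(xs)):
        if x <= xs[i]:
            t = (x - xs[i - 1]) / (xs[i] - xs[i - 1])
            return ys[i - 1] + t * (ys[i] - ys[i - 1])


def capacity_per_voltage_interval(voltage, capacity, v_start, v_end, dv):
    """Capacity charged within each dv-wide voltage bin of [v_start, v_end]."""
    n_bins = round((v_end - v_start) / dv)
    edges = [v_start + i * dv for i in range(n_bins + 1)]
    q_at_edges = [interpolate(e, voltage, capacity) for e in edges]
    return [q_at_edges[i + 1] - q_at_edges[i] for i in range(n_bins)]


# Hypothetical charging curve: voltage (V) against accumulated capacity (Ah).
voltage = [3.0, 3.5, 4.0]
capacity = [0.0, 1.0, 2.0]
features = capacity_per_voltage_interval(voltage, capacity, 3.0, 4.0, 0.25)
```

Each feature vector, paired with the SOH measured for the same cycle, would form one row of the training dataset for the regression model.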

ANN-based models are widely used for battery state estimation. A DNN is used to estimate the SOC of Li-ion batteries (Chemali et al., 2018). The voltage, temperature, average current, and average voltage of the battery at moment t are used as the input of the DNN, whereas the SOC value at moment t is the output. The DNN shows high accuracy in predicting the SOC with an average mean absolute error (MAE) of 1.10% at 25°C and 2.17% at −20°C. In another work, a radial basis function neural network (RBFNN) model is applied to estimate the capacity and internal resistance of Li-ion batteries (Zhou et al., 2020b). The model contains one hidden layer, with the input being the three characteristic voltage points collected during the series discharging after normalization and with the output being the capacity and internal resistance. Of the data collected from 108 cells (acquired by simulation), 50% are used to train the RBFNN model. The capacity and internal resistance are predicted with an error below ±5% and ±0.4%, respectively. A load-classifying neural network (NN) model is also developed to estimate SOC (Tong et al., 2016). The NN classifies the input vectors (including the extracted features of current, voltage, and time) into three categories (charging, idling, and discharging, as shown in Figure 2A) based on the current and trains the three sub-NNs in parallel. Thus, the load-classifying NN has a simpler training procedure, a broader choice of training data, and a smaller computational cost. The output of the load-classifying NN is the estimated SOC (after filtering). The model is trained with the load profile of a vehicle's driving cycle. It is shown that the average absolute estimation error of SOC is 3.8%. An extreme learning machine (ELM) is used for on-line SOH estimation of LiNMC batteries (Pan et al., 2018).
The input for the ELM is the ohmic internal resistance and the polarized internal resistance (suggested by the result of health indicator extraction), whereas the output is capacity degradation. The ELM provides on-line SOH estimation with a maximum error below 2.5%.
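The load-classification step of the load-classifying NN can be illustrated with a short routing function. The sketch below is an assumption-laden simplification: the sign convention (positive current means charging), the idle threshold, and the sample layout are hypothetical, and the three sub-networks themselves are omitted.

```python
def classify_load(current_a, idle_threshold_a=0.05):
    """Label a sample by its current (assumed convention: positive = charging)."""
    if current_a > idle_threshold_a:
        return "charging"
    if current_a < -idle_threshold_a:
        return "discharging"
    return "idling"


def split_by_load(samples):
    """Route each sample to the subset used to train the matching sub-network."""
    subsets = {"charging": [], "idling": [], "discharging": []}
    for sample in samples:
        subsets[classify_load(sample["current"])].append(sample)
    return subsets


# Hypothetical measurements: current in A, voltage in V, time in s.
samples = [
    {"current": 1.2, "voltage": 3.9, "time": 0},
    {"current": 0.0, "voltage": 3.8, "time": 10},
    {"current": -2.5, "voltage": 3.6, "time": 20},
    {"current": -0.01, "voltage": 3.7, "time": 30},
]
subsets = split_by_load(samples)
```

Because each subset covers only one type of battery behavior, each sub-network can be trained independently and in parallel.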

Figure 2.

Examples of the application of machine learning for battery state estimation

(A) The structure of the load-classification neural network model. Reprinted from (Tong et al., 2016). The input data are divided into three subsets based on the three types of battery behavior (charging, idling, and discharging). Each subset is imported into a specific split of the input layer (distinguished by three colors).

(B) The structure of the CNN-LSTM hybrid model. Reprinted from (Song et al., 2019). The input parameters are firstly imported into the CNN module, which has a layer with six filters of length three. Then the CNN-processed data are imported to the LSTM module, which has a layer with hidden nodes.

(C) The application of GAN-CLS for generating the required data rather than running experiments to train the bidirectional LSTM model. Reprinted from (Zhang et al., 2020). (C1) Converting the vector data (the voltage curves with pulse characteristics of nine battery cells) to 2D images for importing to the discriminator after feature mining by a CNN. (C2) The generated image representing the voltage data with pulse characteristics during the training of the GAN-CLS. Note that random data can be generated as voltage data after 40 epochs.

(D) A schematic of the transfer learning and ensemble learning for DCNN models to estimate the battery capacity. Reprinted from (Shen et al., 2020). (D1) An illustration of the transfer learning process. (D2) An illustration of the ensemble learning model, which contains n DCNN-TL models.

CNN and RNN are used to estimate the battery state based on complex feature datasets with time-series characteristics. A combination of CNN and LSTM networks is employed to estimate the SOC of Li-ion batteries (Song et al., 2019). The CNN-LSTM structure is shown in Figure 2B, where the CNN is used for pattern recognition and spatial feature distillation, whereas the LSTM is used to process the time-series data (learn the temporal features of the battery dynamic evolution). A total of 24,815 groups of data points are acquired from experiments to train the CNN-LSTM system. The input in each group includes the current, voltage, temperature, average current, and average voltage, whereas the output is the time-dependent SOC estimation. The trained CNN-LSTM can estimate the SOC with the overall root-mean-square error (RMSE) and MAE below 2% and 1%, respectively. In a GRU-based RNN model to estimate the SOC (Xiao et al., 2019), the Nadam and AdaMax ensemble optimizers (Dozat, 2016; Kingma and Ba, 2014) are used to optimize the model parameters during the GRU-RNN training. The input for the RNN model includes the time series of current, voltage, and temperature after normalization, whereas the output from the RNN model is the time series of estimated SOC. A dropout algorithm (with a dropout probability of 0.2) is used to prevent overfitting during training. Trained with 80% of the 18 simulated data subsets (a total sample number of 126,356), the GRU-RNN model gives high accuracy in SOC estimation with an average RMSE of 1.13% on the testing dataset. In another RNN model to estimate SOH (You et al., 2017), the input for the LSTM includes the current and voltage at each present moment and the output parameter at each previous moment. The output parameter of the LSTM at each present moment is imported to the pooling layer and finally to the regression layer (which finally constructs the relationship between the current, voltage, and SOH at each present moment).
A vanilla neural network is coupled to assist in alleviating the sensitivity to noise. Trained with 20 sets of experimental data, the model shows an average validation error on capacity estimation lower than 0.0765 Ah (RMSE of 2.46%). Studies have also used a combination of GAN-CLS and bidirectional LSTM (Bi_LSTM) to predict SOC (Zhang et al., 2020). The GAN-CLS is used for generating labeled datasets to train the Bi_LSTM model, which can reduce the number of experiments required. The battery charging/discharging data (OCV curves) are converted into images (as shown in Figure 2C), and then the GAN-CLS model is employed to generate the corresponding data based on the images. After that, the newly generated data are used to train the Bi_LSTM model to predict the OCV characteristics. The results show that the modeling and training/testing time is significantly reduced. The MSE and average MSE of prediction are less than 0.0025 and 0.0013, respectively.
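The accuracy figures quoted in this subsection are reported as MAE, MSE, or RMSE. For reference, the sketch below computes the three metrics from their standard definitions; the SOC values are hypothetical and are not taken from any of the cited studies.

```python
import math


def mean_absolute_error(y_true, y_pred):
    """MAE: average magnitude of the estimation error."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def mean_squared_error(y_true, y_pred):
    """MSE: average squared estimation error."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def root_mean_squared_error(y_true, y_pred):
    """RMSE: square root of the MSE, in the same unit as the target."""
    return math.sqrt(mean_squared_error(y_true, y_pred))


# Hypothetical reference and estimated SOC values (as fractions).
soc_true = [0.50, 0.60, 0.70]
soc_pred = [0.52, 0.58, 0.73]
mae = mean_absolute_error(soc_true, soc_pred)
mse = mean_squared_error(soc_true, soc_pred)
rmse = root_mean_squared_error(soc_true, soc_pred)
```

Since the RMSE weights large errors more heavily than the MAE, the RMSE is never smaller than the MAE on the same data, which is consistent with the paired bounds reported above.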

Transfer learning and ensemble learning are gaining increasing attention for improving the training efficiency and robustness of the ML model. Deep convolutional neural networks coupled with ensemble learning and transfer learning (DCNN-ETL) are used to estimate the capacity of Li-ion batteries (Shen et al., 2020). The DCNN-ETL structure is shown in Figure 2D. Each input data group consists of the current, voltage, and charge capacity with 25 segments, whereas the output is capacity. A total of 25,338 groups of samples in the dataset are split to pre-train the n DCNN sub-systems and 525 groups of the samples are used to retrain the DCNN-ETL system. The results show that the DCNN-ETL has a higher training accuracy than DCNN, DCNN-TL (DCNN with transfer learning), and DCNN-EL (DCNN with ensemble learning), whereas the training efficiency of DCNN-ETL is much lower than the other machine learning algorithms.
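The ensemble step can be illustrated by combining the outputs of n sub-models into a single estimate. The sketch below assumes a (weighted) average as the combination rule, which may differ from the rule used in the cited work; the three stand-in models are hypothetical callables, not trained DCNNs.

```python
def ensemble_predict(models, x, weights=None):
    """Combine sub-model predictions by a weighted average (assumed rule)."""
    predictions = [model(x) for model in models]
    if weights is None:
        weights = [1.0 / len(predictions)] * len(predictions)
    if abs(sum(weights) - 1.0) > 1e-9:
        raise ValueError("weights must sum to 1")
    return sum(w * p for w, p in zip(weights, predictions))


# Stand-ins for n = 3 pre-trained sub-models, each returning a capacity in Ah.
models = [lambda x: 1.00, lambda x: 1.10, lambda x: 1.20]
capacity_estimate = ensemble_predict(models, x=None)
weighted_estimate = ensemble_predict(models, x=None, weights=[0.5, 0.25, 0.25])
```

Averaging reduces the influence of any single sub-model, which is the usual motivation for the robustness gain attributed to ensemble learning; the price is that n sub-models must be trained.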

Battery lifetime estimation and prediction

The remaining useful lifetime (RUL) of a battery is typically quantified by the time or cycling number when the capacity or SOH decreases to a threshold value. Accurately predicting the RUL is critical for the BMS to adjust its controlling strategy to ensure the performance, safety, and lifetime of a battery. Besides, accurate estimation and prediction of battery RUL is vital in providing guidance for battery reuse or recycling. Regression algorithms are often used to estimate and predict RUL.
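The threshold-based definition of RUL can be made concrete with a few lines of code. The sketch below counts cycles until the capacity first reaches the threshold; the function names and the example capacity sequence are hypothetical, and the threshold (here 80% of a 2.0 Ah rated capacity) is an assumption.

```python
def end_of_life_cycle(capacity_by_cycle, threshold):
    """First cycle (1-based) at which the capacity reaches the threshold."""
    for cycle, capacity in enumerate(capacity_by_cycle, start=1):
        if capacity <= threshold:
            return cycle
    return None  # the threshold is not reached within the available data


def remaining_useful_life(capacity_by_cycle, current_cycle, threshold):
    """RUL in cycles: end-of-life cycle minus the current cycle."""
    eol = end_of_life_cycle(capacity_by_cycle, threshold)
    if eol is None:
        return None
    return max(eol - current_cycle, 0)


# Hypothetical capacity (Ah) measured at cycles 1 to 6.
capacities = [2.0, 1.9, 1.8, 1.7, 1.6, 1.5]
eol = end_of_life_cycle(capacities, threshold=1.6)
rul_at_cycle_2 = remaining_useful_life(capacities, current_cycle=2, threshold=1.6)
```

In practice the future part of the capacity sequence is unknown, which is why the methods reviewed below predict it (or the RUL directly) from early-cycle data.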

Among the application of supervised learning for battery lifetime estimation, the work in (Severson et al., 2019) applied a linear regression data-driven approach to predict the battery cycle life before capacity degradation based on the early cycle discharge data. They created a dataset from 124 Li-ion battery cells cycled under fast charging conditions, used features calculated based on the discharge voltage curve (such as charge time, temperature integral, discharge capacity at cycle 2, etc.) as the input of the regression model, and used the predicted number of cycles as the output of the regression model. Their results showed a test error of 9.1% for the regression setting when only using the first 100 cycles and a test error of 4.9% for the classification setting when only using data from the first 5 cycles. Besides the regression approach, decision tree is used to predict RUL (Zhu et al., 2019b). An optimized version of the CART algorithm is applied for the DT model. It is shown that DT can predict RUL with high accuracy (e.g., 95.2% for feature combination). The work in (Zhou et al., 2020c) shows the application of a k-NN regression (kNNR) algorithm to estimate the RUL of Li-ion batteries by utilizing k-nearest experimental cells sharing a similar degradation trend. A differential evolution technology is employed to optimize the parameters used for RUL prediction. The best RUL estimation has an error of 2 cycles or a relative error of 0.5%. In another work (Liu and Chen, 2019), a combination of indirect health indicator (HI) and multiple GPR model is applied to predict RUL. Three features are first distilled as HIs from the voltage and current curves of the batteries during the constant current-constant voltage (CCCV) charging process. The GPR model (which is based on Bayesian framework) is optimized with combined kernel functions (linear function, squared exponential, and periodic covariance functions). 
Then the three normalized HIs and the capacity are set as the input and output of the optimized GPR, respectively, to train the model. It is found that the approach gives high prediction accuracy (the relative error is below 5% for the B5 battery with the starting predicting cycle of 100).
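The idea behind the k-NN regression approach mentioned above (using the k reference cells with the most similar degradation trend) can be sketched as follows. This is a minimal illustration that assumes a Euclidean distance between capacity curves and an unweighted average; the differential evolution step of the cited work is not reproduced, and all data are hypothetical.

```python
import math


def knn_rul(query_curve, reference_cells, k=3):
    """Average the RUL of the k reference cells closest to the query curve.

    reference_cells is a list of (capacity_curve, rul_in_cycles) pairs whose
    curves have the same length as query_curve.
    """
    ranked = sorted(
        reference_cells,
        key=lambda cell: math.dist(query_curve, cell[0]),
    )
    nearest = ranked[:k]
    return sum(rul for _, rul in nearest) / len(nearest)


# Hypothetical normalized capacity curves and known RULs of reference cells.
reference_cells = [
    ([1.00, 0.95, 0.90], 100),
    ([1.00, 0.96, 0.92], 120),
    ([1.00, 0.90, 0.80], 40),
    ([1.00, 0.85, 0.70], 20),
]
predicted_rul = knn_rul([1.00, 0.955, 0.91], reference_cells, k=2)
```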

As one of the supervised learning models, SVM (and SVR) is widely used for RUL prediction. For instance, the SVM model is used to classify and predict the RUL of Li-ion batteries (Patil et al., 2015). The SVM classification technology is firstly used to estimate a gross RUL (a total of four classes for different discharge cycles). Then the SVM regression model is used to estimate an accurate RUL. Specifically, features (including capacity, energy of signal, fluctuation index of signal, curvature index of signal, etc.) are distilled from the cycling tests and are imported into the SVM to train the models for RUL prediction. It is shown that the classification-regression approach can improve the calculation efficiency. Besides SVM, the relevance vector machine is also used to predict RUL, such as the work of (Liu et al., 2015) and (Zhou et al., 2016). Specifically, a novel health indicator (the mean voltage falloff (MVF)) is developed to describe the degradation more comprehensively (Zhou et al., 2016). A series of optimized MVFs is used to train the RVM and then predict the MVF pattern starting from a specific starting cycle to predict the RUL. The approach shows high prediction accuracy with an absolute error below five cycles. A hybrid method (fusing the algorithms of unscented Kalman filter (UKF), RVM, and complete ensemble empirical mode decomposition (CEEMD)) is applied to predict the RUL (Chang et al., 2017). The UKF is used to achieve the prognostic result and gain a series of raw error data. Then the CEEMD is used to decompose the error data and obtain the dominant mode (new error data). Finally, the RVM is used to predict the prognostic error to predict the final RUL.

Various ANN-based models are applied for RUL prediction. ANN (an SLFNN model) is used to predict the RUL for Li-ion batteries online (Wu et al., 2016). The input to the SLFNN consists of eleven points from the CC charging voltage curve selected by the importance sampling (IS) method (Biondini, 2015; Sadowsky, 1990), whereas the output of the SLFNN is the equivalent circle life (ECL). The RUL is then calculated by subtracting the ECL from the cycle number at which the battery reaches its end of life. The data points from 2000 charging/discharging cycles are used to train the SLFNN model. It is shown that the error of RUL prediction is less than 5%. ANN (an SLFNN model) and SVM models are used to estimate the cycle life of lithium polymer batteries (Zhou et al., 2017). The input for the SLFNN and SVM (with a linear kernel function) is the thermal information (normalized de-trend surface temperature acquired from the infrared images taken in experiments) or the electrical information (the current or voltage). The output of the SLFNN and SVM is the cycle number of the battery (or the associated elapse time when the ML model is SVM). Three cells are cycled for 410 cycles and a portion of the voltage, current, and temperature datasets of each cell is used to train the ML models. It is shown that with the input being the thermal information, the accuracy of cycle life prediction by the SLFNN and SVM is similar (with the error below 10%), but the SVM needs longer testing time. DNN is used to predict the SOH and RUL of Li-ion batteries (Khumprom and Yodo, 2019). In the work, a dropout algorithm is applied to train the DNN (the dropout strategy randomly drops some neurons of the DNN with a fixed probability of p (0.25 in the research) during DNN training) to make the DNN thinner and reduce overfitting, which improves the DNN performance (Srivastava et al., 2014).
A comparison to other algorithms shows that DNN prediction of SOH has an RMSE (3.427%) lower than that of ANN (4.611%), SVM (4.552%), logistic regression (4.558%), and k-NN (5.598%). DNN shows similar accuracy as ANN when predicting RUL (e.g., both have an error of one cycle at the starting point of 120th cycle). DNN and ANN show higher prediction accuracy than that of the SVM (an error of 4 cycles), logistic regression (an error of 9 cycles), and k-NN (an error of 19 cycles).
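The dropout strategy described above can be illustrated on a single layer of activations. The sketch below uses the common "inverted dropout" variant, in which the surviving activations are rescaled by 1/(1 − p) during training; this rescaling convention is an assumption and is not stated in the cited works.

```python
import random


def dropout(activations, p=0.25, rng=None):
    """Zero each activation with probability p and rescale the survivors."""
    if not 0.0 <= p < 1.0:
        raise ValueError("p must be in [0, 1)")
    rng = rng or random.Random()
    keep = 1.0 - p
    return [a / keep if rng.random() >= p else 0.0 for a in activations]


# Apply dropout with p = 0.25 to a hypothetical layer of 1000 unit activations.
layer_output = dropout([1.0] * 1000, p=0.25, rng=random.Random(0))
dropped_fraction = layer_output.count(0.0) / len(layer_output)
```

Dropout is applied only during training; at inference time all neurons are kept, and with the inverted variant no further rescaling is needed.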

For estimating the RUL, the data can sometimes be complex so that appropriate data preprocessing and feature distilling methods should be employed to improve the training accuracy. In a work of using DNN to predict RUL (Ren et al., 2018), feature extraction from the characteristic curves of batteries (including terminal voltage, output current, temperature, and measured voltage, and current curves during multiple charge cycles) is applied to overcome the unbalanced dataset number. Specifically, an autoencoder neural network is employed to reduce the dimension of the features (15 dimensions) and improve the efficiency of the model. After that, the 15-dimensional features are normalized and set as the input to the DNN, whereas the output of the DNN is RUL. The results show that the DNN combining the data-preprocessing approach has much higher prediction accuracy for RUL than that of the LR, Bayesian regression (BR), and SVM algorithms (the RMSE of DNN, LR, BR and SVM is 6.66%, 12.00%, 11.22%, and 10.66%, respectively). Besides, the strategy of ensemble learning can also be applied during the setup of an ML model to increase the model robustness. For example, an ensemble learning system (ensemble of 200 single random vector functional link (RVFL) network models) is applied to predict the RUL of Li-ion batteries (Gou et al., 2019). The RVFL algorithm randomly chooses the input weights and biases and analytically determines the output weights by simple matrix computations. Thus, the learning speed of RVFL is higher than that of the conventional ANN models. Specifically, the nonlinear autoregressive with exogenous inputs (NARX) structure containing past and present information is applied for the RVFL network to improve the accuracy and stability of the predicting results for RUL. The sequence of history capacity value and the duration of equal charging voltage difference value are input to each of the RVFL network models. 
The output from each RVFL network is the capacity value after a certain number of cycles. The dataset from three batteries is used to train the ML model. The ensemble learning system shows high accuracy in predicting RUL (e.g., with an RMSE of 0.0184 for the CS 38 battery).
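The NARX-style input described above (past and present capacity values together with an exogenous signal) can be assembled as shown below. This is a sketch of the data layout only: the number of lags, the prediction horizon, and the example values are assumptions, and the RVFL networks themselves are not implemented.

```python
def make_narx_samples(capacity, exogenous, n_lags, horizon):
    """Build (input, target) pairs from a capacity history.

    Each input holds the last n_lags capacity values up to cycle t plus the
    exogenous value at cycle t; the target is the capacity `horizon` cycles
    later.
    """
    samples = []
    for t in range(n_lags - 1, len(capacity) - horizon):
        past_capacity = capacity[t - n_lags + 1 : t + 1]
        x = list(past_capacity) + [exogenous[t]]
        y = capacity[t + horizon]
        samples.append((x, y))
    return samples


# Hypothetical capacity history (Ah) and an exogenous feature per cycle, such
# as the duration (min) of a fixed charging-voltage interval.
capacity = [1.00, 0.99, 0.98, 0.97, 0.96]
duration = [10, 11, 12, 13, 14]
samples = make_narx_samples(capacity, duration, n_lags=2, horizon=1)
```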

As for the input dataset with complex time-series characteristics, CNN and RNN models are both used to predict RUL. For instance, a CNN-LSTM hybrid neural network is employed (Ma et al., 2019a). The CNN is used to extract useful information, whereas the LSTM is used to predict the unknown sequence of capacity data based on the features extracted by CNN. A segment of the discharging capacity degradation curve is applied to train the hybrid neural network. Specifically, the false nearest neighbors (FNN) algorithm is used to determine the appropriate sliding window size and to determine the size of the input vector (the segment length of the capacity-cycle curve selected for the input). The output is the predicted capacity-cycle curve. The RUL is calculated based on the curve. It is shown that by appropriately selecting the sliding window size, the CNN-LSTM hybrid neural network model can provide a higher prediction accuracy for RUL than using CNN or LSTM alone (e.g., the actual RUL is 56 cycles, whereas the predicted RUL by CNN-LSTM, CNN, and LSTM are 55, 117, and 102 cycles, respectively). In a work of using LSTM to predict RUL (Zhang et al., 2018), a specific span of the experimental cycle-capacity curve of each battery cell is employed to train the LSTM. A total of four cells are used to generate the data. A dropout strategy is used during training to avoid overfitting, whereas Monte Carlo is used to generate RUL prediction uncertainties. A comparison to other ML algorithms, including SVM, particle filter model (PFM), and simple RNN (SimRNN), shows that the LSTM can predict RUL independent of the offline training data and that the LSTM provides much higher prediction accuracy. An LSTM-DNN deep learning model is also applied to predict RUL (Veeraraghavan et al., 2018). The battery capacity decaying pattern obtained from virtual tests (based on a standard proposed by (Wang et al., 2011)) under various C-rates is used to train the deep learning model. 
The input features for the deep learning model are current, voltage, working temperature of the battery, and the battery capacity at the previous moment. The output is the capacity at the current moment. Similarly, several studies (Liu et al., 2019b; Park et al., 2020; Qu et al., 2019) have used ML algorithms containing LSTM to predict the SOH or the RUL of batteries.
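Several of the models above predict the capacity one step ahead and obtain the RUL from the predicted capacity curve. The sketch below shows this recursive roll-out with a sliding window. The one-step predictor is a hypothetical placeholder (a fixed 10% fade per step) standing in for a trained CNN-LSTM or LSTM, and the window size and threshold are assumptions.

```python
def forecast_rul(history, predict_next, window, threshold, max_steps=10000):
    """Roll a one-step predictor forward until the capacity hits the threshold.

    Returns (rul_in_steps, predicted_curve); rul_in_steps is None when the
    threshold is not reached within max_steps.
    """
    sequence = list(history)
    for step in range(1, max_steps + 1):
        next_capacity = predict_next(sequence[-window:])
        sequence.append(next_capacity)
        if next_capacity <= threshold:
            return step, sequence[len(history):]
    return None, sequence[len(history):]


def placeholder_model(window_values):
    """Stand-in for a trained network: 10% capacity fade per step."""
    return window_values[-1] * 0.9


rul, predicted_curve = forecast_rul(
    history=[1.0], predict_next=placeholder_model, window=1, threshold=0.8
)
```

Because each prediction is fed back as an input, errors accumulate along the roll-out, which is one reason why the choice of the sliding window size affects the accuracy of the predicted RUL.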

Battery fault diagnosis, degradation analysis, and property classifications

An important application of the machine learning approaches is to detect battery defects, as well as to detect and classify the abnormal batteries, in order to ensure the consistency of battery cells. This is beneficial for the BMS system to choose appropriate controlling strategies, which is critical for ensuring the safety and lifetime of batteries.

We first look at unsupervised and supervised learning approaches. In a study (Ortiz et al., 2019), several ML algorithms are applied to classify the unbalance and damage of Ni-MH battery cells, including logistic regression (Dong et al., 2016), k-NN, kernel-SVM (KSVM with a Gaussian radial basis function kernel (Kim et al., 2012)), Gaussian naive Bayes (GNB), and NN with only one hidden layer. Principal component analysis (PCA) is used to generate 28 PC points showing the cell quality in two classes based on 28 discharging voltage curves. The generated PC points are used to train the ML algorithms. It is found that KSVM and GNB give the best classification performance because their classification curves match the data well. In another work (Haider et al., 2020), a K-shape-based time series hierarchical clustering algorithm is applied to perform defect detection for lead-acid batteries.

Gaussian process regression is also gaining significant attention. For instance, the work in (Lucu et al., 2020) applied GPR to construct a calendar capacity loss model to analyze the aging characteristics of Li-ion batteries. The model contains a tailored kernel function. Specifically, they set the storage time for which the aging is predicted, the reciprocal temperature corresponding to this storage time, and the SOC level corresponding to this storage time to be the input to the GPR model and set the capacity loss to be the output. Their results showed that the mean absolute errors of the predicted capacity loss and capacity are 0.31% and 0.53%, respectively, when the model was trained with the dataset acquired from only 18 cells tested at 6 storage conditions.

The deep learning approach is also widely used for detecting and classifying battery cells with abnormal behaviors. A deep belief network (DBN) model is employed to detect the voltage anomalies of storage batteries (Li et al., 2019). The DBN model consists of a plurality of restricted Boltzmann machine (RBM) (Fischer and Igel, 2012) stacks and a layer of neural network. The model can learn the probability distribution of the input data and use the calculated probability to determine the active state of each node. As a result, the model has a higher training speed and better training convergence than the traditional back-propagation (BP) neural network. The voltage-time and current-time curves after parameter extraction are used to train the DBN (with 15 hidden layers). The input includes 9 parameters such as charge/discharge current, time, temperature, etc., whereas the output is voltage. A comparison of the gradient descent (GD) and Levenberg-Marquardt (LM) (Moré, 1978) optimization methods shows that DBN-LM has a higher voltage prediction accuracy (with an MSE of 7.25 × 10⁻⁴ and an MAE of 0.0105) and a higher training speed (639 iterations) than DBN-GD, BP-LM, and BP-GD. In another work (Zhao et al., 2017), big data analysis methods (including a 3σ multi-level screening strategy (3σ-MSS) and a neural network algorithm) are used to diagnose the fault and defect of batteries. The real-time running data of electric vehicles are used. The 3σ-MSS algorithm is based on the Gaussian distribution probability characteristics, which can generate clusters and fault criteria for trouble-free terminal voltages. The 3σ-MSS updates the cluster by excluding the samples outside the 3σ range. Then an ANN (back-propagation NN with a hidden layer) model is applied to simulate the cell fault distribution in a battery pack. The input to the ANN is the fault frequency matrix acquired by the 3σ-MSS.
In the work of (Yao et al., 2020), a wavelet-neural network system (containing discrete wavelet transform (DWT) and a general regression neural network (GRNN)) is used to detect the fault of Li-ion batteries for electric vehicles. The GRNN used is a highly parallel radial basis function network, which contains the input layer, pattern layer, summation layer, and output layer. The GRNN has the advantage of faster model building when compared with a traditional ANN. Specifically, the signal values (after denoising by DWT) and the characteristic parameters of the voltage curves (acquired from vibration tests) are used to train the GRNN. The input of the GRNN includes voltage, voltage difference, covariance matrix, and variance matrix. The output of the GRNN is the fault degree (quantified by the fault score). The predicting accuracy of the GRNN can be up to 99.675%.
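The core step of the 3σ screening strategy discussed above (updating the cluster by excluding the samples outside the 3σ range) can be sketched as an iterative filter. This is a single-level simplification of the multi-level strategy; the function name, the stopping rule, and the example voltages are assumptions.

```python
import statistics


def three_sigma_screen(values, max_rounds=100):
    """Repeatedly exclude samples farther than 3 sigma from the cluster mean.

    Returns (kept_indices, flagged_indices).
    """
    kept = list(range(len(values)))
    flagged = []
    for _ in range(max_rounds):
        if len(kept) < 2:
            break
        cluster = [values[i] for i in kept]
        mu = statistics.mean(cluster)
        sigma = statistics.pstdev(cluster)
        outliers = [i for i in kept if abs(values[i] - mu) > 3 * sigma]
        if not outliers:
            break
        flagged.extend(outliers)
        kept = [i for i in kept if i not in outliers]
    return kept, flagged


# Hypothetical terminal voltages (V) of 21 cells; the last cell is abnormal.
voltages = [3.70] * 20 + [3.20]
kept, flagged = three_sigma_screen(voltages)
```

Note that a single outlier can only exceed 3σ when the cluster is large enough, so the screening is meaningful for packs with many cells or many samples per cell.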

The input dataset (such as a cluster of multiple voltage curves) can sometimes be complex and contain multiple features, which makes it difficult for most machine learning algorithms to process and learn. CNN, which has a great capability in mining the underlying features of the dataset, can be employed for clustering the terminal voltage curves of battery cells to diagnose the abnormal battery cells. For instance, CNNs based on two-step time-series clustering (TTSC) and hybrid resampling (HR) are applied to screen the Li-ion batteries (Liu et al., 2018), in order to ensure the consistency of electrochemical characteristics of battery cells and to avoid property discrepancies caused by material variation and fluctuations in manufacturing precision. The TTSC is used for labeling the raw discharge voltage series of the cells (by using the k-means algorithm (Huang et al., 2016) to classify whether the cell is inconsistent (labeled as “0”) or not (labeled as “1”)). The hybrid resampling is used for solving the sample imbalance issue (by using the under-sampling algorithm to discard a portion of similar samples in the majority class dataset and by using the SMOTE algorithm (Chawla et al., 2002) to increase the number of samples in the minority class dataset). After that, the labeled and number-balanced discharge voltage series are used to train the CNN (a total of 7,205 series for training). The input is the discharge voltage-time curve, whereas the output is the classification result of the label distribution (inconsistent or not). By using the TTSCHR-CNN to screen the testing discharge voltage series of the cells, the inconsistency rate drops by 91.08% compared with the traditional screening method.
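The hybrid resampling described above combines under-sampling of the majority class with SMOTE over-sampling of the minority class. The sketch below is a simplified illustration: the under-sampling is purely random (the cited work discards similar samples), the SMOTE step follows the basic interpolation idea of Chawla et al. (2002), and the data are hypothetical.

```python
import math
import random


def undersample(majority, n_keep, rng):
    """Randomly keep n_keep samples of the majority class."""
    return rng.sample(majority, n_keep)


def smote(minority, n_new, k=2, rng=None):
    """Create n_new synthetic minority samples by neighbor interpolation."""
    if len(minority) < 2:
        raise ValueError("SMOTE needs at least two minority samples")
    rng = rng or random.Random()
    synthetic = []
    for _ in range(n_new):
        x = rng.choice(minority)
        others = [p for p in minority if p is not x]
        neighbors = sorted(others, key=lambda p: math.dist(x, p))[:k]
        neighbor = rng.choice(neighbors)
        u = rng.random()  # position along the segment from x to its neighbor
        synthetic.append([xi + u * (ni - xi) for xi, ni in zip(x, neighbor)])
    return synthetic


rng = random.Random(1)
majority = [[0.1 * i, 0.2 * i] for i in range(10)]  # hypothetical features
minority = [[0.0, 0.0], [1.0, 1.0]]
majority_kept = undersample(majority, n_keep=3, rng=rng)
minority_new = smote(minority, n_new=5, k=1, rng=rng)
```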

The above-mentioned machine learning approaches mainly use the dataset with the array data structure as the input for training the ML models. In some applications, the input to the ML models is in the form of images, such as the snapshots of the battery electrode microstructure. Under this circumstance, the CNN, which is highly capable in distilling the features of images, can be utilized. This borrows the learning process from the field of computer vision and is gaining increasing attention in the energy storage field. The work in (Wang et al., 2019) shows an approach to classify the voltage of fully charged LiPo batteries after a period of usage by applying CNN to learn the images of “battery face.” A Walabot sensor (which is a radio-frequency-based sensor) is used to collect the signals of five types of LiPo batteries with four types of fully charged voltage, to create “battery face” RGB images (the varying image characteristics are caused by the varying electrolyte states). Besides, linear discriminant analysis (LDA) is used to cluster the voltage class. Then the images (input) and the class that the battery voltage belongs to (output) are used to train the CNN model. It is shown that the CNN model has an accuracy of 93.75% (at the 80th epoch) for classifying the battery voltage. It is suggested that the CNN classifying accuracy can be further improved by accessing the raw image of Walabot, increasing the amount of training images, developing parameter optimization algorithms for CNN, and employing appropriate preprocessing methods on the training images. The work in (Badmos et al., 2020) shows the application of CNN to detect the microstructural defect in the electrodes of Li-ion batteries as a result of the manufacturing process to evaluate the battery quality. A total of 2,284 micrographs of the original electrodes are used to train the CNN model. The micrographs are 256 × 256 RGB images as shown in Figure 3. 
An image with defect is labeled as “class 0,” whereas an image without defect is labeled as “class 1.” The input to the CNN are the images, whereas the output from the CNN is the classification result (defect or not). The models used in the research include traditional CNN models (Baseline model, Sigmoid model, and Softmax model) trained by limited battery data and TL CNN models (pre-trained VGG19 (Simonyan and Zisserman, 2014), inception V3 (Szegedy et al., 2016), and Xception (Chollet, 2017), with a new fully connected layer added at the end of each TL model). It is shown that the CNN can learn meaningful features on its own and identify various defects. Specifically, the TL CNN models show a much higher classification accuracy than the traditional CNN models, because the TL models have previously experienced a larger size of dataset for training. The VGG19 fine-tuned model achieves the best classification performance (with an F1-score of 0.99 for class 0 and 1.00 for class 1) among all the CNN models. In the work of (Ma et al., 2019a, 2019b), CNN is applied to detect the blister defect of the polymer Li-ion battery (PLB). A total of 18,860 PLB sheet images (RGB images with a size of 219 × 219) are used to train the CNN model. The output of the CNN model is the classification result (blister or not). Improved dense blocks are employed to overcome the gradient vanishing problem during CNN training and to increase the training rate. In addition, the flower pollination algorithm (FPA) is used to optimize the parameters in the CNN model during training. It is found that the CNN model gives better classification performance (with an F1-score of 0.988) than the other seven ML algorithms tested (such as NN, SVM, and RCNN).
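The defect classifiers above are compared by their per-class F1-scores. The sketch below computes the F1-score of one class from its standard definition (the harmonic mean of precision and recall); the label lists are hypothetical and follow the "class 0" (defect) and "class 1" (no defect) convention used in the text.

```python
def f1_score_for_class(y_true, y_pred, label):
    """F1-score of one class: harmonic mean of its precision and recall."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == label and p == label)
    fp = sum(1 for t, p in pairs if t != label and p == label)
    fn = sum(1 for t, p in pairs if t == label and p != label)
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


# Hypothetical ground truth and predictions for four micrographs.
y_true = [0, 0, 1, 1]
y_pred = [0, 1, 1, 1]
f1_defect = f1_score_for_class(y_true, y_pred, label=0)
f1_no_defect = f1_score_for_class(y_true, y_pred, label=1)
```

Reporting the score per class matters for defect detection because defective samples are usually rare, so a high overall accuracy can hide a poor score on the defect class.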

Figure 3.

Examples of the application of machine learning for battery fault diagnosis and abnormal detection

(A) The application of CNN for detecting the battery electrode microstructural defect based on deep learning computer vision method. Reprinted by permission from Springer Nature (Badmos et al., 2020). Copyright 2020. (A1) Training process for CNN based on the large image of electrode micrograph. (A2) Testing process for CNN.

(B) Examples of class heatmap for micrographs with defect (shown in red box) and without defect. Reprinted by permission from Springer Nature (Badmos et al., 2020). Copyright 2020.

Battery design and optimization

In this subsection, we introduce the application of machine learning for battery design and optimization. The work of (Wu et al., 2018) shows the approach of using ANN (SLFNN model) to optimize the performance (specific energy and specific power) of Li-ion battery cells based on cell design parameters (electrode thickness, solid volume ratio, Bruggeman constant, particle radius, electrolyte concentration, and the applied C-rate). A total of 900 groups of data points generated by finite element method (FEM) simulations are used to train and validate the ANN-based model. Sensitivity analysis based on the ANN model identifies which design parameters are important for specific energy or specific power. Battery design maps that satisfy both specific energy and specific power requirements are generated. The work of (Gao and Lu, 2020) demonstrates the development and application of a DNN model to optimize the electrolyte channel design in thick electrodes for fast charging. A total of 20,000 groups of data points are generated by FEM to train, verify, and test the DNN model (which has three hidden layers). The geometrical parameters of the electrolyte channel (Figure 4A1) are set as the input for the DNN model, whereas the output from the DNN is the specific energy (SE), specific power (SP), and specific capacity (SC). The design maps that correlate the geometrical parameters and cell performance (as shown in Figures 4A2–A4) and the Ragone planes that correlate SE and SP (as shown in Figures 4A5–A7) are successfully generated. The trained DNN shows high accuracy in predicting the SE, SP, and SC, with relative error below 5%. The work also uses the Markov Chain Monte Carlo (MCMC) algorithm with the DNN to optimize the battery performance. The MCMC is based on the gradient descent method coupled with the self-adjustment strategy (Gao et al., 2019; Ying et al., 2017a, 2017b). The MCMC is contained in the trained DNN model as a continuous regression function.
It is shown that a battery cell with the electrolyte channel design can improve the specific energy by 79% compared with conventionally designed battery cells during fast charging. An ANN-based model is also applied to design mesoscale electrode structures (Takagishi et al., 2019). MATLAB is used to construct 2,100 three-dimensional (3D) artificial electrode structures based on the random packing method. These structures are then simulated to generate 2,100 groups of data points, which are used to train and verify an ANN. The inputs to the ANN are electrode design, property, and manufacturing parameters, including the active material volume fraction, particle radius, binder/additives volume fraction, electrolyte conductivity, and the compaction process pressure. The outputs from the ANN are resistance parameters, including the reaction resistance, the electrolyte resistance, and the diffusion resistance. The trained ANN model (with two hidden layers) achieves a prediction accuracy of R² = 0.99. The Bayesian optimization algorithm coupled with the trained ANN-based model is further used to infer the optimized process parameters from the total specific resistance.
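The idea of searching a trained surrogate model for the best design can be sketched as follows. This is a minimal illustration and not the method of (Gao and Lu, 2020): the function `surrogate_specific_energy` is a hypothetical smooth stand-in for a trained DNN regression function, the two normalized design parameters are invented, and a plain random-walk Metropolis-style search is used in place of the gradient-based MCMC with self-adjustment described above.

```python
import math
import random


def surrogate_specific_energy(x, y):
    """Hypothetical stand-in for a trained DNN regression function.

    x and y are normalized design parameters in [0, 1]; the peak value 1.0
    is placed at (0.6, 0.3) purely for illustration.
    """
    return math.exp(-((x - 0.6) ** 2 + (y - 0.3) ** 2) / 0.05)


def metropolis_maximize(f, start, steps=20000, step_size=0.05,
                        temperature=0.02, seed=0):
    """Metropolis-style random walk that remembers the best point visited."""
    rng = random.Random(seed)
    current = start
    current_val = f(*current)
    best, best_val = current, current_val
    for _ in range(steps):
        # Propose a Gaussian perturbation, clipped to the design bounds [0, 1].
        proposal = tuple(min(1.0, max(0.0, c + rng.gauss(0.0, step_size)))
                         for c in current)
        proposal_val = f(*proposal)
        # Accept improvements always; accept worse points with Boltzmann probability.
        accept = proposal_val >= current_val or rng.random() < math.exp(
            (proposal_val - current_val) / temperature)
        if accept:
            current, current_val = proposal, proposal_val
            if current_val > best_val:
                best, best_val = current, current_val
    return best, best_val


best_design, best_value = metropolis_maximize(surrogate_specific_energy,
                                              start=(0.1, 0.9))
print(best_design, best_value)
```

Because each surrogate evaluation is cheap compared with an FEM simulation, tens of thousands of candidate designs can be screened in this way; the step size and temperature used here are arbitrary choices.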

Figure 4.

Examples of the application of machine learning for battery design and optimization

(A) The application of DNN to optimizing the geometrical parameters of the electrolyte channels within the electrodes to maximize battery cell performance. Reprinted from (Gao and Lu, 2020). (A1) Model description and geometrical parameters of the electrolyte channels. The design idea is motivated by the microstructure of a tree trunk, which transports nutrients quickly through its vessels. The input parameters to the DNN are LEA, LEC, WEA/WH, WEC/WH, WEA/WEA-b, and WEC/WEC-b. The outputs from the DNN are SE, SP, and SC. (A2–A4) Design map of the specific energy generated by the trained DNN. (A5–A7) Ragone planes generated by the trained DNN coupled with MCMC.

(B) The application of DC-GAN for the reconstruction of the 3D multi-phase electrode microstructure for the battery and SOFC with periodic boundaries. Reprinted from (Gayon-Lombardo et al., 2020). (B1) The structure of the DC-GAN model. (B2) Results of the reconstruction. The generated and the real microstructures are similar, showing successful reconstruction by the DC-GAN.

Several studies have applied the generative adversarial network (GAN) to construct the structure of ESDs. For instance, in (Gayon-Lombardo et al., 2020), deep convolutional GAN (DC-GAN) models are applied to construct 3D cathode microstructures for batteries and 3D anode microstructures for solid oxide fuel cells (SOFC). The structure of the DC-GAN is shown in Figure 4B1. The generator and the discriminator of the DC-GAN both contain five layers. The generator, which upsamples a latent vector into a volume, is built from transposed convolution layers (ConvTranspose3d), whereas the discriminator is built from convolution layers (Conv3d). The real dataset for the DC-GAN model consists of microstructural images. A comparison of the real and the synthetic data (based on morphological parameters, transport properties, and the two-point correlation function) shows that arbitrarily large synthetic microstructural volumes with periodic boundaries can be successfully constructed. Examples of the real and generated microstructures for the battery cathode and the SOFC anode are shown in Figure 4B2.
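One of the comparison metrics mentioned above, the two-point correlation function, can be sketched for a small labeled image. This is a minimal illustration under simplifying assumptions: the image is 2D rather than 3D, shifts are taken along one axis only, and the function name and toy data are hypothetical. S2(r) is taken as the probability that two pixels separated by r columns both belong to the chosen phase, with periodic wrap-around.

```python
def two_point_correlation(image, phase, max_shift):
    """Return [S2(0), ..., S2(max_shift)] along the column direction.

    image is a list of equally long rows of phase labels; shifts wrap
    around, which matches a microstructure with periodic boundaries.
    """
    rows, cols = len(image), len(image[0])
    result = []
    for r in range(max_shift + 1):
        hits = 0
        for i in range(rows):
            for j in range(cols):
                if image[i][j] == phase and image[i][(j + r) % cols] == phase:
                    hits += 1
        result.append(hits / (rows * cols))
    return result


# Toy two-phase "microstructure": phase 1 fills the left half of each row.
real = [[1, 1, 0, 0],
        [1, 1, 0, 0]]
synthetic = [[0, 1, 1, 0],
             [0, 0, 1, 1]]

s2_real = two_point_correlation(real, phase=1, max_shift=3)
s2_synthetic = two_point_correlation(synthetic, phase=1, max_shift=3)
print(s2_real)
print(max(abs(a - b) for a, b in zip(s2_real, s2_synthetic)))
```

S2(0) equals the volume fraction of the phase, so a generated structure that matches the real one should agree at zero shift and at larger shifts as well.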

Battery modeling and behavior prediction

Machine learning approaches are also used to model and predict battery behavior and to build battery models. For instance, the SVM algorithm is used to classify the type of EIS model for the EIS spectra of Li-ion batteries and supercapacitors (Zhu et al., 2019a). Five different types of equivalent circuit EIS models are included. Over 500 EIS spectra are extracted from published papers, and the (Z(Re); -Z(Im)) points of the EIS spectra are used to train the SVM. Of the total data, 80% is used for training and 20% for testing. In the work of (Tang et al., 2018), a model-based ELM is applied to predict the future voltage, power, and surface temperature of batteries based on the given load current information. The model-based ELM uses the temperature model and the equivalent circuit model of a battery to replace the activation function of the ELM. The model-based ELM can predict the temperature and power with errors limited to ±1.5 °C and 2.5 W, respectively. In another work (Chen et al., 2018), SVM is applied to detect the heat generation mode of Li-ion batteries. The inputs to the SVM model are the temperature rise and the discharge capacity. The SVM model outputs the classification of the heat generation mode (JD mode or RGB mode) based on an optimized hyperplane.
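The hyperplane-based classification described above can be sketched with a linear SVM trained by stochastic subgradient descent on the regularized hinge loss. This is a minimal sketch under stated assumptions, not the setup of (Chen et al., 2018): the two features stand for standardized temperature rise and discharge capacity, all sample values are made up, the labels +1 and -1 are placeholders for the two heat generation modes, and the learning rate and regularization strength are arbitrary.

```python
import random


def train_linear_svm(samples, labels, learning_rate=0.01, reg=0.01,
                     epochs=200, seed=0):
    """Fit w and b by subgradient descent on the L2-regularized hinge loss."""
    rng = random.Random(seed)
    w = [0.0] * len(samples[0])
    b = 0.0
    order = list(range(len(samples)))
    for _ in range(epochs):
        rng.shuffle(order)
        for i in order:
            x, y = samples[i], labels[i]
            margin = y * (sum(wi * xi for wi, xi in zip(w, x)) + b)
            # The regularization term shrinks w on every step.
            w = [wi * (1.0 - learning_rate * reg) for wi in w]
            if margin < 1.0:
                # Margin violated: move the hyperplane toward this sample's class.
                w = [wi + learning_rate * y * xi for wi, xi in zip(w, x)]
                b += learning_rate * y
    return w, b


def predict(w, b, x):
    """Classify x by the side of the hyperplane w.x + b = 0 it falls on."""
    return 1 if sum(wi * xi for wi, xi in zip(w, x)) + b >= 0.0 else -1


# Made-up, standardized (temperature rise, discharge capacity) pairs.
samples = [(2.0, 2.0), (2.5, 1.5), (1.5, 2.5), (3.0, 3.0),
           (-2.0, -2.0), (-2.5, -1.5), (-1.5, -2.5), (-3.0, -3.0)]
labels = [1, 1, 1, 1, -1, -1, -1, -1]

w, b = train_linear_svm(samples, labels)
print([predict(w, b, x) for x in samples])
```

A kernel SVM from an established library would normally be preferred for real data; the linear version is shown only because the decision rule reduces to checking the sign of w·x + b.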

For battery model construction, the stacked denoising autoencoders-extreme learning machine (SDAE-ELM) is applied to build a temperature-dependent model for Li-ion batteries (Li et al., 2019a). A total of 450,000 data points are used to train the SDAE-ELM model. Specifically, SVR is used for data cleaning, including filling in missing data (current, terminal voltage, or SOC) and correcting outliers. The f-divergence algorithm is applied during data preprocessing to evaluate and even out the data distribution. The SDAE acts as a feature extractor and is trained (unsupervised) on the preprocessed data. The output of the SDAE serves as the input for the ELM. The ELM, which is trained on the raw data, is used to construct the models. For predicting the terminal voltage, the inputs to the SDAE-ELM are SOC, current, and temperature. For predicting the SOC (at moment t), the inputs to the SDAE-ELM are the terminal voltage, current, and temperature at moment t, as well as the SOC at moment (t-1). Results show that the temperature-dependent battery model provides terminal voltage estimation with an error of less than 2%, whereas the error in SOC estimation is within 3%. In (Li et al., 2019b), a deep belief network-back propagation neural network (DBN-BP) is used to construct a battery model to provide information for the BMS. The DBN-BP model contains a DBN and a layer of BP neural network. The DBN acts as the pre-trainer for the BP network. Experimental data collected from electric buses are used to train the DBN-BP model. The inputs to the DBN-BP are current, temperature, and SOC. The output from the DBN-BP is the terminal voltage. A comparison of the voltage prediction accuracy with other ML algorithms, including SLFNN, SVM, and ELM, shows that DBN-BP gives the highest voltage prediction accuracy (with an MAPE of 2.42%). 
SVM has higher accuracy (with an MAPE of 2.87%) than ELM (with an MAPE of 3.68%) and SLFNN (with an MAPE of 4.15%) because SVM is more robust and not easily affected by noise in the data (Jain et al., 2014). Moreover, the Gaussian process (GP) is applied to help construct a crack pattern model for LIBs with silicon-based anodes (Zheng et al., 2020), which assists the design of anode materials. Specifically, data points generated by FEM are used to train the GP-based surrogate model. The inputs to the model are the fraction ratio, the Si layer thickness, and the curvature. The output from the model is the Si island area. The results show that the GP surrogate model has high accuracy in predicting the Si anode performance, and the reliability (quantified by the “Cumulative confidence level” (Wang and Wang, 2014)) can reach 0.997 when 20 data points are used to train the model.
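Two details from the battery modeling works above lend themselves to a short sketch: the input layout for SOC prediction (terminal voltage, current, and temperature at moment t, plus SOC at moment t-1) and the MAPE metric used to rank the models. The helper names and the measurement values below are hypothetical, and the persistence baseline is only a placeholder predictor, not any of the cited models.

```python
def build_soc_samples(voltage, current, temperature, soc):
    """Pair the features (V_t, I_t, T_t, SOC_{t-1}) with the target SOC_t."""
    samples = []
    for t in range(1, len(soc)):
        features = (voltage[t], current[t], temperature[t], soc[t - 1])
        samples.append((features, soc[t]))
    return samples


def mape(actual, predicted):
    """Mean absolute percentage error in percent; actual values must be non-zero."""
    errors = [abs((a - p) / a) for a, p in zip(actual, predicted)]
    return 100.0 * sum(errors) / len(errors)


# Made-up measurements for illustration only.
voltage = [3.70, 3.68, 3.65, 3.61]
current = [1.0, 1.0, 1.0, 1.0]
temperature = [25.0, 25.2, 25.5, 25.9]
soc = [0.80, 0.78, 0.76, 0.74]

samples = build_soc_samples(voltage, current, temperature, soc)
print(samples[0])

# Persistence baseline: predict SOC_t as SOC_{t-1}, the last feature of each sample.
targets = [target for _, target in samples]
baseline = [features[-1] for features, _ in samples]
print(round(mape(targets, baseline), 2))
```

A lower MAPE means a smaller average relative deviation, which is the sense in which the 2.42% reported for DBN-BP is better than the 4.15% reported for SLFNN.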

Application of machine learning for capacitors/supercapacitors

In this subsection, the application of machine learning for capacitors and supercapacitors is introduced. Current studies mostly focus on behavior analysis, performance estimation, RUL prediction, and modeling and design. Specifically, RUL is quantified by the time or cycle number at which the capacitance decreases to a threshold value.
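The threshold-based definition of RUL can be made concrete with a short sketch. The 80% threshold, the function names, and the capacitance values are assumptions for illustration; the text above only states that a threshold value is used. In practice the fade curve beyond the current cycle is unknown and would come from a prediction model, whereas here a complete made-up curve is used to show the definition.

```python
def end_of_life_cycle(capacitance_by_cycle, threshold_fraction=0.8):
    """Return the first cycle (1-based) at which capacitance falls to the threshold.

    The threshold is threshold_fraction times the capacitance of the first cycle.
    Returns None if the threshold is never reached.
    """
    limit = threshold_fraction * capacitance_by_cycle[0]
    for cycle, value in enumerate(capacitance_by_cycle, start=1):
        if value <= limit:
            return cycle
    return None


def remaining_useful_life(capacitance_by_cycle, current_cycle,
                          threshold_fraction=0.8):
    """Cycles left between current_cycle and the end-of-life cycle."""
    eol = end_of_life_cycle(capacitance_by_cycle, threshold_fraction)
    if eol is None:
        return None
    return max(eol - current_cycle, 0)


# Made-up capacitance fade curve (arbitrary units), one value per cycle.
fade = [100.0, 98.0, 95.0, 90.0, 84.0, 79.0, 75.0]
print(end_of_life_cycle(fade))
print(remaining_useful_life(fade, current_cycle=3))
```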

For the cyclic voltammetry investigation, an ANN-based approach (an SLFNN model) is applied to model the cyclic voltammetry behavior of supercapacitors with a MnO2 electrode (Dongale et al., 2015). The potential and current density are used as the input parameters for the SLFNN, whereas the output is the cyclic voltammetry performance. Experimental data are used to train the SLFNN model based on five types of supercapacitors under applied DC voltages of 0–1 V for 20 cycles. The results show that the trained SLFNN model has good performance with a low error (below 2%) in predicting the specific capacitance when compared with experimental results. Similarly, ANN (an SLFNN model) is also used in (Lokhande, 2020) to model the cyclic voltammetry for supercapacitors with Ni(OH)2 electrodes.

A DNN is applied to predict the capacitance of carbon-based supercapacitors (Zhu et al., 2018). A total of 681 sets of data are collected from more than 300 publications to train the DNN model (with two hidden layers). The inputs to the DNN are the specific surface area, the pore size, the ID/IG ratio, the N-doping level, and the voltage window. The output from the DNN is the capacitance. The DNN shows high training accuracy (R² = 0.91) and high out-of-sample accuracy when the predicted capacitance is compared with the real capacitance.
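The accuracy figure quoted above is a coefficient of determination, which can be computed as one minus the ratio of the residual sum of squares to the total sum of squares. The sketch below uses this standard definition; the function name and the capacitance values are made up and are not taken from (Zhu et al., 2018).

```python
def r_squared(actual, predicted):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_actual = sum(actual) / len(actual)
    ss_res = sum((a - p) ** 2 for a, p in zip(actual, predicted))
    ss_tot = sum((a - mean_actual) ** 2 for a in actual)
    return 1.0 - ss_res / ss_tot


# Made-up capacitance values (arbitrary units) and model predictions.
actual = [1.0, 2.0, 3.0, 4.0]
predicted = [1.1, 1.9, 3.2, 3.8]
print(round(r_squared(actual, predicted), 3))
```

Computing the same quantity on data held out from training gives the out-of-sample accuracy, which is the more informative figure when a model is meant to screen new materials.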

Several studies have compared different types of ML algorithms in predicting the performance of supercapacitors. For instance, the work in (Zhou et al., 2020a) compares several machine learning algorithms (generalized LR (GLR), SVM, RF, and ANN) in correlating the structural features with the capacitance of carbon-based supercapacitors. The input parameters for these ML algorithms are the micropore and mesopore surface areas and the scan rate. The outputs are the specific capacitance and power density. A total of 70 groups of data points are used for training the ML algorithms. The results show that ANN has the highest accuracy for predicting the capacitance, whereas GLR has the lowest accuracy. The performance of ANN and RF for estimating the current of Co-CeO2/rGO nanocomposite supercapacitors is compared in (Parwaiz et al., 2018). The training datasets are obtained from experiments. The inputs to the ML algorithms are potential, oxidation/reduction, and doping concentration, whereas the output is current (the set-up of the ANN (an SLFNN) is illustrated in Figure 5A2). It is found that ANN performs better than RF. In (Su et al., 2019), the performance of ML algorithms including the M5 model tree (M5P) (Breiman et al., 1984), M5 rule (M5R), multilayer perceptron (MLP, a widely used ANN model), SVM, and LR is compared for predicting the capacity of supercapacitors based on the properties of 13 types of electrolytes. The diameter, dipole moment, viscosity, boiling temperature, and dielectric constant of the electrolytes are used as the inputs to the ML algorithms. It is shown that M5P gives higher prediction accuracy than M5R and MLP owing to its simple rules, and LR gives the lowest prediction accuracy. The solvent molecular size and dielectric constant are found to significantly affect the capacity.

Figure 5.

Examples of the application of machine learning for capacitors and supercapacitors

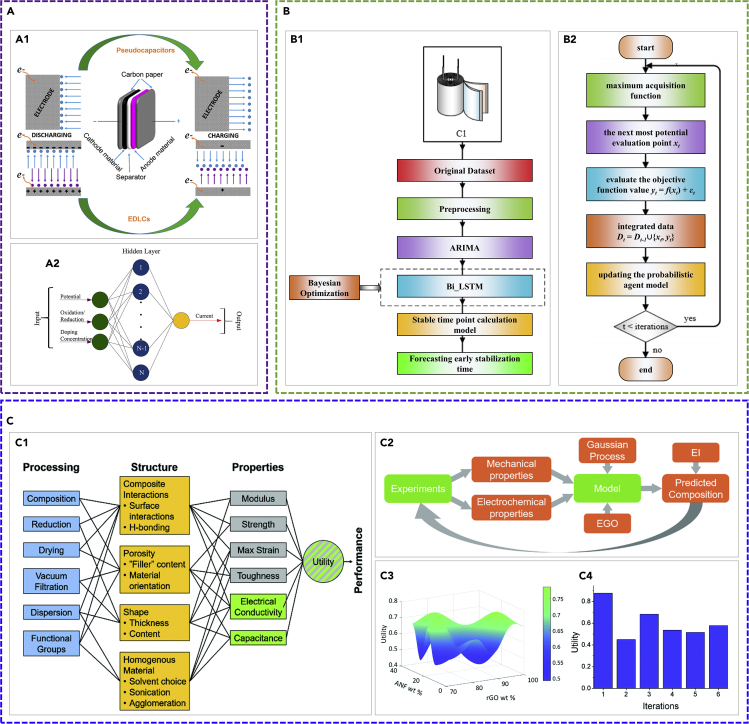

(A) The application of ML to modeling the cyclic voltammetry behavior of Co-CeO2/rGO nanocomposite supercapacitors. Reprinted with permission from (Parwaiz et al., 2018). Copyright 2018 American Chemical Society. (A1) Illustration of the supercapacitor structure and the two charge storage mechanisms. (A2) The setup of the ANN for modeling the cyclic voltammetry behavior of Co-CeO2/rGO nanocomposite supercapacitors. The ANN contains an input layer, a hidden layer, and an output layer.

(B) A schematic of the electronic capacitor early stabilization time prediction through (B1) the hybrid ARIMA-Bi_LSTM model and (B2) a schematic of the Bayesian optimization algorithm. Reprinted from (Wang et al., 2020a, 2020b).

(C) The application of machine learning for designing multifunctional supercapacitor electrodes. Reproduced from (Patel et al., 2019) with permission from The Royal Society of Chemistry. (C1) The correlation between processing, structure, properties (including mechanical properties (gray boxes) and electrochemical properties (green boxes)), and the performance of a composite electrode composed of reduced graphene oxide, Aramid nanofibers, and carbon nanotubes. (C2) A schematic of the interactions between experiments and computations (including the Gaussian process machine learning computation and global optimization). (C3) The predicted utility (after 6 iterations). (C4) The predicted utility of the samples with composition at each iteration.