Abstract

Objectives

To describe critical features of the Ethiopian Pediatric Society (EPS) Quality Improvement (QI) Initiative and to present formative research on mentor models.

Setting

General and referral hospitals in the Addis Ababa area of Ethiopia.

Participants

Eighteen hospitals selected for proximity to the EPS headquarters, prior participation in a recent newborn care training cascade and minimal experience with QI.

Interventions

Education in QI in a 2-day workshop, followed by implementation of a facility-based QI project supported by either virtual or in-person mentorship.

Primary and secondary outcome measures

Primary outcome—QI progress, measured using an adapted Institute for Healthcare Improvement Scale; secondary outcome—contextual factors affecting QI success as measured by the Model for Understanding Success in Quality.

Results

The dose and nature of mentoring encounters differed based on a virtual versus in-person mentoring approach. All QI teams implemented at least one large-scale change. Education of staff was the most common change implemented in both groups. We did not identify contextual factors that predicted greater QI progress.

Conclusions

The EPS QI Initiative demonstrates that education in QI paired with external mentorship can support implementation of QI in low-resource settings. This pragmatic approach to facility-based QI may be a scalable strategy for improving newborn care and outcomes. Further research is needed on the most appropriate instruments for measuring contextual factors in low/middle-income country settings.

Keywords: continuous quality improvement, global health, healthcare quality improvement, paediatrics

Strengths and limitations of this study.

This is a programme evaluation of a pragmatic approach to quality improvement led by the Ethiopian Pediatric Society.

We evaluate hospital progress with quality improvement methodology using a novel adaptation of an Institute for Healthcare Improvement Scale.

Although hospitals in the in-person mentorship and virtual mentorship groups were balanced with regard to location and census, they were not randomised.

Data were collected via medical record abstraction; when a quality improvement team judged the medical record to be inaccurate based on its own experience, the team's estimate of compliance with the care process was used as baseline data.

Introduction

In 2016, the neonatal mortality rate in Ethiopia was 28/1000 live births; 90 000 newborns died in Ethiopia during that year.1 Many of these deaths were preventable. In response to this public health crisis, the Ethiopian Ministry of Health, in collaboration with the Survive and Thrive Global Development Alliance (S&T GDA) and the Ethiopian Pediatric Society (EPS), initiated a training programme for providers of newborn care in hospitals countrywide.2 Through this programme, midwives learnt evidence-based practices for newborn resuscitation, early newborn care and care of the small baby using the American Academy of Pediatrics’ Helping Babies Survive (HBS) suite of educational programmes.3

The HBS curriculum, and particularly the first programme in the suite entitled Helping Babies Breathe (HBB), has been adopted in many other low/middle-income countries (LMICs).4 5 Training in HBB reduces perinatal mortality, and sustained reductions in mortality become more likely when companion strategies for maintaining knowledge and translating it into practice are used.6–11 However, translation of knowledge into practice is frequently impeded by systems barriers, including lack of resources, inadequate staffing and poorly organised processes of care.12 To eliminate some of these barriers, local strategies must be employed. Quality improvement (QI) methods that promote local adaptation of proven interventions through iterative testing may be key to sustaining a reduction in perinatal mortality following training.13 14

While many Ministries of Health in LMICs are developing QI expertise, capacity-building for facility-based QI is still needed.15 Consequently, to date, successful facility-based QI in LMICs has typically involved concentrated coaching by a QI expert.16–22 Recognising the labour-intensive and resource-intensive nature of such models, virtual consultation has been increasingly explored as a complementary or even alternative approach.23 24

In 2017, the EPS began a pilot project called the EPS QI Initiative to test a strategy to improve adherence to newborn care practices. The initiative included QI education using Improving Care of Mothers and Babies, a QI guide developed by the S&T GDA, to support facility-based QI efforts in LMICs.25 In addition to training in QI, the EPS QI Initiative provided either virtual or in-person mentoring for each hospital-based team. In this manuscript, we examine critical features of the EPS QI Initiative and present formative research on mentor models for QI teams in LMIC settings. Finally, we review key elements of the EPS QI Initiative using the Consolidated Framework for Implementation Research (CFIR), an implementation science framework of constructs associated with effective implementation.26

Methods

Features of the EPS QI Initiative

Selection of hospitals

The director of the EPS invited 20 hospitals in the Addis Ababa area to join the QI initiative. He selected hospitals that participated in the countrywide HBS training cascade and had little experience in QI methodology. This group of hospitals included general and referral hospitals in both rural and urban locations. Executives at all 20 hospitals gave permission for their hospital to participate. Two were later excluded because they did not participate in baseline data collection (see below). Therefore, 18 hospitals participated in the final cohort.

Baseline data collection

The initiative focused on newborn care in the labour and delivery ward. In an effort to track implementation of practices recommended in the HBS programmes, the EPS identified 15 key newborn process and outcome indicators from the HBS curriculum for continuous monitoring and evaluation by the cohort. These included the following dichotomous process indicators: (1) stimulation to breathe at birth, (2) administration of positive pressure ventilation, (3) cord clamping after 1 min, (4) skin-to-skin for 1 hour after birth, (5) early initiation of breastfeeding, (6) temperature measurement, (7) vitamin K administration, (8) tetracycline administration, (9) BCG administration, (10) polio vaccination and (11) kangaroo mother care (for newborns <2000 g). Additionally, four dichotomous outcome indicators were included: (1) crying at birth, (2) hypothermia (temperature <36.5°C), (3) stillbirth (including fresh vs macerated) and (4) death prior to discharge.

A midwife from each hospital participated in an initial workshop in June 2017 to learn how to abstract data from the medical record for monitoring of the quality indicators. At this workshop, participants also learnt how to implement low-dose, high-frequency (LDHF) practice of newborn care skills, such as bag mask ventilation, at their hospital. (While there is evidence to support improved knowledge translation with LDHF practice following HBS training,7 9–11 27 time constraints did not permit covering LDHF practice during the initial HBS training cascade.) Following the workshop, hospitals began collecting baseline data using tablets provided by the initiative and a purpose-designed database built with REDCap electronic data capture tools (REDCap, Vanderbilt University, Nashville, Tennessee, USA).28 29 The midwife at each hospital abstracted data describing every birth and the subsequent care of the newborn in the labour and delivery ward from paper medical records and entered these data into the tablet-based database. The midwife tasked with this responsibility received a small financial incentive. Digital data were then transferred via the internet to a central computer in the EPS office. Baseline data were collected during the months following the workshop.

Mentor selection and training

The EPS director conducted a search to identify local mentors with strong understanding of Ethiopian health systems, knowledge of QI methodology, experience in coaching healthcare providers and evidence of ability to motivate change. After an in-depth interview process, three candidates were selected to serve as mentors for hospital-based QI projects. All three mentors were neonatal intensive care nurses with at least 5 years of clinical experience; each had prior leadership experience, but limited QI experience.

The mentors studied the QI guide and subsequently participated in a 1-hour training to review QI methodology, to learn how to facilitate the QI training workshop and to practise successful QI coaching. During this mentor training, two authors of the QI guide (CB and JP, also authors of this manuscript) conducted a detailed review of the basic steps for QI methodology outlined in the guide. Additionally, these authors briefed the mentors on how to facilitate the QI training workshop for the cohort and also led sessions on effective coaching using both instruction and simulation cases to highlight successful coaching strategies.

Quality improvement training workshop

In December 2017, the head nurse midwife and one additional self-selected representative from the labour and delivery ward of each hospital attended a 2-day workshop on QI methods. We used Improving Care of Mothers and Babies as a teaching tool. On the first day, two authors of the QI guide (CB and JP, also authors of this manuscript) taught the following basic QI steps: creating a team, deciding what to improve, choosing the barriers to overcome, planning and testing change, and determining if the change resulted in improvement. They taught this portion of the workshop in English with interpretation into Amharic. Participants applied key knowledge from these steps in small group practice exercises with the help of Ethiopian facilitators. These facilitators served as mentors for the cohort in the subsequent months (see Mentor role below). Representatives received all written material in English, the designated language for healthcare professionals in Ethiopia.

On the second day, participants reviewed baseline data of key indicators from their hospital in the form of run charts.30 The data manager for the initiative plotted these run charts using the REDCap data and a purpose-designed template in Excel. With the assistance of a facilitator, each hospital identified gaps in its quality of care and selected an indicator for improvement based on its importance (eg, to families or the health authority), the expected amount of improvement and the potential impact of the improvement. Although the initiative did not use a collaborative model in which all hospitals conduct QI on the same indicator,31 independent selection of indicators by the hospitals still resulted in the majority pursuing the same gap in quality (see below). After selecting an indicator, hospital representatives began the initial planning of a project, including completion of an aim statement using the model described in the QI guide. Following this training, representatives returned to their hospitals to form a QI team and complete a project.

Mentor role

We initially planned to provide QI training to all hospitals, with in-person mentorship added for only half of them. Limiting in-person mentorship to a subset of the hospitals was intended to permit evaluation of the impact of mentorship on the success of implementing a QI project. The EPS director assigned hospitals to QI training alone or QI training with mentorship with the goal of balancing the groups with respect to rural versus urban location and low versus high delivery census. The EPS director also considered geographic proximity when allocating hospitals to the groups, such that each group included hospitals located both near to and far from the EPS central office. The nine hospitals in the mentorship group were clustered into sets of three based on ease of travel between them, and each mentor was assigned one of these sets to mentor through in-person visits. However, discussions with participants at the QI training workshop suggested that a QI project was very unlikely to be executed successfully in the absence of mentorship. In response, and in recognition of the initiative's primary objective of improving care in participant hospitals, the plan was modified to provide virtual mentorship to support QI activities in the hospitals not receiving in-person mentorship. Thus, each mentor was assigned three hospitals for virtual mentorship in addition to their three hospitals for in-person mentorship.

The EPS director instructed mentors to interact with their hospitals once monthly by phone for the virtual mentorship subset and in person for the in-person mentorship subset. The mentor, in conjunction with the QI team, determined the content and length of mentoring sessions with consideration for the status of the QI project and perceived challenges in moving forward. Mentors recorded the length and nature of each interaction for all mentoring encounters. Mentors also participated in a monthly conference call with the EPS director and QI guide authors to discuss successes and challenges with coaching and for collective support and learning.32

Monitoring and evaluation

Patient-level data collection continued following the second (QI training) workshop. During this period, a data manager at the EPS produced a comprehensive monitoring and evaluation report for each hospital that summarised the hospital’s monthly data for all key indicators. The comprehensive report contained a running monthly tally of total births and births <2000 g, rates of compliance with each process indicator per month in both table and run chart format, and rates of outcomes for each outcome indicator per month in table format. The data manager provided run charts for the outcome indicator hypothermia only, as other outcomes (eg, mortality) were sufficiently rare that conclusions could not be drawn from monthly graphs. The comprehensive report also included a detailed table on missing data for each process and outcome indicator.
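As a rough illustration of the monthly tallies described above, the sketch below computes, for one dichotomous process indicator, the number of compliant births, the number with a documented value, the number with missing data and the resulting compliance rate per month. The `BirthRecord` fields, the use of documented births as the denominator and the exclusion of births with missing weight from kangaroo mother care eligibility are assumptions for illustration; they are not the initiative's REDCap form or the data manager's Excel template.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class BirthRecord:
    """One abstracted birth (hypothetical fields, not the initiative's REDCap form)."""
    birth_date: date
    birth_weight_g: Optional[int]
    # Dichotomous process indicators: True = done, False = not done, None = missing.
    skin_to_skin: Optional[bool]
    kangaroo_mother_care: Optional[bool]


def monthly_compliance(records, indicator, eligible=lambda r: True):
    """Return {(year, month): (compliant, documented, missing, rate)} for one indicator.

    Assumption: the rate's denominator is eligible births with a documented value;
    births with no documented value are reported in the missing-data column.
    """
    counts = defaultdict(lambda: [0, 0, 0])  # [compliant, documented, missing]
    for r in records:
        if not eligible(r):
            continue
        month = (r.birth_date.year, r.birth_date.month)
        value = getattr(r, indicator)
        if value is None:
            counts[month][2] += 1
        else:
            counts[month][1] += 1
            counts[month][0] += int(value)
    return {
        month: (c, d, m, c / d if d else None)
        for month, (c, d, m) in sorted(counts.items())
    }


def under_2000_g(r):
    """Kangaroo mother care applies only to newborns <2000 g; missing weight is excluded (assumption)."""
    return r.birth_weight_g is not None and r.birth_weight_g < 2000


# Hypothetical example data.
records = [
    BirthRecord(date(2018, 1, 3), 3100, True, None),
    BirthRecord(date(2018, 1, 9), 1850, False, True),
    BirthRecord(date(2018, 1, 20), 2900, None, None),
    BirthRecord(date(2018, 2, 2), 1700, True, False),
]
print(monthly_compliance(records, "skin_to_skin"))
# {(2018, 1): (1, 2, 1, 0.5), (2018, 2): (1, 1, 0, 1.0)}
print(monthly_compliance(records, "kangaroo_mother_care", eligible=under_2000_g))
# {(2018, 1): (1, 1, 0, 1.0), (2018, 2): (0, 1, 0, 0.0)}
```

Grouping by `r.birth_date.isocalendar()[:2]` instead of year and month would be one possible way to produce weekly tallies like those in the indicator-specific reports, although the initiative's own week definition is not stated.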

The data manager also produced an indicator-specific monitoring and evaluation report for key indicators directly linked to the QI team’s process or outcome selected for improvement. The report included a running weekly tally of total births, rates of compliance with any relevant process indicator per week in both table and run chart format, and rates of outcomes for any relevant outcome indicator per week in table format.

Hospitals received comprehensive reports summarising monthly data at approximately 3-month intervals, and indicator-specific reports summarising weekly data at approximately monthly intervals. As a result of delayed data extraction in some hospitals and a period of poor internet access for a subset of the hospitals, reports were not always delivered at the prescribed intervals. Thus, data were not continuously available to guide QI teams in their work.

Evaluation of the EPS QI Initiative

Hospital-based monitoring of improvement

QI teams determined whether there was improvement in their chosen process or outcome using simple run chart rules. Teams identified change by a shift (six or more consecutive data points all located above or below the median) or a trend (five or more consecutive points all going up or all going down) in the data. For 16 of the teams, the data manager calculated the baseline median depicted on the run charts using weekly data from October 2017 through the first week of December 2017. Two teams reviewed their baseline data and determined from their own experience that the chart data did not accurately reflect compliance with their chosen process of care. These teams determined an approximate baseline rate through either an educated guess or direct observation of that process of care for a subset of deliveries. Both teams selected rates that were worse than those calculated from the chart data. These approximated rates were adopted as the baseline for subsequent QI work, and strategies were implemented to improve the quality of these data in the medical chart. Because teams did not consistently record the timing of the changes they implemented, in this report we considered all data from the second week of December onwards as occurring after initiation of the QI project.
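The two run chart rules can be sketched as below, assuming weekly compliance percentages, a baseline median frozen from the baseline weeks, and rules applied to the post-baseline points only. Two conventions are assumptions not stated in the text: points falling exactly on the median neither extend nor break a shift, and consecutive equal values count as a single point when looking for a trend. The function names and the example data are hypothetical.

```python
from statistics import median

SHIFT_POINTS = 6  # six or more consecutive points on one side of the median
TREND_POINTS = 5  # five or more consecutive points all going up or all going down


def longest_shift(values, centre):
    """Longest run of consecutive points all above, or all below, `centre`.

    Points exactly on the median are skipped: they neither extend nor break a run.
    """
    best = run = 0
    side = 0  # +1 above the median, -1 below, 0 before the first counted point
    for v in values:
        if v == centre:
            continue
        s = 1 if v > centre else -1
        run = run + 1 if s == side else 1
        side = s
        best = max(best, run)
    return best


def longest_trend(values):
    """Number of points in the longest strictly rising or strictly falling run.

    Consecutive equal values are collapsed to a single point before counting.
    """
    pts = [v for i, v in enumerate(values) if i == 0 or v != values[i - 1]]
    best, run, direction = min(len(pts), 1), 1, 0
    for prev, cur in zip(pts, pts[1:]):
        step = 1 if cur > prev else -1
        run = run + 1 if step == direction else 2  # a new run starts with two points
        direction = step
        best = max(best, run)
    return best


def run_chart_signals(baseline, follow_up):
    """Apply both rules to post-baseline points against the frozen baseline median."""
    centre = median(baseline)
    return {
        "baseline_median": centre,
        "shift": longest_shift(follow_up, centre) >= SHIFT_POINTS,
        "trend": longest_trend(follow_up) >= TREND_POINTS,
    }


# Hypothetical weekly compliance (%) for one process of care.
baseline = [20, 25, 15, 30, 20, 25, 20, 10, 25]
follow_up = [30, 35, 40, 38, 45, 50, 55]
print(run_chart_signals(baseline, follow_up))
# {'baseline_median': 20, 'shift': True, 'trend': False}
```

In this example, all seven follow-up points lie above the baseline median, which signals a shift, while the longest rising run contains only four points, so no trend is flagged.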

Progress with QI methodology

We evaluated progress with QI methodology using a QI progress scale adapted from a scale for quality collaboratives published by the Institute for Healthcare Improvement (see online supplemental table 1).33 This scale was originally designed for a collaborative model in which a single intervention is implemented across all sites. Since the EPS QI Initiative involved different interventions at each site, the two authors of the QI guide adapted this scale to remove references to a single change package and to customise language to match the methodology presented in the QI guide. Two representatives from each QI team gathered at a final workshop in April 2018 to present their team’s QI work and discuss sustainability of QI efforts. Two authors of this report independently rated the hospitals on their QI progress based on these team presentations and run chart data using the QI progress scale. Any discrepancies between the two investigators’ ratings were resolved through consensus.


Context

We used the Model for Understanding Success in Quality (MUSIQ) to evaluate the context in which QI work was conducted in this cohort.34 MUSIQ is a conceptual model to describe contextual factors that influence QI success. It has been used in high-income settings to understand the context around QI projects in a paediatric hospital, a state QI collaborative, verification visits to healthcare organisations and an improvement advisor training programme.35 36

The MUSIQ Survey was revised to reflect the setting of this QI initiative, with the following adaptations: the preamble was adjusted to reflect the details of this initiative; the organisation was specified as the hospital and the microsystem as the delivery room; and three questions (#32, 34, 36) were deleted because of lack of relevance in this initiative. The final survey consisted of 33 questions in the following five domains: (1) QI team, (2) the organisation (in this initiative, defined as the hospital), (3) the microsystem (in this initiative, defined as the delivery room), (4) support and (5) environment. The final three questions on the survey addressed outcomes of the specific QI project, including a question on perceived success.

We used a back-translation strategy to produce a translated survey in Amharic.37 First, the revised English survey was translated into Amharic by an external translator and then back-translated into English. The investigators reviewed the original survey and back-translated survey for points of confusion, discussed discrepancies and came to consensus on the final Amharic survey. A committee composed of the three mentors for the initiative reviewed the final survey and suggested additional edits for clarification.

Up to six individuals from each hospital independently completed the MUSIQ Survey, with representation from the following personnel: QI team leaders, QI team members, heads of the labour and delivery ward and hospital administrators. The MUSIQ scoring system assigns the following numeric values for the Likert scale responses to each question: totally agree=7, agree=6, somewhat agree=5, neither agree nor disagree=4, somewhat disagree=3, disagree=2, totally disagree=1, don’t know=0. We calculated median domain scores and interquartile ranges (IQRs) for each hospital.
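A minimal sketch of the scoring described above, assuming item responses are pooled across a hospital's respondents within each domain before the median and IQR are taken (the text does not say whether scores were first averaged per respondent). Whether 'don't know' responses, scored 0, should count towards the median is left as a hypothetical `drop_dont_know` option, and the question-to-domain mapping in the example is invented. Quartiles use the inclusive method of Python's `statistics.quantiles`; other statistical software may interpolate differently.

```python
from statistics import median, quantiles

# Numeric values for each Likert response, as listed in the text.
LIKERT = {
    "totally agree": 7,
    "agree": 6,
    "somewhat agree": 5,
    "neither agree nor disagree": 4,
    "somewhat disagree": 3,
    "disagree": 2,
    "totally disagree": 1,
    "don't know": 0,
}


def domain_summary(responses, question_domain, drop_dont_know=False):
    """Return {domain: (median, q1, q3)} for one hospital.

    responses: one {question_number: response_text} dict per respondent.
    question_domain: {question_number: domain_name} (hypothetical mapping).
    drop_dont_know: hypothetical option to exclude 'don't know' (scored 0).
    """
    scores = {}
    for respondent in responses:
        for question, answer in respondent.items():
            key = answer.strip().lower().replace("\u2019", "'")  # accept curly apostrophes
            value = LIKERT[key]
            if drop_dont_know and value == 0:
                continue
            scores.setdefault(question_domain[question], []).append(value)
    summary = {}
    for domain, values in scores.items():
        if len(values) == 1:
            q1 = q3 = values[0]  # quantiles() needs at least two points on older Pythons
        else:
            q1, _, q3 = quantiles(values, n=4, method="inclusive")
        summary[domain] = (median(values), q1, q3)
    return summary


# Hypothetical example: two respondents, three questions in two domains.
question_domain = {1: "QI team", 2: "QI team", 3: "Microsystem"}
responses = [
    {1: "Agree", 2: "Totally agree", 3: "Somewhat agree"},
    {1: "Agree", 2: "Somewhat agree", 3: "Don't know"},
]
print(domain_summary(responses, question_domain))
# {'QI team': (6.0, 5.75, 6.25), 'Microsystem': (2.5, 1.25, 3.75)}
```

The 'Microsystem' result shows why the handling of 'don't know' matters: scoring it as 0 pulls that domain's median from 5 down to 2.5 in this toy example.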

Patient and public involvement and oversight

Neither patients nor the lay public were involved in the design, conduct, reporting or dissemination plans of our research. However, the professional community represented by participant midwives advised the EPS about modifications in the design (ie, type of mentorship). Our Institutional Review Board exempted this study from review.

Results

Demographics of participating hospitals and selection of QI project

Among the hospitals receiving virtual mentorship, six were rural and seven were general hospitals; among the hospitals receiving in-person mentorship, five were rural and five were general hospitals. The number of annual deliveries ranged from 1296 to 5728 (median 2940) among the hospitals receiving virtual mentorship and from 1404 to 8732 (median 2348) among the hospitals receiving in-person mentorship.

Hospitals most commonly selected skin-to-skin care (n=10) for their improvement project because this process was identified as one with poor compliance. Hospitals also chose to improve temperature measurement (n=5), hypothermia (n=1), hand washing (n=1) and delayed cord clamping (n=1; see online supplemental table 2).

Mentor encounters

All hospitals received at least one encounter from their mentor per month (table 1). Encounters for the hospitals receiving virtual mentorship were nearly all virtual (95%) and most lasted 30 min to 2 hours (81%). In contrast, encounters for the hospitals receiving in-person mentorship were predominantly in person (84%) and most lasted more than 2 hours (74%). While mentors interacted with a variety of the QI team members, QI team leaders were most frequently involved in mentor encounters for both groups. Data collectors were involved in one-third of encounters for hospitals receiving virtual mentorship; QI team members were involved in one-third of encounters for hospitals receiving in-person mentorship. The majority of mentor encounters with hospitals receiving virtual mentorship focused on assessing progress, with directed coaching on the QI process only one-quarter of the time (figure 1). In contrast, mentor encounters with hospitals receiving in-person mentorship involved coaching on the QI process nearly half of the time, with time spent encouraging and motivating the team during one-third of visits.

Table 1.

Mentor encounters to support quality improvement projects

| | Virtual mentorship | In-person mentorship |
|---|---|---|
| Total encounters (n) | 42 | 43 |
| Encounters per hospital per month (mean) | 1.2 | 1.2 |
| Encounter type (%) | | |
| Virtual | 95 | 16 |
| In-person | 5 | 84 |
| Encounter duration (%) | | |
| <30 min | 14 | 7 |
| 30 min to 2 hours | 81 | 19 |
| >2 hours | 5 | 74 |
| Participants involved (%) | | |
| QI team leader | 83 | 81 |
| QI team member(s) | 9 | 35 |
| Data collector | 31 | 14 |
| Other staff | 2 | 7 |

QI, quality improvement.
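Table 1 could be derived from the mentors' encounter logs roughly as sketched below. The `Encounter` fields, the role labels and the placement of encounters lasting exactly 30 or 120 min are assumptions; the text states only that mentors recorded the length and nature of each interaction. Because one encounter can involve several roles, the participant percentages are not expected to sum to 100, as in the table.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class Encounter:
    """One logged mentor encounter (hypothetical fields)."""
    hospital: str
    mode: str                  # "virtual" or "in-person"
    minutes: int
    participants: frozenset    # roles present, e.g. {"QI team leader", "Data collector"}


def duration_bin(minutes):
    # Table 1 bins; putting exactly 30 and 120 min in the middle bin is an assumption.
    if minutes < 30:
        return "<30 min"
    if minutes <= 120:
        return "30 min to 2 hours"
    return ">2 hours"


def summarise(encounters):
    """Table 1-style percentages for one mentorship group."""
    n = len(encounters)

    def pct(counter):
        # Whole-number percentages; Python's round() rounds halves to even.
        return {key: round(100 * count / n) for key, count in counter.items()}

    return {
        "total": n,
        "mode": pct(Counter(e.mode for e in encounters)),
        "duration": pct(Counter(duration_bin(e.minutes) for e in encounters)),
        # One encounter can involve several roles, so these need not sum to 100.
        "participants": pct(Counter(role for e in encounters for role in e.participants)),
    }


# Hypothetical log for one group of hospitals.
log = [
    Encounter("Hospital A", "virtual", 45, frozenset({"QI team leader"})),
    Encounter("Hospital A", "virtual", 20, frozenset({"QI team leader", "Data collector"})),
    Encounter("Hospital B", "in-person", 150, frozenset({"QI team leader", "QI team member(s)"})),
    Encounter("Hospital B", "virtual", 60, frozenset({"Data collector"})),
]
print(summarise(log))
```

Keeping the log at one row per encounter, rather than pre-aggregated counts, lets the same data also yield the mean number of encounters per hospital per month once the length of follow-up is known.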

Figure 1.

Themes of mentor encounters for hospitals receiving virtual mentorship and those receiving in-person mentorship. Encounters that involved more than one theme are displayed in all relevant categories. QI, quality improvement.

In addition to the encounters to support QI projects, mentors engaged with hospitals in both groups around data entry and transmission issues (n=36 additional encounters) and assistance with development of a presentation for the April workshop (n=11 additional encounters). Among those hospitals receiving in-person mentorship, mentors also made in-person visits focused on specific reinforcement of clinical training and other reasons such as discussion of finances for purchasing supplies (n=14 additional encounters).

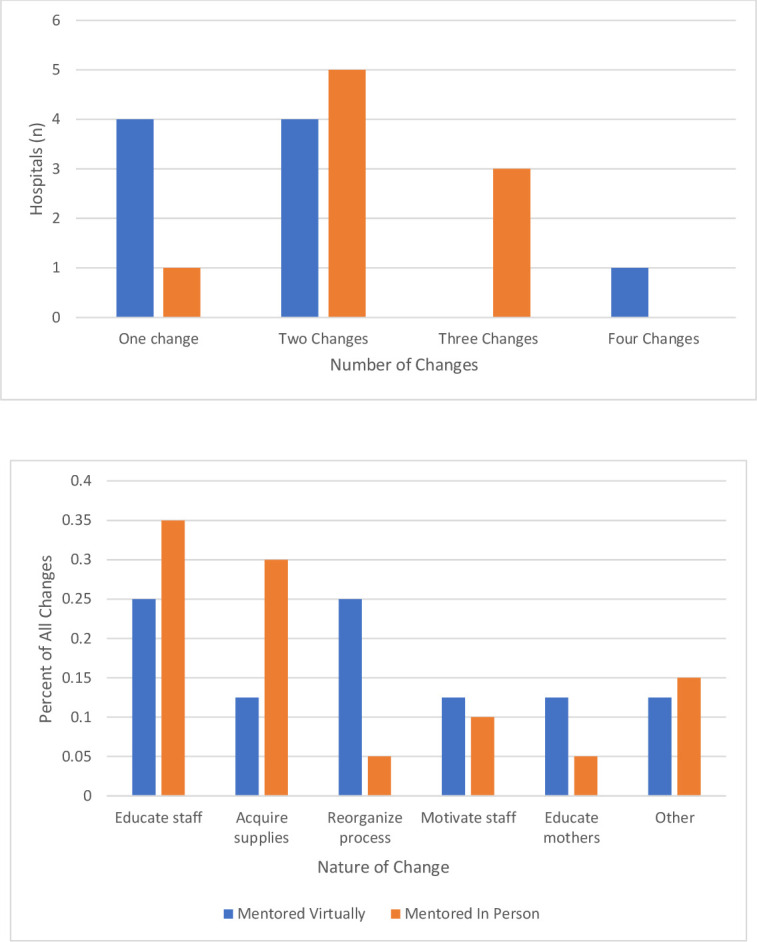

Progress with QI methodology

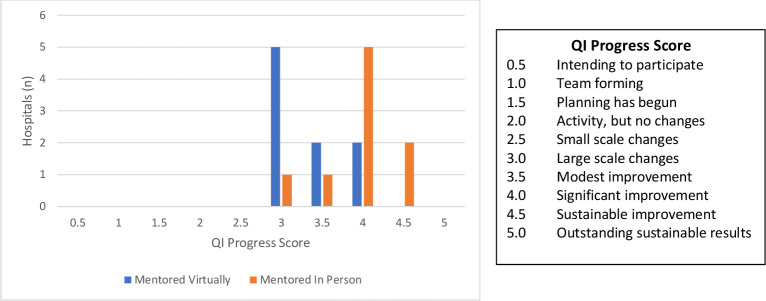

Hospitals implemented one to four changes during the initiative (figure 2, top panel). In general, hospitals receiving in-person mentorship implemented two or three changes, whereas hospitals receiving virtual mentorship implemented one or two. Educating staff was the most common change implemented across the cohort (figure 2, bottom panel). During the 5 months following QI training, all teams implemented a large-scale intervention (one that affected all providers and patients in the labour and delivery ward) targeting the process or outcome they had selected for improvement (figure 3). Two teams were able to progress to sustaining improvement through more permanent or extensive changes in the system.

Figure 2.

Data describing the number of changes (top) and nature of changes (bottom) implemented by hospitals in the initiative.

Figure 3.

QI progress score of hospitals in the initiative. Scores indicate the progress at each hospital during the 5 months following QI training. QI, quality improvement.

Contextual factors affecting QI

Two to six individuals from each hospital responded to the MUSIQ Survey. Median domain scores for the entire cohort indicate that hospital respondents ‘agreed’ that contextual factors predicting QI success within the domain of the QI team were accessible for their QI work (online supplemental table 3). Respondents ‘somewhat agreed’ to ‘agreed’ that factors predicting QI success were accessible within the domain of the delivery room; for all other domains (support, hospital, environment), respondents ‘somewhat agreed.’

Discussion

The EPS QI Initiative demonstrates a successful, pragmatic approach to conducting mentored, facility-level QI in low-resource settings. Hospitals in the initiative demonstrated that they could successfully engage in QI by implementing at least one large-scale intervention in their labour and delivery ward with the support of QI training and a mentor. We noted a number of strongly distinguishing constructs as described in the CFIR that may have contributed to the success of this initiative. These constructs included elements of the process, namely external change agents and reflecting and evaluating, and elements of the intervention, namely adaptability and complexity.26

External change agents

External QI mentors supporting the novice teams in this cohort were key to the overall success of the initiative, reinforcing the wealth of literature on the importance of mentorship for QI programmes in LMICs.38–44 The dose and nature of mentoring encounters may have been affected by a virtual versus in-person approach and, in turn, could have affected QI progress, though definitive conclusions cannot be drawn from this study. The approach to external change agents in this initiative was pragmatic, training healthcare workers with relatively little prior QI experience to become mentors. While these mentors spent the majority of in-person encounters coaching on the QI process or encouraging the team, they were more likely to focus on assessment of progress in virtual encounters. This difference may relate to the challenges of interacting virtually; however, it is unclear whether the differences between virtual and in-person mentorship encounters would have been smaller if more experienced mentors had supported the teams. Given the high cost of in-person mentorship noted in the literature, virtual mentorship remains an attractive approach for scale-up in low-resource settings and deserves further evaluation.45

Reflecting and evaluating

Reflecting on and evaluating progress with a QI project through the provision of data in visual run charts may have been key to the overall success of the initiative. The importance of real-time data feedback in QI interventions in LMICs has been previously described.46 During this initiative, the data collector at each hospital received a small monthly stipend to support their data collection efforts. Additionally, this initiative required a full-time data manager at the EPS who assembled monitoring reports for the facilities using data downloaded from REDCap and a purpose-designed Excel template. This data-driven approach, although simple, may have motivated teams to improve their care. However, we recognise that the method of reflecting and evaluating used in this initiative, namely internal continuous data collection paired with external production of run charts, may not be sustainable in many low-resource settings. The large amount of data collection required for a continuous monitoring and evaluation approach, particularly in the absence of electronic medical records, is burdensome. Furthermore, inaccurate documentation of care in the medical record remains a barrier to data collection in many low-resource settings. Many of the teams in this initiative, challenged by inaccurate documentation of processes of care, invested time getting buy-in from their colleagues to ensure accurate data collection for the purposes of improvement. This experience further supports the need for data quality assessments as a precursor to data-driven QI interventions.21 Lean approaches to data collection that may be more realistic in low-resource settings, such as purposive sampling across a wide range of conditions, are an alternative to support data-driven QI work.47 Additionally, QI work in LMICs may benefit from programmes that generate run charts automatically from electronic data.

Adaptability

The QI approach of locally derived systems solutions to improve newborn care and outcomes may have been key to this initiative’s success. Adaptation to reflect local context has been previously shown to improve both adoption and sustainability.48 Two-thirds of the teams in the initiative demonstrated at least modest improvement through implementation of solutions specific to their hospital. The process or outcome that was the focus of each QI project was self-selected by the team based on their identification of a gap in quality and particular interest in closing that gap. The adaptability of this initiative included allowing for the selection of a gap in quality that was not part of the key indicators being monitored. Although this adaptability may have heightened the motivation of teams to invest in QI work, comparative evaluation of QI success across teams with disparate projects was a challenge. As an alternative, we evaluated their progress with QI methodology using an adapted Institute for Healthcare Improvement Scale. This adapted scale has not been validated. To our knowledge, there are few methods that have been validated to rate QI teams on their progress outside of a determination of improvement in the process or outcome selected for their project. Tools to rate QI progress, particularly in LMIC settings, are needed to support research focused on implementation of QI.

Complexity

The basic steps of QI taught in the workshop, and supported by the QI guide, were intuitive, relatively easy to apply and largely free of QI jargon. This simplified approach to QI methodology may have been key to the teams’ progress in our initiative.

Teams were encouraged to choose ‘low-hanging fruit’ in order to establish early success. Most chose a simple process of care that was applicable to all newborns as the subject of their project. It is unclear if the pragmatic strategy employed in this initiative would be effective in addressing more complex outcomes such as stillbirth or mortality. These outcomes likely require more difficult and larger systems changes that would be challenging for a novice QI team to implement. Additionally, the teams in both groups commonly resorted to education as the change they implemented, an observation consistent with literature on novice QI teams.49 Education is a necessary but often insufficient intervention to effect lasting systems changes.

Context as evaluated by MUSIQ

We used MUSIQ, a tool originally designed and evaluated in high-resource settings, to evaluate contextual factors that may have affected success in this initiative. MUSIQ has only recently been applied to LMIC settings.50–52 Results from the EPS QI Initiative suggest that QI support in hospitals in LMICs may be less available than in high-resource settings. For example, respondents to the MUSIQ Survey in our initiative more often reported that they somewhat agreed they had access to QI support in several domains, whereas respondents in high-income countries commonly totally agreed or agreed in these same domains.36 In addition, wide IQRs in our cohort for some domains suggest considerable variability in the hospital support and environment for QI. Despite this variability, we could not draw conclusions regarding the influence of specific contextual factors on QI success in the participant hospitals. Nevertheless, it is important to understand context and causality in QI initiatives, and it is possible that an instrument such as MUSIQ that is designed for use in high-resource health systems did not transfer well to the Ethiopian context despite our adaptations. Future research should continue to develop methods for strengthening data collection as well as dealing with flawed, uncertain, proximate and sparse data.53

Limitations

While this study allows us to explore two pragmatic approaches to mentored QI in LMICs, we cannot draw conclusions regarding the impact of mentorship on QI success in this small cohort of hospitals. The non-randomised assignment of mentorship, while practical for mentor travel during programme implementation, also limits the extent to which we can separate the role of mentorship from that of facility characteristics in QI success. Virtual mentorship, although novel, was introduced during programme implementation, when it became obvious during QI training that teams might be at high risk of failure without external support. Given this late addition, and its restriction to phone calls because of the available technology, it is possible that more rigorous virtual mentorship with video capability would have produced different results. During the QI workshop, we discovered that participants' English proficiency was weaker than we had anticipated. QI teams may therefore have made less use of the QI guide because it was written in English. Two hospitals estimated their baseline data because medical record documentation was grossly inaccurate. It is possible these QI teams were motivated to underestimate the quality of their care in order to demonstrate greater improvement. Interpretation of the QI progress scores reported in this study is limited by the method used to assign them (two independent reviewers, with discrepancies resolved by consensus). Future studies could strengthen this scoring system by having blinded, external reviewers assign scores, with discrepancies adjudicated. Finally, the short follow-up period does not allow us to address the sustainability of QI. Future studies should address the sustainability of facility-driven QI across multiple projects and as external support decreases while QI teams gain experience.

Conclusion

QI methodology was successfully implemented in this cohort of hospitals in low-resource settings with the support of education in QI paired with external mentorship. Mentoring appears to be essential for QI progress, and further research is needed on the relative costs and effectiveness of different mentoring approaches. This pragmatic approach to facility-based QI may be a scalable strategy for improving newborn care and outcomes. Tools to evaluate both progress with QI methodology and the contextual factors relevant to QI success in low-resource settings need to be developed and validated. Future work should also examine whether pragmatic, lean QI strategies improve important outcomes that have complex antecedents.

Acknowledgments

We would like to acknowledge the three mentors who served as external change agents for this initiative: Azeb Sibhatu, Megerssa Kumera and Fikirte Tilahun. We would also like to acknowledge Tekleab Mekbib for his translation of the MUSIQ Survey, and Sara Berkelhamer, Kate McHugh and Renate Savich for their facilitation of the June workshop which began this initiative.

Footnotes

Contributors: JP developed the project design, provided oversight during the execution of the project, assisted with data analyses and wrote the initial draft of the manuscript. BW assisted with development of the project design, recruited sites, provided in-country oversight during the execution of the project, assisted with data analyses and reviewed the manuscript. DJ assisted with the provision of data during the conduct of the project and with data analyses, and reviewed the manuscript. AC assisted with data analyses, particularly analyses of the MUSIQ data, and reviewed the manuscript. RR assisted with development of the data analytic plan and with data analyses, and with writing and reviewing the manuscript. CB assisted in the development of the project design, provided oversight during the execution of the project, and assisted with data analyses and writing of the manuscript.

Funding: This work was supported by a grant (#50008) from the Laerdal Foundation, Stavanger, Norway.

Competing interests: None declared.

Patient consent for publication: Not required.

Ethics approval: The University of North Carolina at Chapel Hill Institutional Review Board exempted this study from review.

Provenance and peer review: Not commissioned; externally peer reviewed.

Supplemental material: This content has been supplied by the author(s). It has not been vetted by BMJ Publishing Group Limited (BMJ) and may not have been peer-reviewed. Any opinions or recommendations discussed are solely those of the author(s) and are not endorsed by BMJ. BMJ disclaims all liability and responsibility arising from any reliance placed on the content. Where the content includes any translated material, BMJ does not warrant the accuracy and reliability of the translations (including but not limited to local regulations, clinical guidelines, terminology, drug names and drug dosages), and is not responsible for any error and/or omissions arising from translation and adaptation or otherwise.

References

- 1. UNICEF. Maternal and newborn health disparities: Ethiopia. 2016: 1–8.

- 2. Survive & Thrive Global Development Alliance. Available: https://surviveandthrive.org/Pages/default.aspx [Accessed 15 Jan 2019].

- 3. Helping Babies Survive. Available: https://www.aap.org/en-us/advocacy-and-policy/aap-health-initiatives/helping-babies-survive/Pages/Our-Programs.aspx [Accessed 09 Jan 2019].

- 4. Helping Babies Breathe. American Academy of Pediatrics. Available: http://www.helpingbabiesbreathe.org

- 5. Niermeyer S. From the Neonatal Resuscitation Program to Helping Babies Breathe: global impact of educational programs in neonatal resuscitation. Semin Fetal Neonatal Med 2015;20:300–8. doi:10.1016/j.siny.2015.06.005

- 6. Goudar SS, Somannavar MS, Clark R, et al. Stillbirth and newborn mortality in India after Helping Babies Breathe training. Pediatrics 2013;131:e344–52. doi:10.1542/peds.2012-2112

- 7. Msemo G, Massawe A, Mmbando D, et al. Newborn mortality and fresh stillbirth rates in Tanzania after Helping Babies Breathe training. Pediatrics 2013;131:e353–60. doi:10.1542/peds.2012-1795

- 8. Bellad RM, Bang A, Carlo WA, et al. A pre-post study of a multi-country scale up of resuscitation training of facility birth attendants: does Helping Babies Breathe training save lives? BMC Pregnancy Childbirth 2016;16:222. doi:10.1186/s12884-016-0997-6

- 9. Kc A, Wrammert J, Clark RB, et al. Reducing perinatal mortality in Nepal using Helping Babies Breathe. Pediatrics 2016;137:e20150117. doi:10.1542/peds.2015-0117

- 10. Mduma E, Ersdal H, Svensen E, et al. Frequent brief on-site simulation training and reduction in 24-h neonatal mortality--an educational intervention study. Resuscitation 2015;93:1–7. doi:10.1016/j.resuscitation.2015.04.019

- 11. Dol J, Campbell-Yeo M, Murphy GT, et al. The impact of the Helping Babies Survive program on neonatal outcomes and health provider skills: a systematic review. JBI Database System Rev Implement Rep 2018;16:701–37. doi:10.11124/JBISRIR-2017-003535

- 12. Ehret DY, Patterson JK, Bose CL. Improving neonatal care: a global perspective. Clin Perinatol 2017;44:567–82. doi:10.1016/j.clp.2017.05.002

- 13. Kamath-Rayne BD, Thukral A, Visick MK, et al. Helping Babies Breathe, second edition: a model for strengthening educational programs to increase global newborn survival. Glob Health Sci Pract 2018;6:538–51. doi:10.9745/GHSP-D-18-00147

- 14. Kieny M-P, Evans TG, Scarpetta S. Delivering quality health services: a global imperative for universal coverage. Washington, D.C.: World Bank Group, 2018.

- 15. Hirschhorn L, Ramaswamy R. Quality improvement in low- and middle-income countries. In: Johnson J, Sollecito W, eds. Continuous quality improvement in health care. 5th ed. Burlington, MA: Jones & Bartlett Learning, 2018: 297–311.

- 16. Singh K, Brodish P, Speizer I, et al. Can a quality improvement project impact maternal and child health outcomes at scale in northern Ghana? Health Res Policy Syst 2016;14:45. doi:10.1186/s12961-016-0115-2

- 17. Horwood CM, Youngleson MS, Moses E, et al. Using adapted quality-improvement approaches to strengthen community-based health systems and improve care in high HIV-burden sub-Saharan African countries. AIDS 2015;29 Suppl 2:S155–64. doi:10.1097/QAD.0000000000000716

- 18. Magge H, Anatole M, Cyamatare FR, et al. Mentoring and quality improvement strengthen integrated management of childhood illness implementation in rural Rwanda. Arch Dis Child 2015;100:565–70. doi:10.1136/archdischild-2013-305863

- 19. Marx Delaney M, Maji P, Kalita T, et al. Improving adherence to essential birth practices using the WHO Safe Childbirth Checklist with peer coaching: experience from 60 public health facilities in Uttar Pradesh, India. Glob Health Sci Pract 2017;5:217–31. doi:10.9745/GHSP-D-16-00410

- 20. Mbonye MK, Burnett SM, Burua A, et al. Effect of integrated capacity-building interventions on malaria case management by health professionals in Uganda: a mixed design study with pre/post and cluster randomized trial components. PLoS One 2014;9:e84945. doi:10.1371/journal.pone.0084945

- 21. Wagenaar BH, Hirschhorn LR, Henley C, et al. Data-driven quality improvement in low- and middle-income country health systems: lessons from seven years of implementation experience across Mozambique, Rwanda, and Zambia. BMC Health Serv Res 2017;17:830. doi:10.1186/s12913-017-2661-x

- 22. National Academies of Sciences, Engineering, and Medicine. Improving quality of care in low- and middle-income countries: workshop summary. Washington, DC: The National Academies Press, 2015.

- 23. Bardfield J, Agins B, Akiyama M, et al. A quality improvement approach to capacity building in low- and middle-income countries. AIDS 2015;29 Suppl 2:S179–86. doi:10.1097/QAD.0000000000000719

- 24. World Health Organization. Coaching for quality improvement: coaching guide. New Delhi: World Health Organization, Regional Office for South-East Asia, 2018.

- 25. Bose C, Hermida J, Breads J. Improving care of mothers and babies. American Academy of Pediatrics and University Research Co., LLC, 2016.

- 26. Damschroder LJ, Aron DC, Keith RE, et al. Fostering implementation of health services research findings into practice: a consolidated framework for advancing implementation science. Implement Sci 2009;4:50. doi:10.1186/1748-5908-4-50

- 27. Arabi AME, Ibrahim SA, Ahmed SE, et al. Skills retention in Sudanese village midwives 1 year following Helping Babies Breathe training. Arch Dis Child 2016;101:439–42. doi:10.1136/archdischild-2015-309190

- 28. Harris PA, Taylor R, Minor BL, et al. The REDCap Consortium: building an international community of software platform partners. J Biomed Inform 2019;95:103208. doi:10.1016/j.jbi.2019.103208

- 29. Harris PA, Taylor R, Thielke R, et al. Research electronic data capture (REDCap)--a metadata-driven methodology and workflow process for providing translational research informatics support. J Biomed Inform 2009;42:377–81. doi:10.1016/j.jbi.2008.08.010

- 30. Perla RJ, Provost LP, Murray SK. The run chart: a simple analytical tool for learning from variation in healthcare processes. BMJ Qual Saf 2011;20:46–51. doi:10.1136/bmjqs.2009.037895

- 31. Wagner EH, Glasgow RE, Davis C, et al. Quality improvement in chronic illness care: a collaborative approach. Jt Comm J Qual Improv 2001;27:63–80. doi:10.1016/S1070-3241(01)27007-2

- 32. Godfrey MM, Andersson-Gare B, Nelson EC, et al. Coaching interprofessional health care improvement teams: the coachee, the coach and the leader perspectives. J Nurs Manag 2014;22:452–64. doi:10.1111/jonm.12068

- 33. Institute for Healthcare Improvement. Assessment scale for collaboratives, 2011. Available: http://www.ihi.org/resources/Pages/Tools/AssessmentScaleforCollaboratives.aspx [Accessed 06 May 2020].

- 34. Kaplan HC, Provost LP, Froehle CM, et al. The Model for Understanding Success in Quality (MUSIQ): building a theory of context in healthcare quality improvement. BMJ Qual Saf 2012;21:13–20. doi:10.1136/bmjqs-2011-000010

- 35. Griffin A, McKeown A, Viney R, et al. Revalidation and quality assurance: the application of the MUSIQ framework in independent verification visits to healthcare organisations. BMJ Open 2017;7:e014121. doi:10.1136/bmjopen-2016-014121

- 36. Kaplan HC, Froehle CM, Cassedy A, et al. An exploratory analysis of the Model for Understanding Success in Quality. Health Care Manage Rev 2013;38:325–38. doi:10.1097/HMR.0b013e3182689772

- 37. Vreeman RC, McHenry MS, Nyandiko WM. Adapting health behavior measurement tools for cross-cultural use. J Integr Psychol Ther 2013;1:2. doi:10.7243/2054-4723-1-2

- 38. Manzi A, Nyirazinyoye L, Ntaganira J, et al. Beyond coverage: improving the quality of antenatal care delivery through integrated mentorship and quality improvement at health centers in rural Rwanda. BMC Health Serv Res 2018;18:136. doi:10.1186/s12913-018-2939-7

- 39. Werdenberg J, Biziyaremye F, Nyishime M, et al. Successful implementation of a combined learning collaborative and mentoring intervention to improve neonatal quality of care in rural Rwanda. BMC Health Serv Res 2018;18:941. doi:10.1186/s12913-018-3752-z

- 40. Horwood C, Butler L, Barker P, et al. A continuous quality improvement intervention to improve the effectiveness of community health workers providing care to mothers and children: a cluster randomised controlled trial in South Africa. Hum Resour Health 2017;15:39. doi:10.1186/s12960-017-0210-7

- 41. Stringer JSA, Chisembele-Taylor A, Chibwesha CJ, et al. Protocol-driven primary care and community linkages to improve population health in rural Zambia: the Better Health Outcomes through Mentoring and Assessment (BHOMA) project. BMC Health Serv Res 2013;13 Suppl 2:S7. doi:10.1186/1472-6963-13-S2-S7

- 42. Ndayisaba A, Harerimana E, Borg R, et al. A clinical mentorship and quality improvement program to support health center nurses manage type 2 diabetes in rural Rwanda. J Diabetes Res 2017;2017:1–10. doi:10.1155/2017/2657820

- 43. Anatole M, Magge H, Redditt V, et al. Nurse mentorship to improve the quality of health care delivery in rural Rwanda. Nurs Outlook 2013;61:137–44. doi:10.1016/j.outlook.2012.10.003

- 44. Ajeani J, Mangwi Ayiasi R, Tetui M, et al. A cascade model of mentorship for frontline health workers in rural health facilities in Eastern Uganda: processes, achievements and lessons. Glob Health Action 2017;10:1345497. doi:10.1080/16549716.2017.1345497

- 45. Manzi A, Mugunga JC, Nyirazinyoye L, et al. Cost-effectiveness of a mentorship and quality improvement intervention to enhance the quality of antenatal care at rural health centers in Rwanda. Int J Qual Health Care 2019;31:359–64. doi:10.1093/intqhc/mzy179

- 46. Webster PD, Sibanyoni M, Malekutu D, et al. Using quality improvement to accelerate highly active antiretroviral treatment coverage in South Africa. BMJ Qual Saf 2012;21:315–24. doi:10.1136/bmjqs-2011-000381

- 47. Perla RJ, Provost LP, Murray SK. Sampling considerations for health care improvement. Qual Manag Health Care 2014;23:268–79. doi:10.1097/QMH.0000000000000042

- 48. Manzi A, Hirschhorn LR, Sherr K, et al. Mentorship and coaching to support strengthening healthcare systems: lessons learned across the five Population Health Implementation and Training partnership projects in sub-Saharan Africa. BMC Health Serv Res 2017;17:831. doi:10.1186/s12913-017-2656-7

- 49. Taylor MJ, McNicholas C, Nicolay C, et al. Systematic review of the application of the plan-do-study-act method to improve quality in healthcare. BMJ Qual Saf 2014;23:290–8. doi:10.1136/bmjqs-2013-001862

- 50. Eboreime EA, Nxumalo N, Ramaswamy R, et al. Strengthening decentralized primary healthcare planning in Nigeria using a quality improvement model: how contexts and actors affect implementation. Health Policy Plan 2018;33:715–28. doi:10.1093/heapol/czy042

- 51. Reed J, Ramaswamy R, Parry G. Context matters: adapting the Model for Understanding Success in Quality Improvement (MUSIQ) for low and middle income countries. Implement Sci 2017;12.

- 52. Zamboni K, Baker U, Tyagi M, et al. How and under what circumstances do quality improvement collaboratives lead to better outcomes? A systematic review. Implement Sci 2020;15:27. doi:10.1186/s13012-020-0978-z

- 53. Wolpert M, Rutter H. Using flawed, uncertain, proximate and sparse (FUPS) data in the context of complexity: learning from the case of child mental health. BMC Med 2018;16:82. doi:10.1186/s12916-018-1079-6

Supplementary Materials

bmjoq-2020-000927supp001.pdf (263.5KB, pdf)