Abstract

Group decisions can outperform the choices of the best individual group members. Previous research suggested that optimal group decisions require individuals to communicate their confidence levels explicitly (e.g., verbally). Our study addresses the untested hypothesis that implicit communication using a sensorimotor channel (haptic coupling) may afford optimal group decisions, too. We report that haptically coupled dyads solve a perceptual discrimination task more accurately than their best individual members, and five times faster than dyads using explicit communication. Furthermore, our computational analyses indicate that the haptic channel affords implicit confidence sharing. We found that dyads take leadership over the choice and communicate their confidence in it by modulating both the timing and the force of their movements. Our findings may pave the way to negotiation technologies using fast sensorimotor communication to solve problems in groups.

Subject terms: Human behaviour, Psychology

Introduction

We often make important decisions in groups, such as when we decide on a travel destination with a group of friends or peer-review papers. Group decisions can sometimes outperform the choices of the best individual group members; for example, in logical problems1, numerical2 or perceptual tasks3.

There is strong consensus that effective group decisions require group members to share their degree of confidence in their individual choices3–8. This allows weighting individual choices according to their relative confidence levels, following principles of optimal (Bayesian) multisensory integration9–11. It has been assumed so far that explicit communicative channels, such as verbal communication3 or visual confidence reports4, are required to share confidence levels – and more broadly, negotiate optimal decisions12. This idea is in keeping with a long tradition in communication theory and cognitive psychology that emphasises the importance of communicating intentions and metacognitive confidence levels explicitly (e.g., verbally)13,14.

However, during ecologically realistic interactions, dyads also use implicit, sensorimotor communication channels to improve coordination and achieve joint goals15,16. For example, during joint grasping or joint pressing tasks, dyads modulate (e.g., amplify) the kinematics of their finger and arm movements to make the trajectory of their movements less variable and hence more predictable17, to make their intentions (e.g., what object they intend to grasp and when) easier to infer by co-actors18–23, or to dynamically negotiate leader-follower roles24–26. Moreover, in the same tasks, participants tend to implicitly imitate each other’s actions27,28, a mechanism suggested to promote group affiliation29. During joint pulling tasks, haptically coupled dyads amplify their force to improve their coordination30. As a result, sensorimotor communication during jointly executed tasks can improve performance and the quality of execution31–39. This suggests the untested hypothesis that implicit communication based on sensorimotor (e.g., haptic) channels, which is faster and cheaper in cognitive load than explicit communication, may be sufficient to optimize group decisions and share confidence levels.

To test this hypothesis, we adapted a previous task designed to study optimal group decisions using verbal communication3, but we allowed participants to communicate only via a sensorimotor (haptic) channel. In our study, dyads (pairs of individuals) make a series of individual decisions and then, if they disagree, group (consensus) decisions about which of two sequentially presented stimuli contains an oddball target. During the group decisions, the dyads control coupled haptic devices with one degree of freedom to jointly move the end effectors towards one of two (left or right) extreme positions, corresponding to their two choices.

We report three main findings. First, we show that haptic communication allows dyads to optimize group decisions and outperform the accuracy of individual participants (having similar sensitivity levels), akin to consensus reached using verbal communication3—but five times faster. Second, our computational analysis indicates that the haptic channel affords sharing confidence levels during the group choice—albeit in an implicit form. Indeed, the same computational (Bayesian) scheme explains group choices using both explicit (verbal) and implicit (haptic) communication, in terms of (weighted) confidence sharing. Third, our analyses indicate that dyads take leadership over the choice and communicate their confidence in it by manipulating both the timing and force of their movements – hence exploiting the haptic channel in full to optimise their joint performance.

Results

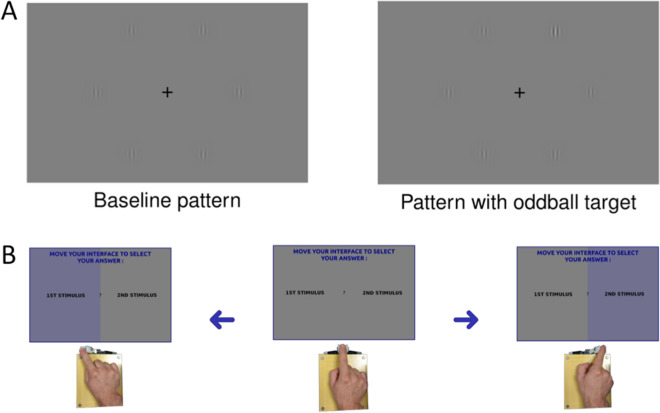

Pairs of participants (dyads) are presented with a series of two stimuli, one of which contains an oddball target. Each participant sees the (identical) stimuli on a different computer screen (Fig. 1). During the first, individual decision phase, each participant indicates which of the stimuli (first or second) contains the oddball target by moving their interface to the left or right response area. Each participant controls his or her cursor independently, using a haptic device. In this phase, the devices of the two participants are not coupled and participants cannot communicate. If the individual decisions are identical, the trial ends. If they differ, a second, consensus decision phase begins, in which participants make the same decision as above but jointly control the cursor trajectory (i.e., the cursor trajectory is an average of the individual trajectories). Unlike in the first phase, the participants’ haptic devices are coupled and permit sensing the amount of force the co-actor applies to his or her device.

Figure 1.

Experimental setup. (A) Example experimental stimuli, without (left) or with (right) an oddball target. (B) Graphical illustration of the (left-right) decisions using the haptic interface. Participants are presented with a choice between the first and second stimulus (center panel) and have to move the haptic interface to the left (left panel) or right (right panel). The setup is the same for both individual and group decisions; but while during individual decisions the haptic interfaces of the two participants are not connected, they are connected during group decisions. See the main text for details.

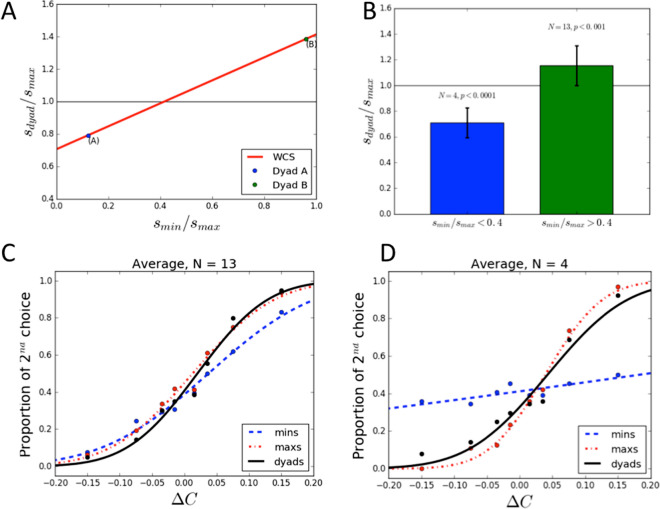

Haptic communication optimizes group decisions like explicit communication, but is much faster

We fitted the response data using the weighted confidence sharing (WCS) model, which successfully explained group decisions using verbal communication3. The WCS model assumes that group decisions weight individual choices according to their relative confidence levels. It makes the theoretical prediction that when the ratio of sensitivities of dyad members is greater than 0.4 (i.e., participants have similar sensitivities), the dyad will outperform each individual (Fig. 2A). The opposite happens if the ratio is lower than 0.4 (i.e., participants have different sensitivities). This prediction was confirmed: dyads whose members had similar sensitivities (ratio above 0.4) performed significantly better than their best members (t(13) = 3.94, p < 0.001), and dyads whose members had different sensitivities (ratio below 0.4) performed significantly worse than their best members (t(4) = −9.89, p < 0.0001) (Fig. 2B–D).

Figure 2.

Experimental results. (A) Theoretical prediction of the Weighted Confidence Sharing model (WCS model): dyads outperform their best individual members if members have similar sensitivities (sensitivity ratio above 0.4). (B) Performance of dyads whose members have similar (green) or different (blue) sensitivities. (C,D) Average psychometric functions of the worst (blue) and best (red) individual members, compared to the dyad (black). Dots are the average percentage of trials in which the second stimulus was chosen as the answer, for each contrast difference in the experiment. Lines are fitted cumulative Gaussian functions. Steeper slopes correspond to higher sensitivities.

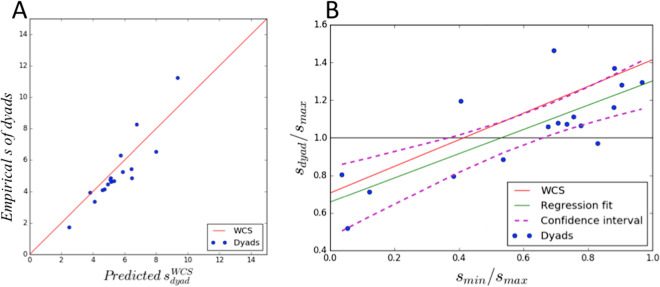

Furthermore, the slopes of the dyads’ psychometric functions and those predicted by the WCS were not significantly different. As predicted by the WCS, the sensitivity of the dyads whose members had similar (dissimilar) sensitivity levels was significantly higher (lower) than the relative sensitivity of dyad members (Fig. 3). We found a significant linear correlation between the improvement of the dyads and the relative sensitivities of their members, with slope (0.64 ± 0.13) and intercept close to those predicted by the WCS model (0.71 for both slope and intercept).

Figure 3.

Computational analyses. (A) Correlation between dyads’ sensitivities observed in the experiment and those predicted by the WCS model. Blue dots: data points (mean data of each dyad over the 8 blocks); red line: theoretical prediction of the WCS model. (B) Correlation between dyads’ sensitivity, relative to the best member of the dyad. Green and purple lines: linear regression model fitted on the data points and 95% confidence interval, respectively.

The results of our study align very well with those of Bahrami et al.3, who used explicit verbal communication, and the same WCS model applies equally well to both studies. However, group decision time was significantly shorter in our study (N = 850, mean = 2856 ms, std = 2022 ms) than in Bahrami et al.3 (N = 5 groups, mean = 13,860 ms, std = 3720 ms; Dan Bang, personal communication).

Dyad members implicitly communicate and share their confidence by manipulating both the speed and the force of their movements

The WCS model requires individual confidence levels to be shared (because they need to be integrated). This raises the question of how, in our study, participants (implicitly) communicate and negotiate through the haptic channel. Previous studies showed that dyadic sensorimotor tasks, such as tapping in synchrony or lifting objects together, promote the emergence of Leader and Follower roles—with the Leader determining (for example) the pace of joint tapping15. Furthermore, Leaders often modify their action kinematics in communicative ways, to signal their roles and convey relevant task information to Followers18,20,21. In keeping with this body of evidence, we asked whether participants exploit their kinematic and kinetic movement parameters (e.g., the speed and force of their movements) to become “Leaders” of the group decision and to implicitly communicate their leadership and confidence.

We considered various kinematic and kinetic movement parameters as predictors of group choices. First, we considered individuals’ initial decision time for each trial as a predictor of leadership in the same trial. The correlation between being the individual who moves first and being the Leader (i.e., determining the group choice) is 66.5% overall (67.7% and 63% for dyads having similar and different sensitivities, respectively).

As a further proxy for initiative and early commitment to the group decision, we designed a First Crossing (1C) predictor: the side (left or right) at which either participant’s handle first exits a “small zone” centred on the start position40. We parametrised the “small zone” around the start position using different thresholds (see Table 1). We found that the side selected by the first participant whose handle crosses a threshold of just 5% of the total distance to the target (i.e., a threshold of 0.05) already predicts 88.5% of the group choices.

Table 1.

Proportion of group choices correctly predicted by the 1C predictor.

| Threshold (fraction of the distance to the target) | 0.05 | 0.08 | 0.10 | 0.15 | 0.20 | 0.25 | 0.30 |
| --- | --- | --- | --- | --- | --- | --- | --- |
| % of correct predictions | 88.5 | 90.0 | 91.9 | 92.9 | 93.7 | 94.6 | 95.7 |

Finally, we considered two kinetic parameters available through the haptic device: peak force (i.e., the highest force applied by a subject on the interface) and mechanical work (i.e., the sum over time steps of the force applied on an interface multiplied by the displacement of that interface; see Methods). We found that both are good predictors of group choices (71.7% and 69% accuracy, respectively). Leaders were more active than Followers during the interaction, applying significantly higher peak forces (Leader: 0.75 N vs Follower: 0.43 N; t(676) = 9.71, p < 0.0001) and providing significantly higher mechanical work (Leader: 0.30 J vs Follower: −0.08 J; t(676) = 15.7, p < 0.0001). By contrast, Followers tended to apply negative mechanical work, effectively exerting some (small) resistance to the Leader’s motion (see refs. 41,42 for similar results on dyadic co-manipulation).

Leaders impose their pace on the group decision movements

Previous studies of sensorimotor communication reported that Followers tend to align to Leaders’ movements during joint tasks (see ref. 15 for a review). Accordingly, we asked whether Leaders imposed their pace on the group decision movements (and Followers adapted to it). We considered the ratio between the mean velocity of Leaders (VeloL) and Followers (VeloF) during the first part of the dyad movement (i.e., before the 1C threshold is crossed for the first time), which is arguably more important to reach consensus, and the mean velocity of the dyad (VeloD) during the second part of the dyad movement (i.e., after the threshold is crossed for the first time), which is necessary to complete the trial. We found VeloL/VeloD (1.0788) to be significantly smaller than VeloF/VeloD (1.1115) (N = 1866, t = −2.92, p = 0.0035, d = −0.056). The fact that the former ratio (VeloL/VeloD) is closer to 1 than the latter (VeloF/VeloD) indicates that the Leader is better able to impose his or her pace on the group decision movements.

Control analyses

To rule out the possibility that group decisions were made without negotiation, by simply following “who moves first (or pulls harder)”, we performed two control analyses, which compare individual and group decisions. First, we found group decision time (N = 850, mean = 2856 ms, std = 2022 ms) to be significantly longer than the decision time of individual group members (N = 4352, mean = 881 ms, std = 788 ms): t(850, 4352) = −23.84, p < 0.0001. This result holds true both for dyads of similar (t(626, 3328) = −23.42, p < 0.0001) and different (t(224, 1024) = −10.15, p < 0.0001) sensitivities. Second, we found group initiation time, as indexed by the time at which the group reaches the 1C threshold (i.e., the handle first exits the starting zone), to be significantly slower than the initiation time of group members, but only for a threshold of 0.05 and for dyads having similar sensitivities. By contrast, we found group initiation time to be significantly faster than the initiation time of group members for dyads having different sensitivities. These two control analyses reassuringly suggest that group decisions involve time-consuming negotiation, and that the slower initiation time of groups whose members have similar sensitivities may be conducive to better choices.

Discussion

Group decision making is an active area of research across behavioral sciences, psychology and neuroscience43, ecology44 and collective (or swarm) robotics45; but its dynamics and optimality principles are still incompletely known.

We show that sensorimotor (haptic) communication can optimise group decisions. Haptically coupled dyads perform significantly better than the best individual of the dyad, when the two individual members have similar visual sensitivities; but the opposite is true when the dyad members have different sensitivity levels. This group advantage was shown in tasks using explicit, verbal communication3. Here we demonstrate that implicit (haptic) channels can achieve the same results, at least in the joint decision investigated here.

In general, verbal communication can be much richer than implicit communication. However, in the context of this task, the specific information to be conveyed concerns (one’s belief about) the correct target. Both verbal and haptic channels can convey this information; but it is plausible that haptic information can convey it more precisely, i.e., with a better information/noise ratio. This speaks to the fact that explicit and implicit communication channels may have complementary benefits. Tasks that require sophisticated debate and diplomacy and have open-ended response options, such as legal proceedings or diplomatic negotiations, may be more difficult to address using implicit rather than explicit, verbal communication. On the other hand, for simpler tasks having clear response options that can be mapped to spatial locations, implicit communication may afford a faster but equally accurate consensus compared to explicit communication.

Indeed, in our study, consensus was reached (in most cases) in less than 3 seconds, whereas with verbal communication it required about 14 seconds3. Note that there was no time pressure either in our study or in those using explicit communication. Clearly, the comparison may seem unfair, as (compared to haptics) language is a much richer communication channel, and hence consensus and conventions may take time to arise. What is most interesting in this comparison is that implicit channels afford very fast group consensus, which is relevant when it is necessary to trade off richness and speed of communication, such as during situated group decisions and team sports.

Our results can be explained within an optimal multisensory integration framework9–11, which combines multiple sources of evidence and weights them in proportion to their confidence levels. Importantly, the same WCS model that incorporates the above Bayesian assumptions explains group decisions that use both explicit3 and implicit communication (this study), suggesting that the differences between the two may be less prominent than currently believed—at least for the choices considered here. The computational model further indicates confidence sharing as a key ingredient to optimize group decisions. This raises the question of how exactly dyads communicate their confidence levels through the haptic channel.

Our results show that co-actors share their confidence levels and optimise group decisions by synergistically manipulating the movement parameters that are available via the haptic interface, such as speed and force. We found initiative (as indexed by 1C) to be the most effective group choice predictor; indeed, the participant who takes the initiative and commits early to a decision often acquires leadership and determines the final choice. However, the fact that higher levels of activity and force afford accurate predictions of group choice indicates that both kinematic and kinetic parameters may be used in combination. Presumably, an effective sensorimotor communication strategy consists in taking initiative and then applying some force to maintain (and communicate) commitment to the choice and to impose a pace on the decision.

The success of such a sensorimotor communication strategy may be due to the fact that both speed and force of movement reliably signal confidence in addition to advancing the decision. Indeed, given that response time and confidence are inversely correlated46,47, making an early commitment is a reliable signal that one is confident about the decision. Exerting force can reliably signal one’s confidence, too. The parallel between force and confidence is made apparent by the recent finding that participants showing (sub-threshold) motor activation in their response effectors have significantly higher confidence (but not necessarily accuracy) in their choices48. These findings suggest that haptic interfaces provide efficient channels, such as speed and force, for implicit confidence sharing, which is key to optimal group decisions.

Our results have deep theoretical and technological implications. From a theoretical perspective, our findings run against the hypothesis that explicit communication is necessary to achieve optimal decisions or to communicate confidence, suggesting that classical theories of communication should be expanded to consider sensorimotor exchanges more fully13,14,49–54. From a technological perspective, our results can pave the way to the development of novel negotiation and decision support tools that exploit fast sensorimotor channels to facilitate and improve group decisions. While we focused on a visuo-haptic interface, similar results may be obtained using other (e.g., auditory) channels, to the extent that they afford rich sensorimotor communication. Our study reveals that crucial information for accurate group decisions (one’s own confidence about the decision) can be conveyed by modulating speed and force of movement. It remains to be studied whether using different sensorimotor channels or interfaces offers the same or different ways to communicate confidence and other important information. Another challenge for the future consists in expanding the scope of sensorimotor technologies, to afford more complex negotiation dynamics that may be required to reach consensus beyond simple tasks.

Methods

Participants

Thirty-six participants (11 women) were recruited for this experiment amongst interns, master’s degree students, PhD students, post-docs and engineers of the Sorbonne Université and paired in dyads (8 M-M, 9 M-F, 1 F-F). Dyads were formed by pairing participants who were not friends or collaborators, to avoid possible influences of previous interactions on task performance and leadership. Participants were free of any known psychiatric or neurological symptoms, uncorrected visual or auditory deficits and recent use of any substance that could impede concentration. They were all right-handed. Their mean age was 26.3 (SD = 5.25). This research was reviewed and approved by the High Council for Research and Higher Education (HCERES) institutional ethics committee. The research was performed in accordance with the relevant guidelines and regulations. Informed consent was obtained from each participant. One dyad had to be excluded because one of the members systematically defaulted to her partner’s choice in the second phase. The analysis was thus conducted on 34 participants.

Procedure

Dyad members are in the same testing room, seated side by side, and each has a computer screen. An opaque curtain is positioned between them in order to prevent them from seeing each other. Participants are instructed to refrain from trying to communicate orally with their partners for the duration of the experiment. Headphones playing pink noise are used to prevent the subjects from hearing each other or potential audio cues in the testing room. Visual feedback is provided to the participants through individual displays.

Each subject controls a custom, one degree-of-freedom haptic interface, which uses two MAXON DC Motors (RE65-250W), connected to an 80 mm handle for actuation and a magnetic encoder (CUI INC AMT11)55. The full design of the haptic interface is open source, available on GitHub at: github.com/LudovicSaintBauzel/teleop-controller-bbb-xeno.git.

Each experiment includes 8 blocks of 16 trials each. Subjects switch their positions after half the trials. Each trial proceeds as follows. First, the haptic interfaces are automatically centred and a warning message is displayed (1000 ms). Second, a black central fixation cross is displayed on each subject’s screen for a random duration (500–1000 ms).

The third phase is the individual decision phase. Two visual stimuli (6 Gabor patches displayed in a circle) are sequentially presented to both subjects, for 85 ms. A 1000 ms pause (grey screen, black fixation cross) is observed between the two stimuli. In either the first or second stimulus, one of the 6 patches has a slightly higher contrast (oddball target). The task objective is to determine whether the oddball target is in the first or second stimulus. Note that the oddball target can have 4 different levels of contrast compared to the baseline. The oddball target timing (first or second wave), position (one of the six patches) and contrast (one of four levels, corresponding to contrast differences of 1.5%, 3.5%, 7% and 15% from baseline) are randomized for each trial. The oddball timing and contrast levels were used as independent variables and the number of occurrences of each of their combinations was balanced over each block (each of the 8 combinations appears twice per block, for a total of 16 trials per block). After the presentation of the stimuli, both subjects must indicate their individual answer by moving the handle of the haptic interface towards the left (to select the first stimulus) or right (to select the second stimulus). In this phase, the positions of the haptic interfaces are independent, and each subject answers individually. After both subjects have answered, both answers are displayed to each subject. If they agree, feedback about the correct answer is given with both a color code (green for a correct answer, red for an incorrect one) and a symbol (green check mark for a correct answer, red cross for an incorrect one), and the trial ends. If they disagree, only their individual choices are shown and participants enter the group decision phase.
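The balanced design described above (2 oddball timings × 4 contrast levels × 2 repetitions = 16 trials per block) can be sketched as follows. This is only an illustrative sketch, not the authors' experiment code: the function name `make_block`, the dictionary keys and the use of a seeded random generator are assumptions, and the contrast values are the contrast differences listed in the Psychometric functions section.

```python
import random
from collections import Counter
from itertools import product

# Assumed values: contrast differences (in %) taken from the Psychometric
# functions section; the absolute baseline contrast is not modelled here.
CONTRAST_LEVELS = (1.5, 3.5, 7.0, 15.0)
INTERVALS = (1, 2)            # oddball in the first or second stimulus
N_PATCHES = 6                 # Gabor patches per stimulus
REPEATS_PER_COMBINATION = 2   # each timing x contrast pair appears twice


def make_block(rng):
    """Return one shuffled block of 16 trials, balanced over timing x contrast."""
    trials = []
    for interval, contrast in product(INTERVALS, CONTRAST_LEVELS):
        for _ in range(REPEATS_PER_COMBINATION):
            trials.append({
                "interval": interval,
                "contrast": contrast,
                # The oddball position is drawn at random, not balanced.
                "position": rng.randrange(N_PATCHES),
            })
    rng.shuffle(trials)
    return trials


block = make_block(random.Random(0))
counts = Counter((t["interval"], t["contrast"]) for t in block)
print(len(block), set(counts.values()))  # 16 trials, every combination twice
```

Only timing and contrast are balanced in this sketch, which mirrors the description of the independent variables; the oddball position is simply randomised on every trial.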

The fourth phase is the group decision phase. In this phase, haptic feedback is added to the interfaces: the teleoperation controller constrains the motions of the interfaces so that they are identical at all times. In this configuration, the interfaces’ positions are the same and the subjects have equal control over them. Furthermore, they can feel the force applied to the interfaces by each other. The subjects must jointly move the interfaces in order to indicate their final choice (left for first stimulus, right for second). The interfaces must remain stationary for one second in order to validate the common answer. To avoid conflicts being resolved by brute force, visual feedback about the current level of force applied by the dyad on the interfaces was displayed as a red bar graph. Participants were asked to keep the force below the maximum (full bar, corresponding to ~24 N).

Finally, after the group decision, feedback about the individual choices and the common decision is given to the subjects (CORRECT/WRONG). Feedback is color-coded: yellow for subject 1 on the left, blue for subject 2 on the right. Note that each feedback phase lasts a maximum of 10 seconds, but after 3 seconds participants can skip it by placing their fingers on the interface. At the end of the feedback, the graphical interface goes back to step 1, and the trials continue until the end of the experimental block.

Psychometric functions

Individual and dyadic psychometric functions are constructed by plotting the proportion of trials in which the oddball target was seen in the second wave of stimuli against the contrast difference at the oddball location (contrast in the second wave minus contrast in the first); see also3.
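As a minimal sketch of how the data points of such a psychometric function could be assembled, the snippet below groups trials by signed contrast difference and computes the proportion of "second stimulus" answers for each. The function name `psychometric_points` and the `(contrast_difference, chose_second)` trial format are hypothetical and chosen for illustration only.

```python
from collections import defaultdict


def psychometric_points(trials):
    """Map each signed contrast difference to the proportion of 'second' answers.

    `trials` is an iterable of (contrast_difference, chose_second) pairs, where
    contrast_difference is the contrast in the second wave minus the contrast
    in the first wave at the oddball location.
    """
    totals = defaultdict(int)
    second = defaultdict(int)
    for diff, chose_second in trials:
        totals[diff] += 1
        second[diff] += int(chose_second)
    return {diff: second[diff] / totals[diff] for diff in sorted(totals)}


# Toy data, invented for illustration (not taken from the experiment).
toy_trials = [
    (-15.0, False), (-15.0, False),
    (-1.5, True), (-1.5, False),
    (1.5, True), (1.5, False),
    (15.0, True), (15.0, True),
]
points = psychometric_points(toy_trials)
print(points)
```

The same function can be applied to individual answers or to the dyad's joint answers, since both are expressed as first-or-second choices.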

Examples of psychometric functions are shown in the main article. The dots correspond to the average proportion of trials in which the second stimulus was chosen as the answer, for each contrast difference (±1.5%, ±3.5%, ±7%, ±15%). Lines are the fitted cumulative Gaussian functions for each individual and dyad. The psychometric curves are fit to a cumulative Gaussian function whose parameters are bias (b) and variance ($\sigma^2$). These parameters are estimated by curve-fitting regression (Python SciPy curve_fit() function).

A participant with bias b and variance $\sigma^2$ would have a psychometric curve given by:

$$P(\Delta c) = H\left(\frac{\Delta c + b}{\sigma}\right) \tag{1}$$

with $\Delta c$ the contrast difference between the second and first stimuli, and H(z) the cumulative normal function.

The psychometric curve, $P(\Delta c)$, corresponds to the probability of reporting that the second stimulus had the higher contrast. Thus, a positive bias indicates an increased probability of saying that the second stimulus had higher contrast (and thus corresponds to a negative mean for the underlying Gaussian distribution).

Given the above definition of $P(\Delta c)$, the variance is related to the maximum slope of the psychometric curve, denoted s, via:

$$s = \frac{1}{\sqrt{2\pi\sigma^{2}}} \tag{2}$$

A steep slope indicates small variance and thus highly sensitive performance.
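The fitting step can be illustrated with a short sketch based on SciPy's `curve_fit`. It assumes that the cumulative Gaussian takes the form $H((\Delta c + b)/\sigma)$, which matches the sign convention for the bias described above, and that the maximum slope equals $1/(\sigma\sqrt{2\pi})$. The starting guess, the positivity bound on $\sigma$ and the synthetic noise-free data are illustrative choices, not the settings used in the study.

```python
import numpy as np
from scipy.optimize import curve_fit
from scipy.stats import norm


def psychometric(delta_c, bias, sigma):
    """Cumulative Gaussian: probability of choosing the second stimulus."""
    return norm.cdf((delta_c + bias) / sigma)


def fit_psychometric(delta_c, p_second):
    """Fit bias and sigma, and derive the maximum slope 1 / (sigma * sqrt(2*pi))."""
    (bias, sigma), _ = curve_fit(
        psychometric, delta_c, p_second,
        p0=(0.0, 5.0),                               # illustrative starting guess
        bounds=([-np.inf, 1e-6], [np.inf, np.inf]),  # keep sigma positive
    )
    slope = 1.0 / (np.sqrt(2.0 * np.pi) * sigma)
    return bias, sigma, slope


# Synthetic, noise-free data generated from known parameters (bias 0.5, sigma 4).
delta_c = np.array([-15.0, -7.0, -3.5, -1.5, 1.5, 3.5, 7.0, 15.0])
p_second = psychometric(delta_c, 0.5, 4.0)
bias_hat, sigma_hat, slope_hat = fit_psychometric(delta_c, p_second)
print(bias_hat, sigma_hat, slope_hat)
```

With real data, the proportions would come from the observed choices at each contrast difference, and the fitted slope would serve as the sensitivity estimate for each individual or dyad.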

Weighted Confidence Sharing (WCS) Model

We used the Weighted Confidence Sharing (WCS) model3 to fit our behavioral data. The WCS assumes that participants share their confidence and make a Bayes-optimal decision based on the ratio of the individual values. This permits inferring the dyad psychometric function from the psychometric functions of the dyad members, as follows:

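$$P_{dyad}(\Delta c) = H\left(\frac{\Delta c + b_{dyad}}{\sigma_{dyad}}\right) \tag{3}$$

with

$$\sigma_{dyad} = \frac{\sqrt{2}\,\sigma_1 \sigma_2}{\sigma_1 + \sigma_2} \tag{4}$$

and

$$b_{dyad} = \frac{\sigma_2 b_1 + \sigma_1 b_2}{\sigma_1 + \sigma_2} \tag{5}$$

where the subscripts 1 and 2 denote the two dyad members. Consequently, the slope of the dyad’s psychometric function can be calculated as:

$$s_{dyad} = \frac{s_1 + s_2}{\sqrt{2}} \tag{6}$$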

The WCS model predicts that the performance (sensitivity) of the dyad is superior to that of the best member if the sensitivities of the participants are similar. This can be appreciated by noting that if $s_{max}$ is the slope of the psychometric function of the best performing member of the dyad, and $s_{min}$ the slope of his/her partner’s psychometric function, we have:

$$s_{dyad} = \frac{s_{max} + s_{min}}{\sqrt{2}} \tag{7}$$

If we compare the performances of the dyad and of the best performing member we have:

$$\frac{s_{dyad}}{s_{max}} = \frac{1}{\sqrt{2}}\left(1 + \frac{s_{min}}{s_{max}}\right) \tag{8}$$

The WCS model makes the theoretical prediction that if the ratio of sensitivities of the dyad’s members is greater than 0.4 (i.e., participants have similar sensitivities), then the dyad will outperform each individual. The opposite happens if the ratio is lower than 0.4 (i.e., participants have different sensitivities). More formally, the following property holds: $s_{dyad} > s_{max}$ if $s_{min}/s_{max} > \sqrt{2} - 1 \approx 0.41$.
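The threshold of about 0.4 can be checked numerically with a small sketch. It assumes the WCS relation between slopes from ref. 3, namely that the dyad slope equals the sum of the two individual slopes divided by $\sqrt{2}$; the function names below are hypothetical.

```python
import math

# Sensitivity ratio above which the dyad is predicted to beat its best member.
SIMILARITY_THRESHOLD = math.sqrt(2.0) - 1.0   # about 0.414


def wcs_dyad_slope(s_a, s_b):
    """Dyad slope under the assumed WCS relation (s_a + s_b) / sqrt(2)."""
    return (s_a + s_b) / math.sqrt(2.0)


def collective_benefit(s_a, s_b):
    """Ratio of the predicted dyad slope to the slope of the better member."""
    return wcs_dyad_slope(s_a, s_b) / max(s_a, s_b)


# Values above 1 mean the dyad is predicted to outperform its best member.
for s_min in (0.3, 0.5, 0.9):
    print(s_min, round(collective_benefit(1.0, s_min), 3))
```

Under this assumption the benefit is a straight line in the sensitivity ratio, with slope and intercept both equal to $1/\sqrt{2} \approx 0.71$, which is the value against which the regression in the main text is compared.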

Our findings reported in the main article confirm the theoretical predictions of the WCS model and allow us to rule out the alternative models considered in ref. 3: the Coin Flip (CF) model, which considers that conflicts are decided by chance; the Behaviour and Feedback (BF) model, which considers that participants learn who is the most accurate group member and rely on his or her choice during group decisions; and the Direct Signal Sharing (DSS) model, which considers that dyads communicate the mean and standard deviation of each member’s sensory response. None of these alternative models would predict the pattern of responses that we observe in our data.

Note that, as reported in the main article, we found the slope of the linear regression fit of participants’ performance to be slightly lower than that predicted by the WCS model (although the difference does not reach significance). This finding can be explained with a small modification of the WCS model: adding a small bias towards the most skilled member of the dyad. Indeed, according to the WCS model, the dyad sensitivity improvement can be calculated as $s_{dyad}/s_{max} = (1 + s_{min}/s_{max})/\sqrt{2}$ (Eq. 8). If the dyad slightly over-weights the decision of the most skilled member, the resulting sensitivity will be shifted towards $s_{max}$:

| 9 |

with the relative weights.

This model would lead to an intercept similar to that of the WCS model, but with a lower slope, which would explain the pattern of results we obtain.

First crossing (1C) parameter

The 1C parameter is defined as the side on which the individual position of one of the two subjects first exits a small interval centred on the start position. The position data from the group decision phase are extracted and normalised so that positions are expressed relative to the middle starting position, with the left and right sides at opposite extremes. The threshold for the 1C calculations is then chosen as a fraction of the distance from the start position to the target.
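A minimal sketch of the 1C computation is given below. It assumes a normalisation in which the start position is 0, left is negative and right is positive, and in which the threshold is expressed as a fraction of the start-to-target distance; these conventions, the function names and the tie-breaking rule are assumptions made for illustration.

```python
def first_crossing_side(pos_a, pos_b, threshold):
    """Return 'left' or 'right' for the first handle leaving [-threshold, threshold].

    pos_a and pos_b are equally sampled, normalised position traces of the two
    participants (assumed convention: 0 = start, negative = left, positive =
    right). Returns None if neither trace leaves the zone. If both handles
    leave the zone at the same sample, participant A takes precedence.
    """
    for x_a, x_b in zip(pos_a, pos_b):
        for x in (x_a, x_b):
            if abs(x) > threshold:
                return "left" if x < 0 else "right"
    return None


def prediction_accuracy(predicted, chosen):
    """Fraction of trials in which the 1C side matches the final group choice."""
    hits = sum(p == c for p, c in zip(predicted, chosen))
    return hits / len(chosen)


# Toy traces, invented for illustration: A drifts left, B drifts right more slowly.
trace_a = [0.0, -0.02, -0.06, -0.20]
trace_b = [0.0, 0.01, 0.03, 0.10]
print(first_crossing_side(trace_a, trace_b, 0.05))
```

Repeating the computation for several thresholds and comparing the returned side with the final group choice would give accuracy figures of the kind reported in Table 1.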

Mechanical work parameter

The mechanical work of a subject is calculated as $W_i = \sum_k F_i^k \, (x_i^k - x_i^{k-1})$, i = 0, 1, where $F_i^k$ is the force applied on the interface i and $x_i^k$ is the position of the interface i at time step k.
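The sketch below shows one way the mechanical work could be computed from sampled force and position data. It assumes a simple backward-difference discretisation, in which the force at step k is multiplied by the displacement since step k − 1; the exact discretisation used in the study is not specified here, and the sample values are invented.

```python
def mechanical_work(forces, positions):
    """Discrete work: sum over k >= 1 of F[k] * (x[k] - x[k-1])."""
    if len(forces) != len(positions):
        raise ValueError("forces and positions must have the same length")
    return sum(
        forces[k] * (positions[k] - positions[k - 1])
        for k in range(1, len(positions))
    )


# Toy samples, invented for illustration (assumed units: newtons and metres).
positions = [0.00, 0.05, 0.10, 0.15]        # shared handle moving one way
leader_force = [1.0, 1.0, 1.0, 1.0]         # pushes along the motion
follower_force = [-0.2, -0.2, -0.2, -0.2]   # resists the motion

print(mechanical_work(leader_force, positions))
print(mechanical_work(follower_force, positions))
```

A participant who pushes along the direction of motion accumulates positive work, whereas one who resists the motion accumulates negative work, which is the pattern reported for Leaders and Followers.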

Author contributions

G.P., L.R., L.S.B. designed the research; L.R. conducted the research under the supervision of G.P. and L.S.B.; G.P., L.R. and L.S.B. wrote the article.

Funding

This research received funding from the European Union’s Horizon 2020 Framework Programme for Research and Innovation under the Specific Grant Agreement Nos. 785907 and 945539 (Human Brain Project SGA2 and SGA3) to GP, the European Research Council under the Grant Agreement No. 820213 (ThinkAhead) to GP and the Office of Naval Research (ONR), grant number N62909-19-1-2017 to GP.

Competing Interests

The authors declare no competing interests.

Footnotes

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1. Moshman D, Geil M. Collaborative reasoning: Evidence for collective rationality. Think. Reason. 1998;4:231–248. doi: 10.1080/135467898394148.

- 2. Bang, D. & Frith, C. D. Making better decisions in groups. R. Soc. Open Sci. 4 (2017). http://rsos.royalsocietypublishing.org/content/4/8/170193. http://rsos.royalsocietypublishing.org/content/4/8/170193.full.pdf.

- 3. Bahrami, B. et al. Optimally interacting minds. Science 329, 1081–1085 (2010). http://science.sciencemag.org/content/329/5995/1081. http://science.sciencemag.org/content/329/5995/1081.full.pdf.

- 4. Bahrami B, et al. What failure in collective decision-making tells us about metacognition. Philos. Trans. R. Soc. B. 2012;367:1350–1365. doi: 10.1098/rstb.2011.0420.

- 5. Fusaroli R, et al. Coming to terms: Quantifying the benefits of linguistic coordination. Psychol. Sci. 2012;23:931–939. doi: 10.1177/0956797612436816.

- 6. Haller SP, Bang D, Bahrami B, Lau JY. Group decision-making is optimal in adolescence. Sci. Rep. 2018;8:15565. doi: 10.1038/s41598-018-33557-x.

- 7. Koriat, A. When are two heads better than one and why? Science 336, 360–362 (2012). http://science.sciencemag.org/content/336/6079/360. http://science.sciencemag.org/content/336/6079/360.full.pdf.

- 8. Sorkin RD, Hays CJ, West R. Signal-detection analysis of group decision making. Psychol. Rev. 2001;108:183. doi: 10.1037/0033-295X.108.1.183.

- 9. Ernst MO, Banks MS. Humans integrate visual and haptic information in a statistically optimal fashion. Nature. 2002;415:429–433. doi: 10.1038/415429a.

- 10. Kording K, Wolpert D. Bayesian decision theory in sensorimotor control. Trends Cogn. Sci. 2006;10:319–326. doi: 10.1016/j.tics.2006.05.003.

- 11. Doya K, Ishii S, Pouget A, Rao RPN, editors. Bayesian Brain: Probabilistic Approaches to Neural Coding. 1. Cambridge: The MIT Press; 2007.

- 12. Navajas J, Niella T, Garbulsky G, Bahrami B, Sigman M. Aggregated knowledge from a small number of debates outperforms the wisdom of large crowds. Nat. Hum. Behav. 2018;2:126. doi: 10.1038/s41562-017-0273-4.

- 13. Metcalfe J. Metacognitive processes. In: Memory. Amsterdam: Elsevier; 1996. pp. 381–407.

- 14. Sperber D, Wilson D. Relevance: Communication and Cognition. New York: Wiley-Blackwell; 1995.

- 15. Pezzulo G, et al. The body talks: Sensorimotor communication and its brain and kinematic signatures. Phys. Life Rev. 2018;28:1–21. doi: 10.1016/j.plrev.2018.06.014.

- 16. Sebanz N, Bekkering H, Knoblich G. Joint action: Bodies and minds moving together. Trends Cogn. Sci. 2006;10:70–76. doi: 10.1016/j.tics.2005.12.009.

- 17. Vesper C, van der Wel RPRD, Knoblich G, Sebanz N. Making oneself predictable: Reduced temporal variability facilitates joint action coordination. Exp. Brain Res. 2011;211:517–530. doi: 10.1007/s00221-011-2706-z.

- 18. Candidi M, Curioni A, Donnarumma F, Sacheli LM, Pezzulo G. Interactional leader-follower sensorimotor communication strategies during repetitive joint actions. J. R. Soc. Interface. 2015;12:20150644. doi: 10.1098/rsif.2015.0644.

- 19. Curioni A, Minio-Paluello I, Sacheli LM, Candidi M, Aglioti SM. Autistic traits affect interpersonal motor coordination by modulating strategic use of role-based behavior. Mol. Autism. 2017;8:1–13. doi: 10.1186/s13229-017-0141-0.

- 20. Pezzulo G, Donnarumma F, Dindo H. Human sensorimotor communication: A theory of signaling in online social interactions. PLoS ONE. 2013;8:1–11. doi: 10.1371/journal.pone.0079876.

- 21. Sacheli LM, Tidoni E, Pavone E, Aglioti S, Candidi M. Kinematics fingerprints of leader and follower role-taking during cooperative joint actions. Exp. Brain Res. 2013;226:473–486. doi: 10.1007/s00221-013-3459-7.

- 22. Sartori L, Becchio C, Bara BG, Castiello U. Does the intention to communicate affect action kinematics? Conscious. Cogn. 2009;18:766–772. doi: 10.1016/j.concog.2009.06.004.

- 23. Vesper C, Richardson MJ. Strategic communication and behavioral coupling in asymmetric joint action. Exp. Brain Res. 2014. doi: 10.1007/s00221-014-3982-1.

- 24. Konvalinka I, Vuust P, Roepstorff A, Frith CD. Follow you, follow me: Continuous mutual prediction and adaptation in joint tapping. Q. J. Exp. Psychol. (Colchester) 2010;63:2220–2230. doi: 10.1080/17470218.2010.497843.

- 25. Noy L, Dekel E, Alon U. The mirror game as a paradigm for studying the dynamics of two people improvising motion together. Proc. Natl. Acad. Sci. USA. 2011;108:20947–20952. doi: 10.1073/pnas.1108155108.

- 26. Skewes JC, Skewes L, Michael J, Konvalinka I. Synchronised and complementary coordination mechanisms in an asymmetric joint aiming task. Exp. Brain Res. 2015;233:551–565. doi: 10.1007/s00221-014-4135-2.

- 27. Era V, Aglioti SM, Mancusi C, Candidi M. Visuo-motor interference with a virtual partner is equally present in cooperative and competitive interactions. Psychol. Res. 2020;84:810–822. doi: 10.1007/s00426-018-1090-8.

- 28. Gandolfo M, Era V, Tieri G, Sacheli LM, Candidi M. Interactor’s body shape does not affect visuo-motor interference effects during motor coordination. Acta Psychol. 2019;196:42–50. doi: 10.1016/j.actpsy.2019.04.003.

- 29. Salazar Kämpf, M. et al. Disentangling the sources of mimicry: Social relations analyses of the link between mimicry and liking. Psychol. Sci. 29, 131–138 (2018).

- 30. van der Wel, R., Knoblich, G. & Sebanz, N. Let the force be with us: Dyads exploit haptic coupling for coordination. J. Exp. Psychol. (2010).

- 31. D’Ausilio A, et al. Leadership in orchestra emerges from the causal relationships of movement kinematics. PLoS ONE. 2012;7:e35757. doi: 10.1371/journal.pone.0035757.

- 32. Ganesh G, Takagi A, Yoshioka T, Kawato M, Burdet E. Two is better than one: Physical interactions improve motor performance in humans. Sci. Rep. 2014;4:3824. doi: 10.1038/srep03824.

- 33. Groten, R., Feth, D., Peer, A. & Buss, M. Shared decision making in a collaborative task with reciprocal haptic feedback-an efficiency-analysis. In 2010 IEEE International Conference on Robotics and Automation 1834–1839 (IEEE, 2010).

- 34. Malysz P, Sirouspour S. Task performance evaluation of asymmetric semiautonomous teleoperation of mobile twin-arm robotic manipulators. IEEE Trans. Haptics. 2013;6:484–495. doi: 10.1109/TOH.2013.23.

- 35. Masumoto J, Inui N. Motor control hierarchy in joint action that involves bimanual force production. J. Neurophysiol. 2015;113:3736–3743. doi: 10.1152/jn.00313.2015.

- 36. Pezzulo G, Iodice P, Donnarumma F, Dindo H, Knoblich G. Avoiding accidents at the champagne reception: A study of joint lifting and balancing. Psychol. Sci. 2017;28:338–345. doi: 10.1177/0956797616683015.

- 37. Reed KB, Peshkin MA. Physical collaboration of human-human and human-robot teams. IEEE Trans. Haptics. 2008;1(2):108–120. doi: 10.1109/TOH.2008.13.

- 38. Takagi A, Usai F, Ganesh G, Sanguineti V, Burdet E. Haptic communication between humans is tuned by the hard or soft mechanics of interaction. PLoS Comput. Biol. 2018;14:e1005971. doi: 10.1371/journal.pcbi.1005971.

- 39. Takagi, A., Hirashima, M., Nozaki, D. & Burdet, E. Individuals physically interacting in a group rapidly coordinate their movement by estimating the collective goal. eLife 8, e41328 (2019).

- 40. Roche, L. & Saint-Bauzel, L. Implementation of haptic communication in comanipulative tasks: A statistical state machine model. In 2016 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS) 2670–2675 (2016).

- 41. Melendez-Calderon, A. Classification of strategies for disturbance attenuation in human-human collaborative tasks. In 33rd Annual International Conference of the IEEE EMBS (2011).

- 42. Reed, K. B. et al. Haptic cooperation between people, and between people and machines. In 2006 IEEE/RSJ International Conference on Intelligent Robots and Systems 2109–2114 (2006).

- 43. Toyokawa W, Kim H-R, Kameda T. Human collective intelligence under dual exploration-exploitation dilemmas. PLoS ONE. 2014;9:e95789. doi: 10.1371/journal.pone.0095789.

- 44. Marshall JA, Brown G, Radford AN. Individual confidence-weighting and group decision-making. Trends Ecol. Evol. 2017;32:636–645. doi: 10.1016/j.tree.2017.06.004.

- 45. Rosenberg, L. Artificial swarm intelligence vs human experts. In Neural Networks (IJCNN), 2016 International Joint Conference on 2547–2551 (IEEE, 2016).

- 46. Pleskac TJ, Busemeyer JR. Two-stage dynamic signal detection: A theory of choice, decision time, and confidence. Psychol. Rev. 2010;117:864. doi: 10.1037/a0019737.

- 47. Vickers D, Packer J. Effects of alternating set for speed or accuracy on response time, accuracy and confidence in a unidimensional discrimination task. Acta Psychol. 1982;50:179–197. doi: 10.1016/0001-6918(82)90006-3.

- 48. Gajdos, T., Fleming, S. M., Saez Garcia, M., Weindel, G. & Davranche, K. Revealing subthreshold motor contributions to perceptual confidence. Neurosci. Conscious. (2019).

- 49. Donnarumma F, Dindo H, Pezzulo G. You cannot speak and listen at the same time: A probabilistic model of turn-taking. Biol. Cybernet. 2017;111:156–183. doi: 10.1007/s00422-017-0714-1.

- 50. Donnarumma F, Dindo H, Pezzulo G. Sensorimotor coarticulation in the execution and recognition of intentional actions. Front. Psychol. 2017;8:237. doi: 10.3389/fpsyg.2017.00237.

- 51. Donnarumma F, Dindo H, Pezzulo G. Sensorimotor communication for humans and robots: Improving interactive skills by sending coordination signals. IEEE Trans. Cogn. Dev. Syst. 2017;10:903–917. doi: 10.1109/TCDS.2017.2756107.

- 52. Pezzulo G, Dindo H. What should I do next? Using shared representations to solve interaction problems. Exp. Brain Res. 2011;211:613–630. doi: 10.1007/s00221-011-2712-1.

- 53. Pezzulo G. The interaction engine: A common pragmatic competence across linguistic and non-linguistic interactions. IEEE Trans. Auton. Mental Dev. 2012;4:105–123. doi: 10.1109/TAMD.2011.2166261.

- 54. Pezzulo G. Studying mirror mechanisms within generative and predictive architectures for joint action. Cortex. 2013;49:2968–2969. doi: 10.1016/j.cortex.2013.06.008.

- 55. Roche, L. & Saint-Bauzel, L. The semaphoro haptic interface: a real-time low-cost open-source implementation for dyadic teleoperation. In ERTS (2018).