Abstract

We present an electrophysiological dataset collected from the amygdalae of nine participants who viewed dynamic visual stimuli with aversive emotional content. The participants were patients with epilepsy who underwent preoperative invasive monitoring in the mesial temporal lobe. Participants were presented with dynamic visual sequences of fearful faces (aversive condition), interleaved with sequences of neutral landscapes (neutral condition). The dataset contains simultaneous recordings of intracranial EEG (iEEG) and neuronal spike times and waveforms, together with localization information for the iEEG electrodes. Participant characteristics and trial information are provided. We technically validated this dataset and provide here the spike sorting quality metrics and the spectra of iEEG signals. This dataset allows the investigation of the amygdalar response to dynamic aversive stimuli at multiple spatial scales, from the macroscopic EEG to neuronal firing in the human brain.

Subject terms: Perception, Amygdala, Neuronal physiology

| Measurement(s) | brain electrical activity • response to aversive stimulus • medial temporal lobe • neuronal action potential • functional brain measurement • response to stimulus • amygdala |
|---|---|
| Technology Type(s) | Electrocorticography • microelectrode |
| Factor Type(s) | aversive vs neutral stimulus • seizure onset zone |
| Sample Characteristic - Organism | Homo sapiens |

Machine-accessible metadata file describing the reported data: 10.6084/m9.figshare.13292561

Background & Summary

Several aspects of perception and cognition involve the amygdala. Neural activity within the amygdala is implicated in novelty detection1, perception of faces2, emotions3 and aversive learning4. Emotional recognition is facilitated by the presentation of fearful facial expressions, especially when they are presented dynamically5. Presentation of dynamic faces has been shown to elicit strong electrophysiological responses in the scalp electroencephalography (EEG)6. Within the face perception network, the human amygdala is an important node7 whose role has mainly been investigated by means of Blood Oxygen Level Dependent (BOLD) responses8–10. Electrophysiological oscillatory responses in the human amygdala are mostly explored in patients with refractory epilepsy who are undergoing pre-surgical monitoring11,12. In these patients, the intracranial electroencephalography (iEEG) records local field potentials that result from the activity of thousands of neurons13. Thanks to technological advances, iEEG can be combined with recordings of single neuron activity in the human amygdala14–16. While these two types of data provide complementary information on the processing of sensory stimuli, their simultaneous recording remains rare.

Here, we describe a publicly released data set recorded from 14 amygdalae of 9 epilepsy patients. It consists of simultaneously acquired iEEG and single-neuron recordings. Differences in amygdala activation were found in response to watching sequences from thriller and horror movies showing actors portraying fearful faces in contrast to relaxing landscape recordings. The task (Fig. 1a) presents salient visual stimuli in a naturalistic way, different from most human single-neuron studies that have only used static stimuli3,16,17. Previous publications with the same task have shown strong amygdala responses with BOLD10,18 and iEEG11,12 together with enhanced firing of single neurons12. In a detailed analysis of the dataset12, we have described the interactions between iEEG and neuronal firing. Along with the iEEG traces and neuronal recordings, we here provide the technical validation of the quality of the isolated neurons, the localization information for iEEG electrodes, and the task video19. This dataset represents a unique opportunity for further investigation of the cross-scale dynamics that define the relation between macroscopic oscillatory activity in the iEEG and the neuronal firing in the human amygdala.

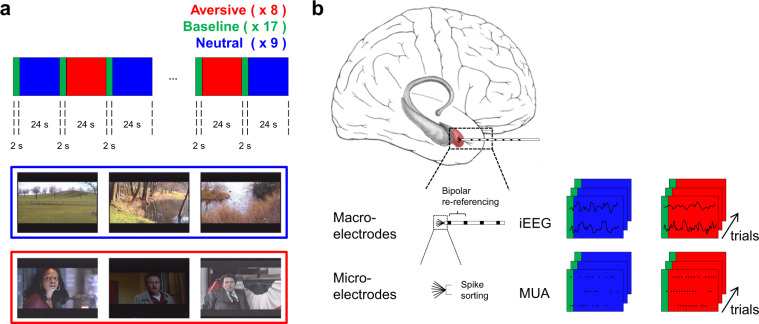

Fig. 1.

Task and recordings. (a) Aversive (red) and neutral (blue) video sequences were presented in blocks of 24 s, interleaved with a repeated 2 s neutral baseline (green). The images are representative video stills drawn from the video sequences. (b) iEEG was recorded with macroelectrodes and neuronal action potentials were recorded with microelectrodes. iEEG signals were stored trial-wise as recorded and after bipolar re-referencing. Neuronal action potentials (spikes) were extracted from the microelectrode recordings by spike sorting and stored trial-wise for each neuron.

Methods

Task

Short video sequences with dynamic fearful faces were compiled to activate the amygdala (Fig. 1a). The video was first used with fMRI in10 and later with iEEG11 and single neuron recordings12. The video is available in the original AVI format and read by a custom program19. Schacher et al.10 describe the video as follows: “To activate the amygdala, we developed a paradigm utilizing visual presentations of dynamic fearful faces. Stimuli were presented in a block design. The paradigm consisted of eight activation (aversive) and eight baseline (neutral) blocks each lasting 24 seconds. The activation condition consisted of 75 brief episodes (2 to 3 seconds) from thriller and horror films. All episodes showed the faces of actors who were expressing fear with high intensity. None of the episodes showed violence or aggression. Quality and applicability of film sequences were evaluated by an expert panel consisting of nine psychologists. Of an initial collection of 120 scenes, only sequences that were considered appropriate by the majority of the expert panel were extracted for the paradigm. Evaluation criteria were as follows: 1) actor’s face is clearly visible; 2) emotion displayed is clearly recognizable as fear; 3) fear is the only clearly recognizable emotion (no other emotion, e.g., anger, sadness, surprise, is displayed); and (4) the fear displayed is of high intensity. During baseline blocks, 72 short episodes of similar length (2 to 3 seconds) with dynamic landscape video recordings were presented. Video clips of calm domestic landscapes were used owing to their stable low emotional content while their general visual stimulus properties were comparable with the movie clips. Frequency and duration of the sequences (2 to 3 seconds) were matched for aversive and neutral conditions.”
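The block design described above can be laid out as a trial schedule. The following is a minimal sketch, not the presentation program used in the study: it assumes that each 26 s trial consists of the 2 s neutral baseline followed by one 24 s block, and that aversive and neutral blocks alternate strictly, starting with an aversive block. The order of the blocks is an assumption, as are the names `Trial` and `build_schedule`.

```python
from dataclasses import dataclass

BASELINE_S = 2.0            # neutral baseline shown before each block (Fig. 1a)
BLOCK_S = 24.0              # duration of one aversive or neutral block
N_BLOCKS_PER_CONDITION = 8  # eight aversive and eight neutral blocks


@dataclass
class Trial:
    number: int           # 1-based trial number
    condition: str        # "aversive" or "neutral"
    start_s: float        # baseline onset, relative to session start
    video_onset_s: float  # onset of the 24 s block
    end_s: float          # end of the block


def build_schedule(first="aversive"):
    """Return 16 trials of 26 s each, alternating conditions (assumed order)."""
    other = "neutral" if first == "aversive" else "aversive"
    trials = []
    t = 0.0
    for i in range(2 * N_BLOCKS_PER_CONDITION):
        condition = first if i % 2 == 0 else other
        onset = t + BASELINE_S
        trials.append(Trial(i + 1, condition, t, onset, onset + BLOCK_S))
        t = onset + BLOCK_S
    return trials


schedule = build_schedule()
```

Under these assumptions the session lasts 16 × 26 s. The condition of each trial in the released data should be taken from the stored trial properties rather than from this sketch.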

Participants

Nine participants took part in the study (Table 1). All were patients with drug-resistant focal epilepsy. They were implanted with depth electrodes in the amygdala and in contiguous areas of the mesial temporal lobe for the potential surgical treatment of epilepsy. The implantation sites were selected solely based on the clinical indication. The study was approved by the institutional ethics review board (Kantonale Ethikkommission Zürich, PB-2016-02055). All participants provided written informed consent to participate in the study. The ethics approval covers the administration of multiple cognitive tasks, and some participants completed several of them. The data obtained in one of these other cognitive tasks have already been analysed and published20,21.

Table 1.

Participant characteristics.

| Participant number | Age | Gender | Pathology | Implanted electrodes | Seizure onset zone (SOZ) electrodes |
|---|---|---|---|---|---|
| 1 | 31 | M | sclerosis | AL, AR | AR |
| 2 | 48 | M | gliosis | AL, AR | AR |
| 3 | 19 | F | sclerosis | AL | |
| 4 | 22 | M | sclerosis | AL | |
| 5 | 34 | M | sclerosis | AR | AR |
| 6 | 23 | M | sclerosis | AL, AR | AR |
| 7 | 39 | M | gliosis | AL, AR | AR |
| 8 | 27 | F | astrocytoma | AL | |
| 9 | 22 | M | sclerosis | AL, AR | AL, AR |

M: male; F: female; A: amygdala; L: left; R: right.

Recording setup

Data were recorded with a standard setup used in many hospitals that do human iEEG and single neuron recordings. We replicate here the description given in our earlier publications19–21. “We measured iEEG with depth electrodes (1.3 mm diameter, 8 contacts of 1.6 mm length, spacing between contact centers 5 mm, ADTech®, Racine, WI, www.adtechmedical.com), implanted stereotactically into the amygdala. Each macroelectrode had nine microelectrodes that protruded approximately 4 mm from its tip (Fig. 1b). Recordings were done against a common intracranial reference at a sampling frequency of 4 kHz for the macroelectrodes and 32 kHz for the microelectrodes via the ATLAS recording system (0.5–5000 Hz passband, Neuralynx®, Bozeman MT, USA, www.neuralynx.com). iEEG data were resampled at 2 kHz.” In the presented dataset, we share epoched iEEG data (trials of 26 seconds) as recorded and after bipolar re-referencing, and neuronal activity in the form of time stamps and average neuronal spike waveform.
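Bipolar re-referencing can be illustrated with a short sketch. The text does not specify which contact pairs form the bipolar montage, so the example below assumes differences between adjacent contacts along one electrode shaft. The function name `bipolar_rereference` and the contact labels are hypothetical.

```python
def bipolar_rereference(signals, labels):
    """Subtract each contact from its neighbour on the same electrode.

    signals: list of per-contact sample lists, ordered along the shaft.
    labels:  contact names in the same order (hypothetical, e.g. "AL1").
    Returns the bipolar signals and their derived channel names.
    """
    if len(signals) != len(labels):
        raise ValueError("one label is required per contact")
    bipolar, names = [], []
    for i in range(len(signals) - 1):
        a, b = signals[i], signals[i + 1]
        if len(a) != len(b):
            raise ValueError("contacts must have the same number of samples")
        # Adjacent-contact difference (assumed pairing scheme).
        bipolar.append([x - y for x, y in zip(a, b)])
        names.append(f"{labels[i]}-{labels[i + 1]}")
    return bipolar, names


# Toy example with three contacts and three samples each.
bip, bip_names = bipolar_rereference(
    [[1, 2, 3], [0, 1, 1], [5, 5, 5]], ["AL1", "AL2", "AL3"]
)
```

An electrode with N contacts yields N − 1 bipolar channels in this scheme. Activity common to both contacts, including the shared intracranial reference, cancels in the difference.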

Depth electrode localization

Electrodes were localized in the same way as in our earlier publications19–21, which we replicate in the following. “We used postimplantation CT scans and postimplantation structural T1-weighted MRI scans. Each scan was aligned to the ACPC (anterior commissure, posterior commissure) coordinate system. For each participant, the CT scan was registered to the postimplantation scan as implemented in FieldTrip22. In the coregistered CT-MR images, the electrode contacts were visually marked. The contact positions were normalized to the MNI space and assigned to a brain region using Brainnetome23. Anatomical labelling of each electrode contact was verified by the neurosurgeon (L.S.) after merging preoperative MRI with postimplantation CT images of each individual participant in the plane along the electrode (iPlan Stereotaxy 3.0, Brainlab, München, Germany). We specify whether electrodes were inside the seizure onset zone (SOZ).”

Spike detection and neuron identification

For spike sorting, we followed the same procedure as in our earlier publications19–21, where we described the method as follows. “The Combinato package (https://github.com/jniediek/combinato) was used for spike sorting24. Combinato follows a similar procedure to other freely available software packages: peak detection in the high-pass (>300 Hz) signal, computation of wavelet coefficients for detected peaks, and superparamagnetic clustering in the feature space of wavelet coefficients. As an advantage over other clustering procedures, Combinato is more sensitive in the detection of clusters of small size (few action potentials). We visually inspected each identified cluster based on the shape and amplitude of the action potentials and the interspike interval (ISI) distributions. We removed clusters with noisy waveforms, or nonuniform amplitude or shape of the action potentials in the recorded time interval. Moreover, to avoid overclustering, we merged highly similar clusters identified on the same microelectrode to obtain units. We considered only units with firing rate >1 Hz. Finally, we computed several metrics of spike sorting quality (Fig. 2b–d).”

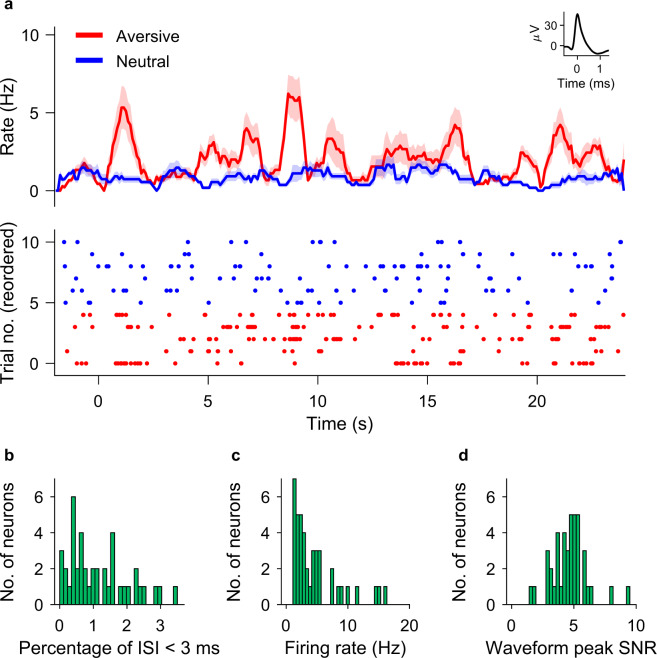

Fig. 2.

Neuronal firing and spike sorting quality metrics. (a) Example neuron in the amygdala. Top: Peristimulus time histogram (bin size: 100 ms; step size: 10 ms) for aversive (red) and neutral (blue) conditions. Shaded areas represent ± s.e.m. across trials of all spikes associated with the neuron. Inset: mean extracellular waveform ± s.e.m. Bottom: Raster plot of trials reordered by trial condition for plotting purposes only. The trial onset is at time t = 0. (b) Histogram of percentage of inter-spike intervals (ISI) <3 ms. The majority of neurons had less than 0.5% of short ISI. (c) Histogram of average firing rate for all neurons. (d) Histogram of the signal-to-noise ratio (SNR) of the peak of the mean waveform.
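A peristimulus time histogram with overlapping bins, as in Fig. 2a (bin size 100 ms, step size 10 ms), can be computed from the trial-wise spike times. The following is a minimal sketch under stated assumptions: spike times are given in seconds relative to trial onset, and the function name `sliding_psth` is hypothetical. It is not the script used to generate the figure, and it omits the s.e.m. across trials.

```python
def sliding_psth(trials, t_start, t_stop, bin_s=0.1, step_s=0.01):
    """Trial-averaged firing rate in overlapping windows.

    trials: list of trials, each a list of spike times in seconds
            relative to trial onset (t = 0).
    Returns (window centres in s, firing rate in Hz per window).
    """
    n_windows = int(round((t_stop - t_start - bin_s) / step_s)) + 1
    centers, rates = [], []
    for k in range(n_windows):
        lo = t_start + k * step_s
        hi = lo + bin_s
        # Count spikes of all trials that fall in the half-open window [lo, hi).
        count = sum(1 for spikes in trials for s in spikes if lo <= s < hi)
        centers.append(lo + bin_s / 2)
        rates.append(count / (len(trials) * bin_s))
    return centers, rates


# Toy example: two trials, windows covering 0 to 0.3 s.
centers, rates = sliding_psth([[0.05, 0.25], [0.07]], 0.0, 0.3)
```

Because consecutive windows overlap by 90 ms, neighbouring values are strongly correlated. The curve is smoother than a histogram with disjoint 100 ms bins, but it contains no additional independent information.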

Data Records

The dataset was released in the G-Node/NIX format and can be downloaded from G-Node (10.12751/g-node.270z59)25,26. The README describes the repository structure and the instructions for downloading the data.

Data from each participant were saved in a single hierarchical data format (.h5) file. Each file has the same format and includes general information, information on the task, participant and session, intracranial EEG data, spike times and waveforms, and information on depth electrodes (Table 2). We adhere to the standard NIX format. Whenever we introduce a custom name, we explain the name in NIX_File_Structure.pdf. NIX_File_Structure.pdf describes the structure of the data that our script (Main_Load_NIX_Data.m) reads. The script calls the NIX library and is well commented.

Table 2.

Data types in the NIX data.

| Data type | Content |
|---|---|
| General information | Institution conducting the experiment |
| | Recording location |
| | Related publications (name, doi) |
| | Recording setup (devices and settings) |
| Task | Name |
| | Description |
| | URL for downloading the task for Presentation® |
| Participant | For each participant: Age, gender, pathology, depth electrodes, electrodes in seizure onset zone (SOZ) |
| | Trials properties for each trial (trial number, condition, condition name) |
| Session | Number of trials |
| | Trial duration |
| Intracranial EEG data | For each trial: Bipolar montage of signals recorded with a sampling frequency of 2 kHz |
| | Labels and time axis |
| Spike waveforms | For each unit: Mean and standard deviation of spike waveform in a 2-ms window, sampled at 32 kHz |
| Spike times | For each unit: Spike time with respect to t = 0 in the trial |
| Depth electrodes | MNI coordinates in millimeters |
| | Electrode label |
| | Anatomical label updated after visual inspection of MRI |
| | Electrodes in the seizure onset zone (SOZ) |

The dataset was also released in the iEEG-BIDS format on the OpenNeuro repository (10.18112/openneuro.ds003374.v1.1.1)27–29. This repository includes metadata and iEEG data, as well as the extended dataset in the NIX format. The iEEG data are provided as BrainVision and European Data Format (EDF) files.

Technical Validation

Spike-sorting quality metrics

Spike sorting yielded single unit activity (SUA) and multiunit activity (MUA). We refer here to a putative unit by the term ‘neuron’. The example neuron in Fig. 2a increased its firing rate during the presentation of faces. For all neurons, the histogram of the percentage of inter-spike intervals (ISI) <3 ms is shown in Fig. 2b. The majority of neurons had less than 3% of short ISI. The percentage of ISI below 3 ms was 1.15 ± 0.9%. The histogram of average firing rate is given in Fig. 2c. The average firing rate of all neurons was 1.66 ± 2.65 Hz. For the mean waveform, the ratio of the peak amplitude to the standard deviation of the noise (waveform peak signal-to-noise ratio) was 4.62 ± 1.46 (Fig. 2d). These metrics are in the range of what is expected for the physiology of neuronal firing.
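The three quality metrics reported above can be computed per neuron as sketched below. This is an illustration under stated assumptions, not the validation script shipped with the dataset: the function names are hypothetical, spike times are taken from a single continuous interval, and the noise standard deviation is passed in as a given value because the text does not describe how it was estimated.

```python
def short_isi_percentage(spike_times_s, threshold_s=0.003):
    """Percentage of inter-spike intervals shorter than the threshold (3 ms)."""
    times = sorted(spike_times_s)
    isis = [b - a for a, b in zip(times, times[1:])]
    if not isis:
        return 0.0
    n_short = sum(1 for isi in isis if isi < threshold_s)
    return 100.0 * n_short / len(isis)


def mean_firing_rate(n_spikes, duration_s):
    """Average firing rate in Hz over the recorded duration."""
    return n_spikes / duration_s


def peak_snr(mean_waveform, noise_sd):
    """Peak amplitude of the mean waveform divided by the noise s.d."""
    return max(abs(v) for v in mean_waveform) / noise_sd


# Toy values: five spikes, two of the four intervals are shorter than 3 ms.
isi_pct = short_isi_percentage([0.0, 0.002, 0.1, 0.5, 0.501])
rate_hz = mean_firing_rate(52, 26.0)       # 52 spikes in one 26 s trial
snr = peak_snr([0.0, -40.0, 10.0], 8.0)    # amplitudes in arbitrary units
```

Since the spike times are stored trial-wise relative to t = 0 of each trial, the intervals should be computed within each trial and then pooled, so that no interval spans a trial boundary.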

Spectra of iEEG

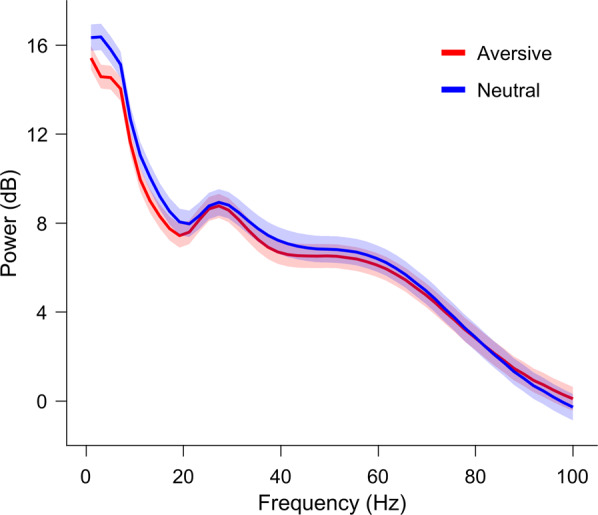

Fig. 3 shows the iEEG power spectra of signals from healthy amygdalae (outside the seizure onset zone, Table 1) for the two conditions.

Fig. 3.

Power spectrum of the iEEG. The power spectrum for the aversive (red) and neutral (blue) conditions, averaged over channels in the amygdalae outside of the SOZ (7 channels in total). Spectra were computed with a Hann window spanning 5 cycles of each frequency bin (temporal resolution: 100 ms; frequency resolution: 2 Hz).
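A window of 5 cycles per frequency bin means that the window length shrinks as the frequency increases: 5/f seconds at frequency f. The sketch below shows this relation and a Hann-tapered power estimate at a single frequency and time point, assuming the 2 kHz sampling rate of the shared iEEG. It is written in plain Python for illustration and is not the analysis code behind Fig. 3. All function names are hypothetical, and the power normalization is one possible choice.

```python
import cmath
import math

FS_HZ = 2000.0  # sampling rate of the shared iEEG data
N_CYCLES = 5    # window length in cycles of the analysed frequency


def window_length_samples(freq_hz, fs_hz=FS_HZ, n_cycles=N_CYCLES):
    """Number of samples spanned by n_cycles at freq_hz."""
    return int(round(n_cycles / freq_hz * fs_hz))


def hann(n):
    """Symmetric Hann taper of n samples."""
    return [0.5 - 0.5 * math.cos(2 * math.pi * i / (n - 1)) for i in range(n)]


def tapered_power(signal, center_idx, freq_hz, fs_hz=FS_HZ):
    """Power at freq_hz in a Hann window centred on center_idx."""
    n = window_length_samples(freq_hz, fs_hz)
    start = center_idx - n // 2
    if start < 0 or start + n > len(signal):
        raise ValueError("window exceeds the signal boundaries")
    taper = hann(n)
    acc = 0j
    for i in range(n):
        phase = -2j * math.pi * freq_hz * i / fs_hz
        acc += signal[start + i] * taper[i] * cmath.exp(phase)
    # Normalise by the taper energy so that windows of different
    # length remain comparable (assumed normalization).
    return abs(acc) ** 2 / sum(w * w for w in taper)
```

At 10 Hz the window spans 0.5 s (1000 samples), and at 4 Hz it spans 1.25 s (2500 samples). Stepping `center_idx` by 200 samples would correspond to the 100 ms temporal resolution given in the caption.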

Acknowledgements

We thank the physicians and the staff at Schweizerische Epilepsie-Klinik for their assistance and the participants for their participation. We acknowledge grants awarded by the Swiss National Science Foundation (SNSF 320030_156029 to J.S.), Mach-Gaensslen Stiftung (to J.S.), Stiftung für wissenschaftliche Forschung an der Universität Zürich (to J.S.), Forschungskredit der Universität Zürich (to T.F.) and Russian Foundation for Basic Research (RFBR 20-015-00176 A to T.F.). The funders had no role in the design or analysis of the study.

Author contributions

J.S. and H.J. designed the experiment. P.H. set up the recordings. J.S., T.F. conducted the experiments. T.F., E.B., V.C. analysed the data. T.G. provided patient care. L.S. performed surgery. T.F., V.C. and J.S. wrote the manuscript. All of the authors reviewed the final version of the manuscript.

Code availability

An example script is provided with the dataset26,29. It contains commented scripts for reading and plotting the data in NIX format25. We have also included scripts for the generation of Figs 2 and 3. All code is implemented in MATLAB (Mathworks Inc., version R2019a).

Competing interests

The authors declare no competing interests.

Footnotes

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1. Balderston NL, Schultz DH, Helmstetter FJ. The Effect of Threat on Novelty Evoked Amygdala Responses. PloS One. 2013;8:e63220. doi: 10.1371/journal.pone.0063220.

- 2. Adolphs R, Spezio M. Role of the amygdala in processing visual social stimuli. Prog. Brain Res. 2006;156:363–378. doi: 10.1016/S0079-6123(06)56020-0.

- 3. Wang S, et al. Neurons in the human amygdala selective for perceived emotion. Proc. Natl. Acad. Sci. USA. 2014;111:E3110–E3119. doi: 10.1073/pnas.1323342111.

- 4. Janak PH, Tye KM. From circuits to behaviour in the amygdala. Nature. 2015;517:284–292. doi: 10.1038/nature14188.

- 5. Calvo MG, Avero P, Fernández-Martín A, Recio G. Recognition thresholds for static and dynamic emotional faces. Emotion. 2016;16:1186–1200. doi: 10.1037/emo0000192.

- 6. Recio G, Sommer W, Schacht A. Electrophysiological correlates of perceiving and evaluating static and dynamic facial emotional expressions. Brain Res. 2011;1376:66–75. doi: 10.1016/j.brainres.2010.12.041.

- 7. Duchaine B, Yovel G. A Revised Neural Framework for Face Processing. Annu. Rev. Vis. Sci. 2015;1:393–416. doi: 10.1146/annurev-vision-082114-035518.

- 8. Krumhuber EG, Kappas A, Manstead ASR. Effects of Dynamic Aspects of Facial Expressions: A Review. Emotion Review. 2013:41–46. doi: 10.1177/1754073912451349.

- 9. Pitcher D, Ianni G, Ungerleider LG. A functional dissociation of face-, body- and scene-selective brain areas based on their response to moving and static stimuli. Sci. Rep. 2019;9:8242. doi: 10.1038/s41598-019-44663-9.

- 10. Schacher M, et al. Amygdala fMRI lateralizes temporal lobe epilepsy. Neurology. 2006;66:81–87. doi: 10.1212/01.wnl.0000191303.91188.00.

- 11. Zheng J, et al. Amygdala-hippocampal dynamics during salient information processing. Nat. Commun. 2017;8:14413. doi: 10.1038/ncomms14413.

- 12. Fedele T, et al. The relation between neuronal firing, local field potentials and hemodynamic activity in the human amygdala in response to aversive dynamic visual stimuli. Neuroimage. 2020;213:116705. doi: 10.1016/j.neuroimage.2020.116705.

- 13. Lachaux J-P, Axmacher N, Mormann F, Halgren E, Crone NE. High-frequency neural activity and human cognition: Past, present and possible future of intracranial EEG research. Prog. Neurobiol. 2012;98:279–301. doi: 10.1016/j.pneurobio.2012.06.008.

- 14. Fried I, MacDonald KA, Wilson CL. Single Neuron Activity in Human Hippocampus and Amygdala during Recognition of Faces and Objects. Neuron. 1997;18:753–765. doi: 10.1016/s0896-6273(00)80315-3.

- 15. Parvizi J, Kastner S. Promises and limitations of human intracranial electroencephalography. Nature Neuroscience. 2018;21:474–483. doi: 10.1038/s41593-018-0108-2.

- 16. Rutishauser U, et al. Single-Unit Responses Selective for Whole Faces in the Human Amygdala. Curr. Biol. 2011;21:1654–1660. doi: 10.1016/j.cub.2011.08.035.

- 17. Canli T, Sivers H, Whitfield SL, Gotlib IH, Gabrieli JDE. Amygdala Response to Happy Faces as a Function of Extraversion. Science. 2002;296:2191. doi: 10.1126/science.1068749.

- 18. Steiger BK, Muller AM, Spirig E, Toller G, Jokeit H. Mesial temporal lobe epilepsy diminishes functional connectivity during emotion perception. Epilepsy Res. 2017;134:33–40. doi: 10.1016/j.eplepsyres.2017.05.004.

- 19. Fedele T, et al. Dynamic visual sequences of fearful faces. Archives of Neurobehavioral Experiments and Stimuli. 2020.

- 20. Boran E, et al. Dataset of human medial temporal lobe neurons, scalp and intracranial EEG during a verbal working memory task. Scientific Data. 2020;7:30. doi: 10.1038/s41597-020-0364-3.

- 21. Boran E, et al. Persistent hippocampal neural firing and hippocampal-cortical coupling predict verbal working memory load. Science Advances. 2019;5:eaav3687. doi: 10.1126/sciadv.aav3687.

- 22. Stolk A, et al. Integrated analysis of anatomical and electrophysiological human intracranial data. Nat. Protoc. 2018;13:1699–1723. doi: 10.1038/s41596-018-0009-6.

- 23. Fan L, et al. The Human Brainnetome Atlas: A New Brain Atlas Based on Connectional Architecture. Cereb. Cortex. 2016;26:3508–3526. doi: 10.1093/cercor/bhw157.

- 24. Niediek J, Boström J, Elger CE, Mormann F. Reliable Analysis of Single-Unit Recordings from the Human Brain under Noisy Conditions: Tracking Neurons over Hours. PloS One. 2016;11:e0166598. doi: 10.1371/journal.pone.0166598.

- 25. Stoewer A, Kellner CJ, Benda J, Wachtler T, Grewe J. File format and library for neuroscience data and metadata. Front. Neuroinform. 2014. doi: 10.3389/conf.fninf.2014.18.00027.

- 26. Fedele T, 2020. Dataset of neurons and intracranial EEG from human amygdala during aversive dynamic visual stimulation. G-node. doi: 10.12751/g-node.270z59.

- 27. Gorgolewski KJ, et al. The brain imaging data structure, a format for organizing and describing outputs of neuroimaging experiments. Scientific Data. 2016;3:160044. doi: 10.1038/sdata.2016.44.

- 28. Holdgraf C, et al. iEEG-BIDS, extending the Brain Imaging Data Structure specification to human intracranial electrophysiology. Scientific Data. 2019;6:102. doi: 10.1038/s41597-019-0105-7.

- 29. Fedele T, 2020. Dataset of neurons and intracranial EEG from human amygdala during aversive dynamic visual stimulation. OpenNeuro. doi: 10.18112/openneuro.ds003374.v1.1.1.
