Abstract

As an analytic pipeline for extracting and analysing quantitative imaging features, radiomics has grown rapidly in the past decade. At the same time, recent advances in deep learning and transfer learning have shown significant potential in quantitative medical imaging, raising the question of whether deep transfer learning features carry predictive information beyond that of radiomics features. In this study, using CT images of Pancreatic Ductal Adenocarcinoma (PDAC) patients recruited at two independent hospitals, we found that most transfer learning features have weak linear relationships with radiomics features, suggesting that the two feature sets may be complementary. We also tested the prognostic performance for overall survival of four feature fusion and reduction methods for combining radiomics and transfer learning features, and compared the results with our proposed risk score-based feature fusion method. The risk score-based feature fusion method significantly improved prognostic performance for overall survival in PDAC patients compared to the traditional feature reduction methods used in previous radiomics studies, yielding an area under the ROC curve (AUC) of 0.84 (a 40% relative increase over the best traditional method).

Subject terms: Cancer imaging, Paediatric cancer, Tumour biomarkers, Biomarkers, Biomedical engineering

Introduction

In the past decade, radiomics has emerged as a field that extracts more information from medical images to improve the diagnosis and prognosis of cancer. As a quantitative approach, radiomics comprises the extraction and analysis of quantitative medical imaging features and the establishment of correlations between these features and clinical outcomes such as patient survival1–5. Several radiomic features have been found to be significantly associated with various clinical outcomes in multiple cancer sites such as lung, pancreas, and kidney2,6–12.

In the past few years, the pipeline for traditional radiomics analysis has been established1,2,9,13. This traditional pipeline consists of four steps: image acquisition, region of interest (ROI) segmentation or annotation, feature extraction, and predictive model building. At the core of this pipeline, radiomics features are extracted from medical images using predefined mathematical equations14. These engineered equations are designed to capture different characteristics of images15. For example, first-order features measure the distribution of pixel intensities, while texture features are computed from matrices such as the grey-level co-occurrence matrix (GLCM) and the grey-level run length matrix (GLRLM)14. Efforts have been made to standardize feature banks through open-source libraries such as PyRadiomics15, with which thousands of engineered features from different classes can be extracted from 2D or 3D medical images15. These features can then be tested for associations with clinical outcomes such as overall survival, recurrence, or genetic mutations4,8,16,17. Cross-cohort and multi-centre studies have also shown that a number of PyRadiomics features are robust to different scanners and clinician annotations8,15,18,19.

Despite recent progress, the traditional radiomics analytics pipeline has a few drawbacks. First, the feature equations are predefined, and many of the formulas are similar; thus, some radiomics features are highly correlated with each other. As a result, if a feature is found to be significantly associated with a certain clinical outcome, other highly correlated features may be significant as well. Consequently, the large number of significant features increases the complexity of the prognostic model without a corresponding increase in performance. Second, testing radiomics features one by one inflates the family-wise error rate (FWER), the probability of making one or more false discoveries. Previous publications have pointed out that several radiomics studies lacked multiple testing control and, hence, some of the significant features they reported may be the result of type I errors20,21. These shortcomings of the traditional radiomics pipeline have inspired new research that takes advantage of recent progress in deep learning and convolutional neural networks (CNNs) to improve the performance of predictive models.

CNNs are one of the most frequently used deep learning architectures in computer vision22. CNNs apply a series of convolution operations to input images, preserving the spatial relationships between pixels and mapping these relationships onto outputs. During training, the parameters of the convolution operations (the kernel weights) are tuned based on the outcome. Consequently, convolution layers can capture information specifically related to the classification task at hand (e.g., outcome prediction). In medical imaging, this allows customized feature maps to be generated for specific modalities or diseases, which can further improve performance23,24. However, training CNN parameters requires a large sample size, which is usually not available in medical imaging research. To overcome this limitation, transfer learning-based feature extraction has been proposed25–27.
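To make the convolution step concrete, the following is a minimal NumPy sketch of a single-channel 2D convolution with stride and zero padding, written as the cross-correlation that CNN layers compute. The kernel values are hand-set for illustration (in a trained CNN they are learned), and the function name `conv2d` is our own. The output side length follows (n + 2p − k) / s + 1 for input size n, padding p, kernel size k, and stride s.

```python
import numpy as np

def conv2d(image, kernel, stride=1, padding=0):
    """Single-channel 2D convolution as computed in CNN layers (cross-correlation,
    no kernel flip) with zero padding and a fixed stride."""
    if padding:
        image = np.pad(image, padding, mode="constant", constant_values=0.0)
    kh, kw = kernel.shape
    out_h = (image.shape[0] - kh) // stride + 1
    out_w = (image.shape[1] - kw) // stride + 1
    out = np.empty((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            # Each output depends only on a local neighbourhood, so the spatial
            # layout of the input is carried over into the output feature map.
            patch = image[i * stride:i * stride + kh, j * stride:j * stride + kw]
            out[i, j] = np.sum(patch * kernel)
    return out

rng = np.random.default_rng(0)
roi = rng.random((32, 32))
edge = np.array([[-1.0, 0.0, 1.0]] * 3)        # hand-set horizontal-gradient kernel
same = conv2d(roi, edge, stride=1, padding=1)   # 3 x 3 kernel, zero padding
valid = conv2d(roi, np.ones((2, 2)), stride=1)  # 2 x 2 kernel, no padding
print(same.shape, valid.shape)  # (32, 32) (31, 31)
```

The two calls mirror the kernel configurations described for the LungTrans network in the Methods: a padded 3 × 3 kernel keeps the spatial size, while an unpadded 2 × 2 kernel shrinks it by one.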

Transfer learning is motivated by the assumption that the structure of CNNs resembles the mechanism of the human visual cortex22,28. The top (shallow) layers of a CNN, closest to the input, extract general features from images, while the deeper layers are more specific to the target task22. Pretraining CNNs on large image datasets such as ImageNet helps the model learn how to extract general features29,30. Since many image recognition tasks are similar, the top layers of the network can be transferred to another target domain26. Deeper layers, on the other hand, extract "higher-order" information associated with the target outcome. Thus, if the target domain is similar to the pretrained domain, deeper layers can also be transferred to extract features25,31.

Deep learning and transfer learning-based feature extraction have shown promising results in cancer assessment31–33. Furthermore, it has also been shown that combining predefined features with deep learning-based features can improve the performance in the prognosis of Glioblastoma Multiforme31. To gain a deeper understanding of the relationship between traditional radiomics and transfer learning features, it is crucial to map the correlation between these two sets of features. In addition, it is imperative to develop an optimal feature fusion pipeline that can exploit the prognostic information from both feature sets to improve the overall performance of the model.

The aim of this study was to assess the complementary prognostic information of predefined radiomic features and transfer learning features for overall survival in CT scans of Pancreatic Ductal Adenocarcinoma (PDAC) patients. Using CT images from PDAC patients, we mapped the association between PyRadiomics features and a set of transfer learning features and characterized the correlation between the two classes of features. Next, we applied four existing feature fusion and reduction methods, namely principal component analysis (PCA), Boruta34, feature-wise selection using the Cox Proportional Hazards (CPH) model35, and LASSO36, to combine the predefined radiomic features with transfer learning features for the prognosis of overall survival in PDAC patients. We then proposed a novel pipeline for combining predefined radiomics features and transfer learning features using a risk score-based model and compared its performance with that of the four existing methods in an independent test cohort.

Methods

Dataset

Two cohorts from two independent hospitals, consisting of 68 patients (training cohort) and 30 patients (test cohort) with pre-operative contrast-enhanced CT available for analysis, were enrolled in this retrospective study. All patients underwent curative-intent surgical resection for PDAC between 2008 and 2013 (training cohort) or between 2007 and 2012 (test cohort) and did not receive neoadjuvant treatment. CT scans were performed on Toshiba Aquilion (training cohort) and GE Medical Systems LightSpeed VCT (test cohort) scanners using 2–3 mm slice thickness in the portal venous phase without advanced dose reduction algorithms.

Survival data were collected retrospectively (training cohort: 52 deaths vs. 16 survivors; test cohort: 15 deaths vs. 15 survivors at the end of follow-up). The median follow-up time was 21 months (range: 101–1,890 days) and 19 months (range: 109–2,569 days) for the training and test cohorts, respectively. Two-year survival was selected as the primary outcome and was determined from the date of death or the last follow-up date relative to the two-year mark after surgery (training cohort: 38 deaths vs. 30 survivors; test cohort: 11 deaths vs. 19 survivors). Further demographic information about the two cohorts can be found in Table 1 and in ref. 8. To exclude the effect of postoperative complications on prognosis, patients who died within 90 days after surgery were excluded. An experienced radiologist annotated the ROIs, blinded to the outcome, using an in-house ROI contouring tool (ProCanVAS)37.
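The two-year labelling rule can be sketched as a small function. This is a hypothetical helper, assuming two years = 730 days and treating deaths on or before day 90 as the excluded early post-operative deaths. The source does not state how patients censored before two years were handled, so the sketch returns `None` for them rather than guessing.

```python
def two_year_label(days_after_surgery, died, horizon_days=730, exclusion_days=90):
    """Hypothetical helper: 1 = death within two years, 0 = alive at two years,
    None = excluded or undetermined. `days_after_surgery` is the time from
    surgery to death (if died) or to last follow-up (if alive)."""
    if died and days_after_surgery <= exclusion_days:
        return None  # early post-operative death: excluded from the study
    if died and days_after_surgery <= horizon_days:
        return 1     # death within two years of surgery
    if days_after_surgery >= horizon_days:
        return 0     # alive at the two-year mark (any death came later)
    return None      # censored before two years: handling not stated in the source

patients = [(400, True), (1200, True), (1890, False), (60, True), (300, False)]
print([two_year_label(d, e) for d, e in patients])  # [1, 0, 0, None, None]
```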

Table 1.

Demographic information of training and test cohorts8.

| | Training cohort | Test cohort |
|---|---|---|
| Age (years) | | |
| Mean ± standard deviation | 65 ± 11 | 69 ± 8 |
| Sex | | |
| Male/female/total | 35/33/68 | 13/17/30 |
| Tumour size (diameter, cm) | | |
| Mean ± standard deviation | 4.34 ± 1.47 | 3.76 ± 0.97 |
| Grade | | |
| G1/G2/G3/G4/total | 17/44/6/1/68 | 3/19/8/0/30 |

Ethics approval and consent to participate

For the training cohort, University Health Network Research Ethics Boards approved the retrospective study and informed consent was obtained. For the test cohort, the Sunnybrook Health Sciences Centre Research Ethics Boards approved the retrospective study and waived the requirement for informed consent. All methods were performed in accordance with the relevant guidelines and regulations of both institutions.

Radiomics feature extraction

Predefined radiomic features were extracted using the PyRadiomics library (version 2.0.0) in Python15. To ensure that features were extracted exclusively from tumour regions, voxels below −10 Hounsfield units (HU) or above 500 HU were excluded, removing fat and stents from the feature calculations. In the portal venous phase, a 500 HU threshold excludes only parts of large blood vessels that are not part of the tumour contour; these normal structures would confound the analysis if included. The threshold does not, however, exclude tumour neovasculature or hyperenhancing tumour subcomponents, which do not reach such high attenuation. In total, 1,428 radiomic features were extracted from the contoured ROIs in both cohorts. Details of the extracted features are listed in Table 2.
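The HU window amounts to intersecting the ROI mask with a voxel-wise intensity test. A minimal NumPy sketch follows, assuming a CT array already in Hounsfield units and a boolean ROI mask of the same shape; the function name is hypothetical. PyRadiomics documents a `resegmentRange` setting for this kind of mask resegmentation, but the source does not say whether that setting or a separate preprocessing step was used.

```python
import numpy as np

def restrict_roi_to_hu_window(ct_hu, roi_mask, low=-10.0, high=500.0):
    """Keep ROI voxels with low <= HU <= high; voxels outside the window
    (e.g. fat below -10 HU, stents above 500 HU) are dropped from the mask."""
    return roi_mask.astype(bool) & (ct_hu >= low) & (ct_hu <= high)

ct = np.array([[-100.0, 40.0], [120.0, 900.0]])  # illustrative HU: fat, tissue, tissue, metal
roi = np.ones(ct.shape, dtype=bool)
print(restrict_roi_to_hu_window(ct, roi).tolist())  # [[False, True], [True, False]]
```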

Table 2.

Number of radiomics features extracted for different feature classes and image filters.

| Filter/features | First-order | GLCM | GLDM | GLRLM | GLSZM | NGTDM | Shape | Total |
|---|---|---|---|---|---|---|---|---|
| Exponential | 16 | 0 | 11 | 12 | 7 | 0 | 0 | 46 |
| Gradient | 18 | 23 | 14 | 16 | 16 | 5 | 0 | 92 |
| lbp | 56 | 0 | 44 | 48 | 28 | 0 | 0 | 176 |
| Logarithm | 18 | 23 | 14 | 16 | 16 | 5 | 0 | 92 |
| Original | 18 | 23 | 14 | 16 | 16 | 5 | 12 | 104 |
| Square | 18 | 23 | 14 | 16 | 16 | 4 | 0 | 91 |
| Square root | 18 | 23 | 14 | 16 | 16 | 5 | 0 | 92 |
| Wavelet | 144 | 184 | 112 | 128 | 128 | 39 | 0 | 735 |
| Total | 306 | 299 | 237 | 268 | 243 | 63 | 12 | 1428 |

GLCM grey level co-occurrence matrix, GLDM grey level dependence matrix, GLRLM grey level run length matrix, GLSZM grey level size zone matrix, NGTDM neighbouring grey tone difference matrix, lbp local binary pattern.

Transfer learning feature extraction

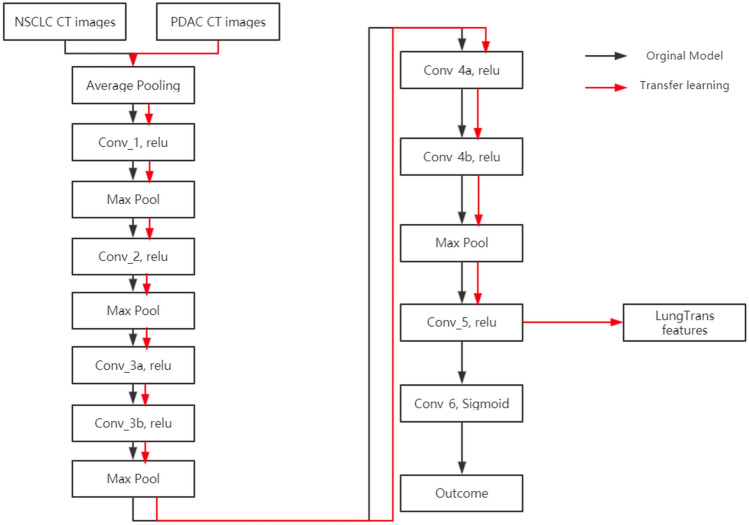

Transfer learning features were extracted using a CNN model (LungTrans) pretrained on Non-Small Cell Lung Cancer (NSCLC) CT images38. The NSCLC dataset was published as part of the Lung Nodule Analysis (LUNA16) challenge and contains CT images from 888 patients39. Images were extracted from the largest contoured ROI of each patient without preprocessing, and all input ROIs were resized to 32 × 32 greyscale images. Because the ROIs are not rectangular, the region outside each ROI was set to black. Using this dataset, an 8-layer CNN (LungTrans) was trained de novo with a batch size of 16 and a learning rate of 0.001; its architecture is shown in Fig. 1 (ref. 40). Every convolutional layer has a 3 × 3 kernel with a stride of 1 and zero padding, except for the Conv_5 layer, which has a 2 × 2 kernel with a stride of 1 and no padding. All max pooling layers have a 2 × 2 kernel.
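The input preparation described above (largest ROI, black background, 32 × 32 greyscale) could look roughly like the sketch below. It is illustrative only: the helper names are hypothetical, "the largest contoured ROI" is interpreted as the axial slice with the largest ROI area, intensities are assumed to be already mapped to greyscale with 0 as black, cropping to the ROI bounding box is an assumption, and nearest-neighbour resizing stands in for whatever interpolation the authors used.

```python
import numpy as np

def largest_roi_slice(volume, mask):
    """Return the axial slice (axis 0) with the most ROI pixels, and its mask."""
    z = int(np.argmax(mask.reshape(mask.shape[0], -1).sum(axis=1)))
    return volume[z], mask[z].astype(bool)

def roi_patch(image, mask, size=32):
    """Crop the ROI bounding box, set non-ROI pixels to 0 (black), and resize to
    size x size by nearest-neighbour sampling (an assumed interpolation choice)."""
    ys, xs = np.nonzero(mask)
    y0, y1, x0, x1 = ys.min(), ys.max() + 1, xs.min(), xs.max() + 1
    crop = np.where(mask[y0:y1, x0:x1], image[y0:y1, x0:x1], 0.0)
    rows = np.arange(size) * crop.shape[0] // size
    cols = np.arange(size) * crop.shape[1] // size
    return crop[np.ix_(rows, cols)]

rng = np.random.default_rng(1)
volume = 0.1 + rng.random((5, 64, 64))      # synthetic greyscale slices in [0.1, 1.1)
yy, xx = np.mgrid[:64, :64]
mask = np.zeros(volume.shape, dtype=bool)
mask[2] = (yy - 30) ** 2 + (xx - 30) ** 2 <= 15 ** 2  # largest ROI
mask[3] = (yy - 30) ** 2 + (xx - 30) ** 2 <= 5 ** 2
patch = roi_patch(*largest_roi_slice(volume, mask))
print(patch.shape)  # (32, 32)
```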

Figure 1.

Architecture of the 8-layer CNN used to extract LungTrans Features.

The transfer learning process depends on how similar the pretrained domain is to the target domain. If the two domains are different (e.g., natural images vs. pancreatic CT images), features are generally extracted from upper (shallower) layers for better generalization. If the domains are similar (e.g., they share the same imaging modality, similar resolution, and a similar outcome), features can be extracted from deeper layers. In this study, since the pretrained and target domains are similar (lung and pancreatic CT), features were extracted from the Conv_5 layer, a deep layer just before the classification layers. Feeding the LungTrans CNN with contoured PDAC CT images prepared with the same settings as the pretrained domain (32 × 32 greyscale ROI images with a black background) yielded 64 LungTrans features. After eliminating 29 features with zero variance, the remaining 35 LungTrans features were used in this study.
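Removing zero-variance features is a simple column filter. The sketch below, with hypothetical feature names and values, keeps only columns whose maximum exceeds their minimum; comparing max and min avoids the floating-point round-off that an exact `var == 0` test can suffer for constant non-zero columns.

```python
import numpy as np

def drop_constant_features(features, names):
    """Remove feature columns that take the same value for every patient."""
    keep = features.max(axis=0) > features.min(axis=0)
    return features[:, keep], [name for name, k in zip(names, keep) if k]

# Hypothetical Conv_5 outputs for 4 patients x 5 channels; channels 1 and 3 are
# always 0 (e.g. a unit that never activates on these inputs).
conv5 = np.array([[0.3, 0.0, 1.2, 0.0, 0.7],
                  [0.1, 0.0, 0.9, 0.0, 0.7],
                  [0.8, 0.0, 1.5, 0.0, 0.2],
                  [0.5, 0.0, 1.1, 0.0, 0.4]])
names = [f"lungtrans_{i}" for i in range(conv5.shape[1])]
kept, kept_names = drop_constant_features(conv5, names)
print(kept_names)  # ['lungtrans_0', 'lungtrans_2', 'lungtrans_4']
```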

Correlation

To investigate the correlation between the features extracted using the traditional radiomics pipeline (PyRadiomics) and transfer learning (LungTrans), Pearson correlation coefficients were calculated for each pair of features in the training cohort (n = 68). The mean absolute correlation coefficient was calculated within each feature bank (PyRadiomics and LungTrans) and between the two banks. The distribution of the correlation coefficients was also examined.
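A minimal NumPy sketch of these summaries follows, assuming patients in rows and features in columns. Within-bank means use each unordered feature pair once and exclude self-correlations (our assumption about how the means were computed); the between-bank block can be binned for a histogram. The data below are synthetic.

```python
import numpy as np

def correlation_summary(pyrad, lungtrans):
    """Mean absolute Pearson r within each bank (unique pairs only) and between
    banks, plus the flattened between-bank |r| values for a histogram.
    Constant columns must be removed first (their r is undefined)."""
    n_p = pyrad.shape[1]
    absr = np.abs(np.corrcoef(np.hstack([pyrad, lungtrans]), rowvar=False))

    def within(block):
        iu = np.triu_indices(block.shape[0], k=1)  # skip the diagonal and duplicates
        return block[iu].mean()

    between = absr[:n_p, n_p:]  # PyRadiomics x LungTrans block
    return within(absr[:n_p, :n_p]), within(absr[n_p:, n_p:]), between.mean(), between.ravel()

rng = np.random.default_rng(0)
pyrad = rng.normal(size=(68, 40))     # synthetic stand-ins for the two banks
lungtrans = rng.normal(size=(68, 6))
w_p, w_l, b, between_values = correlation_summary(pyrad, lungtrans)
counts, edges = np.histogram(between_values, bins=10, range=(0.0, 1.0))
```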

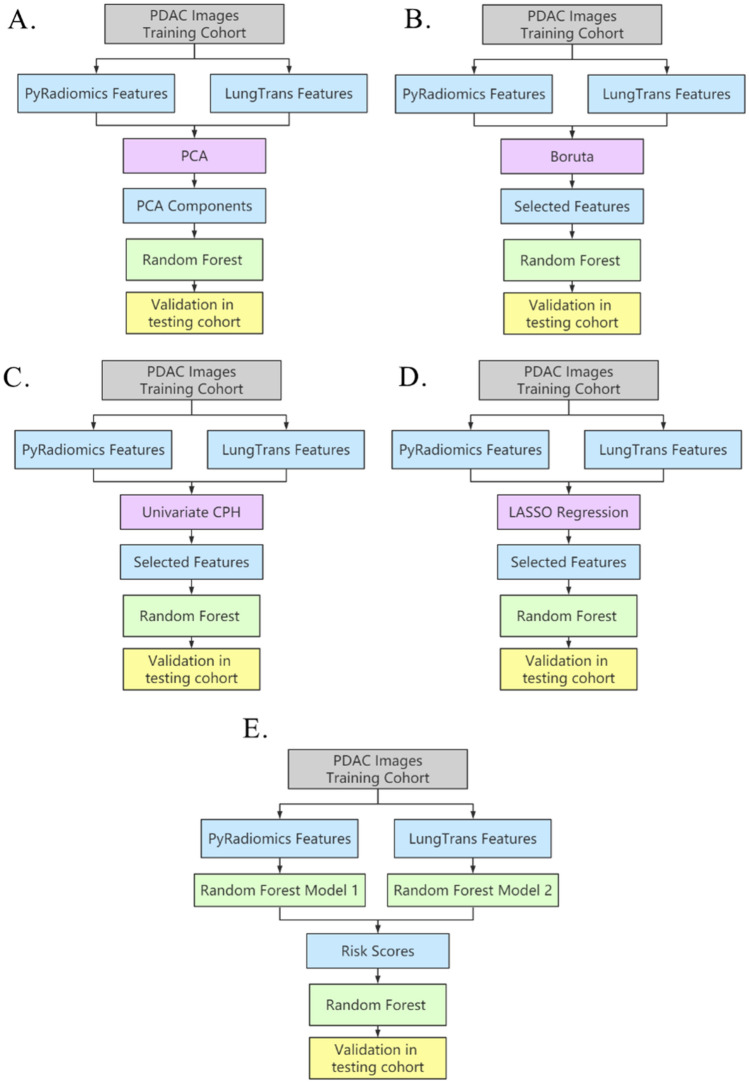

Proposed prognosis model

To identify the optimal feature reduction and fusion method, we first trained four prognosis models using CT images from the training cohort (n = 68) and validated them in the test cohort (n = 30), with two-year survival as the target. In each model, PyRadiomics and LungTrans features were fused or selected in the training cohort using PCA, Boruta34, feature-wise reduction through CPH35, or LASSO36. The selected or fused features were then used to train Random Forest-based prognosis models (number of trees to grow (ntree) = 500; the number of variables randomly sampled as candidates at each split (mtry) was set to the value that gave the best performance in the training cohort). These prognosis models were then validated in the test cohort. The pipelines of the four traditional feature fusion/reduction algorithms (PCA, Boruta34, CPH-based feature reduction35, and LASSO36) are shown in Figs. 2A–D, respectively, and each method is described in detail below.

- A. Unsupervised feature fusion using PCA. Features from the two feature banks were fused using PCA, generating 30 components. These components were used to build a Random Forest model (mtry = 2) in the training cohort, which was then evaluated in the test cohort.

- B. Supervised feature reduction using Boruta. Boruta identified prognostic features, which were then used to build a Random Forest prognosis model (mtry = 2) in the training cohort. The model's performance was validated in the test cohort.

- C. Supervised feature reduction using Cox regression. Each feature was tested using univariate Cox regression in the training cohort. Significant features were then used to build a Random Forest prognosis model (mtry = 310), which was validated in the test cohort.

- D. Supervised feature selection using a correlation cut-off and LASSO regression. In the training cohort, features with correlation coefficients higher than 0.7 were removed. The remaining features were reduced using LASSO logistic regression with an optimized lambda. Features with nonzero LASSO coefficients in the training cohort were selected to build a Random Forest model (mtry = 2), which was then evaluated in the test cohort.
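The correlation cut-off in method D can be implemented in several ways, and the source does not specify which was used. The sketch below is one simple greedy variant (our choice, for illustration): it scans features in column order and drops any feature whose absolute Pearson correlation with an already-kept feature exceeds 0.7. For comparison, caret's `findCorrelation` instead removes, from each highly correlated pair, the feature with the larger mean absolute correlation. The LASSO step that follows is not shown.

```python
import numpy as np

def greedy_correlation_filter(features, cutoff=0.7):
    """Return indices of kept columns after a greedy |Pearson r| cut-off."""
    absr = np.abs(np.corrcoef(features, rowvar=False))
    kept = []
    for j in range(features.shape[1]):
        # Keep feature j only if it is not too correlated with anything kept so far.
        if all(absr[j, i] <= cutoff for i in kept):
            kept.append(j)
    return kept

x = np.array([1.0, 2.0, 3.0, 4.0, 5.0])
X = np.column_stack([x, 2.0 * x, [5.0, 1.0, 4.0, 2.0, 3.0]])
print(greedy_correlation_filter(X))  # [0, 2]
```

Here the second column is a rescaled copy of the first (r = 1) and is dropped, while the third column (r = −0.3 with the first) is kept.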

Figure 2.

Pipelines for different feature reduction/fusion methods. (A) Unsupervised feature fusion using PCA. (B) Supervised feature reduction using Boruta. (C) Supervised feature reduction using Cox-Regression. (D) Supervised feature reduction using LASSO Regression. (E) The proposed risk-score based feature fusion method.

Our proposed risk score-based method is illustrated in Fig. 2E. First, in the training cohort, two separate Random Forest classification models were trained, one on each feature bank (PyRadiomics and LungTrans), using tenfold cross-validation41. Through this tenfold cross-validation, each model produced a probability of death for every patient in the training cohort, so that each training patient had two probabilities of death (training risk scores), one per feature bank. Similarly, the two Random Forest models trained on the entire training cohort were applied to the PyRadiomics and LungTrans features of the test cohort, generating two risk scores for each test patient (test risk scores). We then used the two training risk scores to train another Random Forest-based prognosis model in the training cohort and validated this model in the test cohort using the test risk scores.
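The key detail of the risk score method is that training-cohort scores come from cross-validation, so no patient is scored by a model that saw it during fitting, while test-cohort scores come from models fitted on the whole training cohort. The sketch below illustrates that data flow under stated assumptions: `CentroidRisk` is a hypothetical stand-in classifier exposing `fit` and `risk`, SMOTE is omitted, and the data are synthetic. In practice one would plug in a Random Forest, for example scikit-learn's `RandomForestClassifier(n_estimators=500, max_features=mtry)` with `predict_proba(X)[:, 1]` as the risk.

```python
import numpy as np

class CentroidRisk:
    """Hypothetical stand-in for a Random Forest: risk in [0, 1] from relative
    distance to the class centroids (near the 'death' centroid -> near 1)."""

    def fit(self, X, y):
        self.c0 = X[y == 0].mean(axis=0)
        self.c1 = X[y == 1].mean(axis=0)
        return self

    def risk(self, X):
        d0 = np.linalg.norm(X - self.c0, axis=1)
        d1 = np.linalg.norm(X - self.c1, axis=1)
        return d0 / (d0 + d1 + 1e-12)


def out_of_fold_scores(make_model, X, y, k=10, seed=0):
    """Training risk scores: each patient is scored by a model fitted without it."""
    rng = np.random.default_rng(seed)
    scores = np.empty(len(y))
    for held_out in np.array_split(rng.permutation(len(y)), k):
        fit_idx = np.setdiff1d(np.arange(len(y)), held_out)
        scores[held_out] = make_model().fit(X[fit_idx], y[fit_idx]).risk(X[held_out])
    return scores


def risk_score_fusion(make_model, banks_train, y_train, banks_test, k=10):
    """Stack one risk score per feature bank and fit a second-level model on them."""
    train_scores = np.column_stack(
        [out_of_fold_scores(make_model, Xb, y_train, k) for Xb in banks_train])
    test_scores = np.column_stack(
        [make_model().fit(Xb, y_train).risk(Xt) for Xb, Xt in zip(banks_train, banks_test)])
    return make_model().fit(train_scores, y_train).risk(test_scores)


rng = np.random.default_rng(42)
y_train, y_test = np.repeat([0, 1], 34), np.repeat([0, 1], 15)

def synthetic_bank(y, n_features):
    # Hypothetical feature bank with a modest shift for deaths (label 1)
    return rng.normal(size=(len(y), n_features)) + 0.8 * y[:, None]

pyrad_tr, lung_tr = synthetic_bank(y_train, 20), synthetic_bank(y_train, 5)
pyrad_te, lung_te = synthetic_bank(y_test, 20), synthetic_bank(y_test, 5)
test_risk = risk_score_fusion(CentroidRisk, [pyrad_tr, lung_tr], y_train, [pyrad_te, lung_te])
print(test_risk.shape)  # (30,)
```

Reusing the seed keeps the fold assignment identical across feature banks, so both training risk scores for a patient are out-of-fold with respect to the same split; the source does not say whether the folds were shared.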

To address the imbalanced outcome in the training cohort, the SMOTE algorithm42 was applied during the training of all five models, as SMOTE's performance has been shown to be comparable to that of more recent balancing methods such as ADASYN43. The following settings were used for the SMOTE algorithm:

- k (number of nearest neighbours used to generate new examples of the minority class) = 5.

- perc.over = 200, perc.under = 200 (a common default setting to balance the amount of over-sampling of the minority class and under-sampling of the majority class).
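The sketch below shows the SMOTE interpolation step and the class-size arithmetic implied by these settings. It is an illustrative NumPy re-implementation, not the R code used in the study. `smote_counts` assumes the semantics of the `SMOTE` function in the R package DMwR, where `perc.over/100` synthetic cases are generated per minority case and `perc.under/100` majority cases are kept per synthetic case; `perc_over` is assumed to be a multiple of 100.

```python
import numpy as np

def smote(minority, perc_over=200, k=5, seed=0):
    """Create perc_over/100 synthetic cases per minority case, each placed at a
    random point on the segment to one of the case's k nearest minority neighbours."""
    rng = np.random.default_rng(seed)
    dist = np.linalg.norm(minority[:, None, :] - minority[None, :, :], axis=2)
    np.fill_diagonal(dist, np.inf)                # a case is not its own neighbour
    neighbours = np.argsort(dist, axis=1)[:, :k]  # indices of the k nearest cases
    synthetic = []
    for i, case in enumerate(minority):
        for _ in range(perc_over // 100):
            partner = minority[rng.choice(neighbours[i])]
            synthetic.append(case + rng.random() * (partner - case))
    return np.array(synthetic)

def smote_counts(n_minority, perc_over=200, perc_under=200):
    """Class sizes after balancing, assuming DMwR-style perc.over/perc.under semantics."""
    n_synthetic = n_minority * (perc_over // 100)
    n_majority_kept = n_synthetic * perc_under // 100
    return n_minority + n_synthetic, n_majority_kept

rng = np.random.default_rng(0)
survivors = rng.normal(size=(30, 4))  # synthetic minority-class feature rows
new_cases = smote(survivors)
print(new_cases.shape, smote_counts(30))  # (60, 4) (90, 120)
```

Under these assumptions, applying the settings once to the 30 two-year survivors of the training cohort would yield 90 minority cases and 120 majority cases.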

The area under the ROC curve (AUC) was used to measure the performance of the five approaches44. Youden's J statistic was used to identify the optimal threshold balancing sensitivity and specificity45. DeLong tests were applied to test the differences between the AUCs of the models. Classification modelling, AUC calculation, and DeLong tests were performed using the "caret", "survival", and "pROC" packages in R (version 3.5.1)46–48.
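The study computed these quantities with pROC in R (whose `roc.test` offers a DeLong comparison). For illustration, the NumPy sketch below re-derives them: AUC as the Mann–Whitney probability that a death outscores a survivor (ties count one half), Youden's J over all observed cut-offs, and the DeLong test for two correlated AUCs from per-patient structural components. Function names are our own and the scores are synthetic.

```python
import math
import numpy as np

def _psi(pos, neg):
    """1 if a positive outscores a negative, 0.5 for a tie, 0 otherwise."""
    diff = pos[:, None] - neg[None, :]
    return (diff > 0) + 0.5 * (diff == 0)

def auc(scores, labels):
    scores, labels = np.asarray(scores, float), np.asarray(labels, bool)
    return float(_psi(scores[labels], scores[~labels]).mean())

def youden_threshold(scores, labels):
    """Cut-off t (predict positive if score >= t) maximising J = sens + spec - 1."""
    scores, labels = np.asarray(scores, float), np.asarray(labels, bool)
    best_j, best_t = -np.inf, None
    for t in np.unique(scores):
        pred = scores >= t
        sens = (pred & labels).sum() / labels.sum()
        spec = (~pred & ~labels).sum() / (~labels).sum()
        if sens + spec - 1.0 > best_j:
            best_j, best_t = sens + spec - 1.0, float(t)
    return best_t, float(best_j)

def delong_test(scores_a, scores_b, labels):
    """Two-sided DeLong test comparing two correlated AUCs on the same patients."""
    labels = np.asarray(labels, bool)
    aucs, v10, v01 = [], [], []
    for s in (np.asarray(scores_a, float), np.asarray(scores_b, float)):
        psi = _psi(s[labels], s[~labels])
        aucs.append(psi.mean())
        v10.append(psi.mean(axis=1))  # structural components of the positives
        v01.append(psi.mean(axis=0))  # structural components of the negatives
    m, n = labels.sum(), (~labels).sum()
    cov = np.cov(np.vstack(v10)) / m + np.cov(np.vstack(v01)) / n
    var = cov[0, 0] + cov[1, 1] - 2.0 * cov[0, 1]
    z = (aucs[0] - aucs[1]) / math.sqrt(var)
    return float(aucs[0]), float(aucs[1]), math.erfc(abs(z) / math.sqrt(2.0))

rng = np.random.default_rng(7)
y = np.repeat([1, 0], [11, 19])              # 11 deaths, 19 survivors
model_a = y + rng.normal(scale=0.8, size=30)  # synthetic scores
model_b = y + rng.normal(scale=2.0, size=30)
print(delong_test(model_a, model_b, y))
```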

Results

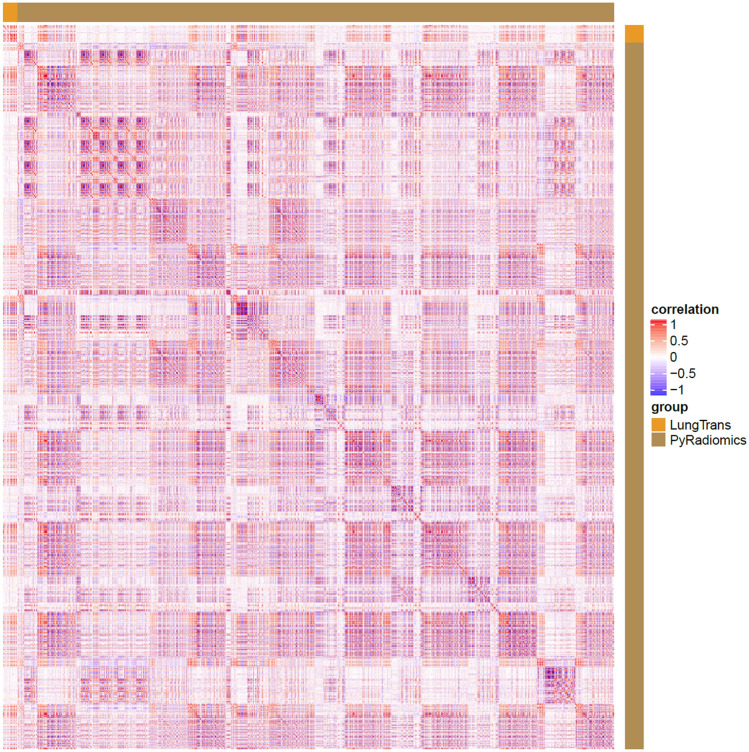

Correlation analysis between predefined and deep radiomic features

Within each feature bank, the mean absolute Pearson correlation coefficients among the 1,428 PyRadiomics features and among the 35 LungTrans features were 0.27 (standard deviation: 0.23) and 0.32 (standard deviation: 0.32), respectively. The mean absolute correlation coefficient between PyRadiomics and LungTrans features was 0.17 (standard deviation: 0.18). This weak linear relationship between PyRadiomics and LungTrans features suggests that the LungTrans features may harbour information that PyRadiomics does not capture.

The heatmap in Fig. 3 shows the detailed correlations between the two feature sets. Each dot in Fig. 3 represents a correlation coefficient: white indicates a coefficient of 0, while red and blue dots represent positive or negative correlations. Several colour blocks in the PyRadiomics vs. PyRadiomics region indicate high correlations among PyRadiomics features. Several colour bands in the PyRadiomics vs. LungTrans region also suggest that some LungTrans features have strong linear relationships with PyRadiomics features.

Figure 3.

Correlation heatmap of PyRadiomics and LungTrans features.

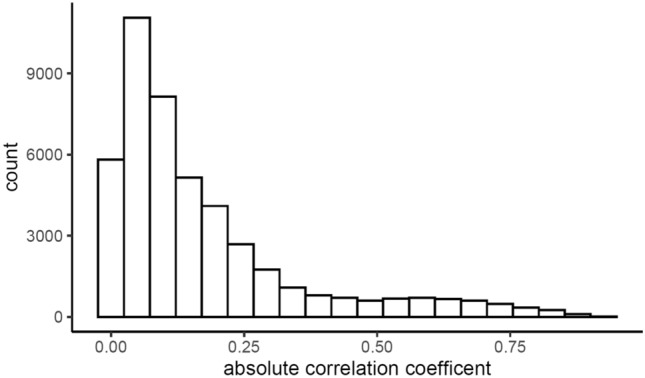

Fig. 4 shows the distribution of the absolute correlation coefficients as a histogram. As the skewed distribution illustrates, most predefined and deep radiomic features are only weakly correlated with one another. However, some feature pairs have high correlation coefficients (> 0.70), indicating strong linear associations between certain features49. More details on the correlation between PyRadiomics and LungTrans features are given in Table 3, where the mean absolute correlation coefficients were calculated for each type of filter and feature.

Figure 4.

Histogram of absolute correlation coefficients between PyRadiomics and LungTrans.

Table 3.

Mean absolute correlation coefficients between PyRadiomics and LungTrans features across different types of filters and features.

| Filter/features | First order | glcm | gldm | glrlm | glszm | ngtdm | Shape |
|---|---|---|---|---|---|---|---|
| Exponential | 0.18 | | 0.06 | 0.08 | 0.07 | | |
| Gradient | 0.32 | 0.32 | 0.22 | 0.20 | 0.27 | 0.31 | |
| lbp | 0.08 | | 0.06 | 0.08 | 0.07 | | |
| Logarithm | 0.14 | 0.13 | 0.11 | 0.12 | 0.10 | 0.11 | |
| Original | 0.24 | 0.23 | 0.16 | 0.17 | 0.19 | 0.27 | 0.06 |
| Square | 0.27 | 0.42 | 0.24 | 0.25 | 0.32 | 0.36 | |
| Square root | 0.19 | 0.18 | 0.14 | 0.15 | 0.14 | 0.19 | |
| Wavelet | 0.26 | 0.18 | 0.14 | 0.14 | 0.14 | 0.16 | |

Performance of the proposed prognosis model

The performance of the four existing feature reduction methods (PCA, Boruta, feature-wise selection through CPH, and LASSO) was compared with that of the proposed risk score-based prognosis model. In the training cohort, PCA generated 30 components that together explain 95% of the variance in the original 1,463 features from the PyRadiomics (1,428 features) and LungTrans (35 features) feature banks. Over 100 iterations, the Boruta method selected only 1 feature in the training cohort, from the PyRadiomics feature bank (Wavelet GLDM Small Dependence Low Gray Level Emphasis), with a p-value cut-off of 0.05. The CPH method identified 310 features associated with overall survival in the training cohort. As shown in Table 4, 308 of them belong to the PyRadiomics feature bank, while LungTrans contributed only 2 features. While some of these PyRadiomics features have previously been identified for PDAC prognosis (e.g., SumEntropy8), other well-known features such as ROI size were not significant. The LASSO model identified 14 features as potential prognostic biomarkers (3 from LungTrans and 11 from PyRadiomics). The proposed risk score-based model used the probabilities from the two individually trained Random Forest models. The performance of all five models was measured using the AUC for overall survival in the test cohort.
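The number of principal components needed for a target share of variance can be read off the cumulative explained variance. A minimal NumPy sketch follows; it assumes features were standardized before PCA (the source does not say) and that constant features were removed first. Note that with 68 training patients, at most 67 components can carry variance after centring, however many features are fused.

```python
import numpy as np

def pca_components_for_variance(X, target=0.95):
    """Smallest number of principal components whose cumulative explained
    variance reaches `target`, plus the corresponding component scores.
    Standardising each feature first is an assumption, not stated in the source."""
    Z = (X - X.mean(axis=0)) / X.std(axis=0, ddof=1)
    _, s, vt = np.linalg.svd(Z, full_matrices=False)
    explained = s ** 2 / np.sum(s ** 2)
    n_comp = int(np.searchsorted(np.cumsum(explained), target)) + 1
    return n_comp, Z @ vt[:n_comp].T

rng = np.random.default_rng(3)
X = rng.normal(size=(68, 3)) @ rng.normal(size=(3, 200))  # rank-3 synthetic data
n_comp, scores = pca_components_for_variance(X)
print(n_comp, scores.shape)
```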

Table 4.

Significant PyRadiomics features in univariate CPH across different types of filters and features.

| Filter/feature | First order | glcm | gldm | glrlm | glszm | ngtdm | Shape | Total |
|---|---|---|---|---|---|---|---|---|
| Exponential | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 1 |
| Gradient | 4 | 11 | 5 | 7 | 6 | 1 | 0 | 34 |
| Local binary pattern | 9 | 0 | 0 | 4 | 0 | 0 | 0 | 13 |
| Logarithm | 1 | 0 | 1 | 0 | 2 | 1 | 0 | 5 |
| Original | 6 | 12 | 5 | 7 | 7 | 1 | 1 | 39 |
| Square root | 5 | 11 | 4 | 2 | 6 | 2 | 0 | 30 |
| Wavelet | 50 | 67 | 15 | 30 | 19 | 5 | 0 | 186 |
| Total | 75 | 101 | 30 | 51 | 40 | 10 | 1 | 308 |

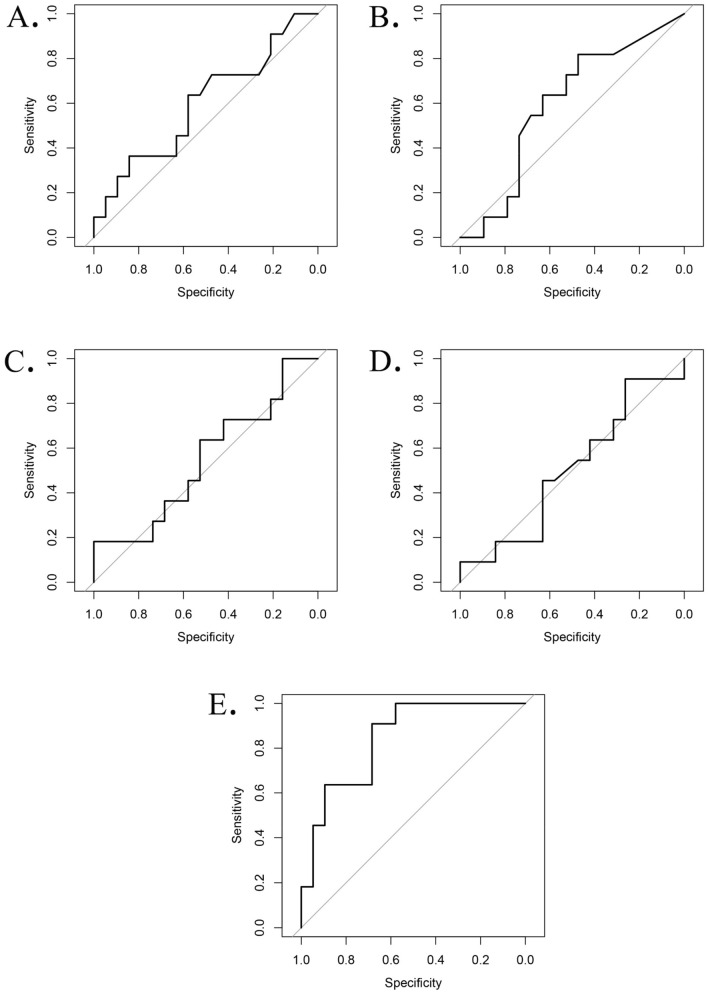

In the test cohort, the AUCs for the PCA, Boruta, CPH, and LASSO methods were 0.60 (95% confidence interval (CI): 0.37–0.82), 0.60 (95% CI: 0.38–0.81), 0.55 (95% CI: 0.32–0.77), and 0.50 (95% CI: 0.28–0.72), respectively. The proposed risk score-based method produced the highest AUC (0.84, 95% CI: 0.70–0.98).

Comparing the methods using DeLong tests, the AUC of the proposed risk score-based method was significantly higher than those of PCA (0.84 vs. 0.60, p = 0.044, FDR-adjusted p = 0.044), Boruta (0.84 vs. 0.60, p = 0.040, FDR-adjusted p = 0.044), Cox regression (0.84 vs. 0.55, p = 0.0086, FDR-adjusted p = 0.017), and LASSO (0.84 vs. 0.50, p = 0.0062, FDR-adjusted p = 0.017). These results suggest that a risk score model based on probabilities calculated by multiple small individual models outperformed the other models. The ROC curves for the four traditional feature reduction methods (PCA, Boruta, CPH, and LASSO) and the proposed risk score-based model are shown in Fig. 5.
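The source does not name its FDR procedure, but applying the Benjamini–Hochberg step-up procedure to the four raw DeLong p-values reproduces the reported adjusted values. A short pure-Python sketch:

```python
def benjamini_hochberg(pvalues):
    """Benjamini-Hochberg adjusted p-values (step-up FDR control)."""
    m = len(pvalues)
    order = sorted(range(m), key=lambda i: pvalues[i])  # indices by ascending p
    adjusted = [0.0] * m
    running_min = 1.0
    for rank in range(m, 0, -1):  # walk from the largest p-value down
        i = order[rank - 1]
        running_min = min(running_min, pvalues[i] * m / rank)
        adjusted[i] = running_min
    return adjusted

raw = {"PCA": 0.044, "Boruta": 0.040, "CPH": 0.0086, "LASSO": 0.0062}
adj = benjamini_hochberg(list(raw.values()))
for name, p in zip(raw, adj):
    print(f"{name}: {p:.3f}")
# PCA: 0.044
# Boruta: 0.044
# CPH: 0.017
# LASSO: 0.017
```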

Figure 5.

ROC curves of models using the five feature reduction/fusion methods. (A) ROC curve for PCA based fusion method, AUC = 0.60, specificity = 0.58, sensitivity = 0.64. (B) ROC curve for Boruta based feature reduction method, AUC = 0.60, specificity = 0.47, sensitivity = 0.48. (C) ROC curve for CPH based feature reduction method, AUC = 0.55, specificity = 1.00, sensitivity = 0.18. (D) ROC curve for LASSO based feature selection method, AUC = 0.50, specificity = 0.26, sensitivity = 0.91. (E) ROC curve for the proposed risk-score based feature fusion method, AUC = 0.84, specificity = 0.68, sensitivity = 0.91.

Discussion

As deep transfer learning becomes increasingly popular in medical imaging studies, there is an urgent need to identify an optimal feature reduction and fusion method that can combine the information in traditional radiomics and transfer learning features. In this study, we proposed a risk score-based feature reduction and fusion method for a medical imaging-based model for PDAC prognosis. We found that the proposed risk score-based method had significantly better prognostic performance than traditional supervised and unsupervised methods, increasing the AUC by at least 40% (from 0.60 with PCA to 0.84). This result is consistent with previous studies showing that ensemble methods can outperform traditional feature-wise selection models50–52.

As deep transfer learning plays an increasingly important role in medical image analysis, the curse of dimensionality is becoming more acute in radiomics-based prognosis models1. Supervised feature reduction methods such as univariate CPH and Boruta struggle to balance the false positive rate against statistical power. When 1,463 features (1,428 PyRadiomics features and 35 LungTrans features) are tested with univariate CPH at a significance level of 0.05, the probability of at least one false positive (the FWER) exceeds 99% if the tests are independent. Hence, supervised feature reduction methods may lose their effectiveness as feature banks continue to grow. In addition, PCA, an unsupervised method, did not improve prognostic performance due to the inherent noise in image features. Feature reduction using a correlation cut-off with LASSO was previously used in a similar study of Glioblastoma prognosis31, but this method also performed poorly in our independent test cohort. Ensemble methods, which use multiple models to generate risk scores, may overcome these limitations of traditional feature reduction methods53,54. Additionally, since the risk scores were generated by a nonlinear classifier (Random Forest), they are nonlinear mappings of the original feature space, which fit patients' survival patterns better and lead to a higher AUC.
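The FWER figure follows from a one-line calculation if all 1,463 null hypotheses are true and the tests are independent. Because many features are correlated, the effective number of independent tests is smaller, so this is an idealized calculation rather than an exact value for these data. The sketch also shows the Bonferroni per-test threshold that would cap the FWER at 0.05.

```python
alpha, n_tests = 0.05, 1463

# P(at least one false positive) when every null hypothesis is true and the
# tests are independent: 1 - (1 - alpha)^m.
fwer_independent = 1 - (1 - alpha) ** n_tests

# Bonferroni: testing each feature at alpha / m caps the FWER at alpha
# under any dependence structure.
bonferroni_alpha = alpha / n_tests

print(f"FWER = {fwer_independent:.4f}, Bonferroni threshold = {bonferroni_alpha:.2e}")
# FWER = 1.0000, Bonferroni threshold = 3.42e-05
```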

It is worth noting that, although certain transfer learning and PyRadiomics features had high Pearson correlation coefficients, most transfer learning features have weak linear relationships with PyRadiomics features. The nature of PyRadiomics and LungTrans features differs: a PyRadiomics feature is computed from medical images using a predefined formula, whereas LungTrans features are extracted using parameters learned from lung CT images. This result suggests that transfer learning and PyRadiomics features are complementary rather than interchangeable. We therefore hypothesized that fusing the two feature banks might provide more information to the prognosis model. Future studies can further test the associations between conventional radiomics features and transfer learning features from different pretrained models. A thorough understanding of these associations will provide a solid foundation for developing more sophisticated feature fusion methods, which may further improve prognostic performance for different cancer types.

Although the proposed risk score-based method outperformed traditional approaches, it has limitations. First, unlike supervised methods, in which specific biomarkers are identified during the process, the risk score method is hard to interpret because the stacked model is built on the outputs (probabilities) of other models. If an intuitive algorithm such as logistic regression were used instead of Random Forests, one could in principle derive the final prognostic probability (risk score) from the original features through mathematical formulations, but this would be a complicated task. Second, although lung cancer and pancreatic cancer are both adenocarcinomas, pancreatic cancer tends to exhibit much more stromal reaction, so the features relevant to prognosis might be expected to differ. The effect of this on the transfer learning model is uncertain, and further validation with a variety of adenocarcinoma types may be of interest to determine whether some transfer learning features are invariant across tumour types. Third, for practical applications, a model must include other known prognostic factors. For pancreatic cancer, these include variables such as age, tumour size, grade, and stage. Although it has been shown that none of these clinical variables is prognostic of overall survival in PDAC patients8 and that adding them to radiomic features does not improve the prognostic model8, further work is needed to incorporate them into a practical prognostic model for PDAC. Fourth, the aim of this paper was primarily to explore approaches for fusing radiomics and transfer learning features. We recognize that validation in a larger cohort, with careful attention to covariates, will be required for practical application and to examine the effectiveness of the proposed feature fusion method.

Conclusion

Deep radiomics features are complementary to conventional radiomics features. Through the proposed risk score-based prognosis model by fusing deep transfer learning and radiomics features, prognostication performance for resectable PDAC patients showed significant improvement compared to that of the traditional feature fusion and reduction methods.

Acknowledgements

This study was conducted with support of the Ontario Institute for Cancer Research (PanCuRx Translational Research Initiative) through funding provided by the Government of Ontario, the Wallace McCain Centre for Pancreatic Cancer supported by the Princess Margaret Cancer Foundation, the Terry Fox Research Institute, the Canadian Cancer Society Research Institute, and the Pancreatic Cancer Canada Foundation. The study was also supported by charitable donations from the Canadian Friends of the Hebrew University (Alex U. Soyka).

Author contributions

Y.Z., M.A.H., and F.K. contributed to the design of the concept. E.M.L., P.K., S.G., and M.A.H. contributed to collecting and reviewing the data. Y.Z. and F.K. contributed to the design and implementation of the quantitative imaging feature extraction and machine learning modules. All authors contributed to the writing and reviewing of the paper. F.K. and M.A.H. are co-senior authors. All authors read and approved the final manuscript.

Data availability

The datasets generated and/or analyzed during the current study are available from the corresponding author on reasonable request pending the approval of the institution(s) and trial/study investigators who contributed to the dataset.

Competing interests

The authors declare no competing interests.

Footnotes

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

1. Yip SSF, Aerts HJWL. Applications and limitations of radiomics. Phys. Med. Biol. 2016;61:R150–66. doi: 10.1088/0031-9155/61/13/R150.

2. Parmar C, Grossmann P, Bussink J, Lambin P, Aerts HJWL. Machine learning methods for quantitative radiomic biomarkers. Sci. Rep. 2015;5:13087. doi: 10.1038/srep13087.

3. Kumar V, et al. Radiomics: the process and the challenges. Magn. Reson. Imaging. 2012;30:1234–1248. doi: 10.1016/j.mri.2012.06.010.

4. Aerts HJWL, et al. Decoding tumour phenotype by noninvasive imaging using a quantitative radiomics approach. Nat. Commun. 2014;5:4006. doi: 10.1038/ncomms5006.

5. Aerts HJWL. The potential of radiomic-based phenotyping in precision medicine. JAMA Oncol. 2016;2:1636. doi: 10.1001/jamaoncol.2016.2631.

6. Hawkins S, et al. Predicting malignant nodules from screening CT scans. J. Thorac. Oncol. 2016;11:2120–2128. doi: 10.1016/j.jtho.2016.07.002.

7. Eilaghi A, Baig S, Zhang Y, Zhang J, Karanicolas P, Gallinger S, Khalvati F, Haider MA. CT texture features are associated with overall survival in pancreatic ductal adenocarcinoma - a quantitative analysis. BMC Med. Imaging. 2017;17:38. doi: 10.1186/s12880-017-0209-5.

8. Khalvati F, et al. Prognostic value of CT radiomic features in resectable pancreatic ductal adenocarcinoma. Sci. Rep. 2019;9:5449. doi: 10.1038/s41598-019-41728-7.

9. Zhang Y, Oikonomou A, Wong A, Haider MA, Khalvati F. Radiomics-based prognosis analysis for non-small cell lung cancer. Nat. Sci. Rep. 2017;7:1. doi: 10.1038/s41598-016-0028-x.

10. Lambin P, et al. Radiomics: extracting more information from medical images using advanced feature analysis. Eur. J. Cancer. 2012;48:441–446. doi: 10.1016/j.ejca.2011.11.036.

11. Oikonomou A, Khalvati F, et al. Radiomics analysis at PET/CT contributes to prognosis of recurrence and survival in lung cancer treated with stereotactic body radiotherapy. Sci. Rep. 2018;8:1. doi: 10.1038/s41598-018-22357-y.

12. Haider MA, et al. CT texture analysis: a potential tool for prediction of survival in patients with metastatic clear cell carcinoma treated with sunitinib. Cancer Imaging. 2017;17:1. doi: 10.1186/s40644-017-0106-8.

13. Khalvati F, Zhang Y, Wong A, Haider MA. Radiomics. Encyclop. Biomed. Eng. 2019;2:597–603. doi: 10.1016/B978-0-12-801238-3.99964-1.

14. Lambin P, et al. Radiomics: the bridge between medical imaging and personalized medicine. Nat. Rev. Clin. Oncol. 2017;14:749–762. doi: 10.1038/nrclinonc.2017.141.

15. van Griethuysen JJM, et al. Computational radiomics system to decode the radiographic phenotype. Cancer Res. 2017;77:e104–e107. doi: 10.1158/0008-5472.CAN-17-0339.

16. Li Y, et al. MRI features predict p53 status in lower-grade gliomas via a machine-learning approach. NeuroImage Clin. 2018;17:306–311. doi: 10.1016/j.nicl.2017.10.030.

17. Li H, et al. MR imaging radiomics signatures for predicting the risk of breast cancer recurrence as given by research versions of MammaPrint, Oncotype DX, and PAM50 gene assays. Radiology. 2016;281:382–391. doi: 10.1148/radiol.2016152110.

18. Parmar C, et al. Robust radiomics feature quantification using semiautomatic volumetric segmentation. PLoS ONE. 2014;9:e102107. doi: 10.1371/journal.pone.0102107.

19. Traverso A, Wee L, Dekker A, Gillies R. Repeatability and reproducibility of radiomic features: a systematic review. Int. J. Radiat. Oncol. 2018;102:1143–1158. doi: 10.1016/j.ijrobp.2018.05.053.

20. Sanduleanu S, et al. Tracking tumor biology with radiomics: a systematic review utilizing a radiomics quality score. Radiother. Oncol. 2018;127:349–360. doi: 10.1016/j.radonc.2018.03.033.

21. Chen S-Y, Feng Z, Yi X. A general introduction to adjustment for multiple comparisons. J. Thorac. Dis. 2017;9:1725–1729. doi: 10.21037/jtd.2017.05.34.

22. Krizhevsky A, Sutskever I, Hinton GE. ImageNet classification with deep convolutional neural networks. In: Proceedings of the 25th International Conference on Neural Information Processing Systems; 2012.

23. Yamashita R, Nishio M, Do RKG, Togashi K. Convolutional neural networks: an overview and application in radiology. Insights Imaging. 2018;9:611–629. doi: 10.1007/s13244-018-0639-9.

24. Irvin J, et al. CheXpert: a large chest radiograph dataset with uncertainty labels and expert comparison. In: The Thirty-Third AAAI Conference on Artificial Intelligence (AAAI-19); 2019.

25. Pan SJ, Yang Q. A survey on transfer learning. IEEE Trans. Knowl. Data Eng. 2010;22:1345–1359. doi: 10.1109/TKDE.2009.191.

26. Tan C, et al. A survey on deep transfer learning. In: Kůrková V, et al., editors. International Conference on Artificial Neural Networks. Cham: Springer; 2018. p. 270–279.

27. He K, Girshick R, Dollár P. Rethinking ImageNet pre-training. In: IEEE/CVF International Conference on Computer Vision (ICCV). Seoul: IEEE; 2019.

28. Hubel DH, Wiesel TN. Receptive fields, binocular interaction and functional architecture in the cat’s visual cortex. J. Physiol. 1962;160:106–54. doi: 10.1113/jphysiol.1962.sp006837.

29. George D, Shen H, Huerta EA. Deep transfer learning: a new deep learning glitch classification method for advanced LIGO. 2017.

30. Torrey L, Shavlik J. Transfer learning. In: Soria E, et al., editors. Handbook of Research on Machine Learning Applications. IGI Global; 2009.

31. Lao J, et al. A deep learning-based radiomics model for prediction of survival in glioblastoma multiforme. Sci. Rep. 2017;7:10353. doi: 10.1038/s41598-017-10649-8.

32. Zhang Y, Lobo-Mueller EM, Karanicolas P, Gallinger S, Haider MA, Khalvati F. CNN-based survival model for pancreatic ductal adenocarcinoma in medical imaging. BMC Med. Imaging. 2020;20:11. doi: 10.1186/s12880-020-0418-1.

33. Zhang Y, Lobo-Mueller EM, Karanicolas P, Gallinger S, Haider MA, Khalvati F. Prognostic value of transfer learning based features in resectable pancreatic ductal adenocarcinoma. Front. Artif. Intell. 2020;3:550890. doi: 10.3389/frai.2020.550890.

34. Kursa MB, Rudnicki WR. Feature selection with the Boruta package. J. Stat. Softw. 2010;36:1–13. doi: 10.18637/jss.v036.i11.

35. Fox J, Weisberg S. Cox proportional-hazards regression for survival data in R. Most. 2011. doi: 10.1016/j.carbon.2010.02.029.

36. Tibshirani R. Regression shrinkage and selection via the lasso. J. R. Stat. Soc. Ser. B. 1996;58:267–288.

37. Zhang J, Baig S, Wong A, Haider MA, Khalvati F. A local ROI-specific atlas-based segmentation of prostate gland and transitional zone in diffusion MRI. J. Comput. Vis. Imaging Syst. 2016.

38. He K, Zhang X, Ren S, Sun J. Deep residual learning for image recognition. In: 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR). IEEE; 2016. p. 770–778. doi: 10.1109/CVPR.2016.90.

39. Armato SG, et al. The lung image database consortium (LIDC) and image database resource initiative (IDRI): a completed reference database of lung nodules on CT scans. Med. Phys. 2011;38:915–31. doi: 10.1118/1.3528204.

40. De Wit J. Kaggle Data Science Bowl 2017. 2017. Available at: https://github.com/juliandewit/kaggle_ndsb2017 (Accessed: 3 November 2019).

41. Breiman L. Random forests. Mach. Learn. 2001;45:5–32. doi: 10.1023/A:1010933404324.

42. Blagus R, et al. SMOTE for high-dimensional class-imbalanced data. BMC Bioinform. 2013;14:106. doi: 10.1186/1471-2105-14-106.

43. Xie C, et al. Effect of machine learning re-sampling techniques for imbalanced datasets in 18F-FDG PET-based radiomics model on prognostication performance in cohorts of head and neck cancer patients. Eur. J. Nucl. Med. Mol. Imaging. 2020;47:2826–2835. doi: 10.1007/s00259-020-04756-4.

44. Fawcett T. An introduction to ROC analysis. Pattern Recogn. Lett. 2006;27(8):861–874. doi: 10.1016/j.patrec.2005.10.010.

45. Youden WJ. Index for rating diagnostic tests. Cancer. 1950;3:32–35. doi: 10.1002/1097-0142(1950)3:1<32::AID-CNCR2820030106>3.0.CO;2-3.

46. Robin X, et al. pROC: an open-source package for R and S+ to analyze and compare ROC curves. BMC Bioinform. 2011;12:77. doi: 10.1186/1471-2105-12-77.

47. Kuhn M. Building predictive models in R using the caret package. J. Stat. Softw. 2008;28:1–26. doi: 10.18637/jss.v028.i05.

48. Therneau TM. Package ‘survival’. 2018.

49. Mukaka MM. Statistics corner: a guide to appropriate use of correlation coefficient in medical research. Malawi Med. J. 2012;24:69–71.

50. Breiman L. Stacked regressions. Mach. Learn. 1996;24:49–64.

51. Dietterich TG. Ensemble methods in machine learning. Berlin: Springer; 2000. p. 1–15. doi: 10.1007/3-540-45014-9_1.

52. Rokach L. Ensemble methods for classifiers. In: Data Mining and Knowledge Discovery Handbook. Springer; 2005. p. 957–980. doi: 10.1007/0-387-25465-X_45.

53. Suk H-I, Shen D. Deep ensemble sparse regression network for Alzheimer’s disease diagnosis. 2016. p. 113–121. doi: 10.1007/978-3-319-47157-0_14.

54. Yang P, Yang YH, Zhou BB, Zomaya AY. A review of ensemble methods in bioinformatics: including stability of feature selection and ensemble feature selection methods (updated on 28 Sep. 2016).
