Abstract

Cross-modal interaction (CMI) can significantly influence perceptual and decision-making processes in many circumstances. However, it remains poorly understood what integrative strategies the brain employs to deal with different task contexts. To explore this, we examined neural activity in the medial prefrontal cortex (mPFC) of rats performing cue-guided two-alternative forced-choice tasks. In a task requiring rats to discriminate stimuli based on an auditory cue, the simultaneous presentation of an uninformative visual cue substantially strengthened the ability of mPFC neurons to discriminate auditory cues, mainly by enhancing the response to the preferred cue. It also increased the number of neurons showing a cue preference. When the task was changed slightly so that a visual cue, like the auditory cue, denoted a specific behavioral direction, mPFC neurons frequently showed a different CMI pattern, with cross-modal enhancement best evoked in information-congruent multisensory trials. In a choice-free task, however, most neurons showed neither cross-modal enhancement nor cue preference. These results indicate that CMI at the neuronal level is context-dependent in a way that differs from what has been shown in previous studies.

Keywords: Behavioral training, Cross-modal interaction, Decision making, Medial prefrontal cortex, Multisensory

Introduction

In real life, we often receive multiple sensory cues simultaneously (most of them visual and auditory). The brain must combine them appropriately and reach an effective decision about what the combination represents. During this process, the brain must determine which sensory inputs are related and which integrative strategy is appropriate. Over the past three decades, this process of cross-modal interaction (CMI), or multisensory integration, has been widely examined in many brain areas, such as the superior colliculus and both primary sensory and association cortices [1–6]. Several integrative principles governing this process have been derived (the spatial, temporal, and inverse-effectiveness principles) and shown to operate in many brain areas [2]. In the classic example, multisensory neurons in the superior colliculus can show greatly enhanced responses to spatiotemporally congruent multisensory cues [7]. Similarly, in monkeys performing a heading-discrimination task, neurons in several cortical regions, such as the dorsal medial superior temporal area, have shown enhanced heading selectivity when matched visual and vestibular cues are given simultaneously [8]. Likewise, effective integration of cross-modal cues has been found to improve perceptual performance [9–11] and shorten reaction times [12–14].

A growing number of studies show that CMI can also significantly modulate decision-related neural activity in many cortical regions [15]. Psychophysical studies report that perceptual decision-making often relies on CMI [16, 17]. Neuroimaging studies have demonstrated that CMI can directly influence perceptual decisions in both association and sensory cortices [18–20]. At the neuronal level, several studies have examined the effect of CMI on perceptual decision-related activity [21–23]. Despite these discoveries, the neural mechanisms underlying multisensory perceptual decisions remain largely unclear. One interesting but challenging question is what multisensory strategies the brain employs to deal with different task contexts.

To explore this, we examined perceptual decision-related activity in the medial prefrontal cortex (mPFC) while rats performed three different cue-guided two-alternative forced-choice tasks. Rodent mPFC receives multimodal cortico-cortical projections from the motor, somatosensory, visual, auditory, gustatory, and limbic cortices [24, 25]. Single-neuron activity in mPFC can be viewed as reflecting an ad hoc mixture of several task-related features, such as sensory stimuli, task rules, and possible motor responses [26–28]. Task 1 required rats to discriminate stimuli based on the auditory signal alone (two pure tones of different frequencies, sometimes paired with an invariant, uninformative visual cue) and then make a behavioral choice (left or right). Task 2 was more complex, as the visual cue was made informative for the behavioral choice. In Task 3, animals could make a free choice without any cue discrimination. As shown below, these three tasks demonstrated that CMI can significantly modulate perceptual decision signals in mPFC in a context-dependent manner.

Results

We performed three series of experiments. In each, we first trained animals to perform a specific cue-guided two-alternative forced-choice task and then examined mPFC neural activity during the task. All behavioral tasks were conducted in a training box (Fig. 1a). In Task 1 (details below), animals had to make a choice based on whether the auditory stimulus, or the auditory component of a multisensory cue, was a low- (3 kHz) or high-frequency (10 kHz) pure tone. Task 2 required animals to discriminate along two dimensions: the cue modality and, for cues containing a tone, the frequency of the auditory component. In Task 3, animals were not required to discriminate stimuli at all and could make a free choice. These tasks allowed us to investigate how mPFC multisensory perceptual decision strategies changed with task demands.

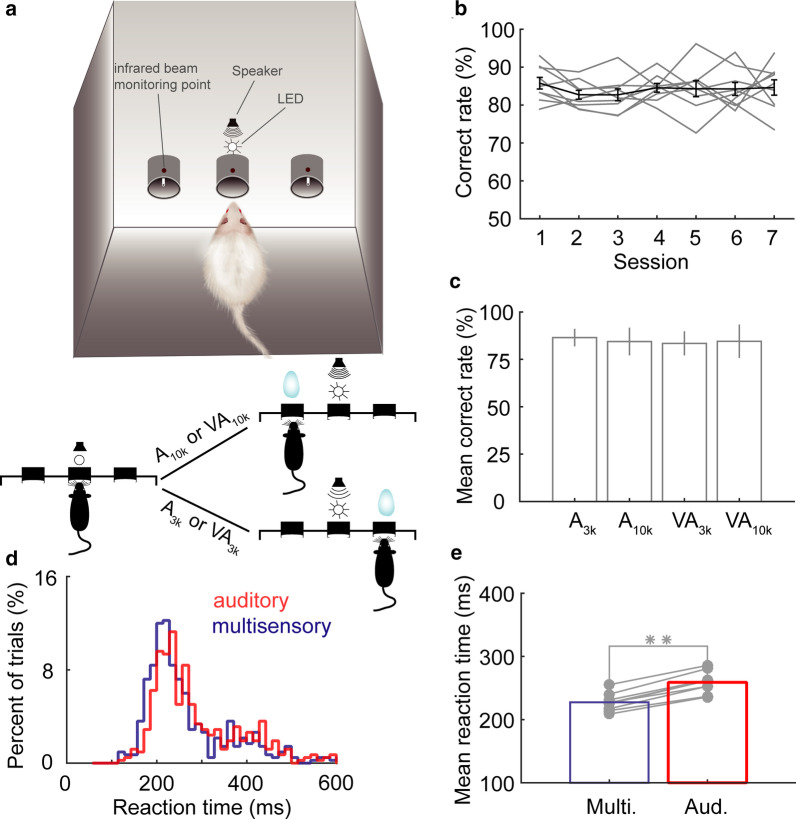

Fig. 1.

Stimulus discrimination task and behavioral performance. a Schematic of the behavioral paradigm. A trial started when a trained rat poked its nose into the central port. Next, an auditory stimulus alone or a combined auditory and visual stimulus was presented via a centrally positioned light-emitting diode (LED) and speaker to cue the location of a water reward. When the stimulus was either a 10 kHz pure tone (A10k) or a 10 kHz pure tone combined with a flash of light (VA10k), the animal was rewarded at the left port. If the stimulus was a 3 kHz pure tone alone (A3k) or the same tone paired with a flash of light (VA3k), the animal received the reward at the right port. Trials with the different stimulus combinations (A3k, A10k, VA3k, VA10k) were presented in randomized order. b The correct response rate (number of correct trials divided by the total number of trials; overall mean, black line) for 7 complete testing sessions from 9 well-trained animals (error bars, SEM). c The mean correct rate across all animals for each stimulus condition (error bars, SEM). d The distribution of reaction times in auditory (gray) and multisensory (black) trials for one well-trained animal. e Comparison of mean reaction times between auditory and multisensory trials across all animals. **, p < 0.001

The effect of an uninformative visual cue on mPFC neurons' multisensory perceptual decision

A total of 9 rats were trained to perform Task 1 (Fig. 1a). A trial was initiated when a rat poked its nose into the central port of a row of three ports on one wall of the training box (see Fig. 1a). After a waiting period of 500–700 ms, a cue randomly chosen from a set of four (3 kHz pure tone, A3k; 10 kHz pure tone, A10k; 3 kHz pure tone + flash of light, VA3k; 10 kHz pure tone + flash of light, VA10k) was presented in front of the central port. Based on the auditory cue, the rat had to choose a port (left or right) within 3 s to obtain a water reward. If the stimulus was A10k or VA10k, the rat had to move to the left port to collect the reward (Fig. 1a); any other cue indicated that the reward was at the right port. Rats readily learned this cue-guided two-alternative forced-choice task. Animals that performed the task correctly > 75% of the time in five consecutive sessions were deemed well trained and could then undergo implantation and, later, electrophysiological recording.
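The learning criterion above (> 75% correct in five consecutive sessions) amounts to a run-length check over per-session accuracies. The sketch below is illustrative only: the function names and the representation of accuracies as fractions between 0 and 1 are assumptions, not the authors' training software (which ran in MATLAB).

```python
def session_accuracy(n_correct, n_total):
    """Correct response rate: correct trials divided by all trials in a session."""
    return n_correct / n_total


def is_well_trained(accuracies, threshold=0.75, n_consecutive=5):
    """Return True once `n_consecutive` sessions in a row exceed `threshold`.

    `accuracies` is a chronological list of per-session correct rates (0-1).
    Hypothetical helper for illustration only.
    """
    run = 0
    for acc in accuracies:
        # extend the current streak, or reset it after a sub-threshold session
        run = run + 1 if acc > threshold else 0
        if run >= n_consecutive:
            return True
    return False


history = [0.62, 0.71, 0.78, 0.80, 0.77, 0.79, 0.81]
print(is_well_trained(history))  # True: the last five sessions all exceed 0.75
```

Because the criterion is strict (> 75%), a session at exactly 75% resets the streak in this sketch.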

Once the animals were well trained, their average behavioral performance stabilized at 84 ± 2.9% (Fig. 1b). Behavioral performance did not differ between auditory and multisensory cued trials (Fig. 1c). Nevertheless, the presence of the visual cue sped up cue discrimination. Reaction time, defined as the interval between cue onset and the moment the animal withdrew its nose from the infrared beam monitoring the central port (Fig. 1a), was compared between auditory and multisensory trials (Fig. 1d, e). Rats responded more quickly in multisensory trials, with a mean reaction time of 224 ± 14 ms across animals, significantly shorter than the 256 ± 17 ms in auditory trials (t(8) = −15.947, p < 0.00001, paired t-test).
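The reaction-time comparison pairs each animal's mean multisensory RT with its mean auditory RT. A minimal sketch of the paired t statistic, using only the standard library, is shown below; the per-animal values are hypothetical placeholders, not the study's data, and the function names are assumptions for illustration.

```python
import math
import statistics


def reaction_time_ms(cue_onset_ms, nose_withdrawal_ms):
    """Reaction time: from cue onset to nose withdrawal from the central-port beam."""
    return nose_withdrawal_ms - cue_onset_ms


def paired_t(x, y):
    """Paired t statistic and degrees of freedom for matched samples x and y."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length samples with n >= 2")
    diffs = [a - b for a, b in zip(x, y)]
    n = len(diffs)
    se = statistics.stdev(diffs) / math.sqrt(n)  # standard error of the mean difference
    return statistics.mean(diffs) / se, n - 1


# hypothetical per-animal mean RTs (ms)
multi = [220.0, 230.0, 228.0]
aud = [250.0, 260.0, 255.0]
t, df = paired_t(multi, aud)  # negative t: multisensory RTs are shorter
```

For a p-value, `scipy.stats.ttest_rel(multi, aud)` computes the same statistic together with its two-sided p-value from the t distribution.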

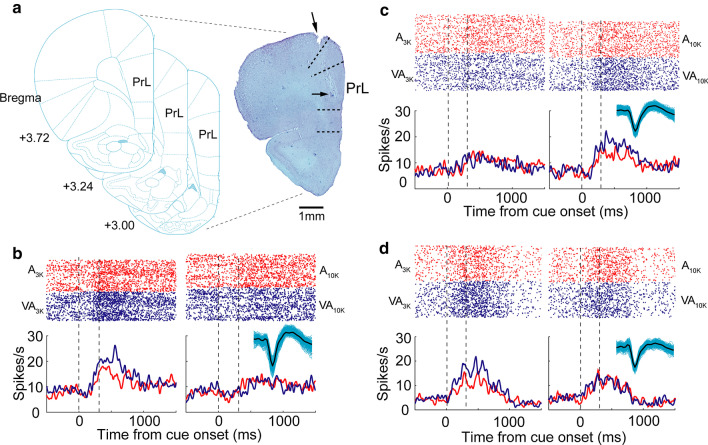

We used tetrode recordings to characterize the task-related activity of individual neurons in the left mPFC while well-trained rats performed Task 1 (Fig. 2a). On average, animals performed 266 ± 53 trials per daily session. A total of 654 neurons were recorded (65 ± 14 neurons per animal). Of these, 313 appeared to show cue-categorization signals within 500 ms after cue onset (firing rate in three consecutive bins ≥ spontaneous firing rate, Mann–Whitney Rank Sum Test, p < 0.05), and all further analyses focused on them. In the examples shown in Fig. 2b-d, cue-categorization signals appeared to discriminate well between auditory pure tones (low vs. high) and between sensory modalities (multisensory vs. auditory). For instance, in Fig. 2b the response in A3k trials is higher than in A10k trials, and the firing rate in VA3k trials is higher than in A3k trials. About 34% (107/313) of the neurons examined showed both cue-categorization signals and behavioral choice signals (coding movement direction). These two signals were easily separated because behavioral choice signals occurred much later than cue-categorization signals (typically later than 600 ms after cue onset) (Fig. 3a, b). Unlike cue-categorization signals, behavioral choice signals usually showed no difference between multisensory and auditory trials (Fig. 3a, b).
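The inclusion criterion for cue-categorization signals (three consecutive bins within 500 ms of cue onset at or above the spontaneous rate, with Mann–Whitney p < 0.05) can be sketched as a run-length search. This sketch assumes the per-bin p-values were already computed elsewhere (for example, by comparing trial-wise bin counts against baseline counts); the bin width and the function name `first_sustained_bin` are hypothetical, since the bin size for this criterion is not stated here.

```python
def first_sustained_bin(rates, baseline, pvals, bin_ms, window_ms=500.0,
                        n_consecutive=3, alpha=0.05):
    """Index of the first bin that starts `n_consecutive` qualifying bins.

    A bin qualifies if its rate is >= the spontaneous (baseline) rate and its
    precomputed p-value is below `alpha`. Only bins inside `window_ms` after
    cue onset (bin 0) are searched. Returns None if no qualifying run exists.
    """
    max_bins = int(window_ms // bin_ms)
    run = 0
    for i, (rate, p) in enumerate(zip(rates[:max_bins], pvals[:max_bins])):
        if rate >= baseline and p < alpha:
            run += 1
            if run == n_consecutive:
                return i - n_consecutive + 1
        else:
            run = 0  # the run must be unbroken
    return None


# hypothetical mean rates (Hz) and per-bin p-values for one neuron, 100-ms bins
onset = first_sustained_bin([5, 12, 13, 14, 6], 5, [0.5, 0.01, 0.02, 0.03, 0.4], bin_ms=100)
```

Under this sketch, a neuron would be kept for further analysis whenever the function returns a bin index rather than `None`.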

Fig. 2.

Neuronal activity during task performance and histological verification of recording sites. a Photograph of a stained brain section showing the electrode track (top arrow) and the final location of the electrode tip (bottom arrow). All recording sites used were similarly verified to be in the prelimbic area (PrL) of the medial prefrontal cortex. b Rasters (top rows) and peri-stimulus time histograms (PSTHs, bottom traces) showing a neuron's activity in A3k (left, red), VA3k (left, blue), A10k (right, red), and VA10k (right, blue) trials. Inset: action potentials of this example mPFC neuron (2000 single waveforms and their average, black). Mean spike counts of correct trials were computed in 10-ms time windows and smoothed with a Gaussian kernel (σ = 100 ms). Responses were aligned to cue onset. In multisensory trials, visual and auditory stimuli were presented simultaneously. Dashed lines denote stimulus onset and offset. c, d Two more example neurons, shown in the same way
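The PSTH procedure in this legend (mean spike counts in 10-ms bins, Gaussian smoothing with σ = 100 ms, alignment to cue onset) can be sketched as follows. Assumptions not stated in the legend: counts are converted to spikes per second, the kernel is truncated at ±3σ, and edge bins are handled by renormalizing the kernel rather than zero-padding. The function names are hypothetical.

```python
import math


def gaussian_kernel(sigma_bins, truncate=3.0):
    """Normalized Gaussian weights spanning +/- truncate * sigma (in bins)."""
    half = int(math.ceil(truncate * sigma_bins))
    w = [math.exp(-0.5 * (k / sigma_bins) ** 2) for k in range(-half, half + 1)]
    total = sum(w)
    return [x / total for x in w]


def smoothed_psth(trials, t_start_ms, t_stop_ms, bin_ms=10.0, sigma_ms=100.0):
    """Trial-averaged firing rate (Hz) in fixed bins, then Gaussian-smoothed.

    `trials` is a list of spike-time lists in ms, aligned so cue onset = 0.
    Returns (raw_rates, smoothed_rates).
    """
    n_bins = int(math.ceil((t_stop_ms - t_start_ms) / bin_ms))
    counts = [0] * n_bins
    for spikes in trials:
        for t in spikes:
            if t_start_ms <= t < t_stop_ms:
                counts[int((t - t_start_ms) // bin_ms)] += 1
    # mean spike count per bin, converted to spikes per second
    rates = [c / len(trials) / (bin_ms / 1000.0) for c in counts]
    kernel = gaussian_kernel(sigma_ms / bin_ms)
    half = len(kernel) // 2
    smoothed = []
    for i in range(n_bins):
        acc = norm = 0.0
        for k, w in enumerate(kernel):
            j = i + k - half
            if 0 <= j < n_bins:  # renormalize at the edges instead of zero-padding
                acc += w * rates[j]
                norm += w
        smoothed.append(acc / norm)
    return rates, smoothed


# one hypothetical trial with a spike in every 10-ms bin (a constant 100 Hz)
example_trials = [[i * 10.0 + 5.0 for i in range(100)]]
raw, smooth = smoothed_psth(example_trials, 0.0, 1000.0)
```

Renormalizing at the edges keeps a constant-rate input flat after smoothing; zero-padding would instead pull the first and last few hundred milliseconds toward zero.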

Fig. 3.

Cue-categorization and behavioral-choice related activity in mPFC neurons. a Rasters and PSTHs show a neuron's activity in correct (dark color) and error (light color) trials for each cue condition. Note that the cue-categorization signal preceded a behavioral choice signal (dashed rectangle). b Another example neuron. Conventions are the same as in Fig. 2

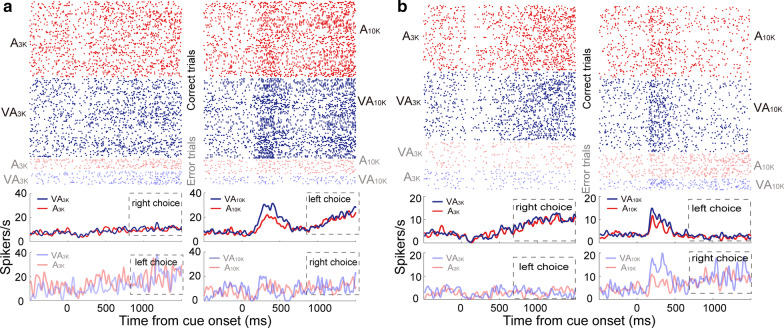

We used ROC analysis to derive an index of auditory choice preference that measures how strongly a neuron's cue-categorization signal in A3k trials diverged from that in A10k trials. An index of multisensory choice preference (VA3k vs. VA10k) was defined in the same way. As shown in the example cases (Figs. 2b, c, 3a, b), more than half of the neurons examined (55%, 171/313; preferring A3k: N = 111; preferring A10k: N = 60) exhibited an auditory choice preference (permutation test, p < 0.05). Even more neurons (71%, 222/313) showed a multisensory choice preference (Fig. 4a); notably, a sizeable minority (23%, 72/313) showed a choice preference only between the two multisensory conditions (see the example in Fig. 2d). This result suggests that the visual cue, albeit uninformative, facilitated the auditory choice capability of mPFC neurons. Auditory and multisensory choice preferences were largely consistent: a neuron that preferred A10k usually also preferred VA10k (Fig. 4a).
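The choice-preference index and its significance test can be sketched as an ROC area computed from two sets of trial-wise responses, plus a label-shuffling permutation test (the Fig. 4 legend reports 5000 iterations). Because Fig. 4 draws dashed lines at ROC values of zero, this sketch assumes the area under the curve was rescaled as 2 × AUC − 1, so that 0 means no preference; that rescaling, the two-sided test, and all function names are assumptions rather than the authors' stated method.

```python
import random


def auc(x, y):
    """Area under the ROC curve: P(X > Y) + 0.5 * P(X == Y)."""
    wins = ties = 0
    for a in x:
        for b in y:
            if a > b:
                wins += 1
            elif a == b:
                ties += 1
    return (wins + 0.5 * ties) / (len(x) * len(y))


def choice_preference(x, y):
    """AUC rescaled to [-1, 1]: > 0 favors x, < 0 favors y, 0 = no preference."""
    return 2.0 * auc(x, y) - 1.0


def permutation_p(x, y, n_iter=5000, seed=0):
    """Two-sided permutation p-value for the choice preference index."""
    rng = random.Random(seed)
    observed = abs(choice_preference(x, y))
    pooled = list(x) + list(y)
    n_x = len(x)
    hits = 0
    for _ in range(n_iter):
        rng.shuffle(pooled)  # randomly reassign trials to the two cue conditions
        if abs(choice_preference(pooled[:n_x], pooled[n_x:])) >= observed:
            hits += 1
    return (hits + 1) / (n_iter + 1)


# hypothetical spike counts in the cue-categorization window (A10k vs. A3k, as in Fig. 4a)
a10k = [6, 7, 5, 8, 6, 7, 9, 6]
a3k = [3, 4, 2, 5, 3, 4, 3, 2]
pref = choice_preference(a10k, a3k)  # close to +1: strong A10k preference
p = permutation_p(a10k, a3k)
```

The `+ 1` in numerator and denominator keeps the estimated p-value from being exactly zero, a common convention for Monte Carlo permutation tests.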

Fig. 4.

Distributions of neuronal choice preferences and mean responses. a Choice preferences (ROC values) for auditory and multisensory responses. Each symbol represents a single neuron. Abscissa, auditory choice preference (A10k vs. A3k trials); ordinate, multisensory choice preference (VA10k vs. VA3k trials). Open circles: neither auditory nor multisensory choice preference was significant (permutation test, 5000 iterations, α = 0.05); triangles: only the multisensory (green) or only the auditory (blue) choice preference was significant; red diamonds: both multisensory and auditory choice preferences were significant. b Modality preference (auditory vs. multisensory), shown in the same way. Dashed lines mark ROC values of zero. c, d PSTHs show mean population responses for different stimulus trials. Shaded areas, SEM

Next, we examined the influence of the visual cue on auditory choice signals. In 49% (155/313) of neurons, simultaneous presentation of a visual stimulus significantly modulated the response in one or both auditory conditions (permutation test, p < 0.05, Fig. 4b). Cross-modal enhancement was the dominant effect: for most of these neurons (87%, 135/155), the response in VA3k and/or VA10k trials was significantly higher than in the corresponding auditory trials (A3k: 79%, 76/96; A10k: 88%, 61/69). Consequently, across the population (n = 313), the mean response in multisensory trials was slightly larger than in the corresponding auditory trials, regardless of the frequency of the auditory component (Fig. 4c, d).

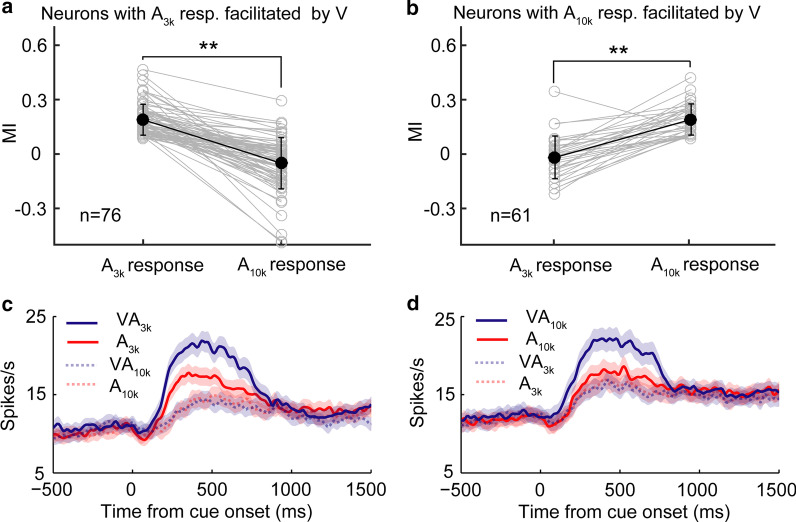

Examining further the neurons whose auditory choice signals were facilitated by the visual cue, we were surprised to find that in nearly all cases (98%, 133/135), the visual stimulus facilitated the response in only one auditory condition (p < 0.05, permutation test, Fig. 5a, b). We quantified the effect of cross-modal interaction with a modulation index (MI; defined in the Fig. 5 legend). In the 76 neurons showing cross-modal enhancement in VA3k trials, the mean MI was 0.18 ± 0.09 in the VA3k condition but near zero in the VA10k condition (−0.05 ± 0.14, p < 0.0001, Wilcoxon Signed Rank Test; Fig. 5a). The same was true for neurons showing cross-modal enhancement in VA10k trials (n = 61; mean MI 0.20 ± 0.11 in the VA10k condition vs. −0.03 ± 0.12 in the VA3k condition, p < 0.0001, Wilcoxon Signed Rank Test; Fig. 5b). Furthermore, the visual cue usually enhanced only the preferred auditory choice signal (see examples in Fig. 2b, c), regardless of whether the preferred tone was A3k (51/54) or A10k (27/33). This biased enhancement further strengthened the neurons' choice selectivity (Fig. 5c, d).

Fig. 5.

mPFC neurons exhibit a differential pattern of cross-modal interaction. a Comparison of the cross-modal interaction index (MI) between the low (3 kHz) and high (10 kHz) tone conditions for neurons showing cross-modal facilitation in the VA3k condition. MI is calculated as MI = (RVA − RA) / (RVA + RA), where RVA and RA are the mean responses in multisensory and auditory-alone trials, respectively. Paired gray circles connected by a gray line represent one neuron. Dark circles represent the mean MI across neurons. **, p < 0.001. b The same comparison for neurons showing cross-modal facilitation in the VA10k condition. c, d Mean PSTHs of different cue trials for the two groups of neurons in a and b, showing greater responses to the multisensory stimulus containing the preferred auditory stimulus
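The modulation index in this legend is a normalized difference, so it is bounded between −1 and 1 for non-negative firing rates and can be inverted to a response ratio. A minimal sketch, assuming mean firing rates as inputs; the function names and the choice to return 0 when both rates are zero are assumptions for illustration.

```python
def modulation_index(r_va, r_a):
    """MI = (R_VA - R_A) / (R_VA + R_A), in [-1, 1] for non-negative rates."""
    total = r_va + r_a
    if total == 0:
        return 0.0  # assumption: define MI as 0 when both mean responses are 0
    return (r_va - r_a) / total


def gain_from_mi(mi):
    """Invert MI to the ratio R_VA / R_A = (1 + MI) / (1 - MI), valid for MI < 1."""
    return (1.0 + mi) / (1.0 - mi)


mi = modulation_index(12.0, 8.0)  # 12 Hz multisensory vs. 8 Hz auditory-alone
ratio = gain_from_mi(mi)          # multisensory response is 1.5 times the auditory one
```

For a single neuron, an MI of 0.2 therefore corresponds to a 50% larger multisensory response. A mean MI across neurons (for example, 0.20 ± 0.11 in Fig. 5b) cannot be converted into a single population gain this way, because the ratio is a nonlinear function of MI.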

The influence of information congruence/incongruence between visual and auditory cues on mPFC neurons' cross-modal interaction

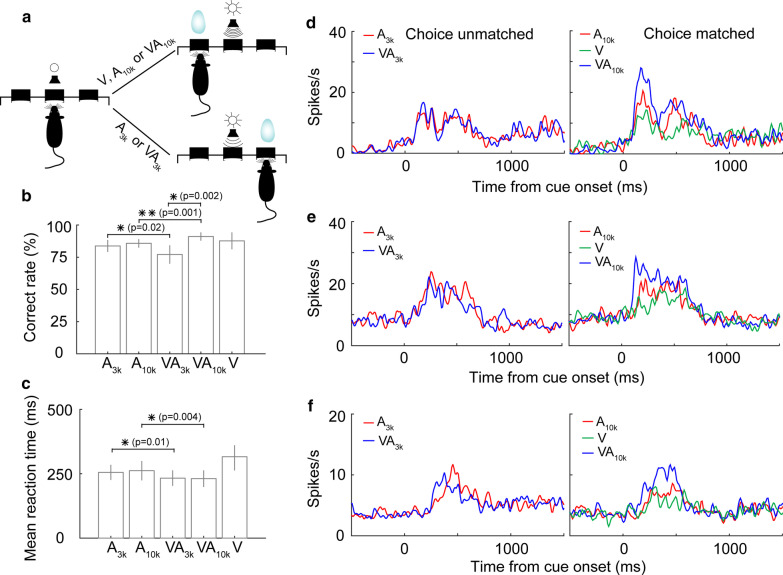

In Task 1, animals made their behavioral choice based on the auditory cue alone. We then asked how mPFC neurons would change their integrative strategy if the behavioral choice depended on both auditory and visual cues. To examine this, we trained 7 rats to perform a new behavioral task (Task 2). The only difference from Task 1 was that a visual stimulus presented alone (V) was added to the stimulus pool as an informative cue. If the presented stimulus was A10k, VA10k, or V, animals had to go to the left port to obtain the reward (Fig. 6a); otherwise, they had to move to the right port. Animals needed about two months of training to exceed 75% correct performance in five consecutive sessions. Although behavioral performance did not differ between the two auditory-alone conditions (A3k vs. A10k: 83.7% vs. 85.7%, t(6) = 0.888, p = 0.41, paired t-test, Fig. 6b), it did differ between the two multisensory conditions: performance was higher when the visual and auditory cues carried congruent information and lower when they indicated conflicting directions (VA3k vs. VA10k: 77.1% vs. 91.1%, t(6) = 5.214, p = 0.002, paired t-test, Fig. 6b). The mean reaction time across animals was still significantly shorter in multisensory trials than in the corresponding auditory trials, whether the auditory component was A3k or A10k (A10k vs. VA10k: 263 ± 92 ms vs. 232 ± 79 ms, t(6) = 4.585, p = 0.004, paired t-test; A3k vs. VA3k: 256 ± 73 ms vs. 234 ± 75 ms, t(6) = 3.614, p = 0.01, paired t-test; Fig. 6c). Reaction times did not differ between the two multisensory conditions (t(6) = 0.0512, p = 0.961, paired t-test).

Fig. 6.

Behavioral performance and neural responses when animals performed Task 2. a Schematic of the behavioral paradigm. When the cue is A10k, V, or VA10k, the animal should move to the left port to obtain a water reward; for any other cue (A3k or VA3k), it should go to the right port. b Behavioral accuracy for each cue condition across all animals. c Mean reaction time for each cue condition across all animals. d–f PSTHs show the mean responses to different cue trials for three example neurons

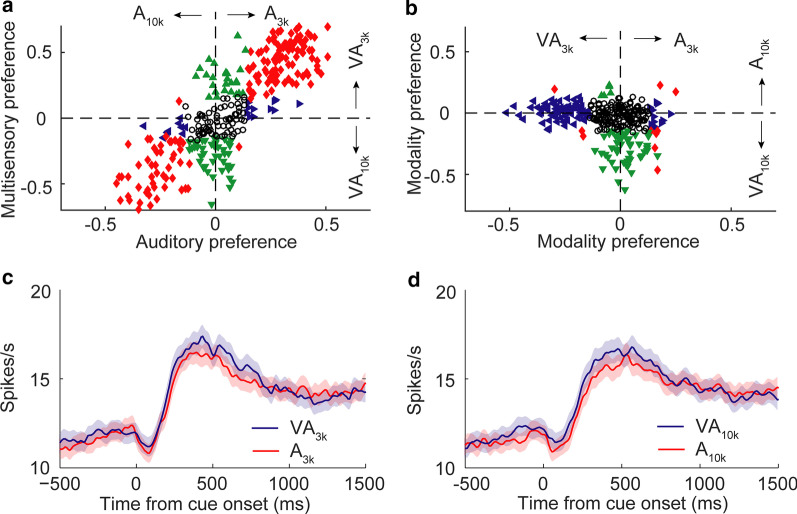

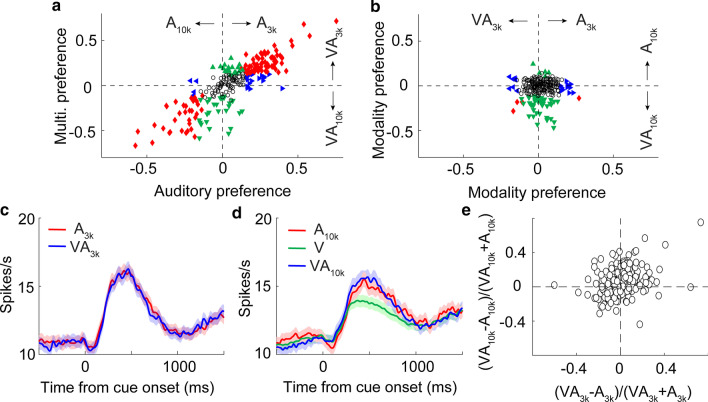

We examined the responses of 456 mPFC neurons recorded during Task 2, of which 54% (247/456) showed cue-categorization signals (see examples in Fig. 6d-f). Introducing an informative visual stimulus into the cue pool significantly changed the CMI strategy of mPFC neurons in a manner that depended on information content (Fig. 7a, b). Compared with Task 1, a far lower proportion of neurons showed cross-modal enhancement in VA3k trials (10%, 24/247 in Task 2 vs. 24%, 76/313 in Task 1; χ² = 19.96; p < 0.00001; Fig. 7b), indicating that information mismatch disrupted cross-modal enhancement. In information-congruent VA10k trials, however, this proportion was similar to that in Task 1 (22%, 55/247 in Task 2 vs. 19%, 61/313 in Task 1; χ² = 0.65; p = 0.42). In each of the example neurons in Fig. 6d-f, only the response in the VA10k condition was significantly enhanced. Mean responses across the population (n = 247) are shown in Fig. 7c, d. Of the 55 neurons showing cross-modal enhancement in the information-congruent VA10k trials, 20 (36%) favored A10k (see the example in Fig. 6d) and 28 (51%) showed no overt auditory choice preference (see the example in Fig. 6e). In several cases, such as the neuron in Fig. 6f, the visual stimulus appeared to reverse selectivity: these neurons preferred A3k among auditory trials but VA10k among multisensory trials.
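The two proportion comparisons above are 2 × 2 contingency tests (task × enhanced/not enhanced). Using the counts given in the text, a Pearson chi-square test without continuity correction gives values matching those reported, which suggests, but does not confirm, that no Yates correction was applied. The sketch below uses only the standard library; `chi2_2x2` is a hypothetical helper, and the p-value uses the fact that a chi-square variable with 1 degree of freedom is the square of a standard normal.

```python
import math


def chi2_2x2(a, b, c, d):
    """Pearson chi-square (no continuity correction) for the table [[a, b], [c, d]]."""
    n = a + b + c + d
    chi2 = n * (a * d - b * c) ** 2 / ((a + b) * (c + d) * (a + c) * (b + d))
    p = math.erfc(math.sqrt(chi2 / 2.0))  # upper-tail probability, 1 degree of freedom
    return chi2, p


# rows: Task 2, Task 1; columns: enhanced, not enhanced (counts from the text)
chi2_va3k, p_va3k = chi2_2x2(24, 247 - 24, 76, 313 - 76)
chi2_va10k, p_va10k = chi2_2x2(55, 247 - 55, 61, 313 - 61)
print(round(chi2_va3k, 2), round(chi2_va10k, 2))  # 19.96 0.65
```

In SciPy, `scipy.stats.chi2_contingency` applies Yates' correction to 2 × 2 tables by default; passing `correction=False` reproduces the uncorrected values shown here.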

Fig. 7.

Cue preferences, neural responses, and multisensory integration. a Neuron response preference by modality (auditory vs. multisensory). b Preference for auditory response (x-axis) against multisensory response (y-axis). c, d Mean PSTHs of different cue trials across neurons. e Comparison of MIs between the low- and high-tone conditions (3 kHz vs. 10 kHz) for all neurons tested. Conventions are the same as in Fig. 4

Across the population (n = 247), the mean MI in the information-incongruent VA3k condition was nearly zero (0.01 ± 0.16) and significantly lower than in the congruent VA10k condition (0.07 ± 0.15; p < 0.00001, Mann–Whitney Rank Sum Test; see individual neurons in Fig. 7e). One might also expect information congruence to induce stronger cross-modal enhancement. This was not the case, however: among neurons exhibiting cross-modal enhancement in the VA10k condition, MI did not differ between Task 1 and Task 2 (mean MI: 0.21 ± 0.18 in Task 2 vs. 0.20 ± 0.11 in Task 1, p = 0.489, Mann–Whitney Rank Sum Test). In summary, these results indicate that mPFC neuronal activity reflected the task context while preserving the ability to discriminate cues, which presumably aided successful task completion.

Cross-modal interaction in a choice-free task

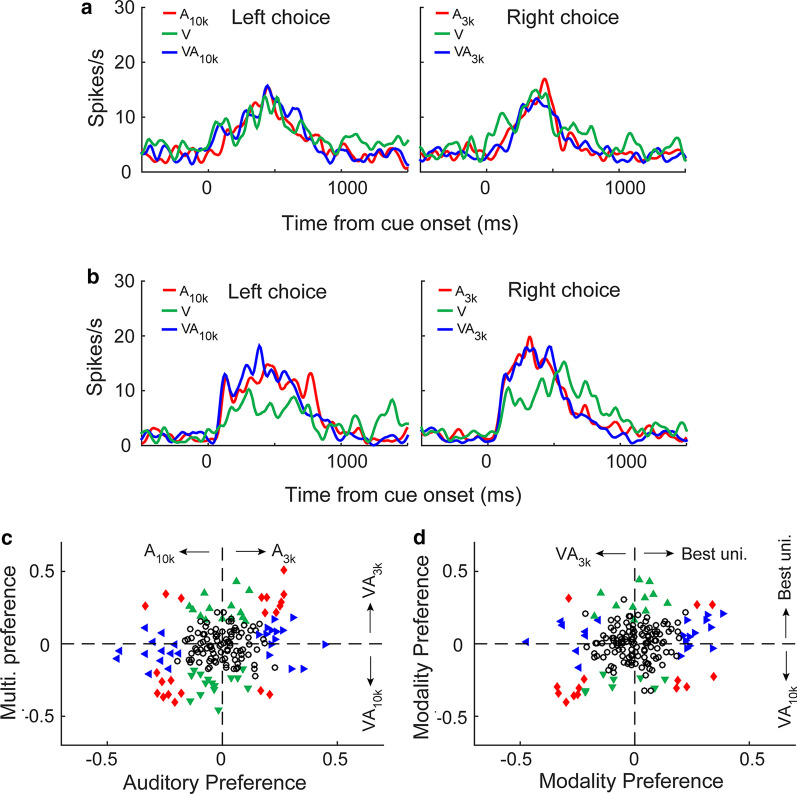

Tasks 1 and 2 required animals to discriminate sensory cues. We next asked how mPFC neurons would treat different combinations of sensory cues, and whether CMI would still occur, when cue discrimination was not required. To investigate this, we trained another group of rats (n = 9) to perform a choice-free task (Task 3). In this task, animals received a water reward at either the left or the right port regardless of which stimulus was presented, rendering cue discrimination irrelevant. We examined 184 mPFC neurons recorded during Task 3. For consistency with the earlier analyses, each neuron's response in A3k_right_choice trials (A3k cue, right choice) was compared with its response in A10k_left_choice trials (A10k cue, left choice), and the multisensory comparison was done in the same way. Unlike neurons recorded in Tasks 1 and 2, most mPFC neurons in Task 3 showed neither an auditory choice preference (74%, 137/184 without a preference) nor, correspondingly, a multisensory choice preference (73%, 135/184 without a preference). Figure 8a shows an example. Population distributions of choice selectivity are illustrated in Fig. 8c. Similar proportions of neurons lacked selectivity when responses were compared between conditions with the same movement direction (right: auditory, 75%, 138/184; multisensory, 72%, 132/184; left: auditory, 77%, 142/184; multisensory, 71%, 130/184).
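Because any choice is rewarded in Task 3, trials must be sorted by both cue and chosen direction before responses can be compared (for example, with the ROC index used for Task 1). A minimal sketch of that grouping step follows; the trial record layout (dict keys `cue`, `choice`, `response`) is a hypothetical format chosen for illustration, and only the labels such as `A3k_right_choice` come from the text.

```python
from collections import defaultdict


def group_by_cue_and_choice(trials):
    """Group per-trial responses under labels such as 'A3k_right_choice'.

    Each trial is a dict with hypothetical keys 'cue', 'choice', 'response'.
    """
    groups = defaultdict(list)
    for trial in trials:
        label = f"{trial['cue']}_{trial['choice']}_choice"
        groups[label].append(trial["response"])
    return dict(groups)


trials = [
    {"cue": "A3k", "choice": "right", "response": 4.0},
    {"cue": "A10k", "choice": "left", "response": 6.0},
    {"cue": "A3k", "choice": "left", "response": 5.0},
    {"cue": "VA3k", "choice": "right", "response": 4.5},
]
groups = group_by_cue_and_choice(trials)
# the auditory comparison used in the text: A3k with right choice vs. A10k with left choice
auditory_pair = (groups["A3k_right_choice"], groups["A10k_left_choice"])
```

The same groups also support the same-direction comparisons reported above (for example, `A3k_right_choice` vs. `A10k_right_choice`).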

Fig. 8.

mPFC neurons’ activities and choice preferences in a choice-free behavioral task. a, b PSTHs show the mean responses to different cue trials for two example neurons. c Auditory vs. multisensory choice preferences. d Neurons' modality preferences (unisensory vs. multisensory). Conventions are the same as in Figs. 2 and 4

For most neurons (72%, 132/184), responses in multisensory trials were very similar to the corresponding responses in auditory or visual trials (p > 0.05, permutation test; see the examples in Fig. 8a, b and the population in Fig. 8d). Among neurons whose auditory responses were influenced by the visual stimulus (28%, n = 52), facilitatory and inhibitory effects occurred about equally often (facilitated: 24; inhibited: 23; both facilitated and inhibited: 5; see Fig. 8d).

We examined the neurons exhibiting auditory choice selectivity (n = 49) to test whether the visual cue, as in the cue-discrimination tasks, selectively facilitated the preferred response. In the vast majority of cases (44/49), it did not. Figure 8b shows an example in which the neuron favored A3k over A10k, but neither response was enhanced by the visual stimulus. The mean MIs in the two conditions were similar (preferred vs. non-preferred: −0.03 ± 0.27 vs. −0.04 ± 0.27; p = 0.441, Mann–Whitney Rank Sum Test). Taken together with the results above, this indicates that differential neural activity in mPFC likely reflects the context of the given task. When stimulus discrimination is not required, most neurons exhibit no cue selectivity; when the task demands it, mPFC neurons are quite capable of sensory discrimination.

Discussion

We used cue-discrimination tasks to study context-dependent CMI in rat mPFC, an area believed to be essential for both perception and decision-making. The results showed that, in a task requiring auditory discrimination, the presence of an uninformative visual stimulus mostly served to heighten the preferred auditory choice signal. As a result, the neurons exhibited better perceptual decision capability in multisensory conditions than in auditory-alone conditions. However, when a visual cue, like the auditory cue, was made informative, mPFC neurons frequently showed a different CMI pattern, with an enhanced multisensory perceptual signal when both auditory and visual cues indicated the same behavioral instruction. When no cue discrimination was required, most neurons showed neither this pattern of CMI nor a similar choice preference. These results expand our understanding of the role that CMI can play in the brain.

Most of our understanding of CMI was developed using anesthetized or passively sensing animals. In these studies, the spatiotemporal arrangement and intensity of stimuli were found to be critical for CMI. We expect that additional factors influence CMI when humans and animals perform tasks. Moreover, neural activity in an alert, active brain differs dramatically from that in an anesthetized or passive preparation. To date, few studies have examined the association of multisensory cues (especially visual and auditory) in awake, unrestrained animals [29–31], and, to our knowledge, no study has examined the diversity of CMI at the neuronal level during tasks. Our study therefore provides important evidence toward a fuller understanding of CMI in the brain.

Our results demonstrate that task context significantly influenced the strategy of multisensory perceptual decisions: as the behavioral demands of the decision changed, so did the multisensory decision-making strategy. This is consistent with most previous studies contending that contextual representations influence the way stimuli, events, or actions are both encoded and interpreted [32–34], particularly in higher-order cortices [25, 35]. In rodents, mPFC has also been identified as critical for changing strategies [36–38], and our results provide new evidence supporting this conclusion. However, it remains to be determined whether this context-dependent CMI is unique to mPFC or also exists in other brain areas, which we intend to examine in future studies. Brain state is also likely to be a critical factor influencing CMI; a recent study showed that cross-modal inhibition dominated in the mPFC of anesthetized rats [39].

When performing the task, rats showed shorter reaction times in multisensory conditions, consistent with many previous studies in both humans and animals [40–43]. We believe that visual signals, even when task-irrelevant, may be integrated into auditory processing during task engagement, which may help explain why visual cues sped up auditory discrimination. Previous studies showed that visual inputs can significantly influence auditory responses even in the auditory cortex [44]. Similarly, task-irrelevant sounds can activate the human visual cortex [45] and improve visual perceptual processing [10]. Multisensory cues may also engage attention more strongly [46], which might further facilitate perceptual discrimination. Notably, the effect of cross-modal modulation on mPFC responses was not closely correlated with reaction time: in Task 2, reaction times did not differ between congruent and incongruent conditions, whereas the mean MI in the congruent condition was significantly larger than in the incongruent condition. Of particular relevance to our finding, a task-irrelevant auditory stimulus has been shown to shorten reaction times to visual cues [47], and multisensory processing of both semantically congruent and incongruent stimuli can speed up reaction times [42]. In Task 1, we found no improvement in choice accuracy in multisensory conditions. We attribute this to two factors: the completely uninformative character of the visual stimulus as a cue (it carried no information, such as location or direction) and a possible ceiling effect on performance in a minimalist task with only two choices.

In Task 2, the rats performed better in the information-congruent multisensory condition. This result is consistent with the multisensory correlation model, which holds that multisensory enhancement increases with the correlation between multisensory signals [48]. Evidence from both human and nonhuman primates also shows that information congruence is critical for multisensory integration [49–51]. For example, congruent audiovisual speech enhances our ability to comprehend a speaker, even in noise-free conditions [52], and semantically congruent multisensory stimuli result in enhanced behavioral performance [53]. Conversely, incongruent auditory and visual information presented concurrently can hinder a listener's perception and even cause the listener to perceive information that was presented in neither modality [54]. In Task 2, A3k and the visual cue indicated different behavioral choices. However, in multisensory VA3k trials, rats mostly based their choice on the auditory rather than the visual component. This result is consistent with a recent study showing auditory dominance over vision during the integration of conflicting audiovisual cues [55].

Rat mPFC can be divided into several subregions, including the medial agranular cortex, the anterior cingulate cortex, and the prelimbic (PrL) and infralimbic (IL) cortices, based on patterns of efferent and afferent projections [56, 57]. Functionally, PrL, the area examined in this study, is implicated in perception-based decision-making and memory [36, 58–60] and is also tuned to the value of spatial navigation goals [61, 62]. Perceptual decision-making is a complex neural process that includes encoding sensory information, computing decision variables, applying decision rules, and producing a motor response [63]. In this study, we could not determine exactly whether CMI occurred before or during the perceptual decision process. There is substantial physiological and anatomical evidence for cross-modal interactions in primary and non-primary sensory cortices [10, 44, 64, 65]. Considering that mPFC receives a vast array of information from sensory cortices [56, 66], CMI might first occur during sensory processing and then influence decision-making in mPFC.

Cross-modal enhancement does not appear to be the default integrative mode for mPFC neurons in awake rats, because most of them failed to show it in the choice-free task (Task 3). Similar results were found in other studies [67–69]. However, this result differs markedly from earlier studies in the superior colliculus and sensory cortical areas, which primarily showed enhanced multisensory responses to spatiotemporally congruent cues [3, 4, 70, 71]. This difference is reasonable, considering that different brain areas have different functional goals. For instance, the intrinsic functions of the superior colliculus are well known to include localizing novel stimuli and cue-triggered orienting, whereas the prefrontal cortex is involved in higher-order cognitive functions, including decision-making. It is therefore plausible that different brain regions apply different CMI strategies to process multisensory inputs in line with their overall processing goals.

Methods

Rat subjects

Animal procedures were approved by the Local Ethical Review Committee of East China Normal University and carried out in accordance with the Guide for the Care and Use of Laboratory Animals of East China Normal University. Twenty-five adult male Sprague Dawley rats, provided by the Shanghai Laboratory Animal Center (Shanghai, China), were used in the experiments. The animals weighed 250–300 g and were 4–6 months old at the start of behavioral training. They were housed individually under constant temperature (23 ± 1 °C) with a normal diurnal light cycle. Food was available ad libitum at all times. On experimental days, water was restricted until the behavioral session, after which the animals had free access to water for 5 min. Animals were usually trained 5 days per week in one 50–80-min session per day, held at approximately the same time of day. Body weight was carefully monitored and kept above 80% of that of age-matched control animals that underwent no behavioral training.

Behavioral task

The animals were required to perform a cue-guided two-alternative forced-choice task slightly modified from published protocols [72, 73]. Automated training was controlled by a custom-built program running in MATLAB R2015b (MathWorks, Natick, MA, USA). Training was conducted in an open-topped, custom-built operant chamber made of opaque plastic (50 × 30 × 40 cm, length × width × height), placed inside a well-ventilated painted wooden box lined with convoluted polyurethane foam for sound attenuation (outer size: 120 × 100 × 120 cm). Three snout ports, each monitored by a photoelectric switch, were located on one side wall of the operant chamber (see Fig. 1a). The photoelectric switch signals were digitized by an analog–digital multifunction card (DAQ NI 6363, National Instruments, Austin, TX, USA) and sent via USB to a PC running the training program.

Rats initiated a trial by poking their nose into the center port. After a short variable delay (500–700 ms), a stimulus randomly selected from five cues (two auditory, two auditory-visual, and one visual) was presented. After cue presentation, rats could immediately make their behavioral choice by moving to the left or right port (Fig. 1a). A correct choice (hit trial) was rewarded with water, and a new trial could follow immediately. If an animal made an incorrect choice, or no choice within 3 s of cue onset, a 5–6 s timeout was imposed as punishment.

The auditory cue was a 300-ms, 3 kHz (low) or 10 kHz (high) pure tone with 25-ms attack/decay ramps, delivered via a speaker (FS Audio, Zhejiang, China) at 60 dB sound pressure level (SPL) against an ambient background of 35–45 dB SPL. SPLs were measured at the position of the center port (the starting position). The visual cue was a 300-ms flash of white light at 5–7 cd/m² intensity, delivered by a light-emitting diode. The auditory-visual (multisensory) cue was the simultaneous presentation of the auditory and visual cues.
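For readers who want to reproduce the auditory cues, the sketch below synthesizes a 300-ms pure tone with 25-ms attack/decay ramps. The sampling rate (96 kHz), the linear ramp shape, and the function name `make_tone` are assumptions not given in the text; the absolute level (60 dB SPL) depends on speaker calibration and is not modelled.

```python
import math

def make_tone(freq_hz, dur_s=0.300, ramp_s=0.025, fs=96_000, amplitude=1.0):
    """Return a pure tone (list of floats) with linear onset/offset ramps.

    fs and the linear ramp shape are assumptions; scaling to 60 dB SPL
    requires calibration at the center port and is not modelled here.
    """
    n = int(round(dur_s * fs))
    n_ramp = max(1, int(round(ramp_s * fs)))
    samples = []
    for i in range(n):
        # Envelope rises 0 -> 1 over the first n_ramp samples,
        # stays at 1, then falls 1 -> 0 over the last n_ramp samples.
        gain = min(1.0, i / n_ramp, (n - 1 - i) / n_ramp)
        samples.append(amplitude * gain * math.sin(2 * math.pi * freq_hz * i / fs))
    return samples

low_tone = make_tone(3_000)    # 3 kHz "low" cue
high_tone = make_tone(10_000)  # 10 kHz "high" cue
```

Raised-cosine ramps are another common choice; switching to them would only change how `gain` is computed.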

Assembly of tetrodes

Tetrodes (impedance: 0.5–0.8 MΩ at 1 kHz) were made by twisting Formvar-insulated nichrome wire (bare diameter: 17.78 μm; A-M Systems, WA, USA) in groups of four. Two 20-cm wires were folded in half over a horizontal bar, and the ends were clamped together and manually twisted clockwise. At the desired degree of twist, the insulation coating was fused with a heat gun, and the bundle was cut in the middle to produce two tetrodes. To reinforce each tetrode longitudinally, it was inserted into polyimide tubing (inner diameter: 0.045 inches; wall: 0.005 inches; A-M Systems, WA, USA) and fixed in place with cyanoacrylate glue. An array of 2 × 4 tetrodes was then assembled with an inter-tetrode gap of 0.4–0.5 mm. After assembly, the insulation coating of each wire was gently removed at the tip, and the wire was soldered to a connector pin. The reference electrode was a tip-exposed nichrome wire 50.8 μm in diameter (A-M Systems, WA, USA), and the ground electrode was a copper wire 0.1 mm in diameter; both were also soldered to connector pins. The tetrodes and reference were then carefully cemented with silicone gel and trimmed to an appropriate length immediately before implantation.

Electrode implantation

Each animal received a subcutaneous injection of atropine sulfate (0.01 mg/kg b.w.) before surgery and was then anesthetized with an initial intraperitoneal (i.p.) injection of sodium pentobarbital (40–50 mg/kg b.w.). Once anesthetized, the animal was fixed in a stereotaxic apparatus (RWD, Shenzhen, China). The tetrode array was implanted in the left mPFC (AP 2.5–4.5 mm, ML 0.3–0.8 mm, 2.0–3.5 mm ventral to the brain surface) by slowly advancing it with a micromanipulator (RWD, Shenzhen, China). Neuronal signals were monitored throughout implantation to ensure appropriate placement. The craniotomy was sealed with tissue gel (3M, Maplewood, MN, USA), and the tetrode array was secured to the skull with stainless steel screws and dental acrylic. After surgery, animals received a 4-day course of antibiotics (Baytril, 5 mg/kg b.w., Bayer, Whippany, NJ, USA) and were allowed to recover for at least 7 days (usually 9–12 days) with free access to food and water.

Neural recordings

After recovering from surgery, animals resumed the behavioral task in the same training chamber, now placed inside a larger acoustically and electrically shielded room (2.5 × 2 × 2.5 m, length × width × height). Recording sessions began once an animal's behavioral performance had returned to its pre-surgery level (typically after 2–3 days). Wideband neural signals (250–6000 Hz) were recorded with a head-stage amplifier (RHD2132, Intan Technologies, CA, USA). The amplified (20×) and digitized (20 kHz) neural signals were combined with trace signals carrying stimulus and task-performance information, sent to a USB interface board (RHD2000, Intan Technologies, CA, USA), and then to a PC for online monitoring and data storage.

Histology

After the last recording session, the final position of the electrode tip was marked with a small DC lesion (−30 μA for 15 s). Rats were then deeply anesthetized with sodium pentobarbital (100 mg/kg) and perfused transcardially with saline for several minutes, followed immediately by phosphate-buffered saline (PBS) containing 4% paraformaldehyde (PFA). Brains were carefully removed and post-fixed in 4% PFA overnight. After cryoprotection in PBS with 20% sucrose for at least three days, the fixed brains were sectioned coronally at 50 μm on a freezing microtome (Leica, Wetzlar, Germany) and counterstained with methyl violet to verify that the lesion sites were located in the prelimbic area of mPFC [74, 75].

Data analysis

Reaction time was defined as the interval between stimulus onset and the moment the animal withdrew its nose from the infrared beam monitoring the center port. The reaction time for each cue condition was summarized as the median across trials. The correct performance rate was defined as:

Correct performance rate (%) = 100 × (number of hit trials) / (total number of trials).
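The two behavioral measures above can be computed as in the following sketch. The trial record format (a dict with the hypothetical keys `cue`, `outcome` and `rt_s`) is an assumption, as is the choice to take the median over every trial with a recorded reaction time rather than over hit trials only; the text does not say which trials entered the median.

```python
from statistics import median

def summarize_behavior(trials):
    """Correct performance rate (%) and median reaction time per cue condition.

    `trials` is a list of dicts with hypothetical keys:
      'cue'     - cue condition label, e.g. 'A_low', 'VA_low', 'V'
      'outcome' - 'hit', 'error' or 'miss'
      'rt_s'    - reaction time in seconds (stimulus onset to nose withdrawal), or None
    """
    n_hit = sum(1 for t in trials if t["outcome"] == "hit")
    correct_rate = 100.0 * n_hit / len(trials)

    rts_by_cue = {}
    for t in trials:
        if t["rt_s"] is not None:  # assumption: every trial with a recorded RT is used
            rts_by_cue.setdefault(t["cue"], []).append(t["rt_s"])
    median_rt = {cue: median(rts) for cue, rts in rts_by_cue.items()}
    return correct_rate, median_rt

trials = [
    {"cue": "A_low", "outcome": "hit", "rt_s": 0.30},
    {"cue": "A_low", "outcome": "hit", "rt_s": 0.40},
    {"cue": "A_low", "outcome": "error", "rt_s": 0.50},
    {"cue": "VA_low", "outcome": "hit", "rt_s": 0.35},
]
rate, rts = summarize_behavior(trials)
```

Here three of the four trials are hits, so `rate` is 75.0, and the median reaction time for `A_low` is 0.40 s.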

Raw neural signals were stored for offline analysis. Spike sorting was performed with Spike2 software (version 8; CED, Cambridge, UK). Raw signals were band-pass filtered at 300–6000 Hz to remove local field potentials. Spike peaks were identified using a threshold of at least three standard deviations (SD) above background noise. The detected spike waveforms were then clustered using principal component analysis and a template-matching algorithm. Waveforms with inter-spike intervals of < 2.0 ms were excluded. Spike times of each single unit relative to stimulus onset were then extracted for all trials of each cue condition and used to construct raster plots and peristimulus time histograms (PSTHs) with custom MATLAB scripts. Only neurons whose mean firing rate over the session was at least 2 Hz were included in the analysis. Because behavioral and neuronal results were similar across animals tested in the same paradigm, data were pooled across sessions to study population effects.
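The sketch below illustrates threshold-based spike detection with the 3-SD criterion, the 2-ms inter-spike-interval exclusion, and the 2-Hz inclusion criterion. It is not Spike2's algorithm: it assumes the signal is already band-pass filtered (300–6000 Hz) and sampled at 20 kHz, estimates the noise SD robustly as median(|x|)/0.6745 (a common choice, assumed here), and omits the PCA and template-matching clustering. All function names are hypothetical, and `drop_short_isi` keeps the first spike of any pair closer than 2 ms, which is only one of several possible exclusion policies.

```python
from statistics import median

def detect_spikes(x, fs=20_000, k=3.0):
    """Return spike peak times (s) where |x| exceeds k times a robust noise SD."""
    sigma = median(abs(v) for v in x) / 0.6745  # robust noise SD estimate (assumption)
    thr = k * sigma
    peaks, i, n = [], 0, len(x)
    while i < n:
        if abs(x[i]) > thr:
            j = i
            while j < n and abs(x[j]) > thr:
                j += 1
            # Peak = sample with the largest absolute amplitude in the supra-threshold run.
            peaks.append(max(range(i, j), key=lambda m: abs(x[m])))
            i = j
        else:
            i += 1
    return [p / fs for p in peaks]

def drop_short_isi(spike_times, min_isi=0.002):
    """Remove spikes that follow the previously kept spike by less than min_isi."""
    kept = []
    for t in spike_times:
        if not kept or t - kept[-1] >= min_isi:
            kept.append(t)
    return kept

def passes_rate_criterion(spike_times, session_dur_s, min_rate_hz=2.0):
    """Inclusion criterion: mean firing rate over the session >= min_rate_hz."""
    return len(spike_times) / session_dur_s >= min_rate_hz
```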

To render PSTHs, all spike trains were first binned at 10 ms and convolved with a Gaussian smoothing kernel (σ = 100 ms) to reduce the impact of random spike-time jitter at bin borders. The mean spontaneous firing rate was calculated from the 500-ms window immediately preceding stimulus onset. Decision-related neural activity was quantified as the mean firing rate in the 500 ms after cue onset minus the mean spontaneous firing rate.
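A minimal sketch of the PSTH and the baseline-corrected response measure follows. It assumes a display window of −0.5 to +0.5 s around cue onset, truncates the Gaussian kernel at ±3σ, and renormalizes the kernel weights near the window edges; none of these details are specified in the text, and the function names are hypothetical. The response measure is computed from raw spike counts rather than from the smoothed trace.

```python
import math

def psth(trials, t_start=-0.5, t_stop=0.5, bin_s=0.010, sigma_s=0.100):
    """Binned and Gaussian-smoothed PSTH in spikes/s.

    trials: list of per-trial spike-time lists (s), aligned to cue onset (t = 0).
    """
    n_bins = int(round((t_stop - t_start) / bin_s))
    counts = [0] * n_bins
    for spikes in trials:
        for t in spikes:
            b = int(math.floor((t - t_start) / bin_s))
            if 0 <= b < n_bins:
                counts[b] += 1
    rate = [c / (len(trials) * bin_s) for c in counts]

    # Gaussian kernel sampled at the bin width and truncated at +/-3 sigma.
    half = int(round(3 * sigma_s / bin_s))
    offsets = range(-half, half + 1)
    kernel = [math.exp(-0.5 * (k * bin_s / sigma_s) ** 2) for k in offsets]
    smooth = []
    for i in range(n_bins):
        num = den = 0.0
        for k, w in zip(offsets, kernel):
            if 0 <= i + k < n_bins:
                num += w * rate[i + k]
                den += w
        smooth.append(num / den)  # weights renormalized near the window edges
    return rate, smooth

def baseline_corrected_response(trials, win_s=0.5):
    """Mean rate in [0, win_s) minus mean spontaneous rate in [-win_s, 0)."""
    n = len(trials)
    spont = sum(sum(1 for t in s if -win_s <= t < 0) for s in trials) / (n * win_s)
    evoked = sum(sum(1 for t in s if 0 <= t < win_s) for s in trials) / (n * win_s)
    return evoked - spont, spont
```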

We quantified choice selectivity between the two cue conditions used in a task (for example, low-tone vs. high-tone trials) with a receiver operating characteristic (ROC) analysis [76]. First, we set 12 threshold levels spanning the range of firing rates obtained in cue_A and cue_B trials. For each threshold criterion, we then computed the proportion of cue_A trials and the proportion of cue_B trials on which the response exceeded the criterion; plotting the former against the latter across all criteria yields the ROC curve. Choice selectivity was defined as 2 × (area under the ROC curve − 0.5). A value of 0 therefore indicates no difference between the response distributions for cue_A and cue_B, whereas values of 1 and −1 indicate maximal selectivity, that is, responses evoked by cue_A were always higher or always lower, respectively, than those evoked by cue_B.
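A minimal sketch of the ROC-based choice selectivity is shown below. It assumes the 12 criteria are spaced linearly from the lowest to the highest single-trial firing rate and that the curve is closed with the (0, 0) and (1, 1) end points; neither detail is stated in the text, and the function name is hypothetical. The proportion of cue_A trials is plotted on the y-axis, so cue_A responses that are always higher give +1.

```python
def choice_selectivity(rates_a, rates_b, n_thresh=12):
    """2 * (AUC - 0.5) from an ROC curve built with n_thresh response criteria."""
    lo = min(min(rates_a), min(rates_b))
    hi = max(max(rates_a), max(rates_b))
    step = (hi - lo) / (n_thresh - 1)
    thresholds = [lo + i * step for i in range(n_thresh)]

    pts = [(0.0, 0.0)]
    for c in reversed(thresholds):  # high -> low criterion, so the curve runs (0,0) -> (1,1)
        x = sum(r > c for r in rates_b) / len(rates_b)  # proportion of cue_B trials > criterion
        y = sum(r > c for r in rates_a) / len(rates_a)  # proportion of cue_A trials > criterion
        pts.append((x, y))
    pts.append((1.0, 1.0))

    # Trapezoidal area under the ROC curve.
    auc = sum((x2 - x1) * (y1 + y2) / 2 for (x1, y1), (x2, y2) in zip(pts, pts[1:]))
    return 2 * (auc - 0.5)
```

With only 12 criteria the area is a coarse approximation. The exact area equals the Mann–Whitney U statistic divided by the product of the two group sizes (ties counted as one half), which is an alternative if finer resolution is wanted.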

To test the significance of each choice selectivity value, we ran a permutation test. All trials from a neuron were randomly reassigned to two groups, independent of the actual cue conditions. These groups, nominally called cue_A and cue_B trials, contained the same numbers of trials as the experimentally obtained groups. The choice selectivity value was then calculated from the reassigned data, and this procedure was repeated 5000 times to obtain a null distribution against which the observed value was compared. The observed value was considered significant (p < 0.05) when it fell within the top 5% of this distribution.
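The permutation test can be sketched as follows. The statistic is passed in as a function so that the ROC-based selectivity (or any other measure) can be plugged in. The two-sided comparison on absolute values, the fixed random seed, and the function names are assumptions made for illustration; `mean_difference` is only a placeholder statistic.

```python
import random

def permutation_p(rates_a, rates_b, stat, n_perm=5000, seed=0):
    """Shuffle-label permutation test for a two-condition selectivity statistic.

    Returns (observed value, fraction of shuffles at least as extreme).
    Comparing absolute values (two-sided) is an assumption of this sketch.
    """
    rng = random.Random(seed)
    observed = stat(rates_a, rates_b)
    pooled = list(rates_a) + list(rates_b)
    n_a = len(rates_a)
    n_extreme = 0
    for _ in range(n_perm):
        rng.shuffle(pooled)                      # reassign trials ignoring the true cue
        null = stat(pooled[:n_a], pooled[n_a:])  # same group sizes as the real data
        if abs(null) >= abs(observed):
            n_extreme += 1
    return observed, n_extreme / n_perm

def mean_difference(a, b):
    """Placeholder statistic; the ROC-based choice selectivity can be passed instead."""
    return sum(a) / len(a) - sum(b) / len(b)
```

Some authors report (n_extreme + 1) / (n_perm + 1) instead, which avoids a p-value of exactly zero; the plain fraction is used here to mirror the "top 5%" criterion.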

To quantify the difference between responses in visual-auditory (multisensory) and auditory trials, we calculated an index of cross-modal interaction (MI): MI = (VA − A)/(VA + A), where VA and A are the firing rates in multisensory and auditory trials, respectively. MI ranges from −1 to 1; more positive values indicate stronger responses in multisensory trials, and more negative values indicate stronger responses in auditory trials.
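As a small illustration of the index, the sketch below computes MI for one neuron. The bound of −1 to 1 holds only when both rates are non-negative; the text does not say whether raw or baseline-subtracted rates were used, so this sketch (with the hypothetical name `modulation_index`) assumes raw, non-negative rates and rejects negative inputs.

```python
import math

def modulation_index(va_rate, a_rate):
    """MI = (VA - A) / (VA + A) for one neuron.

    The [-1, 1] bound holds only for non-negative rates, so this sketch
    rejects negative (e.g. baseline-subtracted) inputs.
    """
    if va_rate < 0 or a_rate < 0:
        raise ValueError("firing rates must be non-negative")
    total = va_rate + a_rate
    if total == 0:
        return math.nan  # undefined when the neuron is silent in both conditions
    return (va_rate - a_rate) / total
```

For example, 15 spikes/s in multisensory trials and 5 spikes/s in auditory trials give MI = 10/20 = 0.5.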

Statistical analysis

All statistical analyses were conducted in MATLAB R2015b, with statistical significance set at p < 0.05. Behavioral data (for example, differences in mean reaction time between auditory and multisensory trials) were compared using paired t-tests. Chi-square tests were used to compare the proportions of neurons showing choice selectivity across tasks. To compare MIs between cue conditions within the same group of neurons, we used a paired t-test or a Mann–Whitney rank-sum test, as appropriate. Unless stated otherwise, group data are presented as mean ± SD.
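For the comparison of proportions, a 2 × 2 Pearson chi-square test (two tasks × selective/non-selective neurons) can be sketched with the standard library alone, because a chi-square variable with 1 degree of freedom is the square of a standard normal, giving p = erfc(sqrt(χ²/2)). Whether the authors applied a continuity correction is not stated; this sketch assumes none, and the function name and example counts are hypothetical. Note that SciPy's `chi2_contingency` applies Yates' correction to 2 × 2 tables by default, so its p-values will differ slightly.

```python
import math

def chi2_2x2(sel_1, n_1, sel_2, n_2):
    """Pearson chi-square (no continuity correction) comparing two proportions.

    sel_k: number of selective neurons in group k; n_k: total neurons in group k.
    """
    table = [[sel_1, n_1 - sel_1], [sel_2, n_2 - sel_2]]
    row = [n_1, n_2]
    col = [table[0][j] + table[1][j] for j in range(2)]
    total = n_1 + n_2
    chi2 = 0.0
    for i in range(2):
        for j in range(2):
            expected = row[i] * col[j] / total
            chi2 += (table[i][j] - expected) ** 2 / expected
    p = math.erfc(math.sqrt(chi2 / 2))  # survival function of chi-square with 1 df
    return chi2, p
```

For instance, 40 of 60 selective neurons in one task versus 20 of 60 in another gives χ² = 13.33 with p well below 0.001.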

Acknowledgements

We thank Qing Nan for technical assistance and for help in preparing the manuscript.

Abbreviations

- CMI

Cross-modal interaction

- IL

Infralimbic cortex

- MI

Index of cross-modal interaction

- mPFC

Medial prefrontal cortex

- PBS

Phosphate-buffered saline

- PFA

Paraformaldehyde

- PrL

Prelimbic cortex

- PSTH

Peristimulus time histogram

- ROC

Receiver operating characteristic

- SD

Standard deviation

- SPL

Sound pressure level

Authors’ contributions

LY and JX designed the experiments. MZ, JW and SC acquired, analyzed and interpreted the data. LY, LK and JX wrote the manuscript. LY developed custom MATLAB programs for behavioral training and data analysis. All authors read and approved the final manuscript.

Funding

This work was supported by Grants from the National Natural Science Foundation of China (31970925), Shanghai Natural Science Foundation (20ZR1417800, 19ZR1416500), and the Fundamental Research Funds for the Central Universities.

Availability of data and materials

The datasets generated and analyzed during the current study are available from the corresponding author on reasonable request.

Ethics approval and consent to participate

Animal procedures were approved by the Local Ethical Review Committee of East China Normal University and carried out in accordance with the Guide for the Care and Use of Laboratory Animals of East China Normal University.

Consent for publication

Not applicable.

Competing interests

The authors declare that they have no competing interests.

Footnotes

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Mengyao Zheng and Jinghong Xu are co-first authors and contributed equally.

Contributor Information

Mengyao Zheng, Email: 51161300110@stu.ecnu.edu.cn.

Jinghong Xu, Email: jhxu@bio.ecnu.edu.cn.

Les Keniston, Email: les.keniston@gmail.com.

Jing Wu, Email: 51141300112@stu.ecnu.edu.cn.

Song Chang, Email: 52191300016@stu.ecnu.edu.cn.

Liping Yu, Email: lpyu@bio.ecnu.edu.cn.

References

- 1. Allman BL, Keniston LP, Meredith MA. Subthreshold auditory inputs to extrastriate visual neurons are responsive to parametric changes in stimulus quality: sensory-specific versus non-specific coding. Brain Res. 2008;1242:95–101. doi: 10.1016/j.brainres.2008.03.086.

- 2. Stein BE, Stanford TR, Rowland BA. Development of multisensory integration from the perspective of the individual neuron. Nat Rev Neurosci. 2014;15(8):520–535. doi: 10.1038/nrn3742.

- 3. Xu J, Bi T, Wu J, Meng F, Wang K. Spatial receptive field shift by preceding cross-modal stimulation in the cat superior colliculus. J Physiol. 2018;9:8. doi: 10.1113/JP275427.

- 4. Xu J, Sun X, Zhou X, Zhang J, Yu L. The cortical distribution of multisensory neurons was modulated by multisensory experience. Neuroscience. 2014;272:1–9. doi: 10.1016/j.neuroscience.2014.04.068.

- 5. Zahar Y, Reches A, Gutfreund Y. Multisensory enhancement in the optic tectum of the barn owl: spike count and spike timing. J Neurophysiol. 2009;101(5):2380–2394. doi: 10.1152/jn.91193.2008.

- 6. Murray MM, Lewkowicz DJ, Amedi A, Wallace MT. Multisensory processes: a balancing act across the lifespan. Trends Neurosci. 2016;39(8):567–579. doi: 10.1016/j.tins.2016.05.003.

- 7. Meredith MA, Stein BE. Spatial factors determine the activity of multisensory neurons in cat superior colliculus. Brain Res. 1986;365(2):350–354. doi: 10.1016/0006-8993(86)91648-3.

- 8. Gu Y, Angelaki DE, Deangelis GC. Neural correlates of multisensory cue integration in macaque MSTd. Nat Neurosci. 2008;11(10):1201–1210. doi: 10.1038/nn.2191.

- 9. Atilgan H, Town SM, Wood KC, Jones GP, Maddox RK, Lee AKC, Bizley JK. Integration of visual information in auditory cortex promotes auditory scene analysis through multisensory binding. Neuron. 2018;97(3):640–655. doi: 10.1016/j.neuron.2017.12.034.

- 10. Feng W, Stormer VS, Martinez A, McDonald JJ, Hillyard SA. Sounds activate visual cortex and improve visual discrimination. J Neurosci. 2014;34(29):9817–9824. doi: 10.1523/JNEUROSCI.4869-13.2014.

- 11. Zion Golumbic E, Cogan GB, Schroeder CE, Poeppel D. Visual input enhances selective speech envelope tracking in auditory cortex at a "cocktail party". J Neurosci. 2013;33(4):1417–1426. doi: 10.1523/JNEUROSCI.3675-12.2013.

- 12. Maddox RK, Atilgan H, Bizley JK, Lee AK. Auditory selective attention is enhanced by a task-irrelevant temporally coherent visual stimulus in human listeners. Elife. 2015;4:e04995. doi: 10.7554/eLife.04995.

- 13. Chandrasekaran C, Lemus L, Ghazanfar AA. Dynamic faces speed up the onset of auditory cortical spiking responses during vocal detection. Proc Natl Acad Sci U S A. 2013;110(48):E4668–4677. doi: 10.1073/pnas.1312518110.

- 14. Rowland BA, Quessy S, Stanford TR, Stein BE. Multisensory integration shortens physiological response latencies. J Neurosci. 2007;27(22):5879–5884. doi: 10.1523/JNEUROSCI.4986-06.2007.

- 15. Bizley JK, Jones GP, Town SM. Where are multisensory signals combined for perceptual decision-making? Curr Opin Neurobiol. 2016;40:31–37. doi: 10.1016/j.conb.2016.06.003.

- 16. Hillyard SA, Stormer VS, Feng W, Martinez A, McDonald JJ. Cross-modal orienting of visual attention. Neuropsychologia. 2016;83:170–178. doi: 10.1016/j.neuropsychologia.2015.06.003.

- 17. Shams L, Kamitani Y, Shimojo S. What you see is what you hear. Nature. 2000;408:788. doi: 10.1038/35048669.

- 18. Stoermer VS, McDonald JJ, Hillyard SA. Cross-modal cueing of attention alters appearance and early cortical processing of visual stimuli. Proc Natl Acad Sci USA. 2009;106(52):22456–22461. doi: 10.1073/pnas.0907573106.

- 19. Rohe T, Noppeney U. Distinct computational principles govern multisensory integration in primary sensory and association cortices. Curr Biol. 2016;26(4):509–514. doi: 10.1016/j.cub.2015.12.056.

- 20. Driver J, Noesselt T. Multisensory interplay reveals crossmodal influences on 'sensory-specific' brain regions, neural responses, and judgments. Neuron. 2008;57(1):11–23. doi: 10.1016/j.neuron.2007.12.013.

- 21. Raposo D, Kaufman MT, Churchland AK. A category-free neural population supports evolving demands during decision-making. Nat Neurosci. 2014;17(12):1784–1792. doi: 10.1038/nn.3865.

- 22. Nikbakht N, Tafreshiha A, Zoccolan D, Diamond ME. Supralinear and supramodal integration of visual and tactile signals in rats: psychophysics and neuronal mechanisms. Neuron. 2018;97(3):626–639.e8. doi: 10.1016/j.neuron.2018.01.003.

- 23. Hou H, Zheng Q, Zhao Y, Pouget A, Gu Y. Neural correlates of optimal multisensory decision making under time-varying reliabilities with an invariant linear probabilistic population code. Neuron. 2019;104(5):1010–1021. doi: 10.1016/j.neuron.2019.08.038.

- 24. Van Eden CG, Lamme VAF, Uylings HBM. Heterotopic cortical afferents to the medial prefrontal cortex in the rat. A combined retrograde and anterograde tracer study. Eur J Neurosci. 1992;4(1):77–97. doi: 10.1111/j.1460-9568.1992.tb00111.x.

- 25. Heidbreder CA, Groenewegen HJ. The medial prefrontal cortex in the rat: evidence for a dorso-ventral distinction based upon functional and anatomical characteristics. Neurosci Biobehav Rev. 2003;27(6):555–579. doi: 10.1016/j.neubiorev.2003.09.003.

- 26. Winocur G, Eskes G. Prefrontal cortex and caudate nucleus in conditional associative learning: dissociated effects of selective brain lesions in rats. Behav Neurosci. 1998;112(1):89–101. doi: 10.1037/0735-7044.112.1.89.

- 27. Mansouri FA, Matsumoto K, Tanaka K. Prefrontal cell activities related to monkeys' success and failure in adapting to rule changes in a Wisconsin Card Sorting Test analog. J Neurosci. 2006;26(10):2745–2756. doi: 10.1523/JNEUROSCI.5238-05.2006.

- 28. Asaad WF, Rainer G, Miller EK. Neural activity in the primate prefrontal cortex during associative learning. Neuron. 1998;21(6):1399–1407. doi: 10.1016/S0896-6273(00)80658-3.

- 29. Fuster JM, Bodner M, Kroger JK. Cross-modal and cross-temporal association in neurons of frontal cortex. Nature. 2000;405(6784):347–351. doi: 10.1038/35012613.

- 30. Moll FW, Nieder A. Cross-Modal Associative Mnemonic Signals in Crow Endbrain Neurons. Curr Biol. 2015;25(16):2196–2201. doi: 10.1016/j.cub.2015.07.013.

- 31. Stein BE, Huneycutt WS, Meredith MA. Neurons and behavior: the same rules of multisensory integration apply. Brain Res. 1988;448(2):355–358. doi: 10.1016/0006-8993(88)91276-0.

- 32. Mante V, Sussillo D, Shenoy KV, Newsome WT. Context-dependent computation by recurrent dynamics in prefrontal cortex. Nature. 2013;503(7474):78–84. doi: 10.1038/nature12742.

- 33. Liu Z, Braunlich K, Wehe HS, Seger CA. Neural networks supporting switching, hypothesis testing, and rule application. Neuropsychologia. 2015;77:19–34. doi: 10.1016/j.neuropsychologia.2015.07.019.

- 34. Miller EK, Cohen JD. An integrative theory of prefrontal cortex function. Annu Rev Neurosci. 2001;24:167–202. doi: 10.1146/annurev.neuro.24.1.167.

- 35. Groenewegen HJ, Uylings HB. The prefrontal cortex and the integration of sensory, limbic and autonomic information. Prog Brain Res. 2000;126:3–28. doi: 10.1016/S0079-6123(00)26003-2.

- 36. Liu D, Gu X, Zhu J, Zhang X, Han Z, Yan W, Cheng Q, Hao J, Fan H, Hou R, et al. Medial prefrontal activity during delay period contributes to learning of a working memory task. Science. 2014;346(6208):458–463. doi: 10.1126/science.1256573.

- 37. Karlsson MP, Tervo DG, Karpova AY. Network resets in medial prefrontal cortex mark the onset of behavioral uncertainty. Science. 2012;338(6103):135–139. doi: 10.1126/science.1226518.

- 38. Dalley JW, Cardinal RN, Robbins TW. Prefrontal executive and cognitive functions in rodents: neural and neurochemical substrates. Neurosci Biobehav Rev. 2004;28(7):771–784. doi: 10.1016/j.neubiorev.2004.09.006.

- 39. Martin-Cortecero J, Nunez A. Sensory responses in the medial prefrontal cortex of anesthetized rats. Implications for sensory processing. Neuroscience. 2016;339:109–123. doi: 10.1016/j.neuroscience.2016.09.045.

- 40. Gleiss S, Kayser C. Audio-visual detection benefits in the rat. PLoS ONE. 2012;7(9):e45677. doi: 10.1371/journal.pone.0045677.

- 41. Hammond-Kenny A, Bajo VM, King AJ, Nodal FR. Behavioural benefits of multisensory processing in ferrets. Eur J Neurosci. 2017;45(2):278–289. doi: 10.1111/ejn.13440.

- 42. Barutchu A, Spence C, Humphreys GW. Multisensory enhancement elicited by unconscious visual stimuli. Exp Brain Res. 2018;8:954. doi: 10.1007/s00221-017-5140-z.

- 43. McDonald JJ, Teder-Salejarvi WA, Di Russo F, Hillyard SA. Neural basis of auditory-induced shifts in visual time-order perception. Nat Neurosci. 2005;8(9):1197–1202. doi: 10.1038/nn1512.

- 44. Kayser C, Logothetis NK, Panzeri S. Visual enhancement of the information representation in auditory cortex. Curr Biol. 2010;20(1):19–24. doi: 10.1016/j.cub.2009.10.068.

- 45. McDonald JJ, Stormer VS, Martinez A, Feng W, Hillyard SA. Salient sounds activate human visual cortex automatically. J Neurosci. 2013;33(21):9194–9201. doi: 10.1523/JNEUROSCI.5902-12.2013.

- 46. Hillyard SA, Stormer VS, Feng W, Martinez A, McDonald JJ. Cross-modal orienting of visual attention. Neuropsychologia. 2016;83:170–178. doi: 10.1016/j.neuropsychologia.2015.06.003.

- 47. McDonald JJ, Teder-Salejarvi WA, Hillyard SA. Involuntary orienting to sound improves visual perception. Nature. 2000;407(6806):906–908. doi: 10.1038/35038085.

- 48. Parise CV, Ernst MO. Correlation detection as a general mechanism for multisensory integration. Nat Commun. 2016;7:11543. doi: 10.1038/ncomms11543.

- 49. Shams L, Seitz AR. Benefits of multisensory learning. Trends Cogn Sci. 2008;12(11):411–417. doi: 10.1016/j.tics.2008.07.006.

- 50. Campanella S, Belin P. Integrating face and voice in person perception. Trends Cogn Sci. 2007;11(12):535–543. doi: 10.1016/j.tics.2007.10.001.

- 51. Romanski LM. Integration of faces and vocalizations in ventral prefrontal cortex: implications for the evolution of audiovisual speech. Proc Natl Acad Sci USA. 2012;109(Suppl 1):10717–10724. doi: 10.1073/pnas.1204335109.

- 52. Ross LA, Saint-Amour D, Leavitt VM, Javitt DC, Foxe JJ. Do you see what I am saying? Exploring visual enhancement of speech comprehension in noisy environments. Cereb Cortex. 2007;17(5):1147–1153. doi: 10.1093/cercor/bhl024.

- 53. Laurienti PJ, Kraft RA, Maldjian JA, Burdette JH, Wallace MT. Semantic congruence is a critical factor in multisensory behavioral performance. Exp Brain Res. 2004;158(4):405–414. doi: 10.1007/s00221-004-1913-2.

- 54. Crosse MJ, Butler JS, Lalor EC. Congruent visual speech enhances cortical entrainment to continuous auditory speech in noise-free conditions. J Neurosci. 2015;35(42):14195–14204. doi: 10.1523/JNEUROSCI.1829-15.2015.

- 55. Song YH, Kim JH, Jeong HW, Choi I, Jeong D, Kim K, Lee SH. A neural circuit for auditory dominance over visual perception. Neuron. 2017;93(5):1236–1237. doi: 10.1016/j.neuron.2017.02.026.

- 56. Hoover WB, Vertes RP. Anatomical analysis of afferent projections to the medial prefrontal cortex in the rat. Brain Struct Funct. 2007;212(2):149–179. doi: 10.1007/s00429-007-0150-4.

- 57. Uylings HBM, Groenewegen HJ, Kolb B. Do rats have a prefrontal cortex? Behav Brain Res. 2003;146(1–2):3–17. doi: 10.1016/j.bbr.2003.09.028.

- 58. Funahashi S. Prefrontal cortex and working memory processes. Neuroscience. 2006;139(1):251–261. doi: 10.1016/j.neuroscience.2005.07.003.

- 59. Sharpe MJ, Killcross S. The prelimbic cortex contributes to the down-regulation of attention toward redundant cues. Cereb Cortex. 2014;24(4):1066–1074. doi: 10.1093/cercor/bhs393.

- 60. Euston DR, Gruber AJ, McNaughton BL. The role of medial prefrontal cortex in memory and decision making. Neuron. 2012;76(6):1057–1070. doi: 10.1016/j.neuron.2012.12.002.

- 61. Ito HT, Zhang SJ, Witter MP, Moser EI, Moser MB. A prefrontal-thalamo-hippocampal circuit for goal-directed spatial navigation. Nature. 2015;522(7554):50–55. doi: 10.1038/nature14396.

- 62. Hok V, Save E, Lenck-Santini PP, Poucet B. Coding for spatial goals in the prelimbic/infralimbic area of the rat frontal cortex. Proc Natl Acad Sci USA. 2005;102(12):4602–4607. doi: 10.1073/pnas.0407332102.

- 63. Gold JI, Shadlen MN. The neural basis of decision making. Annu Rev Neurosci. 2007;30:535–574. doi: 10.1146/annurev.neuro.29.051605.113038.

- 64. Henschke JU, Noesselt T, Scheich H, Budinger E. Possible anatomical pathways for short-latency multisensory integration processes in primary sensory cortices. Brain Struct Funct. 2015;220(2):955–977. doi: 10.1007/s00429-013-0694-4.

- 65. Falchier A, Schroeder CE, Hackett TA, Lakatos P, Nascimento-Silva S, Ulbert I, Karmos G, Smiley JF. Projection from visual areas V2 and prostriata to caudal auditory cortex in the monkey. Cereb Cortex. 2010;20(7):1529–1538. doi: 10.1093/cercor/bhp213.

- 66. Conde F, Maire-Lepoivre E, Audinat E, Crepel F. Afferent connections of the medial frontal cortex of the rat. II. Cortical and subcortical afferents. J Comp Neurol. 1995;352(4):567–593. doi: 10.1002/cne.903520407.

- 67. Sharma S, Bandyopadhyay S. Differential rapid plasticity in auditory and visual responses in the primarily multisensory orbitofrontal cortex. eNeuro. 2020;7(3):22. doi: 10.1523/ENEURO.0061-20.2020.

- 68. Sugihara T, Diltz MD, Averbeck BB, Romanski LM. Integration of auditory and visual communication information in the primate ventrolateral prefrontal cortex. J Neurosci. 2006;26(43):11138–11147. doi: 10.1523/JNEUROSCI.3550-06.2006.

- 69. Escanilla OD, Victor JD, Di Lorenzo PM. Odor-taste convergence in the nucleus of the solitary tract of the awake freely licking rat. J Neurosci. 2015;35(16):6284–6297. doi: 10.1523/JNEUROSCI.3526-14.2015.

- 70. Meredith MA, Allman BL, Keniston LP, Clemo HR. Auditory influences on non-auditory cortices. Hear Res. 2009;258(1–2):64–71. doi: 10.1016/j.heares.2009.03.005.

- 71. Yu L, Stein BE, Rowland BA. Adult plasticity in multisensory neurons: short-term experience-dependent changes in the superior colliculus. J Neurosci. 2009;29(50):15910–15922. doi: 10.1523/JNEUROSCI.4041-09.2009.

- 72. Jaramillo S, Zador AM. The auditory cortex mediates the perceptual effects of acoustic temporal expectation. Nat Neurosci. 2011;14(2):246–251. doi: 10.1038/nn.2688.

- 73. Otazu GH, Tai LH, Yang Y, Zador AM. Engaging in an auditory task suppresses responses in auditory cortex. Nat Neurosci. 2009;12(5):646–654. doi: 10.1038/nn.2306.

- 74. Wang Q, Yang ST, Li BM. Neuronal representation of audio-place associations in the medial prefrontal cortex of rats. Mol Brain. 2015;8(1):56. doi: 10.1186/s13041-015-0147-5.

- 75. Yang ST, Shi Y, Wang Q, Peng JY, Li BM. Neuronal representation of working memory in the medial prefrontal cortex of rats. Mol Brain. 2014;7:61. doi: 10.1186/s13041-014-0061-2.

- 76. Britten KH, Shadlen MN, Newsome WT, Movshon JA. The analysis of visual motion: a comparison of neuronal and psychophysical performance. J Neurosci. 1992;12(12):4745–4765. doi: 10.1523/JNEUROSCI.12-12-04745.1992.
