Abstract

Purpose

The purpose of this study was to develop an automated process to analyze multimedia content on Twitter during the COVID-19 outbreak and classify content for radiological significance using deep learning (DL).

Materials and methods

Using Twitter search features, all tweets containing keywords from both “radiology” and “COVID-19” were collected for the period January 01, 2020 to April 24, 2020. The resulting dataset comprised 8354 tweets. Images were classified as (i) images with text, (ii) radiological content (e.g., CT scan snapshots, X-ray images), and (iii) non-medical content such as personal images or memes. We trained our deep learning model, based on convolutional neural networks (CNN), on a dataset of 1040 labeled images drawn from all three classes. We then trained another DL classifier to sort images into categories based on human anatomy. All software used is open-source and was adapted for this research. The diagnostic performance of the algorithm was assessed by comparing its predictions with manual labels on a test set of 1885 images.

Results

Our analysis shows that, of the images in COVID-19-related radiology tweets, nearly 32% contained text, another 24% had radiological content, and 44% were not of radiological significance. Our results indicated a 92% accuracy in classifying images originally labeled as chest X-ray or chest CT and a nearly 99% accurate classification of images containing medically relevant text. With a larger training dataset and algorithmic tweaks, the accuracy can be further improved.

Conclusion

By applying DL to rich textual images and other metadata in tweets, we can process and classify content for radiological significance in real time.

Supplementary Information

The online version contains supplementary material available at 10.1007/s10140-020-01885-z.

Keywords: Social media, COVID-19, Twitter, Image classification, CNN

Introduction

Twitter is increasingly becoming the social media platform of choice for public health professionals, health care providers, and the laity alike. With its global reach, rapid dissemination, and wide adoption by radiologists, radiology associations, and organizations, its importance as a platform for rapidly sharing radiological content is unmatched. This became more evident during the recent COVID-19 pandemic, as the spread of the virus escalated and the role of imaging evolved rapidly. Many radiology leaders and influencers, including radiology journal editors, recognized this opportunity and quickly published COVID-19 research, making it available free of charge [1, 2]. To disseminate this research urgently, the journals relied heavily on Twitter. Our review of popular platforms such as Facebook, Twitter, and Instagram showed a significant presence of radiologists, public health and other health professionals, institutes, government bodies, and multi-disciplinary experts on Twitter, with active sharing, whereas the other platforms are more limited in their adoption by radiologists, reach, and content. Because it was critical for emergency radiologists to recognize both typical and atypical manifestations of COVID-19 on radiological studies, many radiologists relied heavily on Twitter. As recipients of a non-stop Twitter feed, however, radiologists, particularly those at the forefront of the fight against COVID-19 or other public health emergencies, must scan a surfeit of content to find useful information. Twitter's search feature is also limited: content within images cannot be interpreted or searched with text keywords, which makes manually identifying tweets with radiological content a frustrating effort.

Therefore, the purpose of our study was to provide a multi-disciplinary automated solution that uses rich multimedia data from Twitter (tweet metadata and shared images) and state-of-the-art deep learning (DL) techniques, namely computer vision (CV) and image classification, to process and analyze tweet content for radiological significance.

Materials and methods

Data collection

We used the Python programming language and the open-source library Tweepy to collect tweets. Using Twitter search features, all tweets containing keywords from both “radiology” and “COVID-19” were collected for the period Jan 01, 2020 to Apr 24, 2020. The resulting dataset consisted of 8354 tweets from 5760 distinct users, containing 1885 images. For each tweet, the complete metadata was collected in JSON format, and all tweeted images were downloaded for analysis. All software used is open-source and was adapted for this research. An additional set of 1040 images (520 radiological images from Kaggle, plus 260 images with dense medical text and 260 irrelevant images from Google Images) was collected during the same interval for training the algorithm.
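
The keyword filtering and image extraction can be sketched as a local pass over the collected tweet JSON. This is a minimal sketch, not the authors' script: it assumes one Twitter API v1.1 tweet object per line (for example, the dictionary Tweepy keeps in a status object's `_json` attribute), and the keyword lists are hypothetical because the exact search terms are not given. It keeps tweets matching at least one term from each group and collects photo URLs from `extended_entities`.

```python
import json

# Hypothetical keyword groups; the exact search terms used in the study are not listed.
RADIOLOGY_TERMS = ("radiology", "radiologist")
COVID_TERMS = ("covid-19", "covid19", "coronavirus")


def tweet_text(tweet: dict) -> str:
    """Return the tweet text, preferring 'full_text' (extended mode) over 'text'."""
    return tweet.get("full_text") or tweet.get("text") or ""


def matches_both_groups(tweet: dict) -> bool:
    """True if the text contains at least one term from EACH keyword group."""
    text = tweet_text(tweet).lower()
    return any(t in text for t in RADIOLOGY_TERMS) and any(t in text for t in COVID_TERMS)


def photo_urls(tweet: dict) -> list:
    """Collect photo URLs from the v1.1 'extended_entities' media array, if present."""
    media = tweet.get("extended_entities", {}).get("media", [])
    return [m["media_url_https"] for m in media if m.get("type") == "photo"]


def select_tweets(json_lines):
    """Parse one JSON tweet per line; keep (id, photo URLs) for matching tweets."""
    selected = []
    for line in json_lines:
        tweet = json.loads(line)
        if matches_both_groups(tweet):
            selected.append((tweet["id_str"], photo_urls(tweet)))
    return selected


# Made-up example tweets with placeholder URLs.
lines = [
    json.dumps({"id_str": "1", "full_text": "Radiology findings in COVID-19 pneumonia",
                "extended_entities": {"media": [{"type": "photo",
                                                 "media_url_https": "https://example.org/a.jpg"}]}}),
    json.dumps({"id_str": "2", "text": "Radiology grand rounds today"}),
]
print(select_tweets(lines))  # [('1', ['https://example.org/a.jpg'])]
```

Downloading each URL and storing it next to the tweet's JSON would then give the image set used for classification.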

Image classification

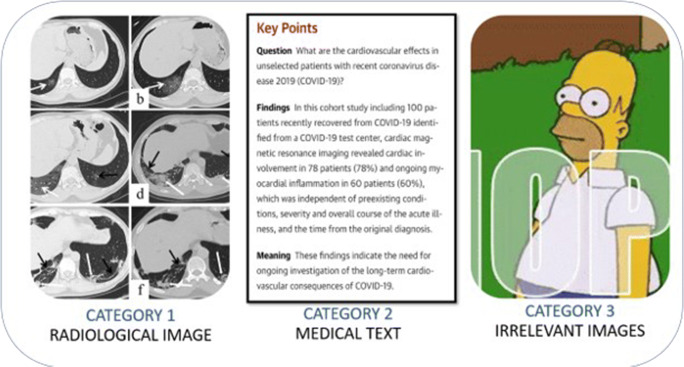

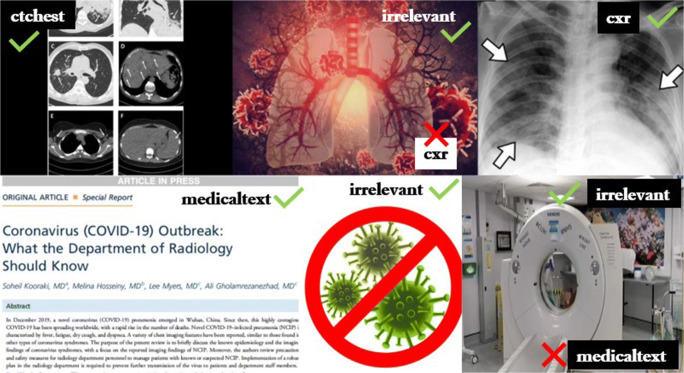

Images were classified as (i) images with text (e.g., containing dense medical text), (ii) radiological image content (e.g., CT scan snapshots, X-ray images), and (iii) non-medical content such as personal images or memes (Fig. 1). The dataset of 1040 images was split into training and validation sets in an 80:20 ratio (832:208 images). After tuning the parameters to obtain a good fit on the validation set, the algorithm underwent a final test on an unseen image dataset, classifying the 1885 images from our Twitter image dataset (which the model assigned as 471 radiological images, 623 images with textual information, and 791 irrelevant images). This allowed us to evaluate performance and estimate diagnostic accuracy. We used convolutional neural networks (CNN) to process and classify images into these three classes (Fig. 2).
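
The 80:20 split can be illustrated in plain Python. This sketch assumes the split was stratified by class, which the text does not state; a plain random split (such as fastai's `RandomSplitter(valid_pct=0.2)`) also yields 832:208 overall but does not guarantee the same proportion within each class. The file names are hypothetical placeholders for the 520/260/260 training pool.

```python
import random
from collections import defaultdict


def stratified_split(labeled, valid_pct=0.2, seed=0):
    """Split (item, label) pairs so every label keeps the same train:valid ratio."""
    by_label = defaultdict(list)
    for item, label in labeled:
        by_label[label].append(item)
    rng = random.Random(seed)  # fixed seed makes the split reproducible
    train, valid = [], []
    for label in sorted(by_label):
        items = by_label[label]
        rng.shuffle(items)
        n_valid = round(len(items) * valid_pct)
        valid += [(x, label) for x in items[:n_valid]]
        train += [(x, label) for x in items[n_valid:]]
    return train, valid


# Hypothetical file names for the 520/260/260 training pool described in the text.
class_sizes = {"radiological": 520, "medicaltext": 260, "irrelevant": 260}
pool = [(f"{name}_{i:03d}.png", name) for name, n in class_sizes.items() for i in range(n)]
train, valid = stratified_split(pool)
print(len(train), len(valid))  # 832 208
```

Stratifying keeps the smaller classes from being under-represented in validation by chance, which matters when one class is twice the size of the others.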

Fig. 1.

Different classes of images

Fig. 2.

Overview of automated Tweet content classification

Deep learning

We trained the deep learning model on separate datasets of chest CT, chest X-ray, textual, and irrelevant images, starting from a pre-trained model, ResNet-34. ResNet-34 can be downloaded with pre-trained weights, so training starts from a model already known to recognize the thousand image categories of ImageNet (a large image database for building and training neural networks). Starting from these weights allowed our model to reach a comparable classifier in fewer training epochs. We built the model that classifies the images collected from Twitter with the “fastai” deep learning library [3], a free and open-source (FOSS) library that is popular for its fast execution and the ease of building DL solutions.

Model performance tuning

To avoid unnecessary epochs and prevent overfitting, we searched for an appropriate learning rate, which trades off the rate of convergence against overshooting. The graph (Fig. 3) plots the model's loss (error) against the learning rate; the steeper the decline in loss, the better the chance of finding the optimal learning rate within that range. Once the weights and biases of the network were trained, we filtered out the images with the top losses (including defective data entries) and duplicates. The trained model was then saved and reloaded to classify the unlabeled Twitter images in the final test.
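
The learning-rate search in Fig. 3 follows the usual learning-rate-finder idea: sweep the rate upward over a short run, record the loss, and choose a rate where the loss falls most steeply. fastai provides this as `Learner.lr_find`; the stdlib sketch below only mimics the steepest-slope heuristic on an already-recorded sweep (the numbers are made up for illustration), together with a simple "top losses" ranking of the kind used to spot defective entries.

```python
import math


def steepest_lr(lrs, losses):
    """Return the lr at the start of the interval where loss drops fastest
    per unit of log10(lr), using differences between consecutive sweep points."""
    if len(lrs) != len(losses) or len(lrs) < 2:
        raise ValueError("need two or more (lr, loss) points of equal length")
    best_i, best_slope = 0, math.inf
    for i in range(len(lrs) - 1):
        d_loss = losses[i + 1] - losses[i]
        d_log_lr = math.log10(lrs[i + 1]) - math.log10(lrs[i])
        slope = d_loss / d_log_lr
        if slope < best_slope:  # most negative slope = steepest decline
            best_i, best_slope = i, slope
    return lrs[best_i]


def top_losses(samples, k):
    """Return the k (name, loss) pairs with the highest loss, for manual review."""
    return sorted(samples, key=lambda s: s[1], reverse=True)[:k]


# Made-up sweep: loss falls, bottoms out, then diverges as lr grows too large.
lrs = [1e-5, 1e-4, 1e-3, 1e-2, 1e-1, 1.0]
losses = [2.0, 1.9, 1.2, 0.9, 1.5, 4.0]
print(steepest_lr(lrs, losses))  # 0.0001
print(top_losses([("img_a", 0.1), ("img_b", 2.3), ("img_c", 0.9)], k=2))  # [('img_b', 2.3), ('img_c', 0.9)]
```

Choosing the steepest-descent region rather than the minimum-loss point leaves a margin before the loss starts to diverge.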

Fig. 3.

Learning rate of training model

Results

Results were interpreted using a confusion matrix, from which we calculated the accuracy measures (Table 1; Fig. 4a, b). Our analysis of the test set showed that, of the 1885 images from COVID-19-related radiology tweets, nearly 32% (601) were rich in medically relevant text (medicaltext), 24% (459) were radiological images (ctchest and cxr), and the remaining 44% (825) were not of radiological significance (irrelevant). Our results indicated a 92% accuracy in classifying images as chest X-ray or chest CT and a nearly 99% accurate text classification, with a total accuracy of 96.4%. With a larger training dataset and algorithmic tweaks, the accuracy can be further improved.
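
The three-way breakdown collapses the model's four labels into the three reporting classes. The sketch assumes the mapping implied by the text (ctchest and cxr both count as radiological) and reproduces the percentages from the true-label totals in Table 1.

```python
from collections import Counter

# Assumed mapping from the four model labels to the three reporting classes.
REPORTING_CLASS = {
    "medicaltext": "medicaltext",
    "ctchest": "radiological",
    "cxr": "radiological",
    "irrelevant": "irrelevant",
}


def class_shares(label_counts):
    """Collapse fine-grained label counts and return (counts, percentages)."""
    grouped = Counter()
    for label, n in label_counts.items():
        grouped[REPORTING_CLASS[label]] += n
    total = sum(grouped.values())
    return dict(grouped), {k: 100 * v / total for k, v in grouped.items()}


# True-label totals from Table 1.
counts, shares = class_shares({"medicaltext": 601, "ctchest": 398, "cxr": 61, "irrelevant": 825})
print(counts)                                    # {'medicaltext': 601, 'radiological': 459, 'irrelevant': 825}
print({k: round(v) for k, v in shares.items()})  # {'medicaltext': 32, 'radiological': 24, 'irrelevant': 44}
```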

Table 1.

Confusion Matrix

| True/predicted | Medicaltext | Ctchest | Cxr | Irrelevant | Actual totals |
|---|---|---|---|---|---|
| Medicaltext | 597 | 1 | 2 | 1 | 601 (32%) |
| Ctchest | 5 | 387 | 0 | 6 | 398 (21%) |
| Cxr | 1 | 5 | 53 | 2 | 61 (3%) |
| Irrelevant | 20 | 18 | 5 | 782 | 825 (44%) |
| Accuracy | 99.3% | 97.2% | 87% | 94.7% | *96.4%* |

The value in italics (bottom-right cell, 96.4%) is the total accuracy of the algorithm.

Fig. 4.

a Confusion matrix for medical text and irrelevant images. b Confusion matrix for Cxr and Ctchest images

Figure 5 shows examples of true and false positives; the corresponding specificity and sensitivity values are listed in Table 2. The classification report showed that the model performed well, with per-class scores in the range of 0.88–0.99 for all classes.

Fig. 5.

Predicted and actual

Table 2.

Statistical measures

| Classes (total predicted) | True positives | True negatives | False positives | False negatives | Specificity | Sensitivity | Negative predictive value |
|---|---|---|---|---|---|---|---|
| Medicaltext (623) | 597 | 1258 | 26 | 4 | 0.979 | 0.993 | 0.996 |
| Ctchest (411) | 387 | 1463 | 24 | 11 | 0.983 | 0.972 | 0.992 |
| Cxr (60) | 53 | 1817 | 7 | 8 | 0.996 | 0.868 | 0.995 |
| Irrelevant (791) | 782 | 1051 | 9 | 43 | 0.991 | 0.947 | 0.961 |

Discussion

In this study, we created a radiology-specific automated solution that uses computer vision and image classification to distinguish clinically relevant from irrelevant tweet content, and COVID-19 text content from COVID-19 image content.

As the results show, the algorithm was highly successful in separating relevant from irrelevant images. It did exceptionally well in identifying images containing medical text, owing to the large number of article/journal images and the easy readability of text in screenshots, since screenshots captured on any device retain the original pixel values of the displayed content. However, sensitivity for chest X-ray images was lower (0.868), given the low clarity of X-ray snapshots taken with cameras of varying resolution and the small number of tweets containing radiographs. Sensitivity and precision could be further improved by increasing the size of the training data and by collecting recent data from more informative tweets.

Over the last few years, Twitter has become the most popular social media platform for information dissemination during radiology meetings [4], continuing professional development (CPD) [5], and the teaching and learning of radiology residents and medical students [6]. We chose Twitter as the platform for classifying images because its data are accessible: it provides an application programming interface (API) that lets us read data published on Twitter. Facebook has relatively less public content, and comparable API access to Facebook or Instagram is not available without additional permissions. Twitter is also preferred over Instagram for news and live updates [7], is the preferred communication channel at most radiology conferences, and has a critical mass of radiologists and other health professionals. This sharing of information has not only improved the transparency and accessibility of knowledge but has also created a collaborative atmosphere among health care providers and researchers, regardless of location and specialty. Despite its vast reach and the convenience of posting and accessing content, Twitter is still not a preferred resource in the radiology reading room during active patient care, because of the overwhelming volume of information, both relevant and irrelevant to patient care. A challenging radiology case during a readout often prompts a Google search and redirection to radiology education websites, online books, or journal articles. However, access to some sites may be blocked, requiring individual registration with payment or an institutional subscription. Some sites may not be mobile friendly, requiring the search to be performed on a desktop computer. Additionally, content may be out of date and may not include all the information, such as variants or atypical presentations.

The latter became critical during the early phase of the pandemic, when radiologists faced the challenge of diagnosing COVID-19 with very limited information. Twitter, on the other hand, because of its vast reach, free accessibility, up-to-date content, and ease of use/familiarity, played a vital role in the rapid dissemination of radiology knowledge among emergency radiologists during the early phase of the pandemic.

A significant limitation of Twitter is information clutter, with no ability to distinguish content by its relevance to radiologists. While there has been research on content analysis on Twitter, it has largely been restricted to text analysis. Outside Twitter, the literature shows a rising trend in the use of deep learning techniques to classify radiological images, but such research has not addressed the classification of image datasets containing a random mix of radiological and non-radiological images. This clutter leads to inefficient use and costs radiologists time and attention, because they must manually scan through images to find relevant results. We provide a deep learning approach to solve this problem by filtering out non-radiological content. To the best of our knowledge, there has been no past research on identifying and filtering tweets for the presence of relevant radiological content.

Secondly, given the ease of taking screenshots on mobile devices and sharing them with a simple click on Twitter, many users prefer to share research abstracts or short texts as images. Because it is restricted to text, the Twitter search feature cannot search images or text shared in image format. Third-party applications use optical character recognition (OCR) to recognize text within images, but they must be run manually, one image at a time, which makes them inefficient for searching through the thousands of images uploaded to Twitter. Because text-containing screenshots account for a significant share of Twitter activity, we plan to go a step further by converting such images to text and interpreting it with natural language processing (NLP), which can use lemmatization and unsupervised semantic classification techniques to sort the medical text images by relevance. Our research uses deep learning to identify and automatically classify images within Twitter. Follow-up research can build on it by interpreting the text extracted from images identified with our approach, which would further improve the classifier accuracy and the utility of the solution. We are not aware of any radiological study on Twitter that uses such techniques, although there have been some attempts in emergency events and disaster management [8], where Twitter feeds were used primarily for location detection. Another Twitter content analysis study examined the consonance between images posted on Twitter and their corresponding text, and whether any visual relevance existed [9]. Apart from image analysis, some studies have worked solely on text, using semi-supervised classification techniques to filter relevant Twitter text for medical syndromic surveillance [10].

Thirdly, to provide a comprehensive solution for radiologists, the content is labeled by the imaging modality used and the anatomical region captured in the image. Such automated, bulk identification of content for quick filtering and classification has become possible only recently, with the advent of deep learning.
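
One way to organize this labeling is a two-stage cascade. The sketch below is hypothetical: `content_model` and `anatomy_model` stand in for any trained classifiers (here, any callable that returns a label string), and it assumes the anatomy classifier runs only on images that the first model labels as radiological, which the text does not specify.

```python
from typing import Callable, Optional

# Assumed: only images with this content label are passed to the anatomy classifier.
RADIOLOGICAL_LABELS = {"radiological"}


def label_image(image, content_model: Callable, anatomy_model: Callable) -> dict:
    """Two-stage labeling: content class first, anatomy only for radiological images."""
    content = content_model(image)
    anatomy: Optional[str] = None
    if content in RADIOLOGICAL_LABELS:
        anatomy = anatomy_model(image)  # second model is skipped for text/irrelevant images
    return {"content": content, "anatomy": anatomy}


# Stub classifiers standing in for trained models (hypothetical).
print(label_image("scan.png", lambda img: "radiological", lambda img: "chest"))
print(label_image("meme.png", lambda img: "irrelevant", lambda img: "chest"))
```

Running the anatomy model only on radiological images avoids spending inference time on the majority of images, which are text or irrelevant.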

During the COVID-19 response since February 2020, such automated applications for collecting, converting, classifying, interpreting, and analyzing radiological content have become potent tools for the global dissemination and assimilation of radiological content. Because the approach and techniques used in our research generalize to other topics and keywords, the model can be adapted to radiological content in other public health emergencies, given suitable training data.

Compared with other sources such as journals or a Google search, Twitter has distinct advantages. It is widely popular because it represents what has been described as a “free marketplace of ideas”, which is essential for research. Additionally, journalists, radiologists, and public health officials have been using Twitter to break news of new developments [7]. Twitter serves the twin purposes of speedy content dissemination and short messaging. It does not purport to be a portal hosting full content such as research papers or radiological image corpora; it acts only as a pointer to resources, links, and other users. However, fake news on health matters has been a menace on all media during the pandemic, including Twitter. The easy reuse of images, and the resulting redundancy of content, are further limitations of Twitter.

Many users report that they learn more radiology on Twitter than from any other single source [11, 12]. Educators from virtually every radiology subspecialty create original learning content, generically referred to as “FOAMrad”, an acronym for “Free, Open-Access Medical education in radiology” [13]. Twitter is a popular platform among emergency radiologists and residents. Its content is concise and linked through hashtags. Because educational content can be delivered in small, bite-size units that require only minutes of learners’ time [14], learning on Twitter is easy.

In multi-disciplinary public health emergencies such as the recent COVID-19 pandemic, rapid sharing of multimedia radiological information is critical. Apart from Twitter, there are no suitable platforms with the required versatility, speed, reach, and flexibility of content. If our approach can overcome the limitations of Twitter described above, radiologists will have a truly unique way to use Twitter efficiently.

Our study has several limitations

All tweets were labeled by a recently graduated physician who was not a radiologist, although aspiring to become one. The algorithm was developed during the early phase of the pandemic, resulting in a limited number of tweets with sparse content on COVID-19. As knowledge of COVID-19 continues to evolve, tweet content is changing, which could produce different results. As with all deep learning classifiers, the training dataset must be representative of the actual test images to be classified. Hence, for any similar future applications, the training dataset will have to be freshly collected and our programming scripts rerun to obtain results. To deploy this software, an additional application will have to be downloaded and integrated with Twitter. As a social networking and microblogging site, Twitter also has multiple drawbacks: the lack of peer review or authenticity, the possibility that the information dispensed is inaccurate, and censorship laws affecting the legitimacy of the knowledge shared online. Our results are also subject to parameter tuning, which may vary with the source image datasets. Even though our accuracy is quite good, ensuring high accuracy is crucial, because users are likely to give up if irrelevant images show up. Finally, Twitter content could be biased.

In conclusion, Twitter is becoming a popular platform for education and the rapid dissemination of radiology information. However, because of the overwhelming volume of information and irrelevant content, emergency radiologists and residents on call are often hesitant to use it in the reading room. We have attempted to create an algorithm that selectively filters out tweets containing text and images with no radiological relevance.

Supplementary Information

(JPG 79 kb)

Author’s contribution

Bharti Khurana:

GE Healthcare Research Support

Book Royalties, Cambridge University Press

Section Editor Royalties, UpToDate, Wolters Kluwer

Compliance with ethical standards

Conflict of interest

The authors declare that they have no conflict of interest.

Footnotes

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

1. RSNA Responds to COVID-19 outbreak with rapid publication of original research and images. https://www.rsna.org/news/2020/April/Rapid-Publication-COVID-19-Research. Accessed September 19, 2020.
2. COVID-19 Resources. https://www.rsna.org/covid-19. Accessed September 28, 2020.
3. Learner for the vision applications | fastai. https://docs.fast.ai/vision.learner. Accessed September 28, 2020.
4. Hawkins CM, Duszak R, Rawson JV. Social media in radiology: early trends in Twitter microblogging at radiology’s largest international meeting. J Am Coll Radiol. 2014;11(4):387–390. doi: 10.1016/j.jacr.2013.07.015
5. Currie G, Woznitza N, Bolderston A, Westerink A, Watson J, Beardmore C, di Prospero L, McCuaig C, Nightingale J. Twitter Journal Club in Medical Radiation Science. J Med Imaging Radiat Sci. 2017;48(1):83–89. doi: 10.1016/j.jmir.2016.09.001
6. Miles RC, Patel AK. The radiology twitterverse: a starter’s guide to utilization and success. J Am Coll Radiol. 2019;16(9):1225–1231. doi: 10.1016/j.jacr.2019.03.014
7. Newman N. Reuters Institute Digital News Report 2020. (n.d.):112.
8. Kumar A, Singh JP, Dwivedi YK, Rana NP. A deep multi-modal neural network for informative Twitter content classification during emergencies. Ann Oper Res. 2020. doi: 10.1007/s10479-020-03514-x
9. Chen T, Lu D, Kan M-Y, Cui P. Understanding and classifying image tweets. In: Proceedings of the 21st ACM International Conference on Multimedia (MM ’13). Barcelona, Spain: ACM Press; 2013. p. 781–784. doi: 10.1145/2502081.2502203
10. Edo-Osagie O, Smith G, Lake I, Edeghere O, Iglesia BDL. Twitter mining using semi-supervised classification for relevance filtering in syndromic surveillance. PLoS One. 2019;14(7):e0210689. doi: 10.1371/journal.pone.0210689
11. 3 reasons why radiology leaders need to be on Twitter or risk falling behind. https://www.radiologybusiness.com/topics/leadership/radiology-leaders-need-be-twitter. Accessed September 19, 2020.
12. Gonzalez SM, Gadbury-Amyot CC. Using Twitter for teaching and learning in an oral and maxillofacial radiology course. J Dent Educ. 2016;80(2):149–155. doi: 10.1002/j.0022-0337.2016.80.2.tb06070.x
13. Rosenberg H, Syed S, Rezaie S. The Twitter pandemic: the critical role of Twitter in the dissemination of medical information and misinformation during the COVID-19 pandemic. CJEM. 2020;22(4):418–421. doi: 10.1017/cem.2020.361
14. Ranginwala S, Towbin AJ. Use of social media in radiology education. J Am Coll Radiol. 2018;15(1):190–200. doi: 10.1016/j.jacr.2017.09.010
