Abstract

Auditory steady‐state responses (ASSRs) are evoked brain responses to modulated or repetitive acoustic stimuli. Investigating the underlying neural generators of ASSRs is important to gain in‐depth insight into the mechanisms of auditory temporal processing. The aim of this study is to reconstruct an extensive range of neural generators, that is, cortical and subcortical, as well as primary and non‐primary ones. This extensive overview of neural generators provides an appropriate basis for studying functional connectivity. To this end, a minimum‐norm imaging (MNI) technique is employed. We also present a novel extension to MNI which facilitates source analysis by quantifying the ASSR for each dipole. Results demonstrate that the proposed MNI approach is successful in reconstructing sources located both within (primary) and outside (non‐primary) of the auditory cortex (AC). Primary sources are detected in different stimulation conditions (four modulation frequencies and two sides of stimulation), thereby demonstrating the robustness of the approach. This study is one of the first investigations to identify non‐primary sources. Moreover, we show that the MNI approach is also capable of reconstructing the subcortical activities of ASSRs. Finally, the results obtained using the MNI approach outperform the group‐independent component analysis method on the same data, in terms of detection of sources in the AC, reconstructing the subcortical activities and reducing computational load.

Keywords: auditory evoked potentials, auditory pathways, brain mapping, brainstem, cerebral cortex, electroencephalography, phase synchronization

Investigating the underlying neural generators of auditory steady‐state responses (ASSRs; evoked brain responses to modulated or repetitive acoustic stimuli) is important to gain in‐depth insight into the mechanisms of auditory temporal processing. In this study, a minimum‐norm imaging (MNI) technique is employed to simultaneously reconstruct a wide range of neural sources of ASSRs, including cortical (primary and non‐primary) and subcortical sources. We present a novel extension to MNI which facilitates source analysis by quantifying the ASSR for each dipole and developing the ASSR map.

1. INTRODUCTION

The temporal envelope of the speech signal fluctuates between 2 and 50 Hz and transmits both phonetic and prosodic information (Rosen, 1992). In particular, continuous speech yields pronounced low‐frequency modulations (between 2 and 20 Hz) in its temporal envelope: very low‐frequency amplitude modulations in sounds signal the occurrence of syllables (±4 Hz, ±250 ms), and phonemes (15–20 Hz, ±50 ms), and drive speech perception (Poeppel, 2003). These low‐frequency modulations are both necessary and almost sufficient for accurate speech perception (e.g., Drullman, Festen, & Plomp, 1994; Shannon, Zeng, Kamath, Wygonski, & Ekelid, 1995). Temporal processing of acoustic modulations can be investigated by analyzing auditory steady‐state responses (ASSRs) in the EEG. In response to amplitude modulated (AM) stimuli, afferent neurons in the central auditory system synchronize their firing patterns to a particular phase of these stimuli and generate the phase‐locked responses known as ASSRs (Picton, John, Dimitrijevic, & Purcell, 2003). These evoked potentials reflect the capability of the auditory system to follow the timing patterns of auditory stimuli. The excellent temporal resolution of EEG is highly suitable to capture these phase‐locked responses. EEG provides rich information about the dynamics of these responses and their underlying brain networks. Generally, EEG is highly relevant to study auditory temporal processing (He, Sohrabpour, Brown, & Liu, 2018; Picton, 2013). In the present study, amplitude modulations at 4 and 20 Hz are presented as a model of the rate of occurrence of syllables and phonemes. Relatively high modulation frequencies at 40 and 80 Hz are also investigated, because it is believed that these rates can activate more subcortical neural generators than cortical ones (Herdman et al., 2002).

ASSRs are also used clinically to determine hearing thresholds (Picton, Dimitrijevic, Perez‐Abalo, & van Roon, 2005), to assess suprathreshold hearing across age (Goossens, Vercammen, Wouters, & van Wieringen, 2016), to monitor the state of arousal during anesthesia (Plourde & Picton, 1990), and to open doors to neuroscience research.

In order to gain more insight into the mechanisms underlying auditory temporal processing it is necessary to reconstruct the cortical and subcortical sources related to ASSRs. The reconstruction (localization) techniques are not straightforward and yield different numbers and locations of sources, dependent on the applied procedure and its prior assumptions. Most electrophysiological studies have used dipole source analysis to localize ASSR generators and reported that cortical sources of ASSRs were located bilaterally in the primary auditory cortex (AC, in the supratemporal plane) (Herdman et al., 2002; Kuriki, Kobayashi, Kobayashi, Tanaka, & Uchikawa, 2013; Poulsen, Picton, & Paus, 2007; Schoonhoven, Boden, Verbunt, & de Munck, 2003; Spencer, 2012; Teale et al., 2008). While the above‐mentioned studies involved prior assumptions regarding the number and location of sources, other studies, using minimal prior assumptions, have reported that the ASSR may reflect activity in even more widely distributed regions of the brain, beyond the AC (Farahani, Goossens, Wouters, & van Wieringen, 2017; Farahani, Wouters, & van Wieringen, 2019; Reyes et al., 2005). Sources located within the AC are considered primary sources, those located outside the AC are considered non‐primary ones (Farahani et al., 2019). The number and location of the non‐primary sources are still a matter of debate and need to be investigated using source modeling techniques with minimal restrictions about the location of the sources.

Farahani et al. (2019) reconstructed ASSR sources using group‐independent component analysis (ICA), a data‐driven approach with minimal prior assumptions about the number and location of the sources. Although the group‐ICA approach was successful in reconstructing primary and non‐primary sources, for some stimulation conditions (e.g., 20 Hz AM stimuli presented to the right ear) the expected primary sources in the AC were not detected. Moreover, Farahani et al. (2019) detected a subcortical source near the thalamus only for 80 Hz ASSR, while fMRI studies show that the subcortical activations are also expected in the brainstem, midbrain, and also at other modulation frequencies (Overath, Zhang, Sanes, & Poeppel, 2012; Steinmann & Gutschalk, 2011). Reconstruction of the subcortical sources from the electrophysiological measurements with high temporal resolution like EEG can provide extremely important information about early bottom‐up processing and neural synchronization along the auditory pathway, such as the strength of response, the delay of response (phase delay), and the phase‐locking ability (temporal precision) to different modulation frequencies.

In sum, a broad view of the neural sources of ASSRs, including a wide range of cortical (primary and non‐primary) and subcortical sources, is lacking. An extended overview of neural generators (i.e., all possible sources simultaneously) increases our understanding of the mechanisms underlying auditory temporal processing and is essential for developing functional connectivity networks, because unobserved neural sources can introduce spurious connections in the networks (Bastos & Schoffelen, 2016).

Besides the data‐driven methods of source reconstruction such as ICA, other methods use a volume conduction model of the head to reconstruct the sources. The use of head‐model information can be helpful to consistently and simultaneously reconstruct an extensive range of neural sources. The two major groups of source reconstruction methods that are based on head‐model information and impose minimal restrictions on the number and location of the sources are beamforming and minimum‐norm imaging (MNI) (Grech et al., 2008; Michel et al., 2004). Some recent studies have tested beamforming techniques for ASSR source analysis (Luke, de Vos, & Wouters, 2017; Popescu, Popescu, Chan, Blunt, & Lewine, 2008; Popov, Oostenveld, & Schoffelen, 2018; Wong & Gordon, 2009). They used beamforming techniques with a supplementary preprocessing step to suppress the correlated source from the other hemisphere, because these techniques assume that spatially distinct sources are temporally uncorrelated. However, these studies could only reconstruct the primary sources in the AC, not any of the non‐primary ones. We therefore use MNI in the current study to obtain a broad view of neural sources including the cortical (primary and non‐primary) and subcortical sources. MNI is a distributed source modeling approach, which considers a large number of equivalent current dipoles in the brain and estimates the amplitude of all dipoles (for each time point) to reconstruct a current distribution (a source distribution map) with minimum overall energy (Grech et al., 2008; Hämäläinen & Ilmoniemi, 1994; Lin et al., 2006; Stenroos & Hauk, 2013).

The main objective of the current study is to reconstruct a wide range of the neural sources of ASSRs, including primary and non‐primary sources at the cortical level, as well as subcortical ones. To accomplish this, we use a source reconstruction approach based on MNI. Classically, MNI provides a source distribution map for each time point. This map (or a series of these maps) does not directly show the strength of the ASSRs for each dipole, which makes interpretation difficult. The novelty of the current study regarding the approach is to propose an extension to MNI which facilitates source analysis of ASSRs by quantifying the ASSR for each dipole. We hypothesize that the MNI‐based approach is capable of reconstructing cortical and subcortical sources of ASSRs for different stimulation conditions. We compare the location of reconstructed sources and their activity with previous findings from imaging techniques to verify the validity of the results and the viability of the approach. The robustness of the approach is examined for acoustic modulations at 4, 20, 40, and 80 Hz presented monaurally to the left and right ears. Additionally, subcortical activity is compared with cortical activity for low and high modulation frequencies to investigate whether the subcortical activity is physiologically plausible. For low modulation frequencies higher cortical activity than subcortical activity is expected, while for high modulation frequencies more subcortical activity is expected (Giraud et al., 2000; Herdman et al., 2002; Liégeois‐Chauvel, Lorenzi, Trébuchon, Régis, & Chauvel, 2004). The second objective is to compare the MNI approach with group‐ICA on the same data (Farahani et al., 2019) to determine which approach is more effective for the reconstruction of ASSR sources. The main structural difference between MNI and group‐ICA is related to the use of a head‐model. The MNI approach is applied on the same recordings as described in Farahani et al. (2019) and then compared with group‐ICA with regard to detection of sources in the AC, reconstruction of subcortical sources, and computational load. In sum, this study is novel in that it not only focuses on the simultaneous reconstruction of a wide range of cortical and subcortical sources but also facilitates source analysis of ASSRs by developing a frequency‐specific brain map.

2. METHODS AND MATERIALS

2.1. Participants

The EEG recordings were adopted from Goossens et al. (2016), who included 19 young adults (20–30 years of age, nine men) with clinically normal audiometric thresholds in both ears (≤25 dB HL, 125 Hz–4 kHz). All participants were Dutch native speakers and right‐handed as assessed by the Edinburgh Handedness Inventory (Oldfield, 1971). They showed no indication of mild cognitive impairment as assessed by the Montreal Cognitive Assessment Task (Nasreddine et al., 2005).

2.2. Stimuli and procedures

The stimuli were 100% AM white noise (bandwidth of one octave, centered at 1 kHz) at 3.91, 19.53, 40.04, and 80.08 Hz. These values were chosen to yield an integer number of cycles in an epoch of 1.024 s (John & Picton, 2000). The stimuli were presented monaurally to the left and right ears at 70 dB SPL through ER‐3A insert phones. Each stimulus type was presented continuously for 300 s. The order of stimulus presentation was randomized among participants.
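The integer‐cycle property can be checked with a few lines of arithmetic. The sketch below is illustrative only; the function name `cycles_per_epoch` is hypothetical, and the only inputs are the epoch length and the nominal modulation frequencies stated above.

```python
# Epoch length in seconds and nominal modulation frequencies in Hz,
# both taken from the text.
EPOCH_S = 1.024
MOD_FREQS_HZ = [3.91, 19.53, 40.04, 80.08]


def cycles_per_epoch(freq_hz, epoch_s=EPOCH_S):
    """Return (nearest integer cycle count, exact rate giving that count)."""
    n_cycles = round(freq_hz * epoch_s)
    exact_hz = n_cycles / epoch_s
    return n_cycles, exact_hz


for f in MOD_FREQS_HZ:
    n, exact = cycles_per_epoch(f)
    print(f"{f:6.2f} Hz -> {n:2d} cycles per epoch, exact rate {exact:.4f} Hz")
```

Under this reading, the nominal 40.04 and 80.08 Hz rates correspond to 41 and 82 cycles per epoch, and each rounded value lies within 0.005 Hz of the exact rate.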

The testing procedure was designed to ensure passive listening to the AM stimuli during a wakeful state. In this procedure, participants were lying on a bed and watched a muted movie with subtitles. They were encouraged to lie quietly and relaxed during the experiment to avoid muscle and movement artifacts. The experiment was performed in a double‐walled soundproof booth with Faraday cage.

2.3. EEG recording parameters

The EEG signals were picked up by 64 active Ag/AgCl electrodes mounted in head caps based on the 10–10 electrode system. These signals were amplified and recorded using the BioSemi ActiveTwo system at a sampling rate of 8,192 Hz with a gain of 32.25 nV/bit.

2.4. EEG source analysis

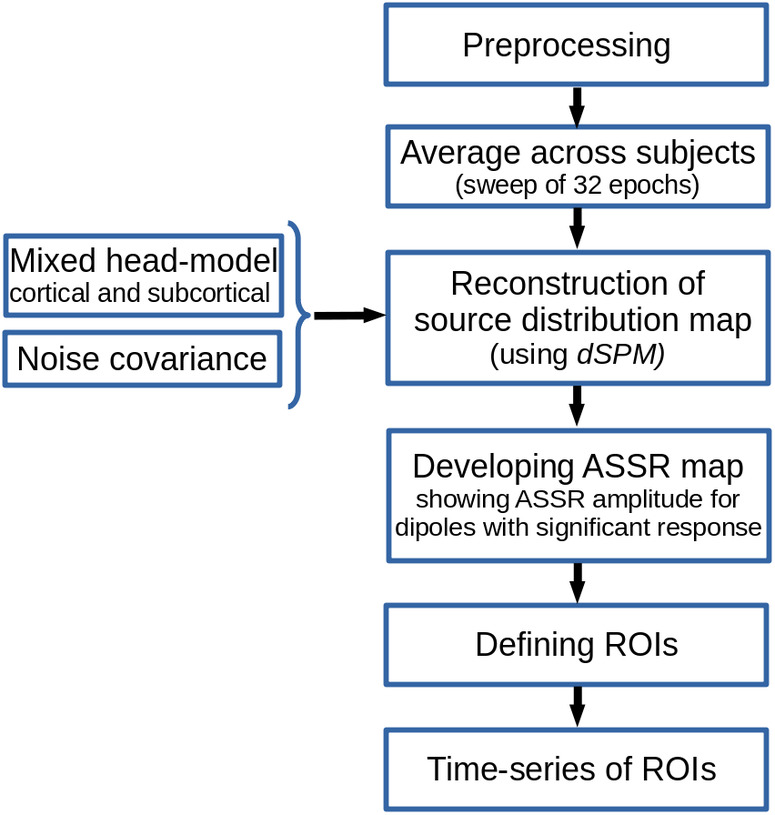

In response to AM stimuli, the central auditory system generates ASSRs. Figure 1 illustrates the pipeline for reconstructing brain sources of ASSRs based on MNI. In this pipeline, MNI was applied to the preprocessed EEG data and a source distribution map showing the activities of different cerebral regions was obtained for each time point. Subsequently, the source distribution map was transformed into the frequency domain and the ASSRs were calculated for each dipole in order to develop the ASSR map. Finally, the regions of interest (ROIs) were defined and their activities were extracted for further analyses. In the following paragraphs, the different steps of the pipeline are explained in more detail.

FIGURE 1. Sketch of the minimum‐norm imaging (MNI) pipeline for auditory steady‐state response (ASSR) source reconstruction

2.4.1. Preprocessing

EEG data of each stimulation condition (four modulation frequencies and two sides of stimulation; left ear and right ear) were preprocessed separately in MATLAB R2016b (MathWorks). To avoid low‐frequency distortions caused by skin potentials and/or drift of the amplifier, raw EEG data were filtered by a zero‐phase high‐pass Butterworth filter with a cut‐off frequency of 2 Hz (12 dB/octave). The continuous filtered data were segmented into epochs of 1.024 s and submitted to an early noise reduction procedure consisting of the following three steps:

Channel rejection: The mean of the maximum absolute amplitude of all epochs was calculated for each of the 64 channels separately and considered as an index of maximum amplitude. A channel was rejected if its maximum amplitude index exceeded 100 μV.

Recording rejection: Blocks of EEG recording with more than five rejected channels were excluded from further analyses. On average, 1.3 (SD of 0.5) recordings were excluded across all four modulation frequencies and two sides of stimulation.

Epoch rejection: For each epoch, the highest peak‐to‐peak (PtoP) amplitude of the signals in the remaining channels was extracted as an index of PtoP of that epoch. Then, the 10% of epochs with the highest PtoP amplitude were rejected.
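The three rejection steps can be sketched as follows. This is a minimal illustration, not the study's MATLAB code: the data layout (`epochs[e][c]` holding the samples of channel `c` in epoch `e`, in μV), the function names, and the rounding of the 10% fraction are all assumptions.

```python
def max_amplitude_index(epochs, channel):
    """Mean over epochs of the maximum absolute amplitude in one channel."""
    peaks = [max(abs(v) for v in epoch[channel]) for epoch in epochs]
    return sum(peaks) / len(peaks)


def rejected_channels(epochs, limit_uv=100.0):
    """Channels whose maximum amplitude index exceeds the limit."""
    n_channels = len(epochs[0])
    return [c for c in range(n_channels)
            if max_amplitude_index(epochs, c) > limit_uv]


def recording_is_usable(bad_channels, max_bad=5):
    """A recording is excluded when more than max_bad channels are rejected."""
    return len(bad_channels) <= max_bad


def reject_noisiest_epochs(epochs, bad_channels, fraction=0.10):
    """Drop the fraction of epochs with the highest peak-to-peak index."""
    bad = set(bad_channels)

    def ptop_index(epoch):
        # Highest peak-to-peak amplitude over the remaining channels.
        return max(max(ch) - min(ch)
                   for c, ch in enumerate(epoch) if c not in bad)

    n_drop = round(fraction * len(epochs))  # rounding rule is an assumption
    order = sorted(range(len(epochs)),
                   key=lambda i: ptop_index(epochs[i]), reverse=True)
    dropped = set(order[:n_drop])
    return [ep for i, ep in enumerate(epochs) if i not in dropped]
```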

After early noise reduction, the EEG data were re‐referenced to a common average over all remaining EEG channels. To eliminate artifacts such as eye blinks, eye movements and heartbeats, ICA was applied to the re‐referenced data using Infomax in the Fieldtrip toolbox (Oostenveld, Fries, Maris, & Schoffelen, 2011). Noisy independent components were recognized by visual inspection and removed. The remaining components were used to reconstruct the clean EEG data. Subsequently, missing channels, which had been rejected by the early noise reduction procedure, were interpolated using the spherical spline method (Perrin, Pernier, & Bertrand, 1989) implemented in the Fieldtrip toolbox (Oostenveld et al., 2011). The regularization parameter and the order of interpolation were set to 10⁻⁸ and 3, respectively, because these values lead to fewer distortions in temporal features of interpolated channels (Kang, Lano, & Sponheim, 2015).

Finally, to avoid residual artifacts not accounted for by ICA, the epochs with a maximum absolute amplitude higher than 70 μV in any channel were removed. To have the same number of epochs across participants, the first 192 artifact‐free epochs (6 sweeps of 32 epochs) were selected for subsequent analyses. If fewer than 192 epochs could be retained, the amplitude threshold was gradually increased (in steps of 5 μV, up to a maximum of 110 μV) to find at least 192 artifact‐free epochs.
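The adaptive threshold can be written as a short loop. The sketch below assumes the same hypothetical layout `epochs[e][c]` (samples in μV); the function name and the choice to raise an error when even 110 μV leaves too few epochs are assumptions, since the text does not say how that case was handled.

```python
def select_clean_epochs(epochs, n_needed=192, start_uv=70.0,
                        step_uv=5.0, max_uv=110.0):
    """Return (indices of the first n_needed clean epochs, threshold used)."""
    # Peak absolute amplitude over all channels, computed once per epoch.
    peaks = [max(abs(v) for ch in ep for v in ch) for ep in epochs]
    threshold = start_uv
    while threshold <= max_uv:
        # An epoch is kept when no channel exceeds the current threshold.
        clean = [i for i, p in enumerate(peaks) if p <= threshold]
        if len(clean) >= n_needed:
            return clean[:n_needed], threshold
        threshold += step_uv
    # Behaviour beyond the maximum threshold is not described in the text.
    raise ValueError("not enough artifact-free epochs")
```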

2.4.2. Mixed head‐model

It is very common to use a cortical surface head‐model for brain mapping. Restricting the source space to the cortex is mainly based on the assumption that most of the electrical activity recorded by EEG comes from the cerebral cortex. However, this assumption is not always valid. Recent studies showed that, although the expected signal‐to‐noise ratios of subcortical activities are poor, the activity of deep brain structures (deep sources) can be reconstructed from EEG (Attal et al., 2009; Attal & Schwartz, 2013; Seeber et al., 2019). The use of steady‐state paradigms or a high number of trials is beneficial because it accumulates data samples, which helps to reconstruct subcortical activities (Attal et al., 2009).

fMRI studies showed that ASSRs also have some generators at the subcortical level (Coffey, Herholz, Chepesiuk, Baillet, & Zatorre, 2016; Langers, Van Dijk, & Backes, 2005; Overath et al., 2012; Steinmann & Gutschalk, 2011). In the current study, to be able to investigate the subcortical sources as well as cortical ones, a mixed head‐model was generated consisting of cortical (cortex) and subcortical regions (thalamus and brainstem). This head‐model was generated based on the template anatomy ICBM152 (nonlinear average of 152 individual magnetic resonance scans, Fonov et al., 2011) and the default channel location file in the Brainstorm application (Tadel et al., 2019; Tadel, Baillet, Mosher, Pantazis, & Leahy, 2011). The head‐model was developed using the boundary element method as implemented in OpenMEEG (Gramfort, Papadopoulo, Olivi, & Clerc, 2010), and consisted of three compartments, that is, brain (cortical and subcortical), skull, and scalp with conductivity values of 0.33, 0.0041, and 0.33 S/m, respectively. Using the Brainstorm application (Tadel et al., 2011, 2019), the surface model of the cortex (triangulation of the cortical surface) was combined with the volume model (three‐dimensional dipole grid) of the thalamus and brainstem. For the surface model, a dipole orthogonal to the surface was used at each grid point, while for the volume model, three dipoles with orthogonal orientations were considered at each grid point. It was shown that the majority of the neural activity recorded by the EEG comes from the pyramidal neurons, which are oriented orthogonally to the cortical surface (He & Ding, 2013). Therefore, it is reasonable to reduce the number of parameters which should be estimated by using only one dipole orthogonal to the cortical surface at each grid point. However, this assumption is not applicable to the subcortical regions, and therefore three dipoles were employed for those analyses.

2.4.3. Noise covariance

The noise covariance required for source reconstruction was estimated on the basis of the silence EEG data, that is, EEG recorded in the absence of auditory stimulation, while the participants were watching a muted movie with subtitles. For each participant, the silence data were recorded in two blocks of 150 s, before and after the main ASSR recordings.

For each modulation frequency, the noise covariance was calculated separately. First, the preprocessed silence data were filtered using a zero phase band‐pass Butterworth filter with a bandwidth of 4 Hz and modulation frequency as center frequency. Then, the filtered silence data of all subjects were concatenated and used to calculate the covariance matrix.

2.4.4. Reconstruction of the source distribution map

The brain source activities were reconstructed using dynamic statistical parametric mapping (dSPM, Dale et al., 2000) implemented in Brainstorm. dSPM provides a noise‐normalized minimum‐norm solution through normalization with the estimated noise at each source (Lin et al., 2006). This normalization reduces the bias toward superficial sources, which occurs with the standard minimum norm solution (Hauk, Wakeman, & Henson, 2011; Lin et al., 2006). The matrix of reconstructed sources was calculated as:

$$S = K_{\mathrm{dSPM}}\,X \tag{1}$$

where $S$ is the matrix of reconstructed sources, $K_{\mathrm{dSPM}}$ is the imaging kernel of dSPM obtained from Brainstorm, and $X$ is the 64‐channel EEG data.

The regularization parameter (λ²) required for dSPM is related to the level of the noise present in the recorded data (Ghumare, Schrooten, Vandenberghe, & Dupont, 2018) and can be calculated as λ² = 1/SNR², where SNR is the signal‐to‐noise ratio (S/N, based on the amplitude) of the whitened EEG data (Bradley, Yao, Dewald, & Richter, 2016; Ghumare et al., 2018; Hincapié et al., 2016). The aim of whitening is to remove the underlying correlation in multivariate data and to set the variance of each variable to 1. The required whitening operator was calculated based on the noise covariance matrix in Brainstorm. In order to calculate the SNR, the whitened EEG data were transformed to the frequency domain using a fast Fourier transform (FFT). The spectral amplitudes at the respective modulation frequencies were extracted for all channels and considered as response amplitudes. Since the response amplitude varies across channels due to the relative position of the channel to the brain sources of ASSRs, the maximum response amplitude was considered the signal of interest (S). The noise level at each EEG channel was estimated based on the average of 30 neighboring frequency bins on each side of the response frequency bin. The median of the noise level of the EEG channels was considered the noise level of measurements (N).
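The calculation of the regularization parameter can be sketched as below. It is a minimal illustration under stated assumptions: `spectra[c][k]` is a hypothetical layout holding the spectral amplitude of whitened channel `c` at frequency bin `k`, `k0` is the response bin (with at least 30 bins available on each side), and the noise is averaged over amplitudes rather than powers because the SNR is described as amplitude‐based.

```python
from statistics import mean, median


def channel_noise(amplitudes, k0, n_side=30):
    """Mean amplitude of n_side bins on each side of the response bin k0."""
    neighbours = amplitudes[k0 - n_side:k0] + amplitudes[k0 + 1:k0 + 1 + n_side]
    return mean(neighbours)


def regularization_parameter(spectra, k0, n_side=30):
    """Return lambda^2 = 1 / SNR^2 for the whitened channel spectra."""
    # Signal of interest S: maximum response amplitude across channels.
    signal = max(ch[k0] for ch in spectra)
    # Noise level N: median across channels of the per-channel noise level.
    noise = median(channel_noise(ch, k0, n_side) for ch in spectra)
    snr = signal / noise
    return 1.0 / snr ** 2
```

With a response amplitude of 4 against a noise level of 1, for example, the SNR is 4 and λ² is 1/16.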

When a template MRI and a template channel location are used for source localization, a group‐wise framework for source analysis can lead to a higher localization accuracy than individual‐level analyses (Farahani et al., 2019). To obtain a group‐wise framework for the current study, the epochs of each participant were divided into sweeps of 32 concatenated epochs and averaged across participants to obtain a grand‐averaged sweep before applying the imaging kernel of dSPM. Since calculating source activities based on the imaging kernel (Equation (1)) is a linear transformation, applying the imaging kernel to the grand‐averaged sweep is equivalent to first applying the imaging kernel to the sweep of each subject and then averaging the outcome maps across all participants.
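The linearity argument can be verified numerically on toy data. The kernel and sweeps below are made‐up small matrices (3 sources, 2 channels, 3 samples, 2 participants), not values from the study, and the helper names are hypothetical.

```python
def matmul(a, b):
    """Plain matrix product of two nested-list matrices."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


def mean_matrices(mats):
    """Element-wise mean of equally shaped matrices."""
    n = len(mats)
    return [[sum(m[i][j] for m in mats) / n
             for j in range(len(mats[0][0]))] for i in range(len(mats[0]))]


# Toy imaging kernel (sources x channels) and per-participant sweeps
# (channels x samples); all numbers are illustrative.
kernel = [[0.5, -1.0], [2.0, 0.25], [0.0, 1.5]]
sweeps = [
    [[1.0, 2.0, 3.0], [0.0, -1.0, 1.0]],
    [[3.0, 0.0, -1.0], [2.0, 1.0, 1.0]],
]

# Kernel applied to the grand-averaged sweep ...
map_of_average = matmul(kernel, mean_matrices(sweeps))
# ... versus the average of the per-participant source maps.
average_of_maps = mean_matrices([matmul(kernel, x) for x in sweeps])
```

Both routes give the same source map up to floating‐point rounding, which is why the kernel only needs to be applied once to the grand average.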

2.4.5. Developing the ASSR map

The aim of this analysis is to generate a frequency‐specific brain map that shows the activity of dipoles for a certain modulation frequency, that is, the ASSRs of each dipole. To accomplish this aim, the reconstructed time‐series of each dipole (Equation (1)) was transformed into the frequency domain by means of an FFT. The SNR of the ASSR for each dipole was calculated based on Equation (2).

$$\mathrm{SNR} = \frac{P_{S+N}}{P_N} \tag{2}$$

where $P_{S+N}$ is the power of the frequency spectrum at the modulation frequency bin (i.e., 4, 20, 40, or 80 Hz) and includes the power of the response plus neural background noise (and relatively small measurement noise). $P_N$ refers to the power of the neural background noise, which was estimated using the average power of 30 neighboring frequency bins (corresponding to 0.92 Hz) on each side of the response frequency bin.
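A per‐dipole SNR of this kind can be sketched with a direct evaluation of the relevant DFT bins. The function names are hypothetical, the time series `x` stands for one reconstructed dipole, and `k0` is the index of the modulation frequency bin (assumed to have at least 30 bins on each side).

```python
import cmath
import math


def bin_power(x, k):
    """Power of DFT bin k of the real sequence x (one bin, evaluated directly)."""
    n = len(x)
    coeff = sum(v * cmath.exp(-2j * math.pi * k * t / n)
                for t, v in enumerate(x))
    return abs(coeff / n) ** 2


def dipole_snr(x, k0, n_side=30):
    """Ratio of the power at bin k0 to the mean power of the neighbouring bins."""
    p_sn = bin_power(x, k0)  # response plus noise
    neighbours = [k for k in range(k0 - n_side, k0 + n_side + 1) if k != k0]
    p_n = sum(bin_power(x, k) for k in neighbours) / len(neighbours)
    return p_sn / p_n
```

For a sweep of 32 epochs (32.768 s) the bin spacing is about 0.0305 Hz, so 30 bins span roughly 0.92 Hz, in line with the value quoted above. A full FFT would normally replace the per‐bin sums; they are used here only to keep the sketch dependency‐free.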

To recognize the dipoles with significant responses at the respective modulation frequencies, a one‐sample F test was performed with the SNR (i.e., $P_{S+N}/P_N$) as the F ratio statistic. A dipole was recognized as an ASSR source when the F test showed a significant difference (α = .05) between the power of the response plus noise and the power of the noise (Dobie & Wilson, 1996; John & Picton, 2000; Picton et al., 2005). The correction for multiple comparisons was performed using the false discovery rate method (Benjamini & Hochberg, 1995). Subsequently, the ASSR map was generated based on the ASSR amplitudes of the dipoles with significant responses and zero for the dipoles without a significant response. The ASSR amplitude was calculated according to Equation (3).
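The significance test and the multiple‐comparison correction can be sketched as follows. Two points are assumptions rather than statements from the text: the F ratio is taken to have 2 and 2 × 60 = 120 degrees of freedom (one complex response bin against 60 noise bins, a convention common in the ASSR literature), and the function names are hypothetical. For 2 numerator degrees of freedom the tail probability of the F distribution has a closed form, so no statistics library is needed.

```python
def f_test_p_value(snr, dof_noise=120):
    """P(F > snr) for an F distribution with (2, dof_noise) degrees of freedom.

    The (2, dof_noise) choice is an assumption; with 2 numerator degrees of
    freedom the survival function is (1 + 2 f / d2) ** (-d2 / 2).
    """
    return (1.0 + 2.0 * snr / dof_noise) ** (-dof_noise / 2.0)


def benjamini_hochberg(p_values, alpha=0.05):
    """Return one boolean per p-value: True where the dipole is significant."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    # Largest rank whose ordered p-value lies on or under alpha * rank / m.
    cutoff = 0
    for rank, i in enumerate(order, start=1):
        if p_values[i] <= alpha * rank / m:
            cutoff = rank
    significant = [False] * m
    for i in order[:cutoff]:
        significant[i] = True
    return significant


def assr_map(amplitudes, snrs, alpha=0.05):
    """Keep the amplitude of significant dipoles and set the others to zero."""
    keep = benjamini_hochberg([f_test_p_value(s) for s in snrs], alpha)
    return [a if k else 0.0 for a, k in zip(amplitudes, keep)]
```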

| (3) |

For subcortical regions with three orthogonal dipoles at each grid point, the ASSR amplitude was calculated using the norm of the vectorial sum of the three orientations at each grid point (Equation (4)).

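$$A = \sqrt{A_x^2 + A_y^2 + A_z^2} \tag{4}$$

where $A_x$, $A_y$, and $A_z$ denote the ASSR amplitudes of the three orthogonal dipoles at a grid point.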

Similarly, the SNR map was generated based on the SNR (in dB, Equation (3)) of each dipole. The SNR can be considered as an index that shows the quality of the response for each dipole.

2.4.6. Defining ROIs

For further comparisons and interpretation of the ASSR maps, the ROIs were defined based on the averaged SNR map across all stimulation conditions (four modulation frequencies and two sides of stimulation). Since the dynamic range of the SNR varies across modulation frequency, we first applied normalization as follows:

| (5) |

where s is the dipole number and the SNR index has a range of [0,1]. Afterward, the maps of the SNR index were generated and averaged across modulation frequencies (4, 20, 40, and 80 Hz) and across sides of stimulation (left and right), yielding a grand‐averaged map of the SNR index that was independent of the acoustic stimulation type. Subsequently, the regions with an SNR index greater than 50% of the maximum value of the SNR index were selected as ROIs.

Additionally, eight ROIs along the primary auditory pathway were defined, guided by the anatomical locations of the cochlear nucleus (CN), the inferior colliculus (IC), the medial geniculate body (MGB), and the AC, bilaterally. These regions are known to play a key role in generating ASSRs (Coffey et al., 2016; Langers et al., 2005; Overath et al., 2012; Steinmann & Gutschalk, 2011). The ROI for the AC (left AC: 5.49 cm²; right AC: 5.58 cm²) was defined in Heschl's gyrus with reference to the transverse temporal gyrus in the Desikan–Killiany atlas implemented in Brainstorm (Desikan et al., 2006; Tadel et al., 2011). At the subcortical level, a semispherical ROI was defined in the CN (identified with reference to the medullary pontine junction; left CN: 0.49 cm³; right CN: 0.47 cm³) and in the IC (estimated with reference to the thalamus; left IC: 0.50 cm³; right IC: 0.55 cm³) (Coffey et al., 2016). The ROIs for the MGB were defined in the left and right posterior thalamus (roughly the posterior third of the thalamus; left MGB: 1.24 cm³; right MGB: 1.45 cm³) (Coffey et al., 2016). The subcortical ROIs were defined with a larger size than their related anatomical regions to maximize the chance of capturing signals. The coordinates of the estimated central point of the subcortical ROIs were (±14, −28, 3) for the thalamus, (±7, −31, −12) for the IC, and (±8, −41, −43) for the CN, all in the Montreal Neurological Institute coordinate system.

2.4.7. Time‐series of ROIs

Depending on its size, each ROI includes several dipoles. In order to extract a time‐series per ROI, we need to find a representative dipole inside each ROI. This is because when the ROIs are broad and show heterogeneous patterns of activity, extracting a time‐series by averaging over all dipoles can impose extra smoothing on the final time‐series and lead to an underestimated response (Ghumare et al., 2018). On the other hand, extracting a time‐series based on the highest activity can lead to an overestimated response.

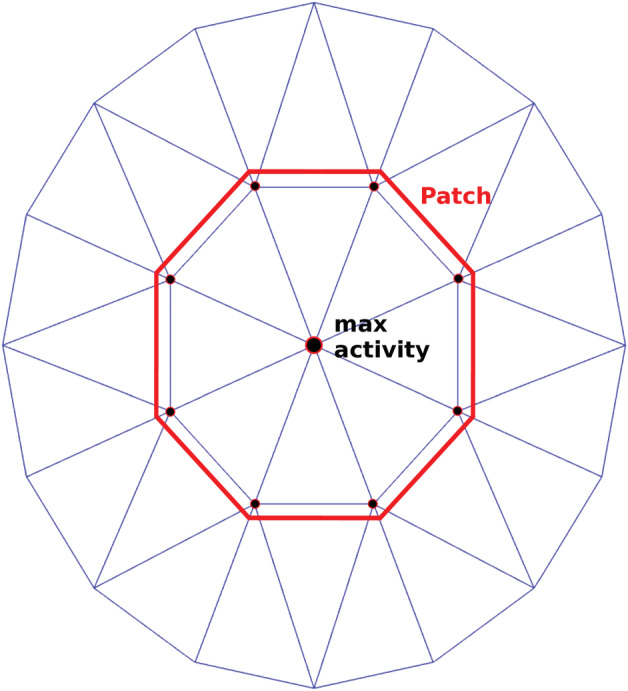

To find a representative dipole inside each ROI, we first considered the dipoles with the highest ASSR amplitudes, together with their neighboring dipoles, as candidate response patches. Then, the patch with the highest mean ASSR amplitude was chosen as the response patch. Finally, the dipole showing the highest similarity to the mean response of the response patch was chosen as the representative dipole. The detailed algorithm for choosing a representative dipole is as follows:

1. Find a response patch inside each ROI:

   a. sort the dipoles based on ASSR amplitude and choose the three dipoles with the highest amplitude;

   b. extract a patch around each selected dipole based on the first layer of neighboring dipoles in the cortical surface (see Figure 2);

   c. calculate the mean ASSR amplitude of each patch;

   d. sort the three patches and choose the patch with the highest mean ASSR amplitude.

2. Find the representative dipole from the response patch:

   a. calculate the mean ASSR of the selected patch in complex form, $d_{\mathrm{average}}$ (note: the complex representation of the ASSR of each dipole was obtained from the FFT output at the modulation frequency);

   b. find the dipole with the most similar ASSR (in complex form) to the mean response (in complex form) using vectorial subtraction as:

$$\text{representative dipole} = \arg\min_{i} \left| d_i - d_{\mathrm{average}} \right| \tag{6}$$

where $d_i$ is the complex ASSR of dipole $i$ in the response patch.

FIGURE 2. Schematic diagram of the patch around a selected dipole with maximum activity

It should be noted that, in the subcortical ROIs, the ASSR amplitude of each dipole was very close to that of its neighboring dipoles in the subcortical volume. Therefore, to reduce the computational load, the time‐series was extracted directly from the dipole with the highest ASSR amplitude.
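For the cortical ROIs, the selection of a representative dipole described above can be sketched as follows. The dictionaries and the function name are hypothetical data structures chosen for illustration; the neighbour lists are assumed to hold the first layer of neighbouring dipoles on the cortical surface, restricted to the ROI.

```python
def representative_dipole(amplitudes, neighbours, spectra, n_seeds=3):
    """Pick the representative dipole of one ROI.

    amplitudes: dict dipole id -> ASSR amplitude
    neighbours: dict dipole id -> list of first-layer neighbour ids
    spectra:    dict dipole id -> complex FFT value at the modulation frequency
    """
    # Step 1: seeds are the dipoles with the highest ASSR amplitude.
    seeds = sorted(amplitudes, key=amplitudes.get, reverse=True)[:n_seeds]
    # A patch is a seed plus its first layer of neighbours.
    patches = [[seed] + list(neighbours[seed]) for seed in seeds]

    def mean_amplitude(patch):
        return sum(amplitudes[d] for d in patch) / len(patch)

    # Keep the patch with the highest mean ASSR amplitude.
    response_patch = max(patches, key=mean_amplitude)

    # Step 2: complex mean response of the patch, then the closest dipole
    # in the complex plane (vectorial subtraction).
    d_average = sum(spectra[d] for d in response_patch) / len(response_patch)
    return min(response_patch, key=lambda d: abs(spectra[d] - d_average))
```

Working with complex values means that a dipole is only considered similar to the patch mean when both its amplitude and its phase agree with it.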

2.5. Variance estimation

The variation of the ASSR amplitude was estimated by applying the jackknife resampling method to the EEG data of the participants (Efron & Stein, 1981). For each resample, the dSPM imaging kernel of the main MNI (Equation (1)) was applied to the averaged EEG data of that resample.
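A leave‐one‐out jackknife of this kind can be sketched as below. The exact variance formula used in the study is not stated, so the standard jackknife estimator is assumed here; `statistic` is a hypothetical callable that would, in the study's setting, average the EEG of the retained participants, apply the imaging kernel, and return the ASSR amplitude.

```python
import math


def jackknife_sd(values, statistic):
    """Standard jackknife estimate of the SD of `statistic`.

    values:    one entry per participant
    statistic: callable mapping a list of entries to a single number
    """
    n = len(values)
    # One estimate per resample, each leaving out a single participant.
    estimates = [statistic(values[:i] + values[i + 1:]) for i in range(n)]
    centre = sum(estimates) / n
    variance = (n - 1) / n * sum((e - centre) ** 2 for e in estimates)
    return math.sqrt(variance)


def sample_mean(xs):
    """Toy statistic used for illustration."""
    return sum(xs) / len(xs)
```

For the sample mean, this estimator reduces to the familiar standard error (sample SD divided by the square root of the number of participants), which offers a quick sanity check.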

3. RESULTS

3.1. The ASSR maps

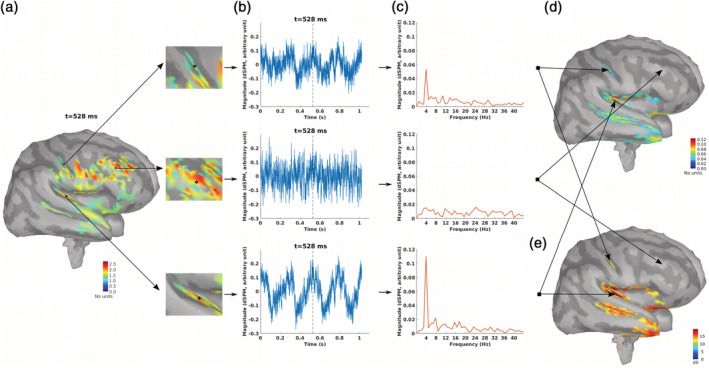

Brain sources of ASSRs for different modulation frequencies and two sides of stimulation were reconstructed using the MNI approach (Figure 1). For each stimulation condition, the MNI approach yields an ASSR map, which shows the magnitude of the ASSR for different brain regions. As an example, the ASSR map for 4 Hz AM stimuli presented to the left ear is illustrated in Figure 3d. This figure shows the different steps from a source distribution map in the time domain (Figure 3a) to the ASSR map (Figure 3d). The time‐series of three sample dipoles located in the precentral gyrus, the middle frontal gyrus, and the AC are shown in Figure 3b. The time‐series of the dipole in the AC suggests a high neural synchronization to the envelope of 4 Hz AM stimuli, while the dipole in the middle frontal gyrus does not show synchronization. The degree of synchronization, or ASSR, of each dipole was calculated based on the frequency response of that dipole at the modulation frequency (Figure 3c). The ASSR amplitudes were used to generate the ASSR map (Figure 3d). This ASSR map illustrates a high ASSR amplitude in the AC, smaller amplitudes in the precentral gyrus, and no significant ASSRs in the middle frontal gyrus. Similar to the ASSR map, the SNR map (Figure 3e) was also generated based on the value of the SNR (in dB) for each dipole (see Section 2.4.5).

FIGURE 3. The auditory steady‐state response (ASSR) map in response to 4 Hz amplitude modulated (AM) stimuli presented to the left ear. (a) Reconstructed brain map at 528 ms using dynamic statistical parametric mapping (dSPM) and enlarged view of three sample dipoles located in the precentral gyrus, the middle frontal gyrus, and the auditory cortex (from top to bottom). The map shows the absolute values of activity. The color bar indicates the magnitude of activity (no unit because of normalization within the dSPM algorithm). (b) Time series of activity (original values with a length of one epoch) for the three sample dipoles. The vertical dashed line shows the time point of 528 ms. (c) The frequency spectrum for the three sample dipoles. (d) The generated ASSR map using the ASSR amplitude for the dipoles with a significant response (F‐test, α = .05, corrected for multiple comparisons using the false discovery rate (FDR), Benjamini & Hochberg, 1995). The color bar indicates the ASSR amplitude in arbitrary units because of normalization within the dSPM algorithm. Dipoles without significant ASSRs were set to zero. (e) The generated SNR map. The color bar indicates the SNR of the 4 Hz ASSR in dB

3.2. ROIs and their time‐series

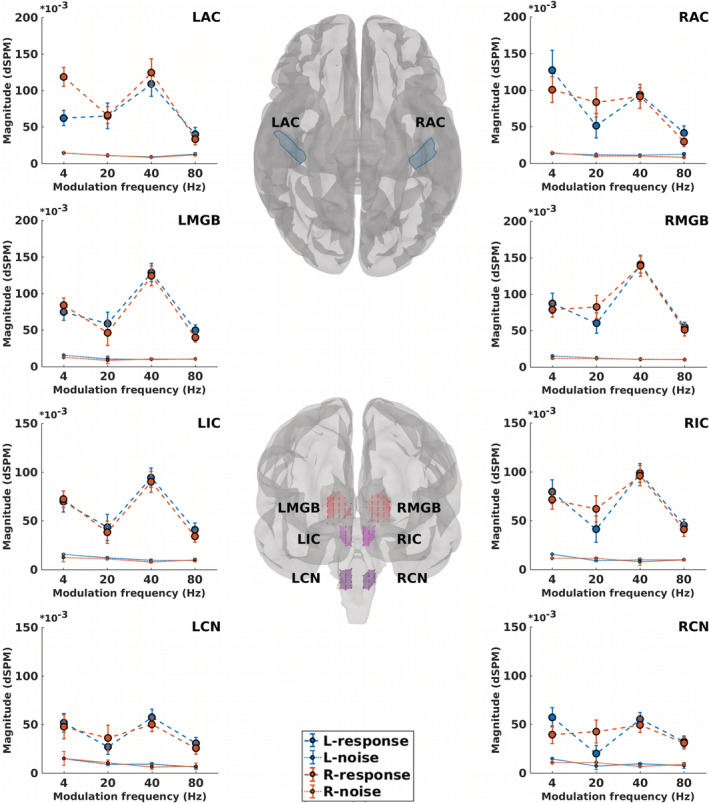

After developing the ASSR maps, the ROIs were defined for further analysis and interpretation of the results. Eight ROIs were defined along the primary auditory pathway as primary ROIs. These ROIs were located bilaterally in the AC at the cortical level as well as in the MGB, IC, and CN at the subcortical level (Figure 4).

FIGURE 4.

Regions of interest (ROIs) along the primary auditory pathway and their neural responses. The central panel depicts cortical ROIs (center‐top) in the left and right auditory cortex (LAC, RAC) and subcortical ROIs (center‐bottom) located bilaterally in the medial geniculate body (LMGB, RMGB), inferior colliculus (LIC, RIC), and cochlear nucleus (LCN, RCN). Surrounding panels illustrate biased responses (calculated based on Equation (3), dashed lines) and neural background noise (calculated based on Equation (3), dotted lines) of each ROI, in response to 4, 20, 40, and 80 Hz amplitude modulated (AM) stimuli presented to the left (blue) and right ears (red). The error bars show the SD estimated by means of the jackknife method (Efron & Stein, 1981)

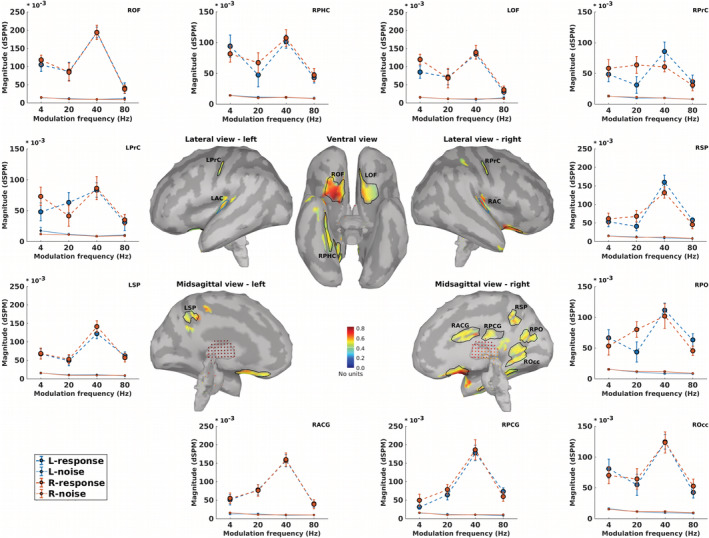

The ROIs beyond the auditory pathway, also termed non‐primary ROIs, were defined based on the grand‐averaged SNR map (cf. Section 2). Figure 5 illustrates 11 non‐primary ROIs, which were obtained for all stimulation conditions (four modulation frequencies and two sides of stimulation). The respective anatomical labels of the primary and the non‐primary ROIs are listed in Table 1.

FIGURE 5.

Non‐primary regions of interest (ROIs) and their neural responses. Central panels depict the grand‐averaged SNR maps of different stimulation conditions (4, 20, 40, and 80 Hz amplitude modulated (AM) stimuli presented to the left and the right ears) and the obtained ROIs. The anatomical labels of ROIs are listed in Table 1. Surrounding panels illustrate biased responses (calculated based on Equation (3), dashed lines) and neural background noise (calculated based on Equation (3), dotted lines) of each ROI, in response to AM stimuli with different modulation frequencies presented to the left (blue) and right ears (red). The error bars show the SD estimated by means of the jackknife method (Efron & Stein, 1981)

TABLE 1.

Anatomical labels of primary and non‐primary ROIs

| Primary ROIs | Non‐primary ROIs |
|---|---|
| Cortical | Left precentral gyrus (LPrC) |
| Left auditory cortex (LAC) | Right precentral gyrus (RPrC) |
| Right auditory cortex (RAC) | Right orbitofrontal (ROF) |
| | Right parahippocampal (RPHC) |
| Subcortical | Left orbitofrontal (LOF) |
| Left medial geniculate body (LMGB) | Right occipital (ROcc) |
| Right medial geniculate body (RMGB) | Right superior parietal (RSP) |
| Left inferior colliculus (LIC) | Left superior parietal (LSP) |
| Right inferior colliculus (RIC) | Right posterior cingulate gyrus (RPCG) |
| Left cochlear nucleus (LCN) | Right anterior cingulate gyrus (RACG) |
| Right cochlear nucleus (RCN) | Right parieto‐occipital (RPO) |

The time‐series of each ROI (primary or non‐primary) was extracted from the representative dipole of that ROI (cf. Section 2). Then, the biased response and the neural background noise (Equation (3)) were calculated based on the extracted time‐series (Figures 4 and 5, surrounding panels).

3.3. The primary and the non‐primary sources of ASSRs

For all ROIs (primary and non‐primary), the time‐series showed significant ASSR activity (F‐test on the SNR; cf. Equation (2) in the section on developing the ASSR map). The ROIs were therefore considered ASSR sources in the remainder of the paper.

3.3.1. The primary sources

The grand‐average SNR map (Figure 5, central panel) shows active regions in the left and the right auditory cortices that correspond closely, in anatomical terms, to Heschl's gyrus, the location of cortical sources of ASSRs (Kuriki et al., 2013; Popescu et al., 2008; Schoonhoven et al., 2003; Steinmann & Gutschalk, 2011). Moreover, the high SNR observed in these regions is in line with prior knowledge about the AC as the main cortical generator of ASSRs (Giraud et al., 2000; Herdman et al., 2002; Picton et al., 2003).

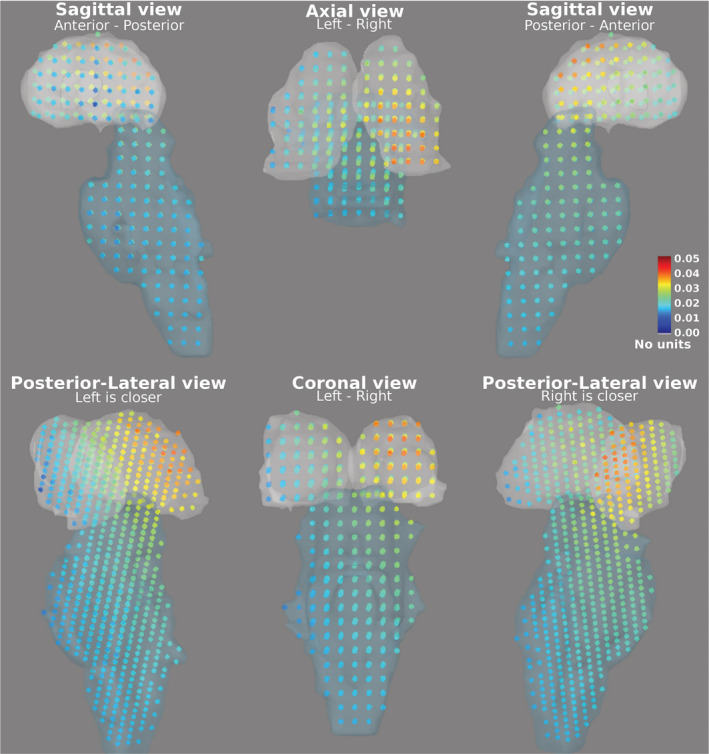

The primary subcortical sources of ASSRs are located in the CN, the IC, and the MGB (Coffey et al., 2016; Langers et al., 2005; Overath et al., 2012; Steinmann & Gutschalk, 2011). The activities of subcortical regions were reconstructed and significant ASSR activities were detected in the MGB, IC, and CN (Figure 4). Detection of these sources at four different modulation frequencies and two sides of stimulation demonstrates the robust ability of the MNI approach to detect activity of subcortical sources. As an example, the ASSR map of subcortical regions in response to 80 Hz AM stimuli presented to the right ear is illustrated in Figure 6. The highest amplitude is visible in the posterior region of the right thalamus (i.e., the location of the MGB). This activity gradually decreases toward the anterior part of the thalamus. A similar pattern is seen for the right IC (the superior–posterior region of the brainstem). The gradual changes of activation across subcortical regions are due to the low resolution of MNI and also to the method of calculating the total amplitude based on the norm of activities of the three orthogonal dipoles at each grid point.

FIGURE 6.

The auditory steady‐state response (ASSR) map of subcortical regions, the thalamus (superior, light gray region) and the brainstem (inferior, light blue region), in response to 80 Hz amplitude modulated (AM) stimuli presented to the right ear are shown in different projections (sagittal, axial, coronal, and posterior‐lateral view). Each dot indicates a grid point and its color shows the Euclidean norm of activities of the three orthogonal dipoles at that point (calculated based on Equation (4)). The color bar indicates the ASSR amplitude with arbitrary unit, because of normalization within the dynamic statistical parametric mapping (dSPM) algorithm
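The caption states that each grid point is colored by the Euclidean norm of the activities of its three orthogonal dipoles. A minimal sketch of that combination step is given below. Equation (4) itself is not reproduced in this section, so the function name and the example values are hypothetical.

```python
import math


def grid_point_amplitude(a_x, a_y, a_z):
    """Euclidean norm of the ASSR amplitudes of three orthogonal dipoles."""
    return math.sqrt(a_x ** 2 + a_y ** 2 + a_z ** 2)


# Hypothetical amplitudes (arbitrary units) along the x, y, and z orientations.
total = grid_point_amplitude(3.0, 4.0, 12.0)
```

Because the norm is never smaller than the largest single component, a grid point appears active whenever any one orientation picks up activity. This is consistent with the smooth, gradually decaying pattern described for the thalamus and brainstem.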

3.3.2. The non‐primary sources

Only a limited number of studies report non‐primary sources of ASSR. Using the group‐ICA approach on the same data as here, Farahani et al. (2019) detected four sources beyond the auditory pathway as non‐primary sources of ASSRs. These were located in the left and right motor areas, the superior parietal lobe, and the right occipital lobe. In the present study, the sources labeled LPrC, RPrC, LSP, RSP, ROcc, and RPO, all with significant ASSRs, are in locations similar to those of the non‐primary sources detected using the group‐ICA approach (Farahani et al., 2019).

The sources located in the left and the right orbitofrontal regions are in locations similar to those of the ASSR sources identified in the frontal lobe by Farahani et al. (2017). These sources are also in line with the “what” pathway of auditory processing, which is responsible for sound recognition (Kraus & Nicol, 2005; Maeder et al., 2001; Martin, 2012).

Significant ASSR activity in the non‐primary ROIs was detected for every stimulation condition. This is in line with the findings of Farahani et al. (2019) regarding the robustness of the non‐primary activities across different modulation frequencies.

3.3.3. ASSR activity of the sources

The ASSR amplitudes (Equation (3)) of the extracted time‐series were taken as the ASSR activity of each source. The ASSR activities and SDs (estimated using the jackknife method) for the four modulation frequencies and two sides of stimulation are summarized in Tables 2 and 3 for the primary and the non‐primary sources, respectively. These data provide the basis for further comparisons between sources, for example across modulation frequencies or sides of stimulation. In the following section, the activity of a cortical source is compared with the activity of a subcortical source for low and high modulation frequencies.
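The jackknife estimate of variability mentioned above can be sketched with the standard delete‐one procedure. This is a generic illustration and not the authors' implementation. In particular, this section does not specify which units are left out (e.g., participants or epochs) or the exact statistic, so the sketch assumes a simple mean over hypothetical per‐participant amplitudes.

```python
import math


def jackknife_se(samples, statistic):
    """Delete-one jackknife estimate of the standard error of a statistic."""
    n = len(samples)
    # Recompute the statistic n times, each time leaving one sample out.
    leave_one_out = [statistic(samples[:i] + samples[i + 1:]) for i in range(n)]
    mean_loo = sum(leave_one_out) / n
    variance = (n - 1) / n * sum((t - mean_loo) ** 2 for t in leave_one_out)
    return math.sqrt(variance)


def mean(values):
    return sum(values) / len(values)


# Hypothetical per-participant ASSR amplitudes for one source and condition.
amplitudes = [1.0, 2.0, 3.0, 4.0, 5.0]
se = jackknife_se(amplitudes, mean)
```

For the mean, the jackknife estimate coincides with the usual standard error (sample SD divided by the square root of n). The benefit of the procedure is that the same code applies to statistics without a closed‐form error estimate, such as a spectral amplitude computed from a grand average.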

TABLE 2.

ASSR activity (ASSR amplitude × 1,000) and SD (between brackets) of the primary sources in response to 4, 20, 40, and 80 Hz AM stimuli and two sides of stimulation

| | LAC | RAC | LMGB | RMGB | LIC | RIC | LCN | RCN |
|---|---|---|---|---|---|---|---|---|
| 4 Hz‐left | 48.0 (10.6) | 113.4 (27.4) | 59.9 (11.1) | 74.1 (13.3) | 54.7 (10.5) | 64.9 (11.7) | 36.9 (9.4) | 42.8 (9.5) |
| 4 Hz‐right | 103.7 (13.4) | 86.0 (17.7) | 71.5 (10.3) | 67.0 (10.7) | 60.0 (8.5) | 60.0 (9.8) | 33.4 (8.6) | 28.8 (9.0) |
| 20 Hz‐left | 54.2 (17.4) | 39.3 (16.5) | 48.6 (14.9) | 47.9 (14.1) | 32.1 (13.6) | 32.5 (13.5) | 18.0 (8.1) | 12.7 (7.6) |
| 20 Hz‐right | 54.6 (11.7) | 73.3 (20.4) | 37.6 (17.3) | 71.2 (16.0) | 27.3 (11.2) | 50.8 (13.4) | 25.8 (12.7) | 32.1 (11.6) |
| 40 Hz‐left | 99.9 (16.9) | 82.6 (9.4) | 119.8 (12.4) | 131.6 (11.5) | 86.3 (9.7) | 90.5 (9.2) | 50.1 (7.7) | 47.6 (6.2) |
| 40 Hz‐right | 115.8 (18.8) | 81.2 (16.1) | 114.7 (13.9) | 129.5 (14.5) | 82.1 (10.9) | 88.3 (10.5) | 43.9 (7.4) | 42.8 (7.7) |
| 80 Hz‐left | 26.8 (9.6) | 28.6 (9.7) | 39.5 (7.5) | 44.3 (6.4) | 32.6 (7.2) | 35.8 (6.4) | 24.8 (6.1) | 25.5 (5.6) |
| 80 Hz‐right | 21.4 (7.1) | 21.4 (6.1) | 29.9 (5.8) | 41.2 (7.7) | 24.4 (5.0) | 31.2 (6.7) | 18.5 (4.6) | 21.9 (6.0) |

Note: Abbreviations are listed in Table 1.

TABLE 3.

ASSR activity (ASSR amplitude × 1,000) and SD (between brackets) of the non‐primary sources in response to 4, 20, 40, and 80 Hz AM stimuli and two sides of stimulation

| | LPrC | RPrC | ROF | RPHC | LOF | ROcc | RSP | LSP | RPCG | RACG | RPO |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 4 Hz‐left | 30.5 (15) | 35.3 (12.6) | 90.7 (18.4) | 79.9 (18.1) | 70.1 (16.9) | 65.0 (14.8) | 37.9 (13.1) | 51.7 (14.4) | 16.1 (6.2) | 38.4 (14.2) | 50.9 (13.0) |
| 4 Hz‐right | 61.0 (14.4) | 45.1 (14.1) | 102.7 (14.1) | 67.4 (13.4) | 104.2 (14.3) | 54.9 (13.5) | 46.6 (15.0) | 52.5 (15.4) | 33.5 (16.8) | 38.9 (14.9) | 37.9 (14.1) |
| 20 Hz‐left | 51.4 (15.9) | 21.2 (13.5) | 75.0 (25.4) | 36.3 (19.5) | 59.9 (21.1) | 43.8 (16.9) | 28.2 (12.4) | 38.4 (13.9) | 52.7 (14.6) | 65.1 (13.9) | 32.5 (16.2) |
| 20 Hz‐right | 30.3 (16.8) | 52.4 (13.8) | 73.8 (25.1) | 57.1 (16.4) | 56.9 (27.1) | 53.1 (16.9) | 56.7 (15.2) | 43.1 (12.6) | 68.6 (13.9) | 65.6 (15.5) | 68.4 (12.7) |
| 40 Hz‐left | 74.4 (11.3) | 75.5 (15.8) | 184.3 (15.5) | 90.8 (10.4) | 124.3 (12.9) | 114.9 (11.7) | 150.4 (18.9) | 111.2 (13.8) | 167.3 (21.1) | 147.9 (15.9) | 103.1 (10.8) |
| 40 Hz‐right | 77.9 (19.3) | 50.8 (8.2) | 184.0 (19.6) | 96.2 (13.8) | 128.2 (18.9) | 112.3 (17.3) | 120.1 (14.6) | 131.6 (15.4) | 175.2 (27.3) | 149.3 (18.5) | 90.4 (19.8) |
| 80 Hz‐left | 20.3 (12.8) | 28.6 (10.7) | 27.7 (13.4) | 33.1 (8.3) | 16.5 (7.7) | 33.8 (9.3) | 49.6 (6.3) | 54.2 (10) | 65.3 (9.7) | 30.3 (9.7) | 55.0 (10.2) |
| 80 Hz‐right | 25.6 (8.6) | 22.5 (8.4) | 28.5 (11.0) | 38.0 (10.2) | 23.7 (9.7) | 43.1 (11.2) | 36.9 (10.8) | 47.8 (11.5) | 48.7 (12.5) | 29.0 (11.9) | 36.5 (7.5) |

Note: Abbreviations are listed in Table 1.

Abbreviations: AM, amplitude modulated; ASSR, auditory steady‐state response.

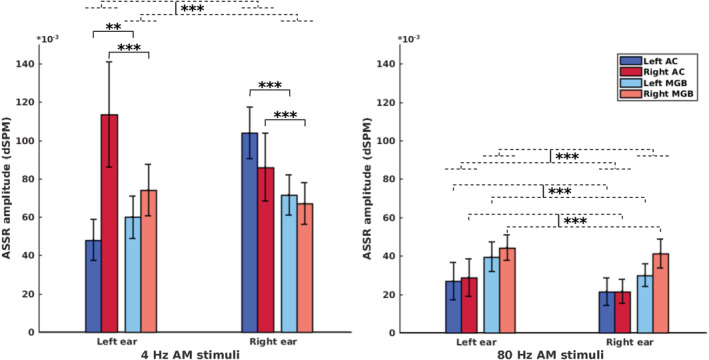

3.4. Comparison between primary sources: Cortical versus subcortical

It is expected that the relative activity of cortical and subcortical sources will change depending on the modulation frequency. The cortical sources show more activity than the subcortical ones at low modulation frequencies, while subcortical sources show higher activity at higher modulation frequencies (Giraud et al., 2000; Gransier, van Wieringen, & Wouters, 2017; Liégeois‐Chauvel et al., 2004; Wong & Gordon, 2009). In order to investigate this behavior with the MNI approach, we performed statistical comparisons between the activity of AC and MGB (as cortical and subcortical sources, respectively) in response to 4 and 80 Hz AM stimuli as a low and a high modulation frequency, respectively. The MGB was selected as representative of subcortical sources, because it showed stronger responses than the IC and CN.

Figure 7 shows the ASSR amplitude of the AC and the MGB in response to 4 and 80 Hz AM stimuli presented to the left and the right ears. The mean ASSR amplitude and the estimated SD of the sources in the AC and thalamus (Figure 7) were submitted to a factorial mixed analysis of variance (FM‐ANOVA) with amplitude as the dependent variable and sources (two categories: AC and MGB), hemisphere (two categories: left and right), and side of stimulation (two categories: left and right) as within‐subject variables. Afterward, a two‐sample t test with Bonferroni correction was used for post hoc testing.

FIGURE 7.

Auditory steady‐state response (ASSR) amplitudes of the auditory cortex (AC) and the medial geniculate body (MGB) in response to 4 and 80 Hz amplitude modulated (AM) stimuli presented to the left and the right ears. The bars are clustered per side of stimulation (left ear, right ear) and represent the ASSR amplitude of the left and right AC and the left and right MGB (indicated by different colors). All ASSRs were significantly different from the neural background activities (cf. Section 2.4.5). The error bars indicate the SD estimated using the jackknife method (Efron & Stein, 1981). The dashed lines represent grouping, while the solid lines indicate statistical comparison. *p < .05; **p < .01; ***p < .001

For the brain sources of 4 Hz ASSRs, the FM‐ANOVA test showed a main effect of source, with significantly higher ASSR amplitudes for the AC than for the MGB (F(1,33) = 58.8, p < .001). A significant interaction effect was observed for source and hemisphere (F(1,33) = 13.7, p < .001) and also for source and side of stimulation (F(1,33) = 5.4, p < .05). Post hoc testing showed that, for left‐side stimulation, the right AC yielded significantly higher ASSR amplitudes than the right MGB (p < .001, Cohen's d = 1.8), but the left AC yielded smaller amplitudes than the left MGB (p < .01, Cohen's d = 1.1). For right‐side stimulation, the left and the right ACs yielded significantly higher ASSR amplitudes than the left MGB (p < .001, Cohen's d = 2.6) and the right MGB (p < .001, Cohen's d = 1.2), respectively.

A significant main effect of source was identified for the 80 Hz ASSR, with significantly higher ASSR amplitudes for the MGB than for the AC (F(1,32) = 118.8, p < .001). An interaction effect between source and hemisphere was observed (F(1,32) = 7.5, p < .01), but there was no significant interaction effect between source and side of stimulation. Post hoc testing indicated that, irrespective of side of stimulation, the ASSR amplitudes of the left and the right MGB were significantly higher than those of the left AC (p < .001, Cohen's d = 1.3) and the right AC (p < .001, Cohen's d = 2.2), respectively.
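The effect sizes and the correction for multiple post hoc tests reported above can be illustrated with a short sketch. The text does not state which variant of Cohen's d was used, so the pooled‐SD form for two groups is assumed here. The Bonferroni step simply multiplies each p‐value by the number of comparisons. All input values are invented and are not taken from the study.

```python
import math
import statistics


def cohens_d(a, b):
    """Cohen's d for two groups using the pooled standard deviation (assumed variant)."""
    n_a, n_b = len(a), len(b)
    var_a, var_b = statistics.variance(a), statistics.variance(b)
    pooled_sd = math.sqrt(((n_a - 1) * var_a + (n_b - 1) * var_b) / (n_a + n_b - 2))
    return (statistics.mean(a) - statistics.mean(b)) / pooled_sd


def bonferroni(p_values):
    """Bonferroni-adjusted p-values, capped at 1."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]


# Hypothetical amplitudes for two sources (arbitrary units).
source_a = [2.0, 4.0, 6.0]
source_b = [1.0, 3.0, 5.0]
d = cohens_d(source_a, source_b)

# Hypothetical uncorrected p-values from three post hoc comparisons.
adjusted = bonferroni([0.01, 0.04, 0.5])
```

By the usual rule of thumb, values of d above 0.8 are considered large, so the effect sizes between 1.1 and 2.6 reported above all indicate large differences between cortical and subcortical amplitudes.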

4. DISCUSSION

4.1. ASSR source reconstruction

In the current study, we proposed an approach based on MNI for the reconstruction of ASSR sources. This approach facilitates ASSR source analysis by quantifying the ASSR amplitude for each dipole and developing the ASSR map. The results demonstrated that the MNI approach can successfully reconstruct a wide range of the neural sources of ASSRs, including primary sources at cortical and subcortical levels and the non‐primary sources. In all stimulation conditions (4, 20, 40, and 80 Hz modulation frequencies presented to the left and the right ears), significant ASSR activity was observed in Heschl's gyrus in both hemispheres, which is located in the primary AC (Figure 4). This result indicates that the MNI approach is a robust method for reconstructing the primary sources of ASSRs.

4.1.1. Contribution of the non‐primary sources to generate ASSRs

For all the non‐primary sources introduced in the current study, the activity at the modulation frequency was significantly different from that at neighboring frequencies. This activity was sharply confined to the 3.91, 19.53, 40.04, and 80.08 Hz modulation frequencies. While the modulation frequency differs, the paradigm of the experiment (i.e., passive listening and watching a subtitled movie) is the same across all blocks of experiments. Thus, we consider it unlikely that the activity of non‐primary sources is paradigm‐specific and related to watching subtitled movies or controlling eye movements.

The association between the non‐primary activation and AM stimuli can be investigated by comparing EEG activity with and without stimulation. In the same experimental conditions, before and after the main ASSR recordings, EEG data with no auditory stimulation (silence data) were recorded in two blocks of 150 s (Farahani et al., 2017). The analysis of silence data using group‐ICA did not show any ASSR sources, neither primary nor non‐primary ones (Farahani et al., 2019), which suggests that the non‐primary sources are activated by AM stimuli. However, to show that the non‐primary sources are only observed in case of acoustic stimulation, further experiments with limited visual input are needed.

It may be postulated that the activity of non‐primary sources is related to multisensory integration. Typically, in multisensory integration different sensory cues are integrated when the sensory inputs arise from the same source. Temporal and spatial proximity of the stimulus components are also important factors in multisensory integration (Stein & Stanford, 2008; Van Atteveldt, Murray, Thut, & Schroeder, 2014). In the current study, the acoustic stimuli were neither associated nor synchronized with the visual inputs (i.e., a muted subtitled movie). Moreover, the paradigm of the experiment was designed for “passive listening” and the participants were asked not to pay attention to the AM stimuli. These two points argue against multisensory integration as an explanation for the non‐primary activities.

Arnal, Kleinschmidt, Spinelli, Giraud, and Mégevand (2019) recently used intracranial recordings in epileptic patients to investigate neural synchronization to click trains of varying frequencies. They observed phase‐locked responses at the rate of stimulation, maximally in the range of 40–80 Hz, in a large network of cortical and subcortical regions extending well beyond the AC. They specifically observed phase‐locked responses in the superior temporal gyrus, superior parietal, right inferior parietal, orbitofrontal, parahippocampal, and sensory motor areas, and thereby provided direct evidence supporting the non‐primary sources identified in the current study. The contribution of the motor system to auditory processing was also reported in several studies (Arnal, Doelling, & Poeppel, 2015; Brodbeck, Presacco, & Simon, 2018; Du, Buchsbaum, Grady, & Alain, 2016; Fujioka, Trainor, Large, & Ross, 2012) and is in line with the non‐primary sources that we found in the motor area. The non‐primary sources here were also in locations similar to those of the non‐primary sources previously detected using ICA on the same data (Farahani et al., 2017, 2019).

Findings about the non‐primary sources can have clinical implications. Previous animal studies suggested that the middle latency responses (MLRs) recorded over the temporal lobe mainly originate from the primary pathway, while those recorded over the midline are largely influenced by the non‐primary pathway (Kraus, Smith, & McGee, 1988; McGee, Kraus, Littman, & Nicol, 1992). Similar results were noted for mismatch negativity responses (Kraus et al., 1994) and also in response to click trains with slow rates (Abrams, Nicol, Zecker, & Kraus, 2011). Based on the location of the non‐primary sources identified in the current study, we suggest using the electrodes over the temporal lobe for audiological assessment with ASSRs. Responses at these electrodes may be influenced more by the AC than by the non‐primary sources. Animal neurophysiological data suggest that the non‐primary components of the MLR are generated early and are probably sleep‐state dependent, while the primary components are generated later and are reliable even in sleep (Kraus & McGee, 1993). This finding about the components of the MLRs has important implications for the clinical use of MLRs in young children. Similar effects are also expected for ASSRs; however, this needs to be investigated in a separate experiment. Several studies suggest that the non‐primary pathway may be affected in various neurological disorders. Moller, Kern, and Grannemann (2005) showed that some individuals with autism have an abnormal activation of the non‐primary sensory pathway. The non‐primary auditory pathway may also be involved in the generation of some forms of tinnitus (Moller & Rollins, 1992).

4.1.2. Subcortical sources

In recent years, the reconstruction of subcortical activities from the EEG has received substantial attention (Attal et al., 2009; Krishnaswamy et al., 2017; Min, Hämäläinen, & Pantazis, 2020; Seeber et al., 2019). Seeber et al. (2019) employed intra‐cortical recordings in humans to demonstrate that the EEG can detect and correctly localize subcortical signals. In another study with relatively low electrode density (70 channels) Krishnaswamy et al. (2017) showed the possibility of reconstructing both cortical and subcortical sources using EEG. Due to the importance of reconstructing subcortical sources to study auditory temporal processing and the proof of this concept from the above‐mentioned literature we have employed EEG and MNI to estimate deeper brain sources. Indeed, the lack of individual MRI scans affects localization accuracy at an individual level. To address this issue we used a group‐level framework for all analyses and reported the results only at a group‐level.

Subcortical sources along the primary auditory pathway (i.e., MGB, IC, and CN) are tiny regions and very difficult to localize. It should be noted that, in the current study, we only aimed to reconstruct the activity of these regions, not to localize them. To this end, we used the prior knowledge obtained from fMRI studies to define ROIs in MGB, IC, and CN.

Due to the ill‐posed inverse problem of the EEG, the reconstructed brain maps based on the EEG have limited spatial resolution (Min et al., 2020). Spatial resolution refers to the ability of an imaging technique to differentiate between two nearby sources that should be reconstructed as separate sources (Knaapen & Lubberink, 2015). Given this limited spatial resolution, the distance between the sources of interest is critical. The subcortical centers of the primary auditory pathway (i.e., MGB, IC, and CN) are tiny regions, but they are quite distinct from each other.

The MNI approach was able to reconstruct the activity of subcortical sources of ASSRs in the MGB, IC, and CN. We found significant ASSR activity in these regions for all the stimulation conditions (Figure 4). Statistical comparisons showed significantly more cortical activity than subcortical activity for the low modulation frequency (i.e., 4 Hz) and more subcortical activity for the high modulation frequency (i.e., 80 Hz). These results are in line with previous electrophysiological ASSR studies (Alaerts, Luts, Hofmann, & Wouters, 2009; Gransier et al., 2017; Herdman et al., 2002) and indicate the validity of the reconstructed subcortical activity. It should be noted that we compared cortical and subcortical activities here, not different modulation frequencies. To the best of our knowledge, there is only one study in which the subcortical activities in response to 80 Hz AM stimuli are compared with the 40 Hz ones (Herdman et al., 2002). That study found higher subcortical activity at 39 Hz than at 88 Hz, which is in line with our results.

4.2. Comparison between MNI and group‐ICA

The fundamental distinction between the MNI and the group‐ICA approach (Farahani et al., 2019) is the use of head‐model information for source decomposition. In the group‐ICA approach, the decomposition of the source activities is based only on EEG data (data‐driven), whereas in the MNI approach the activity of sources is estimated based on both the EEG data and the head‐model.

The performance of the proposed MNI approach is compared with the group‐ICA approach (Farahani et al., 2019) on the same recording in the following paragraphs, to determine the more effective approach for the purpose of reconstructing ASSR sources.

4.2.1. Detection of sources in the AC

With the group‐ICA approach, no source was detected in the AC in response to 20 Hz AM stimuli (Farahani et al., 2019). However, the activity in the AC in response to 20 Hz AM stimuli can be reconstructed using the MNI approach (Figure 4). Moreover, activity in the AC was also reconstructed for 80 Hz ASSRs, although it was relatively small compared to other modulation frequencies. These results suggest that the MNI approach can overcome the limitations of group‐ICA in the detection of primary sources located in the AC, at some modulation frequencies.

4.2.2. Reconstruction of subcortical activity

With the exception of the AC, most centers along the auditory pathway are subcortical (Langers et al., 2005; Overath et al., 2012; Steinmann & Gutschalk, 2011). As a result, the reconstruction of subcortical activity can be very informative for research on early auditory processing in the central auditory system. However, because of the deep location and special cell architecture of the subcortical regions, the reconstruction of subcortical activities using electrophysiological measurements can be problematic (Attal et al., 2009; Attal & Schwartz, 2013). Given this consideration, the MNI approach offers a clear advantage over group‐ICA for the reconstruction of subcortical activity.

4.2.3. Reducing computational load

AMICA was chosen among many available ICA algorithms for the implementation of group‐ICA (Farahani et al., 2019) because of its superior performance in terms of the remaining mutual information between components and the number of components with dipolar scalp projections (Delorme, Palmer, Onton, Oostenveld, & Makeig, 2012). Applying AMICA on the concatenated data matrix of participants (with the size of 64 × 17.1e6) took around 3 days with a powerful computer (“Ivy Bridge” Xeon E5‐2680v2 CPU, 2.8 GHz, 25 MB level 3 cache, 32 GB RAM), while the development of the ASSR map for the MNI approach took only 10 min using a normal computer (Core i7‐4600M CPU, 2.9 GHz, 4 MB level 3 cache, 16 GB RAM), thereby indicating a much lower computational load than the group‐ICA approach.

The comparison between the MNI approach and group‐ICA on the same EEG data (see the preceding paragraphs) suggests that the use of head‐model information in MNI (head‐model based) is beneficial and leads to a better performance than group‐ICA (data‐driven) in reconstructing ASSR sources. However, it should be noted that an accurate head‐model is a prerequisite for an accurate source map. In the current study, we used a template MRI to generate the head‐model. The use of a template MRI for individuals is a rough approximation and can introduce errors into source reconstruction. Therefore, we used a group‐wise framework for source reconstruction (Farahani et al., 2019).

It is also possible that the MNI approach performs better as a result of the distributed nature of MNI source analysis, which makes no assumption of independence between sources. This feature of MNI can provide more degrees of freedom for the reconstruction of the sources, especially the subcortical ones.

5. CONCLUSIONS

In this study, an MNI technique was used to simultaneously reconstruct a wide range of neural sources of ASSRs, including cortical and subcortical sources. A novel extension to MNI was proposed which facilitates ASSR source analysis by quantifying the ASSR amplitude for each dipole and developing the ASSR map. The MNI approach was capable of reconstructing the sources located outside of the AC, designated as non‐primary sources, as well as primary sources, bilaterally located in the AC. The non‐primary sources were in locations similar to those reported in previous studies (Arnal et al., 2019; Farahani et al., 2017, 2019; Martin, 2012). Primary sources were consistently detected in every stimulation condition (four modulation frequencies and two sides of stimulation), thereby demonstrating the robustness of the approach. Moreover, the MNI approach was successful in reconstructing the subcortical activities of ASSRs, as validated by comparing cortical and subcortical activities in response to low and high modulation frequencies.

Finally, the MNI approach in our study showed a better performance than the group‐ICA approach (Farahani et al., 2019) in terms of detection of sources in the AC, reconstruction of subcortical activity and reduction of computational load. The superior performance of this approach is most likely due to the involvement of head‐model information for the decomposition of the sources.

ACKNOWLEDGMENTS

Special thanks go to Dr Tine Goossens for accumulating the ASSR recording data used in this work. This work was supported by the Research Council, KU Leuven through projects C14/17/046 and OT/12/98 and by the Research Foundation Flanders through FWO‐projects G066213 and G0A9115.

Farahani ED, Wouters J, van Wieringen A. Brain mapping of auditory steady‐state responses: A broad view of cortical and subcortical sources. Hum Brain Mapp. 2021;42:780–796. 10.1002/hbm.25262

Funding information Fonds Wetenschappelijk Onderzoek, Grant/Award Numbers: G066213, G0A9115; Onderzoeksraad, KU Leuven, Grant/Award Numbers: C14/17/046, OT/12/98

DATA AVAILABILITY STATEMENT

The data that support the findings of this study are available on request from the corresponding author. The data are not publicly available due to privacy/ethical restrictions (containing information that could compromise the privacy of research participants).

REFERENCES

- Abrams, D. A. , Nicol, T. , Zecker, S. , & Kraus, N. (2011). A possible role for a paralemniscal auditory pathway in the coding of slow temporal information. Hearing Research, 272(1–2), 125–134. 10.1016/j.heares.2010.10.009 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Alaerts, J. , Luts, H. , Hofmann, M. , & Wouters, J. (2009). Cortical auditory steady‐state responses to low modulation rates. International Journal of Audiology, 48(8), 582–593. 10.1080/14992020902894558 [DOI] [PubMed] [Google Scholar]

- Arnal, L. H. , Doelling, K. B. , & Poeppel, D. (2015). Delta‐beta coupled oscillations underlie temporal prediction accuracy. Cerebral Cortex, 25(9), 3077–3085. 10.1093/cercor/bhu103 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Arnal, L. H. , Kleinschmidt, A. , Spinelli, L. , Giraud, A. L. , & Mégevand, P. (2019). The rough sound of salience enhances aversion through neural synchronisation. Nature Communications, 10(1), 1–12. 10.1038/s41467-019-11626-7 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Attal, Y. , Bhattacharjee, M. , Yelnik, J. , Cottereau, B. , Lefèvre, J. , Okada, Y. , … Baillet, S. (2009). Modelling and detecting deep brain activity with MEG and EEG. Irbm, 30(3), 133–138. 10.1016/j.irbm.2009.01.005 [DOI] [PubMed] [Google Scholar]

- Attal, Y. , & Schwartz, D. (2013). Assessment of subcortical source localization using deep brain activity imaging model with minimum norm operators: A MEG study. PLoS One, 8(3), e59856 10.1371/journal.pone.0059856 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bastos, A. M. , & Schoffelen, J. M. (2016). A tutorial review of functional connectivity analysis methods and their interpretational pitfalls. Frontiers in Systems Neuroscience, 9, 175. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Benjamini, Y. , & Hochberg, Y. (1995). Controlling the false discovery rate: A practical and powerful approach to multiple testing. Journal of the Royal Statistical Society. Series B (Methodological), 57(1), 289–300. [Google Scholar]

- Bradley, A. , Yao, J. , Dewald, J. , & Richter, C. P. (2016). Evaluation of electroencephalography source localization algorithms with multiple cortical sources. PLoS One, 11(1), e0147266 10.1371/journal.pone.0147266 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Brodbeck, C., Presacco, A., & Simon, J. Z. (2018). Neural source dynamics of brain responses to continuous stimuli: Speech processing from acoustics to comprehension. NeuroImage, 172, 162–174. 10.1016/j.neuroimage.2018.01.042

- Coffey, E. B. J., Herholz, S. C., Chepesiuk, A. M. P., Baillet, S., & Zatorre, R. J. (2016). Cortical contributions to the auditory frequency‐following response revealed by MEG. Nature Communications, 7, 11070. 10.1038/ncomms11070

- Dale, A. M., Liu, A. K., Fischl, B. R., Buckner, R. L., Belliveau, J. W., Lewine, J. D., & Halgren, E. (2000). Dynamic statistical parametric mapping: Combining fMRI and MEG for high‐resolution imaging of cortical activity. Neuron, 26, 55–67.

- Delorme, A., Palmer, J., Onton, J., Oostenveld, R., & Makeig, S. (2012). Independent EEG sources are dipolar. PLoS One, 7(2), e30135. 10.1371/journal.pone.0030135

- Desikan, R. S., Ségonne, F., Fischl, B., Quinn, B. T., Dickerson, B. C., Blacker, D., … Killiany, R. J. (2006). An automated labeling system for subdividing the human cerebral cortex on MRI scans into gyral based regions of interest. NeuroImage, 31(3), 968–980. 10.1016/j.neuroimage.2006.01.021

- Dobie, R. A., & Wilson, M. J. (1996). A comparison of t test, F test, and coherence methods of detecting steady‐state auditory‐evoked potentials, distortion‐product otoacoustic emissions, or other sinusoids. The Journal of the Acoustical Society of America, 100(4), 2236–2246. 10.1121/1.417933

- Drullman, R., Festen, J. M., & Plomp, R. (1994). Effect of reducing slow temporal modulations on speech reception. The Journal of the Acoustical Society of America, 95(5), 2670–2680. 10.1121/1.409836

- Du, Y., Buchsbaum, B. R., Grady, C. L., & Alain, C. (2016). Increased activity in frontal motor cortex compensates impaired speech perception in older adults. Nature Communications, 7, 1–13. 10.1038/ncomms12241

- Efron, B., & Stein, C. (1981). The jackknife estimate of variance. Annals of Statistics, 9(3), 586–596.

- Farahani, E. D., Goossens, T., Wouters, J., & van Wieringen, A. (2017). Spatiotemporal reconstruction of auditory steady‐state responses to acoustic amplitude modulations: Potential sources beyond the auditory pathway. NeuroImage, 148, 240–253. 10.1016/j.neuroimage.2017.01.032

- Farahani, E. D., Wouters, J., & van Wieringen, A. (2019). Contributions of non‐primary cortical sources to auditory temporal processing. NeuroImage, 191, 303–314. 10.1016/j.neuroimage.2019.02.037

- Fonov, V., Evans, A. C., Botteron, K., Almli, C. R., McKinstry, R. C., & Collins, D. L. (2011). Unbiased average age‐appropriate atlases for pediatric studies. NeuroImage, 54(1), 313–327. 10.1016/j.neuroimage.2010.07.033

- Fujioka, T., Trainor, L. J., Large, E. W., & Ross, B. (2012). Internalized timing of isochronous sounds is represented in neuromagnetic beta oscillations. Journal of Neuroscience, 32(5), 1791–1802. 10.1523/JNEUROSCI.4107-11.2012

- Ghumare, E. G., Schrooten, M., Vandenberghe, R., & Dupont, P. (2018). A time‐varying connectivity analysis from distributed EEG sources: A simulation study. Brain Topography, 31(5), 721–737. 10.1007/s10548-018-0621-3

- Giraud, A., Lorenzi, C., Ashburner, J., Wable, J., Johnsrude, I., Frackowiak, R., … Frackowiak, R. (2000). Representation of the temporal envelope of sounds in the human brain. Journal of Neurophysiology, 84, 1588–1598. 10.1093/cercor/11.3.183

- Goossens, T., Vercammen, C., Wouters, J., & van Wieringen, A. (2016). Aging affects neural synchronization to speech‐related acoustic modulations. Frontiers in Aging Neuroscience, 8, 1–16. 10.3389/fnagi.2016.00133

- Gramfort, A., Papadopoulo, T., Olivi, E., & Clerc, M. (2010). OpenMEEG: Opensource software for quasistatic bioelectromagnetics. BioMedical Engineering OnLine, 9(45), 1–20. 10.1186/1475-925X-8-1

- Gransier, R., van Wieringen, A., & Wouters, J. (2017). Binaural interaction effects of 30–50 Hz auditory steady state responses. Ear and Hearing, 38(5), e305–e315. 10.1097/AUD.0000000000000429

- Grech, R., Cassar, T., Muscat, J., Camilleri, K. P., Fabri, S. G., Zervakis, M., … Vanrumste, B. (2008). Review on solving the inverse problem in EEG source analysis. Journal of NeuroEngineering and Rehabilitation, 5, 1–33. 10.1186/1743-0003-5-25

- Hämäläinen, M. S., & Ilmoniemi, R. J. (1994). Interpreting magnetic fields of the brain: Minimum norm estimates. Medical & Biological Engineering & Computing, 32(1), 35–42. 10.1007/BF02512476

- Hauk, O., Wakeman, D. G., & Henson, R. (2011). Comparison of noise‐normalized minimum norm estimates for MEG analysis using multiple resolution metrics. NeuroImage, 54(3), 1966–1974. 10.1016/j.neuroimage.2010.09.053

- He, B., & Ding, L. (2013). Electrophysiological mapping and neuroimaging. In B. He (Ed.), Neural Engineering (pp. 499–543). Boston, MA: Springer US. 10.1007/978-1-4614-5227-0_12

- He, B., Sohrabpour, A., Brown, E., & Liu, Z. (2018). Electrophysiological source imaging: A noninvasive window to brain dynamics. Annual Review of Biomedical Engineering, 20(1), 171–196. 10.1146/annurev-bioeng-062117-120853

- Herdman, A. T., Lins, O., van Roon, P., Stapells, D. R., Scherg, M., & Picton, T. W. (2002). Intracerebral sources of human auditory steady‐state responses. Brain Topography, 15(2), 69–86. 10.1023/A:1021470822922

- Hincapié, A. S., Kujala, J., Mattout, J., Daligault, S., Delpuech, C., Mery, D., … Jerbi, K. (2016). MEG connectivity and power detections with minimum norm estimates require different regularization parameters. Computational Intelligence and Neuroscience, 2016, 12–18. 10.1155/2016/3979547

- John, M. S., & Picton, T. W. (2000). Human auditory steady‐state responses to amplitude‐modulated tones: Phase and latency measurements. Hearing Research, 141, 57–79.

- Kang, S. S., Lano, T. J., & Sponheim, S. R. (2015). Distortions in EEG interregional phase synchrony by spherical spline interpolation: Causes and remedies. Neuropsychiatric Electrophysiology, 1(1), 1–17. 10.1186/s40810-015-0009-5

- Knaapen, P., & Lubberink, M. (2015). Positron emission tomography. In Nieman K., Gaemperli O., Patrizio L., & Sven P. (Eds.), Advanced cardiac imaging (pp. 71–95). Cambridge, England: Woodhead Publishing. 10.1016/B978-1-78242-282-2.00004-4

- Kraus, N., Smith, D. I., & McGee, T. (1988). Midline and temporal lobe MLRs in the guinea pig originate from different generator systems: A conceptual framework for new and existing data. Electroencephalography and Clinical Neurophysiology, 70(6), 541–558. 10.1016/0013-4694(88)90152-6

- Kraus, N., & McGee, T. (1993). Clinical implications of primary and non‐primary pathway contributions to the middle latency response generating system. Ear and Hearing, 14(1), 36–48. 10.1097/00003446-199302000-00006

- Kraus, N., McGee, T., Carrell, T., King, C., Littman, T., & Nicol, T. (1994). Discrimination of speech‐like contrasts in the auditory thalamus and cortex. Journal of the Acoustical Society of America, 96(5), 2758–2768. 10.1121/1.411282

- Kraus, N., & Nicol, T. (2005). Brainstem origins for cortical “what” and “where” pathways in the auditory system. Trends in Neurosciences, 28(4), 176–181. 10.1016/j.tins.2005.02.003

- Krishnaswamy, P., Obregon‐Henao, G., Ahveninen, J., Khan, S., Babadi, B., Iglesias, J. E., … Purdon, P. L. (2017). Sparsity enables estimation of both subcortical and cortical activity from MEG and EEG. Proceedings of the National Academy of Sciences of the United States of America, 114(48), 65–74. 10.1073/pnas.1705414114

- Kuriki, S., Kobayashi, Y., Kobayashi, T., Tanaka, K., & Uchikawa, Y. (2013). Steady‐state MEG responses elicited by a sequence of amplitude‐modulated short tones of different carrier frequencies. Hearing Research, 296, 25–35. 10.1016/j.heares.2012.11.002

- Langers, D. R. M., Van Dijk, P., & Backes, W. H. (2005). Lateralization, connectivity and plasticity in the human central auditory system. NeuroImage, 28(2), 490–499. 10.1016/j.neuroimage.2005.06.024

- Liégeois‐Chauvel, C., Lorenzi, C., Trébuchon, A., Régis, J., & Chauvel, P. (2004). Temporal envelope processing in the human left and right auditory cortices. Cerebral Cortex, 14(7), 731–740. 10.1093/cercor/bhh033

- Lin, F. H., Witzel, T., Ahlfors, S. P., Stufflebeam, S. M., Belliveau, J. W., & Hämäläinen, M. S. (2006). Assessing and improving the spatial accuracy in MEG source localization by depth‐weighted minimum‐norm estimates. NeuroImage, 31(1), 160–171. 10.1016/j.neuroimage.2005.11.054

- Luke, R., de Vos, A., & Wouters, J. (2017). Source analysis of auditory steady‐state responses in acoustic and electric hearing. NeuroImage, 147, 568–576. 10.1016/j.neuroimage.2016.11.023

- Maeder, P. P., Meuli, R. A., Adriani, M., Bellmann, A., Fornari, E., Thiran, J. P., … Clarke, S. (2001). Distinct pathways involved in sound recognition and localization: A human fMRI study. NeuroImage, 14(4), 802–816. 10.1006/nimg.2001.0888

- Martin, J. (2012). Neuroanatomy text and atlas (4th ed.). New York, NY: McGraw‐Hill Professional. 10.1036/9780071603973

- McGee, T., Kraus, N., Littman, T., & Nicol, T. (1992). Contributions of medial geniculate body subdivisions to the middle latency response. Hearing Research, 61(1–2), 147–154. 10.1016/0378-5955(92)90045-O

- Michel, C. M., Murray, M. M., Lantz, G., Gonzalez, S., Spinelli, L., & Grave De Peralta, R. (2004). EEG source imaging. Clinical Neurophysiology, 115(10), 2195–2222. 10.1016/j.clinph.2004.06.001

- Min, B. K., Hämäläinen, M. S., & Pantazis, D. (2020). New cognitive neurotechnology facilitates studies of cortical–subcortical interactions. Trends in Biotechnology, 38, 1–11. 10.1016/j.tibtech.2020.03.003

- Moller, A. R., Kern, J. K., & Grannemann, B. (2005). Are the non‐classical auditory pathways involved in autism and PDD? Neurological Research, 27(6), 625–629. 10.1179/016164105X25117

- Moller, A. R., & Rollins, P. R. (1992). Some forms of tinnitus and extralemniscal auditory pathway. Laryngoscope, 102(10), 1165–1171.

- Nasreddine, Z., Phillips, N., Bedirian, V., Charbonneau, S., Whitehead, V., Collin, I., … Chertkow, H. (2005). The Montreal Cognitive Assessment, MoCA: A brief screening tool for mild cognitive impairment. Journal of the American Geriatrics Society, 53, 695–699. 10.1111/j.1532-5415.2005.53221.x

- Oldfield, R. (1971). The assessment and analysis of handedness. Neuropsychologia, 9(1), 97–113.

- Oostenveld, R., Fries, P., Maris, E., & Schoffelen, J. M. (2011). FieldTrip: Open source software for advanced analysis of MEG, EEG, and invasive electrophysiological data. Computational Intelligence and Neuroscience, 2011, 9. 10.1155/2011/156869

- Overath, T., Zhang, Y., Sanes, D. H., & Poeppel, D. (2012). Sensitivity to temporal modulation rate and spectral bandwidth in the human auditory system: fMRI evidence. Journal of Neurophysiology, 107, 2042–2056. 10.1152/jn.00308.2011

- Perrin, F., Pernier, J., & Bertrand, O. (1989). Spherical splines for scalp potential and current density mapping. Electroencephalography and Clinical Neurophysiology, 72, 184–187. 10.1016/0013-4694(89)90180-6

- Picton, T. (2013). Hearing in time: Evoked potential studies of temporal processing. Ear and Hearing, 34(4), 385–401. 10.1097/AUD.0b013e31827ada02

- Picton, T. W., Dimitrijevic, A., Perez‐Abalo, M.‐C., & van Roon, P. (2005). Estimating audiometric thresholds using auditory steady‐state responses. Journal of the American Academy of Audiology, 16(3), 140–156. 10.3766/jaaa.16.3.3

- Picton, T. W., John, M. S., Dimitrijevic, A., & Purcell, D. (2003). Human auditory steady‐state responses. International Journal of Audiology, 42(1), 177–219.

- Plourde, G., & Picton, T. W. (1990). Human auditory steady‐state response during general anesthesia. Anesthesia and Analgesia, 71(5), 460–468. 10.1213/00000539-199011000-00002