Abstract

One of the main limitations of natural language‐based approaches to meaning is that they do not incorporate multimodal representations the way humans do. In this study, we evaluate how well different kinds of models account for people's representations of both concrete and abstract concepts. The models we compare include unimodal distributional linguistic models as well as multimodal models which combine linguistic with perceptual or affective information. There are two types of linguistic models: those based on text corpora and those derived from word association data. We present two new studies and a reanalysis of a series of previous studies. The studies demonstrate that both visual and affective multimodal models better capture behavior that reflects human representations than unimodal linguistic models. The size of the multimodal advantage depends on the nature of semantic representations involved, and it is especially pronounced for basic‐level concepts that belong to the same superordinate category. Additional visual and affective features improve the accuracy of linguistic models based on text corpora more than those based on word associations; this suggests systematic qualitative differences between what information is encoded in natural language versus what information is reflected in word associations. Altogether, our work presents new evidence that multimodal information is important for capturing both abstract and concrete words and that fully representing word meaning requires more than purely linguistic information. Implications for both embodied and distributional views of semantic representation are discussed.

Keywords: Multimodal representations, Semantic networks, Distributional semantics, Visual features, Affect

1. Introduction

When you look up the word rose in the 2012 Concise Oxford English Dictionary, it is defined as “a prickly bush or shrub that typically bears red, pink, yellow, or white fragrant flowers, native to north temperate regions and widely grown as an ornamental.” How central are each of these aspects to our representation of a rose, and in what form are they represented? Different theories give different answers to this question, particularly with respect to how much linguistic and nonlinguistic sensory representations contribute to meaning. In embodied theories, meaning is based on the relationship between words and internal bodily states corresponding to multiple modalities, such as somatosensation, vision, olfaction, and perhaps even internal affective states. By contrast, lexico‐semantic views focus on the contribution of language, suggesting that the meaning of rose can be derived in a recursive fashion by considering its relationship to the meaning of words in its linguistic context, such as bush, red, and flower (cf. Firth, 1968). These two views are extremes on a spectrum, and current theories of semantics tend to take an intermediate position that both linguistic and nonlinguistic information contribute to meaning.

How is information from sensory modalities and the language modality combined, and which is more important to understand the meaning of words? One idea is that language is a symbolic system that represents meaning via the relationships between (amodal) symbols but is also capable of capturing sensory representations since these symbols refer to perceptual information. This is consistent with the symbol interdependency hypothesis, proposed by Louwerse (2011) and closely related to Clark's (2006) hypothesis that we use language as proxy of the world.

Others have argued for hybrid approaches that combine both symbolic and sensorily grounded representations to varying degrees, depending on task and word characteristics (Andrews, Frank, & Vigliocco, 2014; Paivio, 1971; Riordan & Jones, 2011; Vigliocco, Meteyard, Andrews, & Kousta, 2009). One reason for the importance of nonlinguistic sensory information is that although language has the capacity to capture sensory properties, this capacity is imperfect in making fine‐grained distinctions. For example, in Japanese the word ashi

does not distinguish between foot and leg. As another example, consider the fact that across languages, commonly used color terms only cover a subset of all the colors that humans can detect. Thus, one would expect that models of lexical semantics should perform better when provided with visual training data in addition to linguistic corpora (e.g., Bruni, Tran, & Baroni, 2014; Johns & Jones, 2012; Silberer & Lapata, 2014). This may be true even for abstract words that lack visual referents: Recent work suggests that nonlinguistic factors such as sensorimotor experience, emotional experience, interoception, and sociality ground the meaning of abstract words (for an overview, see Borghi et al., 2017). This suggests that models incorporating only linguistic information will fare less well in capturing human representations than those that combine linguistic and sensory input. The opposite may also hold—that models incorporating only sensory input will fare less well than those based on both.

does not distinguish between foot and leg. As another example, consider the fact that across languages, commonly used color terms only cover a subset of all the colors that humans can detect. Thus, one would expect that models of lexical semantics should perform better when provided with visual training data in addition to linguistic corpora (e.g., Bruni, Tran, & Baroni, 2014; Johns & Jones, 2012; Silberer & Lapata, 2014). This may be true even for abstract words that lack visual referents: Recent work suggests that nonlinguistic factors such as sensorimotor experience, emotional experience, interoception, and sociality ground the meaning of abstract words (for an overview, see Borghi et al., 2017). This suggests that models incorporating only linguistic information will fare less well in capturing human representations than those that combine linguistic and sensory input. The opposite may also hold—that models incorporating only sensory input will fare less well than those based on both.

Not all agree that multimodal information is necessary for the representation of word meaning, at least not for abstract words that lack physical referents (Paivio, 2013). For instance, it is not clear how the meaning of concepts like “romance” or “purity,” which are connotations of the word rose that are not directly derived from sensory impressions, might be captured by sensory‐based models that learn low‐level visual features from images (e.g., Chatfield, Simonyan, Vedaldi, & Zisserman, 2014; He, Zhang, Ren, & Sun, 2016; Lazaridou, Pham, & Baroni, 2015).

In this paper, we revisit the question about the extent to which the language modality encodes sensory properties by comparing linguistic representations derived from text corpora or word associations with multimodal models that encode sensory or affective information as well. We focus on concrete and abstract concepts and the role of visual and affective properties. With affective information we refer more specifically to the emotional valence, or how positive/negative a word is and the arousal, or how calming/exciting a word is. 1 This will allow us to determine whether models of abstract word meaning require multimodal affective representations in the same way that models of concrete word meaning do.

1.1. Multimodal representations of concrete concepts

Concrete concepts are concepts that refer to a perceptible entity. Multimodal models of concrete concepts combine linguistic and sensory representations to determine to what degree linguistic representations capture sensory properties. In theory, these sensory properties could reflect any modality. In a study by Kiela and Clark (2015), for example, a multimodal model was constructed that combines language with auditory input. In practice, most studies focus on visual multimodal models, and this is reflected in the type of concepts that are modeled (typically concrete nouns) and the way concreteness is measured (typically focusing on the visual modality, see Brysbaert, Warriner, & Kuperman, 2014). Early work relied on psycho‐experimental methods to capture these sensory properties; a common approach is to derive representations from feature elicitation tasks in which participants are asked to list meaningful properties of the concept in question. These (presumably nonlinguistic) features are then combined in a multimodal model whose linguistic representations are derived from text corpora (Andrews et al., 2014; Johns & Jones, 2012; Steyvers, 2010).

In recent years, multimodal models have begun to use visual representations derived from large image databases instead of feature listing tasks. These range from approaches in which visual features are obtained by human annotators (Silberer, Ferrari, & Lapata, 2013) to bag‐of‐visual words approaches in which a large set of visual descriptors are mapped onto vectors encoding low‐level scale‐invariant feature transformations (e.g., Bruni et al., 2014). Deep convolutional networks are starting to be used as well, since they typically outperform the low‐level representations captured by simpler feature extraction methods (e.g., Lazaridou et al., 2015).

1.2. Multimodal representations of abstract concepts

Because of their reliance on visual information, most previous work on multimodal models has focused on concrete concepts. However, there are good reasons to study abstract concepts as well. For one, they are extremely prevalent in everyday language: 72% of the noun or verb tokens in the British National Corpus are rated by people as more abstract than the noun war, which many would already consider quite abstract (e.g., Lazaridou et al., 2015). Abstract concepts also pose a particular challenge to strong embodiment views, which maintain that meaning primarily relies on nonlinguistic information. According to these theories, concrete and abstract concepts are not substantially different from each other; both are grounded in the same systems that are engaged during perception, action, and emotion (Borghi et al., 2017). The difficulty for such views is that abstract concepts like “opinion” do not have clearly identifiable referents. By contrast, distributional semantic views can easily explain how abstract concepts are represented: in terms of their distributional properties in language (Andrews et al., 2014).

A range of other theories about abstract concepts have been proposed in recent years, going beyond either embodied or distributional semantic accounts (for an overview, see Borghi et al., 2017). For example, according to the conceptual metaphor theory, abstract concepts are represented in terms of metaphors derived from concrete domains; this process provides a perceptual grounding for abstract meaning (Lakoff & Johnson, 2008). We focus here on a different view that highlights the role of affect in particular, known as the Affective Embodiment Account (AEA). The AEA posits that abstract concepts are grounded in internal affective states (Vigliocco et al., 2009). The grounding is quite broad, covering not just abstract words for emotions but also words that evoke an affective state like cancer or birthday (Kousta, Vinson, & Vigliocco, 2009). Consistent with this, emotional valence leads to a processing advantage for abstract words in a lexical decision task, even when confounding factors like imageability or context availability are taken into account (Kousta, Vigliocco, Vinson, Andrews, & Del Campo, 2011).

Further evidence that supports the AEA comes from a study in which brain activation was predicted by linguistic representations derived from word embedding models as well as experiential (e.g., visual, affective) representations derived from a feature rating task (Wang et al., 2017). Both types of representations were only weakly correlated with each other. When Wang et al. (2017) related the neural activation during a word familiarity judgment task with the linguistic and affective experiential representations using features like valence, arousal, emotion, or social interaction for the same words, they found dissociable neural correlates for each. Notably, affective experiential features were sensitive to areas involved in emotion processing. A subsequent principal component analysis of the whole‐brain activity pattern showed that the first neural principal component, which captured most of the variance in abstract concepts, was correlated significantly with experiential information but not linguistic information. Moreover, the correlation was stronger for valence than other factors such as social interaction, which have been proposed as other factors in which abstract concepts could be embodied.

1.3. Current study

This study aims to investigate how and to what extent distributional models based on word associations or word co‐occurrences derived from natural language are able to capture the meaning of concrete and abstract words. In the word association model the meaning of a word is measured as a distribution of respectively weighted associative links encoded in a large semantic network, whereas the distributional linguistic model derives word meaning from word co‐occurrences derived from large text corpora. Both models are considered to be primarily linguistic in nature. However, for word associations, we expect that associations reflect access to not only the language modality but nonlinguistic sensory modalities as well.

Our second aim is to measure the extent to which distributional models based on word association and word co‐occurrence models can be improved by adding nonlinguistic visual and affective information. Our research is motivated by several observations. First, recent performance improvements by corpus‐based distributional semantic models (e.g., Mikolov, Chen, Corrado, & Dean, 2013) have led to suggestions that these models might learn representations like humans do (Mandera, Keuleers, & Brysbaert, 2017). Our work evaluates to what extent this is the case. Second, models that learn visual categories have recently shown a similarly striking improvement in their ability to correctly identify a wide range of concrete objects (Chatfield et al., 2014; He et al., 2016; Lazaridou et al., 2015).

Our study investigates new multimodal models that integrate visual and linguistic information. Our goals is to evaluate the extent to which such hybrid models capture human performance and to explore what insights they can provide about how humans use environmental cues (linguistic or visual) to build and represent semantic concepts.

Our final aim is motivated by the observation that previously reported gains of multimodal models over purely linguistic models in predicting human performance have been fairly modest (e.g., Bruni et al., 2014). This leaves some uncertainty about whether language in itself is sufficiently rich to encode detailed perceptual information (see Louwerse, 2011).

This study goes further by looking beyond concepts consisting of concrete nouns to determine whether multimodal representations for abstract words provide better predictions of behavioral measures of word meaning. In particular, we computationally test a corollary from the AEA hypothesis, namely that internal affective states provide the foundation for the meaning of abstract concepts.

We achieve these ends by extending existing multimodal models to incorporate both affective and visual multimodal representations. Our performance evaluation focuses on basic‐level concepts within a superordinate category, and it compares text‐based linguistic distributional models to models derived from experimental word association data that might reflect access to both linguistic and experiential representations. As described below, these aspects of our approach enable us to better interpret the performance of the models and what they mean about human cognition.

1.3.1. Comparisons between basic‐level concepts within a superordinate category

In this work, we evaluate models by evaluating their performance on basic‐level concepts (apple, guitar, etc.) belonging to natural language superordinate categories (fruit, musical instruments, etc.). The basic level is the most inclusive one within which the attributes are common to all or most members of the category (Rosch, 1978). Basic‐level concepts are not only the most informative; they are also acquired early and tend to be easy to form an image of (Rosch, Mervis, Gray, Johnson, & Boyes‐Braem, 1976; Tversky & Hemenway, 1984). They are also the default label: For instance, in picture naming studies, subordinate items are usually named at the basic level, even when subordinate names are known (Rosch, 1978).

Given these factors as well as the fact that visual features are shared to some extent between basic‐level objects, one might expect comparisons between them (like apples to pears) to provide a sensitive test of unimodal distributional linguistic models. Despite this, the human similarity benchmarks used to evaluate such models in previous work focus more on wider comparisons (like apples to food or tree). These non‐basic‐level and more abstract comparisons might be more readily encoded through language. This could explain the high correlations between model predictions and human similarity judgments found in such studies (e.g., Mandera et al., 2017), as well as the low correlations found in studies involving more concrete and basic‐level objects (De Deyne, Peirsman, & Storms, 2009). In this work, by focusing on basic‐level concepts, we aim to better control the nature of the semantic relationships and thereby clarify the utility of the information encoded in both linguistic models and multimodal models.

Of course, while the notion of a basic level applies clearly to concrete concepts, it is not obvious whether abstract concepts have a basic level. Nevertheless, we can make use of the fact that abstract concepts can be described as a part of a taxonomic hierarchy. Linguistic resources such as WordNet (Fellbaum, 1998) distinguish between superordinate, coordinate, and subordinate abstract concepts. For example, a hyponym (subordinate) listed in WordNet for the term envy is covetousness, whereas a hypernym (superordinate) is resentment. This suggests that abstract concepts, like concrete ones, can be described at a kind of “basic” level that has high cue validity and many shared attributes. 2 In this work we rely on WordNet to ensure that all of our basic‐level (concrete and abstract) concepts belong to superordinate concrete categories of similar size.

1.3.2. Comparison of distributional linguistic models to a word association baseline

To better understand to what degree linguistic information can predict human semantic judgments, we will compare distributional (corpus‐based) semantic models to a baseline model designed to capture subjective meaning. A variety of methods have been proposed to measure meaning, including semantic differentials (Osgood, Suci, & Tannenbaum, 1957), feature elicitation (De Deyne et al., 2008; McRae, Cree, Seidenberg, & McNorgan, 2005), and word association responses (De Deyne, Navarro, & Storms, 2013; Kiss, Armstrong, Milroy, & Piper, 1973; Nelson, McEvoy, & Schreiber, 2004). Distributional semantic representations derived from all of these methods appear to reflect both linguistic and nonlinguistic semantic information (Taylor, 2012). For example, modality‐specific brain regions related to imagery are also activated when participants generate semantic features or word associations (Simmons, Hamann, Harenski, Hu, & Barsalou, 2008). In the remainder of this article we will use the term "distributional linguistic model" to refer to a distributional semantic model that uses language corpora.

In this study we derive our baseline model of semantic meaning using word association data. This approach makes fewer assumptions than feature elicitation tasks and is relevant for abstract words as well. If, as hypothesized, this model incorporates both linguistic and nonlinguistic information, we would expect that adding visual or affective features would have little impact on the performance of the model. If, as the AEA account suggests, visual and affective information cannot be derived from linguistic information alone, we might expect that distributional corpus‐based models would be improved by adding that information.

Alternatively, if it is indeed the case that natural language does encode perceptual and affective features in a sufficiently rich way, then adding visual or affective features to these language models would have little impact on their performance. This possibility is not unreasonable, given recent improvements in the ability of distributional semantic models to predict a variety of human judgments (see, e.g., Mandera et al., 2017). These improved models, which rely on word co‐occurrence predictions (e.g., word2vec, Mikolov et al., 2013) instead of co‐occurrence counts (e.g., latent semantic analysis; Landauer & Dumais, 1997), may thus capture more of the information inherent in linguistic data and provide a better proxy of the world (Clark, 2006).

The structure of this paper is as follows. We first describe how the distributional linguistic, word association, and experiential (visual or affective) models are constructed. We then evaluate how the experiential information (visual or affective) augments the performance of the distributional linguistic model and the word association baseline model in two similarity judgment tasks. Study 1 required participants to pick the most related pair out of three words (e.g., rose vs. tulip vs. daffodil) while Study 2 had participants rate the similarity of pairs like rose and tulip on an ordinal scale.

2. Constructing unimodal and multimodal models

2.1. Distributional linguistic model

The linguistic model captures semantic representation from the distributional properties in the language environment as is, without integrating it with information from other modalities.

2.1.1. Corpus

The model was trained on a combination of different text corpora described in detail in De Deyne, Perfors, and Navarro (2016). The corpora were constructed to provide a reasonably balanced set of texts that is representative of the type and amount of language a person experiences during a lifetime: formal and informal, spoken and written. It contains four parts: (a) a corpus of English movies subtitles; (b) written fiction and nonfiction taken from the Corpus of Contemporary American English (COCA; Davies, 2009); (c) informal language derived from online blogs and websites available through COCA; and (d) the Simple English Wikipedia, a version of Wikipedia with usually shorter articles aimed at students, children, and people who are learning English (SimpleWiki, accessed February 3, 2016). The resulting corpus consisted of 2.16 billion word tokens and 4.17 million word types. It thus encompasses knowledge that is likely available to the average person but is sufficiently generous in terms of the quality and quantity of data to ensure that our models perform similarly to the existing state of the art.

2.1.2. Word2vec embeddings

Word embedding models have recently been proposed as an alternative to count‐based distribution models such as latent semantic analysis (Landauer & Dumais, 1997) or word co‐occurrence count models (e.g., HAL; Burgess, Livesay, & Lund, 1998). One of the most popular word embedding algorithms consists of a simple neural network that predicts word co‐occurrence (Mikolov et al., 2013). In contrast to count‐based models, networks like word2vec are used to predict words from context (the CBOW or “continuous bag‐of‐words” approach) or context from words (the skip‐gram approach), which more closely resembles human learning. Compared to count‐based approaches, these embedding models often lead to better accounts of lexical and semantic processing (Baroni, Dinu, & Kruszewski, 2014; De Deyne, Perfors, et al., 2016; Mandera et al., 2017). 3

To train word2vec on this corpus, we used a CBOW architecture where the learning objective was to predict a word based on the context in which it occurs. A range of parameters determines the efficiency and predictive performance. In this study, parameter values were taken from previous research in which the optimal model was a network trained to predict the context words using a window size of 7 and a 400‐dimensional hidden layer from which word vectors are derived (De Deyne, Perfors, et al., 2016). This was the best model on a wide range of similarity judgment studies, and it performs comparably with other published embeddings.

2.2. Word association model

Word associations have long been used as an experimental method to assess the semantic knowledge a person holds about a word (e.g., Deese, 1965). In contrast to a controlled task like feature listing, the free association procedure does not censor the type of response. This makes it suitable for capturing the representations of all kinds of concepts (including abstract ones) and all kinds of semantic relations (including thematic and affective ones). It also avoids dissociating the lexicon in two different types of entities (concepts and features), which allows us to represent all concepts in a single graph. The resulting representation is thought to capture broad aspects of meaning, not solely those reflecting our linguistic experiences (Mollin, 2009), since nonlinguistic (i.e., experiential or affective) information is accessed when participants generate associates (Simmons et al., 2008). If this is correct, then word associations can be best characterized as a multimodal model. However, for the purpose of this exposition, unimodal models will refer to either a distributional linguistic or a word association model, and multimodal models will refer to a combination of these models with either visual or affective information.

2.2.1. Word association data

The current data were collected as part of the Small World of Words project, 4 an ongoing crowd‐sourced project to map the mental lexicon in various languages. The SWOW‐EN2018 data are those reported in De Deyne, Perfors, et al. (2016) and consist of associates given by 88,722 fluent English speakers. Each speaker gave three different responses to between 14 and 18 cue words. For example, a person shown the cue word miracle might respond magic, religion, and Jesus. The dataset contains a total of 12,292 cue words for which at least 300 responses were collected for every cue.

2.2.2. Semantic network

In line with previous work, we constructed a semantic weighted graph from these data in which each edge corresponds to the association frequency between a cue and a target word. The graph was constructed by only including responses that also occurred as a cue word and keeping only those nodes that are part of the largest connected component (i.e., nodes that have both in‐ and out‐going edges). The resulting graph consists of 12,217 nodes, which retains 87% of the original data.

Following De Deyne, Navarro, Perfors, and Storms (2016) and De Deyne, Navarro, Perfors, Brysbaert, and Storms (2019), we first transformed the raw association frequencies using positive point‐wise mutual information (PPMI). Next, a mechanism of spreading activation through random walks was used to allow indirect paths of varying length connecting any two nodes to contribute to their meaning. The random walks can be thought of as a vector with the same dimensionality as the number of nodes in the graph where each element corresponds to a weighted sum of direct and indirect paths, with longer paths receiving a lower weight. The random walks implement the idea of spreading activation over a semantic network. To limit the contribution of long paths, a decay parameter α was set to 0.75, in line with De Deyne, Navarro, et al. (2016). This algorithm is similar to other approaches (Austerweil, Abbott, & Griffiths, 2012), but differs by taking an additional PPMI weighting of the graph with indirect paths to avoid a frequency or degree bias and to reduce spurious links (for a discussion, see Newman, 2010).

2.3. Visual feature model

We constructed a model based on visual information using ImageNet as the source of the data encoding visual information (De Deyne, Perfors, et al., 2016); it is currently the largest labeled image bank and includes over 14 million images. It consists of images for the concrete nouns represented in WordNet, a language‐inspired knowledge base in which synonymous words are grouped in synsets and connected through a variety of semantic relations (IS‐A, HAS‐A, etc.). With 21,841 synsets, ImageNet captures a large portion of the concrete lexicon represented in WordNet. A small part of synsets encoded in the WordNet is shown in Fig. 1.

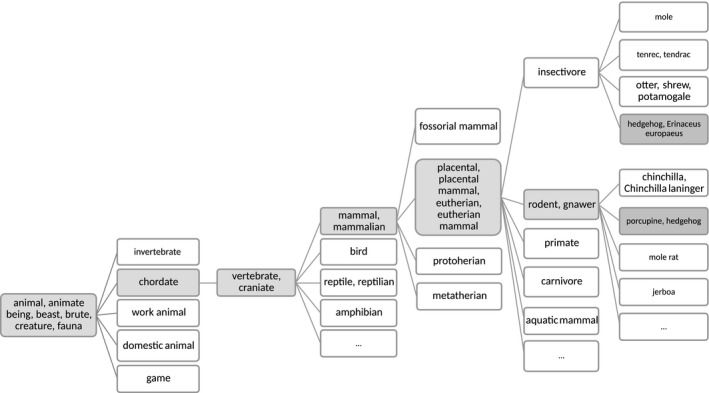

Fig. 1.

Part of the WordNet hierarchy for concrete synsets that are covered by ImageNet. Synsets can occur at different hierarchical levels and are labeled by one or multiple words that can overlap with other branches in the tree; this is illustrated for the case “hedgehog.”

Visual features were obtained by applying ResNet (He et al., 2016), a pre‐trained supervised convolutional neural network, to each concrete synset that had at least 50 images. The 2,048‐dimensional activation of the last layer (before the softmax layer) of the network is taken as a visual feature vector as it contains higher level features. Finally, we obtained a single 2,048‐dimensional vector to represent each WordNet synset by averaging the feature vectors from its associated individual images. Each image vector was then mapped to a WordNet synset.

The words in this study corresponded to synset labels that could occur both as inner nodes (e.g., mammal) or leaf nodes (e.g., hedgehog) depending on their level of abstraction. In some cases, a word corresponded to a synset with multiple labels. For example, in Fig. 1 the word hedgehog is found in two different synsets (one labeled “hedgehog, Erinaceus europaeus” and one “porcupine, hedgehog”). To map these synsets to a single word, the synset labels were split and the corresponding image vectors averaged. Of the 18,851 parsed synset labels in ResNet, 4,449 labels were shared with the SWOW‐EN2018 cue words. Of these 4,449 cues, 910 cues mapped onto more than one synset and were averaged. Previous research on high‐dimensional distributional language models has shown that point‐wise mutual information (PMI), which assigns larger weights to specific features, improves model predictions (De Deyne et al., 2019; Recchia & Jones, 2009). An exploratory analysis showed that this was also the case for the image vectors; we therefore use weighted PMI image vectors throughout this work.

2.4. Affective feature model

Affective factors like valence or arousal capture a significant portion of the structure in the mental representation of both adjectives (e.g., De Deyne, Voorspoels, Verheyen, Navarro, & Storms, 2014) and nouns (e.g., Osgood et al., 1957).The validity of the subjective judgments of affective factors is supported by recent work that demonstrated that human affective ratings predict the modulation of the potential neural activity signal in areas associated with affective processing (Vigliocco et al., 2013). According to the AEA theory (Vigliocco et al., 2009), these affective factors provide the necessary multimodal grounding for abstract concepts, which lack any physical referents. We constructed the features of the affective model based on human ratings for valence, arousal, and dominance for nearly 14,000 words (Warriner, Kuperman, & Brysbaert, 2013). The ratings by males and females were treated as separate features and supplemented with three additional features for valence, arousal, and dominance from a more recent study based on 20,000 English words (Mohammad, 2018). The norms from the latter study were somewhat different than those from Warriner et al. (2013) in two ways. First, they used best–worst scaling, which resulted in more reliable ratings. Second, they operationalized arousal differently, resulting in ratings that were less correlated with valence. Each individual word was thus represented by nine features: valence, arousal, and potency judgments for men and women from Warriner et al. (2013) and valence, arousal, and dominance judgments from Mohammad (2018). For example, the representation for the word rose would be: [5.9 3.1 6.4 8.1 2.6 6.0 7.8 2.5 3.5]. The first six values correspond to the male ratings for valence, arousal, and potency and the female ratings for valence, arousal, and potency (Warriner et al., 2013). The last three values correspond to rescaled valence, arousal, and dominance for men and women from Mohammad (2018). None of the features were perfectly correlated with each other, which allowed them to each contribute.

2.5. Multimodal models that combine linguistic models with experiential representations

To investigate how adding visual or affective information to our linguistic or word association model affects their performance, we created multimodal models that incorporate both kinds of information. There are multiple efficient ways to combine different information sources; these include auto‐encoders (Silberer & Lapata, 2014), Bayesian models (Andrews et al., 2014), and cross‐modal mappings (Collell, Zhang, & Moens, 2017). We employ a late fusion approach in which features from different modalities are concatenated to build multimodal representations. This approach performs relatively well (e.g., Bruni et al., 2014; Johns & Jones, 2012; Kiela & Bottou, 2014) and enables us to investigate the relative contribution of the modalities directly. This is achieved by a single dataset‐level tuning parameter β, ranging from 0 to 1, which allows us to vary and quantify the relative contribution of the different modalities. The multimodal fusion M of the modalities a and b corresponds to where denotes concatenation, va is the vector representation for the linguistic or word association information, and vb is the vector representation for the affective or visual information. Since the features for different modalities can have different scales, they were normalized using the L 2‐norm prior to concatenation, which puts all features in a unitary vector space (Kiela & Bottou, 2014).

3. Study 1: Basic‐level triadic comparisons

The first study compares how well each of the models above predicts human similarity judgments. Participants rated similarity in a triadic comparison task in which they were asked to pick the most related pair out of three words. There were two conditions, one in which the words were concrete and one in which they were abstract. Compared to pairwise judgments using rating scales, the triadic task has several advantages: Humans find relative judgments easier, it avoids scaling issues, and it leads to more consistent results (Li, Malave, Song, & Yu, 2016).

3.1. Method

3.1.1. Participants

Forty native English speakers between 18 and 49 years old (21 females, 19 males, average age 35) were recruited in the concrete condition and forty native English speakers aged 19–46 years (16 females, 24 males, average age = 32) in the abstract condition. The participant sample size (and number of stimuli) was informed by a previous work on triadic judgments (De Deyne, Navarro, et al., 2016). Data were collected in two online studies through Prolific Academic. We first collected for the abstract study and when enough participants completed the task, the concrete task was administered. All participants signed an informed consent form and were paid £6/h. The procedures were approved by the Ethics Committee of the University of Adelaide.

3.1.2. Stimuli

The concrete stimuli consisted of 100 triads constructed from a subset of 300 nouns present in the lexicons of all of our models and for which valence and concreteness norms were available (Brysbaert et al., 2014; Warriner et al., 2013). All 300 words were selected from a set of superordinate categories identified in previous work (e.g., Battig & Montague, 1969; De Deyne et al., 2008). Approximately half of the triads belonged to natural kind categories (Fruit, Vegetables, Mammals, Fish, Birds, Reptiles, Insects, Trees) and the other half to human‐made categories (Clothing, Dwellings, Furniture, Kitchen utensils, Musical instruments, Professions, Tools, Vehicles, Weapons). Each triad was composed of three basic‐level words that belong to the same superordinate category (e.g., falcon, flamingo, penguin) and was constructed by randomly combining category exemplars, subject to the constraint that none of the words occurred more than once across any of the triads. Table A1 of Appendix A contains a list of all the stimuli.

The abstract stimuli consisted of 100 triads and were also constructed from words that were present in the lexicons of all our models and where norms for concreteness and valence were available (see concrete triads). To ensure that the words were abstract, we removed any words with concreteness ratings of over 3.5 on a 5‐point scale (Brysbaert et al., 2014). The average concreteness was 2.6. Moreover, all words were well known, with at least 90% of participants in the word association study indicating that they knew the word. The resulting set of 29 categories covered a variety of abstract domains, including emotions, attitudes, abilities, social groups, and beliefs. A list of the stimuli together with their category label is presented in Table A2 of Appendix A.

To identify superordinate and basic‐level words for abstract categories, we used WordNet (Fellbaum, 1998), selecting stimuli corresponding to categories in the WordNet hierarchy at a depth of 4–8 in the taxonomy. This ensured that the exemplars were neither overly specific nor overly general. For example, the triad bliss–madness–paranoia consists of three words defined at depths 8, 9, and 9 in the hierarchical taxonomy, with the most specific shared category label or hypernym at depth 6.

3.1.3. Procedure

For each of the 100 triads, participants were instructed to select the pair of words (out of the three) that was most related in meaning, or to indicate if any of the words is unknown. They were asked to only consider the word meaning, ignoring superficial properties like letters, sound, or rhyme. The method and procedure for abstract stimuli were identical to that for the concrete except that the example given to participants now contained abstract words. The concrete task took 8 min, and the abstract one, 10 min.

3.1.4. Behavioral results

All three words in over 99% of concrete triads and 96% of abstract triads were considered known by participants. Similarity judgments were calculated by counting how many participants chose each of the three potential pairs. Because the number of judgments varied depending on whether participants judged them to be unknown, they were converted to proportions. The Spearman split‐half reliability was .92 for concrete triads and .90 for abstract triads. These reliabilities provide an upper bound of the correlations that can be achieved with our models.

3.2. Model evaluation

Human triad preferences were predicted by calculating the Pearson correlation with the model preferences for either the distributional linguistic, word association, visual, and affective vectors for each of the three word pairs in each triad. The model preferences were calculated using the cosine similarities for all three pairs and rescaling them to sum to one. 5 Note that in all analyses that follow, no results are available for the visual feature model of abstract words for the obvious reason that abstract words are not found in ImageNet. The correspondences between the human preferences and the model predictions measured through Pearson correlations are shown in Table 1.

Table 1.

Pearson correlations and confidence intervals for unimodal and multimodal models. The top panel shows the performance when va corresponds to the distributional linguistic model, while the middle panel va corresponds to the word association baseline. The bottom panel corresponds to purely experiential model where va corresponds to the affective model and is added for completeness. In each panel, the unimodal columns show the performance of that model (va) as well as the two experiential models (vb) on either the concrete or the abstract words. The best‐fitting multimodal models combining va and vb were found by optimizing the correlation for mixing parameter β and are shown in column . The improvement due to adding experiential information () is shown in column Δr

| Dataset |

|

va = Distributional linguistic model | ||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Unimodal | Multimodal | |||||||||||

|

|

CI95 | vb |

|

CI95 | β |

|

CI95 | Δr | CI95 | |||

| Concrete | 300 | .64 | [0.57, 0.70] | Visual | .67 | [0.60, 0.73] | .48 | .75 | [0.70, 0.80] | .12 | [0.07, 0.17] | |

| Concrete | 300 | .64 | [0.57, 0.70] | Affect | .21 | [0.10, 0.32] | .50 | .68 | [0.62, 0.74] | .04 | [0.02, 0.08] | |

| Abstract | 300 | .62 | [0.54, 0.68] | Affect | .51 | [0.43, 0.59] | .58 | .74 | [0.69, 0.79] | .13 | [0.08, 0.19] | |

| Dataset |

|

va = Word association model | ||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Unimodal | Multimodal | |||||||||||

|

|

CI95 | vb |

|

CI95 | β |

|

CI95 | Δr | CI95 | |||

| Concrete | 300 | .76 | [0.71, 0.80] | Visual | .67 | [0.60, 0.73] | .35 | .81 | [0.77, 0.85] | .05 | [0.03, 0.08] | |

| Concrete | 300 | .76 | [0.71, 0.80] | Affect | .21 | [0.10, 0.32] | .38 | .78 | [0.74, 0.82] | .02 | [0.00, 0.05] | |

| Abstract | 300 | .82 | [0.78, 0.86] | Affect | .51 | [0.43, 0.59] | .05 | .82 | [0.78, 0.86] | .00 | [−0.01, 0.01] | |

| Dataset |

|

va = Affective model | ||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Unimodal | Multimodal | |||||||||||

|

|

CI95 | vb |

|

CI95 | β |

|

CI95 | Δr | CI95 | |||

| Concrete | 300 | .21 | [0.10, 0.32] | Visual | .67 | [0.60, 0.73] | .45 | .73 | [0.67, 0.77] | .52 | [0.41, 0.63] | |

Note that the confidence intervals for Δr are based on testing significant differences for dependent overlapping correlations based on Zou (2007). This approach increases the power to detect an effect compared to Fisher's r to z procedure which assumes independence.

3.2.1. Distributional linguistic versus word association model comparisons

We first compared the performance of the distributional linguistic model against the baseline word association model. The word association model showed a high correlation with the triad preferences in both the concrete, r(298) = .76, CI95 [0.71, 0.80], and abstract tasks, r(298) = .82, CI95 [0.78, 0.86]. The correlations for the distributional linguistic model were considerably lower: r(298) = .64, CI95 [0.56, 0.70] for concrete triads and r(298) = .62, CI95 [0.54, 0.68] for abstract triads. To test whether the difference in correlation was significant, confidence intervals for correlation differences were calculated for dependent overlapping variables (Zou, 2007) using the cocor package in R (Diedenhofen & Musch, 2015). The 95% confidence interval for the difference in correlation was CI95 [0.06, 0.19] for the concrete triads and CI95 [0.14, 0.27] for the abstract triads, suggesting that the word association model better predicted human similarity judgments for both kinds of words.

3.2.2. Visual and affective multimodal model comparisons

For the multimodal models, we were primarily interested in understanding how visual information contributed to the representation of concrete words and how affective information contributed to the representation of abstract words. In contrast to abstract words, it is also feasible to investigate a combination of both visual and affective information for concrete words. For completeness we include this scenario for concrete word with vb = Affect as part of the results reported in Table 1.

The confidence intervals in the last column of Table 1 indicate that for concrete words, adding visual information helped both the distributional and the word association models. The values corresponding to the visual multimodal model are higher than the values, and in both cases this difference was significant as the confidence interval of the difference did not include zero. However, visual information improved performance of the distributional linguistic model more (0.12 vs. 0.05, respectively). The bootstrapped confidence interval of the difference between Δrs, CI95 [0.01, 0.12], did not include zero, suggesting this difference was significant. 6 This suggests that the word association model may incorporate some visual information that the distributional linguistic model does not. For completeness, Table 1 also shows the results for concrete triads using a multimodal model based on affective information. Affective information by itself only weakly predicts the preferences in concrete triads (r(298) = .21), but it offers a small multimodal gain when combined with the distributional linguistic model (Δr = .04, CI95 [0.02, 0.08]) and the word association model (Δr = .02, CI95 [0.00, 0.05]).

For abstract words, we found that affective information improved the performance of the distributional linguistic model substantially (from to ). This improved performance is consistent with the AEA hypothesis by Vigliocco et al. (2009) and suggests that the decisions made by people in the triad task were based in part on affective information. Indeed, the affective model predictions alone did capture a significant portion of the variability in the abstract triads, r(298) = .51, CI95 [0.43, 0.59], which is remarkable considering that the model consists of only nine features. Interestingly, affective information did not improve the performance of word association model, suggesting that word association data already incorporates affective information. Moreover, the multimodal gain for the distributional linguistic model (0.13, compared to 0.00 for word associations) was significantly larger, with a bootstrapped confidence interval of the difference between Δrs CI95 [0.07, 0.19].

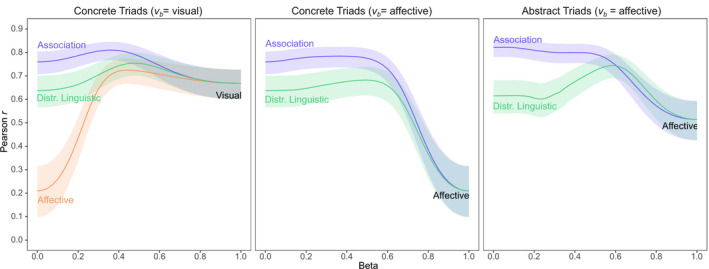

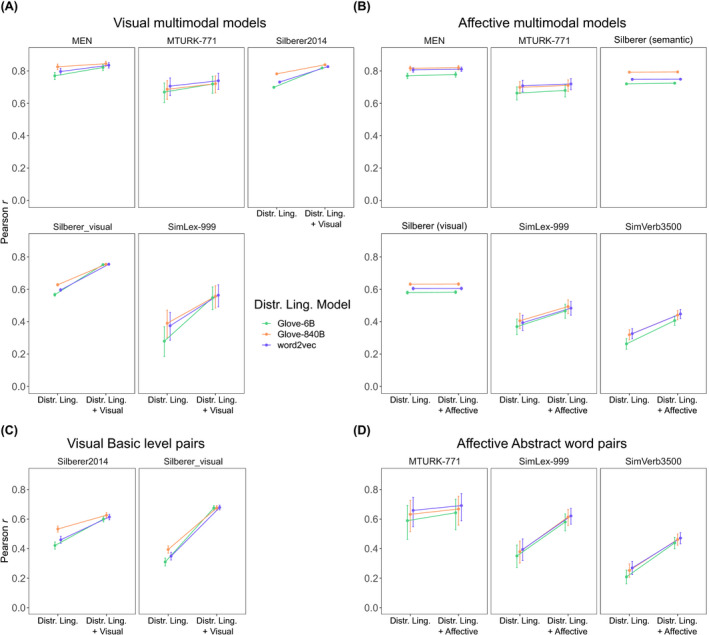

To further explore the effect of adding visual or affective information, Fig. 2 plots the performance of each model as a function of the β‐weighted proportion of experiential information added when the words are either concrete (left panel) or abstract (right panel). The word association model performs best, with small improvements from visual features (Δr = .05, β = 0.35, see Table 1). For the concrete triads, we examine the effect of adding visual information. The distributional linguistic model performs worse but improves slightly more when visual features are added (r increase of .07). For completeness, we also included a model where va is affective and vb is visual for the concrete triads. For the concrete triads, the affective model, shown in orange and included in Table 1, is easily the worst of the three. For the abstract triads, we examine the effect of adding affective information. When this is done, the word association model does not improve, but the distributional linguistic model improves considerably.

Fig. 2.

The effect of adding visual or affective experiential information to predict triadic preferences for concrete (first and second panels) and abstract (third panel) word pairs. Each panel shows the unimodal distributional linguistic and word association correlations on the left side of the x‐axis and the unimodal experiential (affective or visual features) correlations on the right side. Intermediate values on the x‐axis indicate multimodal models. In the first panel, visual information is added: Larger β values correspond to models that weight visual feature information more. In the second and third panels, affective information is added: Larger β values correspond to models that weight affective information more. Peak performance for all models usually occurs when about half of the information is experiential.

Overall, word association models outperformed distributional linguistic models at the optimal β value, even when these models included visual (respectively 0.81 vs. 0.75, CI95 [0.02, 0.09]) or affective (respectively 0.82 vs. 0.74, CI95 [0.03, 0.13]) information.

3.3. Robustness

Thus far, our results support the hypothesis that the model of meaning based on word associations captures visual information in concrete words and affective information in abstract words. The performance of the distributional linguistic model was worse than the word association baseline for both concrete and abstract words. The distributional linguistic models were most improved by adding affective information, consistent with the AEA hypothesis that the meaning of abstract words, like concrete words, relies on experiential information. To what degree do these findings reflect the specific choices we made in setting up our models? To address this question, we tested how robust our results were when compared against alternative models based on different corpora and different embedding techniques.

3.3.1. Distributional linguistic models

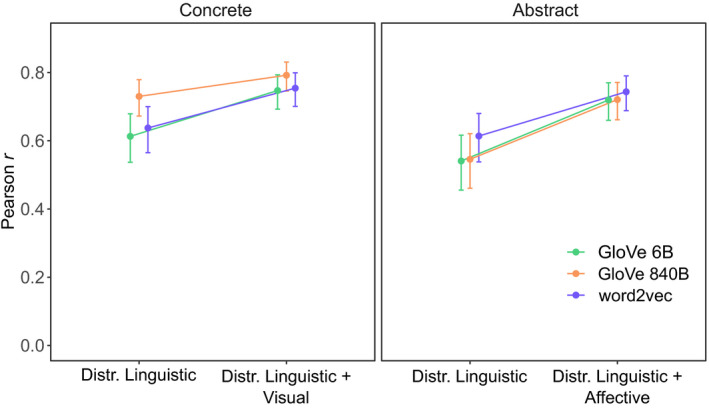

First, we investigated whether the linguistic model performance was due to the specific embeddings used. To test this, we chose GloVe embeddings as an alternative distributional linguistic model (Pennington, Socher, & Manning, 2014). In contrast to word2vec embeddings, GloVe does not incrementally learn embeddings but is based on a factorization of a global word co‐occurrence matrix, which can lead to improved predictions in certain tasks. We used the published word vectors for a model of comparable size to our corpus consisting of 6 billion (B) tokens derived from the GigaWord 5 and Wikipedia 2014 corpus. We also included an extremely large corpus consisting of 840B words from the Common Crawl project. 7 As before, the distributional linguistic vectors (va) were combined with visual or affective information (vb) to create a multimodal model that was optimized by fitting values of β. Fig. 3 shows the unimodal distributional linguistic model correlations and the optimal multimodal correlations.

Fig. 3.

Evaluation of alternative distributional linguistic models on concrete and abstract words in the triad task. The figure shows the correlations and 95% confidence intervals for unimodal and multimodal (visual left, affective right panel) models using the standard word2vec based on 2B token corpus and two embeddings based on GloVe, trained on a corpus of either of 6B and 840B tokens.

For unimodal predictions of concrete items, the Glove‐840B was better than word2vec (CI95 [−0.15, −0.04]) and Glove‐6B (CI95 [−0.17, −0.07]), but the smaller Glove‐6B was not significantly different from word2vec. The multimodal predictions of concrete items followed the same pattern, with only GloVe‐840B better than word2vec (CI95 [−0.07, −0.01]), and Glove‐6B (CI95 [−0.07, −0.02]). For the unimodal prediction of abstract items, word2vec outperformed both GloVe‐6B (CI95 [0.02, 0.14]) and GloVe‐840B (CI95 [0.01, 0.13]). However, once affect was added in the multimodal model, the distinct models did not obtain significantly different correlations.

Does adding experiential information improve performance for the GloVe models, as it did for word2vec? For concrete items for Glove‐6B, it appeared to: Predictions using Glove‐6B were significant (the Δr = .13 had a confidence interval that did not overlap with zero, CI95 [0.09, 0.19]). The same was true for Glove‐840B (Δr = 0.06, CI95 [0.03, 0.10]). The pattern was the same for abstract words: Adding experiential information resulted in a significant improvement for both GloVe‐6B (Δr = .18, CI95 [0.12, 0.24]) and GloVe‐840B (Δr = .17, CI95 [0.12, 0.24]).

To summarize, the unimodal results indicate that a very large corpus based on 840 billion (B) tokens improves performance for concrete items but results in lower correlations for abstract ones. This suggests that visual language about concrete entities might be relatively underrepresented in all but the largest corpora. The current word2vec model based on 2 billion (2B) performs favorably compared to the 6B GloVe embeddings trained on a corpus more similar in size. For the multimodal comparisons, regardless of the nature of the distributional linguistic model, adding experiential information improved performance. Overall, the findings are robust regardless of the corpus or embedding method used. In other words, the results for these distinct distributional linguistic models are not very different, despite both architectural (word2vec vs. GloVe) and corpus differences (2B words for the current corpus, 6B or 840B words used to train GloVe).

3.3.2. Word association model

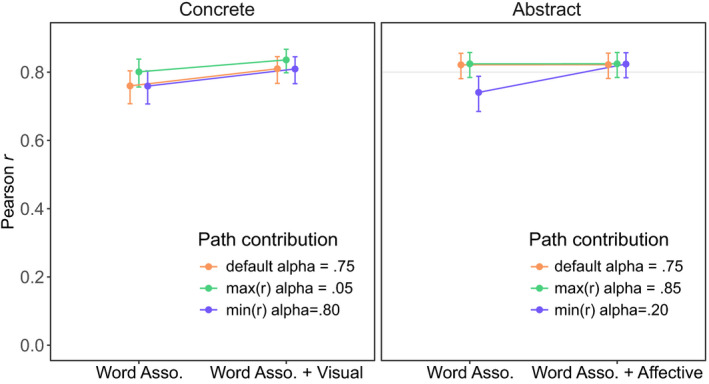

There were fewer parameters and degrees of freedom in the word association model than the distributional linguistic model, since its behavior is determined by a single activation decay parameter α, which we set at 0.75 in line with previous work (De Deyne, Perfors, et al., 2016). However, this might have had some effect on model performance: Especially for basic‐level comparisons, a high value of α might introduce longer paths, which might add more thematic information at the expense of shorter category‐specific paths. For this reason, we also evaluated performance for other values of α.

Fig. 4 shows that performance was reasonably robust over all values of α. Smaller α values did occasionally improve the results, but only for the unimodal results with concrete words: r α=0.05(298) = .80 compared to the default r α=0.75(298) = .76, Δr = −.04, CI95 [−0.07, −0.02]. For abstract words, the optimal performance was obtained when α = 0.85 and was not significantly different from the default setting (α = 0.75). One interpretation of this is that the representation of concrete words does not reflect indirect paths as much as the representation of abstract concepts. Regardless, this pattern suggests that even the modest improvement found when visual information was added to the word association model was probably somewhat overestimated relative to what would have been obtained using the optimal value for α.

Fig. 4.

Evaluation of alternative word association models on concrete and abstract words in the triad task. The left panel multimodal model includes visual information; the right multimodal panel includes affective information. The length of the random walk was varied by setting α, and the maximal and minimal values of r were overall similar regardless of α.

4. Study 2: Pairwise similarity experiments

Study 1 evaluated the performance of distributional linguistic and word association models when participants perform relative similarity judgments between basic‐level concrete or abstract words. To see if these findings generalize to a broader set of concepts and a different paradigm, we evaluated the same models on multiple datasets containing pairwise semantic similarity ratings, including some that were explicitly collected to compare language‐based and (visual) multimodal models.

Unlike the concrete triad task in Study 1, most of the existing datasets include a wide range of concrete semantic relations rather than just taxonomic basic‐level ones. According to Rosch et al. (1976), “basic‐level categories are shown to be the most inclusive categories for which a concrete image of the category as a whole can be formed and to be the first categorizations made during the perception of the environment” (p. 382). With this in mind, investigating the similarity of items from different categories (e.g., butter—croissant; passion—justice) might be a relatively insensitive way of gauging the effect of additional visual or affective information.

Fortunately, the large size of previously published datasets allows us to impose restrictions and compare items where both words belong to the same basic‐level category, as well as to evaluate only abstract concepts. By comparing concepts of different types, we will be able to investigate whether the results from Study 1 only apply to concrete concepts on the basic level or whether they generalize further. In addition, while most of the new datasets contain primarily concrete nouns, some of them include a sufficient number of abstract words as well. Given the finding in Study 1 that affective information is important to the representation of those concepts, it is essential to determine whether this finding replicates and generalizes to different tasks.

4.1. Datasets

We consider five different datasets of pairwise similarity ratings. 8 Three of these datasets, the MEN data (Bruni, Uijlings, Baroni, & Sebe, 2012), the MTURK‐771 data (Halawi, Dror, Gabrilovich, & Koren, 2012), and the SimLex‐999 data (Hill, Reichart, & Korhonen, 2016), are commonly used as a general benchmark for semantic and distributional models. Two more recent datasets were additionally included because they allow us to more directly address the role of visual and affective information. The first one was the Silberer2014 dataset (Silberer & Lapata, 2014), which was collected with the specific purpose of evaluating visual and semantic similarity in multimodal models. The second dataset was SimVerb‐3500 (Gerz, Vulić, Hill, Reichart, & Korhonen, 2016), which contains a substantial number of abstract verbs. It thus allows us to extend our findings beyond concrete nouns to verbs and investigate whether the important role of affective information in abstract concepts found in Study 1 replicates here. Each of the datasets is slightly different in terms of procedures, stimuli, and semantic relations of the word pairs being judged. The next section briefly explains these differences and reports on their internal reliability, which sets a bound on the maximal correlation of the models we want to evaluate. 9

4.1.1. MEN

The MEN dataset (Bruni, Uijlings, et al., 2012) consists of 3,000 word pairs constructed specifically for testing multimodal models, and thus most words were concrete. The words were selected randomly from a subset of words occurring at least 700 times in the ukWaC and WaCkypedia corpus. Next, semantic vectors derived from these corpora models were used to derive cosine values from the first 1,000 most similar items, 1,000 pairs were sampled from the 1,001–3,000 most similar items, and the last 1,000 items from the remaining items. As a result, the MEN consists of concrete words that cover a wide range of semantic relations. The estimated reliability that serves as an upper bound was ρ = 0.84 (Bruni, Uijlings, et al., 2012).

4.1.2. MTURK‐771

The MTURK‐771 dataset (Halawi et al., 2012) consists of 771 word pairs and was constructed to include various types of relatedness. It consists of frequent nouns taken from WordNet that are synonyms, have a meronymy relation (e.g., leg—table), or a holonymy relation (e.g., table—furniture). The authors converted WordNet to an undirected graph and only included words with graph distances between 1 and 4. The variability of distance and type of relation suggests that this dataset is quite varied. The reliability, calculated as the correlation between randomly split subsets, was 0.90.

4.1.3. SimLex‐999

The SimLex‐999 dataset (Hill et al., 2016) consists of 999 word pairs and is different from all other datasets in that participants were explicitly instructed to ignore (associative) relatedness and only judge “strict similarity.” It also differs from previous approaches by using a more principled selection of items consisting of adjective, verb, and noun concept pairs covering the entire concreteness spectrum. A total of 900 word pairs were selected from all associated pairs in the USF association norms (Nelson et al., 2004) and supplemented with 99 unassociated pairs. None of the pairs consisted of mixed part‐of‐speech. In this task, associated non‐similar pairs in this list would receive low ratings, whereas highly similar (but potentially weakly associated) items would be rated highly. The reported average pairwise agreement was higher for abstract than concrete concepts (ρ = 0.70 vs. ρ = 0.61). This is relevant for current purposes as a separate analysis for abstract concepts is reported below. The overall inter‐rater reliability calculated over split‐half sets was 0.78 (Gerz et al., 2016).

4.1.4. Silberer

The Silberer dataset (Silberer & Lapata, 2014) consists of all possible pairings of the nouns present in the McRae et al. (2005) concept feature norms. For each of the words, 30 randomly selected pairs were chosen to cover the full variation of semantic similarity. The resulting set consisted of 7,569 word pairs. In contrast to previous studies, the participants performed two rating tasks, consisting of both visual and semantic similarity judgments. The inter‐rater reliability, calculated as the average pairwise ρ between the raters, was 0.76 for the semantic judgments and 0.63 for the visual judgments.

4.1.5. SimVerb‐3500

The SimVerb‐3500 dataset (Gerz et al., 2016) consists of 3,500 word pairs and was constructed to remedy the bias in the field toward studying nouns, and thus consists of an extensive set of verb ratings. Like the SimLex‐999 dataset, it was designed to be representative in terms of concreteness and constrained the judgments explicitly by asking participants to judge similarity rather than relatedness. The items were selected from the University of South Florida association norms and the VerbNet verb lexicon (Kipper, Snyder, & Palmer, 2004), which was used to sample a large variety of classes represented in VerbNet. Inter‐rater reliability obtained by correlating individuals with the mean ratings was high (ρ = 0.86). Note that since the visual feature model trained on ImageNet only contains nouns, SimVerb‐3500 cannot be used to study the impact of visual information.

4.2. Evaluation of multimodal visual models

The similarity judgments are predicted by the word2vec distributional linguistic model and word association model, each respectively combined with the visual information to create the multimodal model. As in Study 1, the relative contribution of either the distributional linguistic word associations representation versus visual information will be determined by the best fit of the mixing parameter β.

4.2.1. Datasets involving diverse semantic comparisons

We first consider the three datasets corresponding to a mixed list of word pairs covering a variety of taxonomic and relatedness relations. Of these, the MEN and MTURK‐771 datasets are most similar to each other, since they consist of pairs that include both similar and related pairs across various levels of the taxonomic hierarchy. The first set of comparisons involves multimodal models with visual information, and consequently, the analyses are restricted to those pairs that are present in the linguistic, word association, and visual models. The results reveal that adding visual information only improved the word association model for the MEN data but not for MTURK‐771 (Δr respectively .02 CI95 [0.01, 0.02] and .00, CI95 [−0.01, 0.02]). However, visual information significantly improved the distributional linguistic model on both datasets (Δr respectively .03 CI95 [0.02, 0.04] and .04 CI95 [0.01, 0.08]).

The SimLex‐999 dataset is slightly different than all others, in that participants were explicitly instructed to only evaluate strict similarity, ignoring any kind of associative relatedness between the two items. The unimodal word association model performed far better than the distributional linguistic model on this dataset (r(298) = .72 vs. .43, Δr = .29, CI95 [0.22, 0.48]). Adding visual information did not improve the performance of the association model but resulted in considerable improvement in the distributional linguistic model (r(298) = .14, CI95 [0.07, 0.21]). A potential explanation for the difference between datasets is that SimLex‐999 focused on strict similarity, whereas MEN and MTURK‐771 covered a broader range of semantic relations, including thematic ones, for which visual similarity is of limited use. Note the relative small number of cases (n = 300) in SimLex‐999. Table 2 reflects the fact that abstract word pairs are not encoded in the visual model, whereas most items are encoded in the affective model in Table 4.

Table 2.

Pearson correlation and confidence intervals for correlation differences Δr between unimodal and multimodal visual models. The top part of the table shows the results for the distributional linguistic model, whereas the bottom part shows the results for the word association model

| Dataset |

|

va = Distributional linguistic, vb = Visual | |||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|

| Unimodal | Multimodal | ||||||||||

|

|

CI95 |

|

CI95 | β |

|

CI95 | Δr | CI95 | |||

| MEN | 942 | .79 | [0.77, 0.82] | .66 | [0.62, .70] | 0.38 | .82 | [0.80, 0.84] | .03 | [0.02, 0.04] | |

| MTURK‐771 | 260 | .67 | [0.59, 0.73] | .49 | [0.39, 0.58] | 0.38 | .71 | [0.64, 0.76] | .04 | [0.01, 0.08] | |

| SimLex‐999 | 300 | .43 | [0.33, 0.52] | .54 | [0.45, 0.61] | 0.55 | .56 | [0.48, 0.64] | .14 | [0.07, 0.21] | |

| Silberer (Sem.) | 5,799 | .73 | [0.71, 0.74] | .78 | [0.77, 0.79] | 0.53 | .82 | [0.82, 0.83] | .10 | [0.09, 0.10] | |

| Silberer (Vis.) | 5,777 | .59 | [0.57, 0.61] | .74 | [0.73, 0.75] | 0.65 | .75 | [0.74, 0.76] | .16 | [0.15, 0.17] | |

| Average | .55 | .55 | .64 | .08 | |||||||

| Dataset |

|

va = Word association, vb = Visual | |||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|

| Unimodal | Multimodal | ||||||||||

|

|

CI95 |

|

CI95 | β |

|

CI95 | Δr | CI95 | |||

| MEN | 942 | .79 | [0.76, 0.81] | .66 | [0.62, 0.70] | 0.45 | .81 | [0.78, 0.83] | .02 | [0.01, 0.02] | |

| MTURK‐771 | 260 | .74 | [0.68, 0.79] | .49 | [0.39, 0.58] | 0.33 | .75 | [0.69, 0.79] | .00 | [−0.01, 0.02] | |

| SimLex‐999 | 300 | .72 | [0.66, 0.77] | .54 | [0.45, 0.61] | 0.43 | .73 | [0.68, 0.79] | .01 | [−0.01, 0.03] | |

| Silberer (Sem.) | 5,799 | .84 | [0.83, 0.85] | .78 | [0.77, 0.79] | 0.58 | .87 | [0.86, 0.88] | .03 | [0.03, 0.04] | |

| Silberer (Vis.) | 5,777 | .73 | [0.72, 0.74] | .74 | [0.73, 0.75] | 0.65 | .79 | [0.78, 0.80] | .06 | [0.05, 0.07] | |

| Average | .71 | .55 | .73 | .02 | |||||||

Table 4.

Pearson correlation and confidence intervals for correlation differences Δr between unimodal and multimodal affective models. The top part of the table shows the results for the distributional linguistic model, whereas the bottom part shows the results for the word association model

| Dataset |

|

va = Distributional linguistic, vb = Affect | |||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|

| Unimodal | Multimodal | ||||||||||

|

|

CI95 |

|

CI95 | β |

|

CI95 | Δr | CI95 | |||

| MEN | 1,981 | .80 | [0.78, 0.81] | .31 | [0.27, 0.35] | 0.45 | .80 | [0.78, 0.81] | .00 | [0.00, 0.00] | |

| MTURK‐771 | 653 | .70 | [0.66, 0.74] | .26 | [0.19, 0.33] | 0.53 | .71 | [0.67, 0.75] | .01 | [0.00, 0.02] | |

| SimLex‐999 | 913 | .45 | [0.39, 0.50] | .33 | [0.27, 0.39] | 0.65 | .63 | [0.47, 0.56] | .07 | [0.04, 0.10] | |

| SimVerb‐3500 | 2,926 | .33 | [0.30, 0.36] | .33 | [0.30, 0.36] | 0.68 | .44 | [0.41, 0.47] | .11 | [0.08, 0.13] | |

| Silberer (Sem.) | 5,428 | .74 | [0.73, 0.75] | .21 | [0.19, 0.24] | 0.33 | .74 | [0.73, 0.76] | .00 | [0.00, 0.00] | |

| Silberer (Vis.) | 5,405 | .60 | [0.58, 0.61] | .16 | [0.14, 0.16] | 0.30 | .60 | [0.58, 0.61] | .00 | [0.00, 0.00] | |

| Average | .60 | .27 | .63 | .03 | |||||||

| Dataset |

|

va = Word association, vb = Affect | |||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|

| Unimodal | Multimodal | ||||||||||

|

|

CI95 |

|

CI95 | β |

|

CI95 | Δr | CI95 | |||

| MEN | 1,981 | .80 | [0.78, 0.82] | .31 | [0.27, 0.35] | 0.45 | .81 | [0.79, 0.82] | .01 | [0.00, 0.01] | |

| MTURK‐771 | 653 | .77 | [0.73, 0.80] | .26 | [0.19, 0.33] | 0.45 | .77 | [0.74, 0.80] | .00 | [0.00, 0.01] | |

| SimLex‐999 | 913 | .68 | [0.65, 0.71] | .33 | [0.27, 0.39] | 0.50 | .69 | [0.65, 0.72] | .01 | [0.00, 0.01] | |

| SimVerb‐3500 | 2,926 | .64 | [0.62, 0.66] | .33 | [0.30, 0.30] | 0.50 | .65 | [0.63, 0.67] | .01 | [0.01, 0.02] | |

| Silberer (Sem.) | 5,428 | .84 | [0.84, 0.85] | .21 | [0.19, 0.24] | 0.23 | .84 | [0.84, 0.85] | .00 | [0.00, 0.00] | |

| Silberer (Vis.) | 5,405 | .73 | [0.72, 0.74] | .16 | [0.14, 0.19] | 0.00 | .73 | [0.72, 0.74] | .00 | [0.00, 0.00] | |

| Average | .71 | .27 | .73 | .02 | |||||||

Of the remaining datasets, the Silberer one is most directly relevant to evaluate the role of visual information. The words in this study consisted of concrete nouns taken from the McRae feature generation norms (McRae et al., 2005). However, since words across different categories were compared, the ratings occur between entities specified at different taxonomic levels. This might provide less of a challenge for distribution‐based language models to predict due to large perceptual differences. In contrast to the other studies, two types of ratings were collected—semantic and visual. Semantic ratings are similar to other studies and involve participants judging similarity. Visual ratings, however, only involve judging the similarity of the appearance of the concepts. One might thus expect that models based on visual features will predict visual similarities better than semantic ratings.

The results indicate that for the word association model, adding visual information resulted in significant improvement; however, the improvements were small (relative to the distributional model) even for the visual ratings (Δr = .03, CI95 [0.03, 0.04] for the semantic judgments and Δr = .06, CI95 [0.05, 0.07] for the visual judgments). For the distributional linguistic model, adding visual information resulted in an improved prediction for both judgments, especially the visual ones (Δr = .10, CI95 [0.09, 0.10], for the semantic judgments and Δr = .16, CI95 [0.15, 0.17] for the visual judgments). Consistent with both of these sets of findings, the word association model better predicts people's similarity judgments than the distributional linguistic judgments (the difference was .11 CI95 [0.10, 0.12] for the semantic and .14, CI95 [0.13, 0.16] for the visual judgments).

To summarize, the contribution of visual information in the multimodal word association model was relatively small compared to the distributional linguistic model. The average gain across datasets was .02 for the former compared to .08 for the latter (see Table 2).

4.2.2. Comparisons at the basic level

The complete Silberer dataset that we evaluated above contains both basic‐level within‐category comparisons like dove—pigeon as well as broader comparisons across categories like dove—butterfly. Since Study 1 involved only basic‐level comparisons within superordinates, in order to provide a better comparison between it and the Silberer data, we did an additional analysis only on the basic‐level terms in the Silberer data. To achieve this, we annotated the Silberer2014 dataset with superordinate labels for common categories taken from Battig and Montague (1969) and De Deyne et al. (2008) such as bird, mammal, musical instrument, vehicle, furniture, and so on. We then restricted the analysis to only the basic‐level terms within those superordinate categories. Words for which no clear common superordinate label (see above) could be assigned were not included. This reduced the number of pairwise comparisons from 5,799 to 1,086, which is still sufficiently large for the current purposes.

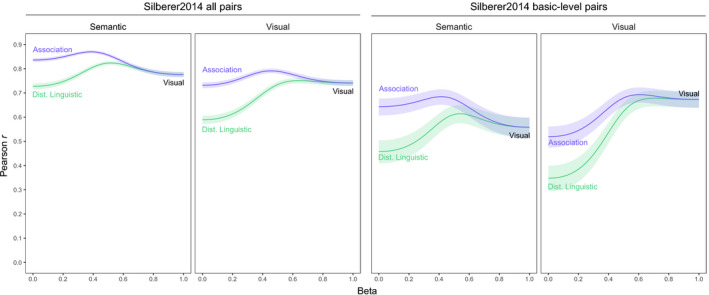

As the two right panels of Fig. 5 demonstrate, the overall correlations were lower for basic‐level terms than those for the complete set of items. For the word association model, the difference was respectively .19, CI95 [0.16, 0.23] and .21, CI95 [0.17, 0.26]. For the distributional linguistic model, the results were respectively a difference of .27, CI95 [0.22, 0.34] and .24, CI95 [0.19, 0.30]. These results support the idea that compared to mixed lists of items, comparisons at the basic level present a considerable challenge. Moreover, adding visual information results in a larger improvement (relative to the unimodal model) for basic‐level items. For the semantic comparisons using distributional linguistic representations, the multimodal difference was .10 for the full datasets versus .16 for the basic‐level data (see Tables 2 and 3). A bootstrapped test confirmed that the difference between the full and basic‐level correlation was significant, CI95 [−0.03, −0.09].

Fig. 5.

Results of multimodal models (created by combining either distributional linguistic or word association models with visual features) based on pairwise similarity ratings from the Silberer dataset. The plots show correlations between human judgments and models together with 95% confidence intervals (shaded). The two left panels show the semantic and visual judgments for all items, whereas the two right panels show performance on the subset of basic‐level items in which the word pairs belong to the same superordinate category.

Table 3.

Replication of Table 2 restricted to basic‐level word pairs in the Silberer dataset

| Dataset |

|

va = Distributional linguistic, vb = Visual | |||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|

| Unimodal | Multimodal | ||||||||||

|

|

CI95 |

|

CI95 | β |

|

CI95 | Δr | CI95 | |||

| Basic‐Sem. | 1,086 | .46 | [0.41, 0.50] | .56 | [0.52, 0.60] | 0.55 | .61 | [0.58, 0.65] | .16 | [0.12, 0.19] | |

| Basic‐Vis. | 1,086 | .35 | [0.29, 0.40] | .67 | [0.64, 0.70] | 0.70 | .68 | [0.64, 0.71] | .33 | [0.28, 0.38] | |

| Dataset |

|

va = Word association, va = Visual | |||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|

| Unimodal | Multimodal | ||||||||||

|

|

CI95 |

|

CI95 | β |

|

CI95 | Δr | CI95 | |||

| Basic‐Sem. | 1,086 | .64 | [0.61, 0.68] | .56 | [0.52, 0.60] | 0.43 | .68 | [0.65, 0.71] | .04 | [0.03, 0.06] | |

| Basic‐Vis. | 1,086 | .52 | [0.47, 0.56] | .67 | [0.64, 0.70] | 0.55 | .69 | [0.66, 0.72] | .17 | [0.14, 0.21] | |

Finally, in line with Study 1, we found that the visual information improved performance more for the distributional linguistic model than the word association model: The difference between Δr = .16 and Δr = .04 was significant, CI95 [0.10, 0.16]. For completeness, Table 3 and Fig. 5 also show the results for the visual judgments. The main finding here is that when comparisons are restricted to visual judgments at the basic level, the unimodal visual model (when β = 1.0) is clearly superior to both the word association ( − = .15, CI95 [0.11, 0.20]) and distributional linguistic ( − = .33, CI95 [0.27, 0.37]) model, in line with earlier argument that basic‐level comparisons rely strongly on visual information.

4.3. Evaluation of multimodal affective models

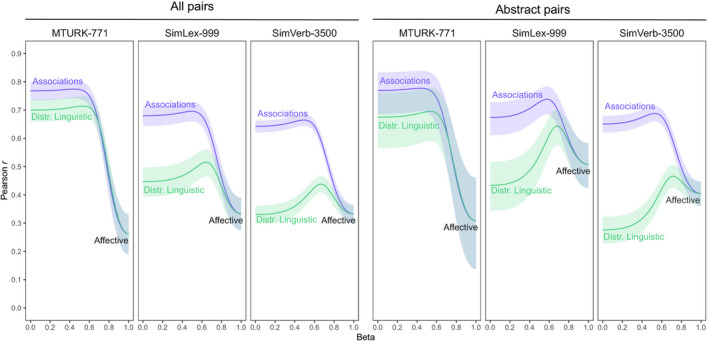

To investigate the effect of affective information, we supplemented the datasets in the previous section with SimVerb‐3500 (Gerz et al., 2016), which contained pairwise similarity ratings for verbs as described before. Most words in SimVerb‐3500 (2,926 out of 3,500) were included in the affective norms of Warriner et al. (2013). The results are shown in Table 4. In contrast to the findings for visual information and the abstract triads in Study 1, most of our datasets show no improvement when affective information is added. The only exceptions are the SimLex‐999 and SimVerb‐3500 datasets, where adding affective information improved the distributional linguistic model, Δr = .07, CI95 [0.04, 0.10] and Δr = .11, CI95 [0.008, 0.13], respectively.

Again we find that the word association model provides better estimates of pairwise similarity than the distributional linguistic model, except for the MEN dataset, where they are on par (CI95 [−0.01, 0.02]). The unimodal word association correlation for the additional SimVerb‐3500 dataset, r(2924) = .64, was similar in magnitude to the SimLex‐999 task (r(911) = .68) and was somewhat lower than the other datasets. For the distributional linguistic model, the SimVerb‐3500 correlations was also moderate, r(2924) = .33, which might reflect the difficulty of this model in handling strict similarity and accurately representing verb meaning.

To conclude, on average the multimodal affective gain was limited in both the distributional linguistic and word association model (respectively .03 and .02, see Table 4). These results are different from Study 1, but there are at least two reasons why the affective information may have provided less benefit in this study. First, not all datasets here involved comparisons between basic‐level items within a superordinate category. Second, none of the datasets were constructed to investigate abstract words, and it is for these that we might expect affective information to be most important.

4.3.1. The role of affect in abstract words

Unlike the abstract condition in Study 1, some of the pairwise datasets contain both concrete and abstract words. To test whether the presence of concrete words masked the effect of the multimodal affective model, we screened how abstract the stimuli were in each dataset using the concreteness norms from Brysbaert et al. (2014). As in Study 1, we only included similarity judgments for which the average concreteness rating of both words in the pair was smaller than 3.5 on a 5‐point scale. MEN only had 41 pairs (2.1% of words) and no pairs matched in the Silberer dataset. The MTURK‐777, SimLex‐999, and SimVerb‐3500 datasets had a reasonable number of abstract words (respectively 121 or 18.6%, 42.8% or 391 words, and 67.4% or 1,973 words) and for these datasets we tested whether adding affective information results in a larger improvement (relative to the unimodal model) for abstract pairs compared to all pairs. The results are shown in Table 5 and Fig. 6.

Table 5.

Replication of the results reported in Table 4 restricted to abstract word pairs in the MTURK‐771, SimLex‐999, and SimVerb‐3500 datasets

| Dataset |

|

va = Distributional Linguistic, vb = Affect | |||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|

| Unimodal | Multimodal | ||||||||||

|

|

CI95 |

|

CI95 | β |

|

CI95 | Δr | CI95 | |||