Abstract

Generating numbers has become an almost inevitable task associated with studies of the morphology of the nervous system. Numbers serve a desire for clarity and objectivity in the presentation of results and are a prerequisite for the statistical evaluation of experimental outcomes. Clarity, objectivity, and statistics make demands on the quality of the numbers that are not met by many methods. This review provides a refresher of problems associated with generating numbers that describe the nervous system in terms of the volumes, surfaces, lengths, and numbers of its components. An important aim is to provide comprehensible descriptions of the methods that address these problems. Collectively known as design‐based stereology, these methods share two features critical to their application. First, they are firmly based in mathematics and its proofs. Second and critically underemphasized, an understanding of their mathematical background is not necessary for their informed and productive application. Understanding and applying estimators of volume, surface, length or number does not require more of an organizational mastermind than an immunohistochemical protocol. And when it comes to calculations, square roots are the gravest challenges to overcome. Sampling strategies that are combined with stereological probes are efficient and allow a rational assessment of whether the numbers that have been generated are “good enough.” Much may be unfamiliar, but very little is difficult. These methods can no longer be scapegoats for discrepant results but faithfully produce numbers on the material that is assessed. They also faithfully reflect problems that are associated with the histological material and the anatomically informed decisions needed to generate numbers that are not only valid in theory. It is within reach to generate practically useful numbers that must integrate with qualitative knowledge to understand the function of neural systems.

Keywords: quantitative morphology, design‐based stereology, number, length, surface, volume

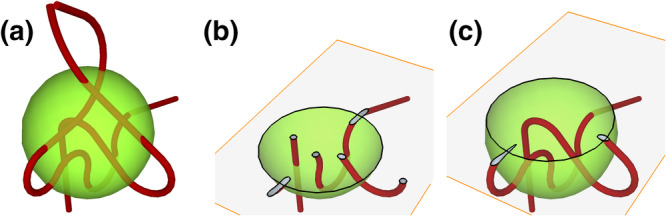

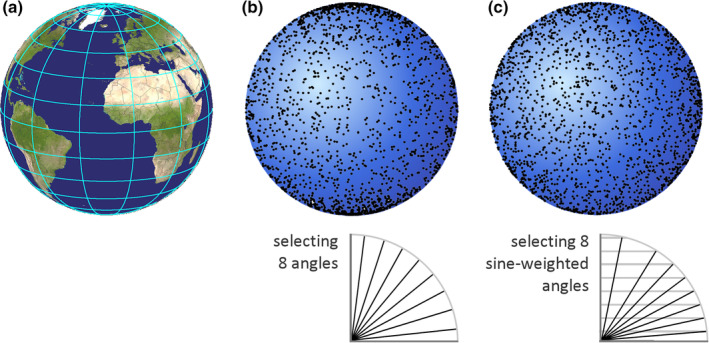

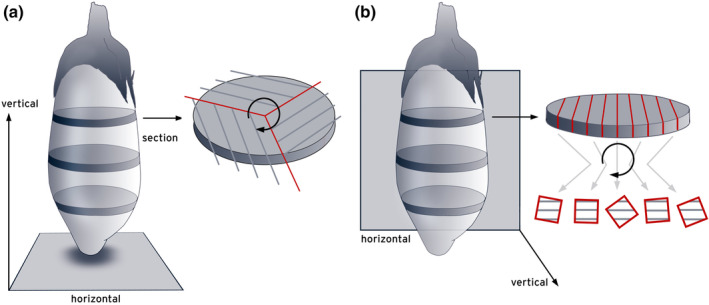

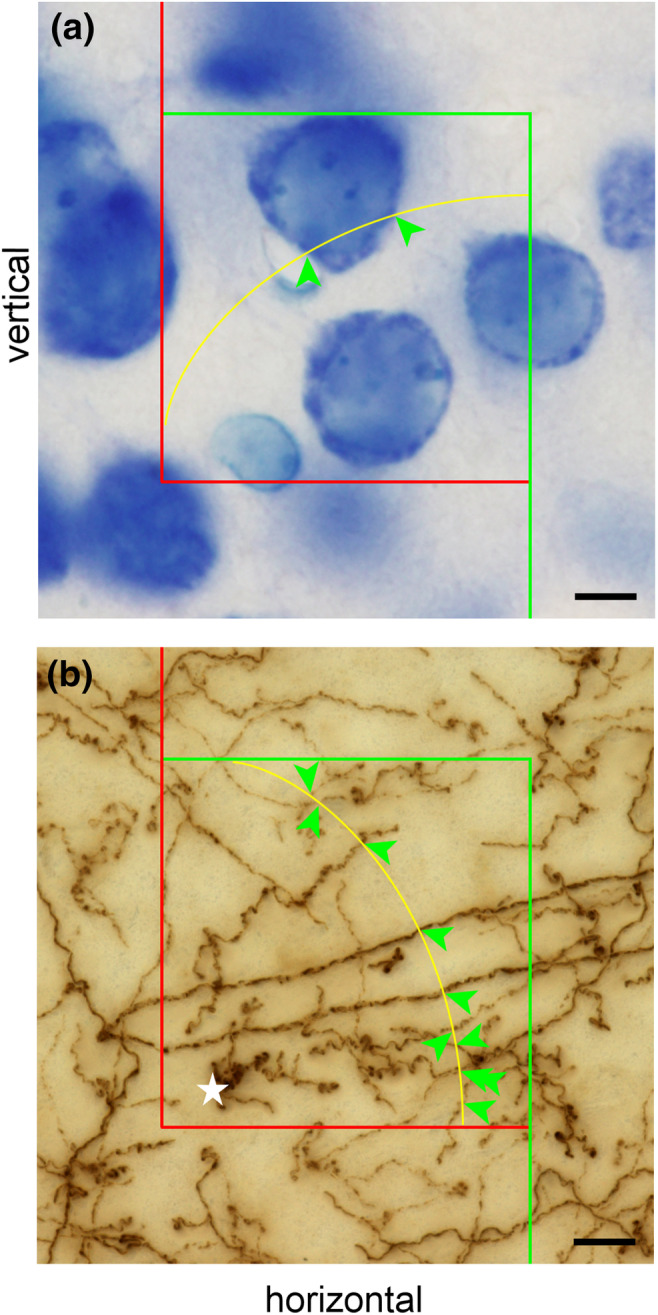

Design‐based stereological methods allow the estimation of basic morphological parameters in representative samples of the sectioned central nervous system. Point probes can be used to estimate volume (illustrated for the Area Fraction Fractionator), line probes can be used to estimate surface area (illustrated for a vertical sections design), area probes can be used to estimate length (illustrated for the Spaceball probe), and volume probes must be used to estimate number (illustrated for the Disector). This review provides an introduction to how these methods solve problems associated with other quantitative approaches, how they are applied to histological material, how a sampling scheme can be designed and evaluated, and which practical problems need to be solved to generate the numbers that we will need to come to an understanding of central nervous system function.

1. INTRODUCTION

Quantitative morphology in the neurosciences is, in the context of this review, defined as studies that provide information about the basic structural organization of the nervous system in terms of—to mention but a few parameters—volumes of brain regions, the numbers of cells or synapses within them, the length of capillaries supplying them, or of membrane areas that are available for substance exchange or synaptic contacts. Like many other specialties within the neurosciences, quantitative morphology is the principal focus of comparatively few research groups. Unlike other specialties and as a consequence of a general striving toward objectivity in the presentation and evaluation of data, quantitative morphology has also been imposed on those whose primary interests are elsewhere. A specialist in a neurodegenerative disease model showing unequivocal qualitative evidence of cell loss will almost inevitably be asked for the provision of data that provide an objective measure—implicitly meaning “numbers”—of how many cells are lost. The next demand will be statistical testing—requiring numbers—that sets diseased apart from healthy. Trying to comply with the demand for numbers, one may check who has previously generated the numbers needed, how they were generated and where the outcome was published. A judgment of quality concerning the “who” and “where” and a judgment of effort concerning the “how” is likely to follow. Unfortunately, quantitative morphology only reached methodological maturity after the onset of the quest for numbers. The bulk of the quantitative morphological methods that together constitute what was called the new or unbiased stereology and what today is commonly referred to as design‐based stereology was introduced in the 1980s and 1990s (for early reviews, see Gundersen, 1986; Gundersen, Bagger, et al., 1988; Gundersen, Bendtsen, et al., 1988; Royet, 1991). 
Prior to that, studies of respected researchers published in respected journals had hardly any alternative but to resort to methods that, for a large part, were fraught with possible sources of error. A following of studies that use these precedents and that themselves function as precedents must be expected in the course of a methodological paradigm shift. However, one would hope for their numbers to dwindle quickly and for the transition to be brief and uncontroversial.

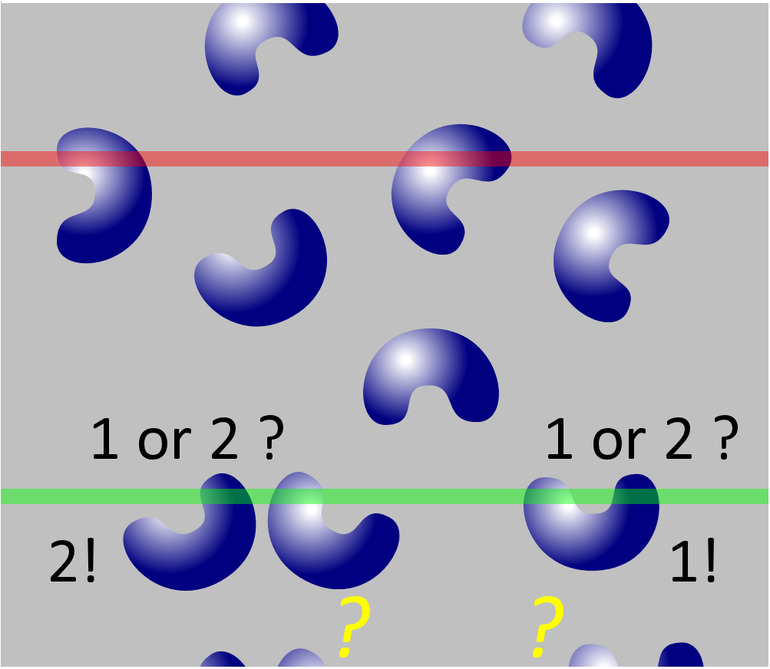

Subsequent to the introduction of design‐based stereological methods, some journals, for example, The Journal of Comparative Neurology (Coggeshall & Lekan, 1996; Saper, 1996), Neurobiology of Aging (West & Coleman, 1996), or The Journal of Chemical Neuroanatomy (Kordower, 2000) strongly promoted the use of these methods, precipitating a vigorous discussion—in part about the freedom of choice of methods (Guillery & Herrup, 1997). This freedom should, of course, not be challenged. Data collected by any quantitative morphological method in a replicable manner are true by definition of the method. Problems first arise with the interpretation of the data. Do the data provide sound evidence, for example, for a loss of cells following an experimental intervention? Or should they elicit the death knell of a manuscript under review because “the data provided do not support the conclusions being drawn”? In dealing with numbers, the freedom of interpretation of the data is far more restricted than the freedom of choice of methods. If, for example, the method of choice only allows the presentation of a density, that is, a ratio of something (numerator; e.g., cell number or capillary length) per something else (denominator; e.g., tissue volume or cells), it is simply not possible from the ratio alone to make conclusive statements about changes in the total of the numerator or denominator. Figure 1 provides an example for which the idea that differences in densities reflect differences in number would be almost intuitively rejected. Instead, one has to argue why a density, under the particular circumstances of the experiment, may provide good evidence for such a change. Although this may be possible, it would appear more fruitful to either save the time and energy required by the argument or to expend them on discussing what the biological significance of the change would be (Cruz‐Orive, 1994).
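The limitation of a density can be made concrete with a little arithmetic. The sketch below uses entirely hypothetical densities and reference volumes (they are not taken from any study cited here) to show that a density can increase by half while the total number, the product of density and reference volume, does not change at all.

```python
# Hypothetical values for illustration only; not data from any cited study.
# density: cells per mm^3; volume: reference volume of the region in mm^3.
control = {"density": 100_000, "volume": 3}
experimental = {"density": 150_000, "volume": 2}


def total_number(group):
    """Total number = density (numerator per unit volume) x reference volume."""
    return group["density"] * group["volume"]


density_ratio = experimental["density"] / control["density"]
number_ratio = total_number(experimental) / total_number(control)

print(f"density ratio: {density_ratio}")  # 1.5
print(f"number ratio:  {number_ratio}")   # 1.0
```

Without the reference volume, the density ratio of 1.5 alone cannot distinguish between more cells and a smaller region.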

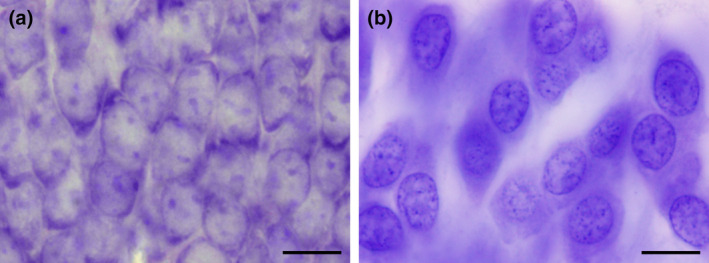

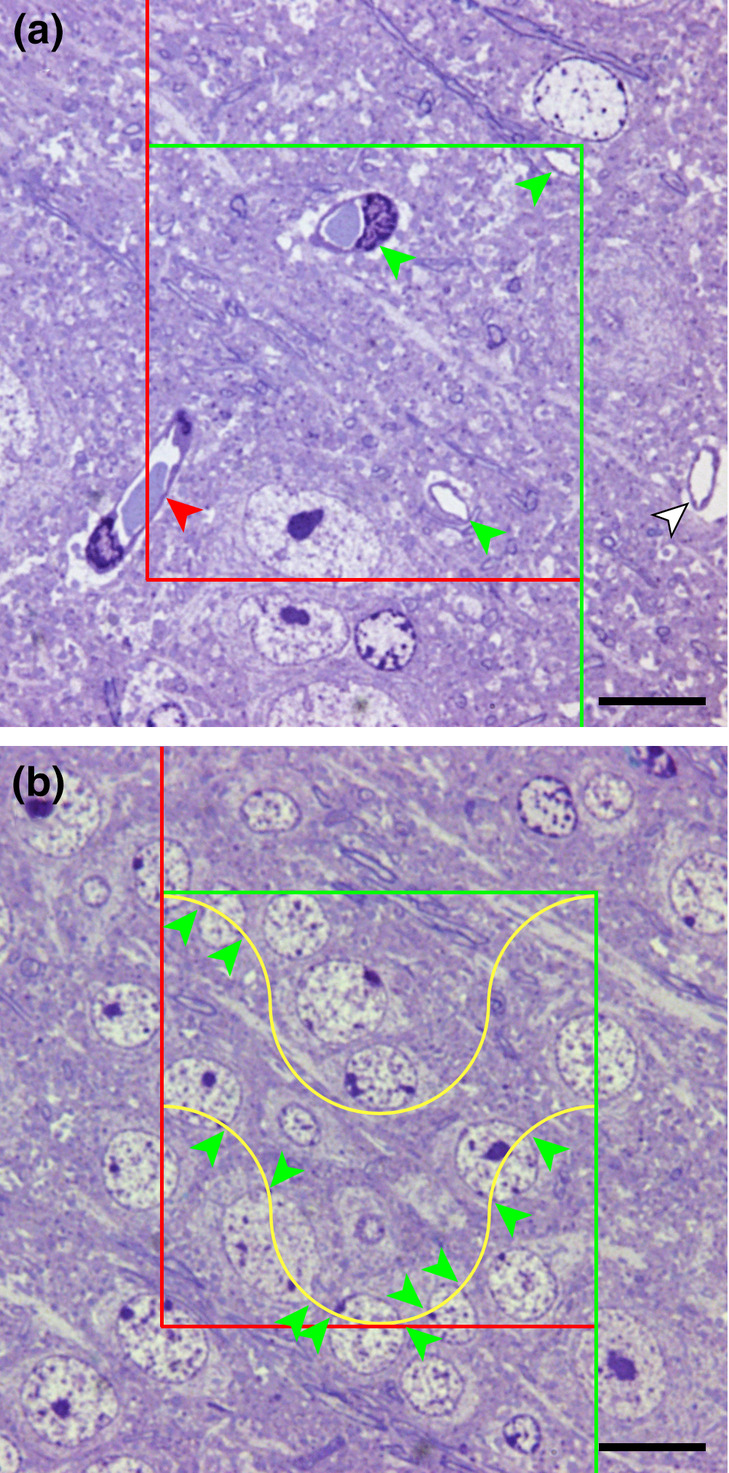

FIGURE 1.

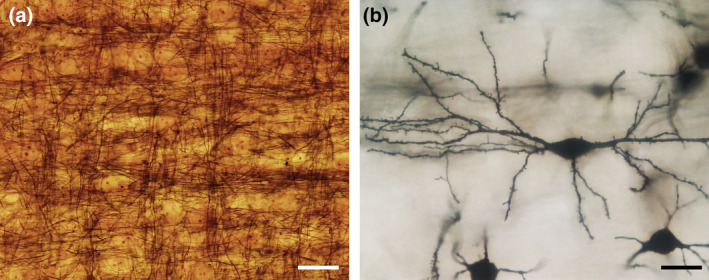

C57 mouse and human hippocampal dentate granule cells. Despite the much higher packing density (a) of ~550,000 granule cells in C57 mice (Ben Abdallah, Slomianka, Vyssotski, & Lipp, 2010), they are by far outnumbered by ~15,000,000 granule cells (West & Gundersen, 1990) in the human hippocampus (b). It is a change in the denominator of density—the total volume of the granule cell layer—that is responsible for the discrepancy between appearance and numbers. Scale bars in a and b: 10 μm [Color figure can be viewed at wileyonlinelibrary.com]

What is puzzling is that, decades after the introduction and vigorous discussion of a new methodology, a shift to more powerful and rather simple methods is, if at all, proceeding at a snail's pace. The reasons are manifold. The very strength of the methods, that is, that a mathematical proof can be and often is included in their original description, renders much of the primary methodological literature next to impossible to read for many biologists. Popularizations of the methods may try to restrict themselves to a basic vocabulary, but often fail to realize that the audience simply may not (want to) speak a language of mathematics or statistics (Fawcett & Higginson, 2019). Also, when quantitative morphological methods were prominent tools in neuroscience, many of their users were keenly aware of their problems. This awareness has faded with time and the vast expansion of the neuroscience toolbox.

Yet another reason is the effort required to obtain the measures. Design‐based stereology prided itself on having cut the workload substantially through rational study design (e.g., Gundersen & Østerby, 1981). However, the methods are still, at best, semiautomatic. They require user intervention and hours of work to return a measure. While other methods were equally or even more time consuming in the past, increased computing power has allowed the development of image analysis methods that return data within minutes. Without an understanding of why the extra effort provides more reliable data than those quickly generated, the extra effort seems hard to justify—in particular if the additional effort does not seem necessary to publish well. It is a vicious circle that is difficult to break.

The intention of this review is to refresh memories on the problems inherent to quantitative morphology of the sectioned CNS, to provide comprehensible explanations of how design‐based stereological methods address these problems, and to provide sufficient detail on the application of the methods to allow the design, execution, and evaluation of the outcome of a quantitative morphological study. For more formal introductions, the texts of Russ and Dehoff (2000), Howard and Reed (2010) or West (2012a; serialized in Cold Spring Harbor Protocols) are recommended. Brief introductions have been published by, for example, Schmitz and Hof (2005) or Boyce, Dorph‐Petersen, Lyck, and Gundersen (2010).

Most brain regions contain so many objects—neurons, glia, synapses, and so forth—that workload would make it prohibitive to count them all. The few cases in which “everything” was counted, usually based on serial reconstructions, were primarily concerned with the validation of other approaches that reduce workload (e.g., Baquet, Williams, Brody, & Smeyne, 2009; Delaloye, Kraftsik, Kuntzer, & Barakat‐Walter, 2009; Pover & Coggeshall, 1991). Alternative approaches will always consist of a two‐step process. The first step reduces the workload by sampling only a small fraction of a region of interest. The second step consists of probing the sample in a way that makes the final estimate free from probing‐related artifacts (see Section 2). Although many of the design‐based stereological methods have been presented as bundled sampling‐probing combinations (e.g., West & Gundersen, 1990; West, Slomianka, & Gundersen, 1991), the two steps are not inextricably linked and can individually be subject to modifications and improvements. Therefore, they will be treated separately in Section 3, which is concerned with sampling, and Sections 4–7, which are concerned with probes. Once an estimate has been generated, the inevitable question is whether it is good enough. Section 8 will help in approaching the answer to this question. Section 9 tries to point out and address some of the problems that arise when theory hits the less than mathematically perfect life in the laboratory.

An argument that occasionally is being put forward against the use of design‐based methods is the cost associated with the software and hardware tools that may be needed to apply them. First, this cost may only be small compared to that of other equipment commonly used. It may not even amount to the operational expenses associated with a single project. Most importantly, the purchase of specialized software and hardware is a purchase of convenience and speed but not one of ability. These tools were not around when many of the methods were developed—sometimes in the form of first applications. Almost all methods that will be presented here can, in principle, be used without special resources. Some simple ways that have been devised to facilitate the work will be presented in conjunction with the sections on sampling and the introduction of specific probes.

2. A PROBLEM

Although the paradigm shift that one could have expected is barely proceeding, the introduction and discussion of new methods have sensitized some experimenters to problems associated with generating quantitative morphological data. One of these problems relates to answering the question “How many are there?” that is, to estimating number. Some responses to the problem, which unfortunately fall short of solving it, and the current best solution will be described here.

2.1. Easy ways to fail

When tissue is cut into sections, some of the objects, for example, cells, contained within the tissue will inevitably be cut too. Fragments of cells that have been cut will be present in at least two sections. In two‐dimensional representations of the sections, for example, images that have been acquired, the fragments are seen as profiles. If cell profiles were counted in all sections, the number of profiles would be higher than the number of cells. Now we have the problem: A profile count represents an overcount of actual cell number. The following, very slightly paraphrased responses to the problem of overcounting have been published in descriptions of methodology: “… to avoid double counting of the same cell, sections used to count were a minimum of 100 μm apart. …” or “… we then took every fourth section, so the distance between sections that were counted was 80 μm. Given that the typical cell diameter is smaller than 30 μm, this ensures that the same cell was not counted twice.” There are further variations on this theme.

The simplicity of this solution is appealing. It was used in Figure 2 to generate an example of three series of sections fulfilling the criterion that the spacing of the sections is larger than the size of the cells (blue objects) contained in the region of interest (dark gray). The example also has the advantage that the number of cells prior to cutting is known. There are 18 (Figure 2a). Every third section was “collected” in each of the series represented in Figure 2b–d: Figure 2b, sections 1, 4, 7, and 10; Figure 2c, sections 2, 5, and 8; and Figure 2d, sections 3, 6, and 9. None of the individual cells can be double counted in any one of the series. Each series represents, on average, one‐third of the entire region of interest. An estimate of total cell number would, according to the simple solution, be the number of cells counted in any one of the series multiplied by three. The average of the estimates of the three series should correspond to the number of cells contained in the structure—18. Does it?

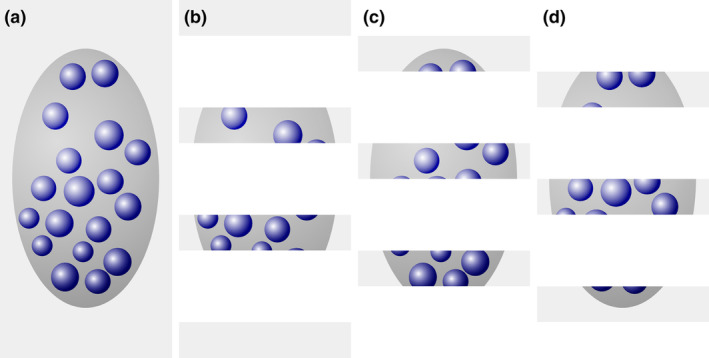

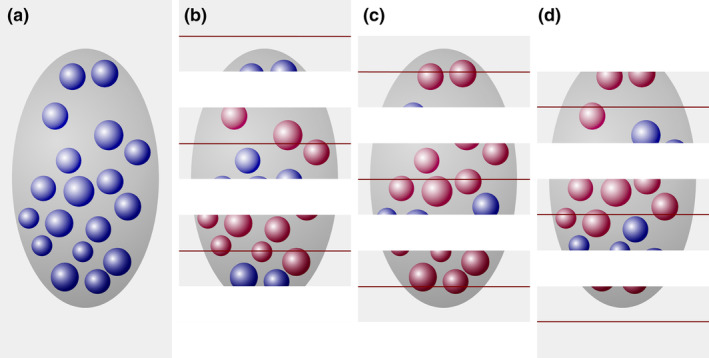

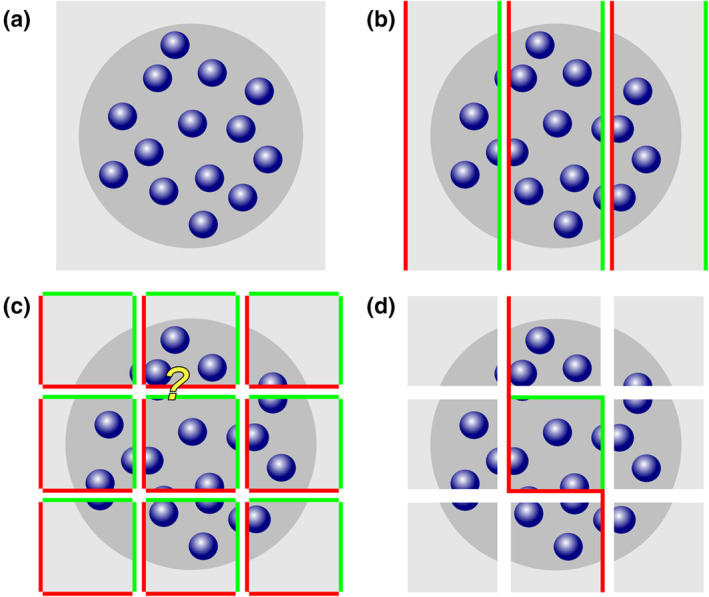

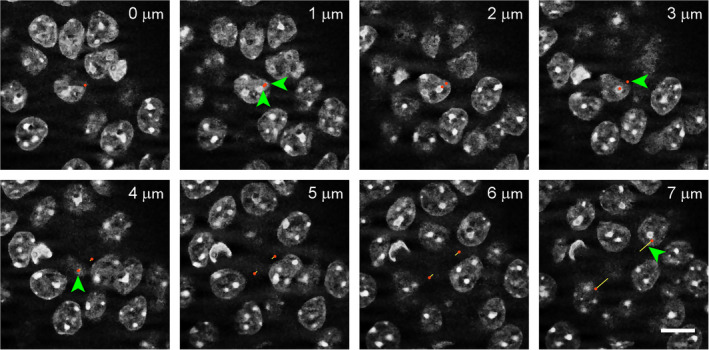

FIGURE 2.

Profile counts cannot be extrapolated to total cell number. (a) A region (dark gray) containing 18 cells (blue objects) is cut into three series of sections (b–d). In each series, the profiles of cells visible in the sections are counted. Eight cell profiles are counted in the two sections of the region contained in series (b). The three sections in series (c) contain 10 cell profiles. The three sections in series (d) contain nine cell profiles. Extrapolating the mean profile count, 9, to total cell number by multiplying by 3 generates an estimate of 27 cells instead of the true value of 18 cells [Color figure can be viewed at wileyonlinelibrary.com]

Unfortunately, it does not; 8, 10, and 9 cell profiles are counted (Figure 2b–d), resulting in estimates of 24, 30, and 27 cells. The average of the three estimates, 27, is 150% of the true number. The possibility that a specific cell is counted twice cannot be the reason for the overestimate. Instead, the problem is caused by assigning a count of “1” to cell fragments that represent less than one entire cell (Billingsley & Ranson, 1918). As long as cells can be fragmented during the sectioning this error will occur, and the size of the error will depend on the likelihood of a cell being cut. The latter depends on the average cell height in relation to the thickness of the sections. If this relation was known, the error could be corrected, which is the basis of Abercrombie's cell counting method (1946; see Section 6.7). Without correction, the true total cell number cannot be obtained using the simple solution. Ironically, this error will not increase even if the sections are spaced close enough together for a specific cell to be present and counted in two sections. If we count in all sections, we see 27 fragments—the same number that we obtained by estimating from every third section. The extrapolation of profile counts to total number will provide us with an estimate of fragment number and not cell number.
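The arithmetic behind this overestimate is short enough to write out. The following minimal sketch only restates the counts reported for Figure 2; the variable names are illustrative and not part of any stereology software.

```python
# Profile counts reported for the three series in Figure 2b-d.
profile_counts = [8, 10, 9]
section_sampling_period = 3   # every third section was collected
true_cell_number = 18         # known by construction of the example

# "Simple solution": multiply each series count by the sampling period.
estimates = [count * section_sampling_period for count in profile_counts]
mean_estimate = sum(estimates) / len(estimates)
relative_to_truth = mean_estimate / true_cell_number

print(estimates)          # [24, 30, 27]
print(mean_estimate)      # 27.0
print(relative_to_truth)  # 1.5, i.e., 150% of the true number
```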

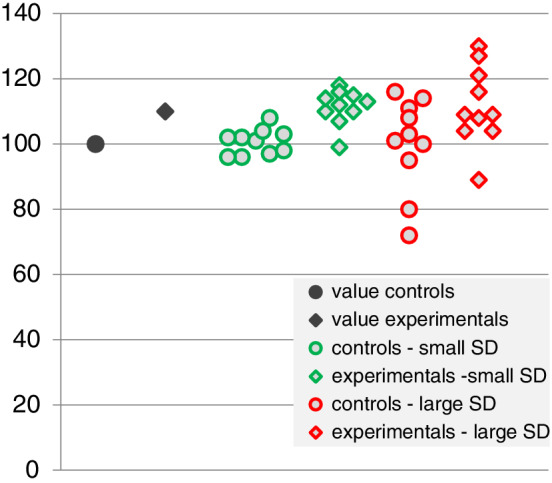

Correct total cell numbers are, however, not always the primary aim of a study. When control groups are compared with experimental groups, group differences may be more important than correct total numbers. This has led some investigators to state that “… because the absolute, unbiased number of neurons was not needed to address the questions posed in this study, a profile‐sampling method was used,” or that “… unbiased stereological methods were not used as the data of interest is relative difference and not absolute value,” or that “… no corrections were made for overcounting because we were interested in relative rather than absolute differences in the number of neurons.” The point appears valid at a first glance, if one could be certain that at least the group differences were correct. Figure 3 reexamines the region illustrated in Figure 2. Figures 2 and 3 now may represent members of a control group (Figure 2) and an experimental group (Figure 3).

FIGURE 3.

Dependence of profile counts on object size. (a) A region (dark gray) containing 18 cells (blue objects) is cut into three series of sections (b–d). In each series, the profiles of cells visible in the sections are counted. In series (b), (c), and (d), 10, 13, and 11 profiles are counted. The average of the 3 possible estimates, 30, 39, and 33, is 34. The increase in the size of the cells relative to those in Figure 2 results in an estimate of 34 instead of 27 cells. The true cell number is 18 [Color figure can be viewed at wileyonlinelibrary.com]

This time, 10, 13, and 11 cell profiles are counted (Figure 3), resulting in estimates of 30, 39, and 33 cells. The average of the three estimates, 34, is ~126% of the control value (27 cells estimated from Figure 2). This increase is observed even though the number of cells in the region of interest did not change. There are, again, 18 cells. What did change is the size of the cells. Because their height increased, the likelihood of producing fragments increased and, consequently, the profile count increased. The observed difference in cell number estimates between control (Figure 2) and experimental (Figure 3) individuals is an artifact generated by the increase in size of the cells.
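The dependence of profile counts on cell height can also be explored with a small simulation. The sketch below is an illustration under stated assumptions, not a reconstruction of Figures 2 and 3: the section thickness and the two cell heights are hypothetical, cells are reduced to their extent along the cutting axis, and their positions relative to the section borders are drawn at random. Under these assumptions, the average number of profiles per cell should approach 1 + height/thickness.

```python
import math
import random


def profiles_per_cell(offset, height, thickness):
    """Number of sections holding a fragment of a cell whose top lies
    `offset` below the upper surface of a section and that extends
    `height` further along the cutting axis."""
    return math.floor((offset + height) / thickness) + 1


def mean_profiles_per_cell(height, thickness, n_cells=100_000, seed=1):
    """Monte Carlo average of profiles per cell for randomly placed cells."""
    rng = random.Random(seed)
    total = sum(
        profiles_per_cell(rng.uniform(0.0, thickness), height, thickness)
        for _ in range(n_cells)
    )
    return total / n_cells


thickness = 10.0  # hypothetical section thickness (arbitrary units)
small = mean_profiles_per_cell(5.0, thickness)  # hypothetical smaller cells
large = mean_profiles_per_cell(9.0, thickness)  # hypothetical larger cells

# Theoretical expectations under these assumptions: 1 + 5/10 and 1 + 9/10.
print(f"profiles per cell, small cells: {small:.3f}")
print(f"profiles per cell, large cells: {large:.3f}")
```

The number of cells is identical in both runs; only their height differs, yet the profile count per cell rises with height.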

That changes in number occur without changes in size is the central but often unspoken or unrealized assumption that must be true for a faulty method to generate similarly sized errors in controls and experimentals. It is not only counterintuitive—cells do not instantaneously pop in and out of existence—but changes in size of neurons as a reaction to stimuli have been known almost since it became possible to stain neurons (Nissl, 1892). A little more recently, neurons in the entorhinal cortex were found to decrease in size by ~30% following the destruction of hippocampal granule cells (Goldschmidt & Steward, 1992). The age‐related dopaminergic cell death in the substantia nigra is accompanied by an increase in the volume of the remaining cells (Rudow et al., 2008). The age‐related loss of phrenic motor neurons is accompanied by a decrease in the size of the remaining cells (Fogarty, Omar, Zhan, Mantilla, & Sieck, 2018). There are age‐related changes in the volumes of the perikaryon and nucleus of human neocortical neurons (Barger, Sheley, & Schumann, 2015; Stark et al., 2007). Also, an increase in the size of both dentate mossy cells (40%) and interneurons (58%) that survive pilocarpine‐induced seizures has been described (Zhang et al., 2009; Zhang, Thamattoor, LeRoy, & Buckmaster, 2015). A survey of age‐related changes in cell sizes based on earlier methods can be found in Flood and Coleman (1988).

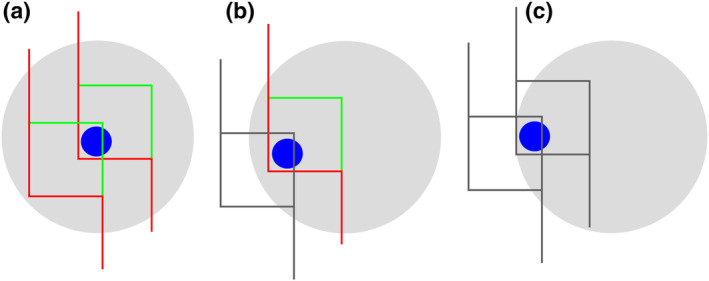

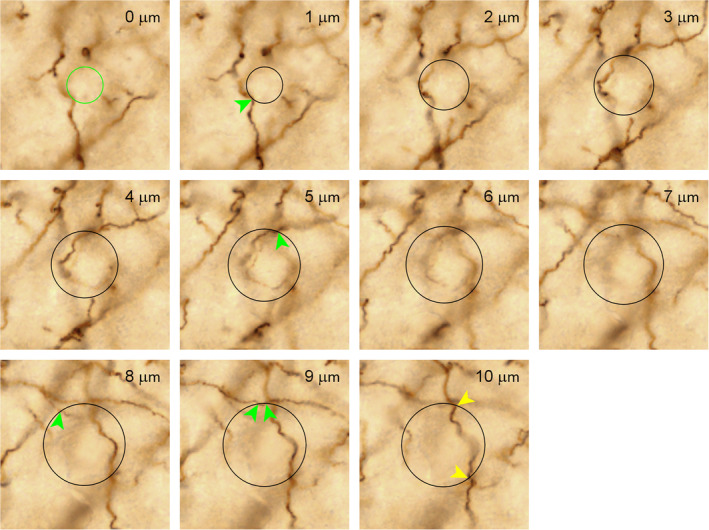

Figures 2 and 3 used spheres to represent cells. If cells are not spherical, the number of profiles counted in a section will not only depend on the size of the cells. It will also depend on their orientation, which may change because of experimental interference or, quite simply, because of a change in the direction in which the tissue is cut. For the sake of brevity, the regions of interest in Figure 4, which illustrates the effect of orientation on profile counts, were not split up into samples of sections. Recall that the number of profiles that can be counted in all sections would correspond to the faulty number of cells (in reality cell fragments) that we would estimate from a series.

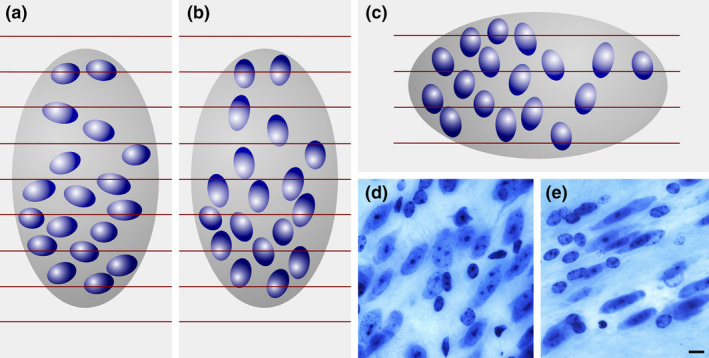

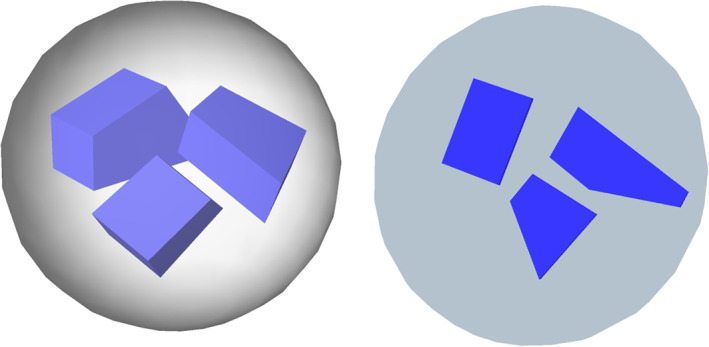

FIGURE 4.

Influence of orientation on a profile count. Red lines represent borders between adjacent sections. The ellipsoid cells in (a) result in a count, across all sections, of 27 profiles. The same cells, unchanged in size but oriented differently in (b), result in a count of 33. When the direction of cutting is changed from, for example, coronal in (a) to sagittal in (c), the profile count increases from 27 to 32. (a) and (c) could also be two brain regions that are cut in the same direction. A laboratory may, for example, claim that the ratio of neurons in (a) to those in (c) is 27/32 = 0.84, while another laboratory using a different cutting direction may claim this ratio to be 32/27 = 1.19—a 40% discrepancy between two results that are both wrong. There are again 18 cells in each structure, and the ratio is 1. One does not have to search long to find neuronal nuclei that are even more elongated than the ones in (a–c): (d) bed nucleus of the stria terminalis, (e) zona incerta, C57 mouse, hematoxylin stain, minimum density projections. Scale bar: 5 μm [Color figure can be viewed at wileyonlinelibrary.com]

If an error can be present because of changes in factors other than number, does an error have to be present? No. The problem is that we do not know. Without further evidence, it is impossible to judge the presence, size, or direction of an error. Also, if significant differences exist between groups, something must have happened. However, without further knowledge about the size, shape, or orientation of the cells, the data generated do not provide unequivocal evidence about the parameter of interest—the number of cells.

Even if we could guess at the approximate size of the error, trying to define a value for an acceptable error does not make sense. First, the outcomes of statistical testing depend not only on the difference between the group means but also on the variability of the groups and the number of individuals in each group. However small a difference may be, it can generate a positive statistical outcome provided the number of individuals is large enough or the variability is small enough. It is not just the perhaps small danger of finding a completely artificial difference, but also the increase in the risks of false‐positive and false‐negative findings that make even small errors treacherous. The definition of an acceptable error would require an argument, and most likely a very contentious one, that relates errors to group variability, group size, and the biological relevance of effect sizes. If reaching a common ground on these issues is possible at all, it is tedious considering that the problem can be avoided without too much effort.

2.2. An almost as easy solution

The problem described in the preceding section is caused by the counting of “something” (profiles) that is not unique for the objects of interest, but that can occur more than once for each object in a series of sections. If the section thickness remains unchanged, selecting a smaller structure to count—the nucleus instead of the cell, or the nucleolus instead of the nucleus—does reduce the error because the chance that it is sectioned decreases. However, even if the error in thick light microscopic sections may not be detectable, this may not be the case if section thickness is reduced dramatically, for example, when only one confocal plane is used or when tissue sections are prepared for electron microscopy. The error depends on a parameter, that is, section thickness, which is chosen during the preparation of the tissue. This also adds to the complexity of comparing results of different studies.

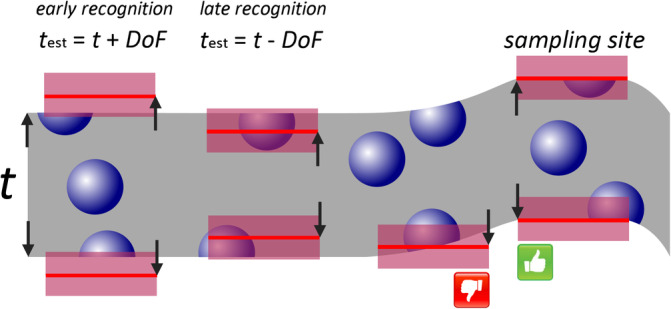

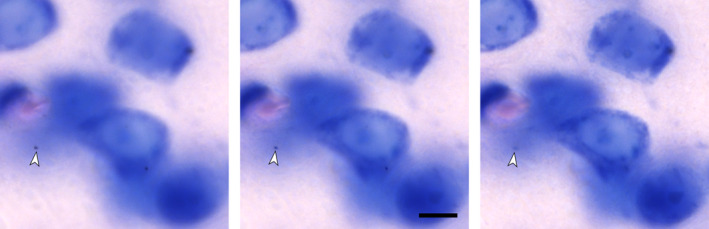

An error‐free estimate can only be obtained if a “something” is identified that only occurs once for each object of interest in a series of sections. Thompson (1932) may have been the first to state that the first time an object is recognized in a series of sections is such a unique feature. Regardless of how big an object is and how many profiles it may produce when the tissue is sectioned, it will only once be seen for the first (or last) time. His idea went sadly unnoticed until Sterio (1984) rediscovered and extended it in the form of the disector. In its conceptually simplest form, the disector is based on the examination of two (di‐) sections (‐sector). One of the sections, the sample section, is used to count cells. The other section, called the look‐up section, is used to decide which of the cells visible in the sample section are to be counted and which ones are not. The rules that determine what to count are rather simple.

If a cell is visible for the first time in the sample section, i.e., it is not present in the look‐up section, it should be counted.

If a cell is visible in the sample section but was already visible in the look‐up section, it should NOT be counted.

There should be no anxiety that the disector must be used in this form, that is, as a tedious‐at‐best comparison of two real sections (physical sections and, hence, physical disector) in which cellular features need to be identified in both sections. Nor should it be necessary to compare an entire section, which may contain thousands of cells, with another entire section. How the disector has improved technically will be described in detail in Section 6. However, the physical disector remains the easiest way to explain the counting rules and why they return the correct number. They are illustrated in Figures 5 and 6, in which the region already illustrated in Figures 2 and 3 is evaluated using the disector.

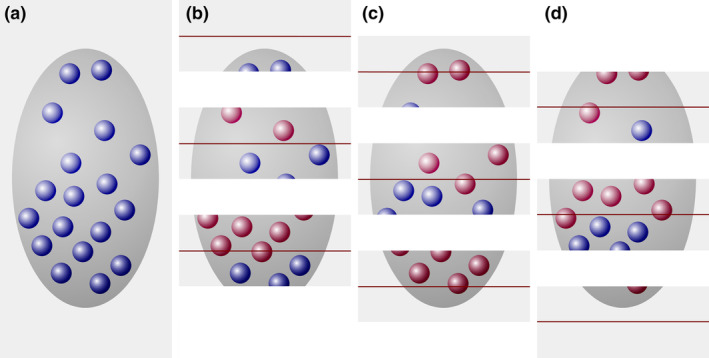

FIGURE 5.

The disector generates an estimate of true object number. (a) A region (dark gray), containing 18 cells (blue objects), is cut into three series of sections. For each of the three series that may be used to count, the sections of the previous series are used as look‐up sections (b–d). According to the counting rules of the disector, cells that are present in the look‐up section (red objects in b–d) are NOT counted. The three sections of the structure in series (b) contain eight cell profiles that were not present in the adjacent look‐up sections. The three sections in series (c) and (d) both contain five cell profiles that can be counted [Color figure can be viewed at wileyonlinelibrary.com]

FIGURE 6.

A number estimate obtained by the disector is independent of object size. (a) A region (dark gray), containing 18 cells (blue objects), is cut into three series of sections. For each of the three series that can be used to count, the sections of the previous series are used as look‐up sections (b–d). In series (b), eight cells are counted in the sample sections (blue objects) that were not present in the look‐up sections (red objects). In series (c) and (d), four and six cells are counted. The average count is six cells in one‐third of the sections, which multiplied by three provides the true number of 18 cells [Color figure can be viewed at wileyonlinelibrary.com]

Every third section is used as a sample section. In the three possible sets of sample and look‐up sections, eight, five, and five cell profiles are countable according to disector counting rules. In that each series represents one‐third of the total, we multiply by three and obtain estimates of 24, 15, and 15 cells. The average of the three estimates, 18 cells, corresponds to the true number of cells in the region of interest.

In that cells, regardless of their size along the z‐axis, only once can appear for the first time in a section, the disector should also return the correct cell number for the member of the experimental group that was used for the profile counts in Figure 3. Figure 6 illustrates that it does. Once again, we count an average of six cells in each of the three possible sets of sample and look‐up sections, providing the true number of 18 cells contained within the region of interest.
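The two counting rules, and their independence of cell size, can be checked with a short simulation. This is a minimal sketch under stated assumptions rather than a reproduction of Figures 5 and 6: cell positions are drawn at random, the section thickness and cell heights are hypothetical, and all function names are illustrative. Every third section serves as a sample section and the section preceding it as the look‐up section, so a cell is counted only in the section in which it first appears.

```python
import math
import random


def first_and_last_section(top, height, thickness):
    """Indices of the first and last section containing part of the cell."""
    return math.floor(top / thickness), math.floor((top + height) / thickness)


def mean_estimates(tops, height, thickness, period=3):
    """Average the estimates from all `period` possible series of sections."""
    spans = [first_and_last_section(z, height, thickness) for z in tops]
    profile_estimates, disector_estimates = [], []
    for start in range(period):
        # Profile count: every sampled section holding a fragment adds 1.
        profiles = sum(
            1
            for first, last in spans
            for section in range(first, last + 1)
            if section % period == start
        )
        # Disector count: a cell adds 1 only in the section where it first
        # appears, i.e., when it is absent from the preceding look-up section.
        first_appearances = sum(1 for first, _ in spans if first % period == start)
        profile_estimates.append(profiles * period)
        disector_estimates.append(first_appearances * period)
    return sum(profile_estimates) / period, sum(disector_estimates) / period


rng = random.Random(0)
thickness = 10.0                                    # hypothetical units
tops = [rng.uniform(0.0, 90.0) for _ in range(18)]  # 18 cells, random positions

for height in (5.0, 9.0):  # hypothetical smaller and larger cells
    profile_mean, disector_mean = mean_estimates(tops, height, thickness)
    print(f"height {height}: profiles -> {profile_mean}, disector -> {disector_mean}")
```

Because each cell has exactly one first appearance, and that section belongs to exactly one of the three series, the disector estimates average to the 18 cells that were placed, whatever the cell height. The averaged profile-based estimate equals the total number of fragments and therefore cannot fall below 18.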

The disector would, of course, also return the correct estimate for the change in orientation or for a change in the direction of the sectioning illustrated in Figure 4.

Examples similar to those in this section could be constructed for the estimation of most of the morphological parameters that we may want to quantify. Here, the disector has been used as a representative of the design‐based stereological probes that share in returning the correct value for one parameter of interest (number, length, surface, volume) in the region of interest regardless of changes in one or more of the other parameters.

3. SAMPLING

Subsequent statistical testing of group differences is one of the main motivations of quantitative morphological descriptions. One prerequisite for meaningful statistical testing is representative (for the many interpretations of this word, see Kruskal & Mosteller, 1979) sampling. If the sample is not statistically representative for the region of interest, statistical outcomes may not apply to the region either. Key to representative sampling is that each part of the region must have the same chance to contribute to the sample as any other part of the region. Opinion polls are sometimes used to illustrate principles of good (and bad) sampling. If we are interested in the opinion of a population, everyone in the population should have a chance of being asked. Sampling in an opinion poll means deciding on whom one should ask. Sampling in quantitative morphology means deciding on where one should make a measurement. Two ways in which representative samples can be obtained are described in Sections 3.2 and 3.3.

3.1. The representative section

It is common that quantitative methods are employed in a (small set of) representative section(s). Most often “representative” means sections in which the region of interest has its typical anatomical looks, and it is assumed that typical looks will result in typical quantitative measures. The only way to ascertain whether this is true is prior knowledge of the entire region of interest. If an experiment is performed, this extends to prior knowledge about changes in the entire region of interest. However, if this is already known, why would one perform these measurements at all? Kruskal and Mosteller (1979) harshly translate this type of “representative” sampling to “My sample will not lead you astray; take my word for it even though I give you no evidence.” It is at least unfortunate that the credibility of statistical outcomes should rest solely on the credibility of the investigator (but see Section 9.6). In a formal comparison, effects of prenatal low‐dose irradiation of the hippocampus and cerebellum shown in a statistically representative sample could not be observed in “representative” sections (Schmitz et al., 2005).

Independent of the credibility of sample selection, a critical problem of restricting the sample to this type of representative sections is that number, length, or surface estimates will have to be presented as densities, either raw, as estimate (numerator) per section (denominator), or standardized to some reference also obtained from the section, for example, as estimate (numerator) per unit area or unit volume (denominator). Densities alone do not allow statements about changes in the numerator without knowledge of the total size of the denominator for the region of interest (Gundersen, 1986). The problem was already illustrated in Figure 1. Additional examples from the literature emphasize the importance of the problem. Cell density increased in the hippocampal CA3 pyramidal cell layer 30 days after contusion injury relative to shorter survival times even though cell number remained constant (Baldwin et al., 1997). Significant and similar differences in both hippocampal granule cell number and granule cell layer volume, but no differences in cell density, were found between superior and inferior learners among aged Wistar rats (Syková et al., 2002). Decreases in both hippocampal CA1 pyramidal cell number and pyramidal cell layer volume were also observed in monkeys after simian‐immunodeficiency virus infection (Curtis et al., 2014). Unchanged cell numbers but decreased cell densities were found in adult human medullary nuclei when compared to infant ones (Porzionato, Macchi, Parenti, & de Caro, 2009). Hippocampal granule cell density was found to be highest in C57 mice when compared to DBA and NZB mice although total granule cell number in the three strains was the lowest in C57 mice (Abusaad et al., 1999). GFAP‐positive cell numbers increase in all hippocampal divisions in Bassoon‐mutant mice, but their densities remain unchanged (Heyden et al., 2011). In the same mutant, hilar cell numbers also increase, but density decreases.
An increase in the density of cholinergic fibers and expansion of the width of the commissural‐associational zone in the hippocampal dentate molecular layer after entorhinal cortex lesions were long interpreted as examples of reactive plasticity, but later found to be secondary to molecular layer shrinkage (Phinney, Calhoun, Woods, Deller, & Jucker, 2004; Shamy, Buckmaster, Amaral, Calhoun, & Rapp, 2007). Both primary visual cortex volume and the neuron number in schizophrenics were found to be lower than in controls, but cell density did not differ (Dorph‐Petersen, Pierri, Wu, Sampson, & Lewis, 2007). While vascular density increases in the cerebellum of Lurcher mice, total vascular length actually decreases (Kolinko, Cendelin, Kralickova, & Tonar, 2016). Even large increases in cell number can go hand in hand with decreases in cell density in the canary song system following androgen treatments (Yamamura, Barker, Balthazart, & Ball, 2011). An age‐related loss of hippocampal granule cells in APP/PS1KI mice was accompanied by an age‐related increase in volume (Cotel, Bayer, & Wirths, 2008; Cotel, Jawhar, Christensen, Bayer, & Wirths, 2012), which would lead to a larger decline in density than in number. Further examples from research on the morphological basis of neuropsychiatric disorders can be found in Dorph‐Petersen and Lewis (2011). In each of these cases, conclusions drawn based on the numerator of a density obtained from representative sections would have been misleading because of changes in the denominator. Unknown changes in the denominator of a density have also been named the “reference trap” (Brændgaard & Gundersen, 1986). Changes in density indicate changes in the functional relations between the structures that provide numbers for the numerator and the denominator. Changes in density may be worthwhile discussing in this context, and they must have a cause. 
However, there are always two numbers that can change a density, and we do not know if it is the one of our primary interest (for similar arguments and examples see, for example, Mayhew, 1996). Note that all the examples come from stereological studies, which incidentally looked at both solid number and density estimates. If it were possible to get more bewildered than by attempts to find a consistent relation between density and number, adding biases due to the orientation or size of objects would surely do the trick.

3.2. A representative sample of sections

One way to draw a representative sample from a series of sections of a brain region would be akin to drawing lottery tickets. Each lottery ticket has the same (a uniform) chance (random) of being drawn, and the chance that a ticket is being drawn is independent of the chance that another ticket is being drawn. This type of sampling is therefore referred to as uniform random independent sampling. Sections that are selected in this manner would constitute a statistically representative sample of the region that has been cut. This approach is hardly ever used in quantitative morphological studies. First, it is actually more tedious to draw a random sample than one may expect. Just fishing with a brush for a section in an Eppendorf tube is not good enough: large sections may be more likely to stick to the brush than small ones (or the other way round, who knows?). Some formal randomization procedure would have to be used. The frequently used phrase “randomly selected” is hardly ever accompanied by a description of how randomness was achieved. Second, it is counterintuitive and may be disruptive to other procedures. Randomization would mean that a sample from one animal actually may not contain any of those cherished anatomically typical sections, while the sections sampled in the next animal may contain all those cherished sections. Also, there is an intuitive resistance to the large variability of the estimates that one correctly may expect across this type of samples.

Another way of representative sampling is much closer to procedures already in place in many laboratories—uniform random systematic sampling. We rarely collect and process all sections of larger brain regions to look at one particular parameter. Instead, series of sections are collected, in which the distance between sections is determined by the needs of anatomical coverage (the series ought to contain examples of the typical appearance of the region of interest) and the number of ways in which the sections will be processed. If, for example, four antibodies will be used, we may need four series. To that, we may add an additional series to try out antibody concentrations. Another one or two series may be added in case something should go wrong or in case a different type of assessment is considered useful later in the course of the study. The four antibodies, trial sections, and backup section require, in this example, a total of seven series to be cut. The seven series may then be collected into Eppendorf tubes or well plates, each tube or plate containing every seventh section that was cut. Each series is a systematic sample (every seventh) of the region of interest. To make these systematic samples statistically representative only one small additional step is required. Each section must have the same (uniform) chance (random) to give its opinion with regard to, for example, the antibody used as any other section. We cannot always use series one (containing sections 1, 8, 15 …) for antibody A and always use series two (containing sections 2, 9, 16 …) for antibody B. If we did, sections 1, 8, 15 … would never be allowed to give their opinion on antibody B and sections 2, 9, 16 … would never be allowed to give their opinion on antibody A. Instead, we must pick one of our seven series at random when we assign them to a particular stain. 
This is the only step required to turn a traditional series of histological sections into a statistically representative uniform random systematic sample of sections.
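The random assignment of series to stains can be sketched in a few lines. This is only an illustration of the seven‐series example; the function names, the labels of the uses, and the total of 30 sections are hypothetical and not part of any established protocol.

```python
import random

def assign_series(n_series, uses, rng=None):
    """Randomly assign each use (stain, trial, backup) to a different series."""
    rng = rng or random.Random()
    if len(uses) > n_series:
        raise ValueError("more uses than series")
    series = list(range(1, n_series + 1))
    rng.shuffle(series)  # every series has the same chance to end up with any use
    return dict(zip(uses, series))

def sections_in_series(series, n_series, n_sections):
    """Section numbers (1-based) contained in a series that holds every n-th section."""
    return list(range(series, n_sections + 1, n_series))

# Hypothetical labels for the seven uses described in the text.
uses = ["antibody A", "antibody B", "antibody C", "antibody D",
        "trial", "backup 1", "backup 2"]
assignment = assign_series(7, uses)
for use, series in assignment.items():
    print(use, "-> series", series, sections_in_series(series, 7, 30))
```

Because the shuffle is repeated for each animal, series one is not always used for antibody A, which is the point of the additional step.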

In Section 9, the number of series to be cut (any number is good, but some numbers give more options than others) and ways to deal with missing sections will be discussed.

3.3. Representative sites within sections

Similar to the sampling of sections, sampling within sections must be statistically representative. Again, one has to resort to either uniform random independent sampling or to uniform random systematic sampling. Similar to the sampling of sections, uniformly and randomly sampling the area of the section in a systematic way requires us to randomly select a starting point and proceed from the starting point at regular intervals along the two dimensions of the area of the section. In the most common cases of square or rectangular grids of sampling locations, the distances between sampling locations are often referred to as the x‐ and y‐step sizes.
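A minimal sketch of how such a grid of sampling locations could be generated is given below. It assumes a rectangular bounding area around the region of interest and arbitrary length units; the function name and the numbers are illustrative only. Sites that fall outside the actual region would simply not be assessed.

```python
import random

def systematic_sites(width, height, x_step, y_step, rng=None):
    """Return (x, y) sampling locations on a rectangular grid with a random start."""
    rng = rng or random.Random()
    # The random start lies within the first grid cell; all other
    # locations follow from it at regular x- and y-step intervals.
    x_start = rng.random() * x_step
    y_start = rng.random() * y_step
    sites = []
    row = 0
    while y_start + row * y_step < height:
        col = 0
        while x_start + col * x_step < width:
            sites.append((x_start + col * x_step, y_start + row * y_step))
            col += 1
        row += 1
    return sites

# Hypothetical bounding area of 1000 x 500 units with step sizes of 100 units.
sites = systematic_sites(width=1000, height=500, x_step=100, y_step=100)
print(len(sites), sites[:3])
```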

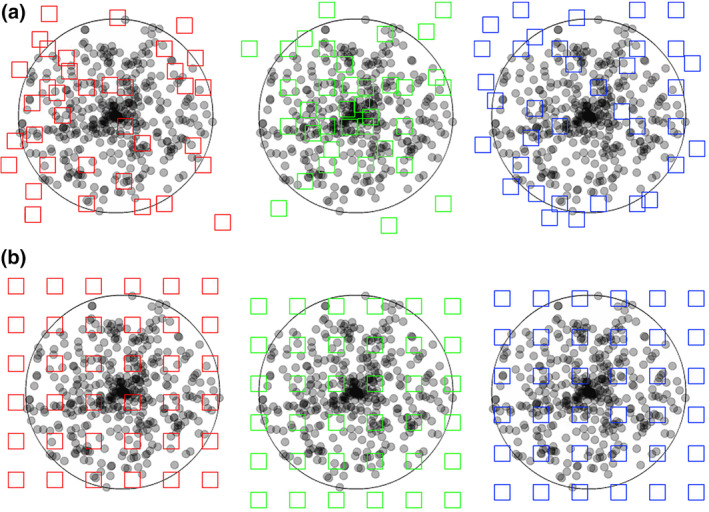

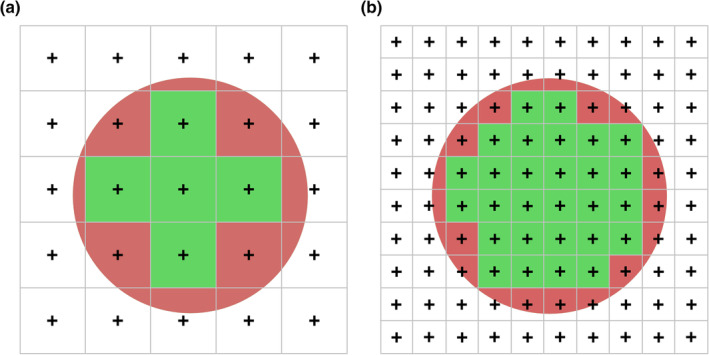

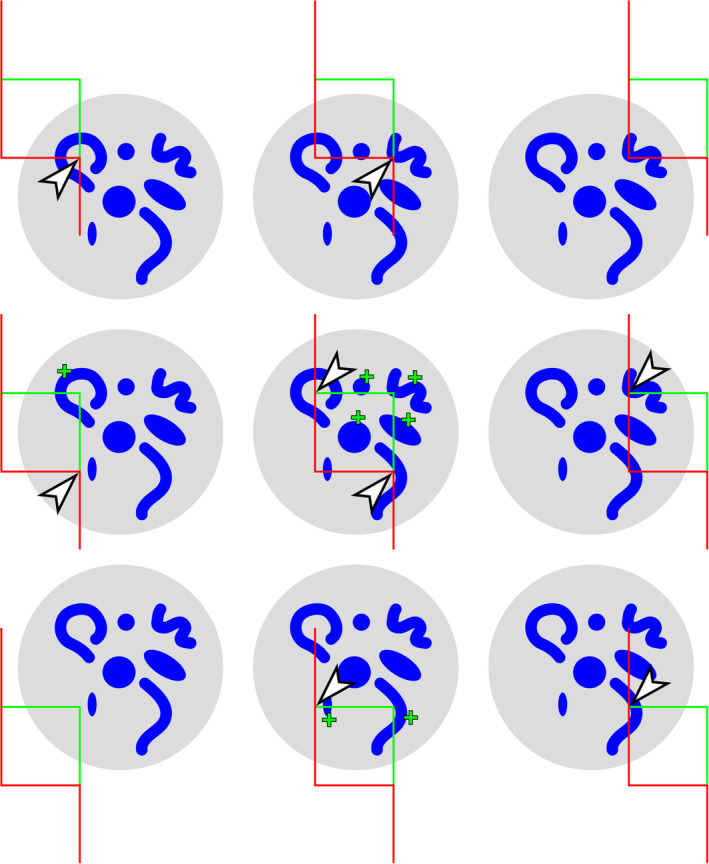

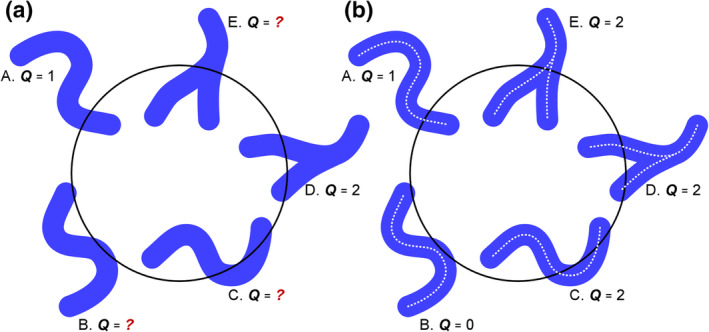

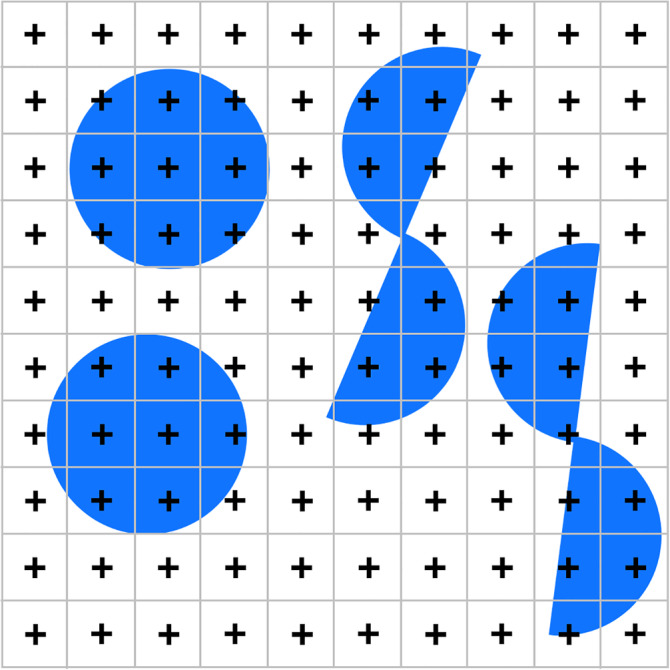

Both uniform random independent sampling and uniform random systematic sampling are illustrated in Figure 7. The distribution of dots in the circular region of interest may resemble the distribution of ganglion cells in the retina—they are spaced closer to each other in the region of the center of the visual field than in its periphery. Figure 7a illustrates three of the infinitely many possible patterns of uniform random independent samples; Figure 7b three of the infinitely many possible patterns of uniform random systematic samples.

FIGURE 7.

Uniform random independent sampling and uniform random systematic sampling. The area of a section containing unevenly distributed objects is probed in (a) with three uniform random independent samples of the area (red, green, and blue squares) and in (b) with three uniform random systematic samples of the area. Both types of sampling are statistically representative. However, note that the systematic samples, unlike the blue independent sample, never completely miss the central part of the section, in which the objects of interest are spaced closely together. Also, they never, like the green independent sample, contain an unduly large number of samples in the central part of the section [Color figure can be viewed at wileyonlinelibrary.com]

Figure 7 illustrates a strength of systematic sampling. If the objects of interest are unevenly distributed within the region of interest, a systematic sample is more likely to capture this heterogeneity than an independent sample. Just from the visual impression of the distribution of the sampling locations in the section, the red independent sample appears to probe areas of different density “just about right.” The green independent sample seems to have “too many” sampling locations within the dense part of the section, whereas the blue independent sample seems to miss the dense part of the section almost completely. As long as these three possibilities are equally likely to occur, it does not matter. Across the sampling of several sections (or across several individuals), the differences between sections will average out to a correct group mean. In contrast to the independent samples, the systematic samples do not show a visually apparent overemphasis on parts of the region either densely or sparsely populated by the objects. The systematic placement of the sampling locations together with the distance between the sampling locations makes such an overemphasis impossible. As a consequence, the variability of estimates obtained from a systematic sample may be lower than the variability obtained from an independent sample. Less variability between the samples typically also means less variability between subjects in a group and a greater chance to detect statistical differences between groups. In biological regions of interest, gradual changes in the density of the objects of interest are common, and the efficiency of systematic sampling can be expressed in simple mathematical terms. Variability between estimates typically decreases by a factor of 1/√(number of sampling locations) when independent samples are used, whereas it may decrease by a factor of 1/(number of sampling locations) when systematic samples are used (Gundersen & Jensen, 1987; Roberts et al., 1993).
That means that if a certain precision can be obtained looking at 10,000 (or 400 or 100) sampling locations that were collected in a uniform random independent manner, the same degree of precision may be obtained from only 100 (or 20 or 10) sampling locations that were placed in a uniform random systematic manner. Importantly, it also means that it will typically require much less work to generate a precise outcome using a uniform random systematic sample.
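The numbers in this example follow from a square‐root relation, which can be sketched as below. The sketch assumes that variability scales with 1/√n for independent samples and with 1/n for systematic samples, with the same proportionality constant in both cases. That is a simplification used for illustration, and the function name is hypothetical.

```python
import math

def systematic_locations_needed(independent_locations):
    """Systematic sampling locations giving the same precision as n independent ones.

    Assumes 1/n_systematic = 1/sqrt(n_independent), i.e. n_systematic = sqrt(n_independent).
    """
    return math.ceil(math.sqrt(independent_locations))

for n in (10_000, 400, 100):
    print(n, "independent ->", systematic_locations_needed(n), "systematic")
```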

Of course, Figure 7 has been drawn to make a point and may be considered “unfair” to uniform random independent sampling with regard to the differences between the three samples. However, it is far from exaggerating what might happen when independent samples are used. That all samples fall outside the region of interest and return a count of zero is statistically just as likely as all samples hitting the central part of the section returning a count of very, very many. Any combination of sampling locations is just as likely to occur as any sequence of numbers in a lottery. Another example of the difference in the efficiency between independent and systematic samples is provided in Section 5.2.

The efficiency of systematic sampling, which was illustrated for the sampling within sections in Figure 7, applies to all levels of the sampling scheme. If the area of a region of interest shows gradual changes in size from section to section, using a systematic sample of sections will not only conform better to routine laboratory procedures but will also be more efficient than using an independent sample. Depending on the demands of the study, the sampling scheme may be extended to additional levels, such as a sample of brain slabs (Dorph‐Petersen et al., 2009) from which a sample of blocks is prepared, which are then sectioned and, again, sampled (Lyck et al., 2009).

There are two special cases in which systematic samples do not compare favorably with independent samples. If the region of interest shows truly random fluctuations in size from section to section or if the objects of interest are distributed at random within the region of interest, the variability of estimates obtained from systematic or independent samples will be the same. The variability of estimates obtained from a systematic sample may be larger than that obtained from an independent sample if there are periodic changes in the size of the region of interest and a match of such periodic changes with the intervals with which sections are collected. The same is true for a match between the distances between sampling locations within a section and a regular periodic distribution of objects within the sections. The case of periodic anatomical change will be discussed in more detail in Section 8.10.

3.4. Fractionator sampling

The fractionator (Gundersen, 1986) allows totals of number, length, surface or volume to be calculated from counts obtained in a sample of a region, without any further knowledge about quantitative parameters of the region in which the counts were made. A uniform random systematic sample is taken at regular intervals, which allows calculating the fraction of the region of interest that is included in the sample. If a series of every third section of the region was collected, the sample contains only one‐third of the entire region and one‐third of the objects that one may want to measure. The section sampling fraction, ssf, is one‐third. If only part of the area of the section is investigated, for example, one‐tenth, only one‐tenth of the objects of interest in the section will be contained in this sample of the area. The area sampling fraction, asf, is one‐tenth. If one looks at the areas that were selected at high magnification, one may not look at every possible location along the thickness (z‐axis) of the section but restrict analysis to, for example, half of the thickness of the section. Again, only one‐half of the objects of interest that are located beneath the area will be contained in the sample. The thickness sampling fraction, tsf, is one‐half.

Whatever we measure and however we perform measurements in the sample, we know how much of all‐that‐there‐is we have looked at—one‐half of the thickness of one‐tenth of the area in one‐third of the sections, that is, one‐sixtieth (1/2 × 1/10 × 1/3) of all‐that‐there‐is. If what we measure is one‐sixtieth of all‐that‐there‐is, all‐that‐there‐is in the entire structure must be 60 times what we measured. Uniform random systematic sampling and fractionator sampling are two sides of the same coin. Uniform random systematic sampling becomes fractionator sampling if we use the information about the sample to calculate the fraction of the region that we analyzed, and if we use this fraction to calculate the amount of all‐that‐there‐is in the region.
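The arithmetic of this example can be written out as a short sketch. The sampling fractions are those used in the text; the raw count of 150 objects and the function name are hypothetical.

```python
from fractions import Fraction

def fractionator_total(count, ssf, asf, tsf):
    """Scale a raw count by the inverse of the overall sampling fraction."""
    sampled_fraction = ssf * asf * tsf  # fraction of all-that-there-is that was assessed
    return count / sampled_fraction

ssf = Fraction(1, 3)   # every third section
asf = Fraction(1, 10)  # one-tenth of the section area
tsf = Fraction(1, 2)   # half of the section thickness

print(ssf * asf * tsf)                          # overall fraction, 1/60
print(fractionator_total(150, ssf, asf, tsf))   # hypothetical count of 150 objects
```

Exact fractions are used here only to avoid rounding in the illustration; ordinary floating‐point numbers would serve equally well in practice.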

The number of fractions that are included in a fractionator sampling scheme can be extended according to the practical demands of a study. If, for example, the human neocortex is the region of interest, it may be divided into a number of smaller blocks that can be cut and stained following standard protocols (Lyck et al., 2009). Not all blocks need to be processed as long as the fraction of blocks that have been processed is known. Although not yet very useful in the neurosciences, the sections that are being used do not need to be parallel, equally thick or evenly spaced (Baddeley, Dorph‐Petersen, & Vedel Jensen, 2006; Gundersen, 1986) as long as each section has the same chance to contribute to the sample as any other section and as long as it is known which fraction of all sections was sampled. Using a uniform random independent sample that represents a known fraction of all sections would also be a fractionator sample. The same applies to the other levels at which one may want to sample.

Sampling schemes like the smooth fractionator (Andersen, Fabricius, Gundersen, Jelsing, & Stark, 2004; Gardi, Nyengaard, & Gundersen, 2006; Gundersen, 2002a) and the proportionator (Gardi, Nyengaard, & Gundersen, 2008) have been developed that can take into account regional differences in the distribution of the region that we may want to know something about. Briefly, the smooth fractionator adjusts the distribution of the region of interest across sections to efficient fractionator sampling. The proportionator instead adjusts sampling intensity within sections to the local distribution of the objects of interest. When the appearance of a region of interest in different sections is very heterogeneous or when objects are distributed very heterogeneously within the sections, these approaches have the potential to generate more precise estimates per unit of work invested than even uniform random systematic samples.

3.5. No sampling

Correct sampling is important, but it is only a means to reduce workload and not an inevitable part of design‐based stereology. Workload may not be prohibitive to the assessment of everything, or at least everything at one or more levels of the sampling scheme. If one can assess all sections but not all objects within them, one only needs to sample within sections. If there are too many sections but only few objects in each section, one only has to sample sections but not within sections. At each step at which sampling can be avoided, a source of variability can be avoided. An example of no sampling is the study of ganglion cell distribution in retinal whole‐mounts by Coimbra, Collin, and Hart (2014). Instead of sectioning the eye (e.g., Fileta et al., 2008), the retina is prepared as a whole‐mount, and the sampling of sections is not necessary. The section sampling fraction is one. The depth of the entire retinal ganglion cell layer can be assessed with high magnification lenses, and it is technically not necessary to restrict sampling to part of the depth of the tissue. The thickness sampling fraction can therefore also be one. If workload is not a prohibitive factor to intensive or even exhaustive sampling at one or more levels of the sampling scheme, the question remains if the work is sensibly spent (Gundersen & Østerby, 1981). This question will be addressed in Section 8.

4. A BRIEF INTRODUCTION TO PROBES

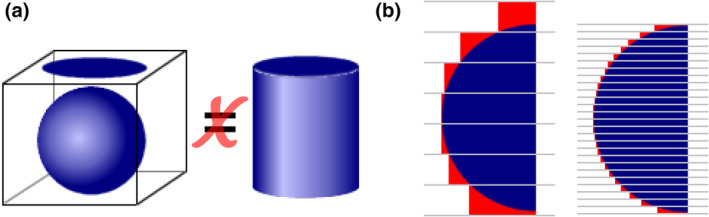

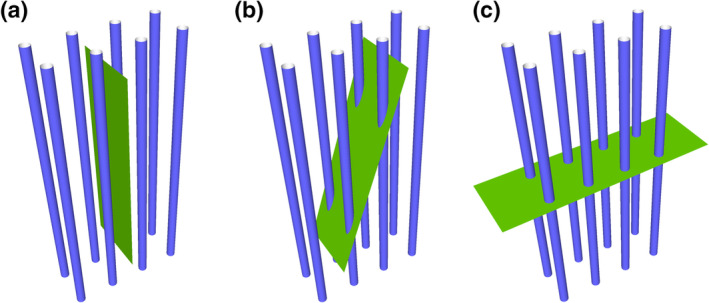

Probes are the tools with which the amount of objects, length, surface, or volume can be estimated. While sampling determines the place at which a measurement is being made, the probe that is selected determines how a measurement will be made. Stereological probes resemble other probes commonly used to investigate tissues. First, there is a similarity of the type of probe and the thing that is probed for. Proteins, in the form of antibodies, can be used to immunocytochemically probe for the proteins in tissues. In situ hybridization uses RNA probes to detect RNA in tissues. Not surprisingly, numbers of point probes, lengths of line probes, areas of surface probes and volume probes are used to probe for volume, area, length, and number. Traditional and stereological probes share another feature—complementarity or the lock‐and‐key principle. Antibodies need to be matched to their antigens and RNA probes need to be complementary to the sequence that they are supposed to detect. There is a similar requirement relating stereological probes to the morphological parameter that they measure. If one is interested in the quantitative morphology of three‐dimensional structures, the dimension of the probe and the dimension of the parameter that is being measured must sum up to at least three. A point (zero‐dimensional) can be used to estimate volumes (three‐dimensional; 0 + 3 = 3); a line (one‐dimensional) can be used to estimate areas (two‐dimensional; 1 + 2 = 3); an area (two‐dimensional) can be used to estimate length (one‐dimensional; 2 + 1 = 3), and a volume (three‐dimensional) must be used to estimate numbers (zero‐dimensional; 3 + 0 = 3). No method has been found that will work if the sum is smaller than three, and a proof presented by Gual‐Arnau, Cruz‐Orive, and Nuño‐Ballesteros (2010) suggests that none can be found.

If the dimensions of probe and parameter do not fulfill this requirement, the probe will start cross‐reacting with other parameters. This is akin to an antibody of insufficient specificity that cross‐reacts with a protein different from the one it was intended to react with. The example in Section 2 illustrated what happens if this requirement is not fulfilled. Not only do we generate the wrong number if we estimate number (zero‐dimensional) with a count in an area (two‐dimensional; 0 + 2 = 2), but the wrong number depends on the size or orientation of the objects that are being counted. A probe that we aimed at the number of objects cross‐reacts with the size or orientation of the objects.
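The dimensional requirement can be stated as a small check. This is merely a restatement of the rule in code form; the names are hypothetical, and the `space_dimension` parameter is included as an assumption so that the same check can be applied to systems of fewer dimensions.

```python
# Dimensions of probes and of the parameters they are aimed at.
PROBE_DIMENSION = {"point": 0, "line": 1, "area": 2, "volume": 3}
PARAMETER_DIMENSION = {"number": 0, "length": 1, "surface": 2, "volume": 3}

def probe_is_sufficient(probe, parameter, space_dimension=3):
    """True if probe and parameter dimensions sum to at least the space dimension."""
    total = PROBE_DIMENSION[probe] + PARAMETER_DIMENSION[parameter]
    return total >= space_dimension

print(probe_is_sufficient("point", "volume"))   # 0 + 3
print(probe_is_sufficient("volume", "number"))  # 3 + 0
print(probe_is_sufficient("area", "number"))    # 2 + 0, cross-reacts with size or orientation
```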

The advantage of probe and parameter dimensions that add up to precisely three is that one can simply count the interactions between a probe and an object: the number of times that point probes fall within its volume, that a line probe pierces its surface, that an area probe intersects with its length, and that objects are contained within a volume probe (Figure 8). The counts and the size of the probes enter into, yet again, very simple equations that allow the calculation of densities. These equations are referred to as relationship equations.

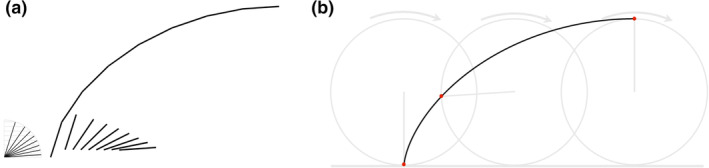

FIGURE 8.

Probes for volume, surface, length, and number. Probes for volume, surface, and length are depicted as they would appear when applied to the three‐dimensional tissue and as they would appear in sections that have been prepared from the tissue. For number, two sections and the distance (h) between them define the volume probe of the disector. The two sections have also been superimposed on each other in the last view presented. For each probe, one of the places in which probes and feature interact has been marked by an arrowhead in the sections. The total numbers of probe–feature interactions in the examples are nine (volume), ten (surface), five (length), and two (number) [Color figure can be viewed at wileyonlinelibrary.com]

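The relationship equations are: for volume density

$$V_V = P_P$$

(Glagolev, 1933, 1955), for surface density

$$S_V = 2 \times I_L$$

(Saltykov, 1946 as cited by Saltykov, 1974; Smith & Guttman, 1953), for length density

$$L_V = 2 \times Q_A$$

(Saltykov, 1946 as cited by Saltykov, 1974; Smith & Guttman, 1953), and for number density

$$N_V = \frac{Q^-}{a \times h}$$

(Sterio, 1984). Here, $P_P$ is the fraction of point probes that fall within the volume of interest, $I_L$ is the number of intersections per unit length of line probe, $Q_A$ is the number of profiles per unit area of area probe, and $Q^-$ is the number of objects counted in a disector of area $a$ and height $h$.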

Q refers to “Querschnitt,” the German word for profile or cross section. The superscript minus in number density refers to the “seen in one section but not the other.” Reviews of the historical development of the relationship equations were presented by Hykšová, Kalousová, and Saxl (2012) or, with an emphasis on mathematical theory, by Cruz‐Orive (1997, 2017).

Next, densities can be converted to estimates of total number, length, area, or volume using equally simple equations. The conversion of densities to totals will be addressed in the sections on specific probes.

In Figure 8, the probes for surface and length were placed in the plane of the section. This is how the probes would be intuitively applied, by defining an area of the section or by placing lines on the section and counting intersections. However, a requirement for all probes is that the number of interactions of probes with volume, surface, length or number must only depend on the amount of volume, surface, length, or number. Section 2 showed how the disector accomplished this for a number estimate. Section 7 will briefly describe why orientation (of the sections or probes) may impact on estimates of surface and length and how tissue preparation or special shapes of test areas or lines rid these estimates of the influence of orientation. For estimating volume or number, there are no further theoretical requirements, but a number of practical constraints that are discussed together with the probes in the following sections.

The dimensions of the probe and of the parameter can sum up to more than three, and such probe/parameter combinations are part of some stereological methods. However, obtaining an estimate of the parameter then requires measurements instead of counts. If a surface area is, for example, estimated by area probes, the length of the lines of intersection of surface and area probe needs to be measured to obtain an estimate of the area. One popular method that uses line probes (one‐dimensional) to estimate volume (three‐dimensional; 1 + 3 = 4) is the nucleator (Gundersen, 1988).

Finally, we may be interested in systems that are not three‐dimensional. Cells can be grown in a “two‐dimensional” monolayer cell culture in a Petri dish. As the number of dimensions in our world of interest has changed to two, the sum of probe and feature only needs to be two ("Petri‐metrics" in Howard & Reed, 2010). A test area (two‐dimensional) is sufficient to count cell number (zero‐dimensional; 2 + 0 = 2) and lines (one‐dimensional) may be sufficient to estimate the length of their processes (one‐dimensional; 1 + 1 = 2). Some of the sampling strategies mentioned in the preceding section, or the counting frame described in Section 6.2, will still be useful tools. As soon as we become interested in something three‐dimensional, for example, the volume of the cells in the monolayer, we are back at a sum‐of‐three rule and the methods described in this review.

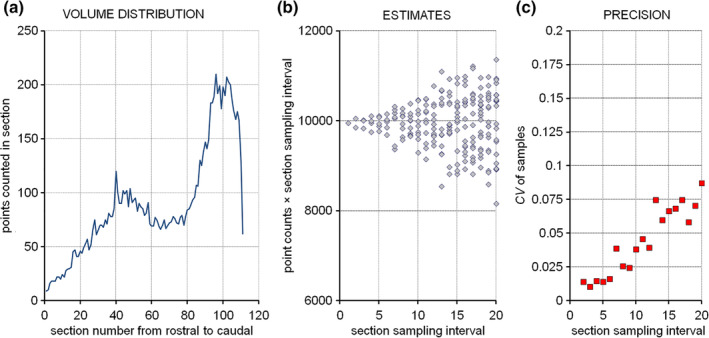

5. PROBING VOLUME: THE CAVALIERI ESTIMATOR

Volume estimates of brain compartments may serve different purposes. It is relatively easy to generate precise volume estimates. If changes in the numbers of neurons or synapses or dendrites or vessels are reflected in changes of the gross volume of the region that contains them, the volume change may be easier to detect than the underlying more specific changes. Volume estimates may therefore be an efficient first means to assess the likelihood of morphologically more specific structural changes (e.g., de Groot et al., 2005). If biological variability is low, differences as small as ~5% have been detected statistically in moderately sized groups (Slomianka & West, 1987). Also, volume estimates may be necessary if fractionator sampling (see Section 3) is not possible or desirable. In this case, estimates can be generated from density estimates and reference volumes (Pakkenberg & Gundersen, 1997; West & Gundersen, 1990). Finally, area estimates, which are part of the generation of volume estimates, are helpful in the design of sampling schemes that aim at other parameters than volume (see Section 8.11).

5.1. Calculating volume from area estimates

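Following Cavalieri's theorem (translated by Evans, 1917), the volume of a region is equal to the sum of the areas of the region in parallel sections that pass through it, multiplied by the distance between the subsequent sections, or

$$V = d \times \sum_{i=1}^{n} A_i$$

where $d$ is the distance between subsequent sections and $A_i$ is the area of the region in the $i$‐th of $n$ sections.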

We do know the distance between subsequent sections of our histological series. Note that the distance between areas is the distance between the same surfaces of the sections used in the series. If all sections are used there is no gap or distance between the sections, but there still is a distance, corresponding to section thickness, between the top surfaces of two adjacent sections. In addition to distance, the only thing needed to calculate volumes are estimates of the areas that a region of interest occupies in the sections. What comes to mind immediately is to outline the region in some graphics application and have the application calculate the area. As will be discussed in Section 5.4, this may not be the most convenient way to estimate an area, and it is prone to errors. Instead, we can use point counts to estimate the area of the region of interest in the sections.
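As a sketch of this calculation, the distance between sections can be derived from the section thickness and the sampling interval of the series, and then multiplied by the summed areas. The function name, the areas, the thickness of 0.04 mm, and the interval of seven are hypothetical values chosen for illustration.

```python
def cavalieri_volume(areas, section_thickness, section_interval=1):
    """Volume as the sum of section areas times the distance between sampled sections.

    The distance is measured between the same surfaces of consecutive sampled
    sections, i.e. section thickness times the interval of the series.
    """
    distance = section_thickness * section_interval
    return distance * sum(areas)

# Hypothetical areas (mm^2) of a region in every seventh section of 0.04 mm thickness.
areas_mm2 = [1.2, 2.5, 3.1, 2.0]
print(cavalieri_volume(areas_mm2, section_thickness=0.04, section_interval=7))  # mm^3
```

With `section_interval=1`, all sections are used and the distance reduces to the section thickness, as described above.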

5.2. A point probe to estimate an area

Imagine a region (blue circle in Figure 9) that occupies an unknown area within a reference area (all of the square and blue circle in Figure 9). If a point is placed many times at a random position within the reference area, the number of times that the point will fall onto the region depends on how much of the reference area is occupied by the region. If the region would occupy all of the reference area, a point would fall onto the region each time a point is placed in the reference area. In this case, the probability of the point to fall onto the region is 1. If the region would only occupy half of the reference area, a point would fall onto the region in about half of the trials, that is, the probability is about 0.5.

FIGURE 9.

Using points to estimate an area. The reference area (entire gray and blue areas of this figure) is probed with 25 randomly placed points to estimate the area of the blue circle within it. Twelve of the twenty‐five points fall onto the circle. The area of the circle can therefore be estimated as 12/25 of the reference area. Because we can arbitrarily choose the size of the reference area, we can calculate an estimate of the size of the circle [Color figure can be viewed at wileyonlinelibrary.com]

Usually it is the other way around. We do not know the area of the region, and we use the probability of points to fall onto it to estimate the area. The more trials are made, the better is the estimate of the area. The probability that we observe is equal to the proportion of the reference area occupied by the region—that is, if we observe a probability of 0.48 (12 points out of 25 that hit the structure in Figure 9), about 48% of the reference area is occupied by the region.

The area occupied by the region can now be calculated by multiplying the probability with the size of the reference area. We can arbitrarily decide on the size of the reference area before we start this little experiment.
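This little experiment can be imitated with a short simulation. The sketch below places points at random positions in a rectangular reference area and multiplies the observed proportion of hits by the size of the reference area. The circular region, its radius, and all names are assumptions made for illustration.

```python
import random

def estimate_area_random_points(inside, reference_width, reference_height,
                                n_points, rng=None):
    """Estimate an area from the proportion of random points that hit the region."""
    rng = rng or random.Random()
    hits = 0
    for _ in range(n_points):
        x = rng.random() * reference_width
        y = rng.random() * reference_height
        if inside(x, y):
            hits += 1
    reference_area = reference_width * reference_height
    return hits / n_points * reference_area

def in_circle(x, y, cx=0.5, cy=0.5, r=0.4):
    """Hypothetical region of interest: a circle within a unit square."""
    return (x - cx) ** 2 + (y - cy) ** 2 <= r ** 2

# Few points give a rough estimate; many points approach the true area (about 0.503).
print(estimate_area_random_points(in_circle, 1.0, 1.0, 25))
print(estimate_area_random_points(in_circle, 1.0, 1.0, 100_000))
```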

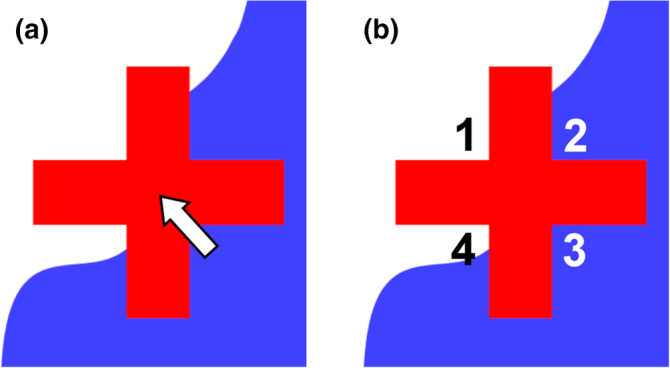

In Figures 8 and 9, the points are represented by a small crosshair (a dimensionless mathematical point would be invisible), which is also the most common representation of point probes in illustrations and in stereology software packages. Unfortunately, a crosshair does not always allow us to decide whether a point hit the area of interest or not. It is not the entire cross that needs to fall onto the area of interest for the cross to be counted. Even the intersection of the bars of the cross does not always allow us to see if this point is located inside or outside the structure (Figure 10a).

FIGURE 10.

Representing a point probe. The representation of a point probe, a crosshair, falls onto the boundary of a blue region. In (a), the boundary is hidden by the crosshair, and it is not possible to decide if the intersection of the arms (arrow) falls onto the region or not. Using any one of the four corners of the crosshair in (b) allows an unequivocal decision. Corners 1 and 4 are falling outside the structure, while Corners 2 and 3 fall inside [Color figure can be viewed at wileyonlinelibrary.com]

The corners of the crosshair provide better probes (Glagolev, 1955). In Figure 10b, two of the corners are located inside the structure, while the two other corners are located outside the structure. Note that it is the very point at which the arms of the crosshair meet that is used as a probe. Any one of the four corners can be used as a probe, but which one should be decided upon before the probe is applied to the section. In the survey of probes (Figure 8), the lower left corner was selected and generated a count of nine. The upper right corner would have generated a count of eleven.

5.3. A point‐grid as an area probe

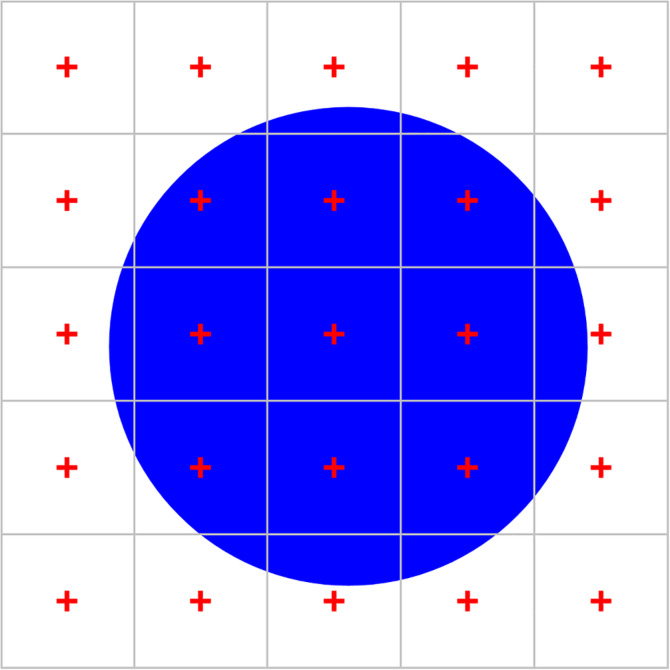

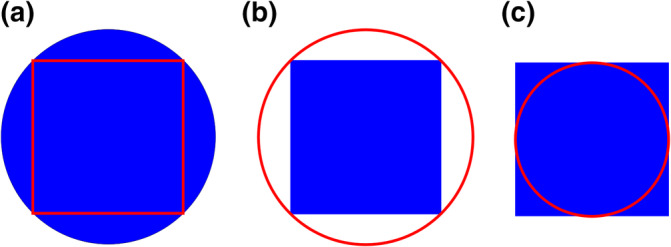

Using points that are placed completely at random within the reference area, like in Figure 9, would represent a uniform random independent sample of sites. Following the uniform random systematic way of sampling, the area of interest can also be probed by placing a grid of regularly spaced points over the reference area (Figure 11). Nine of twenty‐five points hit the area of interest, and an estimate of this area would be 9/25th of the reference area.

FIGURE 11.

Using a point grid to estimate area. When the reference area is probed with a grid containing 25 points, 9 points fall onto the blue circle. We estimate the area of the blue circle as 9/25th of the reference area. Alternatively, we can look at this sample as 25 smaller areas, each with 1/25th of the full area, that are each probed with one point. The area estimate of the blue circle would correspond to nine times the smaller area, that is, we do not need to know the reference area but only the area associated with each point [Color figure can be viewed at wileyonlinelibrary.com]

Now, let us get rid of the need to know the total number of points applied or the size of the reference area. Each point of the grid is not only a probe within the reference area, but also a probe of a smaller area associated with each point. If the points are, for example, spaced 1 cm apart from each other, this new, smaller “reference area” is 1 cm².

Within each small square, the probability to hit the structure with the point will either be 1 (the point hit the region) or 0 (the point did not hit the region). In Figure 11, we obtain nine trials of the smaller areas in which the probability of the point to fall onto the structure was observed to be 1 (1 hit/1 trial) and 16 trials in which this probability was 0 (0 hits/1 trial). An estimate of the area would be 9 × 1 × 1 cm² + 16 × 0 × 1 cm² = 9 cm². Changing the size of the reference area (keeping the area per point constant) would only change the number of probes that return a zero probability. The only things that matter are the points that hit and the area associated with them. The area of the structure is directly proportional to the number of points that hit it, 9. This number multiplied by the area associated with each point (1 cm²) is an estimate of the area occupied by the structure in the section (9 cm²). We no longer need to know the size of the entire reference area covered by the point grid or the number of points in the grid.
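
The reasoning above (hits multiplied by the area per point, independent of the size of the reference area) can be checked with a short Python sketch. The circle of 10.125 cm² centered in a 5 cm × 5 cm area, the function names and the seeds are assumptions chosen for illustration.

```python
import math
import random


def grid_area_estimate(is_inside, ref_width, ref_height, spacing, rng):
    """Estimate area as (number of hits) x (area associated with each point)."""
    # One random offset positions the whole grid (uniform random systematic sample).
    x0 = rng.uniform(0.0, spacing)
    y0 = rng.uniform(0.0, spacing)
    hits = 0
    i = 0
    while x0 + i * spacing < ref_width:
        j = 0
        while y0 + j * spacing < ref_height:
            if is_inside(x0 + i * spacing, y0 + j * spacing):
                hits += 1
            j += 1
        i += 1
    return hits * spacing ** 2, hits


# Assumed region: a circle of 10.125 cm^2 (40.5% of a 5 cm x 5 cm reference area).
TRUE_AREA_CM2 = 0.405 * 25.0
RADIUS_CM = math.sqrt(TRUE_AREA_CM2 / math.pi)


def in_circle(x, y):
    """True if the point (x, y) falls onto the circle centered at (2.5, 2.5)."""
    return (x - 2.5) ** 2 + (y - 2.5) ** 2 <= RADIUS_CM ** 2


# Same grid position (same seed), once with a small and once with a large reference area.
small_area, small_hits = grid_area_estimate(in_circle, 5.0, 5.0, 1.0, random.Random(7))
large_area, large_hits = grid_area_estimate(in_circle, 20.0, 20.0, 1.0, random.Random(7))

# Averaging over many random grid positions approaches the true area.
rng = random.Random(0)
estimates = [grid_area_estimate(in_circle, 5.0, 5.0, 1.0, rng)[0] for _ in range(2000)]
mean_estimate = sum(estimates) / len(estimates)
print(small_hits, large_hits, round(mean_estimate, 2), round(TRUE_AREA_CM2, 2))
```

Enlarging the reference area only adds points that miss the circle, so both calls return the same number of hits and the same area estimate.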

5.4. The precision of an area estimate

As already mentioned, the estimate of area would be more precise if we repeated the random placement of the points many times. Instead of adding more points by repeating the estimate, we can use more points for each estimate, that is, place the points closer together in the grid that we apply to the structure.

In Figure 12, points counted in the green squares return an exact estimate of the area that is associated with these points. A hit is seen, the observed probability is 1, and the area is indeed one time the area associated with each point. The estimate of the red area is less precise. Using the coarse point grid in Figure 12a, four hits are seen in the 16 squares that contain some red area. For those four points, we add the entire area associated with the point to our estimate even though the red area occupied less than that. Statistically, adding too much for the four points that hit will be balanced by adding nothing for the remaining 12 squares, which also contain a little bit of red area even though their points are not counted. Increasing point density fourfold in Figure 12b, the green area, for which we obtain an exact area estimate, increases. At the same time, the red area decreases. Not only does it decrease, it is also probed 26 times (each little square that contains a little red) instead of 16 times, which should provide a more precise estimate.

FIGURE 12.

Increasing the density of a point grid increases precision. Point grids of different densities are placed over the round profile of a region. If point density increases, the area that is estimated less precisely (red) is found in 26 squares (b) instead of 16 squares (a). The red area also decreases relative to green areas that are estimated exactly by a point count. With more trials available to estimate the size of a relatively smaller area, the precision of the estimate of the area of the round region increases with increasing point density [Color figure can be viewed at wileyonlinelibrary.com]
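
The effect of point density on precision can also be explored by simulation. The following sketch repeats the grid estimate many times at two point spacings and compares the spread of the estimates. The circular region, the 5 × 5 reference area, the two spacings and the number of repetitions are illustrative assumptions and do not reproduce Figure 12 exactly.

```python
import math
import random
import statistics

SIDE = 5.0                                    # assumed side of the reference area
TRUE_AREA = 0.405 * SIDE * SIDE               # circle covering 40.5% of it
RADIUS = math.sqrt(TRUE_AREA / math.pi)


def grid_estimate(spacing, rng):
    """One area estimate from a randomly positioned square grid of points."""
    x0 = rng.uniform(0.0, spacing)
    y0 = rng.uniform(0.0, spacing)
    n = math.ceil(SIDE / spacing)
    hits = 0
    for i in range(n):
        for j in range(n):
            x = x0 + i * spacing
            y = y0 + j * spacing
            inside_reference = x < SIDE and y < SIDE
            inside_circle = (x - SIDE / 2) ** 2 + (y - SIDE / 2) ** 2 <= RADIUS ** 2
            if inside_reference and inside_circle:
                hits += 1
    return hits * spacing ** 2


rng = random.Random(42)
coarse = [grid_estimate(1.0, rng) for _ in range(500)]   # 25 points per grid
fine = [grid_estimate(0.5, rng) for _ in range(500)]     # 100 points per grid

sd_coarse = statistics.stdev(coarse)
sd_fine = statistics.stdev(fine)
print(f"coarse grid: mean {statistics.mean(coarse):.2f}, SD {sd_coarse:.2f}")
print(f"fine grid:   mean {statistics.mean(fine):.2f}, SD {sd_fine:.2f}")
```

Both grids should return means close to the true area, while the spread of the repeated estimates should be clearly smaller for the denser grid.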

Note that it is at least possible for all points to fall outside the structure or for all points to fall inside the structure if they are placed in the random independent manner that was used in Figure 9. This cannot happen for the grids (uniform random systematic samples) of points used in Figures 11 and 12. That means that when estimates are repeated, we would see fewer extreme values if we use a uniform random systematic sample. If we apply the point grids to different animals, we are therefore also likely to see fewer extreme values that are caused by the placement of the points. The standard deviation that we see in groups of animals would therefore also be smaller, and we would have a better chance to observe a difference between two groups. For the same number of points used, a grid (uniform random systematic samples) is more efficient than randomly placed points to generate estimates that can be used to document changes that may occur between groups.
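
The efficiency argument can be illustrated with a small simulation that uses the same number of points (25) for both ways of sampling. As before, the circular region, the reference area and all names are assumptions for illustration only.

```python
import math
import random
import statistics

SIDE = 5.0                                    # assumed side of the reference area
TRUE_AREA = 0.405 * SIDE * SIDE               # circle covering 40.5% of it
RADIUS = math.sqrt(TRUE_AREA / math.pi)


def inside(x, y):
    """True if the point (x, y) falls onto the centered circle."""
    return (x - SIDE / 2) ** 2 + (y - SIDE / 2) ** 2 <= RADIUS ** 2


def independent_estimate(n_points, rng):
    """Uniform random independent sample: every point is placed on its own."""
    hits = sum(inside(rng.uniform(0.0, SIDE), rng.uniform(0.0, SIDE))
               for _ in range(n_points))
    return hits / n_points * SIDE * SIDE


def systematic_estimate(points_per_side, rng):
    """Uniform random systematic sample: one random offset places the whole grid."""
    spacing = SIDE / points_per_side
    x0 = rng.uniform(0.0, spacing)
    y0 = rng.uniform(0.0, spacing)
    hits = sum(inside(x0 + i * spacing, y0 + j * spacing)
               for i in range(points_per_side)
               for j in range(points_per_side))
    return hits * spacing ** 2


rng = random.Random(3)
independent = [independent_estimate(25, rng) for _ in range(1000)]
systematic = [systematic_estimate(5, rng) for _ in range(1000)]

sd_independent = statistics.stdev(independent)
sd_systematic = statistics.stdev(systematic)
print(f"independent: SD {sd_independent:.2f}, range {min(independent):.1f} to {max(independent):.1f}")
print(f"systematic:  SD {sd_systematic:.2f}, range {min(systematic):.1f} to {max(systematic):.1f}")
```

For this smooth, compact region the systematic sample should show both a smaller standard deviation and a narrower range of estimates than the independent sample.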

How large is the region of interest illustrated in Figures 9, 11, and 12? By now we have three estimates: 48% (12/25th) of the reference area from the independent sample in Figure 9, and 36% (9/25th) and 40% (10/25th = 40/100th) from the two systematic samples in Figures 11 and 12. The region of interest in the figures actually occupies 40.5% of the reference area. Aside from efficiency, systematic samples have another advantage over independent samples. We can estimate the margin of error based on the number of points that have hit the region of interest. For the 36% estimate, the margin of error is ~11% of the estimate and, for the 40% estimate, it is ~3% of the estimate. How this margin of error is estimated will be described in Section 8.4. Note that the margin of error indeed decreased to less than a third by increasing sampling density by a factor of 4, that is, by counting 40 instead of 9 hits. To obtain the same increase in precision using a uniform independent sample of points, sampling density would have to be increased by a factor of about 16.

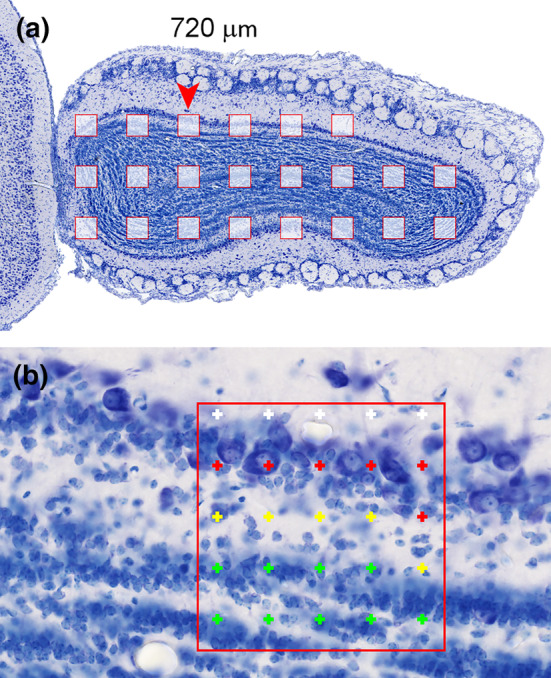

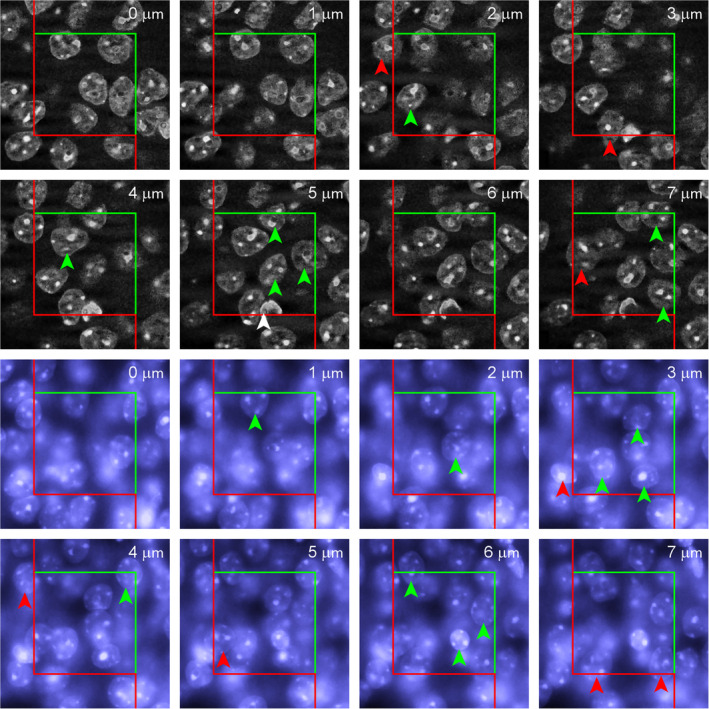

5.5. An example of a volume estimate

A full example of a Cavalieri estimate is useful to illustrate how much time it takes to generate an estimate once the material has been prepared, to provide a small dataset that can be used in the following sections of this review and to show how the observer impacts on the estimate.

Figure 13 provides images of a hamster olfactory bulb taken in a horizontal series of every 24th 20 μm thick, plastic‐embedded and Nissl‐stained section. The first section is placed at random in the first sampling interval (here the 12th section, or 240 μm after the first appearance of the olfactory bulb). We therefore have a uniform random systematic sample of sections. A counting grid, in which the points are 350 μm apart along the x‐ and y‐axes, is superimposed onto each of the images. The grid was positioned at random onto each section, and the sections are therefore probed at a uniform random systematic set of sampling sites. Note that the grid was not just shifted at random along the x‐ and y‐axis. It was also rotated at random. If a longish structure of interest maintains its orientation from section to section, a row of points may miss the structure in some sections while hitting the structure in other sections. Rotating the grid makes it unlikely that this will happen often. Rotating or not rotating the grid will not affect the mean of repeated estimates, but it can reduce their variability.

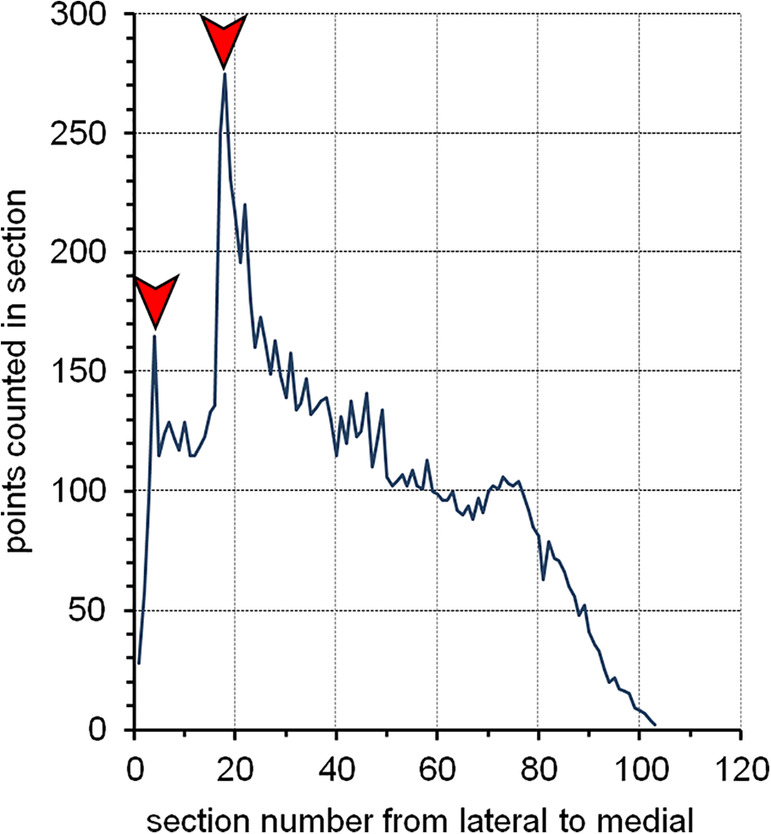

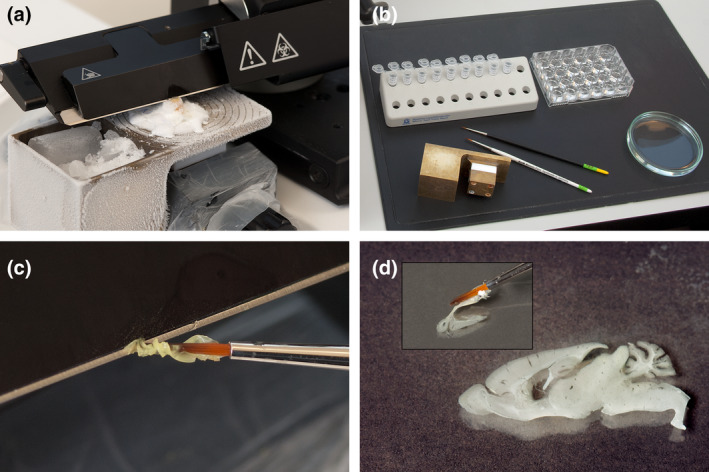

FIGURE 13.

Cavalieri estimator applied to the hamster olfactory bulb. Plastic sections (methacrylate), 20 μm thick, were Nissl‐stained (Giemsa). The region of interest is highlighted in the lower right image: granule cell layer—green, internal plexiform layer—yellow and mitral cell layer—red. Their combined volume is estimated. Any point falling on any one of them is counted. Sections are 480 μm apart from each other. Distances between the points in the grids are 350 μm along the x‐ and y‐axes [Color figure can be viewed at wileyonlinelibrary.com]
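
Placing a counting grid with a random shift and a random rotation, as described for this example, could be sketched as follows. This is only one possible way to do it and not the procedure used by any particular stereology software. The image size of 3500 μm × 2800 μm and the function name are hypothetical. Only the 350 μm point spacing is taken from the example.

```python
import math
import random


def random_rotated_grid(width, height, spacing, rng):
    """Return grid points inside a width x height image after a random shift and rotation."""
    theta = rng.uniform(0.0, math.pi / 2)   # a square grid repeats itself every 90 degrees
    dx = rng.uniform(0.0, spacing)          # random shift along x, within one grid cell
    dy = rng.uniform(0.0, spacing)          # random shift along y, within one grid cell
    cos_t, sin_t = math.cos(theta), math.sin(theta)
    # Build a lattice large enough to cover the image at any rotation angle.
    reach = int(math.hypot(width, height) / spacing) + 2
    points = []
    for i in range(-reach, reach + 1):
        for j in range(-reach, reach + 1):
            gx = i * spacing + dx
            gy = j * spacing + dy
            # Rotate the shifted lattice point about the image origin.
            x = gx * cos_t - gy * sin_t
            y = gx * sin_t + gy * cos_t
            if 0.0 <= x < width and 0.0 <= y < height:
                points.append((x, y))
    return points


WIDTH_UM, HEIGHT_UM, SPACING_UM = 3500.0, 2800.0, 350.0   # hypothetical image size
rng = random.Random(11)
grids = [random_rotated_grid(WIDTH_UM, HEIGHT_UM, SPACING_UM, rng) for _ in range(200)]
mean_points = sum(len(g) for g in grids) / len(grids)
expected_points = WIDTH_UM * HEIGHT_UM / SPACING_UM ** 2
print(f"mean points per grid: {mean_points:.1f}, expected: {expected_points:.1f}")
```

The number of points that land on an image varies a little from placement to placement, but on average it should equal the image area divided by the area associated with each point.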

The region of interest has been selected to be the combined granule cell, internal plexiform, and mitral cell layers (schematic in Figure 13, lower right). They are treated here as one structure, and any point falling onto any one of these three layers should be counted. We do not need to keep track of which layer the counts came from because we are only interested in their combined volume. To obtain the area estimates needed to estimate the volume, a point count is performed for each image. The upper right corners of the crosses were selected to represent the points, but any of the corners will do. To calculate a volume estimate, only the total number of points counted across all sections is needed. Counts are nevertheless recorded per section because they are needed to estimate the precision of the volume estimate (in Section 8). Recording counts per section is also necessary when the anatomical distribution of the volume (or number, length, or surface) has a scientific interest (e.g., Amrein et al., 2015; Buckmaster & Dudek, 1997; Chen & Buckmaster, 2005; Slomianka & West, 1987).

Along the dorsoventral axis (from 240 μm to 3120 μm), the following sequence of counts was obtained: 3, 16, 13, 11, 12, 9, and 2. The total number of points counted is 66. The area associated with each point is 350 μm × 350 μm = 122,500 μm². The total area is therefore 66 × 122,500 μm² = 8,085,000 μm². The sections are 480 μm apart. Using Cavalieri's theorem, we obtain a volume estimate of 8,085,000 μm² × 480 μm = 3,880,800,000 μm³ ≈ 3.9 mm³.
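
The arithmetic of this example can be written as a short Python sketch. The function and variable names are chosen here for illustration. The counts, the point spacing and the section interval are those given above.

```python
def cavalieri_volume(counts_per_section, point_spacing_um, section_interval_um):
    """Cavalieri estimate: total points x area per point x distance between sections."""
    area_per_point_um2 = point_spacing_um ** 2
    total_points = sum(counts_per_section)
    return total_points * area_per_point_um2 * section_interval_um


counts = [3, 16, 13, 11, 12, 9, 2]     # point counts per section, recorded section by section
point_spacing_um = 350                 # distance between grid points along x and y
section_interval_um = 24 * 20          # every 24th section of 20 um thickness = 480 um

volume_um3 = cavalieri_volume(counts, point_spacing_um, section_interval_um)
volume_mm3 = volume_um3 / 1e9          # 1 mm^3 = 10^9 um^3
print(f"{sum(counts)} points, {volume_um3:,} um^3, about {volume_mm3:.1f} mm^3")
```

Keeping the counts as a list per section, rather than only their sum, preserves the data needed later to assess the precision of the estimate.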

The counts quoted above and those of other observers are likely to vary a little even though the same images and points are used. This may be because of the use of a different corner. It may also be because the schematic is used differently to define the region of interest in the other sections. It may also be because criteria to count a point differ. What does the tissue in the corner of the cross need to look like to be counted? The Cavalieri estimator cannot generate a bias in its own right, but the data that it is fed with may vary with the interpretation of the material by the observer, that is, with observer bias. Observer bias cannot be avoided by any method involving an observer. It cannot even be avoided by many methods that do not involve an observer, for example, an automated, image analysis‐based assessment. The use of automated methods only transfers the observer bias to the person who at some time in the past calibrated the automated assessment or trained a learning algorithm. Hmm, at least someone else could be blamed for mistakes.

5.6. Outlines or point counts?

Areas are often measured by outlining a structure and the subsequent automated calculation of the area of the structure based on the outline. How well the calculated area corresponds to the actual area does, of course, depend on how well the outline is done. The complexity of the shapes and, therefore, the effort to define precise outlines may vary with the region of interest. In Figure 13, the region of interest was chosen to be the combined granule cell, internal plexiform and mitral cell layers because the border is rather clearly defined, which suits an exercise. It also makes outlining rather easy. For the two thinner layers, the internal plexiform layer and the mitral cell layer, outlining becomes tedious work. An error that has only a small effect on the combined volume of the three layers will have a much larger effect on the volumes of the thinner layers. One may have to go to higher magnification to generate better outlines for the thinner layers, only to realize that borders which look well defined at low magnifications often present increasingly complex outlines at higher magnifications. At one point, it becomes the observer's decision to accept possible errors because a precise border simply cannot be seen or because the outline needs to be approximated because the border is too complex to be traced precisely with a reasonable effort. Point counting does not have this problem. The only decision that has to be made is whether the point falls onto the region of interest or not. The number of times that this decision will have to be made depends on the point density and the area of the region, but it does not depend on the complexity of the outline of the region of interest. Using outlines, an additional source of error may be associated with the calculation of areas based on the points that have been placed to define the outline. The area may, for example, be calculated for the polygon that is defined by the points of the outline (Figure 14a) or based on smooth lines that are approximated to the points (splines; Figure 14b,c). How well the area is estimated depends on how well the area fulfills the underlying assumptions of being a polygon or an area with smooth outlines. Although the resulting bias may become small with an increase in the number of points used to define the outline, it will always be there, and it may become significant (Bonilha, Kobayashi, Cendes, & Li, 2003).
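
The bias of a polygon-based area calculation can be illustrated with an idealized case. The sketch below assumes that the outline points are placed perfectly on the border of a circle of radius 1 and calculates the polygon area with the shoelace formula. Both the circular test shape and the choice of formula are assumptions for illustration; spline-based calculations and the software evaluated in the cited study are not covered.

```python
import math


def polygon_area(vertices):
    """Area of a simple polygon defined by its outline points (shoelace formula)."""
    total = 0.0
    n = len(vertices)
    for k in range(n):
        x1, y1 = vertices[k]
        x2, y2 = vertices[(k + 1) % n]   # wrap around to close the outline
        total += x1 * y2 - x2 * y1
    return abs(total) / 2.0


def circle_outline(radius, n_points):
    """Outline points placed exactly on the border of a circle (idealized tracing)."""
    return [(radius * math.cos(2 * math.pi * k / n_points),
             radius * math.sin(2 * math.pi * k / n_points))
            for k in range(n_points)]


true_area = math.pi * 1.0 ** 2
for n_points in (8, 16, 64, 256):
    area = polygon_area(circle_outline(1.0, n_points))
    relative_error = 100.0 * (area - true_area) / true_area
    print(f"{n_points:4d} outline points: relative error {relative_error:.3f}%")
```

For this convex shape, the polygon always lies inside the circle. The calculated area is therefore always too small, and the error shrinks with more outline points without ever reaching zero.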

FIGURE 14.