Abstract

Background

Despite the rapidly growing number of digital assessment tools for screening and diagnosing mental health disorders, little is known about their diagnostic accuracy.

Objective

The purpose of this systematic review and meta-analysis is to establish the diagnostic accuracy of question- and answer-based digital assessment tools for diagnosing a range of highly prevalent psychiatric conditions in the adult population.

Methods

The Preferred Reporting Items for Systematic Review and Meta-Analysis Protocols (PRISMA-P) will be used. The focus of the systematic review is guided by the population, intervention, comparator, and outcome framework (PICO). We will conduct a comprehensive systematic literature search of MEDLINE, PsycINFO, Embase, Web of Science Core Collection, Cochrane Library, Applied Social Sciences Index and Abstracts (ASSIA), and Cumulative Index to Nursing and Allied Health Literature (CINAHL) for appropriate articles published from January 1, 2005. Two authors will independently screen the titles and abstracts of identified references and select studies according to the eligibility criteria. Any inconsistencies will be discussed and resolved. The two authors will then extract data into a standardized form. Risk of bias will be assessed using the Quality Assessment of Diagnostic Accuracy Studies-2 (QUADAS-2) tool, and a descriptive analysis and meta-analysis will summarize the diagnostic accuracy of the identified digital assessment tools.

Results

The systematic review and meta-analysis commenced in November 2020, with findings expected by May 2021.

Conclusions

This systematic review and meta-analysis will summarize the diagnostic accuracy of question- and answer-based digital assessment tools. It will identify implications for clinical practice, areas for improvement, and directions for future research.

Trial Registration

PROSPERO International Prospective Register of Systematic Reviews CRD42020214724; https://www.crd.york.ac.uk/prospero/display_record.php?ID=CRD42020214724.

International Registered Report Identifier (IRRID)

DERR1-10.2196/25382

Keywords: diagnostic accuracy, digital mental health, digital questionnaire, meta-analysis, psychiatry, systematic review

Introduction

Mental health disorders represent the leading cause of disability worldwide, with over a third of the world’s population being affected by a mental health condition in their lifetime [1]. Despite the well-documented economic and global burdens of mental disorders and the wide range of existing evidence-based treatments, mental health conditions remain largely underdiagnosed or misdiagnosed and undertreated [2,3], even in high-income countries [4,5]. Critically, the challenges associated with identifying and treating mental health disorders are multifaceted and involve a combination of patient, provider, and system-level barriers. With increasing pressure on mental health care budgets and the rapidly growing burden of mental health disorders globally [6], prevention strategies and improvements in early identification are essential.

In this regard, digital technologies may offer an innovative and cost-effective way to improve and develop mental health care detection and diagnosis. In fact, digital assessment tools have the potential to support health care professionals in the recognition of mental health symptoms and patient-specific treatment needs. Furthermore, the use of digital technologies could help alleviate the load on the health care system by reducing the number of in-person appointments and providing patients with subclinical or mild mental health symptoms with self-help strategies and psychoeducation [7]. Digital solutions for psychiatry also have the potential to lessen some of the barriers associated with disclosing mental health difficulties in person, such as shyness and discomfort, as well as issues related to stigma and discrimination. Furthermore, such technologies can overcome geographical barriers to health seeking and treatment and can facilitate the engagement of conventionally hard-to-reach groups.

Studies have revealed the acceptability and efficacy of digital platforms for improving the reach, quality, and impact of mental health care [8], and patients have been found to value the ease of access and empowerment that can be obtained via the use of a digital platform [9]. Importantly, research has demonstrated that patients have a strong interest in using digital technologies to help monitor their mental health [10,11] and are more likely to report severe symptoms on technology platforms than in a face-to-face meeting with a health care professional [11]. Despite the benefits and potential identified by global and national organizations, such as the World Health Organization (WHO), the National Health Service (NHS), and the US Department of Health and Human Services [12], the implementation of these technologies in public and private mental health care services has been slow.

This may be, in part, due to resistance from medical professionals and public policy makers who may be unaware of how to best integrate the technologies into standard care practices. An area that has received less resistance is that of the digitalization of psychiatric questionnaires, with studies demonstrating comparable interformat reliability relative to traditional pen-and-paper questionnaires [13,14]. While the digitalization of existing psychiatric questionnaires is ongoing, the development of more sophisticated question- and answer-based digital solutions for psychiatry, including the use of audio and video [15,16] and personalized user journeys via dynamic question selection [17], represents a promising ground for further innovation.

Critically, while digital psychiatric questionnaires and other technology-based tools are likely to play an important role in the future of mental health care, little attention and effort have been put into establishing their diagnostic accuracy. To this end, there is a need for a comprehensive appraisal of the current state and diagnostic accuracy of digital solutions for screening and diagnosing mental health conditions. We aim to conduct a systematic review and meta-analysis of available question- and answer-based digital mental health tools for a range of psychiatric conditions in the adult population and to evaluate their diagnostic accuracy. Implications for clinical practice, policy making, development, and innovation will be provided. Additionally, potential routes for improving and facilitating blended care (ie, the combination of traditional and digital services) will be investigated, and directions for future research will be identified.

Methods

Overview

This review has been registered with the International Prospective Register of Systematic Reviews (PROSPERO; CRD42020214724). The protocol was developed to comply with the recommendations of the Preferred Reporting Items for Systematic Review and Meta-Analysis Protocols (PRISMA-P) [18]. In line with the PRISMA checklist recommendations, the focus of the systematic review is guided by the population, intervention, comparator, and outcome framework (PICO). This review will involve literature search, article selection, data extraction, quality appraisal, data analysis, meta-analysis, and data synthesis. Protocol amendments will be tracked and reported in the final publication.

Eligibility Criteria

We aim to conduct a systematic review and meta-analysis of available question- and answer-based digital mental health assessment tools for a range of psychiatric conditions in the adult population and to evaluate their diagnostic accuracy. To do this, the PICO framework described below will be used.

Population

The scope of this research includes a comprehensive range of highly prevalent psychiatric conditions that are typically diagnosed and treated in primary and/or secondary care settings (see Multimedia Appendix 1 for an overview of the lifetime prevalence and patient impact of the concerned conditions). The population will include adults who have been assessed for the presence of any of the following mental health conditions: mood/affective disorders (eg, bipolar disorder and depressive disorders/dysthymia), anxiety disorders (eg, generalized anxiety disorder, social anxiety disorder/social phobia, and panic disorder), trauma and stress-related disorders (eg, posttraumatic stress disorder, acute stress disorder, and adjustment disorder), neurodevelopmental disorders (eg, attention-deficit/hyperactivity disorder and autism spectrum disorders), eating disorders (eg, anorexia nervosa and bulimia nervosa), personality disorders (eg, borderline personality disorder and emotionally unstable personality disorder), substance-related disorders (eg, alcohol use disorder and substance use disorder), obsessive-compulsive disorder, insomnia, and schizophrenia. In consultation with a psychiatrist (SB) and given their relevance for the assessment of the above-listed psychiatric conditions, the following transdiagnostic symptom domains will also be included: self-harm, suicidality, and psychosis.

Studies will be included if the mean age of their participants falls within 18 to 65 years. The review will focus on both clinical and community-based samples of any gender, severity of mental health concern, ethnicity, and geographical location.

Intervention

Interventions of interest include question- and answer-based digital diagnostic tools completed by an individual that a health care professional might use to reach a mental health diagnosis. This can comprise pen-and-paper psychiatric questionnaires that have been digitalized and digital assessment tools that are intended to aid in clinical decision-making, including script-based automated conversational agents (ie, chatbots). The format of delivery can include computerized or web-based interventions delivered either offline or online via a computer, tablet, or smartphone.

Comparator

No specific comparator is required for studies to be included in this systematic review and meta-analysis.

Outcomes

The primary objectives are to identify the types of question- and answer-based digital assessment tools used in mental health care and to assess their diagnostic accuracy (eg, sensitivity and specificity).
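As a minimal sketch of the accuracy measures named above, the following shows how sensitivity, specificity, and the predictive values follow from a 2x2 table of index test results against the reference standard. The class name `ConfusionCounts`, the function `accuracy_measures`, and the counts are hypothetical and serve only as illustration; they are not taken from any included study.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ConfusionCounts:
    """2x2 counts of the index test against the reference standard."""
    tp: int  # index test positive, reference standard positive
    fp: int  # index test positive, reference standard negative
    fn: int  # index test negative, reference standard positive
    tn: int  # index test negative, reference standard negative


def accuracy_measures(c: ConfusionCounts) -> dict:
    """Return common diagnostic accuracy measures as proportions."""
    return {
        "sensitivity": c.tp / (c.tp + c.fn),  # true positive rate
        "specificity": c.tn / (c.tn + c.fp),  # true negative rate
        "ppv": c.tp / (c.tp + c.fp),          # positive predictive value
        "npv": c.tn / (c.tn + c.fn),          # negative predictive value
    }


# Hypothetical counts, for illustration only.
example = ConfusionCounts(tp=80, fp=30, fn=20, tn=170)
measures = accuracy_measures(example)
```

With these illustrative counts, sensitivity is 80/100 and specificity is 170/200. Unlike sensitivity and specificity, the predictive values depend on the prevalence of the condition in the sample.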

Study Design

We will consider any study design for the assessment.

Search Strategy

We will search the following databases: MEDLINE, PsycINFO, Embase, Web of Science Core Collection, Cochrane Library, Applied Social Sciences Index and Abstracts (ASSIA), and Cumulative Index to Nursing and Allied Health Literature (CINAHL). Other potentially eligible trials or publications will be identified by hand searching the reference lists of retrieved publications, systematic reviews, and meta-analyses. Grey literature (eg, unpublished theses, reports, and conference presentations) will also be identified by hand. Keywords and subject headings related to digital technologies, assessment tools, and diagnostic accuracy outcomes were identified in a preliminary scan of the literature and chosen in consultation with a medical librarian (EB). Key terms for the most common mental health conditions were taken from the Diagnostic and Statistical Manual of Mental Disorders (DSM)-5 and International Classification of Diseases (ICD)-11 (or DSM-IV and ICD-10 for older publications) diagnostic manuals and chosen in consultation with a psychiatrist (SB). In addition to these, notable symptom domains, such as self-harm, suicidality, and psychosis, were included in the search terms on the basis of their relevance in psychiatric assessments. The search terms that will be included in this review are grouped into four themes and are presented in Table 1, with search strategies presented in Multimedia Appendix 2. For simplicity, while we will not specifically search for conditions, such as generalized anxiety disorder, separation anxiety disorder, and histrionic personality disorder, these will be captured by our broader search strategy terms (ie, “anxiety disorder” and “personality disorder”). If additional relevant keywords or subject headings are identified during any of the electronic searches, we will modify the electronic search strategies to incorporate these terms and document the changes.

Table 1. Search terms.

| Category | Keywords/subject headings (in the title or abstract) |
| --- | --- |
| Digital technology | “Application” OR “chatbot” OR “computer” OR “conversational agent” OR “device” OR “digital” OR “e-health” OR “e-mental health” OR “electronic” OR “internet” OR “mHealth” OR “m-health” OR “mobile” OR “online” OR “PC” OR “phone” OR “smart” OR “tablet” OR “telehealth” OR “telemedicine” OR “text messaging” OR “web” OR “algorithm” OR “software” |
| Assessment tool | “Assessment” OR “diagnostic” OR “mood diary” OR “PHQ” OR “PHQ-9” OR “GAD” OR “GAD-7” OR “questionnaire” OR “screening” OR “tool” OR “test” OR “The Computerized Adaptive Test for Mental Health” OR “CAT-MH” OR “e-PASS” OR “WSQ” OR “TAPS” OR “Nview” OR “ada” OR “doctorlink” OR “clinicom” |
| Mental health | “Depression” OR “major depressive disorder” OR “MDD” OR “dysthymia” OR “bipolar” OR “anxiety disorder” OR “generalised anxiety disorder” OR “generalized anxiety disorder” OR “GAD” OR “panic disorder” OR “social anxiety disorder” OR “social phobia” OR “attention-deficit/hyperactivity disorder” OR “attention deficit hyperactivity disorder” OR “ADHD” OR “autism spectrum disorders” OR “ASD” OR “insomnia” OR “eating disorders” OR “anorexia nervosa” OR “bulimia nervosa” OR “obsessive compulsive disorder” OR “OCD” OR “schizophrenia” OR “psychosis” OR “alcohol abuse” OR “alcohol addiction” OR “substance abuse” OR “substance addiction” OR “drug abuse” OR “drug addiction” OR “post-traumatic stress disorder” OR “PTSD” OR “acute stress disorder” OR “adjustment disorder” OR “personality disorder” OR “borderline personality disorder” OR “BPD” OR “emotionally unstable personality disorder” OR “EUPD” OR “self harm” OR “self-harm” OR “suicidality” |
| Diagnostic accuracy | “Accuracy” OR “sensitivity” OR “specificity” OR “receiver operating characteristic” OR “ROC” OR “area under the curve” OR “AUC” OR “AUROC” OR “positive predictive value” OR “PPV” OR “negative predictive value” OR “NPV” OR “precision” OR “recall” OR “true positive rate” OR “TPR” OR “true negative rate” OR “TNR” OR “agreement rate” OR “validity” |
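The four themes in Table 1 can be combined into a single Boolean query. The sketch below assumes that the OR-groups for the four themes are joined with AND; the database-specific strategies in Multimedia Appendix 2 may differ in syntax and field restrictions. The helper names `or_group` and `build_query` are hypothetical, and the term lists are abbreviated subsets of Table 1.

```python
def or_group(terms):
    """Join quoted terms with OR and wrap the group in parentheses."""
    return "(" + " OR ".join(f'"{t}"' for t in terms) + ")"


def build_query(themes):
    """AND together one OR-group per theme (assumed combination rule)."""
    return " AND ".join(or_group(terms) for terms in themes.values())


# Abbreviated subsets of the Table 1 terms, for illustration only.
themes = {
    "digital_technology": ["digital", "online", "chatbot"],
    "assessment_tool": ["questionnaire", "screening"],
    "mental_health": ["depression", "anxiety disorder"],
    "diagnostic_accuracy": ["sensitivity", "specificity"],
}
query = build_query(themes)
```

Keeping the themes in a dictionary makes it straightforward to add newly identified keywords to one theme and regenerate the query, which fits the plan to document any changes to the search strategies.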

Inclusion Criteria

Owing to the recent developments in the digitalization of existing psychiatric questionnaires and the rapid growth in digital assessment tools for the screening and diagnosis of mental health conditions, only studies published in the last 15 years (from January 2005) will be included. Studies that evaluate at least one question- and answer-based digital assessment tool to screen or diagnose one or more mental health conditions covered by this review will be included. Any gender, severity of mental health concern, ethnicity, and geographical location will be included. Any study design will be included.

Exclusion Criteria

Studies of digital assessment tools that are not exclusively question and answer based, such as blood tests, imaging techniques, monitoring tools, genome analysis, accelerometer devices, and wearables, will be excluded. Specific subgroups, such as pregnant women, refugees/asylum seekers, prisoners, and those in acute crisis/admitted to emergency services, will also be excluded. Studies on tools used to identify mental health disorders in physical illnesses (eg, cancer) will also be excluded. We will also exclude studies on somatoform disorders and specific phobias as these are less frequently diagnosed in primary care and rarely present in secondary care. In addition, studies on tools used to identify neuropsychiatric disorders (eg, dementias) or any disorders that are due to clinically confirmed temporary or permanent dysfunction of the brain are outside the scope of the current review. Studies on digital assessment tools used to predict the future risk of developing a mental health disorder will also be excluded.

Screening and Article Selection

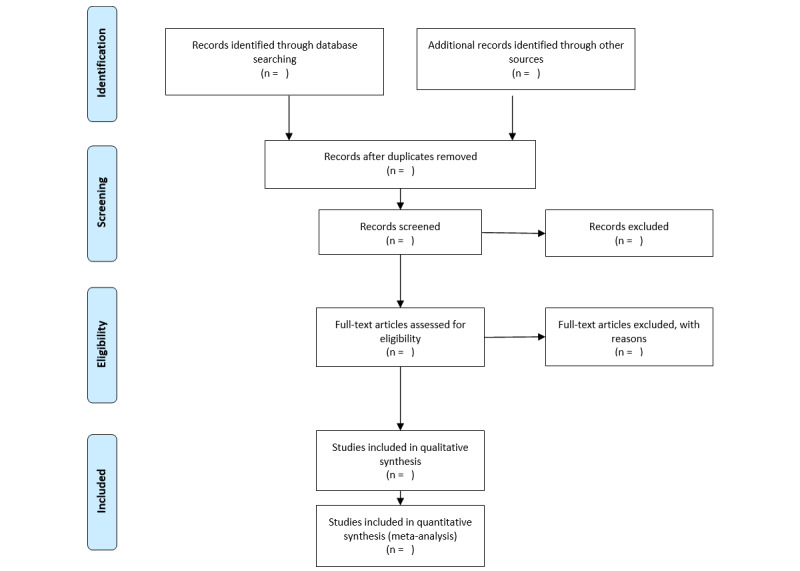

All articles identified from the database searches will be stored in the systematic review software Rayyan, which will be used to eliminate any duplicates. Two independent reviewers will screen the titles and abstracts of all the studies. To decide whether an article should be examined further, the reviewers will assess its eligibility against the inclusion criteria. Publications will be labelled as “exclude,” “include,” or “maybe.” For an article to be included, both reviewers must label it as “include.” An article will be excluded if both reviewers label it as “exclude.” Articles labelled as “maybe” and any articles on which the reviewers disagree will be discussed until a consensus is reached. All exclusions will be documented. The screening process will be piloted and tested by the reviewers on a subset of 100 studies, after which the review will continue. The full text of the “included” articles will then be examined by the two independent reviewers in order to determine final eligibility, with any disagreements being resolved by a third reviewer. All reasons for full-text exclusions will be recorded. A PRISMA flow diagram will be used to record the details of the screening and selection process so that the study can be reproduced (Figure 1).

Figure 1. PRISMA flow-chart template of the search and selection strategy.
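The labelling rule used during title and abstract screening can be sketched as a small decision function. This is an illustrative sketch only: the function name `screening_outcome` and the outcome label "discuss" are hypothetical, and in practice the labels are recorded in Rayyan rather than in code.

```python
VALID_LABELS = {"include", "exclude", "maybe"}


def screening_outcome(label_a: str, label_b: str) -> str:
    """Combine two reviewers' labels into one title/abstract decision.

    Both "include"  -> "include" (proceeds to full-text review)
    Both "exclude"  -> "exclude"
    Anything else   -> "discuss" (a "maybe" or a disagreement)
    """
    for label in (label_a, label_b):
        if label not in VALID_LABELS:
            raise ValueError(f"unknown label: {label!r}")
    if label_a == label_b == "include":
        return "include"
    if label_a == label_b == "exclude":
        return "exclude"
    return "discuss"


# Hypothetical pairs of reviewer labels, for illustration only.
decisions = [
    screening_outcome("include", "include"),
    screening_outcome("exclude", "include"),
    screening_outcome("maybe", "maybe"),
]
```

Treating every non-unanimous pair as "discuss" mirrors the requirement that articles labelled "maybe" and any disagreements are resolved by consensus.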

Data Extraction

Two independent reviewers will examine the full text of all the papers included in the final selection to extract the predetermined outcomes. Outcomes will be extracted into a predetermined standardized electronic data collation form, and they will include (1) publication details: author(s) and date; (2) study design and methodology: sample size(s), sample characteristics (mean age, proportions of males and females, ethnicity, and geographical location), recruitment and sampling procedures, main psychiatric diagnosis, and how psychiatric diagnosis was established/confirmed; (3) index test (ie, the digital assessment tool) and reference standard (ie, assessment by a psychiatrist and standardized structured and semistructured diagnostic interviews based on the DSM-5 and ICD-11 criteria, or DSM-IV and ICD-10 for older publications, such as the Composite International Diagnostic Interview [19] and the Structured Clinical Interview for DSM-5 Disorders [20]); and (4) outcomes of interest: measure of diagnostic accuracy.

Disagreements will be resolved by discussion, and if consensus cannot be reached, a third reviewer will be consulted.

Quality Appraisal: Risk of Bias and Applicability

Following the final selection of studies, two independent reviewers will assess risk of bias and applicability of all included studies using the Revised Tool for the Quality Assessment of Diagnostic Accuracy Studies (QUADAS-2 [21]). The checklist consists of the following four key domains: patient selection, index test, reference standard, and flow of participants through the study and timing of the index tests and reference standard. Each of these domains has a subdomain for risk of bias, while the first three have a subdomain for concerns regarding applicability. The subdomains about risk of bias include signaling questions to guide the overall judgement about whether a study is likely to be biased or not. Studies that are judged as “low” on all domains relating to bias or applicability are classed as having “low risk of bias” or “low concern regarding applicability.” On the other hand, studies judged as “high” or “unclear” in one or more domains may be deemed as “at risk of bias” or as having “concerns regarding applicability.”

In the event of a disagreement, the reviewers will discuss before consulting a third reviewer. A table will be created summarizing the risk of bias and applicability of all included studies.
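The overall judgement rule described above can be written down compactly. The following is a sketch under the assumption that each domain rating is recorded as "low", "high", or "unclear"; the domain keys and the function name `overall_judgement` are hypothetical and do not come from the QUADAS-2 tool itself.

```python
# Risk of bias is rated on all four domains; applicability on the first three.
BIAS_DOMAINS = (
    "patient_selection",
    "index_test",
    "reference_standard",
    "flow_and_timing",
)
APPLICABILITY_DOMAINS = BIAS_DOMAINS[:3]
VALID_RATINGS = {"low", "high", "unclear"}


def overall_judgement(ratings, domains, low_label, concern_label):
    """Return low_label only if every listed domain is rated "low"."""
    for domain in domains:
        if ratings[domain] not in VALID_RATINGS:
            raise ValueError(f"invalid rating for {domain}: {ratings[domain]!r}")
    if all(ratings[domain] == "low" for domain in domains):
        return low_label
    return concern_label


# Hypothetical ratings for one study, for illustration only.
bias_ratings = {
    "patient_selection": "low",
    "index_test": "unclear",
    "reference_standard": "low",
    "flow_and_timing": "low",
}
bias_summary = overall_judgement(
    bias_ratings, BIAS_DOMAINS, "low risk of bias", "at risk of bias"
)
```

The same function can be applied to applicability ratings by passing `APPLICABILITY_DOMAINS` together with the labels "low concern regarding applicability" and "concerns regarding applicability".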

Data Analysis and Synthesis

The data analytic strategy was developed in consultation with a statistician. We will conduct a descriptive analysis to summarize the extracted data, with studies grouped by target mental health condition (eg, bipolar disorder).

Where possible and in line with the recommendations in the Cochrane Handbook for Systematic Reviews of Diagnostic Test Accuracy [22], we will construct bivariate random-effects meta-analyses to determine the meta-analyzed sensitivity and specificity of each digital assessment tool and all digital assessment tools collectively per target mental health condition. Summary receiver operating characteristic (sROC) curves with accompanying 95% CIs for each digital assessment tool and for all digital assessment tools collectively per condition will be calculated using hierarchical sROC curve meta-analysis methods.
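For orientation, one common formulation of the between-study level of a bivariate random-effects model is shown below. The parameterization is an assumption, since the protocol does not specify it, and the symbols $Se_i$ and $Sp_i$ (the sensitivity and specificity of study $i$), $\mu$, $\sigma$, and $\rho$ are introduced here for illustration only.

$$
\begin{pmatrix} \operatorname{logit}(Se_i) \\ \operatorname{logit}(Sp_i) \end{pmatrix}
\sim \mathcal{N}\!\left(
\begin{pmatrix} \mu_{Se} \\ \mu_{Sp} \end{pmatrix},
\begin{pmatrix}
\sigma_{Se}^{2} & \rho\,\sigma_{Se}\sigma_{Sp} \\
\rho\,\sigma_{Se}\sigma_{Sp} & \sigma_{Sp}^{2}
\end{pmatrix}
\right)
$$

Under this formulation, the summary sensitivity and specificity are obtained by back-transforming the means, $\operatorname{logit}^{-1}(\mu_{Se})$ and $\operatorname{logit}^{-1}(\mu_{Sp})$, and the correlation $\rho$ captures the trade-off between sensitivity and specificity across studies (eg, due to differing thresholds).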

Between-study variance as a result of heterogeneity for each digital assessment tool and all digital assessment tools collectively per target mental health condition will be assessed using the Higgins I² statistic (0%-25%, might not be important; 25%-50%, might represent low heterogeneity; 50%-75%, might represent moderate heterogeneity; 75%-100%, high heterogeneity [23]). To explore potential sources of heterogeneity, meta-regression analyses will be conducted where possible, using QUADAS-related factors, such as participant selection, as predictive covariates. Further, if sufficient data are available, the effects of the following modifiers will be assessed: (1) reference standard (assessment by a psychiatrist, and standardized structured and semistructured diagnostic interviews based on the DSM-5 and ICD-11 criteria, or DSM-IV and ICD-10 for older publications, are considered the gold standard, but these can vary considerably; thus, separate analyses per reference standard will be conducted); (2) population (inpatient or noninpatient); (3) national context (Western or non-Western); (4) gender (male or female); and (5) mode of delivery (smartphone, tablet, or computer).
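As a sketch of how the heterogeneity statistic is computed, the following derives I² from Cochran's Q using inverse-variance weights and maps it to the bands listed above. The inputs are assumed to be per-study effect estimates on a suitable scale (eg, logit-transformed sensitivities) with their within-study variances; that choice of scale, the function names, and the assignment of boundary values (25%, 50%, 75%) to the higher band are assumptions, and the example numbers are hypothetical.

```python
def i_squared(effects, variances):
    """Higgins I^2 (in percent) from effects and within-study variances."""
    if len(effects) != len(variances) or len(effects) < 2:
        raise ValueError("need at least two studies with matching variances")
    weights = [1.0 / v for v in variances]  # inverse-variance weights
    pooled = sum(w * y for w, y in zip(weights, effects)) / sum(weights)
    # Cochran's Q: weighted squared deviations from the pooled estimate.
    q = sum(w * (y - pooled) ** 2 for w, y in zip(weights, effects))
    df = len(effects) - 1
    if q <= df:
        return 0.0  # I^2 is truncated at zero
    return 100.0 * (q - df) / q


def heterogeneity_band(i2):
    """Map I^2 to the bands used in this protocol (boundaries assumed)."""
    if i2 < 25:
        return "might not be important"
    if i2 < 50:
        return "low"
    if i2 < 75:
        return "moderate"
    return "high"


# Hypothetical effects and variances, for illustration only.
i2 = i_squared([0.0, 1.0], [0.1, 0.1])
band = heterogeneity_band(i2)
```

In this two-study illustration, Q is 5 on 1 degree of freedom, which gives an I² of 80% and places the result in the "high" band.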

Importantly, in the event of overlapping populations across studies, subgroup analyses (excluding the smaller studies with shared populations) will be conducted in order to quantify the impact of these on the overall results. Finally, publication bias will be explored by employing the Begg test [24] and Egger test [25] for each digital assessment tool and all digital assessment tools collectively per target mental health condition. Analyses will be conducted in R (R Foundation for Statistical Computing) in consultation with a statistician. Any amendments to the data analytic strategy will be tracked and reported in the final publication.
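The regression underlying the Egger test can be illustrated with a short sketch: each study's standard normal deviate (effect divided by its standard error) is regressed on its precision (1 divided by the standard error), and an intercept far from zero suggests funnel plot asymmetry. The planned analyses will be run in R; the Python function `egger_regression` below is a hypothetical illustration, the choice of effect measure (eg, the log diagnostic odds ratio) is an assumption, and the example values are invented.

```python
import math


def egger_regression(effects, std_errors):
    """Ordinary least squares fit of effect/SE on 1/SE.

    Returns (intercept, slope, intercept_se). The test statistic is
    intercept / intercept_se, compared against a t distribution with
    n - 2 degrees of freedom (the p-value is not computed here).
    """
    n = len(effects)
    if n != len(std_errors) or n < 3:
        raise ValueError("need at least three studies with matching SEs")
    x = [1.0 / se for se in std_errors]                  # precision
    y = [e / se for e, se in zip(effects, std_errors)]   # standard normal deviate
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    if sxx == 0:
        raise ValueError("standard errors must not all be equal")
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    sse = sum((yi - intercept - slope * xi) ** 2 for xi, yi in zip(x, y))
    intercept_se = math.sqrt(sse / (n - 2) * (1.0 / n + x_bar ** 2 / sxx))
    return intercept, slope, intercept_se


# Hypothetical effects and standard errors, for illustration only.
intercept, slope, intercept_se = egger_regression(
    effects=[1.9, 2.4, 2.1, 3.0, 2.8],
    std_errors=[0.20, 0.35, 0.25, 0.60, 0.50],
)
```

The function stops short of a p-value because the standard library has no t distribution; a statistics package would normally supply that final step.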

Results

The systematic review and meta-analysis commenced in November 2020. Findings are expected by May 2021. This work has been funded by Stanley Medical Research Institute (SMRI; grant number: 07R-1888) and Psyomics Ltd.

Discussion

A comprehensive systematic review of the literature and meta-analysis will provide a better understanding of the current state of digital assessment tools for mental health and their diagnostic accuracy. Based on the data, we will identify implications for clinical practice, policy making, development, and innovation. Additionally, potential routes for improving and facilitating blended care (ie, the combination of traditional and digital services) will be investigated, and directions for future research will be identified.

Acknowledgments

This review has been funded by Stanley Medical Research Institute (SMRI; grant number: 07R-1888) and Psyomics Ltd. We would like to thank the Department of Pure Mathematics and Mathematical Statistics at the University of Cambridge for assisting with the development of the data analytic strategy.

Abbreviations

- DSM: Diagnostic and Statistical Manual of Mental Disorders
- ICD: International Classification of Diseases
- PICO: population, intervention, comparator, and outcome
- PRISMA-P: Preferred Reporting Items for Systematic Review and Meta-Analysis Protocols
- QUADAS-2: Quality Assessment of Diagnostic Accuracy Studies-2
- sROC: summary receiver operating characteristic

Appendix

Multimedia Appendix 1: Prevalence and patient impact of the concerned psychiatric conditions.

Multimedia Appendix 2: Search strategies.

Footnotes

Authors' Contributions: NMK and TSS conceived the study topic and designed the review protocol. EJB developed the search strategies. NMK and TSS prepared the first draft of the protocol with revisions from EJB, BS, EF, JB, JT, and SB.

Conflicts of Interest: SB is a director of Psynova Neurotech Ltd and Psyomics Ltd. NMK, TSS, EF, and SB have financial interests in Psyomics Ltd. The other authors have no conflicts to declare.

References

1. Steel Z, Marnane C, Iranpour C, Chey T, Jackson JW, Patel V, Silove D. The global prevalence of common mental disorders: a systematic review and meta-analysis 1980-2013. Int J Epidemiol. 2014 Apr;43(2):476–93. doi: 10.1093/ije/dyu038. http://europepmc.org/abstract/MED/24648481.

2. Lipari RN, Hedden SL, Hughes A. The CBHSQ Report. Rockville, MD: Substance Abuse and Mental Health Services Administration (US); 2013. Substance Use and Mental Health Estimates from the 2013 National Survey on Drug Use and Health: Overview of Findings.

3. Chisholm D, Sweeny K, Sheehan P, Rasmussen B, Smit F, Cuijpers P, Saxena S. Scaling-up treatment of depression and anxiety: a global return on investment analysis. The Lancet Psychiatry. 2016 May;3(5):415–424. doi: 10.1016/S2215-0366(16)30024-4. https://linkinghub.elsevier.com/retrieve/pii/S2215-0366(16)30024-4.

4. Richards D, Richardson T, Timulak L, McElvaney J. The efficacy of internet-delivered treatment for generalized anxiety disorder: A systematic review and meta-analysis. Internet Interventions. 2015 Sep;2(3):272–282. doi: 10.1016/j.invent.2015.07.003.

5. Barth J, Munder T, Gerger H, Nüesch E, Trelle S, Znoj H, Jüni P, Cuijpers P. Comparative efficacy of seven psychotherapeutic interventions for patients with depression: a network meta-analysis. PLoS Med. 2013;10(5):e1001454. doi: 10.1371/journal.pmed.1001454. https://dx.plos.org/10.1371/journal.pmed.1001454.

6. Kakuma R, Minas H, van Ginneken N, Dal Poz MR, Desiraju K, Morris JE, Saxena S, Scheffler RM. Human resources for mental health care: current situation and strategies for action. The Lancet. 2011 Nov 05;378(9803):1654–1663. doi: 10.1016/S0140-6736(11)61093-3.

7. Newman MG, Szkodny LE, Llera SJ, Przeworski A. A review of technology-assisted self-help and minimal contact therapies for anxiety and depression: is human contact necessary for therapeutic efficacy? Clin Psychol Rev. 2011 Feb;31(1):89–103. doi: 10.1016/j.cpr.2010.09.008.

8. Naslund JA, Marsch LA, McHugo GJ, Bartels SJ. Emerging mHealth and eHealth interventions for serious mental illness: a review of the literature. J Ment Health. 2015 Aug 19;24(5):321–32. doi: 10.3109/09638237.2015.1019054. http://europepmc.org/abstract/MED/26017625.

9. Knowles SE, Toms G, Sanders C, Bee P, Lovell K, Rennick-Egglestone S, Coyle D, Kennedy CM, Littlewood E, Kessler D, Gilbody S, Bower P. Qualitative meta-synthesis of user experience of computerised therapy for depression and anxiety. PLoS One. 2014;9(1):e84323. doi: 10.1371/journal.pone.0084323. http://dx.plos.org/10.1371/journal.pone.0084323.

10. Torous J, Chan SR, Yee-Marie Tan S, Behrens J, Mathew I, Conrad EJ, Hinton L, Yellowlees P, Keshavan M. Patient Smartphone Ownership and Interest in Mobile Apps to Monitor Symptoms of Mental Health Conditions: A Survey in Four Geographically Distinct Psychiatric Clinics. JMIR Ment Health. 2014;1(1):e5. doi: 10.2196/mental.4004. https://mental.jmir.org/2014/1/e5/

11. Torous J, Staples P, Shanahan M, Lin C, Peck P, Keshavan M, Onnela J. Utilizing a Personal Smartphone Custom App to Assess the Patient Health Questionnaire-9 (PHQ-9) Depressive Symptoms in Patients With Major Depressive Disorder. JMIR Ment Health. 2015;2(1):e8. doi: 10.2196/mental.3889. https://mental.jmir.org/2015/1/e8/

12. East ML, Havard BC. Mental Health Mobile Apps: From Infusion to Diffusion in the Mental Health Social System. JMIR Ment Health. 2015;2(1):e10. doi: 10.2196/mental.3954. https://mental.jmir.org/2015/1/e10/

13. Alfonsson S, Maathz P, Hursti T. Interformat reliability of digital psychiatric self-report questionnaires: a systematic review. J Med Internet Res. 2014 Dec 03;16(12):e268. doi: 10.2196/jmir.3395. https://www.jmir.org/2014/12/e268/

14. van Ballegooijen W, Riper H, Cuijpers P, van Oppen P, Smit JH. Validation of online psychometric instruments for common mental health disorders: a systematic review. BMC Psychiatry. 2016 Feb 25;16:45. doi: 10.1186/s12888-016-0735-7. https://bmcpsychiatry.biomedcentral.com/articles/10.1186/s12888-016-0735-7.

15. Rogers KD, Young A, Lovell K, Campbell M, Scott PR, Kendal S. The British Sign Language versions of the Patient Health Questionnaire, the Generalized Anxiety Disorder 7-item Scale, and the Work and Social Adjustment Scale. J Deaf Stud Deaf Educ. 2013 Jan;18(1):110–22. doi: 10.1093/deafed/ens040. http://europepmc.org/abstract/MED/23197315.

16. van Ballegooijen W, Riper H, Donker T, Martin Abello K, Marks I, Cuijpers P. Single-item screening for agoraphobic symptoms: validation of a web-based audiovisual screening instrument. PLoS One. 2012;7(7):e38480. doi: 10.1371/journal.pone.0038480. https://dx.plos.org/10.1371/journal.pone.0038480.

17. Olmert T, Cooper JD, Han SYS, Barton-Owen G, Farrag L, Bell E, Friend LV, Ozcan S, Rustogi N, Preece RL, Eljasz P, Tomasik J, Cowell D, Bahn S. A Combined Digital and Biomarker Diagnostic Aid for Mood Disorders (the Delta Trial): Protocol for an Observational Study. JMIR Res Protoc. 2020 Aug 10;9(8):e18453. doi: 10.2196/18453. https://www.researchprotocols.org/2020/8/e18453/

18. Moher D, Shamseer L, Clarke M, Ghersi D, Liberati A, Petticrew M, Shekelle P, Stewart LA, PRISMA-P Group. Preferred reporting items for systematic review and meta-analysis protocols (PRISMA-P) 2015 statement. Syst Rev. 2015 Jan 01;4:1. doi: 10.1186/2046-4053-4-1. https://systematicreviewsjournal.biomedcentral.com/articles/10.1186/2046-4053-4-1.

19. Robins LN, Wing J, Wittchen HU, Helzer JE, Babor TF, Burke J, Farmer A, Jablenski A, Pickens R, Regier DA. The Composite International Diagnostic Interview. An epidemiologic Instrument suitable for use in conjunction with different diagnostic systems and in different cultures. Arch Gen Psychiatry. 1988 Dec;45(12):1069–77. doi: 10.1001/archpsyc.1988.01800360017003.

20. First M, Williams J, Karg R. Structured Clinical Interview for DSM-5 Disorders, Clinician Version (SCID-5-CV). Arlington, VA: American Psychiatric Association; 2016.

21. Whiting PF, Rutjes AWS, Westwood ME, Mallett S, Deeks JJ, Reitsma JB, Leeflang MMG, Sterne JAC, Bossuyt PMM, QUADAS-2 Group. QUADAS-2: a revised tool for the quality assessment of diagnostic accuracy studies. Ann Intern Med. 2011 Oct 18;155(8):529–36. doi: 10.7326/0003-4819-155-8-201110180-00009. https://www.acpjournals.org/doi/10.7326/0003-4819-155-8-201110180-00009?url_ver=Z39.88-2003&rfr_id=ori:rid:crossref.org&rfr_dat=cr_pub%3dpubmed.

22. Deeks JJ, Higgins JPT, Altman DG. Chapter 10: Analysing data and undertaking meta-analyses. In: Higgins JPT, Thomas J, Chandler J, Cumpston M, Li T, Page MJ, Welch VA, editors. Cochrane Handbook for Systematic Reviews of Interventions version 6.1 (updated September 2020). Chichester, UK: John Wiley & Sons; 2020.

23. Higgins JPT, Thompson SG, Deeks JJ, Altman DG. Measuring inconsistency in meta-analyses. BMJ. 2003 Sep 06;327(7414):557–60. doi: 10.1136/bmj.327.7414.557. http://europepmc.org/abstract/MED/12958120.

24. Begg CB, Mazumdar M. Operating characteristics of a rank correlation test for publication bias. Biometrics. 1994 Dec;50(4):1088–101.

25. Egger M, Smith GD, Schneider M, Minder C. Bias in meta-analysis detected by a simple, graphical test. BMJ. 1997 Sep 13;315(7109):629–34. doi: 10.1136/bmj.315.7109.629. http://europepmc.org/abstract/MED/9310563.
