Abstract

Hierarchical support vector regression (HSVR) models a function from data as a linear combination of SVR models at a range of scales, starting at a coarse scale and moving to finer scales as the hierarchy continues. In the original formulation of HSVR, there were no rules for choosing the depth of the model. In this paper, we observe in a number of models a phase transition in the training error—the error remains relatively constant as layers are added, until a critical scale is passed, at which point the training error drops close to zero and remains nearly constant for added layers. We introduce a method to predict this critical scale a priori with the prediction based on the support of either a Fourier transform of the data or the Dynamic Mode Decomposition (DMD) spectrum. This allows us to determine the required number of layers prior to training any models.

Keywords: support vector regression, fourier transform, dynamic mode decomposition, koopman operator

1. Introduction

Many of the machine learning algorithms require the correct choice of hyperparameters to give the best description of the given data. One of the most popular methods for choosing hyperparameters is doing grid-search. In grid search, the model is trained for some points in hyperparameter space which are called a grid and then the model with the lowest error on the validation set is chosen. Other methods use alternative optimization algorithms, such as genetic algorithms. All these methods include training model and calculating the error multiple times, which can be very expensive if the hyperparameter space is large.

The purpose of this paper is to develop a method for determining hyperparameters for Support Vector Regression (SVR) with Gaussian kernels from time-series data only. Unlike other approaches for choosing hyperparameters, our method identifies a set of hyperparameters without needing to train models and perform a grid search (or executing some other hyperparameter optimization algorithm), thereby bypassing a potentially costly step. The proposed method identifies the inherent scale and complexity of the data and adapts the SVR accordingly. In particular, we give a method for determining the scale of Gaussian kernel by connecting it to the most important frequencies of the signal, as determined by either a Fourier transform or Dynamic Mode Decomposition. Thus we leverage classical and generalized harmonic analysis to inform the choice of hyperparameters in modern machine learning algorithms. A pertinent question is why not just use FFT? The answer is that HSVR are better suited to model strongly locally varying data whereas, for FFT to be efficient, the data need to possess some symmetry such as space or time translation.

Previous Work

There are many different approaches in tuning SVR hyperparameters for reducing the generalization error. Some popular methods of estimating generalization error are Leave-one-out (LOO) score and k cross-validation score. They are easy to implement, but for calculating those measures more models have to be trained for each combination of hyperparameters that need to be tested. This can be prohibitively expensive, so other error estimates, which are easier to calculate, were developed. These methods include the Xi-Alpha bound [1], the generalized approximate cross-validation [2], the approximate span bound [3], the VC bound [3], the radius-margin bound [3] or the quality functional of the kernel [4].

For choosing hyperparameters, the simplest method is to perform grid search over the space of hyperparameters and then choose the ones with the lowest error estimation. However, grid search suffers from the curse of dimensionality, scaling exponentially with the dimension of the hyperparameter configuration space. Other work in hyperparameter optimization (HPO) seek to mitigate this problem. Random search samples the configuration space and can serve as a good baseline [5,6]. Bayesian optimization techniques to find optimal hyperparameters, often using Gaussian processes as surrogate functions, offers a more more computational efficient algorithm than grid search or random search requiring fewer attempts to find the optimal parameters [6]. However, Bayesian optimization in this form requires more computational resources [6].

There are also gradient-based approaches [7,8,9]. There are also several derivative-free optimization methods. For example, in [10], a pattern search methodology for hyperparameters, the parameter optimization method based on simulated annealing [11], Bayesian method based on MCMC for estimating kernel parameters [12], and also methods based on Genetic algorithms [13,14,15].

What all of these methods have in common is that they require training a model and evaluating the error for each parameter set that needs to be tried. This can be quite expensive, both in time and computational resources, if there are a lot of data or we want to train multiple models at the same time. They are iterative, which means that we usually do not know how many models will be trained before getting an estimation of the best hyperparameters. There are methods, such as early cutoff, that try to reduce these burdens, but fundamentally a model needs to be trained for each set of candidate hyperparameters.

In contrast, our approach gives a set of hypeparameters without ever computing a single model. Specifically, in this paper, we are interested in modeling multiscale signals with a linear combination of SVRs. Two fundamental questions are (1) how many SVR models are going to be used? and (2) what should the scale be for each SVR model. Clearly the dimension of the configuration space for the scale parameters is conditional on the number of layers. Our methods are built off fast spectral methods like fast Fourier transform and Dynamic Mode Decomposition and return both the number of layers (number of SVR models) and the scales for each SVR without ever having to train a model. Bypassing the expensive step of computing a model for each candidate set of hyperparameters can lead to dramatic savings in computational time and resources.

2. Methods

2.1. Support Vector Regression

Support Vector Machines (SVM) have been introduced in [16] as a method for classification. The goal was to set hyperplane between classes, where hyperplane is defined by linear combination of a subset of a training set, called Support Vectors. The problem is formulated as a quadratic optimization problem that is convex so it has a unique solution. The problem with SVM is that it can only find a linear boundary between classes which is often not possible. The trick is to map the training data to a higher dimensional space and then use kernel functions to represent the inner product of two data vectors projected onto this space. Then only inner products are needed for finding the best parameters. The advantage of this approach is that we can implicitly map data onto infinite dimensional space. One of the most used kernels is Gaussian function defined by

| (1) |

where x and are n-dimensional feature vectors, is the variance of the Gaussian function, and is the scale parameter that is usually specified as an input parameter to SVR toolboxes.

The SVM approach has been extended in [17] to regression problems and it is called Support Vector Regression (SVR). Let be the training set, where is vector in input space and desired output. The aim of SVR is to find a regression function :

| (2) |

where is the weight vector and is a mapping of the data points to a higher-dimensional space and b is threshold constant. and b can be found by solving following optimization problem:

| (3) |

| (4) |

| (5) |

| (6) |

The parameter determines width of tube around the regression curve and points inside it do not contribute to the loss function. Parameter C adjusts the trade off between the regression error and regularization, and are slack variables for relaxing approximation constraints and measure the distance of each data point from the tube. In practice, the dual problem is solved, which can be written as:

| (7) |

| (8) |

| (9) |

where are the dual variables and is the kernel matrix evaluated from a kernel function, where is the kernel function. Solving that problem, the regression function becomes:

| (10) |

Coefficients satisfy following conditions:

Data points for which is non-zero are called Support Vectors.

2.2. Dynamic Mode Decomposition (Dmd)

Dynamic Mode Decomposition (DMD) was introduced in [18] as a method for extracting dynamic information from flow fields that are either generated by numerical simulation or measured in physical experiment. Rowley et al. connected DMD with Koopman operator theory [19].

Let the data be expressed in a series of snapshots, given by matrix :

| (11) |

where stands for the i-th snapshot of the flow field. We assume there exists a linear mapping which relates each snapshot to next one ,

| (12) |

and that this mapping is approximately same during each sampling interval, so we approximately have

| (13) |

We assume that characteristics of the system can be described by the spectral information in . This information is extracted in a data-driven manner using . The idea is to use to construct an approximation of . In [18], this was done as follows. Define and . Let the singular value decomposition of be . Then the representation of a compression of is defined as

| (14) |

where is the Moore–Penrose pseudo-inverse of . Note that (14) is analytically equivalent to , the compression of to the subspace spanned by the columns of , if . Eigenvectors and eigenvalues of are approximations of eigenvectors and eigenvalues of the Koopman operator.

The Koopman operator is an infinite dimensional, linear operator K that acts on all scalar functions g on M as

| (15) |

where f is a dynamical system such that . Let and be eigenvalues and eigenfunctions, i.e.,

| (16) |

Let : be vector of any quantities of interest. If g lies in span of , then it can be written as

| (17) |

Then we can express as

| (18) |

The Koopman eigenvalues, characterize the temporal behaviour of the corresponding Koopman mode , the phase of determines its frequency, and the magnitude determines the growth rate.

2.3. Hierarchical Support Vector Regression

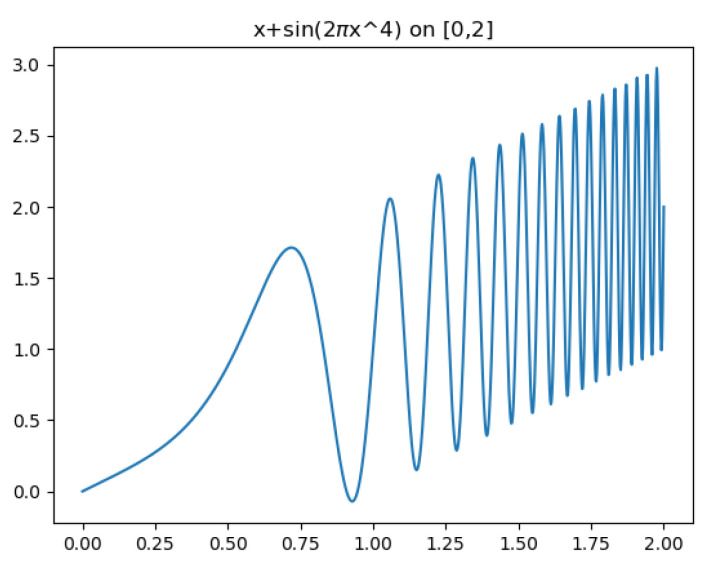

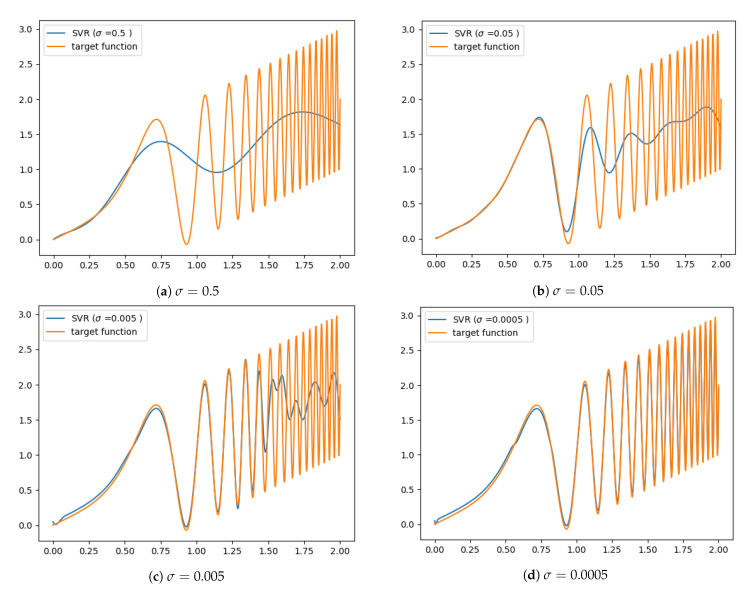

Classical SVR models which use kernels of a single scale have difficulties approximating multiscale signals. For example, consider the function , for , whose graph is given in Figure 1. This function has a continuum of scales. Figure 2 highlights the difficulties that classical single-scale SVR has in modeling such signals. Using too large a scale , the detailed behavior of the data set is not captured [20]; such a model may, however, be useful for a coarse-scale extrapolation outside the training set. Models employing a very small scale can capture the training set in detail. However, this makes them very sensitive to noise in the training and severely limits the model’s ability to generalize outside the training set [20].

Figure 1.

Multiscale example function: .

Figure 2.

SVR results with different scale of Gaussian kernel = 0.5, 0.05, 0.005, 0.0005. A large kernel provides smooth regression, but cannot reconstruct the details. A small kernel overfits, is unable to generalize, and can be sensitive to noise.

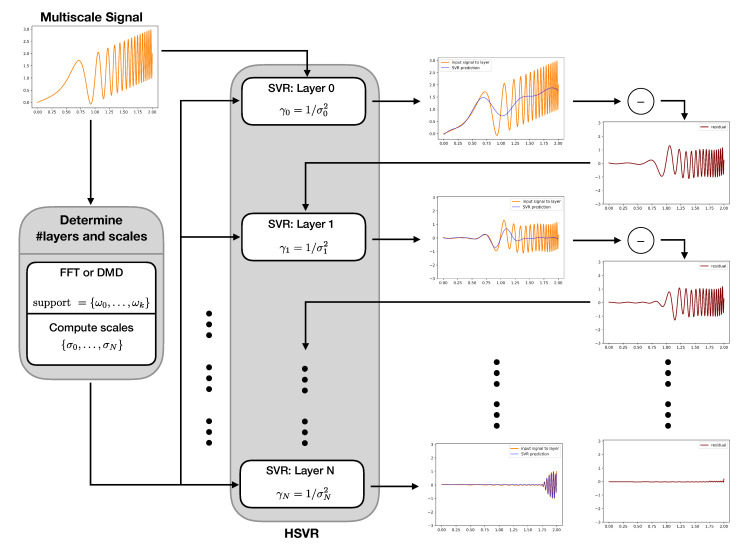

In [20], the authors introduced a multiscale variant of Support Vector Regression which they termed Hierarchical Support Vector Regression (HSVR). The idea behind HSVR is to train multiple SVR models, organized as a hierarchy of layers, each with different scale . The HSVR model is then a sum of those individual models, which we write as

| (19) |

where L is number of layers and is SVR model on layer ℓ with Gaussian kernel with parameter . Each SVR layer realizes a reconstruction of the target function at a certain scale. Training the HSVR model precedes from coarser scales to finer scales as follows. Let be specified. For , an SVR model is trained on the signal (the 0-th residual) and the residual is computed. We then proceed inductively for . Given the residual , train a model to approximate it and compute new residual . The -th residual is then

| (20) |

Graphically, the process looks like Figure 3.

Figure 3.

Flowchart of the HSVR modeling process. The input data is first used to compute the scales used for the HSVR model (see Algorithms 1, 2 and 4). At layer 0, an SVR model is trained at the coarsest scale . The residual is computed by taking the difference between the signal and the model. This residual is then modeled with an SVR model at the next coarsest scale . A new residual is computed by taking the difference of the old residual and the SVR model. This process is repeated until the pre-computed scales are exhausted.

The HSVR model contains a number of hyperparmeters that need to be specified, namely, , , the number of layers L to take, and the specific scales for those layers. In [20], the authors chose to use exponential decay relationship between the scales, such as . This, however, still leaves the critical choices of and L unspecified. In all of our experiments that follow, we choose to be 1 percent of the variation of the signal

| (21) |

For each layer, was specified as

| (22) |

which was the choice given in [20]. Additionally, the variable was chosen to be 2 so that . Python’s scikit-learn library [21] was used throughout this paper. Its implementation of SVR requires the input parameter , rather than from (1). These two parameters are related as . Thus, the equivalent decay rate of the input parameter is

| (23) |

In the next sections, we take up the task of efficiently determining the hyperparameters L and without the expensive step of performing grid search or training any models.

3. Predicting the Depth of Models

In this section, we will describe how error changes while training HSVR and we will provide methods of estimating the number of layers of such hierarchical model.

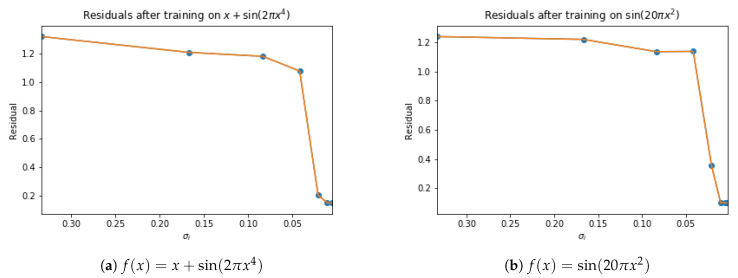

3.1. Phase Transition of the Training Error

For the rest of the paper, we have training data set and testing data set and the model , as in (19). Let for . For each layer, the HSVR (prediction) error is calculated as

| (24) |

where we have denoted . Therefore, denotes the maximum error between the true signal at the test points and an HSVR model with i layers. By examining the change of the values , it can be seen that there is a sudden drop in error, almost until tolerance , so that adding additional layers cannot reduce error any more. We will call that value the critical-sigma and denote it with . The drop in error can be seen in Figure 4.

Figure 4.

Residuals while training HSVR model with decreasing as shown on x-axis. Both HSVR models exhibit a phase transition in their approximation error.

3.2. Critical Scales: Intuition and the Fourier Transform

Since the SVR models are fitting the data with Gaussians, a heuristic for choosing the scale will be shown on basic examples of periodic function and then expanded on more general cases in next sections.

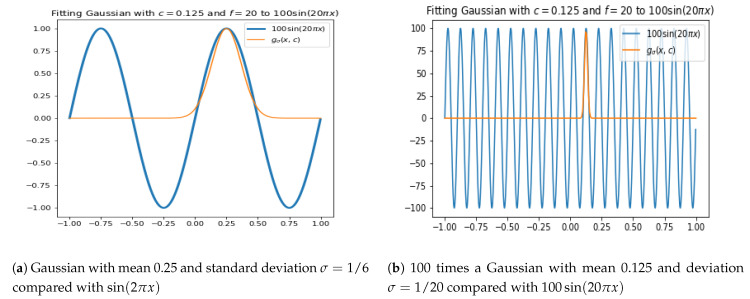

Let , where x is in meters and f is the frequency in cycles per meter. The frequency will be related to the scale, , of the Gaussian:

| (25) |

To relate the maximum frequency, f, to the scale, , we use the heuristic that we want 3 standard deviations of the gaussian to be half of the period. That is we want

| (26) |

Since , then

| (27) |

Figure 5 justifies this heuristic.

Figure 5.

Fitting Gaussians to half-periods of sinusoids using the heuristic (27).

3.3. Determining Scales with FFT

We assume that we can learn HSVR model with scales corresponding to important frequencies in the FFT of the signal. The assumption is that these will give an information how to train the model. If in the FFT of the signal, there are a lot of frequencies, we assume that we need more HSVR layers and each will learn the most dominant scale at this level.

Our assumption is that required number of scales in the HSVR model can be determined by the data. If there are a lot of frequencies in FFT of the signal related to relatively big coefficients, we can assume that signal is more challenging for single SVR to model it. As in [20], we refine the scales. Here we use exponential decay and use only frequencies for which corresponding coefficients in FFT are large enough in order to avoid numerical problems and adding insignificant frequencies. The procedure is summarized in Algorithm 1. The Filtering scales algorithm is summarized in Algorithm 2. The model building is outlined in Algorithm 3.

| Algorithm 1 Determining scales of HSVR model |

|

Input:, where are equidistant points in domain and values of function we want to model 1: 2: FFT frequencies of the signal 3: 4: # normalize coefficients respect to -norm 5: 6: 7: = sort scales in descending order 8: 9: return |

| Algorithm 2 Filtering scales |

|

Input: = vector of scales determined from FFT,

1: 2: 3: for i in range(1,n): 4: if : 5: 6: return: |

| Algorithm 3 Train HSVR |

|

Input: scales (output of Algorithm 1) 1: 2: 3: model = # comment: empty list to hold the SVR model at each layer 4: # comment: number of HSVR layers 5: for i in range(0, m): 6: = scales[i] 7: 8: = fitted SVR on with parameters , and tolerance 9: predictions = .predict(x) 10: 11: model.append() 12: return: model |

3.4. Determining Scales with Dynamic Mode Decomposition

Let , be training set. Output data is organized into Hankel matrix Y with M rows and N columns . We will extract relevant frequencies with Hankel DMD, described in [22], using the DMD_RRR described in [23]. The DMD_RRR algorithm returns the residuals (rez) which determine how accurately the eigenvalues are computed, the eigenvalues (), and the eigenvectors (Vtn). As before, suppose we have a signal

| (28) |

We map this scalar-valued functions into a higher-dimensional space by delay-embedding. We choose . The delay-embedding of the signal is the matrix

| (29) |

so that for

| (30) |

We define a generalized Hankel matrix as an rectangular matrix whose entries satisfy

| (31) |

for all indices such that and , where . In simpler terms, a generalized Hankel matrix is a rectangular matrix that is constant on anti-diagonals. A generalized Hankel matrix can also be thought of a submatrix of a larger, regular Hankel matrix. Clearly, the delay-embedding of the scalar signal, Equaion (29), is an example of a generalized Hankel matrix which is why it was denoted as H.

The input matrices for DMD algorithms will be and , where is the first columns of and is the last columns. Frequencies are calculated as follows:

| (32) |

i.e., we just scale the DMD eigenvalues so that they are on the unit circle and then extract frequency of the resulting complex exponential, . Let contain the values . These are directly analogous to the frequencies computed using FFT.

We replace the support of the FFT with the support of the DMD frequency as follows. We will take all the values of whose corresponding residual is less than some specified tolerance, tol, and whose corresponding mode’s norm is greater than some percentage, , of the total power of the modes. In other words, we only consider the frequencies which were calculated accurately enough and whose modes give a significant contribution to the signal; is analogous to the modulus of a FFT coefficient.

For each mode the energy is for which is minimal. Total power is defined as

| (33) |

Like in determining using FFT, the frequency support of is defined as

| (34) |

where is residual corresponding to the i-th mode. These considerations can be summarized in Algorithm 4.

| Algorithm 4 Estimating scales from data using Hankel DMD |

|

Input: time step: , time series f: , length of time series vector: N, tolerance for support: , , M: number of rows of Hankel matrix 1: H = Hankel matrix made from f with M rows and N-M columns 2: 3: 4: 5: for i = 0 to N − 1: 6: 7: 8: 9: 10: for i = 0 to N − 1: 11: if and 12: 13: return |

4. Results

In this section, results of our methods are provided. We demonstrate our methods on explicitly defined function, system of ODEs, and finally on vorticity data from a fluid mechanics simulations. In all cases,

| (35) |

and the error is calculated as in (24).

4.1. Explicitly Defined Functions

We model explicitly defined functions on . The dataset consists of 2001 equidistant points in that interval, where every other point is used for the training set. The error is calculated as the maximum absolute value of difference between prediction and actual value (Equation (24)). Results are summarized in Table 1. The parameter is specified as above. Each method predicts a certain number of layers required to push the model error close to . As seen in the table, both the FFT and DMD approaches produce models that give similar errors (near ). Although the DMD approach results in models with less layers in general, it fails for polynomials and the exponential function. This is because we normalize eigenvalues to the unit circle. An extension of this could use the modulus as well as in .

Table 1.

Results for explicitly defined functions, when using scales determined from FFT with decay 2 and given by (35), for DMD did not output frequencies different from 0 (entries denoted by * in the corresponding row).

| Function | Predicted # of Layers (FFT) |

Error (FFT) | Predicted # of Layers (DMD) |

Error (DMD) | |

|---|---|---|---|---|---|

| 0.02 | 1 | 0.02 | 1 | 0.02 | |

| 0.0199 | 1 | 0.021 | 1 | 0.02 | |

| 0.019 | 1 | 0.093 | 1 | 0.097 | |

| 1.99 | 1 | 2 | 1 | 2.01 | |

| 40 cos(2 | 0.8 | 1 | 0.8 | 1 | 0.8 |

| 100 cos(20 | 2 | 1 | 2.03 | 1 | 2 |

| sin(2 | 0.0199 | 5 | 0.02 | 1 | 0.02 |

| 0.14 | 2 | 0.14 | 1 | 8 | |

| 0.063 | 1 | 0.064 | * | * | |

| 0.03 | 7 | 0.037 | 1 | 0.034 | |

| 0.0397 | 2 | 0.0404 | 2 | 0.042 | |

| 0.02 | 2 | 0.021 | 2 | 0.022 | |

| 0.0199 | 1 | 0.022 | 2 | 0.022 | |

|

|

0.076 | 1 | 0.077 | 1 | 0.077 |

| 0.0187 | 3 | 0.02 | 2 | 0.02 | |

|

|

0.064 | 5 | 0.065 | 3 | 0.066 |

| 0.0198 | 5 | 0.02 | 1 | 0.02 |

4.2. ODE’s

We will demonstrate the methods on modeling solutions of the Lorenz system of ODE

| (36) |

| (37) |

| (38) |

with initial conditions , , , on [0, 10]. The system evolved solved for 500 equidistant time steps which was used for training set. A solution consisting of 2000 equidistant time steps is then used for test set. Each , , were regarded as separate signals to model. All hyperparameters are determined as before. Results are summarized in Table 2. For both systems, we can see that error drops nearly to , but the DMD approach results in models with less layers.

Table 2.

Results of training HSVR with scales used from FFT and DMD on Lorenz system.

| Function | Predicted # of Layers (FFT) |

Error (FFT) | Predicted # of Layers (DMD) |

Error (DMD) | |

|---|---|---|---|---|---|

| x(t) | 0.314 | 6 | 0.325 | 2 | 0.324 |

| y(t) | 0.408 | 6 | 0.469 | 2 | 0.469 |

| z(t) | 0.468 | 5 | 0.485 | 2 | 0.494 |

4.3. Vorticity Data

This data was provided by Georgia Institute of Technology [24,25]. It contains information about vorticity of a fluid field is some computational box. For each point in space, we want to model vorticity at that point as time evolves. Vorticity in every other time step is used for training and we test our models on rest of the time steps. We analyzed doubly periodic and non periodic data.

4.3.1. Doubly Periodic Data

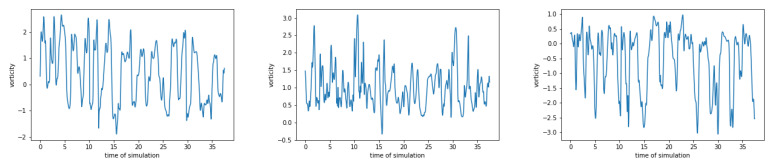

This data was generated assuming doubly periodic boundary conditions. The dataset contains information about the vorticity on equidistant space-grid on [0, 0.1016]2. There are in total 1201 snapshots with time step . This results in a tensor of dimensions . For each fixed point in space, there is a signal with 1201 time steps. A few examples of such signals are shown in Figure 6.

Figure 6.

Examples of vorticity at 3 different space-points for fluid simulations with doubly periodic boundary conditions.

For each of these signals, every other point in time is used for training the HSVR model, which results in total of models. Results are summarized in Table 3. For each model, the error is calculated as in (24) with , where L is number of layers. Since is given by (35), i.e., 1% of the range of the training data, the ratio error/ gives a measure of error in percentages of range of signal. A ratio of 2 would imply that the maximum error of the model over the test set was only 2% of the range of the test data; i.e.,

| (39) |

Table 3.

Results after training HSVR on doubly periodic vorticity data.

| Predicted # of Layers (FFT) |

Error (FFT) | Predicted # of Layers (DMD) |

Error (DMD) | ||

|---|---|---|---|---|---|

| min | 0.0199 | 6 | 0.038 | 1 | 0.0399 |

| mean | 0.0354 | 8 | 0.082 | 3 | 0.148 |

| max | 0.0488 | 9 | 0.284 | 5 | 2.463 |

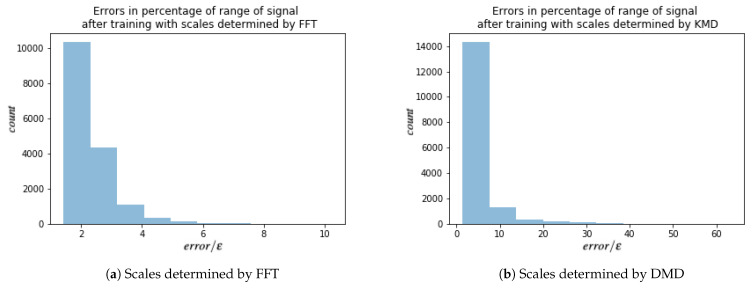

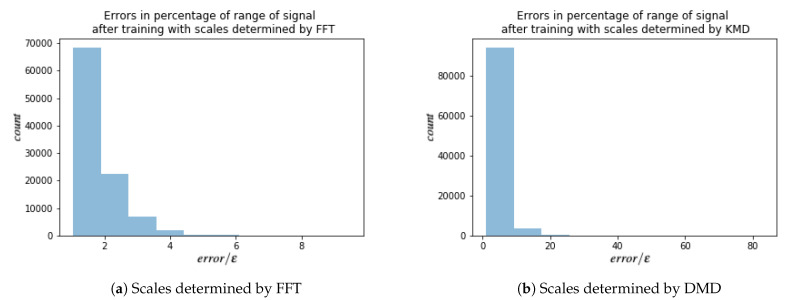

Histograms of these ratios for both FFT and DMD are shown in Figure 7. Most models produced on error close the threshold (a perfect match would give a ratio of 1).

Figure 7.

Histograms of for models trained on vorticity data with doubly periodic boundary conditions. is the model error given by (24) (with ) and is given by (35). There were total models trained. The count on the vertical axis is the number of models that fell into the corresponding bin.

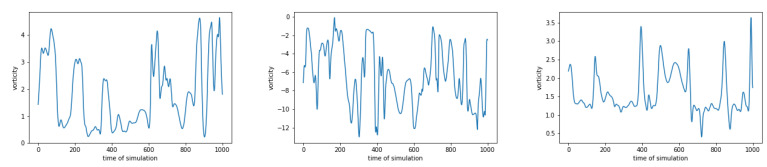

4.3.2. Non Periodic Data

The data contains information about vorticity on equidistant space-grid on with step . No assumption of periodicity of boundary condition was made. There are in total 1000 snapshots with time step ms. This results in tensor . Similar to the dataset with periodic boundary conditions, the HSVR model is trained for each fixed point in space, which results in models. The error’s and ’s are calculated as before. A few examples of such signals are in Figure 8. Results are summarized in Table 4 and a histogram of ratios error/ for FFT and DMD are shown in Figure 9. For both doubly periodic and non periodic data, we can see that a large majority of the models have a ratio between 1 and 2 which means the maximum error of a majority models is which is less than 2% of the range of the training data. For DMD approach, the majority of models have ratios less 10 which corresponds to a maximum error of , which is less than 10% of the range of the training data. From Table 3 and Table 4 we can see that DMD estimates less layers, but with bigger error after training.

Figure 8.

Examples of vorticity in 3 space-points for non periodic data.

Table 4.

Results after training HSVR on non periodic vorticity data.

| Predicted # of Layers (FFT) |

Error (FFT) | Predicted # of Layers (DMD) |

Error (DMD) | ||

|---|---|---|---|---|---|

| min | 0.019 | 5 | 0.0006 | 2 | 0.0006 |

| mean | 0.035 | 7 | 0.0975 | 3 | 0.197 |

| max | 0.048 | 9 | 0.667 | 7 | 6.137 |

Figure 9.

Histograms of for models trained on vorticity data with non-periodic boundary conditions. is the model error given by (24) (with ) and is given by (35). There were total models trained. The count on the vertical axis is the number of models that fell into the corresponding bin.

5. Discussion

Both approaches (FFT and DMD) to estimating the number of layers required by HSVR to push the modeling error close to the threshold show promise and allow computation of the HSVR depth a priori. While the DMD approach often predicted a smaller number of layers, usually with comparable error, our choice of unit circle normalization prevented it from performing well on functions without oscillation. Furthermore, the DMD algorithm itself comes from the dynamical systems community and requires that the domain of the signal (the x variables) be strictly ordered. For the explicitly defined functions we considered, there was a strict spatial ordering since the domains of the functions were intervals on the real line. For the vorticity data, the time signals at each spatial point were to be modeled and therefore the data points could be strictly ordered in time. For functions whose domain is multi-dimensional, say , there is no strict ordering and the DMD approach, as formulated here, would break down. The method based on the Fourier transform seems to have more promise in analyzing multivariable, multiscale signals, as it can compute multidimensional wave vectors.

A recent paper [26] also deals with the number of layers of a model and “scales”. However, in the mentioned paper, the authors are concerned with how far a signal or gradients will propagate through a network before dying. The scales they compute control how many layers the gradient or signal can propagate before they die. If the network is too deep the gradients go to zero before fully backpropagating through the network, resulting in an untrainable network. The result they compute is a fundamental characteristic of the network and is independent of the dataset. It does not matter what the input data is: constant, single scale, multiscale, etc, the scale parameters they compute are not affected.

Conversely, we are most focused on multiscale signals and tailoring the architecture to best represent such signals. The scales we compute are inherent properties of the dataset, not inherent properties of the network model. The length of the network adapts to the scales contained in the dataset.

It would be interesting in the future to combine the two methodologies, with the methods in [26] giving bounds on the number of layers and then adapting our techniques to analyze the inherent scales in the dataset. This could tell us whether a simple, fully, connected, feedforward network could adequately represent the signal or if something more complex was needed.

6. Conclusions

In this work we presented method for choosing hyperparameters for HSVR from time-series data only. We described two approaches, using FFT or DMD. We saw that estimating hyperaparameters with FFT results in a model with more layers and smaller error, whereas the DMD approach gave models that had less layers (and thus more efficient models).

Author Contributions

Conceptualization, R.M., M.F., Z.D. and I.M. (Igor Mezić); Data curation, I.M. (Iva Manojlović); Validation, I.M. (Iva Manojlović); Writing—original draft, I.M. (Iva Manojlović); Writing—review & editing, R.M., M.F., Z.D. and I.M. (Igor Mezić). All authors have read and agreed to the published version of the manuscript.

Funding

This research was partially supported by the DARPA contract HR0011-18-9-0033, the Air Force Office of Scientific Research contract FA9550-17-C-0012, and the Croatian Science Foundation grant IP-2019-04-6268.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not available.

Conflicts of Interest

The authors declare no conflict of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript, or in the decision to publish the results.

Footnotes

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1.Joachims T. Ausgezeichnete Informatikdissertationen. Koellen Verlag; Bonn, Germany: 2002. [(accessed on 24 December 2020)]. The Maximum-Margin Approach to Learning Text Classifiers: Methods Theory, and Algorithms. Lecture Notes in Informatics (LNI) Available online: https://dl.gi.de/bitstream/handle/20.500.12116/4447/GI-Dissertations.02-6.pdf?sequence=1. [Google Scholar]

- 2.Wahba G. Support vector machines, reproducing kernel Hilbert spaces and the randomized GACV. Adv. Kernel-Methods-Support Vector Learn. 1999;6:69–87. [Google Scholar]

- 3.Vapnik V., Chapelle O. Bounds on error expectation for support vector machines. Neural Comput. 2000;12:2013–2036. doi: 10.1162/089976600300015042. [DOI] [PubMed] [Google Scholar]

- 4.Ong C.S., Smola A.J., Williamson R.C. Learning the kernel with hyperkernels. J. Mach. Learn. Res. 2005;6:1043–1071. [Google Scholar]

- 5.Hutter F., Kotthoff L., Vanschoren J., editors. Automated Machine Learning: Methods, Systems, Challenges. Springer International Publishing; Cham, Switzerland: 2019. Hyperparameter Optimization; pp. 3–33. [DOI] [Google Scholar]

- 6.Yu T., Zhu H. Hyper-Parameter Optimization: A Review of Algorithms and Applications. arXiv. 20202003.05689 [Google Scholar]

- 7.Chapelle O., Vapnik V., Bousquet O., Mukherjee S. Choosing multiple parameters for support vector machines. Mach. Learn. 2002;46:131–159. doi: 10.1023/A:1012450327387. [DOI] [Google Scholar]

- 8.Chung K.M., Kao W.C., Sun C.L., Wang L.L., Lin C.J. Radius margin bounds for support vector machines with the RBF kernel. Neural Comput. 2003;15:2643–2681. doi: 10.1162/089976603322385108. [DOI] [PubMed] [Google Scholar]

- 9.Gold C., Sollich P. Model selection for support vector machine classification. Neurocomputing. 2003;55:221–249. doi: 10.1016/S0925-2312(03)00375-8. [DOI] [Google Scholar]

- 10.Hooke R., Jeeves T.A. “Direct Search” Solution of Numerical and Statistical Problems. JACM. 1961;8:212–229. doi: 10.1145/321062.321069. [DOI] [Google Scholar]

- 11.Kirkpatrick S., Gelatt C.D., Vecchi M.P. Optimization by simulated annealing. Science. 1983;220:671–680. doi: 10.1126/science.220.4598.671. [DOI] [PubMed] [Google Scholar]

- 12.Mallick B.K., Ghosh D., Ghosh M. Bayesian classification of tumours by using gene expression data. J. R. Stat. Soc. Ser. B (Stat. Methodol.) 2005;67:219–234. doi: 10.1111/j.1467-9868.2005.00498.x. [DOI] [Google Scholar]

- 13.Friedrichs F., Igel C. Evolutionary tuning of multiple SVM parameters. Neurocomputing. 2005;64:107–117. doi: 10.1016/j.neucom.2004.11.022. [DOI] [Google Scholar]

- 14.Frohlich H., Chapelle O., Scholkopf B. Feature selection for support vector machines by means of genetic algorithm; Proceedings of the 15th IEEE International Conference on Tools with Artificial Intelligence; Sacramento, CA, USA. 5 November 2003; pp. 142–148. [Google Scholar]

- 15.Igel C. Multi-objective model selection for support vector machines; Proceedings of the International Conference on Evolutionary Multi-Criterion Optimization; Guanajuato, Mexico. 9–11 March 2005; Berlin/Heidelberg, Germany: Springer; 2005. pp. 534–546. [Google Scholar]

- 16.Cortes C., Vapnik V. Support-vector networks. Mach. Learn. 1995;20:273–297. doi: 10.1007/BF00994018. [DOI] [Google Scholar]

- 17.Smola A.J., Schölkopf B. A tutorial on support vector regression. Stat. Comput. 2004;14:199–222. doi: 10.1023/B:STCO.0000035301.49549.88. [DOI] [Google Scholar]

- 18.Schmid P.J. Dynamic mode decomposition of numerical and experimental data. J. Fluid Mech. 2010;656:5–28. doi: 10.1017/S0022112010001217. [DOI] [Google Scholar]

- 19.Rowley C.W., Mezić I., Bagheri S., Schlatter P., Henningson D.S. Spectral analysis of nonlinear flows. J. Fluid Mech. 2009;641:115–127. doi: 10.1017/S0022112009992059. [DOI] [Google Scholar]

- 20.Bellocchio F., Ferrari S., Piuri V., Borghese N.A. Hierarchical approach for multiscale support vector regression. IEEE Trans. Neural Netw. Learn. Syst. 2012;23:1448–1460. doi: 10.1109/TNNLS.2012.2205018. [DOI] [PubMed] [Google Scholar]

- 21.Pedregosa F., Varoquaux G., Gramfort A., Michel V., Thirion B., Grisel O., Blondel M., Prettenhofer P., Weiss R., Dubourg V., et al. Scikit-learn: Machine Learning in Python. J. Mach. Learn. Res. 2011;12:2825–2830. [Google Scholar]

- 22.Arbabi H., Mezić I. Ergodic theory, dynamic mode decomposition, and computation of spectral properties of the Koopman operator. SIAM J. Appl. Dyn. Syst. 2017;16:2096–2126. doi: 10.1137/17M1125236. [DOI] [Google Scholar]

- 23.Drmač Z., Mezić I., Mohr R. Data driven modal decompositions: Analysis and enhancements. SIAM J. Sci. Comput. 2018;40:A2253–A2285. doi: 10.1137/17M1144155. [DOI] [Google Scholar]

- 24.Tithof J., Suri B., Pallantla R.K., Grigoriev R.O., Schatz M.F. Bifurcations in quasi-two-dimensional Kolmogorov-like flow. J. Fluid Mech. 2017:837–866. doi: 10.1017/jfm.2017.553. [DOI] [Google Scholar]

- 25.Suri B., Tithof J., Mitchell R., Grigoriev R.O., Schatz M.F. Velocity profile in a two-layer Kolmogorov-like flow. Phys. Fluids. 2014;26:053601. doi: 10.1063/1.4873417. [DOI] [Google Scholar]

- 26.Schoenholz S.S., Gilmer J., Ganguli S., Sohl-Dickstein J. Deep Information Propagation. arXiv. 20171611.01232 [Google Scholar]

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.

Data Availability Statement

Not available.