Abstract

Information geometry has offered a way to formally study the efficacy of scientific models by quantifying the impact of model parameters on the predicted effects. However, there has been little formal investigation of causation in this framework, despite causal models being a fundamental part of science and explanation. Here, we introduce causal geometry, which formalizes not only how outcomes are impacted by parameters, but also how the parameters of a model can be intervened upon. Therefore, we introduce a geometric version of “effective information”—a known measure of the informativeness of a causal relationship. We show that it is given by the matching between the space of effects and the space of interventions, in the form of their geometric congruence. Therefore, given a fixed intervention capability, an effective causal model is one that is well matched to those interventions. This is a consequence of “causal emergence,” wherein macroscopic causal relationships may carry more information than “fundamental” microscopic ones. We thus argue that a coarse-grained model may, paradoxically, be more informative than the microscopic one, especially when it better matches the scale of accessible interventions—as we illustrate on toy examples.

Keywords: model selection, causality, sloppy models, information geometry, effective information

1. Introduction

Many complex real-world phenomena admit surprisingly simple descriptions, from the smallest of microphysics to aspects of economics [1,2,3]. While this is not seen as entirely coincidental, the precise reasons for this fortunate circumstance are not entirely understood [4,5]. Two complementary solutions may be sought: On the one hand, we may hypothesize that this is an objective property of nature, thereby looking for some mechanism common among complex systems that allows them to be well-described with only a few parameters [3,6]. On the other, we may guess that it is a subjective property of our perception and then try to formalize the process by which we find useful patterns in arbitrarily complex systems [5,7,8]. In the recent years, substantial progress has been made in developing both of these perspectives, grounded in information theory [9].

One compelling argument for the first hypothesis has been made from information geometry. The approach starts by associating with any given model a particular “model manifold,” whose geometric properties can tell us whether and which simplifications can be helpful [6,10]. It turns out that for many real-world models, this manifold is highly anisotropic, having a hierarchical hyper-ribbon structure [11]. This property, termed “sloppiness,” indicates that only a few of the many microscopic model parameters are actually important for the model predictions—thus allowing for model simplification [12,13]. While it is not yet clear how general this property is, sloppiness was empirically illustrated in a number of biochemical and physical models and argued for on some general grounds [6,14,15]. This way, sloppiness provides an explanation for how emergent simplicity may be an objective property of complex systems themselves.

The second perspective instead takes as its starting point the well-known aphorism that “all models are wrong, but some are useful” [7]. One way to see this is as a rejection of the reductionist notion that a “fundamental” microscopic description is the “correct” one, while all emergent system properties are derivatives from it [2,16]. Instead, if no model is seen as inherently best, then we can only compare models by their efficacy in predicting and controlling a given system. This amounts to finding an effective causal description of the system—such that distinct interventions causally translate to unique effects. In this perspective, simple descriptions may arise in part from our efforts to match the system description to how we interact with it [8,9].

Despite the fundamental role causality plays in science and its applications, its formal study has been scarce and elusive, until recently [17]. Especially in complex systems, even conceptually defining what it means for something to be the cause of an observed phenomenon may be surprisingly tricky [18], let alone empirically verifying this [19,20]. In particular, the way models are studied in sloppiness makes no distinction between parameters as causes of the measured data, or merely as its phenomenological descriptors (e.g., slope of a linear fit) or statistical correlates.

Consider for example some bacterial population, whose size at some time t depends on its growth rate . We cannot say that is the cause of a large population; it remains merely a descriptor of the exponential fit, until we introduce an additional ingredient: “intervention capabilities”—some (at least hypothetical) way in which we can control this parameter and observe its effects. We can formalize this by recasting our system as a Bayesian dependency network and defining the -operator [17]: , which isolates the effects of actively setting the parameter to value , regardless of any other confounding factors that might influence it (such as if were also affected by t). The role of the -operator is to distinguish the effect of a given intervention from that of all other possible interventions (or a lack thereof), thereby allowing a rigorous counter-factual definition of causality. This allows reliably disentangling causal dependencies from mere statistical ones [21]. See Appendix A for a worked out example explicitly illustrating how this distinction arises.

Building on this, Judea Pearl developed the “causal calculus” framework, providing a set of tools to reliably work with intricate causal structures [17]. Further combining causal calculus with information theory allowed rigorously quantifying the amount of Effective Information () in the causal structure of a given model [22]. In a phenomenon termed “causal emergence,” it was then pointed out that in some systems, a dimension-reduced description (such as a coarse-graining) may paradoxically carry more information about causation than the full microscopic model. This is because dimension reduction can sometimes yield a substantial reduction in noise and degeneracy [23,24].

In this work, we extend the causal emergence framework to continuous systems and show that in that context, it is naturally related to information geometry and sloppy models. This leads to a novel construction, which we term causal geometry, where finding the causally most informative model translates to a geometric matching between our intervention capabilities and the effects on system behaviors, both expressed as distance metrics on the model’s parameter space. This framework captures precisely how the inherent properties of the system’s behavior (its sloppiness structure) and their relation to its use context (matching to intervention capabilities) both play a role in optimal model selection, thereby reconciling the two above perspectives. This comes up because, on the one hand, intervention capabilities are never unlimited in their degree or fineness and perfection of control, and on the other, because the behaviors of open physical systems are never without noise. This helps to formalize how neither the “simplest” nor the most “fundamental” reductionist model may be universally seen as preferable.

We take a moment here to further clarify how this work fits within the current literature context. Besides sloppiness and causal emergence, there have been other approaches developed for optimal model selection [25,26]. For example, Bayesian inference allows choosing the optimal modeling level by maximizing the posterior likelihood over a predefined library of models [27]. In contrast, the sloppiness approach lacks a scalar quantity to be optimized for the best model and only provides a heuristic for reducing unnecessarily complex models [6]. To mitigate this, in [13], the authors optimized mutual information to find the set of coarse-grained parameters on sloppy manifolds that could reasonably be constrained by some limited experimental observations, thus selecting the optimal modeling level for the available data. The mathematical procedure thus carried out closely paralleled that developed earlier in the causal emergence for discrete systems [23,24]. In our work, we use this formal resemblance to understand the connection between these two distant fields: sloppy models and causal calculus. This allows giving a continuum formulation of causal emergence, as well as a novel local measure of causal optimality. On the other side, our work establishes the proper formal role of interventions and causality in sloppiness, potentially resolving a long-standing formal challenge around the non-covariance of metric eigenvalues [28] and showing that not only the hyper-ribbon manifold structure, but also its relation to intervention capabilities account for the emergence of simple models.

In Section 2, we define the Effective Information () for continuous models, which captures the amount of information in the model’s causal relationships. We illustrate it on a simple example (Section 2.1) and show how restricting the set of allowed interventions may sometimes, surprisingly, make the causal model more informative. Section 3 then introduces causal geometry. Specifically, it relates the continuous to information geometry, introducing a local geometric measure of causal structure, and providing a way to find the locally most effective model for a given set of intervention capabilities using the techniques of information geometry. We demonstrate our construction on another simple toy model in Section 4, showing how causal emergence can arise in our geometric formulation, subject to the given interventional and observational capabilities.

2. Effective Information in Continuous Systems

For the purposes of this work, we formalize a causal model as a set of input-output relations, or more precisely, a map from all possible interventions to the full description of all effects within the context of some system [24]. While the set of all hypothetically possible interventions on a given physical system is enormous and impractical to consider (involving arbitrary manipulations of every subatomic particle), the set of experimentally doable (or even considered) interventions for a given context always represent a much smaller bounded space , which we refer to here as “intervention capabilities.” Similarly, while an intervention will lead to uncountable microscopic physical effects, the space of specific effects of interest is much smaller and often happens to be closely related to the intervention capabilities. All causal models by definition use some such subset of possibilities, and it is common in the literature around causation to restrict the set of hypotheticals, or counterfactuals, within a causal model [29]. In this work, we will illustrate how finding the optimal causal model for a given system is about a matching between the system behavior and the intervention capabilities considered.

As the focus of this paper is on continuous systems, we consider and to be continuous spaces, with points and . To formally discuss the causal model of our system, we make use of the operator, as per Judea Pearl’s causal calculus [17]. This operator is defined for any doable intervention the experimenter is capable of either performing or modeling, allowing assessing its causal effects. This allows us to formally describe a causal model as a map:

| (1) |

where p is the probability density over effect space resulting from “doing” the intervention x. Note that this is distinct from in that the operator allows us to distinguish the correlation introduced by the causal relation from one due to a common cause .

The notion of causality is then formalized as a counterfactual: How does the effect of differ from the effect of doing anything else? This latter “null effect” may include interventions such as , but it can also include all other possible interventions: it is thus formally described by averaging together the effects of all considered intervention capabilities , giving the total “effect distribution”:

| (2) |

This way, to know precisely which effects causes, we can compare to . The distinguishability between these distributions may be captured with the Kullback–Leibler divergence , giving us the amount of information associated with the application of an individual intervention [23,30].

Averaging over all accessible interventions gives the information of the system’s entire causal structure, termed the total “effective information”:

| (3) |

Discrete versions of this effective information have been explored in Boolean networks [23] and graphs [31]. Note that the definition of here is identical to the mutual information between the uniform distribution over interventions and the resulting distribution over effects , so that: [22,24].

We proceed to illustrate with a simple example how the EI varies across families of simple physical systems. As such, we show how it may be used to select the systems that are in some sense “best controllable,” in that they best associate unique effects with unique interventions [32]. Additionally, this example will help us illustrate how the EI may sometimes allow us to identify a coarse-grained system description that is more informative than the full microscopic one—thus illustrating causal emergence [24].

2.1. Toy Example: Dimmer Switch

Consider a continuous dimmer switch controlling a light bulb, but with an arbitrary non-linear function , a “dimmer profile,” mapping from the switch setting to the light bulb brightness (Figure 1a). To quantify information about causation in continuous systems, we must carefully account for noise and errors in our inputs and outputs; else, infinite precision leads to infinite information. This is an issue for the application of all mutual information measures or their derivatives in deterministic continuous systems. Realistically, in operating a dimmer switch, any user will have certain “intervention error” on setting its value, as well as “effect error,” which can come either from intrinsic system noise or from extrinsic measurement error. To encode the effect error, we can replace the deterministic mapping with a probabilistic one —the normal distribution centered on and with standard deviation . While we could incorporate intervention error of setting into this probability distribution as well, it is instructive for later discussion and generality to keep it separate. The intervention error is thus similarly encoded by introducing a probabilistic mapping from the “do”-able interventions to the physical switch settings with some error , as . Here, we can think of the interventions as the “intended” switch settings, as in practice, we cannot set the switch position with infinite precision. Note that while we do not explicitly model any possible confounding factors here, we assume that these may be present and important, but are all taken care of by our use of the -operator. This ensures that only true causal relations, and not spurious correlations, are captured by the distributions p and q.

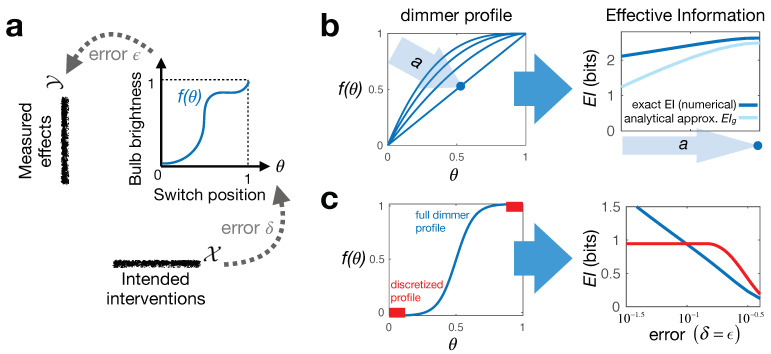

Figure 1.

Illustrating continuous Effective Information (EI) on a simple toy system. (a) shows the system construction: a dimmer switch with a particular “dimmer profile” . We can intervene on it by setting the switch up to error tolerance , while effects are similarly measured with error ; (b) shows that for uniform errors , out of the family of dimmer profiles parametrized by a (left), the linear profile gives the “best control,” i.e., has the highest EI (where dark blue—numerical EI calculation and light blue—approximation in Equation (4)); (c) illustrates how for two other dimmer profiles (left), increasing error tolerances influence the EI (right, calculated numerically). The profile in red represents a discrete binary switch—which emerges if we restrict the interventions on the blue dimmer profile to only use “ends of run.” Crucially, such coarse-graining allows for an improved control of the light (higher EI) when errors are sufficiently large.

With this setup, we can now use Equation (3) to explicitly compute an EI for different dimmer profiles and see which is causally most informative (has the most distinguishable effects). To do this analytically for arbitrary , we must take the approximation that and are small compared to one (the range of interventions and effects) and compared to the scale of curvature of (such that ). In this limit, we have (for the derivation, see setup in Equation (6) below and Appendix B.1):

| (4) |

which echoes the form of the expression for entropy of a normal distribution. From this, we see variationally that, given the fixed end-points and , EI is maximized iff : a uniformly linear dimmer switch. We can check this numerically by computing the exact EI for several different choices of (Figure 1b).

A slightly more interesting version of this example is when our detector (eyes) perceives light brightness on a log, rather than linear, scale (Weber–Fechner law, [33]), in which case the effect of the error will be non-uniform: . If this error is bound to be sufficiently small everywhere, Equation (4) still holds, replacing only . Again, we can variationally show that here, EI is maximal iff is constant (up to fluctuations of magnitude ), giving the optimal dimming profile , with some constant . In reality, the lighting industry produces switches with many dimming profiles that depend on the application [34], so our approach can be seen as a principled way to optimize this choice.

Interestingly, restricting the accessible interventions can sometimes increase the amount of effective information if it increases the distinguishability, and therefore informativeness, of interventions. This is a form of causal emergence, wherein a higher level (coarsened) macroscopic model emerges as the more informative system description for modeling causation [24]. To give a particular example here, we compare the continuous dimmer profile shown in Figure 1c (left, blue) to its discrete restriction (left, red)—which corresponds to a simple binary switch. When the intervention and effect errors are small, the continuous switch gives more control opportunities and is thus preferable; its EI is larger than the 1 bit for the discrete switch. However, as we increase the errors, we see a crossover in the two EI values. In this regime, the errors are so large that the intermediate switch positions of the continuous profile become essentially useless and are “distracting” from the more useful endpoint settings. Formally, such causal emergence arises due to the averaging over the set of all interventions in Equation (3). Practically, it captures the intuition that building good causal models, as well as designing useful devices involve isolating only the most powerful control parameters out of all possible degrees of freedom [32].

3. Causal Geometry

Taking inspiration from information geometry, we can construct a more intuitive geometric expression for the EI [13]. For studying causal structures of models, this “geometric EI” we introduce may be viewed as a supplement to the usual EI in Equation (3). While the geometric EI corresponds to the EI in a particular limit, we suggest that it remains useful more generally as an alternative metric of causal efficacy: it captures a causal model’s informativeness like the EI does, but in a way that is local in a system’s parameter space. In this way, it frames causality not as a global counter-factual (comparing an intervention to all other interventions) [17], but as a local neighborhood counter-factual (comparing an intervention to nearby interventions). On the flip side, our construct provides a novel formulation of information geometry that allows it to explicitly account for the causal relations of a model. Moreover, we argue that this is necessary for formal consistency when working with the Fisher information matrix eigenvalues (namely, their covariant formulation; see Section 3.2) [28]. This suggests that model reduction based on these eigenvalues may not be made fully rigorous without explicitly accounting for causal relations in the model.

3.1. Construction

Section 2 described a causal model as a set of input-output relations between interventions and effects . Here, we investigate the relationship of such a causal model with the space of parameters that describe the underlying physical system. While these parameters need not necessarily have any direct physical meaning themselves, they are meant to give some abstract internal representation of the system—i.e., they mediate the mapping between interventions and effects. For example, while the notion of energy is merely an abstract concept, it provides a useful model to mediate between interventions such as “turning on the stove” and effects like “boiling water.” The goal of our construction here is to compare how well different physical models capture the causal structure of a system.

As we are focusing on continuous systems, we assume that our parameter space forms a smooth d-dimensional manifold, with parameters indexed by . Each accessible intervention then maps to some probability distribution over parameters , and each parameter in turn maps to a distribution over the observed effects . The causal relations we are interested in here are thus simply , but these are assumed to be embedded in some larger more complicated causal graph of additional confounding factors. These other possible hidden causes highlight the importance of using the -operator to isolate the causal relations in which we are interested (see Appendix A for a simple example). Our one assumption is that the parameter space is chosen to be a sufficiently complete description of the system that no causal link can pass from to directly without being reflected on .

To understand the role of various parameters , we can ask how much the effects change as we perturb from some set of parameters in some direction: from to . Using the Kullback–Leibler divergence and expanding it to leading order in , we get:

| (5) |

where summation over repeated indices is implied. This defines the Fisher information metric with . This introduces a distance metric on the parameter space , turning it into a Riemannian manifold, which we term the “effect manifold” (this is usually called simply the “model manifold” in the literature, but here, we want to distinguish it from the “intervention manifold,” introduced below). More precisely, it is usually defined as —the collection of all the effect distributions, or the image of the parameter space under the model mapping, with Equation (5) being the natural distance metric on this space [6,10,14].

Just as the mapping to effects defines the effect manifold , we can similarly construct an “intervention manifold” . For this, we use Bayes’ rule to invert the mapping from interventions to parameters , thus giving —the probability that a given parameter point was “activated” by an intervention x. The intervention manifold is thus defined as , with the corresponding Fisher information metric giving the distances on this space. With this, we can now summarize our construction:

| (6) |

Note that for Bayesian inversion in the last line, we used a uniform prior over the intervention space , which amounts to assuming that statistically, interventions are uniformly distributed over the entire considered space . Note that this is not a choice of convenience, but rather of conceptual necessity for correctly defining information in a causal model, as argued in [24]. The natural point-wise correspondence between the two manifolds then allows for a local comparison between the two geometries. Alternatively, we may simply think of the parameter space with two separate distance metrics on it, effect metric and intervention metric . With this setup, we can now define our “geometric” effective information:

| (7) |

| (8) |

Here, is the volume of the intervention manifold , which can be computed as . It quantifies the effective number of distinct interventions we can , and so, the first term in Equation (7) gives the maximal possible amount of information about the causation our model could have, if all interventions perfectly translated to effects. The second term then discounts this number according to how poorly the interventions actually overlap with effects: geometrically, the expression in Equation (8) quantifies the degree of matching between the metrics g and h at the point (here, 𝟙 stands for the identity matrix). This way, the loss term can be interpreted as a measure of “local mismatch” between interventions and effects at , quantifying how much information about causation is lost by our modeling choice. The average is then taken according to the intervention metric as: . Note that the expression in Equation (7) is identical to the approximation in Equation (4) for the setup in that example.

In Appendix B.2, we show that this expression for in Equation (7) can be derived as the approximation of the exact in Equation (3) when both the mappings are close to deterministic: and , for some functions and , with small errors and (which may be anisotropic and nonuniform). Outside of this regime, the and can differ. For instance, while is positive by definition, can easily become negative, especially if g is degenerate anywhere on the manifold. Second, while captures the informativeness and therefore effectiveness of a causal model globally, , and more specifically the landscape , can show us which local sectors of the parameter space are most and least causally effective. Finally, the global nature of the computation for the exact quickly makes it intractable, even numerically, for many continuous systems due to the proliferation of high-dimensional probability distributions—making the more practical choice in those settings.

3.2. Relation To Sloppiness

“Sloppiness” is the property empirically observed in many real-world models, when the eigenvalues of the Fisher information matrix take on a hierarchy of vastly varying values [6,11,14]. As such, parameter variations in the directions corresponding to the smallest eigenvalues will have negligible impact on the effects [14]. This leads to the hypothesis that we may effectively simplify our model by projecting out such directions, with little loss for the model’s descriptive power [12,13].

The trouble with this approach is that the components of the matrix , and hence its eigenvalues, depend on the particular choice of -coordinates on the effect manifold [6,28]. Since the parameters represent some conceptual abstraction of the physical system, they constitute an arbitrary choice. This means that for a given point of labeled by , we can always choose some coordinates in which locally, (an identity matrix), thus apparently breaking the above sloppiness structure. This issue is avoided in the literature by relying on the coordinate independent global properties of , namely its boundary structure [12].

Here, we show that by explicitly considering intervention capabilities, we can construct a local, but still coordinate independent sloppiness metric. This becomes possible since interventions give a second independent distance metric on [28]. The matrix product appearing in Equation (8) is then a linear transformation, and thus, its eigenvalues are coordinate independent. This way, to evaluate how sloppy a given causal model is, we suggest that it is more appropriate to study the eigenvalues of instead of those of g as is usually done [6]. If we then want to identify the directions in the parameter space that are locally least informative at a point , we first need to re-express the metric g in terms of the coordinates for which locally and then find the appropriate eigenvectors in these new coordinates.

From this perspective, we see that the usual discussion of sloppiness, which does not study interventions explicitly [6], may be said to implicitly assume that the intervention metric , meaning that all model parameters directly correspond to physically doable interventions. Moreover, this requires that with respect to the given coordinate choice, each parameter can be intervened upon with equal uniform precision—which fixes the particular choice of coordinates on the parameter space. As such, the coordinate-specific eigenvalues of the effect metric studied in information geometry, become physically meaningful in this special coordinate frame. In particular, our expression for the local mismatch in Equation (8) can here be expressed in terms of these eigenvalues as . Thus, locally, the directions with the smallest account for the largest contribution to the mismatch l. This recovers the standard intuition of sloppiness: we can best improve our model’s descriptive efficacy by projecting out the smallest- directions [12,35]. By seeing how this result arises in our framework, we thus point out that it formally relies on the implicit assumption of uniform intervention capabilities over all model parameters.

It is worth noting that in the construction in Equation (6), it may be possible to integrate out the parameters , giving directly the distribution of effects in terms of interventions (we assume that gives a complete description of our system in the sense that no causal links from to can bypass ). This way, the distribution gives an effect metric over the -space, which can directly quantify the amount of causal structure locally in our model. Nonetheless, the intervention metric and Equation (8) are still implicitly used here. This is because the space was constructed such that the intervention metric over it would be uniform everywhere. In turn, Equation (8) thus takes a particularly simple form in terms of . This illustrates that regardless of the parametrization we choose to describe our system, causal efficacy always arises as the matching between the effect metric and the intervention metric, per Equation (8)—which may or may not take on a simple form, and must be checked explicitly in each case. Furthermore, though this goes beyond the scope of this paper, we may imagine cases where the actual interventions form a complicated high-dimensional space that is harder to work with than the parameter space (just as the effect space is often more complex than ). In fact, this may be the typical scenario for real-world systems, where and represent arbitrarily detailed descriptions of the system’s context, while gives a manageable system abstraction.

4. Two-Dimensional Example

In order to illustrate our causal geometry framework explicitly and show how higher level descriptions can emerge within it, we use a simple toy model (based on the example considered in [12,13]).

Imagine an experimenter has a mixed population of two non-interacting bacterial species that they are treating with two different antibiotics. The experimenter’s measurements cannot distinguish between the bacteria, and so, they are monitoring only the total population size over time , where are the death rates of the two individual species. These death rates are determined by the two antibiotic concentrations the experimenter treats the system with , which are the possible interventions here. In the simplest case, each antibiotic will influence both species via some linear transformation A, such that .

This setup allows us to flesh-out the causal geometry construction and illustrate causal emergence here. Our main question is: When is this system best modeled microscopically, as the two independent species, and when does it behave more like a single homogeneous population, or something else [36]? To identify when higher scale models are more informative for the experimenter, we will calculate the geometric from Equation (7) for the full 2D model described above and then compare it to two separate 1D coarse-grained model descriptions, shown by the two red 1D sub-manifolds of the parameter space in Figure 2.

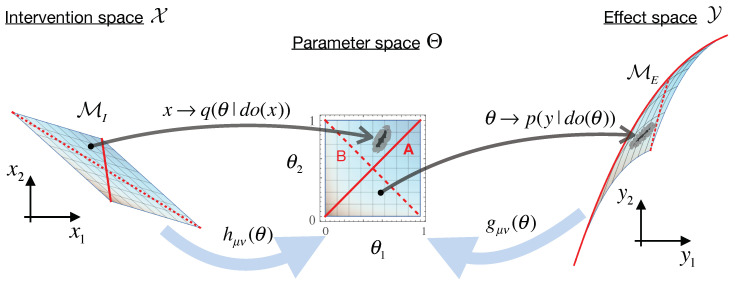

Figure 2.

An illustration of the causal geometry construction in Equation (6). The parameter space of our model gets two distinct geometric structures: the effect metric and the intervention metric . Here, a model is seen as a map that associates with each set of parameters , some distribution of possible measured effects y (right). As parameters may involve arbitrary abstractions and thus need not be directly controllable, we similarly associate them with practically doable interventions x (left). This way, our system description in terms of “mediates” between the interventions and resulting effects in the causal model.

We first specify the quantities for the construction in Equation (6). Our interventions x, having some uniform error tolerance , map to normal distributions over parameters as: , giving the Bayesian inverse probability , and hence the intervention metric . The effect space y is constructed by measuring the population size at several time-points, spaced out at intervals , such that the components of y are given by , with and error on each measurement (the initial conditions are thus always ). Thus, we have and effect metric . Figure 2 shows these mappings with for visual clarity, and we use for the calculations below, but all the qualitative behaviors remain the same for larger N. Figure 3 shows the resulting geometric (blue curves), computed via Equation (7) for varying values of the error tolerances and .

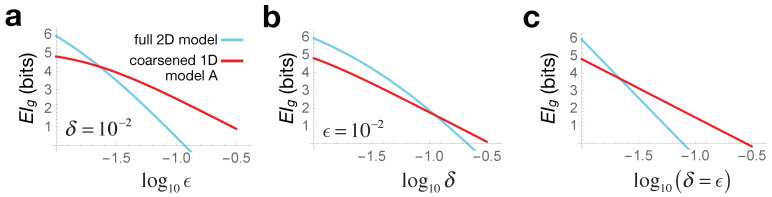

Figure 3.

Causal emergence from increasing errors for the toy model in Section 4. In all panels, the blue line shows the for the full 2D model, while red for the 1D sub-manifold A shown in Figure 2 (solid red line). In (a), we vary the effect error at fixed intervention error ; (b) varies intervention error at fixed effect error ; and (c) varies both together . In each case, we see a crossover where, with no change in system behavior, the coarse-grained 1D model becomes causally more informative when our intervention or effect errors become large.

We can similarly find the for any sub-manifold of our parameter space, which would lead to a coarse-grained causal model, with a correspondingly lower dimensional space of intervention capabilities. To do this, we identify the pull-back of the two metrics in the full parameter space, to the embedded sub-manifold, as follows. We define a 1D submanifold of as a parametric curve with the parameter . The pull-back effect metric on this 1D space with respect to will be the scalar , and similarly for intervention metric . For the 1D submanifold depicted by the solid red line in Figure 2, the resulting is plotted in red in Figure 3.

The crossover seen in Figure 3 thus illustrates causal emergence: for larger error values, the coarse-grained 1D description turns out to be more informative than the full 2D model. Since this coarse-graining corresponds to the case where the two bacterial species are seen as identical , we can say that at large errors, our bacterial colony is better modeled as a single homogeneous population. Crucially, this arises not from any change in system behavior, but merely from how we interact with it: either from what interventions we impart or from which effects we measure. Note also that when both the intervention and effect errors are scaled together , we see analytically from Equation (8) that is constant, and so:

| (9) |

which is also explicitly seen in Figure 3c. This indicates that, quite generally, we expect to see crossovers between geometric s of models with different d as we scale errors, with low-dimensional models being preferred at large noise. Since noise is ubiquitous in all real-world complex systems, this argument suggests why reductionist microscopic descriptions are rarely optimal from the perspective of informative interventions.

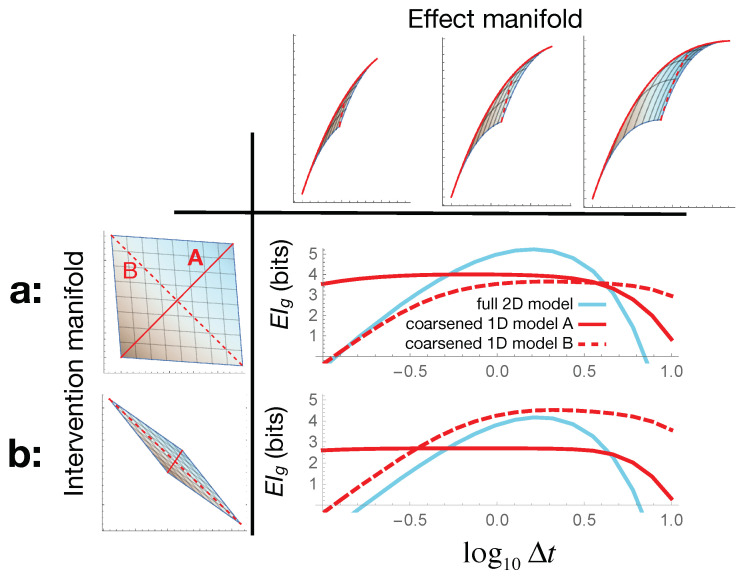

By carrying out similar calculations, in Figure 4, we explore how the optimal model choice depends on the time-scales we care about for the population dynamics (effects) and the antibiotics we are using (interventions), all at fixed errors . When the two antibiotics control the two bacterial species almost independently (, Figure 4a), we can identify three distinct regimes in the plot as we tune the measurement time-scale along the x-axis. If we only care about the population’s initial response to the treatment at early times, then we get a higher by modeling our colony as a single bacterial species. For intermediate times, the full 2D model has the higher , showing that in this regime, modeling both species independently is preferred. Finally, at late times, most of the population is dead, and the biggest remaining effect identifies how dissimilar their death rates were; the coarse-grained model given by the dashed red submanifold in Figure 2 () turns out to be more informative. In this regime, rather than viewing the population as either one or two independent bacterial species, we may think of it as a tightly coupled ecosystem of two competing species. Interestingly, such apparent coupling emerges here not from the underlying system dynamics, but from the optimal choice of coarse-grained description for the given effects of interest.

Figure 4.

The optimal model choice depends on both the effects we choose to measure and the intervention capabilities we have. Horizontally, we vary the time-scale on which we measure the bacterial population dynamics in our toy model (Section 4): the top row shows how this changes the shape of our effect manifold. (a) shows the results when our intervention capabilities are nearly in direct correspondence with the parameters . Here, the plot shows that varying takes us through three regimes: with submanifold A as the optimal model at early times, the full 2D model optimal at intermediate times, and submanifold B most informative at late times. (b) shows that this entire picture changes for a different set of intervention capabilities—illustrating that the appropriate model choice depends as much on the interventions as on the effects.

For a different set of intervention capabilities, where the antibiotics affect both species in a more interrelated way, with , this entire picture changes (Figure 4b). In particular, we get the scenario where the “fundamental” two-species model is never useful, and the unintuitive “two competing species” description is actually optimal at most time-scales. Note also that in all cases, for very long and very short times , the geometric drops below zero. While in this regime, the agreement with exact breaks down , it is also heuristically true that small , and so, the causal model is no longer very useful here. Even so, as seen in Figure 4, some coarse-graining of the model may still be effective even when the full microscopic description becomes useless.

5. Discussion

The world appears to agents and experimenters as having a certain scale and boundaries. For instance, solid objects are made of many loosely-connected atoms, yet in our everyday experience, we invariably view them as single units. While this may be intuitively understood in terms of the dictates of compression and memory storage [37], this work proposes a way to formalize precisely how such a coarse-grained modeling choice may be the correct (causally optimal) one. This is particularly true for a given set of intervention capabilities. In particular, we frame model selection as a geometric “matching” in the information space of the causal model to accessible interventions.

Intriguingly, this suggests that the correct choice of scientific modeling may not be merely a function of the correct understanding of the system, but also of the context that system is being used in or the capabilities of the experimenters. Thus, for example, if the forces we used to handle solid objects were far larger than inter-atomic attraction holding them together, then viewing objects as single units would no longer be a good model. This echoes one of the main ideas in “embodied cognition” for AI and psychology, which posits that in order for an agent to build accurate models of reality, it needs the ability to actively intervene in the world, not merely observe it [38].

This highlights a potentially important distinction between optimizing a model’s predictive efficacy and its causal efficacy. Many approaches to optimal model selection, such as sloppiness, focus on getting computationally efficient predictions from a few fundamental parameters. In contrast, optimizing causal efficacy looks for a model that best translates all interventions to unique effects, thus giving the user optimal power to control the system. Such a shift of motivation fundamentally changes our perspective on good scientific modeling: roughly, shifting the emphasis from prediction to control. While these two motivations may often go hand-in-hand, the question of which is the more fundamental may be important in distinguishing scenarios.

The causal geometry we introduce here is a natural extension of the information geometry framework [6,10], but now explicitly accounting for the causal structure of model construction. In our proposed formalism, a given model becomes associated with two distinct Riemannian manifolds, along with a mapping between them, one capturing the role of interventions and the other of the effects. The relative geometric matching between these two manifolds locally tells us about how causally informative the present model is and what coarse-graining may lead to a local improvement.

In this structure, the colloquial notion of model “sectors” (especially used in field theories to refer to various field content [39]) becomes associated with literal sectors of the manifolds, with their local geometries specifying the optimal or emergent descriptions of that sector. Such examples also highlight the importance of having a local way to quantify model optimality, as globally, the manifold may have a complex and piecewise structure not amenable to simplification. While both traditional [24] and information-geometric model-reduction [12] depend on the global model behavior over the entire span of possible interventions, the geometric introduced here is built by averaging inherently local causal efficacy, defined for each point in parameter space. We can further speculate that fundamentally, the geometric matching in may provide a novel way to quantify causality locally, where the counter-factual comparison is considered relative to the local neighborhood of interventions, rather than to all globally accessible ones [17].

We hope that causal geometry can contribute to further development in both formal principled methods for optimal model building in complex systems, as well as an abstract understanding of what it means to develop informative scientific theories.

Acknowledgments

Thanks Mark Transtrum for helpful discussions throughout the work on the manuscript, as well as review of the final draft, Mikhail Tikhonov for inspiration at the early stages of the project, and Thomas Berrueta for great comments on the final draft.

Appendix A. Example Illustrating the Do-Operator

Here, we give a simple pedagogic example that illustrates the use of the do-operator and its role in distinguishing causal relations from correlation. To highlight its role in the context of information geometry and sloppiness, we use a setup familiar from that literature [6]. Consider some bacterial population whose decay over time in unfavorable conditions we want to monitor: , where is the remaining fraction at time t of the original population at and is the death rate. In the context of information geometry, we might consider as a model parameter, while as the predicted data. However, we cannot say that “causes” the decay, as any such statement about causality requires distinguishing interventions and effects. Without this, our model is merely an exponential fit to the data , with labeling a compressed representation of the decay curve.

To have a causal, rather than a descriptive model, we need to introduce interventions that can influence this decay curve (and hence its descriptor ). For example, this may be the concentration of some harmful chemical in the bacteria’s environment. For the simplicity of our example, imagine that we have a direct linear mapping from these concentrations x to the death rate: . Note that this mapping is a causal model, with the -operator being well-defined on the space of interventions: it prescribes actively setting the chemical concentrations, in spite of any other possible environmental causes and fluctuations. In this setting, we can show how causal dependencies can differ from statistical ones ; these will be distinct whenever there are any confounding factors present. For concreteness in our example, we can introduce temperature T as such a confounder: On the one hand, it might speed up bacteria’s life-cycle, and hence death rate such that . On the other, it can denature the harmful chemical as , where is the reference concentration at .

If our model is specified explicitly, as is being done here, then all the causal relations are a priori known, and no work is needed to distinguish causation from correlation. The -operator becomes important when we look at a more real-world setting where we can interact with a system, but do not know its underlying dynamics. From the perspective of sloppiness, this is referred to as data-driven manifold exploration. The -operator prescribes how we should collect our data to extract the correct causal relations.

First consider a “wrong” way we can explore the model manifold, which will yield only statistical dependencies . For this, we study the fluctuations of bacterial population and habitat in their natural environment (specifically looking at and x). We can imagine that these fluctuations are dominated by natural variation of the reference chemical concentration , with an additional influence from temperature fluctuations (where we defined the scale of T such that its fluctuations centered on zero). Since we only observe x, and not or T, we integrate these out to get:

| (A1) |

where we defined the x-dependent in the last line.

Now, to capture the correct causal relationships, we instead use the -operator to find , which prescribes actively setting the chemical concentration x to specific values, rather than passively waiting for these to be observed. This means that while temperature can still fluctuate as above , we do not defer to fluctuations of to produce specific values of x, but rather set these concentrations ourselves (e.g., in lab conditions). This removes confounding correlations, while T fluctuations now merely add uniform noise on :

| (A2) |

While the distributions resulting form the two above setups (Equations (A1) and (A2)) are clearly distinct, we want to take one further step to show that the information metrics they induce are also different. First, we note that the distributions , and so the “effect metric” (see Equation (6)), simply reflect how the space of decay curves is parametrized by the scalar . Thus, in this example, is not about causality, and so is fixed regardless of how we measure our data. We therefore expect this distinction to be captured entirely by the intervention metrics. To find these, according to the setup in Equation (6), we first need the Bayesian inverse , for which we must calculate the normalization . For the distribution in Equation (A2), this is one, and , thus giving the uniform intervention metric for the true causal dependences in the system. For Equation (A1), the integral for normalization cannot be done exactly, so we take the approximation that fluctuations of the confounding temperature variable T are small. With this, we find that , and thus, the “intervention” metric for the statistical dependencies is , where the average is taken according to the distribution . Notably, unlike above, here, varies with , giving a qualitatively different geometry than the true causal geometry for this example.

As such, we have shown in an explicit setup how the causal relations can differ from statistical ones , leading to distinct geometric structures on the model manifold, which may then produce different schemes for model simplification. In general, while we may not know all the confounding factors nor the effects they can have, we can simply rely on using the -operator to extract the true causal relations for us. For this reason, none of the examples presented in this work make any explicit reference to confounding factors, as these may be assumed to be plentiful and unknown. For further discussion on the role of confounding factors and the tools of causal calculus that help to work with complex causal graphs, see [17].

Appendix B. Deriving Geometric EI

Here, we will derive the expression for in Equation (7), and equivalently in Equation (4). We start from the definition of in Equation (3) and use the near-deterministic model approximation discussed in the main text, and below. In Appendix B.1, we go through the detailed derivation for the 1D case and then in Appendix B.2 overview the steps needed to generalize it to higher dimensions.

Appendix B.1. One-Dimensional Case

As mentioned in the main text, the expression for presented here only approximates the exact when the mappings from interventions to parameters and to effects are both nearly-deterministic. Explicitly, this means that we can express the probability distributions as Gaussians with small variances. Note that the variance can be different at different points and in the multi-dimensional case may be anisotropic—as long as it remains sufficiently small everywhere (to be clarified later). This way, we can specify the concrete expression for the construct in Equation (6):

| (A3) |

Note that in 1D, the metrics g and h become scalars, and denotes a Gaussian distribution in x, centered on and with standard deviation . Furthermore, we define and intervention errors as above, giving that —merely for convenience of notation later (as may depend on x). As such, we also assume and to be invertible.

We begin with the definition of from Equation (3), for which we must first calculate the distribution :

| (A4) |

We can evaluate this Gaussian integral in the limit of small and . Let us understand precisely how small these must be.

To work with the above integral, must be small enough that both and are Gaussian. For this, the second-order term in the Taylor expansion around must be negligible in all regions with substantial probability, giving the asymptotic assumption: . We can check that in this case, plugging this expansion back into from Equation (A3), we get now a Gaussian in : as desired. This then shows us that we expect to typically be within of , i.e., . This allows us to write the above asymptotic condition as for all . The exact same argument goes for the effect distribution and similarly gives the condition . As long as these two conditions hold for all , we can allow and to vary arbitrarily.

In this limit, the integration of the expression in Equation (A4) is straightforward to carry out, giving another Gaussian:

| (A5) |

where and , and we used the expressions for the two metrics in Equation (A3). Averaging this over the interventions and using the fact that is small, we can then find the effect distribution:

| (A6) |

where L is the size of the 1D intervention space , so that the uniform intervention distribution .

With these expressions, we can now calculate the . Since is small, the Gaussian for will ensure that is close to , and so to leading order, we replace here. Therefore:

| (A7) |

| (A8) |

where in the last line, we simply rearranged and substituted the expressions for the metrics and from Equation (A3). Here, we first see that Line (A7) reproduces the approximation we showed for the dimmer switch example, in Equation (4), for the setup there: , and . In general, by recognizing that here, the volume of the intervention space is , we finally see that the expression in Equation (A8) agrees with our main result presented in Equation (7) in the case of a 1D parameter space discussed here.

Appendix B.2. Multi-Dimensional Case

Generalizing the above derivation to the multi-dimensional case is straightforward and is mainly a matter of careful bookkeeping. The expressions in Equation (A3) become here:

| (A9) |

Here, index the various dimensions of the intervention space , —for dimensions of the parameter space and —the effects space . As we are dealing with general multi-dimensional geometric constructs, in this section, we are being careful to denote the contravariant vector components with upper indices, as in , and covariant components with lower indices, as in .

As in Equations (A4) and (A5) above, we can then compute the distribution over effects conditioned on interventions:

| (A10) |

where denotes the matrix with components and its matrix inverse (and similar for g and h). Here, we assume that both functions and are invertible, which means that the intervention and effect spaces and both have the same dimension as the parameter space : . This allows us to view the map as a change of coordinates, with a square Jacobian matrix , whose determinant in the first line of Equation (A10) is thus well-defined and may be usefully expressed as . Note also that to get the above result, we once again assume the distributions and to be nearly deterministic, meaning here that the matrices and E must be large, though the precise form of the assumption is messy here.

Averaging this result over the intervention space , we get:

| (A11) |

where we define the intervention-space volume in units of variance of , as: . Performing another such average over , with some algebra, similar as for Equation (A7), we can arrive at our result in Equation (7):

| (A12) |

Author Contributions

Conceptualization, P.C. and E.H.; formal analysis, P.C.; supervision, E.H.; visualization, P.C.; writing, original draft, P.C.; writing, review and editing, E.H. All authors read and agreed to the published version of the manuscript.

Funding

E.H. was funded by Army Research Office Grant W911NF2010243 and P.C. by ARO W911NF1810101 and the James S. McDonnell Foundation Scholar Grant 220020476.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in this study are available on request from the corresponding author.

Conflicts of Interest

The authors declare no conflict of interest.

Footnotes

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1.Hoel E.P. Wandering Towards a Goal. Springer; New York, NY, USA: 2018. Agent above, atom below: How agents causally emerge from their underlying microphysics; pp. 63–76. [Google Scholar]

- 2.Anderson P.W. More is different. Science. 1972;177:393–396. doi: 10.1126/science.177.4047.393. [DOI] [PubMed] [Google Scholar]

- 3.Solé R.V., Manrubia Cuevas S., Luque B., Delgado J., Bascompte J. Phase Transitions and Complex Systems: Simple, Nonlinear Models Capture Complex Systems at the Edge of Chaos. John Wiley Sons; Hoboken, NJ, USA: 1996. [Google Scholar]

- 4.Zenil H., Soler-Toscano F., Joosten J.J. Empirical encounters with computational irreducibility and unpredictability. Minds Mach. 2012;22:149–165. doi: 10.1007/s11023-011-9262-y. [DOI] [Google Scholar]

- 5.Israeli N., Goldenfeld N. Computational irreducibility and the predictability of complex physical systems. Phys. Rev. Lett. 2004;92:074105. doi: 10.1103/PhysRevLett.92.074105. [DOI] [PubMed] [Google Scholar]

- 6.Transtrum M.K., Machta B.B., Brown K.S., Daniels B.C., Myers C.R., Sethna J.P. Perspective: Sloppiness and emergent theories in physics, biology, and beyond. J. Chem. Phys. 2015;143:07B201_1. doi: 10.1063/1.4923066. [DOI] [PubMed] [Google Scholar]

- 7.Box G.E. Science and statistics. J. Am. Stat. Assoc. 1976;71:791–799. doi: 10.1080/01621459.1976.10480949. [DOI] [Google Scholar]

- 8.Maiwald T., Hass H., Steiert B., Vanlier J., Engesser R., Raue A., Kipkeew F., Bock H.H., Kaschek D., Kreutz C., et al. Driving the model to its limit: Profile likelihood based model reduction. PLoS ONE. 2016;11:e0162366. doi: 10.1371/journal.pone.0162366. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9.MacKay D.J., Mac Kay D.J. Information Theory, Inference and Learning Algorithms. Cambridge University Press; Cambridge, UK: 2003. [Google Scholar]

- 10.Amari S.I. Information Geometry and Its Applications. Volume 194 Springer; Berlin/Heidelberg, Germany: 2016. [Google Scholar]

- 11.Gutenkunst R.N., Waterfall J.J., Casey F.P., Brown K.S., Myers C.R., Sethna J.P. Universally sloppy parameter sensitivities in systems biology models. PLoS Comput. Biol. 2007;3:e189. doi: 10.1371/journal.pcbi.0030189. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12.Transtrum M.K., Qiu P. Model reduction by manifold boundaries. Phys. Rev. Lett. 2014;113:098701. doi: 10.1103/PhysRevLett.113.098701. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13.Mattingly H.H., Transtrum M.K., Abbott M.C., Machta B.B. Maximizing the information learned from finite data selects a simple model. Proc. Natl. Acad. Sci. USA. 2018;115:1760–1765. doi: 10.1073/pnas.1715306115. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 14.Machta B.B., Chachra R., Transtrum M.K., Sethna J.P. Parameter space compression underlies emergent theories and predictive models. Science. 2013;342:604–607. doi: 10.1126/science.1238723. [DOI] [PubMed] [Google Scholar]

- 15.Raju A., Machta B.B., Sethna J.P. Information loss under coarse graining: A geometric approach. Phys. Rev. E. 2018;98:052112. doi: 10.1103/PhysRevE.98.052112. [DOI] [Google Scholar]

- 16.Laughlin R.B., Pines D. From the cover: The theory of everything. Proc. Natl. Acad. Sci. USA. 2000;97:28. doi: 10.1073/pnas.97.1.28. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 17.Pearl J. Causality. Cambridge University Press; Cambridge, UK: 2009. [Google Scholar]

- 18.Halpern J.Y. Actual Causality. MIT Press; Cambridge, MA, USA: 2016. [Google Scholar]

- 19.Sugihara G., May R., Ye H., Hsieh C.H., Deyle E., Fogarty M., Munch S. Detecting causality in complex ecosystems. Science. 2012;338:496–500. doi: 10.1126/science.1227079. [DOI] [PubMed] [Google Scholar]

- 20.Solvang H.K., Subbey S. An improved methodology for quantifying causality in complex ecological systems. PLoS ONE. 2019;14:e0208078. doi: 10.1371/journal.pone.0208078. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 21.Albantakis L., Tononi G. Causal composition: Structural differences among dynamically equivalent systems. Entropy. 2019;21:989. doi: 10.3390/e21100989. [DOI] [Google Scholar]

- 22.Tononi G., Sporns O. Measuring information integration. BMC Neurosci. 2003;4:31. doi: 10.1186/1471-2202-4-31. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 23.Hoel E.P., Albantakis L., Tononi G. Quantifying causal emergence shows that macro can beat micro. Proc. Natl. Acad. Sci. USA. 2013;110:19790–19795. doi: 10.1073/pnas.1314922110. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 24.Hoel E.P. When the map is better than the territory. Entropy. 2017;19:188. doi: 10.3390/e19050188. [DOI] [Google Scholar]

- 25.Gugercin S., Antoulas A.C. A Survey of Model Reduction by Balanced Truncation and Some New Results. Int. J. Control. 2004;77:748–766. doi: 10.1080/00207170410001713448. [DOI] [Google Scholar]

- 26.Huang H., Fairweather M., Griffiths J., Tomlin A., Brad R. A systematic lumping approach for the reduction of comprehensive kinetic models. Proc. Combust. Inst. 2005;30:1309–1316. doi: 10.1016/j.proci.2004.08.001. [DOI] [Google Scholar]

- 27.Daniels B.C., Nemenman I. Automated adaptive inference of phenomenological dynamical models. Nat. Commun. 2015;6:1–8. doi: 10.1038/ncomms9133. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 28.Dufresne E., Harrington H.A., Raman D.V. The geometry of sloppiness. arXiv. 2016 doi: 10.18409/jas.v9i1.64.1608.05679 [DOI] [Google Scholar]

- 29.Pearl J., Mackenzie D. The Book of Why: The New Science of Cause and Effect. Basic Books; New York, NY, USA: 2018. [Google Scholar]

- 30.Balduzzi D. Information, learning and falsification. arXiv. 20111110.3592 [Google Scholar]

- 31.Klein B., Hoel E. The emergence of informative higher scales in complex networks. Complexity. 2020;2020 doi: 10.1155/2020/8932526. [DOI] [Google Scholar]

- 32.Liu Y.Y., Slotine J.J., Barabási A.L. Controllability of complex networks. Nature. 2011;473:167–173. doi: 10.1038/nature10011. [DOI] [PubMed] [Google Scholar]

- 33.Portugal R., Svaiter B.F. Weber-Fechner law and the optimality of the logarithmic scale. Minds Mach. 2011;21:73–81. doi: 10.1007/s11023-010-9221-z. [DOI] [Google Scholar]

- 34.Hu W., Davis W. Dimming curve based on the detectability and acceptability of illuminance differences. Opt. Express. 2016;24:A885–A897. doi: 10.1364/OE.24.00A885. [DOI] [PubMed] [Google Scholar]

- 35.Transtrum M.K., Machta B.B., Sethna J.P. Geometry of nonlinear least squares with applications to sloppy models and optimization. Phys. Rev. E. 2011;83:036701. doi: 10.1103/PhysRevE.83.036701. [DOI] [PubMed] [Google Scholar]

- 36.Tikhonov M. Theoretical ecology without species. Bull. Am. Phys. Soc. 2016;61:1–13. [Google Scholar]

- 37.Blumer A., Ehrenfeucht A., Haussler D., Warmuth M.K. Occam’s razor. Inf. Process. Lett. 1987;24:377–380. doi: 10.1016/0020-0190(87)90114-1. [DOI] [Google Scholar]

- 38.Shapiro L. Embodied Cognition. Routledge; Abingdon, UK: 2019. [Google Scholar]

- 39.Srednicki M. Quantum Field Theory. Cambridge University Press; Cambridge, UK: 2007. [Google Scholar]

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.

Data Availability Statement

The data presented in this study are available on request from the corresponding author.