Abstract

Negative symptoms are a critical, but poorly understood, aspect of schizophrenia. Measurement of negative symptoms primarily relies on clinician ratings, an endeavor with established reliability and validity. There have been increasing attempts to digitally phenotype negative symptoms using objective biobehavioral technologies, eg, using computerized analysis of vocal, speech, facial, hand and other behaviors. Surprisingly, biobehavioral technologies and clinician ratings are only modestly inter-related, and findings from individual studies often do not replicate or are counterintuitive. In this article, we document and evaluate this lack of convergence in 4 case studies, in an archival dataset of 877 audio/video samples, and in the extant literature. We then explain this divergence in terms of “resolution”—a critical psychometric property in biomedical, engineering, and computational sciences defined as precision in distinguishing various aspects of a signal. We demonstrate how convergence between clinical ratings and biobehavioral data can be achieved by scaling data across various resolutions. Clinical ratings reflect an indispensable tool that integrates considerable information into actionable, yet “low resolution” ordinal ratings. This allows viewing of the “forest” of negative symptoms. Unfortunately, their resolution cannot be scaled or decomposed with sufficient precision to isolate the time, setting, and nature of negative symptoms for many purposes (ie, to see the “trees”). Biobehavioral measures afford precision for understanding when, where, and why negative symptoms emerge, though much work is needed to validate them. Digital phenotyping of negative symptoms can provide unprecedented opportunities for tracking, understanding, and treating them, but requires consideration of resolution.

Keywords: biobehavioral, computational, deficit, digital phenotyping, negative, psychiatry, schizophrenia

Introduction

Negative symptoms have been a key component of schizophrenia since its earliest conceptualization.1 Negative symptoms can be observed in premorbid, prodromal, first episode, and chronic phases of illness and predict a number of poor clinical outcomes.2–5 Given limited progress in developing effective treatments,6 there has been considerable empirical interest in better understanding the nature of negative symptoms.7 This research has primarily relied on a handful of psychometrically-supported clinical rating scales to quantify their severity,8 including the Scale for the Assessment of Negative Symptoms (SANS),9 Schedule for the Deficit Syndrome (SDS),10 Brief Negative Symptom Scale (BNSS),11 and Clinical Assessment Interview for Negative Symptoms (CAINS).12 From a psychometric perspective, clinical ratings are the “gold standard” for measuring negative symptoms, as they show adequate to excellent inter-rater and test-retest reliability and convergent/discriminant validity with various functional, cognitive, neurobiological and clinical measures. These scales have contributed to at least 3 “knowns” about negative symptoms.

Negative Symptoms Are Severe

Evidence from studies using clinical rating scales suggests that negative symptoms are highly prevalent in schizophrenia patients. For example, a large archival dataset of SANS ratings indicates that 82% of schizophrenia patients exhibit clinically significant negative symptoms (ie, mild or greater), whereas 58% are rated as having moderate to severe scores.13 Similar frequency distributions are observed on more recently developed scales (eg, BNSS, CAINS).8–12 Studies comparing the severity of negative symptom ratings between schizophrenia and nonpsychiatric control groups also indicate that they are prevalent and severe,2 often 4 SD or greater in magnitude.14

Negative Symptoms Are Stable Over Time

Evidence from studies using clinical rating scales also suggests that negative symptoms are stable over time and setting, at least when treatment and clinical factors are relatively constant.15 Numerous longitudinal studies support this view, eg, with negative symptoms showing stability over weeks,11,12 months,16 and years/decades.3,17

Negative Symptoms Reflect Widespread Impairments

Evidence from studies using clinical rating scales suggests that negative symptoms encapsulate a broad range of social, communicative, emotional, cognitive, and behavioral functions in a broad range of vocational, academic, and recreational settings. Exploratory factor analyses consistently support a simple factor solution covering a broad range of behaviors, functions and domains. This factor structure covers separable expression (ie, alogia, blunted affect) and motivation and pleasure (MAP; ie, anhedonia, avolition, asociality) factors,12,18,19 and in more recent research, hierarchically subordinate 5 factor solutions.20,21 Included within these factors are a broad range of related functions. Blunted affect, eg, covers dozens of physiologically distinct behaviors, including those in vocal (eg, intonation, emphasis, formant frequencies), facial (eg, eye, lip, cheek, brow), and gestural (body proximity, hand gestures) domains.

And yet, Negative Symptoms Are Subtle, Variable, and Complex When Measured Using Biobehavioral Technologies

For decades, researchers have attempted to objectify negative symptoms using “biobehavioral” technologies, eg, using vocal, facial, decision-making, gestural, electrophysiological, neurobiological, and reaction time measures during controlled laboratory conditions and ambulatory/mobile recording. In this article, we:

Provide examples of biobehavioral technologies of potential use for measuring negative symptoms.

Document the surprisingly modest and inconsistent relationship between biobehavioral technologies and clinically rated negative symptoms using 4 case studies, secondary analysis of several archival data sets, and exemplars from the extant literature.

Explain this divergence in terms of resolution—defined as precision in distinguishing various aspects of a signal.22–25 In psychophysics, resolution involves the ability to distinguish between colors, shapes, and timing of various stimuli. With respect to clinical symptoms, it involves the ability to detect changes in symptoms as a function of time and of environment/context.

Demonstrate how convergence between clinical ratings and biobehavioral data can be achieved by scaling data across various resolutions.

Our overarching goal in this article is to advance biobehavioral technologies for digitally phenotyping negative symptoms and for understanding their potentially dynamic and idiographic nature.2,17 Our focus here is on identifying, understanding, and overcoming a major obstacle that has emerged in the literature: the divergence between biobehavioral measures and clinical ratings.

Four Case Studies: “High-Resolution” Technologies and Clinical Ratings

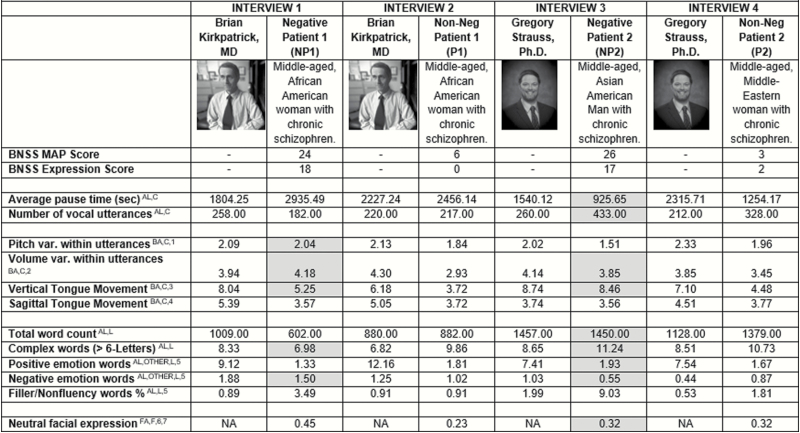

To illustrate how biobehavioral technologies can measure blunted affect and alogia, we conducted analysis on archived video interviews between 2 authors of this article (Drs. Kirkpatrick and Strauss) and 4 patients diagnosed with schizophrenia. Two of these patients had very high consensus-based ratings on all negative symptom domains (NP1, NP2) and two had very low ratings on all negative symptom domains (P1, P2).

Data from a series of validated acoustic, lexical, and video analysis software and protocols developed by our lab26–28 and by others29,30 are presented in figure 1. Collectively, these technologies measure communication, and directly tap alogia and blunted vocal/facial expression, and may indirectly tap amotivation, asociality, and anhedonia (see table 1). Using these technologies, we get an impressive array of ratio-level data on a variety of alogia- and blunted affect-related measures. However, interpreting these data is challenging because “normal ranges” for them do not exist. What does it mean that, eg, NP1 shows an average pause time of 2935 ms, whereas NP2 shows a pause time of 935 ms? How abnormal is it that NP1 shows an average intonation value of 2.04 semitones compared with NP2, who shows a value of 1.51?

Fig. 1.

Clinical rating and biobehavioral-based measures of negative symptoms from 4 archived clinical interviews conducted by either Dr. Brian Kirkpatrick or Dr. Gregory Strauss. For NP1 and NP2, shaded cells are inconsistent with clinical ratings (ie, less severe negative symptom-behavior compared to the interviewers and non-negative patients). var = variability, sec = seconds, AL = conceptually taps alogia, BA = conceptually taps blunted vocal affect, FA = conceptually taps blunted facial affect, OTHER = potentially taps anhedonia or other aspect of negative symptoms, C = analyzed using the Computerized Assessment of Natural Speech,27 L = analyzed using the Linguistic Inquiry and Word Count system,29 F = analyzed using Facereader software,30 1 = standard deviation of fundamental frequency (converted to semitones), computed within each utterance and averaged across all utterances, 2 = standard deviation of intensity (in dB), computed within each utterance and averaged across all utterances, 3 = based on first formant, in semitones, 4 = based on second formant, in semitones, 5 = percentage of total words, based on match to predefined dictionary, 6 = analysis of face from each video frame by matching to an “ideal” neutral face algorithm, based on .00 (no fit) to 1.00 (ideal fit), averaged across all frames, 7 = video data unavailable for the interviewers.

Table 1.

Biobehavioral Variables Examined in This Study

| Name | Definition |
|---|---|
| Average pause time (s)AL,C | Average silence between voiced utterances (in s) |
| Number of vocal utterancesAL,C | Number of voicings bounded by silence |
| Pitch var. within utterancesBA,C,1 | SD of vocal fold vibrations (F0, in semitones) within each utterance, averaged across utterances |
| Volume var. within utterancesBA,C,2 | SD of intensity within each utterance, averaged across utterances (in dB) |
| Vertical Tongue MovementBA,C,3 | SD of first Formant values within each utterance, averaged across utterances (in Hz) |
| Sagittal Tongue MovementBA,C,4 | SD of second Formant values within each utterance, averaged across utterances (in Hz) |
| Total word countAL,L | Number of spoken words, as recognized by human transcribers and lexical analysis software. |
| Complex words (>6 letters)AL,L | Percentage of spoken words that are longer than 6 letters, as recognized by human transcribers and lexical analysis software. |
| Positive emotion wordsAL,OTHER,L,5 | Percentage of spoken words with a positive emotion valence, as recognized by human transcribers and lexical analysis software. |
| Negative emotion wordsAL,OTHER,L,5 | Percentage of spoken words with a negative emotion valence, as recognized by human transcribers and lexical analysis software. |
| Filler/nonfluency words %AL,L,5 | Percentage of spoken words that are fillers or nonfluencies (eg, uh, err), as recognized by human transcribers and lexical analysis software. |
| Neutral facial expressionFA,F,6,7 | Match of facial features to an algorithm of optimal “neutral-ness,” averaged across all frames of the video. |

Note: var = variability, AL = conceptually taps alogia, BA = conceptually taps blunted vocal affect, FA = conceptually taps blunted facial affect, OTHER = potentially taps anhedonia or other aspect of negative symptoms, C = analyzed using the Computerized Assessment of Natural Speech,27 L = analyzed using the Linguistic Inquiry and Word Count system,29 F = analyzed using Facereader software,30 1 = standard deviation of fundamental frequency (converted to semitones), computed within each utterance and averaged across all utterances, 2 = standard deviation of intensity (in dB), computed within each utterance and averaged across all utterances, 3 = based on first formant, in semitones, 4 = based on second formant, in semitones, 5 = percentage of total words, based on match to predefined dictionary, 6 = analysis of face from each video frame by matching to an “ideal” neutral face algorithm, based on .00 (no fit) to 1.00 (ideal fit), averaged across all frames, 7 = video data unavailable for the interviewers.

Norming issues aside, we can safely assume that individuals with relatively extreme clinically rated negative symptoms should talk less and be more blunted than patients without negative symptoms and nonpatients (eg, Drs. Kirkpatrick and Strauss). However, evidence from figure 1 for these assumptions is surprisingly mixed. NP2 showed the least “alogia” of any of the patients or interviewers, with relatively short pause times between vocal utterances and a high number of total words and vocal utterances. Moreover, NP2’s voice was not particularly blunted and he used more positively valenced words than any of the other patients. On the other hand, NP1 showed dramatic reductions in speech production (eg, long average pause time, few vocal utterances, few words) and facial expression, but her vocal expression was normal in variability and she used more negatively valenced words than any of the other patients. Despite extreme levels of clinically rated negative symptoms, both negative symptom patients were, in surprising respects, behaviorally unremarkable. Insofar as Drs. Kirkpatrick and Strauss are experts on negative symptoms, and authors of the NIMH-MATRICS BNSS,11 it seems unlikely that their clinical ratings are invalid. We will address potential reasons underlying this discrepancy later in section “What can explain the discrepancy between clinical ratings and biobehavioral measures?” of this article. In sections “Archived data analysis of ‘high-resolution’ features: ‘Traditional’ reliability and validity” and “Exemplars in the extant literature,” we document this discrepancy in a large archived dataset and from the extant literature.

Archived Data Analysis of “High-Resolution” Features: “Traditional” Reliability and Validity

The discrepancy between clinical ratings and biobehavioral data is seen in a large archived data set from our lab. Data presented here are from 2 studies of serious mental illness (SMI).28,31–33 We examined the acoustic, lexical, and video-based features discussed in section “Four case studies: ‘high-resolution’ technologies and clinical ratings.” These features were extracted from 2 sources: (1) a video-recorded structured clinical interview (not previously published) and (2) various laboratory speaking tasks administered as part of the research protocol (previously published28,31–33). The clinical interviews varied in length, so the samples were standardized so that they reflected the first and last 5 minutes of the interview. The sample comprised 40 nonpatients recruited from the community, 78 patients with schizophrenia, and 79 “psychiatric controls” with a variety of disorders, including depression (n = 19), bipolar disorder (n = 21), and various personality, psychosis, mood, and trauma-related diagnoses with sufficient functional impairment to qualify for federal definitions of SMI. The latter group was included to improve the transdiagnostic scope of the sample, since negative symptoms are common in a broad range of SMI diagnoses.2 The groups were not significantly different with respect to gender, ethnicity, age, or education (all p’s > .26; see supplementary table 2). Negative symptoms were measured using the SANS.9 All appropriate ethics boards approved this project, and participants gave informed consent prior to their involvement in the study. See supplemental materials for additional information about our audio/video processing approach.

Analytic Strategy

We were interested in the degree to which the biobehavioral features introduced in figure 1 and table 1 were severe, stable over time and setting, and covaried relative to each other within participants. To examine severity, we compared biobehavioral features between patients with schizophrenia and community and psychiatric controls using ANOVAs (with Tukey’s post hoc tests). To examine stability, we computed intra-class correlation coefficients (ICC; average consistency) for each biobehavioral feature over time (ie, the first and last 5-minute samples of the clinical interview) and setting (between the clinical interview and the laboratory samples). ICC values were separately examined for all participants, for patients, for patients with schizophrenia, and for those with clinically rated blunted affect (defined as showing “mild” or greater symptoms on the global blunted affect scale). To examine covariation within participants (ie, whether clinical ratings reflected abnormalities in a broad range of behaviors), we computed Spearman’s correlations between clinically rated negative symptoms and biobehavioral features. “Family-wise” convergence was evaluated by entering all 12 features into a single regression step to predict clinical ratings (patients only). Data were trimmed (extreme values reduced to 3.5 SD) to reduce the impact of outliers.
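The trimming and stability steps can be sketched as follows. This is a minimal illustration rather than the pipeline used in the study: it assumes that “average consistency” refers to a two-way, consistency-type, average-measures ICC, and that trimming means clamping values to within 3.5 SD of the mean. The function names and toy inputs are hypothetical.

```python
from statistics import mean, stdev

def trim_to_sd(values, limit=3.5):
    """Clamp values lying more than `limit` SDs from the mean (assumed reading of 'trimmed')."""
    m, s = mean(values), stdev(values)
    lo, hi = m - limit * s, m + limit * s
    return [min(max(v, lo), hi) for v in values]

def icc_consistency_average(rows):
    """Two-way consistency, average-measures ICC: (MS_rows - MS_error) / MS_rows.

    `rows` holds one list per participant with k repeated measurements
    (eg, a feature from the first and last 5 minutes of an interview).
    """
    n, k = len(rows), len(rows[0])
    grand = mean(v for row in rows for v in row)
    row_means = [mean(row) for row in rows]
    col_means = [mean(row[j] for row in rows) for j in range(k)]
    # Partition the total sum of squares into participant, occasion, and residual parts.
    ss_rows = k * sum((rm - grand) ** 2 for rm in row_means)
    ss_cols = n * sum((cm - grand) ** 2 for cm in col_means)
    ss_total = sum((v - grand) ** 2 for row in rows for v in row)
    ss_error = ss_total - ss_rows - ss_cols
    ms_rows = ss_rows / (n - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))
    return (ms_rows - ms_error) / ms_rows
```

Because this is a consistency-type coefficient, a uniform shift between occasions (eg, everyone pausing slightly longer at the end of the interview) does not lower the value; only changes in the rank ordering or spacing of participants do.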

Severity: Group Differences

Patients with schizophrenia significantly differed from controls on 4 of the 12 biobehavioral features, though not necessarily in the expected directions (supplementary table 2). Schizophrenia patients showed fewer utterances and words overall but used more negative emotion and fewer filler words. Effect sizes for nonsignificant group differences were in the negligible to small range and often indicated increased behavior in patients (eg, nonsignificant but increased vertical tongue movement in schizophrenia patients versus controls; d = −0.44).
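For readers less familiar with the effect size metric, a standardized mean difference can be computed as in the sketch below. It assumes the common pooled-SD form of Cohen's d; the source does not state which variant or which subtraction order was used, so the sign convention here (first group minus second group) is an assumption, and the function name is hypothetical.

```python
from statistics import mean, variance

def cohens_d(group_a, group_b):
    """Cohen's d with a pooled SD; positive when group_a has the higher mean."""
    n_a, n_b = len(group_a), len(group_b)
    pooled_var = ((n_a - 1) * variance(group_a) + (n_b - 1) * variance(group_b)) / (n_a + n_b - 2)
    return (mean(group_a) - mean(group_b)) / pooled_var ** 0.5
```

Swapping the two arguments flips the sign but not the magnitude, which is why the direction of a reported d needs to be read alongside the accompanying text.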

Stability

Approximately half of features were not stable throughout the clinical interview (defined as ICC values < .70) (table 2). Stability did not generally change as a function of diagnosis or blunted affect, though the schizophrenia group showed the highest stability. In sum, many biobehavioral features tapping behaviors critical to negative symptoms were not stable over time or over speaking task.

Table 2.

Summary Reliability Statistics of 12 Vocal and Speech and Facial Features Extracted From Clinical Interview and Laboratory Speaking Tasks

| | All Subjects | Patients Only | Schizophrenia Only | Flat Affect Only |
|---|---|---|---|---|
| Test–retest reliability between beginning and end 5 min of clinical interview | | | | |
| N subjects | 143 | 114 | 53 | 22 |
| K samples | 2 | 2 | 2 | 2 |
| M ± SDa | 0.65 ± 0.29 | 0.63 ± 0.31 | 0.69 ± 0.24 | 0.64 ± 0.24 |
| Rangea | 0.08 to 0.91 | 0.04 to 0.92 | 0.18 to 0.93 | 0.24 to 0.91 |
| % Unacceptablea,c | 42% | 42% | 25% | 58% |
| Reliability between clinical interview and other laboratory speaking tasks | | | | |
| N subjects | 136 | 108 | 49 | 21 |
| K samples | 2 | 2 | 2 | 2 |
| M ± SDa,b | 0.53 ± 0.29 | 0.53 ± 0.30 | 0.64 ± 0.28 | 0.57 ± 0.32 |
| Rangea,b | 0.00 to 0.84 | −0.00 to 0.83 | 0.06 to 0.92 | 0.01 to 0.89 |
| % Unacceptablea,b,c | 64% | 55% | 36% | 55% |

Note: aIntraclass correlation coefficient (ICC) values.

bInterview and laboratory samples averaged within participant.

cUnacceptable defined as ICC values < .70.

Convergence With Clinical Ratings

None of the individual correlations were particularly large, with absolute values ranging from .00 to .44 (table 3). Blunted affect was associated with increased pause times, fewer vocal utterances, and fewer words used. Alogia was associated with lower word count and less use of negative emotion words. Anhedonia and avolition were generally not significantly associated with biobehavioral features. Collectively, the 12 biobehavioral features correlated highly with select clinical ratings, showing high adjusted R values for blunted affect and alogia. The largest correlations were observed between conceptually distinct measures—clinical ratings of blunted affect and behavioral measures of alogia (ie, pause time, number of utterances). Generally, features were not highly correlated with each other (supplementary table 3).

Table 3.

Correlations Between Clinical Ratings of Negative Symptoms and Biobehavioral Features Extracted From 10 min of a Clinical Interview (for All Patients)

| | Blunted Affect | Alogia | Anhedonia | Apathy |
|---|---|---|---|---|
| Average pause time (s)AL,C | 0.44* | 0.11 | 0.05 | −0.05 |
| Number of vocal utterancesAL,C | −0.39* | −0.09 | −0.08 | 0.04 |
| Pitch var. within utterancesBA,C,1 | −0.02 | 0.07 | 0.01 | 0.01 |
| Volume var. within utterancesBA,C,2 | −0.24* | −0.15 | −0.02 | 0.12 |
| Vertical Tongue MovementBA,C,3 | −0.11 | 0.09 | −0.31* | −0.07 |
| Sagittal Tongue MovementBA,C,4 | −0.23* | 0.08 | −0.43* | −0.17 |
| Total word countAL,L | −0.40* | −0.28* | −0.05 | 0.13 |
| Positive emotion wordsAL,OTHER,L,5 | 0.00 | −0.17 | −0.06 | 0.07 |
| Negative emotion wordsAL,OTHER,L,5 | −0.13 | −0.30* | 0.05 | 0.04 |
| Complex words (>6 letters)AL,L | −0.11 | −0.12 | −0.20 | −0.07 |
| Filler/nonfluency words %AL,L,5 | −0.18 | −0.12 | −0.06 | −0.15 |
| Neutral facial expressionFA,F,6,8 | 0.12 | −0.02 | 0.10 | 0.14 |
| Collective R (adjusted) | 0.51* | 0.40* | 0.39* | 0.26* |

Note: var = variability, AL = conceptually taps alogia, BA = conceptually taps blunted vocal affect, FA = conceptually taps blunted facial affect, OTHER = potentially taps anhedonia or other aspect of negative symptoms, C = analyzed using the Computerized Assessment of Natural Speech,27 L = analyzed using the Linguistic Inquiry and Word Count system,29 F = analyzed using Facereader software,30 1 = standard deviation of fundamental frequency (converted to semitones), computed within each utterance and averaged across all utterances, 2 = standard deviation of intensity (in dB), computed within each utterance and averaged across all utterances, 3 = based on first formant, in semitones, 4 = based on second formant, in semitones, 5 = percentage of total words, based on match to predefined dictionary, 6 = analysis of face from each video frame by matching to an “ideal” neutral face algorithm, based on .00 (no fit) to 1.00 (ideal fit), averaged across all frames, 7 = video data unavailable for the interviewers.
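The rank-based correlations in table 3 pair an ordinal clinical rating with a ratio-level biobehavioral feature. A minimal sketch of Spearman's correlation follows, assuming the standard definition (Pearson's correlation computed on ranks, with tied values sharing their average rank); the function names are hypothetical and this is not the software used in the study.

```python
from statistics import mean

def average_ranks(values):
    """Return 1-based ranks, giving tied values the mean of the ranks they span."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j to the end of the run of tied values starting at position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared = (i + j) / 2 + 1  # mean of 1-based positions i+1 .. j+1
        for pos in range(i, j + 1):
            ranks[order[pos]] = shared
        i = j + 1
    return ranks

def pearson(x, y):
    """Pearson correlation of two equal-length sequences."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sum((a - mx) ** 2 for a in x) ** 0.5
    sy = sum((b - my) ** 2 for b in y) ** 0.5
    return cov / (sx * sy)

def spearman(x, y):
    """Spearman correlation: Pearson correlation of the average ranks."""
    return pearson(average_ranks(x), average_ranks(y))
```

Tie handling matters here because ordinal symptom ratings take few distinct values, so many participants share the same rating.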

Exemplars in the Extant Literature

The discrepancy between clinical ratings and biobehavioral data is also seen in the extant literature. Although the extant literature has many examples where clinical judgment (ie, schizophrenia diagnosis, negative symptom ratings) significantly overlaps with biobehavioral features in expected directions, it is also rife with inconsistent and null findings. Consider several meta-analyses. Luther and colleagues34 review studies examining inter-relations between self-reported, clinician-rated, and performance-based measures of motivation in patients with schizophrenia. Performance-based measures include various reward experience, effort-expenditure, and effort-cost computation tasks using laboratory procedures.35–37 Virtually all mean effect sizes computed in this meta-analysis were in the negligible to small range, with, eg, an average correlation (r) between performance-based measures and clinician-rated motivation of 0.21 (K studies = 11; N subjects = 455 patients, 326 controls). In a meta-analysis attempting to quantify blunted affect and alogia using acoustic and lexical output of natural speech between patients and controls, Cohen et al14 reported highly variable effect sizes across studies. Compared with nonpsychiatric controls, patients with schizophrenia showed a large average effect size decrease in speech production (conceptually tapping alogia; d = 0.80, K studies = 13, N = 480 patients, 326 nonpsychiatric controls), whereas the decrease in speech variability (reflecting blunted vocal affect) was medium in size (d = 0.60, K studies = 2, N = 105 patients, 39 nonpsychiatric controls). These values are much smaller than the group differences seen in clinical ratings reported in these studies (ie, d = 3.54, K studies = 4, N = 190 patients, 124 nonpsychiatric controls). Also relevant are meta-analyses finding that “consummatory” anhedonia, measured using experimental-based emotion manipulation, tends to be absent in patients, despite high levels of clinically rated anhedonia.38,39

To further understand the extant literature, we conducted a search on the Web of Science (on September 2, 2019) using the search terms “negative,” “schizophrenia,” and one of the following terms: “acoustic or vocal or facial or video or prosody.” Although not a comprehensive search, it was intended to identify a limited set of relevant articles using a reasonably transparent selection process. This search yielded 148 peer-reviewed studies, of which the top 5 appropriate and most highly cited studies presenting novel data from patients with schizophrenia are discussed here.12,40–43

The most highly cited article in this search was the CAINS validation study,12 for which a subset of the 162 patients had their clinical interviews video-recorded and quantified using a structured facial emotion coding system. As expected, symptom ratings from the CAINS expression subscale were significantly correlated with positive and negative valenced facial expression ratings at −.48 and −.34 respectively, suggesting overlap of these measures on the order of 10%–25%. Cohen et al42 conducted objective speech analysis of 46 patients with schizophrenia, and found that, of the 15 correlation values computed between objective and clinically rated negative symptoms, only 6 values were statistically significant (range of significant r’s =.28–.69, average of the absolute value of all r’s = .23). Unexpectedly, the largest correlation suggested that increased SANS global affective flattening was associated with increased use of negative emotion words (ie, r = −.69, p < .01). In the third study, Kupper et al41 conducted Motion Energy Analysis of 378 videotaped role-plays for 27 outpatients with schizophrenia. They found that correlations between PANSS negative symptom factor scores and summary measures of head and body movement ranged from −0.37 (p < .10) to −0.51 (p < .01). In a fourth study, Brune et al40 examined video-taped social verbal behavior in 50 patients using a structured coding system. Patients rated as being high versus low in verbal behavior also showed more severe clinically rated negative symptoms, with a Cohen’s d value of 0.93. Finally, Park et al43 examined social behavior, notably in interpersonal distance and head orientation, during a virtual reality interaction for 30 patients with schizophrenia. PANSS negative symptoms were significantly correlated for 4 of 6 objective measures, and these r values ranged from −0.20 to −0.44. 
Based on this sample of the literature, there is limited support for the claim that clinically rated negative symptoms overlap heavily with biobehavioral technologies or expert ratings. This convergence rarely exceeds 25%, even when the measures are derived from the same video/interaction or cover conceptually similar phenomena.
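The overlap percentages quoted in this section can be read as shared variance, that is, the square of the correlation coefficient. This reading is our assumption about how the 10%–25% figure was derived; applied to the CAINS correlations reported above, it gives:

$$
r^2 = (-0.48)^2 \approx 0.23, \qquad r^2 = (-0.34)^2 \approx 0.12
$$

Under the same reading, an overlap of 25% corresponds to $|r| = 0.50$, so a correlation must exceed .50 in absolute value before two measures share more than a quarter of their variance.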

What Can Explain the Discrepancy Between Clinical Ratings and Biobehavioral Measures?

It is possible that either clinical ratings or biobehavioral measures are “invalid” for measuring negative symptoms, though this seems highly unlikely for several reasons. Clinical ratings have shown acceptable inter-rater reliability, temporal stability, convergent/divergent, concurrent, and predictive validity for understanding negative symptoms.11,44,45 Moreover, the ratings presented here were made by the authors of this article—individuals considered experts in negative symptoms and with considerable clinical and research experience. By the same token, acoustic, lexical, and video analyses have been employed in thousands of studies to date and are essential to a broad range of research and applied disciplines, such as speech pathology, political and communication sciences, forensics, business and market research, and computer sciences (eg, 4196 citations for the LIWC on December 22, 2019 in Google Scholar). The programs and features used in this study have demonstrated adequate psychometric support in our research and many others.26–30 In terms of face validity, the constructs measured in clinical ratings of blunted affect and alogia are directly quantifiable in terms of the acoustic, lexical, and video biobehavioral features examined here (see table 1).

Another explanation is that clinical ratings and biobehavioral measures of negative symptoms are tapping the same construct, but diverge in their level of analysis, or “resolution.” Resolution is an important aspect of biomedical and computational assessment, and is defined as precision in distinguishing various aspects of a signal.22–25 An optical microscope, eg, is optimized for detecting changes at a micrometer level whereas optical binoculars are optimized for distances in miles. They are both valid for understanding a common construct (eg, relative health of a forest), but are designed to measure very different aspects of it. For this reason, they may show only modest convergence. From a psychiatric research perspective, the concept of resolution is well-illustrated in the “levels of analysis” of the Research Domain Criteria system,46 as it reflects varying levels of organization within the central nervous system. Measures optimized for understanding a construct at one level (eg, genetic) often show modest convergence with a measure optimized for measuring the same construct at another level (eg, behavior).47,48

The behaviors underlying negative symptoms are inherently dynamic and complex, unfolding throughout time and often in response to environment and social changes. Accordingly, the resolution of negative symptoms can vary in several ways, including, to borrow terms from biomedicine and physics, “temporal” (ie, precision with respect to time), “spatial” (ie, precision with respect to changes in the environment), and “spectral” (ie, precision with respect to signal subcomponents) resolution.22,23,25 A “nonflat” individual will likely not be smiling the vast majority of their 24-hour day but may smile intensely in a few critical moments deemed typical based on culturally-relevant social norms. Moreover, this smile may involve some, but not all, muscle activity associated with a “prototypical” smile (eg, a “Duchenne” smile), and may not involve other culturally appropriate concomitant behaviors. By comparison, a “flat” individual may express their phenotype during a few critical moments during their day, and in ways undetectable by a measure blind to environmental context. An experienced clinician is not basing their negative symptom ratings on average smile data across time and space. Rather, they are evaluating the interaction of many data streams over time, as a function of spatial events (eg, what question was asked; making a joke), and using norms derived from their own experience.

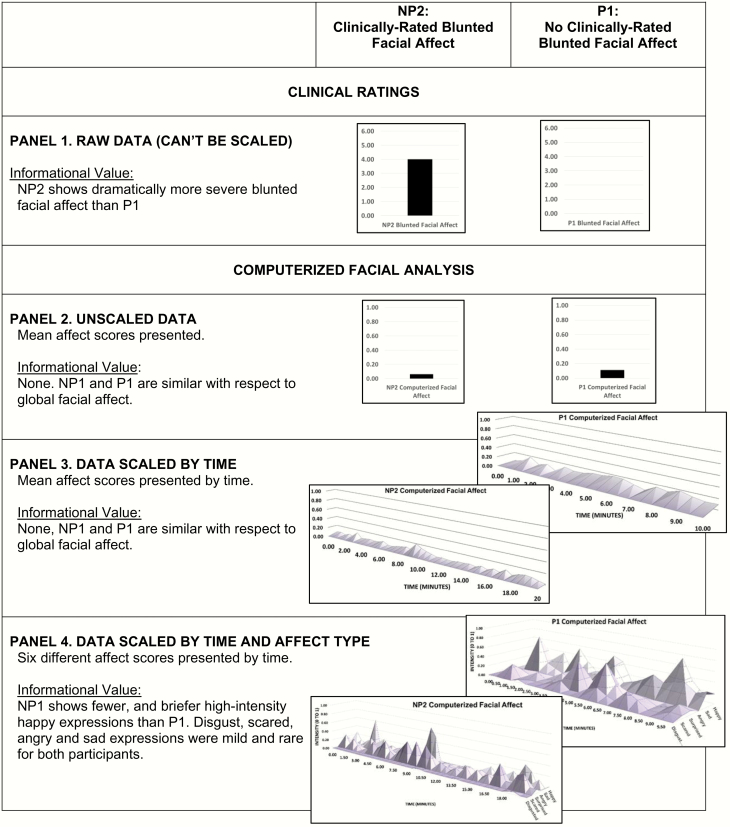

Consider figure 2, which shows data regarding blunted facial affect for NP2 and P1 from the case studies. Clinically rated blunted facial affect (in Panel 1) was unambiguously present or absent in NP2 and P1, as evidence by consensus ratings of “moderately severe” and “absent,” respectively. Panels 2 through 4 show computerized facial expression data scaled to various temporal and spectral resolutions. These data reflect a match of facial features in each individual video frame to a mathematically derived algorithm of that facial expression (z-axis) from extremely poor (0.00) to optimal (1.00) (y-axis). When data are unscaled (Panel 2; ie, presented as a global mean across the entire interview) or scaled as a function of time (Panel 3; time presented on the x-axis), NP2 and P1 are virtually indistinguishable. However, when their data are scaled as a function of time and spectral subcomponents (ie, 6 different facial affect types), their differences become obvious. Patient P1 has at least 5 distinct expressions of happy emotions (value > 0.50) over their 10-minute interview, and they tend to show a modest, yet detectable level of happy emotion throughout the interview. In contrast, patient NP2 has 2 brief expressions of positive emotions over their 20-minute interview. Importantly, neither patient expresses much disgust, scared, surprised, angry or sad emotion (rarely exceeds 0.50). In short, there is something about the facial expression of NP2 that expert raters unanimously consider blunted, and this can only be corroborated by temporally and spectrally scaling their biobehavioral data. When done, it is clear that patient NP2 is relatively unremarkable with respect to most emotional expressions most of the time, but is severely blunted in one specific type of emotional expression at key moments. 
From this perspective, it is no surprise that clinical ratings and biobehavioral measures show modest convergence in prior research—as resolution has generally not been considered.
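
The three views described above (unscaled, temporally scaled, and temporally plus spectrally scaled) can be illustrated with a minimal sketch. The frame scores below are synthetic and chosen only for illustration; they are not the study data, and the function names, channel labels, and data layout are assumptions rather than the output format of any particular facial analysis package. Only the 0.50 cutoff is taken from the text.

```python
from statistics import mean

THRESHOLD = 0.50  # cutoff used in the text for a distinct expression


def make_frames(happy_scores, sad_score=0.125):
    """Build synthetic frames: one dict of channel -> 0.00-1.00 score per video frame."""
    return [{"happy": h, "sad": sad_score} for h in happy_scores]


def global_mean(frames):
    """Unscaled view: a single mean over every frame and every affect channel."""
    return mean(score for frame in frames for score in frame.values())


def binned_mean(frames, bin_size):
    """Temporal scaling only: mean over all channels within consecutive time bins."""
    return [
        mean(score for frame in frames[i:i + bin_size] for score in frame.values())
        for i in range(0, len(frames), bin_size)
    ]


def count_episodes(frames, channel, threshold=THRESHOLD):
    """Temporal + spectral scaling: count runs of frames above threshold in one channel."""
    episodes, was_above = 0, False
    for frame in frames:
        is_above = frame[channel] > threshold
        if is_above and not was_above:
            episodes += 1
        was_above = is_above
    return episodes


# Two hypothetical individuals (synthetic values, not NP2 or P1).
expressive = make_frames([0.25, 0.75, 0.25, 0.75, 0.25, 0.75, 0.25, 0.75])
blunted = make_frames([0.5, 0.75, 0.5, 0.25, 0.5, 0.5, 0.5, 0.5])

for label, frames in (("expressive", expressive), ("blunted", blunted)):
    print(label, global_mean(frames), binned_mean(frames, 4),
          count_episodes(frames, "happy"))
```

In this toy example the two series are constructed to share the same global mean and the same binned means, so they separate only when a single channel is examined over time. Real recordings would not match this neatly, but the sketch shows why averaging across channels and time can mask a channel-specific difference.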

Fig. 2.

Relative informational value of computerized facial analysis versus clinical ratings for participants NP2 and P1. Computerized facial data are scaled as a function of time (over the span of the clinical interview) and spectral component (ie, facial affect type).

The Value of Biobehavioral Data: Advancing Precision Psychiatry Through Scalable Data

There are many situations where clinical rating scales are adequate for measuring negative symptoms. However, clinical ratings cannot be deconstructed to inform exactly how, when, or why a patient was abnormal and, hence, are limited in situations where resolution matters. A single ordinal rating may capture a patient’s global level of blunted facial affect, but it cannot inform, eg, whether clinically rated blunting is specific to positive emotions, as seen in our case study and in Gupta et al.49 Nor can it inform, eg, whether “in the moment” alogia tends to be exacerbated as a function of concomitant cognitive overload33,50 or is associated with abnormal allocation of right versus left superior temporal gyrus resources.51 The assumption thus far has been that (1) patients high in clinically rated negative symptoms are nonresponsive across all or most times, settings, and behavioral channels and that (2) individuals without negative symptoms are responsive and expressive across all or most times, settings, and behavioral channels. Data from biobehavioral technologies raise questions about these assumptions.

Biobehavioral data have the potential to offer “scalable” resolution across time, setting, and behavioral channel such that data can be viewed based on user-defined parameters. Imagine a digital interface, as in figure 2 Panel 4, that allowed users to increase or decrease the temporal resolution (eg, across minutes, days, and weeks) and to focus on specific contexts (eg, responding to specific questions, interacting with specific environments) and on specific behaviors (eg, smiles, scowls). Such a tool would have important implications for digital phenotyping and precision psychiatry efforts, as an individual patient’s unique behavioral signature could be objectively derived and tracked over time. Given that negative symptoms are associated with a high degree of phenotypic and mechanistic heterogeneity,2,17 scalable high-resolution biobehavioral data have the potential to accurately discriminate between potential idiopathic and secondary causes of negative symptoms (ie, paranoia, disorganization, depression, medication side effects) and, hence, to delineate highly homogeneous and even individualized negative symptom phenotypes. This could be done using inexpensive recording technologies in the clinic, laboratory, and natural environments (eg, using ambulatory technologies) and would provide critical information about potential mechanisms underlying specific targeted behaviors (eg, “this patient is primarily blunted in positive emotion expression at work while interacting with peers”). This information could be of use in guiding biofeedback, psychosocial, and pharmacological interventions.
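
As a rough illustration of what “user-defined parameters” could mean in practice, the following sketch aggregates the same observations at a caller-chosen time window, optionally restricted to one context and one behavior. Everything here is hypothetical: the `Observation` record, the `view` function, the context and behavior labels, and the values are assumptions made for illustration, not an existing tool or the interface used in this study.

```python
from collections import defaultdict
from dataclasses import dataclass
from statistics import mean


@dataclass(frozen=True)
class Observation:
    t_seconds: float  # time since the start of recording
    context: str      # hypothetical setting label, eg "work" or "home"
    behavior: str     # hypothetical behavior label, eg "smile" or "scowl"
    intensity: float  # 0.00-1.00 score for that behavior


def view(observations, window_seconds, context=None, behavior=None):
    """Return {window index: mean intensity} at the requested resolution.

    window_seconds sets the temporal resolution; context and behavior
    (when given) restrict the view to one setting or one behavioral channel.
    """
    buckets = defaultdict(list)
    for obs in observations:
        if context is not None and obs.context != context:
            continue
        if behavior is not None and obs.behavior != behavior:
            continue
        buckets[int(obs.t_seconds // window_seconds)].append(obs.intensity)
    return {window: mean(values) for window, values in sorted(buckets.items())}


# Synthetic observations for one hypothetical individual.
observations = [
    Observation(0, "work", "smile", 0.125),
    Observation(30, "work", "smile", 0.125),
    Observation(60, "home", "smile", 0.75),
    Observation(90, "home", "smile", 0.75),
    Observation(90, "home", "scowl", 0.25),
]

print(view(observations, window_seconds=3600))                  # coarse: one global value
print(view(observations, window_seconds=60, behavior="smile"))  # finer in time, one behavior
print(view(observations, window_seconds=60, context="work", behavior="smile"))
```

The design choice worth noting is that the raw observations are never altered; each call only changes the lens through which they are summarized, which is what allows the same recording to be compared against measures of differing resolution.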

Though scalable biobehavioral technologies are well within the scope of current practice, much work remains for clinical implementation. First, although progress has been made in quantifying vocal, speech, facial, gestural, motivational, and experiential phenomena underlying negative symptoms, there is still much work to do in adapting these technologies for clinical use. Identifying the temporal, contextual, and spectral “resolutions” most relevant to negative symptoms will be important to this process. Objectifying subjective components of negative symptoms is challenging, though self-report ambulatory technologies exist. Second, norms for evaluating these data will need to be developed. Beyond the impact of time and place, individual differences will be critical to consider. Expression, experience, and motivation manifest differently as a function of culture, age, gender, and other factors, so norms will necessarily involve large data sets collected from geographically, demographically, and culturally diverse groups of individuals. Third, psychometric validation will need to consider resolution. Expecting high convergence between clinical ratings and biobehavioral measures that are, eg, egregiously unmatched in temporal, spatial, or spectral characteristics is probably unrealistic. Solutions to these psychometric issues are common in biomedical, engineering, informatics, and computational fields and have been explored in psychology.52 In sum, objectifying negative symptoms can provide unprecedented opportunities for tracking and understanding them and, in doing so, can advance digital phenotyping and personalized psychiatry efforts. This endeavor will require consideration of resolution.

Supplementary Material

Supplementary data are available at Schizophrenia Bulletin online.

Supplemental Table 1. Demographic and clinical characteristics for community and nonpsychiatric controls and schizophrenia groups compared on biobehavioral features extracted from clinical interviews.

Supplemental Table 2. Community controls and schizophrenia patients compared on biobehavioral features extracted from clinical interviews.

Supplemental Table 3. Zero-order correlation matrix of speech and facial biobehavioral features, with patient values in the upper left and nonpsychiatric control values in the lower right corners of the matrix.

Funding

This work was supported by National Institute of Mental Health (NIMH) grant 1R03MH092622.

Acknowledgments

The authors have declared that there are no conflicts of interest in relation to the subject of this study.

References

- 1. Kraepelin E. Dementia Praecox and Paraphrenia. In: Barclay RT, ed. Chicago, IL: Chicago Medical Book; 1919.

- 2. Strauss GP, Cohen AS. A transdiagnostic review of negative symptom phenomenology and etiology. Schizophr Bull. 2017;43(4):712–719.

- 3. Kirkpatrick B, Mucci A, Galderisi S. Primary, enduring negative symptoms: an update on research. Schizophr Bull. 2017;43(4):730–736.

- 4. Lencz T, Smith CW, Auther A, Correll CU, Cornblatt B. Nonspecific and attenuated negative symptoms in patients at clinical high-risk for schizophrenia. Schizophr Res. 2004;68(1):37–48.

- 5. Strauss GP, Harrow M, Grossman LS, Rosen C. Periods of recovery in deficit syndrome schizophrenia: a 20-year multi-follow-up longitudinal study. Schizophr Bull. 2010;36(4):788–799.

- 6. Fusar-Poli P, Papanastasiou E, Stahl D, et al. Treatments of negative symptoms in schizophrenia: meta-analysis of 168 randomized placebo-controlled trials. Schizophr Bull. 2015;41(4):892–899.

- 7. Marder SR, Galderisi S. The current conceptualization of negative symptoms in schizophrenia. World Psychiatry. 2017;16(1):14–24.

- 8. Daniel DG. Issues in selection of instruments to measure negative symptoms. Schizophr Res. 2013;150(2–3):343–345.

- 9. Andreasen NC. Negative symptoms in schizophrenia. Definition and reliability. Arch Gen Psychiatry. 1982;39(7):784–788.

- 10. Kirkpatrick B, Buchanan RW, McKenney PD, Alphs LD, Carpenter WT Jr. The schedule for the deficit syndrome: an instrument for research in schizophrenia. Psychiatry Res. 1989;30(2):119–123.

- 11. Kirkpatrick B, Strauss GP, Nguyen L, et al. The brief negative symptom scale: psychometric properties. Schizophr Bull. 2011;37(2):300–305.

- 12. Kring AM, Gur RE, Blanchard JJ, Horan WP, Reise SP. The Clinical Assessment Interview for Negative Symptoms (CAINS): final development and validation. Am J Psychiatry. 2013;170(2):165–172.

- 13. Strauss GP. Anhedonia in schizophrenia: A deficit in translating reward information into motivated behavior. In: Ritsner M, ed. Anhedonia: A Comprehensive Handbook Volume II: Neuropsychiatric and Physical Disorders. The Netherlands, Dordrecht: Springer; 2014:125–156.

- 14. Cohen AS, Mitchell KR, Elvevåg B. What do we really know about blunted vocal affect and alogia? A meta-analysis of objective assessments. Schizophr Res. 2014;159(2–3):533–538.

- 15. Savill M, Banks C, Khanom H, Priebe S. Do negative symptoms of schizophrenia change over time? A meta-analysis of longitudinal data. Psychol Med. 2015;45(8):1613–1627.

- 16. Mueser KT, Sayers SL, Schooler NR, Mance RM, Haas GL. A multisite investigation of the reliability of the Scale for the Assessment of Negative Symptoms. Am J Psychiatry. 1994;151(10):1453–1462.

- 17. Kirkpatrick B, Galderisi S. Deficit schizophrenia: an update. World Psychiatry. 2008;7(3):143–147.

- 18. Strauss GP, Hong LE, Gold JM, et al. Factor structure of the Brief Negative Symptom Scale. Schizophr Res. 2012;142(1–3):96–98.

- 19. Blanchard JJ, Cohen AS. The structure of negative symptoms within schizophrenia: implications for assessment. Schizophr Bull. 2006;32(2):238–245.

- 20. Strauss GP, Nuñez A, Ahmed AO, et al. The latent structure of negative symptoms in schizophrenia. JAMA Psychiatry. 2018;75(12):1303.

- 21. Ahmed AO, Kirkpatrick B, Galderisi S, et al. Cross-cultural validation of the 5-factor structure of negative symptoms in schizophrenia. Schizophr Bull. 2019;45(2):305–314.

- 22. Cohen AS, Schwartz E, Le TP, Fedechko T, Kirkpatrick B, Strauss GP. Using biobehavioral technologies to effectively advance research on negative symptoms. World Psychiatry. 2019;18(1):103–104.

- 23. Cohen AS, Schwartz E, Le T, et al. Validating digital phenotyping technologies for clinical use: the critical importance of “resolution”. World Psychiatry. 2020;19(1):114–115.

- 24. Morris AS, Langari R. Calibration of measuring sensors and instruments. In: Measurement and Instrumentation: Theory and Application. Waltham, MA: Academic Press; 2016.

- 25. Cohen AS. Advancing ambulatory biobehavioral technologies beyond “proof of concept”: Introduction to the special section. Psychol Assess. 2019;31(3):277–284.

- 26. Cohen AS, Renshaw TL, Mitchell KR, Kim Y. A psychometric investigation of “macroscopic” speech measures for clinical and psychological science. Behav Res Methods. 2016;48(2):475–486.

- 27. Cohen AS, Minor KS, Najolia GM, Hong SL. A laboratory-based procedure for measuring emotional expression from natural speech. Behav Res Methods. 2009;41(1):204–212.

- 28. Cohen AS, Mitchell KR, Docherty NM, Horan WP. Vocal expression in schizophrenia: Less than meets the ear. J Abnorm Psychol. 2016;125(2):299–309.

- 29. Tausczik YR, Pennebaker JW. The psychological meaning of words: LIWC and computerized text analysis methods. J Lang Soc Psychol. 2010;29(1):24–54.

- 30. Den Uyl MJ, Van Kuilenburg H. The FaceReader: online facial expression recognition. Psychology. 2005;2005(September):589–590.

- 31. Cohen AS, Kim Y, Najolia GM. Psychiatric symptom versus neurocognitive correlates of diminished expressivity in schizophrenia and mood disorders. Schizophr Res. 2013;146(1–3):249–253.

- 32. Cohen AS, Najolia GM, Kim Y, Dinzeo TJ. On the boundaries of blunt affect/alogia across severe mental illness: implications for Research Domain Criteria. Schizophr Res. 2012;140(1–3):41–45.

- 33. Cohen AS, McGovern JE, Dinzeo TJ, Covington MA. Speech deficits in serious mental illness: a cognitive resource issue? Schizophr Res. 2014;160(1–3):173–179.

- 34. Luther L, Firmin RL, Lysaker PH, Minor KS, Salyers MP. A meta-analytic review of self-reported, clinician-rated, and performance-based motivation measures in schizophrenia: Are we measuring the same “stuff”? Clin Psychol Rev. 2018;61:24–37.

- 35. Reddy LF, Horan WP, Barch DM, et al. Effort-based decision-making paradigms for clinical trials in schizophrenia: part 1—psychometric characteristics of 5 paradigms. Schizophr Bull. 2015;41(5):1045–1054.

- 36. Gold JM, Waltz JA, Prentice KJ, Morris SE, Heerey EA. Reward processing in schizophrenia: a deficit in the representation of value. Schizophr Bull. 2008;34(5):835–847.

- 37. Strauss GP, Whearty KM, Morra LF, Sullivan SK, Ossenfort KL, Frost KH. Avolition in schizophrenia is associated with reduced willingness to expend effort for reward on a Progressive Ratio task. Schizophr Res. 2016;170(1):198–204.

- 38. Cohen AS, Minor KS. Emotional experience in patients with schizophrenia revisited: meta-analysis of laboratory studies. Schizophr Bull. 2010;36(1):143–150.

- 39. Kring AM, Moran EK. Emotional response deficits in schizophrenia: insights from affective science. Schizophr Bull. 2008;34(5):819–834.

- 40. Brüne M, Abdel-Hamid M, Sonntag C, Lehmkämper C, Langdon R. Linking social cognition with social interaction: non-verbal expressivity, social competence and “mentalising” in patients with schizophrenia spectrum disorders. Behav Brain Funct. 2009;5:6.

- 41. Kupper Z, Ramseyer F, Hoffmann H, Kalbermatten S, Tschacher W. Video-based quantification of body movement during social interaction indicates the severity of negative symptoms in patients with schizophrenia. Schizophr Res. 2010;121(1–3):90–100.

- 42. Cohen AS, Alpert M, Nienow TM, Dinzeo TJ, Docherty NM. Computerized measurement of negative symptoms in schizophrenia. J Psychiatr Res. 2008;42(10):827–836.

- 43. Park SH, Ku J, Kim JJ, et al. Increased personal space of patients with schizophrenia in a virtual social environment. Psychiatry Res. 2009;169(3):197–202.

- 44. Kirkpatrick B, Fenton WS, Carpenter WT Jr, Marder SR. The NIMH-MATRICS consensus statement on negative symptoms. Schizophr Bull. 2006;32(2):214–219.

- 45. Blanchard JJ, Kring AM, Horan WP, Gur R. Toward the next generation of negative symptom assessments: the collaboration to advance negative symptom assessment in schizophrenia. Schizophr Bull. 2011;37(2):291–299.

- 46. Insel TR. The NIMH research domain criteria (RDoC) project: precision medicine for psychiatry. Am J Psychiatry. 2014;171(4):395–397.

- 47. Shankman SA, Gorka SM. Psychopathology research in the RDoC era: Unanswered questions and the importance of the psychophysiological unit of analysis. Int J Psychophysiol. 2015;98(2 Pt 2):330–337.

- 48. Weinberger DR, Glick ID, Klein DF. Whither research domain criteria (RDoC)? The good, the bad, and the ugly. JAMA Psychiatry. 2015;72(12):1161–1162.

- 49. Gupta T, Haase CM, Strauss GP, Cohen AS, Mittal VA. Alterations in facial expressivity in youth at clinical high-risk for psychosis. J Abnorm Psychol. 2019;128(4):341–351.

- 50. Cohen AS, Morrison SC, Brown LA, Minor KS. Towards a cognitive resource limitations model of diminished expression in schizotypy. J Abnorm Psychol. 2012;121(1):109–118.

- 51. Kircher TT, Liddle PF, Brammer MJ, Williams SC, Murray RM, McGuire PK. Reversed lateralization of temporal activation during speech production in thought disordered patients with schizophrenia. Psychol Med. 2002;32(3):439–449.

- 52. Barabási AL, Gulbahce N, Loscalzo J. Network medicine: a network-based approach to human disease. Nat Rev Genet. 2011;12(1):56–68.
