Abstract

The anterior cruciate ligament (ACL) is the most commonly injured ligament in the human body, and ACL injury is common among football, basketball and soccer players. This study aims to detect ACL injury at an early stage from magnetic resonance imaging (MRI), automatically and without involving radiologists, through a deep learning method. The proposed approach uses a customized 14-layer ResNet-14 architecture of a convolutional neural network (CNN), evaluated under six different approaches that combine class balancing and data augmentation. The performance of our customized ResNet-14 architecture with hybrid class balancing and real-time data augmentation was evaluated after 5-fold cross-validation, yielding an accuracy, sensitivity, specificity, precision and F1 score of 0.920, 0.916, 0.946, 0.916 and 0.923, respectively. The average areas under the curve (AUCs) for the healthy, partial tear and fully ruptured tear classes were 0.980, 0.970 and 0.999, respectively. These diagnostic results indicate that the proposed deep learning model could be used to automatically detect and evaluate ACL injuries in athletes.

Keywords: anterior cruciate ligament, healthcare, knee injury, artificial intelligence, convolutional neural network, MRI, detection, classification, residual network, augmentation

1. Introduction

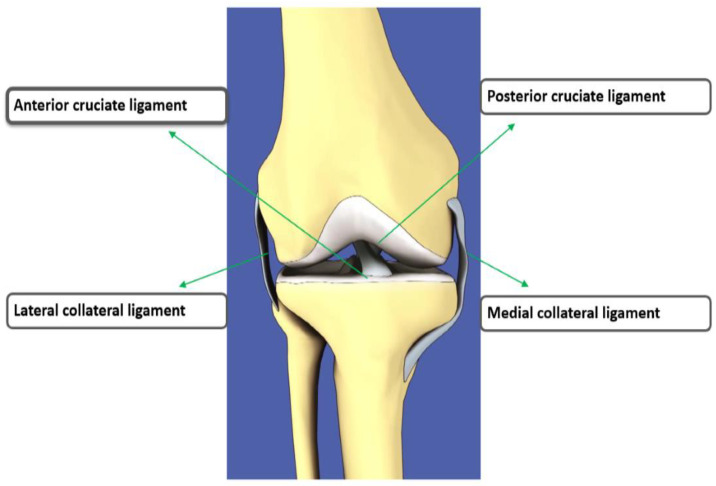

The anterior cruciate ligament (ACL) is an important stabilizing ligament of the knee that connects the femur to the tibia [1]. The knee has four primary ligaments: two inside the knee, the anterior cruciate ligament and the posterior cruciate ligament, and two outside it, the lateral collateral ligament and the medial collateral ligament. Figure 1 shows the anatomy of the knee ligaments [2]. The ACL is the most commonly injured knee ligament in athletes. It provides stability as the knee moves. This movement can produce increased friction on the meniscus and cartilage in the joint. The symptoms of an ACL injury include pain, swelling and deformation of the knee, which make walking difficult [3,4]. A radiologist’s work is to detect various injuries, such as torn ACLs, from radiological scans. Manually interpreting radiology images for ACL injuries, meniscus tears and knee cartilage abnormalities, which can lead to knee osteoarthritis, osteoporosis and knee joint replacement, is a time-consuming process [5]. There are many methods to diagnose an ACL tear in the knee: physical tests and biomarkers [6], X-ray, computed tomography (CT), mammography, ultrasound imaging and magnetic resonance imaging (MRI) [7]. MRI is the best choice for diagnosing ACL tears, as the ACL is not visible on a plain film X-ray [8,9,10].

Figure 1.

The front view of the 4 major ligaments of knee anatomy, where the anterior cruciate ligament (ACL) is located at the center of the knee, the posterior cruciate ligament at back of the knee, the medial collateral ligament at the inner knee and the lateral collateral ligament at the outer knee [2].

MRI can distinguish sprains and partial tears of the ACL from complete tears, as well as from meniscus tears [11]. Typically, the ACL appears as a band of low signal intensity traversing from the femoral end to the tibial end, seen either entirely in a single slice or across multiple slices depending on the obliquity of the scan. An ACL tear has to be read in the coronal, sagittal and axial planes in sequence to give a complete picture of the tear [12]. The three grades are shown in Table 1.

Table 1.

Three grade stages of the anterior cruciate ligament.

| Grade Stages | Injuries/Symptoms |
|---|---|
| Grade-I | Intra-ligament injury; no change in ligament length |
| Grade-II | Intra-ligament injury; change in ligament length; partial tears |
| Grade-III | Complete ligament disruption |

In recent years, machine learning and deep learning methods for image analytics have been used extensively in the medical imaging domain to solve problems of classification, detection, segmentation and diagnosis without the involvement of a radiologist [13,14,15,16]. Researchers now use deep learning with CNN models and their architectures in several applications. CNN architectures have an input layer and an output layer, with many convolutional layers, pooling layers, rectified linear unit layers, dense layers and dropout layers in between [17,18]. CNNs have shown great success in the automatic analysis of radiographic X-rays for knee osteoarthritis, as no image pre-processing is needed [19,20]. However, X-rays have not been able to improve upon three-class knee ACL detection, as compared to MR images.

This study aims to further enhance automatic performance, without involving a radiologist, by using a deep learning model to detect ACL injury by inspecting MRI. A customized residual network (ResNet-14) architecture of CNN is proposed, and it significantly improves the detection of healthy, partially torn and completely ruptured ACLs. We train our modified model under six different approaches, which achieve promising results on the KneeMRI dataset. Two strategies, hybrid class balancing and real-time data augmentation, were adopted to address the data scarcity and class imbalance issues of KneeMRI.

The contributions of our study are summarized below:

To the best of our knowledge, this study is the first to propose a balancing methodology for the three classes of healthy, partial and ruptured tears based on hybrid class balancing and real-time data augmentation.

This study proposes a customized ResNet-14 CNN model, without transfer learning, to detect three classes of ACL.

We perform an extensive experimental validation of the proposed approaches in terms of sensitivity, specificity, precision, recall, F1-measure, receiver operating characteristic (ROC) curve and area under the curve (AUC).

The remainder of the paper is arranged as follows: Section 2 discusses related work. Section 3 explains the details of the dataset and the proposed methodology, model and architecture. The results of our experimental evaluation are presented in Section 4. Section 5 discusses our work in comparison with state-of-the-art work. Finally, Section 6 concludes the paper.

2. Related Work

There is a growing body of literature on knee MRI detection. Numerous researchers are using machine learning and deep learning techniques to identify disease from MR images in better and novel ways. The study in [21] showed good results using support vector machines on 300 MR images of healthy, partially torn and fully ruptured ACLs. Another study classified OARSI-scored human articular cartilage with machine learning pattern recognition and multivariable regression techniques; the regression model achieved 86% accuracy in separating normal from osteoarthritic cartilage [22]. The first real attempt on our dataset, KneeMRI [23], relied on manual feature extraction with the histogram of oriented gradients (HOG) descriptor and the gist descriptor. With both features coupled to support vector machine (SVM) and random forest (RF) classifiers, ACL tear detection reached an AUC of 0.894 for the injury-detection problem and 0.943 for the full-rupture case. There are various surveys, meta-analyses and reviews [24,25] on ACL knee injury detection through machine learning models. They show that accuracy remained good for smaller datasets, but that machine learning models have not been a solution when more radiology images are involved. Machine learning is therefore not a very useful solution for diagnosis and detection, particularly in the case of knee injury.

Manna, Bhattacharya et al. (2020) [26] proposed a self-supervised approach with pretext and downstream tasks that used class balancing through oversampling and achieved an accuracy of 90.6% in detecting ACL tears from knee MRI.

The state-of-the-art work [27] on deep learning used the AlexNet [28] architecture of a convolutional neural network (CNN), with transfer learning from ImageNet [29], to extract features from the MRNet knee dataset. On this dataset it achieved AUCs of 0.937, 0.965 and 0.847 for abnormalities, ACL tears and meniscus tears, respectively, whereas on external validation with the KneeMRI dataset the AUC was 0.911. These results were better than those of the earlier semi-automated machine learning work on KneeMRI [23] for ACL tear detection. Another study proposed multiple CNN architectures using U-Net [30] and ResNet [31] to detect complete ACL tears in the FastMRI dataset [32]. The accuracy was 0.720 for cropped images, 0.765 for dynamically cropped images and only 0.680 for uncropped images [33].

In a previous study, Liu et al. [34] proposed a hybrid of CNN architectures to detect ACL tears. First, the authors used the LeNet-5 architecture [35] to select the slices containing the ACL; second, they extracted the intercondylar notch containing the ACL using you only look once (YOLO) [36]; and last, they adopted the densely connected convolutional network DenseNet [37] to classify the presence or absence of an ACL tear, with an AUC of 0.98. Classification was also performed with VGG16 [38] and AlexNet, with AUCs of 0.95 and 0.90, respectively. However, training all three architectures in a cascaded fashion is computationally expensive and time-consuming. Namiri et al. [39] used a 3D CNN to automatically classify hierarchical severity stages of ACL injury, with an accuracy 3% higher than that of a 2D CNN. The study in [40] related MRI to arthroscopy findings and used the DenseNet architecture on only 489 MRI samples, of which 163 were from a torn ACL and 245 from an intact ACL. In a comparison study on musculoskeletal imaging, Irmakci et al. [41] evaluated three CNN architectures, AlexNet, ResNet and GoogLeNet, which achieved AUCs of 0.938, 0.956 and 0.890, respectively, in detecting ACL tears on the MRNet dataset. The ResNet-18 model performed better for ACL tears, but its results for abnormalities were not good. Meniscus tear detection was the most challenging task, with low accuracy and low sensitivity. The recent state-of-the-art work [42] used a lightweight model, the efficiently-layered network ELNet [43], which achieved an AUC of 0.960 in detecting ACL tears on MRNet. It was also evaluated on the KneeMRI dataset, where 5-fold cross-validation gave an AUC of 0.913 for injury detection.

In all the above studies, the authors mostly used the MRNet and KneeMRI knee MRI datasets. However, the classes in these datasets are not balanced, which biases the training data. Deep learning architectures also require comprehensive training on the data. The literature suggests that ELNet and ResNet achieved excellent AUC results compared to other architectures. Moreover, detecting anterior cruciate ligament (ACL) injury correctly and efficiently in an automated way, without involving a radiologist, remains a challenge.

3. Materials and Methods

This section presents the materials and methods used in this study. Section 3.1 details the dataset of MRI images and its features and classes. Section 3.2 proceeds to data pre-processing and class balancing. Finally, Section 3.3 presents the proposed customized ResNet and explains the real-time data augmentation.

3.1. Dataset

A total of 917 sagittal-plane knee MRI volumes in DICOM format were obtained from the picture archiving and communication system of the Clinical Hospital Centre Rijeka [23]. The images were 12-bit greyscale, each with an assigned ACL diagnosis. All volumes were recorded from 2007 to 2010 on a Siemens Avanto 1.5 T MRI scanner (Siemens, Muenchen, Germany) using proton density-weighted fat-suppression sequences. The authors provided a metadata CSV file, samples of which are shown in Table 2. The ACL diagnosis has three classes: healthy (label 0), partial tear (label 1) and fully ruptured (label 2). Of the 917 samples, stored as pickle files, 690 are healthy, 172 are partial tears and 55 are complete ruptures.

Table 2.

The samples of metadata of 9 features and 1 class label of ACL diagnosis.

| Series No | Knee LR | ROIX | ROIY | ROIZ | ROI Height | ROI Width | ROI Depth | Volume Filename | ACL Diagnosis |
|---|---|---|---|---|---|---|---|---|---|
| 5 | 0 | 126 | 96 | 14 | 78 | 79 | 4 | 502889-5.pck | 0 |
| 5 | 0 | 116 | 177 | 13 | 83 | 79 | 4 | 507277-5.pck | 1 |
| 5 | 1 | 113 | 140 | 9 | 89 | 96 | 4 | 496580-5.pck | 2 |

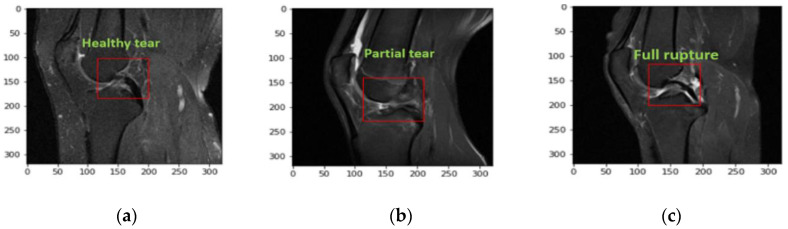

The red squares in Figure 2a–c mark ACLs of three different severities. These are pickle MRI images of a healthy ACL, a partial tear and a full rupture, respectively.

Figure 2.

MRI samples of the three classes: (a) healthy ACL with no change in length, (b) partial ACL tear, (c) fully ruptured ACL.

3.2. Data Pre-Processing

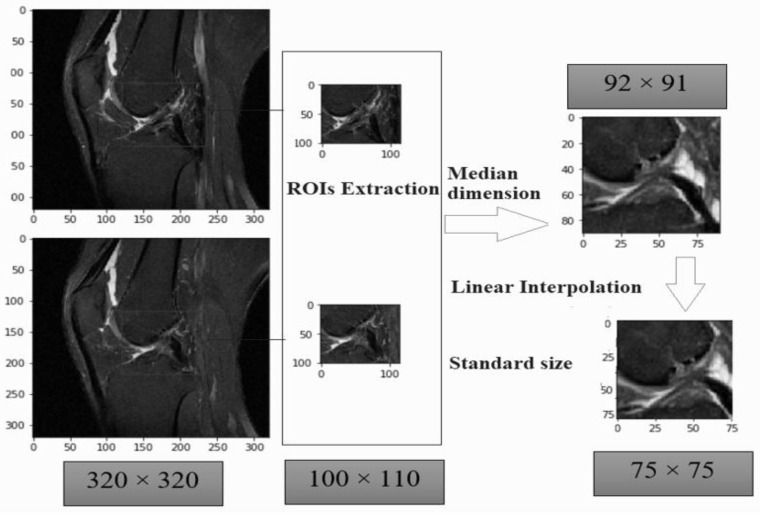

We performed three steps of data pre-processing on the metadata file and the images. First, we applied the normal approach [44,45] to localize the region of interest (ROI), as the sample MR images did not all have the same width and height. The input volumes covering the wider ACL area ranged from 290 × 300 × 21 to 320 × 320 × 60, with median dimensions of 320 × 320 × 32; these values represent the slice width, slice height and number of slices in a single volume file, respectively. The ROIs focus on a region or subset of tissues in the MRI slices and discard unnecessary details from the inspected images. The ROI boundaries were calculated manually by adding the ROI height to ROIY and the ROI width to ROIX, using the columns of our metadata file (Table 2). The ROIs obtained this way ranged from 54 × 46 × 2 to 124 × 136 × 6, with average dimensions of 92 × 91 × 3. Because ROIs of varying size can affect training, we rescaled all ROI slices to one standard size of 75 × 75 using linear interpolation. This rescaling can improve model performance in Google Colab, but it also causes a loss of visual features in some slices. Figure 3 illustrates the process for a sample input image with dimensions of 320 × 320 × 60: an ROI of median dimension 92 × 91 is extracted and then fitted to the standard size of 75 × 75.
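The ROI step described above can be sketched in a few lines of NumPy. This is a minimal illustration rather than the authors' code: the function names, the (slice, row, column) axis order and the random stand-in volume are assumptions, while the ROI values come from the first row of Table 2.

```python
import numpy as np

def crop_roi(volume, roi_x, roi_y, roi_z, roi_w, roi_h, roi_d):
    """Crop the ROI box from a volume indexed as (slice, row, column).

    The axis order is an assumption; ROIX is treated as the column offset
    and ROIY as the row offset.
    """
    return volume[roi_z:roi_z + roi_d,
                  roi_y:roi_y + roi_h,
                  roi_x:roi_x + roi_w]

def resize_bilinear(img, out_h=75, out_w=75):
    """Rescale one 2D slice to (out_h, out_w) with linear interpolation."""
    in_h, in_w = img.shape
    rows = np.linspace(0, in_h - 1, out_h)
    cols = np.linspace(0, in_w - 1, out_w)
    r0 = np.floor(rows).astype(int)
    c0 = np.floor(cols).astype(int)
    r1 = np.minimum(r0 + 1, in_h - 1)
    c1 = np.minimum(c0 + 1, in_w - 1)
    fr = (rows - r0)[:, None]   # fractional row offsets
    fc = (cols - c0)[None, :]   # fractional column offsets
    top = img[r0][:, c0] * (1 - fc) + img[r0][:, c1] * fc
    bottom = img[r1][:, c0] * (1 - fc) + img[r1][:, c1] * fc
    return top * (1 - fr) + bottom * fr

def preprocess(volume, roi):
    """Crop the ROI and rescale every slice in it to 75 x 75."""
    sub = crop_roi(volume, *roi).astype(np.float32)
    return np.stack([resize_bilinear(s) for s in sub])

# Stand-in for one 12-bit volume of median size (32 slices of 320 x 320).
volume = np.random.default_rng(0).integers(0, 4096, size=(32, 320, 320))
# ROIX, ROIY, ROIZ, ROI width, ROI height, ROI depth (Table 2, first row).
roi = (126, 96, 14, 79, 78, 4)
slices = preprocess(volume, roi)
print(slices.shape)
```

Each ROI slice is handled independently, so a volume with four ROI slices yields four 75 × 75 training images.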

Figure 3.

Region of interest-based pre-processing extraction with one standard size.

Second, before feeding the dataset into the model, we mapped each extracted ROI to its corresponding label from the structured data file.

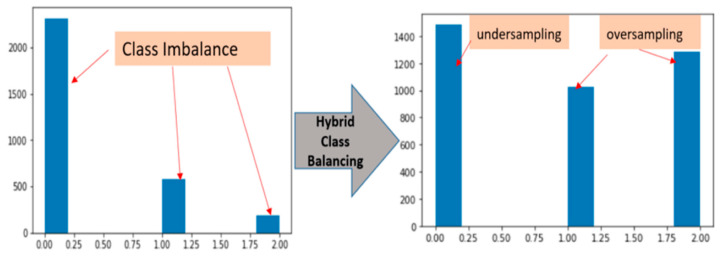

Last, we handled the problem of class imbalance through a hybrid approach that combines over-sampling and under-sampling. There were initially 3081 pickle MRI images in total: healthy (2315 images), partial tears (580 images) and fully ruptured tears (186 images), so the distribution among the three classes is imbalanced. Under-sampling reduces the number of samples in the majority class to match the number of minority-class samples. It may not generalize to unseen data, because it risks information loss, a biased sample and an inaccurate representation of the whole population, and it is only preferred when the minority class is large. Over-sampling, on the other hand, increases the number of samples in the minority class to match the majority class, but it can cause over-fitting [46,47,48]. We therefore applied random under-sampling to the majority class (label 0) and randomly added observations by replication to the minority classes (label 1 and label 2).

Figure 4 shows the result of hybrid class balancing, with the bars of the three classes becoming almost equally distributed. After hybrid class balancing, the class sizes are 1487, 1027 and 1283 for healthy, partial and fully ruptured tears, respectively.
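A hybrid scheme of this kind can be sketched as follows. The text does not state how the target size of each class was chosen, so the sketch simply takes the reported class sizes as given targets; the function name `hybrid_balance` and the seed are hypothetical.

```python
import numpy as np

def hybrid_balance(labels, targets, seed=0):
    """Return sample indices after hybrid class balancing.

    Classes larger than their target are randomly under-sampled without
    replacement; classes smaller than their target keep every sample and
    are topped up by random replication.
    """
    rng = np.random.default_rng(seed)
    labels = np.asarray(labels)
    chosen = []
    for cls, target in targets.items():
        idx = np.flatnonzero(labels == cls)
        if len(idx) >= target:
            picked = rng.choice(idx, size=target, replace=False)
        else:
            extra = rng.choice(idx, size=target - len(idx), replace=True)
            picked = np.concatenate([idx, extra])
        chosen.append(picked)
    out = np.concatenate(chosen)
    rng.shuffle(out)
    return out

# Initial class sizes and post-balancing sizes as reported in the text.
labels = np.repeat([0, 1, 2], [2315, 580, 186])
targets = {0: 1487, 1: 1027, 2: 1283}
balanced = hybrid_balance(labels, targets)
counts = np.bincount(labels[balanced])
print(counts)
```

The returned indices can be used to gather both the image array and the label array, so replicated minority samples stay paired with their labels.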

Figure 4.

Hybrid class balancing with under-sampling and over-sampling.

3.3. Our Proposed Custom ResNet-14 Architecture

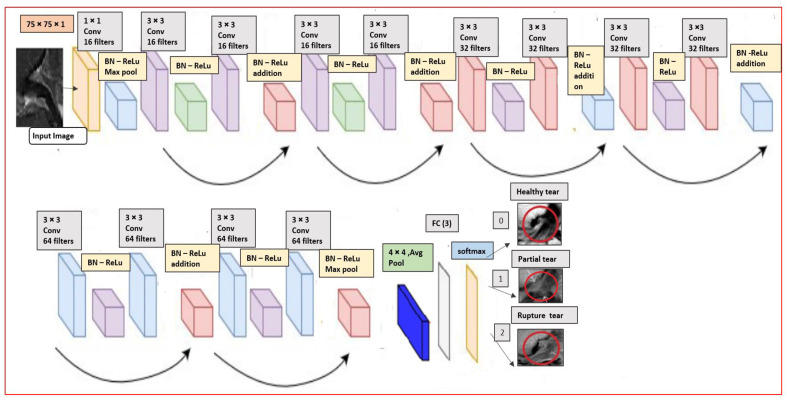

In this section we briefly explain the proposed custom residual network (ResNet) CNN architecture. After the pre-processing steps above, we built an end-to-end model by modifying the original version-1 ResNet-18 [31] into the proposed ResNet-14 network structure illustrated in Figure 5. An MR image with dimensions 75 × 75 × 1 is provided to the input layer. We added batch normalization (BN) [49] right after each 3 × 3 convolutional layer (Conv) and before the rectified linear unit (ReLU) activation function; it acts as a regularizer and significantly reduces the vanishing gradient problem. The network then applies a sequence of three inner ResNet stacks of convolutions, with stride 2 and 3 × 3 max pooling, using n = 2 residual blocks per stack instead of 3 to avoid overfitting. In total there are 6n + 2 stacked weighted layers.

Figure 5.

Our customized ResNet-14 architecture.
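As a worked check of the 6n + 2 count, assume the usual convention for this family of networks: three stacks, n residual blocks per stack, two convolutional layers per block, plus the first convolution and the final fully connected layer. That breakdown is an assumption here, since the text gives only the formula.

```latex
\underbrace{3}_{\text{stacks}} \times \underbrace{n}_{\text{blocks per stack}} \times \underbrace{2}_{\text{conv layers per block}} + 2
  = 6n + 2 = 6 \cdot 2 + 2 = 14
```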

Further, we controlled the learning process by manually fine-tuning the hyper-parameters, which have a great impact on the performance of the model. In the compile stage of the proposed architecture, we chose the Adam [50] optimizer, which keeps track of exponentially decaying averages of past gradients. The learning rate was configured to be set dynamically on the basis of the number of epochs; in our case we used a learning rate of 0.001, a batch size of 32 and 120 epochs. At the end of the network, average pooling (Avg pool) and a fully connected (FC) layer with three outputs and a softmax activation function were added to detect healthy, partial and ruptured tears in the MRI. The details of the convolutional layers and their order in the custom ResNet-14 model are given in Table 3. The total number of parameters is 179,075.
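An epoch-dependent learning rate is commonly written as a small schedule function. The sketch below is illustrative only: the base rate of 0.001 and the 120 epochs come from the text, but the breakpoints (epochs 60 and 100) and the decay factors are hypothetical, because the schedule itself is not specified.

```python
def lr_schedule(epoch, base_lr=1e-3):
    """Hypothetical step decay; breakpoints and factors are illustrative."""
    if epoch >= 100:
        return base_lr * 1e-2
    if epoch >= 60:
        return base_lr * 1e-1
    return base_lr

# Learning rate used in each of the 120 training epochs.
rates = [lr_schedule(epoch) for epoch in range(120)]
print(rates[0], rates[60], rates[119])
```

In Keras, a function of this shape is typically passed to the `LearningRateScheduler` callback so that the optimizer picks up the new rate at the start of each epoch.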

Table 3.

Configuration details of the customized ResNet-14 model with output sizes.

| Layer Name | Output Size | Layer Information |
|---|---|---|
| Input layer | 75 × 75 × 1 | |
| conv1 | 75 × 75 | 1 × 1, strides 2, 16 |
| conv2_d (1 block) | 75 × 75 | 3 × 3, maxpool stride 2 3 × 3, 16 |
| conv2_d (2 block) | 75 × 75 | 3 × 3, 16 |
| conv3_d (1 block) | 38 × 38 | 3 × 3, 32 |
| conv3_d (2 block) | 38 × 38 | 3 × 3, 32 |
| conv4_d (1 block) | 19 × 19 | 3 × 3, 64 |
| conv4_d (2 block) | 19 × 19 | maxpool 3 × 3, 64 |
| Average Pool | 4 × 4 | 4 × 4 average pool |
| Fully Connected Layer | Three classes | 64 × 3 fully connections |
| Softmax | output three classes | Healthy, partial and rupture |
| Total parameters | 179,075 | |
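The spatial sizes in Table 3 can be reproduced with a short trace. The sketch assumes that the first stack keeps the input resolution, that the second and third stacks downsample with stride 2 and 'same' padding, and that the 4 × 4 average pool uses 'valid' padding; these padding conventions are assumptions, chosen because they match the listed output sizes.

```python
import math

def same_conv_out(size, stride):
    """Output length of a convolution with 'same' padding."""
    return math.ceil(size / stride)

def valid_pool_out(size, pool, stride=None):
    """Output length of a pooling layer with 'valid' padding."""
    stride = stride or pool
    return (size - pool) // stride + 1

# (filters, stride at the entry of the stack) for the three stacks.
stacks = {"conv2_d": (16, 1), "conv3_d": (32, 2), "conv4_d": (64, 2)}

size = 75  # input slices are 75 x 75 x 1
trace = {}
for name, (filters, stride) in stacks.items():
    size = same_conv_out(size, stride)
    trace[name] = (size, size, filters)

pooled = valid_pool_out(size, pool=4)
trace["average_pool"] = (pooled, pooled, 64)

for name, shape in trace.items():
    print(name, shape)
```

Halving 75 with 'same' padding rounds up to 38 and then to 19, which is why the table lists odd intermediate sizes rather than 37 and 18.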

Finally, we included real-time data augmentation in our model, which generates different images in each epoch. It randomly augments the images at runtime and applies the transformations in mini-batches [51]. It is therefore more efficient than offline augmentation, because it does not require extensive training. Offline data augmentation has significantly increased the diversity of available data, without actually collecting new data, through cropping, padding, flipping, rotating and combining in MRI applications such as Alzheimer’s stage detection and brain tumor detection [52,53,54].

Real-time data augmentation has achieved good accuracy with the Inception v3 CNN model for breast cancer [55]. We used real-time data augmentation with the ImageDataGenerator class from the Keras library, which generates batches of tensor image data [56,57,58]. Table 4 describes the augmentation parameters that we used.

Table 4.

List of selected real-time augmentation with arguments and their description.

| Sr. No. | Augmentation Arguments | Description |
|---|---|---|
| 1. | featurewise_center | Set input mean to 0 over the dataset |
| 2. | featurewise_std_normalization | Divide inputs by standard deviation of dataset |
| 3. | zca_epsilon = 1 × 10⁻⁶ | Epsilon for zero-phase component analysis (ZCA) whitening |
| 4. | fill_mode = ‘nearest’ | Set mode for filling points outside the input boundaries |
| 5. | horizontal_flip = True | Randomly flip images horizontally |
| 6. | vertical_flip = True | Randomly flip images vertically |
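To make the behaviour of these settings concrete, the sketch below re-creates the centering, standardization and random-flip steps in plain NumPy. It is a stand-in written for illustration, not the Keras implementation: the class name `FlipAugmenter`, the 0.5 flip probability and the random test data are assumptions.

```python
import numpy as np

class FlipAugmenter:
    """NumPy stand-in for featurewise centering, featurewise standardization
    and random horizontal/vertical flips applied per mini-batch."""

    def __init__(self, seed=0, eps=1e-6):
        self.rng = np.random.default_rng(seed)
        self.eps = eps      # small constant to avoid division by zero
        self.mean = 0.0
        self.std = 1.0

    def fit(self, x):
        # Dataset-wide statistics, computed once on the training images.
        self.mean = x.mean()
        self.std = x.std()
        return self

    def flow(self, x, y, batch_size=32):
        """Yield one epoch of freshly augmented mini-batches."""
        order = self.rng.permutation(len(x))
        for start in range(0, len(x), batch_size):
            idx = order[start:start + batch_size]
            batch = (x[idx] - self.mean) / (self.std + self.eps)
            for i in range(len(batch)):
                if self.rng.random() < 0.5:
                    batch[i] = batch[i][:, ::-1].copy()   # horizontal flip
                if self.rng.random() < 0.5:
                    batch[i] = batch[i][::-1, :].copy()   # vertical flip
            yield batch, y[idx]

# Random stand-in data: 100 single-channel 75 x 75 images, three classes.
x = np.random.default_rng(1).random((100, 75, 75, 1))
y = np.arange(100) % 3
augmenter = FlipAugmenter().fit(x)
batches = list(augmenter.flow(x, y))
print(len(batches), batches[0][0].shape)
```

Because the flips are drawn again on every call to `flow`, each epoch sees a different variant of the same images while the stored dataset is left unchanged.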

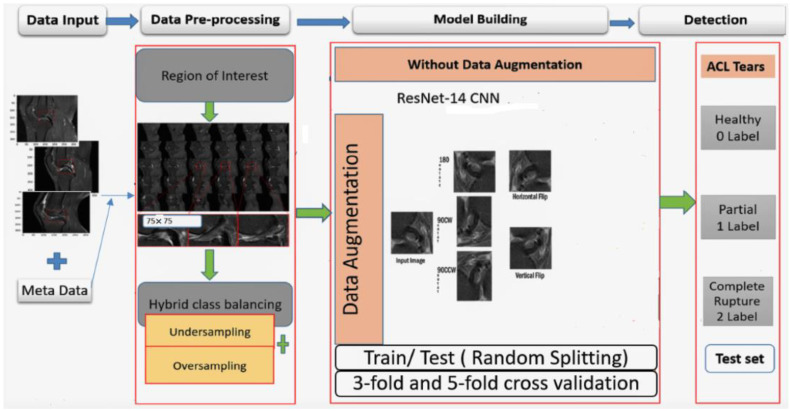

Furthermore, the block diagram of the whole process of the proposed work is illustrated in Figure 6, with four main stages. The first is the data input stage, where the image dimensions are combined with the metadata to generate images through the pickle library. In the second stage, the images are resized to the region of interest and hybrid class balancing is applied. In the model building stage, our custom ResNet-14 is trained with and without online data augmentation. In the last stage, the performance in detecting anterior cruciate ligament tears is measured and compared using a random train/test split and K-fold cross-validation.

Figure 6.

A block diagram of the proposed methodology.

4. Experimental Results

In this section we will present the experimental setup, to analyze our model and to evaluate the results.

4.1. Experimental Setup

The experiments were carried out on Google Colab with Python 3.6. In [59], a CNN model implemented for knee cancellous bones achieved 99% accuracy with better acceleration. We therefore selected Google Colab, which provides a free GPU, a Tesla K80 with 2496 CUDA cores and 12 GB of RAM. The ResNet model was coded using Keras (version 1.0) with a TensorFlow backend. The model was validated with train/test split and cross-validation techniques.

4.2. Train/Test Split

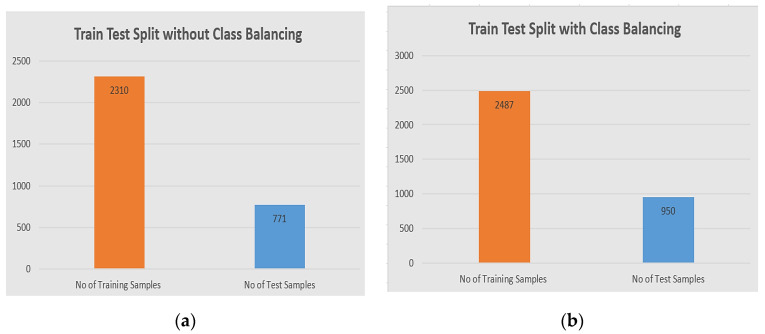

The model was validated through a train/test split for each approach, with and without class balancing. After image normalization, we split the full dataset into training and test sets, using 75% of the data for training and 25% for testing. We used two sample sets: one before class balancing and one after. The details of the train/test split are shown in Figure 7.

Figure 7.

The division of train/test validation: (a) the original dataset before class balancing, with 2310 samples in the training set and 771 in the test set; (b) the dataset after class balancing, with 2387 samples in the training set and 950 in the test set.
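A random 75/25 split can be sketched as below. Rounding the test-set size up is an assumption; it is chosen because it reproduces the 2310/771 division reported for the 3081 original images. The function name and seed are hypothetical.

```python
import math
import numpy as np

def train_test_indices(n_samples, test_fraction=0.25, seed=0):
    """Shuffle sample indices and split them into train and test parts."""
    rng = np.random.default_rng(seed)
    order = rng.permutation(n_samples)
    n_test = math.ceil(test_fraction * n_samples)  # assumed rounding rule
    return order[n_test:], order[:n_test]

train_idx, test_idx = train_test_indices(3081)
print(len(train_idx), len(test_idx))
```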

4.3. K-Fold Cross-Validation

The model was also validated with K-fold cross-validation, in which the data are randomly divided into K groups known as folds. One fold is kept as the validation set and the remaining data are used for training, and this is repeated for each fold. The overall K-fold loss is the mean loss over all folds; likewise, the overall accuracy is the average accuracy over all folds. We used K = 3 and K = 5. K-fold cross-validation reduces the bias and the variance of the performance estimate.
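The fold construction and the averaging of loss and accuracy can be sketched as follows. The `evaluate` callback is hypothetical: it stands for training the model on the training indices and returning the loss and accuracy measured on the held-out fold. The constant values in the usage line are placeholders, not results.

```python
import numpy as np

def k_fold_indices(n_samples, k=5, seed=0):
    """Yield (train_idx, val_idx) pairs; each fold is held out exactly once."""
    rng = np.random.default_rng(seed)
    folds = np.array_split(rng.permutation(n_samples), k)
    for i in range(k):
        val_idx = folds[i]
        train_idx = np.concatenate([folds[j] for j in range(k) if j != i])
        yield train_idx, val_idx

def cross_validate(evaluate, n_samples, k=5):
    """Average the (loss, accuracy) pairs returned by `evaluate` over k folds."""
    scores = np.array([evaluate(tr, va)
                       for tr, va in k_fold_indices(n_samples, k)])
    return scores.mean(axis=0)

# Placeholder callback that returns fixed values instead of training a model.
mean_loss, mean_acc = cross_validate(lambda tr, va: (0.5, 0.9),
                                     n_samples=3797, k=5)
print(mean_loss, mean_acc)
```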

To evaluate the performance of our model, we used the confusion matrix, from which precision, sensitivity, F1-score, specificity and their weighted average were computed. We also considered the receiver operating characteristic (ROC) curve and the area under the curve (AUC).
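For a three-class problem these metrics are usually computed one class against the rest. The sketch below derives them from a confusion matrix; the matrix values are made up for illustration and are not results from this study.

```python
import numpy as np

def per_class_metrics(cm):
    """One-vs-rest metrics from a confusion matrix.

    cm[i, j] is the number of samples of true class i predicted as class j.
    """
    cm = np.asarray(cm, dtype=float)
    tp = np.diag(cm)
    fn = cm.sum(axis=1) - tp        # missed samples of each class
    fp = cm.sum(axis=0) - tp        # samples wrongly assigned to each class
    tn = cm.sum() - tp - fn - fp
    precision = tp / (tp + fp)
    sensitivity = tp / (tp + fn)
    specificity = tn / (tn + fp)
    f1 = 2 * precision * sensitivity / (precision + sensitivity)
    accuracy = tp.sum() / cm.sum()
    return {"precision": precision, "sensitivity": sensitivity,
            "specificity": specificity, "f1": f1, "accuracy": accuracy}

# Toy confusion matrix (rows: true healthy, partial, ruptured).
toy_cm = [[8, 1, 1],
          [2, 6, 2],
          [0, 1, 9]]
metrics = per_class_metrics(toy_cm)
for name, value in metrics.items():
    print(name, np.round(value, 3))
```

Averaging each per-class array gives the "Average" column style used when reporting multi-class results.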

4.4. Prediction Performance of Proposed ResNet

We compiled the model with the cross-entropy loss function and the Adam optimizer with a learning rate of 0.001, and trained it with a batch size of 32 for 120 epochs. Table 5 shows the test loss and test accuracy after fitting the model for 120 epochs, for each of the six approaches with which we evaluated and tested our ResNet CNN.

Table 5.

The ResNet-14 CNN test loss and test accuracy.

| ResNet-14 CNN Model Tested Approaches | Test Loss | Test Accuracy |
|---|---|---|
| Without class balancing and data augmentation (5-fold cross-validation) | 1.294 | 0.805 |
| Without class balancing but data augmentation (5-fold cross-validation) | 1.089 | 0.774 |
| Class balancing and without data augmentation (random splitting) | 0.537 | 0.884 |
| Class balancing and data augmentation (random splitting) | 0.526 | 0.895 |
| Class balancing and data augmentation (3-fold cross-validation) | 0.533 | 0.895 |
| Class balancing and data augmentation (5-fold cross-validation) | 0.466 | 0.919 |

The minimum loss value of 0.466 was obtained by the best approach for our model: class balancing and data augmentation with 5-fold cross-validation. The accuracy is computed by dividing the number of correct predictions by the total number of predictions made and multiplying by one hundred to obtain a percentage. Across all six approaches, the ResNet-14 model with class balancing and data augmentation achieved the highest accuracy, 92%, with 5-fold cross-validation. The detailed performance of each approach is shown in Table 6.

Table 6.

Performance Metrics of model ResNet convolutional neural network (CNN).

| Approaches | Evaluation | Healthy Tear | Partial Tear | Full Torn | Average |
|---|---|---|---|---|---|
| Without class balancing and data augmentation (5-fold cross-validation) | Precision | 0.85 | 0.57 | 0.57 | 0.663 |
| | Sensitivity | 0.96 | 0.39 | 0.22 | 0.523 |
| | F1-score | 0.90 | 0.47 | 0.31 | 0.563 |
| | Specificity | 0.78 | 0.86 | 0.95 | 0.863 |
| | Accuracy | - | - | - | 0.81 |
| | AUC | 0.87 | 0.81 | 0.91 | 0.863 |
| Without class balancing with data augmentation (5-fold cross-validation) | Precision | 0.83 | 0.47 | 0.47 | 0.590 |
| | Sensitivity | 0.94 | 0.29 | 0.22 | 0.483 |
| | F1-score | 0.88 | 0.36 | 0.30 | 0.513 |
| | Specificity | 0.70 | 0.78 | 0.96 | 0.813 |
| | Accuracy | - | - | - | 0.77 |
| | AUC | 0.83 | 0.76 | 0.91 | 0.833 |
| Hybrid class balancing without data augmentation (random splitting) | Precision | 0.87 | 0.81 | 0.96 | 0.880 |
| | Sensitivity | 0.85 | 0.79 | 0.99 | 0.877 |
| | F1-score | 0.86 | 0.80 | 0.98 | 0.880 |
| | Specificity | 0.90 | 0.92 | 0.99 | 0.910 |
| | Accuracy | - | - | - | 0.88 |
| | AUC | 0.96 | 0.95 | 0.99 | 0.967 |
| Hybrid class balancing with data augmentation (random splitting) | Precision | 0.89 | 0.84 | 0.94 | 0.890 |
| | Sensitivity | 0.86 | 0.81 | 0.99 | 0.887 |
| | F1-score | 0.88 | 0.83 | 0.97 | 0.893 |
| | Specificity | 0.91 | 0.92 | 0.99 | 0.940 |
| | Accuracy | - | - | - | 0.90 |
| | AUC | 0.97 | 0.96 | 0.99 | 0.973 |
| Hybrid class balancing with data augmentation (3-fold cross-validation) | Precision | 0.90 | 0.83 | 0.94 | 0.890 |
| | Sensitivity | 0.87 | 0.80 | 0.99 | 0.887 |
| | F1-score | 0.88 | 0.82 | 0.97 | 0.890 |
| | Specificity | 0.91 | 0.92 | 0.99 | 0.940 |
| | Accuracy | - | - | - | 0.90 |
| | AUC | 0.97 | 0.94 | 0.99 | 0.967 |
| Hybrid class balancing with data augmentation (5-fold cross-validation) | Precision | 0.92 | 0.87 | 0.96 | 0.917 |
| | Sensitivity | 0.89 | 0.87 | 0.99 | 0.917 |
| | F1-score | 0.90 | 0.87 | 0.98 | 0.917 |
| | Specificity | 0.93 | 0.92 | 0.99 | 0.947 |
| | Accuracy | - | - | - | 0.92 |
| | AUC | 0.98 | 0.97 | 0.99 | 0.980 |

5. Discussion

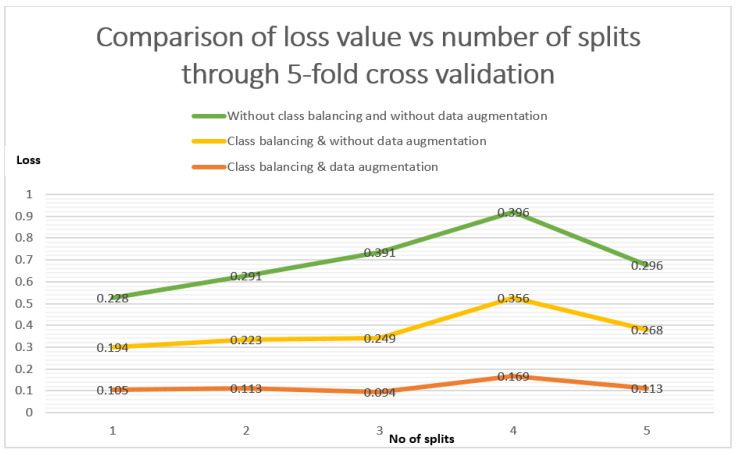

In this study, we presented a fully automated ACL detection method in detail and set it against related work. We studied the problem of efficient ACL detection and accurate selection of the ROI boundaries using a deep learning-based custom 14-layer residual network CNN. We compared the performance of ResNet-14 with and without class balancing and data augmentation, as reported in Table 6. When the model was applied without class balancing, the overall accuracy did not exceed 80.5% for detecting healthy, partial and ruptured tears. With hybrid class balancing and data augmentation, there was no significant difference in accuracy between random splitting and 3-fold cross-validation. However, the highest accuracy, 92%, was observed for the ResNet-14 CNN model with hybrid class balancing and data augmentation under 5-fold cross-validation.

The three approaches are (1) without class balancing and data augmentation, (2) class balancing without data augmentation, and (3) class balancing with data augmentation. Figure 8 compares the loss values of the three approaches across the splits. The orange line corresponds to our standard approach of class balancing with data augmentation. Its loss is 1.05 at split 1 and 0.113 at split 5, remaining lower than that of the other two approaches.

Figure 8.

Loss comparison of class balancing and augmentation through 5-fold cross validation.

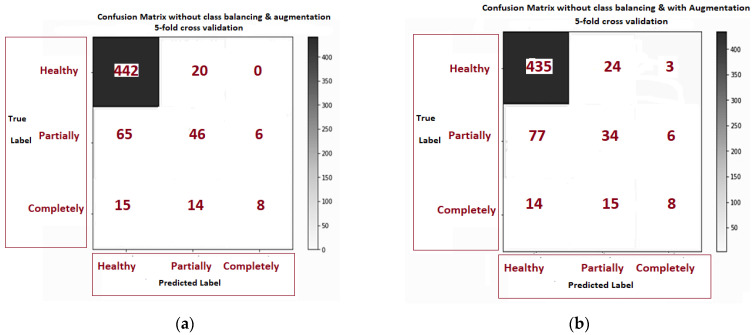

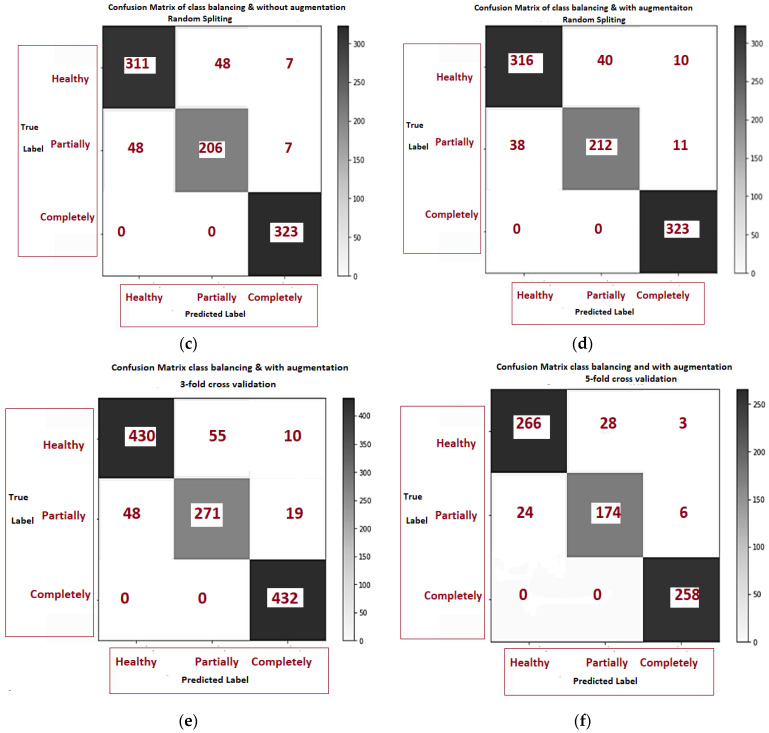

Figure 9a–f shows the confusion matrices of all six approaches, with the true positives, true negatives, false positives and false negatives for the three classes of healthy, partially torn and completely ruptured ACLs. Next, the ROC curves were plotted by computing the true positive rate (TPR) and false positive rate (FPR) at varying thresholds for the six approaches, as shown in Figure 10a–f. The proposed ResNet-14 with hybrid class balancing and data augmentation achieved an average area under the ROC curve (AUC) of 98%.

Figure 9.

The confusion matrices of the six approaches to class balancing and data augmentation: (a) without class balancing and augmentation, 5-fold cross-validation; (b) without class balancing but with augmentation, 5-fold cross-validation; (c) class balancing without augmentation; (d) class balancing with augmentation; (e) class balancing and augmentation, 3-fold cross-validation; (f) class balancing and augmentation, 5-fold cross-validation.

Figure 10.

The areas under the curve of the ResNet CNN model. (a) AUC of each class after 5-fold cross-validation without class balancing and without data augmentation; the average AUC is 0.863. (b) AUC of each class after 5-fold cross-validation without class balancing but with data augmentation; the average AUC is 0.833. (c) AUC of each class after a random train/test split with hybrid class balancing but without data augmentation; the AUC is 0.966. (d) AUC after a random train/test split with hybrid class balancing and data augmentation; the AUC is 0.973. (e) AUC after 3-fold cross-validation with hybrid class balancing and data augmentation; the AUC is 0.966. (f) AUC after 5-fold cross-validation with hybrid class balancing and data augmentation; the AUC of 0.98 is the highest of all approaches.
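The per-class ROC curves and AUCs can be computed one class against the rest from the softmax outputs. The sketch below is a plain NumPy version written for illustration; the function names are hypothetical and the six probability rows are made-up toy values, not model outputs from this study.

```python
import numpy as np

def roc_curve_binary(y_true, scores):
    """FPR and TPR at every distinct score threshold (labels in {0, 1})."""
    order = np.argsort(-scores, kind="stable")
    y, s = y_true[order], scores[order]
    # Index of the last sample at each distinct score, so ties share a point.
    last = np.r_[np.flatnonzero(np.diff(s)), len(s) - 1]
    tps = np.cumsum(y)[last]
    fps = np.cumsum(1 - y)[last]
    tpr = np.r_[0.0, tps / tps[-1]]
    fpr = np.r_[0.0, fps / fps[-1]]
    return fpr, tpr

def one_vs_rest_auc(labels, probs):
    """Trapezoidal AUC of each class against all other classes."""
    aucs = []
    for cls in range(probs.shape[1]):
        fpr, tpr = roc_curve_binary((labels == cls).astype(int), probs[:, cls])
        aucs.append(np.sum(np.diff(fpr) * (tpr[1:] + tpr[:-1]) / 2))
    return np.array(aucs)

# Toy data: two samples per class with made-up softmax outputs.
labels = np.array([0, 0, 1, 1, 2, 2])
probs = np.array([[0.80, 0.10, 0.10],
                  [0.60, 0.30, 0.10],
                  [0.30, 0.50, 0.20],
                  [0.50, 0.25, 0.25],
                  [0.10, 0.20, 0.70],
                  [0.20, 0.20, 0.60]])
aucs = one_vs_rest_auc(labels, probs)
print(aucs, aucs.mean())
```

The mean of the three per-class values corresponds to the average AUC reported alongside the individual class curves.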

Eight groups have previously used deep learning methodology to detect ACL tears of various pathology. Table 7 compares their performance, datasets and models with our work. The dataset used in our work, KneeMRI, was collected at the Clinical Hospital Centre Rijeka by Štajduhar et al. [23], who reported an AUC of 0.894 for separating injured from non-injured cases; however, partial tears were not recognized well. The original MRNet by Bien et al. [27] showed no significant change in accuracy when detecting abnormalities and was unable to distinguish among abnormalities because it considered only a tiny portion of the 3D imaging. Its sensitivity for fully torn ACLs was 76%, with an AUC of 0.965; on the external KneeMRI dataset, it improved the AUC to 0.911. The ground truth values were not measured correctly by the surgeon. Chang et al. [33] applied a dynamic patch-based residual network to 260 subjects to detect ACL tears with an accuracy of 0.967. However, the prevalence of complete ACL tears was low and the model was biased towards high sensitivity due to unbalanced samples. Liu et al. [34] considered three CNN models in a cascade rather than a single pipeline, which adds a training burden; bias was not verified, and the training dataset was considerably smaller. Moreover, it was evaluated only on full-thickness ACL tears, not on other classes.

Table 7.

Comparison of state-of-the-art works with our proposed model.

| Author, Year | Model | Dataset | Target Output | Accuracy | Sensitivity | Precision | Specificity | AUC |
|---|---|---|---|---|---|---|---|---|
| Štajduhar et al. [23] | HOG + linear-kernel SVM (k = 10) | KneeMRI, 917 exams | partial tear | - | - | - | - | 0.894 |
| | | | ruptured tear | - | - | - | - | 0.943 |
| Bien et al., 2018 [27] | AlexNet | MRNet, 1370 exams | ACL tear | 0.867 | 0.759 | - | 0.968 | 0.965 |
| | | | abnormal | 0.850 | 0.879 | - | 0.714 | 0.937 |
| | | | meniscus tear | 0.725 | 0.892 | - | 0.741 | 0.847 |
| | Logistic regression | KneeMRI, 917 exams | partial tear, ruptured tear | - | - | - | - | 0.911 |
| Chang et al., 2019 [33] | Dynamic patch + ResNet | 260 MRI coronal volumes | partial ACL tear, fully torn ACL | 0.967 | 1.00 | 0.938 | 0.933 | - |
| Liu et al., 2019 [34] | VGG16 | sagittal MR, 175 exams | full-thickness ACL tear, intact ACL | - | 0.92 | - | 0.92 | 0.95 |
| | DenseNet | | | - | 0.96 | - | 0.96 | 0.98 |
| | AlexNet | | | - | 0.89 | - | 0.88 | 0.90 |
| Namiri et al., 2019 [39] | 2D CNN | NIH MRI, 1243 exams | intact ACL | - | 0.22 | - | 0.90 | - |
| | 3D CNN | | intact ACL | - | 0.89 | - | 0.88 | - |
| | 2D CNN | | partial tear | - | 0.75 | - | 1.00 | - |
| | 3D CNN | | partial tear | - | 0.25 | - | 0.92 | - |
| | 2D CNN | | full tear | - | 0.82 | - | 0.94 | - |
| | 3D CNN | | full tear | - | 0.76 | - | 1.00 | - |
| Zhang et al., 2020 [40] | 3D DenseNet | sagittal MR, 408 exams | ACL tear, intact ACL | 0.957 | 0.976 | 0.940 | 0.944 | 0.960 |
| | ResNet | | | 0.943 | 0.952 | 0.952 | 0.909 | 0.946 |
| | VGG16 | | | 0.899 | 0.912 | 0.869 | 0.886 | 0.859 |
| Irmakci et al., 2020 [41] | AlexNet | MRNet, 1370 exams | abnormal | 0.8583 | 0.978 | - | 0.400 | 0.891 |
| | | | ACL tear | 0.833 | 0.685 | - | 0.954 | 0.938 |
| | ResNet-18 | | abnormal | 0.825 | 0.968 | - | 0.280 | 0.811 |
| | | | ACL tear | 0.866 | 0.777 | - | 0.939 | 0.954 |
| | GoogLeNet | | abnormal | 0.833 | 0.978 | - | 0.280 | 0.909 |
| | | | ACL tear | 0.808 | 0.666 | - | 0.924 | 0.890 |
| Tsai et al., 2020 [42] | EfficientNet | MRNet, 1370 exams | abnormal | 0.917 | 0.968 | - | 0.72 | 0.941 |
| | | | ACL tear | 0.904 | 0.923 | - | 0.891 | 0.960 |
| | ELNet, 5-fold | KneeMRI, 917 exams | ruptured ACL | - | - | - | - | 0.913 |
| Proposed | Customized ResNet-14, 5-fold cross-validation | KneeMRI, 917 exams | intact ACL | 0.92 | 0.89 | 0.92 | 0.93 | 0.98 |
| | | | partial tear | 0.91 | 0.87 | 0.87 | 0.92 | 0.97 |
| | | | ruptured | 0.93 | 0.99 | 0.96 | 0.99 | 0.99 |

In the work of Namiri et al. [39], the 3D CNN models did not perform as well as the 2D CNN because of the small dataset. The model was found to overfit in the case of partial tears; however, it obtained better results with the 3D CNN than with the 2D CNN. The patient samples were not balanced across all grades, and the dataset was split by patient, which caused correlations among multiple images. Lastly, data augmentation techniques were not applied to enhance the images. The specificity in the case of intact ACLs was 88%.

Zhang et al. [40] required a long training time for each patient, and the retrospective study had inherent biases; the dataset was small and the patient categories were imbalanced. Moreover, the study did not distinguish between complete and partial ACL tears. Irmakci et al. [41] reported average AUCs of 0.878, 0.857 and 0.859 over the three classes for AlexNet, ResNet-18 and GoogLeNet, respectively. Tsai et al. [42] used EfficientNet, which is optimized; on MRNet the AUC was 0.960, but on KneeMRI the AUC was 0.913 because of imbalanced classes.
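The per-class accuracy, sensitivity, precision and specificity compared in Table 7 can all be derived from a multi-class confusion matrix by treating each class one-vs-rest. The sketch below illustrates this under stated assumptions: the function name `per_class_metrics` and the 3 × 3 matrix are hypothetical and do not reproduce any result from this study or from the cited works.

```python
from typing import Dict, List, Sequence


def per_class_metrics(cm: Sequence[Sequence[int]]) -> List[Dict[str, float]]:
    """Compute one-vs-rest metrics from a confusion matrix.

    cm[i][j] is the number of samples whose true class is i
    and whose predicted class is j.
    """
    n = len(cm)
    total = sum(sum(row) for row in cm)
    results = []
    for c in range(n):
        tp = cm[c][c]
        fn = sum(cm[c]) - tp                        # true class c, predicted otherwise
        fp = sum(cm[r][c] for r in range(n)) - tp   # predicted c, true class otherwise
        tn = total - tp - fn - fp
        results.append({
            "accuracy": (tp + tn) / total,
            "sensitivity": tp / (tp + fn) if (tp + fn) else float("nan"),
            "precision": tp / (tp + fp) if (tp + fp) else float("nan"),
            "specificity": tn / (tn + fp) if (tn + fp) else float("nan"),
        })
    return results


# Hypothetical confusion matrix (rows: true class, columns: predicted class)
# in the order healthy, partial tear, fully ruptured.
toy_cm = [
    [90, 8, 2],
    [10, 85, 5],
    [0, 1, 99],
]

metrics = per_class_metrics(toy_cm)
for name, m in zip(["healthy", "partial tear", "fully ruptured"], metrics):
    print(name, {k: round(v, 3) for k, v in m.items()})
```

Because specificity depends on the number of true negatives, it tends to be high for a rare class even when sensitivity is modest, which is one reason the works above are difficult to compare when their class distributions differ.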

6. Limitations

Our study had several limitations. First, our ResNet-14 model for ACL tear detection was trained separately for each of the six approaches, which may increase the overall training burden. Second, hybrid class balancing randomly increased the number of records in the partial tear and fully ruptured tear classes. Down-sampling the healthy ACL class in the metadata file was not an appropriate technique and may have biased the result for the fully ruptured class. The use of class weighting in future studies may further improve the performance of the ACL tear detection system. Furthermore, in the case without class balancing, the results were not evaluated beyond 5-fold cross-validation.
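As an illustration of the class weighting mentioned above, one common scheme assigns each class a weight inversely proportional to its frequency, so that rare classes contribute more to the loss without any resampling. The sketch below is one possible formulation, not the method used in this study; the function name is hypothetical, and the per-class counts are assumed for illustration (chosen to sum to 917 exams) and should be replaced with the counts read from the metadata file.

```python
from typing import Dict


def inverse_frequency_weights(counts: Dict[str, int]) -> Dict[str, float]:
    """Weight each class by total / (number_of_classes * class_count).

    With these weights, every class contributes the same total weight,
    and the weighted sample count equals the original sample count.
    """
    total = sum(counts.values())
    n_classes = len(counts)
    return {label: total / (n_classes * n) for label, n in counts.items()}


# Assumed, illustrative class counts (not taken from this section of the paper).
class_counts = {"healthy": 690, "partial": 172, "ruptured": 55}

class_weights = inverse_frequency_weights(class_counts)
for label, weight in class_weights.items():
    print(f"{label}: {weight:.3f}")
```

A dictionary of this kind, keyed by integer class index rather than by name, is the form that many deep learning frameworks accept as a per-class weight argument during training.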

7. Conclusions

This paper has presented an automated system to efficiently detect the presence of anterior cruciate ligament (ACL) injury in the human knee from MR images. The proposed method implements a customized 14-layer ResNet CNN architecture and has been tested using random splitting, 3-fold cross-validation and 5-fold cross-validation. With the ResNet-14 CNN, the imbalanced class distribution was corrected by hybrid class balancing, and the diversity of images was increased without requiring extensive training by applying real-time data augmentation. The novel integration of hybrid class balancing and real-time data augmentation allows the custom ResNet model to remain efficient, to detect ACL tears accurately and to avoid overfitting on the KneeMRI dataset. With 5-fold cross-validation, the customized ResNet-14 CNN achieved an average accuracy, sensitivity and precision of 92%, 91% and 91%, respectively. The model performed better in terms of average specificity and AUC over the three classes, which were 95% and 98%, respectively. In addition, the model has been tested and compared with 3-fold cross-validation and random splitting. To the best of the authors’ knowledge, no previous study has proposed an automated method that detects all three classes of ACL condition (healthy, partial tear and fully ruptured tear) through hybrid class balancing with a ResNet-14 model achieving an AUC of 98%.

Author Contributions

Conceptualization, M.J.A., M.S.M.R., N.S., M.A.M.; methodology, M.J.A., M.S.M.R., N.S., M.A.M., B.G.-Z., K.H.A.; software, M.J.A., M.S.M.R., N.S.; validation, M.J.A., M.S.M.R., N.S., M.A.M., B.G.-Z., K.H.A.; formal analysis, M.J.A., M.S.M.R., N.S., M.A.M., B.G.-Z., K.H.A.; writing—original draft preparation, M.J.A., M.S.M.R., N.S., M.A.M., B.G.-Z., K.H.A.; writing—review and editing, M.J.A., M.S.M.R., N.S., M.A.M., B.G.-Z., K.H.A.; supervision, M.S.M.R., N.S.; project administration, M.A.M.; funding acquisition, B.G.-Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

This study used the KneeMRI dataset, which was gathered retrospectively from exam records from 2006 until 2014 at the Clinical Hospital Centre Rijeka, Croatia. The dataset is available online and can be used by anyone.

Informed Consent Statement

Not applicable.

Data Availability Statement

The dataset used in this work is from the Clinical Hospital Centre Rijeka and is available under reference [23].

Conflicts of Interest

The authors declare no conflict of interest.

Footnotes

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1. Zantop T., Petersen W., Fu F.H. Anatomy of the anterior cruciate ligament. Oper. Tech. Orthop. 2005;15:20–28. doi: 10.1053/j.oto.2004.11.011.

- 2. Musahl V., Karlsson J. Anterior cruciate ligament tear. N. Engl. J. Med. 2019;20:2135–2142. doi: 10.1056/NEJMcp1805931.

- 3. Naraghi A.M., White L.M. Imaging of athletic injuries of knee ligaments and menisci: Sports imaging series. Radiology. 2016;281:23–40. doi: 10.1148/radiol.2016152320.

- 4. Khalid V., Schønheyder H.C., Larsen L.H., Nielsen P.T., Kappel A., Thomsen T.R., Aleksyniene R., Lorenzen J., Ørsted I., Simonsen O., et al. Multidisciplinary Diagnostic Algorithm for Evaluation of Patients Presenting with a Prosthetic Problem in the Hip or Knee: A Prospective Study. Diagnostics. 2020;10:98. doi: 10.3390/diagnostics10020098.

- 5. Prodromos C.C., Han Y., Rogowski J., Joyce B., Shi K. A meta-analysis of the incidence of anterior cruciate ligament tears as a function of gender, sport, and a knee injury-reduction regimen. Arthroscopy. 2007;23:1320–1325. doi: 10.1016/j.arthro.2007.07.003.

- 6. Kopkow C., Lange T., Hoyer A., Lützner J., Schmitt J. Physical tests for diagnosing anterior cruciate ligament rupture. Cochrane Database Syst. Rev. 2018;2018:12.

- 7. Nenezic D., Kocijancic I. The value of the sagittal-oblique MRI technique for injuries of the anterior cruciate ligament in the knee. Radiol. Oncol. 2013;47:19–25. doi: 10.2478/raon-2013-0006.

- 8. Huda W., Abrahams R.B. X-ray-based medical imaging and resolution. AJR Am. J. Roentgenol. 2015;204:W393–W397. doi: 10.2214/AJR.14.13126.

- 9. Martin T., Janzen C., Li X., Del Rosario I., Chanlaw T., Choi S., Armstrong T., Masamed R., Wu H.H., Devaskar S.U., et al. Characterization of Uterine Motion in Early Gestation Using MRI-Based Motion Tracking. Diagnostics. 2020;10:840. doi: 10.3390/diagnostics10100840.

- 10. Dachena C., Casu S., Fanti A., Lodi M.B., Mazzarella G. Combined Use of MRI, fMRI and Cognitive Data for Alzheimer’s Disease: Preliminary Results. Appl. Sci. 2019;9:3156. doi: 10.3390/app9153156.

- 11. Kocabey Y., Tetik O., Isbell W.M., Atay O.A., Johnson D.L. The value of clinical examination versus magnetic resonance imaging in the diagnosis of meniscal tears and anterior cruciate ligament rupture. Arthroscopy. 2004;20:696–700. doi: 10.1016/S0749-8063(04)00593-6.

- 12. Hong S.H., Choi J.Y., Lee G.K., Choi J.A., Chung H.W., Kang H.S. Grading of anterior cruciate ligament injury. Diagnostic efficacy of oblique coronal magnetic resonance imaging of the knee. J. Comput. Assist. Tomogr. 2003;27:814–819. doi: 10.1097/00004728-200309000-00022.

- 13. Mohammed M.A., Ghani M.K.A., Arunkumar N.A., Mostafa S.A., Abdullah M.K., Burhanuddin M.A. Trainable model for segmenting and identifying Nasopharyngeal carcinoma. Comput. Electr. Eng. 2018;71:372–387. doi: 10.1016/j.compeleceng.2018.07.044.

- 14. Ghani M.K.A., Mohammed M.A., Arunkumar N., Mostafa S.A., Ibrahim D.A., Abdullah M.K., Jaber M.M., Abdulhay E., Ramirez-Gonzalez G., Burhanuddin M.A. Decision-level fusion scheme for nasopharyngeal carcinoma identification using machine learning techniques. Neural Comput. Appl. 2020;32:625–638. doi: 10.1007/s00521-018-3882-6.

- 15. Obaid O.I., Mohammed M.A., Ghani M.K.A., Mostafa A., Taha F. Evaluating the performance of machine learning techniques in the classification of Wisconsin Breast Cancer. Int. J. Eng. Technol. 2018;7:160–166.

- 16. Norouzi A., Rahim M.S.M., Altameem A., Saba T., Rad A.E., Rehman A., Uddin M. Medical image segmentation methods, algorithms, and applications. IETE Tech. Rev. 2014;31:199–213. doi: 10.1080/02564602.2014.906861.

- 17. Al-Waisy A.S., Al-Fahdawi S., Mohammed M.A., Abdulkareem K.H., Mostafa S.A., Maashi M.S., Arif M., Garcia-Zapirain B. COVID-CheXNet: Hybrid deep learning framework for identifying COVID-19 virus in chest X-rays images. Soft Comput. 2020. doi: 10.1007/s00500-020-05424-3.

- 18. Mohammed M.A., Abdulkareem K.H., Mostafa S.A., Khanapi Abd Ghani M., Maashi M.S., Garcia-Zapirain B., Oleagordia I., Alhakami H., AL-Dhief F.T. Voice Pathology Detection and Classification Using Convolutional Neural Network Model. Appl. Sci. 2020;10:3723. doi: 10.3390/app10113723.

- 19. Varma M., Lu M., Gardner R. Automated abnormality detection in lower extremity radiographs using deep learning. Nat. Mach. Intell. 2019;12:578–583. doi: 10.1038/s42256-019-0126-0.

- 20. Tiulpin A., Saarakkala S. Automatic Grading of Individual Knee Osteoarthritis Features in Plain Radiographs Using Deep Convolutional Neural Networks. Diagnostics. 2020;10:932. doi: 10.3390/diagnostics10110932.

- 21. Mazlan S., Ayob M., Bakti Z. Anterior cruciate ligament (ACL) injury classification system using support vector machine (SVM); Proceedings of the 2017 International Conference on Engineering Technology and Technopreneurship (ICE2T); Kuala Lumpur, Malaysia. 18–20 September 2017; pp. 1–5.

- 22. Ashinsky B.G., Coletta C.E., Bouhrara M., Lukas V.A., Boyle J.M., Reiter D.A., Neu C.P., Goldberg I.G., Spencer R.G. Machine learning classification of OARSI-scored human articular cartilage using magnetic resonance imaging. Osteoarthr. Cartil. 2015;23:1704–1712. doi: 10.1016/j.joca.2015.05.028.

- 23. Štajduhar I., Mamula M., Miletić D., Ünal G. Semi-automated detection of anterior cruciate ligament injury from MRI. Comput. Methods Programs Biomed. 2017;140:151–164. doi: 10.1016/j.cmpb.2016.12.006.

- 24. Lao Y., Jia B., Yan P., Pan M., Hui X., Li J., Luo W., Li X., Han J., Yan P., et al. Diagnostic accuracy of machine-learning-assisted detection for anterior cruciate ligament injury based on magnetic resonance imaging: Protocol for a systematic review and meta-analysis. Medicine. 2019;9:18324. doi: 10.1097/MD.0000000000018324.

- 25. Zeng W., Ismail S.A., Pappas E. Detecting the presence of anterior cruciate ligament injury based on gait dynamics disparity and neural networks. Artif. Intell. Rev. 2020;53:3153–3176.

- 26. Manna S., Bhattacharya S., Pal U. Self-Supervised Representation Learning for Detection of ACL Tear Injury in Knee MRI. arXiv. 2020. arXiv:2007.07761.

- 27. Bien N., Rajpurkar P., Ball R.L., Irvin J., Park A., Jones E., Bereket M., Patel B.N., Yeom K.W., Shpanskaya K., et al. Deep-learning-assisted diagnosis for knee magnetic resonance imaging: Development and retrospective validation of MRNet. PLoS Med. 2018;15:e1002699. doi: 10.1371/journal.pmed.1002699.

- 28. Krizhevsky A., Sutskever I., Hinton G.E. Imagenet classification with deep convolutional neural networks. Commun. ACM. 2017;60:84–90.

- 29. Russakovsky O., Deng J., Su H., Krause J., Satheesh S., Ma S., Huang Z., Karpathy A., Khosla A., Bernstein M., et al. Imagenet large scale visual recognition challenge. Int. J. Comput. Vis. 2015;115:211–252.

- 30. Ronneberger O., Fischer P., Brox T. U-net: Convolutional networks for biomedical image segmentation; Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention; Munich, Germany. 5–9 October 2015; Cham, Germany: Springer; 2015. pp. 234–241.

- 31. Simonyan K., Zisserman A. Very deep convolutional networks for large-scale image recognition. arXiv. 2014. arXiv:1409.1556.

- 32. Zbontar J., Knoll F., Sriram A., Murrell T., Huang Z., Muckley M.J., Defazio A., Stern R., Johnson P., Bruno M., et al. fastMRI: An open dataset and benchmarks for accelerated MRI. arXiv. 2018. arXiv:1811.08839.

- 33. Chang P.D., Wong T.T., Rasiej M.J. Deep Learning for Detection of Complete Anterior Cruciate Ligament Tear. J. Digit. Imaging. 2019;32:980–986. doi: 10.1007/s10278-019-00193-4.

- 34. Liu F., Guan B., Zhou Z., Samsonov A., Rosas H., Lian K., Sharma R., Kanarek A., Kim J., Guermazi A., et al. Fully Automated Diagnosis of Anterior Cruciate Ligament Tears on Knee MR Images by Using Deep Learning. Radiol. Artif. Intell. 2019;1:180091. doi: 10.1148/ryai.2019180091.

- 35. El-Sawy A., EL-Bakry H.M., Loey M. CNN for handwritten arabic digits recognition based on LeNet-5; Proceedings of the International Conference on Advanced Intelligent Systems and Informatics; Cairo, Egypt. 24–26 October 2016; Cham, Germany: Springer; 2016. pp. 566–575.

- 36. Redmon J., Divvala S., Girshick R., Farhadi A. You only look once: Unified, real-time object detection; Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition; Las Vegas, NV, USA. 27–30 June 2016; pp. 779–788.

- 37. Huang G., Liu Z., van der Maaten L., Weinberger K.Q. Densely connected convolutional networks; Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition; Honolulu, HI, USA. 21–26 July 2017; pp. 4700–4708.

- 38. Wang L., Guo S., Huang W., Qiao Y. Places205-vggnet models for scene recognition. arXiv. 2015. arXiv:1508.01667.

- 39. Namiri N.K., Flament I., Astuto B., Shah R., Tibrewala R., Caliva F., Link T.M., Pedoia V., Majumdar S. Deep Learning for Hierarchical Severity Staging of Anterior Cruciate Ligament Injuries from MRI. Radiol. Artif. Intell. 2020;2:190207. doi: 10.1148/ryai.2020190207.

- 40. Zhang L., Li M., Zhou Y., Lu G., Zhou Q. Deep Learning Approach for Anterior Cruciate Ligament Lesion Detection: Evaluation of Diagnostic Performance Using Arthroscopy as the Reference Standard. J. Magn. Reson. Imaging. 2020;52:1745–1752. doi: 10.1002/jmri.27266.

- 41. Irmakci I., Anwar S.M., Torigian D.A., Bagci U. Deep Learning for Musculoskeletal Image Analysis; Proceedings of the 2019 53rd Asilomar Conference on Signals, Systems, and Computers; Pacific Grove, CA, USA. 3–6 November 2019; pp. 1481–1485.

- 42. Tsai C.H., Kiryati N., Konen E., Eshed I., Mayer A. Knee Injury Detection using MRI with Efficiently-Layered Network (ELNet). arXiv. 2020. arXiv:2005.02706.

- 43. Tan M., Le Q.V. Efficientnet: Rethinking model scaling for convolutional neural networks. arXiv. 2019. arXiv:1905.11946.

- 44. Tiulpin A., Thevenot J., Rahtu E., Saarakkala S. A novel method for automatic localization of joint area on knee plain radiographs; Proceedings of the Scandinavian Conference on Image Analysis; Tromsø, Norway. 12–14 June 2017; Berlin/Heidelberg, Germany: Springer; 2017. pp. 290–301.

- 45. Zhang C. Ph.D. Thesis. Iowa State University; Ames, IA, USA: 2019. Medical Image Classification under Class Imbalance.

- 46. Small H., Ventura J. Handling Unbalanced Data in Deep Image Segmentation. University of Colorado; Boulder, CO, USA: 2017.

- 47. Johnson J.M., Khoshgoftaar T.M. Survey on deep learning with class imbalance. J. Big Data. 2019;6:27. doi: 10.1186/s40537-019-0192-5.

- 48. Mikołajczyk A., Grochowski M. Data augmentation for improving deep learning in image classification problem; Proceedings of the 2018 International Interdisciplinary PhD workshop (IIPhDW); Swinoujście, Poland. 9–12 May 2018; pp. 117–122.

- 49. Ioffe S., Szegedy C. Batch normalization: Accelerating deep network training by reducing internal covariate shift; Proceedings of the 32nd International Conference on Machine Learning; Lille, France. 6–11 July 2015.

- 50. Kingma D., Ba J. Adam: A method for stochastic optimization. arXiv. 2014. arXiv:1412.6980.

- 51. Tang Z., Gao Y., Karlinsky L., Sattigeri P., Feris R., Metaxas D. OnlineAugment: Online Data Augmentation with Less Domain Knowledge. arXiv. 2020. arXiv:2007.09271.

- 52. Safdar M.F., Alkobaisi S.S., Zahra F.T. A Comparative Analysis of Data Augmentation Approaches for Magnetic Resonance Imaging (MRI) Scan Images of Brain Tumor. Acta Inform. Med. 2020;28:29–36. doi: 10.5455/aim.2020.28.29-36.

- 53. Afzal S., Maqsood M., Nazir F., Khan U., Aadil F., Awan K.M., Mehmood I., Song O.Y. A data augmentation-based framework to handle class imbalance problem for Alzheimer’s stage detection. IEEE Access. 2019;7:115528–115539.

- 54. Ali Y., Farooq A., Alam T.M., Farooq M.S., Awan M.J., Baig T.I. Detection of Schistosomiasis Factors Using Association Rule Mining. IEEE Access. 2019;7:186108–186114.

- 55. Rai R., Sisodia D.S. Advances in Biomedical Engineering and Technology. Springer; Singapore: 2020. Real-time data augmentation based transfer learning model for breast cancer diagnosis using histopathological images; pp. 473–488.

- 56. Shorten C., Khoshgoftaar T.M. A survey on image data augmentation for deep learning. J. Big Data. 2019;6:60. doi: 10.1186/s40537-021-00492-0.

- 57. Keras Documentation Image Preprocessing. [(accessed on 10 June 2020)]; Available online: https://foroit.com/keras-docs/1.2.0/preprocessing/image/

- 58. Mohammed M.A., Abdulkareem K.H., Garcia-Zapirain B., Mostafa S.A., Maashi M.S. A Comprehensive Investigation of Machine Learning Feature Extraction and Classification Methods for Automated Diagnosis of COVID-19 Based on X-ray Images. Comput. Mater. Contin. 2021;66:3289–3310. doi: 10.32604/cmc.2021.012874.

- 59. Awan M.J., Rahim M.S.M., Salim N., Ismail A.W., Shabbir H. Acceleration of Knee MRI Cancellous Bone Classification on Google Colaboratory Using Convolutional Neural Network. Int. J. Adv. Trends Comput. Sci. 2019;8:83–88. doi: 10.30534/ijatcse/2019/1381.62019.
